Abstract

Objective

To explore home care nurses’ numeracy and graph literacy and their relationship to comprehension of visualized data.

Materials and Methods

We used a multifactorial experimental design administered with online survey software. Nurses were recruited from 2 Medicare-certified home health agencies. Numeracy and graph literacy were measured using validated scales. Nurses were randomized to 1 of 4 experimental conditions. In each condition, nurses viewed data for all 4 quality indicators, each indicator shown in a different visualized format (bar graph, line graph, spider graph, or table). A mixed linear model estimated the impact of numeracy, graph literacy, and display format on data understanding.

Results

In all, 195 nurses took part in the study. They were slightly more numerate and graph literate than the general population. Overall, nurses understood information presented in bar graphs most easily (88% correct), followed by tables (81% correct), line graphs (77% correct), and spider graphs (41% correct). Individuals with low numeracy and low graph literacy had poorer comprehension of information displayed across all formats. High graph literacy appeared to enhance comprehension of data regardless of numeracy capabilities.

Discussion and Conclusion

Clinical dashboards are increasingly used to provide information to clinicians in visualized format, under the assumption that visual display reduces cognitive workload. Results of this study suggest that nurses’ comprehension of visualized information is influenced by their numeracy, graph literacy, and the display format of the data. Individual differences in numeracy and graph literacy skills need to be taken into account when designing dashboard technology.

Keywords: clinical dashboard, decision support systems, clinical, health literacy, graph literacy, numeracy, nursing informatics

BACKGROUND AND SIGNIFICANCE

Clinical dashboards are a form of health information technology that use data visualization techniques (graphical displays that summarize data) to provide feedback to health care professionals on their performance compared to quality metrics.1 Compared to other forms of feedback, clinical dashboards have the potential to provide feedback in real time when clinicians are engaged in care activities, rather than a retrospective summary of performance.2 Use of data visualization is thought to improve comprehension3,4 and reduce cognitive load,5 leading to more effective decision making. However, existing research on the use of clinical dashboards in health care settings has identified considerable variability in their impact on outcomes.6 This could be due to a number of factors, such as differences in the use of graphical displays (eg, bar graphs, line graphs) or how the dashboard is accessed by clinicians (either passively or proactively).6 It could also be due to variations in the levels of experience and expertise among clinicians.7,8 In addition, cognitive factors such as spatial ability affect how individuals perceive and use data presented in visualizations.9 Health care professionals are increasingly using diverse devices, including tablet computers and smartphones, to access health care data.10–12 However, there is currently little research exploring the impact of such devices on visualization comprehension in the context of nursing practice.10

Data visualization techniques such as those used in clinical dashboards assume that individual users are able to comprehend the data represented by the visualization. However, the ability to understand such information is often determined by the individual’s level of numeracy (the ability to understand numerical information) and graph literacy (the ability to understand information presented graphically).7,8,13–15 In general, high numeracy is related to one’s ability to draw precise inferences from numerical data, such as interpreting and predicting numerical trends and understanding risk information.15 In contrast, individuals with low numeracy have limited ability to carry out basic mathematical operations, such as converting a percentage to a proportion.16 Graph literacy is a relatively recent concept15 that refers to how well individuals comprehend numeric information when it is displayed graphically. Gaissmaier et al.7 found that individuals with low graph literacy understood numerical information better if it was presented as numbers, whereas those with high graph literacy had better comprehension when the same information was presented in graphical format.
The majority of research to date on the relationship between graph literacy and comprehension of health data has focused on risk communication with patients.8,14,17–21 Researchers have explored how different display types affect physicians’ decisions regarding clinical trial monitoring22 and the impact of graph literacy and numeracy in nurses.15,23 These studies highlight variations in decision outcomes depending on the type of graph display and suggest that when information is presented in a graphical format, nurses with higher graph literacy have better data comprehension than nurses with lower graph literacy.23 What is currently lacking is a specific exploration of how graph literacy and numeracy interact to affect clinicians’ (in this case nurses’) ability to interpret information presented in a visualized format.

OBJECTIVE

This study was designed to explore the impact of home care nurses’ numeracy and graph literacy on their comprehension of visualized data. The overall aim of our research is to develop dashboards that provide feedback to home care nurses at the point of care for patients with congestive heart failure (CHF) and that are individualized to user characteristics, in order to increase data comprehension and support clinical decision making. In 2014, an estimated 3.4 million Medicare beneficiaries received skilled care from home health agencies in the United States,24 a number that is likely to increase with the aging population. Patients with CHF are at increased risk of rehospitalization,25 and home health patients with a primary diagnosis of heart failure have 42% higher odds of hospital readmission (adjusted odds ratio 1.42, 95% confidence interval [CI], 1.37–1.48).26 Therefore, interventions designed to support clinicians in caring for CHF patients have the potential to improve quality outcomes in home care settings.

MATERIALS AND METHODS

The study used a multifactorial experimental design, with data collected via an online survey. The study was approved by the Institutional Review Boards at Columbia University Medical Center and the Visiting Nurse Service of New York.

Study measures and instrument development

Numeracy

Numeracy was measured using the expanded numeracy scale.16 This is an 11-item scale that evaluates numerical skills, such as the ability to identify differences in the size of health risks, conduct simple mathematical operations using percentages and proportions, convert percentages to proportions and vice versa, and convert probabilities to proportions. The scale has 3 general numeracy items and 8 expanded items that frame questions in the context of health risks. Higher scores on the scale are associated with higher numeracy. The scale has been widely used as a method for assessing objective numerical competence, with high reliability (Cronbach’s α ranging from 0.7 to 0.75). Using a median cutoff, individuals are categorized as having low numeracy if their score is <8 and high numeracy if their score is ≥8.16

Graph literacy

Graph literacy was measured using a scale developed by Galesic and Garcia-Retamero27 specifically for the health domain, to measure both basic and advanced graph reading skills and comprehension across different types of graphs.27 The scale consists of 13 items and measures 3 levels of graph comprehension: the ability to (1) read the data (eg, find specific information in a graph, such as a data point in a line graph), (2) read between the data (eg, identify relationships between data points on a graph, such as the difference in value between 2 data points), and (3) read beyond the data (eg, make inferences or predictions from the data, such as predicting a future trend from a line graph). The scale was originally tested in 2 samples from Germany and the United States, with high internal consistency (α = 0.74 and 0.79, respectively).27 A lower score on the scale represents lower graph literacy; using a median cutoff of 9, individuals with a score <9 are considered to have low graph literacy and those ≥9 high graph literacy.
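Both scales report reliability as Cronbach’s α. The sketch below shows the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), applied to a respondents-by-items matrix. The function name `cronbach_alpha` and the 0/1 answer matrix are hypothetical and invented for illustration; the study does not describe how α was computed beyond reporting the values.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])  # number of items on the scale
    if k < 2:
        raise ValueError("alpha needs at least 2 items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 0/1 scored answers: 5 respondents x 4 items (not study data).
answers = [
    [1, 1, 1, 1],
    [1, 1, 0, 1],
    [0, 1, 0, 0],
    [1, 0, 1, 1],
    [0, 0, 0, 0],
]
print(round(cronbach_alpha(answers), 2))  # 0.74
```

Scoring each item 1 (correct) or 0 (incorrect) is an assumption of this sketch; the published scales define their own scoring rules.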

Data and graph comprehension

Four indicators representing feedback about the quality of care provided to heart failure patients (percentage of CHF patients with a complete plan of care [last 8 weeks], percentage of high-risk CHF patients seen within 24 h of admission to home care, percentage of CHF patients with high risk of hospitalization, and patient daily weight record) were developed. The data for each quality indicator could be presented in 1 of 4 presentation formats (bar graph, line graph, spider/radar graph, or table/text). These formats were chosen to include 3 common ways of displaying quality information (tables, line graphs, bar graphs) and 1 format that is likely less familiar to nurses but is being used to display quality-related information (spider/radar graph).28 Following presentation of each quality indicator, participants were asked questions regarding their comprehension of the data presented in the graph/table (see Figure 1 for an example).

Figure 1.

Examples of 4 different formats of data presentation

Questions to capture demographic characteristics and to measure numeracy, graph literacy, and comprehension were combined into a single survey instrument with a total of 58 items (which could be accessed/completed on a mobile/tablet device or a personal computer), formatted using online software (www.surveygizmo.com). The software enables construction of different types of survey questions (such as multiple choice or free-text responses). Using the software, we translated the questions from the graph literacy scale and the numeracy scale into a computer-based format (exactly as they appear on paper). Each screen or page of the survey represented a different scale or measure. In addition, for the comprehension questions, nurses were presented with the information in 1 of the 4 experimental graph formats, followed by questions to test their understanding of that information.

Study sample, setting, and recruitment procedure

Eligible participants were registered nurses (RNs) who (1) were currently employed at a certified home health agency and (2) visited patients at least once per week. A convenience sample of nurses was recruited from 2 large, not-for-profit Medicare-certified home health agencies located in the Northeast region of the United States.

All potential participants (ie, all RNs identified by the 2 agencies’ Human Resources departments as working at the agencies) were sent an e-mail describing the purpose of the study, with a live link to the survey website. Each link was unique to the user, allowing him or her to save it and return to complete it at a later date. Reminders were sent to all nonresponders 2, 3, and 4 weeks after the initial e-mail. On completion, nurses could provide their contact details (separate from survey responses) to receive a $20 gift voucher in recognition of their time. We did not conduct a power analysis for estimating sample size; the total population of eligible nurses was contacted in both agencies, and those who chose to respond were included in our analysis. As this is the first study to explore the interaction between numeracy and graph literacy and the impact on understanding, we sought to recruit as many nurses as possible to identify potential differences that could be explored in future research.

Participants first completed screening questions to assess their eligibility to participate in the study and were asked to complete a demographic questionnaire, followed by the graph literacy scale and numeracy scale. They were then randomized (using the logic programming in the survey software) into 1 of 4 experimental groups (Table 1). The study was designed so that each participant viewed all 4 quality indicators, each in a different display format, and therefore saw every format exactly once. This method ensured that any differences observed in comprehension across graph formats were related to differences in numeracy or graph literacy, not to the type of data displayed or the characteristics of individual nurses.

Table 1.

Experimental group conditions

| Experimental Group | Quality Indicator 1 | Quality Indicator 2 | Quality Indicator 3 | Quality Indicator 4 |
|---|---|---|---|---|
| 1 | Table | Line Graph | Bar Chart | Spider Graph |
| 2 | Line Graph | Bar Chart | Spider Graph | Table |
| 3 | Bar Chart | Spider Graph | Table | Line Graph |
| 4 | Spider Graph | Table | Line Graph | Bar Chart |
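Table 1 is a 4 × 4 cyclic Latin square: each format appears exactly once in every row (experimental group) and every column (quality indicator). The Python sketch below regenerates it by shifting the Group 1 order one position per group. The `assign_group` helper is hypothetical and draws a group uniformly at random; the survey software’s actual randomization logic is not described, so simple uniform randomization (which does not guarantee equal group sizes) is an assumption.

```python
import random

# Formats in the order shown for Experimental Group 1 in Table 1.
FORMATS = ["Table", "Line Graph", "Bar Chart", "Spider Graph"]


def cyclic_latin_square(levels: list[str]) -> list[list[str]]:
    """Row g, column i holds levels[(g + i) % n], so each level appears
    once in every row and once in every column."""
    n = len(levels)
    return [[levels[(g + i) % n] for i in range(n)] for g in range(n)]


def assign_group(rng: random.Random, n_groups: int = 4) -> int:
    """Hypothetical simple randomization: a uniformly random group index."""
    return rng.randrange(n_groups)


square = cyclic_latin_square(FORMATS)
for g, row in enumerate(square, start=1):
    print(g, row)

rng = random.Random(2016)  # fixed seed only so the sketch is reproducible
group = assign_group(rng)
print("participant assigned to group", group + 1, "->", square[group])
```

Because the square is balanced, every format is paired with every quality indicator in exactly one group, so format and indicator are not confounded across the design as a whole.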

Outcome – data comprehension for quality indicators

For each of the 4 quality indicators, a binary variable was created indicating whether the participant correctly interpreted the data presented in the graph/table format.
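One way to picture this outcome is as long-format data with one row per nurse per display format and a 0/1 correctness flag; averaging that flag within a format gives the percent-correct figures reported in the Results. The records and the function name `percent_correct_by_format` below are hypothetical. Because each nurse contributes one row per format, observations are repeated within nurses, which is the kind of structure the mixed linear models described under Data analysis are designed to handle.

```python
from collections import defaultdict

# Hypothetical long-format records (not study data):
# (nurse_id, display_format, answered_correctly)
records = [
    ("n1", "Bar graph", True), ("n1", "Table", True),
    ("n1", "Line graph", False), ("n1", "Spider graph", False),
    ("n2", "Bar graph", True), ("n2", "Table", False),
    ("n2", "Line graph", True), ("n2", "Spider graph", True),
]


def percent_correct_by_format(rows):
    """Mean of the 0/1 comprehension outcome per display format, as a percentage."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for _nurse, fmt, ok in rows:
        correct[fmt] += int(ok)  # binary outcome: 1 = correct, 0 = incorrect
        total[fmt] += 1
    return {fmt: 100 * correct[fmt] / total[fmt] for fmt in total}


print(percent_correct_by_format(records))
```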

Predictors

Participants were first categorized by their numeracy (low or high) and second by their graph literacy (low or high).16,27 We then constructed 4 groups to reflect an individual’s combination of numeracy and graph literacy: low numeracy and low graph literacy, low numeracy and high graph literacy, high numeracy and low graph literacy, and high numeracy and high graph literacy. Type of data displayed (bar graph, line graph, spider graph, or table) and type of quality indicator (% CHF patients with a complete plan of care [last 8 weeks], % of high-risk CHF patients seen within 24 h of admission to home care, % of CHF patients with high risk of hospitalization, patient daily weight record) were also predictors of interest.
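The 4 combined groups follow directly from the 2 median cutoffs (numeracy ≥ 8, graph literacy ≥ 9). The sketch below encodes that rule using the abbreviations from the Table 4 key. The score ranges (0 to 11 for the 11-item numeracy scale, 0 to 13 for the 13-item graph literacy scale) assume 1 point per item, and the function name `literacy_profile` is hypothetical.

```python
NUMERACY_CUTOFF = 8        # expanded numeracy scale: < 8 low, >= 8 high
GRAPH_LITERACY_CUTOFF = 9  # graph literacy scale: < 9 low, >= 9 high
NUMERACY_MAX = 11          # assumption: 1 point per item, 11 items
GRAPH_LITERACY_MAX = 13    # assumption: 1 point per item, 13 items


def literacy_profile(numeracy: int, graph_literacy: int) -> str:
    """Map a pair of scale scores to LNLG, HNLG, LNHG, or HNHG."""
    if not 0 <= numeracy <= NUMERACY_MAX:
        raise ValueError(f"numeracy score out of range: {numeracy}")
    if not 0 <= graph_literacy <= GRAPH_LITERACY_MAX:
        raise ValueError(f"graph literacy score out of range: {graph_literacy}")
    num = "HN" if numeracy >= NUMERACY_CUTOFF else "LN"
    graph = "HG" if graph_literacy >= GRAPH_LITERACY_CUTOFF else "LG"
    return num + graph


# Hypothetical (numeracy, graph literacy) scores, not study participants.
for scores in [(6, 7), (9, 8), (7, 10), (10, 12)]:
    print(scores, literacy_profile(*scores))
```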

Confounders

Demographic characteristics obtained were age, sex, race/ethnicity (white vs other), highest level of education (associate’s degree/diploma, bachelor’s degree, postgraduate degree), highest level of professional nursing training (pre-bachelor’s, bachelor’s, postgraduate), years working as a nurse, agency tenure in years, and type of nursing position in the agency (staff vs per diem), as well as the agency worked for.

Data analysis

Descriptive statistics were used to examine the demographic characteristics of the sample, and chi-square tests or t tests were used to compare these characteristics between the 2 agencies. Mixed linear models were used to explore the impact of display type on data comprehension and, separately, to estimate the interaction effects involving high vs low numeracy and high vs low graph literacy on data comprehension. In addition, a mixed linear model was used to estimate the interaction effects between the 4 numeracy and graph literacy groups and display type on data comprehension. Analyses were conducted separately for each agency as well as for all participants. Results were adjusted for multiple comparisons. All data were analyzed using SAS (version 9.4).
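As an illustration of the agency comparisons, the sketch below runs a Pearson chi-square test of independence on counts taken from Table 2. To stay within the Python standard library it uses closed-form upper-tail probabilities that hold only for 1 or 2 degrees of freedom; larger tables would need a statistics library. Whether the authors applied a continuity correction is not stated; this uncorrected version gives P ≈ .70 for sex and P < .001 for education, consistent with Table 2. The function name is hypothetical, and the non-female count is computed as group size minus female count.

```python
import math


def chi_square_independence(table: list[list[int]]) -> tuple[float, int, float]:
    """Pearson chi-square test of independence for an r x c table of counts.

    The P-value uses closed forms valid only for df = 1 and df = 2.
    """
    row_totals = [sum(r) for r in table]
    col_totals = [sum(c) for c in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    if df == 1:
        p = math.erfc(math.sqrt(stat / 2))  # upper tail of chi-square(1)
    elif df == 2:
        p = math.exp(-stat / 2)             # upper tail of chi-square(2)
    else:
        raise NotImplementedError("use a statistics library for df > 2")
    return stat, df, p


# Counts from Table 2; rows are Agency 1 and Agency 2.
education = [[27, 70, 32], [30, 32, 4]]          # associate's/diploma, bachelor's, postgraduate
female_sex = [[115, 129 - 115], [60, 66 - 60]]   # female, non-female

print(chi_square_independence(education))
print(chi_square_independence(female_sex))
```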

RESULTS

The survey was conducted between February 16 and May 26, 2016. E-mail invitations were sent out to 1052 nurses across the 2 agencies. In all, 322 individuals accessed the survey (30.6% response rate); of these, 125 did not complete all of the survey questions. Twenty-three participants were disqualified (did not meet the inclusion criteria) and 102 gave partial responses (of whom 9 completed screening questions, 35 completed screening and demographic questions, 8 dropped out during completion of the graph literacy scale, 37 completed the graph literacy scale but did not start the numeracy scale, 1 started but did not complete the numeracy scale, and 12 completed the graph literacy and numeracy scales but did not take the rest of the survey). Overall, we received a total of 195 completed responses (129 from Agency 1 and 66 from Agency 2). Reliability of the numeracy scale for our sample was α = 0.68 and for the graph literacy scale was α = 0.76. Similar to previous studies,27 the numeracy and graph literacy scales were correlated (r = 0.56; P < .001), but with enough variance to indicate that they were measuring different concepts.
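The recruitment figures can be checked with simple arithmetic: the 6 stopping points listed for partial respondents sum to 102, adding the 23 disqualified participants gives the 125 who did not complete the survey, and the response rate is the number who accessed the survey divided by the number invited. The variable names below are illustrative only.

```python
invited = 1052      # e-mail invitations sent
accessed = 322      # individuals who accessed the survey
disqualified = 23   # did not meet the inclusion criteria

# Where the partial respondents stopped, as listed in the text.
partial_by_stage = {
    "screening only": 9,
    "screening + demographics": 35,
    "dropped out during graph literacy scale": 8,
    "graph literacy done, numeracy not started": 37,
    "numeracy started, not finished": 1,
    "both scales done, rest not taken": 12,
}

partial = sum(partial_by_stage.values())
incomplete = disqualified + partial
response_rate = 100 * accessed / invited

print(f"partial responses: {partial}")
print(f"did not complete: {incomplete}")
print(f"response rate: {response_rate:.1f}%")
```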

Characteristics of study participants

Table 2 summarizes characteristics of the study participants. They were predominantly female (89.7%), with an average age of 49 years (SD 11.0), and experienced (average years as a nurse, 19.7 [SD 11.4]). There were differences between the 2 agencies; nurses in Agency 1 were more ethnically diverse (41.9% vs 65.2% white race), were more likely to have a postgraduate degree (24.8% vs 11.1%), and had worked for a longer period of time with the agency (12.0 vs 5.5 years). There was no difference in mean numeracy or graph literacy between nurses at the 2 agencies; for the total sample, the mean numeracy score was 8.4 (SD 2.0) and the mean graph literacy score was 9.7 (SD 2.4). In bivariate and multivariate analyses, mean graph literacy did not differ by sex, age, race (white vs non-white), education level, staff or per diem status, agency, experience (years as a nurse), or agency tenure. Mean numeracy was slightly and inversely associated with years as a nurse in bivariate analyses (each additional year of nursing experience was associated with a 0.03 lower numeracy score [P = .014]). After adjusting for other characteristics in multivariate analyses, years as a nurse was no longer significant (each additional year was associated with a 0.032 lower numeracy score [P = .08]).

Table 2.

Characteristics of study participants

| Characteristic | Total Sample (N = 195) | Agency 1 (N = 129) | Agency 2 (N = 66) | P-value |
|---|---|---|---|---|
| Female sex, n (%) | 175 (89.7) | 115 (89.2) | 60 (90.9) | .70 |
| Mean age in years (SD) | 49.0 (11.0) | 48.3 (11.0) | 50.5 (11.0) | .19 |
| Race/ethnicity, n (%) | | | | |
| American Indian/Alaska native | 1 (0.5) | 0 | 1 (1.5) | |
| Asian | 28 (14.4) | 23 (17.8) | 5 (7.6) | |
| African American/black | 46 (23.6) | 33 (25.6) | 13 (19.7) | |
| Native Hawaiian/other Pacific Islander | 2 (1.0) | 2 (1.6) | 0 | |
| White | 97 (49.7) | 54 (41.8) | 43 (65.2) | |
| More than one race | 11 (5.6) | 9 (7.0) | 2 (3.0) | |
| Other | 10 (5.1) | 8 (6.2) | 2 (3.0) | |
| Education, n (%) | | | | <.001 |
| Associate’s degree/diploma | 57 (29.2) | 27 (20.9) | 30 (45.5) | |
| Bachelor’s degree | 102 (52.3) | 70 (54.3) | 32 (48.5) | |
| Postgraduate degree | 36 (18.5) | 32 (24.8) | 4 (11.1) | |
| Nursing training level, n (%) | | | | <.001 |
| Pre-bachelor’s | 69 (35.4) | 34 (26.4) | 35 (53.0) | |
| Bachelor’s | 103 (52.8) | 75 (58.1) | 28 (42.4) | |
| Postgraduate | 23 (11.8) | 20 (15.5) | 3 (4.6) | |
| Mean years as a nurse (SD) | 19.7 (11.4) | 20.2 (11.4) | 18.7 (11.4) | .39 |
| Mean agency tenure in years (SD) | 9.8 (8.1) | 12.0 (7.7) | 5.5 (6.9) | <.001 |
| Staff nurse (vs per diem), n (%) | 150 (76.9) | 101 (78.3) | 49 (74.2) | .53 |
| Mean total numeracy score (SD) | 8.4 (2.0) | 8.4 (2.0) | 8.5 (2.0) | .86 |
| Mean graph literacy score (SD) | 9.7 (2.4) | 9.5 (2.5) | 9.9 (2.2) | .34 |
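For the continuous characteristics, Table 2 gives means, SDs, and group sizes, so an approximate agency comparison can be reproduced from summary statistics alone. The sketch assumes a Welch (unequal-variance) standard error and substitutes a standard normal reference for the t distribution, a large-sample approximation that is not necessarily the exact test the authors ran. Because the tabulated means are rounded, results are approximate: the sketch gives P ≈ .19 for age, P ≈ .38 for years as a nurse, and P < .001 for agency tenure. The function name `mean_difference_p` is hypothetical.

```python
import math


def mean_difference_p(m1: float, s1: float, n1: int,
                      m2: float, s2: float, n2: int) -> tuple[float, float]:
    """Approximate two-sided P-value for a difference between two group means.

    Welch standard error; standard normal reference instead of Student's t
    (a large-sample approximation).
    """
    se = math.sqrt(s1 ** 2 / n1 + s2 ** 2 / n2)
    z = (m1 - m2) / se
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Agency 1 vs Agency 2: (mean, SD, n) for each agency, from Table 2.
rows = {
    "age": (48.3, 11.0, 129, 50.5, 11.0, 66),
    "years as a nurse": (20.2, 11.4, 129, 18.7, 11.4, 66),
    "agency tenure": (12.0, 7.7, 129, 5.5, 6.9, 66),
}
for name, stats in rows.items():
    z, p = mean_difference_p(*stats)
    print(f"{name}: z = {z:.2f}, P = {p:.3f}")
```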

Demographic characteristics of the survey respondents were compared to characteristics of the entire population of licensed practical nurses and registered nurses employed, using data supplied by the Human Resources departments of both agencies (Supplementary Table 1). Survey respondents tended to be better educated (a higher proportion with a postgraduate degree and fewer with an associate’s degree or diploma). There was a greater proportion of whites and a smaller proportion of African Americans than in the population as a whole. We found no significant difference in demographic characteristics of nurses who partially (n = 93) and fully completed the survey (Supplementary Table 2). However, a comparison of mean graph literacy scores between partial (n = 50) and complete survey responses found that individuals who completed the survey had significantly higher graph literacy scores (Supplementary Table 3). Mean numeracy scores did not differ between partial (n = 12) and complete survey responses.

Comprehension of display information

Across the whole sample, nurses understood information presented in the bar graph format most often (mean 88% correct responses), followed by table (81% correct responses), line graph (77% correct responses), and spider/radar graph (41% correct responses). There were no differences in comprehension of data by sex, age, race, education, experience, employment status, or home care agency.

Impact of numeracy and graph literacy on display comprehension

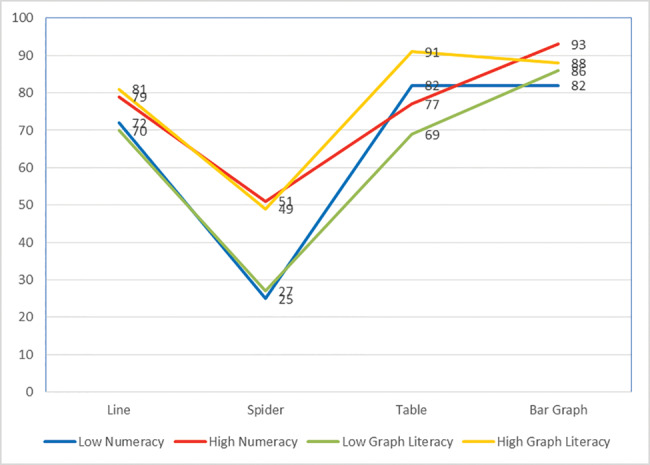

We categorized nurses as having either high (N = 147, 75%) or low (N = 48, 25%) numeracy and high (N = 146, 75%) or low (N = 49, 25%) graph literacy. Both numeracy and graph literacy were significantly related to data comprehension; nurses with low numeracy had lower overall comprehension (average 65% correct responses vs 75% for individuals with high numeracy; percent point difference: −10.0 [SE 3.6], P = .006), and nurses with low graph literacy also had lower overall comprehension (average 63% correct responses vs 77% for individuals with high graph literacy; percent point difference: −14.4 [SE 3.6], P < .001).
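Each percent point difference is reported with a standard error, so a rough two-sided P-value can be recovered from their ratio under a normal reference distribution. This is only an approximation: the reported P-values come from the mixed linear model, which may use a t reference and unrounded estimates, so the sketch will not match every reported value exactly. For example, −10.0 (SE 3.6) gives roughly .005 against the reported .006. The function name `two_sided_p` is hypothetical.

```python
import math


def two_sided_p(estimate: float, se: float) -> float:
    """Approximate two-sided P-value for estimate / SE under a standard normal."""
    return math.erfc(abs(estimate / se) / math.sqrt(2))


# Percent point differences and SEs reported for low vs high ability.
print("numeracy:", round(two_sided_p(-10.0, 3.6), 4))
print("graph literacy below .001:", two_sided_p(-14.4, 3.6) < 0.001)
```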

There were also interactions between numeracy and graph literacy and display type (Figure 2 and Table 3). Individuals with low numeracy were significantly less likely to comprehend data in spider/radar graphs compared to individuals with high numeracy. Individuals with low graph literacy were significantly less likely to comprehend data in both spider/radar graphs and tables compared to individuals with high graph literacy.

Figure 2.

Interactions between numeracy, graph literacy, and display type

Table 3.

Interaction between numeracy, graph literacy, and display type

| Numeracy/Graph Literacy Profile | Percent Point Difference (SE) | P-value |
|---|---|---|
| Numeracy (low vs high) | | |
| Line graph | −7.0 (6.0) | .27 |
| Spider/radar graph | −26.0 (6.0) | <.01** |
| Table | 4.0 (7.0) | .53 |
| Bar graph | −11.0 (6.0) | .09 |
| Graph literacy (low vs high) | | |
| Line graph | −11.0 (6.0) | .10 |
| Spider/radar graph | −22.0 (6.0) | <.01** |
| Table | −22.0 (7.0) | <.01** |
| Bar graph | −2.0 (6.0) | .78 |

*P < .05; **P < .01.

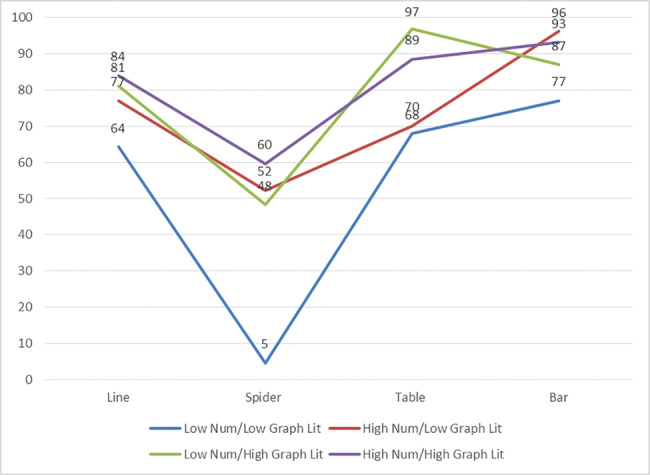

Nurse responders were then tabulated into 4 categories: (1) low numeracy and low graph literacy (n = 24; 12%), (2) high numeracy and low graph literacy (n = 24; 12%), (3) low numeracy and high graph literacy (n = 25; 13%), and (4) high numeracy and high graph literacy (n = 122; 63%). Nurses with high numeracy and high graph literacy had a mean of 81% correct responses, those with low numeracy and high graph literacy had a mean of 78% correct responses, those with high numeracy and low graph literacy had a mean of 74% correct responses, and those with low numeracy and low graph literacy had a mean of 54% correct responses. A mixed linear model was used to identify potential interactions between graph literacy and numeracy and information display type (Figure 3).

Figure 3.

Percentage of correct responses for each information display type, according to participants’ numeracy and graph literacy

There were significant interaction effects depending on an individual’s graph literacy and numeracy (Table 4). Nurses with low numeracy and low graph literacy were less able to understand and interpret information compared to nurses with high numeracy and high graph literacy across all 4 display types. High numeracy and/or high graph literacy appear to be associated with improved understanding of spider/radar graphs, and individuals with high graph literacy appear to have greater comprehension of information presented in a table format compared to individuals with low graph literacy.

Table 4.

Interactions between numeracy, graph literacy, and display type

| Numeracy/Graph Literacy Profile | Percent Point Difference (SE) | P-value |
|---|---|---|
| Line graph | | |
| LNLG vs HNLG | −12.7 (10.2) | .227 |
| LNLG vs LNHG | −16.7 (10.6) | .114 |
| LNLG vs HNHG | −19.7 (8.4) | .02* |
| HNLG vs LNHG | −4.0 (1.0) | .692 |
| HNLG vs HNHG | −7.0 (7.9) | .376 |
| LNHG vs HNHG | −3.0 (7.9) | .707 |
| Spider graph | | |
| LNLG vs HNLG | −47.8 (10.4) | <.0001** |
| LNLG vs LNHG | −43.8 (10.3) | <.0001** |
| LNLG vs HNHG | −55.2 (7.8) | <.0001** |
| HNLG vs LNHG | 4.0 (10.7) | .707 |
| HNLG vs HNHG | −7.4 (8.3) | .377 |
| LNHG vs HNHG | −11.4 (8.3) | .169 |
| Table | | |
| LNLG vs HNLG | −21.0 (1.1) | .847 |
| LNLG vs LNHG | −28.9 (1.1) | .01* |
| LNLG vs HNHG | −20.6 (8.9) | .02* |
| HNLG vs LNHG | −26.8 (1.04) | .01* |
| HNLG vs HNHG | −18.5 (8.0) | .02* |
| LNHG vs HNHG | 8.3 (8.0) | .31 |
| Bar graph | | |
| LNLG vs HNLG | −19.1 (10.4) | .069 |
| LNLG vs LNHG | −9.9 (10.2) | .333 |
| LNLG vs HNHG | −16.1 (8.1) | .049* |
| HNLG vs LNHG | 9.1 (10.2) | .369 |
| HNLG vs HNHG | 3.0 (8.1) | .714 |
| LNHG vs HNHG | −6.2 (7.8) | .431 |

LNLG = low numeracy, low graph literacy (numeracy < 8, graph literacy < 9)

HNLG = high numeracy, low graph literacy (numeracy ≥ 8, graph literacy < 9)

LNHG = low numeracy, high graph literacy (numeracy < 8, graph literacy ≥ 9)

HNHG = high numeracy, high graph literacy (numeracy ≥ 8, graph literacy ≥ 9)

*P < .05; **P < .01.

DISCUSSION

Our study highlights the importance of understanding the numeracy and graph literacy abilities of home care nurses when considering how to develop tools that provide information effectively at the point of care to inform decision making. We found variations in numeracy and graph literacy in our sample population; while our sample had higher numeracy and graph literacy skills than those identified in the wider US population,27 a substantial proportion of nurses would be classified as having low numeracy and low graph literacy. In addition, a greater proportion of nurses in our study scored high on measures of both numeracy and graph literacy compared to previous studies examining nurses.15 This difference may be due to variations in the methods of measuring numeracy (our study used an objective measure that requires individuals to provide answers to numerical problems, whereas a subjective measure asks respondents to rate their own numerical ability) and to variations in the cutoffs used to distinguish between high and low numeracy and graph literacy.

The findings also highlight the importance of considering numeracy and graph literacy when evaluating data presentation formats. Our results suggest that individuals with low numeracy and low graph literacy have considerable comprehension issues regardless of how data are presented. In contrast, individuals with high graph literacy (regardless of their numeracy) are able to comprehend data presented quantitatively more effectively than those with low graph literacy. Our findings are similar to those of previous studies exploring nurses’ understanding of data presented in different formats in a clinical decision support system23 and of studies conducted among the general public.17 In general, those with higher graph literacy skills benefit more from data presented visually in formats such as icon arrays and bar and line graphs, particularly if they have low numeracy.17 In contrast, individuals with low numeracy skills may benefit from having data presented in a specific format, such as bar graphs or tables.

These findings may explain the lack of impact of some clinical dashboards on patient care outcomes. The dashboards that were evaluated in a recent systematic review varied considerably in the ways they displayed information to clinicians.6 This included using different types of graphical interface, such as bar graphs, line graphs, and thermometer icons,29 and combinations of text and color indicators.30–35 To our knowledge, no existing research to date has explored in detail whether or how these various types of data displays enhance or decrease clinicians’ understanding of data. It is therefore equally unclear how their behavior might change on the basis of how data are displayed in clinical dashboards. Of particular concern is the large number of studies that predominantly used data displays in the form of tables (enhanced by color indicators). Individuals who have low numeracy and low graph literacy may have specific issues around comprehending this type of data effectively, indicating a need for dashboard designers to consider alternative forms of data display.

The findings of our study have important implications for the design of technology systems such as clinical dashboards. In general, there is an assumption that presenting data in a visualized format will improve data comprehension, by reducing the cognitive workload associated with processing the information.3,4 However, our findings would suggest that consideration also needs to be given to individual variations in clinicians’ ability to understand information. Although individuals with high graph literacy may be able to comprehend a large proportion of data presented in dashboard visualizations, those with low graph literacy or numeracy may not.

Strengths and weaknesses of the study

Strengths of our study include a large home care nurse sample recruited from 2 health care agencies, with variations in age, experience, and race/ethnicity; use of a validated objective measure of numeracy (compared to previous studies that used subjective measures)15,23; a validated measure of graph literacy; and an experimental research design. By manipulating the type of information display and randomizing participants to different experimental groups, we were able to explore the impact of numeracy and graph literacy while controlling for the order effects that would have arisen had we held the presentation of quality indicators and graphical displays constant.

There are some limitations that need to be highlighted. Our sample was self-selected (in that participants chose to respond to the survey invitation) and we had a large dropout rate for individuals who started but did not complete the survey. Analysis of the uncompleted responses may indicate that our sample is more graph literate and numerate than the overall home care nurse population, and this may have affected the results of our study. Further, we used specific graphical displays, some of which may not be commonly used as methods of providing data in clinical dashboards to individuals in a health care setting. Commonly, dashboards provide data on a number of quality indicators at once and can have a mixture of different types of data display on 1 screen. Further research will be required to explore the impact of more complex data displays on understanding. In addition, our study was limited to 1 type of clinician population (home care nurses) and the results may not be generalizable to other health care settings or other populations of clinicians.

Future research

This study explored how variations in numeracy and graph literacy in home care nurses could influence their understanding of data displayed in different formats. Further research to explore numeracy and graph literacy in populations of clinicians across health care settings and professional groups would provide valuable insights into whether and how variations might impact the ability to comprehend data presented in different visualized formats. In addition, further research into how to provide support for clinicians to enable them to comprehend data presented in visualizations such as dashboards might be required before they can be fully utilized in a clinical setting.

Individual clinician differences are only 1 of a number of factors that can influence how dashboards are used in clinical practice settings. Further research needs to consider other aspects of dashboard design (such as the availability of dashboards during clinical workflow), user requirements (how the dashboard fits with existing workflow and technology systems), and the effect of different types of device interface (such as mobile devices) as well as explore the impact of dashboard technology on clinician behavior. Dashboard designers need to consider developing technology that can be adapted to individual user characteristics, so that the way data are displayed maximizes the user’s ability to comprehend the data.

CONCLUSION

Clinical dashboards are increasingly used to provide clinicians with visualized information on specific quality of care indicators, with the aim of improving decision making and care quality. Our findings strongly suggest that developers of dashboard systems need to consider giving users the flexibility to display the same information in different ways (eg, as a table, line graph, or bar graph). Our findings further confirm the need to evaluate end users' ability to understand information displays before a dashboard is released for general use in a health care setting.

Funding

This project was supported by grant number R21HS023855 from the Agency for Healthcare Research and Quality. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

Competing Interests

None.

Contributors

DD designed the study, contributed to data collection, revised the manuscript, and is the guarantor. DR and JM helped design the study, contributed to data collection, and revised the manuscript. YB conducted the analysis and revised the manuscript. NO helped design the survey, conducted data collection, and revised the manuscript. RR contributed to data collection and revised the manuscript. All authors had full access to the data, including statistical reports and tables in the study, and take responsibility for the integrity of the data and the accuracy of the data analysis. All authors approved the final version of the manuscript.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

References

- 1. Daley K, Richardson J, James I, Chambers A, Corbett D. Clinical dashboard: use in older adult mental health wards. The Psychiatrist. 2013;37:85–88.

- 2. Bennett JW, Glasziou PP. Computerised reminders and feedback in medication management: a systematic review of randomised controlled trials. Med J Australia. 2003;178:217–22.

- 3. Van Der Meulen M, Logie RH, Freer Y, Sykes C, McIntosh N, Hunter J. When a graph is poorer than 100 words: a comparison of computerised natural language generation, human generated descriptions and graphical displays in neonatal intensive care. Appl Cogn Psychol. 2010;24(1):77–89.

- 4. Hutchinson J, Alba JW, Eisenstein EM. Heuristics and biases in data-based decision making: effects of experience, training, and graphical data displays. J Market Res. 2010;47(4):627–42.

- 5. Harvey N. Learning judgment and decision making from feedback. In: Dhami M, Schlottmann A, Waldmann M, eds. Judgement and Decision Making as a Skill: Learning, Development and Evolution. Cambridge, UK: Cambridge University Press; 2013:199–226.

- 6. Dowding D, Randell R, Gardner P, et al. Dashboards for improving patient care: review of the literature. Int J Med Inform. 2015;84(2):87–100.

- 7. Gaissmaier W, Wegwarth O, Skopec D, Muller AS, Broschinski S, Politi MC. Numbers can be worth a thousand pictures: individual differences in understanding graphical and numerical representations of health-related information. Health Psychol. 2012;31(3):286–96.

- 8. Okan Y, Garcia-Retamero R, Cokely ET, Maldonado A. Individual differences in graph literacy: overcoming denominator neglect in risk comprehension. J Behav Decis Mak. 2012;25(4):390–401.

- 9. Ziemkiewicz C, Ottley A, Crouser R, Chauncey K, Su S, Chang R. Understanding visualization by understanding individual users. IEEE Comput Graphics Appl. 2012;32(6):88–94.

- 10. Dexheimer J, Borycki E. Use of mobile devices in the emergency department: a scoping review. Health Inform J. 2015;21(4):306–15.

- 11. Bang M, Solnevik K, Eriksson H, eds. The nurse watch: design and evaluation of a smart watch application with vital sign monitoring and checklist reminders. AMIA Ann Symp Proc. 2015;2015:314–19.

- 12. Mickan S, Atherton H, Roberts N, Heneghan C, Tilson J. Use of handheld computers in clinical practice: a systematic review. BMC Med Inform Decis Mak. 2014;14:56.

- 13. Okan Y, Galesic M, Garcia-Retamero R. How people with low and high graph literacy process health graphs: evidence from eye-tracking. J Behav Decis Mak. 2016;29(2–3):271–94.

- 14. Okan Y, Garcia-Retamero R, Galesic M, Cokely ET. When higher bars are not larger quantities: on individual differences in the use of spatial information in graph comprehension. Spatial Cogn Comput. 2012;12(2–3):195–218.

- 15. Lopez KD, Wilkie DJ, Yao YP, et al. Nurses' numeracy and graphical literacy: informing studies of clinical decision support interfaces. J Nurs Care Qual. 2016;31(2):124–30.

- 16. Lipkus IM, Samsa G, Rimer BK. General performance on a numeracy scale among highly educated samples. Med Decis Mak. 2001;21(1):37–44.

- 17. Garcia-Retamero R, Cokely ET. Communicating health risks with visual aids. Curr Direct Psychol Sci. 2013;22(5):392–99.

- 18. Garcia-Retamero R, Galesic M. How to reduce the effect of framing on messages about health. J Gen Int Med. 2010;25(12):1323–29.

- 19. Garcia-Retamero R, Galesic M, Gigerenzer G. Improving comprehension and communication of risks about health. Psicothema. 2011;23(4):599–605.

- 20. Garcia-Retamero R, Munoz R. How to improve comprehension of medical risks in older adults. Revista Latinoamericana De Psicol. 2013;45(2):253–64.

- 21. Rodriguez V, Andrade AD, Garcia-Retamero R, et al. Health literacy, numeracy, and graphical literacy among veterans in primary care and their effect on shared decision making and trust in physicians. J Health Commun. 2013;18:273–89.

- 22. Elting L, Martin C, Cantor S, Rubenstein E. Influence of data display formats on physician investigators' decisions to stop clinical trials: prospective trial with repeated measures. Brit Med J. 1999;318:1527–31.

- 23. Febretti A, Sousa VEC, Lopez KD, et al. One Size Doesn't Fit All: The Efficiency of Graphical, Numerical and Textual Clinical Decision Support for Nurses. In: Proceedings of the IEEE VIS Workshop on Visualization of Electronic Health Records, Paris, France. 2014. http://www.cs.umd.edu/hcil/parisehrvis/.

- 24. Centers for Medicare and Medicaid Services. Process-Based Quality Improvement Manual (PBQI). Baltimore, MD: Centers for Medicare and Medicaid Services; 2010.

- 25. Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. New Engl J Med. 2009;360(14):1418–28.

- 26. Fortinsky R, Madigan E, Sheehan T, Tullai-McGuinness S, Kleppinger A. Risk factors for hospitalization in a national sample of Medicare home health care patients. J Appl Gerontol. 2014;33(4):474–93.

- 27. Galesic M, Garcia-Retamero R. Graph literacy: a cross-cultural comparison. Med Decis Mak. 2011;31(3):444–57.

- 28. Kobuse H, Morishima T, Tanaka M, Murakami G, Hirose M, Imanaka Y. Visualizing variations in organizational safety culture across an inter-hospital multifaceted workforce. J Eval Clin Pract. 2014;20:273–80.

- 29. Ahern DK, Stinson LJ, Uebelacker LA, Wroblewski JP, McMurray JH, Eaton CB. E-health blood pressure control program. J Med Pract Manag. 2012;28(2):91–100.

- 30. Batley NJ, Osman HO, Kazzi AA, Musallam KM. Implementation of an emergency department computer system: design features that users value. J Emerg Med. 2011;41(6):693–700.

- 31. Koopman RJ, Kochendorfer KM, Moore JL, et al. A diabetes dashboard and physician efficiency and accuracy in accessing data needed for high-quality diabetes care. Ann Fam Med. 2011;9:398–405.

- 32. Zayfudim V, Dossett LA, Starmer JM, et al. Implementation of a real-time compliance dashboard to help reduce SICU ventilator-associated pneumonia with the ventilator bundle. Arch Surg. 2009;144(7):656–62.

- 33. Morgan MB, Branstetter BI, Lionetti DM, Richardson JS, Chang PJ. The radiology digital dashboard: effects on report turnaround time. J Digital Imag. 2008;21(1):50–58.

- 34. McMenamin J, Nicholson R, Leech K. Patient dashboard: the use of a colour-coded computerised clinical reminder in Whanganui regional general practices. J Primary Health Care. 2011;3(4):307–10.

- 35. Waitman LR, Phillips IE, McCoy AB, et al. Adopting real-time surveillance dashboards as a component of an enterprisewide medication safety strategy. Jt Comm J Qual Patient Safety. 2011;37:326–32.