Abstract

Introduction

A growing variety of diverse data sources is emerging to better inform health care delivery and health outcomes. We sought to evaluate the capacity for clinical, socioeconomic, and public health data sources to predict the need for various social service referrals among patients at a safety-net hospital.

Materials and Methods

We integrated patient clinical data and community-level data representing patients’ social determinants of health (SDH) obtained from multiple sources to build random forest decision models to predict the need for any, mental health, dietitian, social work, or other SDH service referrals. To assess whether SDH data improved performance, we built separate decision models for each outcome: one using both clinical and SDH data, and one using clinical data only.

Results

Decision models predicting the need for any, mental health, and dietitian referrals yielded sensitivity, specificity, and accuracy measures ranging between 60% and 75%. Specificity and accuracy scores for social work and other SDH services ranged between 67% and 77%, while sensitivity scores were between 50% and 63%. Area under the receiver operating characteristic curve values for the decision models ranged between 70% and 78%. Models for predicting the need for any services reported positive predictive values between 65% and 73%. Positive predictive values for predicting individual outcomes were below 40%.

Discussion

The need for various social service referrals can be predicted with considerable accuracy using a wide range of readily available clinical and community data that measure socioeconomic and public health conditions. While the use of SDH did not result in significant performance improvements, our approach represents a novel and important application of risk predictive modeling.

Keywords: social determinants of health, supervised machine learning, delivery of health care, integrated, wraparound social services

INTRODUCTION

An unprecedented and ever-increasing availability of diverse data sources has the potential to better inform health services delivery and health system performance.1 The widespread adoption of electronic health records (EHRs) has increased the volume of electronically captured clinical data.2 Additionally, the growing use of interoperable systems and health information exchange (HIE) promotes easier access to more actionable information across different systems.3 Moreover, a growing number of social determinants of health (SDH) datasets describing social, physical, and policy environments in communities can be integrated with clinical information to augment overall data utility.4 Not surprisingly, researchers, policy-makers, and practitioners find the prospect of data reflective of individual health and society-level determinants potentially transformative.5

Predictive modeling is one area of health care where emerging nonclinical data sources can be leveraged,6–9 and it commonly supports organizational planning, intervention allocation, risk adjustment, research, and health policy.9–11 Also, predictive models that include broader measures of patient SDH may help align precision medicine and population health approaches for improving health.12,13 While efforts to build predictive risk models using additional nonclinical data describing social and environmental contexts are gradually becoming more widespread,14–18 examples of such approaches tend to be limited to a minimal number of additional measures or data sources and are applied to a limited set of clinical outcomes. Further, the value of adding nonclinical (SDH) data has not been firmly established, with studies variously reporting significant or minimal performance improvements.19

Growing computing power, storage capacity, and data availability present the opportunity to develop, implement, and study predictive models using SDH data. We sought to evaluate the performance characteristics of predictive models that use combinations of clinical, socioeconomic, and public health data. Moreover, we sought to evaluate the contributions of these data to the novel outcome of referrals to social services that inherently address patients’ SDH (eg, dietetics, social work, mental health). A focus on referrals to such services is especially relevant, given that these services are intended to directly address the risk factors represented by many of the new nonclinical data sources,20 and health organizations are exploring risk prediction to better match patients to nonmedical services.21 We tested the performance of risk prediction models developed with and without additional community-level socioeconomic public health indicators in an adult primary care safety-net population.

MATERIALS AND METHODS

Patient sample

We identified a population of 84 317 adult patients (≥18 years old) who had at least one outpatient visit between 2011 and 2016 at Eskenazi Health, the public safety-net health system of Marion County in Indianapolis, Indiana, comprising a hospital and several federally qualified health center sites.

Data sources

The primary data sources for the patient cohort were Eskenazi Health’s EHR22 and the local HIE, known as the Indiana Network for Patient Care (INPC).23,24 Both structured and unstructured clinical data were derived from the EHR, and the INPC provided out-of-network encounter data from health care facilities across the state. To these we added local area socioeconomic indicator data largely derived from the US Census Bureau and public health indicators from the Marion County Public Health Department’s vital statistics system and community health surveys. Data from US Census Bureau indicators were measured at the census tract level, while the public health indicators were measured at the zip code level.

Outcome measures: referrals to social determinants of health services

During the study period, Eskenazi Health offered multiple social services designed to address patients’ SDH. These services were co-located within federally qualified health centers with primary care clinics. For the current study, we developed models predicting the need for referral to any social service (the union of all services) and for referral to each individual SDH service. SDH services included mental health services, dietitian counseling, social work services, and all other social services (including respiratory therapy, financial planning, medical-legal partnership assistance, patient navigation, and pharmacist consultation). Any service delivered to <5% of the cohort yielded insufficient individual power and was therefore included in the aggregate “other” category. Using the Eskenazi EHR billing and encounter data, scheduling system data (including kept, missed, and cancelled appointments), and unstructured EHR orders and notes, we identified the specific SDH services for which patients were referred during the 4-year study period. We assumed that patients receiving a particular SDH service had been referred for that service, even if no referral record was found.

Indicators: preprocessing and selection

From the EHR and INPC data we abstracted patient diagnoses (International Classification of Diseases, Ninth and Tenth Revision codes), patient demographics (age, race/ethnicity, and gender), and counts of health care encounters. The dataset covered all health care visits captured by the INPC. For decision modeling purposes, we processed the data as follows:

Diagnoses were reduced to binary indicators (present, absent) for the 20 most common chronic conditions25 and tobacco use.26 We also calculated Charlson comorbidity index scores for each patient27 using diagnosis codes.

Race and ethnicity were coded as a series of mutually exclusive binary indicators for Hispanic, African American, white (non-Hispanic), other, and unknown. Gender was expressed as a binary indicator. Patient age was determined at the study period midpoint and included as an integer variable.

Encounter frequency was reported as counts stratified by outpatient visits, emergency department encounters, and inpatient admissions.

The above approach to structuring clinical diagnosis, demographic, and encounter data yielded 41 features, which comprised our clinical data vector.

A total of 48 socioeconomic and public health indicators were selected to represent economic stability, neighborhood and physical environment, education, food, community and social context, and health care system–based categories included in the Kaiser Family Foundation framework for social determinants of health28 (see Supplementary Appendix A). Because the distributions of these features varied widely, we discretized each feature into bins, with the number of bins determined by Sturges’ rule.29 The master data vector comprises both the clinical data vector and the aforementioned social determinants.
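The binning step can be illustrated with a minimal sketch. Sturges’ rule suggests k = ceil(log2(n)) + 1 bins for n observations. The equal-width binning, the function names, and the income values below are assumptions made for illustration; the study does not state which binning scheme or which value of n was used.

```python
import math


def sturges_bin_count(n_observations: int) -> int:
    """Number of bins suggested by Sturges' rule: ceil(log2(n)) + 1."""
    if n_observations < 1:
        raise ValueError("need at least one observation")
    return math.ceil(math.log2(n_observations)) + 1


def equal_width_bin(values, n_bins):
    """Map each value to an equal-width bin index in [0, n_bins - 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: everything falls in the first bin.
        return [0 for _ in values]
    width = (hi - lo) / n_bins
    # The maximum value is clamped into the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


# Hypothetical area-level indicator (median income, in $1000s); not study data.
median_income = [21.5, 34.0, 28.2, 55.1, 47.3, 39.9, 62.0, 25.4]
k = sturges_bin_count(len(median_income))
binned = equal_width_bin(median_income, k)
print(k, binned)
```

Discretizing in this way places indicators measured on very different scales onto a common ordinal scale before they are combined with the clinical features.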

Decision models

We split the patient population of each data vector into 2 randomly selected groups: 90% of the patient population (training data) and 10% of the patient population (test data). Low prevalence of any outcome can produce an imbalanced dataset, and thus negatively impact decision model performance.30 To overcome this, we oversampled the 90% training dataset using the Synthetic Minority Over-sampling Technique.31
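The split-then-oversample order described above can be sketched as follows. This is a simplified, standard-library illustration of the SMOTE idea (interpolating between a minority-class record and one of its nearest minority-class neighbours), not the implementation used in the study; `split_90_10`, `smote_sketch`, the neighbour count `k=5`, and the toy records are all hypothetical.

```python
import random


def split_90_10(records, seed=0):
    """Shuffle the records and split them into 90% training and 10% test."""
    rng = random.Random(seed)
    shuffled = records[:]
    rng.shuffle(shuffled)
    cut = int(round(0.9 * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


def smote_sketch(minority, n_synthetic, k=5, seed=0):
    """Create synthetic minority points by interpolating toward a near neighbour."""
    if len(minority) < 2:
        raise ValueError("need at least two minority records")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        # k nearest minority neighbours of the base point (excluding itself).
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic


# Toy data: 100 records with 2 features each; the first 10 are positives.
rng = random.Random(42)
records = [([rng.random(), rng.random()], 1 if i < 10 else 0) for i in range(100)]

train, test = split_90_10(records)
train_minority = [x for x, y in train if y == 1]
synthetic = smote_sketch(train_minority, n_synthetic=20)
train_balanced = train + [(x, 1) for x in synthetic]
print(len(train), len(test), len(train_balanced))
```

Oversampling only the training portion keeps the held-out 10% at its natural prevalence, so test-set metrics are not inflated by synthetic records.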

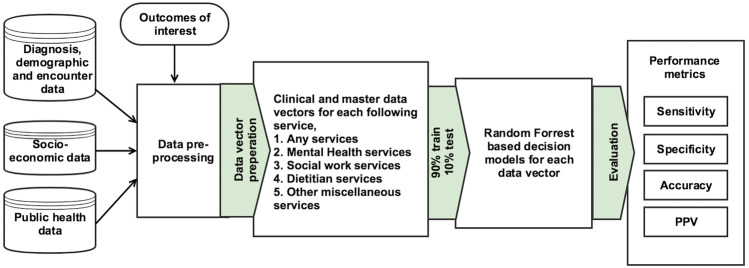

Each training dataset was used to build a decision model using the random forest classification algorithm.32 Random forest was selected due to its proven track record in health care decision-making applications, together with its ability to perform internal feature selection.33 We configured a total of 10 decision models for 10 different datasets (2 data vectors × 5 outcomes of interest). All data cleaning, decision model development, and testing were performed using Python and scikit-learn software.34 Figure 1 presents a flow diagram depicting our study approach.

Figure 1.

The complete study approach, from data collection and decision model building to evaluation of results.

Analysis

We assessed the performance of each decision model using the 10% test dataset. For each outcome under test, we used a paired sample t-test to compare the performance of decision models built using the clinical and master data vectors.35 For each record in the 10% test dataset, each decision model produced a predicted outcome (needs referral/does not need referral) together with a predicted probability score. We used the Youden index36 to identify the probability threshold that yielded optimal sensitivity and specificity for each decision model. At that threshold, we calculated sensitivity, specificity, accuracy, and positive predictive value (PPV), along with the area under the receiver operating characteristic (ROC) curve, for each of the models, together with 95% confidence intervals (CIs).
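A minimal sketch of threshold selection with the Youden index (J = sensitivity + specificity − 1) is given below. It scans every observed probability score as a candidate threshold and reports the metrics at the threshold that maximizes J. The function names and the ten labels and scores are hypothetical and serve only to illustrate the calculation.

```python
def confusion_counts(y_true, scores, threshold):
    """Count TP, FP, TN, FN when scores >= threshold are called positive."""
    tp = fp = tn = fn = 0
    for y, s in zip(y_true, scores):
        predicted = 1 if s >= threshold else 0
        if predicted == 1 and y == 1:
            tp += 1
        elif predicted == 1 and y == 0:
            fp += 1
        elif predicted == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def youden_optimal(y_true, scores):
    """Return metrics at the threshold maximizing sensitivity + specificity - 1.

    Assumes both classes are present in y_true.
    """
    best = None
    for threshold in sorted(set(scores)):
        tp, fp, tn, fn = confusion_counts(y_true, scores, threshold)
        sensitivity = tp / (tp + fn)
        specificity = tn / (tn + fp)
        j = sensitivity + specificity - 1
        if best is None or j > best["youden_j"]:
            best = {
                "threshold": threshold,
                "youden_j": j,
                "sensitivity": sensitivity,
                "specificity": specificity,
                "accuracy": (tp + tn) / (tp + fp + tn + fn),
                "ppv": tp / (tp + fp),
            }
    return best


# Hypothetical test-set labels (1 = needs referral) and predicted probabilities.
y_true = [0, 0, 0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.1, 0.2, 0.3, 0.35, 0.4, 0.45, 0.6, 0.7, 0.75, 0.9]
result = youden_optimal(y_true, scores)
print(result)
```

Because the Youden index weights sensitivity and specificity equally, the selected threshold need not maximize PPV, which also depends on outcome prevalence.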

RESULTS

The patients included in the study (see Table 1) reflected an adult, urban, primary care safety-net population: predominantly female (64.9%), ethnically diverse (only 1 out of 4 patients was white, non-Hispanic), and with high chronic disease burdens. As expected, patients who had referrals for any social service presented a greater illness burden than those who did not.

Table 1.

Characteristics of the adult primary care patient sample included in risk predictive modeling

| Characteristic of interest | Prevalence across all patients (%) | Prevalence across patients with need for any social services (%) | Prevalence across patients without need for any social services (%) |
|---|---|---|---|
| Demographics | | | |
| Age (mean, SD) | 43.9 (15.6) | 44.9 (14.8) | 42.7 (16.4) |
| Male gender | 35.1 | 32.2 | 38.4 |
| Race/ethnicity | | | |
| White, non-Hispanic | 25.2 | 27.4 | 22.8 |
| African American, non-Hispanic | 37.2 | 39.2 | 35 |
| Hispanic | 19.5 | 19.7 | 19.2 |
| Other | 18.1 | 13.7 | 23 |
| Diagnosis | | | |
| Hypertension | 38.7 | 48.2 | 27.9 |
| Congestive heart failure | 4.5 | 5.8 | 3.1 |
| Coronary artery disease | 6.6 | 8.2 | 4.7 |
| Cardiac arrhythmias | 7.5 | 9.1 | 5.7 |
| Hyperlipidemia | 18.6 | 24.3 | 12.1 |
| Stroke | 3.6 | 4.5 | 2.6 |
| Arthritis | 9.5 | 12.2 | 6.4 |
| Asthma | 7.9 | 10.6 | 4.7 |
| Cancer | 7.6 | 8.7 | 6.3 |
| Chronic obstructive pulmonary disease | 9.5 | 12.8 | 5.8 |
| Depression | 19 | 27.3 | 9.8 |
| Diabetes | 20.3 | 28.6 | 10.9 |
| Hepatitis | 3.8 | 4.7 | 2.7 |
| HIV | 0.8 | 0.8 | 0.9 |
| Osteoporosis | 1.7 | 1.8 | 1.4 |
| Schizophrenia | 3.1 | 3.9 | 2.1 |
| Substance abuse | 15.1 | 18.7 | 11 |
| External injury | 17.3 | 20.4 | 13.9 |
| Charlson index score (mean, SD) | 0.8 (1.3) | 1 (1.3) | 0.5 (1.0) |
| Tobacco use | 21.3 | 26.4 | 15.6 |
| Hospitalizations (mean, SD) | 1.4 (5.1) | 1.4 (6.1) | 0.9 (2.7) |
| Emergency department visits (mean, SD) | 8.0 (20.8) | 8.4 (23.4) | 5.2 (14) |
| Outpatient visits (mean, SD) | 28.2 (28.7) | 30.9 (30.6) | 17.1 (23.8) |

SD, standard deviation.

The majority of patients (53%) were referred to at least one social service. The most commonly referred service was dietitian (33%), followed by mental health services (19%) and social work (9%). Approximately 1 in 5 patients was referred to at least one of the remaining low-prevalence services, which included respiratory therapy, financial planning, medical-legal partnership assistance, patient navigation, and pharmacist consultation (Table 2).

Table 2.

Distribution of need for social services across overall patient population and across patients with a need for any social services

| Need for social services | Prevalence across all patients (%) | Prevalence across patients with need for any social services (%) |
|---|---|---|
| Any services | 53.04 | 100 |
| Mental health services | 18.51 | 34.9 |
| Social work services | 8.69 | 16.39 |
| Dietitian services | 32.57 | 61.42 |
| Other miscellaneous services | 20.01 | 19.3 |

Table 3.

Area under the ROC curve values for each decision model

| Outcome | Clinical data vector model | Master data vector model |
|---|---|---|
| Any referrals | 0.7454 | 0.741 |
| Mental health referrals | 0.7849 | 0.7776 |
| Social work referrals | 0.7311 | 0.7136 |
| Dietitian referrals | 0.7426 | 0.7302 |
| Other social determinants of health service referrals | 0.7106 | 0.7082 |

Using the aforementioned Synthetic Minority Over-sampling Technique, we increased the rate of social work referrals in the training dataset from 9% to 20% prevalence to address data imbalance for decision model building.
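As a worked illustration of this step, the number of synthetic minority records s needed to reach a target prevalence p follows from solving (m + s) / (m + s + M) = p, where m and M are the minority and majority counts, giving s = pM / (1 − p) − m. The sketch below applies this to a hypothetical training set of 10 000 records at 9% prevalence; the class counts of the actual training data are not reported here, so these numbers are assumptions.

```python
import math
from fractions import Fraction


def synthetic_needed(n_minority, n_majority, target_prevalence):
    """Synthetic minority records required to reach the target prevalence.

    Solves (m + s) / (m + s + M) = p for s, rounding up to a whole record.
    """
    p = Fraction(str(target_prevalence))  # exact arithmetic, e.g. "0.2" -> 1/5
    if not 0 < p < 1:
        raise ValueError("target prevalence must be strictly between 0 and 1")
    needed = p * n_majority / (1 - p) - n_minority
    return max(0, math.ceil(needed))


# Hypothetical training set: 10 000 records, 9% with the outcome.
n_minority, n_majority = 900, 9100
extra = synthetic_needed(n_minority, n_majority, 0.20)
new_prevalence = (n_minority + extra) / (n_minority + extra + n_majority)
print(extra, round(new_prevalence, 3))
```

Under these assumed counts, more synthetic positives are added than there were original positives, which illustrates how much of the oversampled minority class can be interpolated rather than observed.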

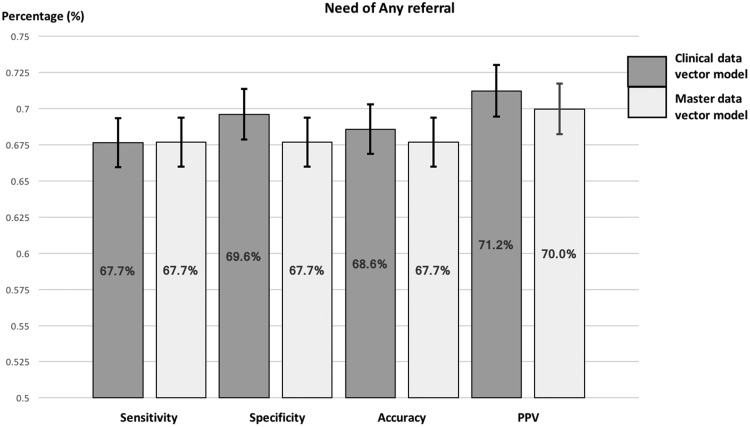

Need for any referrals

As presented in Figure 2 and the results table (Supplementary Appendix B), the decision model built using the clinical data vector demonstrated useful discriminating power with an area under the curve (AUC) value of 0.745. This model had a sensitivity of 67.6%, specificity of 69.6%, accuracy of 68.6%, and PPV of 71.2%. In comparison, the decision model built using the master data vector, which included socioeconomic and public health features, reported a sensitivity of 67.7%, specificity of 67.7%, accuracy of 67.7%, PPV of 70.0%, and an AUC value of 0.741. These measures did not differ significantly from those produced by the clinical data vector model (P > 0.05), as evidenced by the overlapping 95% CIs.

Figure 2.

Sensitivity, specificity, accuracy, and PPV values of decision models to predict the need for any referrals using the clinical and master data vectors, together with the 95% confidence interval for each performance metric.

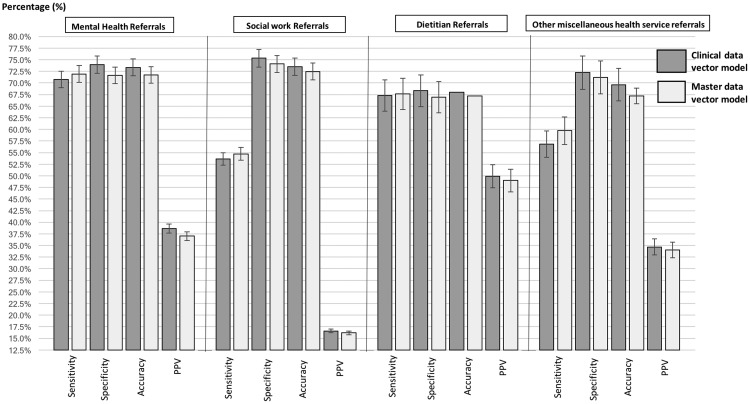

Figure 3.

Sensitivity, specificity, accuracy, and PPV values of decision models to predict the need for mental health, social work, dietitian, and other SDH service referrals using the clinical and master data vectors, together with the 95% confidence interval for each performance metric.

Need for other types of referrals

As presented in Figure 3 and the results table (Supplementary Appendix B), the decision model built using the clinical data vector predicted the need for mental health referrals with an AUC of 0.785. This model reported a sensitivity of 70.7%, specificity of 74.0%, and accuracy of 73.4%. In comparison, the master data vector model reported an AUC of 0.778, sensitivity of 71.9%, specificity of 71.7%, and accuracy of 71.7%. However, these results were not significantly different. Both models produced comparatively low, although statistically similar, PPV measures: 38.6% using clinical data only and 37.0% using the master data vector.

In predicting social work referrals, the clinical data vector model demonstrated a sensitivity of 53.6%, specificity of 75.3%, accuracy of 73.5%, and PPV of 16.6%. In comparison, the master data vector model reported a sensitivity of 53.6%, specificity of 74.1%, accuracy of 72.5%, and PPV of 16.6%. The clinical data model reported an AUC value of 0.731, while the master data model reported an AUC value of 0.713. None of these results were significantly different. However, we note that the sensitivity and PPV values reported by both models were considerably smaller than those of the other models. Additionally, both sensitivity measures reported large 95% CIs.

The clinical data vector model for predicting a need for dietitian referrals reported a sensitivity of 67.3%, specificity of 68.3%, accuracy of 67.9%, PPV of 49.9%, and an AUC of 0.743, while the decision model built using the master data vector reported a sensitivity of 67.3%, specificity of 66.9%, accuracy of 67.2%, PPV of 49%, and an AUC of 0.73. As evidenced by the overlapping 95% CIs, there was no statistically significant difference between the 2 models on any of the performance metrics.

The clinical data vector model for predicting other miscellaneous health service referrals demonstrated useful explanatory power, with an AUC value of 0.711. This model had a sensitivity of 56.8%, specificity of 72.2%, accuracy of 69.6%, and PPV of 34.7%. In comparison, the master data vector model reported an AUC value of 0.708, sensitivity of 59.7%, specificity of 71.2%, accuracy of 68.9%, and PPV of 34.1%. Again, none of these measures presented a significant difference between the 2 models.

DISCUSSION

This study indicates that it is feasible to predict a need for various social services with considerable sensitivity, specificity, and accuracy using a range of readily available clinical and community data, including socioeconomic and public health data. Thus, our results suggest reasons to further integrate existing social service information into the health care delivery process.

First, our prediction models perform within the range of useful discriminatory ability.37 The estimated AUC ranged from 70% to 78%. While performance can be improved, our findings are consistent with the global performance of prediction models in the areas of mortality,38 hospital readmissions,39 and individual disease development.40 However, PPV scores were weaker for several outcomes. While decision models predicting a need for any service exhibited PPV values greater than 65%, similar models predicting a need for individual services yielded PPVs below 40%. We attribute smaller PPV values to the low rate of some service referrals. Because PPV evaluates the probability that a patient predicted to need a service truly needs the service, risk prediction is more suitable for more prevalent outcomes of interest.
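The dependence of PPV on prevalence follows from Bayes’ rule: PPV = (sensitivity × prevalence) / (sensitivity × prevalence + (1 − specificity) × (1 − prevalence)). The sketch below plugs in the sensitivity and specificity reported above for the clinical data vector models, together with the overall cohort prevalences from Table 2. Treating the cohort prevalence as the test-set prevalence is an assumption, so the outputs only approximate the reported PPVs (71.2% for any referrals, 16.6% for social work).

```python
def ppv_from_prevalence(sensitivity, specificity, prevalence):
    """Positive predictive value implied by Bayes' rule."""
    true_positive_rate = sensitivity * prevalence
    false_positive_rate = (1 - specificity) * (1 - prevalence)
    return true_positive_rate / (true_positive_rate + false_positive_rate)


# Clinical data vector models; prevalence taken from the overall cohort (assumption).
ppv_any = ppv_from_prevalence(0.676, 0.696, 0.5304)           # any referrals
ppv_social_work = ppv_from_prevalence(0.536, 0.753, 0.0869)   # social work referrals

print(f"any referrals: {ppv_any:.3f}")
print(f"social work:   {ppv_social_work:.3f}")
```

With broadly similar specificity, moving from a prevalence of roughly 53% to roughly 9% lowers the implied PPV from about 0.72 to about 0.17, which is consistent with attributing the low PPVs to low referral rates.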

Moreover, the addition of socioeconomic and public health data did not lead to statistically significant performance improvement in any of our predictive models. While one may hypothesize that additional social determinants data can augment predictive models, the literature has been mixed,19 and this study does not help to resolve that ambiguity. The lack of significant model performance improvement when adding socioeconomic and public health data may be due to the study population, which reflects patients seeking care within a relatively constrained safety-net geography. The similar socioeconomic and public health contexts may have limited the overall discriminatory power of these factors in our sample. A larger, more granular, and more socioeconomically diverse sample would present greater variance, and thus may lead to different findings.

Regardless of the contribution of socioeconomic and public health data, this study represents a novel and important application of predictive risk modeling. Predicting the need for social services referrals is responsive to recent calls for analytics that better match patients to services based on need, and also to match patients to services that address the upstream determinants of health.12,41 More importantly, services such as social work, mental health, dietitian counseling, medical-legal partnerships, and others are of growing importance to health care organizations that, under changing reimbursement policies, are incentivized to prevent illness and promote health. The services delivered by these professionals directly address the determinants of health and support prevention activities.42,43 Because physicians are not trained to provide these services,44 patient receipt of the services depends on referrals to partner organizations or other care team members.45 Accurate stratification by risk is critical for the efficient and effective delivery of such services.46

For health care organizations considering predictive modeling as a method to support patient segmentation and service matching, these findings provide some caveats and practical advice. Although structured data such as patient diagnoses, demographics, and encounter data were critical to the prediction models, we obtained data on actual referrals for social determinants of health services from unstructured sources. Consequently, implementing such analytics may require new data-collection processes. Also, even with complete data, referrals must be sufficiently prevalent to support effective modeling. Further, while incorporating socioeconomic and public health data into predictive risk modeling is feasible, more study is needed to elucidate the specific risks identified by these new data sources and to understand the implications for health care intervention. Lastly, we inferred referrals from the presence of provider-entered orders or patient encounters for the service of interest. Thus, our study did not examine the effectiveness of risk prediction models against a more formal gold standard. The effectiveness of these models and the utility of their prediction accuracy will likely depend on the role of the user (eg, clinician, administrator, or program manager).

We identified several avenues for future research. In the current study, we adopted a binary (present/absent) outcome flag to train each decision model. We hypothesize that increasing the granularity of the feature vector by tabulating counts of referral types for each patient will enrich outcome measures, and thereby increase model performance. Missed appointments have also demonstrated an adverse impact on patient outcomes, and thus warrant explicit inclusion in revised versions of our models.47,48 Finally, future research should consider time duration between initiating a referral and experiencing an encounter event such as a documented visit or a missed appointment.

Limitations

We note several limitations of this study. As presented in Tables 1 and 2 and Supplementary Appendix A, socioeconomic and public health indicators of the study population were consistent with an urban, disadvantaged safety-net population. Patients resided in areas with lower-than-average life expectancies and incomes and a higher proportion of poverty. Additionally, the prevalence of tobacco use (24.6%) was higher than the national average. As a result, our models may not generalize to other commercially insured or broader populations. Due to the unavailability of a full record review of all patients who should have received a referral, our study adopted provider referrals as a gold standard. Doing so may have impacted the accuracy of our results. Also, the specific social determinants of health and HIE data sources may not be available in other settings. While we leveraged structured clinical data and socioeconomic indicators from the US Census Bureau, we also relied on unstructured clinical data to measure the need for services designed to address social determinants of health, including HIE data and local public health surveys, which may not have direct parallels in other locations.

Our approach used only population-level SDH factors. Patient-level SDH factors, while difficult to obtain, can be extracted from existing EHR data.49 Including such factors may have improved model performance. Furthermore, model performance was related to the prevalence of service referrals. Settings with different referral rates, or those that do not offer social determinants of health services as co-located services with primary care, may have different results. Additionally, we considered all patient data recorded during the 5-year period under study, and not necessarily only data on events that occurred prior to the referrals of interest. Failing to consider temporal relationships between diagnosis and services may have negatively impacted decision model performance. Lastly, our patient data were not exhaustive; we may have failed to obtain data that were not part of the INPC or were not available in structured format.

CONCLUSION

Advances in interoperability, health information infrastructure, and curation of various sources of data have paved the way to leveraging a broad range of clinical and social determinants of health for informed decision-making for health care. Our results indicate the potential to predict the need for various social services with considerable accuracy and represent a model for reimplementation across other datasets, health outcomes, and patient populations.

Funding

This research was supported by the Robert Wood Johnson Foundation through the Systems for Action National Coordinating Center, ID 73485.

Competing interests

The authors declare no competing interests.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.


ACKNOWLEDGMENTS

We thank the Indiana University Polis Center, Indianapolis, for providing community-level social determinants data used in this study, and the Regenstrief Data Core for its role in obtaining clinical data. We also thank Eskenazi Health of Indianapolis for its support in completing this study.

References

- 1. Roski J, Bo-Linn GW, Andrews TA. Creating value in health care through big data: opportunities and policy implications. Health Affairs. 2014;33(7):1115–22.

- 2. Charles D, Gabriel M, Searcy T. Adoption of Electronic Health Record Systems among US Non-Federal Acute Care Hospitals: 2008–2014. Washington, DC: Office of the National Coordinator for Health Information Technology; 2016.

- 3. Furukawa MF, Patel V, Charles D, Swain M, Mostashari F. Hospital electronic health information exchange grew substantially in 2008–12. Health Affairs. 2013;32(8):1346–54.

- 4. Comer KF, Grannis S, Dixon BE, Bodenhamer DJ, Wiehe SE. Incorporating geospatial capacity within clinical data systems to address social determinants of health. Public Health Rep. 2011;126(3 Suppl):54–61.

- 5. Murdoch TB, Detsky AS. The inevitable application of big data to health care. JAMA. 2013;309(13):1351–52.

- 6. Schneeweiss S. Learning from big health care data. New Engl J Med. 2014;370(23):2161–63.

- 7. Bates DW, Saria S, Ohno-Machado L, Shah A, Escobar G. Big data in health care: using analytics to identify and manage high-risk and high-cost patients. Health Affairs. 2014;33(7):1123–31.

- 8. Krumholz HM. Big data and new knowledge in medicine: the thinking, training, and tools needed for a learning health system. Health Affairs. 2014;33(7):1163–70.

- 9. Kansagara D, Englander H, Salanitro A, et al. Risk prediction models for hospital readmission: a systematic review. JAMA. 2011;306(15):1688–98.

- 10. Cousins MS, Shickle LM, Bander JA. An introduction to predictive modeling for disease management risk stratification. Dis Manage. 2002;5:157–67.

- 11. Vuik SI, Mayer EK, Darzi A. Patient segmentation analysis offers significant benefits for integrated care and support. Health Affairs. 2016;35:769–75.

- 12. Vest JR, Harle CA, Schleyer T, et al. Getting from here to there: health IT needs for population health. Am J Managed Care. 2016;22(12):827–29.

- 13. Khoury MJ, Galea S. Will precision medicine improve population health? JAMA. 2016;316(13):1357–58.

- 14. Corrigan JD, Bogner J, Pretz C, et al. Use of neighborhood characteristics to improve prediction of psychosocial outcomes: a traumatic brain injury model systems investigation. Arch Phys Med Rehabil. 2012;93(8):1350–8.e2.

- 15. Baldwin L-M, Klabunde CN, Green P, Barlow W, Wright G. In search of the perfect comorbidity measure for use with administrative claims data: does it exist? Med Care. 2006;44(8):745–53.

- 16. Zolfaghar K, Meadem N, Teredesai A, Roy SB, Chin S-C, Muckian B, ed. Big data solutions for predicting risk-of-readmission for congestive heart failure patients. 2013 IEEE International Conference on Big Data. 2013.

- 17. Fiscella K, Tancredi D. Socioeconomic status and coronary heart disease risk prediction. JAMA. 2008;300(22):2666–68.

- 18. Khalilia M, Chakraborty S, Popescu M. Predicting disease risks from highly imbalanced data using random forest. BMC Med Inform Dec Mak. 2011;11(1):51.

- 19. Franks P, Tancredi DJ, Winters P, Fiscella K. Including socioeconomic status in coronary heart disease risk estimation. Ann Fam Med. 2010;8(5):447–53.

- 20. Institute of Medicine, Board on Population Health and Public Health Practice, Committee on the Recommended Social and Behavioral Domains and Measures for Electronic Health Records. Capturing Social and Behavioral Domains in Electronic Health Records, Phase 1. Washington, DC: Institute of Medicine; 2014.

- 21. Haas LR, Takahashi PY, Shah ND, et al. Risk-stratification methods for identifying patients for care coordination. Am J Managed Care. 2013;19(9):725–32.

- 22. McDonald CJ, Tierney WM, Overhage JM, Martin D, Wilson G. The Regenstrief Medical Record System: 20 years of experience in hospitals, clinics, and neighborhood health centers. MD Comput. 1991;9(4):206–17.

- 23. McDonald CJ, Overhage JM, Barnes M, et al. The Indiana network for patient care: a working local health information infrastructure. Health Affairs. 2005;24(5):1214–20.

- 24. Overhage JM. The Indiana health information exchange. In: Dixon BE, ed. Health Information Exchange: Navigating and Managing a Network of Health Information Systems. 1st ed. Waltham, MA: Academic Press; 2016: 267–79.

- 25. Goodman RA, Posner SF, Huang ES, Parekh AK, Koh HK. Peer reviewed: defining and measuring chronic conditions: imperatives for research, policy, program, and practice. Prev Chronic Dis. 2013;10.

- 26. Wiley LK, Shah A, Xu H, Bush WS. ICD-9 tobacco use codes are effective identifiers of smoking status. J Am Med Inform Assoc. 2013;20(4):652–58.

- 27. Charlson ME, Charlson RE, Peterson JC, Marinopoulos SS, Briggs WM, Hollenberg JP. The Charlson comorbidity index is adapted to predict costs of chronic disease in primary care patients. J Clin Epidemiol. 2008;61(12):1234–40.

- 28. Heiman HJ, Artiga S. Beyond health care: the role of social determinants in promoting health and health equity. Health. 2015;2010.

- 29. Scott DW. Sturges’ rule. Wiley Interdiscip Rev Comput Stat. 2009;1(3):303–06.

- 30. Longadge R, Dongre S. Class imbalance problem in data mining review. arXiv preprint arXiv:1305.1707. 2013.

- 31. Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP. SMOTE: synthetic minority over-sampling technique. J Artif Intell Res. 2002;16:321–57.

- 32. Breiman L. Bagging predictors. Mach Learn. 1996;24(2):123–40.

- 33. Liaw A, Wiener M. Classification and regression by randomForest. R News. 2002;2(3):18–22.

- 34. Pedregosa F, Varoquaux G, Gramfort A, et al. Scikit-learn: machine learning in Python. J Mach Learn Res. 2011;12:2825–30.

- 35. Haynes W. Student’s t-test. In: Encyclopedia of Systems Biology. New York: Springer; 2013: 2023–25.

- 36. Youden WJ. Index for rating diagnostic tests. Cancer. 1950;3(1):32–35.

- 37. Swets JA. Measuring the accuracy of diagnostic systems. Science. 1988;240(4857):1285.

- 38. Alba AC, Agoritsas T, Jankowski M, et al. Risk prediction models for mortality in ambulatory heart failure patients: a systematic review. Circ Heart Fail. 2013;6(5):881–89.

- 39. Kansagara D, Englander H, Salanitro A, et al. Risk prediction models for hospital readmission: a systematic review. JAMA. 2011;306(15):1688–98.

- 40. Echouffo-Tcheugui JB, Batty GD, Kivimäki M, Kengne AP. Risk models to predict hypertension: a systematic review. PLoS ONE. 2013;8(7):e67370.

- 41. Powers BW, Navathe AS, Aung K-K, Jain SH, ed. Patients as customers: applying service industry lessons to health care. Healthcare. Elsevier; 2013.

- 42. Stanhope V, Videka L, Thorning H, McKay M. Moving toward integrated health: an opportunity for social work. Soc Work Health Care. 2015;545:383–407. [DOI] [PubMed] [Google Scholar]

- 43. Sandel M, Hansen M, Kahn R, et al. Medical-legal partnerships: transforming primary care by addressing the legal needs of vulnerable populations. Health Affairs. 2010;299:1697–705. [DOI] [PubMed] [Google Scholar]

- 44. Loeb DF, Binswanger IA, Candrian C, Bayliss EA. Primary care physician insights into a typology of the complex patient in primary care. Ann Fam Med. 2015;135:451–55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45. Jackson GL, Powers BJ, Chatterjee R, et al. The patient-centered medical home: a systematic review. Ann Int Med. 2013;1583:169–78. [DOI] [PubMed] [Google Scholar]

- 46. Hewner S, Casucci S, Sullivan S, et al. Integrating social determinants of health into primary care clinical and informational workflow during care transitions. eGEMS. 2017;52:2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47. Nguyen DL, DeJesus RS, Wieland ML. Missed appointments in resident continuity clinic: patient characteristics and health care outcomes. J Grad Med Educ. 2011;33:350–55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. Horberg MA, Hurley LB, Silverberg MJ, Klein DB, Quesenberry CP, Mugavero MJ. Missed office visits and risk of mortality among HIV-infected subjects in a large healthcare system in the United States. AIDS Patient Care STDs. 2013;278:442–49. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49. Hollister BM, Restrepo NA, Farber-Eger E, Crawford DC, Aldrich MC, Non A. Development and performance of text-mining algorithms to extract socioeconomic status from de-identified electronic health records. Pac Symp Biocomput. 2017;22:230–41. [DOI] [PMC free article] [PubMed] [Google Scholar]
