Abstract

Background

Drug prescription errors are made worldwide on a daily basis, resulting in a high burden of morbidity and mortality. Existing rule-based systems for preventing such errors have had limited success and are associated with a substantial burden of false alerts.

Objective

In this prospective study, we evaluated the accuracy, validity, and clinical usefulness of medication error alerts generated by a novel system using outlier detection screening algorithms, used on top of a legacy standard system, in a real-life inpatient setting.

Materials and Methods

We integrated a novel outlier system into an existing electronic medical record system in a single medical ward in a tertiary medical center. The system monitored all drug prescriptions written over 16 months. The department’s staff assessed all alerts for accuracy, clinical validity, and usefulness. We recorded all physicians’ real-time responses to the alerts generated.

Results

The alert burden generated by the system was low, with alerts generated for 0.4% of all medication orders. Sixty percent of the alerts were flagged after the medication had already been dispensed, following changes in patient status that necessitated medication changes (eg, changes in vital signs). Eighty-five percent of the alerts were confirmed as clinically valid, and 80% were considered clinically useful. Forty-three percent of the alerts caused changes in subsequent medical orders.

Conclusion

A clinical decision support system that used a probabilistic, machine-learning approach based on statistically derived outliers to detect medication errors generated clinically useful alerts. The system had high accuracy, low alert burden and low false-positive rate, and led to changes in subsequent orders.

Keywords: drug prescription errors, adverse drug events, outlier system, drug safety, patient safety, in-patient setting

INTRODUCTION

Preventable prescription errors and adverse drug events (ADEs) are estimated to account for 1 out of 131 outpatient deaths and 1 out of 854 inpatient deaths in the US,1 with a direct cost of more than $20 billion and a liability cost of more than $13 billion.2–4 It is recognized that while prescription errors and ADEs are ultimately caused by errors made by individuals, they also represent failures in computerized health information systems.5

Current approaches to minimize such errors include various clinical decision support (CDS) alerting systems, but they often identify only a small fraction of the errors and suffer from a high incidence of false alerts, resulting in “alert fatigue” and inevitably disrupting workflows.6–8 In addition, being based on a predetermined database and rules, such CDS systems (CDSS) inherently miss error types that have not been anticipated or programmed into the decision support software rules.9 Moreover, rule-based CDSS screen only the prescribing event itself and have no further effect on the ongoing treatment of patients. There is therefore a need for systems that can actively monitor and identify emerging ADEs throughout the hospitalization to enable early intervention and reduce harm.10 The dynamic nature of patient status during hospitalization, due to either clinical improvement or deterioration, calls for more dynamic CDSS to better ensure patient safety.

MedAware (Raanana, Israel) is a commercial software screening system developed for identification and prevention of prescription errors. It uses machine-learning algorithms to identify and intercept potential medication prescription errors. In a previous study performed on retrospective clinical data extracted from an electronic health system, the system generated alerts that might otherwise be missed with existing CDS systems. The majority (75%) of these alerts were found to be clinically useful.11

In this study, we evaluated the system’s performance in a real-life setting, identifying medication errors and ADEs in a 38-bed inpatient department of internal medicine in a tertiary medical center. The system operated on top of a legacy standard CDSS.12 The metrics measured were: 1) alert burden, 2) alert accuracy and clinical relevance, and 3) physicians’ responses to the alerts.

MATERIALS AND METHODS

The computerized decision support system

The CDSS evaluated in this study uses machine-learning algorithms to identify and intercept potential medication prescription errors in real time. After analyzing historical electronic medical records, the system automatically generates, for each medication, a computational model that captures the population of patients who are most likely to be prescribed that medication and the clinical environment and temporal circumstances in which it is likely to be prescribed. This model can then be used to identify prescriptions that are significant statistical outliers given patients’ clinical situations. Examples of such outliers are medications rarely or never prescribed to patients in certain situations, such as birth control pills to a baby boy, or an oral hypoglycemic medication to a patient without diabetes. Such prescriptions are flagged by the system as potential medication errors during real-time prescribing events. The system intervenes at 2 points in the physician’s workflow: 1) synchronous alerts, which pop up during the prescribing process if the physician chooses a medication that is an outlier with respect to the patient’s general clinical characteristics and current clinical situation, and 2) asynchronous alerts, which are generated after the medication order has already been entered into the system, following a relevant change in the patient’s profile (eg, new laboratory test results or a change in vital signs that has rendered 1 of the active medications an outlier).

Synchronous alert types include:

Time-dependent irregularities (synchronous): an alert flagged when existing data in the patient’s profile render the prescribed medication inappropriate or dangerous (eg, prescribing an anti-hypertensive medication to a patient in septic shock).

Clinical outliers: an alert flagged when a certain medication does not fit the patient’s clinical profile (eg, when a hypoglycemic drug is prescribed to a patient with neither a diagnosis of diabetes mellitus nor indices indicative of such a disease [such as hyperglycemia or previous hypoglycemic drugs]).

Dosage outliers: an alert flagged when a certain medication dosage is considered an outlier with respect to the machine-learned dosage distribution of that medication in the population and/or the patient’s own history (eg, rare dose, rare dosage unit, rare frequency, rare route).

Drug overlap: an alert flagged when parallel treatment with 2 medications of the same group (or for the same indication) is prescribed in circumstances that defy the usage of such regimens (eg, 2 types of statins).

Asynchronous alert types include:

Time-dependent irregularities (asynchronous): an alert flagged when changes in the patient’s profile occur after the prescription was made, rendering a certain medication inappropriate or dangerous to continue (eg, when the blood pressure drops and continuation of anti-hypertensive medications becomes potentially harmful).
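The dosage-outlier alert type described above can be illustrated with a minimal sketch. The paper does not disclose the system's algorithm, so this is not MedAware's method: it is a simple frequency-based rarity check, and the function names, the `min_frequency` threshold, and the dose history are all hypothetical.

```python
from collections import Counter


def build_dose_model(historical_doses):
    """Return the relative frequency of each dose seen in historical orders."""
    counts = Counter(historical_doses)
    total = sum(counts.values())
    return {dose: n / total for dose, n in counts.items()}


def is_dosage_outlier(model, dose, min_frequency=0.01):
    """Flag a dose whose historical frequency is below min_frequency.

    min_frequency is a hypothetical threshold chosen for illustration.
    A dose that never appears in the history has frequency 0 and is flagged.
    """
    return model.get(dose, 0.0) < min_frequency


# Made-up dose history (mg) for a single medication; not study data.
history = [5] * 600 + [10] * 390 + [20] * 9 + [50] * 1
model = build_dose_model(history)

# Screen a few candidate doses against the learned distribution.
flags = {dose: is_dosage_outlier(model, dose) for dose in (5, 10, 20, 50, 500)}
```

A production system would presumably condition the distribution on patient characteristics and history rather than use a single population-wide table, as the description of the per-medication model suggests.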

Study setting and patient population

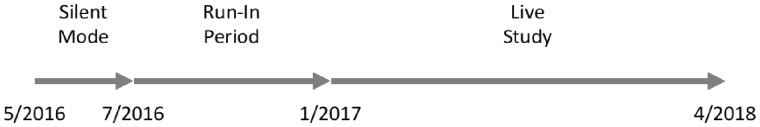

In April 2016, after Sheba Medical Center’s ethics committee approved this study, the system was installed in a single 38-bed internal medicine department at Sheba Medical Center, an 1800-bed academic medical center in Israel. Initially, the system operated in a “silent mode” for several months. During this period, drug prescriptions were monitored, patients’ profiles analyzed, outliers detected, and appropriate alerts generated, but the alerts were not visible to the department’s physicians. Once the performance level was acceptable, on July 1, 2016, the system was switched to live mode, and the physicians started receiving the alerts in the electronic health record (EHR) environment (Chameleon by Elad Systems, Tel Aviv, Israel) and could respond to them (ie, accept or reject both synchronous and asynchronous scenarios).

The first 6 months of live operation were considered a “run-in” period, in which data consistency problems were identified and handled. These included identification of abnormal laboratory results due to technical issues, such as hemolytic blood samples and analyses of bodily fluids other than blood (eg, pleural and peritoneal fluids), and identification and temporary disregard of medications on “hold.” Following the “run-in” period, the system was declared operational and has been active ever since. For this study, data were collected and analyzed from all inpatient EHR files for patients admitted to Internal Medicine “T” in Sheba Medical Center, Israel, between July 1, 2016 and April 30, 2018 (Figure 1).

Figure 1.

Study duration.

Types of alerts and their process of validation

Data on the alert types, alert burden, and physicians’ responses were extracted from the system’s database. Assessment of the alerts’ accuracy and clinical relevance was based on the physicians’ responses to the alerts in real time and then validated in biweekly interviews with the clinical champion (GS) in the medical department, in which all alerts were manually reviewed and ranked according to their:

Accuracy: were there any data-related issues that caused a false alarm? If yes, then accuracy = 0; if not, accuracy = 1. For example, initially, antiplatelet agents were flagged as inappropriate after thrombocytopenia was detected in fluids other than blood (accuracy = 0, validity = 0, usefulness = 0). Such accuracy issues were handled by assimilating the difference between different origins of laboratory samples into the CDSS algorithms. As a result, such inaccuracies diminished significantly.

Clinical validity: was there clinical justification, as documented in the medical record, for this medication to be prescribed to the patient? If yes, then validity = 0; if not (ie, the medication had no clinical justification and, hence, is a likely error), then validity = 1. For example, anti-thyroid medications prescribed to a patient without any documentation of thyroid disease were flagged as inappropriate (accuracy = 1, validity = 1, usefulness = 2).

Clinical usefulness: was the alert clinically useful to the physician? (0 = alert irrelevant to this patient; 1 = no clinical relevance; 2 = clinically relevant alert even if the physician overrides it; 3 = clinically relevant alert and the physician should modify treatment accordingly). For example, many patients who suffer from chronic lung diseases have a high partial pressure of CO2 in their blood. For such patients, sedative medications are potentially harmful since they might worsen respiratory failure. In many instances, the warning against using such medications in the face of high CO2 was considered valid and clinically relevant but was still overridden by the attending physician, who considered the medication’s effects to be safely tolerated (accuracy = 1, validity = 1, usefulness = 2).

Thus, each alert received 3 scores. For example, [accuracy = 1, validity = 0, usefulness = 0] means that no data issues were found but a justification for the medication was found in the patient’s record, as with an alert on a new prescription for anticoagulation when a physician’s note states that the patient has atrial fibrillation. In contrast, [accuracy = 1, validity = 1, usefulness = 3] denotes a clinically relevant alert that should lead to a change in treatment, for example, a drug name mix-up resulting in prescribing chemotherapy to a healthy individual.

To accurately rank the algorithm-generated alerts, the clinical champion manually reviewed the patient’s complete longitudinal electronic medical record, including structured data as well as unstructured data, such as physicians’ notes, the textual problem list, etc.
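The three-score ranking described in this section can be represented as a small record type. The following is a minimal sketch, not the study's actual tooling: the class name, the `summarize` helper, and the rule that a usefulness score of 2 or more counts as "clinically useful" are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertReview:
    accuracy: int    # 1 = no data-related issue, 0 = false alarm caused by data
    validity: int    # 1 = no documented justification (likely error), 0 = justified
    usefulness: int  # 0 to 3, as defined in the ranking scheme

    def __post_init__(self):
        if self.accuracy not in (0, 1) or self.validity not in (0, 1):
            raise ValueError("accuracy and validity must be 0 or 1")
        if self.usefulness not in (0, 1, 2, 3):
            raise ValueError("usefulness must be between 0 and 3")


def summarize(reviews, useful_threshold=2):
    """Share of accurate, valid, and useful alerts.

    useful_threshold=2 is an assumption; the paper does not state the cutoff.
    """
    n = len(reviews)
    return {
        "accurate": sum(r.accuracy for r in reviews) / n,
        "valid": sum(r.validity for r in reviews) / n,
        "useful": sum(r.usefulness >= useful_threshold for r in reviews) / n,
    }


# Four reviews mirroring the worked examples in the text (illustrative only).
reviews = [
    AlertReview(0, 0, 0),  # thrombocytopenia measured in a non-blood fluid
    AlertReview(1, 0, 0),  # anticoagulant with documented atrial fibrillation
    AlertReview(1, 1, 2),  # sedative with high CO2, overridden by physician
    AlertReview(1, 1, 3),  # drug name mix-up
]
summary = summarize(reviews)
```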

Data analysis and statistical methods

Patient IDs were anonymized: a random number was generated for each ID, and a conversion table was saved in a secured location on Sheba Medical Center’s servers. The anonymized file was then subjected to statistical analysis, including 1) the distribution of the number of alerts per type (time-dependent, dosage, etc); 2) alert accuracy and clinical relevance, calculated according to the clinical champion’s classification of each alert; and 3) physicians’ responses to the alerts, assessed by analyzing changes in prescription (hold/stop/dosage change) immediately following synchronous alerts and within a few hours following asynchronous alerts.
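The anonymization step (a random number per patient ID plus a separately stored conversion table) could look roughly like the sketch below. The function name, the code range, and the sample records are hypothetical; the paper does not describe how the random numbers were generated.

```python
import secrets


def anonymize_ids(patient_ids, code_space=10**9):
    """Map each distinct patient ID to a unique random number.

    The returned dict is the conversion table, which would be stored
    separately from the anonymized file. code_space is an arbitrary choice.
    """
    table = {}
    used = set()
    for pid in patient_ids:
        if pid in table:
            continue  # repeat admissions of a patient reuse the same code
        code = secrets.randbelow(code_space)
        while code in used:  # redraw on the rare collision
            code = secrets.randbelow(code_space)
        used.add(code)
        table[pid] = code
    return table


# Made-up records: (patient ID, alert description).
records = [("P-001", "alert A"), ("P-002", "alert B"), ("P-001", "alert C")]
table = anonymize_ids(pid for pid, _ in records)
anonymized = [(table[pid], alert) for pid, alert in records]
```

Keeping one code per patient preserves the link between repeat admissions, which matters here because the study counts admissions and patients separately.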

RESULTS

Alert timing and burden

During the study period, there were 4533 admissions of 3160 patients, all of whom were included in the study. Physicians entered 78 017 medication orders. The alert burden generated by the system throughout the study was low: 315 alerts on 282 prescriptions, corresponding to 0.4% of all prescriptions, or an average of 4.5 alerts per week for the whole department.
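The alert-burden figures can be checked with simple arithmetic. The counts below are taken from the text; converting the 16-month study duration to weeks as 16 × 52 / 12 is an assumption made for this check.

```python
alerts = 315
alerted_prescriptions = 282
orders = 78_017
weeks = 16 * 52 / 12  # assumption: 16 months expressed in weeks (about 69.3)

alerts_per_order_pct = 100 * alerts / orders
alerted_orders_pct = 100 * alerted_prescriptions / orders
alerts_per_week = alerts / weeks
```

Both percentages round to 0.4%, and the weekly rate rounds to 4.5, which matches the reported values.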

Of the alerts generated, 40% were flagged synchronously during the medication order process, and 60% were flagged later during the monitoring phase, after the medication orders were already active, following a change in the patient’s clinical state (eg, new lab results, vital signs, etc).

Time-dependent alerts were the most common (64.8%), followed by dosage outliers (30.2%), clinical outliers (3.8%), and drug overlap (1.3%). A detailed list of the synchronous and asynchronous alerts is presented in Tables 1 and 2, respectively. It is notable that clinical outlier alerts were generated on a wide range of medications, without a “common alert.”

Table 1.

Synchronous alert distribution

| Alert Type | Most common clinical scenario | Most common medications flagged | Percent |
|---|---|---|---|
| Time dependent alerts (synchronous) | | | 47% |
| Hypercarbia | Respiratory failure, sedation | Sedatives, opioid narcotics | 22% |
| Hyponatremia | SIADH | Thiazide diuretics and SSRI's | 5% |
| Disrupted liver function | Hepatitis, sepsis and septic shock | Statins | 3% |
| Bradycardia | Cardiac arrhythmia | Beta blockers, calcium channel blockers | 3% |
| Hyperkalemia | Acute kidney injury | Potassium sparing diuretics, Angiotensin-Converting-Enzyme inhibitors | 3% |
| Thrombocytopenia | Sepsis and DIC | Anticoagulants, anti-aggregates | 3% |
| Hypotension | Sepsis and septic shock, sedation | Vasodilators | 3% |
| Disrupted coagulation tests (prolonged PT/INR) | Sepsis | Anticoagulants | 2% |
| Hypokalemia | Sepsis | Diuretics, potassium associating resins | 2% |
| Hypoglycemia | Sepsis and septic shock | Insulin, oral hypoglycemic drugs | 1% |
| Dosage alerts | | | 42% |
| High dosage | | | 23% |
| Low dosage | | | 18% |
| Rare unit | | | 1% |
| Clinical alerts | | | 11% |

Abbreviations: DIC, disseminated intravascular coagulation; SIADH, syndrome of inappropriate antidiuretic hormone secretion; SSRI, selective serotonin reuptake inhibitor.

Table 2.

Asynchronous time-dependent alert distribution

| Time-Dependent Alert Type^a | Most common clinical scenario | Most common medications flagged | Percent |
|---|---|---|---|
| Hypercarbia | Respiratory failure, sedation | Sedatives, opioid narcotics | 11.52% |
| Bradycardia | Cardiac arrhythmia | Beta blockers, calcium channel blockers | 10.29% |
| Hypotension | Sepsis and septic shock, sedation | Vasodilators | 4.90% |
| Thrombocytopenia | Sepsis and DIC | Anticoagulants, anti-aggregates | 4.66% |
| Disrupted liver function | Hepatitis, sepsis, and septic shock | Statins | 4.41% |
| Hypoglycemia | Sepsis and septic shock | Insulin, oral hypoglycemic drugs | 4.17% |
| Hyperkalemia | Acute kidney injury | Potassium sparing diuretics, Angiotensin-Converting-Enzyme inhibitors | 2.94% |
| Disrupted coagulation tests (prolonged PT/INR) | Sepsis | Anticoagulants | 2.45% |
| Hypokalemia | Sepsis | Diuretics, potassium associating resins | 1.72% |
| Hyponatremia | SIADH | Thiazide diuretics and SSRI's | 0.49% |
| Acute kidney injury (elevated creatinine) | Sepsis and septic shock | Angiotensin-Converting-Enzyme inhibitors | 0.25% |
| Hypercalcemia | Malignancy | Calcium and vitamin D derivatives | 0.25% |
| Elevated CPK levels | Hepatitis, convulsions, rhabdomyolysis | Statins | 0.25% |

Abbreviations: DIC, disseminated intravascular coagulation; SIADH, syndrome of inappropriate antidiuretic hormone secretion; SSRI, selective serotonin reuptake inhibitor.

^a Asynchronous event/change in the patient's profile necessitating certain medications to be flagged.

Alert accuracy and clinical relevance

Of the alerts generated during the study period, 89% were accurate (EHR data support the alert), 85% were clinically valid (no justification found in the EHR to support the medication), and 80% were clinically useful (alert clinically justified).

Physician’s response to the alerts

During the 16-month duration of the study, 135 medication orders (48% of the accurate alerts) were stopped or modified within a short time (median of 1 hour, interquartile range: [0.07, 4] hours) following alert generation by MedAware’s system. Of these, 39% of the erroneous medication orders were modified while the medication was being ordered (synchronous flags), and 61% were modified during the monitoring phase (asynchronous flags) following a change in laboratory results or vital signs.
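The two response rates quoted in this paper (48% of accurate alerts here, 43% of all alerts elsewhere) can be reconciled with a short calculation. The counts come from the text; treating each stopped or modified order as one alert is an assumption, since the paper reports 315 alerts on 282 prescriptions.

```python
alerts = 315
accurate_share = 0.89   # share of alerts judged accurate
changed_orders = 135    # orders stopped or modified after an alert

accurate_alerts = accurate_share * alerts            # about 280 alerts
pct_of_all_alerts = 100 * changed_orders / alerts
pct_of_accurate_alerts = 100 * changed_orders / accurate_alerts
```

Under that assumption the two values round to 43% and 48%, so the figures differ only in their denominators.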

The most common alerts causing a change in physicians’ behavior (ie, alerted medication stopped or modified) were dosage alerts, followed by time-dependent alerts triggered by bradycardia, elevated liver function tests, and hypotension (Table 3).

Table 3.

Most common alerts causing physician behavior change

| Alert Type | Most common clinical scenario | Most common medications flagged | Percent |
|---|---|---|---|
| High dosage | | | 26% |
| Low dosage | | | 18% |
| Bradycardia | Cardiac arrhythmia | Beta blockers | 9% |
| Disrupted liver function | Hepatitis, sepsis and septic shock | Statins | 8% |
| Hypotension | Sepsis and septic shock, sedation | Vasodilators | 8% |

Comparison to the medical center’s legacy CDSS

The performance of Sheba Medical Center’s legacy CDSS was assessed in a recent study by Zenziper Straichman et al.12 The legacy CDSS alerts mostly on drug-to-drug interactions, dosage, and drug overlap. Compared to the legacy CDSS, the system generated almost 100 times fewer alerts, and its alerts were about 5 times as likely to be clinically relevant and more than 8 times as likely to lead to a change in prescribing (Table 4).

Table 4.

Comparison between the probabilistic, machine-learning approach-based system and the legacy CDS

| | Legacy CDS | The System |
|---|---|---|
| Alert Burden (% of prescriptions) | 37.10% | 0.40% |
| Clinically relevant (% of alerts) | 16% | 85% |
| Caused a change in practice (% of alerts) | 5.30% | 43% |
| Post prescribing surveillance (% of alerts) | 0% | 60% |
| Alert types: | | |
| Clinical outliers | No | Yes |
| Time-dependent | No | Yes |
| High dosage | Yes | Yes |
| Low dosage | No | Yes |
| Rare dosage unit | No | Yes |
| Drug-drug interaction | Yes | No |
| Drug overlap | Yes | Yes |

The legacy CDSS generated a high alert burden (37% of prescriptions were flagged) with a high dismissal rate by physicians (∼95% of the alerts were ignored). Most of the alerts generated by the legacy CDSS were related to drug-to-drug interactions and dosages. The MedAware system was deployed as an add-on to the legacy CDSS (ie, the system’s alerts were displayed in addition to the legacy CDSS alerts and did not replace them) and was distinguished from the legacy system by a different look-and-feel user interface. As the system’s alerts did not relate to drug-drug interactions, there was minimal overlap between the systems, involving only high-dosage alerts. In these few cases, physicians received 2 alerts on the same prescription.

There were no conflicts between the systems, as each reflected a different type of knowledge: the legacy system reflected what is described in the drug leaflet, and MedAware reflected statistics based on physicians’ practice.
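The ratios quoted for the comparison with the legacy CDSS follow directly from the percentages in Table 4. The short check below uses only those table values; the variable names are illustrative.

```python
# Percentages from Table 4: (legacy CDS, the system).
alert_burden = (37.10, 0.40)        # % of prescriptions flagged
clinically_relevant = (16.0, 85.0)  # % of alerts
changed_practice = (5.30, 43.0)     # % of alerts

burden_ratio = alert_burden[0] / alert_burden[1]
relevance_ratio = clinically_relevant[1] / clinically_relevant[0]
change_ratio = changed_practice[1] / changed_practice[0]
```

This gives roughly 93 times fewer alerts, about 5.3 times the share of clinically relevant alerts, and about 8.1 times the share of alerts that changed practice. Because the legacy figures come from a separate study with different methods, these ratios are indicative rather than a controlled comparison.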

DISCUSSION

Prescription errors and ADEs are associated with substantial morbidity and mortality and with significant, preventable health care costs. Currently available CDS systems targeted to address this problem suffer from several flaws: 1) low coverage (ie, they identify only a small predefined subset of errors, such as drug interactions and allergies); 2) high alert burden (flagging more than 10% of prescriptions in the best cases); and 3) high false-alarm rate, exceeding 90% in most cases.12–14 These result in “alert fatigue,” causing physicians to ignore these alerts altogether.12,13 Moreover, most systems focus on identifying potential errors at the prescribing event but fail to identify ADEs that arise in ongoing active prescriptions because of the frequent changes in patient status that are characteristic of a hospital stay.

Indeed, research has shown that a wide variability exists regarding the ability of common vendor-developed computerized physician order entry systems with CDS to flag errors in medication prescriptions. One study used a simulation tool designed to assess how well safety decision support worked when applied to medication orders in computerized order entry.15 The researchers found a large variability in performance both between vendor systems and between hospitals using similar vendor systems—ranging from an ability to flag 80% of errors to some systems being able to flag only 10%. In another study, researchers found that, in community hospitals implementing computerized physician order entry with some level of CDS, only a modest decrease in ADEs with an increase in potential ADEs (errors that did not cause harm) occurred.16 A return on investment analysis done for these hospitals did not show significant financial returns.17

MedAware is a CDSS that uses statistically derived outliers to detect potential medication errors and ADEs. Here, for the first time, we describe the performance of such a system in a live inpatient setting following integration with the local EHR. This novel approach targets the challenges of current CDS systems: 1) Wider span/coverage of potential errors flagged: the system is not limited to predefined types of errors and identifies a wide range of outliers, including unanticipated types of errors;11 2) Reduced burden of alerts: the system was associated with a very low alert burden and flagged 0.4% of medical orders as potentially dangerous prescription errors; 3) Decreased false-alarm rate: 80% of alerts were considered clinically justified by the physicians, and only 20% were regarded as false alarms. In subsequent analysis, most of these false alarms were related to data acquisition issues; 4) Diminished alert fatigue: due to its low alert burden and low false-alarm rate, physicians heeded alerts provided by the system (evidently, they differentiated between such alerts and alerts flagged by the legacy system that continued working in the background). This is evident from the fact that prescribing physicians changed their behavior and modified or stopped the medical orders following 43% of the alerts; 5) Surveillance of post prescribing events: the system monitors changes in the patient record to identify potentially emerging ADEs that may be associated with an active medication. As a result, 60% of the alerts were generated after the medication had already been prescribed. As most current systems do not flag already active prescriptions, they would likely not have identified these ADEs.

As the system is based on probabilistic outliers, and as most of the alerts generated by the system are unique and not addressed by legacy CDS systems, it is difficult to estimate its sensitivity (ie, what errors are missed by the system). We assume that quite a few errors are missed and that there is no “perfect” system that can catch them all, but our belief is that getting physicians to pay attention to the alerts is key to reducing harm, even at the cost of missing a few relevant alerts. Moreover, the system is not currently intended to replace the legacy systems but to serve as an add-on to current CDS systems, providing an additional layer of safety.

We anticipate that the main unique impact of the system in addition to the legacy CDSS will mostly be in:

Addition of clinical outlier alerts (ie, wrong drug to the wrong patient)

Postprescribing surveillance (ie, continuously evaluating the risk of a medication after the medication was ordered, following a change in the patient’s lab profile, vitals, etc)

Optimization of the alerts, resulting in low alert burden, high clinical relevance, and physician response

Moreover, we anticipate that probabilistic analysis will be implemented in the next-generation rule-based systems to optimize and personalize their alerts based on their performance on large-scale real-time clinical data.

Our study is limited in scope as it was conducted in a single internal medicine department in 1 hospital. In addition, the clinical accuracy of alerts was determined by a single “clinical champion,” a potential drawback of the validation process. Moreover, the legacy CDSS was assessed in a different study using different methodologies, which might influence the results of the comparison between them. Further analysis of this CDS system’s potential should be sought after deployment in other departments and several hospitals.

CONCLUSION

To conclude, in this study we have shown that outlier detection, based on machine learning and statistical methods, generates clinically relevant alerts with good physician response and low alert fatigue in a live, busy, hospital inpatient setting.

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

CONTRIBUTIONS

SG, EZ, and YW engaged with study design and methodology, data analysis, manuscript writing, and editing. AS, AB, and YL engaged with data acquisition, data analysis, and manuscript proofing.

Conflict of interest statement

None declared.

REFERENCES

1. National Academies Press. To Err Is Human. Washington, DC: National Academies Press; 2000.

2. James JT. A new, evidence-based estimate of patient harms associated with hospital care. J Patient Saf 2013; 9 (3): 122–8.

3. Mello MM, Chandra A, Gawande AA, Studdert DM. National costs of the medical liability system. Health Aff (Millwood) 2010; 29 (9): 1569–77.

4. Bishop TF, Ryan AM, Ryan AK, Casalino LP. Paid malpractice claims for adverse events in inpatient and outpatient settings. JAMA 2011; 305 (23): 2427–31.

5. Velo GP, Minuz P. Medication errors: prescribing faults and prescription errors. Br J Clin Pharmacol 2009; 67 (6): 624–8.

6. McCoy AB, Thomas EJ, Krousel-Wood M, Sittig DF. Clinical decision support alert appropriateness: a review and proposal for improvement. Ochsner J 2014; 14 (2): 195–202.

7. Hoffman S, Podgursky A. Drug-drug interaction alerts: emphasizing the evidence. St Louis Univ J Health Law Pol 2012; 5: 297–310.

8. Glassman PA, Simon B, Belperio P, Lanto A. Improving recognition of drug interactions: benefits and barriers to using automated drug alerts. Med Care 2002; 40 (12): 1161–71.

9. van der Sijs H, Aarts J, van Gelder T, Berg M, Vulto A. Turning off frequently overridden drug alerts: limited opportunities for doing it safely. J Am Med Inform Assoc 2008; 15 (4): 439–48.

10. AHRQ Agency for Healthcare Research and Quality. Reducing and Preventing Adverse Drug Events to Decrease Hospital Costs. Rockville, MD: AHRQ Agency for Healthcare Research and Quality; 2001.

11. Schiff GD, Volk LA, Volodarskaya M, et al. Screening for medication errors using an outlier detection system. J Am Med Inform Assoc 2017; 24 (2): 281–7.

12. Zenziper Straichman Y, Kurnik D, Matok I, et al. Prescriber response to computerized drug alerts for electronic prescriptions among hospitalized patients. Int J Med Inform 2017; 107: 70–5.

13. Bryant AD, Fletcher GS, Payne TH. Drug interaction alert override rates in the meaningful use era: no evidence of progress. Appl Clin Inform 2014; 5 (3): 802–13.

14. Tolley CL, Slight SP, Husband AK, Watson N, Bates DW. Improving medication-related clinical decision support. Am J Heal Pharm 2018; 75 (4): 239–46.

15. Metzger J, Welebob E, Bates DW, Lipsitz S, Classen DC. Mixed results in the safety performance of computerized physician order entry. Health Aff 2010; 29: 655–63.

16. Leung AA, Schiff G, Keohane C, et al. Impact of vendor computerized physician order entry on patients with renal impairment in community hospitals. J Hosp Med 2013; 27 (7): 801–7.

17. Zimlichman E, Keohane C, Franz C, et al. Return on investment for vendor computerized physician order entry in four community hospitals: the importance of decision support. Jt Comm J Qual Patient Saf 2013; 39 (7): 312–8.