Abstract

Objective

This systematic review aims to analyze current capabilities, challenges, and impact of self-directed mobile health (mHealth) research applications such as those based on the ResearchKit platform.

Materials and Methods

A systematic review was conducted according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement. English publications were included if: 1) mobile applications were used in the context of large-scale collection of data for biomedical research, and not as medical or behavioral intervention of any kind, and 2) all activities related to participating in research and data collection methods were executed remotely without any face-to-face interaction between researchers and study participants.

Results

Thirty-six unique ResearchKit apps were identified. The majority of the apps were used to conduct observational studies on general citizens and generate large datasets for secondary research. Nearly half of the apps were focused on chronic conditions in adults.

Discussion

The ability to generate large biomedical datasets on diverse populations that can be broadly shared and re-used was identified as a promising feature of mHealth research apps. Common challenges were low participant retention, uncertainty regarding how use patterns influence data quality, need for data validation, and privacy concerns.

Conclusion

ResearchKit and other mHealth-based studies are well positioned to enhance development and validation of novel digital biomarkers as well as generate new biomedical knowledge through retrospective studies. However, in order to capitalize on these benefits, mHealth research studies must strive to improve retention rates, implement rigorous data validation strategies, and address emerging privacy and security challenges.

Keywords: mHealth, mobile applications, ResearchKit, smartphone

BACKGROUND AND SIGNIFICANCE

The increasing prevalence of mobile devices among the general population provides a unique opportunity to conduct social and biomedical research at scale. Currently, there are more than 50 000 non-interventional, observational studies registered in ClinicalTrials.gov.1 The vast majority of these studies are coordinated in-person and require direct interaction with researchers, and their sample sizes are limited by time, geographic, and budgetary constraints. In order to break through many of these longstanding barriers to biomedical research studies, several open-source research platforms (eg, ResearchKit and ResearchStack) have leveraged smartphones as research tools to appeal to widespread, multi-demographic groups and enable any eligible person to consent and contribute data remotely through his or her own smartphone.2 In particular, the release of Apple’s open-source platform (ResearchKit) in March 2015 has led to widespread use of mobile health (mHealth) applications (apps) to collect and curate large amounts of data that will enable researchers to generate and test hypotheses of significance to public health, including chronic conditions, behavioral and mental health, and disability.

Compared to traditional in-person biomedical studies, some of the benefits that mobile applications for medical research might provide include improved subject recruitment, comprehensibility of consent and data collection processes, cost effectiveness, elimination of geographic barriers to participation, broader participation from the community including individuals from marginalized subpopulations, and the potential to create large datasets for secondary analyses. On the other hand, there are several limitations to consider, including but not limited to participant retention, self-selection bias, reliability and quality of patient-generated data collected through mobile applications, and ethical, legal, and social issues pertaining to citizen science and mHealth technology. In this paper, the term “citizen science” refers to any large-scale, non-interventional study that solicits voluntary participation of general citizens in scientific research.3,4

Prior studies on mHealth have been focused largely on using mobile apps for personal health monitoring or as an intervention to improve care processes.5 However, studies related to use of mobile apps for large-scale health-related research are limited. The objective of this systematic review is to analyze the current capabilities, challenges, and impact of non-interventional, self-directed mHealth research applications.

MATERIALS AND METHODS

Search strategy

A systematic literature review was performed using methods specified in the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement for reporting systematic reviews and meta-analyses.6 Both controlled vocabulary terms (eg, MeSH, EmTree) and key words were utilized to search the following databases for publications that focused on the development or use of smartphone applications to gather data remotely in the context of medical research: Ovid/MEDLINE, PubMed/MEDLINE, Elsevier/Embase, Elsevier/Scopus, Wiley/Cochrane Library, Thomson-Reuters/Web of Science, EBSCO/CINAHL, Elsevier/Ei Compendex, IEEE Xplore, and ClinicalTrials.gov. The Apple App Store and Google Play were also searched for apps that were developed to gather medical research data remotely. Literature searches were completed on July 25, 2018. The complete PubMed/MEDLINE search strategy is available in Supplementary Appendix S1.

Inclusion criteria were 1) mobile applications were used in the context of large-scale collection of data for biomedical or clinical research, and not as medical or behavioral intervention of any kind; 2) all activities such as informed consent to participate in research and methods used for data collection were executed remotely without any face-to-face interaction between researchers and study participants; 3) publications and applications were in English; and 4) dates of publication were after January 1, 2006. All study and publication types were considered with the exception of reviews, systematic reviews, meta-analyses, and editorials/opinion pieces; conference abstracts and ongoing and completed clinical trial listings were included. During the first set of searches completed on April 17, 2017, 2 independent reviewers screened titles and abstracts of retrieved references for relevance and resolved disagreements by consensus. They similarly screened the full text of the articles selected from title and abstract screening to ensure that they met the full set of inclusion criteria and resolved disagreements by consensus. Searches were rerun on February 26–27, 2018, and again on July 25, 2018; titles and abstracts for the updated searches were screened by the primary author, as were the full text of selected studies.
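The inclusion criteria and publication-type exclusions above can be read as a single screening predicate. The following Python sketch is illustrative only: the `Record` fields and the `meets_inclusion` helper are hypothetical names introduced here, and the review itself relied on manual screening by reviewers rather than on any such code.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical record layout; field names are assumptions for illustration.
@dataclass
class Record:
    title: str
    language: str
    published: date
    pub_type: str                   # e.g. "article", "conference abstract", "review"
    research_data_collection: bool  # large-scale data collection for biomedical/clinical research
    is_intervention: bool           # app acts as a medical or behavioral intervention
    fully_remote: bool              # consent and data collection with no face-to-face contact

# Publication types excluded by the review.
EXCLUDED_TYPES = {"review", "systematic review", "meta-analysis", "editorial", "opinion"}
CUTOFF = date(2006, 1, 1)  # criterion 4: published after January 1, 2006


def meets_inclusion(r: Record) -> bool:
    """Return True when a record satisfies all four stated criteria."""
    return (
        r.research_data_collection
        and not r.is_intervention                      # criterion 1
        and r.fully_remote                             # criterion 2
        and r.language.lower() == "english"            # criterion 3
        and r.published > CUTOFF                       # criterion 4
        and r.pub_type.lower() not in EXCLUDED_TYPES   # publication-type exclusions
    )
```

A record that fails any one condition, such as an interventional app or a review article, is screened out.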

RESULTS

Four open-source platforms were identified that enable development of mobile applications for research purposes: 1) EmotionSense, 2) Ohmage, 3) ResearchKit, and 4) ResearchStack. ResearchKit and ResearchStack are, respectively, Apple’s and Android’s open-source platforms, which enable rapid development of mHealth research apps that require data collection related to overall health and well-being such as cognition, fitness, hand dexterity, motor activities, and voice. EmotionSense, on the other hand, collects data from questionnaires, location, and voice recordings specifically for assessing emotions; as such, it is used primarily for social and psychological research.7 EmotionSense was validated as an individual app, and the literature search did not locate any additional studies conducted through the app.7 Ramanathan et al.8 state that, at the time of the publication, the Ohmage platform was being validated by 7 independent studies of different populations, including recent cancer survivors, men at risk for HIV, and individuals with ADHD; however, no additional literature was identified on any such studies. ResearchKit emerged as the predominant platform implemented in existing mHealth research apps; thus, we focus on ResearchKit-based apps and studies in this review.

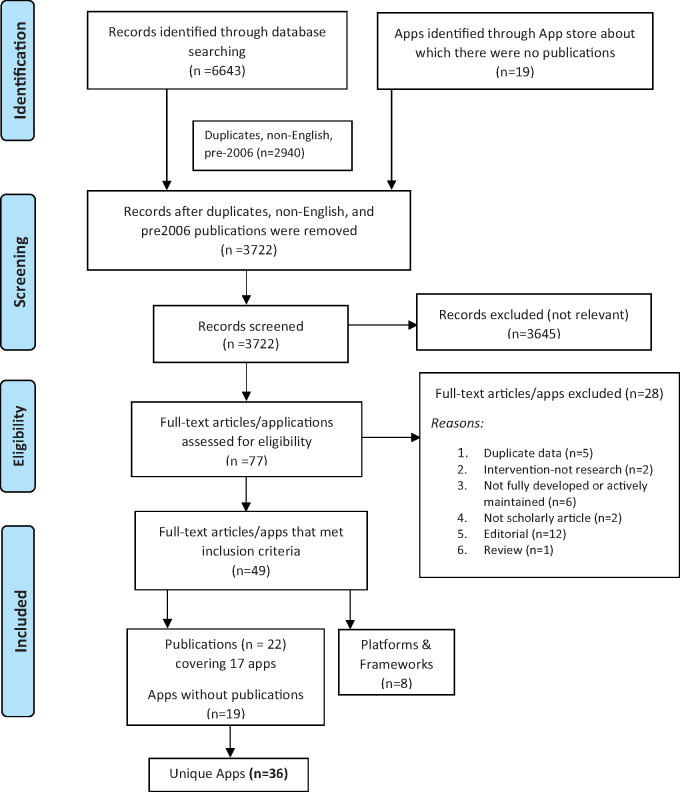

Database searches resulted in the identification of 6643 studies. Of the 3703 articles that remained after duplicates, non-English, and pre-2006 publications were removed, 3645 were excluded because of irrelevance to the topic (Figure 1). Strict inclusion criteria, as outlined in the Methods section, were applied to the full text of 58 articles and an additional 19 mobile apps identified through the Apple App and Google Play stores. Of these 77 resources, 49 met the full set of criteria; this included 22 articles (relevant to 17 distinct ResearchKit apps),5,7–34 the additional 19 apps (without related publications) found on Apple App and Google Play stores,35–53 and 8 articles related to mHealth platforms and frameworks. Overall, 36 unique ResearchKit apps were identified. Three apps had been reproduced using ResearchStack and made available through Google Play.15,18,19 The ResearchKit platform has been available only since March 2015. Thus, the majority of ResearchKit-based studies cited here have not yet progressed to the point of published data; only 7 apps were identified to have published results.11,14,19,21,22,28,34

Figure 1.

PRISMA flowchart illustrating the process of literature search and extraction of studies meeting the inclusion criteria.
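The counts reported for the selection process can be checked with a few lines of arithmetic. The sketch below only restates numbers given in the text; the variable names are illustrative and are not taken from the review.

```python
# Counts as reported in the Results text.
identified = 6643            # records returned by the database searches
after_initial_removal = 3703 # after removing duplicates, non-English, and pre-2006 items
excluded_irrelevant = 3645   # excluded at title/abstract screening
apps_from_stores = 19        # apps found only in the Apple App Store / Google Play

full_text_articles = after_initial_removal - excluded_irrelevant   # 58
resources_assessed = full_text_articles + apps_from_stores         # 77

# Breakdown of the resources that met the full set of criteria.
included = {
    "articles on ResearchKit apps": 22,
    "store-only apps": 19,
    "articles on platforms and frameworks": 8,
}
total_included = sum(included.values())                             # 49

apps_with_publications = 17
unique_apps = apps_with_publications + apps_from_stores             # 36

print(identified - after_initial_removal)  # 2940 records removed before screening
print(full_text_articles, resources_assessed, total_included, unique_apps)
```

The derived totals (58, 77, 49, and 36) agree with the figures stated in the paragraph above.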

A summary of research purpose and mode of data collection for apps identified through the database searches and apps identified through the app stores is described in Tables 1 and 2, respectively. Additionally, information regarding the purpose of each framework can be found in Table 3. Of 36 apps included in the review, 16 of them (44%) investigate chronic conditions such as Parkinson’s disease, diabetes, COPD, and heart disease.9,11,14,20,21,28,36,37,44,46,49,50,52,53,56,57 Three of the applications (8%) focus on screening conditions such as mole growth, depression, and emotions.19,34,47 For example, the developers of Autism & Beyond are using facial expressions to determine the emotions being displayed. Four applications (11%) are intended to address behavioral issues such as impulsiveness, photo-sharing on social media, energy drink consumption, and relating gait to emotions.15,38,41,43 The most commonly addressed conditions are heart disease (4 apps)14,17,44,57 and cancer (3 apps).19,21,53

Table 1.

Apps identified through database searches

| App Name | Condition | Purpose (To determine…) | Data Types<sup>a</sup> (Mode of Collection) | 1st Author/Sponsor, Year, Reference |
|---|---|---|---|---|
| Apps that collect both survey and sensor-based data | | | | |
| mPower | Parkinson's disease (PD) | Potential to monitor PD symptoms and their severity and sensitivity to medication and to assess qualitative patient experience of eConsent | Parkinson Disease Questionnaire 8 (survey), short-term spatial memory and dexterity and speed (screen tapping), gait and balance (accelerometer and gyroscope), sustained phonation (microphone) | Abujrida et al., 2017<sup>31</sup>; Bot et al., 2016<sup>28</sup>; Doerr et al., 2017<sup>30</sup>; Neto et al., 2016<sup>29</sup> |
| VascTrac | Peripheral artery disease (PAD) | Trends between patient-reported PAD outcomes and walking data | Medical data (survey), physical activity (motion sensor) | Ata et al., 2017<sup>32</sup> |
| 6th Vital Sign | General health | Method to measure walking speed that is comparable across subjects | Personal health (survey), walking speed (motion sensor) | Bettger et al., 2016<sup>33</sup> |
| C Tracker | Hepatitis C | Feasibility of monitoring changes in medication therapy and associated effects on workforce productivity and physical limitations | Changes in medication therapy (survey), physical activity (motion sensor) | Boston Children's Hospital, 2017<sup>6</sup> |
| EpiWatch | Epilepsy | Whether EpiWatch can be validated to detect seizures and to monitor seizure experience, study medication side effects, and identify triggers | Heart rate and movement (Apple Watch motion sensors), seizure experience (survey) | Carmenate et al., 2017<sup>10</sup> |
| Asthma Health | Asthma | Asthma trigger data, quality of self-reported data, app user characteristics, and clinical impact | Symptoms, triggers, medication adherence, healthcare utilization, and quality of life (survey), geographic conditions (GPS) | Chan et al., 2017<sup>11</sup>; Gowda et al., 2016<sup>12</sup> |
| SleepHealth | Sleep | Correlations between sleep habits and physical activity and alertness | Sleep assessment activities (motion sensor) | Deering et al., 2017<sup>13</sup> |
| Heart & Brain | Heart attack/stroke | Feasibility of collecting quality data for heart and brain health | Stroke risk factors and quality of life (questionnaire), pulse rate (Apple Watch), neurological data (gyro sensor, camera for face/limb/motor disorders) | Kimura et al., 2016<sup>14</sup> |
| MyHeart Counts | Cardiovascular health/physical activity | Effect of daily activity (step count) measures on cardiovascular health, whether 4 different activity prompts impact physical activity, the correlation of genetic data | Step count frequency and duration and sleep duration (motion sensors and survey), reported happiness and cardiovascular disease risk (survey) | Stanford University, 2017<sup>17</sup> |
| AMWALKS or America Walks<sup>d</sup> | Walking behavior | U.S. walking behavior and the correlation between perceived and actual walking activity | Step count (survey and motion sensor) | TrialX, Inc, 2016<sup>18</sup> |
| Mole Mapper<sup>d</sup> | Melanoma | Potential to collect data pertinent to melanoma risk | Melanoma risk factors (survey), mole sizes (phone camera) | Webster et al., 2017<sup>19</sup> |
| Apps that predominantly collect survey-based data | | | | |
| Depression Monitor | Depression | Characteristics of depression app users, and if a depression intervention is necessary | Risk of depression (patient health questionnaire, PHQ-9) | BinDhim et al., 2015<sup>34</sup> |
| DMT<sup>b</sup> mPulse<sup>d</sup> | Impulsive behavior | Frequency of voluntary app use, quality of impulsivity assessment | Impulsivity self-assessment (survey), performance tasks, passive data collection | Northwell Health, 2018<sup>15</sup> |
| PARADE<sup>c</sup> | Rheumatoid arthritis (RA) | RA symptoms, mood, and medications over time | Symptoms, medications, and comorbidities (surveys) | Williams et al., 2017<sup>20</sup> |
| Prostate PRO-Tracker | Prostate cancer radiation therapy | Potential to collect patient-reported outcomes throughout prostate radiotherapy | Urinary, bowel, sexual, and hormonal quality of life data (Expanded Prostate Index Composite for Clinical Practice [EPIC-CP] survey) | Yang et al., 2016<sup>21</sup> |
| Back on Track | Acute anterior cruciate ligament (ACL) tears | Outcomes of different treatment methods for acute ACL tears | Subjective experience of injury (survey) | Zens et al., 2017<sup>22</sup> |
| WebMD Pregnancy | Pregnancy | Effect of weight on pregnancy outcomes | Weight, pregnancy complications, birth outcomes (surveys) | Radin et al., 2018<sup>16</sup> |

<sup>a</sup> Demographic surveys are not listed under “Data Types” because this feature is common to all cited apps.

<sup>b</sup> DMT: Digital Marshmallow Test.

<sup>c</sup> PARADE: Patient Rheumatoid Arthritis Data from the real world.

<sup>d</sup> Available on ResearchKit and ResearchStack platforms.

Table 2.

Apps identified through Apple app and Google Play Stores

| App Name | Clinical Condition | Purpose (To determine…) | Data Type Category<sup>a</sup> | Sponsor, Reference |
|---|---|---|---|---|
| Feverprints | Diseases characterized by fevers | How body temperature varies between individuals and the correlation of body temperature patterns with different diseases | Survey, external service/feature | Boston Children’s Hospital<sup>35</sup> |
| STOPCOPD | Chronic obstructive pulmonary disease (COPD) | Correlations between physical activity, peak expiratory flow, caloric intake, heart rate, oxygen saturation, and sleep patterns | Fitness | COPD Foundation, DatStat team<sup>36</sup> |
| Hand in Hand | HIV-associated neurocognitive disorders (HAND) | Cognitive effects of HAND | Cognition | Digital Artefacts, UNMC<sup>46</sup> |
| Autism & Beyond | Autism and other mental health disorders | Whether video collection can be validated as a means to identify emotions and behavioral characteristics | Camera | Duke Medicine<sup>47</sup> |
| TeamStudy | Football, long-term effects | Improved diagnostic and treatment interventions through analysis of memory, balance, heart health, pain, and mobility | Survey, cognition, motor activities, fitness | Harvard University<sup>48</sup> |
| COPD Navigator | COPD | Correlations between geographic data (ie, air quality, weather, allergens), COPD, and medications | Survey, location | LifeMap Solutions, MSSM<sup>49</sup> |
| GlucoSuccess | Diabetes (type II) | Correlations between blood glucose values, dietary choices, activity levels, and health behavior questionnaires (sleep quality, foot inspections, medication adherence) | Survey, fitness | Massachusetts General Hospital<sup>50</sup> |
| Concussion Tracker | Concussion | Whether Concussion Tracker can be validated to collect patient-reported outcomes (heart rate, physical and cognitive function) | Survey, fitness | NYU Langone Health<sup>51</sup> |
| Sarcoidosis | Sarcoidosis | Variables impacting quality of life for patients with sarcoidosis | Survey | Penn Medicine<sup>52</sup> |
| Share the Journey | Health after breast cancer treatment | Trends in fatigue, mood and cognitive changes, sleep disturbances, reduction in exercise for people who have been treated for breast cancer | Survey | Sage Bionetworks<sup>53</sup> |
| Neurons | Multiple sclerosis | MS symptoms through walking, dexterity, and Paced Auditory Serial Addition Test (PASAT) activities | Fitness, cognition, hand dexterity | Shazino<sup>37</sup> |
| Saker | Gait and emotions | When someone is scared based on motion sensors that monitor the user’s gait | Motor activities | Thomas Morrell<sup>38</sup> |
| uBiome | Gut bacteria/microbiome | Relationship between weight management and the microbiome | Survey, external service/feature | uBiome<sup>39</sup> |
| PPD ACT<sup>b</sup> | Postpartum depression (PPD) | Why specific subpopulations experience PPD | Survey | UNC School of Medicine<sup>40</sup> |
| mTECH | Energy drink consumption | Correlations between energy drink consumption and blood pressure, heart rate, daily step count, and sleep activity | Survey, fitness | University of the Pacific<sup>41</sup> |
| PRIDE Study | LGBTQ health | Health correlations among the LGBTQ population | Survey | UC San Francisco, THREAD Research<sup>42</sup> |
| Biogram 2 | Photo-sharing behavior | Whether there is a relation between photo-sharing in social media and heart rate, weight, and step count | Survey, fitness | USC Center for Body Computing, Medable<sup>43</sup> |
| Yale Cardiomyopathy Index | Cardiomyopathy | How cardiomyopathies or risks of developing cardiomyopathies affect perceived quality of life and physical health (heart rate and walking limitations) | Survey, fitness | Yale University<sup>44</sup> |
| Yale EPV | Pregnancy | Correlations between estimated placental volume (EPV) and birth outcomes | External service/feature | Yale University<sup>45</sup> |

<sup>a</sup> The data type category divisions were adapted from the ResearchKit Active Tasks categories (motor activities, fitness, cognition, voice, audio, hand dexterity),54 along with a few additional categories (surveys, camera, location, and external service/feature). The “External service/feature” category refers to the requirement of any service or technology that is not provided from within the app itself (eg, thermometer, external devices such as Apple Watch).

<sup>b</sup> PPD ACT: Postpartum Depression Action towards Causes and Treatment.

Table 3.

Frameworks

| Frameworks | Purpose | 1st Author/Sponsor, Year, Reference |
|---|---|---|
| Fast Healthcare Interoperability Resources (FHIRframe) | To establish data standards and an application program interface (API) for the exchange of health data | Anwar, 2015<sup>23</sup> |
| Ontology for Defining mHealth and Mapping Research Foci | To establish an ontology to provide a common definition for mHealth and as a resource to quantify areas of mHealth research | Cameron, 2017<sup>5</sup> |
| Comparison of ResearchKit Consent to Standard Consent | To validate the eConsent process used in ResearchKit | Duke University, 2017<sup>24</sup> |
| REDCap/ResearchKit Clinical Decision Support | To design a clinical decision support tool compatible between ResearchKit and REDCap API | Metts, 2017<sup>25</sup> |
| C3-PRO: the Consent, Contact, and Community framework for Patient Reported Outcomes | To design an open-source toolchain for connecting ResearchKit apps with the clinical research IT infrastructure i2b2 by implementing FHIR | Pfiffner, 2016<sup>26</sup> |
| Open Source Ecosystem Health Operationalization (OSEHO) | To evaluate the quality and health of open-source software ecosystem | Vulpen, 2017<sup>55</sup> |

Most apps enroll participants aged 17 or 18 years and older; 3 allow children to participate with a guardian’s permission or co-participation.35,38,47 Two allow participation from age 12 years and older.40,43,56 Several of the apps allow subjects who have not been diagnosed with the disease/condition of interest to participate as controls, while a few of the apps accept only subjects with a self-reported clinical diagnosis. The majority of apps are restricted to iPhone users only (with the exception of 4 apps available on Android),15,18,19,40 English only (3 exceptions),38,40,47 and U.S. only (1 exception).47

Most apps were conducting longitudinal, observational studies or simply amassing data that could be analyzed at a later time. Given the novelty of mHealth apps, a component of the purpose for all apps was to conduct preliminary research to validate the ability to recruit a large number of participants. Recruitment and consent data and demographic data for apps that had such data available are listed in Tables 4 and 5, respectively. Many studies relied heavily on survey data, whereas others utilized built-in phone sensors (see Table 1). Two applications required additional devices or services in order to utilize the app; EpiWatch required an Apple Watch, and uBiome required DNA sequencing of gut bacteria.39,56

Table 4.

Available recruitment and consent data

| App | Number of Downloads | Number of Participants | Consent Rate (persons/month) | Study Period (months) |
|---|---|---|---|---|
| Asthma Health | 40 683 | 6470 | 1078 | 6 |
| Back on Track | 953 | 549 | 61 | 9 |
| Depression Monitor | 8241 | 6089 | 1522 | 4 |
| Mole Mapper | 11 056 | 2069 | 373 | 7.5 |
| mPower | 48 104 | 9846 | 75 | 12 |
| PARADE | 1170 | 399 | 399 | 1 |
| WebMD Pregnancy | 49 411 | 1889 | 210 | 9 |
| 6th Vital Sign | 771 | 361 | 226 | 1.6 |
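The relationship between the columns of Table 4 can be explored with a short calculation. The Python sketch below assumes that the consent rate is simply the number of participants divided by the study period in months; this formula is an assumption, since the table does not state how the rate was derived. It also computes the share of downloads that became participants. The function and variable names are illustrative.

```python
# Values transcribed from Table 4:
# (app, downloads, participants, reported consent rate per month, study period in months)
TABLE_4 = [
    ("Asthma Health", 40683, 6470, 1078, 6),
    ("Back on Track", 953, 549, 61, 9),
    ("Depression Monitor", 8241, 6089, 1522, 4),
    ("Mole Mapper", 11056, 2069, 373, 7.5),
    ("mPower", 48104, 9846, 75, 12),
    ("PARADE", 1170, 399, 399, 1),
    ("WebMD Pregnancy", 49411, 1889, 210, 9),
    ("6th Vital Sign", 771, 361, 226, 1.6),
]


def summarize(rows, tolerance=1.0):
    """Compare participants/months with the reported rate and compute conversion."""
    summary = []
    for app, downloads, participants, reported, months in rows:
        computed = participants / months  # assumed definition of the consent rate
        summary.append({
            "app": app,
            "computed_rate": computed,
            "reported_rate": reported,
            "matches": abs(computed - reported) <= tolerance,
            "conversion": participants / downloads,  # share of downloads that enrolled
        })
    return summary


summary = summarize(TABLE_4)
mismatches = [row["app"] for row in summary if not row["matches"]]
overall_conversion = (
    sum(p for _, _, p, _, _ in TABLE_4) / sum(d for _, d, _, _, _ in TABLE_4)
)

for row in summary:
    print(f'{row["app"]:<20} {row["computed_rate"]:8.1f} {row["reported_rate"]:6} '
          f'{row["conversion"]:6.1%}')
print("Rows not reproduced by participants/months:", mismatches)
print(f"Overall conversion: {overall_conversion:.1%}")
```

Under this assumed formula, six of the eight reported rates are reproduced to within rounding, while the Mole Mapper and mPower rows are not. The mPower value of 75 is close to 898/12, where 898 is the number of mPower participants reported elsewhere in this review as having consented to broad data sharing, so these two rows may rest on a different numerator than the participant count shown.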

Table 5.

Available demographic statistics

| App | Gender (% female participants) | Age in Years, mean ± SD (range) | % Participants in 18–34 age range | % Participants with more than high school education |
|---|---|---|---|---|
| Asthma Health | 39% | NR (18–65+) | 60% | 89% |
| Back on Track | 26% | NR (18–54) | 54% | NR |
| Depression Monitor | 57.8% | 29.4 ± 10.8 (18–72) | 70% | 46.4% |
| Mole Mapper | 41.7% | NR (18–85+) | ∼50% | NR |
| mPower | 21.6% | 35.9 (NR) | NR | 57.5% |

NR = not reported.

All publications and apps included in the review were analyzed for recurring themes and challenges related to research protocols and technology implementation. Prominent themes include drop-offs in recruitment rates and low patient retention, non-representative demographic distributions, lack of information on how variable mobile use patterns could influence data quality, need for data validation, and privacy and security concerns related to use of mHealth apps for biomedical research and citizen science.

DISCUSSION

Based on our review and how traditional clinical trials are designed and deployed, we discuss 3 major areas as they relate to mHealth research apps, specifically those that are ResearchKit-based: 1) data generation and sharing, 2) participant recruitment and retention, and 3) privacy and security challenges.

Data generation and sharing

One of the most promising features of mHealth research apps is the ability to generate large biomedical datasets on diverse populations that can be shared and re-used for purposes of secondary research. To this end, it is critical to strategically plan and implement infrastructure that can promote data sharing and data quality through findable, accessible, interoperable, and reusable (FAIR) principles58 and to implement safeguards to protect data privacy.

To address data quality, apps such as Depression Monitor and Back on Track relied on surveys that were previously validated for self-administration.22,34 Other apps that used mobile sensor data employed methods to validate specific tasks such as motion sensing in the mPower app28 and self-directed skin examination and documentation in the Mole Mapper app.19 Nevertheless, there is a need for provisions to validate and ensure high quality of mHealth data. Currently, multiple frameworks have been proposed to evaluate general mHealth apps, but a single recognized framework specifically for mHealth research apps has yet to be established.59

Vulpen et al. applied select metrics from the Open Source Ecosystem Health Operationalization (OSEHO) framework to evaluate the quality of the ResearchKit platform as a whole.55 This study showed that ResearchKit enables productive app development through predesigned features; however, its robustness is seriously threatened by its links to several external, third-party tools and systems, the use of which is further incentivized by the potential selection bias of Apple users.55 While this suggests that market attention will likely shift away from ResearchKit in the future, the lessons learned and data generated from the first wave of ResearchKit apps will nevertheless be informative for the next generation of mHealth research apps.

Several collaborative research programs are actively developing tools to increase the quality of mHealth data. Supported by the Center of Excellence for Mobile Sensor Data-to-Knowledge (MD2K), mProv is an initiative focused on the development of cyberinfrastructure for annotating mobile sensor data with source, provenance, validation metrics, and semantics.60 MD2K also aims to develop and validate new digital biomarkers from mHealth data.61 Additionally, the Open Humans Project provides a centralized platform to deposit, share, and privately store personal health data, including datasets generated and curated through the ResearchKit apps.62

To promote interoperability, the Fast Healthcare Interoperability Resources (FHIR) framework seeks to establish standards and a common application program interface for the exchange of health data between apps and personalized health records.23 FHIR details the need for in-app embedded design and platform-agnostic services. This level of interoperability is yet to be widely implemented in mHealth research apps, although early efforts exist. For example, the Consent, Contact, and Community framework for Patient-Reported Outcomes (C3-PRO) provides an open-source toolchain to connect ResearchKit apps with clinical research infrastructure such as Informatics for Integrating Biology and the Bedside (i2b2).26 These efforts, together, demonstrate a promising trend towards a comprehensive ecosystem of tools and resources to deploy mHealth technology for biomedical research.
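To make the idea of a common exchange format concrete, the following Python sketch builds a minimal FHIR-style `QuestionnaireResponse` for a set of survey answers and serializes it to JSON. It is an illustration under stated assumptions rather than the format used by any app in this review: the helper name, the participant identifier, and the `linkId` values are hypothetical, and only a handful of the resource's fields are shown.

```python
import json
from datetime import datetime, timezone


def build_questionnaire_response(participant_id, answers):
    """Build a minimal FHIR-style QuestionnaireResponse as a plain dict.

    participant_id and the keys of `answers` are hypothetical examples;
    a real study would take them from its own questionnaire definition.
    """
    return {
        "resourceType": "QuestionnaireResponse",
        "status": "completed",
        "subject": {"reference": f"Patient/{participant_id}"},
        "authored": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "item": [
            # One item per survey question, keyed by its linkId.
            {"linkId": link_id, "answer": [{"valueInteger": value}]}
            for link_id, value in answers.items()
        ],
    }


payload = build_questionnaire_response("example-123", {"q1": 2, "q2": 0})
print(json.dumps(payload, indent=2))
```

Because the payload is ordinary JSON with a declared resource type, a receiving system that understands the same standard can, in principle, parse it without app-specific code.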

Retention and recruitment

Participant retention and recruitment are major pitfalls of clinical research that mHealth studies may remedy to an extent. Relative to traditional in-person clinical trials, mHealth studies have the ability to recruit from larger and more diverse populations in a more cost-effective manner.2 mHealth apps such as those based on ResearchKit allow for recruitment of subjects with rare conditions and stigmatized disorders, as well as those from marginalized populations.63–65 However, mHealth recruitment does face challenges in promoting the study and fostering an understanding of consent remotely. Additionally, it should be noted that development, deployment, and maintenance of ResearchKit-based apps are complex, often require support from third-party vendors, and add significant cost overhead to the overall study. With more demand and availability of readily interoperable systems (eg, Medable), these costs may be reduced in the future.66

Recruitment strategies

Potential study participants typically learn about clinical trials through listings in the ClinicalTrials.gov database or physician recommendation. However, the majority of apps were not listed on ClinicalTrials.gov. Physician recommendation is not yet viable on the national scale relevant to mHealth apps. Alternatively, exposure to ResearchKit apps was possible in news articles, eg, Forbes67 and The New York Times;68 within social media, eg, Twitter;69 and listings within the Apple App store such as search results in the “Health and Fitness” category.

Several publications commented on potential influences on study exposure. Multiple studies noted higher recruitment rates nearest the times of app release.11,34 The Depression Monitor study found that its initially high enrollment rate correlated well with the period during which the app was unintentionally featured in the Apple App Store.34 Asthma Health hypothesized that its initially high recruitment rate was a result of higher media attention upon release.11 Given that ResearchKit apps were relatively novel throughout the duration of these studies, media attention could be influencing the recruitment rates for all ResearchKit apps. Given time, passive recruitment rates may rise due to increased prevalence and common knowledge of self-directed mHealth research.

Retention

Both mHealth and standard clinical studies experience problems with patient retention. However, given the potential for large-scale data collection in mHealth studies, data trends and participant feedback can be utilized to improve retention. The main solutions that were proposed in the literature to combat low patient retention were increased participant engagement and feedback through 1 or more strategies such as gamification, monetary rewards, and utilization of push notifications.11,22,30 Until participant retention can be improved, mHealth studies can still be useful for developing large datasets that can be used as hypothesis-generating tools.11

In spite of retention difficulties, mHealth studies have demonstrated higher overall retention numbers than standard clinical trials. mPower, one of the first ResearchKit-based apps, was developed and deployed to study Parkinson’s disease (PD); it recruited more than 9000 participants, of whom 898 consented to broad data sharing and contributed 5 days’ worth of data.28 Traditional PD-related clinical trials, on the other hand, had sample sizes ranging from a few dozen to around 150 participants.70 Even with its steep dropoff rate, the mPower study was able to recruit and retain more subjects than any standard clinical trial for PD. Recruitment and consent data for the apps that reported them are summarized in Table 4.

Electronic consent

For most of the apps with available data, the majority of people who downloaded the app did not consent and contribute data to the study (see Table 4). This suggests that many people were interested in the study but were deterred by the enrollment and electronic consent (eConsent) processes. Challenges in communicating an understanding of consent documents warrant careful consideration by apps necessitating self-administered consent because, unlike standard in-person studies, a researcher cannot be present during the consent process to answer any questions and aid in a participant’s understanding of the research and potential risks associated with it.

The mPower app attempted to improve the experience of the remote consent process by using visually engaging graphics and maintaining transparency through incorporating an explicit decision point to determine the extent of data sharing.28 Furthermore, several apps’ consent processes required that subjects pass brief quizzes to demonstrate their comprehension of the study principles, their own rights, and data sharing options.16,19,28 The mPower study team conducted a qualitative study using open-ended responses from their participants on the consent process. In doing so, they were able to demonstrate that despite their strategies to communicate consent, many of their control group participants (those without PD) misunderstood their role in the study.30 Further research is needed to demonstrate how comprehension of eConsent protocols compares to that of standard in-person consent.

Potential selection bias

All but 3 of the apps15,18,19 were available only to Apple iPhone users with additional restrictions on the operating system version, which may lead to participant selection bias. Even among those who own iPhones, network data requirements could prevent some users from participation. The population that tends to use iPhones most frequently is more educated, younger, and wealthier than the average American citizen.71 Five out of the 7 apps that have published results included demographic data.11,19,22,30,34 Three out of the 5 had a majority of male participants.11,19,22 All 5 indicated that the most common age for participants was less than 35. Multiple studies suggested expanding their app to Android operating systems, the user base of which better represents the American population.11,19 Alternatively, the Back on Track study argued that it was exempt from this bias because its app engaged a target population with demographics comparable to those of iPhone users.22 In addition to smartphone requirements, some apps were further restricted by the requirement of additional devices or services to utilize the app; the EpiWatch app required an Apple Watch, and the uBiome app required DNA sequencing of gut bacteria.39,56 These requirements may further exclude low-income populations.

The source from which participants learn about an mHealth study is another potential source of bias in the demographic distribution of subjects. While none of the studies reported whether they included surveys or other strategies to track how participants learned about the studies, general media and social media were 2 channels of exposure to such studies. Also, the greater mistrust of clinical trials, in general, among underrepresented minority groups is likely to amplify the representation of majority groups in mHealth citizen science efforts.72 In contrast, monetary compensation could bias studies in favor of low-income populations. Most studies included in this review are currently not using monetary incentives (with the exception of DMT mPulse), although there are plans for implementing such practices in the future.11,73

Use patterns

The use of mHealth for biomedical research and citizen science is relatively novel, and so little research has been conducted as to how variable use patterns could bias research studies. For example, one of the Depression Monitor study findings showed that users who completed their survey more than once were most at risk of a higher depression severity score.34 This phenomenon has yet to be studied to determine whether those who completed the survey more than once were truly more prone to depression or if the higher score can be correlated to the use pattern of taking the survey more than once. In addition to repeating surveys, other examples of variable use patterns include varied interpretation of the app’s purpose, benefits, or instructions.

Studies may use open-response questions embedded in the app or other strategies such as instrumentation to monitor use patterns. An ancillary study of the mPower project to investigate participants’ experience in the eConsent process showed that some individuals use the app with the incorrect expectation that it will deliver some therapeutic benefit, and even those who understood there would be no therapeutic benefit were nonetheless frustrated with restricted access to their own data.30
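One simple form of the instrumentation mentioned above is to separate participants by how often they completed a survey, so that outcomes can be compared across use patterns rather than pooled. The sketch below is illustrative only; the event format (pairs of participant ID and survey ID) and the function names are assumptions, not a description of any reviewed app.

```python
from collections import Counter


def completion_counts(events):
    """Count completed surveys per participant.

    `events` is an iterable of (participant_id, survey_id) pairs,
    one pair per completed survey (assumed log format).
    """
    return Counter(participant_id for participant_id, _ in events)


def split_by_use_pattern(events):
    """Return (single_completers, repeat_completers) as sorted ID lists."""
    counts = completion_counts(events)
    single = sorted(pid for pid, n in counts.items() if n == 1)
    repeat = sorted(pid for pid, n in counts.items() if n > 1)
    return single, repeat


# Hypothetical completion log: participant "a" took the survey twice.
log = [("a", "survey"), ("b", "survey"), ("a", "survey"), ("c", "survey")]
single, repeat = split_by_use_pattern(log)
print(single)  # participants with exactly one completion
print(repeat)  # participants with more than one completion
```

Stratifying in this way does not by itself explain why scores differ between the two groups, but it makes the use pattern explicit so that it can be examined as a possible confounder.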

Privacy and security

There are widespread concerns over privacy and security in mHealth, given the sensitive nature of the data as well as methods used for user and data authentication.74 While there have been attempts to mitigate such risks, all mHealth research apps pose several privacy challenges, including the ability to identify participants or their behavioral characteristics from imaging data,19 multimodal sensor data, or open-ended prompts in which some participants included their contact information, despite instructions to the contrary.30

Current federal policies have been demonstrated to be insufficiently equipped to secure electronic protected health information (ePHI). Apps that enable biomedical citizen science grant the broader community access to data generated by general citizens for secondary research. Despite this intimate level of data sharing, these apps are generally exempt from the same degree of federal regulation as traditional clinical trials.3,4 Additionally, the Federal Trade Commission (FTC) has tried many cases against use of mHealth apps and mobile devices to collect users’ information, violating the Electronic Communications Privacy Act of 1986 (ECPA or The Wiretap Act).75 Furthermore, given threats posed by breaches of portable electronic devices75 and the susceptibility to privacy invasion,76 the emerging mHealth research apps need a deeper assessment of current privacy laws and their relevance to research and citizen science.

CONCLUSION

Self-directed mHealth research applications, particularly apps that are based on open-source platforms such as ResearchKit, hold significant promise for biomedical research and citizen science. This review of existing mHealth research studies and platforms highlights their ability to recruit, consent, and retain far greater numbers of participants than traditional clinical trials in a cost-effective manner, along with representation of diverse populations. In particular, the large datasets that are being generated from these studies will allow for development and validation of novel digital biomarkers as well as retrospective studies. However, in order to capitalize on these benefits, mHealth research studies must strive to improve retention rates, implement rigorous data validation strategies, incorporate FAIR data principles, and address emerging privacy and security challenges.

FUNDING

This work was supported in part by the Office of Research, Discovery, Innovation (RDI) at the University of Arizona.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

Conflict of interest statement. None declared.

REFERENCES

- 1. Trends, Charts, and Maps. ClinicalTrials.gov. 2018. https://clinicaltrials.gov/ct2/resources/trends. Accessed April 3, 2018.

- 2. Powell MR, To WJ. Redesigning the research design: accelerating the pace of research through technology innovation. In: 2016 IEEE Int Conf Serious Games Appl Heal SeGAH2016. doi: 10.1109/SeGAH.2016.7586269.

- 3. FDAAA 801 and the Final Rule. ClinicalTrials.gov. 2017. https://clinicaltrials.gov/ct2/manage-recs/fdaaa. Accessed July 30, 2018.

- 4. Rothstein MA, Wilbanks JT, Brothers KB. Citizen science on your smartphone: an ELSI research agenda. J Law Med Ethics 2015; 43: 897–903.

- 5. Cameron JD, Ramaprasad A, Syn T. An ontology of and roadmap for mHealth research. Int J Med Inform 2017; 100: 16–25.

- 6. Liberati A, Altman D, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med 2009; 6: e1000100.

- 7. Rachuri KK, Musolesi M, Mascolo C, et al. EmotionSense: A Mobile Phones based Adaptive Platform for Experimental Social Psychology Research. In: proceedings of the 12th ACM International Conference on Ubiquitous Computing—Ubicomp ’10 ACM 2010. 281–90. doi: 10.1145/1864349.1864393.

- 8. Ramanathan N, Alquaddoomi F, Falaki H, et al. Ohmage: an open mobile system for activity and experience sampling. In: International Conference on Pervasive Health 2012. 203–4.

- 9. Tracker C, Hepatitis C, Care & Collaboration—Patient Reported Outcomes Survey Study (CTracker). ClinicalTrials.gov. 2015. https://clinicaltrials.gov/ct2/show/NCT02568540. Accessed March 31, 2018.

- 10. Carmenate YI, Gonzales EM, Ge A, et al. Tracking heart rate changes during seizures: a national study using ResearchKit and Apple Watch to collect biosensor and response data during seizures. Epilepsia 2017; 58: S48.

- 11. Chan Y-FY, Wang P, Rogers L, et al. The Asthma Mobile Health Study, a large-scale clinical observational study using ResearchKit. Nat Biotechnol 2017; 35 (4): 354–62.

- 12. Gowda I, Genes N, Tignor N, et al. The Asthma Mobile Health Study: wildfires and asthma exacerbations, just blowing smoke or is there a correlation? Ann Emerg Med 2016; 68 (4): S69–70.

- 13. Deering S, Amdur A, Borelli J, et al. SleepHealth Mobile App Study: bringing the sleep lab to you. Sleep 2017; 40 (Suppl 1): A287–8.

- 14. Kimura T, Takatsuki S, Nakajima K, et al. Surveillance of heart beats and strokes using the iphone app in Japan: a preliminary report of the clinical study named ‘heart & brain’ using researchkit. Hear Rhythm 2016; 13 (S607). http://ovidsp.ovid.com/ovidweb.cgi?T=JS&PAGE=reference&D=emed18a&NEWS=N&AN=72284246. Accessed April 7, 2018.

- 15. Understanding Daily Fluctuations in Self-Regulation. ClinicalTrials.gov. 2016. https://clinicaltrials.gov/ct2/show/NCT03006653. Accessed April 7, 2018.

- 16. Radin J, Steinhubl S, Su A, et al. The healthy pregnancy research program: transforming pregnancy research through a ResearchKit app. Npj Digital Med 2018; 1 (1): 1–7.

- 17. MyHeart Counts Cardiovascular Health Study (MHC). ClinicalTrials.gov. 2017. https://clinicaltrials.gov/ct2/show/NCT03090321. Accessed March 31, 2018.

- 18. America Walks: An Observational Study of Walking Behavior Based on Passive Step Tracking (AMWALKS). ClinicalTrials.gov. 2016. https://clinicaltrials.gov/ct2/show/NCT02792439. Accessed March 31, 2018.

- 19. Webster DE, Suver C, Doerr M, et al. The Mole Mapper Study, mobile phone skin imaging and melanoma risk data collected using ResearchKit. Sci Data 2017; 4: 170005–8.

- 20. Williams R, Quattrocchi E, Watts S, et al. Patient rheumatoid arthritis data from the real world (PARADE) study: preliminary results from an apple researchkitTM mobile app-based real world study in the United States. Arthritis Rheumatol 2017; 69: 10–2.

- 21. Yang D, Thea J, An Y, et al. Digital health application for real-time patient-reported outcomes during prostate radiotherapy. J Clin Oncol 2016; 34: 157.

- 22. Zens M, Woias P, Suedkamp NP, et al. “Back on track”: a mobile app observational study using Apple’s ResearchKit framework. JMIR mHealth uHealth 2017; 5 (2): e23–10.

- 23. Anwar M, Doss C. Lighting the mobile information FHIR. J AHIMA 2015; 86 (9): 30–4.

- 24. Electronic Consent of Numerous Subjects Employing Novel Techniques Trial. ClinicalTrials.gov. 2016. https://clinicaltrials.gov/ct2/show/NCT02799407. Accessed April 1, 2018.

- 25. Metts C. REDCap, iOS, and ResearchKit integration for clinical decision support. Am J Clin Pathol 2017; 147 (Suppl 2): S177.

- 26. Pfiffner PB, Pinyol I, Natter MD, et al. C3-PRO: connecting ResearchKit to the health system using i2b2 and FHIR. PLoS One 2016; 11 (3): e0152722.

- 27. Chan YY, Bot BM, Zweig M, et al. The asthma mobile health study, smartphone data collected using ResearchKit. Sci Data 2018; 5: 180096.

- 28. Bot BM, Suver C, Neto EC, et al. The mPower study, Parkinson disease mobile data collected using ResearchKit. Sci Data 2016; 11: 1–9.

- 29. Neto EC, Bot B, Perumal T, et al. Personalized Hypothesis Tests for Detecting Medication Response in Parkinson Disease Patients using iPhone Sensor Data. In: Biocomputing 2016. World Scientific 2016. 273–84. doi: 10.1142/9789814749411_0026.

- 30. Doerr M, Maguire Truong A, Bot BM, et al. Formative evaluation of participant experience with mobile eConsent in the app-mediated Parkinson mPower Study: a mixed methods study. JMIR mHealth uHealth 2017; 5 (2): 1–11.

- 31. Abujrida H, Agu E, Pahlavan K. Smartphone-based gait assessment to infer Parkinson’s disease severity using crowdsourced data. Paper presented at 2017 IEEE Healthcare Innovations and Point of Care Technologies (HI-POCT). Bethesda, MD. IEEE. Retrieved April 9, 2018 (2017; pp.208-11), from IEEE Xplore.

- 32. Ata R, Gandhi N, Rasmussen H, et al. VascTrac: a study of peripheral artery disease via smartphones to improve remote disease monitoring and postoperative surveillance. J Vasc Surg 2017; 65 (6): 115S–6S.

- 33. Prvu Bettger J, Shaw R, Kelly J, et al. Early national dissemination and adoption of the sixth vital sign: a mobile app for population health surveillance. Circulation 2016; 134. http://circ.ahajournals.org/content/134/Suppl_1/A20560. Accessed April 16, 2018.

- 34. BinDhim NF, Shaman AM, Trevena L, et al. Depression screening via a smartphone app: cross-country user characteristics and feasibility. J Am Med Inform Assoc 2015; 22 (1): 29–34.

- 35. Feverprints. Bost. Child. Hosp. 2016. http://feverprints.com/. Accessed April 2, 2018.

- 36. StopCOPD. itunes. 2018. https://itunes.apple.com/us/app/stopcopd/id1020845469?mt=8. Accessed April 2, 2018.

- 37. Neurons. itunes. 2015. https://itunes.apple.com/app/neurons/id1060631628?ls=1&mt=8. Accessed April 2, 2018.

- 38. Saker. itunes. 2016. https://itunes.apple.com/gb/app/saker/id1093325855?mt=8. Accessed April 2, 2018.

- 39. uBiome. uBiome Launches First Microbiome App Using ResearchKit; Initial Focus is on Relationship Between Gut Bacteria and Weight Loss. uBiome. 2015. https://ubiomeblog.com/2015/10/09/ubiome-launches-first-microbiome-app-using-researchkit-initial-focus-is-on-relationship-between-gut-bacteria-and-weight-loss/. Accessed April 2, 2018.

- 40. Postpartum Depression: Action Towards Causes and Treatment. PPD ACT. 2017. http://www.pactforthecure.com/. Accessed April 17, 2018.

- 41. mTECH—An Energy Drink and Health Outcomes Study. AppShopper. 2015. http://appshopper.com/medical/mtech-–-an-energy-drink-and-health-outcomes-study. Accessed April 2, 2018.

- 42. The PRIDE Study. University of California, San Francisco. 2018. https://www.pridestudy.org/study. Accessed April 2, 2018.

- 43. Biogram 2. itunes. 2015. https://itunes.apple.com/us/app/biogram-2/id1043761259?mt=8. Accessed April 2, 2018.

- 44. Yale Cardiomyopathy Index. itunes. 2015. https://itunes.apple.com/us/app/yale-cardiomyopathy-index/id1043339894?mt=8. Accessed April 2, 2018.

- 45. Estimated Placental Volume (EPV). Yale Sch. Med. 2018. https://medicine.yale.edu/obgyn/kliman/placenta/epv/. Accessed April 2, 2018.

- 46. Hand in Hand Studies. University of Nebraska Medical Center. 2015. https://www.handinhandstudies.org/. Accessed April 2, 2018.

- 47. Autism & Beyond. itunes. 2015. https://itunes.apple.com/us/app/autism-beyond/id1025327516?mt=8. Accessed April 2, 2018.

- 48. Shridhare L. TeamStudy App Supports NFL Players Health Research. Harvard Medical School. 2016. https://hms.harvard.edu/news/teamstudy-app-supports-nfl-players-health-research. Accessed April 2, 2018.

- 49. Misra S. New COPD app launched by team behind popular Asthma Health ResearchKit app. iMedicalApps. 2015. https://www.imedicalapps.com/2015/12/copd-app-researchkit-lifemap/#. Accessed April 2, 2018.

- 50. GlucoSuccess by the Massachusetts General Hospital. App Advice. 2015. https://appadvice.com/app/glucosuccess/972143976. Accessed April 2, 2018.

- 51. Concussion Tracker App. NYU Langone Heal. 2018. https://nyulangone.org/apps/concussion-tracker-app. Accessed April 2, 2018.

- 52. Sarcoidosis. itunes. 2017. https://itunes.apple.com/us/app/sarcoidosis/id1188827249?mt=8. Accessed April 17, 2018.

- 53. Share the Journey: Mind, Body, and Wellness after Breast Cancer. Sage Bionetworks. 2015. http://sharethejourneyapp.org/. Accessed April 2, 2018.

- 54. ActiveTasks Document. ResearchKit. 2017. http://researchkit.org/docs/docs/ActiveTasks/ActiveTasks.html. Accessed August 5, 2018.

- 55. Vulpen PV, Menkveld A, Jansen S. Health measurement of data-scarce software ecosystems: a case study of Apple’s In: Ojala A, Holmström, Olsson H, Werder K, eds. Software Business. Paper presented at the 8th International Conference on Software Business (ICSOB) 2017: 131–45. Essen, Germany: Springer International.

- 56. Johns Hopkins EpiWatch: App and Research Study. Johns Hopkins Medicine. https://www.hopkinsmedicine.org/epiwatch/index.html#.WSYUsmjyvIU. Accessed April 2, 2018.

- 57. VascTrac. Stanford Med. http://vasctrac.stanford.edu/. Accessed April 2, 2018.

- 58. Wilkinson MD, Dumontier M, Aalbersberg IJJ, et al. The FAIR guiding principles for scientific data management and stewardship. Sci Data 2016; 3: 160018.

- 59. Nouri R, Kalhori SRN, Ghazisaeedi M, et al. Review criteria for assessing the quality of mHealth apps: a systematic review. J Am Med Informatics Assoc 2018; 25 (8): 1089–98.

- 60. Haddad D. mProv: a new initiative to create provenance infrastructure to enable sharing of high-frequency mobile sensor data. Open mHealth. 2016. http://www.openmhealth.org/mprov-press-release/. Accessed April 3, 2018.

- 61. Kumar S, Abowd G, Abraham WT, et al. Center of excellence for mobile sensor data-to-knowledge (MD2K). IEEE Pervasive Comput 2017; 16 (2): 18–22.

- 62. About Open Humans. OpenHumans.org. 2015. https://www.openhumans.org/about/. Accessed June 21, 2018.

- 63. Mohammadi D. ResearchKit: a clever tool to gather clinical data. Pharm J 2015; 294: 513–6.

- 64. Morgan AJ, Jorm AF, Mackinnon AJ. Internet-based recruitment to a depression prevention intervention: lessons from the Mood Memos study. J Med Internet Res 2013; 15 (2): e31.

- 65. Dolan B. How mobiles make clinical trials bigger, faster and more efficient | MobiHealthNews. mobihealth news. 2013. http://www.mobihealthnews.com/28198/how-mobiles-make-clinical-trials-bigger-faster-and-more-efficient. Accessed April 18, 2018.

- 66. Pennic J. Medable Launches Axon to Build ResearchKit Apps without a Developer. HIT Consult. 2016. https://hitconsultant.net/2016/09/27/medable-launches-axon/. Accessed July 30, 2018.

- 67. Utley T. Could This New ResearchKit App Help Develop The Fitness Tracker For The Brain? Forbes 2017: 1–2. https://www.forbes.com/sites/toriutley/2017/05/31/could-this-new-researchkit-app-help-develop-the-fitness-tracker-of-the-brain/#5bcb6c1a77c9. Accessed March 27, 2018.

- 68. Singer N. Apple, in Sign of Health Ambitions, Adds Medical Records Feature for iPhone—The New York Times. New York Times. 2018. https://www.nytimes.com/2018/01/24/technology/Apple-iPhone-medical-records.html. Accessed March 27, 2018.

- 69. #researchkit hashtag on Twitter. https://twitter.com/hashtag/researchkit?lang=en. Accessed March 27, 2018.

- 70. Allen NE, Sherrington C, Suriyarachchi GD, et al. Exercise and motor training in people with Parkinson’s disease: a systematic review of participant characteristics, intervention delivery, retention rates, adherence, and adverse events in clinical trials. Parkinsons Dis 2012; 2012: 854328.

- 71. Smith A. Smartphone Ownership 2013. Pewinternet.org. 2013. http://www.pewinternet.org/2013/06/05/smartphone-ownership-2013/. Accessed March 27, 2018.

- 72. Langford A, Resnicow K, An L. Clinical trial awareness among racial/ethnic minorities in HINTS 2007: sociodemographic, attitudinal, and knowledge correlates. J Health Commun 2010; 15 (Suppl 3): 92–101.

- 73. The Digital Marshmallow Test. DMT. 2018. http://digitalmarshmallow.org/. Accessed June 1, 2018.

- 74. Kotz D, Gunter CA, Kumar S, et al. Privacy and security in mobile health: a research agenda. Computer (Long Beach Calif) 2016; 49 (6): 22–30.

- 75. Helm A, Georgatos D. Privacy and mHealth: how mobile health ‘apps’ fit into a privacy framework not limited to HIPAA. Syracuse Law Rev 2014; 64: 132–70. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2465131. Accessed March 29, 2018.

- 76. He D, Naveed M, Gunter CA, et al. Security concerns in Android mHealth apps. AMIA Annu Symp Proc 2014; 2014: 645–54. http://www.ncbi.nlm.nih.gov/pubmed/25954370. Accessed March 31, 2018.
