Abstract

Objective

Conduct a cluster analysis of inpatient portal (IPP) users from an academic medical center to improve understanding of who uses these portals and how.

Methods

We used 18 months of data from audit log files recording IPP user actions for 2815 patient admissions. A hierarchical clustering algorithm was used to group patient admissions on the basis of the proportion of use of each of 10 IPP features. Post-hoc analyses were conducted to further understand IPP use.

Results

A 5-cluster solution was selected for the study sample. Our taxonomy included users who predominantly accessed features for reviewing schedules, reviewing results, viewing tutorials, and ordering food. Patients tended to stay within their clusters over multiple admissions, and the clusters differed in patient and clinical characteristics.

Discussion

Distinct groups exist among IPP users, suggesting that training on IPP use to enhance patient engagement could be tailored to patients. More exploration is also needed to understand why certain features were rarely used in any cluster.

Conclusions

It is important to understand the specifics about how patients use IPPs to help them better engage with their healthcare. Our taxonomy enabled characterization of 5 groups of IPP users who demonstrated distinct preferences. These results may inform targeted improvements to IPP tools, could provide insights to improve patient training around portal use, and may help care team members effectively engage patients in the use of IPPs. We also discuss the implications of our findings for future research.

Keywords: patient portals, cluster analysis, health information technology, technology acceptance, technology use

INTRODUCTION

Technology has transformed the relationship between patients and the systems that deliver care through the development of tools and applications that allow patients to engage with both their health information and their care providers using the convenience of an Internet connection. Patient portals, most frequently made available to patients outside the clinical encounter, allow patients to view their medical and laboratory information and help them to communicate with their providers.1,2 However, a relatively new class of patient portals offers these same applications concurrent with the clinical encounter.

Called inpatient portals (IPPs),3,4 these applications have been developed for use on electronic tablets as part of the delivery of care during a patient’s hospital stay and offer a suite of features appropriate to the inpatient care process. For example, IPPs can provide telemetry data and can offer communication applications that enable patients to follow their treatments and care as they change over the course of the clinical visit. While the absence of the policy stimulus that fostered widespread adoption of outpatient portals (OPPs) has resulted in slower adoption of facility-based IPPs,4 these applications have the potential to empower patients during hospitalization,5 a time during which they may feel acutely vulnerable to their health condition.6 As a result, IPPs are seen as transformative to the inpatient care experience.7

Given the different contexts, toolsets, and dynamics associated with the use of IPPs in relation to their outpatient analogues, understanding the specifics about how patients use this technology is critical to enabling hospitals and healthcare systems to realize the potential positive impact of IPPs on both patient engagement and health outcomes. Research exploring the use of portals has focused on specific groups of patients or organizational units; portals have rarely been studied in any wide-scale implementation.8,9 One notable exception was a study by Jones and colleagues10 of OPP users with specific chronic conditions at a large, academic medical center (AMC); using a hierarchical cluster analysis of patient portal use and demographic characteristics, the researchers categorized 8 distinct groups of OPP users. For example, “eDabblers” were identified as patients who engaged in a few short sessions on the portal, while “Infrequent, Intense Users” showed low frequency of use but high intensity when they used the portal. Although this study offered early insight into how patients use portals, it was limited to the outpatient setting and the use patterns of a select group of patients.

Our study is designed to assess how hospitalized patients across an AMC use IPPs during their stay. By empirically investigating portal use in this context, we aim to understand who uses IPPs and how IPPs are used. Improving our understanding of portal use among inpatients can provide researchers with profiles of use behavior that can be applied in hypothesis testing about the effect of patient and contextual attributes. Moreover, identifying and characterizing different groups of users may help providers and organizations to better communicate and work collaboratively with patients based on different portal use patterns.

The inpatient portal system

Our Midwestern AMC performed a staged system-wide implementation of the Epic Systems’ MyChart Bedside (MCB) product across its 6 hospitals beginning in 2016.3,11 When provisioned with a tablet during their inpatient stay, patients, their families, and caregivers have access to MCB features provided by the AMC; data generated throughout the inpatient stay are subsequently linked to their electronic health record (EHR) and become accessible through Epic’s ambulatory portal system, MyChart Ambulatory. Table 1 presents the 10 features provided by the AMC’s MCB application.

Table 1.

MyChart Bedside inpatient portal features

| Feature | Description |
|---|---|
| Happening Soon | Review scheduled upcoming interactions with the care team |
| I Would Like | Request one of a number of ancillary services |
| Dining on Demand | Order a meal from a predefined menu |
| Getting Started | Access tutorials on the use of MyChart Bedside |
| My Health | Review vitals and laboratory test results |
| Message Send | Send a secure message as part of an ongoing conversation |
| Check Message | Look at the ongoing secure message conversation |
| Notes | Record and review personal notes (audio and written) |
| Taking Care of Me | Review active members of the care team |
| To Learn | Access training materials through a link to an external health information content provider |

METHODS

Overview

This study was part of a larger, ongoing assessment of MCB at our AMC described by McAlearney and colleagues;12 that assessment used a randomized controlled trial to compare the effects of different levels of access to IPP features and of training on IPP use and clinical outcomes. Upon admission to any unit in the 6 hospitals, patients were automatically randomized using a script built into the Epic EHR system. This script assigned patients to a study arm based on predefined random numbers corresponding to the last 4 digits of the patient’s medical record number. The randomization assigned patients along 2 dimensions: technology and touch. For the technology assignment, the options were high-tech (full MCB features) or low-tech (limited MCB features); for the touch assignment, the options were high-touch (in-person training) or low-touch (video training built into MCB). Nurses were blinded to each patient’s assigned study arm.
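To make the allocation mechanism concrete, the Python sketch below predefines a random arm for every possible 4-digit suffix and then looks up a patient's arm from the last 4 digits of the medical record number. It is an illustration only: the seed, the balanced allocation of 2500 suffixes per arm, and the function names are assumptions, not details of the script built into the Epic EHR.

```python
import random

# Hypothetical labels for the 2 x 2 (technology x touch) design described above.
ARMS = [
    ("high-tech", "high-touch"),
    ("high-tech", "low-touch"),
    ("low-tech", "high-touch"),
    ("low-tech", "low-touch"),
]


def build_assignment_table(seed=2016):
    """Predefine a random arm for every 4-digit suffix 0000-9999.

    The seed and the balanced allocation (2500 suffixes per arm) are
    illustrative assumptions, not the values used by the EHR script.
    """
    suffixes = [f"{i:04d}" for i in range(10_000)]
    arms = [ARMS[i % len(ARMS)] for i in range(len(suffixes))]
    random.Random(seed).shuffle(arms)  # fixed seed -> reproducible table
    return dict(zip(suffixes, arms))


def assign_arm(mrn, table):
    """Look up the arm from the last 4 digits of a medical record number."""
    suffix = str(mrn).zfill(4)[-4:]
    return table[suffix]


table = build_assignment_table()
print(assign_arm("00123456", table))
```

Because the table is fixed in advance, in this sketch a patient readmitted under the same medical record number always lands in the same arm.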

Upon admission, nurses conducted an assessment to determine whether the patient should be offered an Android tablet equipped with MCB. Patients who were over age 18, English speaking, not incarcerated, and physically and cognitively capable of managing the technology were eligible to receive tablets. After patients agreed that they would like access to the tablet during their hospital stay, the nurse initialized the tablet with the patient’s MCB account. The initialization process automatically loaded the correct (ie, high-tech or low-tech) version of MCB. When the patient logged into MCB for the first time and accepted the terms of use, the patient was directed to an electronic study consent page in order to ask about study participation. Study participation was voluntary, and participants were entered into a weekly raffle for a $100 gift card as a token of appreciation for their participation. This study was approved by the AMC’s Institutional Review Board.

Study sample

For the purposes of this study of IPP use, only patients assigned to the high-tech, low-touch group were included in the study sample. This decision was made due to 2 main factors: 1) patients in the high-tech group had access to all MCB features; and 2) patients’ use of MCB in the low-touch group would not be influenced by the in-person training delivered to patients in the high-touch intervention group.

Additional exclusion criteria for the study sample in these analyses included: 1) length of stay beyond 30 days; and 2) tablet provisioned on the day of discharge. For the first exclusion criterion, we excluded the small number of patients who had inpatient stays beyond 30 days because their tablet use may be noticeably different from that of the vast majority of patients with shorter stays. The second exclusion criterion was designed to ensure we included patients who used the tablet for at least 1 day.
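A minimal sketch of how the arm restriction and the 2 exclusion criteria could be applied to admission records follows. It assumes a hypothetical `Admission` record with the fields shown; the field names are illustrative, and treating "tablet provisioned on the day of discharge" as `tablet_date == discharge_date` is our reading of the criterion.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Admission:
    """Hypothetical admission record; field names are illustrative."""
    admission_id: str
    tech_arm: str        # "high-tech" or "low-tech"
    touch_arm: str       # "high-touch" or "low-touch"
    admit_date: date
    discharge_date: date
    tablet_date: date    # day the tablet was provisioned


def in_study_sample(adm):
    """Apply the arm restriction and the 2 exclusion criteria to one admission."""
    length_of_stay = (adm.discharge_date - adm.admit_date).days
    return (
        adm.tech_arm == "high-tech"
        and adm.touch_arm == "low-touch"
        and length_of_stay <= 30                  # drop stays beyond 30 days
        and adm.tablet_date < adm.discharge_date  # drop day-of-discharge provisioning
    )


admissions = [
    Admission("A1", "high-tech", "low-touch", date(2017, 3, 1), date(2017, 3, 6), date(2017, 3, 2)),
    Admission("A2", "high-tech", "low-touch", date(2017, 3, 1), date(2017, 4, 15), date(2017, 3, 2)),
    Admission("A3", "high-tech", "low-touch", date(2017, 3, 1), date(2017, 3, 6), date(2017, 3, 6)),
    Admission("A4", "low-tech", "low-touch", date(2017, 3, 1), date(2017, 3, 6), date(2017, 3, 2)),
]
sample = [a.admission_id for a in admissions if in_study_sample(a)]
print(sample)  # ['A1']
```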

Our final study sample contained 2815 patient admissions from 1793 unique patients, 543 of whom had multiple admissions.

Data sources

Our primary sources of data were audit log files from January 2017 to June 2018, a period of time following the initial MCB rollout that can be characterized as stable with respect to MCB implementation and use. Audit log files are server-based records of patient MCB user actions. Log file data analysis has been cited by researchers as creating opportunities to identify patterns in the use of specific features as opposed to simply measuring the length of exposure an individual has to a technology.13,14

Log file data extracted from the AMC Information Warehouse contained 3 primary variables pertinent to this study: 1) coded medical record number; 2) activity code (ie, accessing a specific MCB feature); and 3) timestamp when the activity took place. The data were processed to ensure a patient was associated with his or her relevant actions in the MCB application. This data set was linked to admission, discharge, and transfer records; hospital charges; and the annual patient summary report by a patient’s medical record number. In some circumstances, other unique identifiers such as time of admission or discharge and hospital account identifiers also were available and were linked to the data as appropriate.
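The linkage step can be sketched as assigning each audit-log event to the admission, for the same medical record number, whose admission-to-discharge window contains the event timestamp. The tuple layouts, activity codes, and function name below are hypothetical; the actual warehouse schema is not described here.

```python
from datetime import datetime


def link_events_to_admissions(events, admissions):
    """Attach each audit-log event to the admission window that contains it.

    events:     list of (mrn, activity_code, timestamp)          # hypothetical layout
    admissions: list of (mrn, admission_id, admit_time, discharge_time)
    Returns (linked, unmatched); linked maps admission_id -> [(code, timestamp)].
    """
    windows = {}
    for mrn, adm_id, start, end in admissions:
        windows.setdefault(mrn, []).append((start, end, adm_id))
    linked, unmatched = {}, []
    for mrn, code, ts in events:
        adm_id = next(
            (a for start, end, a in windows.get(mrn, []) if start <= ts <= end), None
        )
        if adm_id is None:
            unmatched.append((mrn, code, ts))  # outside every admission window
        else:
            linked.setdefault(adm_id, []).append((code, ts))
    return linked, unmatched


admissions = [
    ("m1", "A1", datetime(2017, 1, 1, 8), datetime(2017, 1, 4, 12)),
    ("m1", "A2", datetime(2017, 2, 1, 8), datetime(2017, 2, 3, 12)),
]
events = [
    ("m1", "MY_HEALTH", datetime(2017, 1, 2, 9)),
    ("m1", "DINING", datetime(2017, 2, 2, 9)),
    ("m1", "DINING", datetime(2017, 3, 1, 9)),
    ("m2", "NOTES", datetime(2017, 1, 2, 9)),
]
linked, unmatched = link_events_to_admissions(events, admissions)
print(sorted(linked))  # ['A1', 'A2']
print(len(unmatched))  # 2
```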

Variable creation

Variables created for the study analyses are listed and described in Table 2. The study authors initially defined a rubric that identified which MCB tasks were considered “active” in the data, and a lead MCB project manager from the information technology department subsequently validated this rubric. This process distinguished tasks recorded in the log files that require action by the user (active) from tasks that are navigational (not active) and occur automatically. Using data from each admission of an MCB user, we first counted the number of times a patient accessed each of the 10 MCB features during the admission. Because patients stay in the hospital for varying lengths of time, each feature count was then divided by the patient’s total use of all features during the admission. The resulting measure can be interpreted as the proportion of use for each MCB feature. This approach standardized each patient’s MCB use so that user groups could be identified through clustering.
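The proportion-of-use measure can be sketched as follows. This assumes the log has already been filtered to active tasks and mapped to the 10 feature names used in Table 4; `feature_proportions` is a hypothetical helper, not part of any Epic tool.

```python
from collections import Counter

# The 10 MCB features, named as in Table 4.
FEATURES = [
    "Happening Soon", "I Would Like", "Dining on Demand", "Getting Started",
    "My Health", "Message Send", "Check Message", "Notes",
    "Taking Care of Me", "To Learn",
]


def feature_proportions(active_events):
    """Return each feature's share of one admission's active feature use.

    `active_events` holds one feature name per active task; navigational
    (non-active) tasks are assumed to have been removed already.
    """
    counts = Counter(e for e in active_events if e in FEATURES)
    total = sum(counts.values())
    if total == 0:
        return [0.0] * len(FEATURES)  # no active use recorded
    return [counts[f] / total for f in FEATURES]


events = ["Dining on Demand"] * 3 + ["Happening Soon"]
print(feature_proportions(events))
# [0.25, 0.0, 0.75, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

Dividing by the admission's total active use, rather than by days, makes admissions of different lengths comparable on the same 0 to 1 scale.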

Table 2.

Description of study data sources and variables

| Variable | Source (Variable Construction) |
|---|---|
| Admission and discharge date/time | Admission, Discharge, and Transfer Record |
| Clinical unit of admission | Admission, Discharge, and Transfer Record |
| Length of stay | Admission, Discharge, and Transfer Record (Admission date subtracted from discharge date) |
| MyChart Ambulatory/Bedside use | Epic Audit Log File |
| Age | Annual Patient Summary Report |
| Gender | Annual Patient Summary Report |
| Count of diagnoses | Hospital Charge Data |
| Principal diagnosis | Hospital Charge Data |
| Charlson Comorbidity Index | Hospital Charge Data (Weighted score of 17 conditions15) |
| Provision length | Epic Audit Log File (First portal use day subtracted from discharge date) |
| Session | Epic Audit Log File (Discrete period of MyChart Bedside use that begins with the first active task within an admission or with any active task after 15 minutes of inactivity) |
| Frequency of use of each feature | Epic Audit Log File (Sum of use of a feature on provisioned days) |
| Comprehensiveness of use | Epic Audit Log File (Number of distinct features used on provisioned days) |
| Proportion of feature use | Epic Audit Log File (Sum of use of a feature divided by sum of use of all features on provisioned days) |
| Sessions per provisioned day | Epic Audit Log File (Sum of sessions divided by number of provisioned days) |
| Daily feature use | Epic Audit Log File (Sum of use of a feature on a provisioned day divided by sum of use of all features on that provisioned day) |
| Lifetime feature use | Epic Audit Log File (Sum of use of a feature over the lifetime of MyChart Bedside use) |
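The session definition in Table 2 can be illustrated with a short sketch that counts sessions from one admission's active-task timestamps. Whether a gap of exactly 15 minutes starts a new session is not specified; the sketch assumes it does (`>=`), and the function names are hypothetical.

```python
from datetime import datetime, timedelta

INACTIVITY_GAP = timedelta(minutes=15)


def count_sessions(timestamps, gap=INACTIVITY_GAP):
    """Count sessions in one admission's active-task timestamps.

    The first active task opens a session; any active task that follows at
    least `gap` of inactivity opens another (the >= boundary is assumed).
    """
    ordered = sorted(timestamps)
    if not ordered:
        return 0
    return 1 + sum(1 for prev, curr in zip(ordered, ordered[1:]) if curr - prev >= gap)


def sessions_per_provisioned_day(timestamps, provisioned_days):
    """Sessions divided by provisioned days (assumed to be at least 1)."""
    return count_sessions(timestamps) / provisioned_days


t0 = datetime(2017, 3, 1, 9, 0)
stamps = [t0, t0 + timedelta(minutes=5), t0 + timedelta(minutes=30), t0 + timedelta(hours=3)]
print(count_sessions(stamps))                   # 3
print(sessions_per_provisioned_day(stamps, 2))  # 1.5
```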

Analysis

We used cluster methods and statistical comparisons of means to analyze the study data. A hierarchical agglomerative clustering algorithm was used to group patient admissions on the basis of the proportion of use of each IPP feature: each patient admission initially formed its own cluster, and at each subsequent step the 2 most similar clusters were merged. We used Ward’s method, under which the 2 clusters merged at each step are those whose fusion produces the smallest increase in the within-cluster sum of squares across the complete set of proportion-of-feature-use variables.16
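The merge rule can be made concrete with a naive pure-Python version of Ward's agglomerative clustering on squared Euclidean distances, using the Lance–Williams update. This sketch is not the Stata implementation used in the study. It runs in roughly cubic time and is meant only for small illustrative inputs; the function name and the tie-breaking (first pair found) are assumptions.

```python
def ward_clusters(points, k):
    """Naive Ward's agglomerative clustering; returns one label per point.

    Every point starts as its own cluster; the pair whose merge adds the
    least within-cluster sum of squares is fused until k clusters remain.
    """
    n = len(points)
    members = {i: [i] for i in range(n)}
    # Squared Euclidean distances between singleton clusters, keyed (low, high).
    dist = {
        (i, j): sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
        for i in range(n)
        for j in range(i + 1, n)
    }

    def d(a, b):
        return dist[(a, b)] if a < b else dist[(b, a)]

    next_id = n
    while len(members) > k:
        i, j = min(dist, key=dist.get)  # cheapest merge
        ni, nj = len(members[i]), len(members[j])
        for m in members:
            if m in (i, j):
                continue
            nm = len(members[m])
            # Lance-Williams update for Ward's method.
            dist[(m, next_id)] = (
                (ni + nm) * d(m, i) + (nj + nm) * d(m, j) - nm * d(i, j)
            ) / (ni + nj + nm)
        members[next_id] = members.pop(i) + members.pop(j)
        dist = {key: v for key, v in dist.items() if i not in key and j not in key}
        next_id += 1

    labels = [0] * n
    # Number clusters in order of their smallest member index.
    for label, cid in enumerate(sorted(members, key=lambda c: min(members[c]))):
        for idx in members[cid]:
            labels[idx] = label
    return labels


# Hypothetical 3-feature proportion vectors for 5 admissions.
admissions = [
    (0.80, 0.10, 0.10), (0.75, 0.15, 0.10),
    (0.10, 0.80, 0.10), (0.10, 0.75, 0.15),
    (0.10, 0.10, 0.80),
]
print(ward_clusters(admissions, 3))  # [0, 0, 1, 1, 2]
```

With squared Euclidean input, each Lance–Williams distance is proportional to the increase in within-cluster sum of squares that the merge would cause, so always merging the smallest entry follows Ward's criterion.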

Our final cluster solution placed each admission into a single group, minimizing differences in portal feature use within clusters and maximizing differences in feature use between clusters. The optimal number of clusters was determined using the stopping rules of the Calinski/Harabasz pseudo-F index17 and Duda/Hart scores,18 together with inspection of the cluster dendrogram. Major steps of the cluster analysis included: 1) exploring the data for atypical values that might be outliers (we examined portal use by provision day to identify unusual differences among users and found none); 2) conducting a split-half cluster analysis, in which observations were randomly split into 2 halves and the clustering approach was applied to each half, to assess the reliability of our cluster solutions; and 3) applying our cluster method to the full study sample and comparing the optimal number of clusters with those identified in the split-half analyses (in terms of distinguishing features and cluster size). Based on our results from the study sample, we explored how the 210 patients with at least 3 admissions moved across clusters over their first 3 admissions. We examined differences in typical patient demographic and clinical characteristics to assess variability across clusters. We also identified, for each cluster, the top 5 clinical units (by number of admissions) in which patients primarily used the inpatient portal (87% of our admissions had all of their portal use in 1 unit).
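The Calinski/Harabasz stopping rule compares between-cluster and within-cluster dispersion, each divided by its degrees of freedom. A minimal pure-Python sketch of the pseudo-F, and of choosing the candidate number of clusters with the largest value, might look like this. It assumes candidate labelings have already been produced (eg, by cutting a dendrogram at several heights), and it implements neither the Duda/Hart rule nor the split-half check.

```python
def calinski_harabasz(points, labels):
    """Pseudo-F = [between-cluster SS / (k - 1)] / [within-cluster SS / (n - k)]."""
    n, dim = len(points), len(points[0])
    clusters = sorted(set(labels))
    k = len(clusters)
    if not 1 < k < n:
        raise ValueError("pseudo-F needs 2 <= k < n clusters")
    grand = [sum(p[d] for p in points) / n for d in range(dim)]
    between = within = 0.0
    for c in clusters:
        group = [p for p, lab in zip(points, labels) if lab == c]
        centroid = [sum(p[d] for p in group) / len(group) for d in range(dim)]
        between += len(group) * sum((centroid[d] - grand[d]) ** 2 for d in range(dim))
        within += sum(sum((p[d] - centroid[d]) ** 2 for d in range(dim)) for p in group)
    return (between / (k - 1)) / (within / (n - k))


def best_k(points, candidate_labelings):
    """Return the k (dict key) whose labeling has the largest pseudo-F."""
    return max(candidate_labelings,
               key=lambda k: calinski_harabasz(points, candidate_labelings[k]))


pts = [(0.0,), (1.0,), (10.0,), (11.0,)]
print(calinski_harabasz(pts, [0, 0, 1, 1]))              # 200.0
print(best_k(pts, {2: [0, 0, 1, 1], 3: [0, 0, 1, 2]}))  # 2
```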

We conducted 3 post-hoc analyses to further understand IPP use. First, we applied our clustering method separately to patient admissions with lengths of stay of less than 3 days, 3 to 7 days, and more than 7 days. Second, we applied our clustering method to a subset of patients who were first-time IPP users and had 4 consecutive days of portal use; specifically, we performed 1 clustering analysis of their lifetime feature use and 4 separate analyses of their daily feature use, 1 per provision day. The construction of these variables is described in Table 2. Third, we conducted 2 separate cluster analyses based on use of the AMC’s OPP (ie, MyChart Ambulatory). We compared patient admissions with OPP use before the admission with IPP use against admissions without such prior OPP use, and we compared admissions in which the OPP was used during the admission, concurrent with IPP use, against admissions in which it was not.
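The stratification and subsetting rules in the first 2 post-hoc analyses can be sketched as 2 small helpers. Placing both the 3-day and 7-day boundaries in the middle stratum follows the wording above; the helper names and the use of calendar dates for "consecutive days" are assumptions.

```python
from datetime import date, timedelta


def los_stratum(length_of_stay_days):
    """Post-hoc strata: <3 days, 3 to 7 days (inclusive), >7 days."""
    if length_of_stay_days < 3:
        return "<3 days"
    if length_of_stay_days <= 7:
        return "3-7 days"
    return ">7 days"


def has_consecutive_use(use_dates, run_length=4):
    """True if portal use occurred on `run_length` consecutive calendar days."""
    days = sorted(set(use_dates))
    if run_length <= 1:
        return bool(days)
    streak = 1
    for prev, curr in zip(days, days[1:]):
        streak = streak + 1 if curr - prev == timedelta(days=1) else 1
        if streak >= run_length:
            return True
    return False


d0 = date(2017, 5, 1)
print([los_stratum(x) for x in (2, 3, 7, 8)])  # ['<3 days', '3-7 days', '3-7 days', '>7 days']
print(has_consecutive_use([d0 + timedelta(days=i) for i in range(4)]))  # True
print(has_consecutive_use([d0, d0 + timedelta(days=1), d0 + timedelta(days=3)]))  # False
```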

Although iterative or non-hierarchical partitioning (eg, K-means clustering) is an alternative approach to clustering, we did not use it because such methods require the number of clusters to be specified in advance, and we lacked a priori information about the number of clusters to expect from this data set. To examine differences in patient characteristics across the clusters of IPP users identified in our final solution, we conducted statistical comparison tests (eg, one-way ANOVA and the Kruskal–Wallis equality-of-populations rank test). All statistical analyses were performed using Stata 15.1; for clustering, we used the Stata cluster command with Ward’s linkage and squared Euclidean distance.
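As an illustration of the 2 tests named above, the following pure-Python sketch computes the one-way ANOVA F statistic and the tie-corrected Kruskal–Wallis H statistic for a list of samples, one per cluster. It returns test statistics only, not P values, and it is not the Stata implementation used in the study.

```python
def one_way_anova_f(groups):
    """One-way ANOVA F statistic; `groups` is a list of samples, one per cluster."""
    values = [x for g in groups for x in g]
    n, k = len(values), len(groups)
    grand = sum(values) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H with average ranks for ties and the usual tie correction.

    Undefined (division by zero) if every value is tied.
    """
    pooled = sorted((x, gi) for gi, g in enumerate(groups) for x in g)
    n = len(pooled)
    rank_sums = [0.0] * len(groups)
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for _, gi in pooled[i:j + 1]:
            rank_sums[gi] += avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    h = 12 / (n * (n + 1)) * sum(r ** 2 / len(g) for r, g in zip(rank_sums, groups))
    h -= 3 * (n + 1)
    return h / (1 - tie_term / (n ** 3 - n))


groups = [[1, 2, 3], [4, 5, 6]]
print(one_way_anova_f(groups))            # 13.5
print(round(kruskal_wallis_h(groups), 4))  # 3.8571
```

The rank-based test is the natural choice for skewed measures such as counts of sessions, whereas ANOVA compares means directly.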

RESULTS

Our study sample of MCB users consisted of patients who were generally younger, more likely to be female, and had a similar Charlson Comorbidity Index (range 0 to 17) and diagnosis count compared to patients in the general AMC population, as shown in Table 3. Supplementary Results 1.a provides the summary statistics of the variables used in our clustering method for the study sample.

Table 3.

Summary statistics of patient characteristics for study sample and general AMC population

| Characteristic | Study sample (N = 2815) | General AMC population (N = 58 054) |
|---|---|---|
| Age, mean (SD) | 45.46 (14.91) | 53.13 (18.14) |
| Female | 59% | 53% |
| Charlson Comorbidity Index, mean (SD) | 2.25 (2.47) | 2.78 (3.14) |
| Diagnoses, mean (SD) | 16.94 (8.85) | 16.62 (9.76) |

Inspection of the split-half cluster analyses (Supplementary Results 1.b), the Calinski/Harabasz pseudo-F index, Duda/Hart scores (Supplementary Results 1.c), and the dendrogram for the cluster analysis using the study sample (Supplementary Results 1.d) led to the selection of a 5-cluster solution to categorize portal users.

We found that IPP users primarily spent their time using the Happening Soon (35%) and Dining on Demand (24%) features, with some of their time also spent using the My Health (14%) and Getting Started (12%) features. This use pattern, shown in the “Study sample” column of Table 4, served as the basis for characterizing the 5 clusters of IPP users, each distinguished by a unique collective pattern of use across the 10 features.

Table 4.

Proportions of portal features used and summary statistics by inpatient portal cluster types and study sample

| Measure | Cluster 1: Average Users (N = 1201) | Cluster 2: Monitors (N = 518) | Cluster 3: Results Viewers (N = 278) | Cluster 4: Diners (N = 551) | Cluster 5: Tutorial Viewers (N = 267) | Study sample (N = 2815) |
|---|---|---|---|---|---|---|
| Happening Soon | 0.39 | 0.71 | 0.15 | 0.11 | 0.17 | 0.35 |
| I Would Like | 0.03 | 0.01 | 0.02 | 0.02 | 0.02 | 0.02 |
| Dining on Demand | 0.19 | 0.09 | 0.12 | 0.61 | 0.12 | 0.24 |
| Getting Started | 0.08 | 0.05 | 0.04 | 0.10 | 0.52 | 0.12 |
| My Health | 0.14 | 0.06 | 0.57 | 0.04 | 0.04 | 0.14 |
| Message Send | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| Check Message | 0.04 | 0.01 | 0.02 | 0.02 | 0.03 | 0.03 |
| Notes | 0.03 | 0.01 | 0.02 | 0.02 | 0.02 | 0.02 |
| Taking Care of Me | 0.04 | 0.03 | 0.03 | 0.04 | 0.03 | 0.04 |
| To Learn | 0.05 | 0.02 | 0.02 | 0.05 | 0.06 | 0.04 |
| Sessions per provisioned day, mean (SD)*** | 6.63 (5.33) | 8.08 (6.56) | 7.02 (5.88) | 3.74 (2.82) | 3.18 (3.04) | 6.04 (5.38) |
| Comprehensiveness of use, mean (SD)*** | 9.46 (2.23) | 8.86 (2.63) | 9.52 (2.14) | 5.93 (2.78) | 5.76 (2.66) | 8.31 (2.92) |
| Length of stay, days, mean (SD) | 6.05 (5.01) | 6.42 (5.14) | 6.47 (4.95) | 6.63 (5.50) | 5.75 (4.84) | 6.25 (5.12) |
| Provision length, days, mean (SD)*** | 4.65 (4.32) | 5.04 (4.67) | 5.08 (4.70) | 5.31 (4.87) | 3.83 (3.89) | 4.81 (4.51) |

***P < 0.001.
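As an arithmetic check on Table 4, the study-sample column should equal the size-weighted average of the cluster columns, and cluster sizes can be converted to shares of the 2815 admissions. The sketch below does this for 2 rows using the rounded values printed in the table, so the pooled values are approximate; the constant and function names are illustrative.

```python
# Cluster sizes and 2 rows of Table 4, in column order (Clusters 1-5).
CLUSTER_SIZES = [1201, 518, 278, 551, 267]
HAPPENING_SOON = [0.39, 0.71, 0.15, 0.11, 0.17]
GETTING_STARTED = [0.08, 0.05, 0.04, 0.10, 0.52]


def pooled_mean(sizes, cluster_means):
    """Size-weighted average of cluster means (approximate: inputs are rounded)."""
    return sum(n * m for n, m in zip(sizes, cluster_means)) / sum(sizes)


def percent_shares(sizes):
    """Each cluster's share of all admissions, rounded to a whole percent."""
    total = sum(sizes)
    return [round(100 * n / total) for n in sizes]


print(round(pooled_mean(CLUSTER_SIZES, HAPPENING_SOON), 2))   # 0.35
print(round(pooled_mean(CLUSTER_SIZES, GETTING_STARTED), 2))  # 0.12
print(percent_shares(CLUSTER_SIZES))                          # [43, 18, 10, 20, 9]
```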

Cluster 1, labeled “Average Users,” included patients whose use of IPP features closely corresponded with that of the study sample. They primarily used the Happening Soon (39%) and Dining on Demand (19%) features and spent roughly 22% of their time on the My Health and Getting Started features combined. This cluster accounted for the largest share of admissions in the study sample (43%). Cluster 2, labeled “Monitors,” consisted of patients who spent 71% of their time using the Happening Soon feature and approximately 15% of their time using the My Health and Dining on Demand features combined. This cluster accounted for 18% of the study sample. Cluster 3, labeled “Results Viewers,” included patients who spent 57% of their time using the My Health feature, 15% using the Happening Soon feature, and 12% using the Dining on Demand feature. This group represented 10% of the study sample. Cluster 4, labeled “Diners,” included patients who spent 61% of their time using the Dining on Demand feature, 11% using the Happening Soon feature, and 10% using the Getting Started feature. This cluster represented 20% of the study sample. Finally, Cluster 5, labeled “Tutorial Viewers,” contained patients who spent 52% of their time using the Getting Started feature, 17% using the Happening Soon feature, and 12% using the Dining on Demand feature. This group represented 9% of the study sample.

Use of the Dining on Demand, My Health, Getting Started, and Happening Soon features was present in every cluster, whereas other features such as Message Send, Check Message, and I Would Like were rarely used. In terms of average sessions per provisioned day, “Tutorial Viewers” had the fewest sessions (M = 3.18), followed by “Diners” (M = 3.74); “Monitors” had the most sessions (M = 8.08), about 2 more than the study sample average. With respect to comprehensiveness of MCB use, “Tutorial Viewers” used the fewest features (M = 5.76) and “Results Viewers” used the most (M = 9.52), approximately 1 more feature than the study sample average. With respect to provision length, “Diners” had the longest exposure to the inpatient portal (M = 5.31 days), and “Tutorial Viewers” had the shortest (M = 3.83 days). Lengths of stay did not differ significantly by cluster.

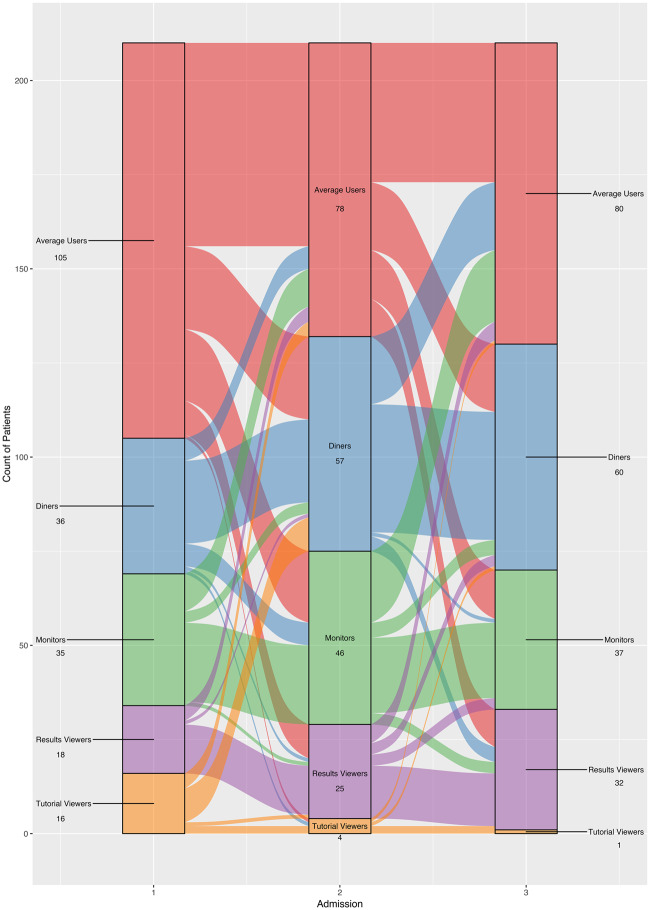

Figure 1 illustrates how patients with 3 admissions (N = 210 patients) moved across clusters with respect to their admissions. Overall, patients tended to stay within their clusters across their admissions. An exception to this was the “Tutorial Viewers” cluster; this cluster had very few patients associated with it subsequent to the first admission. Patients in the “Average Users” cluster tended to migrate to the “Monitors,” “Results Viewers,” and “Diners” clusters after their first or second admissions.

Figure 1.

Sankey graph illustrating how patients with 3 admissions during the study period (N = 210) moved across clusters. Blocks represent clusters (eg, Diners), and the number next to each cluster name is the frequency of patients in that cluster for a given admission. The height of each block represents the size of the cluster. The colors of the streams indicate which clusters patients came from in the first and second admissions, and the streams between blocks represent changes in cluster composition between admissions.
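The flows in a Sankey diagram like Figure 1 are counts of cluster-to-cluster transitions between consecutive admissions. A minimal sketch of that tally follows, assuming each patient's cluster labels are already ordered by admission date; the patient IDs and helper names are hypothetical.

```python
from collections import Counter


def cluster_transitions(sequences):
    """Count cluster-to-cluster moves between consecutive admissions.

    `sequences` maps a patient ID to cluster labels ordered by admission
    date. Returns one Counter per step (admission 1->2, 2->3, ...).
    """
    steps = max((len(s) for s in sequences.values()), default=1) - 1
    flows = [Counter() for _ in range(steps)]
    for labels in sequences.values():
        for step, (src, dst) in enumerate(zip(labels, labels[1:])):
            flows[step][(src, dst)] += 1
    return flows


def share_staying(flows):
    """Fraction of patients at each step who remained in the same cluster."""
    return [
        sum(n for (src, dst), n in flow.items() if src == dst) / sum(flow.values())
        for flow in flows
    ]


sequences = {
    "p1": ["Diners", "Diners", "Diners"],
    "p2": ["Average Users", "Monitors", "Monitors"],
    "p3": ["Tutorial Viewers", "Average Users", "Average Users"],
}
flows = cluster_transitions(sequences)
print(share_staying(flows))  # [0.3333333333333333, 1.0]
```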

Table 5 displays patient demographic and clinical characteristics across the 5 clusters of IPP users and for the study sample. Statistical tests of the cluster means indicated that the clusters differed by age, gender, and comorbidity. To provide additional clinical context for our findings, we report the top 5 conditions contributing to the Charlson Comorbidity Index scores in each cluster. There were no notable differences across clusters in these clinical conditions, and chronic pulmonary disease and congestive heart failure were among the most prevalent conditions across clusters (Supplementary Results 1.e). Our statistical tests did not show significant differences in the number of diagnoses. We also did not identify any notable differences across clusters in the top 5 clinical units associated with patients’ primary use of the IPP (Supplementary Results 1.f).

Table 5.

Summary statistics of patient characteristics by inpatient portal cluster types and study sample

| Characteristic | Cluster 1: Average Users (N = 1201) | Cluster 2: Monitors (N = 518) | Cluster 3: Results Viewers (N = 278) | Cluster 4: Diners (N = 551) | Cluster 5: Tutorial Viewers (N = 267) | Study sample (N = 2815) |
|---|---|---|---|---|---|---|
| Age, mean (SD)*** | 44.85 (14.82) | 43.31 (13.39) | 44.40 (14.69) | 44.97 (15.31) | 54.46 (14.46) | 45.46 (14.91) |
| Female** | 62% | 56% | 60% | 61% | 49% | 59% |
| Charlson Comorbidity Index, mean (SD)** | 2.10 (2.37) | 2.13 (2.29) | 2.23 (2.38) | 2.65 (2.86) | 2.38 (2.42) | 2.25 (2.47) |
| Diagnoses, mean (SD) | 17.03 (9.10) | 16.47 (9.00) | 16.93 (8.17) | 17.03 (8.61) | 17.21 (8.73) | 16.94 (8.85) |

***P < 0.001; **P < 0.01.

Post-hoc analyses

Applying our clustering method to patient admissions that had lengths of stay of less than 3 days, 3 to 7 days, and more than 7 days yielded clusters that resembled those present in Table 4. All of the length of stay groups contained “Average Users,” “Monitors,” “Diners,” and “Results Viewers” clusters. Their proportions remained stable across the length of stay categories, and the “Monitors” cluster had the highest representation from the total samples across the length of stay categories. The 3 to 7 days and more than 7 days categories also included “Tutorial Viewers” clusters, which were relatively small proportions of their total samples (Supplementary Results 2.a).

To further examine IPP feature use, we also inspected lifetime use and daily use for a subset of patients who were first-time IPP users and had 4 consecutive days of portal use (n = 648). With respect to lifetime use, our cluster method yielded 4 clusters: “Average Users” (41%), “Monitors” (26%), “Diners” (17%), and “Results Viewers” (15%). For use by provision day, we applied our clustering method separately to each of the 4 provision days. Every day included 2 recurring cluster types: “Diners” and new clusters that resembled “Average Users” but differed in the amount of use of the My Health, Happening Soon, or Dining on Demand features. The “Monitors” cluster was present on the first, third, and fourth provision days, and the “Tutorial Viewers” cluster appeared on the second and fourth provision days. No single cluster dominated in terms of count across the first 4 provision days (see Supplementary Results 2.b and 2.c for the lifetime use and first 4 provision day results, respectively).

In regard to experience with the AMC’s OPP, our study sample included 915 admissions with OPP use before the admission with IPP use and 1900 admissions without such prior OPP use. Analyses of both subsets yielded 4 similar clusters: “Diners,” “Monitors,” “Results Viewers,” and “Lite Tutorial Viewers.” However, the subset without prior OPP use yielded 3 additional clusters: “Tutorial Viewers,” “Average Users,” and “General Health,” a cluster whose admissions spent 68% of their time on the Happening Soon and My Health features combined. The subset with prior OPP use yielded 1 additional cluster, “Lite Monitors.” Differences in cluster proportions between the 2 subsets were trivial for the “Diners,” “Monitors,” and “Results Viewers” groups. The largest cluster was “Lite Monitors” in the subset with prior OPP use and “Monitors” in the subset without prior OPP use (Supplementary Results 2.d).

We identified 96 admissions in which the OPP was used during the admission, concurrent with IPP use, and 1804 admissions in which it was not. For both groups, our cluster analyses identified “Average Users,” “Monitors,” “Diners,” and “Results Viewers.” These clusters were generally similar in size across the 2 groups, although the “Monitors” cluster was 8 percentage points larger in the group without OPP use during the admission. The group with OPP use during the admission also had 2 additional clusters, “Lite Tutorial Viewers” and “Extreme Tutorial Viewers,” although the latter represented a very small proportion of the group. The group without OPP use during the admission had 2 other clusters, “Tutorial Viewers” and “Extreme Diners.” The “Average Users” and “Monitors” clusters were the largest in both groups (Supplementary Results 2.e).

DISCUSSION

Across users of an IPP at a large AMC, we found 5 distinct clusters of users: “Average Users,” “Monitors,” “Results Viewers,” “Tutorial Viewers,” and “Diners.” These heterogeneous user groups showed different patterns of IPP use; below, we discuss the specifics of each cluster and the potential tradeoffs in IPP use associated with each. Our study contributes to the existing literature on portals by extending the focus on portal use beyond the outpatient setting and is one of the first studies to generate a taxonomy of IPP users. These findings provide insight to organizations attempting to implement and support IPP use and offer preliminary evidence that user types vary with respect to both activities and experiences.

The “Average Users” represented a group of patients whose use essentially mirrored that of the average IPP user: they focused on following their daily healthcare and dietary activities and spent a little time reviewing results or learning how to use the portal. “Monitors” represented a group of patients who spent a relatively large proportion of their IPP time checking upcoming interactions with their care team. “Results Viewers” included patients who frequently checked their test results and schedules but did not often seek information about their health condition. “Tutorial Viewers” primarily used the Getting Started feature to learn how to use the portal, and they spent some time following their daily healthcare and dietary activities. Finally, “Diners” used the portal primarily to order food; they did not commonly access information about their health through their IPP. Interestingly, some of our IPP user clusters corresponded with clusters identified in the study of OPP users by Jones and colleagues:10 their “Appointment Preparers” group seemed consistent with our “Monitors” group, and their “Lab Trackers” group was similar to our “Results Viewers” group.

The identification of these different clusters of IPP users suggests opportunities to tailor patient training about portal features and portal use in general. For example, our results showed that some groups used the portal to access information about their health and healthcare, suggesting that certain user groups may be more interested in monitoring their health and the care process, an interest that an IPP can support. In the outpatient setting, portal use has been linked to better disease management and greater engagement in care.4,19–22 Interestingly, the “Results Viewers” and “Monitors” groups in our study, which contained relatively younger and less sick patients (ie, lower Charlson Comorbidity Index), used IPP features analogous to the OPP features that enhance engagement in the outpatient setting, suggesting that use of these features may similarly facilitate greater patient engagement. Interventions that encourage infrequent users of such features in the inpatient environment may also improve inpatient engagement. For example, “Average Users” and “Diners” may have the technical ability to navigate the portal but could benefit from training to support their use of more clinically oriented portal features;23 in turn, expanded use of the available portal features may increase these users’ engagement in their care. Conversely, the “Tutorial Viewers” (a group of patients who were older and more often male than the study sample as a whole, with a somewhat higher comorbidity index) may not have used the portal very much after going through the instructions on how to use the application. This suggests there may be a group of patients who need further training or additional motivation to use the IPP. Our post-hoc analyses suggested that the clusters of IPP users we found were stable across different lengths of stay as well as across lifetime IPP use.
However, we also found considerable variability in use on the provision day, which may be expected given that this was patients’ initial exposure to the portal. Nonetheless, as illustrated in Figure 1, patients appear to gain experience with the portal over time, and this may contribute to shifts in how they use it overall. Cluster types also varied more across admissions of patients with no prior use of the AMC’s OPP than across admissions of patients with prior OPP experience. In addition, the group with no prior OPP use included a cluster of users who spent the majority of their time watching the tutorial; a similar cluster appeared in our analysis of admissions during which patients used the OPP as well as the IPP. These findings highlight a potential need to give special attention to training patients who have no portal experience.

It is important to acknowledge that several IPP features that could specifically enhance patient engagement were rarely used. Particularly relevant to our study, use of the secure messaging feature of OPPs has increased over time, and this feature helps patients engage in their care by providing an electronic record of communication with their providers and by allowing asynchronous communication.24 Its availability can also foster a greater sense of collaboration with, and trust in, providers.25 Patients in our study, however, made very little use of secure messaging to communicate with their care team. Because the inpatient environment offers more opportunities for in-person communication than the outpatient environment, patients and care team members may undervalue the IPP’s secure messaging feature in this context. In-person communication has many benefits, but unlike secure messaging through an IPP, it does not create an electronic record of questions asked and answers received, nor does it easily allow asynchronous communication, such as with family members who may not be present during care team visits. The limited use of the messaging, notes, and request ancillary services features in this study suggests that further research is needed to understand why hospitalized patients do not regularly use these features.

Our results suggest many new avenues for research. Notably, the groups of IPP users we characterized could serve as a framework for generating new hypotheses and exploring patterns of outcomes associated with specific user groups. For example, it would be useful to examine whether “Results Viewers,” who viewed their health results through the IPP, experience better clinical outcomes than “Diners,” who primarily used the IPP to order food, and whether any differences persist over time. Given the resources needed to implement an IPP successfully and promote its use, it is important to continue improving our understanding of the nature of portal use, including how it could be leveraged to address cost, efficiency, and quality concerns in healthcare delivery.26

These findings should be interpreted in light of several limitations. Our study timeframe reflects a period during which the health system and patients were learning to use the portal. Although MCB implementation had reached a stable point in the health system, the presence of a portal in the inpatient environment was still novel. In practice, care team members must know how to use the application and understand its value to be able to support patients in portal use.27 Relatedly, care teams’ encouragement to use the portal could differ across units. Further work is needed to understand whether and how use patterns change as patients and care teams gain experience with this application. Care team and site effects are also tied to the patient population served by this AMC, which may have higher needs than the general population, so usage patterns may differ in other populations. Our study can serve as a point of comparison as the assessment and documentation of IPP use advance across institutions serving diverse populations. We also recognize that our study sample may differ from the AMC’s general patient population because participants had to agree to the study and to the use of the tablet. Such differences could affect how well our findings apply to future IPP users.

The exploratory analysis of the relationship between clinical and demographic characteristics and usage patterns was limited by the data available. Future studies could test new hypotheses involving other clinical and demographic characteristics, such as race, ethnicity, literacy, and socioeconomic status, to examine differences in IPP use patterns among user group clusters. Additional research is also needed to situate our findings within the clinical context, which could help further explain IPP use and inform its future use. Lastly, our measure of IPP use for the cluster analysis was primarily based on the proportion of use of each feature available in the IPP during an admission. In practice, defining patient portal use remains a complex and evolving science, as it requires choices about the frequency and comprehensiveness of use.28
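The proportion-of-use measure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's actual pipeline: audit-log rows are simplified to hypothetical `(admission_id, feature)` pairs, the function name `feature_proportions` is hypothetical, and the five feature labels are placeholders rather than the IPP's actual 10 features.

```python
from collections import Counter, defaultdict

# Hypothetical feature labels (placeholders). The study's IPP had 10 features;
# these names are illustrative only and not taken from the portal itself.
FEATURES = ["getting_started", "schedule", "test_results", "dining", "messaging"]


def feature_proportions(events, features=FEATURES):
    """Map each admission to its share of logged actions per feature.

    `events` is an iterable of (admission_id, feature) pairs, an assumed
    simplification of audit-log rows. Actions on features outside `features`
    are ignored, so every returned vector sums to 1.0.
    """
    counts = defaultdict(Counter)
    for admission_id, feature in events:
        if feature in features:
            counts[admission_id][feature] += 1

    proportions = {}
    for admission_id, c in counts.items():
        total = sum(c.values())  # always > 0: only admissions with counted events exist
        # Counter returns 0 for features never used during this admission.
        proportions[admission_id] = [c[f] / total for f in features]
    return proportions


# Usage: two hypothetical admissions.
log = [("A1", "dining"), ("A1", "dining"), ("A1", "test_results"),
       ("A1", "getting_started"), ("A2", "getting_started")]
print(feature_proportions(log)["A1"])  # [0.25, 0.0, 0.25, 0.5, 0.0]
```

Because each vector is normalized by the admission's total activity, a heavy user and a light user with the same mix of features produce identical vectors. A clustering algorithm applied to these vectors therefore groups admissions by how they divide their portal time, not by how much they use the portal.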

CONCLUSION

IPPs are a new class of patient portals that offer features analogous to those of OPPs concurrent with a patient’s clinical encounter. To realize their potential to engage patients in their healthcare, it is important to understand the specifics of how patients use this application in the inpatient context. Our identification of clusters of IPP users enabled characterization of groups that demonstrated distinct IPP use preferences. The results of our analysis may allow more targeted improvements to the portal application itself and provide insight that could be applied to improve patient training in portal use. Our results may also help care team members more effectively engage patients in the use of IPPs.

ACKNOWLEDGMENTS

This research was supported by grants from the Agency for Healthcare Research and Quality (Grant Nos. P30HS024379, R01HS024091, and R21HS024767). Conduct of this research was reviewed and approved by the Institutional Review Board of the authors. The authors wish to thank Sarah MacEwan, Shonda Vink, Lindsey Sova, Lakshmi Gupta, and Tyler Griesenbrock, all affiliated with the authors’ organization, for their assistance with this project.

Funding

This research was supported by grants from the Agency for Healthcare Research and Quality (Grant Nos. P30HS024379, R01HS024091, and R21HS024767).

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

Contributors

NF, DW, CS, TH, and AS contributed to the conceptualization and drafting of the analysis. NF, DW, RT, and SS performed data collection and data analysis.

Conflict of interest statement. None declared.

REFERENCES

- 1. Phelps RG, Taylor J, Simpson K. Patients’ continuing use of an online health record: a quantitative evaluation of 14,000 patient years of access data. J Med Internet Res 2014; 16(10): e241.

- 2. Tulu B, Trudel J, Strong DM, et al. Patient portals: an underused resource for improving patient engagement. Chest 2016; 149(1): 272–7.

- 3. Huerta TR, McAlearney AS, Rizer MK. Introducing a patient portal and electronic tablets to inpatient care. Ann Intern Med 2017; 167(11): 816–7.

- 4. Walker DM, Sieck CJ, Menser T, et al. Information technology to support patient engagement: where do we stand and where can we go? J Am Med Inform Assoc 2017; 24(6): 1088–94.

- 5. Prey JE, Woollen J, Wilcox L. Patient engagement in the inpatient setting: a systematic review. J Am Med Inform Assoc 2014; 21(4): 742–50.

- 6. Lau K, Freyer-Adam J, Gaertner B, et al. Motivation to change risky drinking and motivation to seek help for alcohol risk drinking among general hospital inpatients with problem drinking and alcohol-related diseases. Gen Hosp Psychiatry 2010; 32(1): 86–93.

- 7. O’Leary KJ, Lohman ME, Culver E. The effect of tablet computers with a mobile patient portal application on hospitalized patients’ knowledge and activation. J Am Med Inform Assoc 2016; 23(1): 159–65.

- 8. Kelly MM, Hoonakker PL, Dean SM. Using an inpatient portal to engage families in pediatric hospital care. J Am Med Inform Assoc 2017; 24(1): 153–61.

- 9. Vawdrey DK, Wilcox LG, Collins SA. A tablet computer application for patients to participate in their hospital care. AMIA Annu Symp Proc 2011; 2011: 1428–35.

- 10. Jones JB, Weiner JP, Shah NR. The wired patient: patterns of electronic patient portal use among patients with cardiac disease or diabetes. J Med Internet Res 2015; 17(2): e42.

- 11. Hefner JL, Sieck CJ, Walker DM. System-wide inpatient portal implementation: survey of health care team perceptions. JMIR Med Inform 2017; 5(3): e31.

- 12. McAlearney AS, Sieck CJ, Hefner JL, et al. High touch and high tech (HT2) proposal: transforming patient engagement throughout the continuum of care by engaging patients with portal technology at the bedside. JMIR Res Protoc 2016; 5(4): e221.

- 13. Han JY. Transaction logfile analysis in health communication research: challenges and opportunities. Patient Educ Couns 2011; 82(3): 307–12.

- 14. Sieverink F, Kelders SM, Braakman-Jansen LM, et al. The added value of log file analyses of the use of a personal health record for patients with type 2 diabetes mellitus: preliminary results. J Diabetes Sci Technol 2014; 8(2): 247–55.

- 15. Quan H, Sundararajan V, Halfon P. Coding algorithms for defining comorbidities in ICD-9-CM and ICD-10 administrative data. Med Care 2005; 43(11): 1130–9.

- 16. Hair J, Black B, Babin B, Anderson R, Tatham R. Multivariate Data Analysis. 6th ed. Upper Saddle River, NJ: Pearson; 2006.

- 17. Calinski T, Harabasz J. A dendrite method for cluster analysis. Comm Stat Theory Methods 1974; 3(1): 1–27.

- 18. Duda RO, Hart PE, Stork DG. Pattern Classification. 2nd ed. New York: Wiley; 2001.

- 19. Ammenwerth E, Schnell-Inderst P, Hoerbst A. The impact of electronic patient portals on patient care: a systematic review of controlled trials. J Med Internet Res 2012; 14(6): e162.

- 20. Krist AH, Woolf SH, Rothemich SF, et al. Interactive preventive health record to enhance delivery of recommended care: a randomized trial. Ann Fam Med 2012; 10(4): 312–9.

- 21. Osborn CY, Mayberry LS, Mulvaney SA, et al. Patient web portals to improve diabetes outcomes: a systematic review. Curr Diab Rep 2010; 10(6): 422–35.

- 22. Shah SD, Liebovitz D. It takes two to tango: engaging patients and providers with portals. PM R 2017; 9(5): S85–97.

- 23. Walker D, Menser T, Yen P-Y, et al. Optimizing the user experience: identifying opportunities to improve use of an inpatient portal. Appl Clin Inform 2018; 9(1): 105–13.

- 24. Sieck CJ, Hefner JL, Schnierle J. The rules of engagement: perspectives on secure messaging from experienced ambulatory patient portal users. JMIR Med Inform 2017; 5(3): e13.

- 25. Sieck CJ, Hefner J, McAlearney AS. Improving the patient experience through patient portals: insights from experienced users. Patient Exp J 2018; 5(3): 47–54.

- 26. Berwick DM, Nolan TW, Whittington J. The triple aim: care, health, and cost. Health Aff (Millwood) 2008; 27(3): 759–69.

- 27. Walker DM, Hefner JL, Sieck CJ. Framework for evaluating and implementing inpatient portals: a multi-stakeholder perspective. J Med Syst 2018; 42(9): 158.

- 28. Fareed N, Huerta T, McAlearney A. Inpatient Portals: Identifying User Groups. Verona, WI: Epic User Group Meeting; 2018.
