Abstract

Objective

The study sought to develop a criteria-based scoring tool for assessing drug-disease knowledge base content and creation of a subset and to implement the subset across multiple Kaiser Permanente (KP) regions.

Materials and Methods

In Phase I, the scoring tool was developed, used to create a drug-disease alert subset, and validated by surveying physicians and pharmacists from KP Northern California. In Phase II, KP enabled the alert subset in July 2015 in silent mode to collect alert firing rates and confirmed that alert burden was adequately reduced. The alert subset was subsequently rolled out to users in KP Northern California. Alert data were collected from September 2015 to August 2016 to monitor relevancy and override rates.

Results

Drug-disease alert scoring identified 1211 of 4111 contraindicated drug-disease pairs for inclusion in the subset. The survey results showed clinician agreement with subset examples 92.3%-98.5% of the time. Postsurvey adjustments to the subset resulted in KP implementation of 1189 drug-disease alerts. The subset resulted in a decrease in monthly alerts from 32 045 to 1168. Postimplementation monthly physician alert acceptance rates ranged from 20.2% to 29.8%.

Discussion

Our study shows that drug-disease alert scoring resulted in an alert subset that generated acceptable interruptive alerts while decreasing overall potential alert burden. Following the initial testing and implementation in its Northern California region, KP successfully implemented the disease interaction subset in 4 regions with additional regions planned.

Conclusions

Our approach could prevent undue alert burden when new alert categories are implemented, circumventing the need for trial live activations of full alert category knowledge bases.

Keywords: drug-disease interaction, implementation, subset, knowledge base, alert fatigue

INTRODUCTION

Drug-disease interaction clinical care guidance is increasingly deployed in electronic health record (EHR) systems, yet physician and pharmacist acceptance remains low due to poor alert relevance and resulting alert fatigue.1 Based on work with several large EHR vendors and clinician users of healthcare organizations (including Kaiser Permanente [KP]) since 2011, we identified 5 contributing factors to low specificity and poor usability of alerts:

EHR patient problem lists coded with broad or poorly specified concepts (eg, International Classification of Diseases–Tenth Revision–Clinical Modification [ICD-10-CM]), leading to irrelevant alerts that are intended for a more specific form of the patient’s condition

Neglected problem list maintenance, in which resolved conditions remain documented as active problems and still trigger disease alerts

Limitations in the disease terminology standards that result in unavoidably broad interoperability terminology mappings between select problem list terms, which can lead to irrelevant disease alerts

Inconsistent descriptions of drug-disease warning information across U.S. Food and Drug Administration (FDA) drug labeling, which create representation difficulties in drug-disease knowledge base warnings

The exhaustive, and sometimes hypothetical, nature of source drug-disease information, which requires knowledge base vendors to stratify disease alerts across 3 severity management levels and can generate high alert burdens if unfiltered

The First Databank (FDB) drug-disease knowledge base was used in all phases of the study. The knowledge base consists of drug-disease alert pairs with the following assigned severity levels: 1 (contraindicated), 2 (severe warning), or 3 (moderate warning). This extensive drug-disease knowledge base consists of over 20 000 alert pairs and over 1700 drug groupings, most of which are based on a unique drug ingredient. The disease terms are proprietary to FDB and include mappings to standard terminologies such as ICD-9-CM, ICD-10-CM, ICD-10 Procedure Coding System, and SNOMED CT (Systematized Nomenclature of Medicine–Clinical Terms) U.S. and International Editions.2

Given the expansive coverage by drug-disease knowledge bases, vendors and healthcare organizations could play a significant role in developing much smaller subsets of the most clinically relevant drug-disease interaction knowledge base content for EHR implementation. Similar efforts have been attempted to develop the most clinically relevant subsets of drug-drug interaction content using clinical consensus methodologies.3 While several groups have assembled small subsets of drug-disease alert lists, these lists are predominantly for the geriatric patient population. Such efforts have resulted in the Beers Criteria and the Healthcare Effectiveness Data and Information Set Potentially Harmful Drug-Disease Interactions in the Elderly list.4,5 To date, no such effort has been attempted to develop a more generally applicable drug-disease alert subset. Therefore, we set out to identify the most clinically relevant subset of drug-disease interaction content. This was done not by clinical consensus methodologies, but rather by developing a standard evaluation methodology that could be used by knowledge base content experts. A drug-disease alert scoring methodology was developed to standardize assessment and systematic stratification of the FDB drug-disease knowledge base and to identify a subset of the most significant contraindicated drug-disease alerts.6

MATERIALS AND METHODS

This study was considered exempt from review by the KP Northern California institutional review board.

Phase I: drug-disease scoring methodology overview

Drug-disease knowledge base warnings were scored using multiple, equally weighted positive and negative scoring criteria that were applied to the FDB drug-disease knowledge base contraindicated drug and disease data pairs. The scoring criteria were developed based on exploratory work conducted by FDB with several large EHR vendors and clinician end users since 2012, followed by further review and clinical consensus between clinical pharmacist and physician collaborators from FDB and KP.

Positively weighted criteria identify drug-diagnosis pairs that may be uncommon or are less familiar to practicing physicians and pharmacists. These criteria also identify high-alert drugs and conditions. Each of the following criteria is scored with a value of 1 for a possible 5-point total for these criteria:

The condition is a rare diagnosis

The drug associated with the condition alert is a rarely used drug

The drug-disease alert is associated with an FDA boxed warning

The drug-disease alert is associated with an FDA MedWatch alert

The condition carries high morbidity or mortality risk

Negatively weighted criteria identify drug-diagnosis pairs that are generally more common, familiar to clinicians, and already routinely monitored. Each criterion is given a value of –1 for a possible negative 4-point total score for these criteria. Additionally, these criteria identify diagnoses that have been found to be problematic for drug-disease checking due to issues with EHR problem list coding and limitations with some ICD-9-CM and ICD-10-CM codes resulting in imprecision of the alert for the end-user clinician:

The condition is a common diagnosis

The condition is easily monitored

The condition has acute and chronic phases or is transitory

The condition can only be mapped to poorly granular ICD codes for interoperability

The scores were totaled for each drug-disease pair, giving a total score between +5 and –4, and the pairs were stratified by total score. The highest scoring strata are hypothesized to create the most meaningful and patient-specific drug alerting.
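The scoring arithmetic described above can be sketched in a few lines. This is only an illustrative sketch, not the scoring tool itself: the criterion identifiers and function names are hypothetical labels for the 5 positive and 4 negative criteria listed above, and the inclusion threshold of +1 is the one reported for this study.

```python
# Hypothetical identifiers for the criteria listed in the text.
POSITIVE_CRITERIA = (
    "rare_diagnosis",
    "rarely_used_drug",
    "fda_boxed_warning",
    "fda_medwatch_alert",
    "high_morbidity_or_mortality",
)
NEGATIVE_CRITERIA = (
    "common_diagnosis",
    "easily_monitored",
    "acute_chronic_or_transitory",
    "poorly_granular_icd_mapping",
)
INCLUSION_THRESHOLD = 1  # pairs scoring +1 or greater enter the subset


def score_pair(criteria_met):
    """Return the total score (+5 to -4) for one drug-disease pair.

    Each positive criterion met adds 1; each negative criterion met subtracts 1.
    """
    criteria_met = set(criteria_met)
    unknown = criteria_met - set(POSITIVE_CRITERIA) - set(NEGATIVE_CRITERIA)
    if unknown:
        raise ValueError(f"Unknown criteria: {sorted(unknown)}")
    positives = sum(1 for c in POSITIVE_CRITERIA if c in criteria_met)
    negatives = sum(1 for c in NEGATIVE_CRITERIA if c in criteria_met)
    return positives - negatives


def in_subset(score):
    """True when a pair's total score meets the inclusion threshold."""
    return score >= INCLUSION_THRESHOLD


# Illustrative criteria assignments only, not scores taken from the study.
example_a = score_pair({"rare_diagnosis", "fda_boxed_warning", "high_morbidity_or_mortality"})
example_b = score_pair({"common_diagnosis", "easily_monitored", "high_morbidity_or_mortality"})
print(example_a, in_subset(example_a))
print(example_b, in_subset(example_b))
```

Because all criteria carry equal weight, the total is simply the count of positive criteria met minus the count of negative criteria met.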

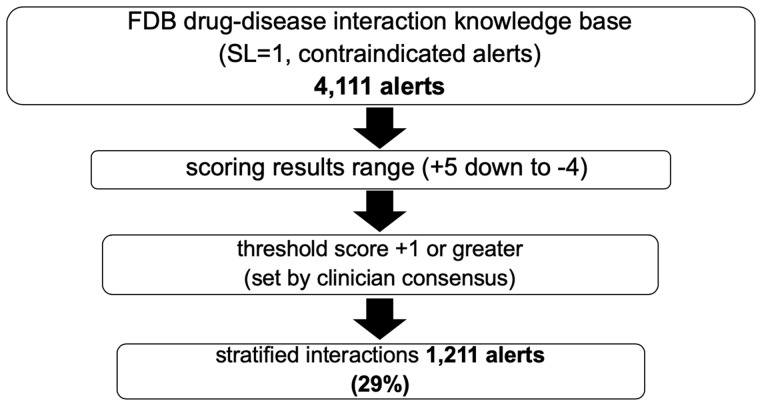

Two FDB clinical pharmacists independently scored the FDB drug-disease interaction knowledge base contraindication (severity level 1) alert content. Any scoring conflicts were reviewed by a third independent clinical pharmacist and final scoring settled by consensus. At the time of scoring (December 2013 to March 2014), 4111 drug-disease pairs were scored. Drug-disease pair scores ranged from +5 to –4. A scoring threshold of +1 or greater was determined by the consensus of 3 FDB clinical pharmacists and 2 KP physicians. Using the scoring threshold of +1 or greater, the reviewed FDB content stratified 1211 (29%) drug-disease pairs for inclusion in the project subset (Figure 1).

Figure 1.

Subset derivation from severity level 1 disease interaction knowledge base by alert scoring and stratification. (FDB: First Databank; SL: severity level).

Validation of drug-disease scoring methodology with a survey of physicians and pharmacists

Each drug-disease interaction survey question was created from the scored stratified drug-disease interaction alert subset and formatted similarly to drug-disease alerts displayed in the KP EHR. Questions presented sample alerts and asked if the alert should be interruptive in either the inpatient or outpatient care settings, or in both settings. Three opportunities were offered to select “don’t show the alert” to avoid bias for selection of favorable responses (eg, “show the alert”).

Sample survey questions:

Your patient has a diagnosis of “Congenital Long QT Syndrome” and you are ordering: “Clarithromycin”

Your patient has a diagnosis of “Active Tuberculosis” and you are ordering: “a Monoclonal Antibody Specific for Human Tumor Necrosis Factor (TNF) eg, infliximab”

Survey question response choices:

Agree this Alert Should be Shown in Both Inpatient and Outpatient Settings

Don't Show Alert for Either Inpatient or Outpatient Setting

Don't Show Alert for Inpatient Setting

Don't Show Alert for Outpatient Setting

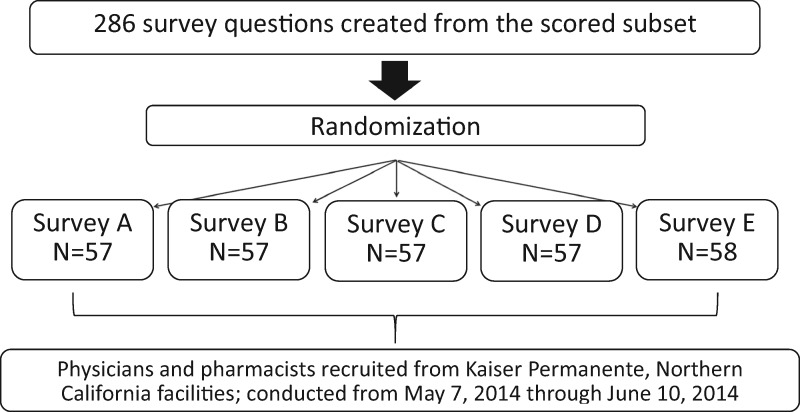

Two hundred eighty-six survey questions were created from the drug-disease scored subset then randomized and sequentially distributed across 5 individual surveys (Figure 2). This method was used to keep survey length brief to encourage survey participation. Each of the 5 surveys contained either 57 or 58 questions. Physician and pharmacist candidates were identified by KP investigators and recruited by email invitation from KP Northern California regional facilities. The electronic survey was made available from May 7, 2014, to June 10, 2014. A reminder email was sent to nonresponders twice during the active survey period.

Figure 2.

Kaiser Permanente survey versions and randomization summary.
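One plausible reading of "randomized and sequentially distributed" is a shuffle followed by round-robin dealing. The sketch below assumes that reading; the function name, the seed, and the use of integer question identifiers are hypothetical, and the study's actual randomization procedure is not described in more detail than the text above.

```python
import random


def distribute_questions(question_ids, n_surveys=5, seed=None):
    """Shuffle the questions, then deal them one at a time across the surveys."""
    rng = random.Random(seed)
    shuffled = list(question_ids)
    rng.shuffle(shuffled)
    surveys = [[] for _ in range(n_surveys)]
    for position, question in enumerate(shuffled):
        surveys[position % n_surveys].append(question)
    return surveys


# 286 questions dealt across 5 surveys.
surveys = distribute_questions(range(1, 287), n_surveys=5, seed=2014)
sizes = sorted(len(s) for s in surveys)
print(sizes)
```

Dealing 286 questions across 5 surveys in this way necessarily yields four surveys of 57 questions and one of 58, which matches the survey lengths reported.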

Survey analysis

Survey “responders” were defined as any individual who responded to 1 or more survey questions. “Nonresponders” did not respond to any questions or did not access the electronic survey. Response rate for “responders” was calculated from the proportion of “responders” to total survey invitees. The “responder” group was characterized by provider specialty and duration of practice experience. Raw counts for “show alert” survey question responses were collected, and the counts stratified by drug-disease pair score. The survey questions with “don’t show alert” responses were evaluated and categorized across 5 possible review criteria or action categories: (1) drug-disease scoring criteria re-evaluation required, (2) drug-disease rescoring required, (3) drug evidence re-review required, (4) “don’t show alert” response chosen for venue-specific response, and (5) no changes to scoring or knowledge base content. Totals for each review category were calculated and drug-disease pair rescoring was carried out when clinically relevant to do so. This re-review was used as a second check on scoring of drug-disease paired alerts.
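The responder definition and response rate calculation can be expressed as a short sketch. The function names are hypothetical, and the per-invitee answer counts in the example are illustrative placeholders chosen only to reproduce the group sizes reported in Table 1.

```python
def is_responder(answered_count):
    """A responder answered 1 or more survey questions."""
    return answered_count >= 1


def response_rate(answered_counts):
    """Proportion of responders among all survey invitees."""
    counts = list(answered_counts)
    if not counts:
        raise ValueError("At least one invitee is required")
    responders = sum(1 for n in counts if is_responder(n))
    return responders / len(counts)


# Placeholder answer counts: 43 invitees answered everything, 30 answered some
# questions, and 35 answered none (13 of whom opened the survey).
answered = [57] * 43 + [10] * 30 + [0] * 35
rate = response_rate(answered)
print(f"{rate:.0%}")
```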

Phase II: KP implementation and evaluation

The validated drug-disease subset was recreated using the FDB AlertSpace knowledge base editing tool so that the customized drug-disease file could be built for KP’s FDB file load. The customized drug-disease file contains the same record count as the standard FDB drug-disease file. The difference with the customized file is that contraindicated alerts (severity level 1) that did not score at or above the scoring threshold of 1 were demoted to a lesser severity level (severity level 2) using AlertSpace. To display only the drug-disease subset to end users, the EHR drug-disease alert display filter was set to only display severity level 1 alerts. All nonsubset alerts, therefore, were allowed to fire in the background and are available for future analysis and consideration for addition to the subset. The interaction subset was implemented in the electronic health records of the KP Northern California region facilities in August 2015. Data for drug-disease alerts that were contraindicated (severity level = 1) were gathered for 1 service area within the region, consisting of 3 hospitals and 19 medical offices.
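The demotion-and-filter approach can be illustrated with a small sketch. This is not the AlertSpace tool or the EHR filter; the `AlertPair` record, the function names, and the example pairs are hypothetical. It assumes only what the text states: level 1 pairs scoring below the threshold of 1 are demoted to level 2, the record count is unchanged, and only level 1 alerts are displayed.

```python
from dataclasses import dataclass, replace
from typing import Optional

CONTRAINDICATED = 1
SEVERE_WARNING = 2
SCORE_THRESHOLD = 1


@dataclass(frozen=True)
class AlertPair:
    drug: str
    disease: str
    severity: int
    score: Optional[int] = None  # only severity level 1 pairs were scored


def customize(pairs):
    """Demote level 1 pairs below the threshold; keep every record."""
    customized = []
    for pair in pairs:
        below = pair.score is None or pair.score < SCORE_THRESHOLD
        # Assumption: a level 1 pair with no score is treated as below threshold.
        if pair.severity == CONTRAINDICATED and below:
            pair = replace(pair, severity=SEVERE_WARNING)
        customized.append(pair)
    return customized


def displayed(pairs, display_severity=CONTRAINDICATED):
    """Pairs that pass a display filter set to a single severity level."""
    return [p for p in pairs if p.severity == display_severity]


# Placeholder records, not knowledge base content.
pairs = [
    AlertPair("drug A", "disease X", severity=1, score=3),
    AlertPair("drug B", "disease Y", severity=1, score=-2),
    AlertPair("drug C", "disease Z", severity=2),
]
customized = customize(pairs)
shown = displayed(customized)
print(len(customized), [p.drug for p in shown])
```

Demoting rather than deleting nonsubset pairs is what allows them to keep firing in the background, where they remain available for later analysis.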

Data were gathered for physician providers (defined as physicians, doctors of osteopathy, and physician residents), nurse practitioners, and pharmacists. The number of alerts fired was collected during the preimplementation period from January 2015 to July 2015. During the postimplementation period from September 2015 to August 2016, data were collected for the number of alerts fired and the rate of acceptance, with acceptance defined as removal of the medication order. Data were not collected during the study period for nonsubset alerts that fired in the background of the KP EHR because this was not within the scope of the current study.

During the preimplementation period, the interaction subset was set to silent mode and not visible to providers, as the potential alert burden was not yet known. The acceptance rate was collected during the postimplementation period when the subset was visible to providers. Acceptance of the drug-disease alerts was defined as providers removing the alerting drug from the medication order.

RESULTS

Phase I: drug-disease alert scoring results

Drug-disease alert pair scoring resulted in stratification and inclusion of 1211 contraindicated drug-disease interactions in the drug-disease subset of the total 4111 scored. A large sample of the drug-disease subset with scoring is available in Supplementary Appendix A. A sample of scored drug-disease pairs that did not meet the inclusion threshold are available in Supplementary Appendix B.

Survey question coverage of the drug-disease scoring–derived subset

Two hundred eighty-six survey questions were derived from the scoring stratified subset of 1211 drug-disease paired alerts. The survey questions covered 100% (n = 134) of qualifying diseases that met the scoring threshold and 50% (n = 349 of 692) of the drug ingredient or drug classes included in the subset.

Survey response rates and demographics

One hundred eight KP physicians and pharmacists were invited to take the survey (Table 1). Seventy-three (68%) of the invited clinicians answered at least 1 survey question, providing a total of 3822 responses. Forty-three (40%) responded to all survey questions, while 30 (28%) responded to some but not all questions. These participants were considered responders. Thirty-five (32%) were considered nonresponders because they did not respond to any question; 13 of them accessed the electronic survey, while 22 did not.

Table 1.

Survey response rates

| Survey invitee group | n (%) |
|---|---|
| Number invited to take survey | 108 (100) |
| Responder participants | |
| Answered all survey questions | 43 (40) |
| Answered some drug alert survey questions | 30 (28) |
| Total responder participants | 73 (68) |
| Nonresponders | |
| Accessed survey but did not answer | 13 (12) |
| Did not access electronic survey | 22 (20) |
| Total nonresponders | 35 (32) |

Values are n (%).

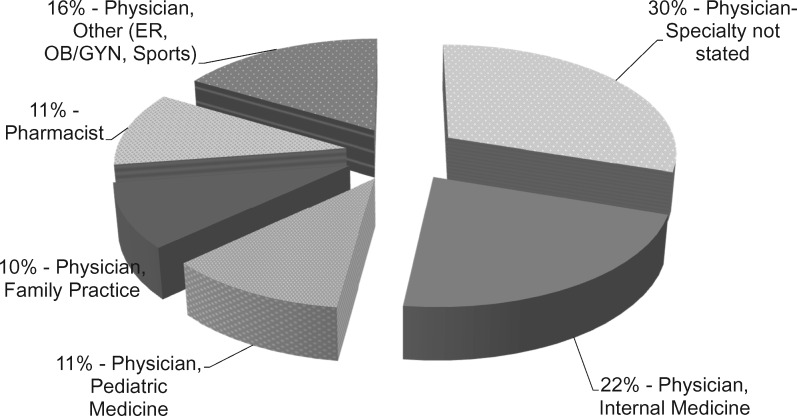

Of the 73 responders, 89% were physicians and 11% were pharmacists (Figure 3). Physicians who did not state their specialty made up 30% of responders, while physicians who stated their specialty made up 59%. Specialties included internal medicine, pediatric medicine, family practice, emergency medicine, obstetrics and gynecology, and sports medicine. Years of practice experience ranged from <5 years to >20 years (Table 2). Participants with 5-20 years’ experience, on average, completed a larger percentage of the survey questions (Table 2).

Figure 3.

Survey responders clinical practice demographics. (ER: emergency medicine; OB/GYN: obstetrics and gynecology).

Table 2.

Survey responders years of practice experience vs survey completion

| Practice Experience (y) | Responder Participant Count | Total Responder Participants (%) | Average Percentage of Survey Answered (%) |
|---|---|---|---|
| <5 | 6 | 8 | 82 |
| 5-10 | 25 | 34 | 93 |
| 11-20 | 25 | 34 | 96 |
| >20 | 17 | 23 | 86 |

Survey response analysis showed a high confirmatory rate of inclusion for drug-disease alerting pairs with scores ranging from +1 up to +5 (Table 3). “Show alert” responses ranged from 92.3% up to 98.5% depending on the drug-disease pair score stratification.

Table 3.

Survey analysis: responses vs drug-disease pair score

| Drug-Disease Pair Score | Show Alert for Inpatient and Outpatient (%) | Any “Don’t Show Alert” Survey Response (%) |
|---|---|---|
| 5 | 98.4 | 1.6 |
| 4 | 94.2 | 5.8 |
| 3 | 98.5 | 1.5 |
| 2 | 93.7 | 6.3 |
| 1 | 92.3 | 7.7 |

Two hundred fifty-one (6.0%) “don’t show alert” responses were made by responder participants of a total of 3822 responses. Of the 286 unique survey questions, 136 had at least 1 “don’t show alert” response. These responses were categorized using the 5 possible review action categories enumerated in the Survey Analysis section. Of the 5 initial categories, only 3 categories could result in changes to the drug-disease subset. Two of these categories had responses: “drug-disease alert rescoring required” and “drug evidence re-review required.” This resulted in score changes affecting 22 survey questions (Table 4).

Table 4.

“Don’t Show Alert” analysis and required drug-disease pair rescoring

| “Don't Show Alert” Response Review Criteria/Action Category | Could Result in Change to Subset | Re-evaluated Survey Question Count | Total Re-evaluated Survey Questions (%) |
|---|---|---|---|
| Drug-disease scoring criteria re-evaluation | No | 0/136 | 0 |
| Drug-disease rescoring required | Yes | 1/136 | 0.7 |
| Drug evidence re-review required | Yes | 21/136 | 15.4 |
| Venue specificity reason given for “don’t show alert” response | No | 110/136 | 80.9 |
| No changes to scoring or knowledge base content | No | 4/136 | 2.9 |

Values are n/n unless otherwise indicated.

A score change ultimately affected the total scores of 22 survey questions equivalent to 22 drug-disease pairs, such that the pairs were removed from the initial drug-disease subset (n = 1211) leaving 1189 pairs, or a decrease of 1.8%. The final subset of 1189 drug-disease pairs was tested in Phase II.

Phase II: KP alert rates

During the preimplementation period, with the subset running in silent mode, the number of contraindicated drug-disease alerts that fired decreased from 32 045 to 1168 alerts per month. During the postimplementation period, the average number of alerts seen by users was 1452 alerts per month, and physician providers accepted the alert at a rate of 21.6% (Table 5). Nurse practitioners had a similar acceptance rate (21.8%). Pharmacists had a lower acceptance rate (5.4%). Because physician providers and nurse practitioners removed medication orders that fired drug-disease alerts, pharmacists saw fewer orders with severity level 1 alerts. Additionally, physician providers and nurse practitioners were required to provide reasons for overriding the alerts, which are visible to the pharmacist during order verification.

Table 5.

Kaiser Permanente accepted alerts for drug-disease interaction subset during postimplementation period

| Accepted Alerts During Postimplementation Period (12 mo) | Total Drug-Disease Alerts | Accepted Drug-Disease Alerts | Alert Acceptance (%) | Monthly Alert Acceptance Range (%) |
|---|---|---|---|---|
| Nurse practitioners | 386 | 84 | 21.8 | 11.1-64.0 |
| Pharmacists | 5599 | 301 | 5.4 | 2.9-11 |
| Physician providers | 10 592 | 2292 | 21.6 | 20.2-29.8 |

Analysis of specific drug-disease alert pairs indicated higher acceptance rates for certain drug-disease pairs. For example, alerts for an interaction between fluoroquinolones and myasthenia gravis did not fire frequently due to the rarity of the disease. However, the rate of alert acceptance by users during the postimplementation period was 68.9% (Table 6).

Table 6.

Kaiser Permanente accepted alerts for drug-disease interaction pairs examples

| Drug-Disease Interaction Pair During Postimplementation Period (12 mo) | Total Alerts | Accepted Alerts | Alert Acceptance (%) |
|---|---|---|---|
| Fluoroquinolones–myasthenia gravis | 74 | 51 | 68.9 |
| Ondansetron–QT prolongation | 2625 | 901 | 34.3 |

In comparison, the most frequently fired interaction alert was for the common drug-disease interacting pair of ondansetron and QT prolongation. The average acceptance rate for this interaction alert was 34.3%, which was higher than the average acceptance rate for the entire drug-disease interaction subset.
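The acceptance percentages in Tables 5 and 6 and the relative reduction in monthly alerts follow directly from the reported counts. The short sketch below reproduces that arithmetic; the function and variable names are hypothetical, and all figures are taken from the text and tables above.

```python
def acceptance_rate(accepted, total):
    """Percentage of fired alerts where the medication order was removed."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * accepted / total


# (total alerts, accepted alerts) as reported in Tables 5 and 6.
REPORTED_COUNTS = {
    "Nurse practitioners": (386, 84),
    "Pharmacists": (5599, 301),
    "Physician providers": (10592, 2292),
    "Fluoroquinolones-myasthenia gravis": (74, 51),
    "Ondansetron-QT prolongation": (2625, 901),
}

rates = {
    name: acceptance_rate(accepted, total)
    for name, (total, accepted) in REPORTED_COUNTS.items()
}
for name, rate in rates.items():
    print(f"{name}: {rate:.1f}%")

# Relative reduction in monthly contraindicated alerts (32 045 to 1168).
monthly_before, monthly_after = 32045, 1168
reduction = 100.0 * (monthly_before - monthly_after) / monthly_before
print(f"Monthly alert reduction: {reduction:.1f}%")
```

On these figures the subset removes roughly 96% of the monthly contraindicated alerts that the full severity level 1 content would have generated.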

DISCUSSION

The total number of contraindicated alerts was substantially decreased by the drug-disease interaction subset implementation during the preimplementation period. In 2011, KP conducted a trial implementation of the full drug-disease knowledge base with activation of all 3 severity levels, which included alerts relevant to patient monitoring only, and received widespread negative feedback from physician end users, resulting in suspension of live drug-disease checking after 24 hours. Live drug-disease checking is desirable for 2 reasons: (1) patient safety and (2) Leapfrog certification. The installed drug-disease interaction subset, in contrast, did not receive any negative feedback. The interaction subset alerts were accepted at the second highest acceptance rate by KP physician providers, positioned between dose checking alerts (highest rank) and drug-allergy alerts (third highest).

During the postimplementation period, an increasing trend was noted in the total number of contraindicated alerts fired from April to June 2016. In addition to normal monthly fluctuation in alert occurrence, a notable contributor to the trend was a newly added drug-disease interaction alert between metformin and chronic kidney disease. These revisions to the FDB drug-disease knowledge base now included differentiation between various stages of chronic kidney disease, increasing the number of alerts fired. This illustrated the evolving nature of drug-disease alerts and the subsequent importance of monitoring alerts visible to end users.

Additionally, analysis of interaction alert data for individual drug-disease pairs illustrated instances of high acceptance rates for rare drug-disease interactions as well as above-average acceptance rates for common drug-disease interactions. Evaluation of alert interaction occurrences for a specific patient population allows for further validation of the subset or potential opportunities for refinement following successful implementation.

Implementers of such drug-disease subsets will need to plan resources for initial subset creation, which at a minimum would cover drug-disease knowledge base scoring followed by relevant hospital oversight committee review of methodology and subset content. Given our experience in conducting this study, institutions may need to plan at least 1 clinical staff position and 6-12 months for all preimplementation activities. Postimplementation will require ongoing monitoring and analysis of both the alerts that fire and are displayed to users and the nonsubset alerts that continue to fire in the background.

CONCLUSION

Implementation of alert categories with high alert burdens could potentially benefit from utilizing a criteria-based scoring methodology to create clinical workflow-specific subsets. Furthermore, validation and refinement by clinician end-user surveys could add to clinician acceptance. This approach could prevent undue alert burden when other alert categories (eg, drug-drug interaction, age-related alert content) are implemented, circumventing the need for trial live activations of full alert category knowledge bases. Since the initial study, 4 additional regions of KP have implemented the drug-disease alert subset as of June 2018, and additional regions are planned.

There are 2 study limitations. First, the scope of this study did not include data collection and analysis of alerts that fired in the background but were not displayed to end users. For ongoing safety, it is advised that such an analysis be conducted and correlated with new patient safety–related events. This information could further identify drug-disease alerts that should be added to an institution's subset. Second, the study did not evaluate drug-disease alerts that scored below the scoring threshold. It is possible that some of those alerts would have been found acceptable during the healthcare provider survey phase of the study and added to the final implemented subset.

Implementation of any drug-disease subset will require ongoing maintenance, and part of this maintenance includes continuous collection and analysis of background firing alerts emphasizing correlation of those background alerts with observed patient drug-disease safety–related events. This type of ongoing analysis can inform healthcare systems about background alerts that may need to be added to a drug-disease subset and displayed to end users. Furthermore, as new drug-disease information becomes available, considerations will be required for scoring and evaluation of new drug-disease alerts for addition to the displayed subset.

AUTHOR CONTRIBUTIONS

JLB was involved in the study design, data collection, data analysis and interpretation, drafting of the manuscript, and critical revision of the manuscript. MAP was involved with the data collection, data analysis and interpretation, drafting of the manuscript, and critical revision of the manuscript. JK-U was involved in the study design, data collection, data analysis and interpretation, and critical revision of the manuscript. TD was involved in the data analysis and interpretation and critical revision of the manuscript. KM was involved in the study design and critical revision of the manuscript. DL was involved in the study design, coordination of the Kaiser Permanente clinician survey, and critical revision of the manuscript. KC was involved in data collection, data analysis and interpretation, and critical revision of the manuscript. SS was involved in the study design and coordination of Kaiser Permanente clinician survey. BH was involved in the study design, data interpretation, and critical revision of the manuscript. All authors approved the final manuscript version.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

ACKNOWLEDGMENTS

The authors acknowledge Christine Cheng, PharmD, for assistance with knowledge base scoring and Emanuel Kwahk, PharmD, for assistance with the survey analysis.

CONFLICT OF INTEREST STATEMENT

JLB is employed by First Databank Inc. All other authors have no competing interests to declare.

REFERENCES

- 1. Shah NR, Seger AC, Seger DL, et al. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc 2006; 13 (1): 5–11.

- 2. FDB MedKnowledge™ U.S. Documentation. South San Francisco, CA: First Databank, Inc; 2018.

- 3. Phansalkar S, Desai AA, Bell D, et al. High-priority drug–drug interactions for use in electronic health records. J Am Med Inform Assoc 2012; 19 (5): 735–43.

- 4. American Geriatrics Society 2012 Beers Criteria Update Expert Panel. American Geriatrics Society updated Beers Criteria for potentially inappropriate medication use in older adults. J Am Geriatr Soc 2012; 60: 616–31.

- 5. National Committee for Quality Assurance. Medication Management in the Elderly (DAE/DDE). 2018. https://www.ncqa.org/hedis/measures/medication-management-in-the-elderly/ Accessed July 8, 2018.

- 6. Bubp J, Kapusnik-Uner J, Hoberman B, et al. Description and validation of the Disease Interaction Scoring Tool (DIST) for an Electronic Health Record (EHR) integrated drug knowledgebase [abstract]. Pharmacotherapy 2014; 34: e216.