Abstract

Objective

The study sought to develop a comprehensive and current description of what Clinical Informatics Subspecialty (CIS) physician diplomates do and what they need to know.

Materials and Methods

Three independent subject matter expert panels drawn from and representative of the 1695 CIS diplomates certified by the American Board of Preventive Medicine contributed to the development of a draft CIS delineation of practice (DoP). An online survey was distributed to all CIS diplomates in July 2018 to validate the draft DoP. A total of 316 (18.8%) diplomates completed the survey. Survey respondents provided domain, task, and knowledge and skill (KS) ratings; qualitative feedback on the completeness of the DoP; and detailed professional background and demographic information.

Results

This practice analysis resulted in a validated, comprehensive, and contemporary DoP comprising 5 domains, 42 tasks, and 139 KS statements.

Discussion

The DoP that emerged from this study differs from the 2009 CIS Core Content in 2 respects. First, the DoP reflects the growth in amount, types, and utilization of health data through the addition of a practice domain, tasks, and KS statements focused on data analytics and governance. Second, the final DoP describes CIS practice in terms of tasks in addition to identifying knowledge required for competent practice.

Conclusions

This study (1) articulates CIS diplomate tasks and knowledge used in practice, (2) provides data that will enable the American Board of Preventive Medicine CIS examination to align with current practice, (3) informs clinical informatics fellowship program requirements, and (4) provides insight into maintenance of certification requirements.

Keywords: practice analysis, clinical informatics subspecialty, delineation of practice, workforce development, Physician Board Certification

INTRODUCTION

Physicians who practice clinical informatics analyze, design, implement, and evaluate information systems to enhance individual and population health outcomes, improve patient care, and strengthen the clinician-patient relationship.1 The establishment of the Clinical Informatics Subspecialty (CIS) for physicians in 2011 highlighted the growing importance of this role and recognized the knowledge and skills required for CIS practice.2 The accreditation of the first clinical informatics fellowship programs in 2014 marked another milestone in the maturation of the new subspecialty. In 2018, there were 1695 American Board of Preventive Medicine (ABPM) CIS diplomates and 35 Accreditation Council for Graduate Medical Education (ACGME) accredited clinical informatics fellowship programs.

The American Medical Informatics Association’s (AMIA) development of the Core Content for the Subspecialty of Clinical Informatics (CIS Core Content) was pivotal to the establishment of the CIS and the clinical informatics fellowship programs.1,3,4 In that document, Gardner et al1 described clinical informatics practice in terms of 4 major domains and the knowledge associated with each domain. The CIS Core Content was a key component of ABPM’s application to the American Board of Medical Specialties to establish the CIS and informed the development of ACGME Clinical Informatics Fellowship Program Requirements. Further, the CIS Core Content is the foundation for development of the ABPM CIS examination, board examination review materials, and maintenance of certification requirements and programming.5 Thus, it is essential that this document continue to provide a comprehensive and contemporary description of CIS practice.

Since publication of the Core Content in 2009, CIS practice has evolved in response to the following6:

- increased focus on using the data from electronic health records to support research, precision medicine, public health, and population health
- scientific advances such as phenomics that stimulated development and deployment of innovative data analytic methodologies
- expanded knowledge of how integrating health information technology into clinical processes impacts clinician productivity and patient satisfaction
- growing expectations among users (both clinicians and patients) for how they interact with computational resources.

Awareness of these changes prompted CIS leaders to consider how to update the CIS Core Content to reflect current CIS practice. Additionally, as clinical informatics fellowship program directors gained experience in training and assessing fellows, it became clear that the knowledge outline in the CIS Core Content was not sufficient for developing competencies on which fellows could be assessed.7 To provide more specific guidance on competencies required for fellows, the CIS Core Content needed to be expanded to include tasks performed by CIS practitioners.

To address these issues, AMIA and ABPM agreed to update and expand the CIS Core Content using a formal practice analysis methodology. Practice analysis, sometimes called job or task analysis, “is the systematic definition of the components of work and essential knowledge, skill, and other abilities at the level required for competent performance in a profession, occupation, or role.”8 Conducting a rigorous practice analysis provides a direct link between what professionals do and how their competence is assessed for certification and is integral to the development and operation of high-stakes professional certification programs. Two key elements of this methodology include (1) a structured consensus process to develop a delineation of the practice (DoP) in terms of domains, tasks, and knowledge and skills (KSs) and (2) a survey of active professionals to determine how well the DoP describes their practice.

MATERIALS AND METHODS

Objectives

The objectives of this project were to develop a robust, relevant, and contemporary CIS DoP in terms of domains, tasks, and KSs that would serve as the basis for ABPM’s CIS examination blueprint, inform Clinical Informatics Fellowship training and program accreditation requirements, and provide insight into CIS maintenance of certification requirements.

Project initiation and organization

AMIA conducted the CIS practice analysis in collaboration with ABPM and with the support of the American Board of Pathology (ABPath). After AMIA and ABPM agreed on the project objectives, AMIA contracted with a nonprofit consulting organization that had more than 45 years of experience in credentialing advisory services. The consulting staff planned and led all meetings, managed the peer review process, performed all qualitative and quantitative data collection and analyses based on industry standards, and facilitated discussion and approval of the analytics and results by the Oversight Panel (described below).

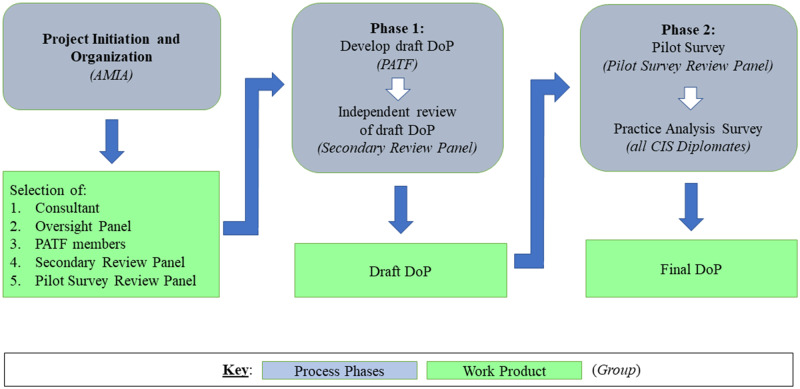

AMIA, ABPM, and ABPath sought to acquire input from CIS diplomates who were representative of the CIS community and to ensure that the CIS practice analysis results would serve multiple uses. To achieve these ends, AMIA established the Oversight Panel to provide executive guidance and the Practice Analysis Task Force (PATF) to provide subject matter expertise. AMIA recruited other subject matter experts to provide additional input and feedback during the process (see Figure 1 and Table 1).

Figure 1.

This figure provides an overview of Clinical Informatics Subspecialty (CIS) Practice Analysis processes and workflows, work products, and groups. The CIS Practice Analysis was initiated by the American Medical Informatics Association (AMIA) and carried out in collaboration with the American Board of Preventive Medicine and the American Board of Pathology. DoP: delineation of practice; PATF: Practice Analysis Task Force.

Table 1.

CIS Practice Analysis Oversight Panel and Task Force members

| Oversight Panel | Task Force (All CIS Diplomates) |
|---|---|
| Douglas B. Fridsma, MD, PhD; AMIA | Jeanne Ballard, MD (Obstetrics and Gynecology); Roper Saint Francis Healthcare, Charleston, SC |
| Christoph U. Lehmann, MD; CIS Diplomate, ABPM Exam Committee, Vanderbilt University | Brad Brimhall, MD, MPH (Pathology); University of Texas Health System, San Antonio, TX |
| Benson Munger, PhD; Consultant | John T. Finnell, MD, MSc (Emergency Medicine); Eskenazi Health/Indiana University, Indianapolis, IN |
| Carolyn Murray, MD, MPH; ABPM | David Hurwitz, MD (Internal Medicine); Allscripts, Chicago, IL |
| Christopher J. Ondrula, JD; ABPM | Christoph U. Lehmann, MD (Pediatrics); ABPM Exam Committee; Vanderbilt University, Nashville, TN |
| Natalie Pageler, MD, MEd; CIS Diplomate, Stanford Children's Health | Robert C. Marshall, MD, MPH, MISM (Family Medicine); Madigan Army Medical Center, Tacoma, WA |
| Howard Silverman, MD, MS (Family Medicine); The University of Arizona College of Medicine – Phoenix | Shelly Nash, DO (Obstetrics and Gynecology); Adventist Health System, Altamonte Springs, FL |
| Elaine B. Steen, MA; AMIA | Francine Sandrow, MD, MSSM (Emergency Medicine); Veterans Health Administration, Philadelphia, PA |
| Jeffrey J. Williamson, MA; AMIA | Howard Silverman, MD, MS (Family Medicine); The University of Arizona College of Medicine – Phoenix, AZ |
| | Julia Skapik, MD, MPH (Internal Medicine); Cognitive Medical Systems, San Diego, CA |
| | James Whitfill, MD (Internal Medicine); HonorHealth, Scottsdale, AZ |
| | Keith F. Woeltje, MD, PhD (Internal Medicine); BJC HealthCare, St. Louis, MO |

ABPM: American Board of Preventive Medicine; AMIA: American Medical Informatics Association; CIS: Clinical Informatics Subspecialty.

The project was divided into 2 phases. In the first phase, the PATF generated a draft DoP. During the second phase, all CIS diplomates were surveyed to validate the draft DoP and identify any missing components (see Figure 1).

Oversight panel

The Oversight Panel included 9 individuals representing AMIA, ABPM, and CIS diplomates, as well as an individual with expertise in the development and management of medical subspecialties. This group was responsible for articulating the vision and goals for the practice analysis process, providing guidance to the PATF, and ensuring that the practice analysis aligned with project objectives.

Practice analysis task force

The PATF comprised 12 CIS diplomates representing a broad range of primary specialties, levels of experience, practice settings, and geographic locations. Two members of the Oversight Panel also served on the PATF to facilitate communication between the 2 groups. The PATF was responsible for performing the work of the practice analysis as described subsequently.

Additional participants

In addition to the Oversight Panel and the PATF, 25 subject matter experts contributed to the CIS practice analysis by serving as interviewees (n = 6), Secondary Review Panel members (n = 10), and Pilot Survey Review Panel members (n = 9). AMIA staff identified interviewees with extensive experience using the current CIS Core Content for exam development and in clinical informatics fellowship programs. AMIA invited all CIS diplomates to indicate their interest in serving on the PATF, Secondary Review Panel, or Pilot Survey Review Panel. A subgroup of the Oversight Panel reviewed all volunteer profiles and developed rosters for each subject matter expert group that were representative of the CIS community (eg, primary specialty, practice setting, years of experience).

Phase 1: developing the draft DoP

In the first phase of the practice analysis, the PATF developed a draft DoP. To inform the work of the PATF, the consultants conducted stakeholder interviews, analyzed job descriptions from current CIS diplomates, summarized foundational articles identified by AMIA staff and the Oversight Panel, and compiled their findings in a PATF briefing document. The Oversight Panel charged the PATF to be forward-leaning in its deliberations and to consider how the field will develop over the next 3-5 years.

During a 2-day PATF meeting, the consultants facilitated a series of activities that enabled participants to (1) create and refine a domain structure, ensuring that the domains identified were mutually exclusive and collectively comprehensive; (2) articulate specific tasks performed by clinical informaticians; and (3) specify the required KSs for performance of these tasks. After the meeting, the draft DoP was circulated to the PATF for review and critique and then revised based on feedback received.

To bring additional perspectives to the practice analysis, the Secondary Review Panel conducted an independent review of the draft DoP (see Figure 1). This group was instructed to assess whether each element of the draft DoP was clear and whether the delineation provided a comprehensive and contemporary description of CIS practice. Oversight Panel members were also invited to participate in this review. During two 2-hour virtual meetings, the PATF considered each reviewer comment and reached consensus on revisions to the draft DoP.

Phase 2: practice analysis survey

In the second phase of the study, the consultants developed, piloted, and administered an online survey to determine whether the draft DoP accurately and completely described the work of practicing CIS professionals. During a 1-week interval in June 2018, Pilot Survey Review Panel members completed a pilot test of the survey, and the Oversight Panel finalized the survey based on their feedback (see Figure 1). The final survey was open from July 10 to 31, 2018, and was distributed by email to all CIS diplomates in the ABPM and ABPath databases (N = 1695). AMIA, ABPM, and ABPath sent 11 email and listserv communications encouraging CIS diplomates to complete the survey.

Survey respondents used customized rating scales to report how the domains of practice, tasks, and KSs relate to effective clinical informatics practice. As outlined in Table 2, there were 2 rating scales associated with the 5 domains of practice and 42 tasks, and 3 rating scales associated with 142 KSs. Because gathering data on 3 rating scales across all 142 KSs would be burdensome on respondents, survey participants were randomly routed to 1 of 2 versions of the survey (see Table 2). All survey respondents also provided qualitative feedback on the completeness of the DoP and completed the professional background and demographic questionnaire. Open-ended questions allowed respondents to identify any domains, tasks, or KSs that they thought were missing.

Table 2.

Practice analysis survey rating scales

| Survey Element | Rating Scales |
|---|---|
| Domains of practice | |
| Tasks | |
| Knowledge and skills | Version A and Version B included: |

ACGME: Accreditation Council for Graduate Medical Education.

Survey analysis methodology

Cronbach’s alpha (α) was calculated to measure internal consistency and scale reliability for the frequency and importance rating scales. Frequency distributions and descriptive statistics were calculated for all ordinal (frequency, importance) and ratio (percentage of time) scales. For the level of mastery ratings, respondents indicated what level of mastery of the knowledge or skill should be required at the time of clinical informatics subspecialty certification. For this nominal variable, a frequency distribution of responses was calculated. Subgroup analyses of the data based on key factors (including years of experience, practice setting, and percentage of work time performing clinical informatics tasks) were performed to explore similarities and differences in patterns of practice based on these characteristics.
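As an illustration of the internal-consistency statistic named above, the following minimal sketch computes Cronbach's alpha from a respondents-by-items matrix of ratings. The function name and the toy ratings are hypothetical; the article does not describe the software or the raw data used in the study.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a list of respondent rows (one score per item)."""
    k = len(ratings[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least 2 items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in ratings]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in ratings])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings: 5 respondents x 3 items on a 5-point frequency scale.
toy_ratings = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
alpha = cronbach_alpha(toy_ratings)
print(f"alpha = {alpha:.2f}")
```

Values close to 1 indicate that the items on a scale vary together, which is the sense in which the reported alphas above .90 are read as high scale reliability.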

Mean values were generated for frequency and importance ratings by assigning numerical values to each response option as follows: for frequency 1 = never, 2 = rarely, 3 = occasionally, 4 = frequently, and 5 = very frequently; for importance, 1 = not important, 2 = minimally important, 3 = moderately important, and 4 = highly important. Accordingly, a mean frequency rating of 3.5 indicates that respondents performed the task or used knowledge, on average, occasionally to frequently. A mean importance rating of 3.2 indicates that a task was at least moderately important to effective clinical informatics practice or a KS was at least moderately important for inclusion in an ACGME fellowship program.
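The coding scheme described above can be sketched as follows. The numeric values for each response option come from the text; the function name and the toy responses are hypothetical and are chosen only to reproduce the two example means (3.5 and 3.2).

```python
# Numeric codes for each response option, as stated in the text.
FREQUENCY_SCALE = {
    "never": 1,
    "rarely": 2,
    "occasionally": 3,
    "frequently": 4,
    "very frequently": 5,
}
IMPORTANCE_SCALE = {
    "not important": 1,
    "minimally important": 2,
    "moderately important": 3,
    "highly important": 4,
}


def mean_rating(responses, scale):
    """Average the numeric codes of the responses; None marks a skipped item."""
    scores = [scale[r] for r in responses if r is not None]
    if not scores:
        raise ValueError("no responses to average")
    return sum(scores) / len(scores)


# Hypothetical responses for one task.
toy_frequency = ["occasionally", "frequently", "frequently", "occasionally"]
toy_importance = ["moderately important"] * 4 + ["highly important"]

mean_frequency = mean_rating(toy_frequency, FREQUENCY_SCALE)
mean_importance = mean_rating(toy_importance, IMPORTANCE_SCALE)
print(mean_frequency, mean_importance)
```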

The PATF reviewed the results of the survey during a virtual meeting and developed recommendations regarding the final DoP. The Oversight Panel reviewed and affirmed the PATF recommendations.

One of the primary uses of practice analysis results is to develop empirically derived examination specifications for a certification exam. Toward this end, 2 sets of domain weights were prepared for PATF and Oversight Panel review. The first set was based on the responses to the following question: what percentage of the Clinical Informatics Subspecialty examination should focus on each of the five domains? The second set was derived from responses to the percentage of time and importance ratings for the 4 task-based domains, as well as the percentage proposed for the fundamental knowledge and skills domain. The PATF considered the results of this weighting analysis and unanimously recommended updated examination specifications. The Oversight Panel subsequently reviewed and approved the recommended examination specifications. AMIA forwarded these recommendations to ABPM as the administrative board for the CIS. ABPM will make the final determination on changes to CIS examination specifications.

RESULTS

The draft DoP generated and reviewed during phase 1 comprised 5 mutually exclusive domains (domain 1 included only fundamental KSs but no task statements), 42 task statements, and 142 KS statements (see Table 3 for CIS Domains of Practice and domain definitions).

Table 3.

CIS domains of practice

| Domain | Definition |
|---|---|
| Domain 1: Fundamental Knowledge and Skills | Fundamental knowledge and skills which provide clinical informaticians with a common vocabulary, basic knowledge across all Clinical Informatics domains, and understanding of the environment in which they function. |
| Domain 2: Improving Care Delivery and Outcomes | Develop, implement, evaluate, monitor, and maintain clinical decision support; analyze existing health processes and identify ways that health data and health information systems can enable improved outcomes; support innovation in the health system through informatics tools and processes. |
| Domain 3: Enterprise Information Systems | Develop and deploy health information systems that are integrated with existing information technology systems across the continuum of care, including clinical, consumer, and public health domains. Develop, curate, and maintain institutional knowledge repositories while addressing security, privacy, and safety considerations. |
| Domain 4: Data Governance and Data Analytics | Establish and maintain data governance structures, policies, and processes. Incorporate information from emerging data sources; acquire, manage, and analyze health-related data; ensure data quality and meaning across settings; and derive insights to optimize clinical and business decision making. |
| Domain 5: Leadership and Professionalism | Build support and create alignment for informatics best practices; lead health informatics initiatives and innovation through collaboration and stakeholder engagement across organizations and systems. |

See Supplementary Appendix B for complete delineation of practice.

CIS: Clinical Informatics Subspecialty.

Response rate

Of the 1695 survey invitations, 17 were undeliverable due to invalid email addresses. Of the remaining 1678 diplomates, 316 completed the survey, yielding a response rate of 18.8%. The number of responses was sufficient to meet the requirements for conducting statistical analyses and exceeded the threshold of 313 suggested by a sample size calculation using a confidence interval of 4.97 and a 95% confidence level.9
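The article does not state which formula produced the sample size threshold. One common approach, assumed here, is Cochran's formula with a finite population correction and maximum variability (p = 0.5). Under those assumptions, and taking the 1678 delivered invitations as the population, the sketch below reproduces the response rate and relates a required sample size to a margin of error. All function names are hypothetical.

```python
import math
from statistics import NormalDist


def z_score(confidence):
    """Two-sided critical value of the standard normal distribution."""
    return NormalDist().inv_cdf(0.5 + confidence / 2)


def required_sample_size(population, margin, confidence=0.95, p=0.5):
    """Cochran's formula with finite population correction (assumed method)."""
    z = z_score(confidence)
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    return math.ceil(n0 / (1 + (n0 - 1) / population))


def margin_of_error(n, population, confidence=0.95, p=0.5):
    """Margin of error for n responses drawn from a finite population."""
    z = z_score(confidence)
    fpc = (population - n) / (population - 1)
    return z * math.sqrt(p * (1 - p) / n * fpc)


delivered = 1695 - 17  # invitations that reached a valid address
response_rate = 316 / delivered

print(f"response rate: {response_rate:.1%}")
print("required n at a 5% margin:", required_sample_size(delivered, 0.05))
print(f"margin of error at n = 316: {margin_of_error(316, delivered):.2%}")
```

Under these assumptions, a 5% margin of error at a 95% confidence level calls for 313 responses, and the 316 responses received correspond to a margin of error of roughly 4.97%.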

Demographic and professional characteristics of respondents

Respondents had an average of 13.6 years of clinical informatics experience and spent an average of 62% of their work time engaged in activities directly related to clinical informatics, although the large standard deviation (28%) suggested wide variability in the actual amount of time engaged in clinical informatics activities. The majority of respondents had earned an MD as their primary degree (92%, n = 292), and 38% (n = 120) of the sample had earned additional degrees (eg, master of health informatics, biomedical informatics, public health, or business administration). Respondents represented 18 different primary board specialties, with the largest number holding board specialties in internal medicine (32%, n = 101), pediatrics (21%, n = 65), family medicine (16%, n = 51), emergency medicine (10%, n = 30), and pathology (8%, n = 25).

Survey respondents worked in 16 different practice settings. The greatest number of respondents worked in not-for-profit academic or university-based health systems, hospitals, or ambulatory care (38%, n = 120); followed by not-for-profit nongovernmental health system, hospital, or ambulatory care (23%, n = 71); federal government health system, hospital, or ambulatory care (10%, n = 30); and university or health professional school (8%, n = 24). Demographic characteristics of respondents are presented in Table 4.

Table 4.

Demographic characteristics of validation survey respondents

| Characteristic | n | % |
|---|---|---|
| Age | | |
| 25-34 y | 7 | 2.2 |
| 35-44 y | 78 | 24.7 |
| 45-54 y | 109 | 34.5 |
| 55-65 y | 85 | 26.9 |
| 65 y or older | 18 | 5.7 |
| I prefer not to answer | 13 | 4.1 |
| Missing | 6 | 1.9 |
| Sex | | |
| Female | 71 | 22.5 |
| Male | 224 | 70.9 |
| I prefer not to answer | 14 | 4.4 |
| Missing | 7 | 2.2 |
| Race/ethnicity | | |
| American Indian or Alaska Native | 1 | 0.3 |
| African American or Black | 4 | 1.3 |
| Asian | 45 | 14.2 |
| Caucasian/White | 222 | 70.3 |
| Hispanic, Latino, or Spanish origin | 9 | 2.8 |
| Native Hawaiian or Other Pacific Islander | 0 | 0.0 |
| Some other race, ethnicity, or origin | 4 | 1.3 |
| I prefer not to answer | 26 | 8.2 |
| Missing | 5 | 1.6 |

Comparable information on existing CIS diplomates to support a rigorous comparison of survey respondents to the general CIS diplomate population was not available. After reviewing respondent data regarding 17 respondent professional and demographic background characteristics, the PATF concluded that the relevant characteristics of survey respondents were generally representative of the broader CIS diplomate population.

Domain ratings

Respondents spent the greatest percentage (32%) of their clinical informatics work time in the improving care delivery and outcomes domain, 26% in the leadership and professionalism domain, 18% in the enterprise information systems domain, and 18% in the data governance and data analytics domain. Respondents reported, on average, that only 6% of their clinical informatics work time was focused on tasks related to an “other” domain option. The members of the PATF reviewed all write-in responses for this “other” domain and determined them to be addressed within the draft DoP, attesting to the completeness of the domain structure. Respondents rated all domains as moderately to highly important to effective clinical informatics practice, with mean importance ratings of 3.3 to 3.9 on a 4-point scale.

Task ratings

The Cronbach’s alpha value exceeded .90 for both task rating scales: frequency (α = .95) and importance (α = .93). Mean task ratings for frequency and importance for the total sample of respondents are documented in Supplementary Appendix A. With respect to frequency of task performance, 3 tasks were rated 4.0 or higher (performed at least frequently), 18 were rated between 3.1 and 3.9 (performed occasionally to frequently), and 21 tasks were rated less than 3.0 (performed less than occasionally). With respect to importance, 37 of the 42 tasks were rated 3.0 or higher on the 4-point scale, indicating that these tasks were moderately to highly important to effective clinical informatics practice. The remaining 5 tasks were rated lower on importance (range, 2.8-2.9).

For the subgroups based on years of clinical informatics experience (1-7 years, 8-15 years, or more than 15 years), there were no differences greater than 0.5 in either the frequency or importance ratings. For the subgroups based on percentage of work time focused on clinical informatics (50% or less vs more than 50%), there were no differences greater than 0.5 between the subgroups with regard to the importance ratings. For the frequency ratings, differences greater than 0.5 were identified for 4 tasks—in each case, the task was performed more frequently by those who spend more time focused on clinical informatics. For the 9 subgroups based on practice setting, 13 tasks had differences in ratings greater than 0.5 on either the importance or frequency scales, but because of the small number of respondents in the various settings, it was not possible to draw any conclusions.
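The subgroup screening described above can be sketched as a comparison of subgroup means against the 0.5 threshold. The article does not specify how differences were computed when more than 2 subgroups were compared; this sketch assumes the largest minus the smallest subgroup mean. The task identifiers and mean ratings are hypothetical.

```python
def flag_subgroup_differences(subgroup_means, threshold=0.5):
    """Return {task_id: spread} for tasks whose subgroup means differ by more
    than the threshold (spread = largest mean minus smallest mean, assumed)."""
    flagged = {}
    for task_id, means in subgroup_means.items():
        spread = max(means.values()) - min(means.values())
        if spread > threshold:
            flagged[task_id] = round(spread, 2)
    return flagged


# Hypothetical mean frequency ratings by share of work time in informatics.
toy_means = {
    "task_A": {"50% or less": 2.9, "more than 50%": 3.6},
    "task_B": {"50% or less": 3.4, "more than 50%": 3.7},
}
flagged = flag_subgroup_differences(toy_means)
print(flagged)
```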

Knowledge and skills ratings

Of the 316 respondents, 151 completed version A of the KS section and 165 completed version B of the KS section (see Table 2). The Cronbach’s alpha value of the KS frequency rating scale was .98. Of the 142 KSs, 6 statements received mean frequency ratings above 4.0, indicating that they were used frequently to very frequently; 70 received mean frequency ratings of 3.1-3.9, indicating that they were used occasionally to frequently; and 66 received mean frequency ratings between 2.0 and 3.0, indicating that they were used rarely to occasionally.

The Cronbach’s alpha value of the KS importance for inclusion in ACGME Clinical Informatics Fellowship Training rating scale was .98. Of the 142 KS statements, 114 were rated 3.0 or higher on the 4-point importance for inclusion scale, indicating that they were moderately to highly important to include; 27 were rated between 2.5 and 3.0, indicating that they fell at least midway between minimally to moderately important; and only 1 (international clinical informatics practices) received a mean importance for inclusion rating below 2.5.

For the level of mastery rating, more than 50% of respondents rated 107 KSs as required at the recall and recognition level and 19 statements as required at the analysis, synthesis, and application levels. However, we note that 28 of the 107 KSs supported at the lower level received support from more than one third of respondents for mastery at the higher level. In the case of only 1 statement (international clinical informatics practices) did a majority of respondents rate the statement as not required at the time of CIS certification. Inspection of the remaining 15 KSs showed varying levels of support for both levels of mastery without either level receiving a simple majority support.

Validation decisions

Using content validity as a guiding principle for validating the DoP, the majority of tasks (36 of 42) were rated high enough to warrant inclusion.10,11 That is, they received mean frequency ratings of 2.5 or more (performed at least rarely to occasionally) and mean importance ratings of 3.0 or more (moderately to highly important). The remaining 6 task statements received lower mean frequency or importance ratings and required additional PATF discussion before final validation. The PATF and Oversight Panel considered these 6 tasks to determine whether the relatively lower frequency ratings were reasonable given the nature of the task, whether the relatively low frequency ratings were more than balanced by high importance ratings, or whether the tasks described recent key trends and changes occurring in clinical informatics practice that may not yet have been universally adopted. Using these criteria, PATF and Oversight Panel members agreed that all 6 of these tasks were valid for inclusion in the final DoP.

Similarly, the majority of KSs (128 of 142) received both mean frequency ratings of 2.5 or higher (used at least rarely to occasionally) and mean importance for inclusion ratings of 3.0 or higher. Accordingly, they were judged to be validated for inclusion. The remaining 14 KSs fell below the midpoint of the range and required additional discussion by the PATF and Oversight Panel to make final validation decisions. After this review, 3 of these 14 KSs were eliminated from the DoP because they were not directly related to CIS practice (ie, international clinical informatics practices) or were too specific as written and were addressed by other knowledge statements (ie, National Academy of Medicine safe, timely, efficient, effective, equitable, patient-centered aims; genomic and proteomic data in clinical care [bioinformatics]). The remaining 11 KSs were retained because they had high importance ratings (K011, K048, K113), were considered basic knowledge (K064), or were recognized as knowledge needed for emerging tasks (K083, K084, K095, K096, K103, K104, K105) (see Supplementary Appendix B).
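The initial screening rule applied to tasks and KSs can be sketched as a simple triage: statements that meet both cutoffs are validated, and the rest are routed to panel review. The cutoffs (2.5 for mean frequency, 3.0 for mean importance) come from the text; the class, function, statement codes, and ratings are hypothetical, and the subsequent panel judgment is not modeled.

```python
from dataclasses import dataclass

FREQUENCY_CUTOFF = 2.5   # mean frequency rating, from the text
IMPORTANCE_CUTOFF = 3.0  # mean importance rating, from the text


@dataclass
class Statement:
    code: str
    mean_frequency: float
    mean_importance: float


def triage(statements):
    """Split statements into (validated, needs_review) lists of codes."""
    validated, needs_review = [], []
    for s in statements:
        meets_both = (
            s.mean_frequency >= FREQUENCY_CUTOFF
            and s.mean_importance >= IMPORTANCE_CUTOFF
        )
        (validated if meets_both else needs_review).append(s.code)
    return validated, needs_review


# Hypothetical statements and ratings, for illustration only.
toy_statements = [
    Statement("X1", mean_frequency=3.4, mean_importance=3.6),
    Statement("X2", mean_frequency=2.2, mean_importance=3.3),
    Statement("X3", mean_frequency=2.8, mean_importance=2.9),
]
validated, needs_review = triage(toy_statements)
print(validated, needs_review)
```

Statements in the review list are not rejected automatically; as described above, the panels retained most of them after weighing importance, the nature of the task, and emerging trends.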

Completeness of the DoP

Survey respondents provided write-in responses to questions asking if tasks they performed or KSs they used in their clinical informatics work were missing from the survey. PATF members reviewed each response and determined that the write-in responses reflected:

- either more general or more specific instances of content already covered,
- “missing” tasks that were covered by the KS statements and “missing” KSs that were covered by the task statements, or
- content that was not specific to clinical informatics practice.

As a result, no new tasks or KSs were added. The complete, validated final DoP, consisting of 5 domains, 42 tasks, and 139 KS statements, is available in Supplementary Appendix B.

DISCUSSION

The CIS practice analysis represents the first time that CIS diplomates have been surveyed to validate their practice. The resulting DoP constitutes a comprehensive and current description of what CIS diplomates do and what they need to know. The DoP that emerged from this study differs from the original CIS Core Content in 2 key respects. First, the DoP reflects changes in practice since publication of CIS Core Content. In particular, the increase in and effective utilization of health data generated by electronic health records and other sources resulted in the addition of a practice domain, tasks, and KSs focused on data governance and analytics.

Second, the DoP describes CIS practice in terms of tasks in addition to identifying the knowledge required for competent CIS practice. These tasks provide context for the KS statements and shed light on how a CIS diplomate uses the knowledge in practice. The task statements are likely to be important inputs into ABPM CIS exam development as well as clinical informatics fellowship program requirement updates, curricula, and fellow assessments. For example, the Task Frequency and Importance Ratings in Supplementary Appendix A would allow the ABPM to identify high frequency, high importance tasks and associated KS statements for certification examination development.

The discipline of clinical informatics is evolving rapidly, and predicting the future is difficult. Anticipated areas of growth or decline may not come to pass, so it will be important to perform periodic practice analysis studies (typically every 5-7 years) to ensure the DoP remains accurate, comprehensive, and contemporary. Given the rate of change in CIS practice, however, it may be necessary to employ interim data collection procedures (eg, focus panels, mini surveys, questionnaires) to ensure no part of the DoP becomes obsolete and no significant changes in practice are missing. At the same time, the currency of the CIS DoP must be balanced against the need for the CIS examination to test established knowledge and foundational principles of practice. Thus, the 7 KSs associated with emerging tasks will require review over time by ABPM to ensure that test questions on this content reflect those areas considered to be foundational principles of CIS practice.

The field of clinical informatics is highly multidisciplinary; however, this study focused exclusively on physicians who were CIS diplomates. AMIA conducted a practice analysis of health informatics professionals (June 2018 to January 2019), and future work will include a comparison of the results of the CIS and health informatics practice analyses.

CONCLUSION

We anticipate that the final DoP will have a visible impact on the CIS in the near term by allowing the ABPM to align the CIS certification exam with current practice and by supporting evolution of ACGME Clinical Informatics Fellowship Requirements. AMIA’s Community of Clinical Informatics Program Directors has already begun work on a “Model Curriculum” for clinical informatics fellowship programs based on the final DoP with the intent to inform ACGME’s next update of Clinical Informatics Fellowship Program Requirements. Additional downstream use cases for the CIS practice analysis include the creation of educational or conference programming as well as maintenance of certification activities.

In the longer term, we envision that the CIS practice analysis and DoP will impact more than AMIA educational programming, ABPM CIS examination specifications, ACGME accreditation requirements, clinical informatics fellowship curriculum development, and fellow assessments. The DoP may eventually inform future job descriptions, hiring decisions, performance evaluations, and professional development choices. Perhaps most important, by capturing the full range of work performed by informaticians, the CIS and forthcoming health informatics DoPs will help organizations understand the unique capacity that informatics professionals possess and the value they offer to healthcare organizations.

FUNDING

This work was supported by the American Medical Informatics Association.

AUTHOR CONTRIBUTIONS

Each of the authors (HDS, EBS, JNC, CJO, JJW, and DBF) contributed substantially to the article as follows: (1) substantial contributions to the conception or design of the work, or the acquisition, analysis, or interpretation of data for the work; (2) drafting the work or revising it critically for important intellectual content; (3) final approval of the version to be published; and (4) agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

ACKNOWLEDGEMENTS

The authors would like to acknowledge the contributions of the Oversight Panel and the PATF (Table 1) as well as the individuals who shared their expertise and time through interviews, serving as independent reviewers, and participating in the survey pilot. We acknowledge the support of the American Board of Preventive Medicine and the American Board of Pathology in the survey distribution. Further, Carla Caro of ACT-ProExam co-facilitated this effort, and Sandy Greenberg supported the practice analysis planning and implementation.

CONFLICT OF INTEREST STATEMENT

None declared.

REFERENCES

- 1. Gardner RM, Overhage JM, Steen EB, et al. Core content for the subspecialty of clinical informatics. J Am Med Inform Assoc 2009; 16 (2): 153–7.

- 2. Shortliffe EH, Detmer DE, Munger BS. Clinical informatics: emergence of a new profession. In: Finnell J, Dixon B, eds. Clinical Informatics Study Guide: Text and Review. Basel, Switzerland: Springer; 2016: 3–21.

- 3. Detmer DE, Lumpkin JR, Williamson JJ. Defining the medical subspecialty of clinical informatics. J Am Med Inform Assoc 2009; 16 (2): 167–8.

- 4. Safran C, Shabot MM, Munger BS, et al. Program requirements for fellowship education in the subspecialty of clinical informatics. J Am Med Inform Assoc 2009; 16 (2): 158–66. Erratum in: J Am Med Inform Assoc 2009; 16: 605.

- 5. Lehmann CU, Gundlapalli AV, Williamson JJ, et al. Five years of clinical informatics board certification for physicians in the United States of America. Yearb Med Inform 2018; 27: 237–42.

- 6. Fridsma DB. Health informatics: a required skill for 21st century clinicians. BMJ 2018; 362: k3043.

- 7. Silverman HS, Lehmann CU, Munger BS. Milestones: critical elements in clinical informatics fellowship programs. Appl Clin Inform 2016; 7: 177–90.

- 8. Henderson JP, Smith C. Job/practice analysis. In: Knapp J, Anderson L, Wild C, eds. Certification: The ICE Handbook. 2nd ed. Washington, DC: Institute for Credentialing Excellence; 2016: 1221–46.

- 9. Cohen J. Statistical Power Analysis for the Behavioral Sciences. Revised ed. New York, NY: Academic Press; 1977.

- 10. American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association; 2014.

- 11. Commission for Certifying Agencies. Standards for the Accreditation of Certification Programs. Washington, DC: Institute for Credentialing Excellence; 2014.
