Abstract

Objective

Cohort selection for clinical trials is a key step in clinical research. We propose a hierarchical neural network to determine whether a patient satisfies each selection criterion.

Materials and Methods

We designed a hierarchical neural network (denoted as CNN-Highway-LSTM or LSTM-Highway-LSTM) for track 1 of the 2018 national natural language processing (NLP) clinical challenges (n2c2), on cohort selection for clinical trials. The neural network is composed of 5 components: (1) sentence representation using a convolutional neural network (CNN) or a long short-term memory (LSTM) network; (2) a highway network to adjust information flow; (3) a self-attention neural network to reweight sentences; (4) document representation using LSTM, which takes sentence representations in chronological order as input; and (5) a fully connected neural network to determine whether each criterion is met. We compared the proposed method with 2 groups of variants: methods that use only the first component to represent documents directly, followed by the fully connected network for classification (denoted as CNN-only or LSTM-only), and methods without the highway network (denoted as CNN-LSTM or LSTM-LSTM). The performance of all methods was measured by micro-averaged precision, recall, and F1 score.

Results

The micro-averaged F1 scores of CNN-only, LSTM-only, CNN-LSTM, LSTM-LSTM, CNN-Highway-LSTM, and LSTM-Highway-LSTM were 85.24%, 84.25%, 87.27%, 88.68%, 88.48%, and 90.21%, respectively. The highest micro-averaged F1 score exceeds that of our challenge submission (88.55%), which was one of the top-ranked results in the challenge. These results indicate that the proposed method is effective for cohort selection for clinical trials.

Discussion

Although the proposed method achieved promising results, some errors were caused by word ambiguity, negation, interpretation of numbers, and incomplete dictionaries. Moreover, imbalanced data remain a challenge to be tackled in future work.

Conclusion

In this article, we proposed a hierarchical neural network for cohort selection. Experimental results show that the method is effective for cohort selection.

Keywords: cohort selection, clinical trials, hierarchical neural network, classification, mental health records

INTRODUCTION

Cohort studies are an important type of medical research used to evaluate associations between diseases and exposures. Cohort selection, which identifies patients who meet predefined criteria, is a necessary prerequisite for cohort studies. The most common form of cohort selection, namely phenotyping, is based on electronic health records (EHRs) and can be extremely time-consuming and challenging because of the complexity of the criteria and the diversity of EHR data. Matching the criteria requires accessing and analyzing multiple documents from different subsystems for each patient. The data include structured tables (such as laboratory results and medications) and unstructured text (such as admission notes, radiology reports, progress notes, and discharge summaries). Extracting meaningful information from this mix of structured and unstructured data is difficult because of ungrammatical text, abbreviations, misspellings, local dialectal phrases, and quantitative information in various formats. Moreover, cohort selection requires further consolidation of the extracted information.

In the past decades, a large number of methods have been proposed for cohort selection using EHRs. Various phenotypes, such as cancer,1 diabetes,2 heart failure,3 smoking status,4 and obesity,5 derived from different data sources including clinical notes, imaging data, and insurance claims data, have been considered as selection criteria. These methods fall into 3 categories: rule-based methods,6–11 machine learning-based methods,12,13 and hybrid methods.14,15

Rule-based methods usually require medical experts to design intricate rules based on the cohort selection criteria, such as logical constraints like (hemoglobin < 10 and age > 50). A representative rule-based effort was carried out by the Electronic Medical Records and Genomics (eMERGE)16 consortium, which developed a series of algorithms to define phenotypes and to implement and validate rules for a number of diseases. Complex rules, including nested Boolean logic and negation, cardinality constraints, and temporal logic, were included in the eMERGE project. The Phenotype KnowledgeBase (PheKB)17 repository integrated the algorithms from eMERGE as well as from other studies. Rule-based methods are effective but have drawbacks. First, it is almost impossible to apply an existing algorithm directly to new data because data formats vary. Second, the existing algorithms cover only a small fraction of diseases and phenotypes, and developing a rule-based system for new diseases and phenotypes is time-consuming and laborious.
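The example constraint above can be expressed as a simple predicate over a structured record. This is a minimal sketch: the `PatientRecord` fields, the helper name `meets_rule`, and the sample values are hypothetical, and production rule sets such as eMERGE's layer negation, cardinality, and temporal logic on top of this.

```python
from dataclasses import dataclass


@dataclass
class PatientRecord:
    """Hypothetical structured fields; real EHR schemas differ across institutions."""
    age: int
    hemoglobin: float


def meets_rule(patient: PatientRecord) -> bool:
    # The logical constraint from the text: (hemoglobin < 10 and age > 50).
    return patient.hemoglobin < 10 and patient.age > 50


candidates = [
    PatientRecord(age=62, hemoglobin=9.1),
    PatientRecord(age=45, hemoglobin=8.7),
    PatientRecord(age=71, hemoglobin=12.4),
]
cohort = [p for p in candidates if meets_rule(p)]
```

Porting even this small rule to another site usually means remapping field names and units, which illustrates why rule-based algorithms transfer poorly to new data.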

Machine learning methods, which can learn patterns from data automatically, have attracted increasing attention. For instance, Mani et al18 compared classical machine learning methods, including k-nearest neighbors (KNN), naïve Bayes (NB), decision tree (DT), random forest (RF), classification and regression tree (CART), support vector machine (SVM), and logistic regression (LR), for type 2 diabetes risk forecasting. Zheng et al2 compared all these methods except CART to identify type 2 diabetes on another corpus. Recently, a few researchers have introduced deep learning methods for cohort selection and obtained promising results.19

Hybrid methods combine rule-based and machine learning methods in a number of ways. The 2 typical ways are: 1) features extracted by rules are fed into machine learning methods together with other features; 2) rule-based methods are used as a post-processing module to fix errors made by machine learning methods. For example, Xu et al14 proposed a hybrid method to detect patients with colorectal cancer, applying a machine learning method to free text and a rule-based method to structured data to determine colorectal cancer cases.

All 3 categories of methods usually rely on natural language processing (NLP) techniques to extract useful information such as clinical entities, quantitative information, and temporal information. The most frequently used NLP tools are MetaMap,20 HITex,4 CUIMANDREef,3 REX,21 EpiDEA,22 MedLEE,23 cTAKES,24 and NegEx.25

Although lots of methods have been proposed for cohort selection, it is still difficult to compare them with each other due to the lack of public data. The Integrating Biology and the Bedside (i2b2) center organized 2 shared tasks on smoking detection26 and obesity identification5 in 2006 and 2008, respectively. The rule-based methods performed just as well as other methods in the i2b2 2006 challenge and outperformed other methods in the i2b2 2008 challenge. The limitation of the 2 challenges is that they only considered 1 phenotype. In 2016, a challenge on symptom severity prediction from neuropsychiatric clinical records was organized,27 and the ensemble system of association rules and machine learning methods won the challenge. The machine learning methods used in the ensemble system include SVM, RF, NB, Adaboost, and deep neural network.

In 2018, the national NLP clinical challenges (n2c2) were launched, including a track (track 1) on cohort selection for clinical trials that considered 13 criteria (eg, drug abuse). The organizers provided the de-identified mental health records (MHRs) of 288 patients for system development and testing. We participated in this challenge and proposed a hierarchical neural network. Inspired by Yang et al,28 we used a hierarchical network to represent documents based on sentence representations. In this network, a convolutional neural network (CNN) or a long short-term memory (LSTM) network was used for sentence representation, a highway network29 was applied to adjust information flow, a self-attention mechanism30 was adopted to reweight sentences, an LSTM was used for document representation, and a weighted cross entropy was used as the loss function to relieve the data imbalance problem. Experimental results showed that the proposed hierarchical neural network was effective for cohort selection for clinical trials.

MATERIALS AND METHODS

Data sets

The organizers of the n2c2 2018 challenge provided a corpus of the MHRs of 288 patients, manually annotated with indicators for 13 predefined selection criteria. The corpus was split into a training set of 202 patients and a test set of 86 patients. The selection criteria are described in detail in Supplementary Table 1. Each patient had 2–5 records. Figure 1 shows the data distribution across the 13 selection criteria, some of which are extremely imbalanced, such as ALCOHOL-ABUSE, ASP-FOR-MI, DRUG-ABUSE, ENGLISH, KETO-1YR, MAKES-DECISIONS, and MI-6MOS.

Figure 1.

Data distribution on cohort selection criteria.

Data processing

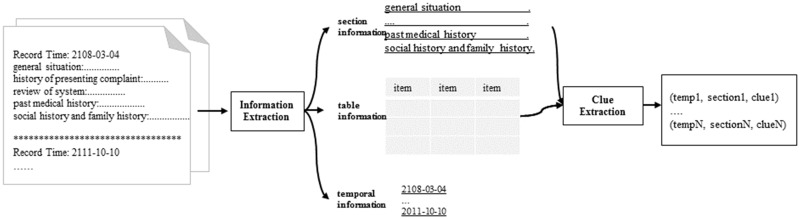

The task of cohort selection for clinical trials is to determine which of the 13 predefined criteria a patient satisfies according to the patient's MHRs. To tackle this task in a unified framework, we processed the MHRs of each patient as shown in Figure 2. First, we extracted section information, tables, and temporal information from the MHRs, following previous studies31 for section and temporal information. To extract information from tables, we located each table by finding a table head followed by consecutive short lines (eg, “----”) and consecutive blank rows, and then obtained items and values by splitting each line on runs of 2–4 consecutive spaces. The table heads included “data,” “labs,” “labs/studies,” “chemistries,” “selected recent labs,” “data: pending,” “database,” “chemistry,” “laboratory,” “labs/rads,” “laboratory data,” “lab results,” “laboratories,” “lab data,” and “results and diagnostic testing.” To represent table information, we converted items with numeric values into items with text values such as high, low, normal, or unknown. For example, “HCT 35.6” was converted into “HCT L” because the normal range of HCT is 36.0–46.0.

Second, we designed simple rules based on a keyword dictionary to extract sentences related to each criterion (called clues) and sorted them in chronological order. The clues were sentences containing the keywords listed in Supplementary Table 2. Finally, we obtained 12 235 clues, each represented as a triple (temporal information, section information, clue), such as (“2071/02/13,” “general situation,” “that he has had renal insufficiency in the past”).
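A minimal sketch of the table-normalization step, assuming each table cell holds an "item value" pair and that reference ranges come from a lookup table. `REFERENCE_RANGES`, `split_row`, `categorize`, and `convert_row` are hypothetical names; only the HCT range and the 2–4-space separator come from the text, and the one-letter codes H/L/N are an assumed encoding of high/low/normal.

```python
import re

# Reference ranges used to turn numeric lab values into categories.
# Only the HCT range (36.0-46.0) comes from the text; add others as needed.
REFERENCE_RANGES = {"HCT": (36.0, 46.0)}

# Cells in a table line are separated by runs of 2-4 consecutive spaces.
CELL_SEPARATOR = re.compile(r" {2,4}")


def split_row(line: str) -> list[str]:
    """Split one table line into non-empty, stripped cells."""
    return [cell.strip() for cell in CELL_SEPARATOR.split(line.strip()) if cell.strip()]


def categorize(item: str, value: str) -> str:
    """Map a numeric value to H (high), L (low), N (normal), or unknown."""
    try:
        number = float(value)
    except ValueError:
        return "unknown"
    if item not in REFERENCE_RANGES:
        return "unknown"
    low, high = REFERENCE_RANGES[item]
    if number < low:
        return "L"
    if number > high:
        return "H"
    return "N"


def convert_row(line: str) -> list[str]:
    """Convert cells of the assumed form "item value" into "item category"."""
    converted = []
    for cell in split_row(line):
        parts = cell.split()
        if len(parts) != 2:
            continue  # skip cells that are not a simple item/value pair
        item, value = parts
        converted.append(f"{item} {categorize(item, value)}")
    return converted


print(convert_row("HCT 35.6   Na 140"))  # ['HCT L', 'Na unknown']
```

Items without a known reference range fall back to "unknown", which mirrors the text's observation that values lacking a reference are hard to interpret.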

Figure 2.

Data processing flow.
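The clue-extraction step (keyword matching followed by chronological sorting into date/section/sentence triples) can be sketched as follows. The keyword lists, the `extract_clues` name, and the input layout are assumptions for illustration; the actual dictionary is the one in Supplementary Table 2.

```python
# Hypothetical keyword lists; the actual dictionary is given in Supplementary Table 2.
KEYWORDS = {
    "CREATININE": ["creatinine", "renal insufficiency"],
    "DRUG-ABUSE": ["cocaine", "heroin"],
}


def extract_clues(notes, criterion):
    """Return (date, section, sentence) triples that mention a keyword, oldest first.

    `notes` is assumed to be a list of (date, section, sentences) tuples, with dates
    written as YYYY/MM/DD strings so that string order equals chronological order.
    """
    keywords = KEYWORDS[criterion]
    clues = []
    for record_date, section, sentences in notes:
        for sentence in sentences:
            lowered = sentence.lower()
            if any(keyword in lowered for keyword in keywords):
                clues.append((record_date, section, sentence))
    clues.sort(key=lambda clue: clue[0])  # chronological order (stable for ties)
    return clues


notes = [
    ("2072/05/01", "labs", ["Creatinine 1.9, up from baseline."]),
    ("2071/02/13", "general situation",
     ["that he has had renal insufficiency in the past", "He walks daily."]),
]
clues = extract_clues(notes, "CREATININE")
```

Plain substring matching is deliberately simple; it cannot distinguish different senses of an abbreviation, which is one source of the word-ambiguity errors analyzed in the Discussion.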

Task definition

We formulated cohort selection for clinical trials as 13 binary classification tasks corresponding to the 13 criteria. Formally, each patient $p$ is labeled with $y_i$ for the $i$-th criterion, where $1 \le i \le 13$ and $y_i \in \{0, 1\}$. The clues extracted for each selection criterion form an instance $E = \{s_1, s_2, \ldots, s_n\}$, where $s_j$ is a clue containing the words $w_{j1}, w_{j2}, \ldots, w_{jm}$. All clues in $E$ are sorted in chronological order.

Hierarchical neural network

Following Yang et al,28 we used a hierarchical neural network, called CNN-Highway-LSTM or LSTM-Highway-LSTM (Figure 3), to classify an instance as 0 or 1, where 0 stands for “not met” and 1 for “met.” The network includes 5 components: (1) a sentence representation network using CNN or LSTM, (2) a highway network to adjust information flow, (3) a self-attention network to reweight sentences, (4) a document representation network using LSTM, and (5) a fully connected network for instance classification. The following sections present each component in detail.

Figure 3.

Overall architecture of the hierarchical neural network for instance classification.

Sentence representation

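For clue $s_j$, each word $w_{jk}$ was represented by a $d$-dimensional word embedding $x_{jk}$. We applied a CNN or an LSTM to obtain the representation of $s_j$, denoted by $h_j$. In the CNN, convolution was applied to $x_{j1}, \ldots, x_{jm}$ using $g$ sliding filters for each filter size $l$, and max pooling was used for down-sampling. In the LSTM variant, we used the following bidirectional LSTM to represent $s_j$:

$$\overrightarrow{h}_{jk} = \overrightarrow{\mathrm{LSTM}}\left(x_{jk}, \overrightarrow{h}_{j,k-1}\right) \tag{1}$$

$$\overleftarrow{h}_{jk} = \overleftarrow{\mathrm{LSTM}}\left(x_{jk}, \overleftarrow{h}_{j,k+1}\right) \tag{2}$$

where $\overrightarrow{h}_{jk}$ and $\overleftarrow{h}_{jk}$ are the hidden states of $w_{jk}$ in the forward and backward directions. The last hidden states of the 2 directions were concatenated to form the representation of $s_j$, that is, $h_j = [\overrightarrow{h}_{jm}; \overleftarrow{h}_{j1}]$.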
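To make the CNN variant concrete, here is a pure-Python sketch of convolution followed by max-over-time pooling for one clue. In the experiments, filter widths l were 3 and 5 with g = 150 filters per width and d = 200; the toy below uses d = 2 for readability. The ReLU activation, the zero feature for clues shorter than a filter, and the name `conv_max_pool` are assumptions, not details given in the text.

```python
def conv_max_pool(embeddings, filters):
    """Convolve filters over a sentence and max-pool each over time.

    embeddings: list of m word vectors, each of length d.
    filters: list of filters; a filter of width l is a list of l rows of length d.
    Returns one pooled feature per filter.
    """
    features = []
    for filt in filters:
        width = len(filt)
        scores = []
        for start in range(len(embeddings) - width + 1):
            window = embeddings[start:start + width]
            score = sum(
                w * x
                for f_row, x_row in zip(filt, window)
                for w, x in zip(f_row, x_row)
            )
            scores.append(max(0.0, score))  # ReLU (assumed activation)
        # Sentences shorter than the filter yield no windows; use 0.0 (assumption).
        features.append(max(scores) if scores else 0.0)
    return features


# Toy example: m = 3 words, d = 2, one filter of width 2 and one of width 3.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
filters = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]],
]
print(conv_max_pool(sentence, filters))  # [2.0, 4.0]
```

Max pooling makes the clue representation a fixed-length vector (one value per filter) regardless of clue length, which is what lets clues of different lengths be stacked for the later stages.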

Highway network

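We used a highway network to adjust information flow as follows:

$$z_j = H(h_j, W_H) \odot T(h_j, W_T) + h_j \odot C(h_j, W_C) \tag{3}$$

where $z_j$ is the highway output for the input $h_j$ (the representation of clue $s_j$), $W_H$, $W_T$, and $W_C$ are parameters, $\odot$ denotes element-wise multiplication, and $H$ is a transformation function, in our case the ReLU function. $T$ is a transformation gate:

$$T(h_j, W_T) = \sigma(W_T h_j + b_T) \tag{4}$$

where $\sigma$ is the sigmoid function and $b_T$ is a bias vector. $C$ is a carry gate, usually set to $C = 1 - T$.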
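The highway layer in Eqs. (3) and (4) can be sketched in pure Python with the carry gate tied to C = 1 - T. The bias b_H inside H, the toy weights, and the function names are assumptions for illustration.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def highway(x, w_h, b_h, w_t, b_t):
    """One highway layer with the carry gate tied to C = 1 - T.

    y = H(x) * T(x) + x * (1 - T(x)), with H = ReLU(W_H x + b_H)
    and T = sigmoid(W_T x + b_T), all products element-wise.
    """
    h = [max(0.0, a + b) for a, b in zip(matvec(w_h, x), b_h)]
    t = [1.0 / (1.0 + math.exp(-(a + b))) for a, b in zip(matvec(w_t, x), b_t)]
    return [hi * ti + xi * (1.0 - ti) for hi, ti, xi in zip(h, t, x)]


identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
x = [0.5, -2.0]
carried = highway(x, identity, [0.0, 0.0], zeros, [-50.0, -50.0])    # gate closed: y ~ x
transformed = highway(x, identity, [0.0, 0.0], zeros, [50.0, 50.0])  # gate open: y ~ ReLU(x)
```

Setting b_T strongly negative closes the transform gate so the layer simply carries its input; Srivastava et al29 recommend initializing b_T to negative values for this reason, which biases the network toward carry behavior early in training.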

Self-attention

As different clues may be of different importance, we reweighted all clues using self-attention as follows:

| (5) |

| (6) |

where $\alpha_j$ is the attention weight of $z_j$, the highway output for clue $s_j$, and $\tilde{z}_j$ is the corresponding reweighted output.
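As an illustration, the sketch below assumes an additive (Bahdanau-style30) score for each clue, normalizes the scores with a softmax to obtain the weights, and rescales each clue vector by its weight, so that one vector per clue is passed on to the document-level LSTM. The scoring form, parameter values, and function name are assumptions and may differ from the exact form of Eqs. (5) and (6).

```python
import math


def additive_attention(states, w, b, v):
    """Softmax-normalized additive attention over clue vectors.

    Score e_j = v . tanh(W z_j + b) (an assumed, Bahdanau-style form);
    alpha = softmax(e); each clue vector is rescaled by its weight.
    Returns (weights, reweighted_states).
    """
    if not states:  # documents without clues
        return [], []
    scores = []
    for z in states:
        hidden = [math.tanh(sum(wi * zi for wi, zi in zip(row, z)) + bi)
                  for row, bi in zip(w, b)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    reweighted = [[alpha * zi for zi in z] for alpha, z in zip(weights, states)]
    return weights, reweighted


clues = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, reweighted = additive_attention(clues, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], [1.0, 1.0])
```

Unlike attention that pools clues into a single weighted sum, this variant keeps the sequence intact, which matches the architecture's next step of reading clues in chronological order.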

Document representation

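To take advantage of the temporal relationships among the clues of an instance $E$ (also called a document in this study), we used the following LSTM to represent the instance:

$$g_t = \mathrm{LSTM}\left(\tilde{z}_t, g_{t-1}\right) \tag{7}$$

where $g_t$ is the hidden state at time step $t$ and $\tilde{z}_t$ is the self-attention output for the $t$-th clue. The last hidden state of the LSTM, $g_n$, is used as the representation of $E$, denoted by $v$.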
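A pure-Python sketch of the document-level LSTM, using the standard LSTM gate equations (input, forget, and output gates plus a candidate cell state) and returning the last hidden state. `lstm_last_state` and `constant_params` are hypothetical names, and the weights are toy values. The text does not say how documents with no clues (770 in this corpus) are handled, so returning the zero initial state for an empty input is an assumption.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def lstm_last_state(inputs, params, hidden_size):
    """Run a unidirectional LSTM over clue vectors; return the last hidden state.

    params maps each gate ("i", "f", "o", "g") to (W, U, b): W acts on the input,
    U on the previous hidden state. An empty input returns the zero initial state.
    """
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in inputs:
        pre = {
            gate: [
                sum(wk * xk for wk, xk in zip(w_row, x))
                + sum(uk * hk for uk, hk in zip(u_row, h))
                + bias
                for w_row, u_row, bias in zip(w, u, b)
            ]
            for gate, (w, u, b) in params.items()
        }
        i = [sigmoid(a) for a in pre["i"]]    # input gate
        f = [sigmoid(a) for a in pre["f"]]    # forget gate
        o = [sigmoid(a) for a in pre["o"]]    # output gate
        g = [math.tanh(a) for a in pre["g"]]  # candidate cell state
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h


def constant_params(value, input_size, hidden_size):
    """Hypothetical helper for demos: every weight and bias equals `value`."""
    w = [[value] * input_size for _ in range(hidden_size)]
    u = [[value] * hidden_size for _ in range(hidden_size)]
    b = [value] * hidden_size
    return {gate: (w, u, b) for gate in ("i", "f", "o", "g")}


clue_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # chronologically ordered
doc_vector = lstm_last_state(clue_vectors, constant_params(0.5, 2, 2), hidden_size=2)
```

Because the LSTM reads clues in order, the last hidden state summarizes the whole history with more influence from recent clues, which is the motivation for sorting clues chronologically.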

Classifier

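We fed the representation of instance $E$ (ie, $v$) into a multi-layer perceptron network for classification:

$$\hat{y}_i = \arg\max_{c \in \{0, 1\}} \left[\mathrm{softmax}(W v + b)\right]_c \tag{8}$$

where $W$ is a weight matrix, $b$ is a bias vector, and $\hat{y}_i$ is the label for the $i$-th criterion predicted by our model.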
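A minimal sketch of the output layer for one criterion with toy dimensions: a softmax over the 2 classes followed by argmax. Any hidden layers of the multi-layer perceptron are omitted, and the weights and the name `classify` are hypothetical.

```python
import math


def classify(doc_vector, weight, bias):
    """Softmax over {0: not met, 1: met}; returns (probabilities, predicted label)."""
    logits = [sum(w * v for w, v in zip(row, doc_vector)) + b for row, b in zip(weight, bias)]
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    label = max(range(len(probs)), key=lambda c: probs[c])  # argmax
    return probs, label


probs, label = classify([1.0, 2.0], [[0.5, 0.0], [0.0, 0.5]], [0.0, 0.0])
```

The probabilities, not just the argmax label, are what the weighted cross-entropy loss consumes during training.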

As the data distribution on some cohort selection criteria is extremely imbalanced, we used class weights during model training. For the i-th criterion, the weight of class c is calculated by

| (9) |

where $w_c$ is the weight of class $c$ and $N_c$ is the number of training samples in class $c$ for the $i$-th criterion.

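Then, weighted cross entropy was used as the classification loss $\mathcal{L}$, which can be written as

$$\mathcal{L} = -\sum_{E} w_{y_i} \log p(y_i \mid E) \tag{10}$$

where $p(y_i \mid E)$ is the probability the model assigns to the gold label $y_i$ of instance $E$, and the sum runs over the training instances for the $i$-th criterion.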
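As an illustration, the sketch below uses a common inverse-frequency scheme, w_c = N / (K * N_c) (the same formula as scikit-learn's "balanced" option), as a stand-in for Eq. (9), together with a summed weighted cross entropy. The weighting formula is an assumption and may differ from the one the authors used; the labels and probabilities are toy values.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Assumed inverse-frequency scheme: w_c = N / (K * N_c).

    N is the number of training instances, K the number of classes present,
    and N_c the count of class c. Rare classes get larger weights.
    """
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * n_c) for c, n_c in counts.items()}


def weighted_cross_entropy(probs, labels, class_weights):
    """Sum over instances of -w_y * log p(y | E), where probs[j][y] is p(y | E_j)."""
    return -sum(class_weights[y] * math.log(p[y]) for p, y in zip(probs, labels))


labels = [0, 0, 0, 1]  # toy labels: "met" (1) is the minority class
weights = balanced_class_weights(labels)  # {0: 0.666..., 1: 2.0}
probs = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.4, 0.6]]
loss = weighted_cross_entropy(probs, labels, weights)
```

With these weights, a mistake on the single minority instance costs 3 times as much as a mistake on a majority instance, which counteracts the tendency of an unweighted loss to favor the majority class.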

EXPERIMENTS

The baseline methods in our study were the following variants of our method (CNN-Highway-LSTM or LSTM-Highway-LSTM): (1) CNN-only or LSTM-only, which uses only a CNN or LSTM to represent documents directly and a multi-layer perceptron for classification; and (2) CNN-LSTM or LSTM-LSTM, which contains all components except the highway network. We used the same hyperparameters for all models. The word embedding dimension (d) was set to 200, the sizes of the sliding filters in the CNN (l) were set to 3 and 5, the number of filters of each size (g) was set to 150, the dimension of the LSTM hidden states was set to 100, the learning rate was set to 0.001, and the dropout probability was set to 0.5. The number of training epochs was tuned from 1 to 80 via 5-fold cross-validation on the training set. We implemented all methods using TensorFlow and pretrained the word embeddings on the “NOTEEVENTS” table of MIMIC-III using the word2vec implementation in gensim (https://radimrehurek.com/gensim/models/word2vec.html) with default parameters. All models were evaluated on the independent test set, and their performance was measured by micro-averaged precision (P), recall (R), and F1 score (F), calculated with the official evaluation tool provided by the challenge organizers.
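For readers unfamiliar with micro-averaging, the sketch below pools true positives, false positives, and false negatives for the "met" class over all (patient, criterion) pairs before computing P, R, and F. It is not the official n2c2 scorer, which may also report the "not met" class; the function name, keys, and toy labels are hypothetical.

```python
def micro_prf(gold, pred):
    """Micro-averaged precision, recall, and F1 for the "met" (1) class.

    gold, pred: dicts mapping (patient, criterion) -> 0/1 with the same keys.
    Counts are pooled over all keys before P, R, and F are computed.
    """
    tp = fp = fn = 0
    for key, g in gold.items():
        p = pred[key]
        if p == 1 and g == 1:
            tp += 1
        elif p == 1 and g == 0:
            fp += 1
        elif p == 0 and g == 1:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy labels for 2 patients and 2 criteria.
gold = {("p1", "ABDOMINAL"): 1, ("p1", "ENGLISH"): 1, ("p2", "ABDOMINAL"): 0, ("p2", "ENGLISH"): 1}
pred = {("p1", "ABDOMINAL"): 1, ("p1", "ENGLISH"): 0, ("p2", "ABDOMINAL"): 1, ("p2", "ENGLISH"): 1}
precision, recall, f1 = micro_prf(gold, pred)
```

Pooling counts means frequent criteria dominate the micro-averaged score, so weak performance on a rare, imbalanced criterion can be masked in the overall number.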

RESULTS

Supplementary Table 3 shows the overall performance of LSTM-Highway-LSTM and its variants. LSTM-Highway-LSTM achieved the highest micro-averaged F1 score, 0.9021, outperforming the baseline methods by 1.53 to 5.96 percentage points. Both the highway network and the LSTM for document representation improved performance: the highway network contributed a gain of 1.53 percentage points in F1 score (LSTM-Highway-LSTM vs LSTM-LSTM), and the highway network and document-level LSTM together contributed 5.96 percentage points (LSTM-Highway-LSTM vs LSTM-only). The methods using LSTM for sentence representation outperform those using CNN; however, CNN-only performs better than LSTM-only.
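The gains quoted in this paragraph can be recomputed from the micro-averaged F1 scores reported in the Abstract (in percent); differences are in percentage points. The dictionary and helper names are for illustration only.

```python
# Micro-averaged F1 scores (%) as reported in the Abstract.
F1 = {
    "CNN-only": 85.24, "LSTM-only": 84.25, "CNN-LSTM": 87.27,
    "LSTM-LSTM": 88.68, "CNN-Highway-LSTM": 88.48, "LSTM-Highway-LSTM": 90.21,
}


def gain(model, baseline):
    """F1 difference in percentage points, rounded to 2 decimals."""
    return round(F1[model] - F1[baseline], 2)


baselines = ["CNN-only", "LSTM-only", "CNN-LSTM", "LSTM-LSTM"]
gains = {b: gain("LSTM-Highway-LSTM", b) for b in baselines}
print(gains)
```

The smallest gain is over LSTM-LSTM (the highway network alone) and the largest over LSTM-only (highway network plus document-level LSTM).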

Note that our best submission to the challenge achieved an F1 score of 0.8855 using an ensemble of CNN-only and CNN-Highway-LSTM; for each criterion, the ensemble used the results of whichever of the 2 models performed better.
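The per-criterion selection strategy can be sketched as below. The text does not say how "better" was judged for each criterion, so the sketch assumes a held-out validation F1 (for example, from cross-validation on the training set). The function name is hypothetical, and the scores and predictions are toy values, not results from this study.

```python
def select_per_criterion(val_scores, test_preds):
    """Per criterion, keep the predictions of the model with the higher validation F1.

    val_scores: {model: {criterion: F1}}; test_preds: {model: {criterion: labels}}.
    Ties go to the model listed first.
    """
    models = list(val_scores)
    chosen = {}
    for criterion in val_scores[models[0]]:
        best = max(models, key=lambda m: val_scores[m][criterion])
        chosen[criterion] = (best, test_preds[best][criterion])
    return chosen


# Toy values for illustration only; they are not results from this study.
val_scores = {
    "CNN-only": {"ABDOMINAL": 0.70, "ENGLISH": 0.95},
    "CNN-Highway-LSTM": {"ABDOMINAL": 0.78, "ENGLISH": 0.93},
}
test_preds = {
    "CNN-only": {"ABDOMINAL": [0, 1, 0], "ENGLISH": [1, 1, 1]},
    "CNN-Highway-LSTM": {"ABDOMINAL": [1, 1, 0], "ENGLISH": [1, 0, 1]},
}
ensemble = select_per_criterion(val_scores, test_preds)
```

Selecting on test-set scores instead of held-out scores would leak test information into the model choice, which is why the sketch keeps selection and final evaluation on separate data.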

We further investigated the performance of CNN-Highway-LSTM and LSTM-Highway-LSTM on each selection criterion. As shown in Supplementary Table 4, LSTM-Highway-LSTM performs better than CNN-Highway-LSTM on all cohort selection criteria except ADVANCED-CAD and DRUG-ABUSE. Among the 13 criteria, LSTM-Highway-LSTM performs relatively poorly on ABDOMINAL, ALCOHOL-ABUSE, ASP-FOR-MI, DRUG-ABUSE, KETO-1YR, MAKES-DECISIONS, and MI-6MOS, with F1 scores lower than 0.8000.

DISCUSSION

In this study, we proposed a hierarchical neural network for cohort selection for clinical trials and evaluated it on the n2c2 2018 challenge corpus. The proposed method achieves promising performance but does not perform well on some cohort selection criteria, especially the imbalanced ones. Of the 7 criteria on which LSTM-Highway-LSTM has an F1 score lower than 0.8000, 6 are extremely imbalanced. Introducing class weights partly relieved the data imbalance problem on 5 of these 6 criteria, all except ALCOHOL-ABUSE, with an average F1 gain of more than 9 percentage points. For ALCOHOL-ABUSE, we checked the results and found that the clues were described in highly varied ways.

A possible reason why CNN-only outperforms LSTM-only is that some documents are very long. The n2c2 2018 corpus contains 3744 (288 × 13) documents (ie, instances) composed of 12 235 sentences (ie, clues); 770 of the documents do not contain any clue. The average document length is close to 100 words, and the maximum length exceeds 2000. This is also why we introduced a hierarchical architecture to represent documents. Once documents are split into sentences with an average length of 24 words, LSTM-Highway-LSTM performs better than CNN-Highway-LSTM, plausibly because LSTM represents such short sequences better than CNN does.
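The sketch below shows the input layout a hierarchical model consumes: instead of one flat sequence of about 100 (sometimes more than 2000) words, each document becomes a short stack of clue-length sequences. The function name, padding token, and truncation policy (keeping the earliest clues) are assumptions for illustration; the limits used in the actual implementation are not given in the text.

```python
def to_hierarchical(document, max_clues, max_words, pad="<pad>"):
    """Turn a document (a list of clue sentences) into a max_clues x max_words token grid.

    Each clue is whitespace-tokenized and truncated or padded to max_words tokens;
    the document is truncated or padded to max_clues rows. Keeping the earliest
    clues when truncating is an assumption. Documents without clues become
    all-padding grids.
    """
    grid = []
    for clue in document[:max_clues]:
        tokens = clue.split()[:max_words]
        grid.append(tokens + [pad] * (max_words - len(tokens)))
    while len(grid) < max_clues:
        grid.append([pad] * max_words)
    return grid


doc = ["to obs for ro mi", "bun and creatinine of 36 and 1.5"]
grid = to_hierarchical(doc, max_clues=3, max_words=4)
```

Each row is short enough for a sentence-level encoder, and the document-level LSTM then runs over only a handful of rows rather than hundreds of words.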

To further understand the errors of our model, we randomly selected and analyzed 20 misclassified examples. Most errors are caused by misunderstanding of clues, in the following 3 cases:

(1) Word ambiguity. For example, “cr” in (“2093/07/09,” “medication,” “toprol xl (metoprolol succinate extended release) 100mg tablet cr 24hr take 1.5 tablet(s) po qd”) is wrongly recognized as “creatinine” instead of “controlled release.”

(2) Negation. For example, “MI” should be negated in (“2104/03/15,” “health maintenance,” “to obs for ro mi”) because “ro” stands for “rule out.”

(3) Numbers. For example, it is difficult to determine whether “1.5” is high in (“2069/06/27,” “labs,” “bun and creatinine of 36 and 1.5, which is”) because no reference value for creatinine is provided.
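Case (2) is the kind of pattern that trigger-based negation detectors such as NegEx25 target. The following is a deliberately tiny NegEx-inspired sketch, not NegEx itself and not part of the authors' system: the trigger set, the 5-token window, and the function name are assumptions.

```python
import re

# Hypothetical single-token triggers; NegEx uses a much larger curated list.
PRE_NEGATION_TRIGGERS = {"no", "denies", "without", "ro", "r/o"}


def is_negated(sentence, term, window=5):
    """True if a trigger occurs within `window` tokens before the first mention of `term`."""
    tokens = re.findall(r"[a-z0-9/]+", sentence.lower())
    term = term.lower()
    if term not in tokens:
        return False
    position = tokens.index(term)
    preceding = tokens[max(0, position - window):position]
    text_before = " ".join(preceding)
    return bool(PRE_NEGATION_TRIGGERS.intersection(preceding)) or "rule out" in text_before


print(is_negated("to obs for ro mi", "MI"))  # True
```

Short abbreviations such as "ro" are themselves ambiguous, so a real system would need context checks to avoid trading negation errors for new word-ambiguity errors.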

There are also a few errors caused by incomplete dictionaries used for clue extraction, such as the dietary supplement dictionary: no clue can be extracted for patients taking supplements that are missing from this dictionary.

There are 3 major directions for future improvement: (1) searching for more effective methods to tackle imbalanced data, (2) using NLP techniques to better understand clues, and (3) expanding the incomplete dictionaries.

CONCLUSION

In this article, we proposed a 5-component hierarchical neural network for cohort selection for clinical trials. In this network, LSTM or CNN was used to represent sentences, a highway network was used to adjust information flow, a self-attention mechanism was used to reweight sentences, LSTM was used to represent documents in chronological order, and weighted cross entropy was used as the classification loss to relieve the class imbalance problem. Experiments on a benchmark data set show that the proposed method is effective for cohort selection for clinical trials, and that the highway network, the document-level LSTM, and the weighted cross entropy each contribute to its performance.

FUNDING

This work was supported in part by the National Natural Science Foundation of China (NSFC) (U1813215, 61876052, and 61573118), the Special Foundation for Technology Research Program of Guangdong Province (2015B010131010), the Strategic Emerging Industry Development Special Funds of Shenzhen (JCYJ20170307150528934 and JCYJ20180306172232154), and the Innovation Fund of Harbin Institute of Technology (HIT.NSRIF.2017052).

AUTHOR CONTRIBUTIONS

The work presented here was carried out in collaboration between all authors. YX, XS, and BT designed the methods and experiments. YX and XS conducted the experiment. All authors analyzed the data and interpreted the results. YX and BT wrote the paper. All authors approved the final manuscript.

PATIENT CONSENT

Obtained.

PROVENANCE AND PEER REVIEW

Not commissioned; externally peer reviewed.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

Conflict of interest statement

None declared.

REFERENCES

- 1. Nguyen AN, Lawley MJ, Hansen DP, et al. Symbolic rule-based classification of lung cancer stages from free-text pathology reports. J Am Med Inform Assoc 2010; 17 (4): 440–5.

- 2. Zheng T, Xie W, Xu L, et al. A machine learning-based framework to identify type 2 diabetes through electronic health records. Int J Med Inform 2017; 97: 120–7.

- 3. Garvin JH, DuVall SL, South BR, et al. Automated extraction of ejection fraction for quality measurement using regular expressions in Unstructured Information Management Architecture (UIMA) for heart failure. J Am Med Inform Assoc 2012; 19 (5): 859–66.

- 4. Zeng QT, Goryachev S, Weiss S, Sordo M, Murphy SN, Lazarus R. Extracting principal diagnosis, co-morbidity and smoking status for asthma research: evaluation of a natural language processing system. BMC Med Inform Decis Mak 2006; 6 (1): 30.

- 5. Uzuner Ö. Recognizing obesity and comorbidities in sparse data. J Am Med Inform Assoc 2009; 16 (4): 561–70.

- 6. Shivade C, Raghavan P, Fosler-Lussier E, et al. A review of approaches to identifying patient phenotype cohorts using electronic health records. J Am Med Inform Assoc 2014; 21 (2): 221–30.

- 7. Halpern Y, Horng S, Choi Y, Sontag D. Electronic medical record phenotyping using the anchor and learn framework. J Am Med Inform Assoc 2016; 23 (4): 731–40.

- 8. Penberthy L, Brown R, Puma F, Dahman B. Automated matching software for clinical trials eligibility: measuring efficiency and flexibility. Contemp Clin Trials 2010; 31 (3): 207–17.

- 9. Kho AN, Hayes MG, Rasmussen-Torvik L, et al. Use of diverse electronic medical record systems to identify genetic risk for type 2 diabetes within a genome-wide association study. J Am Med Inform Assoc 2012; 19 (2): 212–8.

- 10. Klompas M, Haney G, Church D, Lazarus R, Hou X, Platt R. Automated identification of acute hepatitis B using electronic medical record data to facilitate public health surveillance. PLoS One 2008; 3 (7): e2626.

- 11. Hebert PL, Geiss LS, Tierney EF, Engelgau MM, Yawn BP, McBean AM. Identifying persons with diabetes using Medicare claims data. Am J Med Qual 1999; 14 (6): 270–7.

- 12. Lehman L, Saeed M, Long W, Lee J, Mark R. Risk stratification of ICU patients using topic models inferred from unstructured progress notes. AMIA Annu Symp Proc 2012; 2012: 505–11.

- 13. Kim D, Shin H, Song YS, Kim JH. Synergistic effect of different levels of genomic data for cancer clinical outcome prediction. J Biomed Inform 2012; 45 (6): 1191–8.

- 14. Xu H, Fu Z, Shah A, et al. Extracting and integrating data from entire electronic health records for detecting colorectal cancer cases. AMIA Annu Symp Proc 2011; 2011: 1564.

- 15. Yu S, Liao KP, Shaw SY, et al. Toward high-throughput phenotyping: unbiased automated feature extraction and selection from knowledge sources. J Am Med Inform Assoc 2015; 22 (5): 993–1000.

- 16. Gottesman O, Kuivaniemi H, Tromp G, et al. The Electronic Medical Records and Genomics (eMERGE) Network: past, present, and future. Genet Med 2013; 15 (10): 761.

- 17. Kirby JC, Speltz P, Rasmussen LV, et al. PheKB: a catalog and workflow for creating electronic phenotype algorithms for transportability. J Am Med Inform Assoc 2016; 23 (6): 1046–52.

- 18. Mani S, Chen Y, Elasy T, Clayton W, Denny J. Type 2 diabetes risk forecasting from EMR data using machine learning. AMIA Annu Symp Proc 2012; 2012: 606.

- 19. Che Z, Cheng Y, Sun Z, Liu Y. Exploiting convolutional neural network for risk prediction with medical feature embedding. arXiv preprint arXiv:1701.07474; 2017.

- 20. Aronson AR. Effective mapping of biomedical text to the UMLS Metathesaurus: the MetaMap program. In: Proceedings of the AMIA Symposium. American Medical Informatics Association; 2001: 17–21.

- 21. Friedlin J, Overhage M, Al-Haddad MA, et al. Comparing methods for identifying pancreatic cancer patients using electronic data sources. AMIA Annu Symp Proc 2010; 2010: 237.

- 22. Cui L, Bozorgi A, Lhatoo SD, Zhang G-Q, Sahoo SS. EpiDEA: extracting structured epilepsy and seizure information from patient discharge summaries for cohort identification. AMIA Annu Symp Proc 2012; 2012: 1191.

- 23. Jain NL, Knirsch CA, Friedman C, Hripcsak G. Identification of suspected tuberculosis patients based on natural language processing of chest radiograph reports. In: Proceedings of the AMIA Annual Fall Symposium. American Medical Informatics Association; 1996: 542.

- 24. Savova GK, Masanz JJ, Ogren PV, et al. Mayo clinical Text Analysis and Knowledge Extraction System (cTAKES): architecture, component evaluation and applications. J Am Med Inform Assoc 2010; 17 (5): 507–13.

- 25. Chapman WW, Bridewell W, Hanbury P, Cooper GF, Buchanan BG. A simple algorithm for identifying negated findings and diseases in discharge summaries. J Biomed Inform 2001; 34 (5): 301–10.

- 26. Uzuner Ö, Goldstein I, Luo Y, Kohane I. Identifying patient smoking status from medical discharge records. J Am Med Inform Assoc 2008; 15 (1): 14–24.

- 27. Filannino M, Stubbs A, Uzuner Ö. Symptom severity prediction from neuropsychiatric clinical records: overview of 2016 CEGS N-GRID shared tasks track 2. J Biomed Inform 2017; 75: S62–S70.

- 28. Yang Z, Yang D, Dyer C, He X, Smola A, Hovy E. Hierarchical attention networks for document classification. In: Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. San Diego, California: Association for Computational Linguistics; 2016: 1480–9. doi:10.18653/v1/N16-1174.

- 29. Srivastava RK, Greff K, Schmidhuber J. Training very deep networks. In: Advances in Neural Information Processing Systems; 2015: 2377–85.

- 30. Bahdanau D, Cho K, Bengio Y. Neural machine translation by jointly learning to align and translate. In: International Conference on Learning Representations; 2015.

- 31. Tang B, Wu Y, Jiang M, Chen Y, Denny JC, Xu H. A hybrid system for temporal information extraction from clinical text. J Am Med Inform Assoc 2013; 20 (5): 828–35.
