Abstract

Objective

Electronic health records (EHRs) were expected to yield numerous benefits. However, early studies found mixed evidence of these benefits. We sought to determine whether widespread adoption of modern EHRs in the US has improved clinical care.

Methods

We studied hospitals reporting performance measures from 2008–2015 in the Centers for Medicare and Medicaid Services Hospital Compare database that also reported having an EHR in the American Hospital Association 2015 IT supplement. Using interrupted time-series analysis, we examined the association of EHR implementation, EHR vendor, and Meaningful Use status with 11 process measures and 30-day hospital readmission and mortality rates for heart failure, pneumonia, and acute myocardial infarction.

Results

A total of 1246 hospitals contributed 8222 hospital-years. Compared to hospitals without EHRs, hospitals with EHRs had significant improvements over time on 5 of 11 process measures. There were no substantial differences in readmission or mortality rates. Hospitals with CPSI EHR systems performed worse on several process and outcome measures. Otherwise, we found no substantial improvements in process measures or condition-specific outcomes by duration of EHR use, EHR vendor, or a hospital’s Meaningful Use Stage 1 or Stage 2 status.

Conclusion

In this national study of hospitals with modern EHRs, EHR use was associated with better process of care measure performance but did not improve condition-specific readmission or mortality rates regardless of duration of EHR use, vendor choice, or Meaningful Use status. Further research is required to understand why EHRs have yet to improve standard outcome measures and how to better realize the potential benefits of EHR systems.

Keywords: electronic health record, process measures, hospital readmission, hospital mortality, meaningful use

INTRODUCTION

The widespread adoption of electronic health record (EHR) systems has long been heralded as transformative for the health care industry.1–3 The HITECH Act, passed in 2009, authorized the spending of over $30 billion to expand EHR adoption. Since then, EHR installations have increased tremendously; between 2010 and 2014, the proportion of hospitals with a basic EHR system rose from 15.6% to 75.5%.4

The HITECH Act further required that EHR systems fulfill “Meaningful Use” criteria, standards representing those functionalities of EHR systems believed to be most promising for improving care delivery and coordination.3,5,6 Expected benefits from EHRs included eliminating medication administration errors, enhancing accessibility of patient data, improving clinical decision-making through reminders and automated decision support, and facilitating transitions of care, among many others.3,5–8

While health information technology interventions in general have had promising results,6,9 studies concentrating specifically on EHRs have been underwhelming. Indeed, early research examining associations between EHR adoption and clinical outcomes has not clearly demonstrated that EHRs have significantly improved care. While some studies reported positive associations between EHR usage and process of care measures,10–15 others demonstrated little to no relationship.16–18 Similarly, there have been mixed results in examining the association between EHR adoption and outcomes. Studies have shown either no relationship or a worsening of hospital readmission rates after implementing EHR systems.19–22 There are also conflicting results in studies examining the association of EHR use with mortality rates.19,20,22–24 However, the majority of these studies were done with data prior to passage of the HITECH Act and Meaningful Use certification, when hospitals had significantly less advanced and less standardized systems. As hospitals and EHR systems have continued to co-evolve, there has been only 1 recent study assessing the relationship between duration of EHR use and outcomes,24 and no contemporary studies assessing the association between duration of EHR use and processes of care. In addition, there are no prior studies evaluating whether working with a particular EHR vendor affects outcomes or process measures.

Given the large national investment in EHRs, it is important to understand whether modern versions of EHRs have impacted care and if so, which ones. Therefore, we examined the association between EHR usage, process of care measures, and hospital readmission and mortality rates for heart failure (HF), pneumonia, and acute myocardial infarction (AMI). We further investigated whether duration of EHR use, EHR vendor, and a hospital’s Meaningful Use status were associated with process of care measures or condition-specific outcomes.

MATERIALS AND METHODS

Sample and data sources

We studied a retrospective cohort of hospitals that reported performance measures in the CMS Hospital Compare database from 2008–2015 and had data on EHR system implementation from the American Hospital Association 2015 IT supplement, a database containing information on duration of EHR use and primary inpatient EHR vendor.20,25,26 We labeled data according to the last year in which they were predominantly gathered rather than the year they were reported due to delays in reporting. Outcomes were reported by CMS as a 3-year moving average. An outcome measure from 2010, for example, was the average of the outcome from 2007 to 2010.

Dependent variables

We studied all CMS process of care measures that were measured for at least 5 consecutive years and did not have missing data from 2009–2011 (a critical period when most EHRs were implemented).27,28 We limited our sample for each process measure to hospitals reporting that measure for at least 30 patients.10 Process measures were scored as the percentage of patients receiving the appropriate care except for the measure reporting median number of minutes before patients with suspected AMI received an ECG.
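The inclusion rules above can be sketched as a small filter. This is a minimal illustration rather than the authors' code: the function and constant names are hypothetical, and it assumes that "did not have missing data from 2009–2011" means the measure was reported in each of 2009, 2010, and 2011.

```python
# Hypothetical names; thresholds are the ones stated in the text.
REQUIRED_YEARS = {2009, 2010, 2011}   # assumed reading of "no missing data from 2009-2011"
MIN_CONSECUTIVE_YEARS = 5
MIN_PATIENTS = 30


def longest_consecutive_run(years):
    """Length of the longest run of consecutive calendar years."""
    longest = current = 0
    previous = None
    for year in sorted(set(years)):
        if previous is not None and year == previous + 1:
            current += 1
        else:
            current = 1
        longest = max(longest, current)
        previous = year
    return longest


def measure_is_eligible(reported_years):
    """A process measure qualifies if it covers 2009-2011 and spans >= 5 consecutive years."""
    years = set(reported_years)
    return REQUIRED_YEARS <= years and longest_consecutive_run(years) >= MIN_CONSECUTIVE_YEARS


def hospital_year_is_eligible(patient_count):
    """A hospital's value for a measure is kept only if it is based on >= 30 patients."""
    return patient_count >= MIN_PATIENTS


print(measure_is_eligible(range(2008, 2013)))   # five consecutive years covering 2009-2011
print(hospital_year_is_eligible(29))
```

Under these assumptions a measure reported for 2008–2012 qualifies, while one missing 2010 does not, however many other years it covers.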

Outcome measures were CMS risk-adjusted hospital 30-day readmission and mortality rates for HF, pneumonia, and AMI for years 2008–2015.

Key predictors of interest

Key predictors were whether or not a hospital had an EHR, number of years since EHR implementation, inpatient EHR vendor, and whether a hospital’s use of their EHR met Meaningful Use Stage 1 or Stage 2 criteria. These data were derived from the American Hospital Association IT supplement as well as publicly available reporting files for Meaningful Use certification. Based on EHR vendor prevalence, we distinguished among the top 5 companies (Epic, Meditech, Cerner, McKesson, and CPSI), which accounted for 88.6% of the EHRs in our sample, and then grouped all other vendors into ‘Other’.

Covariates

We adjusted for hospital characteristics including teaching status, ownership (private nonprofit, private for-profit, public), disproportionate share index (a marker of the size of the low-income population a hospital serves), bed size, urban or rural location, and Census region. These values were derived for each hospital for every year using CMS payment files. Since performance had large temporal trends, we controlled for year as a fixed effect. We did not use additional risk adjustment given that the outcomes were already risk-adjusted by CMS for each condition measure.

STATISTICAL ANALYSIS

A summary of our models and analyses is presented in Supplementary Table S1. Hospital characteristics were summarized by frequency and by mean and standard deviation when appropriate. Process measures and condition-specific hospital readmission and mortality rates were adjusted by hospital characteristics and then reported as average values with 95% confidence intervals. Some models additionally adjusted for outcome year, duration of EHR use, and/or hospital Meaningful Use Stage 1 certification status.

To establish the association of adopting an EHR with baseline performance after implementation as well as changes in performance with additional years of EHR use, we used an interrupted time series analysis, which is a quasi-experimental design to estimate intervention effects in nonrandomized settings. Segmented regression analysis allowed us to control for temporal trends in performance while also studying changes in response to implementing an EHR system.29 We used mixed effects linear regression models with fixed effects for year and hospital characteristics (teaching status, ownership, disproportionate share index, number of beds, urban or rural location, and Census region) and random effects by hospital, a method recommended for assessing hospital performance according to an appointed CMS committee.30

In summary, for hospital i in year t, hospital outcome or process measure Y(i, t) was modeled as

$$Y(i,t) = a + b \cdot \mathrm{EHR}(i,t) + c \cdot \mathrm{yearsOfEHR}(i,t) + d \cdot \mathrm{hospChar}(i,t) + u(i) + m(t) + e(i,t)$$

where EHR(i, t) = 1 if the hospital had an EHR and 0 if the hospital did not, yearsOfEHR is the number of years since EHR installation, vector hospChar denotes hospital characteristics, u(i) denotes hospital random effects (to capture time-invariant differences in characteristics of hospitals that could be correlated with EHR adoption and with outcomes), and m(t) denotes year fixed effects that capture national trends in outcomes. Here a is the intercept, d is the vector of coefficients on hospital characteristics, and e(i, t) is the residual error. We interpreted coefficient b as the baseline change in outcome that occurred within the first months of EHR implementation. We interpreted coefficient c as the change in outcome with each additional year of EHR usage.
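To make the interpretation of coefficients b and c concrete, the short sketch below computes the modeled difference between a hospital with an EHR and an otherwise identical hospital without one (same year, same hospital characteristics, same random effect). The function name is hypothetical, and the example coefficients are the estimates reported for HF discharge instructions in Table 2 (baseline +2.00, +0.51 per year); the sketch illustrates the arithmetic only and is not a re-estimation of the model.

```python
def adjusted_difference(b, c, years_of_ehr):
    """Modeled difference versus no EHR, all else equal: b + c * yearsOfEHR."""
    return b + c * years_of_ehr


# Example coefficients taken from the HF discharge instructions row of Table 2.
B_BASELINE = 2.00   # percentage points at implementation
C_PER_YEAR = 0.51   # additional percentage points per year of EHR use

for years in range(4):
    diff = adjusted_difference(B_BASELINE, C_PER_YEAR, years)
    print(f"{years} years of EHR use: {diff:+.2f} percentage points")
```

With these values the modeled difference grows from 2.00 percentage points at implementation to 3.53 percentage points after 3 years of use.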

For condition-specific readmission and mortality rates, since rates were reported by CMS as 3-year moving averages, we introduced an additional exposure variable to our models to account for the fact that, for some outcome years, the hospital had an EHR during only a portion of the 3-year period. For instance, if a hospital implemented an EHR in 2008, for an outcome reported in 2010, the outcome would reflect the average outcome from 2007 to 2010. In this case, the exposure variable would be two-thirds, since the hospital had an EHR during only two-thirds of the reported outcome period. This technique has been used in a prior study using CMS data.31 Process measures were reported annually and therefore did not require such an adjustment.
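The exposure variable described above can be sketched as follows. This is an illustrative calculation, not the authors' code: the function name is hypothetical, and it assumes whole-year timing in which the reporting window covers the 3 years ending in the outcome year and the EHR counts as present from its implementation year onward.

```python
def ehr_exposure_fraction(implementation_year, outcome_year, window_years=3):
    """Fraction of the reporting window during which the hospital had an EHR.

    Assumes the window spans the `window_years` years ending in `outcome_year`
    (for example 2007 to 2010 for an outcome labeled 2010).
    """
    exposed_years = outcome_year - implementation_year
    # Clamp to the window: no exposure before implementation, full exposure at most.
    exposed_years = max(0, min(window_years, exposed_years))
    return exposed_years / window_years


# The example from the text: EHR implemented in 2008, outcome reported for 2010.
print(ehr_exposure_fraction(2008, 2010))
```

A hospital that implemented its EHR before the window began gets an exposure of 1, and one that implemented it in or after the outcome year gets 0.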

To study the association of EHR vendor choice with performance, we added fixed effects terms for EHR vendor and included an interaction term between EHR vendor and the number of years since EHR implementation. Similarly, when modeling the effect of a hospital meeting Meaningful Use criteria, we included an indicator variable for whether a hospital’s EHR use met Meaningful Use criteria and an interaction term between this indicator variable and the number of years since EHR implementation. We felt that linear mixed effects models were adequate given that the outcomes were approximately normally distributed.
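As a rough illustration of the vendor terms described above, the sketch below builds the indicator and interaction columns for a single hospital-year. The column names are hypothetical, and treating Epic as the reference level follows the choice reported in the Results; this is only a sketch of the encoding, not of the model fitting itself.

```python
# Non-reference vendor levels; Epic is the reference category.
VENDORS = ["CPSI", "Cerner", "McKesson", "Meditech", "Other"]


def vendor_terms(vendor, years_of_ehr):
    """Vendor fixed-effect indicators and vendor-by-years interaction terms."""
    if vendor != "Epic" and vendor not in VENDORS:
        raise ValueError(f"unknown vendor: {vendor}")
    terms = {}
    for level in VENDORS:
        indicator = 1.0 if vendor == level else 0.0
        terms[f"vendor_{level}"] = indicator                       # baseline difference vs Epic
        terms[f"vendor_{level}_x_years"] = indicator * years_of_ehr  # difference in yearly trend vs Epic
    return terms


print(vendor_terms("CPSI", 3))
```

For an Epic hospital every term is zero, so the coefficient on each indicator reads as a baseline difference from Epic and the coefficient on each interaction reads as a difference in the per-year change.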

In studying the difference between hospitals with an EHR and those with no EHR, we limited our analysis to years prior to 2013, given that almost all hospitals had adopted an EHR by 2013. Including hospital data from 2013 onward would enrich the group of hospitals with EHRs with data from later years and therefore introduce additional time-related biases. Since Meaningful Use Stage 1 criteria were first measured in 2011, the models studying Meaningful Use Stage 1 used data from 2011 onward. Meaningful Use Stage 2 certification was first recorded in 2014, so models studying Meaningful Use Stage 2 used data from 2014 onward. All analyses looking at pneumonia mortality rates excluded 2015 data given an unexplained significant increase in mortality in that year. Analyses were performed in Stata version 14.1 (StataCorp, College Station, TX). Data were visualized using R software (version 3.4.1, Vienna, Austria).
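The year restrictions above can be summarized in a small helper. This is a sketch under stated assumptions: the comparison and outcome labels are hypothetical identifiers, and the overall study window of 2008–2015 is taken from the sample description.

```python
STUDY_YEARS = range(2008, 2016)  # 2008 through 2015 inclusive


def analysis_years(comparison, outcome=""):
    """Years of data used for a given comparison, following the stated restrictions."""
    if comparison == "ehr_vs_none":
        years = [y for y in STUDY_YEARS if y < 2013]    # years prior to 2013
    elif comparison == "mu_stage1":
        years = [y for y in STUDY_YEARS if y >= 2011]   # Stage 1 first measured in 2011
    elif comparison == "mu_stage2":
        years = [y for y in STUDY_YEARS if y >= 2014]   # Stage 2 first recorded in 2014
    else:
        raise ValueError(f"unknown comparison: {comparison}")
    if outcome == "pneumonia_mortality":
        years = [y for y in years if y != 2015]         # 2015 excluded for this outcome
    return years


print(analysis_years("ehr_vs_none"))
print(analysis_years("mu_stage1", "pneumonia_mortality"))
```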

RESULTS

Our sample included 8222 hospital-years of data from 2008 to 2015 from 1246 hospitals. Hospital characteristics are listed in Table 1. Certain analyses looked at subsets of this sample based on date as explained in the methods. The EHR vendor with the most hospital-years was Epic (26.1%), followed by Cerner (20.1%) and Meditech (15.9%). In the sample comparing hospitals that had installed an EHR versus those that had not, the mean duration of EHR use was 1.9 years (SD = 2.2 years). In the samples comparing hospitals that had installed EHRs meeting Meaningful Use criteria, 78.7% of hospitals had an EHR with Meaningful Use Stage 1 certification and 69.2% had an EHR with Meaningful Use Stage 2 certification.

Table 1.

Hospital characteristics for different subsamples by date range. Each of the 8222 data points represents a specific hospital for a specific year. Percentages represent the percent of hospital-years accounted for by a particular characteristic. Altogether there were 1246 unique hospitals, with each hospital contributing an average of 6.6 hospital-year data points. Data from 2008 to 2012 were used for comparing hospitals with an EHR versus hospitals without an EHR, given that nearly all hospitals had installed an EHR system by 2013. Data from 2011 to 2015 were used for comparing hospitals with an EHR meeting Meaningful Use Stage 1 criteria versus hospitals with an EHR that did not, given that Meaningful Use Stage 1 certification began in 2011. Data from 2014 to 2015 were used for comparing hospitals with an EHR meeting Meaningful Use Stage 2 criteria versus hospitals with an EHR that did not, given that Meaningful Use Stage 2 certification began in 2014.

| Sample Characteristics | 2008–2015 | 2008–2012 | 2011–2015 | 2014–2015 |
|---|---|---|---|---|
| Hospital-years | 8222 | 4482 | 5572 | 2937 |
| Number of unique hospitals | 1246 | 1242 | 1201 | 1183 |
| EHR Vendor | | | | |
| CPSI | 5.5% | 4.9% | 6.0% | 6.3% |
| Cerner | 20.1% | 19.9% | 19.7% | 20.4% |
| Epic | 26.1% | 22.8% | 27.9% | 30.1% |
| McKesson | 7.1% | 6.1% | 7.6% | 8.2% |
| Meditech | 15.9% | 14.7% | 16.4% | 17.4% |
| Other | 15.7% | 17.5% | 16.6% | 17.7% |
| None | 9.6% | 14.1% | 6.0% | 0% |
| Years since EHR installation (mean±SD) | 3.1±2.8 | 1.9±2.2 | 3.7±2.8 | 5.0±2.6 |
| Hospital meets Meaningful Use Stage 1 | 60.3% | 27.1% | 78.7% | 94.0% |
| Hospital meets Meaningful Use Stage 2 | 23.1% | 0% | 30.1% | 69.2% |
| Teaching | 39.8% | 40.5% | 39.0% | 39.1% |
| Ownership | | | | |
| Private nonprofit | 70.9% | 71.8% | 70.0% | 69.7% |
| Private for-profit | 7.6% | 6.9% | 8.3% | 8.4% |
| Public | 21.6% | 21.3% | 21.8% | 21.8% |
| Disproportionate share index (mean±SD) | 27.4±16.0% | 26.5±16.0% | 28.2±16.1% | 28.6±16.0% |
| Beds (mean±SD) | 231.1±205.1 | 233.3±204.3 | 228.2±204.3 | 228.7±207.3 |
| Rural | 24.8% | 25.3% | 24.7% | 23.9% |
| Region | | | | |
| East North Central | 19.1% | 19.1% | 19.0% | 19.0% |
| East South Central | 3.8% | 4.0% | 3.7% | 3.6% |
| Middle Atlantic | 14.8% | 14.9% | 14.8% | 14.7% |
| Mountain | 5.0% | 4.8% | 5.1% | 5.2% |
| New England | 3.6% | 3.8% | 3.5% | 3.5% |
| Pacific | 10.2% | 9.6% | 10.8% | 11.0% |
| South Atlantic | 15.5% | 15.7% | 15.3% | 15.3% |
| West North Central | 14.2% | 14.7% | 13.8% | 13.6% |
| West South Central | 13.8% | 13.4% | 14.0% | 14.2% |

Abbreviations: EHR, electronic health record; SD, standard deviation.

Hospitals with EHR compared to hospitals without EHR

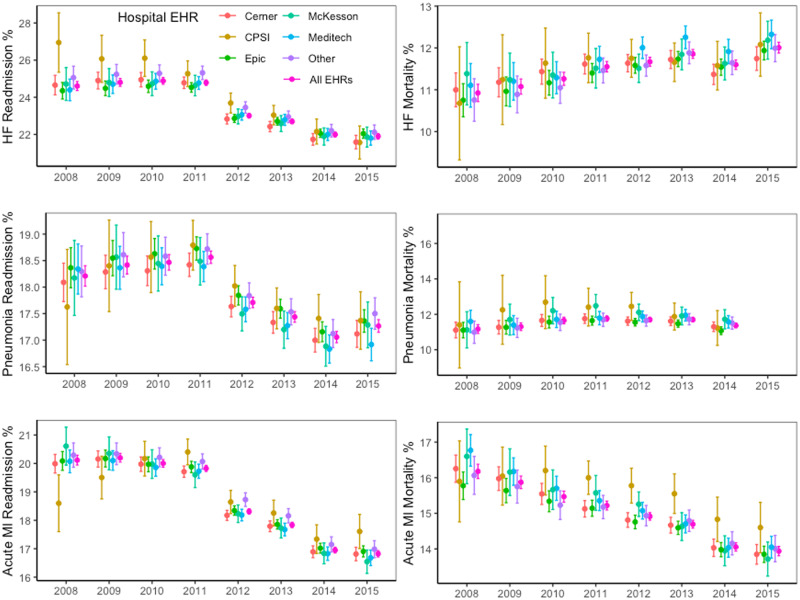

Compared to hospitals without an EHR, those with an EHR had significantly higher process of care scores for 5 out of 11 measures (Table 2). There were 5 measures that showed improvements which became greater with additional years of EHR use. For 1 measure, patients with AMI given aspirin at discharge, there was a decrease in performance with longer EHR use. For outcome measures, hospitals with an EHR did not have significantly different HF, pneumonia, or AMI mortality or readmission rates, either at baseline after implementation or after additional years of EHR use, compared with hospitals without EHRs (Figure 1, Table 3). The 1 exception was a small significant decrease in pneumonia mortality of 0.08% per year of EHR use.

Table 2.

Differences in performance on process of care measures (scored as percentage of patients receiving appropriate process or average number of minutes) between hospitals with an EHR system and those without an EHR both at baseline after implementation and after additional years of EHR use. Measures were adjusted for year and hospital characteristics.

| Process of Care Measure | Avg Adherence Rate in Hospitals with No EHR | Baseline Diff after EHR between Hospitals with EHR vs No EHR (%) | Increase per Additional Year of EHR Use (%) |
|---|---|---|---|
| Patients with chest pain or suspected AMI given aspirin within 24 hours of arrival | 95.7% | −0.07 | +0.04 |
| Patients with AMI given aspirin at discharge | 98.2% | −0.03 | −0.09b |
| Patients given PCI within 90 minutes of arrival | 90.8% | −0.02 | +0.20 |
| Average number of minutes before patients with chest pain or possible AMI got an ECG | 8.6 min | +0.23 min | +0.04 min |
| Patients given ACE inhibitor or ARB for left ventricular systolic dysfunction | 94.9% | +0.28 | +0.15b |
| Patients admitted with HF given an evaluation of left ventricular systolic dysfunction | 97.3% | +0.33a | +0.06 |
| Patients admitted with HF given discharge instructions | 88.8% | +2.00b | +0.51b |
| Pneumonia patients assessed and given influenza vaccination | 90.2% | +1.59b | +0.39b |
| Patients assessed and given pneumococcal vaccination | 91.1% | +1.60b | +0.43b |
| Patients given the most appropriate initial antibiotics for pneumonia | 92.5% | +0.12 | +0.07 |
| Patients whose initial emergency room blood culture was performed prior to administration of antibiotics | 95.8% | +0.62b | +0.13b |

Abbreviations: ACE, angiotensin-converting enzyme; AMI, acute myocardial infarction; ARB, angiotensin receptor blocker; ECG, electrocardiogram; EHR, electronic health record; HF, heart failure; min, minutes; PCI, percutaneous coronary intervention.

a P < .05

b P < .01

Figure 1.

Average condition-specific readmission and mortality rates for hospitals with EHRs compared to hospitals without EHRs stratified by year. Rates are 3-year moving averages ending with the displayed year. Rates were adjusted for years of EHR use and hospital characteristics. Error bars represent 95% confidence intervals.

Abbreviation: EHR, electronic health record.

Table 3.

Differences in hospital readmission and mortality rates both at baseline after EHR implementation and with each additional year of EHR use in hospitals with an EHR compared to those that did not have an EHR. Outcomes were adjusted for outcome year and hospital characteristics.

| | HF Readmission Rate (% Points) | PN Readmission Rate (% Points) | AMI Readmission Rate (% Points) | HF Mortality Rate (% Points) | PN Mortality Rate (% Points) | AMI Mortality Rate (% Points) |
|---|---|---|---|---|---|---|
| Has no EHR: baseline rate (reference) | 24.20% | 18.31% | 19.47% | 11.36% | 11.62% | 15.46% |
| Has EHR: baseline diff | +0.05 | −0.03 | +0.04 | +0.01 | +0.00 | −0.00 |
| Diff per additional year | −0.02 | −0.01 | −0.05 | −0.01 | −0.08a | −0.04 |

Abbreviations: AMI, acute myocardial infarction; EHR, electronic health record; HF, heart failure; diff, difference; PN, pneumonia.

a P < .05

Comparisons between EHR vendors

There were significant differences in process of care measures by vendor (Figure 2). Hospitals with CPSI scored worse on several process measures. No EHR performed consistently better. To study how these trends changed with additional years of EHR use, we looked at differences in process measures at baseline after EHR implementation and then with each additional year (Table 4). We used Epic as the reference EHR given that it had the largest market share. Compared to hospitals with Epic, those with CPSI scored significantly worse at baseline on 8 of 11 measures, but no EHR scored consistently better across multiple measures. EHRs with worse baseline hospital performance on a particular measure had comparatively more improvement on that measure over time. For example, at baseline, hospitals with CPSI had an 11.5% (P < .01) lower rate of HF patients receiving an evaluation of left ventricular systolic function compared to hospitals with Epic, but this rate improved by 1.47% more per year (P < .01) among hospitals with CPSI.

Figure 2.

Differences in process of care measures by EHR vendor. Measures were adjusted for outcome year, duration of EHR use, hospital Meaningful Use Stage 1 status, and hospital characteristics.

Abbreviations: diff, difference; EHR, electronic health record.

Table 4.

Differences in process of care measures both at baseline after EHR implementation and with each year of EHR use in hospitals with specific EHR vendors compared to hospitals with Epic. Measures were adjusted for outcome year, hospital Meaningful Use Stage 1 status and hospital characteristics.

| Quality of Care Process Measure | Epic Baseline | CPSI Baseline Diff | CPSI Diff per Year | Cerner Baseline Diff | Cerner Diff per Year | McKesson Baseline Diff | McKesson Diff per Year | Meditech Baseline Diff | Meditech Diff per Year | Other Baseline Diff | Other Diff per Year |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Patients with chest pain or suspected AMI given aspirin within 24 hours of arrival | 96.38% | −3.61b | +0.20 | −0.05 | +0.02 | −0.92 | −0.17 | +0.18 | +0.05 | +0.22 | −0.06 |
| Patients with AMI given aspirin at discharge | 98.76% | −6.13b | +0.69b | −0.39 | +0.09 | −1.02b | +0.19a | −0.29 | +0.04 | −0.78b | +0.17b |
| Patients given PCI within 90 minutes of arrival | 94.32% | −6.50 | +0.00 | −4.02b | +0.70b | −6.08b | +1.54b | −2.20a | +0.45 | −0.67 | −0.06 |
| Average number of minutes before patients with chest pain or possible AMI got an ECG | 8.38 min | +4.49b | −0.68b | −1.20 | +0.12 | +0.17 | +0.02 | +0.83 | −0.16 | −0.57 | +0.02 |
| Patients given ACE inhibitor or ARB for left ventricular systolic dysfunction | 95.83% | −0.30 | +0.05 | −0.79 | +0.12 | −1.78b | +0.04 | −1.23a | −0.07 | −0.34 | −0.19 |
| Patients admitted with HF given an evaluation of left ventricular systolic dysfunction | 99.05% | −11.52b | +1.47b | +0.21 | −0.01 | −2.90b | +0.23a | −1.82b | +0.29b | −2.74b | +0.27b |
| Patients admitted with HF given discharge instructions | 90.51% | −10.39b | +0.33 | −0.76 | −0.16 | −4.62b | +0.30 | −1.26 | −0.73b | −3.49b | −0.53a |
| Pneumonia patients assessed and given influenza vaccination | 90.10% | −4.84b | −0.53 | +2.41b | −0.37b | −2.12 | −0.17 | +0.37 | −0.13 | −0.54 | −0.45b |
| Patients assessed and given pneumococcal vaccination | 90.29% | −8.09 | +0.99a | +1.24 | −0.24 | −2.18 | +0.63 | −0.20 | +0.02 | −2.37a | +0.13 |
| Patients given the most appropriate initial antibiotics for pneumonia | 95.27% | −7.67b | +0.13 | +0.35 | +0.01 | −3.91b | +0.39b | −2.40b | +0.35b | −1.62b | −0.13 |
| Patients whose initial emergency room blood culture was performed prior to administration of antibiotics | 95.92% | −2.84b | +0.38 | −0.20 | +0.05 | −1.02a | +0.16 | −0.55 | +0.15 | −0.51 | −0.08 |

Abbreviations: ACE, angiotensin-converting enzyme; AMI, acute myocardial infarction; ARB, angiotensin receptor blocker; diff, difference; ECG, electrocardiogram; EHR, electronic health record; HF, heart failure; PCI, percutaneous coronary intervention.

a P < .05

b P < .01

We visualized the absolute adjusted readmission and mortality rates by outcome year across different vendors and in comparison to the overall group (Figure 3). CPSI was the only EHR that displayed significant differences and showed higher HF readmission rates but lower AMI readmission rates during early years as well as higher AMI mortality rates during later years.

Figure 3.

Average hospital condition-specific readmission and mortality rates by EHR vendor for each year. Rates were adjusted for duration of EHR use, hospital Meaningful Use Stage 1 status, and hospital characteristics. Error bars represent 95% confidence intervals.

Abbreviation: EHR, electronic health record.

Hospitals meeting Meaningful Use compared to those that did not

Hospitals with EHR use that met Meaningful Use Stage 1 criteria had mixed results on process measures. These hospitals had lower scores for evaluating systolic function in HF patients over time when compared to hospitals with EHRs not meeting these criteria (−0.18% per year, −0.26 to −0.09, P < .01) (Supplementary Table S2). They had higher baseline scores for assessment for flu vaccination and prescribing appropriate pneumonia antibiotics (+3.84%, 2.82–4.86, P < .01; +0.68%, 0.10–1.26, P = .02, respectively). However, these differences waned with time (−0.59% per year, −0.79 to −0.38, P < .01; −0.16% per year, −0.28 to −0.04, P = .01, respectively). EHR use that met Meaningful Use Stage 1 criteria was not significantly associated with baseline differences in HF, pneumonia, or AMI readmission or mortality rates nor with differences in rates over time (Figure 4, Supplementary Table S4).

Figure 4.

Average condition-specific outcome rates for hospitals that met Meaningful Use Stage 1 criteria compared to hospitals with EHRs that did not meet any Meaningful Use criteria (Stage 1 or Stage 2) stratified by year. Rates are 3-year moving averages ending with the displayed year. All rates were adjusted for years of EHR use and hospital characteristics. Error bars represent 95% confidence intervals.

Abbreviation: EHR, electronic health record.

There were fewer reported process measures in 2014 and 2015, the years during which hospitals began receiving certification for Meaningful Use Stage 2 (Supplementary Table S3). Hospitals that met Meaningful Use Stage 2 had higher baseline scores for assessment for flu vaccination (+1.73%, 0.41–3.05, P = .01), but these differences waned with time (−0.39% per year, −0.58 to −0.20, P < .01). Hospitals meeting Meaningful Use Stage 2 had higher AMI mortality rates at baseline that decreased with time (+0.24%, 0.05–0.42, P = .01; −0.05% per year, −0.08 to −0.02, P < .01) (Supplementary Table S5). Otherwise, hospitals meeting Meaningful Use Stage 2 criteria had no significant differences in HF, pneumonia, or AMI readmission or mortality rates either at baseline or over time.

DISCUSSION

In this national study of hospitals with modern, commercially available EHR systems, we found that hospitals with EHRs showed significant improvements in 5 of 11 process measures compared to hospitals without EHRs. However, we found no substantial association of EHR use with hospital 30-day readmission or mortality rates for HF, pneumonia, or AMI. This was true after implementation and did not change with additional years of EHR use. Hospitals with CPSI EHR systems performed worse on several process and outcome measures compared to hospitals with other EHRs. Otherwise, choice of EHR vendor and meeting Meaningful Use Stage 1 or 2 criteria had little or no association with improvement in hospital performance for either process of care or outcome measures.

This is 1 of the first studies to assess the effects of modern EHRs over time, after hospitals have had several years of usage experience. Prior studies examining the effects of EHRs on clinical care had largely negative findings, which could be explained by their early timing, since most of them used data from before the bulk of hospitals had adopted EHRs. Significant experience with EHRs may be required to accumulate a critical mass of patient information to fully realize the benefits of EHR core functions such as increased information accessibility, manipulation, and searchability. Additionally, EHRs have upfront implementation costs that may counteract their benefits. Hospitals may expend significant effort training clinicians and support staff, building infrastructure, creating workflow patterns, and changing hospital culture to encourage use of EHRs.32–34 A lag in benefits from EHRs therefore seems intuitive, and a recent study by Lin et al suggested that both duration of use and number of EHR functions may be associated with lower mortality for Medicare fee-for-service patients, although this effect was limited to nonteaching hospitals and smaller hospitals.24

We report clear evidence that EHRs have begun to be associated with significant improvements in hospital process measures. Prior studies showed that among hospitals with EHRs, more advanced EHR features may improve process measures.10,35 Our results further quantify these effects on process measures and show for the first time that implementing an EHR system may provide improvements in process measures with additional benefits that accrue with time. Compared to hospitals without EHRs, hospitals with EHRs were more likely to ensure that HF patients received mortality-reducing medications, had appropriate evaluations of systolic function, and were given discharge instructions. These hospitals were also more likely to administer influenza and pneumococcal vaccinations and draw blood cultures before administering antibiotics to patients with pneumonia. Such improvements are especially notable given that almost all of the process of care measures already had on average > 90% adherence rates in hospitals without EHRs, making further improvements generally more challenging.

It would seem intuitive that improvements in measures such as starting HF patients on appropriate medical therapy and giving such patients discharge instructions would improve readmission and mortality rates. However, we did not find any significant association between EHR use and condition-specific mortality or readmission rates to mirror the observed process measure improvements. There was only a very small decrease in pneumonia mortality rates with additional years of EHR use. Thus, the most recently available data continue to suggest that changes in outcomes associated with EHR use have been slow to develop or have not occurred at all for many hospitals.

In an attempt to further understand whether certain types of EHRs may be more effective in both improving process measures and possibly even affecting clinical outcomes, we stratified EHR systems by vendor. This is 1 of the first studies to do so and improves upon prior research that studied heterogeneous groups of EHRs by grouping together older, often homegrown and less standardized, EHR systems. Today, with the commercialization of EHR systems, we are able to study large numbers of hospitals that have implemented EHRs from the same vendor and compare vendor products to each other. EHRs vary substantially by vendor in their graphical displays, user interface interactions, system architecture, and functionalities, which is why accounting for these differences is important. Such differences may explain, in part, why analyses of single EHR components, such as computerized physician order entry or electronic medication administration records, have yielded mixed results when studied in different EHR systems.36–39 A recent study found that significant variations in EHR vendor design choices may translate to different rates of success in Meaningful Use certification.38

We found significant differences in process measures and outcomes between hospitals with certain EHR vendors. For example, CPSI hospitals had significantly lower rates of key HF and AMI process measures including evaluating left ventricular function, providing HF discharge instructions, prescribing aspirin for chest pain patients within 24 hours of arrival and for AMI patients at discharge. This, in turn, was mirrored by significantly higher HF readmission and AMI mortality rates in CPSI hospitals.

Overall, we found that for process measures and most outcome measures, EHR vendors with significantly outlying performance tended to trend towards the mean with time. We are not able to discern whether this is because EHRs with worse performance had more room for improvement, because of intrinsic differences between EHR vendors, or because of differences in EHR vendor implementation processes. More research is needed to understand whether vendor-specific EHR properties explain the differences in outcomes. For the specific case of CPSI, it should also be noted that the number of hospitals with CPSI in our sample was small (5.5% of sample), which could magnify outlier effects despite efforts to control for hospital characteristics. It also implies that performance by CPSI hospitals is unlikely to have significantly affected our overall lack of an observed association between having an EHR and outcomes.

We additionally sought to understand whether hospitals with EHRs that fulfilled Meaningful Use criteria performed better. Although specific EHR components that are required by Meaningful Use, such as computerized physician order entry and clinical decision support tools, have been shown to improve outcomes,20,26 research on Meaningful Use as a whole has revealed mixed and largely negative results.10,40 Our results are consistent with prior studies showing little to no association between meeting Meaningful Use Stage 1 and 2 criteria and process of care and outcome measures. The reason for this remains unclear. It may be that while individual EHR components are important, the implementation of many interventions together offsets their effects. Meaningful Use Stage 1 and 2 criteria may also be too elementary, and only with more demanding criteria, such as those in subsequent Meaningful Use stages, will there be a significant effect on process of care measures and outcomes. It may also be too early to see the full effects of Meaningful Use Stage 2 since, by the end of our observations in 2015, hospital EHRs had been certified for Meaningful Use Stage 2 for up to only 2 years.

In summary, despite demonstrating that EHRs are associated with improvements in hospital process measures, contemporary data continue to show a lack of association between EHR adoption and hospital condition-specific readmission and mortality rates even after years of EHR use. This lack of substantial improvement in outcomes persisted even after stratifying EHRs by vendor or by fulfillment of Meaningful Use Stage 1 or Stage 2 criteria. While it could be argued that readmission and mortality outcomes are difficult to improve, the implementation of EHR systems has often been conceived of as a revolutionary change to the health care system that is supposed to improve care in fundamental ways.3 Our results do not imply that EHRs cannot produce measurable improvements in clinical outcomes in the future. However, they do underscore that the benefits of EHR use should not be assumed. With the persistent lack of a clear association between EHR implementation and clinical outcomes even with contemporary, longitudinal data, renewed attention should be devoted to identifying barriers to care that may coincide with EHR use and to determining how to harness EHR capabilities more effectively. For example, prior studies have described unintended consequences of implementing EHR systems that range from decreasing face-to-face interactions between physicians and nurses to alert fatigue, excessive time spent on documentation, and over-reliance on computerized safety checks.41–44 It may be that these effects play a larger role than previously suspected and offset some of the expected positive effects of EHRs. A more nuanced definition of what constitutes Meaningful Use is likely to help further guide effective EHR use in the future, although more research is needed to define what those future Meaningful Use criteria should include.

There are several limitations to our study. The process and outcome measures used were based on Medicare claims, which may hamper full risk adjustment. However, Medicare data have the strength of allowing for analysis across many hospitals, which is required when studying EHRs since these systems exist on the hospital level. Furthermore, these measures remain important markers of performance, since they are used for policy decisions and Medicare reimbursements. Our study was limited to process measures and outcomes for 3 conditions. However, HF, pneumonia, and AMI together constitute a significant proportion of inpatient stays, each ranking within the top 10 most common hospital diagnoses.45 These conditions also have the highest quality longitudinal data, and we would reasonably expect that any significant EHR benefit that affects the care of many possible conditions would also affect the care of these 3 conditions. The Medicare outcome measures were 3-year moving averages, which could have diluted the magnitude of the observed effects of EHRs, although significant effects should still be detectable with time. As this is an observational study, there also may be selection bias as to which hospitals had the resources to implement EHR systems. However, we found that a diversity of hospitals had EHRs, and we controlled for standard hospital characteristics. Furthermore, since well-resourced hospitals might be expected to improve more with time, if this confounding influence were true, we would expect to see a divergence in performance by EHR adoption, which we did not observe. Given that widespread EHR adoption has already occurred, large-scale prospective studies of EHRs are also not possible. A limitation with respect to our analysis of EHR vendors was that we were unable to account for hospitals switching EHRs during the observed time period. However, given the significant costs associated with changing EHR vendors, hospital EHR switches were likely limited.32,33 Lastly, there may be confounding in our results based on time. Consistent with prior studies, we found improvements in process measures, condition-specific readmission rates, and AMI mortality rates with time as well as increases in HF and pneumonia mortality rates with time. We attempted to control for time’s effect by modeling the year as a fixed effect. If time continued to have an effect despite our adjustments, it may have produced false associations between duration of EHR usage and process or outcome measures, which is something we did not find.
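The dilution that a 3-year moving average can introduce may be illustrated with a minimal sketch. The annual rates below are hypothetical and are not study data, and an unweighted trailing mean is assumed purely for illustration; the way the Medicare measures are actually constructed may differ.

```python
def trailing_mean(values, window=3):
    """Unweighted trailing moving average.

    Years that do not yet have a full window of data are skipped, so the
    result has len(values) - window + 1 entries.
    """
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


# Hypothetical annual readmission rates (%): an abrupt 3-point drop
# begins in the fifth year (index 4). These numbers are invented.
annual = [25.0, 25.0, 25.0, 25.0, 22.0, 22.0, 22.0, 22.0]

# What a 3-year trailing average would report for years 3 through 8.
reported = trailing_mean(annual)

# Year-over-year changes in the reported series.
steps = [round(b - a, 6) for a, b in zip(reported, reported[1:])]

print(reported)
print(steps)
```

In this toy series, the reported value falls by about 1 point per year over 3 years rather than by 3 points at once, so the full effect appears only once the averaging window no longer includes years before the change.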

CONCLUSION

In this national study of hospitals with modern EHRs, EHR adoption was associated with better performance on process measures, but has yet to improve condition-specific outcomes. This is likely not attributable to insufficient time with EHR usage, large differences by EHR vendor, or lack of standardization according to Meaningful Use criteria. Future studies should examine whether this lack of an association with outcomes is due to unintended consequences endemic to EHRs or other external factors that may be diminishing the impact of EHRs. Understanding these factors will allow for the necessary improvements to fully realize the promise of EHR systems.

FUNDING

This work was supported by a Research Evaluation and Allocation Committee (REAC) grant from the University of California, San Francisco (UCSF) Clinical and Translational Sciences Institute (CTSI).

AUTHOR CONTRIBUTIONS

NY, RD, and GL contributed to the study design. NY and WB performed the statistical analysis and data visualization. NY wrote the manuscript with input from all authors.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

Conflict of interest statement

None declared.

REFERENCES

- 1. Institute of Medicine. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press; 2000. https://www.nap.edu/read/9728/chapter/1. Accessed February 19, 2017.

- 2. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press; 2001.

- 3. Institute of Medicine. Key Capabilities of Electronic Health Record. Washington, DC: National Academy Press; 2003.

- 4. The Office of the National Coordinator for Health Information Technology. EHR Vendors Reported by Providers Participating in Federal Programs. https://dashboard.healthit.gov/datadashboard/documentation/ehr-vendors-reported-CMS-ONC-data-documentation.php. Accessed February 19, 2017.

- 5. Blumenthal D. Launching HITECH. N Engl J Med 2010; 362 (5): 382–5.

- 6. Buntin MB, Jain SH, Blumenthal D. Health information technology: laying the infrastructure for national health reform. Health Aff (Millwood) 2010; 29 (6): 1214–9.

- 7. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006; 144 (10): 742–52.

- 8. Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med 2003; 163 (12): 1409–16.

- 9. Kruse CS, Beane A. Health information technology continues to show positive effect on medical outcomes: systematic review. J Med Internet Res 2018; 20 (2): e41.

- 10. Appari A, Eric Johnson M, Anthony DL. Meaningful use of electronic health record systems and process quality of care: evidence from a panel data analysis of U.S. acute-care hospitals. Health Serv Res 2013; 48 (2, pt 1): 354–75.

- 11. Kazley AS, Ozcan YA. Do hospitals with electronic medical records (EMRs) provide higher quality care? An examination of three clinical conditions. Med Care Res Rev 2008; 65 (4): 496–513.

- 12. Yu FB, Menachemi N, Berner ES, et al. Full implementation of computerized physician order entry and medication-related quality outcomes: a study of 3364 hospitals. Am J Med Qual 2009; 24 (4): 278–86.

- 13. McCullough JS, Casey M, Moscovice I, et al. The effect of health information technology on quality in U.S. hospitals. Health Aff (Millwood) 2010; 29 (4): 647–54.

- 14. Cebul RD, Love TE, Jain AK, et al. Electronic health records and quality of diabetes care. N Engl J Med 2011; 365 (9): 825–33.

- 15. Herrin J, da Graca B, Nicewander D, et al. The effectiveness of implementing an electronic health record on diabetes care and outcomes. Health Serv Res 2012; 47 (4): 1522–40.

- 16. Himmelstein DU, Wright A, Woolhandler S. Hospital computing and the costs and quality of care: a national study. Am J Med 2010; 123 (1): 40–6.

- 17. Jones SS, Adams JL, Schneider EC, et al. Electronic health record adoption and quality improvement in US hospitals. Am J Manag Care 2010; 16 (12 Suppl HIT): SP64–71.

- 18. Parente ST, McCullough JS. Health information technology and patient safety: evidence from panel data. Health Aff (Millwood) 2009; 28 (2): 357–60.

- 19. Lee J, Kuo Y-F, Goodwin JS. The effect of electronic medical record adoption on outcomes in US hospitals. BMC Health Serv Res 2013; 13: 39.

- 20. DesRoches CM, Campbell EG, Vogeli C, et al. Electronic health records’ limited successes suggest more targeted uses. Health Aff (Millwood) 2010; 29 (4): 639–46.

- 21. Patterson ME, Marken P, Zhong Y, et al. Comprehensive electronic medical record implementation levels not associated with 30-day all-cause readmissions within Medicare beneficiaries with heart failure. Appl Clin Inform 2014; 5: 670–84.

- 22. Yanamadala S, Morrison D, Curtin C, et al. Electronic health records and quality of care. Medicine (Baltimore) 2016; 95: e3332.

- 23. Furukawa MF, Raghu TS, Shao B. Electronic medical records, nurse staffing, and nurse-sensitive patient outcomes: evidence from California hospitals, 1998–2007. Health Serv Res 2010; 45 (4): 941–62.

- 24. Lin SC, Jha AK, Adler-Milstein J. Electronic health records associated with lower hospital mortality after systems have time to mature. Health Aff (Millwood) 2018; 37 (7): 1128–35.

- 25. Lammers EJ, McLaughlin CG, Barna M. Physician EHR adoption and potentially preventable hospital admissions among medicare beneficiaries: panel data evidence, 2010–2013. Health Serv Res 2016; 51 (6): 2056–75.

- 26. Jones SS, Heaton P, Friedberg MW, et al. Today’s “meaningful use” standard for medication orders by hospitals may save few lives; later stages may do more. Health Aff (Millwood) 2011; 30 (10): 2005–12.

- 27. Centers for Medicare and Medicaid Services. Overview of Specifications of Measures Displayed on Hospital Compare. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/Downloads/HospitalOverviewOfSpecs200512.pdf. Accessed April 5, 2019.

- 28. QualityNet. Archived Measure Resources for Inpatient Hospital Quality Measures. https://www.qualitynet.org/dcs/ContentServer?c=Page&pagename=QnetPublic%2FPage%2FQnetTier4&cid=1228775411587. Accessed April 5, 2019.

- 29. Wagner AK, Soumerai SB, Zhang F, et al. Segmented regression analysis of interrupted time series studies in medication use research. J Clin Pharm Ther 2002; 27 (4): 299–309.

- 30. Ash A, Fienberg S, Louis T, et al. Statistical Issues in Assessing Hospital Performance. Quantitative Health Sciences Publications and Presentations; 2012. https://escholarship.umassmed.edu/qhs_pp/1114/. Accessed December 6, 2018.

- 31. Ryan AM, Krinsky S, Adler-Milstein J, et al. Association between hospitals’ engagement in value-based reforms and readmission reduction in the hospital readmission reduction program. JAMA Intern Med 2017; 177 (6): 862.

- 32. Miller RH, Sim I. Physicians’ use of electronic medical records: barriers and solutions. Health Aff (Millwood) 2004; 23 (2): 116–26.

- 33. Heisey-Grove D, Danehy L-N, Consolazio M, et al. A national study of challenges to electronic health record adoption and meaningful use. Med Care 2014; 52 (2): 144–8.

- 34. Gabriel MH. The implementation and use of electronic health records to achieve meaningful use among critical access hospitals. Office of the National Coordinator for Health Information Technology Data Brief 2013; 12 https://www.healthit.gov/sites/default/files/cahdata_brief12.pdf

- 35. Jarvis B, Johnson T, Butler P, et al. Assessing the impact of electronic health records as an enabler of hospital quality and patient satisfaction. Acad Med 2013; 88 (10): 1471–7.

- 36. Sittig DF, Murphy DR, Smith MW, et al. Graphical display of diagnostic test results in electronic health records: a comparison of 8 systems. J Am Med Inform Assoc 2015; 22 (4): 900–4.

- 37. Ratwani RM, Fairbanks RJ, Hettinger AZ, et al. Electronic health record usability: analysis of the user-centered design processes of eleven electronic health record vendors. J Am Med Inform Assoc 2015; 22 (6): 1179–82.

- 38. Holmgren AJ, Adler-Milstein J, McCullough J. Are all certified EHRs created equal? Assessing the relationship between EHR vendor and hospital meaningful use performance. J Am Med Inform Assoc 2018; 25 (6): 654–60.

- 39. Black AD, Car J, Pagliari C, et al. The impact of eHealth on the quality and safety of health care: a systematic overview. PLoS Med 2011; 8 (1): e1000387.

- 40. Brice YN, Joynt KE, Tompkins CP, et al. Meaningful use and hospital performance on post-acute utilization indicators. Health Serv Res 2018; 53 (2): 803–23.

- 41. Harrison MI, Koppel R, Bar-Lev S. Unintended consequences of information technologies in health care—an interactive sociotechnical analysis. J Am Med Inform Assoc 2007; 14 (5): 542–9.

- 42. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc 2004; 11 (2): 104–12.

- 43. Ash JS, Sittig DF, Poon EG, et al. The extent and importance of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2007; 14 (4): 415–23.

- 44. Poissant L, Pereira J, Tamblyn R, et al. The impact of electronic health records on time efficiency of physicians and nurses: a systematic review. J Am Med Inform Assoc 2005; 12 (5): 505–16.

- 45. McDermott KW, Elixhauser A, Sun R. Trends in Hospital Inpatient Stays in the United States, 2005–2014. Healthcare Cost and Utilization Project (HCUP) Statistical Briefs 2017; 225 https://www.hcup-us.ahrq.gov/reports/statbriefs/sb225-Inpatient-US-Stays-Trends.jsp?utm_source=ahrq&utm_medium=en1&utm_term=&utm_content=1&utm_campaign=ahrq_en7_5_2017. Accessed 17 August 2018.
