Abstract

Objective

Our objectives were to identify educational interventions designed to equip medical students or residents with knowledge or skills related to various uses of electronic health records (EHRs), summarize and synthesize the results of formal evaluations of these initiatives, and compare the aims of these initiatives with the prescribed EHR-specific competencies for undergraduate and postgraduate medical education.

Materials and Methods

We conducted a systematic review of the literature following PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines. We searched for English-language, peer-reviewed studies across 6 databases using a combination of Medical Subject Headings and keywords. We summarized the quantitative and qualitative results of included studies and rated them according to the Best Evidence Medical Education (BEME) system.

Results

Our search yielded 619 citations, of which 11 studies were included. Seven studies involved medical students, 3 studies involved residents, and 1 study involved both groups. All interventions used a practical component involving entering information into a simulated or prototypical EHR. None of the interventions involved extracting, aggregating, or visualizing clinical data for panels of patients or specific populations.

Discussion

This review reveals few high-quality initiatives focused on training learners to engage with EHRs for both individual patient care and population health improvement. Comparing these interventions with the broad set of electronic records competencies expected of matriculating physicians shows that critical gaps remain in undergraduate and postgraduate medical education.

Conclusions

With the increasing adoption of EHRs and rise of competency-based medical education, educators should address the gaps in the training of future physicians to better prepare them to provide high-quality care for their patients and communities.

Keywords: electronic health records, electronic medical records, medical students, resident physicians, training

INTRODUCTION

The adoption of electronic health records (EHRs) is rapidly accelerating across the continent.1 In addition to playing a critical role in facilitating and delivering patient care, the record serves as a medicolegal instrument, and the data stored within it are used for other administrative activities, including coding and billing. Healthcare systems are also beginning to harness the information in these records using advanced analytical methods (eg, machine learning, natural language processing) for research and quality improvement.2 Given these applications and the EHR’s growing role in how health systems deliver and oversee care, current trainees need basic knowledge and skills to work effectively within this ecosystem to provide and improve patient care.2,3

Specifically, medical students and residents will need to become comfortable using the full potential of the EHR: capturing structured (eg, demographics, medications) and unstructured (eg, progress notes) data during the patient encounter (primary use), and harnessing and analyzing these aggregated data for individual and population health (secondary use).4 Although competencies in these areas have been outlined at the undergraduate and postgraduate levels, learners receive limited exposure to EHR training during their formal education.5,6 Moreover, inadequate EHR training can impair trainees’ ability to carry out their clinical responsibilities, increasing inefficiency and user frustration.7

While previous reviews on related topics exist, they are quite dated. A review of the scope of informatics training in family medicine residency programs, published 20 years ago, found only 6 articles discussing the impact of EHRs on trainees.8 Another review of the literature on information technology (IT) in medical education, published in 2009, found very little on introducing and teaching electronic medical records (EMRs) to medical students and residents.9 Other reviews have been more comprehensive and rigorous but have focused on the impact of health IT on the clinical encounter (eg, doctor-patient communication).10 Given that EHRs have become more common and more intensively used over the last decade, a review of the recent evidence is warranted.

Currently, the scope of educational initiatives in practice targeting knowledge and skill development in the primary and secondary use of EHRs is unknown. Recognizing that previous reviews uncovered limited literature, we sought to be comprehensive and methodologically rigorous in our approach.

We conducted a systematic review of the literature following the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines to (1) identify educational interventions designed to equip medical students or residents with knowledge or skills related to the primary and secondary uses of EHRs; (2) summarize and, where possible, synthesize the quantitative and qualitative results of any formal evaluation of these training initiatives; and (3) compare the aims or results of these initiatives with the prescribed EHR-specific competencies for undergraduate and postgraduate medical education.

MATERIALS AND METHODS

As all data were previously published and publicly available, this investigation did not require approval from the local institutional review board.

Literature search

We searched CINAHL, Cochrane Reviews, Embase, ERIC, Medline, and PsycINFO for relevant studies, with a specific focus on peer-reviewed journal articles, meta-analyses, observational studies, and randomized controlled trials. We restricted our search results to articles published in English but did not restrict publication dates. The end dates for our initial and updated searches were August 9, 2018, and March 13, 2019, respectively.

Search strategy

Table 1 outlines the terms used in our search strategy, which combined Medical Subject Headings terms and keywords, including medical students, interns, and internship; residents and residency; undergraduate and postgraduate medical education; electronic health records or electronic medical records; and curriculum and teaching. Recognizing that electronic records are classified according to their purpose and setting of use, we use the term EHR broadly to include any record system used to collect information about a patient’s health.11

Table 1.

Search terms for systematic review

| Component of review topic | MeSH and/or keyword search terms |
|---|---|
| Population | residents OR interns OR residency OR internship OR medical students OR education OR medical education |
| | AND |
| Intervention | electronic health record OR electronic medical records |
| | AND |
| “Outcome” | curriculum OR curricul* OR teaching OR teach* |

MeSH: Medical Subject Headings.
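The search combines concept groups in a standard way: terms within a group are alternatives joined with OR, and the groups are joined with AND. The sketch below assembles a generic Boolean string from the Table 1 terms. It assumes the terms in each row are alternatives, and it does not reproduce database-specific syntax (field tags, MeSH explosion, truncation symbols), which differs across CINAHL, Embase, Medline, and the other databases searched. `SEARCH_CONCEPTS` and `build_query` are hypothetical names.

```python
# Hypothetical sketch: combine the Table 1 concept groups into one Boolean string.
# Terms within a group are joined with OR; groups are joined with AND.
# Database-specific field tags and subject-heading syntax are NOT reproduced here.

SEARCH_CONCEPTS: dict[str, list[str]] = {
    "Population": [
        "residents", "interns", "residency", "internship",
        "medical students", "education", "medical education",
    ],
    "Intervention": ["electronic health record", "electronic medical records"],
    "Outcome": ["curriculum", "curricul*", "teaching", "teach*"],
}


def quote(term: str) -> str:
    """Quote multiword phrases; leave single words and truncated stems bare."""
    return f'"{term}"' if " " in term else term


def build_query(concepts: dict[str, list[str]]) -> str:
    """OR the terms inside each concept group, then AND the groups together."""
    groups = [
        "(" + " OR ".join(quote(term) for term in terms) + ")"
        for terms in concepts.values()
    ]
    return " AND ".join(groups)


if __name__ == "__main__":
    print(build_query(SEARCH_CONCEPTS))
```

In practice, each database would need its own translation of this string; the point of the sketch is only the OR-within, AND-between structure.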

We included both medical students and residents. Although their EHR privileges differ, students are increasingly completing clinical clerkships in which they are responsible for accessing EHRs to retrieve or enter information.12

We also hand-searched the bibliographies of all included articles.

Eligibility

We included studies if they described an educational intervention with the primary goal of exposing medical students or residents to the spectrum of activities involving the EHR (eg, entry, extraction, visualization, and analysis of data; navigation), outlined teaching methods, and evaluated the effect of the intervention on educational outcomes (ie, changes in knowledge, attitudes, or skills).

During level 1 and level 2 screening, we excluded studies focusing exclusively on practicing clinicians; studies of interventions delivered outside a learner-focused course, curriculum, or initiative (eg, learners who received training only because the department in which they were rotating was undergoing training); and studies using EHRs as a vehicle for teaching other concepts (eg, quality improvement, patient safety), assessing history-taking or physical examination skills, or improving performance in other areas (eg, discharge summaries, e-prescribing). We also excluded studies that did not evaluate outcomes related to the specific intervention tested (eg, testing the accuracy of notes following EHR training), as well as editorials, commentaries, conference abstracts or proceedings, reviews, and studies that were not peer reviewed.

Two reviewers (Z.H., N.P.) independently screened the abstracts identified by the literature search using a screening form (level 1 screening). To ensure that the criteria were applied consistently, both reviewers screened the same random sample of approximately 5% of articles and confirmed interrater reliability. Both reviewers then obtained and screened the full text of included articles to determine final inclusion (level 2 screening). A third reviewer (A.R.) was available to resolve discrepancies.
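The text states that interrater reliability was confirmed on a shared sample but does not name the statistic used. One common choice for two reviewers making include/exclude decisions is Cohen’s kappa, which corrects raw agreement for the agreement expected by chance from each reviewer’s label frequencies. The sketch below assumes that statistic; the decisions in the usage example are invented for illustration and are not data from this review.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters making categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items both raters labelled identically.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal frequency, summed over labels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / n**2
    if p_expected == 1:
        # Both raters used one identical label for every item; kappa is undefined,
        # so this sketch reports perfect agreement by convention.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


if __name__ == "__main__":
    # Hypothetical decisions on 10 abstracts (illustrative only).
    a = ["include", "include"] + ["exclude"] * 7 + ["include"]
    b = ["include", "exclude"] + ["exclude"] * 7 + ["include"]
    print(round(cohens_kappa(a, b), 3))
```

Here the reviewers agree on 9 of 10 abstracts, but because most abstracts are excluded, much of that agreement is expected by chance, so kappa is noticeably lower than 0.9.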

Data abstraction

Two reviewers (Z.H., N.P.) independently abstracted all data using a standardized data abstraction form. Consistent with previous reviews, we extracted curricular descriptors and key methodological features and classified learning outcomes using Kirkpatrick’s model (level 1: participation; level 2a: attitudes; level 2b: knowledge or skills; level 3: behavior; level 4a: organizational practice; and level 4b: benefit to patients).13
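One way to record the Kirkpatrick classification on a data abstraction form is as an ordered enumeration, so that the highest outcome level a study reached can be read off directly. The sketch below is a hypothetical representation (`Kirkpatrick` and `highest_level` are illustrative names, not part of the review) and assumes the six levels are ordinal in the order listed above.

```python
from enum import Enum


class Kirkpatrick(Enum):
    """Hypothetical encoding of the outcome levels listed in Data abstraction."""
    PARTICIPATION = "1"
    ATTITUDES = "2a"
    KNOWLEDGE_OR_SKILLS = "2b"
    BEHAVIOR = "3"
    ORGANIZATIONAL_PRACTICE = "4a"
    BENEFIT_TO_PATIENTS = "4b"


# Enum iteration follows definition order, which runs from lowest to highest level.
_RANK = {level: rank for rank, level in enumerate(Kirkpatrick)}


def highest_level(outcome_codes: list[str]) -> Kirkpatrick:
    """Return the highest level among coded outcomes, eg ["1", "2b"] -> 2b."""
    levels = [Kirkpatrick(code) for code in outcome_codes]  # ValueError on unknown codes
    return max(levels, key=_RANK.__getitem__)  # ValueError if the list is empty
```

Storing the codes as strings ("2a", "2b") keeps them identical to the labels used on the form, while the separate rank table supplies the ordering that the string values alone would not.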

Assessment of study quality

Two reviewers (Z.H., N.P.) independently assessed included studies using the Best Evidence Medical Education (BEME) review protocol, which has a 5-level rating system for strength of findings. BEME treats evidence-based teaching as a spectrum, with no available evidence at one end and detailed evidence at the other.14 To grade this evidence, the protocol considers the quality (eg, randomized controlled trial vs cohort study), utility (ie, generalizability to other settings), extent (eg, single vs multiple studies), strength, target, and setting (ie, context) of the evidence.14 The grades themselves also form a spectrum, from level I, describing studies without clear conclusions, to level V, indicating unequivocal results. In this review, ratings were guided by an assessment of the study results, including the strength of the intervention, number of enrolled learners, congruency between the desired learning outcome and the measure used to demonstrate change, validity of the measure, quantitative change and its statistical significance, and extent to which the intervention could be transferred and adopted without modification.

Analysis

Given the anticipated heterogeneity in curricula, we did not aggregate abstracted data. We summarized educational content, teaching methods, and learning outcomes using descriptive statistics.

RESULTS

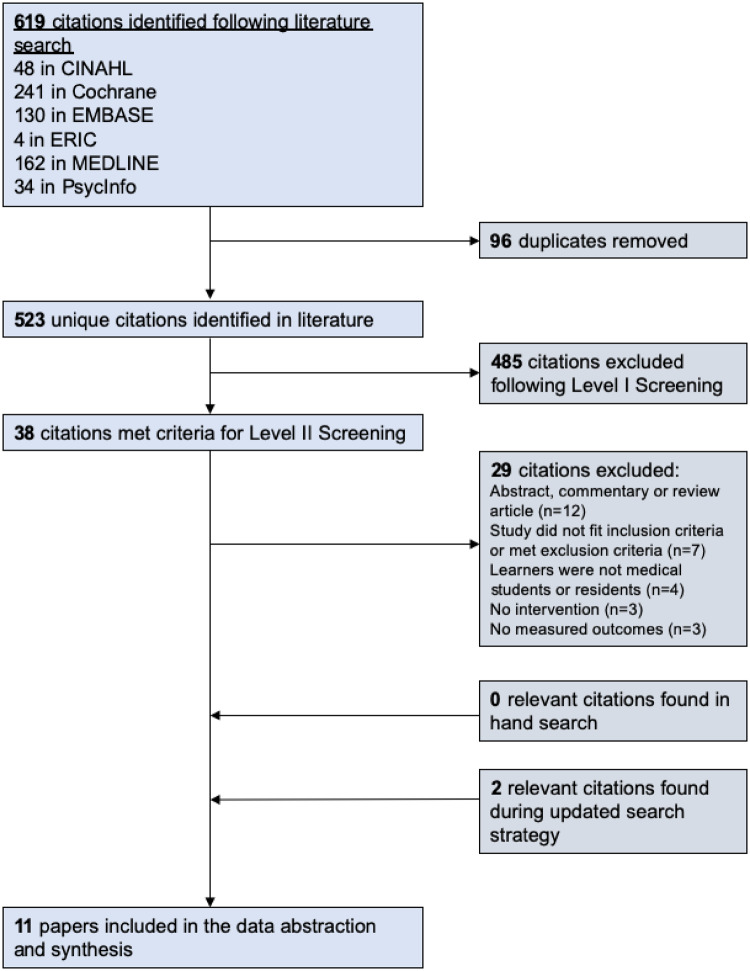

Our electronic search yielded 619 citations, of which 11 studies met inclusion criteria for qualitative synthesis. Figure 1 summarizes the process through which studies were excluded. During level 1 screening, 485 articles were excluded: 411 were unrelated to the topic, 33 did not test the effect of an intervention (eg, editorials, commentaries, or conference abstracts), 24 used EHRs as a vehicle for teaching other concepts, 10 focused on practicing clinicians, and 7 did not evaluate outcomes related to the specific intervention tested.
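The level 1 exclusion reasons can be checked against the reported total, and the remaining PRISMA counts can be derived from them. The sketch below does this arithmetic under a stated assumption: no records were removed as duplicates or added by hand-searching, which the text does not state either way, so Figure 1 remains the authoritative source for the derived numbers. `flow_counts` is a hypothetical helper.

```python
# Counts as reported in the Results text.
IDENTIFIED = 619
INCLUDED = 11
LEVEL1_EXCLUSIONS = {
    "unrelated to the topic": 411,
    "did not test an intervention": 33,
    "EHR used to teach other concepts": 24,
    "focused on practicing clinicians": 10,
    "outcomes unrelated to the intervention": 7,
}


def flow_counts(identified: int, level1_exclusions: dict[str, int], included: int) -> dict[str, int]:
    """Derive the remaining PRISMA boxes from the reported totals.

    Assumption (not stated in the text): no duplicates were removed and no
    records were added by hand-searching between screening stages.
    """
    excluded_level1 = sum(level1_exclusions.values())
    full_text = identified - excluded_level1
    excluded_level2 = full_text - included
    if excluded_level2 < 0:
        raise ValueError("more studies included than were assessed in full text")
    return {
        "excluded_level1": excluded_level1,
        "full_text_assessed": full_text,
        "excluded_level2": excluded_level2,
    }


if __name__ == "__main__":
    print(flow_counts(IDENTIFIED, LEVEL1_EXCLUSIONS, INCLUDED))
```

The five reasons sum to 485, matching the reported level 1 total; under the stated assumption, 134 articles would have been assessed in full text and 123 excluded at level 2.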

Figure 1.

PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) flow diagram.

Each included study involved learners at a single university.15–25 Ten (91%) programs were based at universities in the United States15–21,23–25 and 1 (9%) was based in Canada.22 Seven (64%) studies involved medical students,16–20,23,25 3 (27%) involved residents,15,22,24 and 1 (9%) involved both medical students and residents.21
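The percentages in this section are shares of the 11 included studies rounded to whole numbers. A small helper makes the rounding rule explicit. This is a sketch, assuming conventional round-half-up reporting; Python’s built-in `round()` instead rounds halves to the nearest even number (eg, 12.5 becomes 12), which is why the sketch uses `decimal`. `percent` is a hypothetical name.

```python
from decimal import Decimal, ROUND_HALF_UP


def percent(count: int, total: int) -> int:
    """Share of `total` as a whole-number percentage, rounding halves up."""
    if total <= 0:
        raise ValueError("total must be positive")
    exact = Decimal(count) * 100 / Decimal(total)
    return int(exact.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


# Learner groups across the 11 included studies, as reported in the text.
TOTAL_STUDIES = 11
groups = {"medical students": 7, "residents": 3, "both": 1}
assert sum(groups.values()) == TOTAL_STUDIES  # the groups are mutually exclusive

if __name__ == "__main__":
    for name, n in groups.items():
        print(f"{name}: {n} ({percent(n, TOTAL_STUDIES)}%)")
```

With this rule, 7 of 11 rounds to 64%, 3 of 11 to 27%, and 1 of 11 to 9%. Rounded shares are not guaranteed to sum to exactly 100%, although these three happen to.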

Educational interventions and features

Supplementary Table I provides an overview of each study and its characteristics, including the length, timing, descriptors, and content of the intervention. Programs varied considerably in length and scheduling, ranging from a single 60-minute self-paced module reviewing screenshots of different EHR sections and tasks20 to a 5-week simulated EHR curriculum involving a virtual patient and chart exercises.21 All interventions included a practical component in which learners entered information into a simulated or prototypical EHR, and 2 interventions also gave students an opportunity to practice entering information with standardized patients.16,23 Where interventions incorporated didactic methods, content generally focused on principles of documentation, querying the record for specific information (eg, lab results, medications), coding, adding new problems or medications, applying preventive guidelines, and ordering investigations or medications. None of the interventions involved extracting, aggregating, or visualizing clinical data for panels of patients or specific populations.

Study designs, learning outcomes, and evaluation methods

Supplementary Table II summarizes the study designs, learning outcomes, evaluation methods, and main findings by assigned BEME rating. Four (36%) studies employed some form of pre/posttest design,15,17,22,24 and 3 (27%) used a defined control group.15,16,23 Nine (82%) studies focused on changing skills15–19,22–25 and 2 (18%) on changing attitudes.20,21 Evaluation methods varied and included quizzes and surveys to assess knowledge and attitudes (27%),17,20,25 self-reported questionnaires to assess knowledge or skill acquisition (27%),15,22,24 assessments of learners’ electronic charts (18%),19,21 and objective structured clinical examinations with standardized patients (18%).16,23

Main findings

Where quizzes and surveys were used as evaluation methods, learners generally performed well after completing the educational activity and reported high levels of satisfaction with it.17,20,21,24 Among the 3 studies employing a control group, results were mixed. Two (18%) studies found no difference in assessed outcomes between learners exposed to the educational intervention and the control group.15,16 The third found that learners who participated in the educational activity scored higher on standardized patient assessments during an objective structured clinical examination.23

BEME ratings

One (9%) study was classified as level I (no clear conclusions),20 2 (18%) as level II (results are ambiguous but show a trend),18,21 5 (45%) as level III (conclusions can probably be based on results),15–17,22,24 and 3 (27%) as level IV (results are very clear and likely to be true).19,23,25 The level II studies either used a retrospective design18 or did not report student performance on assessments of skill acquisition.21 Level III studies often employed a prospective pre/posttest design and reported the results of their evaluation measures. In addition to using prospective posttest designs, all level IV studies involved more than 100 learners and used more objective measures to assess changes in learning outcomes among participants.19,23,25
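The grade assignments above can be cross-checked mechanically: every included study (references 15 to 25) should receive exactly one BEME grade, and the per-grade counts should match the text. The sketch below transcribes the assignments from this paragraph; `ASSIGNED` and `grade_distribution` are hypothetical names, and the check covers only internal consistency, not the grading judgments themselves.

```python
from collections import Counter

# BEME strength-of-findings grades, lowest (I) to highest (V).
BEME_GRADES = ("I", "II", "III", "IV", "V")

# Grade per included study, keyed by reference number, transcribed from the text.
# A dict cannot hold the same key twice, so no study can receive two grades.
ASSIGNED: dict[int, str] = {
    20: "I",
    18: "II", 21: "II",
    15: "III", 16: "III", 17: "III", 22: "III", 24: "III",
    19: "IV", 23: "IV", 25: "IV",
}


def grade_distribution(assigned: dict[int, str], included: set[int]) -> dict[str, int]:
    """Count studies per BEME grade after checking each included study is graded."""
    missing = included - set(assigned)
    extra = set(assigned) - included
    if missing or extra:
        raise ValueError(f"ungraded: {sorted(missing)}; not included: {sorted(extra)}")
    invalid = set(assigned.values()) - set(BEME_GRADES)
    if invalid:
        raise ValueError(f"unknown grades: {sorted(invalid)}")
    counts = Counter(assigned.values())
    return {grade: counts[grade] for grade in BEME_GRADES}  # Counter returns 0 if absent


if __name__ == "__main__":
    print(grade_distribution(ASSIGNED, set(range(15, 26))))
```

The result, 1, 2, 5, 3, and 0 studies at levels I to V, accounts for all 11 included studies; no study reached level V.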

DISCUSSION

We identified 11 studies describing educational interventions focused on training undergraduate or postgraduate medical learners in the uses of EHRs. Most targeted medical students and used a prospective posttest design with quantitative measures to assess skill acquisition. With the recent shift to competency-based medical education and a focus on core entrustable professional activities, a number of competencies regarding EHR use have been proposed.5,6,26 However, comparing the educational activities and learning outcomes identified by our review with these competencies reveals persistent gaps.

For example, before beginning clerkship, students are expected to be able to describe the components, benefits, and limitations of EHRs and the principles of managing and using aggregated electronic health information, including the tenets of electronic documentation and the differences between structured and unstructured data entry, and to articulate standards for recording, communicating, sharing, and classifying electronic health information in the context of a medical team.26 They are also expected to be able to identify how systems may generate inaccurate data, discuss how data entry affects direct patient care and healthcare policy, gather relevant data from EHRs, and assess the reliability and quality of these data.26

Building on these milestones, clinical clerks are expected to document and retrieve information from EHRs accurately and in a timely fashion; enter data in EHRs in ways that support clinical decision making, patient care, and tracking of outcomes; use decision support tools within the EHR; use outputs from an EHR to develop tailored resources for patients and families; and report deficiencies or errors in EHRs that may affect the integrity of health information.26 They are also expected to develop an awareness and working knowledge of health informatics through chart audits and research projects, describe examples of how the health system’s use of data is relevant to medical practice, and demonstrate use of structured data.26

In the context of these proposed competencies, our findings suggest critical gaps. Most of the EHR educational activities we reviewed targeted clinical clerks rather than first- and second-year medical students. Where interventions were designed for preclerkship students, they primarily addressed patient-centered use of the EHR and documentation, with no focus on secondary use (ie, aggregating, extracting, and appraising these data). Similarly, studies involving clerks primarily addressed documenting in or updating the EHR and applying best practices for EHR use during patient encounters (eg, sitting facing the patient, sharing the screen). None of the studies we reviewed involved learners using adjunctive tools (eg, decision support), creating patient resources, or auditing recorded information.

We were surprised by the small number of studies targeting residents, especially given the substantial time they spend interacting with the electronic record.27 We had anticipated that this review would reveal many studies targeting the development of EHR competencies among diverse groups of residents, especially in specialties where information retrieval and analysis are closely linked to the provision of comprehensive and continuous (eg, family medicine, internal medicine) or episodic (eg, emergency medicine) care. Instead, only 1 study involved internal medicine interns,21 and none involved emergency medicine trainees.

Despite their limited number, studies involving residents employed more robust educational interventions (eg, simulated EHRs with virtual patients) and focused on a greater diversity of topics (eg, maintaining an accurate EHR, ordering laboratory tests and imaging, ensuring the privacy and security of health information, coding and classifying information). Given these strengths, other postgraduate programs may consider basing new EHR training curricula on these existing interventions while incorporating more objective assessments of competency development, rather than relying on self-reported changes in knowledge and skills. The competency assessment tool for first-year residents developed by Nuovo et al7 is one potential method applicable to a range of specialties working in different clinical environments.

Others have explored barriers to competency development in this area among learners. Borycki et al11 described a shortage of health professional faculty with the health informatics competencies and experience needed to design curricula and teach students. Compounding this constraint are limited time and competing demands within undergraduate and postgraduate medical curricula, which make it difficult to incorporate learning events (eg, lectures, problem-based sessions) and experiential opportunities (eg, research projects). Because EHRs are often managed by hospital or health network departments whose primary focus is patient care rather than education, tailoring systems often requires time and money for reconfiguration, authorization, and maintenance.28 Finally, because students and residents train in a variety of settings, they may be over- or underexposed to different types of EHRs, making it difficult to develop fluency with key tasks.11,28

Limitations

Several limitations warrant caution in interpreting our results. First, as with any review, our search strategy focused on the published literature and may have missed relevant studies in both the peer-reviewed and grey literature. We attempted to mitigate this risk by using a broad set of Medical Subject Headings terms, keywords, and databases. We also acknowledge that institutions may offer EHR-related educational activities as part of orientation programming without having studied their impact or published the results of evaluations.

Our small sample of 11 studies is another limitation. It reflects the limited literature on educational interventions related to EHR use among medical students and residents that include an evaluation of learning outcomes. This paucity of studies also serves as a likely marker of little educational activity in this domain and underscores the importance of continued research. The substantial heterogeneity in study design, measurement instruments, and outcomes also precluded more in-depth quantitative analyses and comparisons across studies.

We also recognize that a significant number of trainees have been exposed to the EHR before their medical training, for example as scribes, and that including these individuals in studies may have affected the results. The included articles did not report learners’ prior training or experience, making it difficult to determine whether such exposure influenced the results of interventions. Although this limitation could not easily have been mitigated, it represents an important consideration for future work in this area.

CONCLUSION

Following a systematic review of the literature, we identified a limited number of studies describing educational interventions that train medical students and residents in the primary uses of EHRs. In the context of increasing EHR adoption, the use of advanced analytical methods to extract and analyze patient data stored in the electronic record, and competency-based medical education, critical gaps in the training of future physicians remain. Future work should seek to close these gaps by addressing barriers to EHR training, building on existing initiatives, and sharing best practices and successes.

AUTHOR CONTRIBUTIONS

AR conceptualized the review, developed the search strategy, and wrote the first draft of the manuscript. NP conducted the literature search. ZH and NP reviewed articles and extracted data under the guidance of AR. JN and BW advised on the conceptualization of the review, supervised development and execution of the search strategy, and provided feedback on drafts.

Supplementary Material

ACKNOWLEDGMENTS

The authors wish to thank Sandra Halliday for her assistance with developing the search strategy.

CONFLICT OF INTEREST STATEMENT

None declared.

REFERENCES

- 1. Henry J, Pylypchuk Y, Searcy T, Patel V. Adoption of Electronic Health Record Systems among U.S. Non-Federal Acute Care Hospitals: 2008-2015. 2016. https://www.healthit.gov/sites/default/files/briefs/2015_hospital_adoption_db_v17.pdf. Accessed August 11, 2019.

- 2. Mamdani M, Laupacis A. Laying the digital and analytical foundations for Canada's future health care system. CMAJ 2018; 190 (1): E1–2.

- 3. Moskowitz A, McSparron J, Stone DJ, Celi LA. Preparing a new generation of clinicians for the era of Big Data. Harv Med Stud Rev 2015; 2 (1): 24–7.

- 4. Fridsma DB. Health informatics: a required skill for 21st century clinicians. BMJ 2018; 362: k3043.

- 5. Hammoud MM, Dalrymple JL, Christner JG, et al. Medical student documentation in electronic health records: a collaborative statement from the alliance for clinical education. Teach Learn Med 2012; 24 (3): 257–66.

- 6. Englander R, Flynn T, Call S, et al. Toward defining the foundation of the MD degree: core entrustable professional activities for entering residency. Acad Med 2016; 91 (10): 1352–8.

- 7. Nuovo J, Hutchinson D, Balsbaugh T, Keenan C. Establishing electronic health record competency testing for first-year residents. J Grad Med Educ 2013; 5 (4): 658–61.

- 8. Jerant AF. Training residents in medical informatics. Fam Med 1999; 31 (7): 465–72.

- 9. Otto A, Kushniruk A. Incorporation of medical informatics and information technology as core components of undergraduate medical education - time for change! Stud Health Technol Inform 2009; 143: 62–7.

- 10. Crampton NH, Reis S, Shachak A. Computers in the clinical encounter: a scoping review and thematic analysis. J Am Med Inform Assoc 2016; 23 (3): 654–65.

- 11. Borycki E, Joe R, Armstrong B, Bellwood P, Rebecca C. Educating health professionals about the electronic health record (EHR): removing the barriers to adoption. Knowl Manag E-Learn 2011; 3 (1): 102. http://www.kmel-journal.org/ojs/index.php/online-publication/article/view/102/106

- 12. Foster LM, Cuddy MM, Swanson DB, Holtzman KZ, Hammoud MM, Wallach PM. Medical student use of electronic and paper health records during inpatient clinical clerkships: results of a national longitudinal study. Acad Med 2018; 93 (11S): S14–20.

- 13. Kirkpatrick DL. Evaluation of training. In: Craig RL, Bittel LR, eds. Training and Development Handbook. New York, NY: McGraw-Hill; 1967: 87–112.

- 14. Harden RM, Grant J, Buckley G, Hart IR. BEME Guide No. 1: best evidence medical education. Med Teach 1999; 21 (6): 553–62.

- 15. Zelnick CJ, Nelson DAF. A medical informatics curriculum for 21st century family practice residencies. Fam Med 2002; 34 (9): 685–91.

- 16. Morrow JB, Dobbie AE, Jenkins C, Long R, Mihalic A, Wagner J. First-year medical students can demonstrate EHR-specific communication skills: a control-group study. Fam Med 2009; 41 (1): 28–33.

- 17. Stephens MB, Williams PM. Teaching principles of practice management and electronic medical record clinical documentation to third-year medical students. J Med Pract Manage 2010; 25 (4): 222–5.

- 18. Wagner DP, Roskos S, Demuth R, Mavis B. Development and evaluation of a Health Record Online Submission Tool (HOST). Med Educ Online 2010; 15 (1): 5350.

- 19. Ferenchick GS, Solomon D, Mohmand A, et al. Are students ready for meaningful use? Med Educ Online 2013; 18 (1): 22495.

- 20. Gomes AW, Linton A, Abate L. Strengthening our collaborations: building an electronic health record educational module. J Electron Resources Med Libr 2013; 10 (1): 1–10.

- 21. Milano CE, Hardman JA, Plesiu A, Rdesinski RE, Biagioli FE. Simulated electronic health record (Sim-EHR) curriculum: teaching EHR skills and use of the EHR for disease management and prevention. Acad Med 2014; 89 (3): 399–403.

- 22. Shachak A, Domb S, Borycki E, et al. A pilot study of computer-based simulation training for enhancing family medicine residents' competence in computerized settings. Stud Health Technol Inform 2015; 216: 506–10.

- 23. Lee WW, Alkureishi ML, Wroblewski KE, Farnan JM, Arora VM. Incorporating the human touch: piloting a curriculum for patient-centered electronic health record use. Med Educ Online 2017; 22 (1): 1396171.

- 24. Stroup K, Sanders B, Bernstein B, Scherzer L, Pachter LM. A new EHR training curriculum and assessment for pediatric residents. Appl Clin Inform 2017; 8 (4): 994–1002.

- 25. Pereira AG, Kim M, Seywerd M, Nesbitt B, Pitt MB; Minnesota Epic101 Collaborative. Collaborating for competency: a model for single electronic health record onboarding for medical students rotating among separate health systems. Appl Clin Inform 2018; 9 (1): 199–204.

- 26. eHealth Competencies for Undergraduate Medical Education. 2017. https://www.infoway-inforoute.ca/en/component/edocman/resources/reports/clinical-adoption/3400-ehealth-competencies-for-undergraduate-medical-education?Itemid=101. Accessed April 24, 2019.

- 27. Wenger N, Mean M, Castioni J, Marques-Vidal P, Waeber G, Garnier A. Allocation of internal medicine resident time in a Swiss hospital: a time and motion study of day and evening shifts. Ann Intern Med 2017; 166 (8): 579–86.

- 28. Welcher CM, Hersh W, Takesue B, Stagg Elliott V, Hawkins RE. Barriers to medical students' electronic health record access can impede their preparedness for practice. Acad Med 2018; 93 (1): 48–53.
