Abstract

The rise of clinician burnout has been correlated with the increased adoption of electronic health records (EHRs). Some vendors have used data entry logs to measure the amount of time spent using the EHR and have developed metrics of provider efficiency. Initial attempts to utilize these data have proven difficult as it is not always apparent whether variations reflect provider behavior or simply the metric definitions. Metric definitions are also updated intermittently without warning, making longitudinal assessment problematic. Because the metrics are based on proprietary algorithms, they are impossible to validate without costly time–motion studies and are also difficult to compare across institutions and vendors. Clinical informaticians must partner with vendors in order to develop industry standards of EHR use, which could then be used to examine the impact of EHRs on clinician burnout.

Keywords: Electronic health record, provider efficiency, physician burnout

As physician burnout reaches epidemic proportions, health care delivery centers are seeking ways to stem the growing unrest among clinicians.1 Most providers are frustrated with the amount of time they spend in the electronic health record (EHR), especially time spent after hours and on the weekends.2 Increased burnout among physicians has been linked to the rise of the EHR and the increasing administrative burden placed on providers.3 While the EHR does not inherently create additional work for providers,4 it does provide a platform to more easily enforce regulatory requirements.5 Early programs, such as “Home4Dinner,” have demonstrated promise at decreasing burnout,6 and another focusing on decreasing “Pajama Time” used vendor EHR analytics tools that provide physician scorecards and dashboards.7 While encouraging, these pre- and postintervention studies are not definitive, and more investigation is needed to better quantify the impact of such programs. Because the EHR can track every user keystroke, click, and mouse mile, these data can be used to compare the EHR efficiency of clinicians and target low-efficiency users who might benefit from additional training or clinical decision support tools. EHRs, however, are complex and implemented differently in each institution. There are no industry-standard metrics to analyze and report provider time spent in the EHR, making it impossible to compare across vendor EHRs. Furthermore, in our experience, vendor-provided dashboards utilize proprietary algorithms that cannot be validated by the institutions using them. Health care systems would be wise to ensure they truly understand the vendor’s data before sharing it with clinicians.

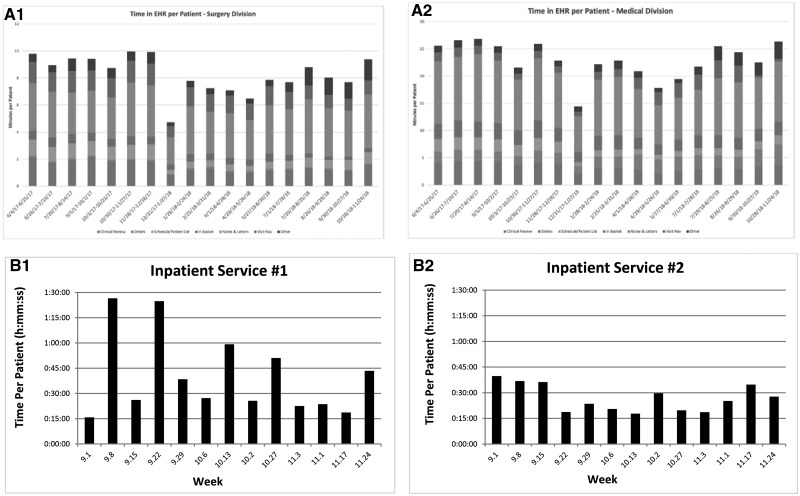

Measuring the EHR burden on providers is hard. Quantitative models such as time–motion analysis can be effective but are time-consuming and costly, and may be limited by bias and the inability to account well for multitasking. Qualitative models can measure perceived time in the EHR, but rely on surveys given to already burned-out providers. A more accurate and convenient model would be to utilize data from EHR vendors’ access logs to compare the documentation burden between providers. While this approach offers potential advantages, validating the time-in-EHR data proves to be difficult. In 2 vendor dashboards, time in the EHR per patient varies widely (Figure 1), a pattern that is mirrored across multiple specialties. This variation raises the question: is this signal or noise? Initial attempts to validate and interpret these data have proven difficult for a number of reasons, most of which involve the vendors’ proprietary algorithms, which manipulate the raw data.

Figure 1.

Comparison of 2 vendors’ reporting time in the electronic health record per patient seen. Panels A1 and A2 use data from 1 vendor to compare time spent in the EHR per patient in 2 ambulatory clinics, stratified by the EHR activity. Panels B1 and B2 use data from another vendor at a different institution and compare time spent in chart review and documentation per patient for 2 inpatient services.

In order to develop usable metrics of time spent using the EHR, vendors have developed algorithms to present the data in a meaningful way. This involves decisions on how to categorize tasks, assign time to a given task, when to stop the timer for a task, and how to normalize data for comparison between users. In the access logs, everything a user does, such as look at a report or enter data into a flowsheet, is assigned to a “task.” The algorithms then take each task and assign it to a larger category (eg, Orders or Clinical Review). However, because many of these tasks are vaguely named, it is very difficult to validate that they have been assigned to the correct category. For example, in 1 vendor’s logs, “notes viewed” is assigned to the category of “Notes and Letters” while “notes viewed in chart review” is assigned to “Clinical Review.” Outside of time–motion data, validating the accuracy of these assignments is nearly impossible. Vendor algorithms also must account for when a user is interrupted or walks away from the computer. Depending on how long the algorithm waits before timing out, it may under- or overestimate the time spent on a particular task. Without an industry standard, it is difficult to know whether or not one can compare time spent on a task across vendors.
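To make the timeout sensitivity concrete, the following is a minimal sketch, not any vendor's actual algorithm. It assumes a hypothetical log of event timestamps and a hypothetical rule in which each gap between consecutive events is credited as active time, capped at the idle timeout. The function name `active_seconds`, the timestamps, and the capping rule are all illustrative assumptions.

```python
from datetime import datetime, timedelta


def active_seconds(timestamps, idle_timeout_s):
    """Estimate active time from log timestamps (hypothetical rule).

    Each gap between consecutive events counts as active time, but a gap
    longer than the idle timeout is credited only up to the timeout.
    """
    ordered = sorted(timestamps)
    total = 0.0
    for prev, curr in zip(ordered, ordered[1:]):
        gap = (curr - prev).total_seconds()
        total += min(gap, idle_timeout_s)
    return total


# Hypothetical events at 0, 20, 50, and 350 seconds after 08:00.
start = datetime(2020, 1, 1, 8, 0, 0)
events = [start + timedelta(seconds=s) for s in (0, 20, 50, 350)]

print(active_seconds(events, idle_timeout_s=60))   # 110.0
print(active_seconds(events, idle_timeout_s=600))  # 350.0
```

In this toy log, the same four events yield 110 seconds under a 60-second timeout and 350 seconds under a 10-minute timeout, so the reported time differs more than 3-fold from the timeout choice alone.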

In order to normalize the data, vendor algorithms may calculate the “time per patient” so that providers with varying clinical loads can be compared. This model works well for outpatient or emergency department encounters, where all actions on that encounter can be reasonably ascribed to a single patient. However, the model breaks down in the inpatient venue, where a single patient encounter spans multiple days. One vendor tries to overcome this by calculating the time per day per patient documented on. While this may help estimate inpatient care burden, how does one account for cross-coverage during off hours? Overnight, a clinician might open a chart and enter an order for a patient but never actually document a note or even see the patient.
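The cross-coverage problem can be illustrated with a small sketch. This is one possible reading of “time per day per patient documented on,” not the vendor's actual calculation: total chart time is divided by the number of distinct patient-days on which the provider wrote a note. The record layout, provider names, and values below are hypothetical.

```python
from collections import defaultdict

# Hypothetical records: (provider, date, patient_id, seconds_in_chart, wrote_note)
chart_events = [
    ("day_md", "2020-01-01", "p1", 900, True),
    ("day_md", "2020-01-01", "p2", 600, True),
    ("night_md", "2020-01-01", "p1", 120, False),  # order entry only
    ("night_md", "2020-01-01", "p2", 180, False),  # order entry only
    ("night_md", "2020-01-01", "p3", 300, True),
]


def seconds_per_documented_patient_day(records):
    """Total chart time divided by patient-days with a note (assumed rule)."""
    total = defaultdict(float)
    documented = defaultdict(set)
    for provider, day, patient, seconds, wrote_note in records:
        total[provider] += seconds
        if wrote_note:
            documented[provider].add((day, patient))
    # None marks providers who documented on no patients that day.
    return {
        p: (total[p] / len(documented[p]) if documented[p] else None)
        for p in total
    }


result = seconds_per_documented_patient_day(chart_events)
print(result)
```

Under these assumptions the overnight clinician is credited with 600 seconds per documented patient, although only 300 seconds were spent on the one patient with a note. The order-entry time for the two cross-covered patients enters the numerator while those patients never enter the denominator.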

Another way 1 vendor normalizes EHR data is by adjusting the time spent per patient based on the provider’s EHR adoption. For example, if a resident writes a note and forwards it to an attending physician, the attending will likely spend considerably less time reviewing and cosigning the note than the resident did creating it. In an effort to compare the resident’s efficiency to the attending’s, each provider is assigned an adoption percentage based on the number of notes they personally authored. Based on the percentage of notes authored, a multiplier then estimates the time spent per patient if the provider authored all of their notes. While this strategy may be useful in normalizing the data and comparing clinicians within a health system, it can be problematic. Clinicians have different roles and responsibilities, so it doesn’t necessarily make sense to normalize and compare them all. Why does it matter how long it might have taken an attending to create a note from scratch if they’re not responsible for that? Moreover, how is the algorithm accounting for the attending’s expertise, typing skills, and other factors compared to the resident? When inquiring about these algorithms, the authors have found that vendors are either unwilling or unable to provide detailed information. This makes interpreting the resulting data problematic and, moreover, makes it impossible to compare clinicians across health systems with different vendors.
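Because the vendor's multiplier is not disclosed, the sketch below is purely illustrative. It assumes the simplest possible form, in which observed time per patient is divided by the fraction of notes the provider personally authored. The function name, parameters, and numbers are hypothetical, and the real algorithm may differ substantially.

```python
def adoption_adjusted_minutes(observed_minutes_per_patient, notes_authored, notes_total):
    """Scale observed time by the inverse of the adoption fraction.

    Assumed formula (not the vendor's): adjusted = observed / (authored / total).
    Returns None when the adoption fraction is undefined or zero.
    """
    if notes_total <= 0 or notes_authored <= 0:
        return None
    return observed_minutes_per_patient * notes_total / notes_authored


# Hypothetical attending: 4 minutes per patient, authored 2 of 10 notes.
attending = adoption_adjusted_minutes(4, notes_authored=2, notes_total=10)
# Hypothetical resident: 16 minutes per patient, authored 10 of 10 notes.
resident = adoption_adjusted_minutes(16, notes_authored=10, notes_total=10)

print(attending, resident)  # 20.0 16.0
```

Under this assumed multiplier, the attending appears slower than the resident (20 vs 16 minutes per patient) even though the attending never wrote those notes, which shows how a single scaling factor can reverse a comparison without accounting for role or expertise.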

In an effort to improve their data analytic tools, vendors will occasionally change their algorithm (typically without notice). This makes it difficult to follow these metrics longitudinally. If the vendors continue to keep their algorithms proprietary, the burden of validation will lie on the hospital systems themselves. However, the access logs are extraordinarily complex, and very few, if any, systems have the resources to create the queries needed.

By developing reports and dashboards that provide information on clinician EHR use, vendors have taken a big step toward helping us understand the needs of our users. However, in order to make those data meaningful and actionable, it is critical that institutions work with their vendors to validate the data within their EHR implementation. While we understand the vendors’ desire to keep their algorithms proprietary, we feel it is also critical that standard metrics of time spent using the EHR are developed and incorporated into vendor EHRs. Ideal provider efficiency metrics might also measure efficiency of common workflows (eg, ordering an antibiotic) and isolate time spent on purely administrative tasks. There are numerous large health information technology conferences where clinicians, vendors, and other health IT specialists could partner to develop such industry standards. This new transparency will achieve 3 important goals. First will be a much-improved, if not ideal, representation of the EHR’s burden on providers. Second, the vendors will be seen as eager to participate in the solution to EHR-related burnout and not just the problem. Third, this will open up the possibility of cross-platform benchmarks and targets for which all health care organizations can aim. Provider burnout is a real and growing problem, but understanding the EHR’s true burden and accurately measuring it over time will be an enormous, welcome step toward fixing it.

FUNDING

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

AUTHOR CONTRIBUTIONS

Both authors made substantial contributions to the conception, data acquisition and interpretation, drafting, and final approval of this perspective piece and take accountability for all aspects of the work.

CONFLICT OF INTEREST STATEMENT

None to declare.

REFERENCES

- 1. Gardner RL, Cooper E, Haskell J, et al. Physician stress and burnout: the impact of health information technology. J Am Med Informatics Assoc 2019; 26 (2): 106–14.

- 2. Gawande A. Why doctors hate their computers. The New Yorker, Annals of Medicine November 12, 2018 issue; 1–24.

- 3. Arndt BG, Beasley JW, Watkinson MD, et al. Tethered to the EHR: primary care physician workload assessment using EHR event log data and time-motion observations. Ann Fam Med 2017; 15 (5): 419–26.

- 4. Downing NL, Bates DW, Longhurst CA. Physician burnout in the electronic health record era: are we ignoring the real cause? Ann Intern Med 2018; 169 (1): 50–1.

- 5. Ash JS, Sittig DF, Poon EG, Guappone K, Campbell E, Dykstra RH. The extent and importance of unintended consequences related to computerized provider order entry. J Am Med Informatics Assoc 2007; 14 (4): 415–23.

- 6. Stevens L, DiAngi Y, Schremp J, et al. Designing an individualized EHR learning plan for providers. Appl Clin Inform 2017; 8 (3): 924–35.

- 7. Webber E, Schaffer J, Willey C, Aldrich J. Targeting pajama time: efforts to reduce physician burnout through electronic medical record (EMR) improvements. Pediatrics 2018; 142 (1 Meeting Abstract): 611.