Abstract

The rapid detection of the novel coronavirus disease COVID-19 helps prevent its spread and improves therapeutic outcomes. This article focuses on the rapid detection of COVID-19: we propose an ensemble deep learning model for detecting COVID-19 from CT images. A total of 2933 lung CT images from COVID-19 patients were obtained from previous publications, authoritative media reports, and public databases, and were preprocessed to obtain 2500 high-quality images. In addition, 2500 CT images of lung tumors and 2500 CT images of normal lungs were obtained from a hospital. Transfer learning was used to initialize the parameters of three pretrained deep convolutional neural network models, AlexNet, GoogleNet, and ResNet, which were then used to extract features from all images. Softmax was used as the classification algorithm of the fully connected layer. The ensemble classifier EDL-COVID was obtained via relative majority voting. Finally, the ensemble classifier was compared with the three component classifiers in terms of accuracy, sensitivity, specificity, F value, and Matthews correlation coefficient. The results showed that the ensemble model outperformed each component classifier on all of these evaluation indexes. The proposed algorithm can therefore better meet the requirements for rapid detection of COVID-19.

Keywords: COVID-19, Lung CT images, Deep learning, Ensemble learning

1. Introduction

A sudden outbreak of pneumonia of unknown cause began in December 2019 in Wuhan, China. On February 7, 2020, the Chinese National Health Commission tentatively named this virus-induced pneumonia "novel coronavirus pneumonia". Because of the high infection rate of the virus, the disease rapidly spread all over the world. On February 11, 2020, the World Health Organization (WHO) named it coronavirus disease 2019 (COVID-19) [1], and on March 11, 2020, the WHO declared COVID-19 a pandemic. As of April 18, 2020, according to the Global COVID-19 Real Time Query System of Johns Hopkins University [2], 2,179,992 cases of COVID-19 had been diagnosed in countries other than China, with a total of 150,212 deaths. The disease is caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). Common symptoms of infected patients are fever, cough, sputum production, shortness of breath, chest tightness, fatigue, and dizziness. Patients with severe pneumonia develop difficulty breathing or hypoxemia about one week after symptom onset, and rapid disease progression can lead to acute respiratory distress syndrome, septic shock, metabolic acidosis, and coagulopathy [3].

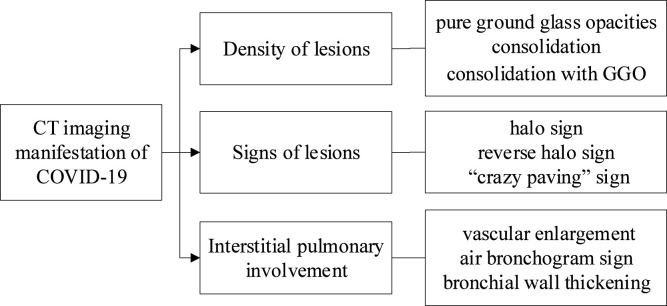

Since COVID-19 is highly contagious [4], [5], it is vital to detect the virus quickly and accurately in order to prevent its spread and provide timely treatment. Common detection methods for COVID-19 include nucleic acid reagent detection and CT examination. Clinical studies have shown high false-negative rates for nucleic acid reagent detection in suspected patients at the first test. In addition, nucleic acid detection places high demands on the testing environment and involves strict, time-consuming procedures, which makes it difficult to adopt on a large scale. Computed tomography (CT) detection of COVID-19 offers high sensitivity, a low misdiagnosis rate, and wide commercial availability [6], so it can complement nucleic acid reagent detection [7]. Early CT images of the lungs of COVID-19 patients mainly show ground-glass opacities [8], and a "crazy paving pattern" is evident [9]. A few days later, the density of the lesions increases significantly, and halo and reversed-halo signs appear [10]. As the disease worsens, bilateral lung lesions resemble "white lungs" [11]. In the later stage, the density of the lesions gradually decreases and the lesion area shrinks. Based on these lung CT characteristics, the disease can be divided into four stages: early, progressive, peak, and absorption [12]. Fig. 1 shows the main CT image features of COVID-19.

Fig. 1.

CT image features of COVID-19.

Table 1 shows the current clinical value of lung CT images on COVID-19.

Table 1.

The clinical value of chest CT in COVID-19.

| Author | Conclusion |
|---|---|
| Fan L [13] | Summarizes the CT imaging characteristics of COVID-19, its clinical categories, the CT imaging performance of children with COVID-19, and the CT imaging differences between COVID-19 patients and patients with other pulmonary inflammation. |
| Iwasawa T [14] | Ultra-high-resolution CT (U-HR-CT) can identify the terminal bronchioles in normal lungs. U-HR-CT can be used to detect abnormal lung volume reduction, which is essential for the early diagnosis and timely treatment of critical illness in COVID-19 patients. |
| Song F [15] | The most common feature of COVID-19 on CT images is pure ground-glass opacity (GGO). If a patient shows GGO in the peripheral and posterior lungs on chest CT, together with cough and/or fever, a history of epidemic exposure, and a normal or decreased white blood cell count, COVID-19 infection is highly suspected. |
| K. Wang [16] | Summarizes the location, distribution, morphology, and density of the lesions in CT images of 114 COVID-19 patients. SpO2 and lymphocyte counts can reflect lung inflammation. The diagnostic sensitivity and accuracy of spiral CT were higher than those of nucleic acid detection, so this method can be applied to the early diagnosis and treatment of COVID-19 patients. |
| Huanhuan Liu [17] | Chest CT features of pregnant women with COVID-19 pneumonia were atypical, and the CT images suggested that the lungs of pregnant patients were more susceptible to the disease. The CT features of children were non-specific, so children should be diagnosed in combination with other diagnostic methods. |
| Agostini A [18] | Patients need to be imaged multiple times, which raises concerns about radiation exposure, and CT images are affected by motion artifacts. The authors performed ultra-low-dose, dual-source, fast CT imaging on 10 patients with confirmed COVID-19; this method provided a reliable diagnosis while reducing motion artifacts and radiation dose. |

COVID-19 has now spread all over the world. With the sharp increase in the number of COVID-19 patients, existing medical resources and diagnostic capabilities are insufficient. In addition, staff density in hospitals in the core epidemic areas has increased, along with the risk of cross-infection. The development of computer-aided diagnosis models for COVID-19 based on lung CT images is thus increasingly important. According to the National Health Commission of the People's Republic of China (2020), "Diagnosis and protocol of COVID-19", trial version 6 [19], CT detection is not only one of the diagnostic criteria for COVID-19 but also important for its treatment. Ai, T. [20] found that chest CT had a higher sensitivity than RT-PCR for diagnosing COVID-19, so chest CT may be considered a primary tool for COVID-19 detection in epidemic areas. Himoto Y. [21] pointed out that CT images can distinguish COVID-19 from other similar respiratory diseases. Lin Li [22] proposed COVNet, a deep learning model that distinguishes COVID-19 from community-acquired pneumonia (CAP) in chest CT images: the CT images were first segmented with U-Net, and COVNet then distinguished between COVID-19 and CAP. Wang [23] proposed a fully automatic deep learning system for COVID-19 diagnosis and prognosis based on CT image analysis. They first used DenseNet121-FPN to segment the lungs in chest CT images and then proposed a novel COVID-19Net for COVID-19 diagnosis and prognosis. This system can categorize patients into low- and high-risk groups according to disease severity and can automatically identify the lesion area. Computer-aided diagnosis of COVID-19 that combines deep learning with lung CT images can thus play an important role in quickly classifying and identifying COVID-19, improving diagnostic efficiency, reducing doctors' workload, and optimizing the use of medical resources.

2. Basic knowledge

2.1. Convolutional neural networks

Convolutional neural networks (CNNs) are multi-layer networks composed of alternating convolutional layers for feature extraction and down-sampling (pooling) layers for feature processing. Fig. 2 shows the structure of a typical CNN. CNNs are perceptron-based models [24] that can automatically extract features from images, which has made them a research hotspot. Their advantage is that they can take raw images directly as input, avoiding excessive image preprocessing. Local receptive fields, weight sharing, and pooling reduce model complexity while making full use of the local and global information of the image, which gives CNNs a degree of robustness to translation, rotation, and scaling. Classic CNNs include AlexNet, GoogleNet, and the residual neural network (ResNet).
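
As a concrete illustration of local receptive fields, weight sharing, and pooling, the following is a minimal NumPy sketch of one convolution, ReLU, and max-pooling stage on a toy single-channel image. The input, the 2 × 2 kernel, and the function names are illustrative assumptions; this is not the implementation used in this study, which was built in MATLAB.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray, stride: int = 1) -> np.ndarray:
    """Single-channel 2-D cross-correlation ('convolution' in CNN libraries), no padding.

    Every output value depends only on a kernel-sized patch of the input
    (local receptive field), and the same kernel is reused at every position
    (weight sharing).
    """
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

image = np.arange(36, dtype=float).reshape(6, 6)   # toy 6 x 6 "image"
kernel = np.array([[-1.0, 0.0], [0.0, 1.0]])       # toy 2 x 2 kernel
features = max_pool(relu(conv2d_valid(image, kernel)))
print(features.shape)  # (2, 2)
```

The 6 × 6 input yields a 5 × 5 feature map, which pooling reduces to 2 × 2; the same four kernel weights produced all 25 convolution outputs.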

Fig. 2.

Convolutional neural network structure diagram.

2.1.1. AlexNet

AlexNet [25] was designed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton and won the 2012 ImageNet competition. It has eight learned layers: five convolutional layers (interleaved with three max-pooling layers and two local response normalization layers) followed by three fully connected layers, with about 60 million parameters in total.

Fig. 3 shows the specific network parameters. Each input image is scaled to 256 × 256, square patches of 224 × 224 are randomly cropped from it, and these are fed in as three RGB channels. Because of GPU limitations at the time, Krizhevsky et al. trained AlexNet on two GPUs in parallel, which is why the hidden layers in the figure are drawn as two parallel streams. The first five layers of AlexNet are convolutional layers.

Fig. 3.

AlexNet network structure diagram.

Taking the first layer as an example, it generates 96 feature maps of 55 × 55 nodes, each produced by a convolution kernel of size 11 × 11 with a stride of four. After convolution filtering, the output of the convolutional layer passes through a ReLU activation function, then through local response normalization and max-pooling down-sampling, and is passed to the next convolutional layer. A three-layer fully connected network is added after the five convolutional layers as a classifier that maps the high-dimensional convolutional features to a class label. The final fully connected layer outputs a 1000-dimensional response, corresponding to the 1000 image categories to be classified.
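
The 55 × 55 map size follows from the usual output-size formula ⌊(n + 2p − k)/s⌋ + 1 for input size n, kernel size k, stride s, and padding p. A small Python check, assuming that formula: with a 224 × 224 crop, an 11 × 11 kernel, and stride 4, the result is 55 only with 2 pixels of padding (or, equivalently, a 227 × 227 input, as many reimplementations use); without padding it is 54.

```python
def conv_output_size(n_in: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (n_in + 2 * padding - kernel) // stride + 1

# AlexNet first convolutional layer: 11 x 11 kernel, stride 4.
print(conv_output_size(227, 11, 4))             # 55  (227 x 227 input, no padding)
print(conv_output_size(224, 11, 4, padding=2))  # 55  (224 x 224 input, padding 2)
print(conv_output_size(224, 11, 4))             # 54  (224 x 224 input, no padding)
# Overlapping 3 x 3 max pooling with stride 2 on the 55 x 55 maps.
print(conv_output_size(55, 3, 2))               # 27
```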

2.1.2. GoogleNet

GoogleNet [26] is a 22-layer network built from a cascade of basic Inception modules. Fig. 4 shows the structure of GoogleNet. Within an Inception module, convolution kernels of three different sizes (1 × 1, 3 × 3, and 5 × 5) extract features at different scales from the output of the previous layer, and the results are combined and passed to the next layer. The 1 × 1 convolutions output fewer channels than the previous layer and are mainly used for dimensionality reduction: placing them before the 3 × 3 and 5 × 5 convolutions reduces the amount of computation in those layers and avoids the large computational cost that would otherwise come with increasing the network scale. After the features of the four branches (including a pooling branch) are concatenated, the next layer can extract more useful features at different scales.
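
To see why the 1 × 1 reduction matters, the sketch below counts multiply-accumulate operations for a 5 × 5 branch with and without a 1 × 1 reduction layer in front of it. The feature-map size and channel counts are assumptions chosen for illustration (they are not taken from this paper), and stride 1 with "same" padding is assumed.

```python
def conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """Multiply-accumulate operations of a k x k convolution (stride 1, 'same' padding)."""
    return h * w * c_out * k * k * c_in

H = W = 28       # assumed spatial size of the incoming feature map
C_IN = 192       # assumed number of input channels
C_OUT = 32       # assumed number of 5 x 5 output channels
C_REDUCE = 16    # assumed number of 1 x 1 reduction channels

# 5 x 5 convolution applied directly to all 192 input channels.
direct = conv_macs(H, W, C_IN, C_OUT, 5)
# 1 x 1 reduction to 16 channels, then the 5 x 5 convolution on the reduced map.
reduced = conv_macs(H, W, C_IN, C_REDUCE, 1) + conv_macs(H, W, C_REDUCE, C_OUT, 5)

print(direct, reduced, round(direct / reduced, 1))  # 120422400 12443648 9.7
```

Under these assumed sizes, the reduction cuts the cost of the 5 × 5 branch by almost a factor of ten while producing an output of the same shape.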

Fig. 4.

GoogleNet network structure diagram.

2.1.3. Residual neural network

The residual neural network (ResNet) is composed of convolutional layers for feature extraction and pooling layers for feature processing. It addresses the degradation and vanishing-gradient problems of very deep networks: as a plain convolutional network gets deeper, its gradients gradually vanish and the parameters of the shallow layers can no longer be updated effectively. Shortcut connections let gradients flow directly to earlier layers during backpropagation, which keeps the parameters updating and avoids the vanishing-gradient problem, making deep models easier to optimize. After the input image passes through several convolution and pooling operations, classification is performed by the fully connected layers. The shortcut connections implement identity mapping [27], which ensures that network performance does not degrade as layers are added, since each block only needs to learn new features on top of its input. Because the identity mapping adds no extra parameters or computation to the network, it can accelerate training and improve the training effect.
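
A minimal NumPy sketch of the shortcut idea, y = F(x) + x, using fully connected weight layers instead of convolutions to keep it short. It follows the variant without a ReLU after the addition, so that an all-zero last layer makes the block an exact identity map; the layer sizes are arbitrary assumptions. Because the shortcut has no weights, the block has exactly the parameters of F, and the derivative of y with respect to x contains an identity term, which is what lets gradients reach the shallow layers.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

def residual_function(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """F(x): two weight layers with a ReLU in between (toy dense version)."""
    return relu(x @ w1) @ w2

def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """y = F(x) + x. The identity shortcut adds no parameters of its own."""
    return residual_function(x, w1, w2) + x

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))     # 4 samples with 8 features each (toy sizes)
w1 = rng.normal(size=(8, 8))
w2 = np.zeros((8, 8))           # F(x) = 0, so the block reduces to the identity map

y = residual_block(x, w1, w2)
print(np.allclose(y, x))  # True
```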

2.2. Transfer learning

Transfer learning [28] is a machine learning method that uses existing knowledge to solve problems in different but related fields. It relaxes two basic assumptions of traditional machine learning, namely that training and test data are independent and identically distributed and that enough labeled training samples are available, so that existing knowledge can be transferred to a target field with no, or only a small amount of, labeled sample data. Such transfer of knowledge is common in human learning. The more factors two fields share, the easier transfer learning is; otherwise it becomes more difficult, and negative transfer, which harms performance in the target domain, can even occur. In short, the technique addresses the problem of insufficient training samples in the target domain by transferring knowledge acquired in related source domains.

According to whether the samples in the source and target domains are labeled and whether the tasks are the same [29], transfer learning can be divided into inductive, transductive, and unsupervised transfer learning. According to what is transferred, methods can be divided into feature representation transfer, instance transfer, parameter transfer, and relational knowledge transfer. According to whether the feature spaces of the source and target domains are the same, transfer learning can be divided into homogeneous and heterogeneous transfer learning.
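
Parameter transfer, as used for initialization in this study, typically means reusing all pretrained weights as initial values and replacing the 1000-class ImageNet output layer with one sized for the new task (three classes here). The framework-free sketch below illustrates that idea with a dictionary of NumPy arrays; the layer names, shapes, and the small-Gaussian initialization of the new head are hypothetical choices, not the networks or settings used in the experiments.

```python
import numpy as np

def transfer_parameters(pretrained: dict, head: str, num_classes: int,
                        rng: np.random.Generator) -> dict:
    """Parameter transfer: copy every pretrained layer as its initial value,
    except the classifier head, which is re-initialized for num_classes outputs."""
    head_keys = {f"{head}.weight", f"{head}.bias"}
    params = {name: w.copy() for name, w in pretrained.items() if name not in head_keys}
    in_features = pretrained[f"{head}.weight"].shape[1]
    params[f"{head}.weight"] = rng.normal(0.0, 0.01, size=(num_classes, in_features))
    params[f"{head}.bias"] = np.zeros(num_classes)
    return params

rng = np.random.default_rng(0)
# Hypothetical "pretrained" weights: one conv layer plus a 1000-class ImageNet head.
pretrained = {
    "conv1.weight": rng.normal(size=(96, 3, 11, 11)),
    "conv1.bias": np.zeros(96),
    "fc.weight": rng.normal(size=(1000, 256)),
    "fc.bias": np.zeros(1000),
}
model = transfer_parameters(pretrained, head="fc", num_classes=3, rng=rng)
print(model["fc.weight"].shape)  # (3, 256)
```

All transferred layers start from the pretrained values and are then fine-tuned on the target data; only the new head starts from scratch.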

2.3. Ensemble learning

Ensemble learning [30] methods mainly include bagging, boosting, and stacking. Ensemble learning can significantly improve the generalization ability of a learning system. Common methods for generating base classifiers fall into two categories. The first applies different types of learning algorithms to the same data set [31]; the base classifiers obtained in this way are usually different and are called heterogeneous classifiers. The second applies the same learning algorithm to different training sets; the base classifiers obtained in this way are called homogeneous classifiers. The strategies for combining classifiers mainly include averaging, voting, and learning-based methods, and the combination method is usually chosen according to the purpose of the ensemble. For regression, the predictions of the individual learners are usually averaged or weighted-averaged; for classification, the individual classification results are combined by voting to obtain the final result. Voting methods are divided into absolute majority voting and relative majority voting. In absolute majority voting, a class is output as the final result only if more than half of the individual learners predict it. In relative majority voting, the class predicted by the largest number of individual learners is output as the final result, even if it receives fewer than half of the votes.
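
The two voting rules can be sketched in a few lines of Python. The tie-breaking rule in `relative_majority` (the label that appears first among the votes wins) is an assumption made for illustration, since the text does not specify how ties are resolved.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence

def absolute_majority(votes: Sequence[Hashable]) -> Optional[Hashable]:
    """Return the label predicted by more than half of the learners, or None if there is none."""
    label, count = Counter(votes).most_common(1)[0]
    return label if count > len(votes) / 2 else None

def relative_majority(votes: Sequence[Hashable]) -> Hashable:
    """Return the label with the most votes (plurality).

    Counter.most_common keeps first-encountered order among equal counts,
    so ties go to the label that appears first in `votes` (an assumption).
    """
    return Counter(votes).most_common(1)[0][0]

print(absolute_majority(["COVID", "Tumor", "COVID"]))   # COVID
print(absolute_majority(["A", "A", "B", "B", "C"]))     # None
print(relative_majority(["A", "A", "B", "B", "C"]))     # A
```

With five learners voting A, A, B, B, C, no label has more than half of the votes, so absolute majority voting gives no result while relative majority voting returns A.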

3. Ensemble deep learning model for novel COVID-19 on CT images

Several newer methods have been used for image classification, for example enhanced learning, GA-SVM [32], and Dense-MobileNet models [33]. We propose an ensemble deep learning model for detecting COVID-19 from CT images. The model is outlined as follows:

(1) Data collection. A total of 2933 lung CT images of COVID-19 patients were obtained from previous publications, authoritative media reports, and public databases [34], and were preprocessed to obtain 2500 high-quality CT images. In addition, 2500 lung tumor CT images and 2500 normal lung CT images were obtained from the General Hospital of Ningxia Medical University in China. Fig. 5 shows examples of the lung CT images collected for this study.

Fig. 5.

Lung CT images.

(2) Sample set partition. Sample_Lung denotes the lung CT image sample set, with a sample size of 7500. According to the image type (NormalLung, LungTumor, COVID), Sample_Lung is divided into three sample subsets, Sample_NormalLung, Sample_LungTumor, and Sample_COVID, each with 2500 samples.

(3) Resize. Sample_Lung ← resize(Sample_Lung), i.e., all images are resized to a common size (64 × 64; see Section 4.3).

(4) The training and test sample sets are constructed with 5-fold cross-validation on the three sample subsets Sample_NormalLung, Sample_LungTumor, and Sample_COVID. A partition algorithm divides each subset into five equal parts of 500 samples, giving five cross-validation folds; in each fold, one part of each subset is used for testing and the other four for training.
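
Step (4) can be sketched as a per-class (stratified) 5-fold split. The shuffling with a fixed seed and the label encoding 0/1/2 are assumptions for illustration; the text only says that a partition algorithm divides each subset into five parts of 500 samples.

```python
import numpy as np

def stratified_folds(labels: np.ndarray, k: int = 5, seed: int = 0):
    """Split each class into k equal parts; yield (train_idx, test_idx) for each fold."""
    rng = np.random.default_rng(seed)
    parts_per_class = []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))  # shuffled indices of this class
        parts_per_class.append(np.array_split(idx, k))
    for f in range(k):
        # Part f of every class is the test set; the remaining parts form the training set.
        test = np.concatenate([parts[f] for parts in parts_per_class])
        train = np.concatenate([p for parts in parts_per_class
                                for i, p in enumerate(parts) if i != f])
        yield train, test

# Hypothetical encoding: 0 = normal lung, 1 = lung tumor, 2 = COVID-19 (2500 images each).
labels = np.repeat([0, 1, 2], 2500)
folds = list(stratified_folds(labels))
train, test = folds[0]
print(len(train), len(test))  # 6000 1500
```

Each fold then has 2000 × 3 = 6000 training and 500 × 3 = 1500 test images, and every image is used for testing exactly once across the five folds.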

(5) Generate individual classifiers through transfer learning.

The parameters of the pretrained AlexNet, GoogleNet, and ResNet networks are used as the initialization parameters of the AlexNet, GoogleNet, and ResNet models, respectively.

(6) AlexNet_Softmax, GoogleNet_Softmax, and ResNet_Softmax are trained on the training sample set Sample_Lung_TrainingSet to obtain the individual classifiers.

(7) Ensemble the EDL-COVID classifier. The three individual classifiers are integrated with the relative majority voting method.

Fig. 6 shows the algorithm flow chart.

Fig. 6.

Algorithm flow chart of this model.

4. Experimental results and analysis

4.1. Experimental environment

Software environment: Windows 10 operating system, MATLAB R2019a.

Hardware environment: the hardware platform used for the simulation experiment was an Intel (R) Core (TM) i5-7200U CPU @ 2.50 GHz 2.70 GHz, 4.0 GB RAM, and 500 GB hard disk.

4.2. Evaluation index

The performance of the models is measured with accuracy, sensitivity, specificity, F-score, and the Matthews correlation coefficient (MCC), defined as follows:

Accuracy is the most common evaluation index. The higher the accuracy, the better the classifier performance. The formulation is as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Sensitivity and specificity measure the classifier’s ability to recognize positive and negative examples, respectively. The larger the value, the higher the recognition performance. The formulation is as follows:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{2}$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{3}$$

The F-score is the weighted harmonic mean of precision and recall and is used to balance the two. It is defined as follows:

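$$F = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \quad \mathrm{Precision} = \frac{TP}{TP + FP}, \quad \mathrm{Recall} = \frac{TP}{TP + FN} \tag{4}$$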

MCC is a correlation coefficient between the actual and predicted classifications. It takes true positives, true negatives, false positives, and false negatives into account, which makes it a more balanced indicator. Its value lies in [−1, 1], and the closer it is to 1, the more accurate the prediction. It is defined as follows:

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{5}$$

where true positives (TP) are positive samples correctly predicted as positive, true negatives (TN) are negative samples correctly predicted as negative, false positives (FP) are negative samples incorrectly predicted as positive, and false negatives (FN) are positive samples incorrectly predicted as negative.
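
A short Python sketch of the five indexes computed from confusion-matrix counts. It assumes the F value is the F1 score (precision and recall weighted equally) and returns an MCC of 0 when the denominator is zero, a common convention; the counts in the example are made up. For the three-class data in this study, such counts would have to be formed per class (for example, one class against the rest); the text does not state which scheme was used.

```python
import math

def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, sensitivity, specificity, F1 and MCC from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)               # also called recall
    specificity = tn / (tn + fp)
    f_score = 2 * tp / (2 * tp + fp + fn)      # harmonic mean of precision and recall, in count form
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom > 0 else 0.0  # 0 by convention when undefined
    return {"ACC": accuracy, "SEN": sensitivity, "SPE": specificity, "F": f_score, "MCC": mcc}

# Hypothetical counts, chosen only to exercise the formulas.
metrics = classification_metrics(tp=90, tn=80, fp=20, fn=10)
print({name: round(value, 4) for name, value in metrics.items()})
```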

4.3. Experimental data

A total of 2933 lung CT images of COVID-19 patients were obtained from previous publications, authoritative media reports, and public databases. Of these, 1752 images were obtained from domestic and foreign journals and databases such as ScienceDirect, Nature, Springer Link, and China CNKI; 1012 from authoritative media reports such as the New York Times, Daily Mail (United Kingdom), The Times (United Kingdom), CNN, The Verge (United States), Avvenire (Italy), LaNuovaFerrara (Italy), People's Daily, Toutiao News, and Dr. Lilac; 68 from the sirm.org public database; and 101 from a GitHub public database (see Table 2, Table 3, Table 4).

Table 2.

COVID-19 CT images from academic journals.

| Total | ScienceDirect | Springer Link | cnki.net | Other |
|---|---|---|---|---|
| 1752 | 745 | 135 | 634 | 238 |

Table 3.

COVID-19 CT images from authoritative media reports.

| Total | Daily Mail | The Verge | LaNuovaFerrara | People's network | Toutiao News | Doctor Lilac | Video PPT | Other |
|---|---|---|---|---|---|---|---|---|
| 1012 | 72 | 70 | 69 | 226 | 136 | 205 | 156 | 78 |

Table 4.

COVID-19 CT images from public databases.

| Total | sirm.org | GitHub |
|---|---|---|
| 169 | 68 | 101 |

The lung CT images of COVID-19 patients used in this study all came from third-party platforms. Images from different platforms differed in size and format and contained different degrees of noise, such as watermarks and annotation marks. The source studies also had different research aims: some were statistical analyses of COVID-19 cases, some tracked and analyzed the same patient over time, some analyzed patients of different ages and sexes, and some compared image characteristics across clinical classifications. Hence, the data differ in modality, for example horizontal (axial) versus coronal planes. The CT images were therefore preprocessed, for example by deleting images with heavy noise and images in the coronal plane. All images were converted to a unified .JPG format and normalized to a size of 64 × 64. Finally, we obtained 2500 high-quality CT images of COVID-19.

4.4. Algorithm simulation experiment and analysis

Five-fold cross-validation was used for training, and the results of the five folds were averaged to obtain the final result. In each fold, the number of training samples was 2000 × 3 = 6000 and the number of test samples was 500 × 3 = 1500. The experiments were carried out on CT image data sets of normal lungs, lung tumors, and COVID-19. Identification and classification were performed with AlexNet-Softmax, GoogleNet-Softmax, and ResNet-Softmax, respectively, and then with the ensemble deep learning model EDL-COVID. Finally, accuracy, sensitivity, specificity, F-score, and the Matthews correlation coefficient were used for evaluation.

4.4.1. Experiment one: AlexNet-Softmax classifier experiment

In the first experiment, the deep learning model is AlexNet and the classifier is Softmax; the combination is named AlexNet_Softmax. This experiment examines the recognition accuracy, training time, and evaluation indexes of AlexNet_Softmax when training and recognizing on a sample space of three image types (normal lung CT, lung tumor CT, and COVID-19 CT). Classification accuracy and training time are shown in Table 5, and the classification evaluation indexes in Table 6. In the first fold of Table 5, 473 samples were recognized correctly and 27 misclassified in the normal lung CT data set, 487 were recognized correctly and 13 misclassified in the lung tumor CT data set, and 495 were recognized correctly and 5 misclassified in the COVID-19 CT data set. Over the five folds, the average classification accuracy of AlexNet_Softmax is 98.16% and the average running time is 354.47 s. The variance of the classification accuracy is 0.41432 and its standard deviation is 0.7196; that is, the classification accuracy changes very little across the five folds, so the algorithm is insensitive to the choice of samples and shows good stability and strong fault tolerance. The model also offers faster detection and higher accuracy than nucleic acid reagent detection. In Table 6, the average sensitivity (SEN), specificity (SPE), F-score, and Matthews correlation coefficient (MCC) are 98.16%, 99.36%, 97.3%, and 95.95%, respectively. These results show that the model can recognize both positives and negatives and describes the correlation between the actual and predicted classifications well.

Table 5.

AlexNet_Softmax classification results.

| Fold | Accuracy (%) | Normal lung: correct | Normal lung: misclassified | Lung tumor: correct | Lung tumor: misclassified | COVID-19: correct | COVID-19: misclassified | Time (s) |
|---|---|---|---|---|---|---|---|---|
| Fold 1 | 97.00 | 473 | 27 | 487 | 13 | 495 | 5 | 342.92 |
| Fold 2 | 98.47 | 495 | 5 | 484 | 16 | 498 | 2 | 383.89 |
| Fold 3 | 98.07 | 488 | 12 | 486 | 14 | 497 | 3 | 350.25 |
| Fold 4 | 98.93 | 494 | 6 | 493 | 7 | 497 | 3 | 347.70 |
| Fold 5 | 98.33 | 498 | 2 | 480 | 20 | 497 | 3 | 347.60 |
| Average (counts are totals) | 98.16 | 2448 | 52 | 2430 | 70 | 2484 | 16 | 354.47 |
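
The summary statistics reported for Table 5 can be reproduced from the per-fold counts. The NumPy check below (the array is transcribed from Table 5; nothing else is assumed) recomputes the fold accuracies, their average, and the column totals. It also shows that the value 0.41432 reported in the text matches the population variance of the five fold accuracies (denominator 5), while the reported standard deviation 0.7196 matches the sample standard deviation (denominator 4, about 0.7197 before truncation).

```python
import numpy as np

# Correctly classified test images per fold, transcribed from Table 5.
# Columns: normal lung, lung tumor, COVID-19 (500 test images per class per fold).
correct = np.array([
    [473, 487, 495],
    [495, 484, 498],
    [488, 486, 497],
    [494, 493, 497],
    [498, 480, 497],
])

fold_acc = np.round(correct.sum(axis=1) / 1500 * 100, 2)  # per-fold accuracy in %
print(fold_acc)
print(round(fold_acc.mean(), 2))       # average accuracy over the five folds
print(correct.sum(axis=0))             # column totals of correctly classified images
print(round(fold_acc.var(ddof=0), 5))  # population variance of the fold accuracies
print(round(fold_acc.std(ddof=1), 4))  # sample standard deviation of the fold accuracies
```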

Table 6.

AlexNet_Softmax classification evaluation index.

| Fold | SEN (%) | SPE (%) | F (%) | MCC (%) |
|---|---|---|---|---|
| Fold 1 | 97.33 | 98.4 | 96.09 | 94.13 |
| Fold 2 | 98.47 | 99.6 | 97.74 | 96.62 |
| Fold 3 | 97.67 | 99.0 | 96.59 | 94.88 |
| Fold 4 | 98.93 | 99.0 | 98.12 | 97.17 |
| Fold 5 | 99.07 | 99.4 | 97.55 | 96.32 |
| Average | 98.16 | 99.36 | 97.3 | 95.95 |

4.4.2. Experiment two: GoogleNet_Softmax classifier experiment

The second experiment uses GoogleNet_Softmax. It examines the recognition accuracy, training time, and evaluation indexes of GoogleNet_Softmax when training and recognizing on the CT image data sets of normal lungs, lung tumors, and COVID-19. Classification accuracy and training time are shown in Table 7, and the classification evaluation indexes in Table 8. In the first fold of Table 7, 471 samples were recognized correctly and 29 misclassified in the normal lung CT data set, 497 were recognized correctly and 3 misclassified in the lung tumor CT data set, and 492 were recognized correctly and 8 misclassified in the COVID-19 CT data set. Over the five folds, the average classification accuracy of GoogleNet_Softmax is 98.25% and the average running time is 928.74 s. The variance of the classification accuracy is 0.4267, its standard deviation is 0.7304, and the variance of the running time is 49.2489. Like AlexNet_Softmax, GoogleNet_Softmax is insensitive to the choice of samples and shows good stability and strong fault tolerance. Table 8 shows the classification evaluation indexes: the average sensitivity (SEN), specificity (SPE), F-score, and Matthews correlation coefficient (MCC) are 98.25%, 99.2%, 97.43%, and 96.14%, respectively. These results show that GoogleNet_Softmax can detect COVID-19 patients quickly and accurately with non-contact testing.

Table 7.

GoogLeNet_Softmax classification result.

| Fold | Accuracy (%) | Normal lung: correct | Normal lung: misclassified | Lung tumor: correct | Lung tumor: misclassified | COVID-19: correct | COVID-19: misclassified | Time (s) |
|---|---|---|---|---|---|---|---|---|
| Fold 1 | 97.33 | 471 | 29 | 497 | 3 | 492 | 8 | 934.31 |
| Fold 2 | 98.47 | 492 | 8 | 487 | 13 | 498 | 2 | 937.04 |
| Fold 3 | 97.67 | 488 | 12 | 482 | 18 | 495 | 5 | 930.6 |
| Fold 4 | 98.73 | 499 | 1 | 487 | 13 | 495 | 5 | 924.04 |
| Fold 5 | 99.07 | 498 | 2 | 488 | 12 | 500 | 0 | 917.75 |
| Average (counts are totals) | 98.25 | 2448 | 52 | 2441 | 59 | 2480 | 20 | 928.74 |

Table 8.

GoogLeNet_Softmax classification evaluation index.

| Fold | SEN (%) | SPE (%) | F (%) | MCC (%) |
|---|---|---|---|---|
| Fold 1 | 97.33 | 98.4 | 96.09 | 94.13 |
| Fold 2 | 98.47 | 99.6 | 97.74 | 96.62 |
| Fold 3 | 97.67 | 99.0 | 96.59 | 94.88 |
| Fold 4 | 98.73 | 99.0 | 98.12 | 97.17 |
| Fold 5 | 99.07 | 100 | 98.62 | 97.94 |
| Average | 98.25 | 99.2 | 97.43 | 96.14 |

4.4.3. Experiment three: ResNet_Softmax classifier experiment

The third experiment uses the ResNet_Softmax classifier on the three sample spaces (normal lung CT, lung tumor CT, and COVID-19 CT). Classification accuracy and training time are shown in Table 9, and the classification evaluation indexes in Table 10. In the first fold of Table 9, 481 samples were recognized correctly and 19 misclassified in the normal lung CT data set, 495 were recognized correctly and 5 misclassified in the lung tumor CT data set, and 494 were recognized correctly and 6 misclassified in the COVID-19 CT data set. Over the five folds, the average classification accuracy of ResNet_Softmax is 98.56% and the average running time is 990.58 s. The variance of the classification accuracy is 0.1075, its standard deviation is 0.3667, and the variance of the running time is 383.5619. Compared with AlexNet_Softmax and GoogleNet_Softmax, the variance of the classification accuracy is about 74% lower (0.1075 versus 0.41432 and 0.4267), a substantial reduction. In other words, at a similar classification accuracy, ResNet shows better stability and fault tolerance than AlexNet and GoogleNet. Table 10 shows that the sensitivity (SEN), specificity (SPE), F-score (F), and Matthews correlation coefficient (MCC) are 98.56%, 99.4%, 97.87%, and 96.81%, respectively. These results show that ResNet_Softmax generalizes better in recognizing positives and negatives.

Table 9.

ResNet_Softmax classification result.

| Fold | Accuracy (%) | Normal lung: correct | Normal lung: misclassified | Lung tumor: correct | Lung tumor: misclassified | COVID-19: correct | COVID-19: misclassified | Time (s) |
|---|---|---|---|---|---|---|---|---|
| Fold 1 | 98.00 | 481 | 19 | 495 | 5 | 494 | 6 | 998.46 |
| Fold 2 | 98.53 | 490 | 10 | 490 | 10 | 498 | 2 | 1023.85 |
| Fold 3 | 98.53 | 495 | 5 | 483 | 17 | 500 | 0 | 985.42 |
| Fold 4 | 99.00 | 494 | 6 | 492 | 8 | 499 | 1 | 978.73 |
| Fold 5 | 98.73 | 495 | 5 | 492 | 8 | 494 | 6 | 966.46 |
| Average (counts are totals) | 98.56 | 2455 | 45 | 2452 | 48 | 2485 | 15 | 990.58 |

Table 10.

ResNet_Softmax classification evaluation index.

| Fold | SEN (%) | SPE (%) | F (%) | MCC (%) |
|---|---|---|---|---|
| Fold 1 | 98.0 | 98.8 | 97.05 | 95.57 |
| Fold 2 | 98.53 | 99.6 | 97.84 | 97.76 |
| Fold 3 | 98.53 | 100 | 97.85 | 96.79 |
| Fold 4 | 99.0 | 99.8 | 98.52 | 97.78 |
| Fold 5 | 98.73 | 98.8 | 98.11 | 97.17 |
| Average | 98.56 | 99.4 | 97.87 | 96.81 |

The three experiments used three different classification models: AlexNet_Softmax, GoogleNet_Softmax, and ResNet_Softmax. Comparing Tables 5, 7, and 9 shows that the classification accuracy of ResNet_Softmax is 0.4 percentage points higher than that of AlexNet_Softmax. Among the individual classifiers, ResNet_Softmax is therefore the most accurate for detecting COVID-19 patients, but it is also the slowest: the running time increases from 354.47 s to 990.58 s. Time and accuracy must be traded off against each other, so both should be considered in practical applications. Comparing Tables 6, 8, and 10 shows that the sensitivity (SEN), specificity (SPE), F-score (F), and Matthews correlation coefficient (MCC) of ResNet_Softmax are higher than those of AlexNet_Softmax by 0.40, 0.04, 0.57, and 0.86 percentage points, respectively, so the recognition of both positive and negative classes has improved. Thus, a deeper network extracts richer image features and achieves higher classification accuracy, at the cost of a significantly longer training time.

4.4.4. Experiment four: EDL_COVID classifier experiment

In this experiment, the individual classifiers are AlexNet, GoogleNet, and ResNet, with Softmax as the classification algorithm of the fully connected layer. The ensemble classifier EDL-COVID is obtained via relative majority voting. To evaluate the performance of EDL-COVID, sensitivity, specificity, F-score, and the Matthews correlation coefficient are used. Classification results and evaluation indexes are shown in Table 11 and Table 12, respectively.

Table 11.

EDL_COVID classification result.

| Five_fold cross | Accuracy (%) | Normal correct | Normal misclassified | Lung tumor correct | Lung tumor misclassified | COVID-19 correct | COVID-19 misclassified | Time (s) |
|---|---|---|---|---|---|---|---|---|
| Fold 1 | 98.53 | 486 | 14 | 496 | 4 | 496 | 4 | 2275.81 |
| Fold 2 | 99.07 | 496 | 4 | 492 | 8 | 498 | 2 | 2234.81 |
| Fold 3 | 98.93 | 497 | 3 | 489 | 11 | 498 | 2 | 2266.31 |
| Fold 4 | 99.27 | 500 | 0 | 490 | 10 | 499 | 1 | 2250.53 |
| Fold 5 | 99.47 | 500 | 0 | 493 | 7 | 499 | 1 | 2231.85 |
| Average (counts summed) | 99.054 | 2479 | 21 | 2460 | 40 | 2490 | 10 | 2251.86 |
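As a check on Table 11, the per-fold accuracy is the number of correctly classified images divided by the 1500 test images in each fold (500 per class, since correct plus misclassified counts sum to 500 for every class). The short Python sketch below recomputes it from the table's counts; the variable and function names are illustrative only.

```python
# Correctly classified images per fold, copied from Table 11,
# in class order (normal, lung tumor, COVID-19).
CORRECT = {
    1: (486, 496, 496),
    2: (496, 492, 498),
    3: (497, 489, 498),
    4: (500, 490, 499),
    5: (500, 493, 499),
}
IMAGES_PER_CLASS = 500  # each fold tests 500 images per class (correct + misclassified)

def fold_accuracy(correct: tuple[int, ...]) -> float:
    """Accuracy (%) of one fold: correctly classified images / all test images."""
    return 100.0 * sum(correct) / (IMAGES_PER_CLASS * len(correct))

accuracies = {fold: round(fold_accuracy(c), 2) for fold, c in CORRECT.items()}
mean_accuracy = sum(accuracies.values()) / len(accuracies)  # mean of rounded per-fold values
pooled = 100.0 * sum(map(sum, CORRECT.values())) / (IMAGES_PER_CLASS * 3 * len(CORRECT))

print(accuracies)               # {1: 98.53, 2: 99.07, 3: 98.93, 4: 99.27, 5: 99.47}
print(round(mean_accuracy, 3))  # 99.054
print(round(pooled, 3))         # 99.053
```

Averaging the rounded per-fold accuracies reproduces the 99.054% in the table; pooling all 7429 correct predictions over the 7500 test images instead gives 99.053%, so the two conventions agree to within rounding.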

Table 12.

EDL_COVID classification evaluation index.

| Five_fold cross | SEN (%) | SPE (%) | F (%) | MCC (%) |
|---|---|---|---|---|
| Fold 1 | 98.53 | 99.2 | 97.83 | 96.74 |
| Fold 2 | 99.07 | 99.6 | 98.61 | 97.92 |
| Fold 3 | 98.93 | 99.6 | 98.42 | 97.63 |
| Fold 4 | 99.27 | 99.8 | 98.91 | 98.37 |
| Fold 5 | 99.47 | 99.8 | 99.2 | 98.81 |
| Average | 99.05 | 99.6 | 98.59 | 97.89 |
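The text does not state how SEN, SPE, F, and MCC are computed for three classes. One common convention, assumed here, is to treat each class in turn as positive (one-vs-rest) and to macro-average the results. The Python sketch below illustrates that convention on a hypothetical confusion matrix; the counts and function names are invented for illustration and are not taken from the paper.

```python
import math

def one_vs_rest(cm: list[list[int]], positive: int) -> dict[str, float]:
    """SEN, SPE, F and MCC with class `positive` as positive and all others as negative.

    cm[i][j] counts images whose true class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[positive][positive]
    fn = sum(cm[positive]) - tp                       # positives predicted as another class
    fp = sum(cm[i][positive] for i in range(n)) - tp  # negatives predicted as positive
    tn = total - tp - fn - fp
    sen = tp / (tp + fn)
    spe = tn / (tn + fp)
    precision = tp / (tp + fp)
    f_score = 2 * precision * sen / (precision + sen)
    mcc = (tp * tn - fp * fn) / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {"SEN": sen, "SPE": spe, "F": f_score, "MCC": mcc}

def macro_average(cm: list[list[int]]) -> dict[str, float]:
    """Unweighted mean of the one-vs-rest metrics over all classes."""
    per_class = [one_vs_rest(cm, k) for k in range(len(cm))]
    return {m: sum(d[m] for d in per_class) / len(per_class) for m in per_class[0]}

# Hypothetical counts (rows: true class, columns: predicted class),
# class order (normal, lung tumor, COVID-19); NOT taken from the paper.
cm = [
    [490, 8, 2],
    [5, 493, 2],
    [1, 3, 496],
]
covid = one_vs_rest(cm, positive=2)
print({k: round(v, 3) for k, v in covid.items()})  # {'SEN': 0.992, 'SPE': 0.996, 'F': 0.992, 'MCC': 0.988}
print(round(macro_average(cm)["SEN"], 3))          # 0.986
```

Under this assumption, macro-averaged SEN equals overall accuracy whenever every class has the same number of test images (here 1479/1500 = 0.986), which is consistent with the SEN column of Table 12 matching the per-fold accuracies in Table 11.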

Fig. 7 shows the average values of the five evaluation indices for each model, illustrating how the algorithms differ on each index.

Fig. 7.

Five evaluation indicators of different models.

Table 11 shows the classification accuracy for each fold of the five-fold cross-validation and the final average. The average classification accuracy of EDL_COVID is 99.054%, and the average running time is 2251.86 s. The variance of the classification accuracy is 0.1019, its (sample) standard deviation is 0.357, and the variance of the running time is 295.0553. The variance of the classification accuracy of EDL_COVID is the same as that of ResNet_Softmax, and the running time is stable across folds. Table 12 shows the classification evaluation indices: the sensitivity (SEN), specificity (SPE), F_Score (F), and Matthews correlation coefficient (MCC) are 99.05%, 99.6%, 98.59%, and 97.89%, respectively.

Among the three individual classifiers, ResNet_Softmax has the highest accuracy, sensitivity, specificity, F_Score, and MCC, but also the longest running time. AlexNet_Softmax is the least time-consuming, with an average time of 354.475 s, but has the lowest accuracy, sensitivity, specificity, F_Score, and MCC. Compared with the single classifiers, the classification accuracy of EDL_COVID is 0.89%, 0.80%, and 0.49% higher than that of AlexNet_Softmax, GoogleNet_Softmax, and ResNet_Softmax, respectively, while the running time increases by 1897.39, 1323.12, and 1261.28 s, respectively. The classification accuracy of EDL_COVID is therefore better than that of any single classifier. Deep learning classifiers such as AlexNet_Softmax, GoogleNet_Softmax, and ResNet_Softmax detect COVID-19 faster than nucleic acid reagent testing and with higher detection accuracy, and ensemble learning such as EDL_COVID further improves classification accuracy over the individual classifiers.
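The dispersion figures quoted above can be reproduced from the per-fold values in Table 11. In the Python sketch below (variable names are illustrative), the reported 0.1019 and 295.0553 agree with the population variance (dividing by n) of the accuracy and running time, while 0.357 agrees with the sample standard deviation (dividing by n - 1); the population standard deviation of the accuracy would be about 0.319. The last lines back out the individual classifiers' running times implied by the reported differences.

```python
import statistics

# Per-fold EDL_COVID values copied from Table 11.
accuracy = [98.53, 99.07, 98.93, 99.27, 99.47]            # accuracy, %
run_time = [2275.81, 2234.81, 2266.31, 2250.53, 2231.85]  # running time, s

acc_pvar = statistics.pvariance(accuracy)   # population variance: divides by n
acc_sd = statistics.stdev(accuracy)         # sample standard deviation: divides by n - 1
time_pvar = statistics.pvariance(run_time)  # population variance of the running time

print(round(acc_pvar, 5), round(acc_sd, 3), round(time_pvar, 4))  # 0.10198 0.357 295.0553

# Extra running time of EDL_COVID over each individual classifier, as stated in the text.
edl_time = 2251.86
extra_time = {"AlexNet_Softmax": 1897.39, "GoogleNet_Softmax": 1323.12, "ResNet_Softmax": 1261.28}
implied_time = {name: round(edl_time - extra, 2) for name, extra in extra_time.items()}
print(implied_time)  # {'AlexNet_Softmax': 354.47, 'GoogleNet_Softmax': 928.74, 'ResNet_Softmax': 990.58}
```

The implied times for AlexNet_Softmax and ResNet_Softmax match the 354.47 s and 990.58 s quoted for the individual-classifier experiments.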

5. Summary

Because COVID-19 is highly contagious and its transmission is difficult to control effectively, it is vital to detect the virus quickly and accurately to prevent its propagation and to provide timely treatment. Computed tomography (CT) detection of COVID-19 offers high sensitivity, a low misdiagnosis rate, and wide commercial availability. Hence, artificial intelligence, especially deep learning applied to CT images, has been used to detect COVID-19 patients, which is a promising approach. In this paper, we proposed an ensemble deep learning model (EDL_COVID) based on lung CT images to rapidly detect the novel coronavirus disease COVID-19. 2500 CT images of COVID-19 lungs were obtained from previous publications, news reports, public databases, and other channels, and 2500 CT images each of lung tumors and normal lungs were obtained from three grade A hospitals in Ningxia, China. Transfer learning was used to pretrain three deep convolutional neural network models, namely AlexNet, GoogleNet, and ResNet, to obtain their initialization parameters. Using softmax as the classification algorithm of the fully connected layer, three component classifiers, AlexNet_Softmax, GoogleNet_Softmax, and ResNet_Softmax, were constructed. The ensemble classifier EDL_COVID was then obtained by relative majority voting. Our results showed that the overall classification performance of EDL_COVID was better than that of any single classifier: the fastest individual classifier required 342.92 s with an accuracy of 97%, whereas the ensemble reached an accuracy of 99.05%. Evaluation indices such as specificity and sensitivity were also high, highlighting the model's potential for the rapid detection of COVID-19.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

This work is supported by the Natural Science Foundation of China (Grant No. 62062003) and the North Minzu University Research Project of Talent Introduction, China (No. 2020KYQD08).

References

- 1. Holshue M.L., DeBolt C., Lindquist S. First case of 2019 novel coronavirus in the United States. New Engl. J. Med. 2020;382(10):929–936. doi: 10.1056/NEJMoa2001191.

- 2. Coronavirus Resource Center. Johns Hopkins University, 2020. Available: https://coronavirus.jhu.edu/map.html.

- 3. Huang C.L., Wang Y.M., Li X.W., Ren L.L., Zhao J.P. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet. 2020;395(10223):497–506. doi: 10.1016/S0140-6736(20)30183-5.

- 4. Wang D.W., Hu B., Hu C., Zhu F.F., Liu X. Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus–infected pneumonia in Wuhan, China. J. Am. Med. Assoc. (JAMA) 2020;323(11):1061–1069. doi: 10.1001/jama.2020.1585.

- 5. Jiang X., Coffee M., Bari A. Towards an artificial intelligence framework for data-driven prediction of coronavirus clinical severity. Comput. Mater. Continua. 2020;63(1):537–551.

- 6. Long C.Q., Xu H.X., Shen Q.L., Zhang X.H., Fan B. Diagnosis of the coronavirus disease (COVID-19): rRT-PCR or CT? Eur. J. Radiol. 2020. doi: 10.1016/j.ejrad.2020.108961. (in press)

- 7. Xie X.Z., Zhong Z., Zhao W., Zheng C., Wang F. Chest CT for typical 2019-nCoV pneumonia: relationship to negative RT-PCR testing. Radiology. 2020. doi: 10.1148/radiol.2020200343. (in press)

- 8. Lei J.Q., Li J.F., Li X., Qi X.L. CT imaging of the 2019 novel coronavirus (2019-nCoV) pneumonia. Radiology. 2020;295(1):18. doi: 10.1148/radiol.2020200236.

- 9. Ye Z., Zhang Y., Wang Y., Huang Z.X., Song B. Chest CT manifestations of new coronavirus disease 2019 (COVID-19): a pictorial review. Eur. Radiol. 2020. doi: 10.1007/s00330-020-06801-0. (in press)

- 10. Li Y., Xia L.M. Coronavirus disease 2019 (COVID-19): role of chest CT in diagnosis and management. Am. J. Roentgenol. 2020. doi: 10.2214/AJR.20.22954. (in press)

- 11. Chung M., Bernheim A., Mei X.Y. CT imaging features of 2019 novel coronavirus (2019-nCoV). Radiology. 2020;295(1):202–207. doi: 10.1148/radiol.2020200230.

- 12. Pan F., Ye T.H., Sun P., Gui S., Liang B. Time course of lung changes on chest CT during recovery from 2019 novel coronavirus (COVID-19) pneumonia. Radiology. 2020. doi: 10.1148/radiol.2020200370. (in press)

- 13. Fan L., Li D., Xue H., Zhang L.J., Liu Z.Y. Progress and prospect on imaging diagnosis of COVID-19. Chin. J. Acad. Radiol. 2020;3(1):4–13. doi: 10.1007/s42058-020-00031-5.

- 14. Iwasawa T., Sato M., Yamaya T., Sato Y., Uchida Y. Ultra-high-resolution computed tomography can demonstrate alveolar collapse in novel coronavirus (COVID-19) pneumonia. Japan. J. Radiol. 2020;38(1):497–506. doi: 10.1007/s11604-020-00956-y.

- 15. Song F.X., Shi N.N., Shan F., Zhang Z.Y., Shen J., et al. Emerging coronavirus 2019-nCoV pneumonia. Radiology. 2020;295(1):210–217. doi: 10.1148/radiol.2020200274.

- 16. Wang K., Kang S., Tian R., Zhang X., Zhang X. Imaging manifestations and diagnostic value of chest CT of coronavirus disease 2019 (COVID-19) in the Xiaogan area. Clin. Radiol. 2020;75(5):341–347. doi: 10.1016/j.crad.2020.03.004.

- 17. Liu H.H., Liu F., Li J.N., Zhang T.T., Wang D.B. Clinical and CT imaging features of the COVID-19 pneumonia: focus on pregnant women and children. J. Infect. 2020. doi: 10.1016/j.jinf.2020.03.007. (in press)

- 18. Agostini A., Floridi C., Borgheresi A., Badaloni M., Esposto Pirani P., Terilli F., Ottaviani L., Giovagnoni A. Proposal of a low-dose, long-pitch, dual-source chest CT protocol on third-generation dual-source CT using a tin filter for spectral shaping at 100 kVp for CoronaVirus Disease 2019 (COVID-19) patients: a feasibility study. Radiol. Med. 2020;125(4):365–373. doi: 10.1007/s11547-020-01179-x.

- 19. National Health Commission of the People’s Republic of China. 2020. Diagnosis and protocol of COVID-19, trial version 6. [Online]. Available: http://www.gov.cn/zhengce/zhengceku/2020-02/19/content_5480948.htm.

- 20. Ai T., Yang Z.L., Hou H.Y., Zhan C.N., Chen C., Lv W.Z., Tao Q., Sun Z.Y., Xia L.M. Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology. 2020. doi: 10.1148/radiol.2020200642.

- 21. Himoto Y., Sakata A., Kirita M. Diagnostic performance of chest CT to differentiate COVID-19 pneumonia in non-high-epidemic area in Japan. Japan. J. Radiol. 2020;38(4). doi: 10.1007/s11604-020-00958-w.

- 22. Li L., Qin L.X., Xu Z.G., Yin Y.B., Wang X. Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology. 2020. doi: 10.1148/radiol.2020200905. (in press)

- 23. Wang S., Zha Y.F., Li W.M., Wu Q.X., Li X.H. A fully automatic deep learning system for COVID-19 diagnostic and prognostic analysis. medRxiv. 2020. doi: 10.1101/2020.03.24.20042317. (in press)

- 24. Xiao H.H., Yuan C.L., Feng S.T., Luo Y.J., Huang B.S. Research progress of computer-aided classification and diagnosis of cancer based on deep learning. Int. J. Med. Radiol. 2019;42(1):22–25.

- 25. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. In: Proceedings of the Neural Information Processing Systems, Lake Tahoe, USA, 2012, pp. 1097–1105.

- 26. Szegedy C., Liu W., Jia Y.Q., Sermanet P., Reed S., et al. Going deeper with convolutions. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 2015, pp. 1–9.

- 27. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 2016, pp. 770–778.

- 28. Li M.Y., Yang L., Hu Q.H. Research progress on the theory and algorithm of isomorphic transfer learning. J. Nanjing Univ. Inf. Sci. Technol. (Nat. Sci. Ed.) 2019;11(03):269–277.

- 29. Hang W.L., Jiang Y.Z., Liu J.F., Wang S.T. Migration nearest neighbor propagation clustering algorithm. J. Softw. 2016;27(11):2796–2813.

- 30. Chen S.L., Shen S.Q., Li D.S. Ensemble learning method for imbalanced data based on sample weight updating. Comput. Sci. 2018;45(07):31–37.

- 31. Zhang Z., Li Y.B., Wang C., Wang M.Y., Tu Y. An ensemble learning method for wireless multimedia device identification. Secur. Commun. Netw. 2018. doi: 10.1155/2018/5264526.

- 32. Zhou T., Lu H.L., Wang W.W., Yong X. GA-SVM based feature selection and parameter optimization in hospitalization expense. Appl. Soft Comput. 2019;75:323–332. doi: 10.1016/j.asoc.2018.11.001.

- 33. Wang W., Li Y.T., Zou T., Wang X., You J.Y., et al. A novel image classification approach via dense-mobilenet models. Mob. Inf. Syst. 2020. doi: 10.1155/2020/7602384.

- 34. Zhou T., Lu H.L., Hu F.Y., Qiu S., Wu C.Y. A model of high-dimensional feature reduction based on variable precision rough set and genetic algorithm in medical image. Math. Probl. Eng. 2020;2020. doi: 10.1155/2020/7653946. 18 pages.