Abstract

Background: Practice guidelines recommend anticoagulation therapy for patients with atrial fibrillation (AF) whose other risk factors put them at elevated risk of stroke. These patients remain undertreated, but with the increasing use of electronic health records (EHRs), it may be possible to identify candidates for treatment.

Objective: To test algorithms for identifying AF patients who also have known risk factors for stroke and major bleeding using EHR data.

Materials and Methods: Using EHR data from the Partners HealthCare System, we evaluated the performance of algorithms for identifying AF patients and 16 additional conditions that are risk factors in the CHA2DS2-VASc and HAS-BLED risk scores for stroke and major bleeding. Algorithms were based on information contained in problem lists, billing codes, laboratory data, prescription data, vital status, and clinical notes. The performance of candidate algorithms in 1000 bootstrap resamples was compared with a gold standard of manual chart review by experienced resident physicians.

Results: Physicians reviewed 480 patient charts. For 11 conditions, the median positive predictive value (PPV) of the EHR-derived algorithms was greater than 0.90. Although the PPV for some risk factors was poor, the median PPV for identifying patients with a CHA2DS2-VASc score ≥2 or a HAS-BLED score ≥3 was 1.00 and 0.92, respectively.

Discussion: We developed and tested a set of algorithms to identify AF patients and known risk factors for stroke and major bleeding using EHR data. Algorithms such as these can be built into EHR systems to facilitate informed decision making and help shift population health management efforts towards patients with the greatest need.

Keywords: anticoagulation, stroke, chronic disease, outcomes, quality improvement, algorithms, natural language processing

Background and Significance

There is a wealth of evidence about the effectiveness of anticoagulation therapy to prevent stroke and thromboembolism, with studies reporting up to 68% reduction in risk of ischemic stroke per year among non-valvular atrial fibrillation (AF) patients.1–3 Although anticoagulants have been demonstrated to be highly effective at preventing stroke and embolic events among AF patients, they are also known to increase the risk of major bleeding events.3 Many clinical practice guidelines provide recommendations for a tiered approach to managing AF in which the initiation of preventive anticoagulation therapy is based on estimated risk of stroke. In spite of these guidelines, an estimated 40% of AF patients at high risk of stroke are not treated with anticoagulants.2

The large proportion of patients with AF who are not managed with anticoagulation therapy can be attributed to many factors. Some of these patients have true contraindications for anticoagulation therapy, but, in other cases, there is a lack of physician awareness of the patient’s condition or of guidelines for treatment; a lack of care coordination (regarding which provider is responsible for managing a patient’s AF); a lack of time in the face of competing comorbidities; or exaggerated patient or physician perception of the risk of bleeding associated with anticoagulation therapy.1,4 The relative proportions of patients in each of these categories are not known.

A key frontier in clinical informatics is leveraging the data in electronic health records (EHRs) to improve the quality of care. However, despite large increases in the adoption rate of EHRs associated with the Meaningful Use program (especially Stage 1), EHR-based interventions appear to have had mixed effects on care quality.5,6 One of the problems with using EHRs to improve care quality has been that the variables needed to measure and improve care quality may not yet be represented in EHRs in ways that make these data easy to leverage for these purposes. In spite of these challenges, the ability of health information technology to deliver large volumes of data could help increase the proportion of AF patients who are appropriately treated with anticoagulants. Through the application of validated algorithms to EHR data, a health system could flag patients who are at high risk of stroke and have strong indications for treatment; quantify the risk of adverse outcomes, such as major bleeding; and provide this information to patients, physicians, or population health services for targeted interventions to facilitate informed decision making.

For AF, there are two scores that have strong evidence to support their use. The CHA2DS2-VASc score quantifies AF patients’ risk of stroke,7 and the HAS-BLED score measures the risk of major bleeding.8 Each score ranges from 0 to 9, with higher numbers indicative of higher risk. These scores have been validated and are currently used in clinical guidelines for treating patients with AF.9–11 AF patients with a CHA2DS2-VASc score ≥2 are recognized as being at a high risk of stroke, and should begin anticoagulation therapy.10 A patient with a HAS-BLED score ≥3 is considered to be at high risk for major bleeding.10,11
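The arithmetic behind both scores can be sketched in a few lines of Python. This is a minimal illustration, not the study's implementation: it assumes each risk factor has already been reduced to a yes/no flag, and all field and function names (`RiskFactors`, `cha2ds2_vasc`, `has_bled`, `flags`) are hypothetical. Weights follow the published scores and the components listed in the Figure 3 caption: in CHA2DS2-VASc, age ≥75 and prior stroke/TIA/systemic embolism each count 2 points and every other item counts 1. The HAS-BLED "labile INR" item is not among the components listed in Figure 3, so it is included here only as an optional flag defaulting to false, and hypertension is treated as a simple diagnosis flag rather than the original score's definition based on uncontrolled blood pressure.

```python
from dataclasses import dataclass


@dataclass
class RiskFactors:
    """Per-patient yes/no flags (hypothetical field names, for illustration only)."""
    age: int
    female: bool = False
    heart_failure: bool = False
    hypertension: bool = False
    diabetes: bool = False
    stroke_or_tia: bool = False          # prior ischemic stroke or TIA
    systemic_embolism: bool = False
    vascular_disease: bool = False       # prior MI or peripheral artery disease
    renal_disease: bool = False
    liver_disease: bool = False
    prior_bleed: bool = False            # intracranial or gastrointestinal hemorrhage
    antiplatelet_or_nsaid: bool = False
    alcohol_abuse: bool = False
    labile_inr: bool = False             # HAS-BLED "L"; not listed in Figure 3


def cha2ds2_vasc(p: RiskFactors) -> int:
    """CHA2DS2-VASc (0-9): age >= 75 and prior stroke/TIA/embolism score 2 points."""
    age_points = 2 if p.age >= 75 else 1 if p.age >= 65 else 0
    return (
        int(p.heart_failure)                               # C
        + int(p.hypertension)                              # H
        + age_points                                       # A2 or A
        + int(p.diabetes)                                  # D
        + 2 * int(p.stroke_or_tia or p.systemic_embolism)  # S2
        + int(p.vascular_disease)                          # V
        + int(p.female)                                    # Sc (sex category)
    )


def has_bled(p: RiskFactors) -> int:
    """HAS-BLED (0-9): every item scores 1 point."""
    return (
        int(p.hypertension)              # H
        + int(p.renal_disease)           # A (abnormal renal function)
        + int(p.liver_disease)           # A (abnormal liver function)
        + int(p.stroke_or_tia)           # S
        + int(p.prior_bleed)             # B
        + int(p.labile_inr)              # L
        + int(p.age > 65)                # E (elderly)
        + int(p.antiplatelet_or_nsaid)   # D (drugs)
        + int(p.alcohol_abuse)           # D (alcohol)
    )


def flags(p: RiskFactors) -> dict:
    """Apply the thresholds in the text: CHA2DS2-VASc >= 2, HAS-BLED >= 3."""
    return {
        "high_stroke_risk": cha2ds2_vasc(p) >= 2,
        "high_bleeding_risk": has_bled(p) >= 3,
    }


patient = RiskFactors(age=78, female=True, hypertension=True)
print(cha2ds2_vasc(patient), has_bled(patient), flags(patient))
# prints: 4 2 {'high_stroke_risk': True, 'high_bleeding_risk': False}
```

For this hypothetical 78-year-old woman with hypertension, age alone contributes 2 CHA2DS2-VASc points, so she crosses the stroke-risk threshold even though her HAS-BLED score stays below 3.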

The objective of this study was to use data from EHRs to develop and test algorithms for identifying patients with AF as well as the presence or absence of known risk factors for stroke and major bleeding included in the CHA2DS2-VASc and HAS-BLED scores, and to assess how well the data extracted from the EHRs could be used to measure these scores.

Materials and Methods

We extracted data from the Partners HealthCare System Research Patient Data Registry (RPDR), linked to Centers for Medicare and Medicaid Services (CMS) Parts A, B, and D data. The RPDR is a centralized clinical data warehouse for patients seen within the Partners HealthCare System that was developed to facilitate research.12 It includes a “rolled-up” subset of the information in the EHR, including patient demographics, vital signs, laboratory and test results, problem list entries, prescribed medications, billing codes, and free-text notes for healthcare services provided within the system. The CMS data included claims for dispensed prescriptions as well as diagnoses and procedures from claims originating in any healthcare system in which the patients received reimbursable care.

We used the RPDR data to identify patients with any mention of AF or atrial flutter in either a billing code or a problem list entry in their EHR between January 1, 2009 and December 31, 2011. To capture patients who were “active users” of the healthcare system, we restricted the study population to patients with at least two encounters with a Brigham and Women’s Hospital (BWH) primary care clinic or cardiology unit during the same period. We included only patients who could be linked to Medicare claims. From these patients, we randomly sampled 480 patient charts for physician review to establish gold-standard determinations of the presence or absence of AF and 16 known risk factors for stroke or major bleeding in AF patients. These risk factors included a history of ischemic stroke, transient ischemic attack, systemic embolism, peripheral arterial disease, myocardial infarction, valvular heart disease, hypertension, heart failure, diabetes mellitus, gastrointestinal hemorrhage, intracranial hemorrhage, renal disease, liver disease, use of medications that predispose to bleeding (eg, antiplatelet drugs, non-steroidal anti-inflammatory drugs [NSAIDs]), and alcohol abuse.7,8 For the gold-standard assessment, we considered all information contained in the EHR prior to December 31, 2011.
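The eligibility rules above can be expressed as a simple filter followed by a random draw. The sketch below assumes each patient's registry data has already been summarized into the dates of AF/flutter mentions, the dates of qualifying BWH primary care or cardiology encounters, and a Medicare-linkage flag; the record layout, the function names, and the fixed seed are all hypothetical choices made for illustration.

```python
import random
from dataclasses import dataclass, field
from datetime import date

STUDY_START = date(2009, 1, 1)
STUDY_END = date(2011, 12, 31)


@dataclass
class CandidatePatient:
    """Hypothetical per-patient summary of registry data."""
    patient_id: str
    af_mention_dates: list[date] = field(default_factory=list)  # AF/flutter in a billing code or problem list entry
    encounter_dates: list[date] = field(default_factory=list)   # BWH primary care or cardiology encounters
    linked_to_medicare: bool = False


def in_window(d: date) -> bool:
    return STUDY_START <= d <= STUDY_END


def is_eligible(p: CandidatePatient) -> bool:
    has_af_mention = any(in_window(d) for d in p.af_mention_dates)
    active_user = sum(1 for d in p.encounter_dates if in_window(d)) >= 2
    return has_af_mention and active_user and p.linked_to_medicare


def sample_for_review(patients, n=480, seed=0):
    """Simple random sample, without replacement, of eligible patients (seed is arbitrary)."""
    eligible = [p for p in patients if is_eligible(p)]
    return random.Random(seed).sample(eligible, min(n, len(eligible)))
```

Fixing the seed is only a convenience that makes the hypothetical draw reproducible; nothing in the text indicates how the study's random sample was generated.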

Four senior medical resident physicians who practice at BWH and are familiar with its EHR system conducted the chart reviews for this project. Each followed a common training protocol that specified criteria for determining the presence or absence of relevant conditions within patient charts. Resident physicians reviewed the sampled patient charts using the same EHR interface (QPID Health13) that they used when providing care for patients at BWH. To assess interrater reliability, we assigned each pair of residents 40–50 of the same patient charts to review independently. In cases of disagreement, senior author M.A.F., a practicing physician in the BWH system with over 10 years of experience using the EHR, served as adjudicator. Each of the remaining patient charts was reviewed by a single resident physician. We calculated kappa statistics to evaluate interrater reliability; because the kappa statistic is known to be sensitive to the prevalence of the evaluated conditions, we also calculated prevalence and bias-adjusted kappa values.14 Study data from the resident physicians were collected and managed using Research Electronic Data Capture (REDCap) tools hosted by Harvard Medical School.15
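For a yes/no judgment made by two reviewers on the same charts, Cohen's kappa compares observed agreement with the agreement expected by chance from each reviewer's marginal rates, while the prevalence and bias-adjusted kappa (PABAK) for two categories reduces to twice the observed agreement minus one. The sketch below is a minimal illustration of those two formulas; the function name and the example ratings are hypothetical and are not study data.

```python
def kappa_and_pabak(rater1, rater2):
    """Cohen's kappa and PABAK for two raters' binary (0/1) judgments on the same charts."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("ratings must be non-empty and paired")
    n = len(rater1)
    p_obs = sum(a == b for a, b in zip(rater1, rater2)) / n  # observed agreement
    p1 = sum(rater1) / n  # proportion rated "present" by rater 1
    p2 = sum(rater2) / n  # proportion rated "present" by rater 2
    p_exp = p1 * p2 + (1 - p1) * (1 - p2)  # chance agreement from the marginals
    kappa = (p_obs - p_exp) / (1 - p_exp) if p_exp < 1 else float("nan")
    pabak = 2 * p_obs - 1  # PABAK for two categories
    return kappa, pabak


# Hypothetical common condition: each reviewer marks it present in 8 of 10 charts,
# and they disagree on 2 charts.
r1 = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0]
r2 = [1, 1, 1, 1, 1, 1, 1, 0, 1, 0]
kappa, pabak = kappa_and_pabak(r1, r2)
```

Here the reviewers agree on 80% of charts, yet kappa is only about 0.375 because chance agreement is already high (about 0.68) when a condition is common; PABAK is about 0.6. This is the prevalence sensitivity that motivates reporting both statistics.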

We considered several candidate algorithms to identify the presence or absence of each patient condition, including:

1. Only information from the problem list.

2. Application of validated claims-based International Classification of Diseases, Ninth Revision (ICD-9) or Current Procedural Terminology (CPT) code algorithms to billing codes.

3. A combination of problem list and validated claims-based algorithms.

4. A combination of 1, 2, or 3 with information from vital signs, labs, or other information extracted from clinical notes.
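The four candidate families can be viewed as composable rules. The sketch below assumes that the evidence for each source has already been reduced to a set of condition names per patient (problem list, output of a validated claims algorithm, and supporting evidence from vital signs, labs, or notes); the record layout and function names are hypothetical. Whether candidate 4 combines sources with AND or OR is left as a parameter, because the text does not specify this, and the two choices push performance in opposite directions.

```python
from dataclasses import dataclass, field


@dataclass
class PatientRecord:
    """Hypothetical per-patient evidence, keyed by condition name."""
    problem_list: set = field(default_factory=set)    # conditions on the problem list
    claims_flags: set = field(default_factory=set)    # conditions flagged by a validated ICD-9/CPT algorithm
    other_evidence: set = field(default_factory=set)  # support from vital signs, labs, or clinical notes


def problem_list_only(rec, condition):       # candidate 1
    return condition in rec.problem_list


def claims_algorithm(rec, condition):        # candidate 2
    return condition in rec.claims_flags


def problem_list_or_claims(rec, condition):  # candidate 3
    return problem_list_only(rec, condition) or claims_algorithm(rec, condition)


def with_other_evidence(base, mode="and"):   # candidate 4
    """Combine candidate 1, 2, or 3 with other evidence.

    mode="and" requires corroboration (tends to raise PPV and specificity);
    mode="or" accepts either source (tends to raise sensitivity).
    """
    if mode not in ("and", "or"):
        raise ValueError("mode must be 'and' or 'or'")

    def algorithm(rec, condition):
        hit = base(rec, condition)
        extra = condition in rec.other_evidence
        return (hit and extra) if mode == "and" else (hit or extra)

    return algorithm


rec = PatientRecord(problem_list={"hypertension"},
                    claims_flags={"diabetes", "hypertension"},
                    other_evidence={"diabetes"})
strict = with_other_evidence(problem_list_or_claims, mode="and")
print(strict(rec, "diabetes"), strict(rec, "hypertension"))  # prints: True False
```

Requiring corroboration, as the `"and"` mode does, is one way to favor PPV and specificity over sensitivity, in line with the selection priorities described next.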

We evaluated the sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) of each candidate algorithm, and the study team selected the best-performing algorithm for each condition. Selection was generally based on maximizing PPV and specificity, balanced against face validity and the added complexity of extracting information from unstructured text. We prioritized PPV and specificity over NPV and sensitivity because the algorithms are intended to flag high-risk patients for targeted intervention. To have greater assurance that patients identified by the algorithms as high risk were truly high risk, we chose to minimize false positives at the expense of potentially missing some existing comorbidities. After selecting the best-performing algorithms, we drew 1000 bootstrap resamples (sampling with replacement) to characterize variability in performance under repeated sampling.
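The four performance measures and their bootstrap medians can be sketched as follows. This is an illustration under stated assumptions rather than the study's analysis code (which used R and SAS): it assumes the resampling unit is the patient, so each resample keeps an algorithm flag paired with its chart-review determination, and it assumes that resamples in which a measure is undefined (for example, no algorithm-positive patients, so PPV has a zero denominator) are simply skipped for that measure. Function names are hypothetical.

```python
import math
import random
import statistics

METRICS = ("sensitivity", "specificity", "ppv", "npv")


def performance(pred, gold):
    """Sensitivity, specificity, PPV, and NPV of algorithm flags against chart review."""
    tp = sum(1 for p, g in zip(pred, gold) if p and g)
    fp = sum(1 for p, g in zip(pred, gold) if p and not g)
    fn = sum(1 for p, g in zip(pred, gold) if not p and g)
    tn = sum(1 for p, g in zip(pred, gold) if not p and not g)

    def ratio(num, den):
        return num / den if den else float("nan")  # undefined when denominator is 0

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


def bootstrap_medians(pred, gold, n_resamples=1000, seed=0):
    """Median of each measure over bootstrap resamples of patients (with replacement)."""
    rng = random.Random(seed)
    n = len(pred)
    draws = {m: [] for m in METRICS}
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # resample patients, keeping pairs intact
        result = performance([pred[i] for i in idx], [gold[i] for i in idx])
        for m in METRICS:
            if not math.isnan(result[m]):  # skip resamples where a measure is undefined
                draws[m].append(result[m])
    return {m: statistics.median(v) if v else float("nan") for m, v in draws.items()}
```

Percentiles of the same per-resample lists (rather than only the median) would give the spread shown for each measure in Figure 2.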

This project was approved by the BWH Institutional Review Board. The analyses were conducted with RStudio Version 0.99.441 and SAS 9.3.

Results

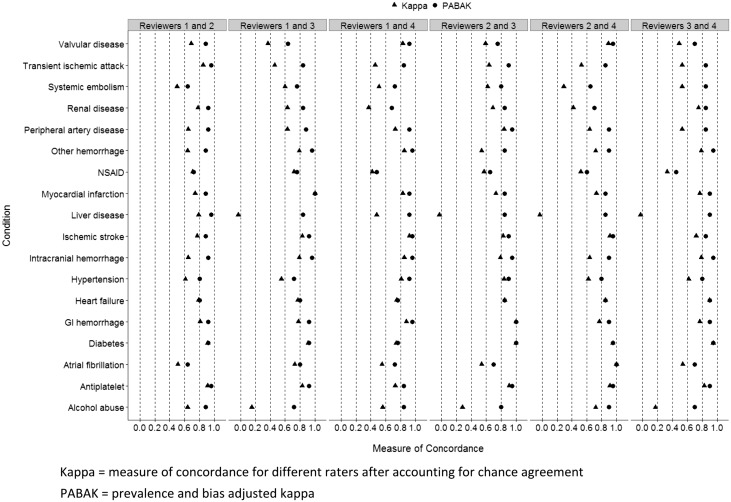

The initial extraction of patient data was based on a relatively broad definition that included patients with any evidence of AF. The gold-standard chart review determined that 85.2% of the patients included in the study had AF. The prevalence of risk factors for stroke or major bleeding ranged from a low of 3.5% (liver disease) to 87.3% (hypertension) (Table 1). Concordance between chart reviewers was generally good, defined as kappa >0.60 (Figure 1). Across all conditions assessed in the charts reviewed independently by pairs of reviewers, the median kappa was 0.72, with an interquartile range of 0.54–0.83. The median prevalence and bias-adjusted kappa was 0.88, with an interquartile range of 0.80–0.92.

Table 1.

Prevalence of Conditions from the Gold-Standard Chart Review

| Condition | Prevalence (N = 480) |
|---|---|
| Atrial fibrillation | 85.2% |
| Alcohol abuse | 7.3% |
| Antiplatelet use | 84.8% |
| Diabetes mellitus | 36.0% |
| Gastrointestinal hemorrhage | 17.1% |
| Heart failure | 41.7% |
| Hypertension | 87.3% |
| Intracranial hemorrhage | 8.8% |
| Ischemic stroke | 23.1% |
| Liver disease | 3.5% |
| NSAID | 30.8% |
| Peripheral artery disease | 14.4% |
| Myocardial infarction | 27.7% |
| Renal disease | 25.4% |
| Systemic embolism | 15.4% |
| Transient ischemic attack | 11.7% |
| Valvular disease | 22.5% |

NSAID, non-steroidal anti-inflammatory drug.

Figure 1.

Concordance between chart reviewers. Reviewer pairs that included reviewer 1 independently reviewed 50 of the same charts; reviewer pairs that did not include reviewer 1 independently reviewed 40 of the same charts. GI, gastrointestinal; Kappa, measure of concordance for different raters after accounting for chance agreement; NSAID, non-steroidal anti-inflammatory drug; PABAK, prevalence and bias-adjusted kappa.

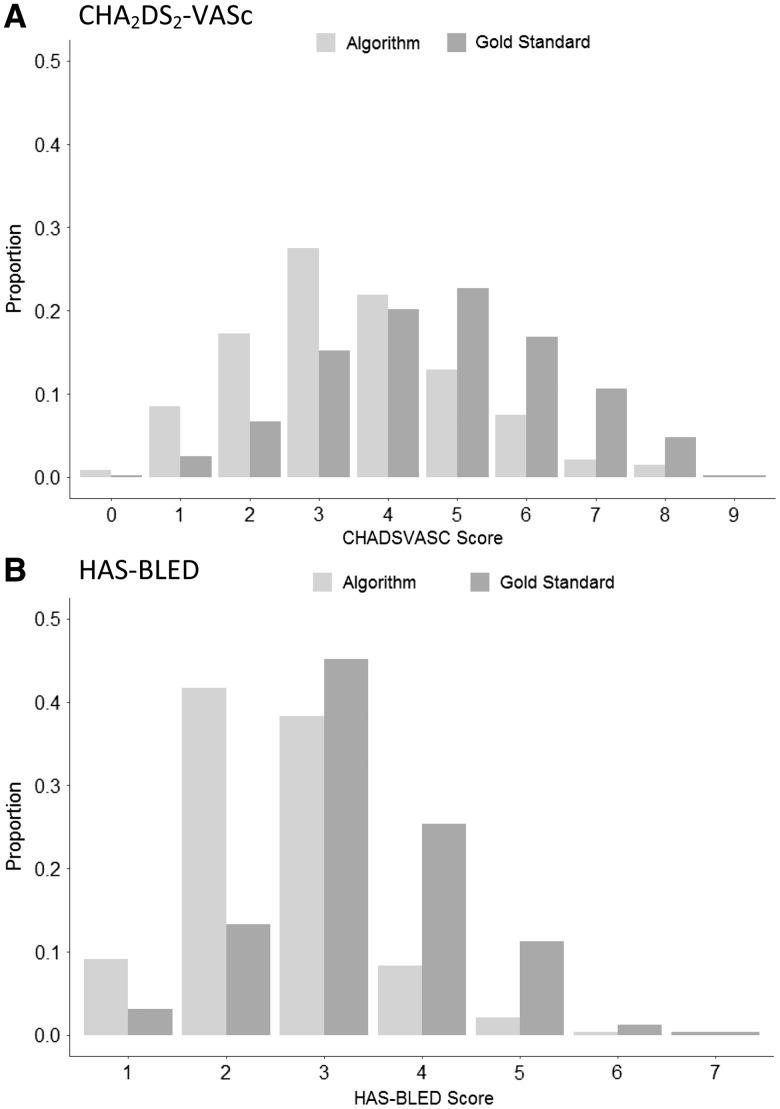

The selected algorithms for capturing conditions from the EHR had a median PPV greater than 0.90 in 1000 bootstrap samples for 11 of 17 conditions (Figure 2 and Supplementary Appendix Table). The remaining conditions had a median PPV between 0.53 and 0.87. Although PPV for some risk factors was poor, we designed the algorithms to identify conditions that could be aggregated into risk scores, and the median PPV for identifying patients with a CHA2DS2-VASc score ≥2 or a HAS-BLED score ≥3 was 1.00 and 0.92, respectively. Specificity was also high for most conditions: 14 of 17 selected algorithms had a median specificity greater than 0.90. This high PPV and specificity came at the cost of sensitivity, which ranged from 0.09 to 0.88. NPV was also sacrificed to maximize PPV; however, 14 of 17 algorithms still had a median NPV greater than 0.75. The selected algorithms, as well as the other candidate algorithms considered, are included in the Supplementary Appendix.

Figure 2.

Sensitivity, specificity, PPV, and NPV of selected algorithms. GI, gastrointestinal; PPV, positive predictive value; NPV, negative predictive value; NSAID, non-steroidal anti-inflammatory drug; Spe, Specificity; Sen, Sensitivity.

In Figure 3, we show the distribution of CHA2DS2-VASc and HAS-BLED scores as calculated based on gold-standard chart reviews as well as the selected algorithms. These figures reflect the high PPV and low sensitivity of the selected algorithms, and show that the algorithms consistently underestimated the gold-standard risk scores. The median difference in CHA2DS2-VASc scores based on the selected algorithms compared to the gold-standard chart reviews was –1 (interquartile range: –2, 0). The median difference in HAS-BLED scores based on the algorithms compared to the gold-standard chart reviews was 0 (interquartile range: –1, 0).
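The summary of score differences above amounts to taking, for each patient, the algorithm-derived score minus the gold-standard score, then reporting the median and quartiles of those differences. The sketch below is illustrative: the function name and example scores are hypothetical, and it uses the `inclusive` quantile method of Python's `statistics` module, which interpolates linearly between order statistics in the same way as R's default `quantile()` type. Other quartile conventions can shift the interquartile range slightly for small samples.

```python
import statistics


def score_difference_summary(algorithm_scores, gold_scores):
    """Median and interquartile range of (algorithm - gold standard) score differences."""
    if len(algorithm_scores) != len(gold_scores) or len(gold_scores) < 2:
        raise ValueError("need at least two paired scores")
    diffs = [a - g for a, g in zip(algorithm_scores, gold_scores)]
    # "inclusive": linear interpolation between order statistics
    q1, _, q3 = statistics.quantiles(diffs, n=4, method="inclusive")
    return statistics.median(diffs), (q1, q3)


# Hypothetical scores for five patients (not study data); negative differences mean
# the algorithm underestimated the gold-standard score.
print(score_difference_summary([2, 3, 4, 4, 5], [4, 4, 5, 4, 5]))
# prints: (-1, (-1.0, 0.0))
```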

Figure 3.

Distribution of selected algorithm versus gold-standard calculated risk scores. (A) CHA2DS2-VASc scores, and (B) HAS-BLED scores. CHA2DS2-VASc score includes: female gender, age 65–74, age ≥75, prior ischemic stroke/transient ischemic attack/systemic embolism, congestive heart failure, hypertension, diabetes, vascular disease (myocardial infarction/peripheral artery disease). HAS-BLED score includes: age >65, hypertension, renal disease, liver disease, prior ischemic stroke/transient ischemic attack, prior bleeds (intracranial or gastrointestinal), NSAID/antiplatelet use, alcohol use disorder. NSAID, non-steroidal anti-inflammatory drug.

Discussion and Conclusion

Adoption rates of EHRs have increased rapidly; however, their potential for improving and measuring care quality has not yet been widely realized. This is partly because widespread use of EHRs is still new, but also because many consider the quality of the data they capture to be suspect. AF carries important risks of morbidity and mortality. We developed and tested algorithms to identify patients with AF and known risk factors for stroke and major bleeding using EHR data. Rule-based algorithms such as these can be used to facilitate targeted interventions for patients with chronic diseases, in this case anticoagulation therapy for AF patients with a high risk of stroke. The same algorithms also estimate the risk of major bleeding, which could be calculated at the point of care to inform decision making. The role of EHRs in improving quality of care is complicated by the sociology of the processes through which clinical data are captured within EHRs; however, we demonstrate that algorithms that combine multiple streams of data within EHRs can quite accurately identify complex patients for intervention and measurement of quality.

Our algorithms used many sources of information available in EHRs, including diagnosis codes, laboratory values, prescriptions, and clinical notes. Most of our selected algorithms were highly effective at identifying true positive cases. Their performance relative to algorithms based solely on problem lists highlights what others have shown regarding the underuse of problem lists in clinical practice and the need to develop more complex rules to identify patient conditions from EHRs.16 However, the performance of these algorithms also makes it clear that risk scores can be assessed effectively from this EHR by drawing on multiple sources of data.

There were several reasons for the lower PPV for a few of the investigated conditions. Systemic embolism and use of NSAIDs had relatively low agreement between chart reviewers, and the lower PPV of the selected algorithms for these conditions reflected both the variability in reviewers’ assessments and the nature of these conditions. Systemic embolism encompasses a very large range of possible outcomes, and patients may have small embolic events of limited or uncertain clinical significance. Because NSAIDs are available over the counter, they are not always found in medication lists or paid claims records, and exposure may be hard to determine, though it can be assessed by evaluating free-text mentions in clinician notes. In contrast, although reviewer assessments were highly concordant for gastrointestinal hemorrhage, the PPV of the selected algorithm was only 0.75. This lower PPV was likely due to the reviewers’ ability to find additional evidence in free-text notes and to assess the severity of bleeding. Some of our candidate algorithms did use natural language processing to find additional evidence of gastrointestinal hemorrhage; however, those algorithms were not selected because their sensitivity was greatly reduced compared with other candidate algorithms. For diabetes mellitus and intracranial hemorrhage, although some candidate algorithms had a PPV greater than 0.90, we selected algorithms that greatly increased sensitivity or NPV at the cost of small decreases in PPV or specificity.

The strengths of our study include the high concordance between our chart reviewers and the use of data from many structured and unstructured fields within the EHR. Beyond their high PPV for identifying individual conditions, these algorithms have immediate utility in a clinical context: together, they capture a patient’s overall risk of stroke or major bleeding via established risk scores such as the CHA2DS2-VASc and HAS-BLED scores. By design, the algorithms were chosen to have a higher PPV at the expense of sensitivity and NPV. This means that although the algorithms may miss some patients who are at high risk of stroke or bleeding, clinicians can be confident that patients identified by the EHR-based algorithms as high risk are truly at high risk. We reviewed a large random sample of 480 patient charts, which we expect to be representative of all patient charts meeting the selection criteria of this study.

Our next step is to test the effectiveness of these algorithms at targeting population health efforts and facilitating informed decision making. We are launching a randomized intervention study that will use these algorithms to identify patients within BWH primary care clinics who should receive anticoagulation therapy because they are at high risk of stroke. Patients who are not treated will be flagged for additional intervention from a specialized care team. Reasons for not giving patients anticoagulation therapy will be recorded to reveal barriers and potential solutions as the intervention evolves within a learning healthcare system.17 Our approach to harnessing the EHR for generating practice-based evidence18 is readily adaptable to other clinical contexts.

The Health Information Technology Policy Committee recommended active maintenance of problem lists in the 2009 “Meaningful Use” guidelines.19 Detailed problem lists can provide a valuable tool for clinicians and researchers to make better evidence-based decisions. The problem lists in BWH EHRs may already be better than those in many other organizations as a result of systematic efforts to improve them.16,20,21 However, in this study, although problem list entries had a high PPV and specificity by themselves, these entries captured fewer than 40% of the conditions that were found by the gold-standard chart reviews. Nevertheless, problem list entries were important components of the algorithms designed to identify conditions. Continued maintenance of problem lists can be supported by suggestions generated by EHR-based algorithms.16 Timely updates may help improve the value of EHR systems. In addition, using multiple approaches to improve problem lists represents an important frontier in informatics, especially because problem lists are foundational to improving and measuring care quality.

Our study has a number of limitations. One is the low sensitivity and NPV of many algorithms. However, for the intended purposes of the algorithms, selecting high-sensitivity algorithms at the expense of PPV and specificity would be inappropriate. Such algorithms would produce many false positive assessments that would decrease clinician trust in the algorithms, cause unnecessary interruptions to workflow, and waste the time and effort of population health management teams. A limitation of the EHR is that it does not perfectly capture all patient conditions. Some conditions are generally under-recorded (eg, alcohol abuse, use of over-the-counter medications such as NSAIDs). Items that are not recorded within the EHR cannot be captured by algorithms or chart reviewers. The EHR data extracted for the RPDR have a few additional limitations. The RPDR captures only a subset of the types of data captured by the underlying EHR system; for example, scheduling of upcoming visits is not included. However, the types of data used by resident physicians in their gold-standard assessment of the conditions under investigation were included in the RPDR extracts we obtained. Because of the 6- to 8-week lag to extract, transform, and load updated data, RPDR data by themselves may be of limited use for studies that require real-time or near-real-time patient information. This study was conducted at a single site within one EHR, and the results might not be generalizable to other sites or EHRs. However, since the study was conducted, BWH has adopted a very broadly used vendor-developed EHR, so the general approach described in this paper should be broadly generalizable in the future.
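The trade-off described above follows from Bayes' rule: at a fixed prevalence, PPV is driven by how many false positives an algorithm produces, which depends on specificity. The short sketch below uses made-up sensitivity, specificity, and prevalence values purely for illustration; they are not results from this study, and the function name is hypothetical.

```python
def ppv(sensitivity, specificity, prevalence):
    """Positive predictive value from Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    if true_pos + false_pos == 0:
        return float("nan")  # the algorithm never flags anyone
    return true_pos / (true_pos + false_pos)


# Hypothetical operating points at a hypothetical 10% prevalence (not study results).
print(round(ppv(0.90, 0.90, 0.10), 2))  # high sensitivity, modest specificity: 0.5
print(round(ppv(0.50, 0.99, 0.10), 2))  # low sensitivity, high specificity: 0.85
```

In this illustration, the high-sensitivity algorithm flags as many false positives as true positives, whereas the high-specificity algorithm misses half of the true cases but most of the patients it flags truly have the condition.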

Management of AF should include shared decision making between patients and their clinicians.22 Patients may choose not to be treated after weighing the benefits and risks of anticoagulant treatment and the effects of the treatment on their quality of life, but it is critical to ensure that guideline-recommended treatment options are presented to patients when appropriate, so that patients’ decisions are appropriately informed. We developed algorithms that use data from EHRs to identify patients with AF who have a strong indication for anticoagulation therapy. Algorithms such as these can be built into EHR systems to target population health management efforts toward complex patients with the greatest need for intervention and to facilitate informed decision making. These efforts would directly improve care quality and would enable the measurement of care quality for this important condition and many others.

Contributors

All authors contributed to the study design. S.V.W., J.R.R., and Y.J. analyzed the data. M.A.F. and D.W.B. contributed to the evaluation of the clinical aspects of the study and data interpretation. S.V.W., J.R.R., and Y.J. wrote the manuscript. All authors contributed to the review and revisions of the manuscript. All the authors have approved the final version of the manuscript.

Funding

S.V.W. was supported by grant number R00HS022193 from the Agency for Healthcare Research and Quality (AHRQ). M.A.F. is the Director of the National Resource Center for Academic Detailing, which is supported by Grant No. R18HS023236 from AHRQ. The content is solely the responsibility of the authors and does not necessarily represent the official views of the AHRQ.

Competing interests

S.V.W. is a paid consultant to Aetion, Inc., a software company.

Supplementary Material

ACKNOWLEDGMENTS

This study was funded by a grant from the Agency for Healthcare Research and Quality (AHRQ). This study used data from the Partners HealthCare System RPDR. The authors thank Shawn Murphy, Henry Chueh, and the Partners HealthCare RPDR group for facilitating the use of their database. The authors thank Kathleen Degnan MD, Brenton Fargnoli MD, Omar Badri MD, and Neelam Shah MD for their assistance with chart reviews.

REFERENCES

1. Bungard TJ, Ghali WA, Teo KK, McAlister FA, Tsuyuki RT. Why do patients with atrial fibrillation not receive warfarin? Arch Intern Med 2000;160(1):41–46.

2. Ogilvie IM, Newton N, Welner SA, Cowell W, Lip GY. Underuse of oral anticoagulants in atrial fibrillation: a systematic review. Am J Med 2010;123(7):638–645, e634.

3. Camm AJ, Lip GY, De Caterina R, et al. 2012 focused update of the ESC Guidelines for the management of atrial fibrillation: an update of the 2010 ESC Guidelines for the management of atrial fibrillation. Developed with the special contribution of the European Heart Rhythm Association. Eur Heart J 2012;33(21):2719–2747.

4. McDonald KM, Sundaram V, Bravata DM, Lewis R, Lin N, Kraft SA, McKinnon M, Paguntalan H, Owens DK. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies: Volume 7 – Care Coordination. Agency for Healthcare Research and Quality, Rockville, MD; 2007.

5. Samal L, Wright A, Healey MJ, Linder JA, Bates DW. Meaningful use and quality of care. JAMA Intern Med 2014;174(6):997–998.

6. Kern LM, Edwards A, Kaushal R, Investigators wtH. The meaningful use of electronic health records and health care quality. Am J Med Qual 2014;2014:512–519.

7. Lip GYH, Nieuwlaat R, Pisters R, Lane DA, Crijns HJGM. Refining clinical risk stratification for predicting stroke and thromboembolism in atrial fibrillation using a novel risk factor-based approach: The Euro Heart Survey on Atrial Fibrillation. Chest 2010;137(2):263–272.

8. Pisters R, Lane DA, Nieuwlaat R, de Vos CB, Crijns HJGM, Lip GYH. A novel user-friendly score (HAS-BLED) to assess 1-year risk of major bleeding in patients with atrial fibrillation: The Euro Heart Survey. Chest 2010;138(5):1093–1100.

9. Guo Y, Apostolakis S, Blann AD, et al. Validation of contemporary stroke and bleeding risk stratification scores in non-anticoagulated Chinese patients with atrial fibrillation. Int J Cardiol 2013;168(2):904–909.

10. Lip GYH. Stroke and bleeding risk assessment in atrial fibrillation: when, how, and why? Eur Heart J 2013;34(14):1041–1049.

11. Okumura K, Inoue H, Atarashi H, Yamashita T, Tomita H, Origasa H. Validation of CHA(2)DS(2)-VASc and HAS-BLED scores in Japanese patients with nonvalvular atrial fibrillation: an analysis of the J-RHYTHM Registry. Circ J 2014;78(7):1593–1599.

12. Murphy SN, Chueh HC. A security architecture for query tools used to access large biomedical databases. Proceedings/AMIA… Annual Symposium. AMIA Symposium 2002:552–556.

13. QPID Health. http://www.qpidhealth.com. Accessed March 28, 2016.

14. Byrt T, Bishop J, Carlin JB. Bias, prevalence and kappa. J Clin Epidemiol 1993;46(5):423–429.

15. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) – a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009;42(2):377–381.

16. Wright A, Pang J, Feblowitz JC, et al. A method and knowledge base for automated inference of patient problems from structured data in an electronic medical record. J Am Med Inform Assoc 2011;18(6):859–867.

17. Aisner D, Olsen L, McGinnis JM. The Learning Healthcare System: Workshop Summary (IOM Roundtable on Evidence-Based Medicine). National Academies Press, Washington, DC; 2007.

18. Horn SD, Gassaway J. Practice-based evidence study design for comparative effectiveness research. Med Care 2007;45(10):S50–S57.

19. McCoy AB, Thomas EJ, Krousel-Wood M, Sittig DF. Clinical decision support alert appropriateness: a review and proposal for improvement. Ochsner J 2014;14(2):195–202.

20. Wright A, McCoy AB, Hickman TT, et al. Problem list completeness in electronic health records: a multi-site study and assessment of success factors. Int J Med Inform 2015;84(10):784–790.

21. Wright A, Pang J, Feblowitz JC, et al. Improving completeness of electronic problem lists through clinical decision support: a randomized, controlled trial. J Am Med Inform Assoc 2012;19(4):555–561.

22. Seaburg L, Hess EP, Coylewright M, Ting HH, McLeod CJ, Montori VM. Shared decision making in atrial fibrillation: where we are and where we should be going. Circulation 2014;129(6):704–710.
