Abstract

Objective: To report on the state of the science of clinical decision support (CDS) for hospital bedside nurses.

Materials and Methods: We performed an integrative review of qualitative and quantitative peer-reviewed original research studies using a structured search of PubMed, Embase, Cumulative Index to Nursing and Allied Health Literature (CINAHL), Scopus, Web of Science, and IEEE Xplore (Institute of Electrical and Electronics Engineers Xplore Digital Library). We included articles that reported on CDS targeting bedside nurses and applied exclusion rules in stages to titles, abstracts, and full articles. We extracted research design and methods, CDS purpose, electronic health record integration, usability, and process and patient outcomes.

Results: Our search yielded 3157 articles. After removing duplicates and applying exclusion rules, 28 articles met the inclusion criteria. The majority of studies were single-site, descriptive or qualitative (43%) or quasi-experimental (36%). There was only 1 randomized controlled trial. The most common CDS purposes were supporting diagnostic decision-making (36%), guideline adherence (32%), medication management (29%), and situational awareness (25%). All the studies that included process outcomes (7) or usability outcomes (4) and also had analytic procedures to detect changes in outcomes demonstrated statistically significant improvements. Three of 4 studies that included patient outcomes and also had analytic procedures to detect change showed statistically significant improvements. No negative effects of CDS were found on process, usability, or patient outcomes.

Discussion and Conclusions: Clinical decision support systems targeting bedside nurses have positive effects on outcomes and hold promise for improving care quality; however, this research lags behind studies of CDS targeting medical decision-making in both volume and level of evidence.

Keywords: computerized clinical decision support, registered nurse, nursing informatics, decision-making, review

BACKGROUND AND SIGNIFICANCE

In our fast-paced and error-laden health care system,1 with a rapidly changing landscape of health information technology, clinicians rely on a variety of information sources to guide decisions. The design and adoption of clinical decision support (CDS) integrated within the electronic health record (EHR) have been heralded as a method to improve decision-making and thereby ensure care quality and safety.2,3 Teich and colleagues4 broadly define CDS as “providing clinicians (nurses) with computer-generated clinical knowledge and patient related information which is intelligently filtered and presented at appropriate times to enhance patient care.” Although CDS is a mature intervention, introduced 40 years ago by Shortliffe and colleagues,5 how best to optimize it for clinical workflow and outcomes is still being refined.

Several reviews of CDS targeting medical decision-making highlight the promise of CDS for improving care quality and cost. For example, Bright and colleagues6 conducted a systematic review of 148 randomized controlled trials. When the effect of CDS was assessed, reduced risk of morbidity was identified (relative risk, 0.88 [95% CI, 0.80-0.96]), although effects on mortality were inconclusive (n = 6; odds ratio [OR], 0.79 [95% CI, 0.54-1.15]). Of the studies reviewed, 86% addressed processes of care, 20% addressed clinical outcomes, and 15% addressed cost impacts. The strongest effects of CDS were noted for improvement in performance of preventive services (n = 25; OR, 1.42 [95% CI, 1.27-1.58]), better ordering of clinical tests (n = 20; OR, 1.72 [95% CI, 1.47-2.00]), and appropriateness of prescriptions (n = 46; OR, 1.57 [95% CI, 1.35-1.82]). Another review reported that 70.5% of studies showed an impact on an explicit financial outcome or financial proxy measure.7 Such processes best reflect the functions of a clinician who directs care (eg, physician or advanced practitioner) rather than one who supports, coordinates, delivers, and monitors care (eg, registered nurse [RN]). Kawamoto and colleagues8 found that the most effective CDS has 4 components: it is designed in line with workflow (P < .001), offers recommendations rather than just assessments (P = .019), provides guidance at the time and location when decisions are made (P = .026), and is computer based (P = .029). Of systems that had all 4 components, 94% showed benefit.8

As direct providers of much of in-hospital care, nurses make multiple decisions that can be supported by CDS. For example, nurses recognize patient deterioration (which requires situational awareness) and determine which patient conditions have applicable clinical guidelines, which patient receives care first (often as triage in the emergency department), which nursing interventions are appropriate, and how to promote the patient-centeredness of medical interventions.
Recognition of the role of CDS in RN decision-making began in 2008 when Staggers et al.9 reviewed computerized provider order entry–integrated CDS used by nurses. They noted that the mechanics of providing decision support for nursing in a computerized provider order entry system had not been well studied to date and concluded that nurses are often viewed as data collectors, not decision-makers. The same year, Anderson and Willson10 published a metasynthesis on CDS to support nursing (bedside RN and advanced practice nurses such as nurse practitioners) evidence-based practice. Based on the 6 included studies, they concluded that nurses are receptive to CDS, but barriers include poor administrative support, time to learn and implement, and EHR deficiencies that must be overcome to achieve effectiveness. More recently, Piscotty and Kalisch11 conducted a nonsystematic narrative review that found that alignment with workflow, nurse characteristics (eg, age, experience), and organizational characteristics (eg, vendor support) impacted nurses’ use of CDS.

Across the published reviews of prescribers’ experiences6,8,12 and of nursing experience broadly,9–11 we identified a need to understand (a) the use of CDS for the role of RNs as decision-makers, particularly in acute care settings, and (b) the impact of nurse-targeted CDS on outcomes. To address this need, we conducted an integrative review of nursing CDS studies.

OBJECTIVE

The objective of this review is to report on the state of the science of CDS targeting direct-care bedside RN decision-making in terms of methodological features, CDS characteristics, usability, and process and patient outcomes.

METHODS

We took an integrative review approach to synthesize the diverse methods and findings across studies, following Whittemore and Knafl’s13 methodology. The advantage of integrative reviews over other review methods is the ability to synthesize, in a rigorous and well-defined manner, literature that uses varying methodologies such as quasi-experimental, experimental, and qualitative designs. Including both qualitative and quantitative designs allows us to broadly cover design, testing, and evaluation, and is particularly important for CDS research, as many studies take a user-centered, qualitative design approach in the early stages of research.

Data sources

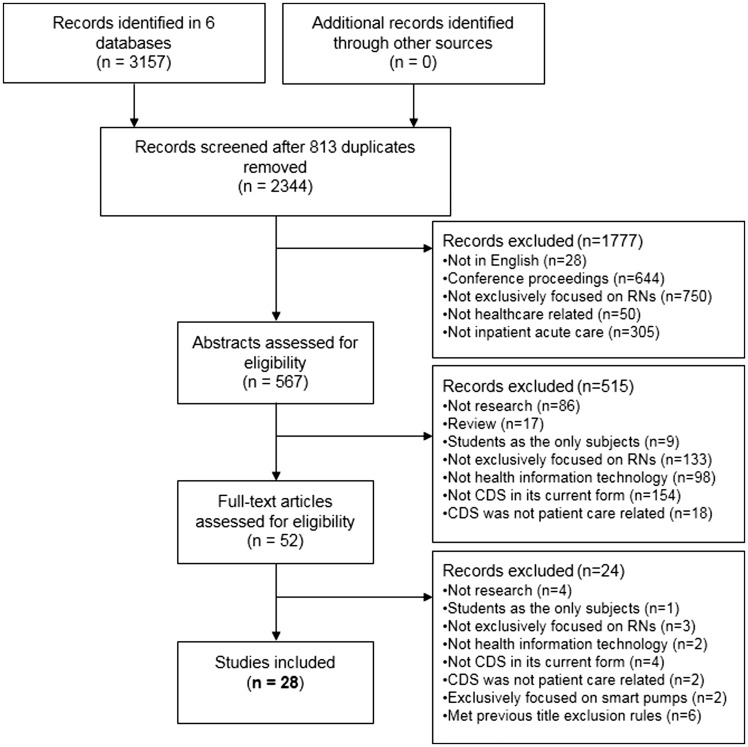

Literature searches of original research articles published between 2006 and 2013 were conducted within the following databases: CINAHL Plus with Full Text, Embase, IEEE Xplore, MEDLINE via PubMed, Scopus, and Web of Science. Subject heading and keyword searches were done by a health sciences librarian (R.R.) using variations of the words computer-assisted decision making, computers, clinical decision support systems, expert systems, information systems, hospital, nursing informatics, nursing homes, nursing staff, and reminder systems, and derivatives of the word nurse (see Supplemental File 1). These searches yielded 3157 articles. After 813 duplicates were removed (see Figure 1), the remaining 2344 articles were considered for inclusion.

Figure 1.

Records screened and included

Study selection and exclusion criteria

Following PRISMA’s guidelines,14 we identified, screened, and selected papers based on prespecified criteria in 3 stages, excluding 1777 records at the title and citation stage, 515 at the abstract stage, and 24 at the full-text stage (see Figure 1). Title and citation exclusions included not English (28), conference proceeding (644), not exclusively RN-focused (750), not health care (50), and not inpatient acute care (305). We reviewed the abstracts of any articles that did not fit the title exclusion categories. Abstract exclusions included not research (86), review papers (17), students as only subjects (9), not exclusively RN-focused (133), not health information technology (98), not currently CDS (154), and CDS not patient care–related (18). We reviewed the full articles of any abstracts that did not fit the abstract exclusion categories. Full article exclusions included not research (4), students as only subjects (1), not exclusively RN-focused (3), not about health information technology (2), not CDS (4), CDS not patient care–related (2), focus on smart pumps (2), and met previous title exclusion rules (6). Full articles that met none of these exclusion criteria (28) were included in the analysis.
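As an arithmetic check on the flow described above, the following minimal Python sketch chains the reported counts: 3157 records minus 813 duplicates leaves 2344, and subtracting the title (1777), abstract (515), and full-text (24) exclusions leaves the 28 included studies. The dictionary layout and the `screening_flow` helper are hypothetical constructs for illustration; they are not part of PRISMA or of any screening tool used in this review.

```python
# Hypothetical sketch: reproduce the screening flow from the counts reported
# in the text. Names and data layout are illustrative only.

IDENTIFIED = 3157
DUPLICATES = 813

EXCLUSIONS = {
    "title/citation": {
        "not English": 28,
        "conference proceeding": 644,
        "not exclusively RN-focused": 750,
        "not health care": 50,
        "not inpatient acute care": 305,
    },
    "abstract": {
        "not research": 86,
        "review paper": 17,
        "students as only subjects": 9,
        "not exclusively RN-focused": 133,
        "not health information technology": 98,
        "not currently CDS": 154,
        "CDS not patient care-related": 18,
    },
    "full text": {
        "not research": 4,
        "students as only subjects": 1,
        "not exclusively RN-focused": 3,
        "not about health information technology": 2,
        "not CDS": 4,
        "CDS not patient care-related": 2,
        "focus on smart pumps": 2,
        "met previous title exclusion rules": 6,
    },
}


def screening_flow(identified: int, duplicates: int, exclusions: dict) -> list[tuple[str, int, int]]:
    """Return (stage, excluded, remaining) rows for a PRISMA-style flow."""
    remaining = identified - duplicates  # records left after de-duplication
    rows = [("after de-duplication", duplicates, remaining)]
    for stage, reasons in exclusions.items():
        excluded = sum(reasons.values())  # total exclusions at this stage
        remaining -= excluded
        rows.append((stage, excluded, remaining))
    return rows


if __name__ == "__main__":
    for stage, excluded, remaining in screening_flow(IDENTIFIED, DUPLICATES, EXCLUSIONS):
        print(f"{stage:22s} excluded={excluded:5d} remaining={remaining:5d}")
```

Keeping the exclusion reasons as separate entries, rather than only stage totals, makes it easy to confirm that each stage total in the text equals the sum of its listed reasons.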

Each round of exclusion (title, abstract, and full article) was first applied by multiple authors to a sample of 5–10% of the articles, and disagreements were resolved by discussion. Multiple assessors were used for 3–4 rounds until interrater agreement of ≥89% was established for each step.
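The review reports an agreement threshold of ≥89% but does not name the agreement statistic. The sketch below assumes simple percent agreement between 2 screeners (an assumption for illustration, not the authors' documented method) and shows how a calibration round would be checked against that threshold. The function names and the example screening calls are hypothetical.

```python
# Hypothetical sketch: simple percent agreement between two screeners.
# ASSUMPTION: the review's ">= 89%" is treated here as simple percent
# agreement; the paper does not state which statistic was used.

THRESHOLD = 0.89  # agreement level reported in the review


def percent_agreement(rater_a: list[str], rater_b: list[str]) -> float:
    """Share of items on which two raters made the same include/exclude call."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same, non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def ready_for_independent_screening(rater_a: list[str], rater_b: list[str]) -> bool:
    """True once a calibration round meets the agreement threshold."""
    return percent_agreement(rater_a, rater_b) >= THRESHOLD


if __name__ == "__main__":
    # Hypothetical calibration round of 10 titles (not data from the review).
    a = ["exclude"] * 7 + ["include"] * 3
    b = ["exclude"] * 6 + ["include"] * 4
    print(percent_agreement(a, b))                # 0.9
    print(ready_for_independent_screening(a, b))  # True
```

Chance-corrected statistics such as Cohen's kappa would give a lower value for the same data; which one a given review uses should be stated explicitly.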

Data extraction and analysis

We used 2 methods to develop categories for extraction (see Supplemental File 2). First, we used Polit and Beck’s Levels of Evidence,15 an evidence hierarchy ranging from single descriptive studies to systematic reviews of randomized controlled trials (RCTs) that is used frequently in systematic and integrative reviews.16–18 Second, we developed other categories (eg, CDS purpose) based on author expertise in nursing CDS research (eg, support guideline adherence), tested the categories by abstracting from a subsample of articles, and added new labels inductively19 when existing categories did not fit, until no additional categories emerged.

Three authors (K.D.L., S.G., and J.A.) entered data from a subsample of articles into a Google Docs™ form with defined categories and assessed interrater agreement in rounds. Once agreement reached ≥89%, the authors worked independently to extract information into the data form. The data form was then exported into an Excel spreadsheet for analysis. We computed simple counts for categorical variables. Narrative variables were grouped thematically by 1 author (K.D.L.), examined independently by 2 authors (S.G. and J.A.), and discussed among all 3 to achieve consensus.

Quality appraisal

Quality appraisal of articles is common in systematic reviews but is challenging in integrative reviews, because available appraisal tools are specific to particular study designs and are difficult to apply across multiple designs. To address this challenge, we used a quality appraisal tool for integrative reviews.20,21 The tool, which has been used in other reviews,20 measures quality and study rigor across 4 criteria: study type, sampling method, data collection method detail, and analysis. The possible score range for this scoring method is 4 (qualitative design, sampling and data collection not explained, and narrative analysis) to 13 (quantitative experimental design, random sampling, data collection explained, and inferential statistics).20 As with the previous stages of the review, authors independently scored the articles in rounds until ≥89% agreement was achieved.
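Written as a formula, the score is the sum of the 4 criterion scores. The symbols below are our own shorthand (T = study type, P = sampling, M = method detail, A = analysis), with component ranges taken from the Table 1 footnotes; the minimum and maximum reproduce the 4–13 range stated above.

```latex
S = T + P + M + A, \qquad
T \in \{3,4,5,6\},\; P \in \{0,1,2,3\},\; M \in \{0,1\},\; A \in \{1,2,3\}

S_{\min} = 3 + 0 + 0 + 1 = 4, \qquad S_{\max} = 6 + 3 + 1 + 3 = 13
```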

RESULTS

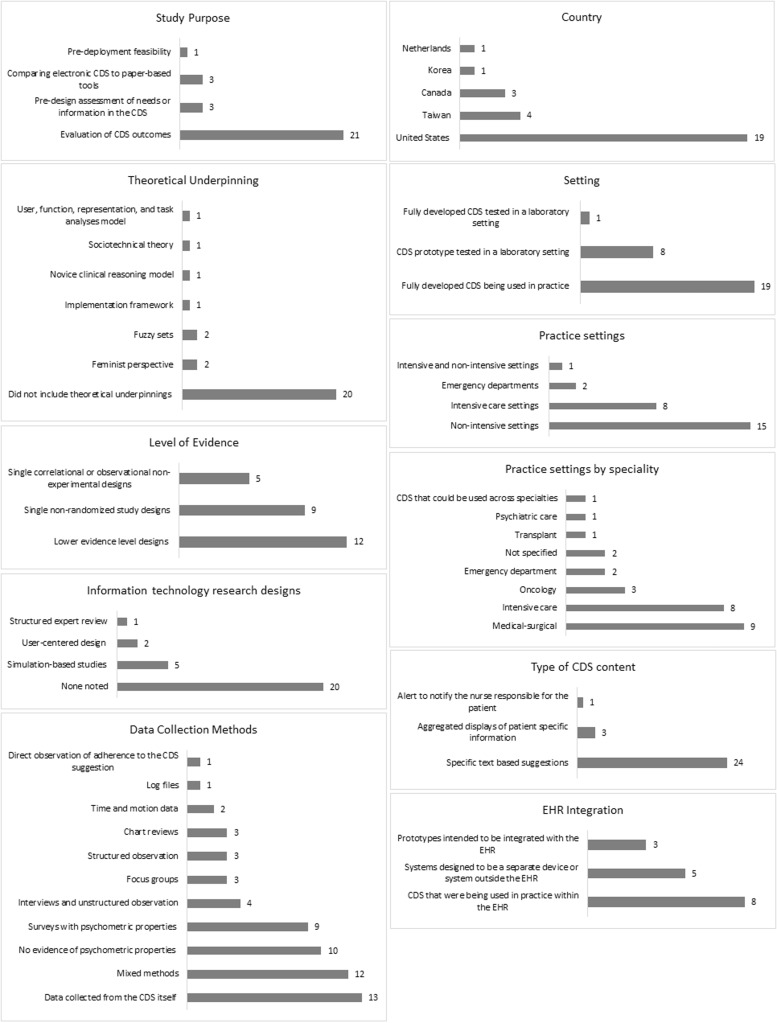

We identified 28 studies that met the criteria for inclusion. We report on the current state of science related to CDS targeted for RN decisions with regard to the methodological features of the research, characteristics of the CDS studied, and CDS outcomes divided into usability and process and patient outcomes (see Supplemental File 3). Methodological features and CDS characteristics are summarized in Figure 2.

Figure 2.

Methodological features and CDS characteristics

Methodological features

Study purpose and theoretical underpinning

The purpose of the included studies broke out into 4 broad areas: predesign needs assessment or information to validate the knowledge in CDS,22–24 predeployment feasibility,25 comparison to paper-based tools,26–28 and evaluation (acceptance, usability, accuracy, and patient and process outcomes).29–49

Of the 28 articles, a majority (20) did not include theoretical underpinnings. The theories and conceptual models used by the remaining 8 studies included diffusion of innovation,29 feminist perspective,23,30 fuzzy sets,23,31 implementation framework,32 novice clinical reasoning model,22 sociotechnical theory,33 and user, function, representation, and task analysis model.34

Study design and level of evidence

We identified 1 RCT35 as the highest level of evidence of the included studies, followed by (in decreasing levels of evidence) 9 single nonrandomized study designs,25,26,29,33,36–40 5 single correlational or observational nonexperimental designs,23,27,30,31,41 and 12 lower evidence–level designs (single simple descriptive designs that do not include inferential statistics or qualitative studies).22,24,28,32,34,42–48 In addition, we coded studies using methods often used in information technology research:50 (1) user-centered design (multiple rounds of data collection with data-based iterative improvement of the technology between rounds), applied in 2 studies,23,43 (2) simulation-based studies (includes clinical scenarios, role playing, and/or realistic simulated clinical environments), used by 5 studies,22,27,34,38,49 and (3) structured expert review (eg, heuristic review), reported in only 1 study.35

Data collection methods

A variety of data-collection methods were used, with almost half the studies using mixed methods.22,25–27,30–34,39,40,43,49 Surveys were commonly used, both with23,25,27,30,31,33,38,39,49 and without28,30–32,37,40,43,48,49,51 evidence of psychometric properties. The next most common method (13) was data collected from the CDS itself (eg, decision overrides).22,24–28,31–35,40,44 Four studies used interviews and unstructured observation,34,36,41,52 3 studies used focus groups,22,43,47 3 studies used structured observation,26,33,37 3 studies used chart reviews,26,39,44–46 2 studies used time and motion data,27,37 1 study used log files,48 and 1 conducted direct observation of adherence to the CDS suggestion.42

Quality of studies

The quality scores ranged from 5 to 13 (mean 9.5, SD = 2). The most common sampling method was convenience (14), 9 had either purposive, case matching, or random sampling, and 5 did not provide any explanation of sampling. All authors reported information about data-collection methods and tools. Sixteen of the studies reported inferential statistics, 8 used descriptive, and 4 used narrative as their highest level of analysis (see Table 1).

Table 1.

Quality scoring of included studies

| First author | Study type (a) | Sampling (b) | Method detail (c) | Analysis (d) | Score |
|---|---|---|---|---|---|
| Dumont, 2012 | 6 | 3 | 1 | 3 | 13 |
| Okon, 2009 | 6 | 3 | 1 | 3 | 13 |
| Sawyer, 2011 | 6 | 3 | 1 | 3 | 13 |
| Hoekstra, 2010 | 5 | 3 | 1 | 3 | 12 |
| Anders, 2012 | 6 | 1 | 1 | 3 | 11 |
| Cho, 2010 | 6 | 1 | 1 | 3 | 11 |
| Effken, 2008 | 6 | 1 | 1 | 3 | 11 |
| Fick, 2011 | 5 | 2 | 1 | 3 | 11 |
| Lee, 2010 | 6 | 1 | 1 | 3 | 11 |
| Ng, 2011 | 4 | 3 | 1 | 3 | 11 |
| Chin, 2006 | 5 | 2 | 1 | 2 | 10 |
| Im, 2011 | 5 | 1 | 1 | 3 | 10 |
| Lyerla, 2010 | 6 | 0 | 1 | 3 | 10 |
| Yeh, 2011 | 6 | 1 | 1 | 2 | 10 |
| Alvey, 2012 | 4 | 1 | 1 | 3 | 9 |
| DiPietro, 2008 | 6 | 1 | 1 | 1 | 9 |
| Dong, 2006 | 4 | 1 | 1 | 3 | 9 |
| Im, 2006 (CIN article) | 4 | 1 | 1 | 3 | 9 |
| Sward, 2008 | 3 | 3 | 1 | 2 | 9 |
| Im, 2006 (ONF article) | 4 | 1 | 1 | 2 | 8 |
| Narasimhadevara, 2008 | 5 | 0 | 1 | 2 | 8 |
| Polen, 2009 | 4 | 0 | 1 | 3 | 8 |
| Tseng, 2012 | 4 | 1 | 1 | 2 | 8 |
| Yuan, 2013 | 5 | 0 | 1 | 2 | 8 |
| Lee, 2006 | 3 | 2 | 1 | 1 | 7 |
| O’Neil, 2006 | 3 | 1 | 1 | 2 | 7 |
| Sidebottom, 2012 | 3 | 1 | 1 | 1 | 6 |
| Campion, 2011 | 3 | 0 | 1 | 1 | 5 |
| Range | 3–6 | 0–3 | 1–1 | 1–3 | 5–13 |
| Mean | 4.75 | 1.36 | 1.00 | 2.43 | 9.54 |

(a) Study design scores: 3 = qualitative design; 4 = quantitative descriptive design; 5 = mixed qualitative and quantitative descriptive; 6 = quantitative experimental and quasi-experimental.

(b) Sampling (for primary study aim): 0 = not explained; 1 = convenience; 2 = purposive or case matching/cohort; 3 = random or 100%.

(c) Method detail: 1 = methods and tools; 0 = not explained.

(d) Analysis (highest level reported): 1 = narrative; 2 = descriptive statistics; 3 = inferential statistics.
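The summary rows of Table 1 and the sampling and analysis tallies reported in the text can be recomputed directly from the component scores. The following is a minimal Python sketch; the study labels are abbreviated from the table and the helper names are hypothetical. It recovers the column means (4.75, 1.36, 1.00, 2.43), a total mean of 9.54 with a sample SD of roughly 2, 14 convenience samples, and 16 studies with inferential statistics.

```python
from collections import Counter
from statistics import mean, stdev

# Component scores per study, copied from Table 1:
# (study type, sampling, method detail, analysis)
TABLE_1 = {
    "Dumont, 2012": (6, 3, 1, 3),
    "Okon, 2009": (6, 3, 1, 3),
    "Sawyer, 2011": (6, 3, 1, 3),
    "Hoekstra, 2010": (5, 3, 1, 3),
    "Anders, 2012": (6, 1, 1, 3),
    "Cho, 2010": (6, 1, 1, 3),
    "Effken, 2008": (6, 1, 1, 3),
    "Fick, 2011": (5, 2, 1, 3),
    "Lee, 2010": (6, 1, 1, 3),
    "Ng, 2011": (4, 3, 1, 3),
    "Chin, 2006": (5, 2, 1, 2),
    "Im, 2011": (5, 1, 1, 3),
    "Lyerla, 2010": (6, 0, 1, 3),
    "Yeh, 2011": (6, 1, 1, 2),
    "Alvey, 2012": (4, 1, 1, 3),
    "DiPietro, 2008": (6, 1, 1, 1),
    "Dong, 2006": (4, 1, 1, 3),
    "Im, 2006 (CIN)": (4, 1, 1, 3),
    "Sward, 2008": (3, 3, 1, 2),
    "Im, 2006 (ONF)": (4, 1, 1, 2),
    "Narasimhadevara, 2008": (5, 0, 1, 2),
    "Polen, 2009": (4, 0, 1, 3),
    "Tseng, 2012": (4, 1, 1, 2),
    "Yuan, 2013": (5, 0, 1, 2),
    "Lee, 2006": (3, 2, 1, 1),
    "O'Neil, 2006": (3, 1, 1, 2),
    "Sidebottom, 2012": (3, 1, 1, 1),
    "Campion, 2011": (3, 0, 1, 1),
}


def totals(table: dict[str, tuple[int, int, int, int]]) -> dict[str, int]:
    """Total quality score = sum of the 4 component scores."""
    return {study: sum(parts) for study, parts in table.items()}


def column_means(table: dict[str, tuple[int, int, int, int]]) -> list[float]:
    """Mean of each component column, rounded to 2 decimals as in the table."""
    return [round(mean(column), 2) for column in zip(*table.values())]


if __name__ == "__main__":
    scores = list(totals(TABLE_1).values())
    print("component means:", column_means(TABLE_1))
    print(f"total: mean {mean(scores):.2f}, SD {stdev(scores):.2f}, range {min(scores)}-{max(scores)}")
    print("sampling codes:", Counter(parts[1] for parts in TABLE_1.values()))
    print("analysis codes:", Counter(parts[3] for parts in TABLE_1.values()))
```

The sketch uses the sample SD (`stdev`); the text does not say whether a sample or population SD was reported, but both round to 2.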

CDS characteristics

Study settings and samples

The settings and samples yielded important information about the characteristics of the CDS. The studies were conducted in 5 countries, with the majority (19) in the United States,22–25,27,30–37,39,42,45–47,49 followed by 4 in Taiwan,28,41,44,48 3 in Canada,26,29,44 and 1 each in Korea38 and the Netherlands.40 A majority of studies (19) reported on CDS that was fully developed and tested as it was being used in practice, 8 studied a CDS prototype that was tested in a laboratory-type setting,22,27,33–35,37,38,49 and 1 tested CDS that was fully developed but tested in a laboratory/lab-like setting.24 A majority (15) were designed for nonintensive settings (eg, medical-surgical units),22,23,25,28–31,34,37,38,41,43,45,46,48 8 for intensive care settings,27,32,33,36,39,40,42,49 2 for emergency departments, and 1 for both intensive and nonintensive settings.24 The nurse samples involved in the CDS evaluation included medical-surgical (9),22,25,28,34,37,38,41,46 intensive care (8),27,32,33,36,39,40,49 oncology (3, authored by 1 research team),23,26,30,31,44,53 emergency department (2),26,44 transplant (1),43,48 and psychiatric care (1).25 Two studies did not specify the nursing population,35,47 and only 1 study (a nurse-specific drug guide)24 developed a CDS system that could be used across specialties.

CDS purpose and content

The CDS systems were designed to address a variety of purposes, with several of them serving more than 1 purpose. The most common purpose was nursing diagnostic support (10),23,25,29–31,37,38,41,47,48 followed by supporting guideline adherence (9),22,28–30,35,37,45,47,54 medication management (8),24,27,29,32,36,39,40,47 improving situational awareness (7),28,33,34,43,46,47,49 triage (2),26,44 and non-medication–based nursing interventions (eg, calming techniques, memory support) (2).45,48 We also examined the type of CDS content. A majority (24) included specific text-based suggestions, 3 included aggregated displays of patient-specific information,33,43,49 and 1 was an alert prompting the charge nurse to notify the nurse responsible for the patient.46
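The percentages in the Abstract are these purpose counts expressed as shares of the 28 included studies. The following minimal Python sketch (hypothetical names) makes the conversion explicit; because several systems served more than 1 purpose, the shares are not expected to sum to 100%.

```python
# Hypothetical sketch: convert CDS-purpose counts into whole-number shares of
# the 28 included studies. Counts are taken from the paragraph above.

N_STUDIES = 28

PURPOSE_COUNTS = {
    "nursing diagnostic support": 10,
    "guideline adherence": 9,
    "medication management": 8,
    "situational awareness": 7,
    "triage": 2,
    "non-medication nursing interventions": 2,
}


def purpose_shares(counts: dict[str, int], n: int) -> dict[str, int]:
    """Whole-number percentage of studies addressing each purpose."""
    return {purpose: round(100 * k / n) for purpose, k in counts.items()}


if __name__ == "__main__":
    for purpose, share in purpose_shares(PURPOSE_COUNTS, N_STUDIES).items():
        print(f"{purpose}: {share}%")
    # Multi-purpose systems are counted once per purpose, so the counts
    # (38 in total) exceed the number of studies (28).
    print("purpose mentions:", sum(PURPOSE_COUNTS.values()))
```

This reproduces the 36%, 32%, 29%, and 25% figures reported in the Abstract for the 4 most common purposes.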

EHR integration

Approximately half the studies (15) provided enough detail to determine the degree of integration of CDS with the EHR. Eight studies reported on CDS systems that were being used in practice within the EHR,25,36,40–42,45–47 3 were prototypes intended to be integrated with the EHR,27,33,49 and 5 were designed to be a separate device (eg, personal digital assistant) or system outside the EHR.23,29–31,37

Data sources underlying CDS content

We further categorized data sources based on whether the CDS: (1) uses real-time patient-specific information that is pulled in automatically from the EHR, (2) uses real-time patient-specific information that nurses must input into the tool, (3) provides non-patient-specific general nursing advice analogous to a handbook or published set of guidelines, or (4) delivers patient education materials based on nurse input of patient data. Seven studies used real-time patient-specific data that is pulled in automatically from the EHR.25,33,40,45–47,49 Half the CDS tool studies (14) required nurses to input data,23,27–29,31,32,34,36–39,42–44 6 offered general nonspecific patient advice,22,24,26,30,35,41 and 1 tailored patient education based on input of patient data by nurses.48

CDS outcomes

Process outcomes

Process outcomes are components of the decision-making process that have an effect on clinical outcomes. All but 6 studies reported process outcomes,23,25,30,39–41 with many studying more than 1. Ten studies measured situational awareness (ie, comprehension of meaning of variables that allow prediction of a future event),22,24,28,33,38,42,43,46,47,49 8 studied CDS information accuracy (ie, the system provides accurate information such as correct insulin dose),24,31,32,36,44,45,47,48 7 studied subject accuracy (ie, the subject accurately interprets the CDS message or intent),22,26,32,35,44,48,49 6 studied workload (eg, cognitive workload),28,29,33,34,36,49 6 studied efficiency (eg, how quickly decisions were made),27,34,37,43,45,47 5 studied failures (ie, failure mode analysis, detection, and recovery from errors),24,27,32,36,45 and 1 studied technical issues (eg, wireless connectivity).29

Only 7 out of the 22 studies that included process outcomes reported quantitative measures and study designs with inferential statistics to detect an outcome change.27,33,35,42,45,46,49 Statistically significant improvement was found for subject accuracy (2),35,49 situational awareness (3),42,46,49 efficiency (2),27,45 errors (2),27,45 and information accuracy (1).45 Results indicated no statistically significant difference in workload (2).33,49 One study had mixed results, with unchanged situational awareness and improved efficiency, but only under specific conditions (ie, if the CDS display included a high number of patient variables).33 There were no statistically significant decreases in process outcomes in any of the studies. In summary, 7 out of the 7 studies with designs, measures, and analytic procedures to detect changes associated with the CDS27,33,35,42,45,46,49 demonstrated statistically significant improvement in 1 or more process outcomes.

Usability outcomes

Usability refers to whether the CDS system is easy to learn, use, and remember, has few errors, and is subjectively satisfying.55 Nineteen studies included 1 or more usability outcomes. Five studies included global usability (measures that did not specify type of usability, such as the System Usability Scale),23,29,34,37,38 11 studies included subjective satisfaction,27,28,31,33,38,39,47–49 9 studies included value or usefulness (including subjects specifying a specific value of the CDS to their work, such as more efficient, more accurate),28,32,36,37,40,41,43,48,49 8 studies included learnability (ease of learning),28–30,37,40,43,48,49 and 1 study included memorability (ease with which it is remembered after a period of non-use).43 In a majority of the studies, measures to assess usability relied heavily on investigator-developed questionnaires that did not specify usability type (13), with a minority using the Questionnaire for User Interaction Satisfaction (4), the Software Usability Measurement Inventory (1), or the Use of Technology Instrument (1).

Only 4 of these studies included quantitative measures, study designs that could demonstrate outcome changes, and inferential statistics to determine whether CDS was associated with improved usability outcomes.27,33,39,49 Statistically significant improvements were found in subjective satisfaction (4),27,33,39,49 global usability (1),49 and value (1).49 There were no statistically significant decreases in usability outcomes in any of the studies. In summary, 4 out of the 4 studies that had designs, measures, and analytic procedures to detect changes associated with CDS demonstrated statistically significant improvement in 1 or more usability outcomes.

Patient outcomes

Twelve studies included real patient data, but only 5 included patient outcomes.25,39,40,44,46 Three studies included single patient outcomes: blood sugar regulation,39 mental status,25 and potassium regulation.40 Sawyer (sepsis alert)46 and Ng (emergency department triage tool)44 measured multiple outcomes focused on patient resource utilization, such as intensive care unit transfer, in-hospital mortality, length of stay, and post–CDS alert length of stay.

Both of the medication management CDS systems showed statistically significant improvement, 1 for blood sugar39 and the other for potassium regulation.40 Results on the impact of CDS on resource utilization outcomes were mixed: Ng’s study of an emergency department triage tool showed statistically significantly decreased length of stay,44 but Sawyer’s sepsis alert did not show statistical differences in intensive care unit transfers, length of stay, post-alert length of stay, or mortality, despite multiple statistically improved process outcomes (such as antibiotic escalation and oxygen therapy). None of the studies reported negative effects on patient outcomes. In summary, 3 out of the 4 studies that had designs, measures, and analytic procedures to detect changes in patient outcomes associated with CDS demonstrated statistically significantly improved patient outcomes.

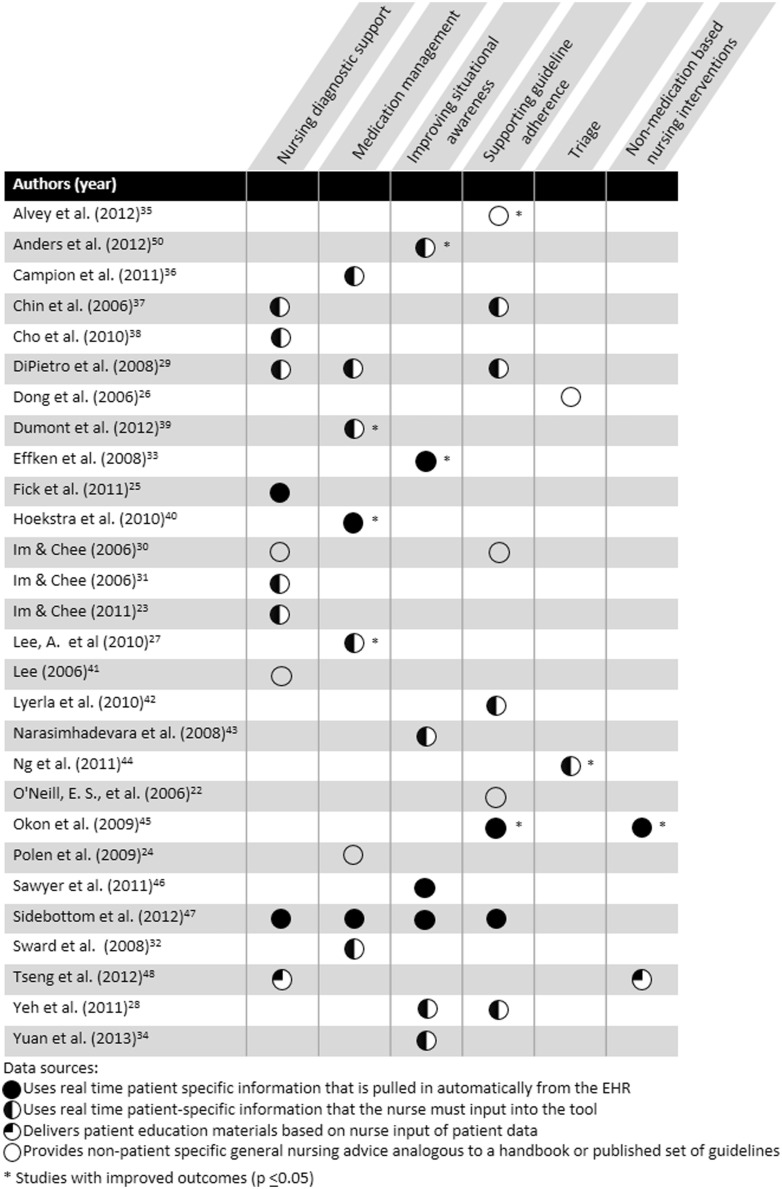

Summary: CDS purpose, data source, and outcomes

The intersection of CDS purpose, data sources, and outcomes is shown in Figure 3. There were statistically improved outcomes across 5 out of 6 CDS purposes (not: nursing diagnosis support) and 3 out of 4 data sources (not: delivers patient education information based on nurse input of patient data).

Figure 3.

CDS purpose and data source matrix

Qualitative and secondary findings

In addition to the above outcomes, there were secondary and qualitative findings that inform CDS science. Display of CDS was shown in 4 studies to affect accuracy, treatment efficiency, and adherence to protocol.27,33,39,49 Anders, the only study to include >1 site, found site differences in overall accuracy and display type accuracy (ie, graph vs table) across the 2 sites.49 Campion showed that in spite of attempts to automate work, nurses continue to use paper as an intermediary between CDS and the EHR.36 Lyerla and colleagues42 found that nurses rate CDS guideline adherence significantly differently by patient characteristics, suggesting that nurses are applying critical thinking and patient-specific knowledge along with CDS to make decisions. Similarly, Lee concluded that CDS enhanced critical thinking.41 Finally, O’Neil and colleagues22 found that novice RNs had difficulty making decisions quickly and determining important patient cues.

DISCUSSION

CDS that targets direct bedside RN decision-making shows promise for improving the quality of care. We identified significant improvements in 14 of 15 outcomes measured across 10 studies that included designs and measurement to support this inference. Furthermore, across all 28 studies, no negative effects on process, usability, or patient outcomes were reported. We also found statistically significant improvements across CDS types, except for nursing diagnostic support. The lack of improvement in process or usability outcomes for this CDS type is not surprising, since a nurse naturally takes more time to infer a diagnosis from structured terminology than from a nonstructured process such as memory. In addition, neither of the studies that supported nursing diagnostic decision-making was designed to detect statistical improvements.25,48

Opportunities to strengthen the science and practice of CDS for acute care RNs abound. There is a clear need to increase the sophistication and rigor of the study designs used. A single RCT was found, in contrast to the 148 RCTs identified in a 2012 review of CDS aimed at prescribers.6 Some of this gap may relate to the difficulty of randomizing an intervention in work environments that are clustered by units; yet even in lab settings, randomization was missing. Another potential explanation for the paucity of RCTs may be the high cost of conducting trials and the limited amount of external funding for this area of study.
Of the 28 articles included in this review, 13 reported no source of funding.22–24,27,31,34,35,38–40,42,43,47 Only 5 studies were funded by the National Institutes of Health.25,32,36,37,49 Three were funded intramurally with funds likely insufficient to support a large multisite randomized trial,26,33,48 and 6 were funded from foundations or nongovernmental sources.28–30,41,45,46 We also note that all the CDS systems targeted single conditions, such as pressure ulcers or delirium, or processes, such as triage. Even for CDS in early development, we found no studies attempting to analyze EHR data to generate knowledge for nurse decision-making across conditions. Furthermore, only 7 (25%) of the CDS studies were fully integrated in the EHR using real-time patient data to provide decision support. This means that for many CDS systems designed for direct-care RNs, data needed to be entered manually or the system had to be retrieved by opening additional parts of the record. This lack of integration hinders the efficiency of RNs who often have high temporal demands and function as chief coordinators and deliverers of care in acute care settings, and also increases the chance of error.

Despite the availability of several psychometrically tested usability measures,37,56–60 only 5 of the 19 studies that included usability outcomes used measures with psychometric properties,27,30,31,39,61 making measurement reliability and validity hard to assess in our review. We found 13 studies that used data-collection instruments developed by their own authors or adapted from items in other questionnaires. Most of these instruments were assessed only for face validity and did not cover all usability attributes, such as learnability, efficiency, memorability, few errors, and satisfaction.50 Moreover, using adapted instruments without carefully following the appropriate steps of instrument development and assessment, and without reporting psychometrics, may compromise the real-world impact of the findings.62

The use of lab settings for assessing usability was limited to 9 studies. Only 1 study reported using statistics of the CDS system.31 No studies were found that addressed the continuum of CDS development (design, prototype testing, iterative design improvements, testing in actual clinical environments), and usability overall was constrained by low integration of nursing CDS within the EHR. This suggests that some CDS systems targeting RN decision-making may be used in practice without rigorous testing and refinement to optimize usability. Finally, with the limited number of studies funded externally, it is possible that research dollars are spent on CDS prototype development that is never funded for testing in actual practice.

We were surprised and disheartened to see the small number of articles (2 studies) that addressed user-centered design incorporating rapid cycles of formative design and testing, long considered the foundational level of usability engineering to optimize a technology’s design, usability, and usefulness. Similarly, simulation studies that use realistic simulated tasks, clinical environments, and decision-making for summative testing were sparse (4 studies). In addition to predicting effectiveness in clinical practice, such studies can reveal unintended consequences of CDS before it is deployed live, so that those consequences can be identified and mitigated. We also found that very few studies were guided by theory or conducted to develop theory in this area. The theories used in 8 (29%) of the studies were diverse, including feminist perspectives, diffusion of innovation, implementation frameworks, the novice clinical reasoning model, sociotechnical theory, and the user, function, representation, and task analysis (UFuRT) methodology. We believe that the use of theory could accelerate advancements in this field, as others have noted.63

LIMITATIONS

While efforts were made to uphold rigor for an integrative review, some limitations are worth noting. We limited our inclusion criteria to papers published in English and thus did not capture research published in other languages or CDS that is used clinically but has not been studied or published. Papers reporting similar research that did not match our broad search criteria may have been excluded automatically during the initial search. Our exclusion rules also caused us to miss some CDS systems that may have been developed for acute care registered nurses. Importantly, because we focused on published peer-reviewed articles, the state of CDS practice in real-world settings is unclear, and actual use of CDS is likely broader than the published literature reflects. This exclusion rule also meant that we excluded conference papers (eg, AMIA Symposium papers) unless they were also published in peer-reviewed journals. However, excluding conference papers is common practice in reviews because of the difficulty of assessing the adequacy of peer review. We also excluded papers on CDS systems used by multiple members of the health care team, including RNs, and papers about the effects of EHRs in general rather than a particular CDS. Although all of the excluded categories could represent topics that play an important role in improving patient outcomes and care efficiency, these studies were excluded to align with the study’s purpose: to focus on findings from research on CDS targeting the decisions that direct-care, bedside, acute care registered nurses make independently.

Finally, after evaluating several other scoring methods, we note weaknesses in the method we selected. Its strength is its ability to score both qualitative and quantitative studies,21 but it did not appropriately weight the degree of rigor in qualitative studies. This resulted in lower quality scores for some of the included studies than may have been appropriate.

CONCLUSIONS

CDS is used in acute care environments to support direct care nursing decisions, with almost uniformly positive effects on outcomes. However, this research lags behind studies of physician use of CDS in both number and complexity, and the small number of funded studies identified in this review suggests an increasing and immediate need to allocate research dollars to nursing CDS research, perhaps from sources outside the already marginally funded National Institute of Nursing Research budget. The science of CDS targeting nurses as decision-makers could be greatly accelerated by increasing multisite research, using common outcome measures, and developing a collaborative central repository for tracking and reporting the results of CDS projects to strengthen collective knowledge.


Contributors

S.G. and K.D.L. conceived the study, abstracted the articles, and participated in the writing and analysis; R.R. refined the methodology for searching databases and conducted the searches; and J.A. abstracted the articles and contributed to the quality scoring, analysis, and writing. V.S. contributed to article abstraction, analysis, manuscript writing, and revisions. L.P. participated in the title and abstract review. All authors interpreted the data and wrote or revised the article for intellectual content and approved the final version.

Funding

Dr Gephart is supported by the Agency for Healthcare Research and Quality (1K08HS022908-01A1) and the Robert Wood Johnson Foundation Nurse Faculty Scholars Program. Dr Sousa is supported by the Brazilian National Council for Scientific and Technological Development (Postgraduate Fellowship Programme). Content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality, the Robert Wood Johnson Foundation, or the Brazilian National Council for Scientific and Technological Development.

Competing Interests

All authors have completed the Unified Competing Interest form at http://www.icmje.org/coi_disclosure.pdf (available on request from the corresponding author) and declare no financial relationships with any other organizations that might have an interest in the submitted work in the previous 3 years and no other relationships or activities that could appear to have influenced the submitted work.

Supplementary Material

Supplementary material is available at Journal of the American Medical Informatics Association online.

REFERENCES

- 1. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC; 2001.

- 2. Buntin MB, Jain SH, Blumenthal D. Health information technology: laying the infrastructure for national health reform. Health Aff (Millwood) 2010;29(6):1214–1219.

- 3. Blumenthal D. Launching HITECH. N Engl J Med 2010;362(5):382–385.

- 4. Teich JM, Osheroff JA, Pifer EA, Sittig DF, Jenders RA. Clinical decision support in electronic prescribing: recommendations and an action plan. J Am Med Inform Assoc 2005;12(4):365–376.

- 5. Shortliffe EH, Davis R, Axline SG, et al. Computer-based consultations in clinical therapeutics: explanation and rule acquisition capabilities of the MYCIN system. Comput Biomed Res 1975;8(4):303–320.

- 6. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med 2012;157(1):29–43.

- 7. Fillmore CL, Bray BE, Kawamoto K. Systematic review of clinical decision support interventions with potential for inpatient cost reduction. BMC Med Inform Decis Mak 2013;13:135.

- 8. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005;330(7494):765.

- 9. Staggers N, Weir C, Phansalkar S. Advances in patient safety and health information technology: role of the electronic health record. In: Hughes RG, ed. Patient Safety and Quality: An Evidence-Based Handbook for Nurses. Rockville, MD: Agency for Healthcare Research and Quality; 2008.

- 10. Anderson JA, Willson P. Clinical decision support systems in nursing. Comput Inform Nurs 2008;26(3):151–158.

- 11. Piscotty R, Kalisch B. Nurses' use of clinical decision support: a literature review. Comput Inform Nurs 2014;32(12):562–568.

- 12. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293(10):1223–1238.

- 13. Whittemore R, Knafl K. The integrative review: updated methodology. J Adv Nurs 2005;52(5):546–553.

- 14. Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med 2009;6(7):e1000097.

- 15. Polit DF, Beck CT. Resource Manual for Nursing Research: Generating and Assessing Evidence for Nursing Practice. Wolters Kluwer Health/Lippincott Williams & Wilkins; 2012.

- 16. Tastan S, Linch GC, Keenan GM, et al. Evidence for the existing American Nurses Association-recognized standardized nursing terminologies: a systematic review. Int J Nurs Stud 2014;51(8):1160–1170.

- 17. Deneckere S, Euwema M, Van Herck P, et al. Care pathways lead to better teamwork: results of a systematic review. Soc Sci Med 2012;75(2):264–268.

- 18. Kullberg A, Larsen J, Sharp L. ‘Why is there another person's name on my infusion bag?’ Patient safety in chemotherapy care: a review of the literature. Eur J Oncol Nurs 2013;17(2):228–235.

- 19. Fore AM, Sculli GL. A concept analysis of situational awareness in nursing. J Adv Nurs 2013;69(12):2613–2621.

- 20. Pfaff K, Baxter P, Jack S, Ploeg J. An integrative review of the factors influencing new graduate nurse engagement in interprofessional collaboration. J Adv Nurs 2014;70(1):4–20.

- 21. Olsen J, Baisch MJ. An integrative review of information systems and terminologies used in local health departments. J Am Med Inform Assoc 2014;21(e1):e20–e27.

- 22. O'Neill ES, Dluhy NM, Hansen AS, Ryan JR. Coupling the N-CODES system with actual nurse decision-making. Comput Inform Nurs 2006;24(1):28–36.

- 23. Im EO, Chee W. The DSCP-CA: a decision support computer program for cancer pain management. Comput Inform Nurs 2011;29(5):289–296.

- 24. Polen HH, Clauson KA, Thomson W, Zapantis A, Lou JQ. Evaluation of nursing-specific drug information PDA databases used as clinical decision support tools. Int J Med Inform 2009;78(10):679–687.

- 25. Fick DM, Steis MR, Mion LC, Walls JL. Computerized decision support for delirium superimposed on dementia in older adults. J Gerontol Nurs 2011;37(4):39–47.

- 26. Dong SL, Bullard MJ, Meurer DP, et al. Reliability of computerized emergency triage. Acad Emerg Med 2006;13(3):269–275.

- 27. Lee A, Faddoul B, Sowan A, Johnson KL, Silver KD, Vaidya V. Computerisation of a paper-based intravenous insulin protocol reduces errors in a prospective crossover simulated tight glycaemic control study. Intensive Crit Care Nurs 2010;26(3):161–168.

- 28. Yeh SP, Chang CW, Chen JC, et al. A well-designed online transfusion reaction reporting system improves the estimation of transfusion reaction incidence and quality of care in transfusion practice. Am J Clin Pathol 2011;136(6):842–847.

- 29. Di Pietro T, Coburn G, Dharamshi N, et al. What nurses want: diffusion of an innovation. J Nurs Care Qual 2008;23(2):140–146.

- 30. Im E, Chee W. Nurses' acceptance of the decision support computer program for cancer pain management. Comput Inform Nurs 2006;24(2):95–104.

- 31. Im EO, Chee W. Evaluation of the decision support computer program for cancer pain management. Oncol Nurs Forum 2006;33(5):977–982.

- 32. Sward K, Orme J, Sorenson D, Baumann L, Morris AH; Reengineering Critical Care Clinical Research Investigators. Reasons for declining computerized insulin protocol recommendations: application of a framework. J Biomed Inform 2008;41(3):488–497.

- 33. Effken JA, Loeb RG, Kang Y, Lin ZC. Clinical information displays to improve ICU outcomes. Int J Med Inform 2008;77(11):765–777.

- 34. Yuan MJ, Finley GM, Long J, Mills C, Johnson RK. Evaluation of user interface and workflow design of a bedside nursing clinical decision support system. Interact J Med Res 2013;2(1):e4.

- 35. Alvey B, Hennen N, Heard H. Improving accuracy of pressure ulcer staging and documentation using a computerized clinical decision support system. J Wound Ostomy Continence Nurs 2012;39(6):607–612.

- 36. Campion TR Jr, Waitman LR, Lorenzi NM, May AK, Gadd CS. Barriers and facilitators to the use of computer-based intensive insulin therapy. Int J Med Inform 2011;80(12):863–871.

- 37. Chin EF, Sosa M, O'Neill ES. The N-CODES project moves to user testing. Comput Inform Nurs 2006;24(4):214–219.

- 38. Cho I, Staggers N, Park I. Nurses' responses to differing amounts and information content in a diagnostic computer-based decision support application. Comput Inform Nurs 2010;28(2):95–102.

- 39. Dumont C, Bourguignon C. Effect of a computerized insulin dose calculator on the process of glycemic control. Am J Crit Care 2012;21(2):106–115.

- 40. Hoekstra M, Vogelzang M, Drost JT, et al. Implementation and evaluation of a nurse-centered computerized potassium regulation protocol in the intensive care unit: a before and after analysis. BMC Med Inform Decis Mak 2010;10:5.

- 41. Lee T. Nurses' perceptions of their documentation experiences in a computerized nursing care planning system. J Clin Nurs 2006;15(11):1376–1382.

- 42. Lyerla F, LeRouge C, Cooke DA, Turpin D, Wilson L. A nursing clinical decision support system and potential predictors of head-of-bed position for patients receiving mechanical ventilation. Am J Crit Care 2010;19(1):39–47.

- 43. Narasimhadevara A, Radhakrishnan T, Leung B, Jayakumar R. On designing a usable interactive system to support transplant nursing. J Biomed Inform 2008;41(1):137–151.

- 44. Ng CJ, Yen ZS, Tsai JCH, et al. Validation of the Taiwan triage and acuity scale: a new computerised five-level triage system. Emerg Med J 2011;28(12):1026–1031.

- 45. Okon TR, Lutz PS, Liang H. Improved pain resolution in hospitalized patients through targeting of pain mismanagement as medical error. J Pain Symptom Manage 2009;37(6):1039–1049.

- 46. Sawyer AM, Deal EN, Labelle AJ, et al. Implementation of a real-time computerized sepsis alert in nonintensive care unit patients. Crit Care Med 2011;39(3):469–473.

- 47. Sidebottom AC, Collins B, Winden TJ, Knutson A, Britt HR. Reactions of nurses to the use of electronic health record alert features in an inpatient setting. Comput Inform Nurs 2012;30(4):218–226; quiz 227–228.

- 48. Tseng KJ, Liou TH, Chiu HW. Development of a computer-aided clinical patient education system to provide appropriate individual nursing care for psychiatric patients. J Med Syst 2012;36(3):1373–1379.

- 49. Anders S, Albert R, Miller A, et al. Evaluation of an integrated graphical display to promote acute change detection in ICU patients. Int J Med Inform 2012;81(12):842–851.

- 50. Nielsen J. Usability Engineering. Mountain View, CA: Morgan Kaufmann; 1993.

- 51. Boustani M, Munger S, Beck R, Campbell N, Weiner M. A gero-informatics tool to enhance the care of hospitalized older adults with cognitive impairment. Clin Interv Aging 2007;2(2):247–253.

- 52. Di Pietro TL, Nguyen H, Doran DM. Usability evaluation results from “evaluation of mobile information technology to improve nurses' access to and use of research evidence.” Comput Inform Nurs 2012;30(8):440–448.

- 53. Su KW, Liu CL. A mobile nursing information system based on human-computer interaction design for improving quality of nursing. J Med Syst 2012;36(3):1139–1153.

- 54. Lyerla F. Design and implementation of a nursing clinical decision support system to promote guideline adherence. Comput Inform Nurs 2008;26(4):227–233.

- 55. Nielsen J. What is usability. In: Nielsen J, ed. Usability Engineering. Mountain View, CA: Elsevier; 1994.

- 56. Brooke J. SUS: a quick and dirty usability scale. Usability Eval Ind 1996;189(194):4–7.

- 57. Lewis JR. Psychometric evaluation of the PSSUQ using data from five years of usability studies. Int J Hum Comput Interact 2002;14(3–4):463–488.

- 58. Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. MIS Q 2003;27(3):425–478.

- 59. Hao AT-H, Wu L-P, Kumar A, et al. Nursing process decision support system for urology ward. Int J Med Inform 2013;82(7):604–612.

- 60. Nahm E, Resnick B, Mills ME. Development and pilot-testing of the perceived health Web site usability questionnaire (PHWSUQ) for older adults. Stud Health Technol Inform 2006;122:38.

- 61. Cho I, Staggers N, Park I. Nurses' responses to differing amounts and information content in a diagnostic computer-based decision support application. Comput Inform Nurs 2010;28(2):95–102.

- 62. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health 2011;38(2):65–76.

- 63. Khong PC, Holroyd E, Wang W. A critical review of the theoretical frameworks and the conceptual factors in the adoption of clinical decision support systems. Comput Inform Nurs 2015;33(12):555–570.
