Abstract

Objective: To better understand clinician information needs and learning opportunities by exploring the use of best-practice algorithms across different training levels and specialties.

Methods: We developed interactive online algorithms (care process models [CPMs]) that integrate current guidelines, recent evidence, and local expertise to represent cross-disciplinary best practices for managing clinical problems. We reviewed CPM usage logs from January 2014 to June 2015 and compared usage across specialty and provider type.

Results: During the study period, 4009 clinicians (2014 physicians in practice, 1117 resident physicians, and 878 nurse practitioners/physician assistants [NP/PAs]) viewed 140 CPMs a total of 81 764 times. Usage varied from 1 to 809 views per person, and from 9 to 4615 views per CPM. Residents and NP/PAs viewed CPMs more often than practicing physicians. Among 2742 users with known specialties, generalists (N = 1397) used CPMs more often (mean 31.8, median 7 views) than specialists (N = 1345; mean 6.8, median 2; P < .0001). The topics used by specialists largely aligned with topics within their specialties. The top 20% of available CPMs (28/140) collectively accounted for 61% of uses. In all, 2106 clinicians (52%) returned to the same CPM more than once (average 7.8 views per topic; median 4, maximum 195). Generalists revisited topics more often than specialists (mean 8.8 vs 5.1 views per topic; P < .0001).

Conclusions: CPM usage varied widely across topics, specialties, and individual clinicians. Frequently viewed and recurrently viewed topics might warrant special attention. Specialists usually view topics within their specialty and may have unique information needs.

Keywords: point-of-care learning, critical pathways, decision support systems, clinical, practice guidelines as topic

INTRODUCTION

Although estimates vary, clinicians typically identify a clinical question (ie, a question that could potentially be answered by referencing a knowledge resource) for at least half of the patients they see.1,2 Yet they seek answers for only about half of these questions.2–5 The barriers to answering clinical questions remain incompletely understood, but include lack of time, information overload, difficulty selecting among various knowledge resources, and doubt that an answer will be easily found.2,6–10 Helping clinicians to overcome these information-seeking barriers could enhance patient care and facilitate lifelong learning.11–14

Computer-based information technologies have long been proposed as a means to support information seeking at the point of care.15–18 Various computer knowledge resources exist for this purpose, including information repositories (such as UpToDate, http://www.uptodate.com, and Micromedex, www.micromedexsolutions.com), diagnosis support tools (such as DXplain, www.mghlcs.org/projects/dxplain), literature search resources (such as PubMed, www.pubmed.gov), and context-sensitive links in the electronic health record (EHR) (such as infobuttons19,20). These and other tools vary widely in their intended purpose (ie, diagnosis, treatment options, drug dosing, or patient education) and in the degree to which they incorporate essential design features such as efficiency, integration with the clinical workflow, credibility, and reflection of local best practices.17,21

Understanding clinician information needs constitutes an important step in developing effective, efficient computer knowledge resources. Analyzing clinicians' use of knowledge resources during their daily workflow offers one useful approach to investigating such needs. This has been done with, for example, infobuttons,19,20 a commercial resource (UpToDate22), and a library Web page.23 Work to date has emphasized the frequency of questions2 and highlighted differences across clinical roles (eg, physician vs nurse) and EHR context (problem list, lab result, treatment).20,24,25 Less is known about the specific topics pursued, and especially how topics vary across clinical specialties and training levels.

The purpose of this study was to characterize clinician information needs and learning opportunities by exploring the use of clinical best-practice algorithms (locally developed “care process models” [CPMs]) across different training levels and specialties. Specific questions and anticipated results included:

How often do clinicians access CPMs, and what are the most frequently accessed topics? We expected that a small number of model topics (largely reflecting conditions frequently seen in primary care practice) would account for the majority of use.

How does usage vary across specialties? We expected that generalists would use CPMs more often than specialists, and that specialists would most often access topics unrelated to their own specialty.

How often do clinicians return to the same topic? We expected that individual clinicians would recurrently visit the same model.

METHODS

This was a retrospective study using data from computer logs. The study was deemed exempt by the Mayo Clinic Institutional Review Board.

Care process model development and description

Within our institution, we recognized the need for a tool that would provide rapid, relevant answers that reflect institution-accepted best practices. To meet this need, we developed MayoExpert,26 a multipronged online platform that unifies 5 core elements: (1) a repository of concise and clinically relevant information, (2) CPMs (described below), (3) an expert directory, (4) clinical notifications of high-priority test results, and (5) links to other information and resources (eg, clinical calculators, patient education materials). Two previously described26 elements of MayoExpert, self-assessment questions and a learning portfolio, were no longer fully implemented during the time of this study. This study focused on the usage of CPMs.

We developed CPMs as online best-practice algorithms for managing clinical problems.27 The intent was not to replace national guidelines, but to facilitate the application of guideline recommendations in practice. Model content integrated guidelines available from national expert panels, government task forces, and professional organizations, and supplemented and extended guideline-based recommendations with emerging evidence and local expertise. These recommendations were distilled into a format that clinicians could quickly and easily apply at the point of care. For example, when guidelines allowed selection among equally effective diagnostic or treatment pathways, CPMs would highlight an institution-preferred approach if one existed (eg, a cost-effective test or drug). Models also reflected, when possible, practical aspects of care within our health system (eg, when and how to request a specific test, procedure, or referral). Each model was developed by a team of at least 1 physician content expert, a clinician with expertise in algorithm elicitation and development, and a user experience designer. Each model was revised as needed and then approved by other topic-relevant experts at each of the Mayo sites (in Rochester, Minnesota; Jacksonville, Florida; Scottsdale/Phoenix, Arizona; and a group of community practices in Minnesota, Iowa, and Wisconsin). The authoring expert and a content board of at least 3 subspecialist experts reviewed each model annually. Models thus represented cross-disciplinary institutional best practices for that clinical problem. Model development was prioritized toward problems with evolving standards (eg, revisions to national guidelines), complex or institution-specific management approaches, suboptimal cost or practice patterns, or potential patient safety issues. Most topics during the study period reflected medical (vs surgical) practice and addressed specific diagnoses rather than symptoms.

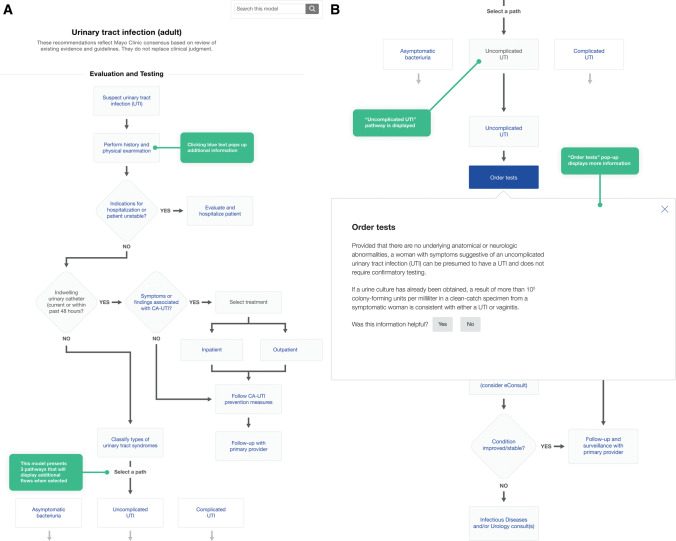

Each model consisted of several nodes reflecting key decision or action points (see Figure 1, and the more detailed example in Supplementary eFigure 1). Information was provided at each node (eg, elements of history to solicit from the patient or medical record, suggested tests and their interpretation, or potential management strategies) to guide clinicians in making decisions or taking needed actions. Nodes and branches expanded or collapsed, and additional information was revealed or hidden, as users clicked on interactive links. CPMs were accessible online from any computer or mobile device with appropriate institutional access (Mayo sites or affiliated partners). We actively sought and responded to user suggestions to improve both model content and functionality. We supported integration into the clinical workflow by embedding direct links to CPMs from EHR test result notification messages for topics including prolonged QT, heart failure with reduced ejection fraction, and ST-elevation myocardial infarction, and by including models among the resources indexed by EHR infobuttons. High-priority updated or new topics were commonly announced in staff newsletters at the time of release.

Figure 1.

Typical care process model. (A) Screenshot of the Urinary Tract Infection (Adult) model, illustrating the decision nodes and expandable pathways. (B) Screenshot illustrating the additional information available as a popup within most model elements.

Data collection

We obtained raw data on CPM use, including the topic and the clinician's unique identifier, from computer log files from January 1, 2014, to June 30, 2015. We matched each unique identifier with the clinician's training level and, when available, main clinical specialty. We classified as “generalists” all clinicians who practiced or trained in family medicine, internal medicine, or pediatrics without a subspecialty designation. All other clinicians were classified as specialists.
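The classification and counting rules described here can be illustrated with a short Python sketch. It is not the code used in the study: the log record layout, the specialty labels, and the function names are assumptions made for illustration, and the rule of counting at most 1 use per topic per day per clinician is taken from the Table 2 footnote.

```python
from collections import Counter
from datetime import date

# Hypothetical specialty labels. The study classified clinicians in family
# medicine, internal medicine, or pediatrics without a subspecialty
# designation as generalists; the exact labels in the source data are unknown.
GENERALIST_SPECIALTIES = {
    "internal medicine, general",
    "family medicine",
    "pediatrics, general",
}


def classify(specialty):
    """Return 'generalist', 'specialist', or None when specialty is unknown."""
    if specialty is None:
        return None
    if specialty.lower() in GENERALIST_SPECIALTIES:
        return "generalist"
    return "specialist"


def count_views(log_rows):
    """Count views per clinician, keeping at most one view per clinician,
    topic, and calendar day.

    log_rows: iterable of (clinician_id, topic, day) tuples (assumed layout).
    """
    unique_views = set(log_rows)  # drops same-day repeats of the same topic
    return Counter(clinician for clinician, _topic, _day in unique_views)


# Illustrative log rows only (not study data).
rows = [
    ("c1", "Influenza", date(2014, 12, 1)),
    ("c1", "Influenza", date(2014, 12, 1)),  # same day and topic: not counted again
    ("c1", "Influenza", date(2014, 12, 2)),
    ("c2", "Hyperlipidemia", date(2015, 1, 5)),
]
views = count_views(rows)
```

Clinicians whose specialty is unknown are returned as `None` rather than forced into either group, mirroring the fact that specialty-level comparisons were restricted to clinicians with known specialties.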

Data analysis

Given the skewed distribution of usage rates, we report means, medians, and ranges but not standard deviations. We used nonparametric tests to compare usage rates across demographic subgroups: we used the Wilcoxon rank-sum test to compare generalists and specialists, and general linear models applied to ranked usage data for comparisons across training levels. We analyzed changes in the monthly usage rates (standardized to 30 days) for the top 10 topics across all users over the final 12 months.
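As a rough illustration of two of these steps, the sketch below implements a two-sided Wilcoxon rank-sum test and the 30-day standardization of monthly counts using only the Python standard library. The study does not report which software or test options were used, so the normal approximation with tie correction (and no continuity correction), the function names, and the example data are all assumptions; in practice a library routine such as `scipy.stats.mannwhitneyu` would normally be preferred.

```python
import calendar
import math
from itertools import groupby


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test via a normal approximation with
    tie correction and no continuity correction. Returns (z, p_value)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    # Pool the observations, remembering which group each came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda t: t[0])

    rank_sum_x = 0.0
    tie_term = 0.0
    position = 0  # number of observations already ranked
    for _value, group in groupby(pooled, key=lambda t: t[0]):
        members = list(group)
        t = len(members)
        # Tied values share the average of the ranks they occupy.
        avg_rank = position + (t + 1) / 2
        rank_sum_x += avg_rank * sum(1 for _, g in members if g == 0)
        tie_term += t**3 - t
        position += t

    mean_w = n1 * (n + 1) / 2
    var_w = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w == 0:  # every observation identical: no evidence of a difference
        return 0.0, 1.0
    z = (rank_sum_x - mean_w) / math.sqrt(var_w)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


def standardize_to_30_days(views, year, month):
    """Scale a raw monthly view count to a 30-day month."""
    days_in_month = calendar.monthrange(year, month)[1]
    return views * 30 / days_in_month


# Illustrative per-clinician view counts only (not study data).
generalist_views = [7, 31, 12, 40, 9, 25]
specialist_views = [2, 3, 1, 6, 2, 4]
z, p = rank_sum_test(generalist_views, specialist_views)
```

A rank-based test suits these data because a handful of very heavy users would dominate a comparison of means; ranks depend only on the ordering of the counts.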

RESULTS

By January 2014 we had developed 106 models, and as of June 30, 2015, we had 140 models. Over the 18-month study period, 4009 clinicians (60% of approximately 6700 clinicians with access) viewed CPMs a total of 81 764 times. These clinicians comprised 2014 physicians in practice, 1117 postgraduate physician trainees (residents and fellows), and 878 nurse practitioners (NPs) or physician assistants (PAs). Usage varied from 1 to 809 views per person, and from 9 to 4615 views per model. As shown in Table 1, postgraduate trainees used models most often, followed closely by NP/PAs (usage rates not statistically significantly different, P = .99), and then by physicians in practice (P < .001 compared with both other groups). Monthly usage varied widely, starting at 2655 views in January 2014, peaking at 6421 views in December 2014, and ending at 5313 views in June 2015 (all usage standardized to a 30-day month; see Supplementary eFigure 2).

Table 1.

Overall frequency of use of care process models over 18 months, by training status and specialty

| Demographic | Role or specialty (no. of users) | Total uses per person<sup>a</sup> | Repeat use (same user, same model)<sup>a</sup> |
|---|---|---|---|
| Training | Resident physician (N = 1117) | 26.9 (4; 602) | 2.7 (1; 104) |
| | Nurse practitioner/physician assistant (N = 878) | 24.5 (4; 809) | 3.0 (1; 120) |
| | Board-certified physician (N = 2014) | 15.0 (3; 577) | 2.4 (1; 195) |
| Generalist<sup>b</sup> | Generalist (N = 1397) | 31.8 (7; 809) | 2.8 (1; 129) |
| | Specialist (N = 1345) | 6.8 (2; 161) | 1.9 (1; 78) |
| Specialty<sup>b</sup> | Internal medicine, general (N = 383) | 40.1 (11; 577) | 2.8 (1; 129) |
| | General practice nurse practitioner/physician assistant (N = 608)<sup>b</sup> | 31.3 (6; 809) | 3.1 (1; 120) |
| | Family medicine (N = 343)<sup>b</sup> | 25.2 (7; 278) | 2.3 (1; 100) |
| | Pediatrics, general (N = 63)<sup>b</sup> | 22.4 (4; 187) | 3 (1; 36) |
| | Emergency/urgent care (N = 104) | 10.4 (4; 152) | 2.1 (1; 19) |
| | Obstetrics-gynecology (N = 52) | 9.8 (3.5; 95) | 2.7 (1; 78) |
| | Internal medicine specialty (N = 514) | 9.3 (3; 161) | 2 (1; 67) |
| | Pediatric specialty (N = 39) | 8.6 (2; 134) | 2.3 (1; 22) |
| | Diagnostic (laboratory, pathology) (N = 32) | 6.7 (2; 89) | 1.7 (1; 16) |
| | Physical medicine (N = 35) | 7.6 (3; 63) | 1.8 (1; 52) |
| | Hospitalist (N = 35) | 5.9 (4; 48) | 1.7 (1; 11) |
| | Neurology (N = 91) | 4.4 (3; 32) | 1.4 (1; 28) |
| | Surgery (N = 119) | 3.0 (2; 31) | 1.3 (1; 8) |
| | Anesthesiology (N = 148) | 2.9 (1.5; 37) | 1.6 (1; 21) |
| | Radiology (N = 82) | 2.9 (2; 29) | 1.5 (1; 17) |
| | Psychiatry (N = 63) | 2.8 (2; 13) | 1.4 (1; 9) |
| | Dermatology (N = 26) | 2.5 (2; 9) | 1.3 (1; 4) |
| | Other clinical specialty (N = 5) | 1.4 (1; 2) | 1 (1; 1) |

<sup>a</sup>Mean number of views (median; maximum); minimum of 1 view required for inclusion in this study.

<sup>b</sup>Information on specialty was available for 2742 clinicians. Clinicians in general internal medicine, family medicine, and pediatrics were classified as generalists; all others were classified as specialists.

We were able to determine the clinical specialty for 2742 users (68%). Among these, generalists (N = 1397) used models much more often (mean 31.8, median 7 views) than specialists (N = 1345; mean 6.8, median 2; P < .0001); see Table 1. We also examined use across medical and surgical specialties. As shown in Table 1, clinicians in general internal medicine used models more often (per clinician) than those in family medicine or pediatric practice. Those in turn used models more often than emergency medicine clinicians, internal medicine subspecialists, or other surgical and medical specialists.

Table 2 lists the 30 most frequently viewed topics for all clinician types and specialties, while Table 3 reports the top 10 topics for selected specialties. Across all specialties, frequently visited topics reflect a mix of outpatient (eg, screening recommendations and hyperlipidemia) and acute care/inpatient (eg, Clostridium difficile colitis, myocardial infarction) medical problems. Contrary to our expectation, the topics used by specialists (Table 3) largely aligned with topics specific to their specialty (eg, gastroenterologists most often viewed topics related to bowel and liver disease rather than cardiology, and cardiologists most often viewed topics related to heart disease). The least-viewed topics focused on narrow patient populations (eg, Joint Infections [Pediatric], 12 views) or niche specialty issues (eg, Cardiac Surgery Excessive Bleeding, 20 views; Enhanced Recovery Pathway: Elective Colorectal Surgery, 25 views).

Table 2.

Thirty most highly used care process models over 18 months

| Topic (no. of uses; % of total<sup>a</sup>) |
|---|

<sup>a</sup>A total of 81 764 uses across all 140 topics and 4009 clinicians. Repeated uses by clinicians are included in this total, but maximum 1 use per topic per day per clinician.

Table 3.

Most highly used care process models for selected specialties over 18 months

| Specialty (no. of clinicians in that specialty<sup>a</sup>) | Topic (no. of uses by clinicians in that specialty) |
|---|---|
| Generalist<sup>b</sup> (1397 clinicians) | |
| Specialist<sup>b</sup> (1345 clinicians) | |
| Cardiology (141 clinicians) | |
| Endocrinology (48 clinicians) | |
| Gastroenterology (93 clinicians) | |
| Emergency medicine/urgent care (104 clinicians) | |
| Neurology (91 clinicians) | |
| Surgery (119 clinicians) | |

<sup>a</sup>Number of clinicians who used at least 1 care process model.

<sup>b</sup>Clinicians in general internal medicine, family medicine, and pediatrics were classified as generalists; all others were classified as specialists.

We explored whether usage followed the Pareto principle, ie, that a small percentage of topics would account for the majority of usage. We found that the top 20% of available models (28/140) collectively accounted for 61% of uses, and 80% of total uses arose from the top 54 models (38%).
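The two figures reported here (the share of uses contributed by the top 20% of topics, and the number of topics needed to reach 80% of uses) come from a cumulative sum over topics sorted by view count. The following Python sketch shows one way to compute them; the function name, its parameters, and the example counts are hypothetical and do not reproduce the study data.

```python
def pareto_summary(views_per_topic, top_fraction=0.20, target_share=0.80):
    """Return (share of all views from the top `top_fraction` of topics,
    number of top topics needed to reach `target_share` of all views).

    Assumes a non-empty list with at least one view in total."""
    counts = sorted(views_per_topic, reverse=True)
    total = sum(counts)

    # Share of views contributed by the most-viewed topics.
    n_top = round(len(counts) * top_fraction)
    top_share = sum(counts[:n_top]) / total

    # Walk down the ranked topics until the target share is reached.
    running = 0
    for n_needed, count in enumerate(counts, start=1):
        running += count
        if running >= target_share * total:
            break
    return top_share, n_needed


# Illustrative view counts for 10 hypothetical topics (not study data).
share, needed = pareto_summary([500, 250, 100, 50, 40, 20, 15, 10, 10, 5])
```

Applied to the study's 140 topics, the first value corresponds to the 61% contributed by the top 28 models and the second to the 54 models that accounted for 80% of uses.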

In all, 2106 clinicians (52%) returned to the same model topic more than once over the 18-month study period, with an average of 7.8 views per topic (median 4, maximum 195); 733 clinicians revisited the same topic >5 times and 168 revisited the same topic >20 times. Generalists (N = 926) revisited topics more often than specialists (N = 560; mean 8.8 vs 5.1 views [median 4 vs 3]; P < .0001).
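Repeat use can be summarized by counting views for each clinician-topic pair and keeping the pairs with more than 1 view. The Python sketch below follows one plausible reading of the statistics above (views per revisited clinician-topic pair); the exact definition used in the study is not stated, and the record layout and function name are assumptions.

```python
from collections import Counter
from statistics import mean, median


def repeat_use_summary(views):
    """Summarize repeat use from (clinician_id, topic) view records.

    Returns (number of clinicians who viewed any topic more than once,
             mean, median, and maximum views per revisited topic)."""
    per_pair = Counter(views)  # views per (clinician, topic) pair
    revisited = {pair: n for pair, n in per_pair.items() if n > 1}
    if not revisited:  # nobody returned to a topic
        return 0, 0.0, 0.0, 0
    clinicians = {clinician for clinician, _topic in revisited}
    counts = list(revisited.values())
    return len(clinicians), mean(counts), median(counts), max(counts)


# Illustrative view records only (not study data).
records = (
    [("c1", "Influenza")] * 3
    + [("c1", "Hyperlipidemia")]
    + [("c2", "Influenza")] * 5
    + [("c3", "Sepsis")]
)
summary = repeat_use_summary(records)
```

Each record is assumed to have already been reduced to at most 1 view per clinician, topic, and day, so a count above 1 means the clinician returned to the topic on a different day.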

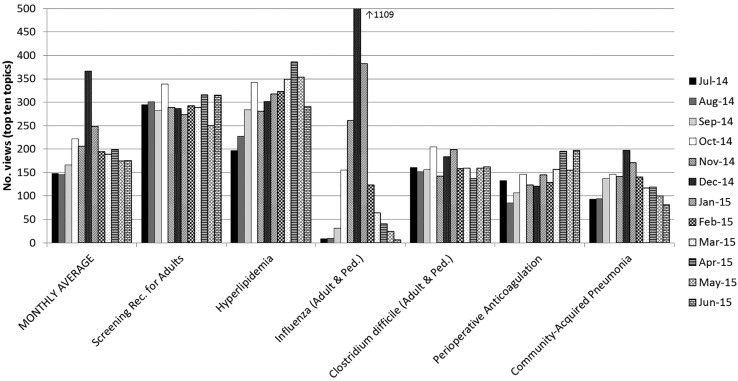

We examined the variation in usage over time for the 10 most frequently viewed topics from July 2014 to June 2015. Figure 2 shows a subset of these data (6 topics) to illustrate some of these trends, while Supplementary eFigure 3 shows all 10 topics. Two seasonal illnesses (influenza and community-acquired pneumonia) showed a peak in December–January, and in fact influenza accounted for 18% (1109/6421) of all views in December 2014. Other topics, such as screening recommendations for adults and Clostridium difficile colitis, remained nearly flat, while others, such as hyperlipidemia and perioperative anticoagulation, seemed to show an overall rise over time. The latter upward trends could reflect ongoing institutional efforts to increase the visibility of new hyperlipidemia guidelines and the appropriate periprocedural management of novel anticoagulants, which might have prompted clinicians to seek additional guidance on these topics.

Figure 2.

Care process model usage for select topics over 12 months. This figure illustrates usage patterns for models with relatively stable use over time (Screening Rec. for Adults and Clostridium difficile), models with seasonal variation in usage (Influenza and Community-Acquired Pneumonia), and models addressing topics relevant to institution-wide quality improvement efforts (Hyperlipidemia and Perioperative Anticoagulation). Numbers of views per month were standardized to a 30-day period. Abbreviations: Rec = recommendation; Ped = pediatrics.

DISCUSSION

We created over 140 CPMs defining institution-accepted best practices in the form of interactive online algorithms. During an 18-month period, about 60% of the clinicians with access used these models at least once, with some clinicians using them with high frequency. A relatively small subset of topics accounted for the majority of use, and many individuals repeatedly visited the same model. Generalists used models more often than specialists, and postgraduate trainees and NPs/PAs used models more often than board-certified physicians. Specialists tended to access topics related to their own specialty. We continue to create new CPMs, update existing models, and explore how to more effectively integrate these into clinicians' workflow.

Limitations

The data in this retrospective study are limited to usage and simple demographics. Although our purpose was to understand information needs, we evaluated this indirectly by analyzing information-seeking behaviors. We do not know the denominators for questions (ie, the total number of questions raised, including those not pursued, those pursued using other knowledge resources, or those that remained unanswered), clinicians within specialties (eg, the number of cardiologists vs the number of anesthesiologists), or the time spent in practice by a given clinician (which would determine in part the number of patients seen and thereby the number of questions generated). Regarding the latter, it is likely that residents, NPs, and PAs spend a higher proportion of their work hours in clinical practice than physicians, who have more time allotted to other academic responsibilities. We also do not know the actual clinical questions pursued nor how often answers were found in the CPMs. Perceived success with initial use (eg, finding answers and usability) might influence subsequent usage, which in turn could have influenced results generally (across all topics) or for specific topics. Finally, these data reflect 1 institution’s experience, and the topics emphasized specific diagnoses rather than symptoms. Relative usage frequencies (eg, over time or across specialties) may support more generalizable conclusions than absolute frequencies.

Strengths include the large sample size, the detailed information on topics pursued, and the granularity of specialty (known for two-thirds of the clinicians).

Integration with previous work

This study contributes to our understanding of the nature of clinical work28 and complements prior studies exploring the frequency and type of clinical questions,1–3,5,29 the barriers encountered in quickly answering clinical questions,6,7,9 and the type of resources employed in seeking such answers.16,17,30 Our work further builds upon studies exploring information needs using infobuttons19,20 and UpToDate,22 clarifying the topics pursued and highlighting between-specialty differences in information-seeking behaviors.

Wide variation in clinical practice31 has been well demonstrated at national,32 regional,33,34 and local35 levels. Best-practice algorithms such as CPMs could, in theory, help to standardize practice within and between institutions36,37 and improve the quality, efficiency, and overall value of patient care. Our CPMs are intended to facilitate the point-of-care application of best practices, and other institutions have developed clinical care pathways with similar intent, including Standardized Clinical Assessment and Management Plans,38 Institute for Clinical Systems Improvement guidelines (www.icsi.org/guidelines__more), and Intermountain Health Care CPMs (https://intermountainphysician.org/clinical/Pages/Care-Process-Models-(CPMs).aspx). However, the aspirational goal of practice standardization must be counterbalanced by the unique needs of individual patients38 and the logistical, legal, and financial differences that exist across institutions, social contexts, and regulatory environments.26

Implications for current practice and future research

Our findings have implications for current practice. First, we examined the usage patterns of generalists and specialists and used these patterns to identify high-priority information needs. We note that a subset of topics accounts for the majority of use, although the distribution was wider than the “classic” Pareto distribution (ie, 20% of topics accounted for only 61% of usage rather than the classic 80%). These highly used topics might warrant greater attention through, for example, more rigorous content development, more frequent updates, more robust usability testing, and development of models on related subtopics. We also speculate (based on anecdotal feedback) that in some cases clinicians recurrently seek a specific resource that could be made available more efficiently in other ways (eg, medication dosing table, timeline for follow-up, risk calculator, decision aid, or patient education handout). Finally, cataloging the topics commonly sought by clinicians can help to prioritize other efforts to support clinician decisions (eg, through infobuttons19,20 or other decision support tools22) and shared decision-making with patients.39,40

Second, usage varied widely across individuals (1–809 views per person) and specialties (within-specialty average 1.4–40.1 views per person). The higher use by generalists likely reflects a combination of the information needs of this group and our initial targeting of this audience in topic selection and level of detail. We do not know what prompted a subset of clinicians to use models with very high frequency, and conversely we do not know why 40% of potential users never accessed a model during the period of this study. Low usage and nonusage might reflect preferences for other knowledge resources, the absence of relevant topics, access difficulties, insufficient time to seek answers to clinical questions, management of patient problems that do not generate new questions (eg, niche subspecialist), or less time dedicated to clinical practice. Regular use of institution-approved knowledge resources has the potential advantage of updating and standardizing the practice. Future work might explore what factors promote and inhibit use, when and how clinicians prefer to answer their clinical questions, and how clinicians determine when the information they get is sufficient to answer their question.6 Cataloging clinical questions that remain unanswered would help prioritize topics for future development.

Third, some clinicians commonly revisit the same topics numerous times. Such recurrent use might reflect a focused need for a given resource accessible from the model (eg, medication dosing). However, recurrent use might also reflect a failure to retain knowledge gained during an earlier visit. Information obtained when using a knowledge resource can facilitate immediate decision-making without any lasting memory (“decision support”); if the same question subsequently recurs, the user will need to revisit the knowledge resource. Alternatively, information can be linked with existing knowledge structures and stored for future application without the need to rely on external support (“learning”). We suspect that much point-of-care learning is really point-of-care decision support, and that true learning may at times be minimal. Decision support as thus defined isn't necessarily bad, provided that the knowledge resource can efficiently provide an answer each time it is required. True learning is most needed when seeking an answer impairs clinical efficiency or when the knowledge resource is not immediately available. The balance between decision support and learning, and how and when to promote the latter, is an important topic for future study. Future work might also explore other factors that prompt repeated use and investigate how online resources could be optimized to help clinicians efficiently answer questions they recurrently encounter. Finally, at the other extreme, it will at times be necessary to notify users who do not recurrently revisit a topic that they should do so, eg, after a practice change or if evidence emerges that their practice is not aligned with current standards.

Finally, given the frequent use by generalists, it makes sense to continue to target them in the development and implementation of current and future models. This raises questions about their unique information needs, not only in topics (which we have cataloged herein) but also in the depth, breadth, and presentation of information. Generalists seem to prefer answers that are concise and straightforward.41 However, although the overall usage per specialist was much lower, the number of specialist and generalist users was nearly the same. Specialists may reflect a large potential audience with possibly unique information needs. Our finding that specialists most often viewed topics related to their own specialty is particularly salient, and suggests the need for further development of focused topics within a given specialty. It also raises questions about the ideal depth, breadth, and presentation of information for specialists, which might differ from that for generalists (eg, specialists might prefer longer answers that more completely capture the complexity of issues for which evidence is incomplete or controversial). Research comparing the information-seeking needs of generalists and specialists is limited.41

Conclusions

CPM usage varied widely across topics, individuals, and specialties; future work might identify factors that promote and inhibit use. High-usage topics might warrant greater attention in development and maintenance. We identify 2 complementary purposes in point-of-care knowledge resources, namely decision support (which might require recurrent use with subsequent, similar decisions) and promotion of learning (which lessens the need for recurrent use). Specialists constitute a large potential audience with possibly unique information needs.

ACKNOWLEDGMENTS

The authors wish to thank John B Bundrick, Scott D Eggers, Michelle L Felten, Aida N Lteif, Rick A Nishimura, Steve R Ommen, Jane L Shellum, and Sam D Smelter for their involvement in the creation, implementation, and continued growth of care process models at Mayo Clinic.

Contributors

Authors KJS, JAL, and DJR were intimately involved in developing the care process models. DAC conceived the study, analyzed data, and drafted and revised the manuscript. KJS and LJP aided in acquisition of data. All authors participated in data interpretation, manuscript revisions, and approval of the final manuscript.

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sector.

Competing interests

We have no competing interests to declare.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

References

- 1. Smith R. What clinical information do doctors need? BMJ. 1996;313:1062–68. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Del Fiol G, Workman TE, Gorman PN. Clinical questions raised by clinicians at the point of care: a systematic review. JAMA Intern Med. 2014;174:710–18. [DOI] [PubMed] [Google Scholar]

- 3. Ely JW, Osheroff JA, Ebell MH et al. . Analysis of questions asked by family doctors regarding patient care. BMJ. 1999;319:358–61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Davies K, Harrison J. The information-seeking behaviour of doctors: a review of the evidence. Health Info Libr J. 2007;24:78–94. [DOI] [PubMed] [Google Scholar]

- 5. Coumou HC, Meijman FJ. How do primary care physicians seek answers to clinical questions? A literature review. J Med Libr Assoc. 2006;94:55–60. [PMC free article] [PubMed] [Google Scholar]

- 6. Cook DA, Sorensen KJ, Wilkinson JM, Berger RA. Barriers and decisions when answering clinical questions at the point of care: a grounded theory study. JAMA Internal Med. 2013;173:1962–69. [DOI] [PubMed] [Google Scholar]

- 7. Ely JW, Osheroff JA, Ebell MH et al. . Obstacles to answering doctors' questions about patient care with evidence: qualitative study. BMJ. 2002;324:710. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Ely JW, Osheroff JA, Chambliss ML, Ebell MH, Rosenbaum ME. Answering physicians' clinical questions: obstacles and potential solutions. J Am Med Inform Assoc. 2005;12:217–24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Green ML, Ruff TR. Why do residents fail to answer their clinical questions? A qualitative study of barriers to practicing evidence-based medicine. Acad Med. 2005;80:176–82. [DOI] [PubMed] [Google Scholar]

- 10. Bennett NL, Casebeer LL, Zheng S, Kristofco R. Information-seeking behaviors and reflective practice. J Contin Educ Health Prof. 2006;26:120–27. [DOI] [PubMed] [Google Scholar]

- 11. Reed DA, West CP, Holmboe ES et al. . Relationship of electronic medical knowledge resource use and practice characteristics with Internal Medicine Maintenance of Certification Examination scores. J Gen Intern Med. 2012;27:917–23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. McDonald FS, Zeger SL, Kolars JC. Factors associated with medical knowledge acquisition during internal medicine residency. J Gen Intern Med. 2007;22:962–68. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Isaac T, Zheng J, Jha A. Use of UpToDate and outcomes in US hospitals. J Hosp Med. 2012;7:85–90. [DOI] [PubMed] [Google Scholar]

- 14. Bonis PA, Pickens GT, Rind DM, Foster DA. Association of a clinical knowledge support system with improved patient safety, reduced complications and shorter length of stay among Medicare beneficiaries in acute care hospitals in the United States. Int J Med Inform. 2008;77:745–53. [DOI] [PubMed] [Google Scholar]

- 15. Garg AX, Adhikari NKJ, McDonald H et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293:1223–38.

- 16. Chaudhry B, Wang J, Wu S et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144:742–52.

- 17. Lobach D, Sanders GD, Bright TJ et al. Enabling Health Care Decisionmaking Through Clinical Decision Support and Knowledge Management. Evidence Report No. 203. Rockville, MD: Agency for Healthcare Research and Quality; 2012.

- 18. Jones SS, Rudin RS, Perry T, Shekelle PG. Health information technology: an updated systematic review with a focus on meaningful use. Ann Intern Med. 2014;160(1):48–54.

- 19. Chen ES, Bakken S, Currie LM, Patel VL, Cimino JJ. An automated approach to studying health resource and infobutton use. Stud Health Technol Inform. 2006;122:273–78.

- 20. Del Fiol G, Cimino JJ, Maviglia SM, Strasberg HR, Jackson BR, Hulse NC. A large-scale knowledge management method based on the analysis of the use of online knowledge resources. AMIA Annu Symp Proc. 2010;2010:142–46.

- 21. Cook DA, Sorensen KJ, Hersh W, Berger RA, Wilkinson JM. Features of effective medical knowledge resources to support point of care learning: a focus group study. PLoS ONE. 2013;8(11):e80318.

- 22. Callahan A, Pernek I, Stiglic G, Leskovec J, Strasberg HR, Shah NH. Analyzing information seeking and drug-safety alert response by health care professionals as new methods for surveillance. J Med Internet Res. 2015;17:e204.

- 23. Bracke PJ. Web usage mining at an academic health sciences library: an exploratory study. J Med Libr Assoc. 2004;92:421–28.

- 24. Cimino JJ. The contribution of observational studies and clinical context information for guiding the integration of infobuttons into clinical information systems. AMIA Annu Symp Proc. 2009;2009:109–13.

- 25. Hunt S, Cimino JJ, Koziol DE. A comparison of clinicians' access to online knowledge resources using two types of information retrieval applications in an academic hospital setting. J Med Libr Assoc. 2013;101(1):26–31.

- 26. Cook DA, Sorensen KJ, Nishimura RA, Ommen SR, Lloyd FJ. A comprehensive system to support physician learning at the point of care. Acad Med. 2015;90:33–39.

- 27. Society for Medical Decision Making Committee on Standardization of Clinical Algorithms. Proposal for clinical algorithm standards. Med Decis Making. 1992;12(2):149–54.

- 28. Wears RL, Berg M. Computer technology and clinical work: still waiting for Godot. JAMA. 2005;293:1261–63.

- 29. Gonzalez-Gonzalez AI, Dawes M, Sanchez-Mateos J et al. Information needs and information-seeking behavior of primary care physicians. Ann Fam Med. 2007;5:345–52.

- 30. Roshanov PS, Fernandes N, Wilczynski JM et al. Features of effective computerised clinical decision support systems: meta-regression of 162 randomised trials. BMJ. 2013;346:f657.

- 31. Wennberg JE. Unwarranted variations in healthcare delivery: implications for academic medical centres. BMJ. 2002;325:961–64.

- 32. Corallo AN, Croxford R, Goodman DC, Bryan EL, Srivastava D, Stukel TA. A systematic review of medical practice variation in OECD countries. Health Policy. 2014;114:5–14.

- 33. Sheffield KM, Han Y, Kuo YF, Riall TS, Goodwin JS. Potentially inappropriate screening colonoscopy in Medicare patients: variation by physician and geographic region. JAMA Intern Med. 2013;173:542–50.

- 34. Tu JV, Ko DT, Guo H et al. Determinants of variations in coronary revascularization practices. CMAJ. 2012;184:179–86.

- 35. McKinlay JB, Link CL, Freund KM, Marceau LD, O'Donnell AB, Lutfey KL. Sources of variation in physician adherence with clinical guidelines: results from a factorial experiment. J Gen Intern Med. 2007;22:289–96.

- 36. Marrie TJ, Lau CY, Wheeler SL, Wong CJ, Vandervoort MK, Feagan BG. A controlled trial of a critical pathway for treatment of community-acquired pneumonia. CAPITAL Study Investigators. JAMA. 2000;283:749–55.

- 37. Panella M, Marchisio S, Demarchi ML, Manzoli L, Di Stanislao F. Reduced in-hospital mortality for heart failure with clinical pathways: the results of a cluster randomised controlled trial. Qual Saf Health Care. 2009;18(5):369–73.

- 38. Farias M, Jenkins K, Lock J et al. Standardized Clinical Assessment and Management Plans (SCAMPs) provide a better alternative to clinical practice guidelines. Health Aff (Millwood). 2013;32(5):911–20.

- 39. Barry MJ, Edgman-Levitan S. Shared decision making: the pinnacle of patient-centered care. N Engl J Med. 2012;366:780–81.

- 40. Stacey D, Légaré F, Col NF et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2014;Article no. CD001431.

- 41. Bennett NL, Casebeer LL, Kristofco R, Collins BC. Family physicians' information seeking behaviors: a survey comparison with other specialties. BMC Med Inform Decis Mak. 2005;5:9.
