Abstract

Objective

Improved methods to identify nonmedical opioid use can help direct health care resources to individuals who need them. Automated algorithms that use large databases of electronic health care claims or records for surveillance are a potential means to achieve this goal. In this systematic review, we examined the utility, validation attempts, and application of such algorithms for detecting nonmedical opioid use.

Materials and Methods

We searched PubMed and Embase for articles describing automatable algorithms that used electronic health care claims or records to identify patients or prescribers with likely nonmedical opioid use. We assessed algorithm development, validation, and performance characteristics and the settings where they were applied. Study variability precluded a meta-analysis.

Results

Of 15 included algorithms, 10 targeted patients, 2 targeted providers, 2 targeted both, and 1 identified medications with high abuse potential. Most patient-focused algorithms (67%) used prescription drug claims and/or medical claims, with diagnosis codes of substance abuse and/or dependence as the reference standard. Eleven algorithms were developed via regression modeling. Four used natural language processing, data mining, audit analysis, or factor analysis.

Discussion

Automated algorithms can facilitate population-level surveillance. However, there is no true gold standard for determining nonmedical opioid use. Users must recognize the implications of identifying false positives and, conversely, false negatives. Few algorithms have been applied in real-world settings.

Conclusion

Automated algorithms may facilitate identification of patients and/or providers most likely to need more intensive screening and/or intervention for nonmedical opioid use. Additional implementation research in real-world settings would clarify their utility.

Keywords: automated algorithms, electronic claims data, electronic health record, nonmedical opioid use, screening

BACKGROUND AND SIGNIFICANCE

Between 2000 and 2014, the rate of drug overdose deaths in the United States increased nearly 2.5-fold, from 6.2 per 100 000 in 2000 to 14.7 per 100 000 in 2014.1 Drug overdose, whether prescription or nonmedical, is now the leading cause of accidental death in the United States, and prescription opioid medications are a key driver of this statistic. In 2014, deaths from prescription opioids exceeded deaths caused by heroin, methamphetamine, and cocaine combined.1

With the rapid growth of opioid prescriptions in the United States comes an increasing demand to identify and stem the rise of existing or potential nonmedical use. To prevent and treat nonmedical opioid use, providers who prescribe unscrupulously must be prohibited from doing so, and patients with nonmedical opioid use must be provided with treatment and rehabilitation. Health care payers seek both to improve patients’ health and to minimize fraud and waste. The government, a major health care payer and steward of public health, has also become involved. In 2013, the US Food and Drug Administration mandated that pharmaceutical manufacturers conduct postmarketing surveillance on their extended-release and long-acting products.2 All but one state now have prescription drug monitoring programs (PDMPs) in place, with retail pharmacists able to screen prescriptions for potential fraud, waste, and/or nonmedical use.3

When patients are available to self-report behaviors, there are numerous validated survey tools that identify nonmedical opioid use.4–10 While useful in health care settings, these tools miss patients who do not use the health care system regularly and are not able to identify at-risk patients who misrepresent their actual opioid use. Manual survey-based tools are also costly, human resource–intensive, and difficult to implement across large populations. Alternatives are needed to address these shortcomings.

Electronic claims data offer a promising approach for population-level surveillance among patients engaged with the health care system. Health care payers, providers, and pharmaceutical manufacturers have ready access to electronic prescription drug and medical claims databases or electronic health records for large populations, and retail pharmacists have access to PDMPs. These data contain records of opioid and other prescription drug fills, dispensing dates, and/or medical diagnoses that together can highlight potential nonmedical use. Using innovative analytic approaches such as decision trees, neural networks, and natural language processing, researchers have developed automated, electronic claims–based algorithms that can screen large populations, with the potential to facilitate identification of nonmedical opioid use and to direct health care dollars to patients in need of treatment and rehabilitation. To date, it is not clear whether this potential has been realized in regular use in real-world settings. This systematic review investigates whether electronic claims data, and the algorithms they enable, have been or can be meaningfully applied to surveil large populations for nonmedical opioid use and to identify patients attempting to fill prescriptions fraudulently, or prescribers writing them fraudulently, at the very times they are engaged with the health care system.

OBJECTIVE

We conducted the present systematic review to assess the current state of algorithm-driven surveillance for nonmedical opioid use and to identify whether there are opportunities for growth and improvement. To this end, we reviewed what algorithms are currently available, whether they are being used in everyday practice and how often, and what kinds of organizations are using them. For each study, we discuss the data and analytic resources needed to replicate the algorithms, the quality of the data used in screening for nonmedical opioid use, and practical observations about their utility and implementation in real-world settings.

MATERIALS AND METHODS

Data sources

We searched PubMed and Embase on November 21, 2015, using 3 search strategies that incorporated Medical Subject Heading terms as available (Supplementary Appendix S1). The first focused on controlled substances, with terms such as “analgesic,” “opioid,” “controlled substance,” and “narcotic.” The second search strategy focused on detection methodologies and surveillance, using terms such as “algorithm,” “data mining,” and “automated.” Data sources drove the third search strategy and included terms such as “claims” and “health record.” The 3 strategies were then combined to identify articles fitting all selection criteria, described in the Study selection section below. An informationist from the Johns Hopkins Bloomberg School of Public Health assisted with refinement of the search strategy.

Study selection

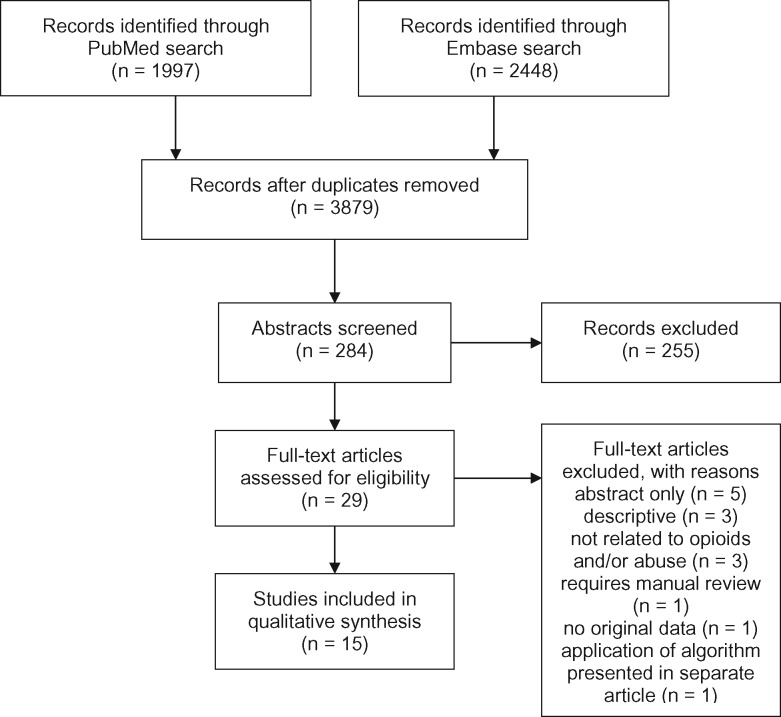

Two independent reviewers (CC and MK) screened titles and abstracts for potentially relevant articles, retaining those that described nonmedical opioid use, utilized electronic data, and presented novel data (Figure 1). From the retained abstracts, CC and MK conducted a full-text review and selected final articles. At both the abstract and full-text review stages, JP resolved discrepancies in inclusion/exclusion judgments. Final articles described a novel automatable algorithm to identify opioid misuse or nonmedical use. We defined automatable algorithms as those that could be deployed repeatedly by running the same programming code. We excluded articles for 1 or more of the following reasons: review article, methodology could not be automated, not available as full text (only descriptive), not related to opioids and/or nonmedical use, or application of an algorithm presented in a separate article.

Figure 1.

Flow diagram: studies identified, excluded, and included using search strategy

Data extraction, synthesis, and quality assessment

A single author (CC or MK) extracted information on each algorithm, including data sources, development and validation, utility in different health care settings, algorithm performance metrics (eg, sensitivity, specificity, positive predictive value, and/or C-statistic), and key strengths and limitations. Variability in study design and conduct precluded quantitative pooling of results.

Search results

In total, 3595 of 3879 retrieved articles were excluded based on title alone. Of the 284 remaining articles, abstract review identified 29 articles for full-text review. Fifteen articles required reconciliation at the abstract screening stage (κ = 0.74) and 3 at the full-text screening stage (κ = 0.79) (Figure 1). Fifteen articles were included in the final systematic review (Table 1).
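The κ values above summarize agreement between the two reviewers beyond what chance alone would produce. The text does not state which κ statistic was used; the minimal sketch below assumes Cohen's kappa for two raters, κ = (p_o − p_e)/(1 − p_e), where p_o is observed agreement and p_e is the agreement expected from each reviewer's marginal include/exclude rates. The function name and the 20 example decisions are hypothetical, not the review's screening data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label proportions
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    p_expected = sum(counts_a[k] * counts_b[k] for k in labels) / (n * n)
    if p_expected == 1.0:
        # Both raters used one identical label for every item
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical screening decisions for 20 abstracts (not the review's data)
reviewer_1 = ["include"] * 8 + ["exclude"] * 10 + ["include", "exclude"]
reviewer_2 = ["include"] * 8 + ["exclude"] * 10 + ["exclude", "include"]
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))
```

In this hypothetical example the reviewers agree on 18 of 20 abstracts and each includes 9, so p_o = 0.90, p_e = 0.505, and κ ≈ 0.80.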

Table 1.

Descriptions of studies

| Author, publication year | Data sources | Study design, number in study sample, and time period | Target population | Algorithm development method | Reported algorithm performance metrics |
|---|---|---|---|---|---|
| Birt et al. (2014) | Prescription drug claims data from large commercial insurance plans (MarketScan database) | Cohort; 6 291 810 patients; 2008–2009 | Individual medications | Comparison of Lorenz curves at 1% and 50% values, medication possession ratio, and proportion of days covered values for medications prone to nonmedical use with medications that are not | Discriminatory ability of each metric (C-statistic); concordance of medication possession ratio vs proportion of days covered, vs Lorenz 1% and Lorenz 50% |
| Parente et al. (2004) | Prescription drug claims data from a multistate database with a mix of health plan types, including indemnity fee-for-service plans, preferred provider organizations, independent practice associations, and health maintenance organizations | Cohort; 7 million patients; 2000 | Patients (primarily) or prescribers | Controlled substance patterns of utilization requiring evaluation system; top 10 patterns evaluated | Algorithm sensitivity; pseudo R2 of model |
| Sullivan et al. (2010) | Administrative claims data, including demographic, clinical, and drug utilization data, from commercial insurance and Medicaid databases | Cohort; 31 845 patients; 2000–2005 | Patients | Polytomous logistic regression; test for linear trend | Criterion validity: test for linear trend |
| Yang et al. (2015) | Prescription drug claims data from a multistate Medicaid database | Cohort; 90 010 patients; 2008–2010 | Patients | 2 indicators (pharmacy shopping indicator, overlapping prescription indicator); diagnostic odds ratio, defined as the odds of being identified as having nonmedical use among patients who actually have nonmedical use (true positives vs false negatives) divided by the odds of being identified as having nonmedical use among patients who do not (false positives vs true negatives) | Criterion validity of the 2 indicators |
| Mailloux et al. (2010) | Prescription drug claims data from Wisconsin’s Medicaid database | Cohort; 190 patients; 1998–1999 | Patients | Decision tree | Sensitivity, specificity, positive predictive value, negative predictive value; validation attempts (concordance between results and gold standard as defined) |
| Iyengar et al. (2014) | Prescription drug and medical claims data from a large health insurance plan | Cohort; 2.3 million patients; 99 000 prescribers; 2011 | Patients and providers | Auditing analysis | Area under the receiver operating characteristic curve (C-statistic) |
| White et al. (2009) | Administrative claims data, including demographic, clinical, and drug utilization data, from a private health insurance plan | Case-control; 632 000 patients; 2005–2006 | Patients | Logistic regression | C-statistic; pseudo R2; model parsimony |
| Rice et al. (2012) | Administrative claims data, including demographic, clinical, and drug utilization data, from a private health insurance plan | Case-control; 821 916 patients; 1999–2009 | Patients | Logistic regression | C-statistic |
| Cochran et al. (2014) | Administrative claims data, including demographic, clinical, and utilization data, from a large health insurance plan | Case-control; 2 841 793 patients; 2000–2008 | Patients | Logistic regression | Sensitivity |
| Dufour et al. (2014) | Administrative claims data, including demographic, clinical, and drug utilization data, from Humana and Truven | Case-control; 3567 patients; 2009–2011 | Patients | Logistic regression | Sensitivity, specificity, positive predictive value, negative predictive value; validation attempts (concordance between results and gold standard as defined) |
| Carrell et al. (2015) | Free text from electronic health records; computer-assisted manual review of clinical notes | Descriptive; 22 142 patients; 2006–2012 | Patients | Natural language processing plus computer-assisted manual record review | False positive rate of the natural language processing plus computer-assisted manual record review approach compared with traditional diagnostic codes in electronic health records |
| Hylan et al. (2015) | Free text from electronic health records | Cohort; 2752 patients; 2008–2012 | Patients | Logistic regression | Sensitivity, specificity, positive predictive value, negative predictive value; C-statistic |
| Epstein et al. (2011) | Automated drug dispensing carts and anesthesia information management systems from a hospital | Case series; 158 providers; 2007–2011 | Providers | Data mining | Sensitivity, specificity, positive predictive value, negative predictive value |
| Ringwalt et al. (2015) | Prescription drug monitoring program data from North Carolina | Cohort; 33 635 providers; 2009–2013 | Providers | Subject matter/clinical expertise | Criterion validity |
| Derrington et al. (2015) | ICD-9-CM codes from hospital discharge data in Massachusetts | Descriptive; 1 728 027 patients; 2002–2008 | Patients | Factor analysis | Number, percentage, and 95% confidence intervals for substance use disorders identified by the algorithm compared with the gold standard |

RESULTS

Data sources, study design, and target population

Researchers typically used data from 2000 to 2012. Prescription drug claims (4 studies) and combined administrative claims databases (medical and drug claims plus demographic data; 6 studies) were the most common data sources for algorithm development (Table 1). Two studies used free-text notes in electronic health records. One study each used hospital discharge data, PDMP data, and data from automated drug dispensing carts and anesthesia information management systems.

Most studies (8) relied on a cohort design and used patients’ baseline demographic, drug, and/or clinical characteristics to build a model. A case-control design, used in 4 studies, was the second most common approach. Most algorithms were designed to identify nonmedical use among patients; 2 specifically identified potential fraud or nonmedical use by providers,11,12 and 2 others applied to either patients or providers.13,14 One algorithm targeted neither patients nor providers but instead identified medications with high abuse potential.15

Analytic approach and validation metrics

Six studies used logistic regression modeling in algorithm development, prioritizing model parsimony and predictive ability.16–21 In these studies, algorithm performance was assessed against a reference (gold) standard using classification measures such as sensitivity, specificity, and positive (or negative) predictive value. Researchers used the C-statistic to assess model discrimination and R2 (pseudo R2 for logistic models) to measure the proportion of variability in outcomes explained by the model.
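For a binary outcome, the C-statistic is the probability that a randomly selected patient with the outcome receives a higher predicted risk than a randomly selected patient without it, with ties counted as one half; it equals the area under the receiver operating characteristic curve. A value of 0.5 indicates no discrimination and 1.0 perfect separation. The minimal sketch below assumes the model's predicted probabilities are already available; the function name and example data are hypothetical, and the pairwise loop favors clarity over speed on claims-scale data.

```python
def c_statistic(predicted_risk, outcome):
    """Concordance (C) statistic for a binary outcome (equal to ROC AUC)."""
    cases = [r for r, y in zip(predicted_risk, outcome) if y == 1]
    non_cases = [r for r, y in zip(predicted_risk, outcome) if y == 0]
    if not cases or not non_cases:
        raise ValueError("need at least one case and one non-case")
    score = 0.0
    # Compare every case with every non-case: O(n_cases * n_non_cases)
    for case_risk in cases:
        for non_case_risk in non_cases:
            if case_risk > non_case_risk:
                score += 1.0  # concordant pair
            elif case_risk == non_case_risk:
                score += 0.5  # tied pair
    return score / (len(cases) * len(non_cases))


# Hypothetical predicted probabilities and observed abuse/dependence codes
risk = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
observed = [1, 1, 1, 0, 0, 0]
print(round(c_statistic(risk, observed), 3))
```

Here 8 of the 9 case/non-case pairs are concordant (the case scored 0.4 ranks below the non-case scored 0.7), giving C ≈ 0.89.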

In 5 studies, authors elicited experts’ recommendations and/or applied existing criteria to develop algorithms.11,13,15,22,23 In these studies, criterion validity (the extent to which the algorithm was related to the authors’ gold standard) was often used to assess algorithm performance. The 4 remaining algorithms employed distinct methodologies: natural language processing (in one case, plus computer-assisted manual record review for validation),24 data mining,12 factor analysis,25 and audit analysis.14 Performance metrics in these studies included sensitivity, positive predictive value, criterion validity, and C-statistics.

Approaches to algorithm development

Across studies, researchers began algorithm development by identifying candidate variables. Authors explicitly relied on subject matter experts’ recommendations and/or applied existing criteria or known predictors to develop algorithms (Supplementary Table S1). Birt et al.15 chose to examine 3 well-known measures of medication utilization: proportion of days covered, medication possession ratio, and Lorenz curves. Yang et al.23 focused exclusively on different definitions of pharmacy shopping. Sullivan, Ringwalt, Parente, and Derrington each began algorithm development using recommendations from expert panels and/or existing scoring approaches.11,13,22,25
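Two of the utilization measures examined by Birt et al. have widely used general definitions, sketched below under stated assumptions. Medication possession ratio (MPR) sums the days’ supply dispensed in a period and divides by the number of days in that period, so overlapping early refills can push it above 1.0; proportion of days covered (PDC) counts each calendar day at most once. This sketch assumes fills are (fill date, days’ supply) pairs, counts only fills dispensed within the window, and truncates supply at the end of the window. Published implementations differ on these choices (for example, some shift overlapping supply forward), Lorenz curves are not shown, and Birt et al.’s exact specifications may differ.

```python
from datetime import date, timedelta


def _fills_in_window(fills, start, end):
    """Keep (fill_date, days_supply) pairs dispensed within [start, end]."""
    return [(d, days) for d, days in fills if start <= d <= end]


def medication_possession_ratio(fills, start, end):
    """Total days' supply dispensed in the window / days in the window (can exceed 1)."""
    period_days = (end - start).days + 1
    return sum(days for _, days in _fills_in_window(fills, start, end)) / period_days


def proportion_of_days_covered(fills, start, end):
    """Share of window days with medication on hand; each day counted once (max 1)."""
    period_days = (end - start).days + 1
    covered = set()
    for fill_date, days in _fills_in_window(fills, start, end):
        for offset in range(days):
            day = fill_date + timedelta(days=offset)
            if day <= end:  # truncate supply that runs past the window
                covered.add(day)
    return len(covered) / period_days


window_start, window_end = date(2015, 1, 1), date(2015, 1, 30)
early_refill = [(date(2015, 1, 1), 30), (date(2015, 1, 11), 30)]  # hypothetical fills
print(medication_possession_ratio(early_refill, window_start, window_end))
print(proportion_of_days_covered(early_refill, window_start, window_end))
```

For a 30-day window with two 30-day fills dispensed 10 days apart, MPR is 2.0 while PDC is 1.0; a large gap between the two measures is one signal of early refilling.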

Two sets of researchers also began with candidate measures or variables but then capitalized on the depth and breadth of the data to identify variables they had not originally selected. In developing their natural language processing algorithm, Carrell et al.24 first defined candidate text strings indicating opioid abuse in electronic health record notes. They then used “snowball” querying, letting the data themselves surface similar synonyms, abbreviations, variant spellings, and grammatical constructions, which expanded their candidate terms to a total of 1288. Finally, they used computer-assisted manual record review to further refine the algorithm. Epstein et al.12 used data mining, a set of techniques for discovering patterns in large datasets, to select possible indicators of opioid diversion.
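The final step of a term-list approach, applying an expanded set of candidate strings to clinical notes, can be sketched with Python’s standard `re` module. This is not Carrell et al.’s system: the three terms below are hypothetical stand-ins for their 1288 candidate terms, and the snowball expansion itself is not shown. The example also suggests why manual review remained necessary: a plain string match cannot distinguish “denies narcotic misuse” from an affirmative mention.

```python
import re


def compile_terms(terms):
    """One case-insensitive regex matching any candidate phrase as whole words."""
    alternatives = []
    # Longest phrases first so a longer phrase is preferred over its prefix
    for term in sorted(terms, key=len, reverse=True):
        words = [re.escape(w) for w in term.split()]
        alternatives.append(r"\s+".join(words))  # tolerate extra spaces/line breaks
    return re.compile(r"\b(?:" + "|".join(alternatives) + r")\b", re.IGNORECASE)


def flag_notes(notes, pattern):
    """Map note_id -> matched phrases for notes with at least one candidate term."""
    hits = {}
    for note_id, text in notes.items():
        found = [m.group(0) for m in pattern.finditer(text)]
        if found:
            hits[note_id] = found
    return hits


# Hypothetical terms and notes, for illustration only
candidate_terms = ["opioid abuse", "narcotic misuse", "opiate dependence"]
notes = {
    "n1": "Hx of Opioid  abuse, in remission.",
    "n2": "Chronic back pain, well controlled.",
    "n3": "Patient denies narcotic misuse.",
}
print(flag_notes(notes, compile_terms(candidate_terms)))
```

Note n3 is flagged even though it is a negation, which is exactly the kind of false positive that record review is meant to remove.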

Common predictors across algorithms

Final algorithms often included indicators for overlapping prescriptions, pharmacy shopping, multiple prescribers, total days’ supply of opioids, and number of prescriptions dispensed, all readily available in prescription drug claims data. When medical claims were available, diagnoses of substance use disorders, mental health conditions, chronic pain, or hepatitis were often included. Definitions of concepts such as pharmacy shopping and overlapping prescriptions varied both within and across studies, making it difficult to judge whether one algorithmic approach outperforms another.
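Because definitions of these indicators varied across studies, the sketch below adopts one simple, hypothetical set: overlap days are calendar days on which 2 or more opioid fills were active, and pharmacy and prescriber counts are numbers of distinct identifiers over the observation window. The `OpioidFill` record and all field names are assumptions for illustration, not a schema from any reviewed study.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class OpioidFill:
    """One dispensed opioid prescription (hypothetical claim layout)."""
    fill_date: date
    days_supply: int
    pharmacy_id: str
    prescriber_id: str


def claim_indicators(fills):
    """Summarize one patient's opioid fills into simple claims-based indicators."""
    active = Counter()  # calendar day -> number of fills with supply on that day
    for f in fills:
        for offset in range(f.days_supply):
            active[f.fill_date + timedelta(days=offset)] += 1
    return {
        "n_fills": len(fills),
        "total_days_supply": sum(f.days_supply for f in fills),
        "overlap_days": sum(1 for n in active.values() if n >= 2),
        "n_pharmacies": len({f.pharmacy_id for f in fills}),
        "n_prescribers": len({f.prescriber_id for f in fills}),
    }


patient_fills = [
    OpioidFill(date(2015, 1, 1), 30, "pharm_A", "dr_1"),
    OpioidFill(date(2015, 1, 16), 30, "pharm_B", "dr_2"),
    OpioidFill(date(2015, 2, 20), 30, "pharm_C", "dr_1"),
]
print(claim_indicators(patient_fills))
```

In this example the first two fills overlap from January 16 to January 30 (15 days), and the patient used 3 pharmacies and 2 prescribers.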

Algorithm validation

Researchers commonly used coded administrative data (eg, ICD codes) for opioid abuse or dependence as the gold, or reference, standard for algorithm validation.16–19,22,23 Three studies used manual record or chart review as the gold standard, developing their algorithms to identify a larger group of potential patients with nonmedical opioid use (high sensitivity).13,21,24 Two sets of researchers used more unconventional gold standards, namely, other algorithms: Hylan et al.20 used Carrell et al.’s natural language processing approach,24 and the Clinical Classifications Software for Mental Health and Substance Abuse algorithm was used to validate a new female-specific algorithm.25

Algorithm performance

Five studies reported the sensitivity and specificity of their algorithms, which varied with the cutoff values chosen for each study’s outcome. Using electronic health records and a natural language processing approach, Hylan et al.’s algorithm had a sensitivity of 60.1% and specificity of 71.6%.20 In another study that used pharmacy claims to identify pharmacy shopping by patients, reported sensitivity ranged from 47% to 70%, depending on the criteria used to define the outcome (specificity was not provided).23 White et al.’s16 approach to identifying patients at high risk for opioid abuse yielded 95% sensitivity and 71% specificity. Using a decision tree modeling approach and pharmacy claims for 190 patients, Mailloux et al.’s21 algorithm identified patients with current abuse and/or fraud with high sensitivity (87%) and specificity (97%). Another algorithm, optimized to minimize false positives, had poor sensitivity (<20%) but high specificity (>98%).19 Of 6 studies that reported a C-statistic, 3 reported values between 0.9 and 1.0,15–17 2 between 0.8 and 0.9,14,19 and 1 between 0.7 and 0.8.20
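The classification measures reported above, and the diagnostic odds ratio (DOR) used by Yang et al. (Table 1), all derive from the same 2 × 2 table of algorithm result against reference standard: sensitivity = TP/(TP + FN), specificity = TN/(TN + FP), PPV = TP/(TP + FP), NPV = TN/(TN + FN), and DOR = (TP/FN)/(FP/TN). A minimal sketch with hypothetical counts; the function names are illustrative.

```python
def safe_div(numerator, denominator):
    """Return numerator / denominator, or NaN when the metric is undefined."""
    return numerator / denominator if denominator else float("nan")


def classification_metrics(tp, fp, fn, tn):
    """Screening metrics from a 2 x 2 table of algorithm result vs reference standard."""
    return {
        "sensitivity": safe_div(tp, tp + fn),
        "specificity": safe_div(tn, tn + fp),
        "ppv": safe_div(tp, tp + fp),
        "npv": safe_div(tn, tn + fn),
        # DOR = (TP/FN) / (FP/TN); returned as NaN here if FP or FN is zero
        "diagnostic_odds_ratio": safe_div(tp * tn, fp * fn),
    }


# Hypothetical counts: 100 reference-standard cases, 900 non-cases
metrics = classification_metrics(tp=90, fp=50, fn=10, tn=850)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Even with 90% sensitivity and about 94% specificity, only about 64% of flagged patients in this hypothetical table are true cases, because non-cases outnumber cases 9 to 1.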

Seven studies used alternative measures of model performance. Parente et al.13 used prescription drug claims for 500 000 health plan members to develop an algorithm that identified controlled substance patterns requiring further evaluation with >50% concordance for 9 of the 10 algorithm variables. A second stated that the odds of nonmedical opioid use increased linearly with each increasing value of their misuse score.22 An algorithm to predict opioid dependence within 2 years of an initial prescription noted >79.5% prediction concordance between the algorithm development and validation datasets18 and >80% with an algorithm developed using natural language processing and electronic health records.

DISCUSSION

In this systematic review, we identified 15 automatable algorithms to identify patients and/or prescribers at risk of nonmedical opioid use. Most algorithms relied on electronic medical, demographic, and/or prescription drug claims, data that are increasingly available to insurers, pharmacy benefits managers (PBMs), and health systems. With the rapid growth of nonmedical opioid use, identifying candidate automatable algorithms for real-world, population-level surveillance is critical to targeting patient outreach and rehabilitation and to minimizing provider fraud. Below, we highlight the practical implications of our review.

Selection of predictors

Many studies relied on known predictors of nonmedical opioid use to develop algorithms. While capitalizing on existing knowledge ensures that important predictors are considered, researchers should then go further and test whether these a priori variables actually merit inclusion in the new algorithm. Without a new, empirical appraisal of candidate variables, researchers risk merely replicating the work of others and/or including variables that are not useful. Even with statistical testing, however, researchers may miss other clues (predictors) in their data. Therefore, when possible, we recommend that researchers take a third, data-driven step and employ advanced techniques such as chi-square automatic interaction detection (CHAID), machine learning, neural networks, and data mining. For example, Cochran et al.18 and Mailloux et al.21 used chi-square automatic interaction detection, while Epstein et al.12 used data mining. Researchers who let the data “talk” via these techniques often find patterns that were not obvious before and may identify additional predictors or interactions to optimize their algorithms. Recognizing that every methodologic approach has limitations, researchers can also try multiple complementary approaches. Combining a priori and data-driven variable identification is not yet the most common approach for detecting nonmedical opioid use, but the combination holds promise for creating algorithms that are accurate and reliable.
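At each node, CHAID-style tree growing tests every candidate predictor against the outcome with a chi-square test and splits on the most significant one. The sketch below shows only that selection step for binary predictors; full CHAID also merges categories and applies Bonferroni-adjusted P values, which are omitted here. Because every 2 × 2 table has 1 degree of freedom, ranking by the chi-square statistic gives the same order as ranking by P value. Variable names and records are hypothetical, and 4 records are far too few for a valid test; they only exercise the code.

```python
def pearson_chi_square(table):
    """Pearson chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            if expected > 0:  # skip empty margins rather than divide by zero
                stat += (observed - expected) ** 2 / expected
    return stat


def best_binary_split(records, candidates, outcome):
    """Choose the 0/1 predictor whose 2 x 2 table with the outcome has the largest chi-square."""
    scores = {}
    for var in candidates:
        table = [[0, 0], [0, 0]]  # rows: predictor 0/1; columns: outcome 0/1
        for rec in records:
            table[int(rec[var])][int(rec[outcome])] += 1
        scores[var] = pearson_chi_square(table)
    return max(scores, key=scores.get), scores


# Hypothetical patient records with binary flags
records = [
    {"pharmacy_shopping": 1, "chronic_pain": 1, "abuse_dx": 1},
    {"pharmacy_shopping": 1, "chronic_pain": 0, "abuse_dx": 1},
    {"pharmacy_shopping": 0, "chronic_pain": 1, "abuse_dx": 0},
    {"pharmacy_shopping": 0, "chronic_pain": 0, "abuse_dx": 0},
]
print(best_binary_split(records, ["pharmacy_shopping", "chronic_pain"], "abuse_dx"))
```

A tree-growing procedure would apply this step recursively within each resulting subgroup, which is how interactions between predictors can emerge without being specified in advance.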

Absence of a true reference standard

Many of the studies lacked a true reference standard (gold standard) against which to evaluate automated algorithm performance. The absence of a reference standard is not surprising, given the challenge of assessing whether nonmedical use is actually present for any particular opioid user (an issue that even a treating physician may find difficult to assess). Several studies made rather strong assumptions about nonmedical opioid use (eg, that the presence of particular diagnosis codes indicates nonmedical use while their absence indicates no issue), assumptions that are far from precise. While algorithms trained to this standard likely have higher specificity, they are likely to have comparatively low sensitivity, missing many patients with nonmedical use who have not received a medical diagnosis, especially if they are “doctor shopping” or otherwise able to hide their opioid use, and/or if their prescribers are hesitant to enter an administrative code for nonmedical opioid use. Alternatively, the false positive rate may be high if prescribers start to enter nonmedical opioid use diagnoses as “rule-out” diagnoses.

Reliable identification of nonmedical opioid use is likely to remain a challenge. Indeed, in their 2015 systematic review of the published literature, Cochran et al.26 found broad variation and inconsistency in defining and validating nonmedical opioid use, leading to considerable disparities in reported rates of nonmedical opioid use across populations. Our review, conducted 2 years later and focused exclusively on automated algorithms, found no improvement in definitional consistency.

Person power

For readers considering implementing one of these automated algorithms in their own setting, feasibility will depend on both the availability and the analytic sophistication of person power. Some of the algorithms used simple count measures or weighted scores that can be integrated into data systems quickly and easily with minimal training. More sophisticated algorithms, such as the decision tree described by Mailloux et al.,21 the prediction model developed by Iyengar et al.,14 and the natural language processing technique described by Carrell et al.,24 require intensive programming and an initial commitment of considerable resources and expertise. For some organizations, implementing these algorithms may not be cost-effective or even feasible.
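To illustrate how little code a simple weighted score can require, the sketch below adds points whenever a claims-based indicator meets a cutoff and routes patients at or above a total threshold for further review. Every rule name, cutoff, weight, and the threshold are hypothetical placeholders, not values from any reviewed algorithm; in practice they would come from expert input or a fitted model.

```python
# Hypothetical rules: indicator key, minimum value that triggers the rule, points
RULES = {
    "overlapping_prescriptions": ("overlap_days", 7, 2),
    "pharmacy_shopping": ("n_pharmacies", 3, 3),
    "multiple_prescribers": ("n_prescribers", 3, 3),
    "high_days_supply": ("total_days_supply", 90, 1),
}
REVIEW_THRESHOLD = 5  # hypothetical cutoff for routing to further review


def weighted_score(indicators, rules=RULES):
    """Sum the points of every rule whose indicator meets its cutoff."""
    triggered = [
        name for name, (key, cutoff, _) in rules.items()
        if indicators.get(key, 0) >= cutoff
    ]
    return sum(rules[name][2] for name in triggered), triggered


def needs_review(indicators, threshold=REVIEW_THRESHOLD):
    """Flag a patient for further (human) review, not for a diagnosis."""
    score, _ = weighted_score(indicators)
    return score >= threshold


patient = {"overlap_days": 15, "n_pharmacies": 3, "n_prescribers": 2, "total_days_supply": 90}
print(weighted_score(patient), needs_review(patient))
```

Keeping rules in a plain table like this makes the criteria easy to audit and rerun identically on each screening cycle, which is the main practical advantage of score-based algorithms over more complex models.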

Data

Data availability and sources are key considerations for all end users who wish to implement automated detection algorithms. In this review, prescription drug claims, available to managed care organizations, PBMs, and other health insurers, were the most common data source for algorithm development. On the other hand, drug claims–based algorithms are of little use to the practicing clinician, who may have access only to electronic health record data and no prescription drug claims at all. Even for PBMs, drug claims do not tell the whole story: cash purchases are not recorded in claims data, so some dispensing remains invisible to claims-based algorithms.

Surveillance to identify potential nonmedical opioid use, not to obtain proof of such use

Finally, the utility of automated claims-based algorithms lies in their capacity to screen large populations for nonmedical opioid use quickly and cost-efficiently, applying the same criteria each time screening is performed. As surveillance tools, the automated algorithms we reviewed will falsely identify some patients and/or providers as having nonmedical opioid use when they do not (false positives). If the goal of the algorithm is to find any patient or provider who may have nonmedical use, false positives are the price of not missing potential cases that need follow-up, and more specific testing should then be performed to confirm nonmedical opioid use. If the algorithm is relied upon for definitive diagnosis, patient trust could be irreparably broken or a provider could lose his/her license to practice. As with the tuberculin skin test, which is administered quickly and cheaply to screen for tuberculosis, initial screening must be followed by additional testing, because the consequences of a false tuberculosis diagnosis are not trivial.
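How many positives are false depends heavily on how common nonmedical use is in the screened population. For a screen with sensitivity Se and specificity Sp applied where prevalence is p, PPV = Se·p / (Se·p + (1 − Sp)(1 − p)). The sketch below plugs in the 95% sensitivity and 71% specificity reported for White et al.’s algorithm together with an assumed prevalence of 2% among 100 000 members. The prevalence is purely illustrative, and performance measured in one study population against one reference standard may not transfer to another.

```python
def screening_yield(sensitivity, specificity, prevalence, population):
    """Expected screening outcomes, assuming the stated test characteristics hold."""
    cases = prevalence * population
    non_cases = population - cases
    true_pos = sensitivity * cases
    false_pos = (1 - specificity) * non_cases
    return {
        "true_positives": true_pos,
        "false_positives": false_pos,
        "false_negatives": cases - true_pos,
        # PPV = Se*p / (Se*p + (1 - Sp)*(1 - p)), expressed here in counts
        "ppv": true_pos / (true_pos + false_pos),
    }


# Se and Sp as reported for White et al.; the 2% prevalence is an assumption
result = screening_yield(sensitivity=0.95, specificity=0.71, prevalence=0.02, population=100_000)
for name, value in result.items():
    print(f"{name}: {value:,.2f}")
```

Under these assumptions, about 1900 true cases would be flagged alongside roughly 28 400 false positives (a PPV near 6%), and about 100 cases would be missed. This arithmetic is why a positive screen should prompt further assessment rather than serve as a diagnosis.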

Conversely, the automated algorithms will also miss some patients and/or providers who do have nonmedical use but are not identified (false negatives). Missing cases of nonmedical opioid use is concerning, as patients will not receive care and intervention, and providers may continue with diversion or fraud. We recommend repeated screening, again similar to that for tuberculosis and other diseases, as well as incorporating new information outside the algorithm’s purview (eg, a patient tells his doctor that he has been stealing a family member’s opioid medications) as part of ongoing surveillance activities.

CONCLUSION

In this systematic review, we identified 15 automated algorithms to describe and identify problematic nonmedical opioid use. In contrast to manual record review or face-to-face patient assessment, automated algorithms are well suited to large population-level surveillance and are less costly and resource-intensive, making them most useful for organizations such as health insurers, PBMs, and pharmaceutical companies. Even with these advantages, our review highlights the limitations of such algorithms. In particular, there is no one gold standard for determining nonmedical opioid use. Analytic sophistication and data availability will influence whether algorithm implementation is feasible. Finally, users must recognize the implications of identifying false positives and, conversely, false negatives. With these advantages and limitations in mind, we recommend using the insights in this review as a jumping-off point for developing an algorithm implementation plan that is best suited to the users’ specific setting.


ACKNOWLEDGMENTS

None.

Funding

This study was funded by CVS Health.

COMPETING INTERESTS

GCA is chair of the Food and Drug Administration’s Peripheral and Central Nervous System Advisory Committee; serves as a paid consultant to PainNavigator, a mobile startup to improve patients’ pain management; serves as a paid consultant to IMS Health; and serves on an IMS Health scientific advisory board. This arrangement has been reviewed and approved by Johns Hopkins University in accordance with its conflict of interest policies. JMP and TAP are employees of and hold stock in CVS Health. CC and MKK are employees of CVS Health. At the time of the study, WHS was an employee of CVS Health.

Contributors

All authors contributed significantly to the work. CC, JMP, WHS, TAB, and GCA designed the study. CC and MKK acquired the data. CC, MKK, and JMP analyzed the data. CC, MKK, JMP, WHS, TAB, and GCA interpreted the data. CC drafted the manuscript, and MKK, JMP, WHS, TAB, and GCA revised the manuscript for important intellectual content. All authors gave final approval of the manuscript and agreed to accountability for all aspects of the work.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

References

- 1. Rudd RA, Aleshire N, Zibbell JE. et al. Increases in Drug and Opioid Overdose Deaths — United States, 2000–2014. Morbid Mortal Week Rep. 2016;64;1378–82. [DOI] [PubMed] [Google Scholar]

- 2. Food and Drug Administration. FDA Announces Safety Labeling Changes and Postmarket Study Requirements for Extended-release and Long-acting Opioid Analgesics. 2013. http://www.fda.gov/NewsEvents/Newsroom/PressAnnouncements/ucm367726.htm. Accessed February 1, 2017.

- 3. Prescription Drug Monitoring Program Center for Technical Assistance and Training. Prescription Drug Monitoring Frequently Asked Questions (FAQ). 2015. http://www.pdmpassist.org/content/prescription-drug-monitoring-frequently-asked-questions-faq. Accessed April 30, 2016.

- 4. Butler SF, Budman SH, Fernandez K. et al. Validation of a screener and opioid assessment measure for patients with chronic pain. Pain. 2004;112:65–75. [DOI] [PubMed] [Google Scholar]

- 5. Butler SF, Fernandez K, Benoit C. et al. Validation of the revised Screener and Opioid Assessment for Patients with Pain (SOAPP-R). J Pain. 2008;9:360–72. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Webster LR, Webster RM. Predicting aberrant behaviors in opioid-treated patients: preliminary validation of the Opioid Risk Tool. Pain Med. 2005;6:432–42. [DOI] [PubMed] [Google Scholar]

- 7. Butler SF, Budman SH, Fernandez KC. et al. Development and validation of the Current Opioid Misuse Measure. Pain. 2007;130:144–56. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Compton P, Darakjian J, Miotto K. Screening for addiction in patients with chronic pain and “problematic” substance use: evaluation of a pilot assessment tool. J Pain Symptom Manage. 1998;16:355–63.

- 9. Compton PA, Wu SM, Schieffer B, et al. Introduction of a self-report version of the Prescription Drug Use Questionnaire and relationship to medication agreement noncompliance. J Pain Symptom Manage. 2008;36:383–95.

- 10. Sehgal N, Manchikanti L, Smith HS. Prescription opioid abuse in chronic pain: a review of opioid abuse predictors and strategies to curb opioid abuse. Pain Physician. 2012;15:ES67–92.

- 11. Ringwalt C, Schiro S, Shanahan M, et al. The use of a prescription drug monitoring program to develop algorithms to identify providers with unusual prescribing practices for controlled substances. J Prim Prev. 2015;36:287–99.

- 12. Epstein RH, Gratch DM, McNulty S, et al. Validation of a system to detect scheduled drug diversion by anesthesia care providers. Anesth Analg. 2011;113:160–64.

- 13. Parente ST, Kim SS, Finch MD, et al. Identifying controlled substance patterns of utilization requiring evaluation using administrative claims data. Am J Manag Care. 2004;10:783–90.

- 14. Iyengar VS, Hermiz KB, Natarajan R. Computer-aided auditing of prescription drug claims. Health Care Manag Sci. 2014;17:203–14.

- 15. Birt J, Johnston J, Nelson D. Exploration of claims-based utilization measures for detecting potential nonmedical use of prescription drugs. J Manag Care Spec Pharm. 2014;20:639–46.

- 16. White AG, Birnbaum HG, Schiller M, et al. Analytic models to identify patients at risk for prescription opioid abuse. Am J Manag Care. 2009;15:897–906.

- 17. Rice JB, White AG, Birnbaum HG, et al. A model to identify patients at risk for prescription opioid abuse, dependence, and misuse. Pain Med. 2012;13:1162–73.

- 18. Cochran BN, Flentje A, Heck NC, et al. Factors predicting development of opioid use disorders among individuals who receive an initial opioid prescription: mathematical modeling using a database of commercially-insured individuals. Drug Alcohol Depend. 2014;138:202–08.

- 19. Dufour R, Mardekian J, Pasquale MK, et al. Understanding predictors of opioid abuse: predictive model development and validation. Am J Pharm Benefits. 2014;6:208–16.

- 20. Hylan TR, Von Korff M, Saunders K, et al. Automated prediction of risk for problem opioid use in a primary care setting. J Pain. 2015;16:380–87.

- 21. Mailloux AT, Cummings SW, Mugdh M. A decision support tool for identifying abuse of controlled substances by ForwardHealth Medicaid members. J Hosp Mark Public Relations. 2010;20:34–55.

- 22. Sullivan MD, Edlund MJ, Fan M-Y, et al. Risks for possible and probable opioid misuse among recipients of chronic opioid therapy in commercial and medicaid insurance plans: The TROUP Study. Pain. 2010;150:332–39.

- 23. Yang Z, Wilsey B, Bohm M, et al. Defining risk for prescription opioid overdose: pharmacy shopping and overlapping prescriptions among long-term opioid users in medicaid. J Pain. 2015;16:445–53.

- 24. Carrell DS, Cronkite D, Palmer RE, et al. Using natural language processing to identify problem usage of prescription opioids. Int J Med Inform. 2015;84:1057–64.

- 25. Derrington TM, Bernstein J, Belanoff C, et al. Refining measurement of substance use disorders among women of child-bearing age using hospital records: the development of the Explicit-Mention Substance Abuse Need for Treatment in Women (EMSANT-W) Algorithm. Matern Child Health J. 2015;19:2168–78.

- 26. Cochran G, Woo B, Lo-Ciganic W-H, et al. Defining nonmedical use of prescription opioids within health care claims: a systematic review. Subst Abus. 2015;36:192–202.
