Abstract

Objective

To compare the efficiency and safety of speech recognition (SR)-assisted clinical documentation within an electronic health record (EHR) system with those of documentation using keyboard and mouse (KBM).

Methods

Thirty-five emergency department clinicians undertook randomly allocated clinical documentation tasks using KBM or SR on a commercial EHR system. Tasks were simple or complex, and with or without interruption. Outcome measures included task completion times and observed errors. Errors were classed by their potential for patient harm. Error causes were classified as IT system/system integration, user interaction, comprehension, or typographical. User-related errors were further classified as errors of omission or commission.

Results

Mean task completion times were 18.11% longer overall when using SR compared to KBM (P = .001), 16.95% longer for simple tasks (P = .050), and 18.40% longer for complex tasks (P = .009). More errors were observed with SR than with KBM (KBM 32, SR 138), for both simple (KBM 9, SR 75; P < .001) and complex (KBM 23, SR 63; P < .001) tasks. Interruptions did not significantly affect task completion times or error rates for either modality.

Discussion

For clinical documentation, SR was slower than KBM and increased the risk of documentation errors, including errors with the potential to cause clinical harm. Some of the observed increase in errors may be due to suboptimal SR-to-EHR integration and workflow.

Conclusion

Use of SR to drive interactive clinical documentation in the EHR requires careful evaluation. Current-generation implementations may require significant development before they are safe and effective. Improving system integration and workflow, as well as SR accuracy and user-focused error correction strategies, may improve SR performance.

Keywords: patient safety, electronic health record, speech recognition, documentation, medical errors

BACKGROUND AND SIGNIFICANCE

Electronic health records (EHRs) are rapidly becoming mandatory for clinical documentation worldwide, and can result in improved documentation completeness and accuracy.1 The use of EHRs is, however, often associated with increased documentation time for clinicians compared to use of paper records.2 Speech recognition (SR) systems are seen as an alternative input modality that may be faster and more acceptable to clinicians than the use of keyboard and mouse (KBM) alone.

In the clinical dictation setting, such as radiology results reporting, SR can decrease overall document turnaround time compared to transcription services.3 SR is also considered a viable option for clinicians who cannot touch-type or are untrained in EHR use.4,5

SR is an increasingly common input modality across a range of consumer devices, from smartphones to refrigerators,6 and has become the standard method for documentation in specialty areas such as radiology results reporting. Given the significant time clinicians spend on the ubiquitous task of clinical documentation, it is surprising that the benefits of SR for EHR documentation and navigation have been relatively unexplored. Our recent review of the literature identified only a few studies that compared SR to dictation and transcription for clinical report creation. None explored the use of SR for general input and navigation of the EHR, and very few directly compared SR to the use of KBM, the most common input modality for EHR documentation.3

OBJECTIVE

The objective of this study was to compare the efficiency and safety of EHR documentation using SR with those of documentation using KBM alone, with a common commercial EHR in a controlled experimental setting. The 2 input modalities were compared for efficiency (measured by time to complete tasks) and safety (measured by occurrence of documentation errors). The potential influence of task complexity and interruptions on documentation performance was also examined.

MATERIALS AND METHODS

A within-subject experimental study was undertaken with emergency department (ED) physicians, each of whom was assigned 8 standardized clinical documentation tasks using a commercial EHR. Participants navigated the EHR and documented patient information for simulated patients. The order of task completion was allocated randomly, with half of the tasks assigned to KBM and half to SR.

The 8 documentation tasks were representative of those commonly undertaken within an EHR by ED physicians and included patient assignment, patient assessment, diagnosis, orders, and patient discharge. Tasks were chosen in consultation with senior ED clinicians, who did not further participate in the trials. All simulated patients had active records available in the experimental version of the standard ED EHR (see Supplementary Appendix A).

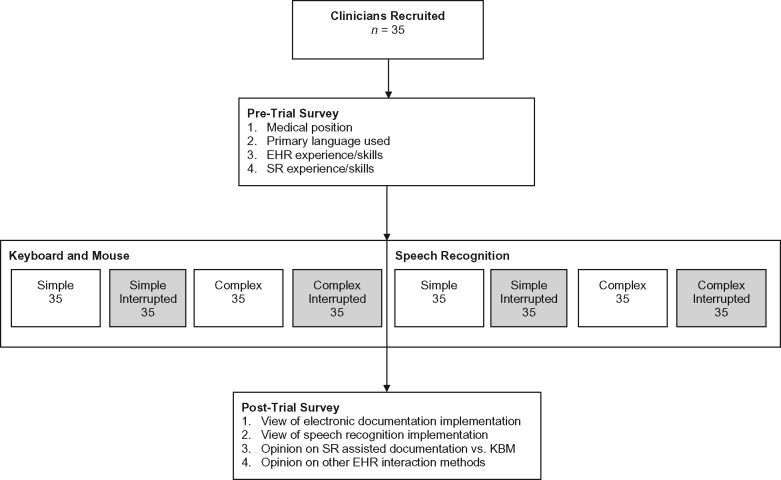

To allow for variation in task complexity, 4 of the 8 tasks were designed to be simple and 4 complex. Complexity was measured by the number of subtasks, with the simple tasks having 2 subtasks and complex tasks having 4. Given that interruptions are commonplace in clinical settings and can contribute to documentation error, 4 of the 8 tasks included a randomly assigned interruption condition.7,8 Interruptions were generated by a pop-up with a multiple-choice question taken from an Australasian College for Emergency Medicine fellowship exam practice set, and occurred at the same predefined stage of task completion. A similar interruption was generated for simple and complex tasks in the KBM and SR conditions (see Figure 1).
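The task allocation described above can be sketched as a small randomization routine. This is a minimal sketch under an assumption the text does not state outright: that each participant completes every combination of complexity, input modality, and interruption exactly once (2 × 2 × 2 = 8 tasks), in a random order. The function name `allocate_conditions` and the per-participant seed are hypothetical and are not part of the study software.

```python
import itertools
import random

# Assumed factorial structure (not stated explicitly in the text):
# each participant sees every complexity x modality x interruption combination once.
COMPLEXITY = ("simple", "complex")
MODALITY = ("KBM", "SR")
INTERRUPTION = (False, True)


def allocate_conditions(participant_seed: int) -> list[tuple[str, str, bool]]:
    """Return the 8 task conditions in a random order for one participant.

    Seeding per participant makes the allocation reproducible for auditing.
    """
    conditions = list(itertools.product(COMPLEXITY, MODALITY, INTERRUPTION))
    rng = random.Random(participant_seed)
    rng.shuffle(conditions)
    return conditions


# Usage: allocate_conditions(7) -> a shuffled list such as
# [("complex", "SR", True), ("simple", "KBM", False), ...]
```

Under this assumption, half of each participant's tasks are KBM and half SR, and half carry an interruption, which matches the proportions given in the text.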

Figure 1.

Experiment conceptual design.

The clinical software used for the experiment was the Cerner Millennium suite with the FirstNet ED component (v2012.01.30) and Nuance Dragon Medical 360 Network Edition UK (version 2.0, 12.51.200.072) SR software. Both were configured to replicate the operation of the EHR that participants used daily. All user actions, down to individual keystrokes, were automatically logged with recording software. Session EHR screens and audio were also separately recorded with a High-Definition Multimedia Interface capture device (see Supplementary Appendices B and C).

Thirty-five participants volunteered from 3 urban teaching hospitals in Sydney, Australia, from an eligible population of approximately 100 ED clinicians. To be eligible, participants were required to have completed training in the EHR system, including specific SR training (EHR 4 h, SR 2 h). Clinicians were excluded if they had a pronounced speech impediment or physical disability that might affect system use.

It was estimated that a sample size of 27 clinicians would be sufficient to test for differences in time efficiency and error rates when using a t-test with a significance level of 0.05 and power of 0.95. Calculations were performed using G*Power (v3.1).9
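The text does not report the effect size entered into G*Power, so the sketch below takes it as an input. It uses the normal approximation n = ((z₁₋α/₂ + z_power) / d_z)² and assumes a two-sided paired (within-subject) comparison; G*Power solves the exact noncentral-t problem, which gives a slightly larger n for the same inputs. `paired_sample_size` is a hypothetical helper for illustration, not a G*Power function.

```python
import math
from statistics import NormalDist


def paired_sample_size(effect_size_dz: float, alpha: float = 0.05,
                       power: float = 0.95) -> int:
    """Approximate n for a two-sided paired comparison (normal approximation).

    effect_size_dz: standardized mean of the paired differences (assumed input).
    The exact t-based answer from G*Power is slightly larger for small n.
    """
    if effect_size_dz <= 0:
        raise ValueError("effect size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = z.inv_cdf(power)          # quantile for the desired power
    return math.ceil(((z_alpha + z_power) / effect_size_dz) ** 2)


# Usage: paired_sample_size(0.5) -> 52; smaller effects need more participants.
```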

Participants self-assessed their prior experience with clinical documentation using the EHR and the 2 input methods through a pre-study questionnaire. A brief exit questionnaire solicited opinions on the technologies used and their implementation within their workplace. The study was approved by the university and participating hospitals’ ethics committees. The trials took place over 2 months, commencing March 2015.

OUTCOME MEASURES

The efficiency of each input modality was measured by the time taken to complete a documentation task, including separate measurements for all subtasks. Task completion times excluded time spent during any interruptions. The time required to launch the dictation dialog box was also deducted, because it reflected the way in which the SR system was locally integrated with the EHR rather than user behavior or SR processing time.
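The exclusion rule above amounts to subtracting excluded intervals from the task window. The text does not describe how the keystroke logs were processed, so the following is a minimal sketch assuming each task and each excluded period (interruption, dictation dialog launch) is available as a start/end timestamp in seconds. `net_task_time` is a hypothetical helper; it clips excluded intervals to the task window and merges overlaps so no time is subtracted twice.

```python
def net_task_time(task_start: float, task_end: float,
                  excluded: list[tuple[float, float]]) -> float:
    """Task duration (s) minus time spent inside excluded intervals.

    Excluded intervals are clipped to the task window and merged,
    so overlapping interruptions are only deducted once.
    """
    clipped = sorted(
        (max(s, task_start), min(e, task_end))
        for s, e in excluded
        if min(e, task_end) > max(s, task_start)  # keep only overlapping parts
    )
    removed = 0.0
    cur_start, cur_end = None, None
    for s, e in clipped:
        if cur_end is None or s > cur_end:
            # Disjoint from the current merged run: close it, start a new one
            if cur_end is not None:
                removed += cur_end - cur_start
            cur_start, cur_end = s, e
        else:
            cur_end = max(cur_end, e)  # overlapping: extend the current run
    if cur_end is not None:
        removed += cur_end - cur_start
    return (task_end - task_start) - removed


# Usage: a 200 s task with a 30 s interruption and a 5 s dialog launch
# net_task_time(0, 200, [(50, 80), (100, 105)]) -> 165.0
```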

The safety of documentation performance was assessed by the number of errors observed. Two reviewers (TH and DL) assigned each observed error a label in each of 3 categories (see Supplementary Appendix D for the trial errors observed and their assigned labels).

Potential for Patient Harm (PPH): This was an assessment of the risk that an error could have a major, moderate, or minor impact on patient outcomes, based on the scale in the US Food and Drug Administration 2005 guidance document.10

Error Type: The nature of the error was separated into 3 classes: (A) integration/system: associated with technology (including software, software integration, and hardware); (B) user: associated with user action; and (C) comprehension: associated with the user's comprehension of the task (eg, the user adds words to or omits words from the prescribed task). Errors could be assigned to more than 1 class within this label set.

User Error Type: Where user errors occurred, they were assigned 1 of 2 additional labels: (A) omission: errors that occurred when a participant failed to complete an assigned task, and (B) commission: errors that occurred when participants incorrectly executed an assigned task.

Minor typographical errors, such as missing full stops or incorrect capitalization, were treated as a discrete category, because they had no potential for harm and could not easily be assigned an Error Type.
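The labeling scheme above can be sketched as a small data structure. This is a minimal sketch, not the coding instrument used in the study: the class and field names (`ObservedError`, `count_by_modality`, and so on) are hypothetical. It encodes 3 rules taken from the text: every non-typographical error has a PPH rating, Error Type labels are a non-exclusive set, and typographical errors carry no other labels.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional


class PPH(Enum):
    MAJOR = "major"
    MODERATE = "moderate"
    MINOR = "minor"


class ErrorType(Enum):
    INTEGRATION_SYSTEM = "integration/system"
    USER = "user"
    COMPREHENSION = "comprehension"


class UserErrorType(Enum):
    OMISSION = "omission"
    COMMISSION = "commission"


@dataclass
class ObservedError:
    modality: str                                      # "KBM" or "SR"
    typographical: bool = False                        # discrete category
    pph: Optional[PPH] = None
    types: set[ErrorType] = field(default_factory=set)  # non-exclusive labels
    user_type: Optional[UserErrorType] = None

    def __post_init__(self) -> None:
        if self.modality not in ("KBM", "SR"):
            raise ValueError("modality must be 'KBM' or 'SR'")
        if self.typographical:
            # Typographical errors had no potential for harm and no Error Type
            if self.pph is not None or self.types or self.user_type is not None:
                raise ValueError("typographical errors carry no other labels")
        elif self.pph is None:
            raise ValueError("non-typographical errors need a PPH rating")


def count_by_modality(errors: list[ObservedError],
                      predicate: Callable[[ObservedError], bool]) -> dict[str, int]:
    """Count errors matching `predicate`, split by input modality."""
    counts = {"KBM": 0, "SR": 0}
    for err in errors:
        if predicate(err):
            counts[err.modality] += 1
    return counts
```

Because `types` is a set, summing the Error Type counts can exceed the number of errors, which is why category totals for Error Type need not equal the non-typographical total.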

Definitions for these error classes are contained in Supplementary Appendix E. The process for allocating observed errors to class and the inter-rater agreement are presented in Supplementary Appendix D. Inter-rater agreement was calculated using Cohen’s kappa.11 Initial observed agreement was robust across all 3 categories: (1) 81.48% agreement, κ = 0.694, P < .001, 95% confidence interval [CI], 0.476–0.912; (2) 88.89% agreement, κ = 0.800, P < .001, 95% CI, 0.590–1.010; (3) 85.19% agreement, κ = 0.778, P < .001, 95% CI, 0.584–0.971. All discrepancies between the reviewers were resolved through discussion.
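The agreement statistic cited above can be sketched as follows. Cohen's kappa compares the observed agreement p_o (the percentages reported above) with the agreement p_e expected by chance from each reviewer's label frequencies: κ = (p_o − p_e) / (1 − p_e). This sketch implements the unweighted form; reference 11 describes the weighted variant, and the inter-rater details are in Supplementary Appendix D rather than reproduced here. `cohens_kappa` is a hypothetical helper, and the counts in the usage line are illustrative, not study data.

```python
def cohens_kappa(table: list[list[int]]) -> float:
    """Unweighted Cohen's kappa from a square reviewer-1 x reviewer-2 count table.

    table[i][j] = number of errors reviewer 1 labeled i and reviewer 2 labeled j.
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    if n == 0 or any(len(row) != k for row in table):
        raise ValueError("need a non-empty square table")
    p_observed = sum(table[i][i] for i in range(k)) / n  # diagonal = agreement
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    # Chance agreement: product of each reviewer's marginal label proportions
    p_expected = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    return (p_observed - p_expected) / (1 - p_expected)


# Usage (illustrative counts): cohens_kappa([[20, 5], [10, 15]])
# p_o = 0.70, p_e = 0.50, so kappa is approximately 0.4
```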

Statistical comparisons of the efficiency and safety outcome variables were made between KBM and SR on equivalent tasks (ie, simple or complex tasks). Aggregate data across all task types were reported, but heterogeneity in task type precluded statistical testing. Because the study data were not normally distributed, only nonparametric statistical tests, including Wilcoxon signed-rank and Mann-Whitney tests, were undertaken, using IBM SPSS Statistics v24.0.0.0. For tests that ranked paired observations, comparisons were possible only where values for both input modalities were available; where a task had no value for 1 input modality (such as a missed or incomplete task), the pair was excluded.
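The paired test and the pairwise exclusion rule above can be sketched in pure Python. This is an illustrative sketch, not the SPSS procedure: it uses the large-sample normal approximation without tie or continuity correction, and its sign convention for z (taking the smaller rank sum, so z ≤ 0) is an assumption. `wilcoxon_signed_rank` is a hypothetical helper; for production analyses, `scipy.stats.wilcoxon` provides a tested implementation.

```python
import math
from typing import Optional, Sequence


def wilcoxon_signed_rank(kbm: Sequence[Optional[float]],
                         sr: Sequence[Optional[float]]) -> tuple[int, float, float, float]:
    """Wilcoxon signed-rank test with pairwise exclusion of missing values.

    Returns (n, W_plus, W_minus, z), where n counts nonzero differences.
    Pairs with a missing value (None) in either modality are dropped,
    zero differences are discarded, and tied |differences| get average ranks.
    """
    diffs = [s - k for k, s in zip(kbm, sr)
             if k is not None and s is not None and s != k]
    n = len(diffs)
    if n == 0:
        raise ValueError("no usable pairs")
    ordered = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied absolute differences
        while j + 1 < n and abs(diffs[ordered[j + 1]]) == abs(diffs[ordered[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for m in range(i, j + 1):
            ranks[ordered[m]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(w_plus, w_minus) - mean) / sd
    return n, w_plus, w_minus, z
```

In a usage such as `wilcoxon_signed_rank([10, 20, 30, None, 7], [12, 25, 28, 40, 7])`, the pair with a missing KBM value and the zero-difference pair are both dropped, leaving 3 pairs.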

RESULTS

There were 19 female and 16 male participants, who identified themselves predominantly as senior clinicians (24/35). Most rated their SR skills lower than their KBM-based EHR skills, with a mean of 3.9/5 for KBM and 2.7/5 for SR (on a scale from 1 = very poor to 5 = excellent). More than half of participants had <1 year of SR experience (19/35), whereas few had <1 year of EHR experience (7/35).

Efficiency

Across all 4 experimental conditions (simple task KBM, simple task SR, complex task KBM, and complex task SR), no association was found between task completion time and clinical role (P = .100–.588), skill level (P = .073–.452), or period of experience with the EHR or SR (P = .303–.717). Interruptions had no effect on task completion times for either input modality across both task types (see Supplementary Appendix F).

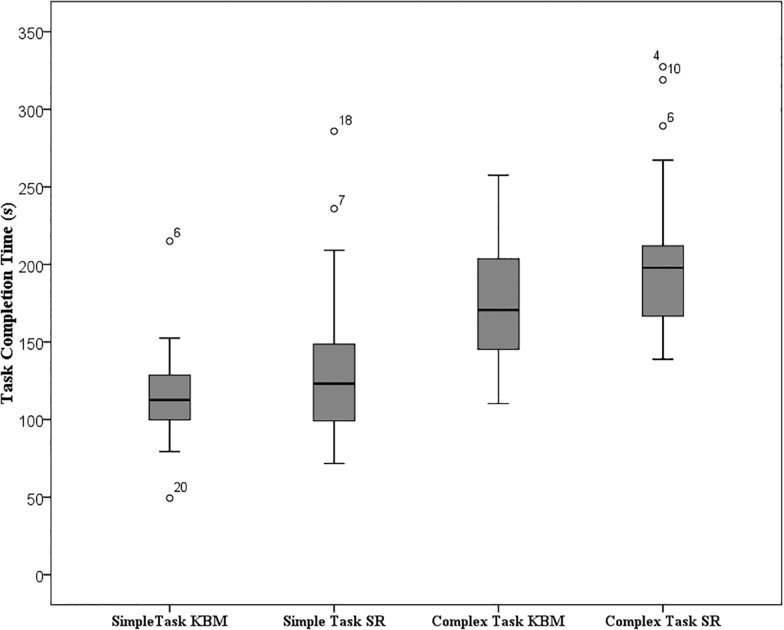

Overall, complex tasks took 52.82% longer to complete than simple tasks (mean: complex 185.63 s, simple 121.47 s; P < .001; 95% CI, 50.55–75.34). Clinical documentation took significantly longer using SR than KBM, with the mean time to complete all tasks 18.11% longer when using SR (KBM 140.09 s, SR 165.46 s; P = .001; 95% CI, 9.87–33.91). The difference in mean task completion time held for both simple (KBM 112.38 s, SR 131.44 s; P = .050; 95% CI, −0.07 to 30.50) and complex (KBM 170.48 s, SR 201.84 s; P = .009; 95% CI, 9.61–47.73) tasks (see Table 1 and Figure 2).
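The percentages above, and the percentage column in Table 1, are ratios of mean completion times. A minimal sketch of that arithmetic using the means reported in the text; the helper names are hypothetical. Recomputing the simple-task figure from the rounded means (131.44/112.38) gives 116.96% rather than the reported 116.95%, a last-digit difference that presumably reflects rounding of the underlying values.

```python
def relative_time_pct(test_mean: float, baseline_mean: float) -> float:
    """Test-condition mean as a percentage of the baseline mean (100 = no change)."""
    return 100.0 * test_mean / baseline_mean


def percent_longer(test_mean: float, baseline_mean: float) -> float:
    """How much longer (%) the test condition took than the baseline."""
    return relative_time_pct(test_mean, baseline_mean) - 100.0


# Mean completion times (s) as reported in the text
overall = percent_longer(165.46, 140.09)        # SR vs KBM, all tasks
complex_tasks = percent_longer(201.84, 170.48)  # SR vs KBM, complex tasks
complexity = percent_longer(185.63, 121.47)     # complex vs simple tasks

print(f"{overall:.2f}% {complex_tasks:.2f}% {complexity:.2f}%")
```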

Table 1.

Efficiency summary

| Task type | N | Mean task completion time (s) | Max task completion time (s) | Min task completion time (s) | Relative completion time (%) | Z | Wilcoxon P-value | 95% CI |
|---|---|---|---|---|---|---|---|---|
| KBM – simple and complex | 65 | 140.09 | 257.63 | 49.38 | | | | 129.56, 151.45 |
| SR – simple and complex | 60 | 165.46 | 327.45 | 71.67 | | | | 150.56, 180.37 |
| Total KBM vs total SR | 58 | | | | 118.11 | −3.302 | 0.001 | 9.87, 33.91 |
| Simple task KBM | 34 | 112.38 | 214.99 | 49.38 | | | | 103.15, 122.42 |
| Simple task SR | 31 | 131.44 | 285.91 | 71.67 | | | | 117.01, 146.94 |
| Simple task KBM vs simple task SR | 31 | | | | 116.95 | −1.960 | 0.050 | −0.07, 30.50 |
| Complex task KBM | 31 | 170.48 | 257.63 | 110.31 | | | | 157.26, 183.80 |
| Complex task SR | 29 | 201.84 | 327.45 | 138.87 | | | | 185.71, 219.26 |
| Complex task KBM vs complex task SR | 27 | | | | 118.40 | −2.643 | 0.009 | 9.61, 47.73 |
| Tasks with interruptions | | | | | | | | |
| Simple task KBM with interrupt | 34 | 112.22 | 247.26 | 60.40 | | | | 101.22, 124.77 |
| Simple task KBM vs simple task KBM with interrupt | 34 | | | | −99.85 | −0.710 | 0.478 | −13.19, 7.69 |
| Simple task SR with interrupt | 32 | 134.41 | 250.20 | 67.69 | | | | 119.78, 149.08 |
| Simple task SR vs simple task SR with interrupt | 29 | | | | 102.26 | −0.465 | 0.642 | −19.75, 28.12 |
| Complex task KBM with interrupt | 31 | 186.65 | 355.90 | 102.94 | | | | 168.91, 207.55 |
| Complex task KBM vs complex task KBM with interrupt | 29 | | | | 109.49 | −0.465 | 0.642 | −3.39, 33.60 |
| Complex task SR with interrupt | 31 | 216.45 | 345.70 | 133.13 | | | | 196.82, 238.76 |
| Complex task SR vs complex task SR with interrupt | 26 | | | | 107.24 | −1.664 | 0.096 | −1.96, 49.12 |

Figure 2.

Boxplot of task completion time for simple and complex tasks via input modality.

Safety

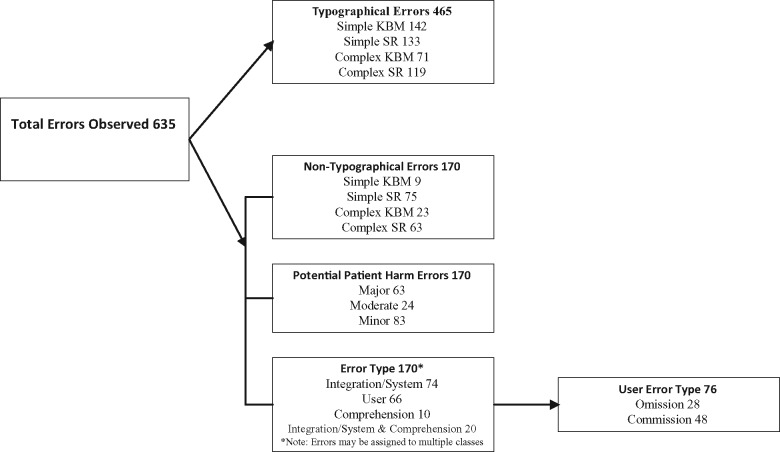

A total of 170 non-typographical errors were observed, and their number differed significantly by input modality (KBM 32, SR 138) (see Figure 3). More errors were observed with SR for both simple (KBM 9, SR 75; P < .001; 95% CI, 1.50–2.50) and complex (KBM 23, SR 63; P < .001; 95% CI, 0.50–1.50) tasks. Typographical errors were frequent with both input modalities (KBM 213, SR 252), and their frequency did not differ significantly by input modality for either simple (KBM 142, SR 133; P = .345; 95% CI, −1.00 to 0.50) or complex (KBM 71, SR 119; P = .600; 95% CI, −0.50 to 0.50) tasks.

Figure 3.

Error framework: overview of the breakdown of errors by class.

No association was found between the number of errors observed and participant clinical role, skill, or period of experience with the EHR or SR. Interruptions made no statistically significant difference to the number of errors for complex tasks, or for simple tasks using SR. However, there was a significant difference in errors observed for simple tasks using KBM with and without interruption (KBM 9, KBM with interruption [KBMI] 7; P = .018; 95% CI, 0.00–1.00).

Potential for patient harm

PPH errors increased significantly with SR in every severity class and task type but one: major PPH errors in simple (KBM 2, SR 29; P < .001; 95% CI, 0.50–1.00) and complex tasks (KBM 11, SR 21; P = .005; 95% CI, 0.00–0.50); moderate PPH errors in simple tasks (KBM 0, SR 13; P = .008; 95% CI, 0.00–0.50); and minor PPH errors in simple (KBM 7, SR 33; P = .002; 95% CI, 0.50–1.00) and complex tasks (KBM 9, SR 34; P < .001; 95% CI, 1.50–2.50). The exception was moderate PPH errors during complex tasks, for which there was no significant difference (KBM 3, SR 8; P = .083; 95% CI, 0.00–0.00) (see Table 2).

Table 2.

Error summary

KBM and SR counts and the KBM vs SR comparison refer to tasks without interruption; KBMI and SRI denote KBM and SR tasks with interruption.

| Category | KBM | SR | Z (KBM vs SR) | Wilcoxon P-value (KBM vs SR) | 95% CI (KBM vs SR) | Z (KBM vs KBMI) | P-value (KBM vs KBMI) | 95% CI (KBM vs KBMI) | Z (SR vs SRI) | Wilcoxon P-value (SR vs SRI) | 95% CI (SR vs SRI) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| **Total errors observed** | 245 | 390 | | | | | | | | | |
| **Non-typographical** | 32 | 138 | | | | | | | | | |
| Simple | 9 | 75 | −4.370 | 0.000 | 1.50, 2.50 | −2.373 | 0.018 | 0.00, 1.00 | −1.115 | 0.265 | −0.50, 1.50 |
| Complex | 23 | 63 | −4.085 | 0.000 | 0.50, 1.50 | −0.294 | 0.769 | 0.00, 0.50 | −1.086 | 0.278 | −0.50, 1.00 |
| **Potential patient harm** | 32 | 138 | | | | | | | | | |
| ***Major*** | 13 | 50 | | | | | | | | | |
| Simple | 2 | 29 | −3.616 | 0.000 | 0.50, 1.00 | −2.496 | 0.013 | 0.00, 0.50 | −1.099 | 0.272 | −0.50, 0.50 |
| Complex | 11 | 21 | −2.799 | 0.005 | 0.00, 0.50 | −1.425 | 0.154 | 0.00, 0.50 | −0.317 | 0.751 | −0.50, 0.50 |
| ***Moderate*** | 3 | 21 | | | | | | | | | |
| Simple | 0 | 13 | −2.636 | 0.008 | 0.00, 0.50 | −1.732 | 0.083 | 0.00, 0.00 | −0.474 | 0.635 | 0.00, 0.00 |
| Complex | 3 | 8 | −1.732 | 0.083 | 0.00, 0.00 | −1.732 | 0.083 | 0.00, 0.00 | −2.648 | 0.008 | 0.00, 0.50 |
| ***Minor*** | 16 | 67 | | | | | | | | | |
| Simple | 7 | 33 | −3.101 | 0.002 | 0.50, 1.00 | −0.686 | 0.493 | 0.00, 0.00 | −0.198 | 0.843 | −0.50, 0.50 |
| Complex | 9 | 34 | −4.637 | 0.000 | 1.50, 2.50 | −1.809 | 0.070 | 0.00, 0.50 | −2.532 | 0.011 | −1.50, 0.50 |
| **Error type** | 32 | 158 | | | | | | | | | |
| ***Integration/system*** | 2 | 92 | | | | | | | | | |
| Simple | 0 | 56 | −4.598 | 0.000 | 1.00, 2.00 | −1.414 | 0.157 | 0.00, 0.00 | −1.792 | 0.073 | −1.00, 0.00 |
| Complex | 2 | 36 | −4.460 | 0.000 | 1.00, 2.50 | −0.577 | 0.564 | 0.00, 0.00 | −1.008 | 0.314 | −1.00, 0.50 |
| ***User errors*** | 22 | 44 | | | | | | | | | |
| Simple | 5 | 18 | −2.681 | 0.007 | 0.00, 0.50 | −2.194 | 0.028 | 0.00, 0.50 | −1.397 | 0.162 | 0.00, 0.50 |
| Complex | 17 | 26 | −3.133 | 0.002 | 0.00, 0.50 | −2.675 | 0.007 | 0.00, 0.50 | −0.210 | 0.834 | −0.50, 0.50 |
| ***Comprehension*** | 8 | 22 | | | | | | | | | |
| Simple | 4 | 5 | −1.000 | 0.317 | 0.00, 0.00 | −0.707 | 0.480 | 0.00, 0.00 | −2.207 | 0.027 | 0.00, 0.50 |
| Complex | 4 | 17 | −4.361 | 0.000 | 1.00, 1.50 | 0.000 | 1.000 | 0.00, 0.00 | −3.006 | 0.003 | −1.00, 0.00 |
| **User error type** | 30 | 46 | | | | | | | | | |
| ***Omission*** | 8 | 20 | | | | | | | | | |
| Simple | 2 | 14 | −3.207 | 0.001 | 0.50, 0.00 | −1.265 | 0.206 | 0.00, 0.00 | −2.138 | 0.033 | −0.50, 0.00 |
| Complex | 6 | 6 | −2.840 | 0.005 | 0.00, 0.50 | −0.632 | 0.527 | 0.00, 0.00 | −2.500 | 0.012 | −0.50, 0.00 |
| ***Commission*** | 22 | 26 | | | | | | | | | |
| Simple | 7 | 5 | −0.359 | 0.719 | 0.00, 0.00 | −1.186 | 0.236 | 0.00, 0.00 | −1.588 | 0.112 | 0.00, 0.50 |
| Complex | 15 | 21 | −1.279 | 0.201 | 0.00, 0.50 | −0.188 | 0.851 | 0.00, 0.50 | −0.471 | 0.637 | −0.50, 0.00 |
| **Typographical** | 213 | 252 | | | | | | | | | |
| Simple | 142 | 133 | −0.944 | 0.345 | −1.00, 0.50 | −4.702 | 0.000 | −2.50, 1.50 | −1.038 | 0.299 | −1.00, 0.50 |
| Complex | 71 | 119 | −0.525 | 0.600 | −0.50, 0.50 | −4.511 | 0.000 | −3.00, 1.50 | 0.303 | 0.303 | −0.50, 1.00 |

Bold values indicate general totals and italic bold corresponds to category totals.

Error type

Integration/system and user errors increased significantly with SR for both task types, as did comprehension errors for complex tasks: integration/system errors in simple (KBM 0, SR 56; P < .001; 95% CI, 1.00–2.00) and complex tasks (KBM 2, SR 36; P < .001; 95% CI, 1.00–2.50); user errors in simple (KBM 5, SR 18; P = .007; 95% CI, 0.00–0.50) and complex tasks (KBM 17, SR 26; P = .002; 95% CI, 0.00–0.50); and comprehension errors in complex tasks (KBM 4, SR 17; P < .001; 95% CI, 1.00–1.50). The exception was comprehension errors during simple tasks, for which no significant difference was found (KBM 4, SR 5; P = .317; 95% CI, 0.00–0.00) (see Table 2).

User error type

Omission errors increased significantly with SR for both task types: simple tasks (KBM 2, SR 14; P = .001; 95% CI, 0.00–0.50) and complex tasks (KBM 6, SR 6; P = .005; 95% CI, 0.00–0.50). However, commission errors did not differ significantly between input modalities for either simple (KBM 7, SR 5; P = .719; 95% CI, 0.00–0.00) or complex tasks (KBM 15, SR 21; P = .201; 95% CI, 0.00–0.50) (see Table 2).

DISCUSSION

This is the first study, to our knowledge, that compares the impact of input modality on the safety and efficiency of clinical documentation using an EHR in a controlled experimental environment. The study’s results show statistically significant differences in outcomes when using KBM compared to SR, with SR-created records taking longer and being associated with a higher error rate. Although these results do not appear to be influenced by the degree of EHR or SR experience, skill level, or seniority, results might differ with changes in task type, participants, setting, or technology. For the tasks undertaken in this study, creating EHR clinical documentation with the assistance of SR was significantly slower (18.11%) than using KBM alone, and this difference held for both simple (16.95%) and complex (18.40%) documentation tasks.

These results differ from those of a recent observational study that found no difference in charting time when a voice-driven EHR at one site was compared with a KBM-driven EHR at another.12 However, that study’s sample size was too small to determine whether the result was statistically significant, and the EHRs at the 2 sites were different and may themselves have influenced charting time. Further, patient acuity differed between the 2 sites, resulting in different admission rates and case mix. Consequently, it is hard to draw conclusions from that study, and direct comparison with the controlled experiment reported here is not possible.

Interestingly, task interruptions did not significantly increase task completion times in our study. This is consistent with another controlled experiment, in which interruptions did not affect electronic prescribing tasks.13 A previous observational study on the impact of interruptions on ED physicians’ task time showed a reduction in task time following interruptions, possibly because physicians under time pressure hurry to complete tasks after being interrupted.14 Our results suggest that documentation tasks might not be easily time-compressed, and that interruption penalties might therefore be incurred on other tasks associated with patient care.

In this study, more errors occurred when tasks were completed using SR than KBM alone, and this held for both simple and complex tasks.

The occurrence of serious errors with major PPH was significantly greater with SR (simple task: KBM 2, SR 29; complex task: KBM 11, SR 21) (Table 3). Because such errors could result in death or serious injury to patients, any increase in them requires further examination.

Table 3.

Major potential patient harm errors

| Error observed | Incorrect patient | Incorrect blood glucose level | Incorrect test/order collection date entered | Data lost during text transfer (no EHR record created) | Data entered in incorrect EHR field | Section of EHR missed | Incorrect significant word entered (diagnostic) | Significant word entered (diagnostic) | Misrecognition of word by SR | Element of EHR down, eg, vitals | Total errors |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Simple task KBM | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 2 |
| Simple task SR | 0 | 0 | 0 | 2 | 1 | 0 | 0 | 0 | 26 | 0 | 29 |
| Complex task KBM | 1 | 3 | 3 | 0 | 2 | 1 | 0 | 0 | 0 | 1 | 11 |
| Complex task SR | 0 | 12 | 6 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 21 |

Far more integration/system errors (such as network transmission delays causing SR commands either to fail to complete or to go to the wrong location in a chart, or to the wrong chart) occurred when tasks were undertaken with SR. These included errors with high potential for patient harm, such as misrecognition of words (eg, “follow” instead of “FLU,” “fractured” instead of “ruptured”). It is possible that some of these integration errors could be avoided by redesigning or reconfiguring the communication between the systems.

Documentation error rates were high independent of input modality, and if replicated in real-world situations would raise questions about the acceptable baseline level of errors for an EHR system. Recent real-world studies have indeed shown that clinical documentation accuracy can be poor, with one study finding accurate documentation rates of 54.4% via paper and 58.4% with EHRs.15 Our results thus support calls to improve the quality of clinical documentation independent of modality of input.

There continue to be rapid changes in SR technologies; a recent study reports SR systems that now achieve human parity for conversational speech.16 Another recent study examined the potential for EHR transcription using SR together with natural language processing and found potential time and usability benefits for electronic documentation.17 These results are in accord with our recent systematic review, which identified benefits (and risks) of using SR for clinical documentation.3

SR is typically used for dictation tasks, in which users simply create large volumes of text. Time benefits in dictation may arise in part because dictation does not involve the user interaction and screen navigation tasks associated with electronic documentation, which were present in the real-world use cases in this study.

SR systems have carved out a role in specific areas of clinical documentation, such as report dictation in radiology,3 but there has been little attention paid to the potential risks and benefits of using SR as a primary mode of data entry and system navigation in the EHR. The results of this study, which was designed to replicate real world tasks and used a working clinical system with real clinical users, give some pause for thought. SR appears to reduce efficiency by taking more time than KBM, and also appears to increase the rates of error, both minor and serious.

Some of the errors and inefficiencies appear to be inherent in current-generation SR engines and their fit to the task of clinical documentation within EHRs. EHRs are designed with KBM input in mind, and simply expecting SR to directly replace KBM in such a design may be inappropriate. Designing EHRs that are purpose-built for SR may yield different workflows and design constructs, and consequently different results. It is possible that at least some of these weaknesses with SR can be mitigated with system redesign.

The use of SR to drive interactive clinical documentation in the EHR thus requires careful evaluation, and it cannot be assumed that it is a safe and effective replacement for KBM in this particular setting. Current generation implementations may require significant changes before they are considered safe and effective. Improving system integration and workflow, as well as further developing SR accuracy and enhancing the capacity of user interface design to allow clinicians to detect and correct errors, may improve SR performance.

LIMITATIONS

Several factors may limit the generalizability of these results to other clinical settings or information systems. The study used a routine, standardized version of a widely used commercial clinical record system for EDs, integrated with a common commercial clinical SR system. However, other EHR and SR systems might perform differently, and different approaches to integrating the 2 could also lead to different results.

SR performance may be affected by extrinsic factors, such as microphone quality, background noise level, and user accent. Equally, the tasks created for this study were intended to be representative of typical clinical documentation work in an ED, but different tasks in other settings could yield different outcomes. For example, dictation of investigation reports at high volume by expert clinicians might yield better time performance and recognition rates, although our previous review did not identify this.3

The lack of any impact of interruptions should be generalized only cautiously, given that the impact of interruptions is a complex phenomenon, and many different variables must interact for interruptions to have a negative effect on performance.

Although the study was not specifically designed to test for the effects of clinical role, skill, or period of experience on outcome variables, and was underpowered to test for these effects individually, no significant correlation with these factors was found when all SR and KBM tasks were pooled. However, the fact that participants volunteered for this study may have introduced recruitment bias. All participants had completed training in the use of both the EHR and SR, and results could well be different, and potentially worse, for users without such training.

CONCLUSION

The search for the safest and most efficient method of clinical documentation remains a work in progress. Emerging technologies such as mobile devices, touch pads, pen control, head-mounted displays, virtual reality, and wearable technology all have different affordances and are likely to work better in some contexts and provide varying results over a wide range of tasks.18 Given the ubiquitous nature of electronic records in healthcare, and the substantial cost of documentation in terms of clinician time and patient safety, the foundational act of interacting with an electronic record requires far closer attention, and substantially higher research priority, than it currently receives.


ACKNOWLEDGMENTS

David Lyell performed the second reviewer functions for error classification.

Thanks are offered to the staff and management teams at participating hospitals, in particular Dr Richard Paoloni and Dr Marty Sterrett, who both assisted greatly with study design and trial session coordination.

Funding

This work was supported by the National Health and Medical Research Council Centre for Research Excellence in eHealth (APP1032664).

Competing Interests

The authors declare that they have no competing interests.

Contributors

TH, EC, and FM conceived the study and its design. TH conducted the research, the primary analysis, and the initial drafting of the paper. EC and FM contributed to the analysis and drafting of the paper, and TH, EC, and FM approved the final manuscript. TH is the corresponding author.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

References

- 1. Coiera E. Guide to Health Informatics. 3rd ed. London: CRC Press; 2015.

- 2. Chiang MF, Read-Brown S, Tu DC, et al. Evaluation of electronic health record implementation in ophthalmology at an academic medical center (an American Ophthalmological Society thesis). Trans Am Ophthalmol Soc. 2013;111:70.

- 3. Hodgson T, Coiera E. Risks and benefits of speech recognition for clinical documentation: a systematic review. J Am Med Inform Assoc. 2016;23(e1):e169–e79.

- 4. Shea S, Hripcsak G. Accelerating the use of electronic health records in physician practices. New Engl J Med. 2010;362(3):192–95.

- 5. Nuance Communications. Speech Recognition Accelerating the Adoption of Electronic Health Records (white paper). Nuance Communications; 2012.

- 6. Wolpin S. Samsung's new refrigerator is also a giant tablet. 2016. http://www.techlicious.com/blog/samsung-refrigerator-family-hub-touchscreen//. Accessed November 19, 2016.

- 7. Coiera EW, Jayasuriya RA, Hardy J, Bannan A, Thorpe MEC. Communication loads on clinical staff in the emergency department. Med J Australia. 2002;176(9):415–18.

- 8. Coiera E. The science of interruption. BMJ Qual Safety. 2012;21(5):357–60.

- 9. Faul F, Erdfelder E, Lang A-G, Buchner A. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods. 2007;39(2):175–91.

- 10. US Food and Drug Administration. Guidance for the content of premarket submissions for software contained in medical devices. Center for Devices and Radiological Health; 2005.

- 11. Cohen J. Weighted kappa: Nominal scale agreement provision for scaled disagreement or partial credit. Psychol Bull. 1968;70(4):213.

- 12. dela Cruz JE, Shabosky JC, Albrecht M, et al. Typed versus voice recognition for data entry in electronic health records: emergency physician time use and interruptions. Western J Emerg Med. 2014;15(4):541.

- 13. Magrabi F, Li SY, Day RO, Coiera E. Errors and electronic prescribing: a controlled laboratory study to examine task complexity and interruption effects. J Am Med Inform Assoc. 2010;17(5):575–83.

- 14. Westbrook JI, Coiera EW, Dunsmuir WTM, et al. The impact of interruptions on clinical task completion. Qual Safety Health Care. 2010;19(4):284–89.

- 15. Yadav S, Kazanji N, Narayan K, et al. Comparison of accuracy of physical examination findings in initial progress notes between paper charts and a newly implemented electronic health record. J Am Med Inform Assoc. 2017;24(1):140–44.

- 16. Xiong W, Droppo J, Huang X, et al. Achieving Human Parity in Conversational Speech Recognition. arXiv preprint arXiv:1610.05256. 2016.

- 17. Kaufman DR, Sheehan B, Stetson P, et al. Natural language processing–enabled and conventional data capture methods for input to electronic health records: a comparative usability study. JMIR Med Inform. 2016;4(4):e35.

- 18. PricewaterhouseCoopers. The Wearable Future. Consumer Intelligence Series. 2014. Accessed October 2014.
