Abstract

Comparison of Different Input Modalities and Network Structures for Deep Learning-Based Seizure Detection

Cho KO, Jang HJ. Sci Rep. 2020;10(1):1-11. doi: 10.1038/s41598-019-56958-y

The manual review of an electroencephalogram (EEG) for seizure detection is a laborious and error-prone process. Thus, automated seizure detection based on machine learning has been studied for decades. Recently, deep learning has been adopted in order to avoid manual feature extraction and selection. In the present study, we systematically compared the performance of different combinations of input modalities and network structures on a fixed window size and data set to ascertain an optimal combination of input modalities and network structures. The raw time series EEG, periodogram of the EEG, 2-dimensional [2D] images of short-time Fourier transform results, and 2D images of raw EEG waveforms were obtained from 5-second segments of intracranial EEGs recorded from a mouse model of epilepsy. A fully connected neural network (FCNN), recurrent neural network (RNN), and convolutional neural network (CNN) were implemented to classify the various inputs. The classification results for the test data set showed that CNN performed better than FCNN and RNN, with the area under the curve (AUC) for the receiver operating characteristics curves ranging from 0.983 to 0.984, from 0.985 to 0.989, and from 0.989 to 0.993 for FCNN, RNN, and CNN, respectively. As for input modalities, 2D images of raw EEG waveforms yielded the best result with an AUC of 0.993. Thus, CNN can be the most suitable network structure for automated seizure detection when applied to the images of raw EEG waveforms, since CNN can effectively learn a general spatially invariant representation of seizure patterns in 2D representations of raw EEG.

Commentary

The diagnosis of epilepsy relies largely on clinical evaluation and does not necessarily require the detection of an electrographic seizure on an electroencephalogram (EEG).1 However, EEG plays a major role in evaluating epilepsy and may be relied on more heavily as remote seizure monitoring approaches are developed and adopted clinically.1 Seizure detection not only has clinical applications but is also essential for basic epilepsy research. Unfortunately, manual seizure detection is labor-intensive and can vary considerably between investigators. Thus, there is a large unmet need for automated seizure detection, which could standardize seizure detection across sites, reduce laborious monitoring, and enable closed-loop therapeutic interventions.2

Seizure detection is a long-standing problem, and a plethora of seizure detection methods have been developed. Popular among these are feature-based methods (power, line length, variance, skewness, kurtosis, entropy) combined with machine learning classifiers (support vector machine, logistic regression, k-nearest neighbors).3-5 Both feature extraction and classifier selection require domain expertise and affect model performance.4-7 Deep learning methods are a subclass of machine learning models based on neural networks with multiple layers that automate feature extraction.7 Thus, deep learning methods have the advantage of not requiring domain expertise to design the feature extraction, making them well suited for wide adoption across the scientific and clinical community.7 Many studies have investigated deep learning methods for automating seizure detection. However, their results are difficult to compare directly because the models were trained and tested on different data sets, using different input types (such as raw EEG traces, EEG images, and fast Fourier transform results) and different input sizes.
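For readers less familiar with the feature-based approach, a minimal sketch of the feature-extraction step is shown below. It assumes a single-channel signal sampled at `fs` Hz and split into non-overlapping 5-second windows; the function name `window_features` and the particular feature set are illustrative choices, not taken from any cited study. In such a pipeline the resulting feature matrix would then be passed to a classifier (for example, a support vector machine), and deciding which features to compute is precisely the domain-expertise step that deep learning aims to replace.

```python
import numpy as np


def window_features(x: np.ndarray, fs: float, win_s: float = 5.0) -> np.ndarray:
    """Compute simple hand-crafted features for non-overlapping windows.

    Hypothetical helper for illustration. Returns an array of shape
    (n_windows, 5) with columns: power, line length, variance,
    skewness, excess kurtosis. Any incomplete final window is dropped.
    """
    x = np.asarray(x, dtype=float)
    n = int(round(win_s * fs))              # samples per window
    n_win = len(x) // n
    w = x[: n_win * n].reshape(n_win, n)    # one row per window

    power = np.mean(w ** 2, axis=1)
    line_length = np.sum(np.abs(np.diff(w, axis=1)), axis=1)
    var = np.var(w, axis=1)
    std = np.sqrt(var)
    # Standardize each window; guard against flat (zero-variance) windows.
    z = (w - w.mean(axis=1, keepdims=True)) / np.where(std > 0, std, 1.0)[:, None]
    skew = np.mean(z ** 3, axis=1)
    kurt = np.mean(z ** 4, axis=1) - 3.0
    return np.column_stack([power, line_length, var, skew, kurt])


# Example: 60 s of noise at 100 Hz with a high-amplitude burst in window index 2.
rng = np.random.default_rng(0)
fs = 100
sig = rng.normal(0.0, 1.0, 60 * fs)
sig[1000:1500] *= 5.0
feats = window_features(sig, fs)
print(feats.shape)                  # (12, 5)
print(int(np.argmax(feats[:, 1])))  # 2 (largest line length)
```

Line length (the summed absolute sample-to-sample change) is included because it rises with both amplitude and frequency, which is why it is a common choice for high-amplitude rhythmic seizure activity.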

In an elegant study, Cho and Jang performed an in-depth, comprehensive comparison of 3 deep learning model architectures (convolutional, recurrent, and fully connected neural networks: CNN, RNN, and FCNN, respectively) across a variety of input types (raw EEG traces, raw EEG images, periodograms, and short-time Fourier transform images) using a fixed 5-second window size.8 After the initial classification of the 5-second segments, the authors further refined the model predictions by defining seizures as continuous events that lasted more than 10 seconds and had an absolute mean amplitude 1.2 times higher than that of nearby segments. In general, all models performed very well, reaching an area under the receiver operating characteristic curve (AUC) greater than 0.98. CNNs performed better overall than the FCNN and RNN networks. Furthermore, 2-dimensional (2D) CNNs with EEG images as input were superior to 1D CNNs with EEG traces or periodograms as input. Interestingly, models using temporally separated EEG images as input, capturing 2 minutes before and after an event, performed best. This observation suggests that seizures may be better detected by contrasting the traces preceding and following an event. The 2D CNNs with raw EEG images as input also outperformed the other models when tested on multichannel human intracranial EEG data, although their performance was lower than on the rodent EEG, reaching a classification score of 0.824, which could have resulted from the smaller size of the human data set.
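The event-level refinement step described above can be sketched as follows. This is a minimal illustration, not Cho and Jang's code: it assumes per-segment binary predictions and per-segment mean absolute amplitudes are already available, and it interprets "nearby segments" as up to `n_neighbors` segments on each side of an event, a hypothetical choice because the exact neighborhood is not specified here. With 5-second segments, "more than 10 seconds" means at least 3 consecutive positive segments.

```python
import numpy as np


def refine_events(pred, amp, seg_s=5.0, min_dur_s=10.0, ratio=1.2, n_neighbors=2):
    """Merge consecutive positive segments into candidate events and keep those
    that last longer than `min_dur_s` and whose mean absolute amplitude is at
    least `ratio` times the mean of the neighboring segments.

    pred : per-segment classifier output (truthy = seizure)
    amp  : per-segment mean absolute amplitude
    n_neighbors : segments on each side used as the baseline (hypothetical)
    Returns a list of (start, stop) segment indices, stop exclusive.
    """
    pred = np.asarray(pred, dtype=bool)
    amp = np.asarray(amp, dtype=float)
    events, i, n = [], 0, len(pred)
    while i < n:
        if not pred[i]:
            i += 1
            continue
        j = i
        while j < n and pred[j]:          # extend the run of positive segments
            j += 1
        if (j - i) * seg_s > min_dur_s:   # duration criterion
            baseline = np.concatenate([amp[max(0, i - n_neighbors):i],
                                       amp[j:j + n_neighbors]])
            # If no neighbors exist (whole record positive), keep the event.
            if baseline.size == 0 or amp[i:j].mean() >= ratio * baseline.mean():
                events.append((i, j))
        i = j
    return events


pred = [0, 0, 1, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0]
amp = [1, 1, 3, 3, 3, 1, 1, 5, 1, 1.1, 1.1, 1.1, 1.1, 1]
print(refine_events(pred, amp))  # [(2, 5)]
```

In the toy example, the single positive segment at index 7 is too short, and the 20-second run at indices 9 to 12 is rejected because its amplitude is not 1.2 times that of its neighbors; only the run at indices 2 to 4 survives.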

Given the success of deep learning models for seizure detection, it is necessary to evaluate how readily these models can be implemented and validated across the epilepsy community. It may also be beneficial to implement models with different properties suited to different settings. For example, offline seizure detection, in either a research or a clinical context, will benefit from models with the highest sensitivity. A highly sensitive model could be combined with manual inspection of the detected events, greatly reducing laborious screening while ensuring high fidelity of the detected seizure events (and also yielding useful training data for model refinement). On the other hand, for closed-loop seizure detection and electrical stimulation, it will be more important to have models with the highest specificity, to prevent stimulation when no seizure is present. These differences in model requirements call for a flexible sharing platform that allows these methods to be adapted as needed.
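In practice, this trade-off largely comes down to where the decision threshold is placed on a classifier's output scores. As a minimal sketch (plain Python, with hypothetical function names), one can scan candidate thresholds and either maximize specificity subject to a sensitivity floor, as might suit offline screening, or maximize sensitivity subject to a specificity floor, as might suit closed-loop stimulation. The scores and labels below are made-up toy values.

```python
def sens_spec(scores, labels, thr):
    """Sensitivity and specificity when a segment is called a seizure
    if its score is >= thr. Labels: 1 = seizure, 0 = nonseizure."""
    tp = sum(1 for s, y in zip(scores, labels) if y and s >= thr)
    fn = sum(1 for s, y in zip(scores, labels) if y and s < thr)
    tn = sum(1 for s, y in zip(scores, labels) if not y and s < thr)
    fp = sum(1 for s, y in zip(scores, labels) if not y and s >= thr)
    return tp / (tp + fn), tn / (tn + fp)


def pick_threshold(scores, labels, min_sens=None, min_spec=None):
    """Return (threshold, sensitivity, specificity).

    Give exactly one constraint: with min_sens, maximize specificity among
    thresholds meeting the sensitivity floor; with min_spec, maximize
    sensitivity among thresholds meeting the specificity floor."""
    if (min_sens is None) == (min_spec is None):
        raise ValueError("specify exactly one of min_sens or min_spec")
    if not (0 < sum(labels) < len(labels)):
        raise ValueError("labels must contain both classes")
    best = None
    for thr in sorted(set(scores)):          # candidate thresholds
        sens, spec = sens_spec(scores, labels, thr)
        if min_sens is not None:
            ok, key = sens >= min_sens, spec
        else:
            ok, key = spec >= min_spec, sens
        if ok and (best is None or key > best[0]):
            best = (key, thr, sens, spec)
    if best is None:
        raise ValueError("no threshold satisfies the constraint")
    return best[1:]


scores = [0.1, 0.2, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 1, 0, 0, 1, 1, 1]
print(pick_threshold(scores, labels, min_sens=1.0))  # (0.35, 1.0, 0.5)
print(pick_threshold(scores, labels, min_spec=1.0))  # (0.7, 0.75, 1.0)
```

The same trained model thus yields two different operating points: catching every seizure here costs half of the nonseizure segments as false alarms, whereas eliminating false alarms misses a quarter of the seizures.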

Therefore, creating open-source platforms to share and evaluate the best-performing models will be critical. Cho and Jang used the Python programming language, which is open source and widely used for software development, scientific computing, and data analysis. Importantly, the deep learning packages in Python are also open source, state of the art, and very well supported. As a result, these models can be easily distributed and adopted by the wider scientific and clinical epilepsy community. It is also important to weigh model performance against practicality. When considering practical models for wide adoption, raw EEG images as input are much more computationally expensive, both for model training and for data conversion. For semiautomated offline EEG analysis, sensitivity is most important, and the 1D and 2D EEG CNN models exhibited very similar sensitivities (∼96%). Therefore, it could be more efficient and practical to focus on 1D EEG models for this application.
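To give a rough sense of the extra cost of image inputs, the sketch below rasterizes a 5-second trace at 100 Hz (500 samples) into a binary image. It is a hypothetical, deliberately crude stand-in for plotting a waveform, with one marked pixel per time column; the 64-pixel image height is an arbitrary assumption, and the image dimensions actually used by Cho and Jang may differ. Even at this small size, the input grows from 500 values to 32 000 pixels per channel, before any rendering or file I/O overhead.

```python
import numpy as np


def trace_to_image(x, height=64):
    """Rasterize a 1D trace into a (height, len(x)) binary image.

    Hypothetical helper: each column gets a single 1 at the row matching
    the (min-max scaled) sample value, with row 0 at the top. Real plotted
    waveforms also draw connecting line segments; this sketch does not.
    """
    x = np.asarray(x, dtype=float)
    lo, hi = x.min(), x.max()
    span = hi - lo if hi > lo else 1.0
    rows = np.round((x - lo) / span * (height - 1)).astype(int)
    img = np.zeros((height, x.size), dtype=np.uint8)
    img[height - 1 - rows, np.arange(x.size)] = 1
    return img


fs, win_s = 100, 5
trace = np.sin(2 * np.pi * 3 * np.arange(fs * win_s) / fs)  # 3 Hz test signal
img = trace_to_image(trace)
print(trace.size, img.size, img.size // trace.size)  # 500 32000 64
```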

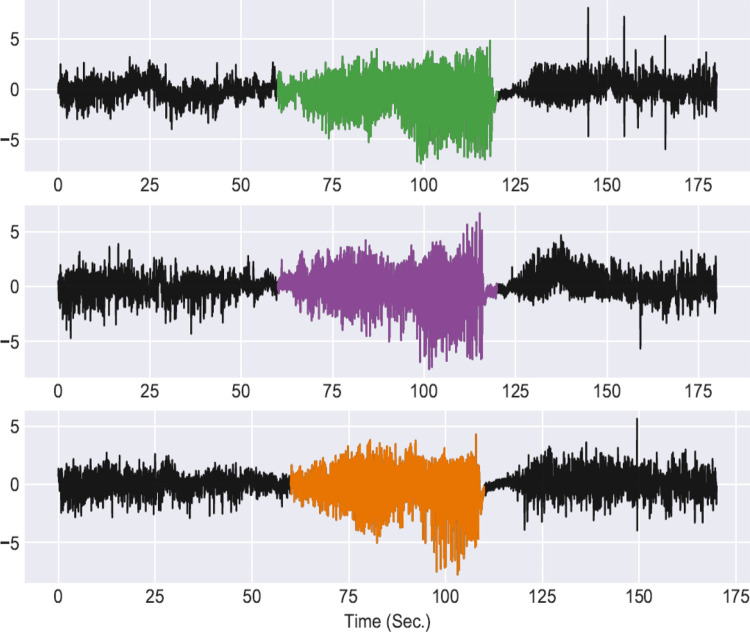

We trained a model based on the 1D CNN EEG model from Cho and Jang8 and tested its performance on seizure detection. The model was trained and tested on local field potential (LFP)/EEG data obtained from chronically epileptic mice generated using the intrahippocampal kainate model in our laboratory9 (LFP/EEG recordings obtained by Dr Trina Basu and human-annotated validation performed by Dr Trina Basu and Dr Pantelis Antonoudiou; Figure 1). The model was first trained with 5-second normalized inputs on a small portion of the data set (14 178 seizure and 58 432 nonseizure segments) using the Keras toolbox (TensorFlow backend), with minimal tuning of the training parameters.10 We then tested the model on the main part of the data set (4055 seizure and 2 541 260 nonseizure segments) to predict seizure events; predictions were refined by requiring a minimum seizure duration of 25 seconds and power (2-40 Hz) 200% higher than that of nearby regions. The model detected 96.9% of all seizures (345/356) with 326 false positives, reaching an AUC of 0.952 (Figure 1). Lowering the power threshold to 150% increased seizure detection to 97.5% (347/356) but also increased the number of false positives to 769. Therefore, even with minimal optimization, this 1D CNN model would be very useful as a prescreening step for manual inspection: it would reduce manual screening of ∼3535 hours of recordings to inspection of just 1116 events (∼55 minutes). Further model optimization, including increasing the training set size and tuning hyperparameters, will be required to reduce the number of false positives and improve classification accuracy. Nevertheless, current deep learning models do not require domain expertise for feature extraction and can be used in conjunction with manual inspection to cut down on laborious manual screening. Moreover, sharing reproducible models for validation will lead to better model generalization, reduce bias, and increase classification accuracy.
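The workload figures above follow directly from the reported counts. The short calculation below (plain Python; the function name `screening_summary` is hypothetical) reproduces them from the numbers given in this paragraph, assuming each test segment is 5 seconds long and that the events to review are the detected seizures plus the false positives. It also derives the fraction of flagged events that are true seizures, which is not reported above but is implied by the same counts.

```python
def screening_summary(n_seizure_segs, n_nonseizure_segs, detected,
                      total_seizures, false_pos, seg_s=5.0):
    """Summarize the manual-review burden implied by detection counts.

    Returns (recording hours, event-level sensitivity,
             events to review, fraction of reviewed events that are seizures).
    """
    recording_h = (n_seizure_segs + n_nonseizure_segs) * seg_s / 3600
    sensitivity = detected / total_seizures
    to_review = detected + false_pos
    precision = detected / to_review
    return recording_h, sensitivity, to_review, precision


# Counts reported in the text for the test portion of the data set.
for label, det, fp in [("200% power threshold", 345, 326),
                       ("150% power threshold", 347, 769)]:
    h, sens, n_rev, prec = screening_summary(4055, 2_541_260, det, 356, fp)
    print(f"{label}: {h:.0f} h recorded, sensitivity {sens:.1%}, "
          f"{n_rev} events to review, {prec:.1%} of them seizures")
# 200% power threshold: 3535 h recorded, sensitivity 96.9%, 671 events to review, 51.4% of them seizures
# 150% power threshold: 3535 h recorded, sensitivity 97.5%, 1116 events to review, 31.1% of them seizures
```

The estimate of ∼55 minutes for 1116 events corresponds to roughly 3 seconds of review per flagged event.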

Figure 1.

We tested the automated seizure detection method developed by Cho and Jang using data collected in our lab from chronically epileptic mice (recorded using depth electrodes in the ventral hippocampus, a frontal cortex electroencephalogram [EEG], and an electromyogram), downsampled to 100 Hz and divided into 5-second segments, with a seizure-to-nonseizure ratio of approximately 1 in 5. The colored segments indicate individual seizures recorded in the ventral hippocampus and detected using the multichannel 1D convolutional neural network (CNN) model, demonstrating that a deep learning model can easily be implemented out of the box for automated seizure detection.
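Preparing inputs of this kind can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used for Figure 1: it assumes a multichannel recording stored as an array of shape (samples, channels), an original sampling rate that is an integer multiple of 100 Hz, and per-segment, per-channel z-scoring as the normalization. Downsampling here uses simple block averaging as a crude anti-aliasing filter; `scipy.signal.decimate` would be a more careful alternative. The helper name `make_segments` is hypothetical.

```python
import numpy as np


def make_segments(data, fs_in, fs_out=100, win_s=5.0):
    """Downsample a (n_samples, n_channels) recording and cut it into
    z-scored, non-overlapping windows of shape (win_s * fs_out, n_channels).

    Hypothetical helper for illustration; any incomplete tail is dropped.
    """
    data = np.asarray(data, dtype=float)
    if data.ndim == 1:
        data = data[:, None]                 # treat as a single channel
    q = int(fs_in // fs_out)
    if q < 1 or q * fs_out != fs_in:
        raise ValueError("fs_in must be an integer multiple of fs_out")
    # Block-average q samples at a time (boxcar low-pass), keeping one per block.
    n_blocks = data.shape[0] // q
    ds = data[: n_blocks * q].reshape(n_blocks, q, data.shape[1]).mean(axis=1)
    # Cut into non-overlapping windows: (n_segments, samples_per_window, channels).
    n = int(round(win_s * fs_out))
    n_seg = ds.shape[0] // n
    segs = ds[: n_seg * n].reshape(n_seg, n, ds.shape[1])
    # z-score each channel within each segment; guard against flat channels.
    mu = segs.mean(axis=1, keepdims=True)
    sd = segs.std(axis=1, keepdims=True)
    return (segs - mu) / np.where(sd > 0, sd, 1.0)


# Example: 12 s of 3-channel data at 1000 Hz (e.g. hippocampal LFP, EEG, EMG).
rng = np.random.default_rng(1)
raw = rng.normal(size=(12 * 1000, 3))
x = make_segments(raw, fs_in=1000)
print(x.shape)  # (2, 500, 3)
```

The resulting (segments, time steps, channels) layout matches what Keras `Conv1D` layers expect with the default channels-last data format, so such an array could be fed to a multichannel 1D CNN directly.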

The study by Cho and Jang takes an important step in moving seizure detection to the next level, using deep learning methods to optimize and automate seizure detection. We have validated these approaches in our own hands, demonstrating that these tools can be implemented quickly and confirming their utility across data sets. We are further developing these seizure detection methods, as well as open-source platforms to facilitate the dissemination and implementation of these tools and to make them more accessible, user-friendly, and adaptable. Further development of these automated seizure detection methods has the potential to be transformative for the field of epilepsy, benefiting both the clinical and basic research communities.

By Pantelis Antonoudiou and Jamie L. Maguire

Footnotes

ORCID iD: Jamie L. Maguire https://orcid.org/0000-0002-4085-8605

References

- 1. Flink R, Pedersen B, Guekht AB, et al. Guidelines for the use of EEG methodology in the diagnosis of epilepsy. International League Against Epilepsy: commission report. Commission on European Affairs: Subcommission on European Guidelines. Acta Neurol Scand. 2002;106(1):1–7.

- 2. Stacey WC, Litt B. Technology insight: neuroengineering and epilepsy—designing devices for seizure control. Nat Clin Pract Neurol. 2008;4(4):190–201.

- 3. Baldassano S, Wulsin D, Ung H, et al. A novel seizure detection algorithm informed by hidden Markov model event states. J Neural Eng. 2016;13(3):036011.

- 4. Siddiqui MK, Morales-Menendez R, Huang X, Hussain N. A review of epileptic seizure detection using machine learning classifiers. Brain Inform. 2020;7(1):1–18.

- 5. Logesparan L, Casson AJ, Rodriguez-Villegas E. Optimal features for online seizure detection. Med Biol Eng Comput. 2012;50(7):659–669.

- 6. White AM, Williams PA, Ferraro DJ, et al. Efficient unsupervised algorithms for the detection of seizures in continuous EEG recordings from rats after brain injury. J Neurosci Methods. 2006;152(1-2):255–266.

- 7. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–444.

- 8. Cho KO, Jang HJ. Comparison of different input modalities and network structures for deep learning-based seizure detection. Sci Rep. 2020;10(1):1–11.

- 9. Zeidler Z, Brandt-Fontaine M, Leintz C, et al. Targeting the mouse ventral hippocampus in the intrahippocampal kainic acid model of temporal lobe epilepsy. eNeuro. 2018;5(4):ENEURO.0158-18.2018.

- 10. Chollet F. Keras. 2015. https://www.tensorflow.org/guide/keras