Abstract

Objective: To reduce wasteful ordering of rare 1,25-OH vitamin D lab tests through use of a noninterruptive decision support tool.

Materials and Methods: We conducted a time series quality improvement study at 2 academic hospitals. The titles of vitamin D tests and the order in which they appeared in search results were changed to reflect the purpose and rarity of the tests. We used interrupted time series analyses to evaluate the changes we made.

Results: The estimated number of monthly tests ordered at the 2 hospitals increased, by 24.8 and 14.2, following the introduction of computerized provider order entry (CPOE) (both P < .001). When we changed the titles of the tests, the estimated number of monthly tests decreased at the 2 hospitals, by 22.1 and 11.3 (both P < .001). The search order did not affect test utilization.

Discussion: Changing catalog names in CPOE systems for infrequently used tests can reduce unintentional overuse. Users may prefer this to interruptive or restrictive interventions.

Conclusion: CPOE vendors and users should refine interfaces by incorporating human factors engineering. Health care institutions should monitor test utilization for unintended changes after CPOE implementation.

Keywords: Vitamin D, clinical decision support, user interface optimization, test naming conventions

INTRODUCTION

To deliver high-value care, providers should avoid ordering tests that do not influence clinical decisions.1 Yet unnecessary laboratory testing occurs commonly in the inpatient environment.2–4 Elements contributing to this waste include providers’ lack of familiarity with the costs, indications, and performance characteristics of tests5,6 as well as a desire to reduce their discomfort with clinical uncertainty.7 Clinical decision support systems (CDSSs) embedded within computerized provider order entry (CPOE) software display information and recommendations to clinicians about tests or treatments relevant to a specific patient. CDSSs have been shown to reduce wasteful lab utilization, but they have important limitations.8 Many CPOE platforms require costly local CDSS development,9 and CDSSs may rely excessively on interruptive alerts, which can result in clinician “alert fatigue.”10 Unless user-centered design principles are incorporated into interfaces and CDSSs, CPOE implementation may facilitate wasteful laboratory utilization.11,12

After CPOE implementation at our institution, the laboratory reported an unexpected increase in electronic ordering of 1,25-OH vitamin D testing (1,25-VDT) and a decrease in electronic ordering of 25-OH vitamin D testing (25-VDT). Whereas 25-VDT is commonly indicated and sufficient for evaluation of hypovitaminosis D, 1,25-VDT is required only for evaluation of rare endocrine problems. During informal discussions with users to identify root causes of the waste, many clinicians acknowledged confusing these similar-sounding tests and selecting both rather than seeking guidance. We theorized that our CPOE interface increased incorrect orders for 2 reasons: (1) searches for “vitamin D” presented 1,25-VDT first because of alphanumeric sorting, and (2) the order window lacked visible guidance about which test to choose. A solution for vitamin D test misordering has been reported, but it relied on interruptive alerts.11 We performed a quality improvement intervention based on a noninterruptive optimization of the user interface for vitamin D level orders to address our theory about incorrect ordering.

We anticipated that a successful intervention would reduce the number of 1,25-VDT orders to a level similar to that of the pre-CPOE period.

METHODS

This quality improvement intervention with time series analysis was conducted between November 2011 and September 2015 at 2 affiliated tertiary care academic hospitals. The hospitals share a CPOE system (Cerner Millennium, Kansas City, MO, USA), which completely replaced paper orders in “big bang” implementations in May 2012 (Hospital A, a university medical center) and September 2012 (Hospital B, an urban safety net and trauma hospital).

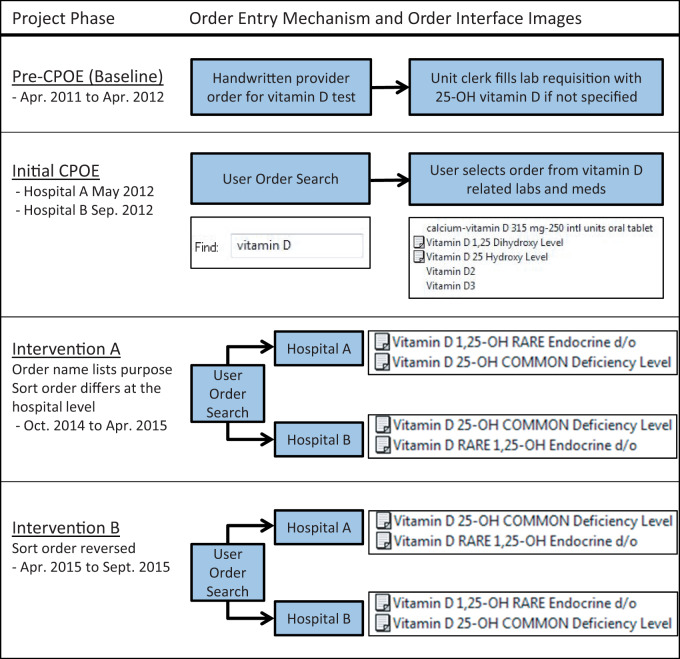

Prior to CPOE, ward clerks transcribed handwritten orders onto lab requisitions, interpreting nonspecific orders for vitamin D testing to mean the 25-OH metabolite (Figure 1). At go-live, CPOE orders generated lab requisitions that were transmitted electronically to the laboratory computer system, bypassing the clerk. Vitamin D test orders were initially labeled “Vitamin D 1,25 Dihydroxy Level” and “Vitamin D 25 Hydroxy Level” (Figure 1). Vendor functionality limited order names to 40 characters and users could access a Web-based test guide with infobuttons next to each test.13 No reflexive panels or order sets contained 1,25-VDT, although users could add any test to personal order sets.

Figure 1.

Mechanisms of vitamin D metabolite order entry during a quality improvement initiative at 2 academic hospitals (CPOE: computerized provider order entry)

In November 2014, we implemented intervention Part A (Figure 1). The vitamin D test names were changed to indicate the purpose and relative frequency of each test. The 25-VDT order was named “Vitamin D 25-OH COMMON Deficiency Level.” The 1,25-VDT order name included the phrase “RARE Endocrine d/o”; its word order was adjusted so that 1,25-VDT sorted alphanumerically before 25-VDT at Hospital A and after 25-VDT at Hospital B (Figure 1). In April 2015, we implemented intervention Part B and reversed the alphanumeric sort order of 1,25-VDT and 25-VDT at the 2 hospitals. This was part of a process improvement cycle to address whether hospital-specific ordering habits might bias evaluation of the effect of sort order on test utilization (Figure 1).
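The naming constraints described above can be illustrated with a minimal sketch. It assumes that search results are sorted by a plain case-insensitive character comparison, which may not match the vendor's actual sort rule. The two original names and the new 25-VDT name are taken from the text; the two 1,25-VDT names are hypothetical, because the full renamed strings are not given here, and the function names are illustrative only.

```python
MAX_NAME_LENGTH = 40  # vendor limit on order names reported in the text


def search_results(names):
    """Return order names in case-insensitive alphanumeric order (assumed sort rule)."""
    return sorted(names, key=str.casefold)


def fits_limit(name, limit=MAX_NAME_LENGTH):
    """Check whether an order name fits within the character limit."""
    return len(name) <= limit


# Names in use at CPOE go-live, as quoted in the text.
ORIGINAL_NAMES = ["Vitamin D 25 Hydroxy Level", "Vitamin D 1,25 Dihydroxy Level"]

# New 25-VDT name, as quoted in the text.
COMMON_NAME = "Vitamin D 25-OH COMMON Deficiency Level"

# HYPOTHETICAL 1,25-VDT names: only the phrase "RARE Endocrine d/o" comes from
# the text. The word order is varied to move the test before or after 25-VDT.
RARE_SORTS_FIRST = "Vitamin D 1,25-OH RARE Endocrine d/o"
RARE_SORTS_LAST = "Vitamin D RARE Endocrine d/o 1,25-OH"

if __name__ == "__main__":
    for label, names in [
        ("go-live", ORIGINAL_NAMES),
        ("rare test listed first", [COMMON_NAME, RARE_SORTS_FIRST]),
        ("rare test listed last", [COMMON_NAME, RARE_SORTS_LAST]),
    ]:
        print(label)
        for name in search_results(names):
            print(f"  {name} ({len(name)} chars, fits: {fits_limit(name)})")
```

Under this assumed sort rule, the digit "1" in "1,25" sorts ahead of the "2" in "25", which is consistent with 1,25-VDT appearing first in searches at go-live.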

We estimated mean monthly test utilization for 1,25-VDT for 3 time periods: (1) the pre-CPOE period, (2) the initial CPOE period, and (3) the intervention period for each hospital. To evaluate changes between the 3 time periods in 1,25-VDT, we conducted an interrupted time series analysis using an autoregressive correlation with a lag of 1. We conducted a separate analysis for each hospital, examining the number of 1,25-VDT tests per month ordered across time (month and year). Because there was a small number of data points for intervention Parts A and B when analyzed separately, we also reanalyzed our data combining Parts A and B into 1 group to determine whether the small counts affected our inference. To assess for secular trends in hospital patient volume, the monthly average daily census was abstracted from administrative databases.
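For readers unfamiliar with the technique, the following is a minimal sketch of an interrupted time series model with lag-1 autoregressive errors. It is not the analysis code used in this study. It assumes a level-change-only model (no trend terms) fitted by Cochrane-Orcutt iteration, and it runs on synthetic monthly counts generated inside the example. All function names, period lengths, and simulated values are assumptions for illustration.

```python
import random


def solve(a, b):
    """Solve the linear system a.x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def ols(X, y):
    """Ordinary least squares via the normal equations X'X b = X'y."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)


def fit_its_ar1(y, cpoe, intervention, iterations=10):
    """Level-change interrupted time series with AR(1) errors (Cochrane-Orcutt)."""
    X = [[1.0, float(c), float(i)] for c, i in zip(cpoe, intervention)]
    beta, rho = ols(X, y), 0.0
    for _ in range(iterations):
        resid = [yi - sum(b * x for b, x in zip(beta, row)) for row, yi in zip(X, y)]
        denom = sum(e * e for e in resid[:-1])
        if denom == 0:
            break
        # Lag-1 autocorrelation of the residuals.
        rho = sum(e0 * e1 for e0, e1 in zip(resid, resid[1:])) / denom
        # Quasi-difference the data to remove the AR(1) structure, then refit.
        Xs = [[x1 - rho * x0 for x0, x1 in zip(r0, r1)] for r0, r1 in zip(X, X[1:])]
        ys = [y1 - rho * y0 for y0, y1 in zip(y, y[1:])]
        beta = ols(Xs, ys)
    return {"baseline": beta[0], "cpoe_change": beta[1],
            "intervention_change": beta[2], "rho": rho}


# SYNTHETIC data: 47 months with a step up at month 6 and a step down at month 36.
rng = random.Random(0)
months = 47
cpoe = [1 if t >= 6 else 0 for t in range(months)]
intervention = [1 if t >= 36 else 0 for t in range(months)]
noise, y = 0.0, []
for c, i in zip(cpoe, intervention):
    noise = 0.3 * noise + rng.gauss(0, 1.5)  # autocorrelated noise
    y.append(3.0 + 25.0 * c - 22.0 * i + noise)

result = fit_its_ar1(y, cpoe, intervention)

if __name__ == "__main__":
    for key, value in result.items():
        print(f"{key}: {value:.2f}")
```

In this coding, the CPOE indicator stays at 1 through the intervention period, so `cpoe_change` estimates the shift from the pre-CPOE level and `intervention_change` estimates the further shift relative to the initial CPOE level. A statistics package would normally also report standard errors and P values, which this sketch omits.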

To evaluate appropriateness, 1 author (A.A.W.) reviewed charts from a sample of 30 randomly selected patients (15 from each hospital) who underwent 1,25-VDT after CPOE and before the intervention.

RESULTS

The number of monthly 1,25-VDT tests increased after CPOE implementation and then decreased following our intervention. The increases in utilization occurred immediately after CPOE implementation at each hospital. The mean monthly numbers of 1,25-VDT tests for Hospital A in the pre-CPOE period, initial CPOE period, and intervention period were 2.8, 28.4, and 1.1, respectively. In Hospital B, the mean monthly numbers of 1,25-VDT tests in the pre-CPOE period, initial CPOE period, and intervention period were 1.3, 15.3, and 1.8, respectively.

Hospital A

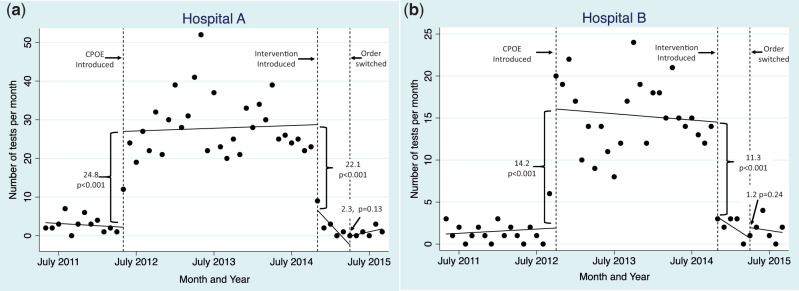

Between the pre- and initial CPOE periods, the estimated number of monthly tests ordered at Hospital A increased by 24.8 (P < .001) (Figure 2A). Compared to the initial CPOE period, the estimated number of monthly tests after the intervention (Part A) was introduced decreased by 22.1 (P < .001). There was no statistically significant difference in estimated number of monthly tests ordered when intervention Part B was introduced, changing the alphanumeric sort order (2.3, P = .13, Figure 2A). When Parts A and B were treated as a single group, the decrease between the initial CPOE period and the intervention was 24.9 (P < .001) (not shown in graph).

Figure 2.

Time series graph of 1,25-OH vitamin D order counts at 2 hospitals. The graph spans periods with the use of paper orders, the use of native CPOE beginning May 2012 and September 2012 at Hospital A (A) and Hospital B (B), and the quality improvement intervention in October 2014.

Hospital B

Between the pre- and initial CPOE periods, the estimated number of monthly tests ordered at Hospital B increased by 14.2 (P < .001) (Figure 2B). Compared to the initial CPOE period, the estimated number of monthly tests after the intervention (Part A) was introduced decreased by 11.3 (P < .001). There was no statistically significant difference in the estimated number of monthly tests ordered when intervention Part B was introduced, changing the alphanumeric sort order (1.2, P = .24, Figure 2B). When Parts A and B were treated as a single group, the decrease between the initial CPOE period and the intervention was 11.9 (P < .001) (not shown in graph).

Supplemental Analysis

The chart review from the post-CPOE, pre-intervention period found no indication for 1,25-VDT in 28 of 30 orders (93.3%), and 25-VDT was ordered simultaneously in 12 of 30 patients (40%).

Patient volume was also comparable; the combined average daily census of the 2 hospitals increased slightly (by 2.4%) from the pre-CPOE to the initial CPOE phase (718.4 patients per day to 735.6 patients per day).
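The percentages in this supplemental analysis follow directly from the reported counts. A short check, with helper names chosen here for illustration only:

```python
def percent(part, whole):
    """Express part/whole as a percentage."""
    return 100.0 * part / whole


def percent_change(before, after):
    """Relative change from before to after, as a percentage."""
    return 100.0 * (after - before) / before


no_indication = round(percent(28, 30), 1)              # chart review: orders without an indication
simultaneous = round(percent(12, 30), 1)               # chart review: 25-VDT ordered at the same time
census_change = round(percent_change(718.4, 735.6), 1) # combined average daily census

if __name__ == "__main__":
    print(no_indication, simultaneous, census_change)
```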

DISCUSSION

In this time series quality improvement study, we found a dramatic rise in unnecessary 1,25-VDT utilization associated with CPOE implementation followed by a sustained reciprocal decrease associated with a minor change in the name of the order. That the increase in 1,25-VDT orders occurred independently at each hospital in concert with CPOE implementation suggests the CPOE interface was responsible for the increase in 1,25-VDT ordering. The decrease occurred at both study hospitals simultaneously following our intervention. It was not statistically significantly associated with a change in the sort order, indicating that displaying the purpose and relative frequency of the test in its name accounted for most of the change in ordering behavior.

Our noninterruptive mechanism to guide clinicians to the correct test more effectively reduced waste than the application’s native infobutton decision support. During the initial CPOE and intervention phases, Web-based information about the test could be reached with a single mouse click. However, infobuttons did not inhibit wasteful use of 1,25-VDT before the test name change. Additionally, the prevalence of simultaneous orders for 25-VDT and 1,25-VDT during the pre-intervention period indicates that the infobutton did not deter clinicians from ordering both tests.

Prior system-based solutions for reducing waste of a single test have relied primarily on interruptive or restrictive mechanisms, such as pop-up alerts, forms requiring the clinician to justify the order, and efforts to delete or obscure tests in the order catalog.8,11,14,15 These types of interventions entail significant cumulative cognitive costs for clinicians: alert fatigue, inefficient workflow, burdensome data entry, and frustration or lost time associated with locating and ordering tests outside CPOE. Within the narrow context of this single laboratory test, our solution succeeded without creating alerts or extra clicks for the user and did not require workflow barriers to legitimate orders for 1,25-VDT. Although this solution may not apply to all other types of overutilization, it suggests there may be opportunities to increase innovation in interface changes that are rooted in human factors engineering. For example, CPOE vendors could assist clients by recommending proven default test names. In addition to optimizing naming conventions, future search functions within CPOE might emulate Internet search engines by incorporating information about the user’s level of training, specialty, and past orders or the patient’s diagnosis to customize how orders are displayed to the user.

Our study has limitations. First, the data come from a single CPOE software package at 2 academic centers, which may limit generalizability. Other CPOE systems may have different noninterruptive decision support, and providers at our academic setting may differ from providers in other settings. Second, because we used a nonrandomized quality improvement design, unmeasured confounders or temporal trends may have contributed to the outcome. However, the presence of a stable baseline and the dramatic changes that followed our efforts suggest that unmeasured secular changes or other factors are unlikely to be responsible for the observed effect. Third, we had a limited number of data points to examine changes in the alphanumeric sort order, which may have limited our ability to detect differences with this part of the intervention. Also, the small increase in hospital volume over the study period was of insufficient magnitude to explain the changes in test utilization. Finally, these findings may not generalize to tests with complex or nuanced indications that would be difficult to encode succinctly in the name of the test.

Our findings highlight the need for CPOE vendors and users to refine user interfaces by incorporating human factors engineering. Misordering of tests leads to wasted resources and may mislead clinical decisions. Our work points to the need for health care institutions to closely monitor test utilization for unintended changes after CPOE implementation. Lastly, this work demonstrates the potential for subtle but informed changes to the user interface to reduce waste without interrupting physician workflow.

Contributorship statement

Study conception and design: All

Acquisition of data: A.A.W., N.G.H.

Analysis and interpretation of data: C.M.McK.

Drafting of manuscript: A.A.W., C.M.McK.

Critical Revision: All

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sector.

Competing interests

The authors have no competing interests to declare.

REFERENCES

- 1. Cassel CK, Guest JA. Choosing wisely: helping physicians and patients make smart decisions about their care. JAMA. 2012;307(17):1801–1802.

- 2. van Walraven C, Raymond M. Population-based study of repeat laboratory testing. Clin Chem. 2003;49(12):1997–2005.

- 3. van Walraven C, Naylor CD. Do we know what inappropriate laboratory utilization is? A systematic review of laboratory clinical audits. JAMA. 1998;280(6):550–558.

- 4. Miyakis S, Karamanof G, Liontos M, et al. Factors contributing to inappropriate ordering of tests in an academic medical department and the effect of an educational feedback strategy. Postgrad Med J. 2006;82(974):823–829.

- 5. Graham JD, Potyk D, Raimi E. Hospitalists' awareness of patient charges associated with inpatient care. J Hosp Med. 2010;5(5):295–297.

- 6. Sood R, Sood A, Ghosh AK. Non-evidence-based variables affecting physicians' test-ordering tendencies: a systematic review. Neth J Med. 2007;65(5):167–177.

- 7. ABIM Foundation. Unnecessary tests and procedures in the health care system: what physicians say about the problem, the causes, and the solutions. 2014. http://www.choosingwisely.org/wp-content/uploads/2014/04/042814_Final-Choosing-Wisely-Survey-Report.pdf. Accessed March 15, 2015.

- 8. Kobewka DM, Ronksley PE, McKay JA, et al. Influence of educational, audit and feedback, system based, and incentive and penalty interventions to reduce laboratory test utilization: a systematic review. Clin Chem Lab Med. 2015;53(2):157–183.

- 9. Bryant AD, Fletcher GS, Payne TH. Drug interaction alert override rates in the Meaningful Use era: no evidence of progress. Appl Clin Inform. 2014;5(3):802–813.

- 10. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc. 2006;13(2):138–147.

- 11. Krasowski MD, Chudzik D, Dolezal A, et al. Promoting improved utilization of laboratory testing through changes in an electronic medical record: experience at an academic medical center. BMC Med Inform Decis Mak. 2015;15(1):137.

- 12. Chan J, Shojania KG, Easty AC, et al. Does user-centred design affect the efficiency, usability and safety of CPOE order sets? J Am Med Inform Assoc. 2011;18(3):276–281.

- 13. Cimino JJ, Li J. Sharing infobuttons to resolve clinicians' information needs. AMIA Annu Symp Proc. 2003;2003:815.

- 14. Rosenbloom ST, Chiu KW, Byrne DW, et al. Interventions to regulate ordering of serum magnesium levels: report of an unintended consequence of decision support. J Am Med Inform Assoc. 2005;12(5):546–553.

- 15. Froom P, Barak M. Cessation of dipstick urinalysis reflex testing and physician ordering behavior. Am J Clin Pathol. 2012;137(3):486–489.