Abstract

Objective: In this systematic review, we aimed to evaluate methodological and reporting trends present in the current literature by investigating published usability studies of electronic health records (EHRs).

Methods: A literature search was conducted for articles published through January 2015 using MEDLINE (Ovid), EMBASE, Scopus, and Web of Science, supplemented by citation and reference list reviews. Studies were included if they tested the usability of hospital and clinic EHR systems in the inpatient, outpatient, emergency department, or operating room setting.

Results: A total of 4848 references were identified for title and abstract screening. Full text screening was performed for 197 articles, with 120 meeting the criteria for study inclusion.

Conclusion: A review of the literature demonstrates a paucity of quality published studies describing scientifically valid and reproducible usability evaluations at various stages of EHR system development. A lack of formal and standardized reporting of EHR usability evaluation results is a major contributor to this knowledge gap, and efforts to improve this deficiency will be one step toward moving the field of usability engineering forward.

Keywords: electronic health records, health information technology, human factors, usability

Introduction

Using electronic health records (EHRs) is a key component of a comprehensive strategy to improve healthcare quality and patient safety.1 The incentives provided by the Meaningful Use program are intended to encourage increased adoption of EHRs as well as more interactions between EHRs and medical providers.2 As such, there has never been a greater need for effective usability evaluations of EHR systems, both to prevent the implementation of suboptimal EHR systems and to improve EHR interfaces for healthcare provider use. Compromised EHR system usability can have a number of significant negative implications in a clinical setting, such as use errors that can potentially cause patient harm and an attenuation of EHR adoption rates.1,3 As a result, the National Institute of Standards and Technology has provided practical guidance for the vendor community on how to perform user-centered design and diagnostic usability testing to improve the usability of EHR systems currently under development.1,2,4 The Office of the National Coordinator for Health Information Technology has even established user-centered design requirements that must be met before vendor EHRs receive certification.5

Despite the pressing need for usability evaluations, knowledge gaps remain regarding how to successfully execute such an assessment. There is currently little guidance on how to perform systematic evaluations of EHRs and report findings and insights that can guide future usability studies.6,7 Identifying these gaps and reporting on the current methodology and outcome reporting practices is the first step in moving the field toward adopting a more unified and generalizable process of studying EHR system usability. In the present systematic review, we aimed to evaluate methodological and reporting trends present in the current literature by assessing published usability studies of EHRs. We reviewed the different engineering methods employed for usability testing in these studies as well as the distribution of medical domains, end-user profiles, development phases, and objectives for each method used.

Methods

We followed the standards set forth by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA-P) 2015 Initiative8 and the Institute of Medicine's Standards for Systematic Reviews9 to conduct this study.

Institutional Review and Human Subject Determination

The present study was exempted from approval by the Mayo Clinic institutional review board, because it did not involve active human subject research. No individual patients participated in this study.

Data Sources

Our medical reference librarian (A.M.F.) designed the search strategy and literature search with input from the investigators. We searched MEDLINE (Ovid), EMBASE, Scopus, and Web of Science for articles published from database inception through January 2015. Duplicates were removed automatically using EndNote, and the remaining articles were then compared manually using the author, year, title, journal, volume, and pages to identify any additional duplicates. We increased the comprehensiveness of the database searches by looking for additional potentially relevant studies in the cited references of the retrieved studies.
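The field-by-field duplicate comparison described above could be approximated programmatically. The following is a minimal sketch, not the procedure the authors used: the `normalize` and `dedupe` helpers and the sample records are hypothetical, and the normalization rule (lowercasing and collapsing punctuation) is an assumption.

```python
import re
from typing import Iterable

# Fields the review lists for the manual duplicate comparison.
KEY_FIELDS = ("author", "year", "title", "journal", "volume", "pages")


def normalize(value: str) -> str:
    """Lowercase and collapse punctuation/whitespace (assumed rule)."""
    return re.sub(r"[^a-z0-9]+", " ", value.lower()).strip()


def dedupe(records: Iterable[dict]) -> list:
    """Keep the first record seen for each normalized key."""
    seen = set()
    unique = []
    for rec in records:
        key = tuple(normalize(str(rec.get(f, ""))) for f in KEY_FIELDS)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Hypothetical citation records, for illustration only.
records = [
    {"author": "Doe J", "year": 2010, "title": "A Usability Study.",
     "journal": "J Example", "volume": "1", "pages": "1-5"},
    {"author": "doe j", "year": 2010, "title": "A usability study",
     "journal": "J Example", "volume": "1", "pages": "1-5"},
    {"author": "Roe K", "year": 2012, "title": "Another study",
     "journal": "J Example", "volume": "2", "pages": "6-9"},
]
unique = dedupe(records)
```

Exact-key matching of this kind only catches formatting differences; near-duplicates with differing page ranges or truncated titles would still need the manual check the authors describe.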

Search Terms

We performed the search by identifying literature that contained keywords in two or more of the following categories (expanded with appropriate Medical Subject Headings and other related terms): (1) electronic health records, (2) usability, and (3) usability testing methods.10 The final search strategy we used is available online in Supplementary Appendix A.
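The "two or more of three categories" rule can be illustrated with a short sketch. The term lists below are hypothetical placeholders, not the authors' search strategy (which is given in Supplementary Appendix A), and simple substring matching is an assumption that stands in for database query syntax.

```python
# Hypothetical term lists; the real strategy is in Supplementary Appendix A.
CATEGORIES = {
    "ehr": {"electronic health record", "electronic medical record"},
    "usability": {"usability"},
    "methods": {"think-aloud", "heuristic evaluation", "cognitive walkthrough"},
}


def categories_matched(text: str) -> set:
    """Return the names of the categories with at least one term in the text."""
    lowered = text.lower()
    return {
        name
        for name, terms in CATEGORIES.items()
        if any(term in lowered for term in terms)
    }


def is_candidate(text: str, minimum: int = 2) -> bool:
    """A citation qualifies when it matches at least `minimum` categories."""
    return len(categories_matched(text)) >= minimum
```

For example, `is_candidate("Heuristic evaluation of an electronic health record")` matches the EHR and methods categories and qualifies, whereas a title that mentions only usability does not.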

Inclusion and Exclusion Criteria

Setting

We included studies that tested the usability of hospital and clinic EHR systems in the inpatient, outpatient, emergency department, or operating room settings. We excluded usability studies of medical devices, web-based applications, mobile devices, dental records, and personal health records.11

Systems

We included studies that performed usability testing on any of the following EHR components: electronic medical record (eg, document manager, lab viewer), computerized physician order entry, clinical decision support tools, and anesthesia information management system.

Language

Only English-language studies were included in the review.

Article Selection

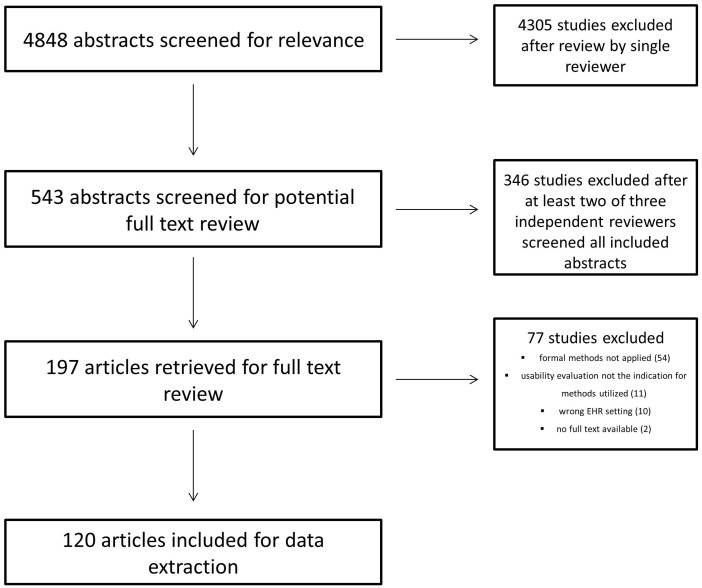

One of the authors (M.A.E.) independently screened the titles and abstracts of all the citations retrieved by the final search strategy (4848) to determine whether an abstract was "potentially relevant" or "not relevant" to the subject at hand. Abstracts were considered "not relevant" if they were not about a medical subject or were overall grossly unrelated to the study topic. Using this screening process, 543 studies were considered to be "potentially relevant." At least two of three reviewers (M.A.E., J.C.O., and M.D.) further screened each of the 543 studies' abstracts to determine candidates for full text review. Studies were excluded from full text review if they did not pertain to EHRs. Of these, 197 studies underwent a subsequent full text review, performed by the same three reviewers, with 120 articles ultimately selected for data extraction.12–131 A summary of this review process is presented in Figure 1. Any conflicts that arose in the screening and review processes were resolved by discussion between the conflicting reviewers. Article screening was coordinated and performed using the online systematic review tool Covidence (Alfred Health, Monash University, Melbourne, Australia).132,133

Figure 1.

Study selection flow chart.

Data Abstraction

Comprehensive data extraction was performed by one of the authors (M.A.E.) on the articles that passed the full screening review. We extracted data relating to country, objective, setting, component tested, type of evaluation, description of evaluators and subjects (prescriber: physician, advanced practice nurse, physician assistant; non-prescriber: registered nurse, pharmacist, support staff), and usability methods employed.
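One way to picture the extracted fields is as a single record per article. This is a minimal sketch under stated assumptions: the `ExtractionRecord` class, its field names, and the `subject_group` helper are hypothetical and only mirror the items and role groupings listed above.

```python
from dataclasses import dataclass, field

# Role groupings as listed in the data abstraction description.
PRESCRIBER_ROLES = {"physician", "advanced practice nurse", "physician assistant"}
NON_PRESCRIBER_ROLES = {"registered nurse", "pharmacist", "support staff"}


@dataclass
class ExtractionRecord:
    """Hypothetical container for the data extracted from one article."""
    country: str
    objective: str
    setting: str
    component_tested: str
    evaluation_type: str
    evaluators_described: bool
    subject_roles: set = field(default_factory=set)
    usability_methods: list = field(default_factory=list)

    def subject_group(self) -> str:
        """Classify subjects as Prescribers, Non-prescribers, Both, or Unknown."""
        has_prescriber = bool(self.subject_roles & PRESCRIBER_ROLES)
        has_non_prescriber = bool(self.subject_roles & NON_PRESCRIBER_ROLES)
        if has_prescriber and has_non_prescriber:
            return "Both"
        if has_prescriber:
            return "Prescribers"
        if has_non_prescriber:
            return "Non-prescribers"
        return "Unknown"
```

A record with no described subject roles falls into "Unknown", which corresponds to the category used for studies that did not describe their evaluation subjects.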

Results

Characteristics of Included Studies

Table 1 summarizes the characteristics of the studies included in this systematic review. The majority of the studies were conducted in the United States (53%) or the Netherlands (8%), and no other country had more than four articles. Most studies had a summative (80%) outcome design and evaluated EHRs in either a mixed (42%) or outpatient (35%) clinical setting.

Table 1.

Characteristics of Included Studies

| Characteristic | n (%) |
|---|---|
| Total | 120 (100) |
| Country | |
| United States | 64 (53) |
| Netherlands | 10 (8) |
| Canada | 4 (3) |
| Norway | 4 (3) |
| Other<sup>a</sup> | 38 (32) |
| Study Objective | |
| Summative | 96 (80) |
| Formative | 20 (17) |
| Both | 4 (3) |
| Setting | |
| Mixed | 50 (42) |
| Outpatient | 42 (35) |
| Hospital wards | 13 (11) |
| Emergency department | 6 (5) |
| Intensive care unit | 3 (2) |
| Operating room | 3 (2) |
| Other | 3 (2) |

<sup>a</sup>Countries with ≤3 studies, totaled.

Characteristics of Usability Evaluations

Table 2 summarizes the characteristics of the usability evaluations performed in the included studies. A slight majority (56%) of the studies were performed on an electronic medical record; clinical decision support tools (21%) and computerized physician order entry systems (17%) were the next most common study subjects. A majority of the studies were performed as implementation/post-implementation evaluations (64%), and requirements/development evaluations (8%) were the least often performed in the studies reviewed. More than two-thirds of the evaluations involved clinical prescribers (45%, prescribers only; 23%, prescribers and non-prescribers), and 15 (13%) studies failed to give a description of the evaluation subjects. Only 29% of the studies assessed included a description of the study evaluators responsible for designing and carrying out the usability evaluation.

Table 2.

Characteristics of Usability Evaluations

| Characteristic | n (%) |
|---|---|
| Component Tested | |
| Electronic medical record | 67 (56) |
| Clinical decision support tool | 25 (21) |
| Computerized physician order entry | 20 (17) |
| Anesthesia information management system | 2 (1) |
| Mixed | 6 (5) |
| Type of Evaluation | |
| Implementation/post-implementation | 77 (64) |
| Prototype | 18 (15) |
| Requirements/development | 9 (8) |
| Mixed | 16 (13) |
| Evaluation Subjects | |
| Prescribers | 54 (45) |
| Non-prescribers | 23 (19) |
| Both | 28 (23) |
| Unknown | 15 (13) |
| Description of Evaluators Included? | |
| Yes | 35 (29) |
| No | 85 (71) |

Usability Methods Utilized

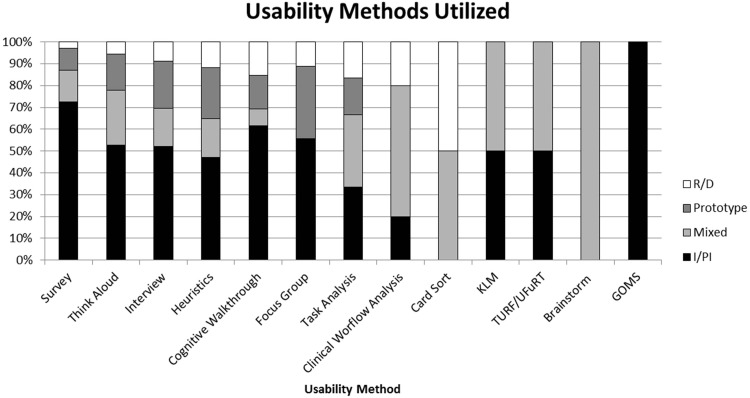

Supplementary Appendix B provides the list of usability methods used for categorization.4,10 The 120 studies analyzed for this review were categorized based on these different analysis methods, with many studies utilizing more than one method. Table 3 and Figure 2 provide tabular and graphical breakdowns of the relative frequency of use of each usability analysis method, stratified by the four different EHR evaluation types (requirements/development, prototype, implementation/post-implementation, and mixed). The most frequent methods used were survey (37%) and think-aloud (19%), which, combined, accounted for more than half of all the usability evaluations we reviewed. These two methods were both the first- and second-most-commonly used techniques in each type of evaluation.

Table 3.

Usability Methods Utilized

| Usability Method | Type of Evaluation | | | | Total, n (%) |
|---|---|---|---|---|---|
| | R/D | Prototype | I/PI | Mixed | |
| Survey | 2 | 7 | 50 | 10 | 69 (37) |
| Think-aloud | 2 | 6 | 19 | 9 | 36 (19) |
| Interview | 2 | 5 | 12 | 4 | 23 (12) |
| Heuristics | 2 | 4 | 8 | 3 | 17 (9) |
| Cognitive walkthrough | 2 | 2 | 8 | 1 | 13 (7) |
| Focus group | 1 | 3 | 5 | – | 9 (5) |
| Task analysis | 1 | 1 | 2 | 2 | 6 (3) |
| Clinical workflow analysis | 1 | – | 1 | 3 | 5 (3) |
| Card sort | 1 | – | – | 1 | 2 (1) |
| KLM | – | – | 1 | 1 | 2 (1) |
| TURF/UFuRT | – | – | 1 | 1 | 2 (1) |
| Brainstorm | – | – | – | 1 | 1 (<1) |
| GOMS | – | – | 1 | – | 1 (<1) |
| Total, n (%) | 14 (8) | 28 (15) | 108 (58) | 36 (19) | 186 |

GOMS, goals, operators, methods, and selections; KLM, keystroke-level model; I/PI, implementation/post-implementation; R/D, requirements/development; TURF/UFuRT, tasks, users, representations, and functions.
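The totals and percentages in Table 3 can be cross-checked from the cell counts. The sketch below transcribes the counts from the table (dashes entered as 0) and assumes the percentages are taken over all 186 method uses rather than over the 120 studies; the variable names are illustrative.

```python
# Counts per method in column order: R/D, Prototype, I/PI, Mixed.
counts = {
    "Survey": (2, 7, 50, 10),
    "Think-aloud": (2, 6, 19, 9),
    "Interview": (2, 5, 12, 4),
    "Heuristics": (2, 4, 8, 3),
    "Cognitive walkthrough": (2, 2, 8, 1),
    "Focus group": (1, 3, 5, 0),
    "Task analysis": (1, 1, 2, 2),
    "Clinical workflow analysis": (1, 0, 1, 3),
    "Card sort": (1, 0, 0, 1),
    "KLM": (0, 0, 1, 1),
    "TURF/UFuRT": (0, 0, 1, 1),
    "Brainstorm": (0, 0, 0, 1),
    "GOMS": (0, 0, 1, 0),
}

# Row totals: how often each method was used across all evaluation types.
row_totals = {method: sum(cells) for method, cells in counts.items()}
grand_total = sum(row_totals.values())

# Column totals: how many method uses fall under each evaluation type.
column_totals = [sum(column) for column in zip(*counts.values())]

# Shares of all method uses, as percentages.
survey_share = 100 * row_totals["Survey"] / grand_total
think_aloud_share = 100 * row_totals["Think-aloud"] / grand_total
combined_share = survey_share + think_aloud_share
```

Under that assumption the column totals reproduce the table's bottom row, and survey plus think-aloud together account for a little over half of all method uses, consistent with the text.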

Figure 2.

Usability method distribution by type of evaluation. R/D, requirements/development; I/PI, implementation/post-implementation.

Of the 69 studies in which surveys were performed, 33 (48%) used surveys that were developed specifically for the study or were not described in the article's text. The most common existing and validated survey used was the System Usability Scale134 (20%), followed closely by the Questionnaire for User Interaction Satisfaction135 (16%).

The heuristics method was used in 17 studies, with Nielsen's Usability Heuristics136 being cited as the basis of the evaluation in 10 (59%) of these studies. Study-specific heuristic methods or methods that were not described in the article's text accounted for 35% of the heuristic evaluations.

Objective Data

Twenty-eight (23%) of the included studies reported objective data that were obtained in addition to the formal usability evaluation. These data included time to task completion, task completion accuracy, usage rates, mouse clicks, and cognitive workload.

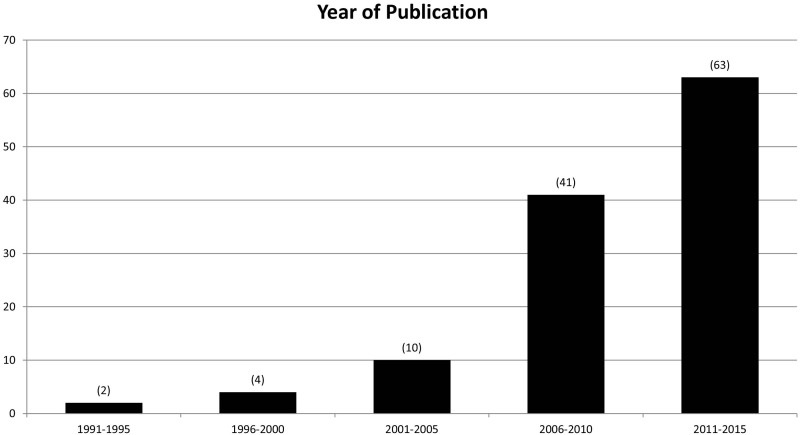

Publication Year

Included studies were published between the years 1991 and 2015, although only six studies were published before 2000 (Figure 3). When analyzed by 5-year time increments, there were no obvious differences with regard to study objective, components tested, stage of development, or usability method used in the studies reviewed.

Figure 3.

Distribution of included studies, stratified by year. The number of studies that were published within each 5-year increment is in parentheses.
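Grouping publication years into 5-year increments can be sketched as follows. The bin boundaries (starting at 1991, so 1991-1995, 1996-2000, and so on) are an assumption, since the text does not state where each increment begins, and the `years` list is illustrative rather than the review's data.

```python
from collections import Counter


def five_year_bin(year: int, start: int = 1991, width: int = 5) -> str:
    """Label the fixed-width bin containing `year` (assumed to begin at `start`)."""
    offset = (year - start) // width
    low = start + offset * width
    return f"{low}-{low + width - 1}"


# Illustrative publication years, not the review's actual data.
years = [1994, 2001, 2003, 2008, 2010, 2011, 2014, 2014]
bin_counts = Counter(five_year_bin(y) for y in years)
```

With counts per bin in hand, the study characteristics could be cross-tabulated by bin to look for the kind of time trends the authors report not finding.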

Discussion

The goal of this systematic review was to describe the range and characteristics of published EHR evaluation studies that included accepted usability analysis or engineering methods.10,137 Even though our initial search identified nearly 5000 potential studies, only a very small fraction of these truly applied usability evaluation standards and were therefore eligible for our review.

The majority of studies included in the systematic review had a summative study objective and were performed late in the EHR system design cycle, either during or after the system's implementation. This is consistent with previous findings7,138 and sheds light on the lack of EHR evaluations performed early on or throughout the design process, when usability issues can be more readily identified and rectified. Often, the responsibility of conducting evaluations early in the EHR design process is placed on commercial EHR vendors, and relying on their diligence is important for successful EHR implementation and adoption. However, a recent evaluation of the largest EHR vendors revealed that fewer than half are conducting industry-standard5 usability evaluations, and a significant number of vendors are not even employing usability staff to carry out these assessments.139,140

We found that the usability method most often employed in published evaluations of EHR systems is to survey or distribute questionnaires among end-users. Although surveys are useful for gathering self-reported data about the user's perception of how useful, usable, and satisfying141 an EHR system is, they do not allow evaluators to identify individual usability problems that can be targeted for improvement, a process that is at the core of usability evaluations.2,10,43,142 Furthermore, of all the survey evaluations identified, almost half did not use validated surveys or failed to describe the survey creation methods in a way that allows the reader to assess the reliability and generalizability of the studies' results.

As with the survey-based studies, we found that studies using other, sometimes more complicated, usability analysis methods also often neglected to provide a clear, detailed description of their study design.2,138 For example, a significant number of the heuristic evaluation reports we reviewed did not describe what heuristics were used or the methods behind developing the study-specific heuristics. In addition, interview, focus group, and think-aloud evaluations almost universally lacked specific details about the techniques used to moderate participant sessions143 and the expertise or qualifications of the moderator.4 These omissions prevent the reader from being able to assess what biases or unintended consequences, which could possibly affect the reported outcomes, may have been introduced into the study design.

Although the majority of studies we reviewed provided a description of the subjects who tested the EHR system's usability, these studies less consistently described the evaluators responsible for designing and carrying out the usability evaluation. Often the reader is not told the expertise level and domain experience of those performing the evaluations. Many usability evaluation methods are complex and multifaceted, and evaluators who have usability, domain, and/or local systems expertise are critical for an effective evaluation.1,43 This lack of a consistent background reporting framework limits the reader's ability to appraise the reliability of the evaluation or validate its outcomes.6

The need to improve usability standardization and transparency has never been greater, especially since this issue has been garnering attention from academic,11 industry,139,140 and media144,145 sources. The pressure from industry to improve the usability of EHRs should be systematically aligned with policy-level certification and requirement standards, because recent data show variability among EHR vendors regarding what actually constitutes "user-centered design" and how to carry out appropriate evaluations of EHR systems.140

This systematic review has some limitations, most of which stem from the heterogeneity of the studies reviewed. The lack of uniformity in study authors' descriptions of usability reporting and the methodology employed in the studies means it is possible that articles that met our inclusion criteria were overlooked and not included in the review. However, this emphasizes the need not only for formal usability evaluation performance standards1,2,5 but also for standards for reporting and disseminating evaluation results.6 The exclusion of non-English-language studies may limit the generalizability of our findings to the worldwide effort to improve EHR usability evaluations. However, the general conclusions of this review are similar to those of previous international work done on this topic7,146 and help validate our search and study evaluation processes.

Additionally, it is important to remember that the purpose of a specific evaluation cannot be accurately determined by only assessing the types of methods used for the evaluation. This is especially relevant when multiple or mixed methods are employed to evaluate EHRs at various stages of their development. Thus, any conclusions about EHR evaluations are best made in the context of each unique evaluation, its primary purpose and goals, and the specific boundaries within which it was undertaken.

Conclusion

Usability evaluations of EHR systems are an important component of the recent push to put electronic tools in the hands of clinical providers. Both government and industry standards have been proposed to help guide these evaluations and improve the reporting of outcomes and relevant findings from them (Table 4). However, a review of the literature on EHR evaluations demonstrates a paucity of quality published studies describing scientifically valid and reproducible usability evaluations conducted at various stages of EHR system development and how findings from these evaluations can be used to inform others in similar efforts. The lack of formal and standardized reporting of usability evaluation results is a major contributor to this knowledge gap, and efforts to improve this deficiency will be one step toward moving the field of usability engineering forward.

Table 4.

Proposed Framework for Reporting Usability Evaluations

| Section | Reporting element |
|---|---|
| Background | Previous usability work |
| | Impetus for current study |
| Test Planning | Test objectives (the questions the study is designed to answer) |
| | Test application |
| | Performance and satisfaction metrics |
| Methodology | Study evaluators |
| | Participants |
| | Tasks |
| | Procedure |
| | Test environment/equipment |
| | Analysis |
| | Timeline |
| Results/Outcomes | Metrics (performance, issues-based, self-reported, behavioral) |
| | Audience insights |
| | Actionable improvements |

Supplementary Material

Contributors

M.A.E.: study design, data collection, data analysis and interpretation, writing, manuscript editing; M.D.: study design, data collection, data analysis and interpretation, writing, manuscript editing; J.C.O.: study design, data collection, data analysis and interpretation, manuscript editing; A.M.F.: study design, data collection, writing, manuscript editing; J.Z.: study design, data analysis and interpretation, manuscript editing; V.H.: study design, data analysis and interpretation, manuscript editing.

Funding

J.C.O.'s time and participation in this study was funded by the Mayo Clinic's Robert D. and Patricia E. Kern Center for the Science of Health Care Delivery. No other funding was secured for this study.

Competing interests

None.

REFERENCES

- 1. Lowry SZ, Quinn MT, Ramaiah M, et al. Technical evaluation, testing, and validation of the usability of electronic health records. US Department of Commerce, National Institute of Standards and Technology (NIST). NIST Interagency/Internal Report (NISTIR) – 7804. 2012. [Google Scholar]

- 2. Shumacher RM, Lowry SZ. NIST guide to the processes approach for improving the usability of electronic health records. US Department of Commerce, National Institute of Standards and Technology (NIST). NIST Interagency/Internal Report (NISTIR) – 7741. 2010. [Google Scholar]

- 3. Han YY, Carcillo JA, Venkataraman ST, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics. 2005;116(6):1506–1512. [DOI] [PubMed] [Google Scholar]

- 4. US Department of Health and Human Services (HHS) Office of the Assistant Secretary for Public Affairs, Digital Communications Division. Usability.gov. http://www.usability.gov. Accessed May 1, 2015.

- 5. US Department of Health and Human Services (HHS), Office of the National Coordinator for Health Information Technology. Health Information Technology: Standards, Implementation Specifications, and Certification Criteria for Electronic Health Record Technology.

- 6. Peute LW, Driest KF, Marcilly R, Bras Da Costa S, Beuscart-Zephir MC, Jaspers MW. A framework for reporting on human factor/usability studies of health information technologies. Stud Health Technol Inform. 2013;194:54–60. [PubMed] [Google Scholar]

- 7. Peute LW, Spithoven R, Bakker PJ, Jaspers MW. Usability studies on interactive health information systems; where do we stand? Stud Health Technol Inform. 2008;136:327–332. [PubMed] [Google Scholar]

- 8. Shamseer L, Moher D, Clarke M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: elaboration and explanation. BMJ. 2015;349:g7647. [DOI] [PubMed] [Google Scholar]

- 9. Eden J, Levit L, Berg A, Morton S, eds. Finding What Works in Health Care: Standards for Systematic Reviews. Committee on Standards for Systematic Reviews of Comparative Effectiveness Research; Institute of Medicine. Washington, DC: National Academies Press; 2011. [PubMed] [Google Scholar]

- 10. Harrington L, Harrington C. Usability Evaluation Handbook for Electronic Health Records. Chicago, IL: Healthcare Information and Management Systems Society (HIMSS); 2014. [Google Scholar]

- 11. Middleton B, Bloomrosen M, Dente MA, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013; 20(e1):e2–e8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Agha Z, Calvitti A, Ashfaq S, et al. EHR usability burden and its impact on primary care providers workflow. J Gen Int Med. 2014;29:S82–S83. [Google Scholar]

- 13. Anders S, Albert R, Miller A, et al. Evaluation of an integrated graphical display to promote acute change detection in ICU patients. Int J Med Inform. 2012;81(12):842–851. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Anderson JA, Willson P, Peterson NJ, Murphy C, Kent TA. Prototype to practice: developing and testing a clinical decision support system for secondary stroke prevention in a veterans healthcare facility. Comput Inform Nurs. 2010;28(6):353–363. [DOI] [PubMed] [Google Scholar]

- 15. Avansino J, Leu MG. Effects of CPOE on provider cognitive workload: a randomized crossover trial. Pediatrics. 2012;130(3):e547–e552. [DOI] [PubMed] [Google Scholar]

- 16. Avidan A, Weissman C. Record completeness and data concordance in an anesthesia information management system using context-sensitive mandatory data-entry fields. Int J Med Inform. 2012;81(3):173–181. [DOI] [PubMed] [Google Scholar]

- 17. Batley NJ, Osman HO, Kazzi AA, Musallam KM. Implementation of an emergency department computer system: design features that users value. J Emerg Med. 2011;41(6):693–700. [DOI] [PubMed] [Google Scholar]

- 18. Bauer NS, Carroll AE, Downs SM. Understanding the acceptability of a computer decision support system in pediatric primary care. J Am Med Inform Assoc. 2014;21(1):146–153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Beuscart-Zephir MC, Pelayo S, Bernonville S. Example of a human factors engineering approach to a medication administration work system: potential impact on patient safety. Int J Med Inform. 2010; 79(4):e43–e57. [DOI] [PubMed] [Google Scholar]

- 20. Beuscart-Zephir MC, Pelayo S, Degoulet P, Anceaux F, Guerlinger S, Meaux JJ. A usability study of CPOE's medication administration functions: impact on physician-nurse cooperation. Stud Health Technol Inform. 2004;107(Pt 2):1018–1022. [PubMed] [Google Scholar]

- 21. Borbolla D, Otero C, Lobach DF, et al. Implementation of a clinical decision support system using a service model: results of a feasibility study. Stud Health Technol Inform. 2010;160 (Pt 2):816–820. [PubMed] [Google Scholar]

- 22. Borges HL, Malucelli A, Paraiso EC, Moro CC. A physiotherapy EHR specification based on a user-centered approach in the context of public health. AMIA Annual Symposium Proceedings/AMIA Symposium. 2007;11:61–65. [PubMed] [Google Scholar]

- 23. Bossen C, Jensen LG, Udsen FW. Evaluation of a comprehensive EHR based on the DeLone and McLean model for IS success: approach, results, and success factors. Int J Med Inform. 2013;82(10):940–953. [DOI] [PubMed] [Google Scholar]

- 24. Bright TJ, Bakken S, Johnson SB. Heuristic evaluation of eNote: an electronic notes system. AMIAAnnual Symposium Proceedings/AMIA Symposium. 2006:864. [PMC free article] [PubMed] [Google Scholar]

- 25. Brown SH, Hardenbrook S, Herrick L, St Onge J, Bailey K, Elkin PL. Usability evaluation of the progress note construction set. Proceedings/AMIA Annual Symposium. 2001:76–80. [PMC free article] [PubMed] [Google Scholar]

- 26. Brown SH, Lincoln M, Hardenbrook S, et al. Research paper - derivation and evaluation of a document-naming nomenclature. J Am Med Inform Assoc. 2001;8(4):379–390. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27. Buenestado D, Elorz J, Perez-Yarza EG, et al. Evaluating acceptance and user experience of a guideline-based clinical decision support system execution platform. J Med Sys. 2013;37(2):9910. [DOI] [PubMed] [Google Scholar]

- 28. Carayon P, Cartmill R, Blosky MA, et al. ICU nurses' acceptance of electronic health records. J Am Med Inform Assoc. 2011;18(6):812–819. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Carrington JM, Effken JA. Strengths and limitations of the electronic health record for documenting clinical events. Comput Inform Nurs. 2011;29(6):360–367. [DOI] [PubMed] [Google Scholar]

- 30. Catalani C, Green E, Owiti P, et al. A clinical decision support system for integrating tuberculosis and HIV care in Kenya: a human-centered design approach. PLoS ONE. 2014;9(8);e103205. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. Chan J, Shojania KG, Easty AC, Etchells EE. Usability evaluation of order sets in a computerised provider order entry system. BMJ Qual Saf. 2011;20(11):932–940. [DOI] [PubMed] [Google Scholar]

- 32. Chase CR, Ashikaga T, Mazuzan JE., Jr Measurement of user performance and attitudes assists the initial design of a computer user display and orientation method. J Clin Monitor. 1994;10(4):251–263. [DOI] [PubMed] [Google Scholar]

- 33. Chen YY, Goh KN, Chong K. Rule based clinical decision support system for hematological disorder. Proceedings of 4th IEEE International Conference on Software Engineering and Service Science (ICSESS). 2013:43–48. [Google Scholar]

- 34. Chisolm DJ, Purnell TS, Cohen DM, McAlearney AS. Clinician perceptions of an electronic medical record during the first year of implementaton in emergency services. Pediatr Emerg Care. 2010;26(2):107–110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Cho I, Lee J, Han H, Phansalkar S, Bates DW. Evaluation of a Korean version of a tool for assessing the incorporation of human factors into a medication-related decision support system: the I-MeDeSA. Appl Clin Inform. 2014;5(2):571–588. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36. Chrimes D, Kitos NR, Kushniruk A, Mann DM. Usability testing of Avoiding Diabetes Thru Action Plan Targeting (ADAPT) decision support for integrating care-based counseling of pre-diabetes in an electronic health record. Int J Med Inform. 2014;83(9):636–647. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. Cimino JJ, Patel VL, Kushniruk AW. Studying the human-computer-terminology interface. J Am Med Inform Assoc. 2001;8(2):163–173. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38. Clarke MA, Belden JL, Kim MS. Determining differences in user performance between expert and novice primary care doctors when using an electronic health record (EHR). J Eval Clin Pract. 2014;20(6): 1153–1161. [DOI] [PubMed] [Google Scholar]

- 39. Corrao NJ, Robinson AG, Swiernik MA, Naeim A. Importance of testing for usability when selecting and implementing an electronic health or medical record system. J Oncol Pract. 2010;6(3):120–124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40. Cresswell KM, Bates DW, Williams R, et al. Evaluation of medium-term consequences of implementing commercial computerized physician order entry and clinical decision support prescribing systems in two 'early adopter' hospitals. J Am Med Inform Assoc. 2014;21(e2):e194–e202. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Devine EB, Lee CJ, Overby CL, et al. Usability evaluation of pharmacogenomics clinical decision support aids and clinical knowledge resources in a computerized provider order entry system: a mixed methods approach. Int J Med Inform. 2014;83(7):473–483. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42. Dos Santos ES, Martins HG. Usability and impact of electronic health records for primary care units in Portugal. Proceedings of 6th Iberian Conference on Information Systems and Technologies (CISTI). 2011:1–3. [Google Scholar]

- 43. Edwards PJ, Moloney KP, Jacko JA, Sainfort F. Evaluating usability of a commercial electronic health record: a case study. Int J Hum Comput Stud. 2008;66(10):718–728. [Google Scholar]

- 44. Fahey P, Harney C, Kesavan S, McMahon A, McQuaid L, Kane B. Human computer interaction issues in eliciting user requirements for an electronic patient record with multiple users. Proceedings of 24th International Symposium on Computer-Based Medical Systems (CBMS). 2011:1–6. [Google Scholar]

- 45. Farri O, Rahman A, Monsen KA, et al. Impact of a prototype visualization tool for new information in EHR clinical documents. Appl Clin Inform. 2012;3(4):404–418. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46. Fossum M, Ehnfors M, Fruhling A, Ehrenberg A. An evaluation of the usability of a computerized decision support system for nursing homes. Appl Clin Inform. 2011;2(4):420–436. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47. Fung CH, Tsai JS, Lulejian A, et al. An evaluation of the Veterans Health Administration's clinical reminders system: a national survey of generalists. J Gen Intern Med. 2008;23(4):392–398. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. Gadd CS, Ho YX, Cala CM, et al. User perspectives on the usability of a regional health information exchange. J Am Med Inform Assoc. 2011;18(5):711–716. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49. Gainer A, Pancheri K, Zhang J. Improving the human computer interface design for a physician order entry system. AMIA Annu Symp Proc. 2003;2003:847. [PMC free article] [PubMed] [Google Scholar]

- 50. Galligioni E, Berloffa F, Caffo O, et al. Development and daily use of an electronic oncological patient record for the total management of cancer patients: 7 years' experience. Ann Oncol. 2009;20(2):349–352. [DOI] [PubMed] [Google Scholar]

- 51. Georgiou A, Westbrook JI. Clinician reports of the impact of electronic ordering on an emergency department. Stud Health Technol Inform. 2009;150:678–682. [PubMed] [Google Scholar]

- 52. Ghahramani N, Lendel I, Haque R, Sawruk K. User satisfaction with computerized order entry system and its effect on workplace level of stress. J Med Syst. 2009;33(3):199–205. [DOI] [PubMed] [Google Scholar]

- 53. Grabenbauer LA, Fruhling AL, Windle JR. Towards a Cardiology/EHR interaction workflow usability evaluation method. Proceedings of 47th Hawaii International Conference on System Sciences (HICSS). 2014:2626–2635. [Google Scholar]

- 54. Graham TA, Kushniruk AW, Bullard MJ, Holroyd BR, Meurer DP, Rowe BH. How usability of a web-based clinical decision support system has the potential to contribute to adverse medical events. AMIA Annual Symposium Proceedings/AMIA Symposium. 2008;6:257–261. [PMC free article] [PubMed] [Google Scholar]

- 55. Guappone K, Ash JS, Sittig DF. Field evaluation of commercial computerized provider order entry systems in community hospitals. AMIA Annual Symposium Proceedings/AMIA Symposium. 2008;6:263–267. [PMC free article] [PubMed] [Google Scholar]

- 56. Guo J, Iribarren S, Kapsandoy S, Perri S, Staggers N. Usability evaluation of an electronic medication administration record (eMAR) application. Appl Clin Inform. 2011;2(2):202–224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57. Harrington C, Wood R, Breuer J, et al. Using a unified usability framework to dramatically improve the usability of an EMR Module. AMIA Annual Symposium Proceedings/AMIA Symposium. 2011;2011:549–558. [PMC free article] [PubMed] [Google Scholar]

- 58. Harrington L, Porch L, Acosta K, Wilkens K. Realizing electronic medical record benefits: an easy-to-do usability study. J Nurs Adm. 2011;41(7-8):331–335. [DOI] [PubMed] [Google Scholar]

- 59. Heselmans A, Aertgeerts B, Donceel P, Geens S, Van De Velde S, Ramaekers D. Family physicians' perceptions and use of electronic clinical decision support during the first year of implementation. J Med Syst. 2012;36(6):3677–3684. [DOI] [PubMed] [Google Scholar]

- 60. Hollin I, Griffin M, Kachnowski S. How will we know if it's working? A multi-faceted approach to measuring usability of a specialty-specific electronic medical record. Health Inform J. 2012;18(3):219–232. [DOI] [PubMed] [Google Scholar]

- 61. Horsky J, McColgan K, Pang JE, et al. Complementary methods of system usability evaluation: surveys and observations during software design and development cycles. J Biomed Inform. 2010;43(5):782–790. [DOI] [PubMed] [Google Scholar]

- 62. Hoyt R, Adler K, Ziesemer B, Palombo G. Evaluating the usability of a free electronic health record for training. Perspect Health Inf Manag. 2013;10:1b. [PMC free article] [PubMed] [Google Scholar]

- 63. Hypponen H, Reponen J, Laaveri T, Kaipio J. User experiences with different regional health information exchange systems in Finland. Int J Med Inform. 2014;83(1):1–18. [DOI] [PubMed] [Google Scholar]

- 64. Hyun S, Johnson SB, Stetson PD, Bakken S. Development and evaluation of nursing user interface screens using multiple methods. J Biomed Inform. 2009;42(6):1004–1012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65. Jaderlund Hagstedt L, Rudebeck CE, Petersson G. Usability of computerised physician order entry in primary care: assessing ePrescribing with a new evaluation model. Inform Prim Care. 2011;19(3):161–168. [DOI] [PubMed] [Google Scholar]

- 66. Jaspers MW, Peute LW, Lauteslager A, Bakker PJ. Pre-post evaluation of physicians' satisfaction with a redesigned electronic medical record system. Stud Health Technol Inform. 2008;136:303–308. [PubMed] [Google Scholar]

- 67. Jaspers MW, Steen T, Van Den Bos C, Geenen M. The use of cognitive methods in analyzing clinicians' task behavior. Stud Health Technol Inform. 2002;93:25–31. [PubMed] [Google Scholar]

- 68. Jaspers MW, Steen T, van den Bos C, Geenen M. The think aloud method: a guide to user interface design. Int J Med Inform. 2004;73(11-12):781–795. [DOI] [PubMed] [Google Scholar]

- 69. Junger A, Michel A, Benson M, et al. Evaluation of the suitability of a patient data management system for ICUs on a general ward. Int J Med Inform. 2001;64(1):57–66. [DOI] [PubMed] [Google Scholar]

- 70. Kallen MA, Yang D, Haas N. A technical solution to improving palliative and hospice care. Support Care Cancer. 2012;20(1):167–174. [DOI] [PubMed] [Google Scholar]

- 71. Khajouei R, de Jongh D, Jaspers MW. Usability evaluation of a computerized physician order entry for medication ordering. Stud Health Technol Inform. 2009;150:532–536. [PubMed] [Google Scholar]

- 72. Khajouei R, Hasman A, Jaspers MW. Determination of the effectiveness of two methods for usability evaluation using a CPOE medication ordering system. Int J Med Inform. 2011;80(5):341–350. [DOI] [PubMed] [Google Scholar]

- 73. Khajouei R, Peek N, Wierenga PC, Kersten MJ, Jaspers MW. Effect of predefined order sets and usability problems on efficiency of computerized medication ordering. Int J Med Inform. 2010;79(10):690–698. [DOI] [PubMed] [Google Scholar]

- 74. Khajouei R, Wierenga PC, Hasman A, Jaspers MW. Clinicians satisfaction with CPOE ease of use and effect on clinicians' workflow, efficiency and medication safety. Int J Med Inform. 2011;80(5):297–309. [DOI] [PubMed] [Google Scholar]

- 75. Kilsdonk E, Riezebos R, Kremer L, Peute L, Jaspers M. Clinical guideline representation in a CDS: a human information processing method. Stud Health Technol Inform. 2012;180:427–431. [PubMed] [Google Scholar]

- 76. Kim MS, Shapiro JS, Genes N, et al. A pilot study on usability analysis of emergency department information system by nurses. Appl Clin Inform. 2012;3(1):135–153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77. Kjeldskov J, Skov MB, Stage J. A longitudinal study of usability in health care - does time heal? Stud Health Technol Inform. 2007;130:181–191. [PubMed] [Google Scholar]

- 78. Klimov D, Shahar Y, Taieb-Maimon M. Intelligent visualization and exploration of time-oriented data of multiple patients. Artif Intell Med. 2010;49(1):11–31. [DOI] [PubMed] [Google Scholar]

- 79. Koopman RJ, Kochendorfer KM, Moore JL, et al. A diabetes dashboard and physician efficiency and accuracy in accessing data needed for high-quality diabetes care. Ann Fam Med. 2011;9(5):398–405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80. Kushniruk A, Kaipio J, Nieminen M, et al. Human factors in the large: experiences from Denmark, Finland and Canada in moving towards regional and national evaluations of health information system usability. Contribution of the IMIA Human Factors Working Group. Yearb Med Inform. 2014;9(1):67–81. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81. Kushniruk AW, Kaufman DR, Patel VL, Levesque Y, Lottin P. Assessment of a computerized patient record system: a cognitive approach to evaluating medical technology. MD Comput. 1996;13(5):406–415. [PubMed] [Google Scholar]

- 82. Lee TT, Mills ME, Bausell B, Lu MH. Two-stage evaluation of the impact of a nursing information system in Taiwan. Int J Med Inform. 2008;77(10):698–707. [DOI] [PubMed] [Google Scholar]

- 83. Li AC, Kannry JL, Kushniruk A, et al. Integrating usability testing and think-aloud protocol analysis with "near-live" clinical simulations in evaluating clinical decision support. Int J Med Inform. 2012;81(11):761–772. [DOI] [PubMed] [Google Scholar]

- 84. Linder JA, Rose AF, Palchuk MB, et al. Decision support for acute problems: the role of the standardized patient in usability testing. J Biomed Inform. 2006;39(6):648–655. [DOI] [PubMed] [Google Scholar]

- 85. Longo L, Kane B. A novel methodology for evaluating user interfaces in health care. Proceedings of 24th International Symposium on Computer-Based Medical Systems (CBMS). 2011:1–6. [Google Scholar]

- 86. Madison LG, Phillip WR. A case study of user assessment of a corrections electronic health record. Perspect Health Inf Manag. 2011;8:1b. [PMC free article] [PubMed] [Google Scholar]

- 87. Mann D, Kushniruk A, McGinn T, et al. Usability testing for the development of an electronic health record integrated clinical prediction rules in primary care. J Gen Intern Med. 2011;26:S193. [Google Scholar]

- 88. Mann DM, Kannry JL, Edonyabo D, et al. Rationale, design, and implementation protocol of an electronic health record integrated clinical prediction rule (iCPR) randomized trial in primary care. Implement Sci. 2011;6:109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89. Mann DM, Lin JJ. Increasing efficacy of primary care-based counseling for diabetes prevention: rationale and design of the ADAPT (Avoiding Diabetes Thru Action Plan Targeting) trial. Implement Sci. 2012;7:6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90. Martins SB, Shahar Y, Galperin M, et al. Evaluation of KNAVE-II: a tool for intelligent query and exploration of patient data. Stud Health Technol Inform. 2004;107(Pt 1):648–652. [PubMed] [Google Scholar]

- 91. Martins SB, Shahar Y, Goren-Bar D, et al. Evaluation of an architecture for intelligent query and exploration of time-oriented clinical data. Artif Intell Med. 2008;43(1):17–34. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92. Meredith J. Electronic patient record evaluation in community mental health. Inform Prim Care. 2009;17(4):209–213. [DOI] [PubMed] [Google Scholar]

- 93. Mupueleque M, Gaspar J, Cruz-Correia R, Costa-Pereira A. Evaluation of a maternal and child electronic health record in a developing country: preliminary results from a field pilot. Proceedings of the International Conference on Health Informatics. 2012:256–262. [Google Scholar]

- 94. Nagykaldi ZJ, Jordan M, Quitoriano J, Ciro CA, Mold JW. User-centered design and usability testing of an innovative health-related quality of life module. Appl Clin Inform. 2014;5(4):958–970. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95. Narasimhadevara A, Radhakrishnan T, Leung B, Jayakumar R. On designing a usable interactive system to support transplant nursing. J Biomed Inform. 2008;41(1):137–151. [DOI] [PubMed] [Google Scholar]

- 96. Neinstein A, Cucina R. An analysis of the usability of inpatient insulin ordering in three computerized provider order entry systems. J Diabetes Sci Technol. 2011;5(6):1427–1436. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97. Neri PM, Pollard SE, Volk LA, et al. Usability of a novel clinician interface for genetic results. J Biomed Inform. 2012;45(5):950–957. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 98. Nykanen P, Kaipio J, Kuusisto A. Evaluation of the national nursing model and four nursing documentation systems in Finland - lessons learned and directions for the future. Int J Med Inform. 2012;81(8):507–520. [DOI] [PubMed] [Google Scholar]

- 99. O'Dell DV, Tape TG, Campbell JR. Increasing physician acceptance and use of the computerized ambulatory medical record. Proceedings - the Annual Symposium on Computer Applications in Medical Care. 1991:848–852. [PMC free article] [PubMed] [Google Scholar]

- 100. Page CA, Schadler A. A nursing focus on EMR usability enhancing documentation of patient outcomes. Nurs Clin North Am. 2014;49(1):81–90. [DOI] [PubMed] [Google Scholar]

- 101. Peleg M, Shachak A, Wang D, Karnieli E. Using multi-perspective methodologies to study users' interactions with the prototype front end of a guideline-based decision support system for diabetic foot care. Int J Med Inform. 2009;78(7):482–493. [DOI] [PubMed] [Google Scholar]

- 102. Peute LW, Jaspers MW. The significance of a usability evaluation of an emerging laboratory order entry system. Int J Med Inform. 2007;76(2-3):157–168. [DOI] [PubMed] [Google Scholar]

- 103. Pose M, Czaja SJ, Augenstein J. The usability of information technology within emergency care settings. Comput Ind Eng. 1996;31(1-2):455–458. [Google Scholar]

- 104. Ries M, Golcher H, Prokosch HU, Beckmann MW, Burkle T. An EMR based cancer diary - utilisation and initial usability evaluation of a new cancer data visualization tool. Stud Health Technol Inform. 2012;180:656–660. [PubMed] [Google Scholar]

- 105. Rogers ML, Sockolow PS, Bowles KH, Hand KE, George J. Use of a human factors approach to uncover informatics needs of nurses in documentation of care. Int J Med Inform. 2013;82(11):1068–1074. [DOI] [PubMed] [Google Scholar]

- 106. Rose AF, Schnipper JL, Park ER, Poon EG, Li Q, Middleton B. Using qualitative studies to improve the usability of an EMR. J Biomed Inform. 2005;38(1):51–60. [DOI] [PubMed] [Google Scholar]

- 107. Roukema J, Los RK, Bleeker SE, van Ginneken AM, van der Lei J, Moll HA. Paper versus computer: feasibility of an electronic medical record in general pediatrics. Pediatrics. 2006;117(1):15–21. [DOI] [PubMed] [Google Scholar]

- 108. Saitwal H, Feng X, Walji M, Patel V, Zhang J. Assessing performance of an electronic health record (EHR) using cognitive task analysis. Int J Med Inform. 2010;79(7):501–506. [DOI] [PubMed] [Google Scholar]

- 109. Saleem JJ, Haggstrom DA, Militello LG, et al. Redesign of a computerized clinical reminder for colorectal cancer screening: a human-computer interaction evaluation. BMC Med Inform Decis Mak. 2011;11:74. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 110. Saleem JJ, Patterson ES, Militello L, Asch SM, Doebbeling BN, Render ML. Using human factors methods to design a new interface for an electronic medical record. AMIA Annual Symposium Proceedings/AMIA Symposium. 2007:640–644. [PMC free article] [PubMed] [Google Scholar]

- 111. Santiago O, Li Q, Gagliano N, Judge D, Hamann C, Middleton B. Improving EMR Usability: a method for both discovering and prioritizing improvements in EMR workflows based on human factors engineering. AMIA Annual Symposium Proceedings/AMIA Symposium. 2006:1086. [PMC free article] [PubMed] [Google Scholar]

- 112. Seland G, Sorby ID. Usability laboratory as the last outpost before implementation - lessons learnt from testing new patient record functionality. Stud Health Technol Inform. 2009;150:404–408. [PubMed] [Google Scholar]

- 113. Shah KG, Slough TL, Yeh PT, et al. Novel open-source electronic medical records system for palliative care in low-resource settings. BMC Palliat Care. 2013;12(1):31. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 114. Sheehan B, Kaufman D, Stetson P, Currie LM. Cognitive analysis of decision support for antibiotic prescribing at the point of ordering in a neonatal intensive care unit. AMIA Annual Symposium Proceedings/AMIA Symposium. 2009;2009:584–588. [PMC free article] [PubMed] [Google Scholar]

- 115. Sittig DF, Kuperman GJ, Fiskio J. Evaluating physician satisfaction regarding user interactions with an electronic medical record system. Proceedings/AMIA Annual Symposium. 1999:400–404. [PMC free article] [PubMed] [Google Scholar]

- 116. Sorby ID, Seland G, Nytro O. The avoidable misfortune of a computerized patient chart. Stud Health Technol Inform. 2010;160(Pt 1):131–135. [PubMed] [Google Scholar]

- 117. Staggers N, Jennings BM, Lasome CE. A usability assessment of AHLTA in ambulatory clinics at a military medical center. Mil Med. 2010;175(7):518–524. [DOI] [PubMed] [Google Scholar]

- 118. Staggers N, Kobus D, Brown C. Nurses' evaluations of a novel design for an electronic medication administration record. Comput Inform Nurs. 2007;25(2):67–75. [DOI] [PubMed] [Google Scholar]

- 119. Tan YM, Flores JV, Tay ML. Usability of clinician order entry systems in Singapore: an assessment of end-user satisfaction. Stud Health Technol Inform. 2010;160(Pt 2):1202–1205. [PubMed] [Google Scholar]

- 120. Thum F, Kim MS, Genes N, et al. Usability improvement of a clinical decision support system. LNCS. 2014;8519:125–131. [Google Scholar]

- 121. Tuttelmann F, Luetjens CM, Nieschlag E. Optimising workflow in andrology: a new electronic patient record and database. Asian J Androl. 2006;8(2):235–241. [DOI] [PubMed] [Google Scholar]

- 122. Urda D, Ribelles N, Subirats JL, Franco L, Alba E, Jerez JM. Addressing critical issues in the development of an Oncology Information System. Int J Med Inform. 2013;82(5):398–407. [DOI] [PubMed] [Google Scholar]

- 123. Usselman E, Borycki EM, Kushniruk AW. The evaluation of electronic perioperative nursing documentation using a cognitive walkthrough approach. Stud Health Technol Inform. 2015;208:331–336. [PubMed] [Google Scholar]

- 124. Vatnøy TK, Vabo G, Fossum M. A usability evaluation of an electronic health record system for nursing documentation used in the municipality healthcare services in Norway. LNCS. 2014;8527:690–699. [Google Scholar]

- 125. Viitanen J, Hypponen H, Laaveri T, Vanska J, Reponen J, Winblad I. National questionnaire study on clinical ICT systems proofs: physicians suffer from poor usability. Int J Med Inform. 2011;80(10):708–725. [DOI] [PubMed] [Google Scholar]

- 126. Washburn J, Del Fiol G, Rocha RA. Usability evaluation at the point-of-care: a method to identify user information needs in CPOE applications. AMIA Annual Symposium Proceedings/AMIA Symposium. 2006:1137. [PMC free article] [PubMed] [Google Scholar]

- 127. Wyatt TH, Li X, Indranoi C, Bell M. Developing iCare v.1.0: an academic electronic health record. Comput Inform Nurs. 2012;30(6):321–329. [DOI] [PubMed] [Google Scholar]

- 128. Yui BH, Jim WT, Chen M, Hsu JM, Liu CY, Lee TT. Evaluation of computerized physician order entry system - a satisfaction survey in Taiwan. J Med Syst. 2012;36(6):3817–3824. [DOI] [PubMed] [Google Scholar]

- 129. Zacharoff K, Butler S, Jamison R, Charity S, Lawler K. Impact of an electronic pain and opioid risk assessment on documentation and clinical workflow. J Pain. 2013;1:S9. [Google Scholar]

- 130. Zhang Z, Walji MF, Patel VL, Gimbel RW, Zhang J. Functional analysis of interfaces in U.S. military electronic health record system using UFuRT framework. AMIA Annual Symposium Proceedings/AMIA Symposium. 2009;2009:730–734. [PMC free article] [PubMed] [Google Scholar]

- 131. Ziemkowski P, Gosbee J. Comparative usability testing in the selection of electronic medical records software. AMIA Annual Symposium Proceedings/AMIA Symposium. 1999:1200. [Google Scholar]

- 132. Babineau J. Product review: covidence (systematic review software). J Canad Health Libraries Assoc. 2014;35(2):68–71. [Google Scholar]

- 133. Covidence. www.covidence.org. Accessed April 1, 2015.

- 134. Brooke J. SUS: a retrospective. J Usability Stud. 2013;8(2):29–40. [Google Scholar]

- 135. Chin JP, Diehl VA, Norman KL. Development of an instrument measuring user satisfaction of the human-computer interface. SIGCHI '88. New York: Association for Computing Machinery (ACM); 1988:213–218. [Google Scholar]

- 136. Nielsen J, Mack R. Usability Inspection Methods. New York: John Wiley and Sons; 1994. [Google Scholar]

- 137. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform. 2004;37(1):56–76. [DOI] [PubMed] [Google Scholar]

- 138. Yen PY, Bakken S. Review of health information technology usability study methodologies. J Am Med Inform Assoc. 2012;19(3):413–422. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 139. Ratwani RM, Benda NC, Hettinger AZ, Fairbanks RJ. Electronic health record vendor adherence to usability certification requirements and testing standards. JAMA. 2015;314(10):1070–1071. [DOI] [PubMed] [Google Scholar]

- 140. Ratwani RM, Fairbanks RJ, Hettinger AZ, Benda NC. Electronic health record usability: analysis of the user-centered design processes of eleven electronic health record vendors. J Am Med Inform Assoc. 2015;22(6):1179–1182. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 141. Zhang J, Walji MF. TURF: toward a unified framework of EHR usability. J Biomed Inform. 2011;44(6):1056–1067. [DOI] [PubMed] [Google Scholar]

- 142. Bias RG, Mayhew DJ. Cost-Justifying Usability, Second Edition: An Update for the Internet Age. 2nd edn. San Francisco, CA: Morgan Kaufmann; 2005. [Google Scholar]

- 143. Romano Bergstrom JC. Moderating Usability Tests. Washington DC: UXPA DC; 2013. [Google Scholar]

- 144. Tahir D. Doctors barred from discussing safety glitches in U.S.-funded software. 2015. http://www.politico.com/story/2015/09/doctors-barred-from-discussing-safety-glitches-in-us-funded-software-213553. Accessed September 24, 2015. [Google Scholar]

- 145. Perna G. EHR issues become part of ebola story in Texas (update: THR clarifies position on EHR). 2014. http://www.healthcare-informatics.com/news-item/ehr-issues-become-part-ebola-story-texas. Accessed September 24, 2015. [Google Scholar]

- 146. Lei J, Xu L, Meng Q, Zhang J, Gong Y. The current status of usability studies of information technologies in China: a systematic study. Biomed Res Int. 2014;2014:568303. [DOI] [PMC free article] [PubMed] [Google Scholar]
