Abstract

Background

Many patient safety organizations recommend the use of the action hierarchy (AH) to identify strong corrective actions following an investigative analysis of patient harm events. Strong corrective actions, such as forcing functions and equipment standardization, improve patient safety by either preventing the occurrence of active failures (i.e. errors or violations) or reducing their consequences if they do occur.

Problem

We propose that the emphasis on implementing strong fixes that incrementally improve safety one event at a time is necessary, yet insufficient, for improving safety. This singular focus has detracted from the pursuit of major changes that transform systems safety by targeting the latent conditions which consistently underlie active failures. To date, however, there are no standardized models or methods that enable patient safety professionals to assess, develop and implement systems changes to improve patient safety.

Approach

We propose a multifaceted definition of ‘systems change’. Based on this definition, various types and levels of systems change are described. A rubric for determining the extent to which a specific corrective action reflects a ‘systems change’ is provided. This rubric incorporates four fundamental dimensions of systems change: scope, breadth, depth and degree. Scores along these dimensions can then be used to classify corrective actions within our proposed systems change hierarchy (SCH).

Conclusion

Additional research is needed to validate the proposed rubric and SCH. However, when used in conjunction with the AH, the SCH perspective will serve to foster a more holistic and transformative approach to patient safety.

Keywords: patient safety, systems change, root cause analysis, active failures, latent conditions

[A]ctive failures are like mosquitoes. They can be swatted one by one, but they still keep coming. The best remedies are to create more effective defenses and to drain the swamps in which they breed. The swamps, in this case, are the ever-present latent conditions.

JAMES REASON [1]

Introduction

There are a variety of proactive and reactive methods for improving patient safety [2–4]. Regardless of the method, the general goal is to determine how patient harm events might occur, or why they did occur, in order to prevent their recurrence [5]. The recommended practices for performing these activities involve identifying active failures that can or did directly cause harm (e.g. errors or violations), as well as the latent system conditions that promote them (e.g. a breakdown in the interactions among teams, task, technology, environment and organizational variables) [6]. The best practice for generating corrective actions to improve safety focuses on identifying ‘strong’ recommendations [5]. ‘Strong recommendations are those that rely less on people’s actions and memories and are more likely to be effective and sustainable’ [7].

The ‘action hierarchy’ (AH) is a commonly used tool for identifying strong recommendations (Table 1) [8]. The AH supports these activities by dividing safety recommendations into stronger, intermediate and weaker action categories. Use of the AH can facilitate the development of corrective actions that are stronger than the training and policy changes that commonly emerge from patient safety efforts, including root cause analysis (RCA) of actual or potential patient harm events [5].

Table 1.

A corrective action hierarchy (adapted from the VA National Center for Patient Safety)

| Action category | Examples |
|---|---|
| Stronger actions: These actions will very likely eliminate the problem or hazard, and they rely only minimally on humans remembering to perform the task correctly or intentionally complying with the change. | Architectural/physical plant changes; new devices with usability testing; engineering control (forcing functions); simplify process; standardize on equipment or process |
| Intermediate actions: These actions indirectly reduce the likelihood of the problem or hazard recurring; however, they tend to rely, at least in part, on humans remembering to perform the task correctly and/or intentionally complying with the change. | Redundancy; increase in staffing/decrease in workload; software enhancements, modifications; eliminate/reduce distractions; education using simulation-based training, with periodic refresher sessions and observations; checklist/cognitive aids; eliminate look- and sound-alikes; standardized communication tools; enhanced documentation, communication |
| Weaker actions: These actions do not directly eliminate the problem or hazard and rely heavily on human memory to perform a task correctly and/or intentional compliance with the change. | Double checks; warnings; new procedure/memorandum/policy; training; additional study/analysis |

The AH, however, is not without its limitations [9]. By its very nature, the tool prioritizes corrective actions that either directly prevent active failures or mitigate their consequences. Such corrective actions are not a defense against ‘...the insidious buildup of latent failures within the organizational and managerial spheres…[that] represent the major residual hazard’ and foster future active failures [10]. Consequently, the AH does not foster corrective actions that can ultimately prevent patient harm events from occurring throughout a system.

It is our premise that the narrow pursuit of strong recommendations to prevent active failures has detracted from the identification of broader systems changes following the analysis of actual or potential patient harm events (i.e. near misses). In fact, many systems changes would likely be considered ‘weaker’ actions by the AH’s standards and discarded in favor of stronger local fixes. This conundrum is grounded in a misunderstanding of systems safety principles that distinguish between the prevention of single-point failures and improvements in overall systems safety [11]. This misunderstanding, in our opinion, has contributed to the lack of progress in the broader patient safety movement [12].

In this paper, we build on our prior work [13–15] and that of others who have identified the need to address broader systems issues and apply both Safety-I and Safety-II approaches when addressing organizational safety issues [16–22]. We begin by further explaining the limitations of the AH. We then discuss and define the concept of ‘systems change’. Following this discussion, we provide recommendations for designing a systems change hierarchy (SCH), along with a prototype tool to support the identification of broader system-level changes to improve patient safety. Next, we describe how the AH and SCH can be used in concert to develop intervention strategies that enhance the overall safety integrity of a system by targeting both active and latent failures. We conclude by discussing potential barriers to implementing major systems changes and strategies for a more holistic approach to patient safety.

Limitations of the action hierarchy

Consider the following hypothetical case, based on a Patient Safety Network report [23]. A respiratory therapist (RT), working in a crowded space while under high stress and time pressure, inadvertently hooks up a patient’s oxygen mask to an air flowmeter rather than the appropriate oxygen flowmeter. The patient’s hypotension and shortness of breath subsequently worsen. A chest X-ray indicates acute respiratory distress syndrome, and the patient is intubated for type I (hypoxemic) respiratory failure. The physician again orders the patient to be placed on high-flow oxygen; however, the same tubing connected to the air flowmeter is used. Shortly thereafter, the RT returns to check on the patient and notices she had mistakenly connected the oxygen mask to the air flowmeter. She connects the tube to the appropriate oxygen flowmeter and reports the incident to her supervisor.

Deeper analysis of this event (e.g. RCA) might reveal that the hospital gas supply delivers gas under pressure to outlets that are typically on wall panels at the head of the bed. The working pressure for outlets is too high to deliver gas directly to masks and bags, so a flowmeter is connected to the outlet to adjust the flow rate. These flowmeters look nearly identical and are used to provide gas to the same ventilatory devices (e.g. masks). Furthermore, the same tubing is used interchangeably for both the air and oxygen flowmeter nozzles.

We can use the AH to evaluate recommendations for preventing this event from reoccurring. One weaker action would be to warn RTs that many connectors and tubes work and look alike, so there is a risk of hooking up devices to the wrong flowmeter; consequently, they should be mindful of these risks. Another weaker action would be to place colored labels on each flowmeter to decrease the chance of confusion. In contrast, a stronger action would be to redesign the flowmeter connectors and device tubing to make it physically impossible to attach a device to the wrong flowmeter. The latter is a stronger action because it incorporates forcing functions and does not rely on memory or willingness to comply.

Redesigning the connectors and tubing is, indeed, a good idea. Given the problem exists elsewhere (e.g. the emergency department (ED) and other intensive care units (ICUs)) [23], we would advocate implementing the redesign across the enterprise. However, implementing a corrective action system-wide does not by itself make it a ‘systems fix’. Although this ‘strong’ intervention will prevent this particular misconnection, it will likely have only a nominal impact on the organization’s overall patient harm rate, because it does not address the underlying latent conditions that promoted the active failure (i.e. hooking the tube to the wrong flowmeter).

Our assertion is based on a core tenet of systems safety: corrective actions aimed at preventing active failures target only the ‘symptoms’ of underlying latent conditions [11]. We are not implying that strong interventions that target active failures are unnecessary. When a workplace hazard is identified, we are obligated to address it. Similarly, if a patient is bleeding, we must first stop the bleeding. We then, however, must determine what is causing the bleeding in order to effectively address the underlying problem. In our example of the RT connecting the mask to the wrong flowmeter, further investigation would confirm that she was working in a crowded space while under high stress and time pressure. Additional analysis might also reveal that the unit was understaffed, the RT was busy mentoring a new hire, and the event occurred 10 h into her 12-h shift at 4:00 AM. Surely, these latent factors contributed to the experienced RT’s erroneous action, given she knows the difference between ambient air and pure oxygen and has previously connected tubes to these flowmeters hundreds of times without incident. Yet, the ‘stronger’ corrective action of redesigning the connectors and device tubing does nothing to address the latent conditions that actually fostered the active failure.

At this point, one might ask, ‘What does it matter? The equipment redesign prevents someone from hooking up a device to the wrong flowmeter, regardless of the latent conditions’. This is true; that is why we need to redesign the equipment. However, because we have not addressed the latent conditions, other seemingly arbitrary active failures (e.g. errors) will emerge, such as not fully turning on the gas, not noticing that the tubing is loose or that the patient has moved the mask, misadministering an aerosol-based medication, setting up resuscitation equipment improperly, misreading lung capacity measurements, mismanaging a nasal cannula, missing a patient’s respiratory treatment, responding late to a code, conducting an inadequate handoff and so on. Indeed, a thorough analysis would likely reveal that many of these active failures have occurred before but were immediately detected and corrected without incident. Such occurrences went unreported because the outcomes were inconsequential for the patient and the RTs were reluctant to self-report near misses. The current case only came to light because the outcome was too consequential and public to be ignored.

The ‘stronger’ fix of redesigning airflow connectors and tubes targets only one of the many active failures caused by the same underlying latent conditions. Given latent conditions can manifest in other ways, ‘efforts to prevent the repetition of specific active [failures] will only have a limited impact on the safety of the system as a whole. At worst, they [are] merely better ways of securing a particular stable door once its occupant has bolted’ [10]. We could wait for each active failure to emerge and then implement a fix (i.e. swatting each mosquito after it bites us). Or, we could implement major systems changes that address their common underlying latent conditions (i.e. ‘drain the swamp in which the mosquitoes breed’).

What is a systems change?

Much has been written about the need to take a ‘systems approach’ to improve patient safety [6]. A ‘system’ is defined as a collection of different elements (e.g. human, technology, task, environment and organizational components) that operate in unison to produce results or achieve a goal not otherwise obtainable by each element alone [24]. A systems approach to safety, therefore, focuses on the functional interactions among these elements and how breakdowns in these interactions cause accidents [6]. Such an approach does not blame humans for their errors; rather, it focuses on understanding how interactions among system elements affect one’s ability to perform safely [5, 6]. The essence of the systems approach is eloquently captured by the adage, ‘We can’t change the human condition, but we can change the conditions under which humans work’ [1].

The question remains, however: what constitutes a systems change? Despite all the discussion about using a systems approach for safety, little has been written about the topic within the patient safety literature. There is no widely accepted definition of ‘systems change’, nor are there established criteria for determining the extent to which one’s patient safety improvement initiatives reflect a systems change. Consequently, the de facto litmus test for a systems change rests on argumentum ex silentio: corrective actions that are ‘silent’ on blame (i.e. they do not hold individuals culpable for their mistakes) are by default ‘systems changes’. This criterion is unsatisfying because it does not adequately reflect the depth and substantive qualities of a ‘true’ systems change (notwithstanding how poorly such qualities have previously been defined). A definitive description and definition of ‘systems change’ are therefore needed.

Based on research in other disciplines, we define systems change as ‘an intentional process designed to alter the performance of a system by shifting and realigning the form and function of a targeted element’s interactions with other elements in the system’ [25, 26]. We propose that changes to system elements can be conceptualized as macro-level changes (e.g. organizational or healthcare system level), meso-level changes (e.g. department or unit level) or micro-level changes (e.g. group or individual level). Changes also occur at the meta-level (e.g. political, regulatory and societal level); however, here we focus on the macro-level and below, since the sphere of influence of most safety programs currently lies within these boundaries. Finally, we propose that changes at different system levels address different types of failures, with macro- and meso-level changes generally targeting latent system conditions and micro-level changes primarily targeting active failures.

Systems change hierarchy (SCH)

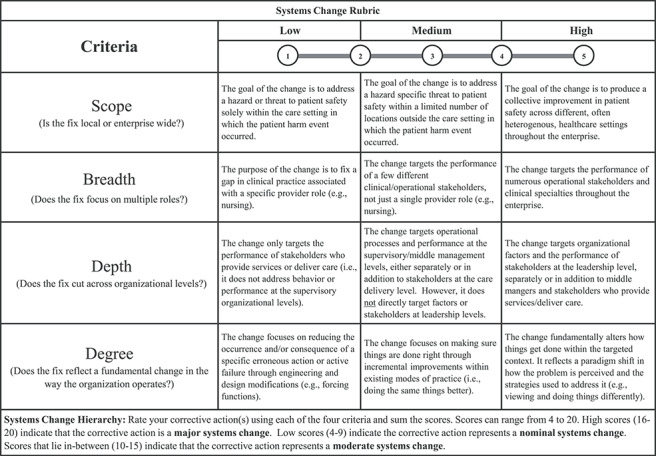

We realize our definitions and assumptions may be contested; however, they provide an initial set of criteria for characterizing systems changes. As illustrated in Table 2, these criteria can be used to develop a rubric for assessing the degree to which any corrective action, whether generated via RCA or another safety improvement process (e.g. failure mode and effects analysis (FMEA)), reflects a ‘systems change’. These criteria focus on four aspects of a corrective action: (a) ‘scope’, ‘Is the fix local or enterprise-wide?’; (b) ‘breadth’, ‘Does the change target more than one clinical role?’; (c) ‘depth’, ‘Does the fix cut across multiple levels of the organization?’; and (d) ‘degree’, ‘Does the fix reflect a fundamental shift in the way the organization operates?’

Table 2.

Systems change rubric and hierarchy


Systems change hierarchy: Rate your corrective action(s) using each of the four criteria and sum the scores. Scores can range from 4 to 20. High scores (16–20) indicate that the corrective action is a major systems change. Low scores (4–9) indicate the corrective action represents a nominal systems change. Scores that lie in between (10–15) indicate that the corrective action represents a moderate systems change.

The systems change rubric (SCR) can be used to rate corrective actions on each of these criteria using a five-point scale (Table 2). Total scores can then be used to determine the action’s placement within the SCH. A corrective action with a high overall score across the four dimensions (i.e. 16–20) would be considered a ‘major systems change’ that seeks to widely transform latent conditions across all system levels, including the macro-level [25]. A corrective action that receives a low overall score (i.e. 4–9) would be considered a ‘nominal systems change’ that likely addresses active failures at the micro-level. Corrective actions whose overall scores fall in between (i.e. 10–15) would be considered ‘moderate systems changes’ that likely target latent failures across limited meso-level settings, using strategies that focus on ‘doing the same things better’ [25].
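The scoring rule is simple arithmetic, and a minimal Python sketch can make the band boundaries explicit. It assumes each of the four dimensions is rated as an integer from 1 to 5 (consistent with the stated total range of 4 to 20); the class, function and field names are hypothetical illustrations, not part of a published tool.

```python
from dataclasses import dataclass

# Hypothetical encoding of the systems change rubric (SCR); each dimension is
# assumed to be rated on a 1-5 integer scale.
DIMENSIONS = ("scope", "breadth", "depth", "degree")


@dataclass(frozen=True)
class RubricScore:
    scope: int
    breadth: int
    depth: int
    degree: int

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed five-point scale.
        for name in DIMENSIONS:
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def total(self) -> int:
        # Summing four 1-5 ratings yields a total between 4 and 20.
        return sum(getattr(self, name) for name in DIMENSIONS)


def classify(total: int) -> str:
    """Map a total rubric score to its SCH category (4-9, 10-15, 16-20)."""
    if not 4 <= total <= 20:
        raise ValueError(f"total must be between 4 and 20, got {total}")
    if total >= 16:
        return "major systems change"
    if total >= 10:
        return "moderate systems change"
    return "nominal systems change"


# Usage: the ratings given to the inclusive leadership program in Table 3.
score = RubricScore(scope=5, breadth=5, depth=4, degree=4)
print(score.total, classify(score.total))  # 18 major systems change
```

Keeping the band thresholds in a single function means that, if future validation work shifts the cut-points, only `classify` would need to change.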

To illustrate, let us consider three corrective actions for the previous incident involving the RT. As suggested earlier, one action could be to redesign connectors and device tubing throughout the system to make it physically impossible to attach a device to the wrong flowmeter.

Another idea might be to improve RT supervisors’ staffing and scheduling processes [27] in order to better manage RT workload and competing priorities, including mentoring new RTs (i.e. ‘doing the same things better’). A third idea might be to implement an inclusive leadership program [28] that fosters a sense of psychological safety among staff across the system, so they are more willing to self-report errors and other hazards before they cause harm. The SCH scores associated with these actions would classify the equipment redesign as a nominal systems change, the revised staffing and scheduling processes as a moderate systems change and the inclusive leadership program to improve psychological safety as a major systems change (Table 3).

Table 3.

Example scoring of three corrective actions using the SCH

| Corrective action | Scope | Breadth | Depth | Degree | Sum | Comments |
|---|---|---|---|---|---|---|
| Redesign connectors and device tubing throughout the healthcare system to make it physically impossible for RTs or any other personnel to attach a device to the wrong flowmeter. | 2 | 2 | 1 | 1 | 6 | The corrective action will be applied in a few other locations outside the context of the harm event (e.g. ED); it will reach a small number of specialties (e.g. RTs, nurses, pulmonologists). The fix is lateral in terms of organizational structure; it addresses the active failure through engineering and design modifications (e.g. forcing functions); it does not change how work is performed or prevent future harm due to latent conditions. |
| Revise supervisors’ processes associated with staffing and scheduling to better manage RT workload and competing priorities, such as mentoring new RTs, responding to codes, handing off patients, etc. | 3 | 1 | 3 | 3 | 10 | The corrective action will affect RTs’ work throughout the system, not just the ICU or ED; it will reach only one specialty (i.e. RTs). The fix addresses processes at the supervisory level to address latent conditions (i.e. scheduling and workload); it builds on the existing process (i.e. doing the same things better). |
| Implement an inclusive leadership program that fosters a sense of psychological safety among staff throughout the healthcare system, so they are more willing to self-report errors or other hazards before they cause harm. | 5 | 5 | 4 | 4 | 18 | The action seeks to produce a collective improvement across numerous healthcare settings throughout the enterprise; it targets most specialties, but not necessarily general staff; the goal is to transform beliefs and behavior across multiple organizational levels. This action seeks to prevent future harm by addressing latent conditions. |

Systems change hierarchy: Rate your corrective action using each of the four criteria and then sum the scores. Scores can range from 4 to 20. High scores (16–20) indicate that the corrective action is a major systems change. Low scores (4–9) indicate the corrective action represents a nominal systems change. Scores that lie in between (10–15) indicate that the corrective action represents a moderate systems change.

Conversely, using the AH would produce a rank reversal, with the nominal systems change (i.e. equipment redesign) ranked strongest. The major systems change (i.e. inclusive leadership program) would be ranked weakest because it only remotely addresses the active failure of hooking up a tube to the wrong flowmeter. The moderate systems change (i.e. improving supervisors’ staffing and scheduling processes) would receive an intermediate ranking because it affects, albeit indirectly, the safety of RT work activities, including hooking up respiratory devices.
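The rank reversal can be made concrete with a short sketch. It assumes an ordinal encoding of AH strength (weaker = 1, intermediate = 2, stronger = 3) chosen here only for illustration, assigns each action the AH category described in the paragraph above, and takes the SCH totals from Table 3; the helper names are hypothetical.

```python
# Hypothetical ordinal encoding of action hierarchy (AH) strength, chosen only
# for illustration.
AH_STRENGTH = {"weaker": 1, "intermediate": 2, "stronger": 3}

# The three corrective actions from the RT example: AH category as discussed in
# the text, and SCH total as scored in Table 3.
actions = {
    "equipment redesign": {"ah": "stronger", "sch_total": 6},
    "staffing and scheduling": {"ah": "intermediate", "sch_total": 10},
    "inclusive leadership": {"ah": "weaker", "sch_total": 18},
}


def rank_by_ah(items: dict) -> list[str]:
    """Order actions from strongest to weakest AH category."""
    return sorted(items, key=lambda name: AH_STRENGTH[items[name]["ah"]], reverse=True)


def rank_by_sch(items: dict) -> list[str]:
    """Order actions from highest to lowest SCH total."""
    return sorted(items, key=lambda name: items[name]["sch_total"], reverse=True)


ah_order = rank_by_ah(actions)
sch_order = rank_by_sch(actions)
print("AH: ", ah_order)
print("SCH:", sch_order)
# The two orderings are exact reverses of each other.
print(ah_order == list(reversed(sch_order)))  # True
```

The point of the comparison is not that either ranking is wrong, but that the two tools measure different things: the AH ranks proximity to the active failure, while the SCH ranks reach into latent conditions.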

Other versions of the AH include ‘tangible engagement of leadership’ as a strong action [8], even though it has little to do with ‘directly’ reducing reliance on human memory or eliminating performance deviations. This inclusion highlights the challenge of reconciling the differences between strong corrective actions that target active failures and systems changes that target latent conditions. Most patient safety professionals realize the importance of the latter, so they are reluctant to label leadership engagement as an intermediate or weak action. Nevertheless, if one adheres to the AH criterion, it is. This predicament further argues for a holistic approach to patient safety that incorporates both the AH and SCH perspectives.

Toward a holistic approach to safety

A holistic approach to patient safety includes the implementation of several types of corrective actions: strong fixes that address active failures at the micro-level, intermediate-strength actions that address latent failures by ‘doing things better’ at the meso-level and major systems changes at the macro-level that fundamentally alter latent conditions throughout the enterprise. Unfortunately, most recommendations following an investigation include neither stronger fixes (i.e. strong AH actions) nor major systems changes (i.e. SCH actions). Instead, as noted earlier, they typically include only weaker actions at the micro-level (e.g. warnings) or intermediate-strength actions at the meso-level (e.g. training) [5, 7].
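One way to operationalize this three-part description is to check a proposed action plan for gaps. The sketch below assumes each corrective action has already been tagged with its system level and the kind of action it represents; the tags, the `CorrectiveAction` class and the `missing_components` helper are hypothetical, and the three required components are taken directly from the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CorrectiveAction:
    description: str
    level: str  # hypothetical tag: "micro", "meso" or "macro"
    kind: str   # hypothetical label: "strong fix", "moderate change" or "major change"


# The three components of a holistic plan described in the text, keyed by
# (system level, kind of action). These labels are illustrative assumptions.
REQUIRED = {
    ("micro", "strong fix"): "strong fix targeting active failures (micro-level)",
    ("meso", "moderate change"): "'doing things better' change (meso-level)",
    ("macro", "major change"): "major systems change to latent conditions (macro-level)",
}


def missing_components(plan: list[CorrectiveAction]) -> list[str]:
    """Return descriptions of holistic-plan components that no action covers."""
    present = {(action.level, action.kind) for action in plan}
    return [label for key, label in REQUIRED.items() if key not in present]


# Usage: a plan for the RT example containing only the equipment redesign and
# the revised staffing and scheduling processes.
plan = [
    CorrectiveAction("Redesign flowmeter connectors and tubing", "micro", "strong fix"),
    CorrectiveAction("Revise RT staffing and scheduling processes", "meso", "moderate change"),
]
for gap in missing_components(plan):
    print("Missing:", gap)
```

Here the check flags only the absent macro-level component, which mirrors the gap the text describes in typical post-investigation recommendations.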

Lesser-strength AH actions are often chosen because strong actions are not always feasible for preventing an active failure, such as a delayed response to a code [29]. Intermediate and weaker fixes are also chosen over stronger fixes because they often require less time and resources to adopt. Moderate-level systems changes are often chosen over major systems changes for similar reasons.

However, there are unique reasons why major systems changes are neglected. First, the concept of systems change has been poorly defined until now, leading to little discussion about the need for both corrective actions and system-level changes. When distinctions are made, they are typically framed in favor of strong fixes or confuse moderate systems changes (i.e. ‘doing the same things better’) with major systems changes that fundamentally transform an organization’s approach to ameliorating latent conditions. Additionally, moderate system-level changes have distinct and measurable goals that can be accomplished by a small set of stakeholders in a predictable time period. Conversely, major systems changes involve multiple phases that require the alignment and longer-term engagement of a diverse set of stakeholders [26]. Outcomes of major systems changes are also more difficult to measure (e.g. changes in psychological safety), and their impact on patient harm rates can take longer to materialize [25, 26].

Another reason why major systems changes are not commonly adopted following a patient harm event is because leadership believes the resultant improvement in safety does not justify the required cost and/or operational disruptions, unless the incident is egregious or a high-profile event. This is true for most industries. In aviation, for example, fatal helicopter accidents in the USA occurred for years with few attempts to change small helicopter business operations. Not until there was a high-profile accident involving the death of a professional athlete [30] did the push for major systems changes occur, including at the regulatory level (i.e. meta-level).

As in most industries, the ability to trend findings across events is crucial for justifying investments in major systems changes within healthcare. For example, aggregate data might show that a large percentage of safety events over the past 18 months involved staff who were aware of specific workarounds (i.e. active failures) well before they caused harm. However, in each case, the organizational culture failed to foster a sense of psychological safety among staff (a latent condition), so threats to patient safety went unreported. With aggregate data, a combination of tools (e.g. risk matrices) could be used to justify to leadership the need to invest in major systems changes following the investigation of an individual harm event. In doing so, the data would show how the same underlying latent conditions identified in the current event have been associated with patient harm events in the past. As a result, leadership would better understand that unless major systems changes are initiated to address latent conditions, patient harm will continue unabated.
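The trending step described above amounts to counting how often each latent condition recurs across investigated events within a time window. A minimal sketch follows; the event records, dates and condition labels are entirely hypothetical (no real data), and the `recurring_conditions` helper and its `min_share` threshold are illustrative assumptions rather than an established method.

```python
from collections import Counter
from datetime import date

# Entirely hypothetical event records: each lists the latent conditions an
# investigation identified. Labels are illustrative, not a standard taxonomy.
events = [
    {"date": date(2019, 3, 2), "latent": {"low psychological safety", "understaffing"}},
    {"date": date(2019, 8, 15), "latent": {"low psychological safety"}},
    {"date": date(2020, 1, 9), "latent": {"understaffing", "fatigue"}},
    {"date": date(2020, 6, 21), "latent": {"low psychological safety", "fatigue"}},
]


def recurring_conditions(records, start, end, min_share=0.5):
    """Return latent conditions present in at least `min_share` of events in [start, end]."""
    window = [r for r in records if start <= r["date"] <= end]
    if not window:
        return {}
    # Count each condition once per event in which it was identified.
    counts = Counter(cond for r in window for cond in r["latent"])
    return {
        cond: n / len(window)
        for cond, n in counts.most_common()
        if n / len(window) >= min_share
    }


# An 18-month look-back window (hypothetical dates).
print(recurring_conditions(events, date(2019, 1, 1), date(2020, 6, 30)))
```

In this toy data, low psychological safety appears in three of four events, which is the kind of cross-event pattern that a single-event investigation would not reveal on its own.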

Conclusion

A considerable emphasis has been placed on corrective actions that are stronger than the training and policy changes that commonly emerge from investigations of patient harm events [5, 9]. We proposed that this focus on strong fixes that target active failures has detracted from healthcare systems’ pursuit of major systems changes that address the latent conditions that consistently underlie patient harm [11, 31]. To remedy this, we provided suggestions for adopting a holistic approach to patient safety, including the implementation of major systems changes. These suggestions include definitions of various levels and types of systems change, as well as a rubric for evaluating proposed systems changes. We acknowledge that much research is needed to refine the proposed rubric and validate the scoring categories within the SCH. Research is also needed to determine whether a systems approach to patient safety might generalize to other areas of safety, such as the protection and well-being of those involved in providing care. Our hope is that this deeper discussion about the concept of ‘major systems change’, including how it is defined, what it looks like and its importance relative to ‘strong fixes’, will help motivate this area of research.

Funding

This work was supported by the Clinical and Translational Science Award (CTSA) program, through the National Institutes of Health (NIH) National Center for Advancing Translational Sciences (NCATS) [grant UL1TR002373], as well as the UW School of Medicine and Public Health’s Wisconsin Partnership Program (WPP). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH or WPP.

References

- 1. Reason J. Human error: models and management. BMJ 2000;320:768–70. doi: 10.1136/bmj.320.7237.768.

- 2. Dhillon BS. Methods for performing human reliability and error analysis in health care. Int J Health Care Qual Assur 2003;16:306–17.

- 3. Canham A, Jun GT, Waterson P et al. Integrating systemic accident analysis into patient safety incident investigation practices. Appl Ergon 2018;72:1–9.

- 4. Hughes RG. Tools and strategies for quality improvement and patient safety. In: Hughes RG (ed.). Patient Safety and Quality: An Evidence-Based Handbook for Nurses. Agency for Healthcare Research and Quality (US), 2008.

- 5. National Patient Safety Foundation. RCA2: Improving Root Cause Analyses and Actions to Prevent Harm. Boston: NPSF, 2016.

- 6. Carayon P. Handbook of Human Factors and Ergonomics in Health Care and Patient Safety. Boca Raton, FL: CRC Press, 2006.

- 7. Hibbert PD, Thomas MJ, Deakin A et al. Are root cause analyses recommendations effective and sustainable? An observational study. Int J Qual Health Care 2018;30:124–31.

- 8. U.S. Department of Veterans Affairs National Center for Patient Safety. Root Cause Analysis Tools (VA Action Hierarchy). 2015. https://www.patientsafety.va.gov/docs/joe/rca_tools_2_15.pdf (26 February 2020, date last accessed).

- 9. Liberati EG, Peerally MF, Dixon-Woods M. Learning from high risk industries may not be straightforward: a qualitative study of the hierarchy of risk controls approach to healthcare. Int J Qual Health Care 2018;30:39–43. doi: 10.1093/intqhc/mzx163.

- 10. Reason JT. Human Error. Cambridge, England: Cambridge University Press, 1990.

- 11. Reason JT. Organizational Accidents Revisited. Boca Raton, FL: CRC Press, 2016.

- 12. Wears R, Sutcliffe K. Still Not Safe: Patient Safety and the Middle-Managing of American Medicine. New York, NY: Oxford University Press, 2020.

- 13. Wiegmann DA, Shappell SA. Human error analysis of commercial aviation accidents: application of the human factors analysis and classification system (HFACS). Aviat Space Environ Med 2001;72:1006–16.

- 14. Wiegmann DA, Shappell SA. A Human Error Approach to Aviation Accident Analysis: The Human Factors Analysis and Classification System. Vermont: Ashgate Publishing, 2003.

- 15. Wiegmann DA, Wood LJ, Cohen T et al. Understanding the “Swiss cheese model” and its application to patient safety. J Patient Saf 2020 (in press).

- 16. Waterson P. A critical review of the systems approach within patient safety research. Ergonomics 2009;52:1185–95.

- 17. Waring J. Getting to the ‘roots’ of patient safety. Int J Qual Health Care 2007;19:257–8. doi: 10.1093/intqhc/mzm035.

- 18. Braithwaite J, Clay-Williams R, Taylor N et al. Deepening our understanding of quality in Australia (DUQuA): an overview of a nation-wide, multi-level analysis of relationships between quality management systems and patient factors in 32 hospitals. Int J Qual Health Care 2020;32:8–21. doi: 10.1093/intqhc/mzz103.

- 19. Dalmas M, Azzopardi JG. Learning from experience in a National Healthcare System: organizational dynamics that enable or inhibit change processes. Int J Qual Health Care 2019;31:426–32.

- 20. Wiig S, Braithwaite J, Clay-Williams R. It’s time to step it up. Why safety investigations in healthcare should look more to safety science. Int J Qual Health Care 2020;00:1–4. doi: 10.1093/intqhc/mzaa013.

- 21. Waterson P. Causation, levels of analysis and explanation in systems ergonomics: a closer look at the UK NHS Morecambe Bay investigation. Appl Ergon 2020;84:1–14. doi: 10.1016/j.apergo.2019.103011.

- 22. Shortell SM. Increasing value: a research agenda for addressing the managerial and organizational challenges facing health care delivery in the United States. Med Care Res Rev 2004;61:12S–30S.

- 23. Gaba DM. Thin Air [Case Study]. 2004. https://psnet.ahrq.gov/web-mm/thin-air (5 March 2020, date last accessed).

- 24. Rivera AJ, Karsh BT. Human factors and systems engineering approach to patient safety for radiotherapy. Int J Radiat Oncol Biol Phys 2008;71:S174–7. doi: 10.1016/j.ijrobp.2007.06.088.

- 25. Foster-Fishman PG, Nowell B, Yang H. Putting the system back into systems change: a framework for understanding and changing organizational and community systems. Am J Community Psychol 2007;39:197–215.

- 26. Turner SJW, Goulding L, Denis JL et al. Major system change: a management and organisational research perspective. In: Raine R, Fitzpatrick R, Barrat H et al. (eds). Challenges, Solutions and Future Directions in the Evaluation of Service Innovations in Health Care and Public Health. Health Serv Deliv Res 2016;4:85–104. doi: 10.3310/hsdr04160-85.

- 27. Sir MY, Dundar B, Steege LMB, Pasupathy KS. Nurse–patient assignment models considering patient acuity metrics and nurses’ perceived workload. J Biomed Inform 2015;55:237–48.

- 28. Nembhard IM, Edmondson AC. Making it safe: the effects of leader inclusiveness and professional status on psychological safety and improvement efforts in health care teams. J Organ Behav 2006;27:941–66.

- 29. Grout JR. Mistake proofing: changing designs to reduce error. BMJ Qual Saf 2006;15:i44–9.

- 30. Aircraft Owners and Pilots Association Air Safety Institute. GA Accident Scorecard. 2018. https://www.aopa.org/-/media/files/aopa/home/training-and-safety/nall-report/20162017accidentscorecard.pdf

- 31. Reason JT. Managing the Risks of Organizational Accidents. Brookfield, VT: Ashgate Publishing Ltd, 1997.