Abstract

Photoacoustic computed tomography (PACT) based on a full-ring ultrasonic transducer array is widely used for small-animal whole-body and human organ imaging, thanks to its high in-plane resolution and full-view fidelity. However, spatial aliasing in full-ring geometry PACT has not been studied in detail. If the spatial Nyquist criterion is not met, aliasing in spatial sampling causes artifacts in reconstructed images, even when the temporal Nyquist criterion has been satisfied. In this work, we clarified the source of spatial aliasing through spatiotemporal analysis. We demonstrated that the combination of spatial interpolation and temporal filtering can effectively mitigate artifacts caused by aliasing in either image reconstruction or spatial sampling, and we validated these methods by both numerical simulations and in vivo experiments.

Keywords: Photoacoustic computed tomography, spatiotemporal antialiasing, spatial interpolation, temporal filtering

I. Introduction

Photoacoustic computed tomography (PACT) is an imaging modality that provides tomographic images of biological tissues. By converting highly scattered photons into ultrasonic waves, which are much less scattered than light in biological tissues, PACT forms high-resolution images of the tissues’ optical properties at depths [1]–[8]. In PACT, the photon-induced acoustic waves, called photoacoustic waves, are detected by an ultrasonic transducer array. The detected acoustic signals are used to reconstruct the target tissue’s optical absorption via inverse algorithms. Commonly used reconstruction algorithms include forward-model-based iterative methods [9]–[18], time reversal methods [19]–[23], and the universal back-projection (UBP) method [1], [4], [6], [24]–[27].

In PACT, the ultrasonic transducer array should provide dense spatial sampling (SS) around the object to satisfy the Nyquist sampling theorem [4], [28]. The SS interval on the tissue surface should be less than half of the lowest detectable acoustic wavelength. Otherwise, artifacts may appear in image reconstruction (IR), a problem we call spatial aliasing. In practice, due to the high cost of a transducer array with a large number of elements or limited scanning time, spatially sparse sampling is common.

In this work, we analyze the spatial aliasing in PACT using the UBP reconstruction [24]. We use a circular geometry (a full-ring ultrasonic transducer array or its scanning equivalent) with point elements as an example for analysis. In addition, we discuss only acoustically homogeneous media. Starting from the reconstruction at a source point, we identify two types of spatial aliasing: aliasing in SS and aliasing in IR. Then we demonstrate that aliasing in IR can be eliminated through spatial interpolation, while aliasing in SS can be mitigated through temporal filtering. Finally, we validate the proposed spatiotemporal antialiasing methods via both numerical simulations and in vivo experiments.

II. Background

In a homogeneous medium, a photoacoustic wave can be expressed as [29], [30]

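$$ p(\mathbf{r}, t) = \frac{1}{4\pi c^2}\frac{\partial}{\partial t}\int_V \frac{p_0(\mathbf{r}')}{\|\mathbf{r}' - \mathbf{r}\|}\,\delta\!\left(t - \frac{\|\mathbf{r}' - \mathbf{r}\|}{c}\right)d\mathbf{r}' \tag{1} $$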

Here, p (r, t) is the pressure at location r and time t, c is the speed of sound (SOS), V is the volumetric space occupied by the tissue, and p0 (r′) is the initial pressure at r′. For convenience in the following discussion, we rewrite Equation (1) as

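$$ p(\mathbf{r}, t) = \frac{1}{4\pi c^2}\int_V \frac{p_0(\mathbf{r}')}{\|\mathbf{r}' - \mathbf{r}\|}\,\delta'\!\left(t - \frac{\|\mathbf{r}' - \mathbf{r}\|}{c}\right)d\mathbf{r}' \tag{2} $$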

Discretizing Equation (2) in space, we obtain

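$$ p(\mathbf{r}_n, t) \approx \frac{1}{4\pi c^2}\sum_{m=1}^{M}\frac{p_0(\mathbf{r}'_m)\,v_m}{\|\mathbf{r}'_m - \mathbf{r}_n\|}\,\delta'\!\left(t - \frac{\|\mathbf{r}'_m - \mathbf{r}_n\|}{c}\right) \tag{3} $$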

Here, we assume M source points distributed at $\mathbf{r}'_m$, m = 1, 2, …, M, and N point detection elements distributed at $\mathbf{r}_n$, n = 1, 2, …, N. The term $v_m$ is the volume of the m-th source point.

The response of an ultrasonic transducer can be described by the equation

$$ \hat{p}(\mathbf{r}_n, t) = p(\mathbf{r}_n, t) *_t h_e(t) \tag{4} $$

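Here, $p(\mathbf{r}_n, t)$ is the pressure impinging on the n-th point detection element at time t, $\hat{p}(\mathbf{r}_n, t)$ is the detected signal, $*_t$ denotes temporal convolution, and $h_e(t)$ is the ultrasonic transducer’s electric impulse response (EIR). Substituting Equation (3) into Equation (4), we obtain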

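$$ \hat{p}(\mathbf{r}_n, t) \approx \frac{1}{4\pi c^2}\sum_{m=1}^{M}\frac{p_0(\mathbf{r}'_m)\,v_m}{\|\mathbf{r}'_m - \mathbf{r}_n\|}\,h_e'\!\left(t - \frac{\|\mathbf{r}'_m - \mathbf{r}_n\|}{c}\right) \tag{5} $$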

The term $h_e'\left(t - \frac{\|\mathbf{r}'_m - \mathbf{r}_n\|}{c}\right)$ is a function of both time and space, where the prime denotes the temporal derivative. The following discussion is based on the spatiotemporal analysis of this term. When acoustic signals are digitized by a data acquisition system (DAQ), an antialiasing filter together with a sufficiently high temporal sampling rate prevents temporal aliasing. Thus, for simplicity, the time variable is assumed to be continuous here. The spatial variables are discretized, allowing for further discussion of SS.
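To make the spatiotemporal term concrete, the following Python sketch evaluates the signal model of Equation (5) for point sources and point elements on a ring. It is a minimal illustration under stated assumptions, not the simulation code used in this work: the EIR is assumed to be a Gaussian $h_e(t) = \exp(-t^2/(2\sigma^2))$ so that $h_e'$ has a closed form, the product $p_0(\mathbf{r}'_m)v_m$ is passed as a single amplitude, and all function names are hypothetical. Units are mm and μs, so c is in mm·μs⁻¹.

```python
import numpy as np

def he_prime(t, sigma):
    """h_e'(t) for an ASSUMED Gaussian EIR h_e(t) = exp(-t**2 / (2 * sigma**2))."""
    return -t / sigma**2 * np.exp(-t**2 / (2 * sigma**2))

def ring_elements(n_elements, radius):
    """Point elements evenly spaced on a ring of the given radius (2D, mm)."""
    theta = 2 * np.pi * np.arange(n_elements) / n_elements
    return np.stack([radius * np.cos(theta), radius * np.sin(theta)], axis=1)

def forward_signals(src_pos, src_amp, elements, t, c, sigma):
    """Detected signals with the structure of Eq. (5), shape (N, len(t)).

    src_pos: (M, 2) source locations r'_m; src_amp: (M,) values of p0(r'_m) * v_m.
    """
    signals = np.zeros((elements.shape[0], t.size))
    for r_m, a_m in zip(src_pos, src_amp):
        dist = np.linalg.norm(elements - r_m, axis=1)          # ||r'_m - r_n||
        retarded = t[None, :] - dist[:, None] / c               # t - ||r'_m - r_n|| / c
        signals += (a_m / (4 * np.pi * c**2)
                    * he_prime(retarded, sigma) / dist[:, None])
    return signals

# Demo: 512 elements on a 30-mm ring, one source at (5, 0) mm, c = 1.5 mm/us.
elements = ring_elements(512, 30.0)
t = np.arange(0.0, 50.0, 0.01)                                  # us
sig = forward_signals(np.array([[5.0, 0.0]]), np.array([1.0]), elements, t, 1.5, 0.05)
t_max = t[np.argmax(sig[0])]                                    # element 0 sits at (30, 0) mm
t_min = t[np.argmin(sig[0])]
```

For the element at (30, 0) mm, the signal peaks about σ before the time of flight 25 mm / c and reaches its minimum about σ after it, because $h_e'$ changes sign at the arrival time.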

For the three common detection geometries (planar, spherical, and cylindrical surfaces), an image mapping the initial pressure p0 (r″) can be reconstructed through the UBP formula [24]:

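$$ p_0(\mathbf{r}'') = \int_{\Omega_0} b\!\left(\mathbf{r}, t = \frac{\|\mathbf{r}'' - \mathbf{r}\|}{c}\right)\frac{d\Omega}{\Omega_0} \tag{6} $$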

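where the back-projection term is $b(\mathbf{r}, t) = 2p(\mathbf{r}, t) - 2t\,\frac{\partial p(\mathbf{r}, t)}{\partial t}$, $d\Omega = \frac{dS}{\|\mathbf{r}'' - \mathbf{r}\|^2}\cdot\frac{\mathbf{n}_S(\mathbf{r})\cdot(\mathbf{r}'' - \mathbf{r})}{\|\mathbf{r}'' - \mathbf{r}\|}$ is the solid angle for the detection element at r with respect to the reconstruction location r″, dS is the detection element surface area, and nS (r) is the ingoing normal vector. The total solid angle is denoted as Ω0. In practice, the true pressure p (r, t) is approximated by the detected pressure $\hat{p}(\mathbf{r}_n, t)$, leading to a discretized form of Equation (6):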

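$$ \hat{p}_0(\mathbf{r}'') = \sum_{n=1}^{N} w_n\,\hat{b}\!\left(\mathbf{r}_n, t = \frac{\|\mathbf{r}'' - \mathbf{r}_n\|}{c}\right) \tag{7} $$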

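Here, $\hat{p}_0(\mathbf{r}'')$ is the reconstructed initial pressure and $\hat{b}(\mathbf{r}_n, t) = 2\hat{p}(\mathbf{r}_n, t) - 2t\,\frac{\partial \hat{p}(\mathbf{r}_n, t)}{\partial t}$ is the back-projection term computed from the detected pressure. The weights wn, n = 1, 2, …, N come from $d\Omega/\Omega_0$ in Equation (6).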
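The discretized back-projection of Equation (7) can be sketched in a few lines. The Python code below is a hypothetical minimal implementation, assuming point elements on a ring, the same structure as Equation (5) for the signals (with an assumed Gaussian EIR and constant factors dropped), and uniform weights $w_n = 1/N$ in place of the solid-angle weights. The temporal derivative in $\hat{b}$ is taken with finite differences, and $\hat{b}$ is evaluated at $t = \|\mathbf{r}'' - \mathbf{r}_n\|/c$ by linear interpolation. It is meant only to show the structure of the sum, not to reproduce the reconstructions reported here.

```python
import numpy as np

C = 1.5       # speed of sound, mm/us
SIGMA = 0.05  # width of the ASSUMED Gaussian EIR, us (hypothetical value)

def he_prime(t):
    """h_e'(t) for an assumed Gaussian EIR h_e(t) = exp(-t**2 / (2 * SIGMA**2))."""
    return -t / SIGMA**2 * np.exp(-t**2 / (2 * SIGMA**2))

def point_source_signals(elements, src, t):
    """h_e'(t - ||r' - r_n|| / c) / ||r' - r_n|| for one unit source (Eq. (5) without constants)."""
    dist = np.linalg.norm(elements - src, axis=1)
    return he_prime(t[None, :] - dist[:, None] / C) / dist[:, None]

def ubp_2d(signals, elements, t, xs, ys):
    """Back-projection following Eq. (7) with uniform weights w_n = 1/N (an assumption)."""
    dt = t[1] - t[0]
    # b_hat(r_n, t) = 2 p_hat(r_n, t) - 2 t d p_hat(r_n, t) / dt
    b_hat = 2 * signals - 2 * t[None, :] * np.gradient(signals, dt, axis=1)
    gx, gy = np.meshgrid(xs, ys, indexing="ij")
    image = np.zeros_like(gx)
    for r_n, b_n in zip(elements, b_hat):
        tof = np.hypot(gx - r_n[0], gy - r_n[1]) / C      # ||r'' - r_n|| / c
        image += np.interp(tof, t, b_n) / len(elements)
    return image

# Demo: 512-element ring of radius 30 mm and a unit point source at (5, 0) mm.
n_el, radius = 512, 30.0
theta = 2 * np.pi * np.arange(n_el) / n_el
elements = np.stack([radius * np.cos(theta), radius * np.sin(theta)], axis=1)
t = np.arange(0.0, 50.0, 0.01)                           # us
xs = np.linspace(4.0, 6.0, 41)                           # 0.05-mm pixels
ys = np.linspace(-1.0, 1.0, 41)
img = ubp_2d(point_source_signals(elements, np.array([5.0, 0.0]), t), elements, t, xs, ys)
i, j = np.unravel_index(np.argmax(img), img.shape)
peak = (xs[i], ys[j])
```

For a single source well inside the ring, the back-projected contributions add coherently only at the source location, so the brightest pixel of this 2 mm × 2 mm grid falls on (5, 0) mm.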

III. Spatial Aliasing in SS and IR

Given a detection geometry, the SS frequency is determined by the reciprocals of the distances between adjacent sampling positions. Here, we use a full-ring ultrasonic transducer array with point detection elements as an example for spatiotemporal analysis. We assume that the full-ring transducer array with a radius of R has N evenly distributed detection elements, shown as the red circle in Fig. 1(a). The center O of the circle is the origin of a coordinate system for IR. The upper cutoff frequency of the ultrasonic transducer is fc (the estimation of fc is discussed in Appendix B), and the corresponding lower cutoff wavelength is λc = c/fc. The acquired signals were first filtered by a third-order lowpass Butterworth filter and a sinc filter (both with a cutoff frequency of fc), so that frequency components higher than fc were removed. We define S0, called the detection zone here, as

$$ S_0 = \left\{\mathbf{r} \,\middle|\, \|\mathbf{r}\| \le R\right\} \tag{8} $$

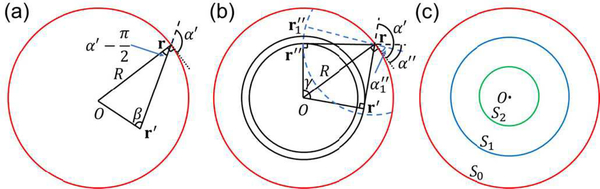

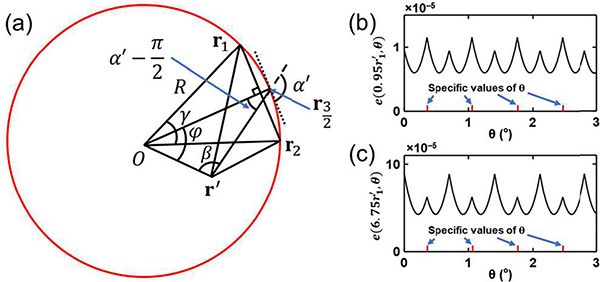

Fig. 1.

Analysis of the spatial aliasing for a circular geometry. (a) A full-ring transducer array of radius R (red circle), where a detection element location r and a source point location r′ are marked. The locations r and r′ are also seen as vectors from the origin to these locations. Vectors −r′ and r−r′ form an angle β, while the extension of line segment r−r′ forms an angle α′ with the tangential dotted line that is perpendicular to r. The angle formed by vectors −r and r′−r can be expressed as π/2 − α′. This graph is used to analyze the aliasing in SS. (b) A full-ring transducer array with a detection element location r, two reconstruction locations r″ and $\tilde{\mathbf{r}}''$, and a source point location r′ marked. Extensions of the line segments r−r″, $\mathbf{r}-\tilde{\mathbf{r}}''$, and r′−r form angles α″, $\tilde{\alpha}''$, and α′, respectively, with the tangential dotted line that is perpendicular to r. Vectors r″ and r′ form an angle $\gamma = \arccos(r''/R) + \arccos(r'/R)$, where r″ = ‖r″‖ and r′ = ‖r′‖. Points r″ and $\tilde{\mathbf{r}}''$ are on the same circle centered at r. This graph is used to analyze aliasing in IR. (c) Regions in the field of view representing different types of aliasing. When all source points and reconstruction locations lie in S2 (green circle), UBP reconstruction yields no aliasing. In S1 (blue circle), aliasing does not exist in SS but may exist in UBP reconstruction. In S0 (red circle), aliasing may exist in SS.

We first analyze aliasing in SS. When the detection element location r varies discretely, the step size along the perimeter is 2πR/N. The tangential direction is marked by a dotted line (Fig. 1(a)), which is perpendicular to vector r. We consider a source point at r′ and extend the line segment r−r′ as a dashed line (Fig. 1(a)). Vectors −r′ and r−r′ form an angle β, while vector r−r′ forms an angle α′ with the tangential dotted line. Then the angle formed by vectors −r and r′−r can be expressed as π/2 − α′. The local sampling step size of ‖r−r′‖ is approximately

$$ \Delta\|\mathbf{r} - \mathbf{r}'\| \approx \frac{2\pi R}{N}\cos\alpha' \tag{9} $$

whose absolute value is the length of the local sampling step, while its sign indicates the sampling direction. Appendix C shows that this approximation is sufficiently accurate. From Equation (5), at a given time t and with the lower cutoff wavelength λc, we can express the Nyquist criterion as

$$ \left|\frac{2\pi R}{N}\cos\alpha'\right| < \frac{\lambda_c}{2} \tag{10} $$

To transform this inequality to a constraint for the source point location r′, we use the Law of Sines:

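$$ \frac{r'}{\sin(\pi/2 - \alpha')} = \frac{R}{\sin\beta}, \quad\text{i.e.,}\quad \cos\alpha' = \frac{r'}{R}\sin\beta \tag{11} $$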

Here r′ = ‖r′‖. Using Equation (11), Expression (9) can be transformed to

$$ \Delta\|\mathbf{r} - \mathbf{r}'\| \approx \frac{2\pi r'}{N}\sin\beta \tag{12} $$

Combining Inequality (10) and Equation (12), we obtain

$$ \left|\frac{2\pi r'}{N}\sin\beta\right| < \frac{\lambda_c}{2} \tag{13} $$

which must be satisfied for any β ∈ [0, 2π).

When β = π/2 or β = 3π/2, |sin β| = 1 and Inequality (13) gives the most restrictive bound on r′:

$$ r' < \frac{N\lambda_c}{4\pi} \tag{14} $$

and the length of the local step size reaches its maximum, 2πr′/N. Therefore, if r′ satisfies Inequality (14), the length of the local step size of ‖r−r′‖ given by Equation (12) satisfies the Nyquist criterion for every β:

$$ \left|\frac{2\pi r'}{N}\sin\beta\right| \le \frac{2\pi r'}{N} < \frac{\lambda_c}{2} \tag{15} $$
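Appendix C examines the accuracy of Equation (9). As a quick numerical illustration (not a substitute for that analysis), the Python sketch below compares the exact element-to-element change of ‖r − r′‖ on an N-element ring with the estimate (2πR/N)cos α′ of Equation (9) and with its law-of-sines form (2πr′/N)sin β of Equation (12), evaluating both estimates at the angular midpoint between neighboring elements. The function name and the test geometry are hypothetical.

```python
import numpy as np

def step_estimates(n_elements, radius, src):
    """Exact steps of ||r - r'|| between neighboring ring elements, and Eqs. (9) and (12).

    Returns (exact, eq9, eq12); eq9 is signed, eq12 is the magnitude (2 pi r'/N)|sin(beta)|.
    """
    dtheta = 2 * np.pi / n_elements
    theta = dtheta * np.arange(n_elements)
    elements = np.stack([radius * np.cos(theta), radius * np.sin(theta)], axis=1)
    dist = np.linalg.norm(elements - src, axis=1)
    exact = np.roll(dist, -1) - dist                     # step from element n to n + 1 (wraps)

    mid = theta + dtheta / 2                              # midpoint between elements n and n + 1
    r_mid = np.stack([radius * np.cos(mid), radius * np.sin(mid)], axis=1)
    tangent = np.stack([-np.sin(mid), np.cos(mid)], axis=1)
    u = r_mid - src
    u /= np.linalg.norm(u, axis=1, keepdims=True)        # unit vector along r - r'
    eq9 = 2 * np.pi * radius / n_elements * np.sum(u * tangent, axis=1)   # cos(alpha')

    r_src = np.linalg.norm(src)
    # |sin(beta)|, beta = angle between -r' and r - r' (2D cross product of -r' and u)
    sin_beta = np.abs(-src[0] * u[:, 1] + src[1] * u[:, 0]) / r_src
    eq12 = 2 * np.pi * r_src / n_elements * sin_beta
    return exact, eq9, eq12

src = np.array([10.0, 4.0])
exact, eq9, eq12 = step_estimates(512, 30.0, src)
bound = 2 * np.pi * np.linalg.norm(src) / 512            # maximum step 2 pi r' / N
```

For N = 512, R = 30 mm, and a source at (10, 4) mm, the exact steps agree with Equation (9) to well within 0.1% of the largest step, the two estimates coincide in magnitude, and the largest step is close to 2πr′/N, as Inequality (15) assumes.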

We define the region S1, called the one-way Nyquist zone here, as

$$ S_1 = \left\{\mathbf{r}' \,\middle|\, \|\mathbf{r}'\| < \frac{N\lambda_c}{4\pi}\right\} \tag{16} $$

Equivalently, we can consider the boundary of S1 as a virtual detection surface, where the sampling spacing is scaled down from the actual detection spacing by a factor of R1/R, with R1 = Nλc/(4π) being the radius of S1. For any source point inside S1, there is no spatial aliasing during SS because the sampling spacing is less than half of the lower cutoff wavelength, which agrees with the result in Xu et al. [28].
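Equation (16) reduces the one-way Nyquist zone to a single number, R1 = Nλc/(4π) with λc = c/fc. The short Python helper below, whose names and unit conventions are our own choices, evaluates it for the parameters used in the 2D simulations (Section V) and in the breast imaging system (Section VI), and checks that the virtual detection surface at radius R1 has a spacing of exactly λc/2.

```python
import math

def one_way_radius(n_elements, c_mm_per_us, fc_mhz):
    """R1 = N * lambda_c / (4 pi) from Eq. (16), in mm; lambda_c = c / fc."""
    lambda_c = c_mm_per_us / fc_mhz            # mm, since (mm/us) / MHz = mm
    return n_elements * lambda_c / (4 * math.pi)

def ring_spacing(n_elements, radius):
    """Arc length between neighbors of N evenly spaced points on a circle."""
    return 2 * math.pi * radius / n_elements

r1_sim = one_way_radius(512, 1.5, 4.5)        # 2D simulations (Section V)
r1_vivo = one_way_radius(512, 1.49, 3.80)     # breast imaging system (Section VI)
virtual = ring_spacing(512, r1_sim)           # spacing on the virtual surface at R1
```

With c in mm·μs⁻¹ and fc in MHz, λc is in mm; the two cases give R1 ≈ 13.6 mm and R1 ≈ 16.0 mm, respectively.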

Next, we analyze the spatial aliasing in IR. Substituting Equation (5) into Equation (7), we obtain

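$$ \hat{p}_0(\mathbf{r}'') \approx \sum_{n=1}^{N} w_n \sum_{m=1}^{M}\frac{p_0(\mathbf{r}'_m)\,v_m}{4\pi c^2\,\|\mathbf{r}'_m - \mathbf{r}_n\|}\,\mathcal{D}\!\left[h_e'\!\left(t - \frac{\|\mathbf{r}'_m - \mathbf{r}_n\|}{c}\right)\right]_{t = \|\mathbf{r}'' - \mathbf{r}_n\|/c} \tag{17} $$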

Here, we use the differential operator

$$ \mathcal{D}[f](t) = 2f(t) - 2t\,\frac{d f(t)}{d t} \tag{18} $$

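In Equation (17), we need to analyze only the expression $\mathcal{D}\left[h_e'\left(t - \frac{\|\mathbf{r}' - \mathbf{r}\|}{c}\right)\right]_{t = \|\mathbf{r}'' - \mathbf{r}\|/c}$, which consists of two terms: $2h_e'(\tau)$ and $-2\frac{\|\mathbf{r}'' - \mathbf{r}\|}{c}h_e''(\tau)$, where $\tau = \frac{\|\mathbf{r}'' - \mathbf{r}\| - \|\mathbf{r}' - \mathbf{r}\|}{c}$. As analyzed in Appendix A, the difference between the spectra of $\frac{\|\mathbf{r}'' - \mathbf{r}\|}{c}h_e''(\tau)$ and $h_e''(\tau)$ is negligible. Thus we only need to analyze the spatial aliasing in $h_e'(\tau)$ and $h_e''(\tau)$.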

If $h_e'(t)$ has an upper cutoff frequency fc (the estimation of fc is discussed in Appendix B), $h_e''(t)$ will have the same upper cutoff frequency (Appendix B). Given a reconstruction location r″ and a source point location r′ (Fig. 1(b)), we need to analyze the sampling step size of ‖r″−r‖ − ‖r′−r‖ as the detection element location r varies. The lengths of the step sizes of ‖r″−r‖ and ‖r′−r‖ reach their maxima when r″−r and r′−r are perpendicular to r″ and r′, respectively. If the angle γ between vectors r″ and r′ satisfies $\gamma = \arccos(r''/R) + \arccos(r'/R)$, where r″ = ‖r″‖ and r′ = ‖r′‖, then the lengths of the step sizes of ‖r″−r‖ and ‖r′−r‖ achieve their maxima of 2πr″/N and 2πr′/N, respectively, with r at the same location, as shown in Fig. 1(b). In addition, as r passes this location clockwise, ‖r″−r‖ increases while ‖r′−r‖ decreases. Thus, the length of the step size of ‖r″−r‖ − ‖r′−r‖ achieves its maximum of

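$$ \max_{\mathbf{r}}\left|\Delta\left(\|\mathbf{r}'' - \mathbf{r}\| - \|\mathbf{r}' - \mathbf{r}\|\right)\right| \approx \frac{2\pi r''}{N} + \frac{2\pi r'}{N} = \frac{2\pi (r'' + r')}{N} \tag{19} $$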

The Nyquist criterion requires that

$$ \frac{2\pi (r'' + r')}{N} < \frac{\lambda_c}{2} \tag{20} $$

which is equivalent to

$$ r'' + r' < \frac{N\lambda_c}{4\pi} \tag{21} $$

One may interpret this condition as follows. The physical propagation of the photoacoustic wave from the object to the detectors is followed by a time-reversal propagation for the IR. The combined region encompasses a disc with a radius of r″ + r′. On the perimeter of this disc, the Nyquist sampling criterion requires that the sampling spacing be less than half of the lower cutoff wavelength, i.e., Inequality (20).
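The maximum in Equation (19) can be checked numerically. The Python sketch below (a hypothetical helper, not part of the method) places r″ and r′ on opposite sides of the element at θ = 0, at angles +arccos(r″/R) and −arccos(r′/R), so that γ = arccos(r″/R) + arccos(r′/R), and takes the largest element-to-element change of ‖r″ − r‖ − ‖r′ − r‖. With r″ = r′ = Nλc/(8π) on a 512-element, 30-mm ring (λc = 1/3 mm), Inequality (20) holds with equality and the result matches λc/2; moving both points outward by 50% pushes it above λc/2.

```python
import numpy as np

def two_way_max_step(n_elements, radius, r_rec, r_src):
    """Largest step of ||r'' - r|| - ||r' - r|| between neighboring elements of a ring.

    r'' (radius r_rec) and r' (radius r_src) are placed so that the angle between them is
    gamma = arccos(r_rec / R) + arccos(r_src / R); the element at theta = 0 is then the
    common location where both distance steps are maximal.
    """
    phi_rec = np.arccos(r_rec / radius)
    phi_src = -np.arccos(r_src / radius)
    rec = r_rec * np.array([np.cos(phi_rec), np.sin(phi_rec)])
    src = r_src * np.array([np.cos(phi_src), np.sin(phi_src)])
    theta = 2 * np.pi * np.arange(n_elements) / n_elements
    elements = np.stack([radius * np.cos(theta), radius * np.sin(theta)], axis=1)
    g = np.linalg.norm(elements - rec, axis=1) - np.linalg.norm(elements - src, axis=1)
    return np.max(np.abs(np.roll(g, -1) - g))

n_el, radius, lambda_c = 512, 30.0, 1.5 / 4.5
r_half = n_el * lambda_c / (8 * np.pi)                 # r'' = r' = N lambda_c / (8 pi)
step_at_limit = two_way_max_step(n_el, radius, r_half, r_half)
step_outside = two_way_max_step(n_el, radius, 1.5 * r_half, 1.5 * r_half)
```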

We denote S2, referred to as the two-way Nyquist zone here, as

$$ S_2 = \left\{\mathbf{r} \,\middle|\, \|\mathbf{r}\| < \frac{N\lambda_c}{8\pi}\right\} \tag{22} $$

Again, we can consider the boundary of S2 as a virtual detection surface, where the sampling spacing is scaled down from the actual detection spacing by a factor of R2/R, with R2 = Nλc/(8π) being the radius of S2. If the source points are inside S2 and we reconstruct at points within S2, then r′ < R2 and r″ < R2, respectively, and Inequality (21) is satisfied. Thus, there is no spatial aliasing during reconstruction, and we call S2 an aliasing-free region. Note that, due to the finite duration of the transducer’s temporal response, the function $h_e'(t)$ is nonzero only when t is within a finite interval, denoted as Te. The broader the bandwidth of $h_e(t)$, the shorter Te. When $\left(\|\mathbf{r}'' - \mathbf{r}\| - \|\mathbf{r}' - \mathbf{r}\|\right)/c$ is outside Te, signals from source point r′ that are detected by the element at r do not contribute to the reconstruction at r″.

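In the following discussion, we assume that $\left(\|\mathbf{r}'' - \mathbf{r}\| - \|\mathbf{r}' - \mathbf{r}\|\right)/c$ belongs to Te. Even with source points inside S2, we may still have aliasing when reconstruction locations are outside S2 but inside S1. To demonstrate this, we assume that both r″ and r′ are on the boundary of S2, and that the length of the step size of ‖r″−r‖ − ‖r′−r‖ achieves the maximum value of $\frac{2\pi R}{N}(\cos\alpha'' + \cos\alpha') = \frac{\lambda_c}{2}$. Here, α″ and α′ denote the angles formed by the line segments r−r″ and r−r′, respectively, with the tangential dotted line that is perpendicular to r. We move the reconstruction location r″ to a new position $\tilde{\mathbf{r}}''$ outside S2 but inside S1, as shown in Fig. 1(b), keeping the distance $\|\tilde{\mathbf{r}}'' - \mathbf{r}\| = \|\mathbf{r}'' - \mathbf{r}\|$ constant. Thus $\left(\|\tilde{\mathbf{r}}'' - \mathbf{r}\| - \|\mathbf{r}' - \mathbf{r}\|\right)/c$ still belongs to Te. As r″ moves to $\tilde{\mathbf{r}}''$, the angle α″ decreases to $\tilde{\alpha}''$. Because both α″ and $\tilde{\alpha}''$ belong to [0, π/2], we have $\cos\tilde{\alpha}'' > \cos\alpha''$. Thus, for the local step size of $\|\tilde{\mathbf{r}}'' - \mathbf{r}\| - \|\mathbf{r}' - \mathbf{r}\|$, we have the estimate $\frac{2\pi R}{N}(\cos\tilde{\alpha}'' + \cos\alpha') > \frac{\lambda_c}{2}$, which means that spatial aliasing appears in reconstruction. Switching the source and reconstruction locations, we can repeat the analysis and draw a similar conclusion: with source points inside S1 but outside S2, we may have aliasing when reconstruction locations are inside S2.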

We visualize the relative sizes of the three regions S0, S1, and S2 in Fig. 1(c). Spatial aliasing in SS does not appear for objects inside S1 but may appear for objects outside S1. Spatial aliasing in IR does not appear for objects and reconstruction locations inside S2 but may appear for other combinations of objects and reconstruction locations. A detailed classification is shown in Fig. 2(a).
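The classification in Fig. 2(a) can be encoded as two checks. The Python sketch below is our own simplification: it flags possible SS aliasing when the source lies outside S1 (Equation (16)) and possible IR aliasing when the worst-case condition of Inequality (21), r″ + r′ < Nλc/(4π), is violated. An optional factor N′/N (a hypothetical parameter name) models the numerically increased element count after spatial interpolation. Because Inequality (21) is a worst case over the angle γ, a flag means that aliasing may occur, not that it must.

```python
import math

def aliasing_flags(r_src, r_rec, n_elements, lambda_c, interp_factor=1):
    """Return (ss_may_alias, ir_may_alias) for source radius r' and reconstruction radius r''.

    SS: possible when r' >= R1 = N * lambda_c / (4 pi), i.e., the source is outside S1.
    IR: possible when r'' + r' >= N' * lambda_c / (4 pi), with N' = interp_factor * N
        (worst case of Inequality (21)). Note: if SS already aliases, interpolation is
        inaccurate, so a False IR flag then does not guarantee an artifact-free image.
    """
    r1 = n_elements * lambda_c / (4 * math.pi)
    ss_may_alias = r_src >= r1
    ir_may_alias = r_src + r_rec >= interp_factor * r1
    return ss_may_alias, ir_may_alias

lam = 1.5 / 4.5   # lower cutoff wavelength (mm) of the 2D simulations
cases = {
    "both in S2": aliasing_flags(5.0, 5.0, 512, lam),
    "recon outside S2": aliasing_flags(5.0, 10.0, 512, lam),
    "recon outside S2, interpolated": aliasing_flags(5.0, 10.0, 512, lam, interp_factor=2),
    "source outside S1": aliasing_flags(20.0, 5.0, 512, lam),
    "source outside S1, interpolated": aliasing_flags(20.0, 5.0, 512, lam, interp_factor=2),
}
```

For λc = 1/3 mm and N = 512 (R1 ≈ 13.6 mm), a source at 5 mm reconstructed at 10 mm is flagged for IR aliasing without interpolation but not after doubling the elements, whereas a source at 20 mm stays flagged for SS aliasing regardless of interpolation, mirroring the transition from Fig. 2(a) to Fig. 2(b).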

Fig. 2.

Different combinations of source locations and reconstruction locations subject to spatial aliasing in SS and IR. Three regions S0, S1, and S2 are defined in Equations (8), (16), and (22), respectively. The first line radiating from the origin O represents the range of source locations for SS, while the second line radiating from the tip of the first line represents the range of reconstruction locations for IR. A solid line means no aliasing, while a dotted line means aliasing. (a) Spatial aliasing in UBP. The innermost two-way Nyquist zone S2 is an aliasing-free region. (b) Spatial aliasing in UBP with spatial interpolation. Spatial interpolation removes spatial aliasing in three cases of IR, making the one-way Nyquist zone S1 an aliasing-free region. The dotted lines representing the three cases in (a) are changed to blue-solid lines in (b). (c) Spatial aliasing in UBP with temporal filtering and spatial interpolation. Temporal filtering extends the one-way Nyquist zone S1 in (b) to $S_1'$ in (c), and the original S1 is marked as a blue-dashed circle for reference. Spatial interpolation further makes $S_1'$ an aliasing-free region.

IV. Spatial Antialiasing in SS and IR

Spatial aliasing solely in IR but not in SS can be removed by spatial interpolation. Without spatial aliasing in SS, spatially continuous signals can be accurately recovered from spatially discrete signals through Whittaker–Shannon interpolation. Then, in theory, no aliasing would occur in reconstructing the image from the spatially continuous signals. In practice, the interpolation numerically increases the number of detection elements. To clarify the process, at any given time t, we define

$$ f_R(\theta) = \hat{p}(\mathbf{r}, t) \tag{23} $$

where r = (R cos θ, R sin θ), θ ∈ [0, 2π). The function fR(θ) is sampled at θn = 2πn/N, n = 0, 1, 2, …, N − 1. For objects inside the region S1, SS has no aliasing. Thus, the function fR(θ) can be accurately recovered from fR(θn), n = 0, 1, 2, …, N − 1, through spatial Whittaker–Shannon interpolation. To extend the region S2, we can numerically double the number of detection elements to N′ = 2N based on the interpolation. Substituting N′ for N in Equation (22), we obtain a larger region:

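$$ S_2' = \left\{\mathbf{r} \,\middle|\, \|\mathbf{r}\| < \frac{N'\lambda_c}{8\pi} = \frac{N\lambda_c}{4\pi}\right\} = S_1 \tag{24} $$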

From the above discussion about Equation (22), we know that with source and reconstruction locations inside $S_2'$, IR has no aliasing. Because $S_2' = S_1$, spatial interpolation removes spatial aliasing in IR for source and reconstruction locations inside S1. Fig. 2(a) is now replaced by Fig. 2(b). For source points outside the region S1, SS has aliasing, and spatial interpolation cannot recover the lost information; this problem is mitigated by the next method.

Spatial aliasing in SS can be mitigated by temporal lowpass filtering. We consider a region larger than S1:

| (25) |

We have already shown that source points in this region can produce spatial aliasing during SS. To address this problem, before spatial interpolation and reconstruction, we process the signals using a lowpass filter with an upper cutoff frequency $f_c' < f_c$. Replacing λc in Equation (16) with $\lambda_c' = c/f_c'$, we extend the region S1 to

$$ S_1' = \left\{\mathbf{r}' \,\middle|\, \|\mathbf{r}'\| < \frac{N\lambda_c'}{4\pi}\right\} \tag{26} $$

Based on the above discussion about Equation (16), for source points inside the extended region $S_1'$ of Equation (26), using spatial interpolation, we can reconstruct any point inside $S_1'$ without aliasing artifacts. Thus, we extend the one-way Nyquist zone through temporal lowpass filtering at the expense of spatial resolution, and replace Fig. 2(b) with Fig. 2(c).

An ideal antialiasing method should extend the region $S_1'$ in Fig. 2(c) to the whole region S0. However, lowpass filtering removes the high-frequency signals and blurs the reconstructed images. Directly extending $S_1'$ to S0 would greatly compromise the image resolution. As a balance between spatial antialiasing and high resolution, to reconstruct the image at r′ ∈ S0, we design the lowpass filter based on the distance r′ = ‖r′‖ from r′ to the center. If r′ < R1 = Nλc/(4π), we apply spatial interpolation and then perform reconstruction. If R1 ≤ r′ ≤ R, we first filter the signals with the upper cutoff frequency $f_c' = Nc/(4\pi r')$, then perform spatial interpolation and reconstruction. We call this method radius-dependent temporal filtering.
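Radius-dependent temporal filtering amounts to choosing, for each reconstruction radius r′, the original cutoff fc inside S1 and $f_c' = Nc/(4\pi r')$ outside it. The Python sketch below illustrates this choice. As a labeled simplification, it uses an ideal (brick-wall) FFT lowpass filter instead of the third-order Butterworth and sinc filters used in this work, and the function names are hypothetical. Time is in μs and frequency in MHz.

```python
import numpy as np

def radius_dependent_cutoff(r, n_elements, c, fc):
    """Cutoff (MHz) for reconstructing at radius r (mm): fc inside S1, N c / (4 pi r) outside."""
    r1 = n_elements * c / (4 * np.pi * fc)       # R1 = N lambda_c / (4 pi), lambda_c = c / fc
    return fc if r < r1 else n_elements * c / (4 * np.pi * r)

def ideal_lowpass(signals, fs, cutoff):
    """Brick-wall lowpass along the last (time) axis; a SIMPLIFIED stand-in for Butterworth + sinc."""
    spec = np.fft.rfft(signals, axis=-1)
    freqs = np.fft.rfftfreq(signals.shape[-1], d=1.0 / fs)
    spec[..., freqs > cutoff] = 0.0
    return np.fft.irfft(spec, n=signals.shape[-1], axis=-1)

fs = 40.0                                        # sampling rate, MHz (samples per us)
t = np.arange(1000) / fs                         # 25 us; 2 and 4 MHz fall on exact FFT bins
low_tone = np.sin(2 * np.pi * 2.0 * t)
data = np.stack([low_tone + 0.5 * np.sin(2 * np.pi * 4.0 * t)] * 3)   # 3 identical "elements"
cut_inside = radius_dependent_cutoff(10.0, 512, 1.5, 4.5)
cut_outside = radius_dependent_cutoff(20.0, 512, 1.5, 4.5)
filtered = ideal_lowpass(data, fs, cut_outside)
```

With N = 512, c = 1.5 mm·μs⁻¹, and fc = 4.5 MHz, a reconstruction radius of 10 mm keeps fc, whereas 20 mm calls for f′c ≈ 3.06 MHz; filtering a test signal containing 2-MHz and 4-MHz tones at that cutoff leaves only the 2-MHz tone. One possible implementation choice, not prescribed by the text, is to precompute filtered copies of the data for a set of radius bands.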

V. Numerical Simulation

To visualize the artifacts caused by spatial aliasing, we first simulated a 3D case with a hemispherical detection geometry, without applying the antialiasing methods. The k-Wave toolbox [12] was used for the forward problem.

The simulation parameters were set as follows: the frequency range of the ultrasonic transducer is from 0.1 MHz to 4.5 MHz (2.3-MHz central frequency, 191% bandwidth, fc = 4.5 MHz), the SOS c = 1.5 mm·μs⁻¹, the number of detection elements N = 651 (evenly distributed on the hemisphere based on the method in [31]), the radius of the hemisphere R = 30 mm, and the simulation grid size is 0.1 × 0.1 × 0.1 mm³. The elements are shown as red dots in Fig. 3(a). A simple numerical phantom with nonzero initial pressure from two layers with distances of 0 and 6.8 mm from the xy plane was analyzed first. The phantom is shown as blue dots in Fig. 3(a). The ground-truth images of the two layers are shown in Fig. 3(b1) and (c1), respectively, and the reconstructions are shown in Fig. 3(b2) and (c2), respectively. As can be seen, artifacts appear in both layers and seem stronger in the layer farther from the xy plane.

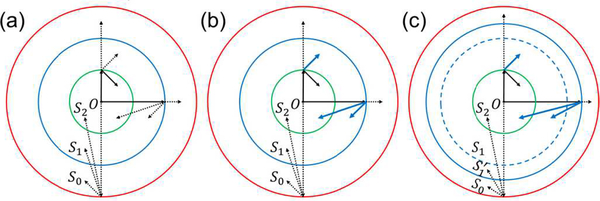

Fig. 3.

Aliasing artifacts in 3D reconstruction in a numerical simulation. (a) An array with 651 detection elements evenly distributed on a hemisphere and a simple numerical phantom covered by the array. (b1)–(b2), (c1)–(c2) Ground-truth slices (Column 1) of a simple phantom at z = 0 (Row b) and 6.8 mm (Row c), respectively, and their corresponding reconstructed images (Column 2). (d) The same array with a complex numerical phantom. (e1)–(e2), (f1)–(f2), (g1)–(g2), and (h1)–(h2) Ground-truth slices (Column 1) of the phantom at z = 0, 3.4, 6.8, and 10.2 mm (Rows e–h), respectively, and their corresponding reconstructed images (Column 2). (i) STD values of the pixel values in the ROIs outlined in the green boxes.

Using the same array, we further simulated a complex phantom with nonzero initial pressure from four layers with distances of 0, 3.4, 6.8, and 10.2 mm from the xy plane, shown as blue dots in Fig. 3(d). The ground-truth images of the four layers are shown in Fig. 3(e1), (f1), (g1), and (h1), respectively, and the reconstructions are shown in Fig. 3(e2), (f2), (g2), and (h2), respectively. Strong aliasing artifacts appear in all reconstructed images, and the artifacts tend to be more obvious in layers farther from the origin. Regions of interest (ROIs) A–G (1.5 × 1.5 mm²) with increasing distances from the origin were picked from the four layers at locations with zero initial pressure. The standard deviations (STDs) of the pixel values inside these regions were calculated to quantify the aliasing artifacts. As can be seen in Fig. 3(i), the artifacts become stronger as the ROI moves away from the origin.

The 3D simulation provides a direct observation of the aliasing artifacts. Furthermore, we give a simplified estimate of the one-way Nyquist zone of the hemispherical geometry. We consider the planes crossing the center of the hemisphere. On each plane, we calculate the one-way Nyquist zone for the 2D case. All the detection elements for this plane lie on its intersection with the hemisphere, which is a semicircle with length πR (a subset of a full circle with length 2πR). Based on the above analysis, we only need to estimate the number of detection elements for this plane. Given the area of the hemisphere 2πR² and the number of elements N, the distance between two neighboring elements is approximately $\sqrt{2\pi R^2/N}$. Thus, the number of elements (in a full circle) for this plane is approximately

$$ \frac{2\pi R}{\sqrt{2\pi R^2/N}} = \sqrt{2\pi N} \approx 64 \quad \text{for } N = 651 \tag{27} $$

Using Equation (15), we obtain the one-way Nyquist zone on this plane:

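$$ \left\{\mathbf{r}' \,\middle|\, \|\mathbf{r}'\| < \frac{\sqrt{2\pi N}\,\lambda_c}{4\pi}\right\}, \qquad \frac{\sqrt{2\pi N}\,\lambda_c}{4\pi} \approx 1.70\ \text{mm} \tag{28} $$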

For the 3D simulation, as we vary the plane’s normal vector, all the 2D regions form a half ball with a radius of ≈ 1.70 mm, which is much smaller than the hemisphere radius R = 30 mm. This large difference explains the prevalence of the artifacts in the reconstructed 3D images.
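The estimate in Equations (27) and (28) can be reproduced with a few lines of arithmetic. The Python helper below (a hypothetical name) follows the same simplification: element spacing ≈ √(2πR²/N), hence about √(2πN) elements per great circle, followed by the 2D one-way radius with this element count. Note that R cancels.

```python
import math

def hemisphere_one_way_radius(n_elements, c_mm_per_us, fc_mhz):
    """Simplified one-way Nyquist radius for N elements evenly spread over a hemisphere.

    Spacing ~ sqrt(2 pi R^2 / N) gives ~ sqrt(2 pi N) elements per great circle (Eq. (27));
    the 2D radius n_circle * lambda_c / (4 pi) is then applied (Eq. (28)). R cancels out.
    """
    n_circle = math.sqrt(2 * math.pi * n_elements)
    lambda_c = c_mm_per_us / fc_mhz
    return n_circle, n_circle * lambda_c / (4 * math.pi)

n_circle, r_half_ball = hemisphere_one_way_radius(651, 1.5, 4.5)
```

For N = 651 and λc = 1.5/4.5 mm, this gives about 64 elements per circle and a half-ball radius of about 1.70 mm.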

To simplify the problem and clarify the key points, in the following simulations we focus on the 2D case with a full-ring transducer array of radius R = 30 mm. The frequency range of the transducer is from 0.1 MHz to 4.5 MHz. We set the number of detection elements N = 512 and the SOS c = 1.5 mm·μs⁻¹. The radius of the one-way Nyquist zone S1 is thus R1 = Nc/(4πfc) ≈ 13.6 mm.

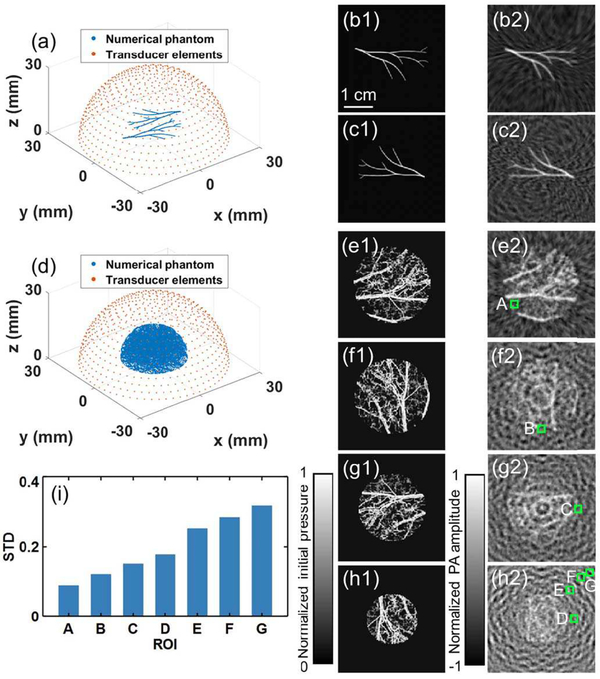

We used a 0.1 × 0.1 mm² grid and first simulated a simple initial pressure distribution, shown in Fig. 4(a) with closeups of the blue-dashed, red-dashed, and yellow-dashed boxed regions. The full-ring transducer array and the boundaries of S1 and S2 are marked by red, blue, and green circles, respectively. The reconstruction of the object in Fig. 4(a) using UBP is shown in Fig. 4(b). Although the ground-truth background in the boxed regions of Fig. 4(a) is clean, obvious aliasing-induced artifacts appear in the reconstructed image outside S1 (red box) but not inside (blue box). Note that the yellow-boxed region in Fig. 4(b) is outside S1 but does not show strong artifacts. Based on the above discussion, spatial aliasing appears only for certain combinations of source and reconstruction locations. Due to the specific distribution of the source, the artifacts turn out to be much stronger in the red-boxed region than in the yellow-boxed region.

We used spatial interpolation to remove aliasing solely in IR. Given a time t, signals from all the detection elements formed a vector with length N. The fast Fourier transform (FFT) was then applied to the vector, and zeros were padded after the highest-frequency components (i.e., between the positive- and negative-frequency halves of the spectrum) to double the vector length to 2N. Finally, the inverse FFT was applied to the new vector to interpolate the data. This process is the frequency-domain implementation of Whittaker–Shannon interpolation, and it numerically doubled the number of detection elements. The reconstruction of the object in Fig. 4(a) using UBP with spatial interpolation is shown in Fig. 4(c). To remove the spatial aliasing during SS, we applied a radius-dependent lowpass filter to the temporal signals before spatial interpolation, using a third-order lowpass Butterworth filter and a sinc filter (with the same cutoff frequency). The reconstruction of the object in Fig. 4(a) using UBP with temporal filtering and spatial interpolation is shown in Fig. 4(d).
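The frequency-domain Whittaker–Shannon interpolation described above can be sketched with NumPy as follows. This is an illustrative implementation under our own conventions, not the code used in this work: the element index is assumed to be axis 0 of the data array, the elements are assumed to be evenly spaced over the full ring (so the data are periodic in θ), and for even N the Nyquist-frequency bin is split equally between the positive and negative halves, a common convention that keeps real data real.

```python
import numpy as np

def fft_interpolate_ring(signals, factor=2):
    """Whittaker-Shannon interpolation along axis 0 (element index) of full-ring data.

    signals: shape (N, ...) sampled at theta_n = 2 pi n / N; returns shape (factor * N, ...)
    sampled at theta_k = 2 pi k / (factor * N).
    """
    n = signals.shape[0]
    m = factor * n
    spec = np.fft.fft(signals, axis=0)
    padded = np.zeros((m,) + spec.shape[1:], dtype=complex)
    if n % 2 == 0:
        h = n // 2
        padded[:h] = spec[:h]                 # non-negative frequencies below Nyquist
        padded[m - h + 1:] = spec[h + 1:]     # negative frequencies
        padded[h] = 0.5 * spec[h]             # split the Nyquist bin between +/- halves
        padded[m - h] += 0.5 * spec[h]
    else:
        h = (n + 1) // 2
        padded[:h] = spec[:h]
        padded[m - h + 1:] = spec[h:]
    # Zeros now sit between the highest positive and negative frequencies.
    result = np.fft.ifft(padded, axis=0) * factor   # rescale for the longer inverse FFT
    return result.real if np.isrealobj(signals) else result

n_el = 32
theta = 2 * np.pi * np.arange(n_el) / n_el
theta_fine = 2 * np.pi * np.arange(2 * n_el) / (2 * n_el)
x = np.cos(3 * theta) + 0.5 * np.sin(7 * theta)
y = fft_interpolate_ring(x)
expected = np.cos(3 * theta_fine) + 0.5 * np.sin(7 * theta_fine)
# Data with a time axis: (elements, time samples) -> (2 * elements, time samples).
y_2d = fft_interpolate_ring(np.outer(x, np.arange(1.0, 6.0)))
```

Because the interpolation is exact for spatially band-limited data, the test signal cos 3θ + 0.5 sin 7θ sampled at 32 elements is reproduced exactly at 64 positions. Data with aliasing in SS have no such guarantee, which is why temporal filtering precedes this step outside S1.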

Fig. 4.

Spatial interpolation and temporal filtering’s effects on IR in numerical simulations for a simple phantom. The full-ring transducer array and the boundaries of S1 and S2 are marked by red, blue, and green circles, respectively. (a) Ground truth of a simple initial pressure p0 distribution. (b)–(d) Reconstructions of the object in (a) using (b) UBP, (c) UBP with SI, and (d) UBP with TF and SI, respectively. SI, spatial interpolation; TF, temporal filtering. (e) Comparison of the STDs in the ROIs A–E marked with the green boxes. (f)–(g) Comparisons of the profiles of lines (f) P and (g) Q, respectively, based on the three methods.

To quantify the amplitude of artifacts, we chose ROIs A–E (1.2 × 1.2 mm²) at locations with zero initial pressure. The STDs of the pixel values inside these regions were calculated. As can be seen in the red-boxed regions and Fig. 4(e), spatial interpolation mitigates the aliasing artifacts significantly, while adding temporal filtering before spatial interpolation further diminishes the artifacts. This observation agrees with the above analysis of both methods’ effects on extending the aliasing-free region. To quantify the impact of the antialiasing methods on image resolution, we picked two lines at P and Q, respectively, in each reconstructed image, and compared their profiles in Fig. 4(f) and (g), respectively. For signals from source points inside S1, there is no spatial aliasing in SS, and spatial interpolation is accurate at the interpolation points. Moreover, radius-dependent temporal filtering does not affect the signals when reconstructing inside S1. Thus, the antialiasing methods have negligible impact on the profile of line P (inside S1), as shown in Fig. 4(f). For signals from source points outside S1, the spatial interpolation is inaccurate due to spatial aliasing. Thus, directly applying spatial interpolation affects the profile of line Q (outside S1). Radius-dependent temporal filtering smooths the signals before reconstructing outside S1; thus, it further smooths the profile of line Q, as shown in Fig. 4(g).

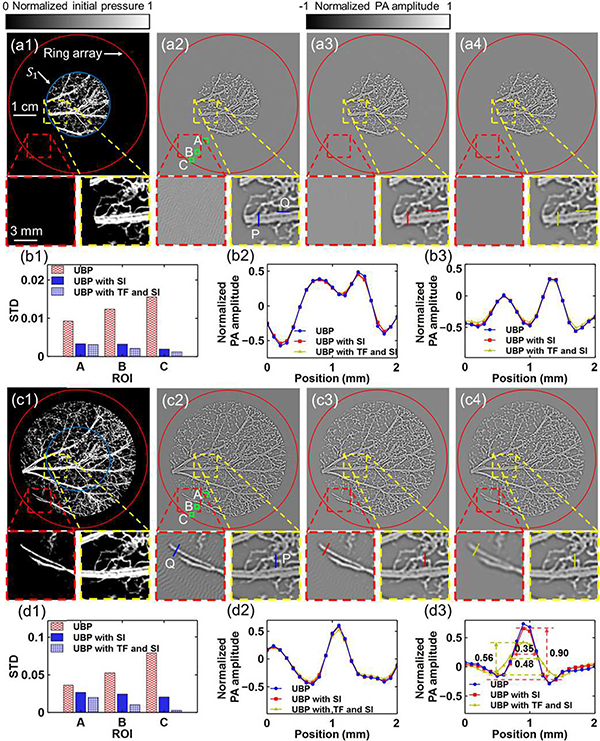

Next, we simulated two complex cases. For the first case, the object is completely within S1, as shown in Fig. 5(a1). The reconstructions of the object in Fig. 5(a1) using UBP, UBP with spatial interpolation, and UBP with temporal filtering and spatial interpolation are shown in Fig. 5(a2)–(a4), respectively. ROIs A–C (1.2×1.2 mm2) were chosen at locations with zero initial pressure. The STDs were calculated and compared, as shown in Fig. 5(b1). Profiles of lines P and Q are shown in Fig. 5(b2) and (b3), respectively. For the second case, the object is beyond S1, and covers most of the area inside the full-ring transducer array, as shown in Fig. 5(c1). The reconstructions of the object in Fig. 5(c1) using the three methods are shown in Fig. 5(c2)–(c4), respectively. The STDs of ROIs A–C are compared in Fig. 5(d1), while the profiles of lines P and Q are shown in Fig. 5(d2) and (d3), respectively. Although spatial interpolation mitigates the aliasing artifacts in both Fig. 5(a2) and (c2), visible artifacts remain in the red-boxed region in Fig. 5(c3) but not in Fig. 5(a3). It can also be seen in Fig. 5(b1) and (d1) that spatial interpolation has more obvious antialiasing effects on Fig. 5(a2) than on Fig. 5(c2), while temporal filtering’s effect on Fig. 5(c2) is more obvious than on Fig. 5(a2). In fact, the aliasing artifacts in Fig. 5(a2) are solely from the IR, while those in Fig. 5(c2) are from both the SS and the IR. Thus, spatial interpolation works well in antialiasing for Fig. 5(a2), for which temporal filtering’s smoothing effect slightly helps; but not as well for Fig. 5(c2) due to the spatial aliasing in SS, for which temporal filtering is necessary. In general, the aliasing artifacts are mitigated by spatial interpolation and further diminished by temporal filtering, as shown in both Fig. 5(b1) and (d1). The antialiasing methods maintain the image resolution well inside S1, as shown in Fig. 5(b2), (b3), and (d2). 
Due to spatial aliasing in SS for objects outside S1, the profile of line Q is affected by spatial interpolation. Adding temporal filtering further smooths the profile. The full width at half maximum (FWHM) of the main lobe in line Q’s profile was increased by temporal filtering while the amplitude was reduced, as shown in Fig. 5(d3). All these observations regarding the complex phantoms agree with the discussions about the simple phantom in Fig. 4.
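The two image-quality measures used in Figs. 4–6, the STD inside a zero-background ROI and the FWHM of the main lobe of a line profile, can be computed as in the Python sketch below. The helper names, the square-ROI convention, and the linear interpolation of the half-maximum crossings are our assumptions; the text does not specify how the crossings were located.

```python
import numpy as np

def roi_std(image, row, col, half):
    """STD of pixel values in the (2*half + 1) x (2*half + 1) ROI centered at (row, col)."""
    return float(np.std(image[row - half:row + half + 1, col - half:col + half + 1]))

def fwhm(x, profile):
    """FWHM of the lobe containing the global maximum, with linearly interpolated crossings."""
    peak = int(np.argmax(profile))
    half = profile[peak] / 2.0
    left, right = peak, peak
    while left > 0 and profile[left] > half:
        left -= 1
    while right < len(profile) - 1 and profile[right] > half:
        right += 1
    if profile[left] > half or profile[right] > half:
        raise ValueError("profile does not drop below half maximum on both sides")
    x_left = np.interp(half, [profile[left], profile[left + 1]], [x[left], x[left + 1]])
    x_right = np.interp(half, [profile[right], profile[right - 1]], [x[right], x[right - 1]])
    return float(x_right - x_left)

x = np.linspace(-2.0, 2.0, 4001)                         # mm
main_lobe = np.exp(-x**2 / (2 * 0.2**2))
with_sidelobe = main_lobe + 0.3 * np.exp(-(x - 1.2)**2 / (2 * 0.05**2))
image = np.zeros((10, 10))
image[0:3, 0:3] = np.arange(9).reshape(3, 3)
```

For a Gaussian profile with σ = 0.2 mm the function returns 2√(2 ln 2)σ ≈ 0.471 mm, and a separate sidelobe does not change the result because only the lobe containing the global peak is measured.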

Fig. 5.

Spatial interpolation and temporal filtering’s effects on IR in numerical simulations for two complex phantoms. (a1) Ground truth of a complex initial pressure p0 distribution confined to S1. (a2)–(a4) Reconstructions of the object in (a1) using (a2) UBP, (a3) UBP with SI, and (a4) UBP with TF and SI, respectively. SI, spatial interpolation; TF, temporal filtering. The artifacts in the red-boxed region are caused by spatial aliasing in IR, and they are mainly mitigated by SI. (b1) Comparison of the STDs in the ROIs A–C. (b2) and (b3) Comparisons of the profiles of lines P and Q, respectively, for the three methods. (c1) Ground truth of a complex initial pressure p0 distribution beyond S1. (c2)–(c4) Reconstructions of the object in (c1) using (c2) UBP, (c3) UBP with SI, and (c4) UBP with TF and SI, respectively. The artifacts in the red-boxed region are caused by spatial aliasing in SS and IR, and the artifacts are mitigated by TF and SI. (d1) Comparison of the STDs in the ROIs A–C. (d2) and (d3) Comparisons of the profiles of lines P and Q, respectively, for the three methods. The FWHM of the main lobe at Q was increased from 0.35 mm to 0.48 mm by temporal filtering, while the amplitude decreased from 0.90 to 0.56.

VI. In Vivo Experiment

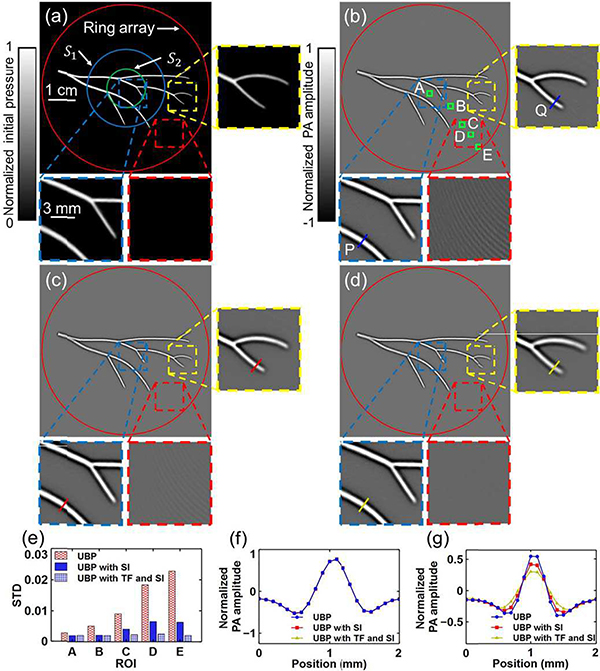

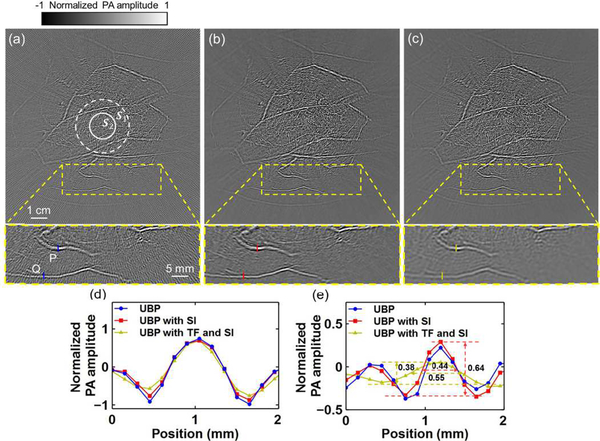

Finally, we applied the spatial interpolation and temporal filtering methods to human breast imaging. The imaging system, as previously reported by Lin et al. [6], employed a 512-element full-ring ultrasonic transducer array (Imasonic, Inc., 110-mm radius, 2.25-MHz central frequency, 95% one-way bandwidth). Based on point source measurements (Appendix B), the cutoff frequency is estimated to be fc ≈ 3.80 MHz. The acquired signals were filtered by a third-order lowpass Butterworth filter and a sinc filter (both with a cutoff frequency of 3.80 MHz). With the speed of sound c = 1.49 mm·μs⁻¹ used here, the one-way Nyquist zone S1 has a radius of R1 = Nλc/(4π) ≈ 16.0 mm, while the two-way Nyquist zone S2 has a radius of 8.0 mm.

Using UBP, we reconstructed a cross-sectional image of a breast, shown in Fig. 6(a). The aliasing artifacts are obvious in the peripheral regions, as shown in Fig. 6(a)’s closeup subset in a yellow-boxed region. After spatial interpolation of the raw data, the reconstructed image is shown in Fig. 6(b). Applying temporal filtering and spatial interpolation, we obtained Fig. 6(c). As can be seen from these subsets, the image quality is improved by spatial interpolation, and the aliasing artifacts are further mitigated by temporal filtering. For comparison, the profiles of lines P and Q for the three images are shown in Fig. 6(d) and (e), respectively. As shown by the numerical simulation, image resolution outside S1 is compromised by both spatial interpolation and temporal filtering. Temporal filtering smooths the profiles, as shown in Fig. 6(d) and (e). Quantitatively, as shown in Fig. 6(e), temporal filtering increases the FWHM of the main lobe of line Q’s profile and reduces the amplitude.

Fig. 6.

Spatial interpolation and temporal filtering’s effects on IR in an in vivo human breast image. (a) Reconstructed image using UBP without either spatial interpolation or temporal filtering, and a closeup subset in the yellow-boxed region. Boundaries of S1 and S2 are shown as white-dashed and white-solid circles, respectively. (b) and (c) Reconstructions of the same region as (a) using (b) UBP with SI, and (c) UBP with TF and SI, respectively. SI, spatial interpolation; TF, temporal filtering. (d) and (e) Comparisons of the profiles of lines P and Q, respectively, for the three methods. The FWHM of the main lobe at Q was increased from 0.44 mm to 0.55 mm by temporal filtering, while the amplitude decreased from 0.64 to 0.38.

VII. Conclusions and Discussion

In this work, we clarified the source of spatial aliasing in PACT through spatiotemporal analysis. We then classified the aliasing into two categories: aliasing in SS and aliasing in IR. Using a circular geometry as an example, we demonstrated two antialiasing methods to remove aliasing artifacts and validated them by numerical and in vivo studies. Spatial interpolation maintains the resolution in the one-way Nyquist zone S1 while mitigating the artifacts caused by aliasing in IR; it extends the aliasing-free zone from S2 to S1. For objects outside S1, spatial interpolation is inaccurate because of spatial aliasing in SS, and thus compromises the resolution. Adding radius-dependent temporal filtering does not affect the resolution inside S1. For objects outside S1, temporal filtering suppresses high-frequency signals to satisfy the temporal Nyquist sampling requirement. Although it reduces the spatial resolution in the affected regions, temporal filtering mitigates aliasing in SS and makes the spatial interpolation accurate, thereby further extending the aliasing-free zone.
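One way to make "radius-dependent" concrete is sketched below. It assumes, as our reading rather than the exact filter used here, that the maximum sampling step at radius r′ is about 2πr′/N and that the filter keeps it below half the shortest wavelength, 2πr′/N < c/(2f). This gives a cutoff fc(r′) = min(fc, Nc/(4πr′)), which equals fc inside S1 and falls as 1/r′ outside it. The function name is hypothetical.

```python
import math


def radius_dependent_cutoff(r_mm: float, n_elements: int = 512,
                            c_mm_per_us: float = 1.49, fc_mhz: float = 3.80) -> float:
    """Hypothetical temporal cutoff (MHz) for an image point at radius r_mm.

    Keeps the system cutoff fc inside S1 and, outside S1, lowers the cutoff so that
    the approximate maximum sampling step 2*pi*r/N stays below half a wavelength c/(2f).
    """
    if r_mm <= 0.0:
        return fc_mhz
    return min(fc_mhz, n_elements * c_mm_per_us / (4 * math.pi * r_mm))


for r in (8.0, 16.0, 32.0, 64.0):
    print(f"r' = {r:4.1f} mm -> cutoff {radius_dependent_cutoff(r):.2f} MHz")
```

The cutoff is continuous at the S1 boundary, so resolution degrades gradually rather than abruptly as a point moves outward.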

The spatiotemporal analysis used here is not limited to circular geometry; it can also be applied to the linear array geometry, as shown in Appendix D. Building on the basic 1D circular and linear geometries, one can analyze 2D geometries, such as the planar and spherical geometries, through decomposition. Moreover, the conclusions also apply to other IR algorithms. For example, spatial interpolation has been used in time reversal methods to generate a grid dense enough for numerical computation [20]–[23]; our analysis shows that it can also mitigate aliasing artifacts in reconstruction. Furthermore, location-dependent temporal filtering can be incorporated into a wave propagation model and used in time reversal and iterative methods to mitigate aliasing in SS.

Acknowledgment

The authors appreciate the close reading of the manuscript by Professor James Ballard.

This work was sponsored by National Institutes of Health Grants R01 CA186567 (NIH Director’s Transformative Research Award), R01 EB016963, U01 NS090579 (NIH BRAIN Initiative), and U01 NS099717 (NIH BRAIN Initiative). L.V.W. has financial interests in Microphotoacoustics, Inc., CalPACT, LLC, and Union Photoacoustic Technologies, Ltd., which did not support this work.

Appendix A

Spectrum Analysis of in equation (17)

To analyze the spatial aliasing in IR, we first analyze the expression , which is the product of a fast-varying variable and a slowly varying variable . Because multiplication is equivalent to convolution in the frequency domain, the multiplication by changes the spectrum. In this appendix, we analyze this spectrum change and show that it is negligible.

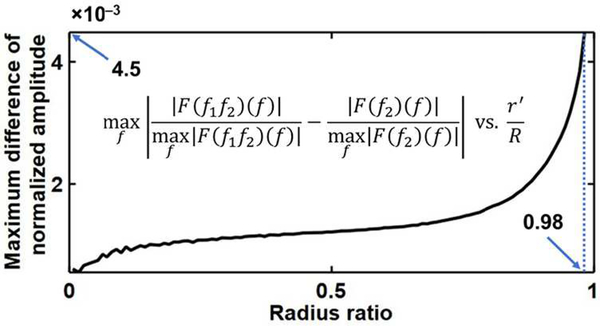

To simplify the problem, we only consider the case with and . In this case, can achieve the maximum sampling step size as n varies (Equation (19)), where spatial aliasing is the most severe. Given an element location r(θ) = R(cos θ, sin θ), we define and . Then, the continuous form of can be expressed as f1(θ)f2(θ). Applying the Fourier transformation to f1(θ)f2(θ) and f2(θ), we obtain F(f1f2)(f) and F(f2)(f), respectively. Here F denotes the Fourier transformation operator. The difference between the two normalized spectra is expressed as , which is a function of r′ ∈ [0, R). We calculated this difference for a full-ring array geometry with a radius R = 110 mm and a point source response measured in experiments, using a speed of sound c = 1.49 mm · μs⁻¹. As shown in Fig. 7, for r′ ∈ [0, 0.98R], which is large enough for this research, we have . When r′ approaches R, a singularity occurs. For a system with a frequency-dependent SNR smaller than , which is almost always true for our experimental cases, the difference between the two normalized spectra is negligible. Therefore, to simplify the problem, we analyze the spatial aliasing in to represent the spatial aliasing in .
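The argument rests on the convolution theorem: multiplying a rapidly varying function by a slowly varying one convolves its spectrum with a narrow kernel, which barely changes the normalized spectrum. The toy sketch below illustrates this with hypothetical functions (a Gaussian-windowed cosine and a 1 + 0.1 cos θ envelope). It is not the computation behind Fig. 7, which uses the array geometry and a measured point source response.

```python
import numpy as np

# Hypothetical toy functions on a periodic angle grid (not the f1, f2 of this appendix).
n = 4096
theta = np.linspace(0.0, 2 * np.pi, n, endpoint=False)
theta0 = np.pi / 2
f2 = np.exp(-((theta - theta0) / 0.05) ** 2) * np.cos(300 * theta)  # fast-varying
f1 = 1.0 + 0.1 * np.cos(theta)                                      # slowly varying


def normalized_spectrum(x: np.ndarray) -> np.ndarray:
    """Magnitude spectrum scaled so that its peak equals one."""
    mag = np.abs(np.fft.fft(x))
    return mag / mag.max()


diff = np.max(np.abs(normalized_spectrum(f1 * f2) - normalized_spectrum(f2)))
print(f"max |difference| between normalized spectra: {diff:.1e}")
```

The spectrum of f1 occupies only angular frequencies 0 and ±1, while that of f2 is tens of bins wide, so the convolution leaves the normalized magnitude almost unchanged.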

Fig. 7.

Difference between the normalized spectra and for r′ ∈ [0,0.98R]. We have for any r′ ∈ [0,0.98R].

Appendix B

Estimation of the Upper Cutoff Frequency

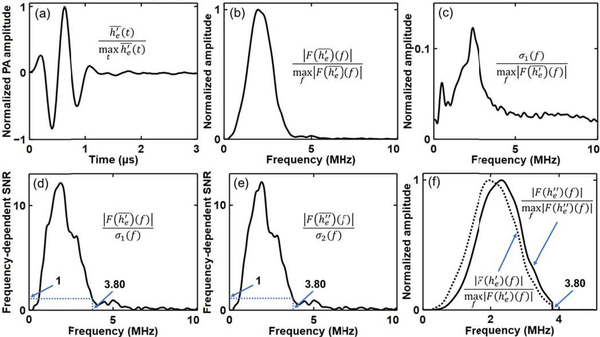

In this appendix, we estimate fc using point source measurements. From these measurements, we estimate the point source response, quantify its amplitude and noise level in the frequency domain, and then calculate the frequency-dependent signal-to-noise ratio (SNR). We choose the upper cutoff frequency fc as the first frequency above the central frequency at which the frequency-dependent SNR decreases to one.

We used the full-ring transducer array to acquire the PA signals generated by a point source located at the array center for J repetitions (J = 100). From each acquisition, we obtained N measurements from N transducer elements (N = 512). Based on Equation (5) and ignoring a constant factor, we can express the point source response as . We denote the measurement of by the n-th element in the j-th acquisition as . In each acquisition, the mean response is denoted as

| (29) |

We can further estimate the point source response using

| (30) |

The normalized value of is shown in Fig. 8(a). Applying the Fourier transformation to and , we obtain and , respectively. The normalized amplitude of is shown in Fig. 8(b).

Fig. 8.

Estimation of the upper cutoff frequency. (a) Normalized estimated point source response . (b) Normalized frequency spectrum of the estimated point source response . (c) Normalized frequency spectrum of the noise . (d) Frequency-dependent SNR . The upper cutoff frequency is estimated to be fc = 3.80 MHz, where the SNR equals one. (e) Frequency-dependent SNR of the derivative of the point source response . The upper cutoff frequency is also estimated to be fc = 3.80 MHz. (f) Normalized frequency spectra of (frequency components higher than 3.80 MHz are removed) and ( and , respectively).

Considering that each pixel value in a reconstructed image is obtained by a weighted summation of the signals from N elements, we can use the noise in (rather than ) to approximate the noise in the reconstructed image. At a frequency of f, the noise STD in can be estimated as

| (31) |

Thus, we can define the frequency-dependent SNR as

| (32) |

The normalized value of σ1(f) and the value of SNR1(f) are shown in Fig. 8(c) and (d), respectively. We choose the cutoff frequency fc to be 3.80 MHz (higher than the central frequency of 2.25 MHz), where SNR1(f) decreases to one for the first time.
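Because Equations (29)–(32) are not reproduced here, the sketch below encodes our reading of the procedure as an assumption: average over the N elements in each acquisition, average over the J acquisitions to estimate the response, take the root-mean-square deviation of the per-acquisition spectra from the mean spectrum as σ(f), and define SNR(f) = |P(f)|/σ(f). The function name, the 40-MHz sampling rate, and the synthetic tone burst are hypothetical.

```python
import numpy as np


def estimate_cutoff(meas: np.ndarray, dt_us: float, f_center_mhz: float) -> float:
    """Estimate the upper cutoff frequency (MHz) from repeated point-source measurements.

    meas: shape (J, N, T), J acquisitions from N elements with T time samples.
    Returns the first frequency above f_center where SNR(f) <= 1 (NaN if none).
    """
    per_acq = meas.mean(axis=1)                       # (J, T): element-averaged signals
    response = per_acq.mean(axis=0)                   # (T,): estimated point-source response
    spec_acq = np.fft.rfft(per_acq, axis=-1)          # (J, F)
    spec_resp = np.fft.rfft(response)                 # (F,)
    sigma = np.sqrt(np.mean(np.abs(spec_acq - spec_resp) ** 2, axis=0))  # noise per frequency
    snr = np.abs(spec_resp) / sigma
    freqs = np.fft.rfftfreq(meas.shape[-1], d=dt_us)  # MHz when dt is in microseconds
    idx = np.nonzero((freqs > f_center_mhz) & (snr <= 1.0))[0]
    return float(freqs[idx[0]]) if idx.size else float("nan")


# Usage with synthetic data (hypothetical 40-MHz sampling, 2.25-MHz tone burst, white noise).
rng = np.random.default_rng(0)
dt = 0.025
t = np.arange(512) * dt
pulse = np.exp(-((t - 6.0) / 0.5) ** 2) * np.cos(2 * np.pi * 2.25 * (t - 6.0))
meas = pulse + 0.5 * rng.standard_normal((20, 16, t.size))
fc_est = estimate_cutoff(meas, dt, f_center_mhz=2.25)
print(f"estimated upper cutoff: {fc_est:.2f} MHz")
```

Averaging over elements before estimating the noise mirrors the remark that the element-averaged signal, not a single element, better approximates the noise in the reconstructed image.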

To observe the effect of temporal differentiation on the spectrum of , we replace in Equations (31) and (32) with , and obtain σ2(f) and SNR2(f), respectively. As can be seen in Fig. 8(e), the upper cutoff frequency obtained from SNR2(f) is the same as that from SNR1(f). In practice, before the UBP reconstruction, we filter the acquired signals with a third-order lowpass Butterworth filter and a sinc filter (both with a cutoff frequency of fc). Thus, the frequency components higher than 3.80 MHz are removed from . The normalized frequency spectra of and are shown in Fig. 8(f). As can be seen, although temporal differentiation shifts the spectrum toward higher frequencies for f < 3.80 MHz, the cutoff frequency of 3.80 MHz does not change.
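The claim that differentiation reweights the passband but cannot recreate content above fc can be checked numerically. The sketch below is an assumption-laden stand-in for the two filters: it applies the third-order Butterworth magnitude 1/sqrt(1 + (f/fc)^6) with zero phase (ignoring the phase of a causal IIR implementation) and models the sinc filter by its rectangular frequency response, a brick-wall cutoff at fc. The sampling rate and test signal are hypothetical.

```python
import numpy as np


def lowpass(signals: np.ndarray, dt_us: float, fc_mhz: float, order: int = 3) -> np.ndarray:
    """Zero-phase lowpass along the last axis: Butterworth-shaped magnitude, then brick-wall at fc."""
    n = signals.shape[-1]
    f = np.fft.rfftfreq(n, d=dt_us)
    gain = 1.0 / np.sqrt(1.0 + (f / fc_mhz) ** (2 * order))
    gain[f > fc_mhz] = 0.0  # ideal cutoff, i.e., the frequency response of a sinc kernel
    return np.fft.irfft(np.fft.rfft(signals, axis=-1) * gain, n=n, axis=-1)


def time_derivative(signals: np.ndarray, dt_us: float) -> np.ndarray:
    """Spectral derivative d/dt: multiply the spectrum by i*2*pi*f."""
    n = signals.shape[-1]
    f = np.fft.rfftfreq(n, d=dt_us)
    return np.fft.irfft(np.fft.rfft(signals, axis=-1) * (2j * np.pi * f), n=n, axis=-1)


def spectrum_stats(x: np.ndarray, dt_us: float, fc_mhz: float) -> tuple[float, float]:
    """Return (fraction of power above fc, power-weighted mean frequency in MHz)."""
    f = np.fft.rfftfreq(x.shape[-1], d=dt_us)
    p = np.abs(np.fft.rfft(x)) ** 2
    return float(p[f > fc_mhz].sum() / p.sum()), float((f * p).sum() / p.sum())


rng = np.random.default_rng(1)
dt, fc = 0.025, 3.80                # hypothetical 40-MHz sampling; fc from this appendix
raw = rng.standard_normal(2048)     # broadband test signal
filtered = lowpass(raw, dt, fc)
deriv = time_derivative(filtered, dt)
frac_f, mean_f = spectrum_stats(filtered, dt, fc)
frac_d, mean_d = spectrum_stats(deriv, dt, fc)
print(f"power above fc: {frac_f:.1e} (filtered), {frac_d:.1e} (differentiated)")
print(f"mean frequency: {mean_f:.2f} MHz -> {mean_d:.2f} MHz")
```

The mean frequency rises after differentiation (the positive shift seen in Fig. 8(f)), while the power above fc stays at round-off level, so the cutoff is unchanged.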

Appendix C

Accuracy of the Sampling Step Size Approximation

This research is based on an approximation of the sampling step size, as shown in Expression (9), especially its maximum value . In this appendix, we discuss the accuracy of this approximation by expressing the sampling step size as a Taylor expansion with the Lagrange remainder. The first-order term is the approximation we use. By analyzing the higher-order terms, we prove that the differences are negligible.

For a full-ring transducer array with a radius R and centered at the origin O, we consider a source point at r′ and two adjacent detection element locations r1 and r2 (Fig. 9(a)). The bisector of the angle formed by vectors r1 and r2 intersects with the ring at . Vectors r1 and r2 form an angle γ; vectors and r′ form an angle φ; while vectors and −r′ form an angle β. Vector forms an angle α′ with the tangential dotted line crossing point . Thus, the angle formed by vectors and can be expressed as . Based on the Law of Cosines in triangles Or1r′ and Or2r′, we have

| (33) |

and

| (34) |

respectively. To simplify the following expression, we define functions

| (35) |

| (36) |

and

| (37) |

Here, we let . Using the Law of Sines in triangle

| (38) |

we have

| (39) |

and

| (40) |

One can prove that

| (41) |

| (42) |

and

| (43) |

Then, based on the Taylor expansion of fk,φ (γ)− fk,φ (−γ), we can express the sampling step size as

| (44) |

Here, we use the sign function

| (45) |

Fig. 9.

Accuracy of the sampling step size approximation. (a) A full-ring transducer array with a radius R (red circle), where two adjacent detection element locations r1 and r2, and a source point location r′ are marked. These and the following locations are also regarded as vectors from the origin O to them. Vectors r1 and r2 form an angle γ, whose bisector intersects with the ring at . Vector forms an angle α′ with the tangential dotted line that is perpendicular to vector . Vectors and r′ form an angle φ, while vectors and −r′ form an angle β. The angle formed by vectors and can be expressed as . This graph is used to estimate the sampling step size |‖r′−r1‖ − ‖r′−r2‖|. (b) and (c) Errors of using to approximate s(r′, θ) for r′ = 0.95 and , respectively.

In practice, it is the maximum sampling step size that affects spatial aliasing. To estimate the maximum sampling step size more precisely, we first estimate the upper bound of |‖r′−r1‖ − ‖r′−r2‖|. For , we have . Thus, the higher-order terms in Equation (44) are nonpositive, and we have

| (46) |

Here we assume that N ≥ 8. For , we have and , which means that both fk,φ(γ) and fk,φ(−γ) belong to . Thus, we have

| (47) |

Here, we use Inequality (46) with . Similarly, for , we have

| (48) |

Combining Inequalities (46)–(48), we obtain the upper bound of the sampling step size

| (49) |

Next, we estimate the lower bound of |‖r′ − r1‖ − ‖r′ − r2‖| for . In fact, for each r′ > 0, there exist N locations of r′ evenly distributed on the circle ‖r′‖ = r′ such that . For each location, we have φ = arccos k, sgn(sin φ) = 1, and g(k, arccos k) = k. In general, singularities may occur in . To avoid the singularities, we assume k ≤ cos γ, which means that . Thus, we have and , leading to

| (50) |

and

| (51) |

respectively. One can validate that is equivalent to . Thus , as a function of k, is monotonically increasing on . Note that is equivalent to , which is valid for any . Thus, for any k ≤ cos γ, we have . Based on the monotonicity of as a function of k, we have

| (52) |

Further, one can prove that is equivalent to , which is valid for any . In summary, for any k ≤ cos γ, we have

| (53) |

and

| (54) |

Combining Inequalities (53) and (54) with Equation (44), we obtain

| (55) |

For any source point r′ = (r′ cos θ, r′ sin θ), the maximum sampling step size can be expressed as

| (56) |

From Inequality (49) we have

| (57) |

According to Inequality (55), there exist at least N values of θ evenly distributed in [0, 2π) such that

| (58) |

As can be seen in Equation (57), using to approximate s(r′, θ) is sufficient for the antialiasing analysis, while in Equation (58) can be used to estimate whether the upper bound of s(r′, θ) needs to be further reduced for the N specific values of θ. For general values of θ, the approximation error can be estimated by numerical simulation.

Next, we analyze these estimations for our numerical simulations and in vivo experiments. In both cases, we use N = 512. The radius constraint, from Inequality (58), is close enough to the intrinsic . For the numerical simulations, we set c = 1.5 mm · μs⁻¹, R = 30 mm, and fc = 4.5 MHz; thus, we have . For the in vivo experiments, we use c = 1.49 mm · μs⁻¹, R = 110 mm, and fc = 3.80 MHz; thus, we have . In both cases, for the N specific values of θ, using to approximate s(r′, θ) is accurate enough. For general values of θ, we use numerical simulation to observe the approximation error for source locations on two circles (in vivo experiment case): one with a radius of ( is the radius of S1), the other with a radius of (98% of R). We plot for and in Fig. 9(b) and (c), respectively. Each red bar marks a choice of θ assumed in Inequality (58). One can see from Fig. 9(b) and (c) that, for general values of θ, further reducing the upper bound of s(r′, θ) is unnecessary.
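A numerical check of this kind is sketched below for the in vivo geometry. It assumes that the maximum of the first-order approximation is 2πr′/N and uses an assumed S1 radius of Nc/(4πfc) ≈ 16.0 mm together with 0.98R; the function names are hypothetical. For each source angle it computes the exact step for every adjacent element pair and reports the relative error of the approximation.

```python
import numpy as np


def max_step_exact(r_src: float, theta_src: float, R: float = 110.0, N: int = 512) -> float:
    """Largest | ||r'-r_n|| - ||r'-r_{n+1}|| | over all adjacent pairs on a full ring."""
    ang = 2 * np.pi * np.arange(N) / N
    elems = R * np.stack([np.cos(ang), np.sin(ang)], axis=1)         # (N, 2) element positions
    src = r_src * np.array([np.cos(theta_src), np.sin(theta_src)])  # source r'
    dist = np.linalg.norm(elems - src, axis=1)
    return float(np.abs(dist - np.roll(dist, -1)).max())            # pairs (n, n+1), wrapping


def max_step_approx(r_src: float, N: int = 512) -> float:
    """Assumed first-order maximum sampling step 2*pi*r'/N."""
    return 2 * np.pi * r_src / N


R, N = 110.0, 512
r1 = N * 1.49 / (4 * np.pi * 3.80)                             # ~16.0 mm (assumed S1 radius)
thetas = np.linspace(0.0, 2 * np.pi / N, 64, endpoint=False)  # one element period suffices
errors = {}
for r in (r1, 0.98 * R):
    approx = max_step_approx(r, N)
    errors[r] = np.array([1.0 - max_step_exact(r, th, R, N) / approx for th in thetas])
    print(f"r' = {r:6.2f} mm: relative error in [{errors[r].min():.1e}, {errors[r].max():.1e}]")
```

Each exact step is the integral, over one element pitch, of the angular derivative of the source-to-element distance, and that derivative never exceeds r′; hence the exact step cannot exceed 2πr′/N, and the reported errors are nonnegative.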

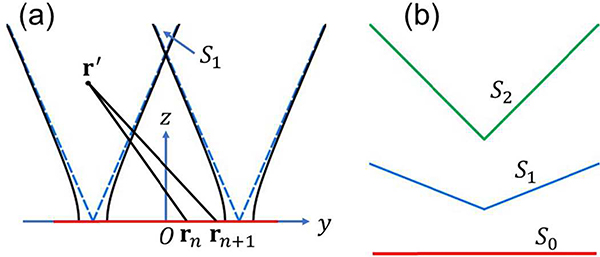

Appendix D

Spatiotemporal Antialiasing for the Linear Array

As another application of the proposed spatiotemporal analysis, we consider a linear array with N (N ≥ 4) point elements, pitch d, and lower cutoff wavelength λc < 2d. Using the linear array center O as the origin, the array (marked as the red line) as one axis, and its normal as the other axis, we construct a Cartesian coordinate system, as shown in Fig. 10(a). For a source point location r′ = (y, z) and two adjacent element locations rn and rn+1, n = 0, …, N − 2, the sampling step size can be expressed as

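$$s_n(\mathbf{r}') = \left|\,\|\mathbf{r}' - \mathbf{r}_n\| - \|\mathbf{r}' - \mathbf{r}_{n+1}\|\,\right|. \qquad (59)$$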

We first consider the sampling step size of

$$s_n(\mathbf{r}') = \frac{\lambda_c}{2}, \qquad (60)$$

which is a hyperbola with a standard form:

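$$\frac{\left(y - \left(n - \frac{N}{2} + 1\right)d\right)^2}{(\lambda_c/4)^2} - \frac{z^2}{d^2/4 - \lambda_c^2/16} = 1. \qquad (61)$$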

For source points lying between the two branches of this hyperbola, the spatial Nyquist criterion

$$s_n(\mathbf{r}') < \frac{\lambda_c}{2} \qquad (62)$$

is satisfied. Based on this geometric picture, the spatial Nyquist criterion for all element pairs reduces to two cases: the leftmost pair (n = 0) and the rightmost pair (n = N − 2). These two hyperbolas with z ≥ 0 are illustrated in Fig. 10(a) as black-solid curves, and their asymptotes as blue-dashed lines. Because the two hyperbolas are symmetric, they intersect the z axis at the same point, while their asymptotes intersect the z axis at

$$z_1 = \frac{(N-2)d}{2}\sqrt{\frac{4d^2}{\lambda_c^2} - 1}. \qquad (63)$$

We approximate the one-way Nyquist zone using

$$S_1 \approx \left\{(y, z) : z \ge z_1 + |y|\sqrt{\frac{4d^2}{\lambda_c^2} - 1}\right\}, \qquad (64)$$

which is the region above the two intersecting asymptotes, as shown in Fig. 10(a). At y = 0, the approximation error reaches its maximum value

| (65) |

Assuming c = 1.5 mm · μs⁻¹, fc = 4.5 MHz, N = 256, and d = 0.25 mm, we have z1 ≈ 35.5 mm, and the maximum approximation error in Equation (65) is negligible in comparison, which confirms the accuracy of using S1 as the one-way Nyquist zone. Further, we approximate the two-way Nyquist zone using

| (66) |

One can prove that spatial interpolation extends S2 to S1, whereas further extending S1 requires temporal filtering. The linear array (with the whole imaging domain S0) and the boundaries of the two zones (S1 and S2) are shown in Fig. 10(b) as red, blue, and green lines, respectively.
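As a check on the linear-array geometry, the sketch below computes z1 from the end-pair hyperbolas (semi-major axis λc/4, since the distance difference is λc/2, and focal half-distance d/2) and the gap between a hyperbola and its asymptote on the z axis. The closed form is our own derivation from this geometry rather than a quotation of the paper's equations; with the parameters above it reproduces z1 ≈ 35.5 mm. The function name is hypothetical.

```python
import math


def linear_array_z1(n_elements: int, pitch_mm: float, c_mm_per_us: float = 1.5,
                    fc_mhz: float = 4.5) -> tuple[float, float]:
    """Return (z1, gap) in mm for a linear array centered at the origin along y.

    z1: where the asymptotes of the end-pair hyperbolas (step size lambda_c/2) cross the z axis.
    gap: z1 minus the point where the hyperbolas themselves cross the z axis.
    """
    lam = c_mm_per_us / fc_mhz
    if lam >= 2 * pitch_mm:
        raise ValueError("requires lambda_c < 2d")
    a = lam / 4                                      # semi-major axis: 2a = lambda_c / 2
    slope = math.sqrt(4 * pitch_mm**2 / lam**2 - 1)  # b / a, the asymptote slope
    half_span = (n_elements - 2) * pitch_mm / 2      # |y| of the end pair's midpoint
    z1 = half_span * slope
    z_hyp = slope * math.sqrt(half_span**2 - a**2)   # hyperbola crossing of the z axis
    return z1, z1 - z_hyp


z1, gap = linear_array_z1(256, 0.25)
print(f"z1 ~ {z1:.1f} mm, asymptote-vs-hyperbola gap at y = 0: {gap:.1e} mm")

# Cross-check: at (0, z1 - gap) the leftmost pair's step size should equal lambda_c / 2.
y0 = -(256 - 1) * 0.25 / 2
y1 = y0 + 0.25
z_h = z1 - gap
step = math.hypot(y0, z_h) - math.hypot(y1, z_h)
print(f"step at hyperbola crossing: {step:.6f} mm (lambda_c/2 = {1.5 / 4.5 / 2:.6f} mm)")
```

The gap is on the order of 10⁻⁴ mm, tiny compared with z1, which is why the asymptotes serve as an accurate boundary for S1.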

Fig. 10.

Spatial aliasing in a linear array. (a) A linear array (marked as the red line) with N elements and pitch d. A Cartesian coordinate system is formed with the array’s center O as the origin, the array direction as the y axis, and its normal vector as the z axis. Two adjacent element locations rn and rn+1, and a source point location r′ are marked. Two symmetric hyperbolas (with sampling step size λc/2) with z ≥ 0 are shown as black-solid curves and their asymptotes as blue-dashed lines. The one-way Nyquist zone S1 is formed by the points above the two asymptotes crossing the z axis. (b) The whole imaging domain S0, the one-way Nyquist zone S1, and the two-way Nyquist zone S2 are outlined with red, blue, and green lines, respectively.

References

- [1] Kruger RA, Kuzmiak CM, Lam RB, Reinecke DR, Del Rio SP, and Steed D, “Dedicated 3D photoacoustic breast imaging,” Med. Phys, vol. 40, no. 11, 2013.

- [2] Tzoumas S, Nunes A, Olefir I, Stangl S, Symvoulidis P, Glasl S, Bayer C, Multhoff G, and Ntziachristos V, “Eigenspectra optoacoustic tomography achieves quantitative blood oxygenation imaging deep in tissues,” Nat. Commun, vol. 7, 2016.

- [3] Deán-Ben XL, Sela G, Lauri A, Kneipp M, Ntziachristos V, Westmeyer GG, Shoham S, and Razansky D, “Functional optoacoustic neuro-tomography for scalable whole-brain monitoring of calcium indicators,” Light Sci. Appl, vol. 5, no. 12, p. e16201, 2016.

- [4] Li L, Zhu L, Ma C, Lin L, Yao J, Wang L, Maslov K, Zhang R, Chen W, Shi J, and others, “Single-impulse panoramic photoacoustic computed tomography of small-animal whole-body dynamics at high spatiotemporal resolution,” Nat. Biomed. Eng, vol. 1, no. 5, p. 0071, 2017.

- [5] Matsumoto Y, Asao Y, Yoshikawa A, Sekiguchi H, Takada M, Furu M, Saito S, Kataoka M, Abe H, Yagi T, and others, “Label-free photoacoustic imaging of human palmar vessels: a structural morphological analysis,” Sci. Rep, vol. 8, no. 1, p. 786, 2018.

- [6] Lin L, Hu P, Shi J, Appleton CM, Maslov K, Li L, Zhang R, and Wang LV, “Single-breath-hold photoacoustic computed tomography of the breast,” Nat. Commun, vol. 9, no. 1, p. 2352, 2018.

- [7] Li L, Shemetov AA, Baloban M, Hu P, Zhu L, Shcherbakova DM, Zhang R, Shi J, Yao J, Wang LV, and others, “Small near-infrared photochromic protein for photoacoustic multi-contrast imaging and detection of protein interactions in vivo,” Nat. Commun, vol. 9, no. 1, p. 2734, 2018.

- [8] Wu Z, Li L, Yang Y, Hu P, Li Y, Yang S-Y, Wang LV, and Gao W, “A microrobotic system guided by photoacoustic computed tomography for targeted navigation in intestines in vivo,” Sci. Robot, vol. 4, no. 32, p. eaax0613, 2019.

- [9] Wang K, Su R, Oraevsky AA, and Anastasio MA, “Investigation of iterative image reconstruction in three-dimensional optoacoustic tomography,” Phys. Med. Biol, vol. 57, no. 17, p. 5399, 2012.

- [10] Huang Chao, Wang Kun, Nie Liming, Wang LV, and Anastasio MA, “Full-wave iterative image reconstruction in photoacoustic tomography with acoustically inhomogeneous media,” IEEE Trans. Med. Imaging, vol. 32, no. 6, pp. 1097–1110, June 2013.

- [11] Mitsuhashi K, Poudel J, Matthews TP, Garcia-Uribe A, Wang LV, and Anastasio MA, “A forward-adjoint operator pair based on the elastic wave equation for use in transcranial photoacoustic computed tomography,” SIAM J. Imaging Sci, vol. 10, no. 4, pp. 2022–2048, 2017.

- [12] Treeby BE and Cox BT, “k-Wave: MATLAB toolbox for the simulation and reconstruction of photoacoustic wave fields,” J. Biomed. Opt, vol. 15, no. 2, p. 021314, 2010.

- [13] Mitsuhashi K, Wang K, and Anastasio MA, “Investigation of the farfield approximation for modeling a transducer’s spatial impulse response in photoacoustic computed tomography,” Photoacoustics, vol. 2, no. 1, pp. 21–32, 2014.

- [14] Han Y, Ntziachristos V, and Rosenthal A, “Optoacoustic image reconstruction and system analysis for finite-aperture detectors under the wavelet-packet framework,” J. Biomed. Opt, vol. 21, no. 1, p. 016002, 2016.

- [15] Arridge S, Beard P, Betcke M, Cox B, Huynh N, Lucka F, Ogunlade O, and Zhang E, “Accelerated high-resolution photoacoustic tomography via compressed sensing,” ArXiv Prepr. ArXiv160500133, 2016.

- [16] Han Y, Ding L, Ben XLD, Razansky D, Prakash J, and Ntziachristos V, “Three-dimensional optoacoustic reconstruction using fast sparse representation,” Opt. Lett, vol. 42, no. 5, pp. 979–982, 2017.

- [17] Schoeder S, Olefir I, Kronbichler M, Ntziachristos V, and Wall W, “Optoacoustic image reconstruction: the full inverse problem with variable bases,” Proc. R. Soc. A, vol. 474, no. 2219, p. 20180369, 2018.

- [18] Matthews TP, Poudel J, Li L, Wang LV, and Anastasio MA, “Parameterized Joint Reconstruction of the Initial Pressure and Sound Speed Distributions for Photoacoustic Computed Tomography,” SIAM J. Imaging Sci, vol. 11, no. 2, pp. 1560–1588, 2018.

- [19] Xu Y and Wang LV, “Time reversal and its application to tomography with diffracting sources,” Phys. Rev. Lett, vol. 92, no. 3, p. 033902, 2004.

- [20] Treeby BE, Zhang EZ, and Cox B, “Photoacoustic tomography in absorbing acoustic media using time reversal,” Inverse Probl, vol. 26, no. 11, p. 115003, 2010.

- [21] Cox BT and Treeby BE, “Artifact trapping during time reversal photoacoustic imaging for acoustically heterogeneous media,” IEEE Trans. Med. Imaging, vol. 29, no. 2, pp. 387–396, 2010.

- [22] Treeby BE, Jaros J, and Cox BT, “Advanced photoacoustic image reconstruction using the k-Wave toolbox,” in Photons Plus Ultrasound: Imaging and Sensing 2016, 2016, vol. 9708, p. 97082P.

- [23] Ogunlade O, Connell JJ, Huang JL, Zhang E, Lythgoe MF, Long DA, and Beard P, “In vivo three-dimensional photoacoustic imaging of the renal vasculature in preclinical rodent models,” Am. J. Physiol.-Ren. Physiol., vol. 314, no. 6, pp. F1145–F1153, 2017.

- [24] Xu M and Wang LV, “Universal back-projection algorithm for photoacoustic computed tomography,” Phys. Rev. E, vol. 71, no. 1, p. 016706, 2005.

- [25] Song L, Maslov KI, Bitton R, Shung KK, and Wang LV, “Fast 3-D dark-field reflection-mode photoacoustic microscopy in vivo with a 30-MHz ultrasound linear array,” J. Biomed. Opt, vol. 13, no. 5, p. 054028, 2008.

- [26] Deán-Ben XL and Razansky D, “Portable spherical array probe for volumetric real-time optoacoustic imaging at centimeter-scale depths,” Opt. Express, vol. 21, no. 23, pp. 28062–28071, 2013.

- [27] Pramanik M, “Improving tangential resolution with a modified delay-and-sum reconstruction algorithm in photoacoustic and thermoacoustic tomography,” JOSA A, vol. 31, no. 3, pp. 621–627, 2014.

- [28] Xu Y, Xu M, and Wang LV, “Exact frequency-domain reconstruction for thermoacoustic tomography. II. Cylindrical geometry,” IEEE Trans. Med. Imaging, vol. 21, no. 7, pp. 829–833, 2002.

- [29] Wang LV and Wu H, Biomedical optics: principles and imaging. John Wiley & Sons, 2012.

- [30] Zhou Y, Yao J, and Wang LV, “Tutorial on photoacoustic tomography,” J. Biomed. Opt, vol. 21, no. 6, p. 061007, 2016.

- [31] Deserno M, “How to generate equidistributed points on the surface of a sphere,” Polym. Ed, p. 99, 2004.