Abstract

Background:

Since the late 1990s, US federal regulations have required registration of certain clinical trials on a public registry, ClinicalTrials.gov, and, for some of these trials, submission of results. The quality of a submission to ClinicalTrials.gov determines how long its Quality Control review takes, whether it passes that review (success) and how many review cycles it takes for the study to be posted. The success rate for results submitted to ClinicalTrials.gov is under 25%. To increase the success of investigators’ submissions and to meet registration and results reporting requirements in a timely fashion, the Johns Hopkins ClinicalTrials.gov Program implemented a policy of reviewing all studies for quality before submission. To standardize this review, minimize inter-reviewer variability and provide a tool for training new staff, we developed a checklist.

Methods:

Program staff studied the major comments received from ClinicalTrials.gov and reviewed the Protocol Registration and Results System review criteria for registration and results to understand fully how to prepare studies that pass Quality Control review. The key themes were summarized as bulleted points and incorporated into a checklist that Program staff use to review studies before submission.

Results:

In the period before the introduction of the checklist, 107 studies were submitted for registration with a 45% (48/107) success rate, a mean (SD) of 18.90 (26.72) days in review and 1.74 (0.78) submission cycles. Results for 44 records were submitted with an 11% (5/44) success rate, 115.80 (129.33) days in review and 2.23 (0.68) submission cycles.

In the period after the introduction of the checklist, 104 studies were submitted for registration with an 80% (83/104) success rate, 2.12 (3.85) days in review and 1.22 (0.46) submission cycles. Results for 22 records were submitted with a 41% (9/22) success rate, 39.27 (19.84) days in review and 1.64 (0.58) submission cycles.

Of the 44 results submitted before the checklist, 30 were Applicable or Probable Applicable Clinical Trials, of which 10% (3/30) were posted within 30 days of submission, as the National Institutes of Health is required to do. Of the 22 results submitted after the checklist, 17 were Applicable or Probable Applicable Clinical Trials, of which 47% (8/17) were posted within 30 days of submission.

All of these pre- versus post-checklist differences were statistically significant improvements.

Conclusion:

The checklist has substantially improved our success rate and contributed to a reduction in review days and in the number of review cycles. If Academic Medical Centers and industry adopt or create a similar checklist to review their studies before submission, the quality of submissions can be improved and the duration of review minimized.

Keywords: Checklist, clinical trials, ClinicalTrials.gov, success rate, quality, registration, results

Background

The Food and Drug Administration Modernization Act of 1997 required the US Department of Health and Human Services to establish a registry of clinical trial information for both federally and privately funded experimental trials for serious or life-threatening diseases.1 This requirement led to the creation of ClinicalTrials.gov in 2000. ClinicalTrials.gov is an online registry and results database of clinical trial information operated by the National Library of Medicine (NLM) at the National Institutes of Health (NIH). It provides an avenue for reporting the results of all registered trials, including failed or negative trials, the majority of which are never published.2 Making negative outcomes available to researchers helps avoid wasting resources on experiments that have already failed in the same way. In 2005, the International Committee of Medical Journal Editors instituted a policy requiring clinical trial registration in a public registry to address the problem of selective trial reporting.3 The Food and Drug Administration Amendments Act of 2007 expanded the Modernization Act to require registration of more studies that meet certain criteria and submission of results information for those studies determined to be Applicable Clinical Trials.4 The Act also requires investigators to submit the results of these studies within one year of completion and NIH to post these results within 30 calendar days of submission.
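The two statutory clocks described above reduce to simple date arithmetic. The Python sketch below is illustrative only: the function names are hypothetical, and it assumes that "within one year of completion" means the same calendar date one year later (with February 29 mapped to February 28) and that the 30-day posting window counts calendar days from the submission date. It does not model the regulation's detailed rules, such as which completion date applies.

```python
from datetime import date, timedelta


def add_one_year(d: date) -> date:
    """Same calendar date one year later (assumption: Feb 29 maps to Feb 28)."""
    try:
        return d.replace(year=d.year + 1)
    except ValueError:
        # Only February 29 raises here, because the following year is not a leap year.
        return d.replace(year=d.year + 1, day=28)


def results_due_date(completion: date) -> date:
    """Hypothetical helper: last day to submit results, one year after completion."""
    return add_one_year(completion)


def posting_deadline(submission: date) -> date:
    """Hypothetical helper: last day for posting, 30 calendar days after submission."""
    return submission + timedelta(days=30)


if __name__ == "__main__":
    completed = date(2018, 3, 15)   # hypothetical completion date
    submitted = date(2019, 2, 20)   # hypothetical results submission date
    print("Results due:", results_due_date(completed))                      # 2019-03-15
    print("Submitted on time:", submitted <= results_due_date(completed))  # True
    print("Post by:", posting_deadline(submitted))                         # 2019-03-22
```

Using `timedelta(days=30)` counts calendar days, so weekends and holidays are included, which matches the "30 calendar days" wording.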

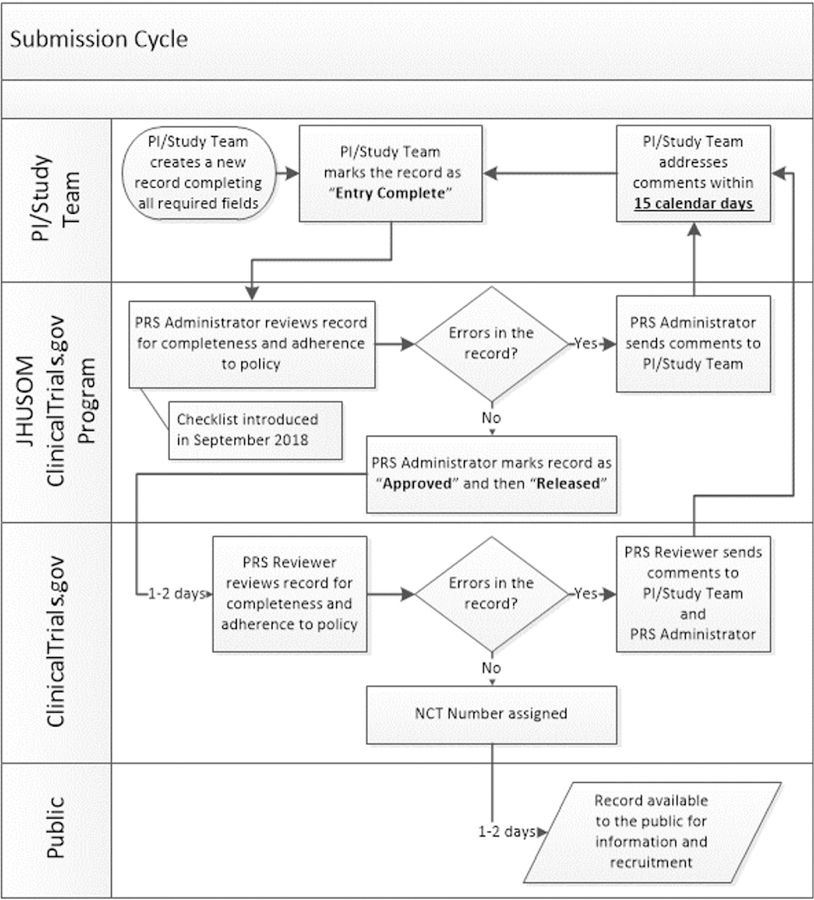

Studies are submitted to ClinicalTrials.gov through the Protocol Registration and Results System. Each study (termed a record) undergoes a Quality Control (QC) review. If the submission passes QC review, the record is posted on the ClinicalTrials.gov public website; if it does not, the record is returned to the investigator with major comments. The time from submission to either posting on the public site or return of the record to the investigator with major comments is termed a submission cycle (Figure 1). A record that passes QC review the first time it is reviewed is counted as a success. According to a recent analysis, the overall success rate for results submitted to ClinicalTrials.gov from May 2017 to September 2018 was less than 25%: industry records had a success rate of 31% (862 of 2780 submissions) and non-industry records a success rate of 17% (582 of 3486 submissions).5
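The definitions above (one submission cycle per QC review; success only when the first review passes) can be made concrete with a small Python sketch. The `Record` class, its field names and the placeholder record IDs are hypothetical and not part of the Protocol Registration and Results System. The last lines pool the industry and non-industry counts quoted above to show how the overall rate falls below 25%.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    """A record and the outcome of each QC review it received (hypothetical model)."""
    record_id: str
    # One entry per submission cycle: True if that QC review passed and the record
    # was posted, False if it was returned with major comments.
    qc_outcomes: list[bool] = field(default_factory=list)

    @property
    def submission_cycles(self) -> int:
        return len(self.qc_outcomes)

    @property
    def success(self) -> bool:
        # Success means the record passed QC review the first time it was reviewed.
        return bool(self.qc_outcomes) and self.qc_outcomes[0]


def success_rate(records: list[Record]) -> float:
    """Share of records that passed QC review on first review."""
    return sum(r.success for r in records) / len(records)


def pooled_rate(successes: list[int], totals: list[int]) -> float:
    """Overall success rate across groups: total successes over total submissions."""
    return sum(successes) / sum(totals)


if __name__ == "__main__":
    records = [
        Record("example-1", [True]),                # posted after one cycle: a success
        Record("example-2", [False, True]),         # returned once, posted on the 2nd cycle
        Record("example-3", [False, False, True]),  # posted on the 3rd cycle
    ]
    print([r.submission_cycles for r in records])   # [1, 2, 3]
    print(success_rate(records))
    # Industry (862/2780) and non-industry (582/3486) results submissions, pooled.
    print(f"{pooled_rate([862, 582], [2780, 3486]):.1%}")  # 23.0%
```

Pooling counts rather than averaging the two percentages weights each group by its number of submissions, which is why the combined figure (1444 of 6266) sits closer to the larger non-industry group.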

Figure 1. ClinicalTrials.gov Trial Registration Submission Cycle.

JHUSOM: Johns Hopkins University School of Medicine; NCT: National Clinical Trial; PI: Principal Investigator; PRS: Protocol Registration and Results System

The Johns Hopkins University School of Medicine is committed to upholding its promise to trial participants that study results will not only contribute to scientific knowledge but will also be made available to participants. Our institution also believes that registering trials and reporting their results on ClinicalTrials.gov demonstrates responsible stewardship of research funds. These commitments led to the creation of the Johns Hopkins ClinicalTrials.gov Program in June 2016. The Program’s primary function is to assist investigators with registering trials and reporting their results. To improve the quality of submissions to ClinicalTrials.gov, the Program created a comprehensive checklist for reviewing studies before submission.

Methods

Within the Protocol Registration and Results System, the Responsible Party is the role that determines who submits a study to ClinicalTrials.gov for posting. The role can be held by the host institution or organization, or delegated by the institution to the Principal Investigator (PI). Before our Program was created, the Responsible Party role was delegated to PIs. The Johns Hopkins ClinicalTrials.gov Program found that these submissions often exceeded statutory timelines, were not in a format that would pass QC review, or both. As a result, most records were returned with major comments and the overall success rate was poor.6 Furthermore, QC review has become more rigorous, with more stringent measures to ensure that what is posted to the public is a comprehensible summary of the study. We noticed that some formats that passed QC review before 2018 now lead to records being returned with major comments.

To improve institutional compliance, the Vice Dean of Clinical Investigation, on the recommendation of the Johns Hopkins ClinicalTrials.gov Program, implemented a policy mandating that the institution be designated as the Responsible Party. This allows our Program staff (two full-time Clinical Research Compliance Specialists) to review records for errors or inconsistencies and ensure entries are in the NIH-preferred format before submission. To standardize our review, minimize inter-reviewer variability and provide a tool for training new staff, we developed a checklist (see supplementary material) in September 2018.

Our Program staff reviewed a compilation of major comments we previously received from the NIH to fully understand how to prepare studies to pass QC review. NIH has recently made available a compilation of major comments.7 We also reviewed NIH guidance materials for registration and results submission.8 The key themes from our major comments compilation and the NIH review criteria were summarized into bulleted points covering every section of a record. Our institutional policies were added to the checklist for internal consistency, easier workflow and management of records (Figure 2). The two full-time Clinical Research Compliance Specialists carefully reviewed each study using this checklist before submission to ClinicalTrials.gov. The checklist is continually updated with any new major comments received from NIH.
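The workflow above (bulleted review points grouped by record section, drawn from NIH review criteria, our own major comments and institutional policy, and extended as new major comments arrive) can be modeled as a small data structure. The Python sketch below is hypothetical: the item wording, section names and helper functions are placeholders for illustration, not the contents of the actual checklist, which is in the supplementary material.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChecklistItem:
    section: str   # record section the review point applies to
    text: str      # the bulleted review point
    source: str    # "NIH review criteria", "major comment" or "institutional policy"


# Placeholder items for illustration only; not taken from the actual checklist.
CHECKLIST = [
    ChecklistItem("Outcome Measures", "Time frame is specific", "NIH review criteria"),
    ChecklistItem("Outcome Measures", "Unit of measure matches the reported data", "major comment"),
    ChecklistItem("Sponsor/Collaborators", "Responsible Party is the institution", "institutional policy"),
]


def review(findings: dict[str, bool]) -> list[ChecklistItem]:
    """Return the checklist items a record fails; an empty list means ready to submit.

    `findings` maps item text to True if the reviewer confirmed the point. Items the
    reviewer did not record are treated as failing, so nothing is skipped silently.
    """
    return [item for item in CHECKLIST if not findings.get(item.text, False)]


def add_item_from_major_comment(section: str, text: str) -> None:
    """Keep the checklist current by turning a new NIH major comment into an item."""
    CHECKLIST.append(ChecklistItem(section, text, "major comment"))


if __name__ == "__main__":
    open_items = review({
        "Time frame is specific": True,
        "Unit of measure matches the reported data": False,
        "Responsible Party is the institution": True,
    })
    for item in open_items:
        print(f"[{item.section}] {item.text}")
    # [Outcome Measures] Unit of measure matches the reported data
```

Treating unrecorded items as failures is a deliberate choice in this sketch: a record is only cleared for submission when every point has been explicitly checked, which is one way to reduce inter-reviewer variability.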

Figure 2. Front page of Johns Hopkins ClinicalTrials.gov Program record review checklist.

ACT: Applicable Clinical Trial; CRMS: Clinical Research Management System; IDE: Investigational Device Exemption; IND: Investigational New Drug; IRB: Institutional Review Board; JHU: Johns Hopkins University; NCT: National Clinical Trial; NIH: National Institutes of Health; pACT: Probable Applicable Clinical Trial; PI: Principal Investigator; TBD: To be determined

Johns Hopkins University has a number of entities with individual Protocol Registration and Results System accounts. This checklist was implemented for the Johns Hopkins School of Medicine and School of Nursing account only.

We reviewed all submissions for registration and results posting made in the year before the introduction of the checklist (September 2017 – August 2018) and in the year after (September 2018 – August 2019). Data were analyzed with Stata IC 15.1.

Records are identified in the Protocol Registration and Results System as either Applicable or Probable Applicable Clinical Trials. We combined these two categories because they have the same statutory requirements for results submission and posting. Records not identified as Applicable or Probable Applicable Clinical Trials are not subject to these statutory requirements but are often submitted on a voluntary basis. These records, known as Non-Applicable Clinical Trials, are not prioritized with respect to review time, although the review criteria are the same.
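The partitioning described in the last two paragraphs (two 12-month periods, with Applicable and Probable Applicable Clinical Trials pooled because they share statutory requirements) could be scripted as follows for results submissions. This is a hypothetical Python sketch, not the authors' Stata workflow; the `Submission` fields and the category labels are assumptions, and "posted within 30 days" is taken to mean posted no more than 30 calendar days after submission.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Comparison periods from the Methods.
PERIODS = {
    "pre": (date(2017, 9, 1), date(2018, 8, 31)),
    "post": (date(2018, 9, 1), date(2019, 8, 31)),
}
# ACTs and pACTs share statutory requirements, so they are pooled.
STATUTORY = {"ACT", "pACT"}


@dataclass
class Submission:
    submitted: date
    category: str                   # "ACT", "pACT" or "non-ACT" (hypothetical labels)
    first_review_passed: bool
    days_to_posting: Optional[int]  # None if the record has not been posted


def period_of(d: date) -> Optional[str]:
    for name, (start, end) in PERIODS.items():
        if start <= d <= end:
            return name
    return None


def summarize(submissions: list[Submission]) -> dict:
    groups = defaultdict(list)
    for s in submissions:
        period = period_of(s.submitted)
        if period is None:
            continue  # outside both comparison windows
        group = "ACT/pACT" if s.category in STATUTORY else "non-ACT"
        groups[(period, group)].append(s)

    summary = {}
    for (period, group), items in groups.items():
        n = len(items)
        row = {"n": n, "success_rate": sum(s.first_review_passed for s in items) / n}
        if group == "ACT/pACT":
            # The 30-day posting requirement applies only to statutory records.
            posted = sum(s.days_to_posting is not None and s.days_to_posting <= 30 for s in items)
            row["posted_within_30"] = posted / n
        summary[(period, group)] = row
    return summary
```

Keying the summary by `(period, group)` yields one row per cell of the pre/post by ACT/Non-ACT layout used in Table 1.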

Results

In the period before the introduction of the checklist, 107 studies were submitted for registration with a 45% (48/107) success rate, a mean (SD) of 18.90 (26.72) days in review and 1.74 (0.78) submission cycles (Table 1). Results for 44 records were submitted with an 11% (5/44) success rate, 115.80 (129.33) days in review and 2.23 (0.68) submission cycles.

Table 1. Success rates, duration of reviews and submission cycles for pre- and post-checklist submissions to ClinicalTrials.gov

| | Pre Checklist | Post Checklist | p-value |
|---|---|---|---|
| Registration, N | 107 | 104 | |
| Success rate (%) | 44.86 | 79.81 | < 0.001 |
| Submission cycles, mean (SD) | 1.74 (0.78) | 1.22 (0.46) | < 0.0001 |
| Total days in review, mean (SD) | 18.90 (26.72) | 2.12 (3.85) | < 0.0001 |
| Results – ACTs, N | 30 | 17 | |
| Success rate (%) | 10.00 | 35.29 | 0.054 |
| Submission cycles, mean (SD) | 2.17 (0.59) | 1.71 (0.59) | 0.0152 |
| Total days in review, mean (SD) | 70.10 (31.03) | 36.35 (16.12) | 0.0001 |
| Posted within 30 days (%)ᵃ | 10.00 | 47.06 | 0.009 |
| Results – Non-ACTs, N | 14 | 5 | |
| Success rate (%) | 14.29 | 60.00 | 0.084 |
| Submission cycles, mean (SD) | 2.36 (0.84) | 1.40 (0.55) | 0.0310 |
| Total days in review, mean (SD) | 213.71 (195.03) | 49.20 (29.44) | 0.0263 |
| Results – Overall, N | 44 | 22 | |
| Success rate (%) | 11.36 | 40.91 | 0.010 |
| Submission cycles, mean (SD) | 2.23 (0.68) | 1.64 (0.58) | 0.0011 |
| Total days in review, mean (SD) | 115.80 (129.33) | 39.27 (19.84) | < 0.0001 |

ACTs: Applicable and Probable Applicable Clinical Trials (combined); Non-ACTs: Non-Applicable Clinical Trials.

ᵃ Applies to Applicable or Probable Applicable Clinical Trials only.
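Because the ACT and Non-ACT groups are disjoint and together make up all results submissions, the Overall rows of Table 1 should follow from the subgroup rows. The Python sketch below checks this with the standard formula for combining group means and sample standard deviations. It uses only the rounded summary values printed in the table, so small discrepancies from rounding are expected; the `pool` helper is our own illustration, not part of the original analysis.

```python
from math import sqrt


def pool(groups):
    """Combine (n, mean, sample SD) summaries of disjoint groups into one summary.

    The pooled variance adds the within-group sums of squares, (n_i - 1) * sd_i**2,
    to the between-group terms, n_i * (mean_i - mean)**2, and divides by N - 1.
    """
    n = sum(k for k, _, _ in groups)
    mean = sum(k * m for k, m, _ in groups) / n
    ss = sum((k - 1) * sd ** 2 + k * (m - mean) ** 2 for k, m, sd in groups)
    return n, mean, sqrt(ss / (n - 1))


# (n, mean, SD) for the ACT and Non-ACT rows of Table 1.
days_pre = pool([(30, 70.10, 31.03), (14, 213.71, 195.03)])
days_post = pool([(17, 36.35, 16.12), (5, 49.20, 29.44)])
cycles_pre = pool([(30, 2.17, 0.59), (14, 2.36, 0.84)])
cycles_post = pool([(17, 1.71, 0.59), (5, 1.40, 0.55)])

if __name__ == "__main__":
    for label, (n, m, sd) in [
        ("Days in review, pre", days_pre),
        ("Days in review, post", days_post),
        ("Submission cycles, pre", cycles_pre),
        ("Submission cycles, post", cycles_post),
    ]:
        print(f"{label}: N = {n}, mean (SD) = {m:.2f} ({sd:.2f})")
```

Run as written, it gives 115.79 (129.33) and 39.27 (19.84) days in review and 2.23 (0.68) and 1.64 (0.58) submission cycles before and after the checklist, in agreement with the Overall rows to within rounding of the subgroup values. The success rates reconcile the same way: 3 + 2 = 5 of 44 (11.36%) before and 6 + 3 = 9 of 22 (40.91%) after.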

In the period after the introduction of the checklist, 104 studies were submitted for registration with an 80% (83/104) success rate, 2.12 (3.85) days in review and 1.22 (0.46) submission cycles. Results for 22 records were submitted with a 41% (9/22) success rate, 39.27 (19.84) days in review and 1.64 (0.58) submission cycles.

Of the 44 results submitted before the checklist, 30 were Applicable or Probable Applicable Clinical Trials, of which 10% (3/30) were posted within 30 days of submission, as NIH is required to do. Of the 22 results submitted after the checklist, 17 were Applicable or Probable Applicable Clinical Trials, of which 47% (8/17) were posted within 30 days of submission. More results were submitted in the year before the checklist because of a backlog of studies with unreported results from prior years.

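These pre- versus post-checklist differences were statistically significant improvements (Table 1), with the exception of the success rates for results submissions when Applicable Clinical Trials (p = 0.054) and Non-Applicable Clinical Trials (p = 0.084) were analyzed separately.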
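Table 1 reports p-values, but the article does not state which tests produced them. For comparing proportions such as success rates with counts this small, one common choice is Fisher's exact test; the Python sketch below implements the two-sided version from first principles (SciPy's `scipy.stats.fisher_exact` is an established alternative). It is offered as an illustration only, not as a reproduction of the authors' Stata analysis, and its output for the Table 1 counts is not claimed to match the reported p-values.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are the two periods (pre, post); columns are (success, no success).
    With all margins fixed, the count in the top-left cell follows a
    hypergeometric distribution; the p-value sums the probabilities of every
    table that is no more likely than the observed one.
    """
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x: int) -> float:
        # Probability of x successes in row 1, given the fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = prob(a)
    # Feasible values of the top-left cell given the margins.
    lo, hi = max(0, col1 - row2), min(row1, col1)
    probs = [prob(x) for x in range(lo, hi + 1)]
    # A tiny relative tolerance keeps tables tied with the observed one from
    # being dropped because of floating-point error.
    p = sum(q for q in probs if q <= p_observed * (1 + 1e-7))
    return min(1.0, p)


if __name__ == "__main__":
    # Overall results success rate, pre vs post: 5 of 44 versus 9 of 22 (Table 1).
    p = fisher_exact_two_sided(5, 44 - 5, 9, 22 - 9)
    print(f"Fisher's exact test, two-sided p = {p:.3f}")
```

The same function applies to the other proportion rows, for example posting within 30 days (3 of 30 versus 8 of 17); comparisons of means such as days in review would need a different test.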

Conclusion

From the start of our Program in June 2016, our efforts to improve our success rate by learning from previous major comments achieved only a success rate below the national average. The checklist has substantially improved our success rate and contributed to a reduction in review days and in the number of review cycles. In October 2019, NIH announced that, beginning in January 2020, results for Applicable Clinical Trials would be posted within 30 calendar days of submission whether or not the submission had passed QC review.9 This change is intended to meet the federal requirement to post these results within 30 calendar days of submission. Deb Zarin, former director of ClinicalTrials.gov, raised questions about this initiative. Will posting results that did not pass QC review shame Academic Medical Centers into submitting higher-quality results? Will the public come to distrust results posted on ClinicalTrials.gov now that these results will be displayed along with major comments explaining why the submission did not pass QC review?10 As our institution focuses on producing quality submissions, this checklist has proven to be a highly effective tool. We did not formally measure the time spent using the checklist; in our experience, however, the time it takes to review studies with the checklist is inconsequential compared with the resources consumed by multiple submission cycles and responses to major comments. If Academic Medical Centers, industry and the network of clinical trial registries adopt or create a similar checklist, the quality of submissions can be improved and the duration of review minimized.

Supplementary Material

Acknowledgments

Funding

Research reported in this publication was supported by the National Center for Advancing Translational Sciences of the National Institutes of Health under Award Number (UL1TR003098). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Footnotes

Conflict of Interest

The authors have no conflicts of interest to declare.

References

- 1. Food and Drug Administration Modernization Act of 1997. Public Law 105–115. https://www.govinfo.gov/content/pkg/PLAW-105publ115/pdf/PLAW-105publ115.pdf#page=17 (1997, accessed 18 November 2019).

- 2. Hwang TJ, Carpenter D, Lauffenburger JC, et al. Failure of Investigational Drugs in Late-Stage Clinical Development and Publication of Trial Results. JAMA Intern Med 2016; 176(12):1826–1833.

- 3. De Angelis C, Drazen JM, Frizelle FA, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. N Engl J Med 2004; 351:1250–1251.

- 4. Food and Drug Administration Amendments Act of 2007. Public Law 110–85. https://www.govinfo.gov/content/pkg/PLAW-110publ85/pdf/PLAW-110publ85.pdf#page=82 (2007, accessed 18 November 2019).

- 5. Zarin DA, Fain KM, Dobbins HD, et al. 10-Year Update on Study Results Submitted to ClinicalTrials.gov. N Engl J Med 2019; 381:1966–1974.

- 6. Keyes A, Mayo-Wilson E, Atri N, et al. Time From Submission of Johns Hopkins University Trial Results to Posting on ClinicalTrials.gov. JAMA Intern Med 2020; 180(2):317–319.

- 7. ClinicalTrials.gov PRS. ClinicalTrials.gov Major Comments, https://prsinfo.clinicaltrials.gov/MajorComments.html (2019, accessed 26 January 2020).

- 8. U.S. National Library of Medicine. Support Materials. https://clinicaltrials.gov/ct2/manage-recs/resources#ReviewCriteria (2020, accessed 30 November 2019).

- 9. U.S. National Library of Medicine. Updated Quality Control and Posting Procedures Webinar, https://clinicaltrials.gov/ct2/manage-recs/present#QCPostingWebinar (2020, accessed 30 November 2019).

- 10. Zarin DA. The Culture of Trial Results Reporting at Academic Medical Centers. JAMA Intern Med 2020; 180(2):319–320.
