Abstract

Introduction

The outbreak of a deadly infectious disease caused by a newly discovered coronavirus (SARS-CoV-2) in December 2019 has shown no sign of slowing or stopping. The disease has spread across the globe, with a death toll of nearly 700,000 by the start of August 2020, and the number is expected to rise significantly. In the absence of a thoroughly tested and approved vaccine, the onus lies primarily on adherence to standard operating procedures and on timely detection and isolation of infected persons. Detection of SARS-CoV-2 has been one of the core concerns in the fight against this pandemic. Testing needs to scale with the outbreak, yet with conventional PCR testing most countries have struggled to close the gap between the scale of the outbreak and the scale of testing.

Method

One way of scaling up testing is to shift to a rigorous computational model driven by deep neural networks, as proposed in this paper. The proposed model is a non-contact method of determining whether a subject is infected, based on chest radiographs, one of the most widely used imaging techniques for clinical diagnosis owing to its fast imaging and low cost. The dataset used in this work contains 1428 chest radiographs of confirmed COVID-19 positive cases, common bacterial pneumonia cases, and healthy cases (no infection). We explored the pre-trained VGG-16 model for the classification task. Transfer learning with fine-tuning was used to train the network effectively on a relatively small set of chest radiographs.

Results

Initial experiments showed promising results, suggesting that the model can be used to expedite COVID-19 detection. The model achieved an accuracy of 96% and 92.5% in the two- and three-class cases, respectively.

Conclusion

We believe that this study could be used for initial screening, helping healthcare professionals to treat COVID patients better through timely detection and screening of the disease.

Implication for practice

The simplicity of the proposed deep neural network model, its ability to work with a small image dataset, and its non-contact operation with acceptable accuracy make it a potential alternative for rapid COVID-19 testing that can be adopted by the medical fraternity, considering the criticality of time and the magnitude of the outbreak.

Keywords: COVID, Chest radiographs, Neural networks, Transfer learning

Introduction

The rapid spread of the novel coronavirus disease (COVID-19) has put an unprecedented load on healthcare systems around the globe. It is a highly infectious disease caused by SARS-CoV-2 (severe acute respiratory syndrome coronavirus 2). The disease originated in December 2019 and has affected more than 200 countries worldwide.1 It has been declared a pandemic by the World Health Organization (WHO).2 The disease has a mortality rate of 2%, with death resulting from massive alveolar damage and respiratory failure.3

The current form of testing, i.e., viral nucleic acid detection using real-time polymerase chain reaction (RT-PCR), is the accepted standard for COVID diagnosis. However, in many countries, especially developing nations where testing kits are inadequate and widespread testing has not started, early, automatic, and cost-effective diagnosis can be crucial for timely monitoring of the spread of the disease.4, 5 Non-contact automated diagnosis systems can be an essential tool for containing the spread of the virus (including among healthcare professionals) through timely referral of patients to care facilities and quarantine. Currently, in India, the cost of the RT-PCR test is very high and beyond the budget of the majority of the population.6 Financial constraints arising from the cost of diagnostic tests are a significant concern for patients in major developing nations.7

Healthcare systems across the globe have evolved in multiple domains to improve detection and diagnosis rates, with the central aim of being minimally invasive: invasive procedures for the detection and diagnosis of diseases should be avoided wherever possible. The inclusion of medical imaging techniques like ultrasound, Computed Tomography (CT), Magnetic Resonance Imaging (MRI), Functional Magnetic Resonance Imaging (fMRI), etc. has dramatically changed the way diseases are detected, diagnosed, examined, and analyzed. Since each of these imaging modalities has a unique underlying physics of operation, not every modality can be used for every anatomical site. Among these modalities, the one best suited to the study of the lungs and their health is the radiograph. The details in a radiograph can help a radiologist or other healthcare professional evaluate the lungs when investigating symptoms such as persistent cough or breathlessness. Radiographs can also be used to diagnose conditions like emphysema, pneumonia, and cancer. In addition, acquiring a radiograph from a subject is relatively straightforward in practice, which makes radiographs suitable for detection and diagnosis in emergency situations.

With the advent of these medical imaging modalities, researchers and other professionals have continuously attempted to develop computer-aided systems that act as a second opinion for healthcare experts. There is a plethora of work in the literature on computer-aided detection and diagnosis systems, developed for numerous applications such as the detection of brain tumors, thyroid nodules, ground-glass opacity, and Alzheimer's disease.

With the help of publicly available datasets of chest radiographs (X-ray images) of COVID-19 patients and healthy cases, the study of automatic COVID detection from radiographs became possible; these datasets include COVID-positive patients, bacterial pneumonia patients, and healthy subjects.8 Chest radiography is a universally used imaging technique for diagnosis, and almost all healthcare facilities, even in remote (underdeveloped) areas, have radiographic imaging as a basic diagnostic system. CT imaging can also be used for COVID-19 detection,9, 10, 11 but the non-availability of CT scanners in small healthcare facilities and the time-consuming nature of CT hinder timely detection and screening of COVID patients. Also, real-time chest radiographic imaging can help to study the progression of the disease, which in turn can help to better screen patients at different stages of the disease (see Discussion). Fig. 1 shows radiographs of COVID-19 positive, bacterial pneumonia, and healthy cases.

Figure 1.

Chest radiographs of COVID positive patient, bacterial pneumonia and healthy case.

In view of the above-mentioned advantages, a deep learning-based model was developed that can be used to automate COVID-19 detection and screening with high accuracy and sensitivity. This could reduce the number of RT-PCR tests required, since only patients who test positive with this model need to be sent for viral nucleic acid tests.

In recent years, deep learning models have been very successful in object detection and classification.12, 13, 14 In medical image analysis and classification, these models have started to prove very useful and are of great help to doctors, especially radiologists, in detecting patterns in medical images.15, 16, 17, 18 Computer-aided diagnosis (CAD) systems that employ deep learning techniques help professionals to make clinical decisions.

Deep learning architectures, especially convolutional neural networks (CNNs), help in automatic feature detection in images.19 By repeatedly applying linear and non-linear transformations, every layer of the CNN model learns rich and discriminative features.20 The network starts with simpler features and learns more abstract and discriminative features deeper in the network.

This study aimed to utilize state-of-the-art deep learning techniques for automatic COVID-19 detection on chest radiographs to assist in the testing and screening of COVID-19 patients.

Materials and method

Transfer learning

Transfer learning is a strategy in which knowledge gained while solving one problem is applied to a different but related problem. Usually, the dataset available for the new problem is too small to train a CNN from scratch. Transfer learning therefore starts by training the deep neural network for a specific task on a large-scale dataset such as ImageNet. For the network to extract useful features, it is usually believed that this dataset must have at least 5000 to 6000 instances per class,21 i.e., data availability is the most important factor in the initial training for extracting significant features. After the initial training, the CNN is used to process the new data and extract features from it based on the knowledge gained.

Transfer learning in deep neural networks can be done in two ways. The first method is feature extraction, where the original CNN model is treated as a feature extractor and a new classifier is trained on top of it.22 In this method, the pre-trained model retains its architecture as well as its learned parameters, and the features it produces are passed to the new classifier trained for the specific task at hand. The second method modifies the pre-trained network to obtain better results. Usually, some blocks of the model are replaced with new ones that are fine-tuned for the specific task. Most commonly, the fully connected (FC) layers of the original pre-trained model are replaced with a new FC head whose weights are initialized randomly. To preserve the rich discriminative filters learned by the convolutional (Conv) layers, these layers are frozen, i.e., backpropagation updates only the FC layers, whose weights are random, and does not alter the convolutional layers. This allows the FC layers to start learning patterns from the highly discriminative, feature-rich convolutional layers. After the FC layers have started to learn the patterns of the new dataset, the whole network is unfrozen and trained with a very small learning rate in order to achieve sufficient accuracy on the new task.
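The two-phase schedule of the second method can be sketched in plain Python as follows. This is only an illustration of which layers are trainable in each phase, not the training code used in this study; the `Layer` class, the phase names, the layer counts, the base learning rate, and the `finetune_factor` are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    kind: str              # "conv" (pre-trained) or "fc" (new, randomly initialized)
    trainable: bool = True


def build_network(n_conv=13, n_fc=3):
    """Toy network description: pre-trained conv layers followed by a new FC head."""
    convs = [Layer(f"conv{i}", "conv") for i in range(1, n_conv + 1)]
    head = [Layer(f"fc{i}", "fc") for i in range(1, n_fc + 1)]
    return convs + head


def set_phase(layers, phase):
    """Set trainable flags for a phase and return the names of trainable layers.

    "warmup":   conv layers frozen, only the new FC head is trained.
    "finetune": every layer is unfrozen.
    """
    if phase not in ("warmup", "finetune"):
        raise ValueError(f"unknown phase: {phase}")
    for layer in layers:
        layer.trainable = phase == "finetune" or layer.kind == "fc"
    return [layer.name for layer in layers if layer.trainable]


def learning_rate(phase, base_lr=1e-3, finetune_factor=0.1):
    """Use a smaller learning rate once the whole network is unfrozen (assumed factor)."""
    return base_lr if phase == "warmup" else base_lr * finetune_factor


network = build_network()
for phase in ("warmup", "finetune"):
    print(phase, learning_rate(phase), set_phase(network, phase))
```

Keeping the learning rate small in the second phase limits how far the pre-trained convolutional filters can drift from their initial values.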

Model

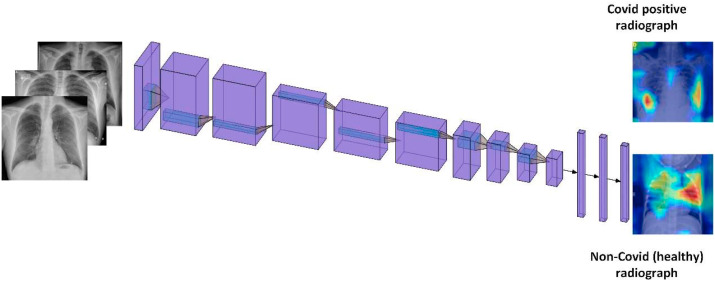

In this study, we use the second strategy to train the VGG-16 network for COVID-19 detection. A pre-trained VGG-16 model was used for the classification task.23 VGG-16 is a 16-layer convolutional neural network consisting of 13 convolutional layers and 3 fully connected layers, together with 5 max-pooling layers. In the two experimental settings, the last dense layer of the network is changed to a two-class (COVID and non-COVID) or a three-class (COVID, non-COVID pneumonia, and normal) output. The network takes an input image of size 224 × 224 × 3 and produces a feature vector of 1 × 1 × 4096 at the first dense layer. We freeze the convolutional layers and use a new FC head containing three fully connected layers with 512, 64, and 2 neurons in the two-class case and 512, 64, and 3 neurons in the three-class case. In the FC head, we use ReLU activation, and during training a dropout of 0.5 is used to avoid overfitting. In the base VGG-16 model, conv1_1 and conv1_2 use 64 filters, conv2_1 and conv2_2 use 128 filters, conv3_1, conv3_2, and conv3_3 use 256 filters, and conv4_1, conv4_2, conv4_3, conv5_1, conv5_2, and conv5_3 use 512 filters. All convolutional layers use 3 × 3 filters, while the max-pooling layers use 2 × 2 pooling with a stride of 2. Figure (2) shows the original architecture of VGG-16. In transfer learning, the layer cutoff is the number of untrainable (frozen) layers in the network; the remaining layers are trained on the new dataset. In our experiments, the layer cutoff is 13, i.e., the 13 convolutional layers are frozen, while the FC head, which contains 3 FC layers, is trained on the COVID dataset.
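The architecture described above can be checked with some simple arithmetic. The sketch below walks a 224 × 224 × 3 input (the standard VGG-16 input size) through the five convolutional blocks and counts the frozen and trainable parameters. It assumes same-padded 3 × 3 convolutions and, as an assumption not stated in the text, that the new FC head is attached to the flattened output of the last pooling layer; the function names are illustrative.

```python
# (number of conv layers, filters per layer) for the five VGG-16 blocks,
# following the filter counts listed in the text.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def conv_base_summary(height=224, width=224, channels=3):
    """Return (output_shape, parameter_count, conv_layer_count) of the conv base."""
    params = 0
    n_layers = 0
    for n_convs, filters in VGG16_BLOCKS:
        for _ in range(n_convs):
            # One 3x3 kernel per (input channel, filter) pair plus one bias per filter.
            # Same padding keeps height and width unchanged.
            params += 3 * 3 * channels * filters + filters
            channels = filters
            n_layers += 1
        # 2x2 max-pooling with stride 2 halves height and width.
        height //= 2
        width //= 2
    return (height, width, channels), params, n_layers


def dense_head_params(n_inputs, units=(512, 64, 2)):
    """Weights plus biases of a stack of fully connected layers."""
    total = 0
    for n_out in units:
        total += n_inputs * n_out + n_out
        n_inputs = n_out
    return total


shape, frozen_params, n_conv = conv_base_summary()
flat = shape[0] * shape[1] * shape[2]   # assumed input size of the new FC head
print("conv output shape:", shape)
print("frozen conv layers:", n_conv, "with", frozen_params, "parameters")
print("trainable head (2 classes):", dense_head_params(flat, (512, 64, 2)))
print("trainable head (3 classes):", dense_head_params(flat, (512, 64, 3)))
```

Under these assumptions the 13 frozen layers hold roughly 14.7 million parameters, and the FC head is the only part updated while the layer cutoff is 13.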

Figure 2.

A schematic model for automatic COVID-19 detection.

Dataset used

In this work, we used the following datasets:

1. An open-source database containing chest X-rays of COVID and non-COVID patients (including different diseases like SARS, Streptococcus, etc.).24

2. The Kaggle chest X-ray competition dataset.25

A dataset of 1428 radiographs was created using data augmentation, which generates additional samples by rotation at five different angles, translation, and flipping (up/down, right/left).
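The augmentation operations named above can be illustrated on a tiny image stored as a nested list. This is a minimal sketch, not the pipeline used to build the dataset: the rotation angles and translation offsets used in the study are not reported, so the example stands in 90-degree rotations (arbitrary angles would need interpolation) and a one-pixel shift, both of which are assumptions.

```python
def flip_left_right(img):
    """Mirror each row (right/left flip)."""
    return [row[::-1] for row in img]


def flip_up_down(img):
    """Reverse the row order (up/down flip)."""
    return [row[:] for row in img[::-1]]


def rotate_90(img, turns=1):
    """Rotate counter-clockwise by 90 degrees, `turns` times."""
    out = [row[:] for row in img]
    for _ in range(turns % 4):
        # Transpose, then reverse the row order.
        out = [list(col) for col in zip(*out)][::-1]
    return out


def translate(img, dx, dy, fill=0):
    """Shift the image dx pixels right and dy pixels down, padding with `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                out[ny][nx] = img[y][x]
    return out


def augment(img):
    """Return the original image plus one variant per operation (illustrative set)."""
    return [
        img,
        flip_left_right(img),
        flip_up_down(img),
        rotate_90(img, 1),
        translate(img, 1, 0),
    ]


toy = [[1, 2], [3, 4]]
for variant in augment(toy):
    print(variant)
```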

Results

We allocated the dataset randomly into training and test sets with 70% from each class (COVID, non-COVID pneumonia, and healthy) as the training set and the remaining 30% as the test set. Regarding the classification, specific metrics are recorded as follows:
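A per-class 70/30 split of this kind can be sketched as below. The class sizes (224, 700, and 504) are the ones reported for this dataset in the Discussion; the function name, the fixed random seed, and the rounding rule (integer floor of 70%) are assumptions made for the example.

```python
import random


def stratified_split(labels, train_pct=70, seed=0):
    """Split sample indices so that each class contributes train_pct% to training."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Integer arithmetic avoids floating-point surprises such as 0.7 * 700.
        cut = len(indices) * train_pct // 100
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return train, test


labels = ["covid"] * 224 + ["pneumonia"] * 700 + ["healthy"] * 504
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), "training samples,", len(test_idx), "test samples")
```

Splitting within each class keeps the class proportions of the training and test sets close to those of the full dataset.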

- True-positive (TP): refers to correctly classified cases of COVID-19.

- False-positive (FP): refers to healthy cases incorrectly classified as COVID-19.

- True-negative (TN): refers to healthy cases that are correctly classified.

- False-negative (FN): refers to COVID-19 cases incorrectly classified as healthy.
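The four counts above lead directly to accuracy, sensitivity, and specificity. The following sketch shows the standard formulas, together with a one-vs-rest count that treats COVID-19 as the positive class when more than two classes are present. The function names and the toy labels are made up for illustration and are not results from this study.

```python
def binary_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity (true positive rate), specificity (true negative rate)."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


def one_vs_rest_counts(y_true, y_pred, positive="covid"):
    """Count TP, FP, TN, FN with `positive` against all other classes.

    A non-positive sample predicted as any non-positive class counts as a
    true negative, even if the predicted class differs from the true one.
    """
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive and pred == positive:
            tp += 1
        elif truth != positive and pred == positive:
            fp += 1
        elif truth != positive and pred != positive:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


# Toy labels, invented for the example.
y_true = ["covid", "covid", "pneumonia", "healthy", "healthy"]
y_pred = ["covid", "healthy", "covid", "healthy", "pneumonia"]
counts = one_vs_rest_counts(y_true, y_pred)
print(counts, binary_metrics(*counts))
```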

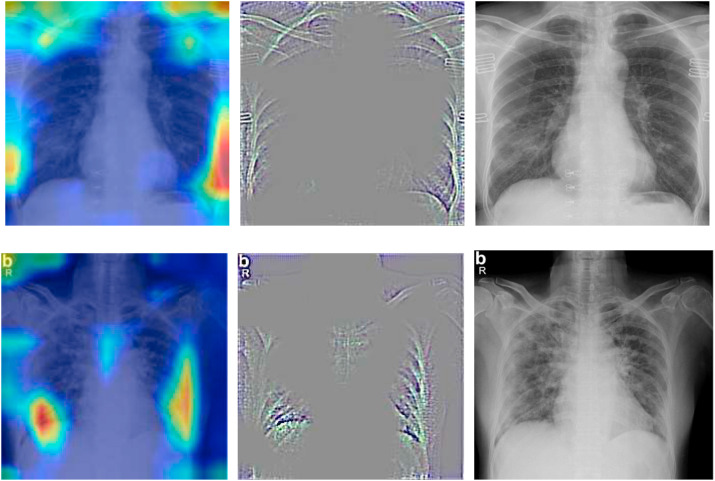

The main goal of the study is to identify and detect COVID-19 cases; therefore, in the three-class case (COVID, non-COVID pneumonia, and normal (healthy)), the metrics are defined with COVID-19 as the positive class. TP indicates the correctly classified COVID-19 cases, and TN indicates the cases belonging to non-COVID pneumonia or healthy that are not classified as COVID-19. FP indicates the cases that actually belong to non-COVID pneumonia or normal but are classified as COVID-19, while FN indicates cases belonging to COVID-19 but classified as normal or non-COVID pneumonia. We use gradient-weighted class activation maps (Grad-CAM)26 to highlight the regions of interest (ROI) that the model used to make predictions. Grad-CAM images of different patients are shown in Fig. (3), which highlights the highly localized regions of interest for the COVID-19 positive class within chest radiographs.
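For readers unfamiliar with Grad-CAM, the core computation can be sketched in a few lines. Given the feature maps of a convolutional layer and the gradients of the class score with respect to them, each map is weighted by its spatially averaged gradient, the weighted maps are summed, and negative values are clipped. The sketch below operates on nested lists and assumes the activations and gradients have already been obtained from a network; it does not cover the guided Grad-CAM variant, and the function name is illustrative.

```python
def grad_cam(activations, gradients):
    """Coarse Grad-CAM heat map.

    activations, gradients: K feature maps, each an H x W nested list, where
    gradients[k][i][j] is the derivative of the class score with respect to
    activations[k][i][j].
    """
    height = len(activations[0])
    width = len(activations[0][0])
    # Channel weight: global average of the gradient over each feature map.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]

    cam = []
    for i in range(height):
        cam_row = []
        for j in range(width):
            value = sum(w * a[i][j] for w, a in zip(weights, activations))
            cam_row.append(max(0.0, value))   # ReLU keeps positive evidence only
        cam.append(cam_row)
    return cam


# Two toy 2 x 2 feature maps with constant gradients of +1 and -1.
acts = [[[1, 2], [3, 4]], [[4, 3], [2, 1]]]
grads = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
print(grad_cam(acts, grads))
```

In practice the resulting map is upsampled to the radiograph size and overlaid as a heat map.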

Figure 3.

Grad-CAM images of COVID-positive patients (left: heat map, middle: guided Grad-CAM, right: original X-ray).

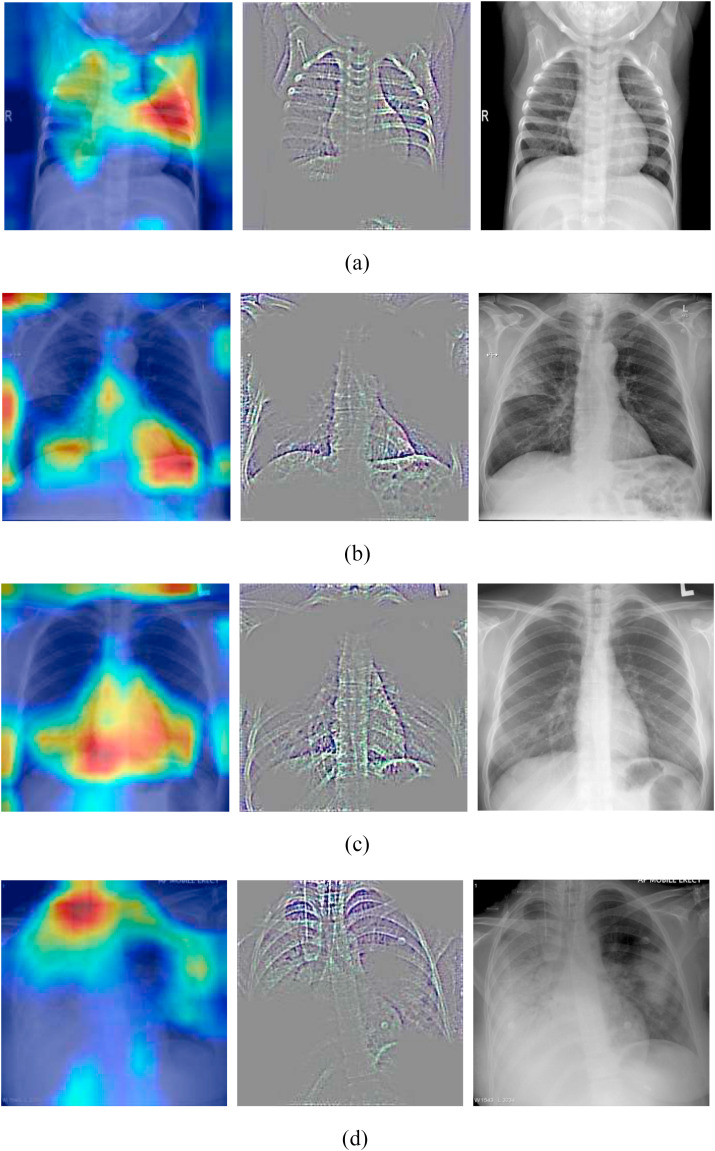

Figure (4) shows the Grad-CAM images of non-COVID patients (non-COVID pneumonia and healthy).

Figure 4.

Grad-CAM images of non-COVID patients (a: Healthy X-ray, b: Bacterial pneumonia, c: SARS, d: Streptococcus).

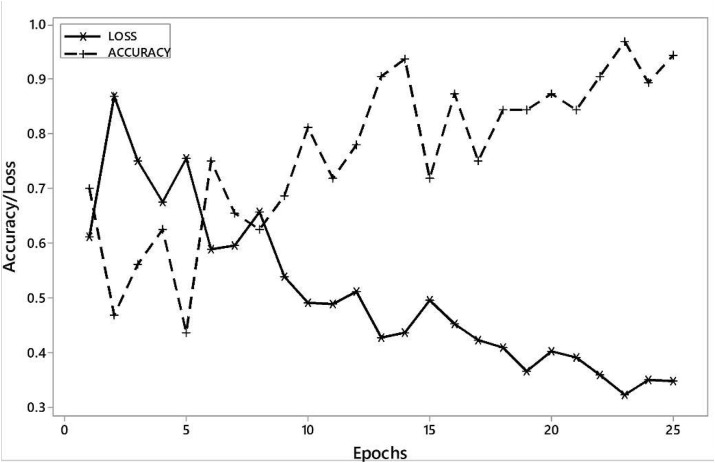

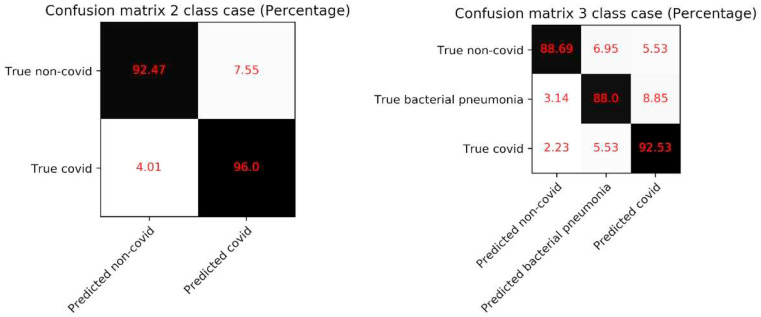

An accuracy of 96% in the two-class case and 92.53% in the three-class case was achieved. In both cases, the network was trained for 25 epochs with a batch size of 64. The learning rate was set to 0.001, and the Adam optimizer was used for training. All the layers in the network use the ReLU activation function.

Figure (5) shows the plot of accuracy/training loss vs. epochs. Table (1) shows that we achieved a sensitivity (true positive rate) of 92.64% and 86.7%, and a specificity (true negative rate) of 97.27% and 95.1%, in the 2- and 3-class cases, respectively.
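To make the optimizer setting concrete, a single Adam update for one scalar parameter is sketched below with the learning rate of 0.001 reported above. The values of `beta1`, `beta2`, and `eps` are the commonly used defaults and are an assumption, since the text does not report them; the function name and the state dictionary are illustrative.

```python
import math


def adam_step(param, grad, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a scalar parameter and return its new value.

    state holds the step counter "t" and the running moment estimates "m", "v".
    """
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad
    # Bias correction compensates for the zero initialization of m and v.
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)


state = {"t": 0, "m": 0.0, "v": 0.0}
weight = adam_step(1.0, 0.5, state)
print(weight)
```

On the first step the update magnitude is approximately equal to the learning rate regardless of the gradient scale, which is one reason a single global learning rate works reasonably well with Adam.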

Figure 5.

Learning curve accuracy/training loss obtained on VGG-16.

Table 1.

Result metrics obtained.

| Network | Accuracy | Specificity | Sensitivity |
|---|---|---|---|
| VGG-16 (2 class output) | 96% | 97.27% | 92.64% |
| VGG-16 (3 class output) | 92.53% | 95.1% | 86.7% |

The confusion matrices on the test data for the two configurations of our model are shown in Fig. 6. In the 2-class case, false negatives are 4.01% and false positives are 7.55%, while true positives are 96.0%. In the 3-class case, false negatives are 7.76% and false positives are 14.38%, with an accuracy of 92.53%.

Figure 6.

Confusion matrix of 2 class case and 3 class case of COVID detection.

Discussion

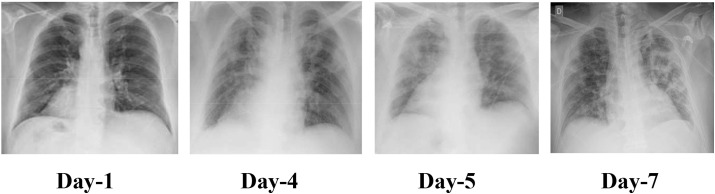

This study aimed to design a deep neural network-based model that automatically detects COVID-19 from chest radiographs without explicit feature engineering. Fig. 7 shows the timeline of the radiographs of a 50-year-old COVID-19 positive patient over a week.27 On Day 1 there are no markers of infection, i.e., the lungs are clear. On Day 4, the radiograph shows patchy, ill-defined bilateral alveolar consolidations. The Day 5 radiograph shows radiological worsening with consolidation in the left upper lobe. The Day 7 radiograph shows the typical presence of pneumonia.

Figure 7.

Chest radiographs of a 50-year-old COVID-19 patient with pneumonia over a week.

Fig. 3 shows the saliency maps of positive COVID-19 radiographs. The typical markers in Fig. 3 that indicate the presence of COVID infection include:

1. Patchy shadows and ground-glass opacity in the early stages (Day 1 and Day 4, Fig. 7).

2. As the infection progresses, multiple ground-glass opacities and infiltrates appear in both lungs (Day 7, Fig. 7).

In saliency maps, the highlighted regions represent the regions of interest in the radiograph, which can help doctors improve the efficiency of diagnosis. The characteristics frequently observed in COVID-19 radiographs are28:

1. Ground-glass opacities.

2. Bronchovascular thickening.

3. Air space consolidation.

4. Bronchiectasis.

Table 2 compares deep learning-based techniques used for COVID-19 detection and shows that the proposed method achieves state-of-the-art accuracy in the 2-class case. Sethy et al.29 used a combination of CNN-based features and an SVM classifier for COVID-19 detection and achieved 95.38% accuracy on 50 chest radiographs. Song et al.30 and Wang et al.31 used deep learning on CT images to detect COVID-19 and achieved 90.8% and 82.9% accuracy, respectively. Xu et al.32 used a ResNet deep learning model on CT images and obtained 86.7% accuracy. Zheng et al.33 also used CT images coupled with deep learning and achieved 90.8% accuracy.

Table 2.

Comparison of proposed automatic COVID-19 detection technique using deep learning with other deep learning methods.

| Reference | Types of images | Dataset used | Accuracy (%) |
|---|---|---|---|
| Apostolopoulos & Mpesiana34 | Chest radiographs | 224 COVID-19(+); 700 Pneumonia; 504 Healthy | 93.48 |
| Wang & Wong35 | Chest radiographs | 53 COVID-19(+); 5526 COVID-19(−); 8066 Healthy | 92.4 |
| Sethy & Behera29 | Chest radiographs | 25 COVID-19(+); 25 COVID-19(−) | 95 |
| Song et al.30 | Chest CT | 777 COVID-19(+); 708 Healthy | 90.8 |
| Wang et al.31 | Chest CT | 195 COVID-19(+); 258 COVID-19(−) | 82.9 |
| Xu et al.32 | Chest CT | 219 COVID-19(+); 224 Viral pneumonia; 175 Healthy | 86.7 |
| Zheng et al.33 | Chest CT | 313 COVID-19(+); 229 COVID-19(−) | 90.8 |
| This study (2 class) | Chest radiographs | 224 COVID-19(+); 504 Healthy | 96 |
| This study (3 class) | Chest radiographs | 224 COVID-19(+); 700 Pneumonia; 504 Healthy | 92.53 |

It must be noted that the proposed model can be used for COVID-19 diagnosis without putting pressure on already saturated hospital systems. The limited availability of chest radiographs of COVID-19 cases is a limitation of this study, as only a small number of samples are present in the datasets. We use a dataset of 1428 chest radiographs, of which 224 are of COVID-19 positive patients, 700 belong to bacterial pneumonia cases, and 504 are of healthy subjects. To demonstrate the effectiveness of the approach, we used two experimental settings, with two and three output classes. In the two-class case, bacterial pneumonia and healthy images are treated as non-COVID, while in the three-class case COVID positive, pneumonia, and healthy are the three output classes.
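The relationship between the two experimental settings amounts to a relabeling of the same images, which can be sketched as follows. The class sizes are the ones given above; the label strings and the function name are illustrative.

```python
from collections import Counter

# Class sizes reported for the dataset used in this study.
CLASS_COUNTS = {"covid": 224, "pneumonia": 700, "healthy": 504}


def to_two_class(label):
    """Merge bacterial pneumonia and healthy images into a single non-COVID class."""
    return "covid" if label == "covid" else "non-covid"


three_class_labels = [name for name, n in CLASS_COUNTS.items() for _ in range(n)]
two_class_labels = [to_two_class(label) for label in three_class_labels]

print(Counter(three_class_labels))
print(Counter(two_class_labels))
```

The relabeling makes the imbalance of the two-class setting explicit: the non-COVID class is several times larger than the COVID class.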

Conclusion

In this paper, we used a deep learning model to automatically detect COVID-19 from chest radiographs. The study demonstrates a robust and effective method of non-contact testing for COVID patients, which can help in early and cost-effective detection and screening of COVID cases. Collaboration with medical professionals is required to check whether the model extracts sufficient biomarkers for COVID-19 positive cases. Grad-CAM images of chest radiographs are presented, which show the regions of interest for confirmed COVID-19 positive cases, bacterial pneumonia, and healthy cases.

We believe that this study could be used for initial screening, helping healthcare professionals to treat COVID patients better through timely detection and screening of the disease. It provides a testing method that is not only cost-effective but also automatic and non-contact, which helps reduce the risk of medical practitioners contracting COVID.

Conflict of interest statement

None.

Acknowledgements

Funding source is Ministry of Human Resource Development, NPIU, India. Grant number: 1-5740344761.

References

- 1. Coronavirus cause: origin and how it spreads. [Online]. Available: https://www.medicalnewstoday.com/articles/coronavirus-causes

- 2. Rolling updates of COVID-19. [Online]. Available: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/events-as-they-happen

- 3. Xu Z., Shi L., Wang Y., Zhang J., Huang L., Zhang C. Pathological findings of COVID-19 associated with acute respiratory distress syndrome. Lancet Respir Med. 2020;8(4):420–422. doi: 10.1016/S2213-2600(20)30076-X.

- 4. Won J., Lee S., Park M., Kim T.Y., Park M.G., Choi B.Y., et al. Development of a laboratory-safe and low-cost detection protocol for SARS-CoV-2 of the Coronavirus Disease 2019 (COVID-19). Exp Neurobiol. 2020;29(2):107. doi: 10.5607/en20009.

- 5. Esbin M.N., Whitney O.N., Chong S., Maurer A., Darzacq X., Tjian R. Overcoming the bottleneck to widespread testing: a rapid review of nucleic acid testing approaches for COVID-19 detection. RNA. 2020:076232. doi: 10.1261/rna.076232.120.

- 6. Rs 2,400 per covid test incurs loss, hampers testing. [Online]. Available: https://www.outlookindia.com/website/story/india-news-rs-2400-per-covid-test-incurs-loss-hampers-testing-private-sector-labs/355904

- 7. Developing countries face diagnostic challenges as the COVID-19 pandemic surges. [Online]. Available: https://cen.acs.org/analytical-chemistry/diagnostics/Developing-countries-face-diagnostic-challenges/98/i27

- 8. Cohen J.P., Morrison P., Dao L. COVID-19 image data collection. arXiv:2003.11597. 2020. [Online]. Available: https://github.com/ieee8023/covid-chestxray-dataset

- 9. Fang Y., Zhang H., Xie J., Lin M., Ying L., Pang P. Sensitivity of chest CT for COVID-19: comparison to RT-PCR. 2020 Feb 19; p. 200432.

- 10. Gozes O., Frid-Adar M., Greenspan H., Browning P.D., Zhang H., Ji W. Rapid AI development cycle for the coronavirus (COVID-19) pandemic: initial results for automated detection & patient monitoring using deep learning CT image analysis. arXiv:2003.05037. 2020.

- 11. Shi F., Xia L., Shan F., Wu D., Wei Y., Yuan H. Large-scale screening of covid-19 from community acquired pneumonia using infection size-aware classification. arXiv. 2020. doi: 10.1088/1361-6560/abe838.

- 12. Girshick R., Donahue J., Darrell T., Malik J. Rich feature hierarchies for accurate object detection and semantic segmentation. Proceedings of the IEEE computer society conference on computer vision and pattern recognition. 2014.

- 13. Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks. In: Advances in neural information processing systems. 2012:1097–1105.

- 14. Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. 3rd international conference on learning representations, ICLR 2015 - conference track proceedings. 2015.

- 15. Litjens G., Kooi T., Bejnordi B.E., Setio A.A.A., Ciompi F., Ghafoorian M. A survey on deep learning in medical image analysis. Medical Image Analysis; 2017.

- 16. Xu Y., Mo T., Feng Q., Zhong P., Lai M., Chang E.I.C. Deep learning of feature representation with multiple instance learning for medical image analysis. ICASSP, IEEE international conference on acoustics, speech and signal processing - proceedings. 2014.

- 17. Brosch T., Tam R. Manifold learning of brain MRIs by deep learning. Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics). 2013.

- 18. Rajpurkar P., Irvin J., Zhu K., Yang B., Mehta H., Duan T. Radiologist-level pneumonia detection on chest x-rays with deep learning. arXiv. 2017.

- 19. Varshni D., Thakral K., Agarwal L., Nijhawan R., Mittal A. Pneumonia detection using CNN based feature extraction. Proceedings of 2019 3rd IEEE international conference on electrical, computer and communication technologies, ICECCT 2019. 2019.

- 20. Mohammad Khalid Pandit, Rnm, Mac. Adaptive deep neural networks for the internet of things. Int J Sensor Wireless Commun Contr. 2020;10(1).

- 21. Brock A.R. Deep learning for computer vision. [Online]. Available: www.pyimagesearch.com

- 22. Huh M., Agrawal P., Efros A.A. What makes ImageNet good for transfer learning? arXiv preprint arXiv:1608.08614. 2016.

- 23. Deng J., Dong W., Socher R., Li L.J., Li K., Fei-Fei L. ImageNet: a large-scale hierarchical image database. 2009 IEEE conference on computer vision and pattern recognition. IEEE; 2009 Jun 20; pp. 248–255.

- 24. Cohen J.P. COVID-19 image data collection. 2020. [Online]. Available: https://github.com/ieee8023/covid-chestxray-dataset

- 25. COVID-19 X-rays Kaggle dataset. [Online]. Available: https://www.kaggle.com/andrewmvd/convid19-x-rays

- 26. Selvaraju R.R., Cogswell M., Das A., Vedantam R., Parikh D., Batra D. Grad-cam: visual explanations from deep networks via gradient-based localization. Proceedings of the IEEE international conference on computer vision. 2017; pp. 618–626.

- 27. Lorente E. COVID-19 pneumonia - evolution over a week.

- 28. Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O., Acharya U.R. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput Biol Med. 2020 Apr 28:103792. doi: 10.1016/j.compbiomed.2020.103792.

- 29. Sethy P.K., Behera S.K. Detection of coronavirus disease (covid-19) based on deep features. Preprints. 2020 Mar 19 2020030300:2020.

- 30. Song Y., Zheng S., Li L., Zhang X., Zhang X., Huang Yc Z. Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images. medRxiv. 2020. doi: 10.1109/TCBB.2021.3065361.

- 31. Wang S., Kang B., Ma J., Zeng X., Xiao M., Guo Bx J. A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). medRxiv. 2020. doi: 10.1007/s00330-021-07715-1.

- 32. Xu X., Jiang X., Ma C., Du P., Li X., Lv S. A deep learning system to screen novel coronavirus disease 2019 pneumonia. Engineering; 2020.

- 33. Zheng C., Deng X., Fu Q., Zhou Q., Feng J., Ma Xw H. Deep learning-based detection for COVID-19 from chest CT using weak label. medRxiv. 2020.

- 34. Apostolopoulos I.D., Mpesiana T.A. Covid-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys Eng Sci Med. 2020:1. doi: 10.1007/s13246-020-00865-4.

- 35. Wang Aw L. COVID-net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest radiography images. Sci Rep. 2020;10(1):1–12. doi: 10.1038/s41598-020-76550-z. Nature Publishing Group.