Abstract

Objectives

Graphic display formats are often used to enhance health information. Yet limited attention has been paid to graph literacy in people of lower education and lower socioeconomic status (SES). This study aimed to: 1) examine the relationship between graph literacy, numeracy, health literacy and sociodemographic characteristics in a Medicaid-eligible population and 2) determine the impact of graph literacy on comprehension and preference for different visual formats.

Methods

We conducted a cross-sectional online survey among people in the US on Medicaid, and of presumed lower education and SES.

Results

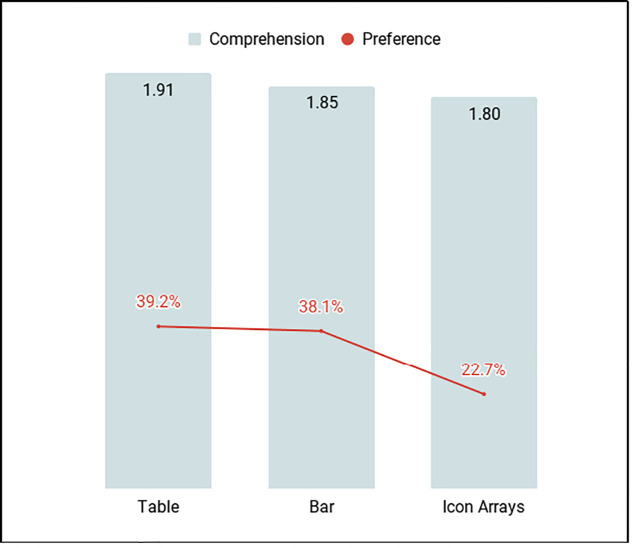

The mean graph literacy score among 436 participants was 1.47 (SD 1.05, range: 0 to 4). Only graph literacy was significantly associated with overall comprehension (p < .001). Mean comprehension scores were highest for the table format (1.91), closely followed by bar graph (1.85) and icon array (1.80). Information comprehension was aligned with preference scores.

Conclusions

Graph literacy in a Medicaid-eligible population was lower than previous estimates in the US. Tables were better understood, with icon arrays yielding the lowest score. Preferences aligned with comprehension.

Practice implications

It may be necessary to reconsider the use of graphic display formats when designing information for people with lower educational levels. Further research is needed.

Introduction

Understanding health information and related numerical information is critical to making informed decisions, promoting adherence to treatment, and improving health outcomes [1–8]. It is estimated that about 30% of the general population in the United States (US) has limited numeracy [9]. Limited numeracy is widespread and varies significantly between OECD (Organization for Economic Co-operation and Development) countries, with the US ranked 29 out of 35 countries on numeracy skills, considerably below the OECD average [10]. In people of lower educational attainment, processing numerical information is even more difficult than for more educated groups [11]. In other words, there is a gap in numeracy skills between people of lower and higher educational attainment [11]. Numerical health information can be presented as numbers only or graphically, using graphic display formats [12]. Graphic display formats, such as pie charts, bar charts, line plots, and icon arrays are frequently used to enhance communication [13–15]. They can minimize denominator neglect [16], framing effects [17, 18], and the effect of anecdotal reasoning [19]. Graphic display formats can also lead people to overestimate low probabilities and underestimate high probabilities [20]. Few studies have explored risk communication and the ability to understand graphic displays of risks in people of lower educational attainment and lower socioeconomic status (SES) [21].

While pictures may be worth a thousand words, a graph is more complex than a picture [22]. Extracting and understanding information presented graphically requires a specific set of skills, called graph literacy, also known as graphicacy [22–25]. Graph literacy is a concept that has so far received limited attention [23, 26]. Bar charts, pie charts, and line plots were first introduced in the late 18th century [24, 27], with icon arrays later appearing in the early 20th century. Galesic and Garcia-Retamero suggest that there is therefore no obvious reason why people should intuitively understand information presented graphically [23]. Graph literacy requires the ability to extract information from two-dimensional images, to read data, and compare the information of interest to other groups or categories of information [24]. Graphic display formats may not benefit all adults in the same way. People with lower graph literacy may not always benefit from graphic displays of information, and may process information more accurately with numbers alone [22, 23, 28]. Our study of the acceptability and feasibility of patient decision aids among women of lower SES suggested that many participants struggled to comprehend numerical estimates of risk presented as icon arrays. In the pictorial patient decision aid group, about 77% of women of lower SES who completed the online survey found icon arrays confusing [29].

Galesic and Garcia-Retamero have investigated the level of graph literacy in the US and Germany [23]. In both countries, about one third of the population had low graph literacy. Nayak et al. assessed graph literacy skills in a highly educated sample of prostate cancer patients (78% college educated). Graph literacy and numeracy were positively correlated, suggesting that people with limited numeracy also have limited graph literacy. Despite high educational attainment and high health literacy levels, considerable variations in the ability to understand graphs were observed [22]. Graph literacy scores were more strongly correlated with dashboard comprehension scores than numeracy scores. If graph literacy was the strongest predictor of dashboard comprehension scores in Nayak’s highly educated sample, this tendency is likely to be exacerbated in people of lower educational attainment. However, as far as can be determined, no studies to date have assessed graph literacy in people of lower educational attainment and lower SES. This study thus aimed to: 1) examine the relationship between graph literacy, numeracy, health literacy and sociodemographic characteristics in people of presumed lower SES and 2) determine the impact of graph literacy on comprehension and preference for different visual formats as well as the relationship between comprehension and preference. The results will be useful in tailoring medical information to people of lower SES and presumed lower numeracy and lower graph literacy in the most effective way to enhance understanding, thus following a proportionate universalism approach [30].

Methods

We conducted a cross-sectional online survey using a survey sampling panel on Qualtrics. The study was designed, conducted, and reported according to CHERRIES (CHEcklist for Reporting Results of Internet E-Surveys) (S1 File) [31].

Participants

We used self-reported current Medicaid enrollment as a proxy measure of lower SES to recruit people of presumed lower SES and lower educational attainment living in the United States (US) (determined using panel IDs). Medicaid is a joint federal and state program that provides health coverage to over 72 million people in the US. Medicaid enrollees include low-income families, qualified pregnant women and children, individuals with disabilities, and individuals receiving Supplemental Security Income (SSI). We asked the Qualtrics team to target the recruitment of Medicaid enrollees on their panels. In addition, we used three screening questions to include only participants who reported being at least 18 years old, being a Medicaid enrollee, and feeling comfortable reading and completing a survey in English. We based the sample size on the estimated number of adults in the US enrolled in Medicaid, using a 95% confidence interval with a 5% margin of error. We identified unique visitors using unique panel IDs and checked for duplicates. Qualtrics does not use IP addresses to check for duplicates. In addition, cookies were placed upon entering the survey to prevent participants from completing the survey more than once.
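The sample size reasoning above can be sketched as follows. The source does not state which formula was used, so this example assumes Cochran's formula for a proportion with a finite population correction, a worst-case proportion of 0.5, and the 72 million total enrollment figure as a stand-in for the adult Medicaid population. The function name `required_sample_size` is hypothetical.

```python
import math
from statistics import NormalDist


def required_sample_size(population, confidence=0.95, margin=0.05, proportion=0.5):
    """Cochran's sample size for a proportion, with finite population correction."""
    # Two-sided critical value (about 1.96 for 95% confidence).
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an effectively infinite population.
    n0 = z ** 2 * proportion * (1 - proportion) / margin ** 2
    # The correction has almost no effect when the population is in the millions.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


# Assumption: 72 million (all enrollees) stands in for the number of adult enrollees.
print(required_sample_size(72_000_000))
```

Under these assumptions the minimum is 385 respondents, which the 436 completed surveys reported in the Results would exceed.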

Survey administration

The survey was exclusively open to Qualtrics’ active panel participants. Qualtrics (www.qualtrics.com) is a customer experience company and online survey platform that sends online surveys to a targeted population of respondents. A survey invitation containing a link was sent out to them based on the profiling data provided to Qualtrics (described above). To encourage participation, the participants were offered points that they could redeem for prizes.

Survey design

The survey had 13 pages (S2 File). Except for the page that provided study information, consent, and instructions, each page contained between zero and six questions. Each page included a progress bar but no back button. The survey had a total of 34 questions including the consent and screening questions (see Table 1).

Table 1. Overview of survey structure.

| Page number | Section | Number of Questions |
|---|---|---|
| 1 | Introduction and consent | 1 |
| 2 | Screener 1 | 1 |
| 3 | Screener 2 | 1 |
| 4 | Screener 3 | 1 |
| 5 | Demographics | 6 |
| 5 | Health literacy | 1 |
| 6 | Graph literacy | 4 |
| 7 | Subjective numeracy | 3 |
| 8 | Introduction to comprehension section | 0 |
| 9 | Comprehension section 1* | 5 |
| 10 | Comprehension section 2* | 5 |
| 11 | Comprehension section 3* | 5 |
| 12 | Preferred visual format | 1 |

* The order of the three visual formats was randomized.

An information sheet and consent form appeared on the first page of the survey. It briefly described the purpose of the study, what participation would involve, and stated that participants may opt out at any time. The next three pages had one screening question each to check that the participant met the inclusion criteria of being: 1) over the age of 18, 2) currently enrolled in Medicaid, and 3) comfortable completing the survey in English. Participants were subsequently asked to provide demographic information (six questions) and answer the one-item health literacy question. The next two pages tested graph literacy (four questions) and subjective numeracy (three questions). The following page provided a short introduction to the comprehension questions. The subsequent three pages each assessed comprehension of fictitious risk information using an icon array, a bar graph, and a table (three questions each). We purposefully chose not to use pie charts as previous studies have demonstrated that this format leads to slower and less accurate responses [15, 32]. The order of the three visual formats was randomized to check for interaction effects with other study variables. The last page asked participants to indicate the visual format they found most helpful in presenting risk information (one question). No personal information that could link participants back to their identity was collected.

Health literacy

We measured health literacy using Chew’s one-item scale, “How confident are you in filling out medical forms by yourself?” The answer choices were extremely, quite a bit, somewhat, a little bit, and not at all [33]. Based on the validation study, those who answered extremely and quite a bit were assigned to the adequate health literacy group; the rest were assigned to the limited health literacy group, which included both inadequate and marginal health literacy. This was treated as a dichotomous variable.
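As an illustration of the grouping rule above, the following minimal sketch maps each answer choice to the dichotomous health literacy variable. The function name and the lowercase string labels are hypothetical choices made for this example.

```python
# Answer choices treated as adequate health literacy, per the rule described above.
ADEQUATE_RESPONSES = {"extremely", "quite a bit"}
ALL_RESPONSES = ADEQUATE_RESPONSES | {"somewhat", "a little bit", "not at all"}


def health_literacy_group(response):
    """Return 'adequate' or 'limited' for one answer to the single-item question."""
    answer = response.strip().lower()
    if answer not in ALL_RESPONSES:
        raise ValueError(f"Unexpected answer choice: {response!r}")
    return "adequate" if answer in ADEQUATE_RESPONSES else "limited"


print(health_literacy_group("Quite a bit"))
print(health_literacy_group("Somewhat"))
```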

Subjective numeracy

We measured subjective numeracy using the validated 3-item Subjective Numeracy Scale (SNS3), which asks respondents to rate their perceived ability in using numbers (S2 File) [34]. The three items are scored on a scale of 1–6 (1 = Not at all good or never to 6 = Extremely good or very often) and the sum of the three answers is the SNS3 score (range 3–18) [34–37]. For analysis, we created a dichotomous variable. Participants with scores above the median score were assigned to the “higher” SNS3 group while those with scores at or below the median score were assigned to the “lower” SNS3 group.

Graph literacy

To minimize burden on respondents, we used the validated 4-item short version of the graph literacy scale [26]. The graph literacy scale asks participants to interpret four different graphs with one question per graph (S2 File). Each participant was given a graph literacy score based on the sum score of the four questions (range 0–4). We then created a dichotomous variable: participants with graph literacy scores above the median score were assigned to the “higher” graph literacy group while those with scores at or below the median were assigned to the “lower” graph literacy group.
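The sum scoring and median split used for both SNS3 and graph literacy can be sketched as below. The scores are made up for illustration, and the function names are hypothetical. The sketch assumes that scores equal to the median fall in the "lower" group, which matches the thresholds shown in Table 3 (>1 for graph literacy, >11 for SNS3).

```python
from statistics import median


def sum_score(item_scores):
    """Scale score as the sum of item scores (0/1 items for graph literacy, 1-6 for SNS3)."""
    return sum(item_scores)


def median_split(scores):
    """Label each score 'higher' if it is strictly above the sample median, else 'lower'."""
    cutoff = median(scores)
    return ["higher" if score > cutoff else "lower" for score in scores]


# Hypothetical graph literacy scores for five respondents (range 0-4).
graph_literacy_scores = [0, 1, 1, 2, 3]
print(median_split(graph_literacy_scores))
```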

Comprehension

Participants viewed hypothetical recurrence risks of cancer treatments in three different formats: table, bar graph, and icon array. They answered three comprehension questions following each visual display format. The first question required basic interpretation of the recurrence risk after one treatment option (gist task); the second required expressing the recurrence risk out of 100, 10 years after a given treatment (verbatim task); and the third required comparing the difference in recurrence risk between two options (verbatim task). Each correct answer was scored 1 and each incorrect answer was scored 0. Answers to free text questions were dual coded, with conflicts discussed with a third coder. The comprehension score for each format equaled the number of the first three questions answered correctly (range 0–3). We also examined the total comprehension score, which was the sum of the scores for all formats (range 0–9).
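The comprehension scoring described above can be sketched as follows. The respondent data are invented for illustration, and the names `format_score` and `total_score` are hypothetical.

```python
FORMATS = ("table", "bar graph", "icon array")


def format_score(correct_flags):
    """Count correct answers among the first three questions for one format (0-3)."""
    return sum(1 for flag in correct_flags[:3] if flag)


def total_score(answers_by_format):
    """Sum the per-format scores across the three formats (0-9)."""
    return sum(format_score(answers_by_format[name]) for name in FORMATS)


# Hypothetical respondent: True marks a correct answer (scored 1), False an incorrect one (scored 0).
respondent = {
    "table": [True, True, False],
    "bar graph": [True, False, False],
    "icon array": [True, True, True],
}
print({name: format_score(respondent[name]) for name in FORMATS})
print(total_score(respondent))
```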

Preference

We asked participants to indicate which of the three visual display formats (table, bar chart, icon array) they preferred overall (one question).

Survey development

The survey was developed in Qualtrics using the validated scales described above. Other items (such as the comprehension questions) were developed by the research team, based on existing literature in this area, and tested with a convenience sample of research collaborators and lay users. Testing focused on the usability and readability of the written content of the survey and visual display formats embedded in the survey. Only minor formatting and typographical edits were made.

Statistical analysis

Analyses corresponding to our primary aim

We performed simple logistic regressions to assess the unadjusted relationship between sociodemographic factors including gender, education, having one or more chronic conditions, and having family history of cancer and the dichotomous variables for health literacy, SNS3 and graph literacy. We also performed a multiple logistic regression with graph literacy as the dependent variable and all sociodemographic variables, SNS3, and health literacy as independent variables to assess how each independent variable was associated with graph literacy.
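To make the unadjusted analyses more concrete: for a single binary exposure, the odds ratio from a simple logistic regression equals the cross-product ratio of the 2x2 table, and its Wald standard error on the log scale is the square root of the summed reciprocal cell counts. The sketch below uses made-up counts and is not the authors' code; the function name is hypothetical.

```python
import math


def odds_ratio_wald(exposed_yes, exposed_no, unexposed_yes, unexposed_no, z=1.96):
    """Odds ratio and Wald 95% CI from the four cells of a 2x2 table."""
    odds_ratio = (exposed_yes * unexposed_no) / (exposed_no * unexposed_yes)
    # Standard error of log(OR) for a 2x2 table.
    se_log_or = math.sqrt(
        1 / exposed_yes + 1 / exposed_no + 1 / unexposed_yes + 1 / unexposed_no
    )
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts: outcome present/absent among exposed, then among unexposed.
odds_ratio, lower, upper = odds_ratio_wald(40, 60, 25, 75)
print(f"OR = {odds_ratio:.2f}, 95% CI {lower:.2f}-{upper:.2f}")
```

Categorical exposures with more than two levels (such as education) and continuous exposures (such as age) need a fitted model rather than this closed form.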

Analyses corresponding to our secondary aim

We performed one-sample t-tests to determine if there were differences in comprehension scores by format. We performed simple logistic regressions to assess the unadjusted relationship between graph literacy and overall comprehension and graph literacy and preference. We performed a multiple logistic regression to evaluate whether the relationship between graph literacy and comprehension changed when controlling for preference, SNS3, health literacy, age, gender, education, having one or more chronic conditions, and having family history of cancer with graph literacy as the dependent variable and all other factors as independent variables.
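The source describes one-sample t-tests without further detail. One reading, assumed here, is a one-sample test applied to each participant's score difference between two formats, which is the same calculation as a paired t-test. The sketch below computes only the test statistic and degrees of freedom, using invented scores; obtaining a p-value would additionally require a t distribution from a statistics package.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(scores_a, scores_b):
    """t statistic and degrees of freedom for the mean within-person difference."""
    if len(scores_a) != len(scores_b):
        raise ValueError("Both formats need one score per participant")
    differences = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(differences)
    # One-sample t-test of the differences against a mean of zero.
    t = mean(differences) / (stdev(differences) / math.sqrt(n))
    return t, n - 1


# Hypothetical per-participant comprehension scores (range 0-3) for two formats.
table_scores = [3, 2, 2, 3, 1, 2]
icon_scores = [2, 2, 1, 3, 1, 1]
t, df = paired_t_statistic(table_scores, icon_scores)
print(f"t = {t:.3f}, df = {df}")
```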

We performed simple linear regression to assess the relationship between preference and overall comprehension score. We performed multiple linear regression to assess this relationship while controlling for SNS3, health literacy, age, gender, education, having chronic conditions, and having family history of cancer.
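When a simple linear regression has one categorical predictor coded with dummy variables, each coefficient equals the difference between that group's mean and the reference group's mean. The sketch below shows this for preference groups with tables as the reference, which is how the Results report the comparison. The scores are invented and the function name is hypothetical.

```python
from statistics import mean


def dummy_coefficients(scores_by_group, reference):
    """Coefficients of a dummy-coded simple regression: group mean minus reference mean."""
    reference_mean = mean(scores_by_group[reference])
    return {
        group: mean(scores) - reference_mean
        for group, scores in scores_by_group.items()
        if group != reference
    }


# Hypothetical overall comprehension scores (range 0-9) grouped by preferred format.
scores_by_preference = {
    "table": [7, 6, 8],
    "bar graph": [6, 5, 7],
    "icon array": [5, 6, 4],
}
print(dummy_coefficients(scores_by_preference, reference="table"))
```

A negative coefficient therefore means that participants preferring that format scored lower on average than those preferring tables. The adjusted coefficients from the multiple regression do not reduce to this simple difference.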

Assessing for order effects

We performed a simple linear regression to assess if overall comprehension score was different based on which graph format was seen first. We also looked at the individual format scores based on format order using simple logistic regressions to determine if individual format scores varied based on which format was seen first. A logistic regression was used since we dichotomized the variable of icons seen first (yes/no as dependent variable).

The study was approved by the Committee for the Protection of Human Subjects (CPHS) at Dartmouth College on August 7, 2017.

Results

Participants

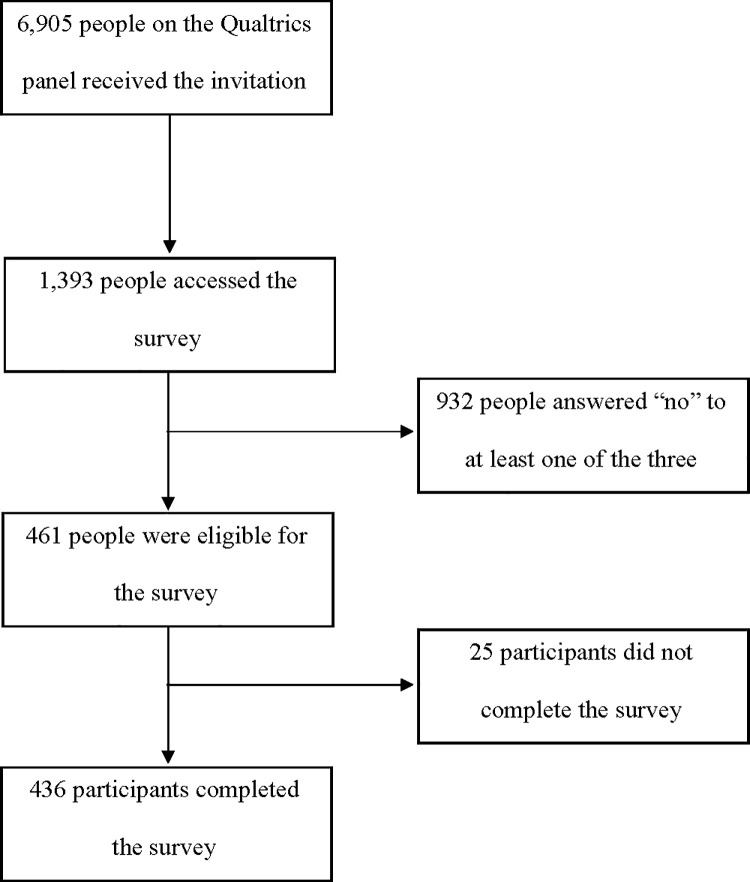

Approximately 6,905 individuals on the Qualtrics panel received the invitation, of whom 1,393 accessed the survey (20.2%). After giving consent to participate, 932 participants answered “no” to at least one of the three screening questions (including receiving Medicaid) and were excluded (66.9%). Of those who answered “yes” to all three screening questions (n = 461), 25 did not finish the survey (Fig 1). Of those who were eligible (answering “yes” to all screening questions) and consented to participate, 94.6% completed the survey. We analyzed data from 436 participants who met the above eligibility criteria (being a Medicaid enrollee) and completed the survey. The average survey completion time was 15.5 minutes (range 3.9–320.3).

Fig 1. Flow diagram of survey participation.
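The participation percentages reported above follow directly from the counts in the text, as this short arithmetic check shows. The variable names are chosen for this example.

```python
# Counts taken from the participant flow described above.
invited = 6905
accessed = 1393
screened_out = 932
eligible = accessed - screened_out
did_not_finish = 25
completed = eligible - did_not_finish


def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


print("Accessed survey:", pct(accessed, invited), "%")
print("Screened out:", pct(screened_out, accessed), "%")
print("Completed among eligible:", pct(completed, eligible), "%")
```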

All participants self-reported being Medicaid enrollees. The majority of participants were female (82.3%) and the mean age was 40.5 years. Most participants identified as White or Caucasian (68.8%), followed by Black or African American (19.7%) and Spanish or Latino/a (8.7%). The vast majority (85.8%) did not have a bachelor’s degree, 41.5% reported having a high school diploma or less and 44.3% reported having some college education or an associate degree. See Table 2 for participant characteristics.

Table 2. Participant characteristics.

| Characteristics | n (%) (unless otherwise specified) |
|---|---|
| Gender | |
| Female | 359 (82.3) |
| Male | 77 (17.7) |
| Age | Mean 40.5 years (SD: 14.8), range 18–78 |
| Race/Ethnicity* | |
| American Indian or Alaska Native | 14 (3.2) |
| Asian | 10 (2.3) |
| Black or African American | 86 (19.7) |
| Native Hawaiian or Other Pacific Islander | 1 (0.2) |
| White or Caucasian | 300 (68.8) |
| Spanish or Latino/a | 38 (8.7) |
| Other | 7 (1.6) |
| Education | |
| Less than high school diploma | 35 (8.0) |
| High school diploma or equivalent | 146 (33.5) |
| Some college or associate degree | 193 (44.3) |
| Bachelor’s degree or higher | 62 (14.2) |
| Chronic Conditions* | |
| Arthritis | 102 (23.4) |
| Cancer | 18 (4.1) |
| Chronic obstructive pulmonary disorder | 25 (5.7) |
| Depression or anxiety | 182 (41.7) |
| Diabetes | 43 (9.9) |
| Heart disease | 8 (1.8) |
| Hypertension | 91 (20.9) |
| History of stroke | 8 (1.8) |
| Other | 53 (12.2) |
| Family history of cancer | |
| Yes | 260 (59.6) |
| No | 176 (40.4) |

*Participants could select more than one.

For brevity, we only report odds ratios and 95% confidence intervals where there was statistical significance. See Table 3 for all analyses.

Table 3. Odds ratios and 95% CIs assessing the relationship between sociodemographic characteristics and graph literacy, subjective numeracy, and health literacy^.

| | High graph literacy (>1) | High subjective numeracy (>11) | High health literacy |
|---|---|---|---|
| High subjective numeracy (>11) | 1.49 (1.02–2.18) | | |
| High health literacy | 1.33 (0.77–2.28) | 2.66* (1.48–4.78) | |
| Age | 1.01 (1.00–1.02) | 1.01 (1.00–1.03) | 1.02* (1.00–1.04) |
| Gender | 1.37 (0.83–2.25) | 0.42* (0.25–0.70) | 0.88 (0.43–1.82) |
| Education | | | |
| Less than high school | 1 (referent) | 1 (referent) | 1 (referent) |
| High school degree or equivalent | 1.58 (0.73–3.42) | 2.12 (0.95–4.73) | 2.67* (1.11–6.43) |
| Some college | 2.06 (0.97–4.38) | 2.14 (0.97–4.70) | 2.36* (1.02–5.44) |
| College or higher | 1.92 (0.81–4.52) | 7.83** (3.07–19.97) | 4.56 (1.41–14.72) |
| Family history of cancer | 1.38 (0.94–2.02) | 1.12 (0.76–1.64) | 0.51* (0.28–0.93) |
| One or more chronic conditions | 1.25 (0.84–1.87) | 1.12 (0.75–1.67) | 1.10 (0.62–1.92) |

^ Column variables were treated as the outcome; each row variable was treated as the exposure. Simple logistic regression was used for all analyses.

*p < .05

** p < .001.

Results corresponding to the primary aim

Health literacy and sociodemographic characteristics

A total of 374 participants (85.8%) reported adequate health literacy. There was no statistically significant relationship between health literacy and gender (p = .73) or having at least one chronic condition (p = .75). Respondents with adequate health literacy were more likely to have higher education (OR = 1.40, 95% CI 1.01–1.95, p = .04) and to be older (OR = 1.02, 95% CI 1.00–1.04, p = .024). Respondents with a family history of cancer had lower odds of adequate health literacy (OR = 0.51, 95% CI: 0.28–0.93, p = .03).

Numeracy (SNS3) and sociodemographic characteristics

The mean SNS3 score was 11.1, where a higher score indicated higher subjective numeracy (SD = 4.08, range = 3–18, median = 11). When dichotomized, 48.9% of participants (213/436) were in the high numeracy group and 51.1% (223/436) were in the low numeracy group. There was no statistically significant relationship between SNS3 and a family history of cancer (p = .56) or having chronic conditions (p = .57). Women were less likely than men to have high numeracy (OR = 0.42, 95% CI 0.25–0.70, p < .001). Respondents with a bachelor’s degree or higher had greater odds of high numeracy (OR = 7.83, 95% CI 3.07–19.97, p < .001).
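Because Wald confidence intervals for odds ratios are symmetric on the log scale, the point estimate should sit at the geometric mean of the interval bounds. The sketch below applies that check to the two odds ratios reported in the paragraph above. It assumes the intervals are Wald intervals, which the source does not state, and the function name is hypothetical.

```python
import math


def log_scale_midpoint(lower, upper):
    """Geometric mean of CI bounds: the OR implied by a log-symmetric interval."""
    return math.sqrt(lower * upper)


# Odds ratios and 95% CIs as reported in the text above.
reported = {
    "women vs men": (0.42, 0.25, 0.70),
    "bachelor's degree or higher": (7.83, 3.07, 19.97),
}
for label, (odds_ratio, lower, upper) in reported.items():
    implied = log_scale_midpoint(lower, upper)
    print(f"{label}: reported OR {odds_ratio}, implied by CI {implied:.2f}")
```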

Graph literacy and sociodemographic characteristics

The mean graph literacy score was 1.47 (SD 1.05, range 0–4, median 1) where a higher score indicates higher graph literacy. When dichotomized, 52.1% of participants (227/436) were in the low graph literacy group and 47.9% (209/436) were in the high graph literacy group. The relationship between graph literacy and gender (p = .22), having chronic conditions (p = .27), having a family history of cancer (p = .10), or higher education (p = .73) was not statistically significant.

Health literacy, subjective numeracy, and graph literacy

In the bivariate analysis, the relationship between graph literacy and health literacy was not statistically significant (p = .31). Respondents with higher SNS3 had higher odds of adequate health literacy (OR = 2.66, 95% CI 1.48–4.78, p < .001). Respondents with higher graph literacy had greater odds of higher SNS3 (OR = 1.49, 95% CI: 1.02–2.18, p = .037). In the multiple logistic regression, graph literacy was not associated with any independent variable.

Results corresponding to the secondary aim

Comprehension, preference, and graph literacy

Mean comprehension score by format was highest for table (1.91), then bar graph (1.85), followed by icon array (1.80) (possible range 0–3, p = .02 when comparing table and icon scores). Mean overall comprehension score was 5.6 (SD = 2.5). The majority of the sample preferred tables or bar graphs (39.2% and 38.1% respectively) (Fig 2).

Fig 2. Mean comprehension score (range 0–3) by graphical format and reported preference for one of the three formats.

In the simple logistic regressions, higher overall comprehension was associated with higher graph literacy (OR = 1.31, 95% CI 1.20–1.42, p < .001) and preference for icons was associated with lower graph literacy compared to preference for tables (OR = 0.59, 95% CI 0.35–0.97, p = .04). In the multiple logistic regression controlling for preference, SNS3 score, age, education, history of chronic disease, family history of cancer, and health literacy, graph literacy was still strongly associated with overall comprehension (OR = 1.29, 95% CI 1.18–1.41, p < .001).

Comprehension and preference

In the simple linear regression, preference for bars or icon arrays was associated with lower overall comprehension scores when compared to preference for tables (bars: coefficient = -0.82, 95% CI -1.35, -0.28, p = .003; icon arrays: coefficient = -1.11, 95% CI -1.73, -0.49, p < .001). In the multiple linear regression, preference for bars or icon arrays was still associated with lower overall comprehension scores when compared to preference for tables (bars: coefficient = -0.74, 95% CI -1.25, -0.23, p = .005; icon arrays: coefficient = -0.88, 95% CI -1.48, -0.28).

Order effects

When looking at order effects, we found that participants who saw bar graphs or icon arrays first had lower overall comprehension scores when compared to seeing tables first (bar: -0.62, 95% CI -1.20, -0.03, p = .039, icon arrays: -0.68, 95% CI -1.27, -0.09, p = .024). If participants saw icon arrays first they had a lower icon array score than if they saw the tables or bar charts first (OR = 0.76, 95% CI 0.66–0.93, p = .008). No other order effects were significant.

Discussion

Summary of main findings

We found a positive relationship between graph literacy and numeracy. Numeracy was related to gender, education, and health literacy. Only graph literacy was significantly associated with the total graph comprehension score. There was alignment between comprehension scores and preferred visual display format, with tables being preferred and yielding the highest comprehension score. Icon arrays yielded the lowest comprehension score and were least preferred. Regarding order effects, participants who saw bar graphs or icon arrays first had lower overall comprehension scores than those who saw tables first.

Strengths and limitations of the study

This study was the first online survey of graph literacy in a Medicaid-eligible population with lower education conducted in the US. The characteristics of our sample were broadly representative of the Medicaid-enrolled population. A major strength is the inclusion of participants on Medicaid, with lower education and lower graph literacy. Most study limitations are related to the nature of online survey data collection and the highly selected nature of the study sample. The measures used have not been fully validated for online use although most have previously been used with online samples. The response rate was low when considering the large number of people who received the online survey invitation (6,905). However, of those who accessed the survey and were eligible (answering “yes” to all screening questions), 94.6% completed the survey. Our sample was derived from an online panel of respondents (who did not communicate with one another, as far as can be determined) and may not be fully representative of the US population receiving Medicaid, with lower education, and presumed lower SES. Social desirability bias could have affected the responses participants provided, particularly on the subjective health literacy (85.8% of our sample self-reported adequate health literacy) and numeracy scales. This bias may have led some participants to respond to those questions based on social expectations, particularly for participants with higher education levels. The survey response rate of 20.2% is aligned with average response rates in online panels [38, 39].

Comparison with other studies

The mean graph literacy score was considerably lower (1.47, SD 1.05) than mean scores reported in the validation of the short form graph literacy scale in a sample of the US (mean: 2.21, SD 1.12) and German populations (mean: 2.03, SD 1.10). This may indicate that people of lower educational attainment and lower SES have considerably lower graph literacy than the general population and may not always benefit from information presented graphically. This hypothesis may also be supported by the fact that 39.2% of our sample reported preferring using the table to process numeric health information, while seemingly understanding information better in this format.

Nayak’s study of graph literacy in a highly educated sample of prostate cancer patients assessed graph literacy using the 13-item version of the scale [22]. It is therefore difficult to compare the full scale mean score to the short version’s score used in our study. However, consistent with our findings, Nayak et al. found that numeracy and graph literacy scores were correlated, suggesting that people with lower numeracy also have lower graph literacy. Brown et al., in a sample with higher educational attainment, also found that less numerate individuals may have less ability to interpret graphs [40, 41]. Our study found a similar relationship between subjective numeracy and graph literacy. Those findings question the previously accepted hypothesis that graphical representation of risks improves understanding in individuals with lower numeracy [6, 14, 42, 43]. Further research is needed.

Mean numeracy scores in our sample of survey respondents with lower educational attainment were considerably lower than numeracy scores found in Nayak’s highly educated sample, and McNaughton’s study [22, 44]. Several studies have shown that education does not predict numeracy [45–47]. In our study population, and consistent with Brown’s finding in a higher SES sample, education was related to subjective numeracy and health literacy [40]. Numeracy was also associated with health literacy (p < .001), consistent with Brown’s finding among higher SES participants.

In our sample, preference for a visual display format coincided with better comprehension. This finding is not consistent with prior studies [15, 40]. In this sample, the table format was preferred, immediately followed by the vertical bar graph. In Brown’s study, where tables had not been introduced, the vertical bar graph was preferred. Regarding comprehension, tables and vertical bar graphs were best understood, closely followed by icon arrays. In previous research, both bar charts and icon arrays have been shown to perform best, depending on the type of task at hand (verbatim versus gist), the denominator used, etc. [15]. In Scalia’s recent study, patients recruited from a vascular clinic preferred the pie chart format over icon array and reported better realization that risks increase with time for each option [48]. Given the small comprehension score differences between visual display formats but a significant association between stated preference and comprehension score in our sample, further research is required.

Practice implications

In light of our findings, we may want to investigate those issues further and possibly reconsider the use of graphic display formats among people of lower graph literacy, who are more likely to have lower education and lower numeracy. Further research that compares visual display formats among people of lower SES recruited in community settings (to prevent potential biases introduced by online samples) may be warranted. Offering multiple ways to present and process numeric health information, including numbers alone using natural frequencies as well as bar charts (given the stated preference), may be worth exploring [15]. Should clinicians and developers of health information also consider patient preferences in communicating risks? Effective communication strategies should consider the impact of lower education and lower numeracy on graph literacy among patients of lower SES. Alternative strategies that use video messages and nonverbal cues (e.g., voice intonations, facial expressions) as well as words to convey affective and cognitive meaning of the numbers to improve patient comprehension of numeric health information require further investigation [49].

Conclusions

Although participants frequently reported adequate health literacy, graph literacy in this Medicaid-eligible population was considerably lower than the mean scores previously collected in the general population in the US. Our study findings suggest that in people of lower educational attainment, graph literacy seems to be the strongest predictor of graph comprehension and is correlated with numeracy. Further, preference for a particular display format mirrored comprehension of this format. Both tables and bar charts led to slightly higher comprehension scores than icon arrays, and are thus understood differently by different people. It may therefore be necessary to reconsider the use of graphic display formats when designing information for people with lower educational levels. Given the stated limitations of the present survey, further research in this area is needed.

Supporting information

(PDF)

(PDF)

Acknowledgments

We would like to thank all the participants who completed this survey. We also thank Mirta Galesic and Yasmina Okan for their advice in designing the survey, and Julia Song for her help with the data collection procedure.

Data Availability

Data are available upon request. There are ethical restrictions on sharing the data imposed by the Institutional Review Board, the Dartmouth College Committee for the Protection of Human Subjects. We did not have permission to share the data without request because this was not mentioned in the participants' consent form. You can contact the Dartmouth Committee for the Protection of Human Subjects: Phone: (603) 646-6482, Fax: (603) 646-9141, Email: cphs@dartmouth.edu.

Funding Statement

Research reported in this manuscript did not receive external funding. It is the personal work of the authors.

References

- 1. Koh HK, Brach C, Harris LM, Parchman ML. A proposed 'health literate care model' would constitute a systems approach to improving patients' engagement in care. Health Aff (Millwood). 2013;32(2):357–67. doi:10.1377/hlthaff.2012.1205

- 2. Parker RM. What an informed patient means for the future of healthcare. Pharmacoeconomics. 2006;24 Suppl 2:29–33. doi:10.2165/00019053-200624002-00004

- 3. Baker GC, Newton DE, Bergstresser PR. Increased readability improves the comprehension of written information for patients with skin disease. J Am Acad Dermatol. 1988;19(6):1135–41. doi:10.1016/s0190-9622(88)70280-7

- 4. Jacobson TA, Thomas DM, Morton FJ, Offutt G, Shevlin J, Ray S. Use of a low-literacy patient education tool to enhance pneumococcal vaccination rates. A randomized controlled trial. JAMA. 1999;282(7):646–50.

- 5. Wallace AS, Seligman HK, Davis TC, Schillinger D, Arnold CL, Bryant-Shilliday B, et al. Literacy-appropriate educational materials and brief counseling improve diabetes self-management. Patient education and counseling. 2009;75(3):328–33. doi:10.1016/j.pec.2008.12.017

- 6. Schwartz LM, Woloshin S, Black WC, Welch HG. The role of numeracy in understanding the benefit of screening mammography. Ann Intern Med. 1997;127(11):966–72. doi:10.7326/0003-4819-127-11-199712010-00003

- 7. Koo K, Brackett CD, Eisenberg EH, Kieffer KA, Hyams ES. Impact of numeracy on understanding of prostate cancer risk reduction in PSA screening. PLoS One. 2017;12(12):e0190357. doi:10.1371/journal.pone.0190357

- 8. Reyna VF, Nelson WL, Han PK, Dieckmann NF. How numeracy influences risk comprehension and medical decision making. Psychol Bull. 2009;135(6):943–73. doi:10.1037/a0017327

- 9. Kutner M, Greenberg E, Jin Y, Paulsen C. The Health Literacy of America’s Adults: Results From the 2003 National Assessment of Adult Literacy. Washington D.C.: U.S. Department of Education, National Center for Education Statistics; 2006.

- 10. OECD. Skills Matter: Further Results from the Survey of Adult Skills. Paris: OECD Skills Studies; 2016.

- 11. Galesic M, Garcia-Retamero R. Statistical numeracy for health: a cross-cultural comparison with probabilistic national samples. Archives of internal medicine. 2010;170(5):462–8. doi:10.1001/archinternmed.2009.481

- 12. Zipkin DA, Umscheid CA, Keating NL, Allen E, Aung K, Beyth R, et al. Evidence-based risk communication: a systematic review. Ann Intern Med. 2014;161(4):270–80.

- 13. Lipkus IM. Numeric, verbal, and visual formats of conveying health risks: suggested best practices and future recommendations. Med Decis Making. 2007;27(5):696–713.

- 14. Lipkus IM, Hollands JG. The visual communication of risk. J Natl Cancer Inst Monogr. 1999(25):149–63.

- 15. Trevena LJ, Zikmund-Fisher BJ, Edwards A, Gaissmaier W, Galesic M, Han PK, et al. Presenting quantitative information about decision outcomes: a risk communication primer for patient decision aid developers. BMC medical informatics and decision making. 2013;13 Suppl 2:S7.

- 16. Garcia-Retamero R, Galesic M, Gigerenzer G. Do icon arrays help reduce denominator neglect? Med Decis Making. 2010;30(6):672–84.

- 17. Garcia-Retamero R, Cokely ET. Effective communication of risks to young adults: using message framing and visual aids to increase condom use and STD screening. J Exp Psychol Appl. 2011;17(3):270–87. doi:10.1037/a0023677

- 18. Garcia-Retamero R, Galesic M. How to reduce the effect of framing on messages about health. J Gen Intern Med. 2010;25(12):1323–9.

- 19. Fagerlin A, Wang C, Ubel PA. Reducing the influence of anecdotal reasoning on people's health care decisions: is a picture worth a thousand statistics? Med Decis Making. 2005;25(4):398–405.

- 20. Gurmankin AD, Helweg-Larsen M, Armstrong K, Kimmel SE, Volpp KG. Comparing the standard rating scale and the magnifier scale for assessing risk perceptions. Med Decis Making. 2005;25(5):560–70. doi:10.1177/0272989X05280560

- 21. Ancker JS, Senathirajah Y, Kukafka R, Starren JB. Design features of graphs in health risk communication: a systematic review. J Am Med Inform Assoc. 2006;13(6):608–18. doi:10.1197/jamia.M2115

- 22. Nayak JG, Hartzler AL, Macleod LC, Izard JP, Dalkin BM, Gore JL. Relevance of graph literacy in the development of patient-centered communication tools. Patient Educ Couns. 2016;99(3):448–54. doi:10.1016/j.pec.2015.09.009

- 23. Galesic M, Garcia-Retamero R. Graph literacy: a cross-cultural comparison. Med Decis Making. 2011;31(3):444–57. doi:10.1177/0272989X10373805

- 24. Friel S, Curcio F, Bright GW. Making sense of graphs: Critical factors influencing comprehension and instructional implications. Journal for Research in Mathematics Education. 2001;32(2):124–58.

- 25. Aldrich FK. Graphicacy: the fourth ‘R’. Prim Sci Rev. 2000;21:382–90.

- 26. Okan Y, Janssen E, Galesic M, Waters EA. Using the Short Graph Literacy Scale to Predict Precursors of Health Behavior Change. Med Decis Making. 2019;39(3):183–95. doi:10.1177/0272989X19829728

- 27. Spence I. No humble pie: the origins and usage of a statistical chart. J Educ Behav Stat. 2005;30:353–68.

- 28. Gaissmaier W, Wegwarth O, Skopec D, Muller AS, Broschinski S, Politi MC. Numbers can be worth a thousand pictures: individual differences in understanding graphical and numerical representations of health-related information. Health Psychol. 2012;31(3):286–96. doi:10.1037/a0024850

- 29. Alam S, Elwyn G, Percac Lima S, Grande SW, Durand MA. Assessing the acceptability and feasibility of encounter decision aids for early stage breast cancer targeted at underserved patients. BMC medical informatics and decision making. 2016;16(1):147. doi:10.1186/s12911-016-0384-2

- 30. Carey G, Crammond B, De Leeuw E. Towards health equity: a framework for the application of proportionate universalism. Int J Equity Health. 2015;14:81.

- 31. Eysenbach G. Improving the quality of Web surveys: the Checklist for Reporting Results of Internet E-Surveys (CHERRIES). J Med Internet Res. 2004;6(3):e34. doi:10.2196/jmir.6.3.e34

- 32. Feldman-Stewart D, Kocovski N, McConnell BA, Brundage MD, Mackillop WJ. Perception of quantitative information for treatment decisions. Med Decis Making. 2000;20(2):228–38. doi:10.1177/0272989X0002000208

- 33. Chew LD, Griffin JM, Partin MR, Noorbaloochi S, Grill JP, Snyder A, et al. Validation of screening questions for limited health literacy in a large VA outpatient population. J Gen Intern Med. 2008;23(5):561–6. doi:10.1007/s11606-008-0520-5

- 34. McNaughton CD, Cavanaugh KL, Kripalani S, Rothman RL, Wallston KA. Validation of a Short, 3-Item Version of the Subjective Numeracy Scale. Med Decis Making. 2015;35(8):932–6. doi:10.1177/0272989X15581800

- 35. Fagerlin A, Zikmund-Fisher BJ, Ubel PA, Jankovic A, Derry HA, Smith DM. Measuring numeracy without a math test: development of the Subjective Numeracy Scale. Med Decis Making. 2007;27(5):672–80. doi:10.1177/0272989X07304449

- 36. Rolison JJ, Wood S, Hanoch Y, Liu PJ. Subjective numeracy scale as a tool for assessing statistical numeracy in older adult populations. Gerontology. 2013;59(3):283–8. doi:10.1159/000345797

- 37. Zikmund-Fisher BJ, Smith DM, Ubel PA, Fagerlin A. Validation of the Subjective Numeracy Scale: effects of low numeracy on comprehension of risk communications and utility elicitations. Med Decis Making. 2007;27(5):663–71. doi:10.1177/0272989X07303824

- 38. Pedersen MJ, Nielsen CV. Improving Survey Response Rates in Online Panels: Effects of Low-Cost Incentives and Cost-Free Text Appeal Interventions. Social Science Computer Review. 2016;34(2).

- 39. Tourangeau R, Couper MP, Steiger DM. Humanizing self-administered surveys: experiments on social presence in web and IVR surveys. Computers in Human Behavior. 2003;19(1):1–24.

- 40. Brown SM, Culver JO, Osann KE, MacDonald DJ, Sand S, Thornton AA, et al. Health literacy, numeracy, and interpretation of graphical breast cancer risk estimates. Patient education and counseling. 2011;83(1):92–8. doi:10.1016/j.pec.2010.04.027

- 41. Nelson W, Reyna VF, Fagerlin A, Lipkus I, Peters E. Clinical implications of numeracy: theory and practice. Ann Behav Med. 2008;35(3):261–74.

- 42. Galesic M, Garcia-Retamero R, Gigerenzer G. Using icon arrays to communicate medical risks: overcoming low numeracy. Health Psychol. 2009;28(2):210–6. doi:10.1037/a0014474

- 43. Garcia-Retamero R, Galesic M. Communicating treatment risk reduction to people with low numeracy skills: a cross-cultural comparison. American journal of public health. 2009;99(12):2196–202. doi:10.2105/AJPH.2009.160234

- 44. McNaughton C, Wallston KA, Rothman RL, Marcovitz DE, Storrow AB. Short, subjective measures of numeracy and general health literacy in an adult emergency department. Academic emergency medicine: official journal of the Society for Academic Emergency Medicine. 2011;18(11):1148–55.

- 45. Estrada C, Barnes V, Collins C, Byrd JC. Health literacy and numeracy. JAMA. 1999;282(6):527. doi:10.1001/jama.282.6.527

- 46. Lipkus IM, Samsa G, Rimer BK. General performance on a numeracy scale among highly educated samples. Medical decision making: an international journal of the Society for Medical Decision Making. 2001;21(1):37–44. doi:10.1177/0272989X0102100105

- 47. Sheridan SL, Pignone M. Numeracy and the medical student's ability to interpret data. Eff Clin Pract. 2002;5(1):35–40.

- 48. Scalia P, O'Malley AJ, Durand MA, Goodney PP, Elwyn G. Presenting time-based risks of stroke and death for Patients facing carotid stenosis treatment options: Patients prefer pie charts over icon arrays. Patient Educ Couns. 2019. doi:10.1016/j.pec.2019.05.004

- 49. Azevedo RFL, Morrow D, Hasegawa-Johnson M, editors. Improving Patient Comprehension of Numeric Health Information. Proceedings of the Human Factors and Ergonomics Society Annual Meeting; 2016.
