SUMMARY:

Fueled by new techniques, computational tools, and broader availability of imaging data, artificial intelligence has the potential to transform the practice of neuroradiology. The recent exponential increase in publications related to artificial intelligence and the central focus on artificial intelligence at recent professional and scientific radiology meetings underscore its importance. There is growing momentum behind leveraging artificial intelligence techniques to improve workflow, diagnosis, and treatment and to enhance the value of quantitative imaging techniques. This article explores the reasons why neuroradiologists should care about the investments in new artificial intelligence applications, highlights current activities and the roles neuroradiologists are playing, and renders a few predictions regarding the near future of artificial intelligence in neuroradiology.

Why Should We Care?

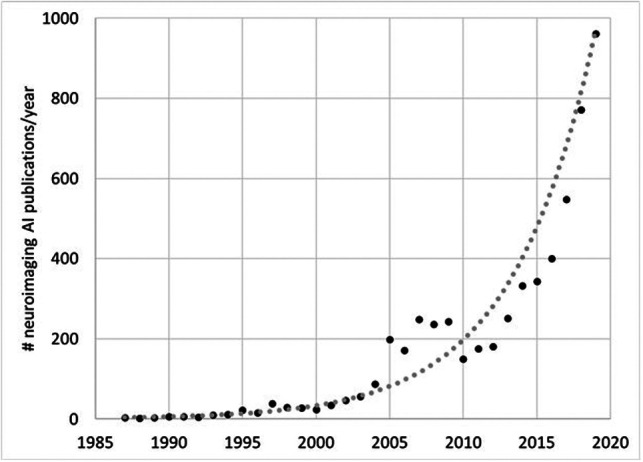

All radiologists have by now heard, on the one hand, far-reaching statements from technology pundits and the mainstream media about how our jobs will be in jeopardy because of artificial intelligence (AI) and, on the other hand, the advice that we should embrace AI as a technology that will empower rather than replace radiologists. On a practical level, how do we move ahead? In recent years, interest in how AI may be applied to medical imaging has increased exponentially, reflected in the rapid rise in AI publications from ∼200 peer-reviewed publications in 2010 to about 1000 in 2019 (Fig 1). Neuroradiology is the most highly represented subspecialty in these works, accounting for approximately one-third of all such articles.1 The influence of neuroradiology in AI research may be attributable to a variety of factors: 1) neuroimaging comprises rich, multidimensional, multicontrast, and multimodality data that lend themselves well to machine learning tasks; 2) there are well-established public neuroimaging datasets, including the Alzheimer Disease Neuroimaging Initiative for Alzheimer disease (ADNI; adni.loni.usc.edu), the Michael J. Fox Foundation data for Parkinson disease (www.ppmi-info.org/data), Anatomical Tracings of Lesions After Stroke for stroke (ATLAS; https://doi.org/10.1101/179614), and, for brain tumors, The Cancer Imaging Archive (https://www.cancerimagingarchive.net) and the Cancer Genome Atlas Program (https://www.cancer.gov/tcga); 3) a long history of quantitative neuroimaging research informs clinical practice; 4) the subspecialty's history at the forefront of imaging innovation may attract researchers; and 5) myriad unsolved problems pertaining to neuroscience and neurologic disease remain.

Fig 1.

Total number of publications from PubMed using search of “brain” AND [“artificial intelligence” OR “machine learning” OR “deep learning”] showing exponential growth in AI-related neuroimaging publications since 1987.
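As a rough check on the growth described above, the two approximate counts quoted in the text (about 200 publications in 2010 and about 1000 in 2019) imply an average yearly growth rate if one assumes smooth exponential growth between those two points. The sketch below is illustrative only: the function name and the constant-rate assumption are introduced here and are not part of the original PubMed analysis.

```python
import math


def implied_annual_growth(count_start, count_end, years):
    """Average yearly growth rate, assuming constant exponential growth."""
    return (count_end / count_start) ** (1.0 / years) - 1.0


# Approximate counts quoted in the text, not exact PubMed results.
rate = implied_annual_growth(200, 1000, 2019 - 2010)

# Time for the yearly publication count to double at that constant rate.
doubling_time = math.log(2) / math.log(1 + rate)

print(f"Implied growth: {rate:.1%} per year")
print(f"Implied doubling time: {doubling_time:.1f} years")
```

Under these assumptions, a 5-fold rise over 9 years corresponds to growth of a little under 20% per year.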

In sharp contrast with the appealing concept of AI applied to imaging and the exponential growth of research, mainstream clinical adoption of AI algorithms remains slow.2 Indeed, a number of obstacles must be overcome to allow successful clinical implementation of AI tools. These include not only validation of the accuracy of such tools but ethical challenges such as bias and practical considerations such as information technology integration with other systems, including the PACS and Electronic Health Records.

Neuroradiologists are well-equipped to partner with AI experts to provide the expertise to help solve these issues. The American Society of Neuroradiology (ASNR), the American Society of Functional Neuroradiology (ASFNR), and their members have pledged to become the stewards of the clinical implementation of AI in neuroradiology. In this article, we will outline a roadmap to achieve this goal. The common hope is that an optimal implementation of AI for neuroradiology will aid us in existing challenges in neuroimaging (eg, by providing quantitative results in a digestible manner, automating postprocessing of volumetric data, facilitating interpretation of studies with many images, and so forth) and open new horizons in diagnosis and treatment of neurologic diseases for which imaging has thus far played a limited role, such as neuropsychiatric disorders.

Current Clinical AI Portfolio and Emerging Applications in Neuroradiology Coming Soon to a PACS Near You

The past few years have seen the introduction of a vast portfolio of AI-enabled clinical applications in neuroradiology by academic researchers and commercial entities. Given the unprecedented flexibility and performance of deep learning technology, these tools span a broad range of categories, including disease detection, lesion quantification, segmentation, image reconstruction, outcome prediction, workflow optimization, and scientific discovery. A number of current proof-of-concept, cutting-edge applications are likely to mature into mainstream, routine use (Table). In the beginning, workflow optimization tools may reach implementation before deep learning–based computer-aided diagnosis tools because of the additional regulatory burden on algorithms in the latter category. In terms of workflow optimization, AI algorithms are already being applied to tasks such as clinical decision support, scheduling, predicting patient behavior (eg, same-day cancellations or no-shows), wait-time determination,3 protocol aid (eg, flagging of incorrect orders, enforcement of appropriateness criteria), accuracy of coding, and enhancing reporting and communication (eg, optimization of case assignments to neuroradiologists, automatic population of structured reports, longitudinal tracking of lesions).

Promising clinical applications of AI in neuroradiology

| Applications |
|---|
| Classification of abnormalities (eg, urgent findings such as hemorrhage, infarct, mass effect) |
| Detection of lesions (eg, metastases) |
| Prediction of outcome (eg, predicting final stroke volume, predicting tumor type, and prognosis) |
| Postprocessing tools (eg, brain tumor volume quantification) |
| Image reconstruction (eg, fast MR imaging, low-dose CT) |
| Image enhancement (eg, noise reduction, super-resolution) |
| Workflow (eg, automate protocol choice, optimize scanner efficiency) |

Detecting abnormalities on imaging is the usual first thought when considering AI uses in medical imaging. Primary emphasis has been placed on identifying urgent findings that enable worklist prioritization for abnormalities such as intracranial hemorrhage (Fig 2),4-10 acute infarction,11-14 large-vessel occlusion,15,16 aneurysm,17-19 and traumatic brain injury20-22 on noncontrast head CT. This paradigm has been promoted, in large part, by the new accelerated FDA clearance pathway for computer-aided triage devices. For disease quantification, applications have focused on estimating the volume of anatomic structures for Alzheimer disease,23,24 ventricular size for hydrocephalus,25-27 lesion load in disorders such as multiple sclerosis,28-30 tumor volume for intracranial neoplasms,31,32 identification of metastatic brain lesions,33-35 and vertebral compression fractures.36,37 AI technology has the potential to ease the burden of time-consuming and rote tasks by facilitating 3D reconstructions and segmentation, identifying small new lesions, and quantifying longitudinal changes (ie, computer-aided change detection).

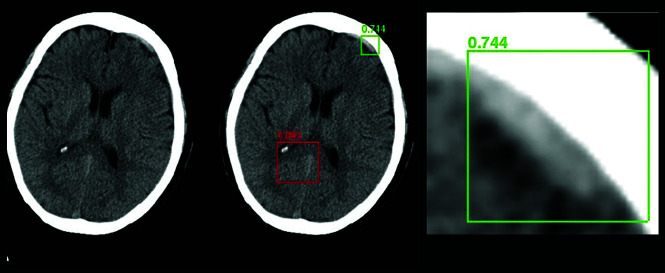

Fig 2.

A Regions with Convolutional Neural Networks deep learning approach to intracranial hemorrhage detection on a noncontrast head CT examination that uses a bounding box approach to generate a region proposal to focus the AI algorithm. The green box represents successful detection of a small, acute subdural hematoma (true-positive), while the red box denotes no abnormal hemorrhage (a true-negative).
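Bounding-box detections such as those in Fig 2 are commonly scored by how much they overlap a reference box drawn by an expert. The sketch below computes intersection over union (IoU) for two axis-aligned boxes. The coordinate convention, the example boxes, and the 0.5 cutoff are illustrative assumptions and are not details of the algorithm shown in the figure.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if any.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical pixel coordinates for a predicted box and an expert reference box.
predicted = (100, 120, 160, 180)
reference = (110, 130, 170, 190)

overlap = iou(predicted, reference)
is_hit = overlap >= 0.5  # assumed cutoff for counting a detection as correct
print(f"IoU = {overlap:.2f}, counted as detection: {is_hit}")
```

The cutoff is a design choice: a stricter value rewards tight localization, whereas a looser one may be adequate when the box only needs to direct the reader's attention.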

Finally, deep learning is proving to be remarkably effective in reconstructing diagnostic-quality images and removing artifacts, despite extreme protocol parameters, for example in the context of rapidly accelerated MR imaging (Fig 3),38-41 ultralow radiation dose CT or nuclear medicine acquisitions,42 ultralow intravenous contrast protocols (Fig 4),43 and achieving super-resolution.44 Such techniques are poised to change the potential indications for long studies such as MR imaging and radiation-heavy studies such as CT perfusion and open the door to high-fidelity dynamic imaging, particularly suited to deep learning–based reconstructions based on the redundancy of spatial information with time. Multiple companies are now applying for FDA clearance for image denoising45,46 and enhanced-resolution tools that use convolutional neural networks.45

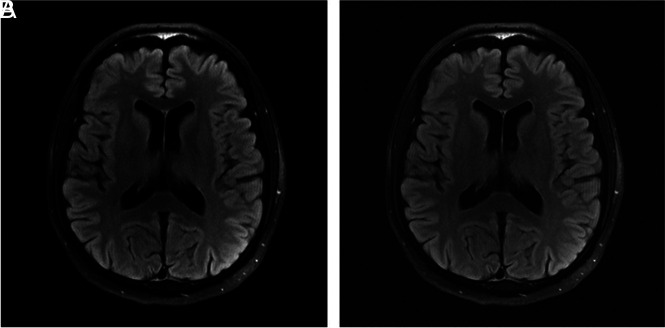

Fig 3.

Deep learning–based image reconstruction algorithms will pave the way to accelerating MR imaging acquisitions and reduce scan time; here a deep learning model (A) achieves a high-quality brain MR imaging reconstruction with 6-fold undersampling, compared with the criterion standard, fully sampled image (B) (unpublished data courtesy of Yvonne Lui, Tullie Murrell, May 24, 2020).

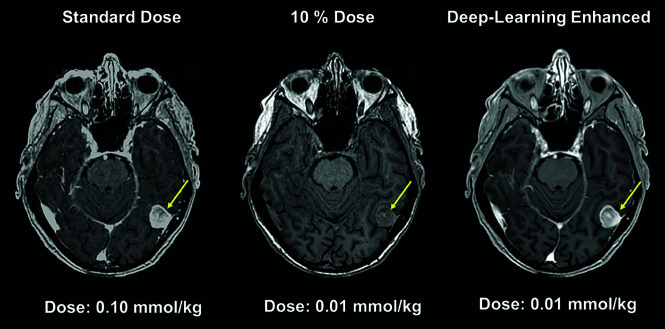

Fig 4.

Deep learning–based model used to generate diagnostic-quality postcontrast MR images using ultra-low-dose gadolinium.

Machine learning tools are facile at incorporating diverse data into a unified algorithm. These algorithms can be used to combine noninvasive imaging with clinical and laboratory metrics to facilitate outcome prediction. This will extend the practice of neuroradiologists and make us even more critically important in the care team. Current examples include prediction of molecular signatures of primary brain tumors such as isocitrate dehydrogenase (IDH) mutation, 1p/19q codeletion, MGMT promoter, and epidermal growth factor receptor amplification,47-49 and prediction of human papilloma virus for head and neck squamous cell carcinoma.50 Other predictive end points include estimation of progression-free or overall survival for patients with various CNS malignancies,51,52 determination of disease progression in dementia,53,54 and the risk of secondary stroke.14 Deep learning algorithms may facilitate the discovery of novel disease features. Initial work focused on supervised and semisupervised techniques to yield machine-curated atlases of tumor phenotypes34,47-49 as well as novel perfusion maps for core infarct estimation.13,14 As techniques develop, future work may incorporate ensembles of fully unsupervised deep learning models to study population-level imaging archives to identify new dominant disease patterns and phenotypic risk factors. Together, applications such as the ones described here will not only advance our understanding of neurologic disease but emphasize the critical role that imaging will have in the future of health care.

Integration of AI into Neuroradiology Practice: Paths of Least Resistance

The single most important feature of any software tool to achieve clinical success is to provide value. Value may come in many forms: from assisting with laborious tasks to providing improved diagnoses to saving time. Automation of repetitive manual tasks by technologists, 3D laboratory postprocessing personnel, and radiologists may be especially useful. Because of regulatory uncertainty in the AI realm, particularly pertaining to medical applications, practical implementation may come earlier for those AI algorithms that assist or augment tasks rather than mimic the radiologist. Other factors important to successful algorithm adoption include ease of integration with existing information technology infrastructure, processing speed, acceptance by end-users (whether they are radiologists, referring physicians, or patients themselves), and, of economic import, potential payer coverage of services. There are challenges to the successful implementation of AI algorithms into general clinical practice, made clear by early experiences with models that do not generalize well to real clinical cases; accuracy/sensitivity/specificity levels, which may look good in a research article but may not be acceptable in practice; and a potential penalty rather than a gain in time to interpret model outputs.

Current workflows already incorporate postprocessing tasks such as 3D visualization. While such tools are not generally based on machine learning models, they may serve as a roadmap for future AI algorithms. For example, perfusion analysis for acute stroke has found a place in the clinical workflow at many medical centers. Down the line, it seems inevitable that deep learning–based tools will improve current models on the basis of threshold methods for analysis. Integration of such AI-based techniques is already occurring, with the latest suites of tools from many different vendors. In such cases, one can imagine rather seamless incorporation into existing workflow.

Another point of integration is worklist optimization. A popular AI neuroradiology application is the use of deep neural networks to identify acute hemorrhage on noncontrast brain CT.4-10 Several companies have received FDA clearance for competing tools that identify positive hemorrhage cases and re-prioritize them on the worklist. Ideally, the benefits would be in reducing turnaround time for studies with critical findings. In reality, every neuroradiologist knows that the definition of “critical” is unclear. Not every brain hemorrhage has the same level of urgency: Not only are some hemorrhages managed conservatively (eg, small areas of petechial hemorrhage postinfarct) but some are even expected findings (eg, postoperative cases). Large hemorrhages for which immediate triage might be appropriate are often already flagged in current workflows at the time of scanning by technologists or even before the study is performed by the referring physician who asks for a prioritized interpretation. Thus, the true utility of such prioritization schemes is uncertain. In the prioritization of one study, another inevitably becomes de-prioritized; the ramifications of this re-ordering are unclear. Furthermore, the prevalence of abnormalities in the patient population is important to consider because this affects the performance of the algorithm. Such tools may be more or less useful depending on the practice scenario; for example, an AI algorithm may be a useful aid for trainees but of limited value in busy hospital-based practices with low overall turnaround time.
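One way to see the effect of prevalence is through predictive values, which follow from sensitivity, specificity, and prevalence by Bayes' rule. The sketch below uses a hypothetical detector with 95% sensitivity and 95% specificity; these figures and the three prevalence values are assumptions chosen for illustration and do not describe any cleared product.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test applied to a population with the given prevalence."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Hypothetical detector evaluated in three practice settings.
for prevalence in (0.02, 0.10, 0.30):
    ppv, npv = predictive_values(0.95, 0.95, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

With these assumed figures, fewer than 3 in 10 flagged studies are true-positives at 2% prevalence, whereas nearly 9 in 10 are at 30% prevalence. The same algorithm could therefore reorder a worklist usefully in one setting and generate mostly false alarms in another.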

AI tools may be used instead in identifying normal rather than abnormal. Unanswered questions here include where specificity thresholds should be set for true-negatives and how to handle potential cognitive biases that may be introduced by preliminary AI device interpretations. Legal ramifications with regard to liability remain undefined, akin to the autonomous automobile industry.55 It may be difficult to persuade the public to accept AI tool interpretations for important medical determinations unless they are supervised by a medical expert.
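The threshold question can be made concrete with a small sketch. Suppose a model outputs a score for "abnormal" and studies scoring below a cutoff are labeled likely normal. One possible policy, assumed here purely for illustration, is to pick the highest cutoff that still meets a minimum sensitivity for abnormal studies and then see what specificity results. The scores, labels, and function name below are hypothetical.

```python
def rule_out_threshold(scores, labels, min_sensitivity):
    """Highest cutoff whose sensitivity for abnormal studies meets the target.

    A study is called abnormal when its score is >= the cutoff.
    labels: 1 = abnormal, 0 = normal.
    Returns (cutoff, sensitivity, specificity).
    """
    abnormal = [s for s, y in zip(scores, labels) if y == 1]
    normal = [s for s, y in zip(scores, labels) if y == 0]
    # Try candidate cutoffs from highest to lowest and stop at the first that qualifies.
    for cutoff in sorted(set(scores), reverse=True):
        sensitivity = sum(s >= cutoff for s in abnormal) / len(abnormal)
        if sensitivity >= min_sensitivity:
            specificity = sum(s < cutoff for s in normal) / len(normal)
            return cutoff, sensitivity, specificity
    raise ValueError("no cutoff meets the sensitivity target")


# Toy validation set: model scores with expert labels (hypothetical values).
scores = [0.02, 0.05, 0.10, 0.20, 0.35, 0.40, 0.60, 0.75, 0.90, 0.95]
labels = [0, 0, 0, 1, 0, 0, 1, 1, 1, 1]

for target in (1.0, 0.8):
    cutoff, sens, spec = rule_out_threshold(scores, labels, target)
    print(f"target {target:.0%}: cutoff {cutoff}, sensitivity {sens:.0%}, specificity {spec:.0%}")
```

In this toy set, demanding that no abnormal study be missed lowers the cutoff enough that only 3 of the 5 normal studies can be labeled likely normal, while tolerating one missed abnormal study clears all 5. Where to sit on that trade-off is a clinical and medicolegal decision rather than a purely technical one.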

Broader applications to consider are AI models applied on amalgamated data. Compiled statistical data could help document the prevalence of risk factors or severity of disease (such as vascular atheromatous quantification) in populations, potentially informing insurance reimbursement of health systems in an accountable care environment.

For successful implementation, a host of informatics details need attention: infrastructure needs, potential cloud-based computing, appropriate handling of protected health information, backup systems, and monitoring systems. A systematic method for validation and quality control must be deployed and monitored to ensure consistent performance and continuous updating of the algorithm. Entirely new platforms are needed to address these needs. While there are many works in development, currently there is little standardization. The Integrating the Healthcare Enterprise Radiology Technical Committee has published 2 profiles that attempt to standardize critical aspects of AI processes: AI Workflow for Imaging (https://mailchi.mp/ihe/ihe-rad-tf-supplement-published-pc-2020-03-30) and AI Results (https://mailchi.mp/ihe/ihe-rad-tf-supplement-published-ti-2020-07-16). These profiles will help guide the vendors to create both intraoperable and interoperable solutions in the future.

Future Role of Neuroradiologists in an AI World

For some, the emergence of AI medical imaging tools engenders palpable concern for the future of radiology that typically centers around the threat of increasing automation to job security.56 However, previous experience with automation in medicine tells a different story. Automated screening of Pap smears using a neural network was first available in 199257 and has become more advanced since then. Although the initial promise was that these tools could identify cases that would no longer require cytopathologist review, final readouts of slides based on software alone never became widely accepted.57 In fact, a large study showed increased productivity but not increased sensitivity compared with manual review alone in more than 70,000 cases.58 Similarly, computer-assisted electrocardiography interpretation is commonplace and has achieved a savings in analysis time for experienced readers.59 Human expert over-reading remains the norm. Incorrect computer-assisted interpretations that were not properly corrected by over-reading physicians have led to misdiagnoses and inappropriate treatment.59,60

Thus, the threats of AI to the size of the radiology workforce may be overstated.56,61,62 Tools to augment performance rather than replace radiologists are the most likely near-term outlook, with the benefits of augmentation being many: improved quantification; reduction in repetitive, rote tasks; and productive allocation of tasks to radiologists. In the longer term, our field is likely to be transformed in ways that are difficult to predict. AI is set to alter many different aspects of practice beyond diagnostic support. For example, AI is just beginning to be applied to enhance radiology education by tailoring the presentation of teaching material to specifically address the needs and learning styles of individual trainees.63 Facilitating our community’s engagement and comfort level with implementing and using AI models will not succeed without addressing the evolving needs of AI education. AI education will need to target learners of all levels from medical students to senior practitioners, from tech-savvy to tech-illiterate.63

How Will We Get There?

Leading radiology and imaging informatics organizations have established parallel AI initiatives in a flurry of enthusiasm for AI. Initiatives are far-ranging and include developing educational programs for radiologists, providing areas of synergy between clinical radiologists and the engineering community, fostering industry relationships, and providing input into regulatory processes. Federal effort by the National Institutes of Health includes sponsoring forums on AI in medical imaging. AI challenges and dataset curation are being organized by the American College of Radiology (ACR) and the Radiological Society of North America (RSNA). As with early adoption of any new technology, parallel exploration is necessary on the path toward maturation of the field. Neuroradiologists in the ASNR and ASFNR are providing synergistic leadership as evidenced by the successful creation of an AI workshop that is now part of the annual meetings of both societies. The ASNR has established an AI Task Force, which is charged with advising and providing guidance to the society on this topic. The AI working group provides a forum for interested members, which includes a dedicated AI study group supported by the ASNR.

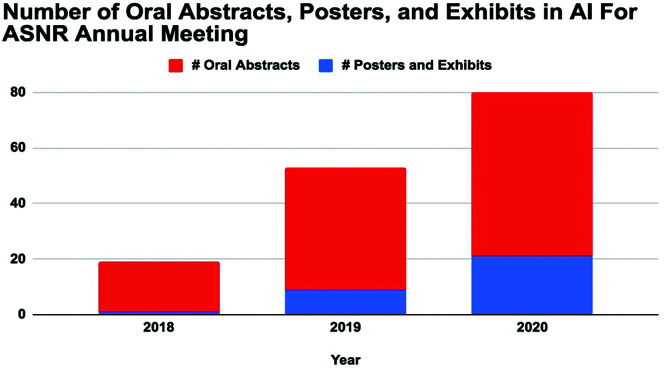

ASNR, the major subspecialty society for neuroradiologists, seeks to identify key collaborative opportunities for AI in neuroradiology, which include influential national and international organizations and its own subspecialty societies (eg, the ASFNR). The combined strengths of the ASNR with its focus on state-of-the-art education and the ASFNR with its emphasis on evolving, innovative techniques and research form a natural synergy. The trajectory is clear: 18 oral abstracts and 1 poster on AI at the 2018 ASNR annual meeting; 35 oral abstracts and 9 posters and exhibits in 2019; and now 59 oral abstracts and 21 posters and exhibits in 2020 (Fig 5). Similarly, AI abstracts at the ASFNR annual meeting have gone from 6.25% to 23.4% of total abstracts in 2 years. Last year saw the inaugural launch of an innovative, hands-on AI workshop, a major collaborative effort between the ASNR and ASFNR. This 2-part workshop (the first part at the spring ASNR annual meeting, the second part at the fall ASFNR annual meeting) incorporates an ambitious curriculum ranging from basic coding to ethical considerations, capped by individual year-long projects by each attendee with formal mentorship. The workshop was a success in its first year and is currently oversubscribed for 2020.

Fig 5.

Increasing numbers of oral abstracts and posters in artificial intelligence presented at the ASNR Annual Meetings in 2018 (Vancouver, British Columbia, Canada), 2019 (Boston, Massachusetts), and 2020 (Virtual).

In addition, neuroradiologists are providing important contributions in several major collaborations toward enriching neuroimaging AI resources in the public domain. Here, we summarize a few of the related activities of major radiologic parent organizations, the ACR and the RSNA, and discuss how we can support and partner with their efforts.

1) The ACR Data Science Institute (https://www.acrdsi.org) offers several resources, including an innovative AI-LAB and the Define-AI Directory. The AI-LAB provides simple browser-based tools empowering radiologists with basic hands-on access to tools for creating, testing, and validating machine learning algorithms without requiring extensive programming background. The Define-AI Directory contains a list of potential use cases with the aim of cataloging important clinical problems that may be good candidates for potential AI solutions. The goal is to organize and template key findings with narrative descriptions and flow charts to aid developers and industry in finding AI-based solutions. Representatives from our societies have been called on to help develop neuroradiology use cases for this initiative (https://www.acrdsi.org/DSI-Services/Define-AI). The ACR offers tools to evaluate algorithm performance using qualified datasets as an aid in the FDA premarket review process. In its role as a liaison to the federal government, the ACR interacts with the FDA with the goal of helping to define the evaluation/approval processes for industry to uphold the standards of patient safety and algorithm performance while streamlining the review process.

2) The RSNA has succeeded in developing imaging informatics standards by leveraging its relationship to industry partners, for example with the creation of international standards organizations such as Integrating the Healthcare Enterprise. Just last year, the RSNA recognized the potential of AI in neuroradiology and spearheaded an AI challenge for head CT hemorrhage detection (https://www.kaggle.com/c/rsna-intracranial-hemorrhage-detection).64 The dataset of more than 28,000 noncontrast head CT studies from 4 different organizations was labeled by neuroradiologist volunteers. More than 1300 teams from around the world competed in the challenge, and the dataset remains available through the RSNA as an open-access resource.

3) The Society for Imaging Informatics in Medicine (SIIM) is less well-known to practicing radiologists, but critically important to our field. SIIM is devoted to developing and providing education for radiology informatics and has primarily nonphysician members (eg, physicists, engineers, information technology professionals). It is the certifying body for the imaging informatics professionals (Certification for Imaging Informatics Professionals). SIIM maintains close alliances with broader medical informatics bodies including the Healthcare Information and Management Systems Society and the American Medical Informatics Association, creating an important tie between radiology informatics and medical informatics more generally. SIIM provides year-long informatics education through SIIM University and has sponsored AI and innovation challenges. SIIM currently hosts a separate meeting devoted to AI, the Conference on Machine Intelligence in Medical Imaging. This conference brings engineers and imaging experts together to focus on the problems faced with developing datasets, creating infrastructure to support machine learning, and clinical applications. Many of the scientific presentations, workshops, and hands-on presentations are neuroradiology-related and may be of interest to ASNR and ASFNR members.

As neuroimaging experts, we should be engaged in AI applications as they relate to neurointerventional radiology, as there has been considerable effort in stroke imaging. Working with our partners in vascular neurology and vascular neurosurgery toward AI applications in neurovascular disease including diagnosis, patient selection, and treatment is a priority.

One major obstacle to quality medical imaging AI research and development is the paucity of well-curated imaging datasets. Quality and diversity of datasets will be important to address training data biases to develop tools in an ethical and responsible manner.65,66 There is a need to be mindful of health disparities that can inadvertently be introduced or exacerbated in AI algorithms or data curation.67 Neuroradiologists can provide tremendous value by promoting standards of development for multicenter datasets to address high-profile clinical-use cases derived from our institutional membership and promoting new and additional neuroradiology-focused data science challenges following the lead of previous challenges led by ASFNR, RSNA, and the Medical Image Computing and Computer Assisted Intervention Society. Furthermore, the importance of data sharing and algorithm sharing cannot be stressed enough. If we, as a community, look to AI as a great opportunity to improve practice, it will be critical to share resources to derive the greatest benefit.

CONCLUSIONS

The ASNR and ASFNR are poised and ready to contribute to ongoing effort in meeting the new needs of neuroradiologists in AI. We will ensure the ethical application of AI and protect patients’ rights. The combined strengths of our societies in knowledge, experience, education, research, clinical expertise, and advocacy place us in the best possible position to influence and inform the future of the field. While continuing our own effort, we will actively seek ways to build complementary programs with others to contribute positively to key neuroradiology-related AI initiatives nationally and internationally. Conversely, parent radiology organizations such as the RSNA, ACR, and SIIM will seek synergies with neuroradiology leaders to tap into our knowledge, neuroimaging expertise, and commitment to the future of AI in imaging.

Since the inception of neuroradiology as a subspecialty within radiology, neuroradiologists have been the champions of emerging and innovative technologies that have transformed neuroimaging and patient care. The arrival of artificial intelligence represents another unique, historic opportunity for neuroradiologists to be the leaders and drivers of change with the implementation of AI algorithms into routine clinical practice. There is a wealth of possibilities, from enhanced operational efficiencies to reduce health care costs and improve access to the prediction of genomics from brain MR images. Thus, AI will provide neuroradiologists not with artificial but with augmented intelligence, making us increasingly indispensable to our patients and the clinical teams with whom we work.

ABBREVIATION:

- AI: artificial intelligence

References

- 1.Pesapane F, Codari M, Sardanelli F. Artificial intelligence in machine learning: threat or opportunity? Radiologists again at the forefront of innovation. Eur Radiol Exp 2018;2:35 10.1186/s41747-018-0061-6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Balasubramanian S. Artificial Intelligence is Not Ready for the Intricacies of Radiology. Forbes https://www.forbes.com/sites/saibala/2020/02/03/artificial-intelligence-is-not-ready-for-the-intricacies-of-radiology/#bc4b92b67eb1. February 3, 2020. Accessed March 15, 2020

- 3.Curtis C, Liu C, Bollerman TJ, et al. . Machine learning for predicting patient wait times and appointment delays. J Am Coll Radiol 2018;15:1310–16 10.1016/j.jacr.2017.08.021 [DOI] [PubMed] [Google Scholar]

- 4.Ginat DT. Analysis of head CT flagged by deep learning software for acute intracranial hemorrhage. Neuroradiology 2020;62:335–40 10.1007/s00234-019-02330-w [DOI] [PubMed] [Google Scholar]

- 5.Kuo W, Häne C, Mukherjee P, et al. . Expert-level detection of acute intracranial hemorrhage on computed tomography using deep learning. Proc Natl Acad Sci USA 2019;116:22737–45 10.1073/pnas.1908021116 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Chang PD, Kuoy E, Grinband J, et al. . Hybrid 3D/2D convolutional neural network for hemorrhage evaluation on head CT. AJNR Am J Neuroradiol 2018;39:1609–16 10.3174/ajnr.A5742 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Cho J, Park KS, Karki M, et al. . Improved sensitivity on identification and delineation of intracranial hemorrhage lesion using cascaded deep learning models. J Digit Imaging 2019;32:450–61 10.1007/s10278-018-00172-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Lee H, Yune S, Mansouri M, et al. . An explainable deep learning algorithm for the detection of acute intracranial hemorrhage from small datasets. Nat Biomed Eng 2019;3:173–82 10.1038/s41551-018-0324-9 [DOI] [PubMed] [Google Scholar]

- 9.Ye H, Gao F, Yin Y, et al. . Precise diagnosis of intracranial hemorrhage and subtypes using a three-dimensional joint convolutional and recurrent neural network. Eur Radiol 2019;29:6191–01 10.1007/s00330-019-06163-2 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Chilamkurthy S, Ghosh R, Tanamala S, et al. . Deep learning algorithms for detection of critical findings in head CT scans: a retrospective study. Lancet 2018;392:2388–96 10.1016/S0140-6736(18)31645-3 [DOI] [PubMed] [Google Scholar]

- 11.Yu Y, Xie Y, Thamm T, et al. . Use of deep learning to predict final ischemic stroke lesions from initial magnetic resonance imaging. JAMA Netw Open 2020;3:e200722 10.1001/jamanetworkopen.2020.0772 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Ho KC, Scalzo F, Sarma KV, et al. . Predicting ischemic stroke tissue fate using a deep convolutional neural network on source magnetic resonance perfusion images. J Med Imag 2019;6:1 10.1117/1.JMI.6.2.026001 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Heo J, Yoon JG, Park H, et al. . Machine-learning based model for prediction of outcomes in acute stroke. Stroke 2019;50:1263–65 10.1161/STROKEAHA.118.024293 [DOI] [PubMed] [Google Scholar]

- 14.Nielsen A, Hansen MB, Tietze A, et al. . Prediction of tissue outcome and assessment of treatment effect in acute ischemic stroke using deep learning. Stroke 2018;49:1394–401 10.1161/STROKEAHA.117.019740 [DOI] [PubMed] [Google Scholar]

- 15.Murray NM, Unberath M, Hager GD, et al. . Artificial intelligence to diagnose ischemic stroke and large vessel occlusions: a systematic review. J Neurointerv Surg 2020;12:156–64 10.1136/neurintsurg-2019-015135 [DOI] [PubMed] [Google Scholar]

- 16.Alawieh A, Zaraket F, Alawieh MB, et al. . Use of machine learning to optimize selection of elderly patients for endovascular thrombectomy. J Neurointerv Surg 2019;11:847–51 10.1136/neurintsurg-2018-014381 [DOI] [PubMed] [Google Scholar]

- 17. Sichtermann T, Faron A, Sijben R, et al. Deep learning-based detection of intracranial aneurysms in 3D TOF-MRA. AJNR Am J Neuroradiol 2019;40:25–32 doi:10.3174/ajnr.A5911

- 18. Park A, Chute C, Rajpurkar P, et al. Deep learning-assisted diagnosis of intracranial aneurysms using the HeadXNet model. JAMA Netw Open 2019;2:e195600 doi:10.1001/jamanetworkopen.2019.5600

- 19. Stember JN, Chang P, Stember DM, et al. Convolutional neural networks for the detection and measurement of cerebral aneurysms on magnetic resonance angiography. J Digit Imaging 2019;32:808–15 doi:10.1007/s10278-018-0162-z

- 20. Stone JR, Wilde EA, Taylor RA, et al. Supervised learning technique for the automated identification of white matter hyperintensities in traumatic brain injury. Brain Inj 2016;30:1458–68 doi:10.1080/02699052.2016.1222080

- 21. Jain S, Vyvere TV, Terzopoulos V, et al. Automatic quantification of computed tomography features in acute traumatic brain injury. J Neurotrauma 2019;36:1794–803 doi:10.1089/neu.2018.6183

- 22. Kerley CI, Huo Y, Chaganti S, et al. Montage based 3D medical image retrieval from traumatic brain injury cohort using deep convolutional neural network. Proc SPIE Int Soc Opt Eng 2019;10949:109492U doi:10.1117/12.2512559

- 23. Li F, Liu M; Alzheimer’s Disease Neuroimaging Initiative. A hybrid convolutional and recurrent neural network for hippocampal analysis in Alzheimer’s disease. J Neurosci Methods 2019;323:108–18 doi:10.1016/j.jneumeth.2019.05.006

- 24. Liu M, Li F, Yan H, et al.; Alzheimer’s Disease Neuroimaging Initiative. A multi-modal deep convolutional neural network for automatic hippocampal segmentation and classification in Alzheimer’s disease. Neuroimage 2020;208:116459 doi:10.1016/j.neuroimage.2019.116459

- 25. Huff TJ, Ludwig PE, Salazar D, et al. Fully automated intracranial ventricle segmentation on CT with 2D regional convolutional neural network to estimate ventricular volume. Int J Comput Assist Radiol Surg 2019;14:1923–32 doi:10.1007/s11548-019-02038-5

- 26. Klimont M, Flieger M, Rzeszutek J, et al. Automated ventricular system segmentation in pediatric patients treated for hydrocephalus using deep learning methods. Biomed Res Int 2019;2019:3059170 doi:10.1155/2019/3059170

- 27. Irie R, Otsuka Y, Hagiwara A, et al. A novel deep learning approach with 3D convolutional ladder network for differential diagnosis of idiopathic normal pressure hydrocephalus and Alzheimer’s disease. Magn Reson Med Sci 2020 January 22 [Epub ahead of print] doi:10.2463/mrms.mp.2019-0106

- 28. Narayana PA, Coronado I, Sujit SJ, et al. Deep learning for predicting enhancing lesions in multiple sclerosis from noncontrast MRI. Radiology 2020;294:398–404 doi:10.1148/radiol.2019191061

- 29. Zhao Y, Healy BC, Rotstein D, et al. Exploration of machine learning techniques in prediction of multiple sclerosis disease course. PLoS One 2017;12:e0174866 doi:10.1371/journal.pone.0174866

- 30. Ion-Margineanu A, Kocevar G, Stamile C, et al. Machine learning approach for classifying multiple sclerosis courses by combining clinical data with lesion loads and magnetic resonance metabolic features. Front Neurosci 2017;11:398 doi:10.3389/fnins.2017.00398

- 31. Lao J, Chen Y, Li ZC, et al. A deep learning-based radiomics model for prediction of survival in glioblastoma multiforme. Sci Rep 2017;7:10353 doi:10.1038/s41598-017-10649-8

- 32. Mobadersany P, Yousefi S, Amgad M, et al. Predicting cancer outcomes from histology and genetics using convolutional neural networks. Proc Natl Acad Sci USA 2018;115:E2970–79 doi:10.1073/pnas.1717139115

- 33. Charron O, Lallement A, Jarnet D, et al. Automatic detection and segmentation of brain metastases on multimodal MR images with a deep convolutional neural network. Comput Biol Med 2018;95:43–54 doi:10.1016/j.compbiomed.2018.02.004

- 34. Xue J, Wang B, Ming Y, et al. Deep-learning-based detection and segmentation-assisted management on brain metastases. Neuro Oncol 2020;22:505–14 doi:10.1093/neuonc/noz234

- 35. Grovik E, Yi D, Iv M, et al. Deep learning enables automatic detection and segmentation of brain metastases on multisequence MRI. J Magn Reson Imaging 2020;51:175–82 doi:10.1002/jmri.26766

- 36. Burns JE, Yao J, Summers RM. Vertebral body compression fractures and bone density: automated detection and classification on CT images. Radiology 2017;284:788–97 doi:10.1148/radiol.2017162100

- 37. Lessmann N, van Ginneken B, de Jong PA, et al. Iterative fully convolutional neural networks for automatic vertebra segmentation and identification. Med Image Anal 2019;53:142–55 doi:10.1016/j.media.2019.02.005

- 38. Zhao C, Shao M, Carass A, et al. Applications of a deep learning method for anti-aliasing and super-resolution in MRI. Magn Reson Imaging 2019;64:132–41 doi:10.1016/j.mri.2019.05.038

- 39. Sreekumari A, Shanbhag D, Yeo D, et al. A deep learning-based approach to reduce rescan and recall rates in clinical MRI examinations. AJNR Am J Neuroradiol 2019;40:217–23 doi:10.3174/ajnr.A5926

- 40. Mardani M, Gong E, Cheng JY, et al. Deep generative adversarial neural networks for compressed sensing MRI. IEEE Trans Med Imaging 2019;38:167–79 doi:10.1109/TMI.2018.2858752

- 41. Lee D, Yoo J, Tak S, et al. Deep residual learning for accelerated MRI using magnitude and phase networks. IEEE Trans Biomed Eng 2018;65:1985–95 doi:10.1109/TBME.2018.2821699

- 42. Ouyang J, Chen KT, Gong E, et al. Ultra-low-dose PET reconstruction using generative adversarial network with feature matching and task-specific perceptual loss. Med Phys 2019;46:3555–64 doi:10.1002/mp.13626

- 43. Gong E, Pauly JM, Wintermark M, et al. Deep learning enables reduced gadolinium dose for contrast-enhanced brain MRI. J Magn Reson Imaging 2018;48:330–40 doi:10.1002/jmri.25970

- 44. Dong C, Loy CC, He K, et al. Image super-resolution using deep convolutional neural networks. IEEE Trans Pattern Anal Mach Intell 2016;38:295–307 doi:10.1109/TPAMI.2015.2439281

- 45. Subtle Medical Receives FDA 510(k) Clearance for AI-Powered Subtle MRI. October 15, 2019. https://finance.yahoo.com/news/subtle-medical-receives-fda-510-121200456.html. Accessed April 20, 2020

- 46. ClariPI Gets FDA Clearance for AI-Powered CT Image Denoising Solution. June 24, 2019. http://www.itnonline.com/claripi-gets-fda-clearance-for-ai-powered-ct-image-denoising-solution. Accessed April 27, 2020

- 47. Chang P, Grinband J, Weinberg BD, et al. Deep-learning convolutional neural networks accurately classify genetic mutations in glioma. AJNR Am J Neuroradiol 2018;39:1201–17 doi:10.3174/ajnr.A5667

- 48. Young JD, Cai C, Lu X. Unsupervised deep learning reveals subtypes of glioblastoma. BMC Bioinformatics 2017;18:381 doi:10.1186/s12859-017-1798-2

- 49. Chang K, Bai HX, Zhou H, et al. Residual convolutional neural network for the determination of IDH status in low- and high-grade gliomas from MR imaging. Clin Cancer Res 2018;24:1073–81 doi:10.1158/1078-0432.CCR-17-2236

- 50. Fujima N, Andreu-Arasa VC, Meibom SK, et al. Prediction of the human papillomavirus status in patients with oropharyngeal squamous cell carcinoma by FDG-PET imaging dataset using deep learning analysis: a hypothesis-generating study. Eur J Radiol 2020;126:108936 doi:10.1016/j.ejrad.2020.108936

- 51. Han W, Qin L, Bay C, et al. Deep transfer learning and radiomics feature prediction of survival of patients with high-grade gliomas. AJNR Am J Neuroradiol 2020;41:40–48 doi:10.3174/ajnr.A6365

- 52. Sun L, Zhang S, Chen H, et al. Brain tumor segmentation and survival prediction using multimodal MRI scans with deep learning. Front Neurosci 2019;13:810 doi:10.3389/fnins.2019.00810

- 53. Li H, Habes M, Wolk DA, Fan Y; Alzheimer’s Disease Neuroimaging Initiative and the Australian Imaging Biomarkers and Lifestyle Study of Aging. A deep learning model for early prediction of Alzheimer’s disease dementia based on hippocampal magnetic resonance imaging data. Alzheimers Dement 2019;15:1059–70 doi:10.1016/j.jalz.2019.02.007

- 54. Basaia S, Agosta F, Wagner L, et al.; Alzheimer’s Disease Neuroimaging Initiative. Automated classification of Alzheimer’s disease and mild cognitive impairment using a single MRI and deep neural networks. Neuroimage Clin 2019;21:101645 doi:10.1016/j.nicl.2018.101645

- 55. Combs TS, Sandt LS, Clamann MP, et al. Automated vehicles and pedestrian safety: exploring the promise and limits of pedestrian detection. Am J Prev Med 2019;56:1–7 doi:10.1016/j.amepre.2018.06.024

- 56. Holodny AI. “Am I about to lose my job?!”: A comment on “computer-extracted texture features to distinguish cerebral radiation necrosis from recurrent brain tumors on multiparametric MRI—a feasibility study.” AJNR Am J Neuroradiol 2016;37:2237–38 doi:10.3174/ajnr.A5002

- 57. Thrall MJ. Automated screening of Papanicolaou tests: a review of the literature. Diagn Cytopathol 2019;47:20–27 doi:10.1002/dc.23931

- 58. Kitchener HC, Blanks R, Cubie H, et al.; MAVARIC Trial Study Group. MAVARIC: a comparison of automation-assisted and manual cervical screening—a randomised controlled trial. Health Technol Assess 2011;15:1–70 doi:10.3310/hta15030

- 59. Schläpfer J, Wellens HJ. Computer-interpreted electrocardiograms: benefits and limitations. J Am Coll Cardiol 2017;70:1183–92 doi:10.1016/j.jacc.2017.07.723

- 60. Lindow T, Kron J, Thulesius H, et al. Erroneous computer-based interpretations of atrial fibrillation and atrial flutter in a Swedish primary health care setting. Scand J Prim Health Care 2019;37:426–33 doi:10.1080/02813432.2019.1684429

- 61. Thrall JH, Li X, Li Q, et al. Artificial intelligence and machine learning in radiology: opportunities, challenges, pitfalls, and criteria for success. J Am Coll Radiol 2018;15:504–08 doi:10.1016/j.jacr.2017.12.026

- 62. Pakdemirli E. Artificial intelligence in radiology: friend or foe? Where are we now and where are we heading? Acta Radiol Open 2019;8:2058460119830222 doi:10.1177/2058460119830222

- 63. Duong MT, Rauschecker AM, Rudie JD, et al. Artificial intelligence for precision education in radiology. Br J Radiol 2019;92:20190389 doi:10.1259/bjr.20190389

- 64. Flanders AE, Prevedello LM, Shih G, et al. Construction of a machine learning dataset through collaboration: the RSNA 2019 Brain CT Hemorrhage Challenge. Radiology: Artificial Intelligence 2020;2:e190211 doi:10.1148/ryai.2020190211

- 65. Yune S, Lee H, Pomerantz SR, et al. Real-world performance of deep-learning-based automated detection system for intracranial hemorrhage. In: Proceedings of the Society for Imaging Informatics in Medicine 2018 Conference on Machine Intelligence in Medical Imaging, San Francisco, California; September 9–10, 2018

- 66. Guo J, Gong E, Fan AP, et al. Predicting 15O-water PET cerebral blood flow maps from multi-contrast MRI using a deep convolutional neural network with evaluation of training cohort bias. J Cereb Blood Flow Metab 2019 November 13 [Epub ahead of print] doi:10.1177/0271678X19888123

- 67. Chen IY, Joshi S, Ghassemi M. Treating health disparities with artificial intelligence. Nat Med 2020;26:16–17 doi:10.1038/s41591-019-0649-2