Abstract

Use of the electronic health record (EHR) has become a routine part of perioperative care in the United States. Secondary use of EHR data includes research, quality, and educational initiatives. Fundamental to secondary use is a framework to ensure fidelity, transparency, and completeness of the source data. In developing this framework, competing priorities must be considered as to which data sources are used and how data are organized and incorporated into a usable format. In assembling perioperative data from diverse institutions across the United States and Europe, the Multicenter Perioperative Outcomes Group (MPOG) has developed methods to support such a framework. This special article outlines how MPOG has approached considerations of data structure, validation, and accessibility to support multicenter integration of perioperative EHRs. In this multicenter practice registry, MPOG has developed processes to extract data from the perioperative EHR; transform data into a standardized format; and validate, deidentify, and transfer data to a secure central Coordinating Center database. Participating institutions may obtain access to this central database, governed by quality and research committees, to inform clinical practice and contribute to the scientific and clinical communities. Through a rigorous and standardized approach to ensure data integrity, MPOG enables data to be usable for quality improvement and advancing scientific knowledge. As of March 2019, our collaboration of 46 hospitals has accrued 10.7 million anesthesia records with associated perioperative EHR data across heterogeneous vendors. Facilitated by MPOG, each site retains access to a local repository containing all site-specific perioperative data, distinct from source EHRs and readily available for local research, quality, and educational initiatives. Through committee approval processes, investigators at participating sites may additionally access multicenter data for similar initiatives.
Emerging from this work are 4 considerations that our group has prioritized to improve data quality: (1) data should be available at the local level before Coordinating Center transfer; (2) data should be rigorously validated against standardized metrics before use; (3) data should be curated into computable phenotypes that are easily accessible; and (4) data should be collected for both research and quality improvement purposes because these complementary goals bolster the strength of each endeavor.

CHALLENGE OF HETEROGENEOUS DATA IN ELECTRONIC HEALTH RECORD–DERIVED RESEARCH AND QUALITY IMPROVEMENT

Over the past decade, perioperative electronic health records (EHRs) have gained widespread adoption. Driven by public health incentives including Promoting Interoperability1 (formerly Meaningful Use), the proportion of US hospitals using perioperative EHRs has increased from 14% in 2008, to 75% in 2014, to an estimated 84% by 2020.2 Adoption of EHRs has enabled an array of opportunities for improvement and innovation, including enhanced medical documentation for quality improvement3,4 and billing purposes,5,6 a platform for clinical decision support,7–11 and the development of health data registries providing opportunities for secondary use in research and quality improvement.12,13

Within anesthesiology, accumulation of EHR data has enabled the characterization of both rare outcomes (eg, emergency airway management,14 epidural hematoma,15 anaphylaxis,16 and perioperative death17) and more common events (intraoperative hypotension,18 perioperative hyperglycemia,19 and postoperative acute kidney injury20). Relating these events to practice patterns—such as intraoperative transfusion,21 nitrous oxide use,22 insulin administration,19 and vasopressor/inotrope use23—is a major achievement of perioperative registries. Such advances emerge from the integration of multiple data sources (clinical, laboratory, administrative, and research) across institutions.

However, variation in medical care documentation, differences in data handling across EHR platforms, and gaps in EHR infrastructure can lead to missing and/or inaccurate data across and within institutions.24–28 Collectively, variations in data provenance represent a substantial threat to the validity of perioperative EHR research. Analyses of nonstandardized or invalid data could yield erroneous conclusions that negatively impact health care policy, threaten clinician autonomy, and incentivize misguided practices.29 Given current shortcomings in perioperative EHR database quality, there is an urgent need to improve the fidelity of EHR data through validated, standardized methodologies.30–32

In developing registries, multiple decisions must be made about how data are obtained, organized, and made available. These decisions, often made early on, have powerful impacts on the eventual use of the data and the utility of the registry.

In this Special Article, we address 3 areas. First, we explore trade-offs in decisions about how perioperative databases are structured. Second, we describe the structure, operating processes, and current status of the Multicenter Perioperative Outcomes Group (MPOG). Third, in light of the challenges posed by heterogeneous multicenter EHR data, we describe 4 considerations our group has prioritized that may inform other multicenter data integration initiatives.

EARLY DECISIONS WITH POWERFUL IMPACTS: DESIGN TRADE-OFFS

A series of decisions is necessary when translating EHR data into a registry, and these decisions strongly shape the questions the registry can be used to answer. Importantly, the “best” approach to designing a clinical registry structure depends on the proposed use cases; no single solution exists.

Choosing the Data Source: Clinical, Administrative, or Combination

The first choice a prospective secondary data user faces is where to draw data from. Clinical data are drawn from sources such as the EHR, radiology reports, or laboratory records. Administrative data include data used for billing (eg, hospital or professional billing records) or health system management (eg, registration or admission, discharge, and transfer system records).

Compared to administrative data, clinical data sources may be more comprehensive but may require additional processing to enable secondary use. For example, a diabetes diagnosis derived from clinical data may need to consider medication treatments, serum blood glucose laboratory values, and text search of unstructured clinical notes. Administrative data offer greater structure but may be more limited in scope. In administrative data, a diabetes diagnosis may be readily identified as a single structured data element (eg, International Classification of Diseases, Tenth Revision [ICD-10], code E11.9), but granular detail about disease progression and nuanced treatment plans is unavailable. Registries may blend these sources, including both clinical and administrative data to leverage the strengths of each, at the cost of additional data validation or adjudication.
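To make the contrast concrete, the sketch below derives a diabetes flag both ways. It is a minimal illustration, not MPOG's phenotype: the `PatientData` fields, the drug list, and the 200 mg/dL glucose cutoff are hypothetical assumptions; only the ICD-10 E11 category from the example above is checked; and the free-text search of clinical notes is omitted.

```python
from dataclasses import dataclass, field


# Hypothetical per-patient summary; field names are illustrative, not an MPOG schema.
@dataclass
class PatientData:
    icd10_codes: list = field(default_factory=list)    # administrative source
    medications: list = field(default_factory=list)    # clinical source (drug names)
    glucose_mg_dl: list = field(default_factory=list)  # clinical source (lab values)


def diabetes_administrative(p: PatientData) -> bool:
    """Administrative definition: any ICD-10 code in category E11 (eg, E11.9)."""
    return any(code.upper().startswith("E11") for code in p.icd10_codes)


# Illustrative list only; a real phenotype would use a curated, versioned drug list.
GLUCOSE_LOWERING_DRUGS = {"insulin", "metformin", "glipizide", "sitagliptin"}


def diabetes_clinical(p: PatientData, glucose_cutoff: float = 200.0) -> bool:
    """Clinical definition combining treatment and laboratory evidence.

    The 200 mg/dL cutoff is an assumed, illustrative threshold.
    Free-text search of clinical notes is omitted.
    """
    treated = any(m.strip().lower() in GLUCOSE_LOWERING_DRUGS for m in p.medications)
    high_glucose = any(v >= glucose_cutoff for v in p.glucose_mg_dl)
    return treated or high_glucose


pt = PatientData(medications=["Metformin"], glucose_mg_dl=[215.0])
print(diabetes_administrative(pt), diabetes_clinical(pt))  # False True
```

The same patient can be classified differently depending on the source, which is why blending sources brings the adjudication cost described above.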

Data Collection: Manual Abstraction Versus Automated Extraction

After the selection of a data source, the next decision revolves around how data are to be collected for further use. Manual abstraction involves the use of a trained operator accessing the EHR, seeking specific information, and recording these details within the registry. This approach affords the opportunity for adjudication at the time of data collection. However, information to be collected must be prespecified at the time of initial review. The scale of such an approach is limited by the effort required to review cases and the availability of trained reviewers. Conversely, automated data extraction is less limited by reviewer availability. It may, therefore, allow for a broader range of electronic data to be captured, provided data can be defined by logical rules. However, temporal and institutional variations in documentation practices and sources of artifact within the data may impede the quality of automated extraction, particularly if not regularly validated.

Blended options allow automated extraction of some data elements and manual annotation of others. Such a system may import structured data, like demographics or laboratory results, and allow the user to provide additional information. Advances in natural language processing have allowed these approaches to be further hybridized; for example, reviewers may validate answers to complex questions that were suggested automatically by machine review of unstructured text.

In developing a registry, the level of structure applied at the time of data collection may be a strength or a weakness, depending on the intended use. Consider a registry that collects information on perioperative diabetes management. In a highly constrained data model, data collected might include the date/time and value of the last blood glucose measurement before the surgical procedure and up to 3 intraoperative measurements. A less constrained data model may hold all blood glucose values within 24 hours of procedure start and during the entire case. Both approaches could answer questions about immediate preoperative values, but only the latter could assess whether providers were checking blood glucose values every hour, at the expense of increased analytic complexity.
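The trade-off between the two data models can be sketched as follows, assuming each glucose reading is a hypothetical `(timestamp, mg/dL)` pair. The constrained record keeps only the last preoperative value and the first 3 intraoperative values, so it cannot answer the hourly-check question; the full list can, with a little more code. Treating case start and end as the boundaries of the gap calculation is an illustrative assumption, not a published measure definition.

```python
from datetime import datetime, timedelta

# Each reading is a hypothetical (timestamp, glucose mg/dL) pair.


def constrained_record(preop, intraop):
    """Highly constrained model: last preoperative value plus first 3 intraoperative values."""
    last_preop = max(preop, key=lambda r: r[0]) if preop else None
    return {"last_preop": last_preop, "intraop": sorted(intraop)[:3]}


def longest_gap(intraop, case_start, case_end):
    """Longest interval without a glucose check, treating case start/end as boundaries."""
    checks = sorted(t for t, _ in intraop if case_start <= t <= case_end)
    times = [case_start] + checks + [case_end]
    return max(later - earlier for earlier, later in zip(times, times[1:]))


def checked_hourly(intraop, case_start, case_end):
    """Needs the less constrained model: were checks no more than 1 hour apart?"""
    return longest_gap(intraop, case_start, case_end) <= timedelta(hours=1)


start = datetime(2019, 3, 1, 8, 0)
end = start + timedelta(hours=5)
readings = [(start + timedelta(hours=h), 140 + h) for h in range(1, 5)]  # 09:00 to 12:00
print(checked_hourly(readings, start, end))              # True
print(len(constrained_record([], readings)["intraop"]))  # 3
```

Dropping the 10:00 reading leaves a 2-hour gap, and `checked_hourly` then returns `False`; the extra logic is the analytic complexity the less constrained model brings.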

Inclusion Strategy: Which Patients, Which Providers, Which Locations, and All Cases Versus a Sample

Registries are designed with an a priori plan as to what care they describe. Registries may focus on particular diseases (eg, cancer), providers (eg, cardiothoracic surgeons), institutions (eg, stroke care at a particular hospital), or a combination (eg, bowel resections at a single institution by acute care surgeons). Each requires a different data collection strategy, and each offers unique and complementary perspectives. Registries focused on an individual provider's practice need to account for providers who may work outside the organization where the registry is based. The health care setting also matters: office-based settings unconnected with larger organizations may have limited resources to support large-scale data collection. The structure and internal boundaries of the organization in which data are collected must therefore be considered. Similarly, registries may include every eligible case or may use a sampling methodology, either to collect data more efficiently or to promote data heterogeneity.

INTRODUCTION TO MPOG

The MPOG currently spans 46 institutions across the United States and Europe. MPOG was established in 2009 with the primary goal of developing an inclusive multicenter perioperative registry to facilitate outcomes research and quality improvement.33 The intent was to collect data with minimal constraints to maximize potential uses. Data contributed to MPOG originate from 7 EHR or Anesthesia Information Management System (AIMS) vendors. As of March 2019, 10.7 million unique intraoperative records spanning 2008 to the present have been integrated within a secure database at the University of Michigan. Approximately 100,000 cases are added to the database each month.

Regulatory Approval and Overview

Multiple institutional review board (IRB) approvals govern MPOG operations. An IRB approval obtained at the Coordinating Center covers the establishment and operation of the centralized database and the collection of data into it. MPOG implements Data Use Agreements and Business Associate Agreements between the Coordinating Center and each participating site to govern the exchange of protected health information (PHI); these agreements are executed by the contracting groups within each participating site and the Coordinating Center. Each participating site obtains IRB approval to collect, organize, and submit a Limited Data Set to the Coordinating Center database. Limited Data Sets, devoid of PHI except dates and extremes of age, are transmitted from each participating site to the Coordinating Center. Each site obtains a waiver of informed consent covering the collection and transmission of this Limited Data Set, granted on the basis that no specific interventions occur at the patient level and that meticulous efforts are taken to create the Limited Data Set (detailed below) before transmission to the Coordinating Center. Research projects using the dataset obtain project-specific IRB approval from the relevant board(s).

Work of MPOG: Overview of the Lifecycle From Data Acquisition to Use

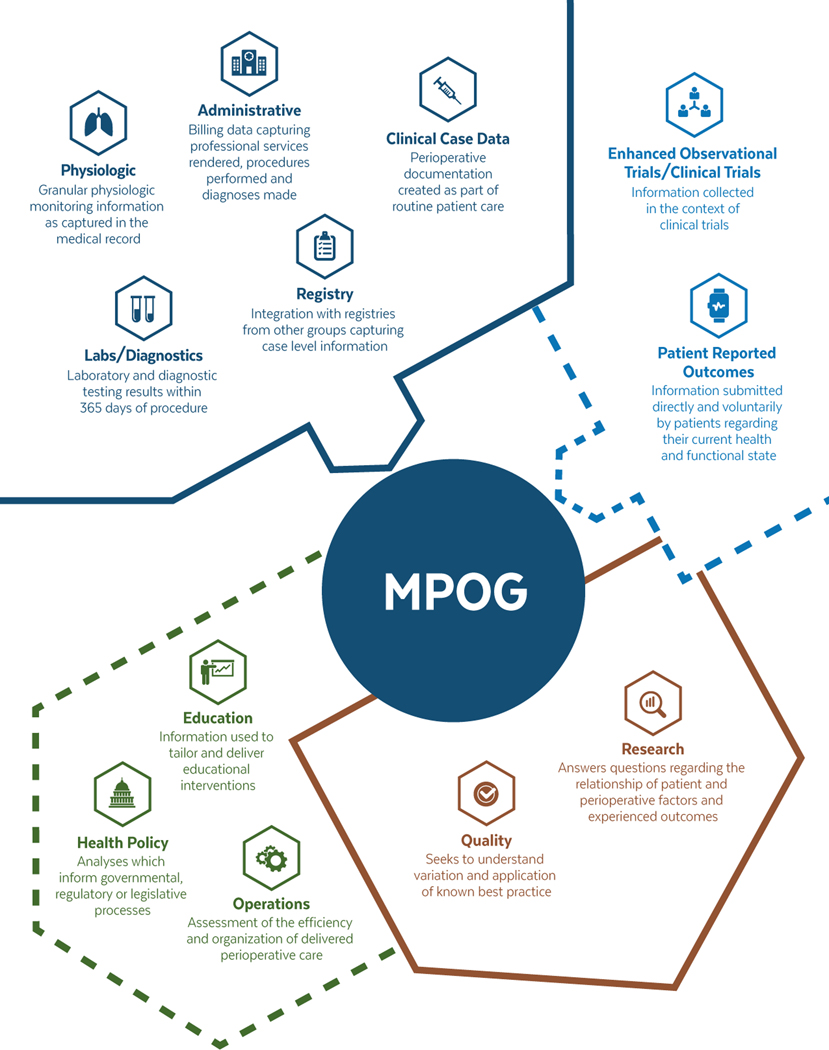

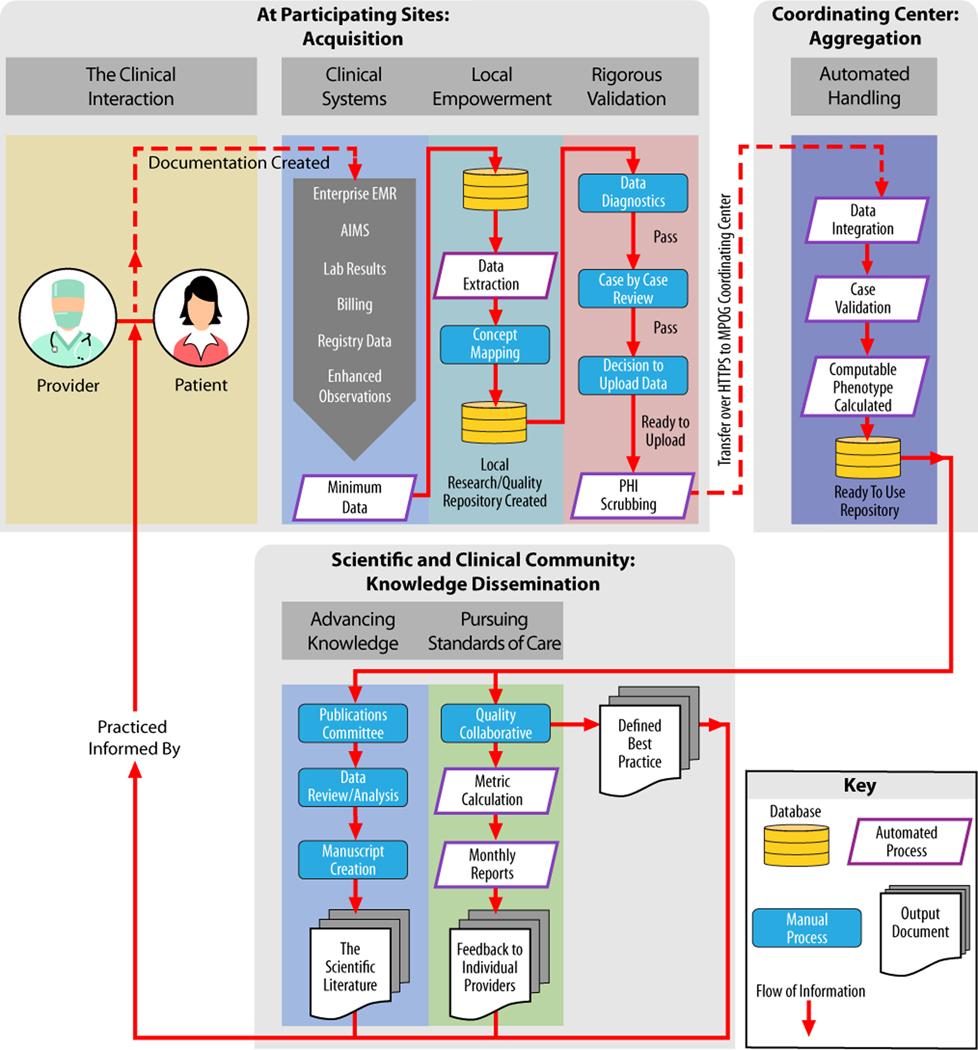

We provide an overview of the perioperative data types collected and health care applications of MPOG in Figure 1. In addition, Figure 2 provides an overview of the flow of information through the MPOG consortium, outlining the process of data acquisition at the point of care, importing of data into local and central data registries, and finally, curation of data for research and quality improvement measures.

Figure 1.

Overview of information contained within MPOG and its uses: conceptual overview of types of data held by MPOG and their uses. Current data types and uses are within the solid lines. Emerging data types and uses are indicated within the dashed lines. MPOG indicates Multicenter Perioperative Outcomes Group.

Figure 2.

The lifecycle of perioperative EHR information: data become knowledge that informs practice—illustration of flow of information as part of involvement in the MPOG process. At each participating site: creation during the patient–physician encounter, documentation in the EHR, extraction from EHR and other systems, standardization, validation, PHI removal, and upload to MPOG. At the coordinating center: this figure indicates the automated handling steps which make data available at the coordinating center. Finally, data are accessible for specified projects and purposes on the approval of the Quality Committee or PCRC or Publications Committee. AIMS indicates Anesthesia Information Management Systems; EMR, electronic medical record; MPOG, Multicenter Perioperative Outcomes Group; PCRC, Perioperative Clinical Research Committee; PHI, protected health information.

Participating Sites: Acquisition, Standardization, and Development of a Local Data Repository

Each participating site must adhere to a minimum data requirement for every submitted anesthetic case (Table 1). In practice, depending on the level of systems integration within the local hospital or health system, this involves integrating data from multiple information systems into a single local repository.

Table 1.

MPOG Minimum Data Requirement

| Category | Detail |
|---|---|
| Identifiers held at local site | Full nameᵃ |
| | Date of birthᵃ |
| | Social security numberᵃ |
| | Medical record numberᵃ |
| Other demographics | Age at date of surgery |
| | Gender |
| Basic case information | Admission type |
| | Age at the time of operation |
| | Facility and operating room type |
| | Primary procedure text |
| | Primary diagnosis text |
| Preoperative documentation | ASA PS classification |
| | Height |
| | Weight |
| | Laboratory values taken up to 365 d before anesthesia |
| | Preoperative comorbidities (cardiac, pulmonary, endocrine, renal, hepatic, and immunologic) organized within a preoperative history and physical document |
| | Home medications |
| Intraoperative documentation | Case times |
| | Fluid inputs and outputs |
| | Medication administrations |
| | Observational/procedure notes |
| | Point-of-care laboratory values |
| | Staff tracking/sign-in/sign-out times for anesthesia attendings |
| | Vital signs, machine captured minute-by-minute |
| Postoperative documentation | Laboratory values taken up to 365 d after anesthesia |
| Outcomes | In-hospital all-cause mortality |
| Charge capture and administration | Hospital discharge diagnoses codes |
| | Professional fee anesthesia billing codes |

At the time of publication, this is available online on the MPOG website: https://www.mpog.org/join/mindataelements.

Abbreviations: ASA PS, American Society of Anesthesiologists Physical Status; MPOG, Multicenter Perioperative Outcomes Group; PHI, protected health information.

ᵃIdentifiers are required for PHI removal; PHI is removed from the patient record before data are uploaded to the MPOG central repository and remains stored at the local site.

The MPOG consortium has developed interfaces to allow the inclusion of sites utilizing a range of enterprise EHR and AIMS vendors. Using a vendor-specific interface, each site transforms the varied output of these systems into a standardized format.

After anesthetic case data are extracted from the source system, they are integrated with other data sources, including institutional research repositories, case data available from outcome registries (such as an extract of data captured by the site through participation in the National Surgical Quality Improvement Program [NSQIP] or the Society of Thoracic Surgeons General Thoracic Surgery Database [STS-GTSD]), and other clinical, laboratory, or administrative systems. Data are matched at the participating site based on locally held unique identifiers (such as Medical Record Number or Social Security Number). These unique identifiers are removed before transmission to the Coordinating Center.
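A minimal sketch of this local linkage step, assuming hypothetical field names (`mrn`, `ssn`, `full_name`, `case_date`) and matching on MRN plus case date. The `crosswalk` dictionary, which maps each MRN to a random link ID, stands in for a locally retained linking table; it is illustrative and not MPOG's actual schema.

```python
import uuid

# Direct identifiers that never leave the site (hypothetical field names).
IDENTIFIER_FIELDS = {"mrn", "ssn", "full_name"}


def link_and_strip(cases, registry_rows, crosswalk):
    """Match cases to outcome-registry rows on (MRN, case date), then drop identifiers.

    crosswalk: dict kept only at the local site, mapping MRN -> random patient link ID.
    """
    registry = {(r["mrn"], r["case_date"]): r for r in registry_rows}
    outbound = []
    for case in cases:
        extra = registry.get((case["mrn"], case["case_date"]), {})
        merged = {**extra, **case}  # case fields take precedence on name clashes
        link_id = crosswalk.setdefault(case["mrn"], uuid.uuid4().hex)
        record = {k: v for k, v in merged.items() if k not in IDENTIFIER_FIELDS}
        record["patient_link_id"] = link_id
        outbound.append(record)
    return outbound
```

Only the returned records would be candidates for transmission; the crosswalk stays local, so re-identification requires access to the site.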

This data set is available locally, enabling research, education, and quality improvement initiatives to be pursued internally at the discretion of the participating site.34,35 Investigators retain ownership and have visibility into all data that are subsequently submitted to the MPOG central repository.

Once extracted from the local EHR, perioperative data are mapped to MPOG-developed standardized, semantically interoperable concepts before submission to the central repository.36 MPOG embraces standardized definitions where available, such as ICD-10 diagnosis codes or Association of Anesthesia Clinical Directors (AACD) anesthesia events, and supplements them with an MPOG-specific set of data elements.37 This ensures, for example, that the laryngoscope used for airway management or the dose of propofol administered is understood comparably across institutions.

Prioritizing extraction of information into the local MPOG repository before mapping prevents artificial constraints on data capture. Specified semantic structures are required at the time of extraction: for example, a drug administration must have a name, dose, route, and timestamp. These constraints, however, are kept to a minimum so that the full range of medications and dosing regimens can be captured. Similar approaches are taken for other documentation types, prioritizing flexibility of data handling.
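As an illustration of a minimal required structure, the dataclass below enforces only the four elements named above and leaves units and dosing free-form. The class and field names, including the separate `dose_unit` field, are hypothetical assumptions rather than MPOG's extraction schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class MedicationAdministration:
    """One drug administration with only the minimal required elements.

    Units and dosing regimens stay free-form so that any regimen can be captured.
    """
    local_name: str          # local term; mapped to a standardized concept later
    dose: float
    dose_unit: str           # eg "mg" or "mcg/kg/min"; deliberately not a fixed list
    route: str
    administered_at: datetime

    def __post_init__(self):
        if not self.local_name.strip():
            raise ValueError("a drug name is required")
        if self.dose < 0:
            raise ValueError("dose must be non-negative")
        if not self.route.strip():
            raise ValueError("a route is required")
        if not isinstance(self.administered_at, datetime):
            raise TypeError("a timestamp is required")


example = MedicationAdministration("PROPOFOL 10 MG/ML INJ", 200.0, "mg", "IV",
                                   datetime(2019, 3, 1, 8, 5))
```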

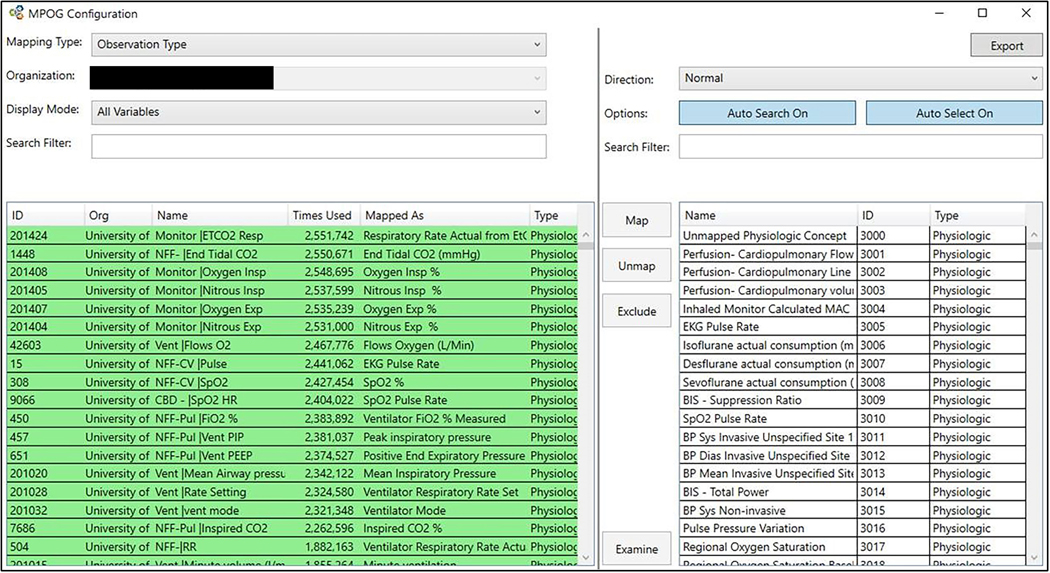

The mapping is performed using a dedicated utility in which local terminology and standardized terminology (MPOG Concepts) can be compared and matched (Figure 3). To maximize mapping efficiency, priority is given to the most frequently occurring terms in the local system. The mapping utility suggests likely MPOG Concepts; however, with the support of the Coordinating Center, the site’s Principal Investigator or his or her designee must manually select the most appropriate concept. The Coordinating Center adjudicates the mappings before upload to ensure that high-priority concepts are addressed. Should new terminology or new medications be introduced later, the participating site can continue to map local concepts to new or existing MPOG Concepts.
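The two ideas in this paragraph, working through the most frequent local terms first and ranking candidate concepts for a person to confirm, can be sketched with the Python standard library. String similarity from `difflib.SequenceMatcher` is a stand-in chosen for illustration; the scoring MPOG's utility actually uses is not described here, and the concept names are hypothetical.

```python
from collections import Counter
from difflib import SequenceMatcher


def prioritize_local_terms(observed_terms):
    """Order distinct local terms by frequency, most common first."""
    return [term for term, _ in Counter(observed_terms).most_common()]


def suggest_concepts(local_term, concepts, top_n=3):
    """Rank candidate standardized concepts by string similarity to a local term.

    Only a suggestion list: a person still selects the final mapping.
    """
    scored = [(SequenceMatcher(None, local_term.lower(), c.lower()).ratio(), c)
              for c in concepts]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:top_n]]


concepts = ["Fentanyl", "Sufentanil", "Propofol"]  # hypothetical concept names
print(suggest_concepts("FENTANYL 50MCG/ML", concepts, top_n=1))  # ['Fentanyl']
```

Similar-looking drug names such as fentanyl and sufentanil score close to each other under plain string similarity, which is one reason the final choice is left to a person.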

Participating Sites: Rigorous Validation

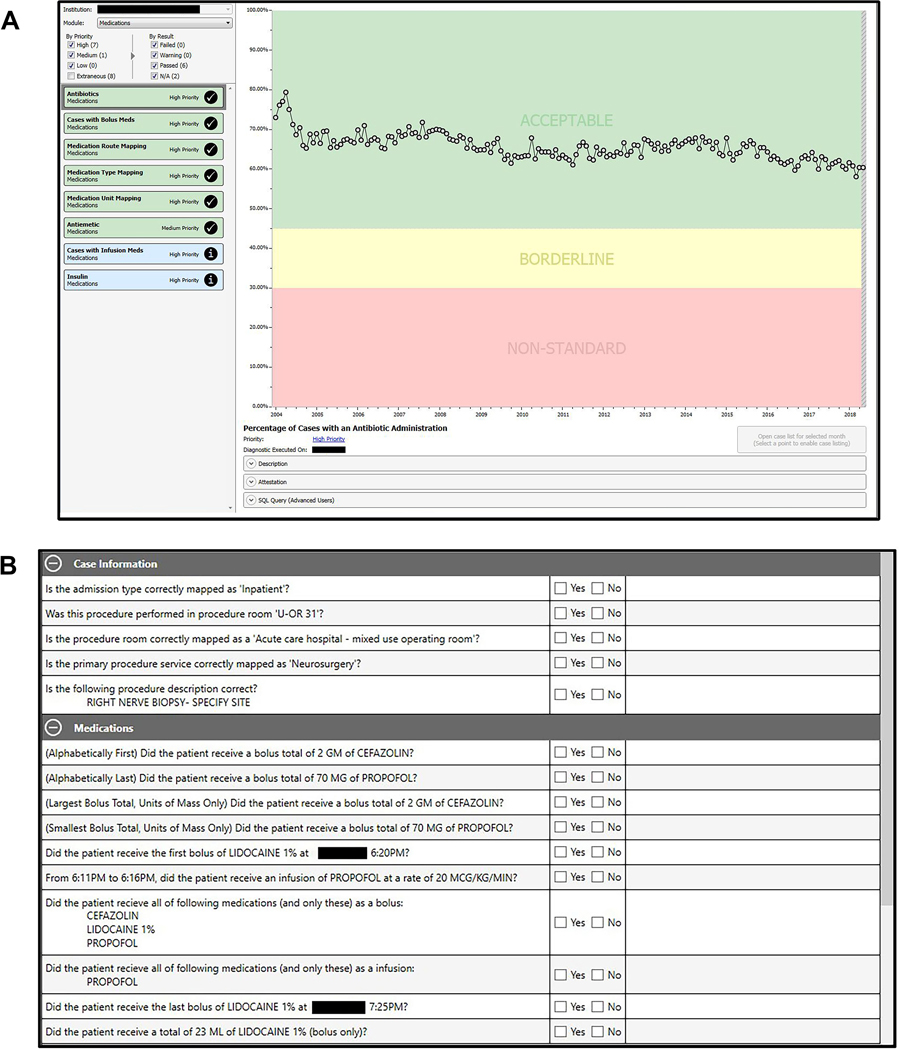

Once the mapping process is complete, and before transmission to the central database, data from participating sites are assessed for completeness and accuracy. Our Data Diagnostics tool facilitates this assessment by identifying specific deficiencies across data category, institution, and time domains (Figure 4A). Data are further prioritized based on the relative value of each data type for achieving MPOG goals; highest priority is given to data comprising the minimum data requirement. MPOG requires a clinically trained site representative to review and attest to data accuracy before each data transmission to the central repository. At the Coordinating Center, the MPOG Director reviews the initial data upload (including the Data Diagnostics information) before it is integrated into the main MPOG database. We describe a complete list of data diagnostic categories in Supplemental Digital Content, File 1, http://links.lww.com/AA/C948.
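One common way to chart completeness over time is a proportion (p) control chart. The sketch below flags months whose fill rate for a data element falls outside 3-sigma limits around the pooled mean. It is an assumed, generic statistical process control technique; the inputs and thresholds are illustrative rather than the Data Diagnostics tool's actual logic.

```python
import math


def p_chart(filled_counts, case_counts, sigma=3.0):
    """Proportion (p) control chart for monthly completeness of one data element.

    filled_counts[i]: cases in month i where the element is present.
    case_counts[i]: all cases in month i.
    Returns (center_line, [(p_i, lower_i, upper_i, out_of_control_i), ...]).
    """
    p_bar = sum(filled_counts) / sum(case_counts)
    points = []
    for filled, n in zip(filled_counts, case_counts):
        p = filled / n
        half_width = sigma * math.sqrt(p_bar * (1 - p_bar) / n)
        lower, upper = max(0.0, p_bar - half_width), min(1.0, p_bar + half_width)
        points.append((p, lower, upper, not lower <= p <= upper))
    return p_bar, points


center, points = p_chart([950] * 11 + [700], [1000] * 12)
print([month for month, pt in enumerate(points, start=1) if pt[3]])  # [12]
```

With 11 months at 95% completeness and 1 month at 70% (1000 cases each), only the final month is flagged for follow-up.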

Figure 3.

MPOG Concept mapping utility: using the MPOG Concept mapping utility, a site is able to match terminology present in its local AIMS or EHR systems to standardized MPOG Concepts. Concepts offered in the right-hand pane are ordered by probability of match to the text selected in the left-hand pane. Color coding in the left-hand pane indicates the status of the match. The figure has been edited to remove identifying information. AIMS indicates Anesthesia Information Management Systems; EHR, electronic health record; MPOG, Multicenter Perioperative Outcomes Group.

Participating MPOG sites perform a manual review of a random sample of cases recorded within the local database before transmission to the centralized database. Case-level feedback on data quality is a counterpart to the Data Diagnostics tool because some errors may escape detection when assessed at an aggregate level. If a site, for example, maps a sufentanil local concept incorrectly to a fentanyl MPOG Concept, such an error would only be revealed via comparison of the source anesthesia record with the MPOG extracted record. To facilitate this process, participating sites are required to use a case validation tool, providing case-level feedback on data quality (Figure 4). Sites review a minimum of 5 cases per month to ensure the ongoing accuracy of high-priority data elements. Through recorded and audited attestations, a representative from each site must verify the validity and completeness of each data element when compared to the source anesthetic record. If issues are identified, the precise changes necessary to improve overall data quality can be implemented.
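A minimal sketch of the case-level review workflow, assuming cases arrive as hypothetical `(case_id, "YYYY-MM")` pairs: draw up to 5 cases per month at random, then list fields whose extracted value disagrees with the source record. The function names and record format are illustrative; the comparison surfaces exactly the kind of sufentanil/fentanyl discrepancy described above.

```python
import random
from collections import defaultdict


def sample_cases_for_review(cases, per_month=5, seed=None):
    """Simple random sample of up to `per_month` case IDs from each month.

    cases: iterable of (case_id, "YYYY-MM") pairs (hypothetical format).
    """
    rng = random.Random(seed)
    by_month = defaultdict(list)
    for case_id, month in cases:
        by_month[month].append(case_id)
    return {month: rng.sample(ids, min(per_month, len(ids)))
            for month, ids in sorted(by_month.items())}


def compare_to_source(extracted, source):
    """Fields whose extracted value differs from the source anesthesia record."""
    return {k: (extracted.get(k), v) for k, v in source.items() if extracted.get(k) != v}


# A mapping error of the kind described above: sufentanil extracted as fentanyl.
print(compare_to_source({"opioid": "Fentanyl", "dose_mcg": 10},
                        {"opioid": "Sufentanil", "dose_mcg": 10}))
# {'opioid': ('Fentanyl', 'Sufentanil')}
```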

Figure 4.

MPOG Data Diagnostics and Case Validation Tools. A, The output of one of multiple data diagnostic control charts is demonstrated. Charts are color coded and prioritized to focus work on improving the highest priority data elements. B, The case-by-case review is demonstrated, whereby the extracted record is compared against the source medical record and a clinician attests to the accuracy of the detailed extraction. Both panels have been edited for clarity and to remove identifying information. MPOG indicates Multicenter Perioperative Outcomes Group; U-OR 31, operating room 31.

This multistep validation process is completed before any data transfer and continues throughout MPOG participation to ensure the continuity and fidelity of data uploads.

Participating Sites: Removal of Identifiers and Data Transmission

A limited data set is first created locally by removing selected PHI with a customized “scrubbing” tool (leaving only dates and extremes of age) and is then transmitted to the centralized MPOG database. The scrubbing tool additionally removes common names that may be entered in free text. Several dictionaries are preloaded into the scrubbing application, including the most common first and last names from the US Census Bureau and the Systematized Nomenclature of Medicine (SNOMED) dictionary, which identifies health care terminology that should be retained in the transfer. Sites may add further terms to be scrubbed, such as names, initials, or internal identifiers assigned to providers. All text is passed through the scrubbing utility before upload.
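The dictionary-based approach can be sketched as follows. This is an assumed simplification, not the MPOG scrubbing tool: a token is removed if it appears in a site-supplied list, or if it appears in the name dictionary and not in a retained clinical vocabulary (a plain set standing in for SNOMED-derived terms). Single-token matching like this misses multiword names and misspellings.

```python
import re


def scrub(text, name_dictionary, keep_terms, site_terms=(), placeholder="[REMOVED]"):
    """Replace likely identifiers in free text while retaining clinical vocabulary.

    name_dictionary: common first/last names (eg, drawn from census name lists).
    keep_terms: clinical terms to retain even if they also appear as names.
    site_terms: site-specific strings (provider names, initials, internal IDs),
                always removed.
    """
    names = {n.lower() for n in name_dictionary}
    keep = {t.lower() for t in keep_terms}
    site = {t.lower() for t in site_terms}

    def replace(match):
        token = match.group(0).lower()
        if token in site or (token in names and token not in keep):
            return placeholder
        return match.group(0)

    # Tokens are runs of letters, digits, underscores, apostrophes, or hyphens.
    return re.sub(r"[\w'-]+", replace, text)


note = "Pt seen by Dr Smith; Parkinson disease; Foley placed; ID A1234"
print(scrub(note, {"Smith", "Parkinson", "Foley"}, {"Parkinson", "Foley"}, site_terms={"A1234"}))
# Pt seen by Dr [REMOVED]; Parkinson disease; Foley placed; ID [REMOVED]
```

Eponymous clinical terms such as `Parkinson` and `Foley` survive because they are in the retained vocabulary, while the surname and the site-specific identifier are removed.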

Only after completion of validation procedures and the use of the scrubbing tool does the option of transferring case-level data to the MPOG Coordinating Center become available. Data are transferred into an encrypted repository, checked for validity, and integrated into the MPOG Coordinating Center database. A database table containing patient identifiers and unique case-linking information remains stored at the local site and is not transmitted to the MPOG Coordinating Center.

The chair/practice lead and Principal Investigator at each participating MPOG site receive monthly emails summarizing site data contribution to facilitate monitoring of data submitted over time.

Coordinating Center: Automated Handling

Once data are transmitted and integrated into the MPOG Coordinating Center database, the data are available for use within research and quality improvement projects. As specific to the needs of a project, data are subject to focused examination to ensure appropriate values are included. A key component of this is the creation of computable phenotypes. These are prespecified, standardized methodologies (ontologies) to define a specific patient feature, aspect of care, or outcome. Examples of computable phenotypes include standardized methods for calculating case duration, determining the presence of particular comorbidities, ascertaining if a patient met a standard definition of acute kidney injury, or applying a standardized form of artifact reduction. Computable phenotypes are reusable across projects and, therefore, allow preparatory work to be performed, populations defined, and outcomes explored in a standardized manner.
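As an example of a reusable computable phenotype, the function below flags postoperative acute kidney injury from serum creatinine alone, loosely following the KDIGO creatinine criteria (a rise of at least 0.3 mg/dL within 48 hours, or to at least 1.5 times baseline within 7 days). It is a simplified sketch, not MPOG's phenotype: the baseline choice, the fixed windows measured from surgery end, and the omission of urine-output criteria are all assumptions.

```python
from datetime import datetime, timedelta


def creatinine_aki(baseline_mg_dl, postop, surgery_end):
    """Simplified creatinine-only AKI phenotype loosely following KDIGO.

    baseline_mg_dl: last preoperative serum creatinine (assumed baseline).
    postop: list of (timestamp, creatinine mg/dL) pairs.
    Returns True/False, or None when no usable baseline exists.
    Rolling 48-hour windows and urine-output criteria are omitted.
    """
    if baseline_mg_dl is None or baseline_mg_dl <= 0:
        return None  # phenotype not computable without a baseline
    for taken_at, value in postop:
        elapsed = taken_at - surgery_end
        if elapsed < timedelta(0):
            continue  # ignore values drawn before surgery ended
        if elapsed <= timedelta(hours=48) and value >= baseline_mg_dl + 0.3:
            return True
        if elapsed <= timedelta(days=7) and value >= 1.5 * baseline_mg_dl:
            return True
    return False


end = datetime(2019, 3, 1, 12, 0)
print(creatinine_aki(1.0, [(end + timedelta(hours=24), 1.4)], end))  # True
```

Packaging the rule as one function is what makes it reusable: every project that needs AKI calls the same definition instead of re-deriving it.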

Scientific and Clinical Community: Knowledge Dissemination

To conduct a research project, an investigator presents a detailed proposal at the monthly MPOG Perioperative Clinical Research Committee (PCRC), composed of MPOG active site principal investigators, site chairs/heads of practice, statisticians, and other interested research faculty. The committee critically reviews and amends the proposal and then votes to accept, require revisions, or reject it. Each active site submits a single vote. This monthly forum allows each participating site to comment on the suitability of its data for inclusion in a particular research project and to help others understand relevant site-specific practices before data extraction and analysis. PCRC approval is required for use of MPOG data in research projects; this ensures that sites can comment on projects using their data and promotes equity of access to MPOG data.

Before accessing data, research project proposals are prospectively registered and tracked on the MPOG website, which is accessible to members. This process acts as a form of trial registration, clearly delineating the purposes for which data are being accessed and the a priori analytic plan.38,39 If protocol revisions are warranted, all changes are circulated among MPOG members for feedback, approval, and documentation on the MPOG website.

To enable quality improvement, the Quality Committee oversees quality initiatives through processes parallel to those of the PCRC. The Quality Committee, composed of an anesthesiologist from each participating site, leads the development of quality measures. Anesthesia providers can see their performance on these measures against anonymized peers and institutional averages via web dashboards or monthly email notifications. Sites that choose to provide quality feedback to their providers are required to upload data monthly to enable near-real-time feedback.
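A minimal sketch of the comparison such feedback presents, assuming a hypothetical list of `(provider, passed)` results for the eligible cases of one measure. Peer rates are returned without identities and sorted; how MPOG's dashboards actually compute and display performance is not specified here.

```python
from collections import defaultdict


def measure_feedback(cases, provider_id):
    """Summarize one quality measure for a provider against anonymized peers.

    cases: iterable of (provider, passed) pairs for eligible cases (hypothetical format).
    Returns the provider's pass rate, the institutional pass rate, and peer rates
    with identities removed (sorted, so order does not reveal who is who).
    """
    passed = defaultdict(int)
    total = defaultdict(int)
    for provider, ok in cases:
        total[provider] += 1
        passed[provider] += int(ok)
    rates = {p: passed[p] / total[p] for p in total}
    institution = sum(passed.values()) / sum(total.values())
    peers = sorted(r for p, r in rates.items() if p != provider_id)
    return {"provider": rates.get(provider_id), "institution": institution, "peers": peers}
```

Here the institutional figure is pooled over cases rather than averaged over providers, an assumed design choice that weights busier providers more heavily.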

Current State of MPOG

Table 2 summarizes data currently available within MPOG for a subset covering the last 5 available years (2014–2018). Where appropriate, counts, means, medians, or proportions are presented as summary measures of the data set.

Table 2.

Selected MPOG Perioperative Case Characteristics, January 1, 2014 to December 31, 2018

| MPOG Variable | Number | Fill Rate (%)ᵃ |
|---|---|---|
| Operating room type | | |
| Anesthetizing location | … | |
| Inpatient operating rooms | 2,317 | |
| Ambulatory surgery center operating rooms | 554 | |
| NORA locations | 693 | |
| Patient information | … | |
| Cases included | 7,373,376 | |
| Distinct patients (sum of distinct patients at each institution) | 4,760,059 | |
| Known gender | 7,366,676 | 99.9 |
| Known patient race | 6,265,673 | 85.0 |
| Known patient height, total | 6,494,163 | 88.0 |
| Age ≥18 y | 5,899,761 | 89.5 |
| Age <18 y | 594,402 | 75.6 |
| Known patient weight, total | 6,920,295 | 93.9 |
| Age ≥18 y | 6,156,952 | 93.5 |
| Age <18 y | 763,343 | 97.1 |
| Age, y | | 100.0 |
| <1 | 91,473 | |
| 1–4 | 237,524 | |
| 5–11 | 242,961 | |
| 12–17 | 213,644 | |
| 18–34 | 1,129,055 | |
| 35–64 | 3,304,243 | |
| 65–84 | 1,976,374 | |
| ≥85 | 178,099 | |
| Specific Elixhauser comorbidities | | |
| CHF | 348,599 | |
| Diabetes (complicated or uncomplicated) | 617,154 | |
| Liver disease | 233,606 | |
| Cancer (solid tumor without metastasis, metastatic cancer, lymphoma) | 722,759 | |
| Case information | | |
| Anesthesia technique | | |
| General | 4,588,597 | |
| Includes regional/neuraxial | 497,093 | |
| Admission type | | 99.9 |
| IP | 2,885,173 | |
| OP | 4,304,247 | |
| Other | 177,779 | |
| Selected CPT case classifications | | |
| Cardiac | 118,842 | |
| Diagnostic endoscopy | 870,548 | |
| Major abdominal | 628,055 | |
| Ophthalmic procedure | 277,752 | |
| Preoperative documentation | | |
| ASA PS classification | | 97.9 |
| I, II | 3,915,973 | |
| III, IV | 3,282,043 | |
| Cases with MP classification documented | 5,042,240 | |
| Intraoperative documentation | | |
| EKG HR measurements | 776,522,753 | 89.2 |
| Systemic blood pressure measurements | | |
| Noninvasive | 188,908,501 | 95.2 |
| Invasive | 178,143,280 | 10.1 |
| Cases receiving a PRBC transfusion | 146,421 | 2.0 |
| Administered medications | 98,009,774 | 98.8 |
| Documented IV fluids | 15,968,246 | 81.9 |
| Staffing model | | 99.9 |
| Attending only | 1,071,745 | |
| CRNA/AA | 4,841,515 | |
| Trainees involved | 1,773,764 | |
| Outcome data | | |
| Cases with laboratory values in 365 d prior | 5,327,154 | 72.3 |
| Cases with laboratory values in 365 d after | 4,226,346 | 57.3 |
| Cases with both a pre- and postoperative creatinine value | 2,803,263 | 38.0 |
| Cases with troponin within 30 d postoperative | 339,071 | 4.6 |
| In-hospital mortality | 53,472 | 0.73 |
| Reoperation within 30 d (at same institution) | 704,183 | 9.6 |

Abbreviations: ASA PS, American Society of Anesthesiologists physical status; CHF, congestive heart failure; CPT, Current Procedural Terminology; CRNA/AA, certified registered nurse anesthetist/anesthesiologist assistant; EKG, electrocardiogram; HR, heart rate; IP, inpatient; IV, intravenous; MP, Mallampati; MPOG, Multicenter Perioperative Outcomes Group; NORA, nonoperating room anesthesia; OP, outpatient; PRBC, packed red blood cell.

ᵃFill rate is calculated for selected variables, where the appropriate denominator is the entire population.
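As a worked check of the fill-rate definition, dividing selected counts by the 7,373,376 included cases reproduces the percentages reported for race, gender, and in-hospital mortality. The helper below is illustrative only.

```python
def fill_rate(known, denominator, digits=1):
    """Fill rate (%): records with a documented value divided by all records in the denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return round(100 * known / denominator, digits)


TOTAL_CASES = 7_373_376  # "Cases included" in Table 2

print(fill_rate(6_265_673, TOTAL_CASES))  # known race: 85.0
print(fill_rate(7_366_676, TOTAL_CASES))  # known gender: 99.9
print(fill_rate(53_472, TOTAL_CASES, 2))  # in-hospital mortality: 0.73
```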

Since the inception of the MPOG PCRC in January 2012, 14 projects have reached publication. These works cover diverse fields, ranging from airway management,40 to epidural hematoma formation,41 to the distribution of arterial blood pressures during pediatric surgery.42

The MPOG Quality Committee has approved 27 measures, 26 of which are currently in use.43 Measures are regularly reviewed and revised to incorporate best practice guidelines published in the literature. Emails summarizing performance on these measures are sent to >3000 anesthesia providers each month. Between 2015 and 2018, MPOG was a designated Qualified Clinical Data Registry (QCDR); in 2019, MPOG did not seek participation in the program because of its significant administrative burden combined with limited uptake among MPOG member institutions.

Considerations to Improve Data Quality

The work of MPOG depends on each site contributing usable data, which requires ongoing diligence from participating sites to maintain data quality. We describe 4 considerations that may guide high-fidelity multicenter EHR data integration. These emerge from our practice and are shaped by the approach we took in creating our registry; however, we believe they offer insights that generalize to the creation of other clinical registries.

Consideration 1: Data Availability at the Local Institution

By participating in MPOG, each site gains a validated, readily accessible local database that enables institutions to perform internal operational, quality improvement, and research studies. Such initiatives may function independently of local enterprise EHR systems or the MPOG network.

Understanding what information is being submitted on behalf of an organization is vital to how that organization perceives and trusts the registry. This need is common to clinical registries. Local usability builds trust in the data and encourages local users to scrutinize more aspects of the data.

Allowing local use creates a sense of departmental ownership of the information. This fosters a responsibility for the data that is separate from that of the enterprise information technology department, which may manage the remainder of the EHR data. Furthermore, anesthesiology departments may become resources for, and engaged stakeholders in, institutional perioperative data.

Consideration 2: Data Validation Against Standardized Metrics Before Use

Working across multiple institutions and broad geographic areas reveals wide variation in clinical and documentation practices. To accommodate this variability, MPOG allows flexibility in data extraction. However, this flexibility must be matched by validation to ensure that data are complete and accurate.

This approach follows from using electronically collected data with minimal constraints. By contrast, tight data constraints provide a form of validation at the time of data collection. In a hand-abstracted registry, a data collection instrument may specify that an element is mandatory and may require it to be captured as a “yes” or “no” value. This ensures that the data conform to a tightly specified structure at the time of collection, but it does not ensure that the collected values are accurate.

Therefore, MPOG's data validation practice combines 2 complementary checks: ongoing item-by-item review against source documentation, to ensure accurate data capture over time, and review of aggregate distributions, to ensure that the data are described in the expected manner. This process allows diverse practices to contribute heterogeneous data that are then systematically validated and standardized from data extraction to submission.
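The distinction between structural conformance and accuracy can be made concrete with a constrained yes/no field. This is a minimal illustration, not a description of any specific registry instrument; the field name `difficult_airway` and the example values are hypothetical.

```python
ALLOWED = {"yes", "no"}


def conforms(record: dict, field: str) -> bool:
    """Structural check only: the mandatory field is present and holds an allowed value."""
    return record.get(field) in ALLOWED


# Hypothetical case: the patient did have a difficult airway, but the
# abstractor entered "no". The record is well formed yet inaccurate.
truth = {"difficult_airway": "yes"}
abstracted = {"difficult_airway": "no"}

print(conforms(abstracted, "difficult_airway"))  # True: passes the structural check
print(abstracted == truth)                        # False: the value is wrong
```

The constraint rejects missing or malformed entries, but only comparison against the source documentation reveals the wrong answer.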
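The 2 kinds of review can be sketched as follows, assuming extracted and manually reviewed values are available as dictionaries keyed by element name. The element names, the expected weight range, and the use of the median as the aggregate statistic are illustrative assumptions, not MPOG's validation criteria.

```python
from statistics import median


def item_agreement(extracted: dict, reviewed: dict) -> float:
    """Fraction of manually reviewed items whose extracted value matches the
    source documentation. Items missing from the extract count as mismatches."""
    if not reviewed:
        raise ValueError("no reviewed items")
    matches = sum(
        1 for key, value in reviewed.items()
        if key in extracted and extracted[key] == value
    )
    return matches / len(reviewed)


def median_in_range(values, low: float, high: float) -> bool:
    """Aggregate check: does the site's median fall within an expected range?"""
    return low <= median(values) <= high


# Item-by-item review of one hypothetical case against its source record.
extracted = {"asa_ps": 3, "emergency": False, "airway_device": "ETT", "weight_kg": 82}
reviewed = {"asa_ps": 3, "emergency": False, "airway_device": "LMA", "weight_kg": 82}
print(item_agreement(extracted, reviewed))  # 0.75

# Aggregate review: adult weights that look like pounds stored as kilograms.
print(median_in_range([68, 82, 95], low=50, high=120))     # True
print(median_in_range([150, 180, 200], low=50, high=120))  # False
```

Item-level review catches individual capture errors, whereas the aggregate check can expose a systematic problem, such as a unit mismatch, that affects every case at a site.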

Consideration 3: Data Curation Into Computable Phenotypes to Be Easily Accessible

An important task of the MPOG coordinating center is curating data into computable phenotypes, that is, standardized, algorithmic definitions of clinical characteristics or outcomes derived from the underlying data. Computable phenotypes help other investigators reproduce our work by promoting standard definitions of exposure and outcome variables and standardized methods for handling artifacts. They also allow project-specific data elements to be built from shared foundational building blocks.

Because computable phenotypes are developed after data extraction, they are analogous to data dictionaries defined after data collection. Registries often undergo definitional evolution that shifts the criteria for inclusion or outcome ascertainment over time. As a result, an apparent change in the incidence of an outcome may reflect a change in definitions rather than a true change. Computable phenotypes, in contrast, are applied to the underlying data at the time of access, so the same standardized definition can be applied consistently across all time periods.

Our approach avoids repeatedly deriving commonly used measures for each research or quality improvement effort, so that investigators and quality improvement leaders can instead focus their efforts on improving clinical practice. Collectively, computable phenotypes directly address challenges of reproducibility inherent to research derived from heterogeneous multicenter EHR data.
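As an illustration of both artifact handling and applying one definition across time, the sketch below defines a hypothetical intraoperative hypotension phenotype and applies it unchanged to cases from different years. The thresholds (artifact range 20 to 250 mm Hg, MAP below 65 mm Hg for at least 5 cumulative minutes) and the one-reading-per-minute assumption are chosen for illustration only and are not MPOG's published phenotype definitions.

```python
# Illustrative thresholds only; these are NOT MPOG's phenotype definitions.
ARTIFACT_LOW, ARTIFACT_HIGH = 20, 250  # mm Hg; readings outside are discarded
HYPOTENSION_MAP = 65                   # mm Hg
MIN_LOW_MINUTES = 5                    # cumulative minutes required


def hypotension_phenotype(map_by_minute: list[float]) -> bool:
    """Hypothetical phenotype: at least 5 cumulative minutes of MAP < 65 mm Hg
    after discarding implausible readings. Assumes one MAP value per minute."""
    valid = [m for m in map_by_minute if ARTIFACT_LOW <= m <= ARTIFACT_HIGH]
    return sum(1 for m in valid if m < HYPOTENSION_MAP) >= MIN_LOW_MINUTES


def incidence_by_year(cases):
    """Apply one fixed definition to every case, regardless of the year of care."""
    totals: dict[int, int] = {}
    positives: dict[int, int] = {}
    for year, series in cases:
        totals[year] = totals.get(year, 0) + 1
        positives[year] = positives.get(year, 0) + int(hypotension_phenotype(series))
    return {year: positives[year] / totals[year] for year in sorted(totals)}


cases = [
    (2016, [70] * 10 + [60] * 5),  # 5 low minutes: meets the definition
    (2016, [80] * 20 + [0] * 10),  # zeros (e.g., transducer zeroing) are artifact
    (2018, [62] * 6),
    (2018, [90] * 30),
]
print(incidence_by_year(cases))  # {2016: 0.5, 2018: 0.5}
```

Because the definition is code applied to stored data at the time of access, revising it means rerunning it over every year rather than living with a mid-registry change in ascertainment. Without the artifact filter, the second case would be misclassified as hypotensive.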

Consideration 4: Data Collected Are Used for Both Research and Quality Improvement Purposes Because These Complementary Goals Bolster the Strength of Each Endeavor

The integration of the quality improvement and research initiatives encourages individual sites to prioritize timeliness, accuracy, and completeness of data capture. Feedback on the quality of anesthesia care needs to be given rapidly to allow anesthesia providers to recall the specifics of the case in question. This is in contrast to research initiatives that typically have a smaller audience and a longer time horizon. However, both are predicated on high data quality to ensure valid conclusions are drawn. Integrating both initiatives incentivizes providers to become stakeholders in the quality of the data submitted and encourages local sites to ensure the fidelity of the data submitted. This process enhances the utility of the submitted data for investigating complex and timely research questions, which may in turn form the basis of a new best practice in the future.

The use of outcome registries for both quality and research endeavors is well established in perioperative care. Our approach continues this practice and applies it to institutionally focused, broad-based, electronic data collection.

LIMITATIONS OF THE MPOG APPROACH

The value of a data set emerges from the manner in which it is used. MPOG's approach is explicitly designed to maximize the potential uses of the data it contains, at the cost of increased analytic complexity.

Despite a multicenter effort with detailed care and ongoing review, perfect data quality cannot be ensured. Issues inherent to source documentation may be carried forward; for example, it is not appropriate to retrospectively correct a unit error in drug-dosing documentation created at the time of patient care. Data diagnostics and validation strategies can, however, identify some of these errors and flag the affected medication administrations for further review or exclusion.

Variation in the completeness of documentation will always remain: providers and institutions differ in how much detail they record about perioperative events. Less structured, more narrative documentation styles require advanced data science methods, such as natural language processing or machine learning, to compile in an automated manner.

Because the unit of participation within MPOG is the electronic anesthesia record, our work focuses on institutions that use such systems. Institutions with paper-based documentation or without integrated anesthesia records, such as small office-based anesthesia practices, may not be able to participate; thus, our data contain an implicit selection bias. The inclusion of nonacademic sites within the state of Michigan has expanded the reach of MPOG and the types of conclusions that can be drawn from the practice described within this registry. However, given the practice patterns of participating institutions, we have limited insight into office-based anesthesia or the practice of smaller private practice groups. Including all cases for which anesthesia care is provided gives a more holistic view of anesthesia practice, spanning complex inpatient surgery to high-volume, low-complexity outpatient procedures. Because of this inclusion strategy, our aggregate data are not directly comparable to those of procedure- or proceduralist-oriented registries.

Multistage review creates opportunities to work with participating sites to resolve both technical and process issues that contribute to poor data quality. A data distribution review may reveal that a participating site accidentally mapped a local packed red blood cell transfusion concept to an MPOG Concept for salvaged processed blood; this can be corrected relatively simply. In contrast, a project examining airway management may discover that participating sites vary in the quality, completeness, and descriptiveness of their airway management documentation, which may require a more fundamental discussion of documentation standards at each institution.
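A data diagnostic of this kind flags a suspicious administration without altering what was documented. The sketch below assumes a per-drug plausible single-dose range in milligrams and treats a dose that becomes plausible when divided by 1000 as a possible microgram-as-milligram entry. The ranges in `PLAUSIBLE_DOSE_MG` are hypothetical and for illustration only; they are not clinical reference values or MPOG rules.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical plausible single-dose ranges in mg, for illustration only.
PLAUSIBLE_DOSE_MG = {
    "fentanyl": (0.01, 0.5),
    "propofol": (10.0, 500.0),
}


@dataclass(frozen=True)  # frozen: the documented record cannot be altered
class Administration:
    case_id: int
    drug: str
    dose_mg: float


def review_flag(admin: Administration) -> Optional[str]:
    """Return a reason to review the administration, or None if plausible."""
    bounds = PLAUSIBLE_DOSE_MG.get(admin.drug)
    if bounds is None:
        return "no reference range"
    low, high = bounds
    if low <= admin.dose_mg <= high:
        return None
    if low <= admin.dose_mg / 1000 <= high:
        return "possible mcg-as-mg unit error"
    return "implausible dose"


doses = [
    Administration(1, "fentanyl", 0.1),    # 100 mcg documented correctly
    Administration(2, "fentanyl", 100.0),  # likely 100 mcg entered as mg
    Administration(3, "propofol", 5000.0),
    Administration(4, "ketamine", 50.0),
]
for admin in doses:
    print(admin.case_id, review_flag(admin))
```

The flag is returned alongside the unchanged record, so an analyst can decide whether to review or exclude the administration while the original documentation is preserved.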
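A mapping error like the one described above tends to show up as a site whose distribution differs sharply from the rest of the network. A minimal sketch, assuming per-site counts of blood product administrations by concept: sites are flagged when their share of one concept exceeds a chosen multiple of the network median share. The concept labels (`prbc`, `cell_salvage`), the counts, and the factor of 3 are hypothetical and are not MPOG Concept identifiers or review thresholds.

```python
from statistics import median


def concept_share(counts: dict[str, int], concept: str) -> float:
    """Share of a site's blood product administrations mapped to `concept`."""
    total = sum(counts.values())
    return counts.get(concept, 0) / total if total else 0.0


def flag_sites(
    site_counts: dict[str, dict[str, int]], concept: str, factor: float = 3.0
) -> list[str]:
    """Flag sites whose share of `concept` exceeds `factor` times the network median."""
    shares = {site: concept_share(counts, concept) for site, counts in site_counts.items()}
    network_median = median(list(shares.values()))
    return sorted(site for site, share in shares.items() if share > factor * network_median)


site_counts = {
    "A": {"prbc": 950, "cell_salvage": 50},
    "B": {"prbc": 900, "cell_salvage": 100},
    "C": {"prbc": 960, "cell_salvage": 40},
    "D": {"prbc": 100, "cell_salvage": 900},  # local PRBC concept mis-mapped
}
print(flag_sites(site_counts, "cell_salvage"))  # ['D']
```

A flag is a prompt for discussion with the site, not proof of an error; some sites may legitimately use more salvaged blood than others.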

MULTICENTER PERIOPERATIVE EHR DATA HORIZONS

Database-derived research has allowed important and complex questions to be posed based on the collective experience of anesthesia providers. The same platform built to gather detailed, structured, and standardized data on perioperative care across many institutions could also provide the mechanism for prospective clinical trials. To this end, MPOG has developed a framework for appending additional information to the standard data extract. This framework has been used to support an enhanced observational trial in which data elements not routinely captured were collected prospectively for a targeted population during a specified period. We anticipate that this will form the basis of further prospective observational and interventional trials that adopt sophisticated methodologies, such as registry-based randomized, stepped-wedge cluster randomized, adaptive, and pragmatic embedded designs, as other clinical specialties have done.44–47 In addition, the MPOG network has been proposed as the data structure and data coordinating center for several awards of the Initiative for Multicenter Pragmatic Anesthesiology Clinical Trials (IMPACT), supported by the International Anesthesia Research Society.

The ability to capture additional data at the point of care in real time would enhance the quality mission. It would allow independently observed data, such as postanesthesia care unit (PACU) transitions of care, to be included among measured data types. Incorporating additional data types and sources increases the aspects of care that can be measured and included in provider feedback, offering new opportunities to measure the quality of anesthesia care.

Inclusion of novel data sources allows transformative questions to be asked about the outcomes of perioperative care. Continued partnerships with surgical outcome registries offer opportunities for true multidisciplinary collaboration in the pursuit of improved patient outcomes, emerging naturally from a shared data set describing patient care. With proper consent and oversight, patient-centered data, such as from smartphones or wearable devices, may offer novel opportunities to better understand the true state of a patient's health and well-being and to study new clinical outcomes defined by function and activity in the perioperative period.
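The source does not describe how MPOG's framework appends information to the standard extract, so the following is only a hypothetical sketch of the general idea. It enrolls cases that fall within a study window and meet an eligibility rule, then attaches prospectively collected elements to those cases. The function name `append_supplemental`, the `supp_` prefix, the pediatric eligibility rule, and the `emergence_score` element are all illustrative assumptions.

```python
from datetime import date


def append_supplemental(cases, supplemental, start, end, eligible):
    """Attach prospectively collected elements to standard-extract cases.

    cases: dicts with at least 'case_id' and 'date' (the standard extract)
    supplemental: {case_id: {element: value}} collected during the study
    Only cases within [start, end] that satisfy `eligible` are enrolled.
    """
    enriched = []
    for case in cases:
        row = dict(case)  # copy, so the standard extract itself is unchanged
        row["enrolled"] = start <= case["date"] <= end and eligible(case)
        if row["enrolled"]:
            extra = supplemental.get(case["case_id"], {})
            # Prefix supplemental fields so they cannot overwrite standard ones.
            row.update({f"supp_{name}": value for name, value in extra.items()})
        enriched.append(row)
    return enriched


cases = [
    {"case_id": 1, "date": date(2019, 2, 1), "age_years": 5},
    {"case_id": 2, "date": date(2019, 2, 10), "age_years": 40},
    {"case_id": 3, "date": date(2018, 12, 1), "age_years": 6},
]
supplemental = {1: {"emergence_score": 4}, 3: {"emergence_score": 2}}
rows = append_supplemental(
    cases, supplemental,
    start=date(2019, 1, 1), end=date(2019, 3, 31),
    eligible=lambda case: case["age_years"] < 18,  # hypothetical pediatric cohort
)
for row in rows:
    print(row)
```

Keeping the supplemental elements separate and prefixed means the routine extract, and every analysis built on it, is unaffected by the study.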

SUMMARY

As perioperative EHR databases continue to evolve and expand, so too must the standards imposed and the methods used to ensure high-fidelity integration of EHR data. The approach taken by the MPOG consortium offers specific techniques for improving the usability of perioperative EHR data for quality improvement and research analytics. Our approach aims to maintain confidence in the validity of research and quality improvement projects while remaining cognizant of their specific limitations. Our experience suggests that engaging investigators and clinicians improves understanding of clinical context and can increase data quality. This enables perioperative EHR databases to become a significant tool within the modern health care armamentarium.

Supplementary Material

ACKNOWLEDGMENTS

The authors acknowledge the work of the staff of the Multicenter Perioperative Outcomes Group (MPOG) coordinating center in the development of the technical, regulatory, and organizational infrastructure required for the establishment and sustainment of the work described.

The authors additionally acknowledge Michelle Romanowski (Department of Anesthesiology, University of Michigan Medical School, Ann Arbor, Michigan) for contributions in the acquisition of data required for this manuscript.

The authors thank Ken Arbogast-Wilson (WTW Design Group, Ann Arbor, Michigan) for the creation of Figure 1 and Paul Trombley (Graphics Artist, University of Michigan, Ann Arbor, Michigan) for the creation of Figure 2.

Finally, the authors recognize the contributions of the entire MPOG membership across the participating institutions for their ongoing collaboration in this endeavor.

Funding: All work and partial funding attributed to the Department of Anesthesiology, University of Michigan Medical School (Ann Arbor, Michigan). Research reported in this publication was supported by the National Institute of General Medical Sciences of the National Institutes of Health under award number T32GM103730 (D.A.C. and M.L.B.); the National Heart, Lung, and Blood Institute of the National Institutes of Health under award number K01HL141701 (M.R.M.); and the National Center for Advancing Translational Sciences under award number 1KL2TR002245 (R.E.F.). Support for underlying electronic health record data collection was provided, in part, by Blue Cross Blue Shield of Michigan (BCBSM) and Blue Care Network as part of the BCBSM Value Partnerships program for contributing hospitals in the state of Michigan. Although BCBSM and the Multicenter Perioperative Outcomes Group work collaboratively, the opinions, beliefs, and viewpoints expressed by the authors do not necessarily reflect the opinions, beliefs, and viewpoints of BCBSM or any of its employees.

GLOSSARY

- AACD

Association of Anesthesia Clinical Directors

- AIMS

Anesthesia Information Management Systems

- BCBSM

Blue Cross Blue Shield of Michigan

- EHR

electronic health record

- ICD-10

International Classification of Diseases, Tenth Revision

- IMPACT

Initiative for Multicenter Pragmatic Anesthesiology Clinical Trials

- IRB

institutional review board

- MPOG

Multicenter Perioperative Outcomes Group

- NSQIP

National Surgical Quality Improvement Program

- PACU

postanesthesia care unit

- PCRC

Perioperative Clinical Research Committee

- PHI

protected health information

- QCDR

Qualified Clinical Data Registry

- SNOMED

Systematized Nomenclature of Medicine

- STS-GTSD

Society of Thoracic Surgeons General Thoracic Surgery Database

DISCLOSURES

Name: Douglas A. Colquhoun, MBChB, MSc, MPH.

Contribution: This author helped design the work, analyze and interpret the data, draft the manuscript, and critically review and revise the manuscript.

Conflicts of Interest: None.

Name: Amy M. Shanks, PhD.

Contribution: This author helped design the work and critically review and revise the manuscript.

Conflicts of Interest: None.

Name: Steven R. Kapeles, MD.

Contribution: This author helped design the work, draft the figures, and critically review and revise the manuscript.

Conflicts of Interest: None.

Name: Nirav Shah, MD.

Contribution: This author helped critically review and revise the manuscript.

Conflicts of Interest: None.

Name: Leif Saager, DrMed, MMM.

Contribution: This author helped design the work and critically review and revise the manuscript.

Conflicts of Interest: L. Saager declares consultancy fees from Medtronic, The 37 Company & Merck and research support from Merck.

Name: Michelle T. Vaughn, MPH.

Contribution: This author helped critically review and revise the manuscript.

Conflicts of Interest: None.

Name: Kathryn Buehler, MS, RN, CPPS.

Contribution: This author helped critically review and revise the manuscript.

Conflicts of Interest: None.

Name: Michael L. Burns, MD, PhD.

Contribution: This author helped analyze the data and critically review and revise the manuscript.

Conflicts of Interest: None.

Name: Kevin K. Tremper, MD, PhD.

Contribution: This author helped design the article and critically review and revise the manuscript.

Conflicts of Interest: K. K. Tremper is the founder and equity holder of AlertWatch Inc.

Name: Robert E. Freundlich, MD.

Contribution: This author helped prepare the manuscript and critically review the final version of the manuscript.

Conflicts of Interest: R. E. Freundlich declares consultancy fees from Covidien.

Name: Michael Aziz, MD.

Contribution: This author helped prepare the manuscript and critically review the final version of the manuscript.

Conflicts of Interest: None.

Name: Sachin Kheterpal, MD, MBA.

Contribution: This author helped design the article; acquire, analyze, and interpret the data; and critically review and revise the manuscript.

Conflicts of Interest: None.

Name: Michael R. Mathis, MD.

Contribution: This author helped design the article, analyze the data, draft the manuscript, and critically review and revise the manuscript.

Conflicts of Interest: None.

This manuscript was handled by: Maxime Cannesson, MD, PhD.

Footnotes

Conflicts of Interest: See Disclosures at the end of the article.

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Supplemental digital content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal’s website (http://www.anesthesia-analgesia.org).

Reprints will not be available from the authors.

REFERENCES

- 1.Centers for Medicare & Medicaid Services (CMS). Promoting Interoperability (PI). 2019. Available at: https://www.cms.gov/Regulations-and-Guidance/Legislation/EHRIncentivePrograms/. Accessed April 22, 2019.

- 2.Stol IS, Ehrenfeld JM, Epstein RH. Technology diffusion of anesthesia information management systems into academic anesthesia departments in the United States. Anesth Analg. 2014;118:644–650.

- 3.Peterfreund RA, Driscoll WD, Walsh JL, et al. Evaluation of a mandatory quality assurance data capture in anesthesia: a secure electronic system to capture quality assurance information linked to an automated anesthesia record. Anesth Analg. 2011;112:1218–1225.

- 4.Sandberg WS, Sandberg EH, Seim AR, et al. Real-time checking of electronic anesthesia records for documentation errors and automatically text messaging clinicians improves quality of documentation. Anesth Analg. 2008;106:192–201.

- 5.Freundlich RE, Barnet CS, Mathis MR, Shanks AM, Tremper KK, Kheterpal S. A randomized trial of automated electronic alerts demonstrating improved reimbursable anesthesia time documentation. J Clin Anesth. 2013;25:110–114.

- 6.Spring SF, Sandberg WS, Anupama S, Walsh JL, Driscoll WD, Raines DE. Automated documentation error detection and notification improves anesthesia billing performance. Anesthesiology. 2007;106:157–163.

- 7.Epstein RH, Dexter F, Patel N. Influencing anesthesia provider behavior using anesthesia information management system data for near real-time alerts and post hoc reports. Anesth Analg. 2015;121:678–692.

- 8.Wanderer JP, Charnin J, Driscoll WD, Bailin MT, Baker K. Decision support using anesthesia information management system records and accreditation council for graduate medical education case logs for resident operating room assignments. Anesth Analg. 2013;117:494–499.

- 9.Klumpner TT, Kountanis JA, Langen ES, Smith RD, Tremper KK. Use of a novel electronic maternal surveillance system to generate automated alerts on the labor and delivery unit. BMC Anesthesiol. 2018;18:78.

- 10.Kheterpal S, Shanks A, Tremper KK. Impact of a novel multiparameter decision support system on intraoperative processes of care and postoperative outcomes. Anesthesiology. 2018;128:272–282.

- 11.Sathishkumar S, Lai M, Picton P, et al. Behavioral modification of intraoperative hyperglycemia management with a novel real-time audiovisual monitor. Anesthesiology. 2015;123:29–37.

- 12.Deng F, Hickey JV. Anesthesia information management systems: an underutilized tool for outcomes research. AANA J. 2015;83:189–195.

- 13.Muravchick S. Anesthesia information management systems. Curr Opin Anaesthesiol. 2009;22:764–768.

- 14.Rosenstock CV, Nørskov AK, Wetterslev J, Lundstrøm LH; Danish Anaesthesia Database. Emergency surgical airway management in Denmark: a cohort study of 452 461 patients registered in the Danish anaesthesia database. Br J Anaesth. 2016;117(suppl 1):i75–i82.

- 15.Bateman BT, Mhyre JM, Ehrenfeld J, et al. The risk and outcomes of epidural hematomas after perioperative and obstetric epidural catheterization: a report from the Multicenter Perioperative Outcomes Group research consortium. Anesth Analg. 2013;116:1380–1385.

- 16.Saager L, Turan A, Egan C, et al. Incidence of intraoperative hypersensitivity reactions: a registry analysis. Anesthesiology. 2015;122:551–559.

- 17.Whitlock EL, Feiner JR, Chen LL. Perioperative mortality, 2010 to 2014: a retrospective cohort study using the national anesthesia clinical outcomes registry. Anesthesiology. 2015;123:1312–1321.

- 18.Salmasi V, Maheshwari K, Yang D, et al. Relationship between intraoperative hypotension, defined by either reduction from baseline or absolute thresholds, and acute kidney and myocardial injury after noncardiac surgery: a retrospective cohort analysis. Anesthesiology. 2017;126:47–65.

- 19.Ehrenfeld JM, Wanderer JP, Terekhov M, Rothman BS, Sandberg WS. A perioperative systems design to improve intraoperative glucose monitoring is associated with a reduction in surgical site infections in a diabetic patient population. Anesthesiology. 2017;126:431–440.

- 20.Sun LY, Wijeysundera DN, Tait GA, Beattie WS. Association of intraoperative hypotension with acute kidney injury after elective noncardiac surgery. Anesthesiology. 2015;123:515–523.

- 21.Karkouti K, Grocott HP, Hall R, et al. Interrelationship of preoperative anemia, intraoperative anemia, and red blood cell transfusion as potentially modifiable risk factors for acute kidney injury in cardiac surgery: a historical multicentre cohort study. Can J Anesth. 2015;62:377–384.

- 22.Turan A, Mascha EJ, You J, et al. The association between nitrous oxide and postoperative mortality and morbidity after noncardiac surgery. Anesth Analg. 2013;116:1026–1033.

- 23.Nielsen DV, Hansen MK, Johnsen SP, Hansen M, Hindsholm K, Jakobsen CJ. Health outcomes with and without use of inotropic therapy in cardiac surgery: results of a propensity score-matched analysis. Anesthesiology. 2014;120:1098–1108.

- 24.Goldberg SI, Niemierko A, Turchin A. Analysis of data errors in clinical research databases. AMIA Annu Symp Proc. 2008;2008:242–246.

- 25.Romano PS, Mark DH. Bias in the coding of hospital discharge data and its implications for quality assessment. Med Care. 1994;32:81–90.

- 26.Raleigh VS, Cooper J, Bremner SA, Scobie S. Patient safety indicators for England from hospital administrative data: case-control analysis and comparison with US data. BMJ. 2008;337:a1702.

- 27.Martin M, Kheterpal S. Monitoring artifacts and large database research: what you don’t know could hurt you. Can J Anesth. 2012;59:823–826.

- 28.Kool NP, van Waes JA, Bijker JB, et al. Artifacts in research data obtained from an anesthesia information and management system. Can J Anesth. 2012;59:833–841.

- 29.Anderson BJ, Merry AF. Paperless anesthesia: uses and abuses of these data. Paediatr Anaesth. 2015;25:1184–1192.

- 30.Deshur MA, Levine WC. AIMS: Should we AIM Higher? Indianapolis, IN: Anesthesia Patient Safety Foundation; 2015.

- 31.Monk TG, Hurrell M, Norton A. Toward Standardization of Terminology in Anesthesia Information Management Systems. 2010. Available at: http://www.apsf.org/initiatives.php?id=2. Accessed March 9, 2016.

- 32.Neuman MD. The importance of validation studies in perioperative database research. Anesthesiology. 2015;123:243–245.

- 33.Kheterpal S. Clinical research using an information system: the multicenter perioperative outcomes group. Anesthesiol Clin. 2011;29:377–388.

- 34.McCormick PJ, Yeoh C, Vicario-Feliciano RM, et al. Improved compliance with anesthesia quality measures after implementation of automated monthly feedback. J Oncol Pract. 2019;15:e583–e592.

- 35.Forkin KT, Chiao SS, Naik BI, et al. Individualized quality data feedback improves anesthesiology residents’ documentation of depth of neuromuscular blockade before extubation. Anesth Analg. 2020;130:e49–e53.

- 36.Multicenter Perioperative Outcomes Group (MPOG). Concept Browser. 2019. Available at: https://mpog.org/concept-browser/. Accessed April 22, 2019.

- 37.Boggs SD, Tsai MH, Urman RD; Association of Anesthesia Clinical Directors. The Association of Anesthesia Clinical Directors (AACD) glossary of times used for scheduling and monitoring of diagnostic and therapeutic procedures. J Med Syst. 2018;42:171.

- 38.Kharasch ED. Observations and observational research. Anesthesiology. 2019;131:1–4.

- 39.Eisenach JC, Kheterpal S, Houle TT. Reporting of observational research in anesthesiology: the importance of the analysis plan. Anesthesiology. 2016;124:998–1000.

- 40.Kheterpal S, Healy D, Aziz MF, et al; Multicenter Perioperative Outcomes Group (MPOG) Perioperative Clinical Research Committee. Incidence, predictors, and outcome of difficult mask ventilation combined with difficult laryngoscopy: a report from the multicenter perioperative outcomes group. Anesthesiology. 2013;119:1360–1369.

- 41.Lee LO, Bateman BT, Kheterpal S, et al; Multicenter Perioperative Outcomes Group Investigators. Risk of epidural hematoma after neuraxial techniques in thrombocytopenic parturients: a report from the Multicenter Perioperative Outcomes Group. Anesthesiology. 2017;126:1053–1063.

- 42.de Graaff JC, Pasma W, van Buuren S, et al. Reference values for noninvasive blood pressure in children during anesthesia: a multicentered retrospective observational cohort study. Anesthesiology. 2016;125:904–913.

- 43.Anesthesiology Performance Improvement and Reporting Exchange (ASPIRE). Our Measures. 2019. Available at: https://mpog.org/quality/our-measures/. Accessed April 22, 2019.

- 44.James S, Rao SV, Granger CB. Registry-based randomized clinical trials–a new clinical trial paradigm. Nat Rev Cardiol. 2015;12:312–316.

- 45.Hemming K, Haines TP, Chilton PJ, Girling AJ, Lilford RJ. The stepped wedge cluster randomised trial: rationale, design, analysis, and reporting. BMJ. 2015;350:h391.

- 46.Thorlund K, Haggstrom J, Park JJ, Mills EJ. Key design considerations for adaptive clinical trials: a primer for clinicians. BMJ. 2018;360:k698.

- 47.Weinfurt KP, Hernandez AF, Coronado GD, et al. Pragmatic clinical trials embedded in healthcare systems: generalizable lessons from the NIH collaboratory. BMC Med Res Methodol. 2017;17:144.
