Abstract

Objective

Telephone-based cognitive assessment (TBCA) has long been studied but is less widely adopted in routine neuropsychological practice. Increased interest in remote neuropsychological assessment techniques in the face of the coronavirus disease 2019 (COVID-19) pandemic warrants an updated review of relevant remote assessment literature. While recent reviews of videoconference-based neuropsychological applications have been published, no updated compilation of empirical TBCA research has been completed. Therefore, this scoping review offers relevant empirical research to inform clinical decision-making specific to teleneuropsychology.

Method

Peer-reviewed studies addressing TBCA were included. Broad search terms were related to telephone, cognitive, or neuropsychological assessment and screening. After systematic searching of the PubMed and EBSCO databases, 139 relevant articles were retained.

Results

In total, 17 unique cognitive screening measures, 20 cognitive batteries, and 6 single-task measures were identified as being developed or adapted specifically for telephone administration. Tables summarizing the identified cognitive assessments, information on diagnostic accuracy, and comparisons to face-to-face cognitive assessment are included in supplementary materials.

Conclusions

Overall, the literature suggests that TBCA is a viable modality for identifying cognitive impairment in various populations. However, the mode of assessment selected clinically should reflect an understanding of the purpose, evidence, and limitations for various tests and populations. Most identified measures were developed for research application to support gross cognitive characterization and to help determine when more comprehensive testing was needed. While TBCA is not meant to replace gold-standard, face-to-face evaluation, if appropriately utilized, it can expand the scope of practice, particularly when barriers to standard neuropsychological assessment occur.

Keywords: Telephone, Cognitive assessment, Telehealth, Neuropsychological assessment, Teleneuropsychology

Introduction

Remote neuropsychological assessment via telehealth (videoconference or telephone, i.e., teleneuropsychology) is of interest to both clinical and research neuropsychologists given the barriers sometimes encountered by patients and research participants (e.g., time, transportation, funds) to in-person visits, with utility dating back to the 1980s. Videoconference-based teleneuropsychology has garnered more attention relative to telephone audio-only teleneuropsychology and has some obvious benefits inherent to the video modality; however, many patients (as well as research participants) do not have access to the required equipment (e.g., high-speed internet, videoconference capable devices). The American Psychological Association (APA, 2017) Ethical Principle of Justice states, “Psychologists recognize that fairness and justice entitle all persons to have access to and benefit from the contributions of psychology and to equal quality in the processes, procedures, and services being conducted by psychologists” (Principle D: Justice). As such, a case can be made for adopting telephone-based teleneuropsychology, to which most people have easy access, when needed. As with videoconference-based testing, adapting the cognitive assessment portion of the neuropsychological evaluation for telephone administration is challenging; however, the existing literature on telephone-based cognitive assessment (TBCA) supports its feasibility and utility.

TBCA has perhaps been most frequently used in research settings (Russo, 2018) as a means to improve access to geographically dispersed and/or underserved populations (Castanho et al., 2014; Kliegel, Martin, & Jäger, 2007; Marceaux et al., 2019), increase cost-effectiveness (Castanho et al., 2014; Rabin et al., 2007), and reduce attrition (Beeri, Werner, Davidson, Schmidler, & Silverman, 2003; Castanho et al., 2014). It has also been suggested that such assessments are well accepted by older/elder populations and may be more forgiving in that they limit physical or motor requirements (Castanho et al., 2014; Kliegel et al., 2007).

Recent video-based teleneuropsychology meta-analyses primarily provide support for the use of neuropsychological testing that relies largely upon verbal responses (e.g., see the reviews by Brearly et al., 2017 and Marra, Hamlet, Bauer, & Bowers, 2020), which is the sole response type typically collected from TBCA. Furthermore, TBCA may circumvent some challenges ascribed to videoconference-based neuropsychological assessments, including disruptions in communication (e.g., breaks in Wi-Fi connection, network speed limitations, loss or delay of sound) and other unpredictable complications (e.g., display or camera quality, limitations of camera angle, display size, sound/microphone quality, adequacy of computer/transmission device, familiarity with videoconference technology; Cernich, Brennan, Barker, & Bleiberg, 2007; Grosch, Gottlieb, & Cullum, 2011).

For both video-based teleneuropsychology and TBCA, familiar concerns regarding standardization, available normative data, and adequate cohort comparisons have remained limiting factors expressed by some neuropsychologists (Brearly et al., 2017; Marceaux et al., 2019) when considering implementing teleneuropsychology. However, within the past three decades, investigations using TBCAs have regularly been published, often assessing specific populations such as those with mild cognitive impairment (MCI) and dementia (e.g., Manly et al., 2011; Seo et al., 2011) and/or evaluating telephone-based measures/test batteries (e.g., the Mental Alternation Test [MAT]; McComb et al., 2010). The last reviews of TBCA measures were published in 2013 and 2014, and two of these limited their scope to measures designed to screen for MCI and dementia in older populations (Castanho et al., 2014; Kwan & Lai, 2013). Both reviews noted the demonstrated utility of TBCA for detecting cognitive impairment in certain clinical populations but called for further research to better characterize existing measures and develop new measures with enhanced psychometric properties. Another review (Herr & Ankri, 2013) limited its scope to tests used to recruit or screen research participants and noted that TBCA may be especially appropriate in epidemiological studies given the benefit of reduced selection bias. However, the evidence for using TBCA to recruit patients for clinical trials was mixed, particularly for identifying patients with milder cognitive impairment. To date, no reviews to our knowledge have included TBCA measures developed for populations other than aging and dementia.

Overall, the use of teleneuropsychology has remained somewhat rare across available surveyed neuropsychologists (Rabin, Barr, & Burton, 2005; Russo, 2018), and reimbursement for such services has been limited (although this has improved with recent advocacy efforts; APA, 2020). However, with recent pandemic-based stay-at-home orders (for patients and providers) and uncertainty about safety of in-person contacts, an increasing number of neuropsychologists have expressed an interest in teleneuropsychology (Multiple authors, email communications sent over NPSYCH listserv, 2020). Thus, the need for an updated, broader review of TBCA has emerged. The goals of this paper are to offer an updated and systematic review of empirical research on TBCA, to present a useful and practical list of available TBCA measures and associated psychometric properties, and to assist clinicians and researchers in understanding their benefits and limitations to inform clinical and research decision-making.

Methods

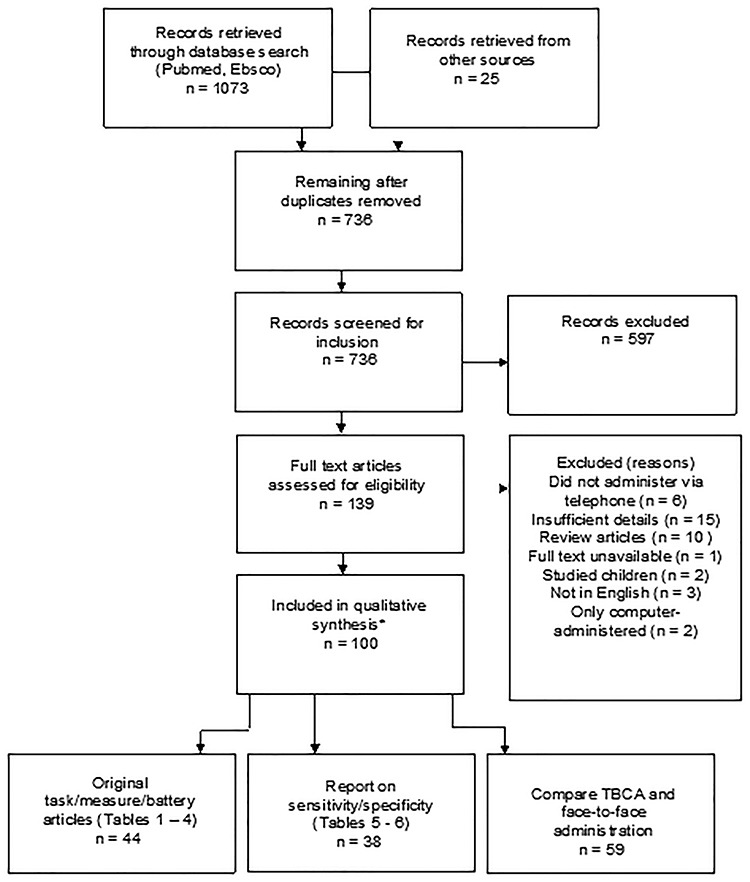

PubMed and EBSCO databases were queried in April of 2020. The search strategy utilized a combination of the search terms “telephone,” “cognit*,” “neuropsy*,” “cognit* status,” “~assessment,” and “screening,” using Boolean operators (AND, OR, NOT), excluding all therapy-based studies and using filters for English language, adult studies, and human studies. Queries returned a total of 1,073 articles. Reference sections of selected articles were reviewed to glean additional papers our search may have missed. Inclusion criteria were as follows: (a) empirical studies of any design published in a peer-reviewed format, (b) utilizing a telephone-based evaluation of cognitive functioning, and (c) validating or otherwise examining psychometric properties of a telephone-based assessment. Authors (ARC, HF, and JRL) independently reviewed titles and abstracts of articles returned to screen for eligibility based on the inclusion criteria stated earlier. Ultimately, 139 articles were retained for review. Full texts of eligible articles were retrieved via electronic library resources and rescreened by ARC, JRL, and HF (see Fig. 1).

Fig. 1.

Search strategy and results (date of search: April 2020). TBCA, telephone-based cognitive assessment.

Data Extraction

For Tables 1–4 (Supplementary material online), two researchers (HF and JRL) independently extracted data on study characteristics, followed by a cross-check to ensure accuracy. For Tables 5–7 (Supplementary material online), four researchers (CP, BM, HF, and ARC) extracted study data, also followed by a cross-check for accuracy. The following study information was extracted: test/battery name, article authors, year published, primary aim(s), domains assessed, administration properties (items, time), populations included, languages, validation information, and scoring/interpretive information.

Synthesis of Results

Due to the heterogeneity in study designs, populations, statistical analysis, and data reported, a quantitative synthesis through meta-analysis was not conducted. Therefore, we present results in a series of tables (see Supplementary material online), summarizing key measures in narrative format and highlighting applicability to different referral questions and populations. An exhaustive list of all TBCA screening measures and batteries with descriptive information can be found in Supplementary material online, Tables 1–4. We will first review the most common measures used for TBCA, followed by a discussion of standard neuropsychological tests used in TBCA and computer-assisted TBCA. In general, our goal for these summaries is to provide the reader with narrative detail on the instrument’s development, purpose, and evidence for use. All studies reviewed here conducted TBCA with participants off-site, presumably in their primary residence (not all studies gathered information on the exact location of the participant at the time of testing). Information found on diagnostic sensitivity/specificity is given for all studies reporting this information and is included in Supplementary material online, Tables 5 and 6. Comparisons of TBCA measures with in-person administration of neuropsychological tests are presented in Supplementary material online, Table 7, to inform the reader of TBCA's concurrent validity with routine neuropsychological assessment.

Results

Screening Measures Developed for Telephone Use

Telephone Interview for Cognitive Status (TICS) and modified TICS

The Telephone Interview for Cognitive Status (TICS), developed by Brandt, Spencer, and Folstein (1988), was one of the earliest cognitive screening tests for dementia to be developed for administration via telephone to enhance patient follow-up and permit large-scale field studies of cognitive impairment. The TICS instrument consists of 11 items with a maximum of 41 points assessing the domains of orientation, attention/executive functioning (backwards counting, serial 7s, opposites), immediate memory, and language (sentence repetition, auditory naming, following directions). In the original study of 100 patients with Alzheimer’s disease (AD; M age = 71.4, M education = 13.1 years) and 33 normal controls (M age = 67.1, M education = 15.0 years), the TICS demonstrated 94% sensitivity and 100% specificity (100% positive predictive value [PPV] and 97% negative predictive value [NPV]) using a cutoff score of ≤30. The TICS total score was also highly correlated with Mini-Mental State Examination (MMSE; Folstein, Folstein, & McHugh, 1975) scores (r = .94) and demonstrated good test-retest reliability (r = .97).

The TICS (Brandt et al., 1988) was subsequently modified (TICS-M; Welsh, Breitner, & Magruder-Habib, 1993) to add a measure of delayed verbal free recall to the existing items to increase its sensitivity to MCI and AD and is scored out of 50 points. The TICS-M is among the most widely used TBCA tools (Castanho et al., 2014) and is often used in epidemiological research (Herr & Ankri, 2013). Diagnostic sensitivity/specificity of the TICS-M has been investigated in multiple studies across various populations (see Supplementary material online, Table 5). It also correlates well with other cognitive screening measures (e.g., the MMSE; de Jager, Budge, & Clarke, 2003) and comprehensive neuropsychological assessments (Crooks, Petitti, Robins, & Buckwalter, 2006; Duff, Beglinger, & Adams, 2009; see Supplementary material online, Table 7). In addition, there is some evidence that the TICS-M is less constrained by ceiling effects than similar standard screening measures like the MMSE (de Jager et al., 2003).

Both the TICS and TICS-M have been translated and used in multiple languages including Korean (Seo et al., 2011), German (Debling, Amelang, Hasselback, & Sturmer, 2005), Hebrew (Beeri et al., 2003), Italian (Dal Forno et al., 2006), Japanese (Konagaya et al., 2007), Finnish (Järvenpää et al., 2002), French (Vercambre et al., 2010), Portuguese (Castanho et al., 2016), and Spanish (Gude Ruiz, Calvo Mauri, & Carrasco López, 1994). Despite development for use in dementia populations, several studies have reported on the utility of the TICS and TICS-M for detecting cognitive impairment in other populations including multiple sclerosis (George et al., 2016) and stroke (Barber & Stott, 2004; Desmond, Tatemichi, & Hanzawa, 1994). Cognitive screening for enrollment in clinical trials and epidemiologic research are other common applications of these measures (Herr & Ankri, 2013). Dennett, Tometich, and Duff (2013) published demographic corrections for the TICS-M given prior evidence of the effects of age and education on performance (e.g., de Jager et al., 2003; Duff et al., 2009). Duff, Shprecher, Litvan, Gerstenecker, and Mast (2014) also published regression-based corrections with a larger sample, additionally finding an effect for gender. More recently, Lindgren, Rinne, Palviainen, Kaprio, and Vuoksimaa (2019) compared the utility of three TICS-M versions and scoring methods (50-, 27-, and 35-point versions; Crimmins, Kim, Langa, & Weir, 2011; Knopman et al., 2010; Ofstedal, Fisher, & Herzog, 2005) with and without education-adjusted scoring to classify dementia and MCI in a large Finnish sample (n = 1,772, M age = 74, M education = 9 years). They demonstrated that older age, greater depression symptoms, male sex, positive APOE e4 status, and especially lower education were associated with poorer TICS-M performance. Specifically, a floor effect was noted for word list delayed recall among individuals with low education.
Scoring methods adjusting for education (Knopman et al., 2010) resulted in more accurate prevalence estimates of MCI as well as higher associations with APOE e4 status in their sample, lending support for the use of education-adjusted scores when the goal is to detect MCI (Lindgren et al., 2019). Also, Bentvelzen et al. (2019) published normative data for the TICS-M and developed an online calculator tool to compute regression-based normative data and reliable change statistics. Manly and colleagues (2011) demonstrated the diagnostic utility of the TICS in a multi-ethnic population (n = 377) for distinguishing normal cognition (M age = 80, M education = 11 years) and MCI (M age = 82, M education = 10 years) from dementia (M age = 86, M education = 6 years), finding comparable validity among non-Hispanic Whites (31%), non-Hispanic Blacks (35%), and Caribbean Hispanic participants (34% of the sample; 88% sensitivity and 87% specificity for the whole sample). Similarly, several studies have reported that the TICS-M has adequate sensitivity/specificity for distinguishing normal cognition and MCI, as well as MCI and dementia (Beeri et al., 2003; Cook, Marsiske, & McCoy, 2009; Duff et al., 2009; Lines, McCarroll, Lipton, & Block, 2003). Others report that while the TICS-M does well in distinguishing dementia from normal cognition, it is less adept at distinguishing MCI from normal cognition or dementia (e.g., Knopman et al., 2010; Yaari, Fleisher, Gamst, Bagwell, & Thal, 2006). Regarding limitations, there is a dearth of research providing evidence of the TICS or TICS-M’s longitudinal utility (sensitivity to change over time), and at least one group has reported that they may not be suitable for use with the oldest old (e.g., 85–90; Baker et al., 2013) due to sensory limitations causing confusion and undue anxiety. 
Overall, the TICS and TICS-M are arguably the most well-characterized TBCA measures, but they remain vulnerable to the same limitations as typical screening tests as well as the limitations of telephone administration (e.g., hearing loss).

Telephone MMSE

The MMSE (Folstein et al., 1975) has long been described as the most widely employed screening instrument for cognitive impairment (Tombaugh & McIntyre, 1992), but with limitations including ceiling effects and limited sensitivity to mild degrees of cognitive impairment (Norton et al., 1999) and specific cognitive impairments. The MMSE is composed of a series of brief tasks assessing aspects of orientation, attention, calculation, language, verbal recall, and visuospatial skills and is scored out of 30 points.

While different adaptations of telephone-administered MMSEs have been proposed (e.g., Teng & Chui, 1987; Tombaugh & McIntyre, 1992), the Adult Lifestyles and Function Interview-MMSE (ALFI-MMSE) first demonstrated the construct validity of a telephone version of the MMSE (Roccaforte, Burke, Bayer, & Wengel, 1992). The first 10 items are orientation questions, followed by screening tasks of registration (repetition of three words), attention and calculation (serial 7s), and recall (delayed recall of the three words). Departures from the original MMSE include the language section that requires repetition of a phrase and naming one item (i.e., “Tell me, what is the thing called that you are speaking into?”). A second naming item, three-step commands, sentence writing and comprehension, and copy of intersecting pentagons were excluded. The ALFI-MMSE has 22 points compared to the original MMSE’s 30 points. In a sample of 100 elderly subjects (95% White, M age = 79, M education = 11.5 years) with normal cognition or cognitive impairment, the phone and face-to-face versions of the MMSE correlated well across subjects (r = .85, p < .001). Based on traditional proportionate cutoff scores for impairment (i.e., 24 for MMSE, 17 for ALFI-MMSE), when cognitive impairment (vs. no impairment) was determined by the brief neuropsychiatric screening test (a brief battery of neuropsychological tests developed to be sensitive to mild AD; Storandt, Botwinick, Danziger, Berg, & Hughes, 1984), the MMSE had a sensitivity of 68% and specificity of 100%, whereas the ALFI-MMSE values were 67% and 100%, respectively (Roccaforte et al., 1992).
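One way to read the "proportionate" cutoff above is as the conventional MMSE cutoff rescaled to the shorter instrument's maximum score. The Python sketch below illustrates that reading only; the function name is hypothetical, and rounding down is an assumption chosen because it reproduces the ALFI-MMSE cutoff of 17 reported by Roccaforte et al. (1992).

```python
def proportional_cutoff(reference_cutoff: int, reference_max: int, target_max: int) -> int:
    """Rescale a cutoff to a test with a different maximum score.

    Assumption: the result is rounded down (integer division), which
    matches the 24/30 -> 17/22 pairing described in the text.
    """
    return (reference_cutoff * target_max) // reference_max


# MMSE cutoff of 24 out of 30, rescaled to the 22-point ALFI-MMSE.
alfi_cutoff = proportional_cutoff(24, 30, 22)
print(alfi_cutoff)
```

The unrounded value is 17.6, so the choice of rounding direction matters; a published cutoff should always be taken from the validation study itself rather than derived this way.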

Another telephone-based variant of the MMSE, the telephone-administered MMSE (TMMSE), was adapted from the ALFI-MMSE (Newkirk et al., 2004). The most noteworthy difference between the 22-point ALFI-MMSE and the 26-point TMMSE is the addition of a three-step command: “Say hello, tap the mouthpiece of the phone three times, then say I’m back.” This adaptation also contains an additional question that asks the examinee to give the interviewer a phone number where they can usually be reached. Total score correlations between the MMSE and TMMSE were strong in a sample of 53 participants (87% White, M age = 77, 72% with >12 years of education) with AD (r = 0.88, p ≤ .001; Newkirk et al., 2004).

The telephone-modified MMSE (T-3MS), a telephone adaptation to the modified MMSE (3MS; Teng & Chui, 1987), was published in 1999 (Norton et al., 1999). The 3MS was developed to assess a wider range of cognitive abilities, expanding the scoring from 30 to 100 points, and has demonstrated superior reliability and sensitivity in detecting dementia compared to the MMSE (e.g., Bravo & Hébert, 1997; Grace et al., 1995; McDowell, Kristjansson, Hill, & Herbert, 1997). In developing the telephone version, nine 3MS items were removed (substituting questions intended to assess the same basic domains of cognitive function); for example, written command and written sentence were translated to aural command and spoken sentence. The 3MS’s three-step command was also translated into a three-step procedure (i.e., “Please tap three times on the part of the phone you speak into, press/dial the number 1, and then say I’m done”; Norton et al., 1999, p. 272). Copying of the MMSE intersecting pentagons was changed to questions about positioning clock hands at specified times of day (i.e., individuals were instructed to picture a clock and answer questions about where hands would be pointing at different times). Comparing the 3MS and T-3MS, the correlations from 263 community-dwelling adults in southern Idaho (M age = 76, M education = 13 years) over five age groups were r = 0.54 (ages 65–69), r = 0.69 (ages 70–74), r = 0.69 (ages 75–79), r = 0.89 (ages 80–84), and r = 0.79 (ages 85+; Norton et al., 1999). More recently, an Italian telephone version of the MMSE (Itel-MMSE) was published (Vanacore et al., 2006). The Itel-MMSE was simply described as being composed of seven items that assess orientation, language comprehension and expression, attention, and memory (with a score ranging from 0 to 22). 
The Itel-MMSE was only weakly, but significantly, correlated with the standard MMSE total score (r = 0.26, p = .006) in a sample of 107 cognitively normal (M age = 64, M education = 11 years) participants from the National Twin Registry Study (Vanacore et al., 2006). See Supplementary material online, Tables 6 and 7 for more information on the psychometric properties of various telephone iterations of the MMSE in different populations. Our search revealed that telephone versions of the MMSE (ALFI-MMSE, TMMSE, MMSET, and T-3MS) have only been used in aging populations, with the majority of studies (with the exception of the Itel-MMSE and the Braztel-MMSE; Camozzato, Kochhann, Godinho, Costa, & Chaves, 2011) being conducted with monolingual English-speaking, educated, White participants; these measures thus have limited evidence for use in younger and more diverse populations.

Telephone Montreal Cognitive Assessment

The original Montreal Cognitive Assessment (MoCA; Nasreddine et al., 2005) is a cognitive screening tool used to assess eight cognitive domains (short-term memory, visuospatial abilities, executive functioning, attention, concentration, working memory, language, and orientation). The MoCA was developed to overcome limitations of the MMSE in detecting milder forms of cognitive impairment (Nasreddine et al., 2005). The telephone Montreal Cognitive Assessment (T-MoCA; Pendlebury et al., 2013) was developed to improve the efficiency of screening patients for research purposes. For telephone administration, all MoCA items requiring pencil and paper or a visual stimulus were removed. One modification was applied to the MoCA sustained attention task, in which subjects tap on the desk during face-to-face testing; in the telephone version, subjects were instead instructed to tap the side of the telephone with a pencil. The additional point for low education that was originally suggested by the developers was not added to the original T-MoCA (Pendlebury et al., 2013), but it has been added in subsequent T-MoCA studies (Zietemann et al., 2017). As such, the maximum score is 22 in the original version and 23 when the education point is added.

In patients with a history of stroke or transient ischemic attack (TIA; n = 91, M age = 73, 63% with <12 years of education), a T-MoCA cutoff of 18–19 has been found optimal for discrimination of normal versus impaired cognition (Pendlebury et al., 2013). Reliability of diagnosis for MCI was good, with an area under the curve (AUC) value of 0.75. Reliability of diagnosis for MCI was reported to be greater (AUC = 0.85) when based on multi-domain MCI (vs. single domain or amnestic MCI; Pendlebury et al., 2013). Zietemann and colleagues (2017) reported similar findings in their sample (n = 105, M age = 69, 38% with <12 years of education) of German stroke survivors, reporting an optimal cutoff score of 19 for MCI diagnosis.
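For readers less familiar with the AUC statistic cited above, it can be read as the probability that a randomly chosen impaired participant scores lower than a randomly chosen unimpaired participant, with ties counted as half. The Python sketch below computes it that way. The scores are hypothetical and are not taken from any study reviewed here, and the function name is illustrative only.

```python
from itertools import product


def auc_lower_is_impaired(impaired_scores, normal_scores):
    """AUC for a test on which lower scores indicate impairment.

    Counts the share of (impaired, normal) pairs in which the normal
    participant scores higher; tied pairs count as half a win.
    """
    wins = 0.0
    for impaired, normal in product(impaired_scores, normal_scores):
        if normal > impaired:
            wins += 1.0
        elif normal == impaired:
            wins += 0.5
    return wins / (len(impaired_scores) * len(normal_scores))


# Hypothetical screening totals, for illustration only.
impaired = [15, 17, 18, 20]
normal = [18, 19, 21, 22]
auc = auc_lower_is_impaired(impaired, normal)
print(f"AUC = {auc:.2f}")
```

An AUC of 0.5 corresponds to chance-level discrimination and 1.0 to perfect separation of the two groups.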

Pendlebury and colleagues (2013) and Wong and colleagues (2015) also described a 5-min protocol for administering the MoCA via telephone utilizing only the word learning and recall trials, orientation items, and verbal fluency items. In Wong et al.’s (2015) study, participants (N = 104) were adults hospitalized in Hong Kong for ischemic stroke or TIA and classified as normal (n = 53, M age = 69, M education = 6.3 years) or cognitively impaired (n = 51, M age = 71, M education = 6 years). They were administered the MoCA on initial presentation, agreed to be contacted later (M = 39.4 months), and were then administered the 5-min telephone MoCA (assessments were in Cantonese). A strong correlation was observed between modalities (r = 0.87), and the AUC for detecting cognitive impairment (based on in-person Clinical Dementia Rating [CDR] scale score ≥0.5) for the MoCA 5-min protocol was 0.78.

Overall, the telephone versions of the MoCA seem to be feasible for telephone screening of cognition. However, limitations exist in assessing visuospatial functioning, executive functioning, and complex language compared with face-to-face MoCA or gold-standard neuropsychological assessment. Furthermore, one study reported that participants performed significantly worse on the T-MoCA’s repetition, abstraction, and verbal fluency items (p < .02) than with traditional MoCA administration (Pendlebury et al., 2013). Finally, like other measures reviewed herein, evidence of the T-MoCA’s applicability to educationally, ethnically, and linguistically diverse populations is lacking.

TELE

A telephone-based interview that includes cognitive tasks, referred to as the TELE, was initially developed as a screening instrument for a genetic study of dementing illnesses (Gatz et al., 1995). The instrument is composed primarily of items from other cognitive and mental status screening tests including the Southern California Alzheimer’s Disease Research Center (SCADRC) iterations of the Mental Status Questionnaire (MSQ; Kahn, Pollack, & Goldfarb, 1961; Zarit, 1980) and MMSE, the CDR, the Short Portable Mental Status Questionnaire (Pfeiffer, 1975), and the Memory-Information-Concentration test (Blessed, Tomlinson, & Roth, 1968). The chosen subtests explore orientation, attention, short-term memory, verbal abstraction, and recognition. In addition, the protocol includes interview questions regarding memory concerns, functional status, and mood screening questions from the Diagnostic Interview Schedule (Regier et al., 1984). There are two possible scoring methods: a 20-point scoring system, and an a priori algorithm based on number and pattern of errors (rather than a total score). Gatz and colleagues (1995) investigated the TELE’s psychometric properties among a registry sample of 15 subjects with AD (M age = 76, M education = 14 years) and 22 healthy controls (M age = 76, M education = 15 years). Based on the 20-point scoring system, scores correlated strongly with the SCADRC MSQ (r = 0.93). The algorithm showed 100% sensitivity and 91% specificity in diagnosing AD versus normal controls in this small pilot sample.

The TELE has also been investigated among a population-based sample of older adults from the Swedish Twin Study (n = 269; Gatz et al., 2002). An optimal cutoff score of 15/16 of 20 items revealed a sensitivity of 86% and a specificity of 90% (PPV 43%) in differentiating between cognitively intact individuals (n = 247, M age = 73) and those with various forms of dementia (n = 22, M age = 82). The algorithm scoring method described earlier (see Gatz et al., 1995 for further details) yielded a sensitivity of 95% and a specificity of 52% (PPV 46%). Interestingly, longitudinal follow-ups revealed that those identified as a false positive using the cut score (15/16) were significantly more likely to develop dementia than those identified as true negatives (p = .02). This suggests some utility in identifying cases of mild cognitive decline that may be at greater risk of developing dementia. The TELE has also been translated and adapted for use in Finnish populations (Järvenpää et al., 2002), with a cutoff score of 17 (sensitivity 90%, specificity 88.5%) identified as optimal in a sample of AD (n = 30; M age = 70, 53% with <7 years of education) and healthy control subjects (n = 26, M age = 73, 69% with < 7 years of education). Thus, as with many cognitive screening tests, the evidence available suggests that the TELE is perhaps most useful for discriminating normal cognition from gross dementia, although published data on the TELE are limited.
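The modest PPV reported for the 15/16 cut score, despite high sensitivity and specificity, is what one would expect when few participants in the sample have dementia (22 of 269). The Python sketch below applies Bayes' theorem to the reported sensitivity and specificity. It assumes the sample proportion can stand in for prevalence, and the function name and the 30% comparison prevalence are hypothetical, included only to show how PPV shifts with base rate.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """PPV from sensitivity, specificity, and prevalence (Bayes' theorem)."""
    true_positive_rate = sensitivity * prevalence
    false_positive_rate = (1.0 - specificity) * (1.0 - prevalence)
    return true_positive_rate / (true_positive_rate + false_positive_rate)


# Values reported for the TELE 15/16 cut score (Gatz et al., 2002).
sample_prevalence = 22 / 269  # assumption: sample proportion used as prevalence
ppv_sample = positive_predictive_value(0.86, 0.90, sample_prevalence)

# Hypothetical higher-prevalence setting (e.g., a memory clinic), for contrast.
ppv_high_prevalence = positive_predictive_value(0.86, 0.90, 0.30)

print(f"PPV at sample prevalence: {ppv_sample:.0%}")
print(f"PPV at 30% prevalence:    {ppv_high_prevalence:.0%}")
```

Under these assumptions the first value rounds to the 43% reported in the study, which suggests that the same cut score would yield noticeably fewer false alarms per positive screen in settings where dementia is more common.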

Additional screening measures

While the measures reviewed earlier appear to be the most well studied for TBCA, our search yielded several additional options for cognitive screening via telephone (see Supplementary material online, Table 1). Several of these measures were derived from the Blessed Information-Memory-Concentration (IMC) test or the Orientation Memory Concentration (OMC) test (Blessed et al., 1968; Katzman et al., 1983). The former is a 37-point screening instrument assessing orientation, personal memory, remote memory, and attention (Blessed et al., 1968). The Blessed OMC is a shortened version of the IMC, consisting of only six items assessing orientation, attention/concentration (count backward from 20, say months in reverse order), and address repetition and recall (Katzman et al., 1983). Kawas, Karagiozis, Resau, Corrada, and Brookmeyer (1995) originally adapted the Blessed OMC for telephone administration, noting excellent agreement with in-person administration (see Supplementary material online, Table 7), though they found that orientation to place items were less relevant to telephone administration, producing significantly higher correct rates than in-person clinic assessment. Given these findings, Zhou, Zhang, and Mundt (2004) adapted the place orientation items to person orientation items and still achieved excellent correlation with in-person administration (see Supplementary material online, Table 7). Go and colleagues (1997) incorporated items from the Blessed OMC into their Structured Telephone Interview for Dementia Assessment, which also includes items from the MMSE and a structured interview for patient history. Finally, in a small study of older adults (n = 12), Dellasega, Lacko, Singer, and Salerno (2001) found excellent concordance of the TBCA and in-person Blessed OMC (Supplementary material online, Table 7).

Lipton and colleagues (2003) adapted the Memory Impairment Screen (MIS; Buschke et al., 1999), a 4-min cognitive screening measure consisting of a 4-item list with free and cued recall conditions, for telephone administration (MIS-T). In their sample (N = 300), the MIS-T had comparable diagnostic accuracy (AUC = 0.92) to the in-person MIS (AUC = 0.93) for detecting dementia and outperformed the TICS, despite being only 4 min (vs. 10 min for the TICS; see Supplementary material online, Table 5 for more study information). Lipton and colleagues (2003) also present normative data for the MIS-T, TICS, and Category Fluency-Telephone. Rabin and colleagues (2007) described the development of a structured interview, the Memory and Aging Telephone Screen, for a longitudinal study of early dementia. The measure mostly consists of structured interview questions but also includes a 10-item list-learning test (with alternate forms), with three learning trials and delayed free recall and yes/no recognition trials.

The six-item screener, a brief measure consisting of three-item recall and three-item temporal orientation (Callahan, Unverzagt, Hui, Perkins, & Hendrie, 2002), was adapted for TBCA by Chen and colleagues (2015) for use with stroke patients in China in tandem with the National Institute of Neurological Disorders and Stroke and Canadian Stroke Network 5-min protocol (Hachinski et al., 2006). It is also worth noting that, as with other cognitive screening measures, abbreviated versions of the MoCA have been developed that can be administered via telephone. One example is the empirically derived short-form MoCA (SFMoCA; Horton et al., 2015), which is composed of the orientation, serial subtraction, and delayed recall of the word list items. Total SFMoCA scores were found to be comparable to the total MoCA and superior to the MMSE in correctly classifying normal control, MCI, and AD samples in receiver operating characteristic analyses, suggesting that a combination of these brief tasks could serve as a TBCA measure, although this has not yet been explored.

Batteries Developed for Telephone Use

Minnesota Cognitive Acuity Screen and modified MCAS

Knopman, Knudson, Yoes, and Weiss (2000) developed the Minnesota Cognitive Acuity Screen (MCAS) as a telephone cognitive screening measure to distinguish between healthy older adults and those with potential cognitive impairment at a lower cost than face-to-face cognitive screening. The MCAS instrument includes nine subtests: orientation, attention, delayed word recall, comprehension, repetition, naming, computation, judgment, and verbal fluency. According to Knopman and colleagues (2000), test administration rarely exceeded 20 min. In the development study, the MCAS demonstrated 97.5% sensitivity and 98.5% specificity (88% PPV and 99% NPV) using a post hoc optimal cut score (not defined in the study) in a sample of 228 patients with dementia (n = 99; M age = 82, M education = 12 years) and healthy older adults (n = 129, M age = 74 years, M education = 14 years; Knopman et al., 2000). The group with dementia was assessed in their respective assisted living facility rooms with the examiner in a different room within the facility, whereas the control group was tested remotely at home. The MCAS was also found to distinguish those with MCI (n = 100; M age = 73, M education = 13, 98% White) from healthy controls (n = 50, M age = 70, M education = 15, 100% White) with a sensitivity of 86% and specificity of 78%, and the MCAS distinguished those with MCI from those with a clinical diagnosis of AD (n = 50, M age = 74, M education = 13, 94% White) with a sensitivity of 86% and specificity of 77%. Cut scores of <42.5 for AD patients and >52.5 for healthy controls were recommended (Tremont et al., 2011). A cutoff score of 47.5 resulted in a reported 96% sensitivity and specificity when distinguishing between healthy controls and AD patients when MCI was not considered. A cut score of 43 was associated with the emergence of objective activities of daily living (ADL) impairment indicated by the CDR (rating of 0.5; Springate, Tremont, & Ott, 2012).
The MCAS total score was identified as a significant predictor of conversion from amnestic MCI to dementia over 2.5–3 years (Tremont et al., 2016) as well as homecare/institutionalization over 8 years (Margolis, Papandonatos, Tremont, & Ott, 2018).
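Because PPV and NPV depend on how common impairment is in the group being screened, the same sensitivity and specificity can yield quite different predictive values in different settings. The sketch below applies Bayes' rule to the sensitivity and specificity reported for the MCAS development study. The function name is hypothetical, and the base rates in the loop are illustrative assumptions, not values taken from Knopman and colleagues (2000).

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test applied at a given base rate (Bayes' rule)."""
    for name, value in (("sensitivity", sensitivity), ("specificity", specificity)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    if not 0.0 < prevalence < 1.0:
        raise ValueError("prevalence must be strictly between 0 and 1")

    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    true_neg = specificity * (1.0 - prevalence)
    false_neg = (1.0 - sensitivity) * prevalence

    if true_pos + false_pos == 0.0 or true_neg + false_neg == 0.0:
        raise ValueError("predictive values are undefined for these inputs")

    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Sensitivity and specificity as reported for the MCAS development sample;
# the base rates below are hypothetical and for illustration only.
for base_rate in (0.05, 0.10, 0.30):
    ppv, npv = predictive_values(0.975, 0.985, base_rate)
    print(f"base rate {base_rate:.2f}: PPV = {ppv:.3f}, NPV = {npv:.3f}")
```

In general, PPV falls as the base rate of impairment falls, which is one reason a screening result obtained in a low-prevalence community sample warrants more caution than the same result in a memory clinic.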

The MCAS was recently modified to add learning and recognition components to the delayed word recall subtest (MCAS-M; Pillemer, Papandonatos, Crook, Ott, & Tremont, 2018). This modification resulted in similar sensitivity but improved specificity for amnestic MCI (97% for both) when compared to the MCAS (97% and 87%, respectively). Overall, the MCAS and MCAS-M present with similar limitations to other screening and telephone-based assessments (e.g., distractions/hearing loss). Additionally, most studies on the MCAS were conducted with an overwhelmingly White population, so evidence for applicability to wider populations is lacking. Limited evidence provides some indication that the MCAS and MCAS-M may be appropriate for screening for MCI.

Cognitive Telephone Screening Instrument

Per Kliegel and colleagues (2007), the Cognitive Telephone Screening Instrument (COGTEL) was developed to help overcome ceiling effects noted on other telephone screening measures for use in research and clinical work. The COGTEL consists of six subtests primarily assessing attention/working memory, long-term and prospective memory, and aspects of executive functioning. In the original validation study by Kliegel and colleagues (2007), the measure was administered to 81 younger (M age = 24.6, M education = 11 years) and 83 older (M age = 67, M education = 10 years) adults residing in Western Europe both over the telephone and again in person. Results of their analyses revealed that administration modality did not significantly affect participant performance in either age group, nor did it affect the factor structure of the COGTEL (as demonstrated by confirmatory factor analysis). Additionally, the authors noted that COGTEL total scores were roughly normally distributed, limiting ceiling effects (Kliegel et al., 2007).

In a larger cohort of aging German individuals (N = 1,697, M age = 74), Breitling and colleagues (2010) reported that the COGTEL was easily integrated in their epidemiological study without notable skew or ceiling effects. Overall, while the studies reviewed here report favorable characteristics such as limited ceiling and practice effects, empirical support for the COGTEL is limited to date by lack of published studies on this measure. Further research is warranted, particularly because no studies report on the COGTEL’s diagnostic accuracy and there is limited support for its use across diverse populations.

Brief Test of Adult Cognition by Telephone

The Brief Test of Adult Cognition by Telephone (BTACT) is an approximately 20-min measure that assesses verbal episodic memory (15-item word list), working memory, verbal fluency, and aspects of executive functioning (Tun & Lachman, 2006). The original validation sample used by Tun and Lachman (2006) consisted of three age cohorts (<40, n = 17; 40–59, n = 19; and >60, n = 20; total sample M education = 16 years) from the Greater Boston Area that were administered an in-person battery of tests with “cognitive domains similar to those sampled in the BTACT,” then contacted 1 year later and administered the BTACT. Medium-to-large correlations were found between in-person and telephone-based assessments of vocabulary, episodic memory, and processing speed. They conducted an additional study on a convenience sample of 75 community-dwelling adults (aged 18–82) who were administered the BTACT both in person and by telephone. Correlations across test items ranged from 0.57 (immediate word recall) to 0.95 (backwards counting). Lachman, Agrigoroaei, Tun, and Weaver (2014) reported on the use of the BTACT in the Midlife in the United States (MIDUS) study (N = 4,268) and in a subsample who also received in-person cognitive assessment (n = 299, M age = 59, M education = 15 years). Overall, evidence for convergent validity of the BTACT was demonstrated by modest but significant correlations (rs ranged 0.42–0.54) of individual BTACT tests (backward digit span, category fluency, number series, and the 30 Seconds and Counting Task) with the corresponding domains on face-to-face testing. The reader is referred to the correlation matrix in the original article for further details of convergent and discriminant validity (Lachman et al., 2014, p. 411; Supplementary material online, Table 5).

Gavett, Crane, and Dams-O’Connor (2013) utilized factor analysis to demonstrate the potential utility for BTACT interpretation using a bi-factor model (n = 3,878, M age = 56 years). Confirmatory factor analyses indicated that the bi-factor scoring model (number series and memory) yielded slightly larger group differences in clinical samples with history of stroke compared to the total score. Gurnani, John, and Gavett (2015) published regression-based normative data from a sample of 3,096 healthy adults from the MIDUS-II Cognitive Project with demographic corrections (i.e., sex, education, occupation). Dams-O’Connor and colleagues (2018) used the BTACT to monitor cognitive functioning in English- or Spanish-speaking (further data not provided) individuals with moderate-to-severe traumatic brain injury (TBI) 1 and 2 years after inpatient rehabilitation (Year 1: n = 463 [59.2% White, M age = 48, 16.4% with >12 years of education]; Year 2: n = 386 [59.4% White, M age = 47, 14.4% with >12 years of education]). BTACT subtest completion rates (the rate at which participants successfully completed any given subtest) ranged from 60.5% to 68.7% in Year 1 and from 56.2% to 64.2% in Year 2 for the full sample, but the authors noted that participants were able to independently complete (i.e., without assistance from a caregiver) the BTACT in Year 1 and Year 2 only 60.3% and 59.8% of the time, respectively. They also reported that the BTACT overall score successfully detected cognitive improvement over 1 year (p = .02), but the only significant change was observed on the Reasoning subtest (p < .01).

In summary, like other TBCA measures, the BTACT’s validation information is limited. It does have some potential benefits over other measures reviewed here, given the inclusion of measures of processing speed, the availability of normative data, availability of Spanish forms, and demonstrated utility in a wider range of ages and populations.

Telephone-Administered Cognitive Test Battery

Prince and colleagues (1999) described the development and initial validation of the Telephone-Administered Cognitive Test Battery (TACT), originally intended for use in a large prospective twin study in the United Kingdom (Cognitive Health and Aging in Twins and Siblings study). The TACT is composed of the TICS-M, Wechsler Memory Scale-Revised (WMS-R; Wechsler, 1987) Logical Memory, Verbal Fluency (Goodglass & Kaplan, 1983), Wechsler Adult Intelligence Scale (WAIS; Wechsler, 1955) Similarities, and the New Adult Reading Test (Nelson & O’Connell, 1987; materials mailed to subjects before testing). Additionally, two tests of visuospatial orientation were adapted from Thurstone’s Primary Mental Abilities battery (Thurstone, 1938): the Hands test and the Object Rotation test. Finally, the Letter Series test, which measures inductive reasoning, was included in the battery. The latter three tests were mailed to the subjects in a packet beforehand, and subjects were instructed to complete them when prompted by the examiner in a certain amount of time (these packets were then sent back to researchers). Pilot data (n = 98, age range = 55–76 years) showed a completion rate of 89%, and factor analysis with varimax rotation showed a clear four-factor solution (crystallized intelligence, logical memory, word list memory, and tests requiring learning and applying rules and abstraction under time pressure). Test-retest reliability coefficients over 2 months for a subset of the sample (n = 21) were satisfactory for all tests (r range = 0.67–0.82) except for object rotation (r = 0.45). Thompson, Prince, Macdonald, and Sham (2001) further investigated reliability of the TACT based on telephone versus face-to-face administration in a small UK sample (n = 27, age range = 62–63 years). Telephone assessment always occurred first, and face-to-face assessment was between 2 and 10 months later.
Correlations were medium to large for all tests (intraclass correlation coefficient (ICC) range = 0.33–0.72) except object rotation (ICC = 0.12) and similarities (r = 0.12). Overall, the TACT captures a broad range of cognitive domains, including those less frequently assessed by telephone (i.e., visuospatial skills and processing speed). However, sending materials to patients/participants may hinder its utility for some settings and has implications for test security. Of note, no research conducted outside of the United Kingdom was found for this test and information on diagnostic utility is lacking.
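Agreement between telephone and face-to-face scores is often summarized with an ICC, as in the values above. The sketch below computes one common form, ICC(2,1) (two-way random effects, absolute agreement, single measurement), in plain Python. The choice of this ICC form is an assumption made for illustration, since the form used in the cited studies is not stated here, and the function name and toy scores are hypothetical.

```python
def icc_absolute_agreement(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    ratings: one row per participant, one column per administration mode.
    """
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two participants")
    k = len(ratings[0])
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("each participant needs the same number (>= 2) of scores")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares into participant, mode, and error parts.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when all scores are identical")
    return (ms_rows - ms_error) / denominator


# Hypothetical scores: [telephone, face-to-face] per participant.
# Every face-to-face score is exactly one point higher than the telephone score.
scores = [[1, 2], [2, 3], [3, 4]]
print(icc_absolute_agreement(scores))
```

In this toy example the two modes rank participants identically, so a Pearson correlation would be 1.0, yet the absolute-agreement ICC is lower because of the constant one-point offset between modes. This is why a systematic difference between telephone and in-person scores can matter even when correlations are high.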

Standard Face-to-Face Neuropsychological Tests Adapted for Telephone Administration

Several additional neuropsychological test batteries exist, which have been developed for various epidemiological research studies or specific populations (see Supplementary material online, Table 2). Many of these utilized standard neuropsychological tests that were adapted for telephone administration. A brief narrative review of these individual tests under a domain-specific approach is provided below.

Verbal memory

Word list-learning measures are natural and popular tests to adapt for telephone administration. The Hopkins Verbal Learning Test-Revised (Benedict, Schretlen, Groninger, & Brandt, 1998), California Verbal Learning Test (CVLT; Delis, Kramer, Kaplan, & Ober, 1987) and CVLT-Second Edition (CVLT-II; Delis, Kramer, Kaplan, & Ober, 2000), and Rey Auditory Verbal Learning Test (RAVLT) have all been utilized for TBCA with relative success (Barcellos et al., 2018; Berns, Davis-Conway, & Jaeger, 2004; Bunker et al., 2016; Julian et al., 2012; Knopman et al., 2000; Rapp et al., 2012; Unverzagt et al., 2007; see Supplementary material online, Tables 5–7 for sample information and psychometric properties). Longer list-learning tests (CVLT/CVLT-II and RAVLT) may have greater utility in younger populations (Barcellos et al., 2018; Berns et al., 2004; Unverzagt et al., 2007), which lends support for incorporating verbal memory tasks into more robust telephone-based test batteries to more effectively assess episodic memory function in broader age ranges and across diagnostic groups. In general, TBCA-administered list-learning tests show good concurrent validity with face-to-face administered list-learning tests (see Supplementary material online, Table 7; e.g., Bunker et al., 2016; Julian et al., 2012; Unverzagt et al., 2007). Some have found lower performance on initial learning trials via telephone (e.g., Berns et al., 2004), which may be related to pragmatic issues with telephone administration of list-learning measures (i.e., difficulties hearing single words over the telephone). Thus, paragraph/story recall measures may be more amenable to telephone administration given their contextual nature.
The most utilized of these measures are stories from the Wechsler Memory Scale and its various versions (WMS; Revised and 3rd edition; Debanne et al., 1997; Mitsis et al., 2010; Rankin, Clemons, & McBee, 2005; Taichman et al., 2005; The Psychological Corporation, 1997; Wilson et al., 2010). The main deviation from face-to-face protocol identified for these measures is the time interval between learning and delayed recall trials, which is not specified in some studies, but is likely shorter than standard test instructions, given the brevity of the overall test batteries (e.g., typically ranging from 10 to 20 min total) used in these studies.

Other memory measures adapted for telephone use include the Selective Reminding Test (Mitsis et al., 2010), WMS Verbal Paired Associates (VPA; Kliegel et al., 2007), and the Rivermead Behavioral Memory Test (RBMT; Kliegel et al., 2007; Wilson, Cockburn, & Baddeley, 1985). Kliegel and colleagues (2007) used the telephone versions of the WMS VPA and found it correlated well with in-person measures. For the RBMT, a significant effect of age and administration, along with an interaction effect, was observed, indicating better performance by younger adults via telephone (Kliegel et al., 2007). Mitsis and colleagues (2010) found differential performance for the delayed recall (but not the learning) trials of Buschke’s Selective Reminding Test (SRT; Buschke, 1973) based on modality (telephone vs. face-to-face). This may have been because participants were only given three learning trials for the telephone version, while the standard six learning trials were administered face-to-face.

In terms of limitations, there is a risk with all of these tests that individuals may write down items to facilitate their performance during telephone administration. While this issue is not directly addressed in most studies, the few that have assessed the likelihood of “cheating” have found that the incidence appears to be low (Buckwalter, Crooks, & Petitti, 2002; Thompson et al., 2001). Furthermore, many of these studies were conducted with disproportionately White and highly educated populations. No studies were found reporting on the use of verbal learning and memory measures in linguistically diverse populations or in interpreter-mediated assessments.

Language

Verbal fluency measures are commonly included in TBCA. Findings from these generally suggest no difference across assessment modalities (see Supplementary material online, Table 7; e.g., Bunker et al., 2016; Christodoulou et al., 2016; Reckess et al., 2013), though at least one demonstrated relatively higher verbal fluency performances during in-person administrations (Berns et al., 2004).

Naming tests are less commonly employed during TBCA. Some studies have described methods for adapting them for administration by telephone. Bunker and colleagues (2016) included a modified version of the 15-item Boston Naming Test (BNT; Goodglass & Kaplan, 1983; modified to develop an auditory naming test under guidance from Hamberger and Seidel’s (2003) normative study) in their Successful Aging after Elective Surgery telephone battery (n = 50, M age = 75, 96% White, M education = 15 years). Short sentences describing an object were read to the participant to name the item, and a phonemic cue was given if the participant was unable to identify the object. No differences were observed based on the list of objects presented in-person versus over telephone, or the order of their administration. Mean BNT scores were relatively lower when administered by telephone, but this difference was not significant. Correlational analysis for comparisons between assessment modes showed a strong degree of agreement (r = 0.85). Wynn, Sha, Lamb, Carpenter, and Brian (2019) utilized the Verbal Naming Test (Yochim et al., 2015) in a sample of 81 individuals residing near St. Louis, MO (83% White, M age = 74 years, M education = 16 years), with a moderate correlation between scores administered in-person versus telephone (r = 0.56, p < .001). However, the authors also found small but statistically significant differences in total scores between initial and follow-up telephone evaluations (p < .001). Overall, while verbal fluency measures are very frequently included in TBCA, naming tests are less common. Key limitations of TBCA language testing are lack of evidence for use in linguistically diverse populations as well as lack of evidence regarding cultural appropriateness of items for existing naming tests.

Attention, processing speed, and executive functioning

Digit span tests are commonly utilized in TBCA, with some form of digit span task included in most of the test batteries reviewed here. While some studies created their own digit span test, others pulled directly from the WAIS. Most studies found medium-to-large correlations between in-person and telephone-based assessment (see Supplementary material online, Table 7), though some found higher scores for TBCA than in-person administration (e.g., Bunker et al., 2016; Mitsis et al., 2010; Wilson et al., 2010). Others note that articulation and hearing issues are particularly pronounced on these tests when administered via telephone (Taichman et al., 2005).

Assessment of executive function and processing speed via telephone is less commonly reported in the literature; however, some studies have described modifications to existing tests to allow for telephone administration. These modifications, particularly of Trail Making Test (TMT; Reitan, 1958) and Symbol Digit Modalities Test (SDMT; Smith, 1982), were originally designed to address noncognitive physical limitations such as poor vision or impaired motor functioning but have also been utilized for telephone administration.

The Oral Trail Making Test (OTMT) versions A & B (Ricker & Axelrod, 1994) were evaluated by Mitsis and colleagues (2010) for telephone administration. After adjusting for age and education, their participants demonstrated better performance on OTMT-A during in-person assessment compared to telephone; however, there was no significant difference by method of administration for the OTMT-B (see Supplementary material online, Table 7). McComb and colleagues (2011) used an alternative version of OTMT, the MAT (Jones, Teng, Folstein, & Harrison, 1993). The test was initially designed for assessment of patients with greater levels of cognitive impairment who were unable to perform the lengthier letter-number alternations up to number 13. Parts A and B consist of counting to 20 and reciting the alphabet, respectively. In part C, participants are asked to alternate between numbers and letters for 30 seconds, with the number of alternations made in 30 seconds serving as the overall score. A strong degree of agreement was found for all three parts of MAT across the two administration modes (see Supplementary material online, Table 7). There was no significant main effect of administration mode on MAT scores (p = .87), but typical age and education effects were observed. However, lack of normative data for the MAT decreases its utility for immediate clinical use.

The oral version of the SDMT by Smith (1982) has also been utilized for TBCA by Unverzagt and colleagues (2007). Prior to scheduled telephone assessments, the participants (n = 106) were provided stimulus sheets (consisting of corresponding letter/symbol pairs that make up the test) and were instructed to keep these nearby during the call. No significant differences were found in scores between the two administrations (see Supplementary material online, Table 7). The Hayling Sentence Completion Test (response initiation and response suppression task in which participants complete sentences with either congruent or incongruent words; Burgess & Shallice, 1997) was used by Taichman and colleagues (2005) in a sample of 23 adults with pulmonary arterial hypertension and showed a modest degree of agreement between in-person and telephone administration (ICC = 0.28; see Supplementary material online, Table 7).

Other measures of executive function adapted for telephone use have been described in the literature. Debanne and colleagues (1997) extended the Cognitive Estimation Test (Shallice & Evans, 1978) by using five questions that had highest predictive ability of executive dysfunction, with no significant differences observed between in-person and telephone-administered scores (see Supplementary material online, Table 7). The Telephone Executive Assessment (TEXAS) was developed as a telephone version of the Executive Interview (EXIT25; Royall, Mahurin, & Gray, 1992; Royall et al., 2015; Salazar, Velez, & Royall, 2014). The TEXAS is a five-item task including the “Number-Letter Task,” “Word Fluency,” “Memory/Distraction Task,” “Serial Order Reversal Task,” and “Anomalous Sentence Repetition” items from the EXIT25 and is scored 0–10, with higher scores indicating more impairment. The TEXAS correlates well (r = 0.85) with EXIT25 and has been used in both English- and Spanish-speaking populations (Royall et al., 2015; Salazar et al., 2014). The reader is referred to Royall and colleagues (2015) for a detailed description of its psychometric properties.

Intellectual ability

Tests of intellectual ability per se have rarely been used in telephone assessment, but the Vocabulary and Similarities subtests from the WAIS-3rd Edition (WAIS-III; Wechsler, 1997) were used in Taichman et al.’s (2005) small sample (n = 23) of adults with pulmonary arterial hypertension. As would be expected for these verbal tasks, there was a strong degree of agreement between in-person and telephone assessments for both subtests, with an ICC of 0.82 for Similarities and 0.68 for Vocabulary.

Overall, measures of intelligence, language, verbal memory, executive functioning, attention, and processing speed have demonstrated utility for TBCA, much like evidence from videoconference-based teleneuropsychology (e.g., see Cullum, Hynan, Grosch, Parikh, & Weiner, 2014; Marra et al., 2020). This literature can lend guidance and support for providers who intend to utilize telephone cognitive screening measures or who wish to create a more robust assessment. In general, normative data developed from face-to-face assessment are utilized in most studies. Caution is warranted, given that some measures show differential performance based on administration modality, many studies have small sample sizes, and, in general, the literature is still somewhat sparse in this area.

Computer-Assisted Telephone Administration

Several computer-assisted TBCAs have been developed to capitalize on advances in technology, but the literature is limited in this regard. The first of these adapted the TICS-M for computer-assisted administration (Buckwalter et al., 2002). Lay examiners were trained in use of a computer program that guided them through TICS-M administration and scoring. Examiners were also prompted for their ratings of pragmatic questions such as “Did the respondent appear to have any difficulty understanding the questions?” Analysis of psychometric properties indicated good internal consistency, but the authors noted that further research was needed to more fully explore validity. Overall, they reported that the computer-assisted telephone interview (CATI) version of the TICS-M was feasible and well received, and it is a potentially cost-effective way to widely test cognitive performance by using lay examiners (Buckwalter et al., 2002). In 2007, the same group published the Cognitive Assessment of Later Life Status (CALLS), a computerized telephone measure. The CALLS was developed to overcome limitations of existing telephone cognitive screening measures (namely, failure to measure all cognitive domains typically measured in a full neuropsychological evaluation). The 30-min-long battery is administered with similar procedures as the CATI TICS-M but also includes audio recordings for verbal measures to ensure scoring accuracy. Unique features of the test include addition of options for volume configuration before the testing begins, a measure of pitch discrimination, and measures of simple and choice reaction time, all of which use computer-generated tones and enable millisecond accuracy in recording response times. Analysis of the CALLS demonstrated good internal consistency (Cronbach’s alpha = 0.81) and adequate concurrent validity (r = 0.60 with the MMSE; Crooks, Parsons, & Buckwalter, 2007).
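Internal consistency figures such as the Cronbach's alpha reported for the CALLS are computed from item-level scores. The sketch below shows the standard formula in plain Python. The function name and the toy item scores are hypothetical and do not reproduce any data from Crooks and colleagues (2007).

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha from a respondents-by-items table of scores.

    item_scores: one row per respondent, one column per item.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    n = len(item_scores)
    if n < 2:
        raise ValueError("need at least two respondents")
    k = len(item_scores[0])
    if k < 2 or any(len(row) != k for row in item_scores):
        raise ValueError("each respondent needs the same number (>= 2) of items")

    # Sample variance of each item across respondents.
    item_variances = [variance([row[j] for row in item_scores]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in item_scores])
    if total_variance == 0:
        raise ValueError("alpha is undefined when total scores do not vary")

    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical scores for four respondents on two items, for illustration only.
toy_scores = [[1, 2], [2, 1], [3, 3], [4, 4]]
print(cronbach_alpha(toy_scores))
```

Alpha approaches 1 when items rise and fall together across respondents and drops toward 0 when items are largely unrelated, so it describes the coherence of a battery rather than its validity against an external criterion such as the MMSE.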

Another study made use of a CATI model for telephone administration of verbal fluency (Marceaux et al., 2019) in a large sample of community-dwelling older adults enrolled in the REasons for Geographic And Racial Differences in Stroke study (n = 18,072 for animal fluency; n = 18,505 for letter fluency). Examiners followed computer prompts during administration and utilized a computer-assisted scoring program. Results revealed comparable performances to in-person verbal fluency assessment based on published normative data, and it was noted that computer-assisted administration/scoring of telephone-assessed verbal fluency could serve to enhance quality control in large-scale studies.

Overall, the limited data on computer-assisted TBCA reveal a highly variable degree of computer assistance, with some methods more feasible for implementation (e.g., computer assistance via administration prompts) in large-scale studies rather than for clinical purposes. However, the validity of these methods in terms of diagnostic accuracy is yet to be established, and telephone-based normative data are not available. Nonetheless, this appears to be a promising area for new test development, as technological advances may be able to enhance cognitive assessment possibilities, including automated methods of data acquisition, analysis, scoring, and display of results.

Discussion

In this paper, the authors systematically searched the literature seeking empirically supported telephone-administered cognitive instruments and then summarized the measures, available psychometric information, and cognitive domains assessed for both individual tests and screening batteries. Several conclusions and trends emerged from our review, which are discussed in detail below.

A large majority of TBCAs were developed for the purpose of screening for cognitive impairment in aging. The TICS, derived from the MMSE, was one of the first cognitive screening measures studied for administration by telephone (Brandt et al., 1988). Several measures (notably, the TICS-M) were born out of a desire to improve upon the sensitivity and specificity of the TICS, particularly as the concept of MCI gained traction in the late 1990s. In general, measures that added more robust list-learning/memory tasks seemed to have the most success in enhancing sensitivity/specificity in detection of cognitive impairment in MCI/dementia cohorts. Several other studies demonstrated that detecting cognitive impairment via TBCA is feasible in other, younger populations as well. However, most of these utilized lengthier batteries composed of more robust in-person measures adapted for telephone assessment. For example, Tun and Lachman’s (2006) BTACT battery was used in a large and diverse sample of study participants from the MIDUS study, with ages ranging from 25 to 84 (Brim, Ryff, & Kessler, 2004), in addition to a population with TBI (Dams-O’Connor et al., 2018). Thus, the utility of TBCA is not limited to the aging population, given the growing focus in other clinical/age cohorts. Additionally, several studies were identified that examined discrete cognitive tests when administered by telephone (e.g., CVLT, verbal fluency, TEXAS), with promising results when their psychometric properties were examined (see Supplementary material online, Tables 5–7). These could feasibly be compiled into a larger battery for a more robust assessment, depending on the referral question, much like what has been done in studies using videoconference-based teleneuropsychology (e.g., see Brearly et al., 2017; Marra et al., 2020; Cullum et al., 2014).

As with video-based teleneuropsychology, TBCA batteries tend to be briefer than in-person evaluations, even if they are intended for more in-depth evaluation than screening. The longest TBCA identified (of those studies that reported administration time) in this review averaged 45 minutes (Mitsis et al., 2010), which is comparable to the video-based teleneuropsychology literature. Still, some tended to have adequate sensitivity and specificity for the purposes of detecting gross (and sometimes more subtle) cognitive impairment, though this does range based on population and testing instrument/battery (see Supplementary material online, Tables 5 and 6).

It is apparent that some cognitive domains are more easily and accurately assessed via TBCA than others. Clearly, assessment of visuospatial skills is quite limited, if not impossible, via telephone alone (although at least one study did attempt this; Norton et al., 1999). In addition, some evidence exists that routinely used orientation questions are less relevant for TBCA (Kawas et al., 1995). Telephone-based assessment of executive functioning and attention is similarly limited, with some notable exceptions including McComb et al.’s (2011) MAT (a telephone-friendly alternative to the Trail Making Test). However, language and verbal memory assessments are quite achievable, and most studies assessing concordance between face-to-face and telephone administration of these measures showed medium to large correlations (see Supplementary material online, Table 7), consistent with the video-based teleneuropsychology literature.

Importantly, many of the identified TBCAs were developed for use in research, either to screen participants for study entry or for longitudinal follow-up. Several review papers of telephone tools for detection of cognitive impairment in aging populations have been published, and the reader is referred to these for a more detailed discussion of this area (Castanho et al., 2014; Herr & Ankri, 2013; Martin-Khan, Wootton, & Gray, 2010; Wolfson et al., 2009).

In the wake of the coronavirus 2019 (COVID-19) crisis, providers have been forced to utilize alternative methods of cognitive assessment in order to provide needed services to patients. Most of the guidance provided by national organizations and committees (e.g., APA and Interorganizational Practice Committee) at the time of this writing has focused on video-based teleneuropsychology (APA, 2013; Bilder et al., 2020). However, some patients in need of neuropsychological evaluation may not have access to necessary equipment or other resources to participate in such evaluations, especially lower income populations. Furthermore, even those with access to the requisite resources may be limited in their utilization of said equipment due to cognitive impairment or simply limited proficiency with technology. In contrast to modern videoconference platforms, the telephone is more universally familiar and thus provides access to a broader population. Almost all of the TBCA research reviewed here was conducted with participants off-site in their own residences. This contrasts with the video-based teleneuropsychology literature, where very few studies have examined home-based assessment and most instead evaluate participants at remote clinics or other monitored locations (Marra et al., 2020). Importantly, neither videoconference- nor telephone-based teleneuropsychology should replace gold-standard, face-to-face neuropsychological evaluation, and careful consideration should be given to their utilization. Castanho et al. (2014) and Bilder et al. (2020) have suggested a “triage” approach, wherein TBCA may be used to screen or help identify patients in need of further, more detailed in-person neuropsychological evaluation. TBCA is perhaps most useful for identifying grossly normal versus impaired cognition as seen in cases of known or suspected dementia.
However, as with any brief cognitive assessment procedure, attempts at specific diagnoses based on TBCA beyond “suggestive or non-suggestive of impairment” are not advised in most cases given the limitations of telephone-based cognitive screening. Initial telephone evaluation may help identify those in need of further assessment (e.g., those who screen “positive” or suggestive of cognitive impairment) and also provide much-needed insight into interim supports that could be put in place while the patient awaits a full evaluation.

Regarding selection of telephone-administered tests/batteries, consideration of the test/battery’s development purpose and evidence for use is crucial. The tables provided in this review include relevant details of each studied test/test battery, with key sample and validation information highlighted in Supplementary material online, Tables 5–7. Practical use of these tables, combined with good clinical judgment, may, for example, help the thoughtful provider recognize that the use of any particular telephone-based test (or other brief screening assessment, regardless of administration medium) may be inadequate for certain patients or referral questions, such as to rule out subtle cognitive deficits that may be present in a young patient with, for example, multiple sclerosis. As always, in clinical settings, the referral question is key, with much diagnostic information to be obtained during a thorough clinical interview, followed by a careful selection of neuropsychological measures, with this latter process necessarily more restricted when conducting remote evaluations.

While a strong argument can be made for implementation of TBCA even after the COVID-19 crisis has passed, this modality is clearly not without its limitations, most of which have already been highlighted here. The inability to measure visuospatial skills and motor functioning is a major limitation, as many cognitive disorders present with impairments in these areas. Additionally, neuropsychologists typically focus much of their energy on rapport-building and behavioral observations, both of which are restricted by telephone assessment. Not only is the clinician blind to the patient’s behavior and appearance but also to their environment, which may be inappropriate for testing (e.g., too many distractions) and more conducive to receiving unauthorized help (e.g., assistance from others, writing down memory items). This is where video-based teleneuropsychological assessments have a clear advantage, but they are not without similar limitations. Many measures reviewed here rely on cutoff scores that do not take into account demographic and/or cultural factors, despite some evidence that many cognitive screening tests, whether telephone-, videoconference-, or in-person-based, may not be culture fair (Kiddoe, Whitfield, Andel, & Edwards, 2008). Moreover, validation studies of many of these measures were conducted on White, English-speaking, and highly educated individuals, with some notable exceptions for the most well-studied measures (e.g., TICS-M). No studies were found describing validity of these measures in interpreter-mediated assessments, which may be of primary interest in future research to minimize disparity in access to and quality of care. Not all measures reviewed here have been adequately validated, and many lack comparison to in-person assessment, rendering it difficult to comment on the practice of using normative data developed from in-person assessment for tests administered via telephone. 
Furthermore, at least one study has reported that hearing loss, common to the aging population, may adversely affect performance on TBCA relative to in-person assessment (Pachana, Alpass, Blakey, & Long, 2006).

Despite these limitations, TBCA, when appropriately utilized, has the potential to significantly enhance patient care. TBCA may enable enhanced screening and provision of broader services, and it may decrease no-show rates (compared to in-person evaluation; Caze, Dorsman, Carlew, Diaz, & Bailey, in press) by reducing barriers such as those related to travel or childcare needs, which have been identified as reasons for no-shows in the general medical literature (Barron, 1980; Ellis, McQueenie, McConnachi, Wilson, & Williamson, 2017; Farley, Wade, & Birchmore, 2003; Nielsen, Faergeman, Foldspang, & Larsen, 2008; Sharp & Hamilton, 2001). Providers and researchers could also consider utilizing TBCA to gather interim screening data on cognitive trajectory compared to baseline face-to-face evaluation to further inform diagnosis and management strategies.

This review is not without its limitations. We did not conduct a quality assessment of the articles included and thus make no claims regarding the strength of any one measure or finding. Our aim was to provide a broad overview of available literature regarding telephone cognitive assessment, which does not lend itself well to quantitative methods. Future reviews may consider narrowing their focus to enable utilization of meta-analytic methods. Additionally, this review is limited to adult populations. No reviews of TBCA in pediatric populations currently exist.

Overall, TBCA has been of modest interest to the field of neuropsychology since the late 1980s, and the advent of videoconferencing, with its ability to allow for observation of patients and similarly promising data on feasibility, reliability, and validity (e.g., see Brearly et al., 2017; Cullum et al., 2014), seems to have largely supplanted that interest. However, this review demonstrates that TBCA has many strengths and lends itself well to a broad range of populations. In addition, many of the tests utilized in the videoconference environment are verbally mediated and thus may be similarly well suited for telephone administration, though without the opportunity for observation. TBCA may be more feasible for some populations in that it demands less equipment and technological experience, thus permitting access to services for those unable or unwilling to utilize videoconferencing technology. Similarly, the utility of TBCA in research has perhaps been better recognized than its utility in clinical work, with many studies demonstrating its effectiveness for screening research participants, reducing selection bias, and conducting longitudinal follow-ups (e.g., Callahan et al., 2002; Taichman et al., 2005; Reckess et al., 2013). Importantly, TBCA is perhaps more supported for in-home assessment than video-based teleneuropsychology, given that the latter has been infrequently studied when utilized for home-based assessment. Further research is needed, particularly for lengthier assessments, to understand the concordance between face-to-face and telephone-administered tests and the ability of TBCA to detect and characterize cognitive impairment in broader populations. In the era of the COVID-19 pandemic and need for more remote telehealth evaluations, however, telephone-based assessment appears to be a viable clinical and research option with a growing literature to support its utility.

Conflict of Interest

None declared.

Funding

This work was supported by funding provided in part by BvB Dallas Foundation Alzheimer’s Disease Neuropsychology Fellowship (A.R.C.) and the O’Donnell Brain Institute Cognition and Memory Center (A.R.C., L.L., C.M.C.).

Supplementary Material

Contributor Information

Anne R Carlew, Department of Psychiatry, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA.

Hudaisa Fatima, Department of Neurology, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA.

Julia R Livingstone, Department of Psychiatry, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA.

Caitlin Reese, Department of Psychiatry, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA.

Laura Lacritz, Department of Psychiatry, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA; Department of Neurology, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA.

Cody Pendergrass, Mental Health Department, VA North Texas Health Care System, Dallas, TX 75216, USA.

Kenneth Chase Bailey, Department of Psychiatry, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA.

Chase Presley, Department of Psychiatry, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA.

Ben Mokhtari, Department of Psychiatry, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA.

Colin Munro Cullum, Department of Psychiatry, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA; Department of Neurology, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA; Department of Neurological Surgery, University of Texas Southwestern Medical Center, Dallas, TX 75235, USA.

References

- American Psychological Association (2013). Guidelines for the practice of telepsychology. Retrieved October 7, 2020, from https://www.apa.org/practice/guidelines/telepsychology

- American Psychological Association (2017). Ethical principles of psychologists and code of conduct (2002, amended effective June 1, 2010, and January 1, 2017). Retrieved October 7, 2020, from https://www.apa.org/ethics/code/

- American Psychological Association (2020). APA calls for comprehensive telehealth coverage. Retrieved October 7, 2020, from https://www.apaservices.org/practice/reimbursement/health-codes/

- Baccaro A., Segre A., Wang Y. P., Brunoni A. R., Santos I. S., Lotufo P. A. et al. (2015). Validation of the Brazilian-Portuguese version of the modified telephone interview for cognitive status among stroke patients. Geriatrics and Gerontology International, 15(9), 1118–1126. doi: 10.1111/ggi.12409.

- Baker A. T., Byles J. E., Loxton D. J., McLaughlin D., Graves A., & Dobson A. (2013). Utility and acceptability of the modified telephone interview for cognitive status in a longitudinal study of Australian women aged 85 to 90. Journal of the American Geriatrics Society, 61(7), 1217–1220. doi: 10.1111/jgs.12333.

- Barber M., & Stott D. J. (2004). Validity of the telephone interview for cognitive status (TICS) in post-stroke subjects. International Journal of Geriatric Psychiatry, 19(1), 75–79. doi: 10.1002/gps.1041.

- Barcellos L. F., Bellesis K. H., Shen L., Shao X., Chinn T., Frndak S. et al. (2018). Remote assessment of verbal memory in MS patients using the California verbal learning test. Multiple Sclerosis, 24(3), 354–357. doi: 10.1177/1352458517694087.

- Barron W. M. (1980). Failed appointments. Who misses them, why they are missed, and what can be done. Primary Care, 7(4), 563–574.

- Beeri M. S., Werner P., Davidson M., Schmidler J., & Silverman J. (2003). Validation of the modified telephone interview for cognitive status (TICS-M) in Hebrew. International Journal of Geriatric Psychiatry, 18(5), 381–386. doi: 10.1002/gps.840.

- Benedict R. H., Schretlen D., Groninger L., & Brandt J. (1998). Hopkins verbal learning test–revised: Normative data and analysis of inter-form and test-retest reliability. The Clinical Neuropsychologist, 12(1), 43–55.

- Bentvelzen A. C., Crawford J. D., Theobald A., Maston K., Slavin M. J., & Reppermund S. (2019). Validation and normative data for the modified telephone interview for cognitive status: The Sydney memory and ageing study. Journal of the American Geriatrics Society, 67(10), 2108–2115. doi: 10.1111/jgs.16033.

- Berns S., Davis-Conway S., & Jaeger J. (2004). Telephone administration of neuropsychological tests can facilitate studies in schizophrenia. Schizophrenia Research, 71(2–3), 505–506. doi: 10.1016/j.schres.2004.03.023.

- Bilder R. M., Postal K. S., Barisa M., Aase D. M., Cullum C. M., Gillaspy S. R. et al. (2020). InterOrganizational practice committee recommendations/guidance for teleneuropsychology (TeleNP) in response to the COVID-19 pandemic. The Clinical Neuropsychologist. Advance online publication. doi: 10.1080/13854046.2020.1767214.