Abstract

Risk-benefit analyses are essential in the decision-making process when selecting the most effective and least restrictive assessment and treatment options for our clients. Clinical expertise, informed by the client’s preferences and the research literature, is needed in order to weigh the potential detrimental effects of a procedure against its expected benefits. Unfortunately, safety recommendations pertaining to functional analyses (FAs) are scattered or not consistently reported in the literature, which could lead some practitioners to misjudge the risks of FA. We surveyed behavior analysts to determine their perceived need for a risk assessment tool to evaluate risks prior to conducting an FA. In a sample of 664 Board Certified Behavior Analysts (BCBAs) and doctoral-level Board Certified Behavior Analysts (BCBA-Ds), 96.2% reported that a tool that evaluated the risks of proceeding with an FA would be useful for the professional practice of applied behavior analysis. We then developed an interactive risk assessment tool that provides suggestions for mitigating the risks of an FA, as well as recommendations for maintaining its validity. Subsequently, an expert panel of 10 BCBA-Ds reviewed the tool. Experts suggested that it was best suited as an instructional resource for those learning about the FA process and as a supporting resource for early practitioners’ clinical decision making.

Electronic supplementary material

The online version of this article (10.1007/s40617-020-00433-y) contains supplementary material, which is available to authorized users.

Keywords: Clinical decision making, Ethical practice, Functional analysis, Risk assessment, Safety precautions

Demand continues to grow for Board Certified Behavior Analysts (BCBAs) and their expertise (Behavior Analyst Certification Board [BACB], 2018; Deochand & Fuqua, 2016). This demand has created a “supply” issue, in that there is a shortage of BCBAs. The BACB reports that a vast majority of individuals certified as behavior analysts have been certified for 5 years or less (BACB, n.d.). This heightened demand, coupled with the junior status of many practicing behavior analysts, creates a need for tools that will continue to support the professional practice of behavior analysis. Determining the needs of behavior analysts requires intermittently conducting job analyses and expert panel reviews (Shook, Johnston, & Mellichamp, 2004), in addition to examining ongoing challenges encountered by those in the field.

Two surveys published in the Journal of Applied Behavior Analysis presented cause for concern, because a majority of certified behavior analysts (BCBAs, as well as Board Certified Assistant Behavior Analysts [BCaBAs] and doctoral-level BCBAs [BCBA-Ds]) reported they were facing barriers to conducting functional analyses (FAs) in practice, despite endorsing FAs as the most informative tool in the functional behavior assessment arsenal (Oliver, Pratt, & Normand, 2015; Roscoe, Phillips, Kelly, Farber, & Dube, 2015). Oliver et al. (2015) reported that 62.6% of certified behavior analysts surveyed (N = 682) indicated never or almost never using an FA. Similarly, Roscoe et al. (2015) reported that 61.9% of certified behavior analysts surveyed (N = 205) had entire client caseloads where none or almost none had received an FA to inform treatment. The participants in the Oliver et al. (2015) survey reported insufficient time (57.4%), lack of space/materials (51.8%), lack of trained support staff (26.7%), and lack of administrative policies (24.9%) as the primary barriers to implementing FAs in practice. Roscoe et al. (2015) reported four primary barriers: lack of space (57.6%), lack of trained support staff (55.6%), lack of support or acceptance of the procedure (46.3%), and insufficient time or client availability (42.4%). A majority of participants indicated having prerequisite assessment training experience. For example, only 12% of the sample reported lacking “how-to” functional behavior assessment knowledge in the Oliver et al. (2015) sample, and 82.4% indicated serving as the primary therapist or data collector in an FA in the Roscoe et al. (2015) sample. These data seem to suggest that practitioners have been trained in FA technologies but are not implementing these technologies due to either resource issues or a lack of administrative support.

FAs allow practitioners to better identify functionally matched treatments for problem behavior that do not rely as heavily on aversive stimuli to achieve beneficial treatment outcomes (Neef & Iwata, 1994; Pelios, Morren, Tesch, & Axelrod, 1999). Functional analysis technology continues to grow (Schlichenmeyer, Roscoe, Rooker, Wheeler, & Dube, 2013), and it is essential that practitioners keep abreast of these developments to ensure FAs are appropriately used in practice. FAs can be conducted in a variety of environments in abridged or adjusted forms, such as the single-function, latency (Call, Pabico, & Lomas, 2009; Thomason-Sassi, Iwata, Neidert, & Roscoe, 2011), brief (Northup et al., 1991; Wallace & Iwata, 1999), and trial-based (Bloom, Iwata, Fritz, Roscoe, & Carreau, 2011) FAs, as well as FAs using synthesized conditions (Hanley, Jin, Vanselow, & Hanratty, 2014). Some of these developments may help address the concerns practitioners raise about using FAs in practice. For example, some practitioners cited inadequate time and space or a lack of trained staff as barriers to conducting FAs; however, the literature suggests that these barriers can often be overcome. Training staff to implement an FA should not be an obstacle, given that teachers with no behavior-analytic experience can be trained with minimal performance feedback (Rispoli et al., 2015). If direct-care staff (Lambert, Bloom, Kunnavatana, Collins, & Clay, 2013), educators (Rispoli et al., 2015; Wallace, Doney, Mintz-Resudek, & Tarbox, 2004), and residential caregivers (Phillips & Mudford, 2008), all with various backgrounds and education levels, can be trained to conduct FAs, then training support staff who are familiar with applied behavior analysis should not be a barrier to using FAs in our growing field.
Iwata and Dozier (2008) noted that the use of FAs can actually reduce the time it takes to receive effective treatment, as selecting an FA like the brief FA, latency-based FA, or trial-based FA can limit the amount of time individuals with problem behavior spend in assessment. It appears that practitioners are either not knowledgeable about these developments or not “connecting the dots” to see that there are strategies they can use to train staff, minimize the time required to conduct their assessment, or embed their assessment into the client’s ongoing routine (and, hence, natural environment) to ameliorate the need for a separate space for the FA.

For the FA to gain social acceptance, it must be used in practice when it is the best suited assessment procedure. Administrative support for conducting FAs might increase if administrators had more knowledge of the risks and benefits of the procedure. Recently, Wiskirchen, Deochand, and Peterson (2017) concluded that safety recommendations for FAs were not easily accessible to behavior analysts, which may make it difficult for behavior analysts to effectively judge whether it is safe to conduct an FA or even what factors they should consider in making this judgment. Researchers have found that safety recommendations are often not consistently reported (Weeden, Mahoney, & Poling, 2010) or they are scattered in the behavioral literature across numerous journals and books (Wiskirchen et al., 2017). Wiskirchen et al. (2017) advocated for a formalized risk assessment prior to conducting an FA and suggested four domains (clinical experience, behavior intensity, support staff, and environmental setting) that could be included in such a risk assessment. This “call to action” is timely given that clinical decision making surrounding FA safety precautions appears not to be transparent to those in and outside the field.

In recent years, Behavior Analysis in Practice has published decision trees to help guide behavior analysts, covering topics ranging from selecting measurement systems (LeBlanc, Raetz, Sellers, & Carr, 2016) and treatments for escape-maintained behavior (Geiger, Carr, & LeBlanc, 2010), to considering the ethical implications of interdisciplinary partnerships (Newhouse-Oisten, Peck, Conway, & Frieder, 2017). Such tools offer guidance and resources to support the growing number of behavior analysts in the field. A similar tool for evaluating the risk of FA and for pointing practitioners to helpful literature for decreasing risk could be helpful to the field. However, prior to developing the FA risk assessment tool, we first surveyed BCBAs to ascertain if they reported a need for such a tool. Based on those results, we developed a beta version of a comprehensive risk assessment tool for evaluating risk prior to conducting an FA. The tool suggested strategies for ameliorating risks, provided references to peer-reviewed literature on how to implement such risk-reduction strategies, and provided considerations to increase the validity of FAs in practice. Ten BCBA-Ds with several years of experience conducting FAs and who had contributed to the knowledge base on this topic reviewed the tool. Their feedback was used to develop a refined version of the tool. In this article, we describe the outcomes of the survey, describe the process for evaluation of the tool, and provide the most recent version of the risk assessment tool for practitioner use.

Method

Phase 1: Needs Assessment

We surveyed behavior analysts to assess their professional opinion of the need for a formalized FA risk assessment tool. We also collected participants’ demographic data, as well as data on their experience conducting FAs.

Participants

A survey (described later) was distributed through the BACB’s mass e-mail service to BCBAs and BCBA-Ds. At the time the survey was disseminated, there were 2,088 BCBA-D and 23,582 BCBA certificants worldwide, and the survey was sent to all of them. Of the behavior analysts who received the survey invitation, 708 started the survey, 664 completed the first half of the survey (yes/no and demographic questions), and 596 (84% of those who started) completed the entire survey, including the Likert scale responses. Our sample comprised 534 BCBAs (2.26% response rate) and 130 BCBA-Ds (6.2% response rate).
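The response rates above follow from dividing the number of respondents at each certification level by the number of certificants who received the invitation. The short Python sketch below reproduces that arithmetic from the counts reported in this section; the helper name `rate` is illustrative only.

```python
# Sketch: reproduce the response-rate arithmetic from the reported counts.

def rate(part: int, whole: int) -> float:
    """Return part as a percentage of whole, rounded to two decimals."""
    return round(100 * part / whole, 2)

# Counts reported in the Participants section.
certificants = {"BCBA": 23_582, "BCBA-D": 2_088}
respondents = {"BCBA": 534, "BCBA-D": 130}

response_rates = {level: rate(respondents[level], certificants[level])
                  for level in certificants}

started, completed_entire = 708, 596
completion_rate = rate(completed_entire, started)

print(response_rates)   # {'BCBA': 2.26, 'BCBA-D': 6.23}
print(completion_rate)  # 84.18
```

Rounded to one decimal place, the BCBA-D rate corresponds to the 6.2% reported above.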

Materials

A 33-item survey with an estimated 15- to 20-min time commitment was constructed by the authors using Qualtrics™ to assess the need for an FA risk assessment tool (see the survey in Supplemental Materials). Demographic data were collected pertaining to certification level, age, gender, and years of experience in the field. Thematically, questions related to experience with FAs, the perceived validity and utility of the FA, and barriers to and risks of the procedure. There were 11 yes/no questions followed by 15 Likert questions. A 5-point Likert scale (strongly agree, agree, neutral, disagree, strongly disagree) presented in a drop-down menu was used to evaluate participant agreement or disagreement with statements regarding FA use in practice. The final two yes/no questions also allowed participants to type more detailed responses regarding how they currently evaluate risk prior to conducting an FA and whether they believe colleagues do not conduct FAs even when it would be the best assessment option.

Procedure

After accessing the survey via the e-mail link, participants could save their progress and complete the survey online at any time; after 3 months of inactivity, responses entered up to that point were recorded (allowing data collection on partially completed surveys). Two months after the initial e-mail invitation, a second e-mail was sent so that anyone who had not yet participated could do so. This also served as a prompt to those who had started but not completed the survey in the first round. The survey was not searchable through search engine queries, so only those with an invitation from the BACB® e-mail could participate. Additionally, to prevent multiple responses from the same IP address, the “prevent ballot box stuffing” option was selected in Qualtrics™. Survey data were separated into participants who completed the first half of the survey and participants who completed the entire survey (defined as having no more than two unanswered responses).
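The two-tier classification of survey records can be expressed as a simple rule. The sketch below is a minimal illustration, assuming each record is split into first-half and second-half items with blank items stored as `None`; the function names are hypothetical, and applying the same two-item allowance to the first half is our assumption rather than a criterion stated in the text.

```python
from typing import Optional, Sequence

MAX_UNANSWERED = 2  # "no more than two unanswered responses"

def unanswered(items: Sequence[Optional[str]]) -> int:
    """Count items left blank (represented here as None)."""
    return sum(1 for item in items if item is None)

def completion_status(first_half: Sequence[Optional[str]],
                      second_half: Sequence[Optional[str]]) -> str:
    """Classify one respondent as 'entire', 'first_half', or 'incomplete'.

    Assumption: the same two-item allowance is used to judge whether the
    first half was completed; the article states it only for the full survey.
    """
    if unanswered(list(first_half) + list(second_half)) <= MAX_UNANSWERED:
        return "entire"
    if unanswered(first_half) <= MAX_UNANSWERED:
        return "first_half"
    return "incomplete"

# Hypothetical records: blank items are None.
first = ["yes"] * 10
second = ["agree"] * 10
print(completion_status(first, second))             # entire
print(completion_status(first, [None] * 10))        # first_half
print(completion_status([None] * 10, [None] * 10))  # incomplete
```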

Survey Results and Discussion

Practitioner Demographics

Participants’ mean age was 39.2 years (range 24–78 years). Table 1 depicts participant demographic characteristics, including gender, certification type, age range, and years of experience in the field. Female participants comprised 81.2% (n = 539) of the sample; of those, 450 identified as BCBAs and 89 as BCBA-Ds. Male participants represented 18.7% (n = 124) of the sample, with 84 identifying as BCBAs and 40 as BCBA-Ds. One participant did not identify with the binary gender options. The largest age group (31%) was the 30- to 35-year-old range. Approximately 75% of the participants had 15 or fewer years of experience working in the field, which is consistent with the recent exponential growth in the number of newly certified behavior analysts.

Table 1.

Participant demographic data (N = 664)

| Characteristic | N | Percentage |
|---|---|---|
| Gender | | |
| Female | 539 | 81.17 |
| Male | 124 | 18.67 |
| Other | 1 | 0.15 |
| Certification | | |
| BCBA | 534 | 80.42 |
| BCBA-D | 130 | 19.58 |
| Age range | | |
| 24–29 | 100 | 15.13 |
| 30–35 | 207 | 31.32 |
| 36–40 | 131 | 19.82 |
| 41–45 | 70 | 10.59 |
| 46–50 | 53 | 8.02 |
| >50 | 100 | 15.13 |
| Years of experience in the field | | |
| 0–5 | 175 | 26.36 |
| 6–10 | 195 | 29.37 |
| 11–15 | 126 | 18.98 |
| 16–20 | 76 | 11.45 |
| 21–25 | 40 | 6.02 |
| >25 | 52 | 7.83 |

Practitioners’ FA Use and Perceptions of Risk

As depicted in Table 2, 95% of participants indicated that they had analyzed FA data, 91.9% had implemented treatment informed by FA results, 90.7% had assisted with or observed an FA, 81.8% had designed an FA, and 69.4% had supervised an FA. In spite of this exposure, 78.2% of participants reported instances when they were unsure whether it was appropriate to conduct an FA. Similarly, 80.4% of the participants reported that if they conducted an FA, they would be concerned about the validity of the results. A clear majority (96.2%) of participants reported that a tool that helps evaluate when it would be safe to conduct an FA would be useful for the field, and 94.7% expressed a need for a tool that determined risk and offered steps to mitigate this risk prior to FA implementation. Interestingly, 82.2% reported that they would be more likely to conduct an FA if a tool existed that offered safety precautions, validity recommendations, and other considerations. Sixty-six participants did not complete the second half of the survey, which contained the Likert questions and the final two yes/no questions; responses to those two yes/no questions are therefore presented separately in Table 2 (N = 598).

Table 2.

Participants’ responses to questions regarding FA experience

| Questions | Yes N (%) | No N (%) |
|---|---|---|
| N = 664 | | |
| Have you analyzed data from an FA? | 631 (95.0) | 33 (5.0) |
| Have you implemented treatment based on the results of an FA? | 610 (91.9) | 54 (8.1) |
| Have you assisted with or observed an FA? | 602 (90.7) | 62 (9.3) |
| Have you designed an FA? | 543 (81.8) | 121 (18.2) |
| Have you supervised an FA? | 461 (69.4) | 203 (30.6) |
| Were there times you were unsure whether it was appropriate to conduct an FA? | 519 (78.2) | 145 (21.8) |
| If you were to conduct an FA, would you be concerned about the validity of the results? | 534 (80.4) | 130 (19.6) |
| Have you heard of the term “risk assessment” in relation to conducting an FA? | 550 (82.8) | 114 (17.2) |
| Do you believe there is a need for a risk assessment tool that determines the risk of conducting an FA and offers ways to reduce risk posed in an FA? | 629 (94.7) | 35 (5.3) |
| Would a tool that helps evaluate when it would be safe to conduct an FA be useful for the field of applied behavior analysis? | 639 (96.2) | 25 (3.8) |
| Would you be more likely to conduct an FA if a tool existed that helped offer safety precautions, validity recommendations, and other considerations? | 546 (82.2) | 118 (17.8) |
| N = 598 | | |
| Have you used a formalized (standard decision-making tool) risk assessment before conducting an FA? | 35 (5.8) | 563 (94.2) |
| Do you believe some behavior analysts do not conduct an FA when it is actually the best option to guide treatment? | 559 (93.5) | 39 (6.5) |

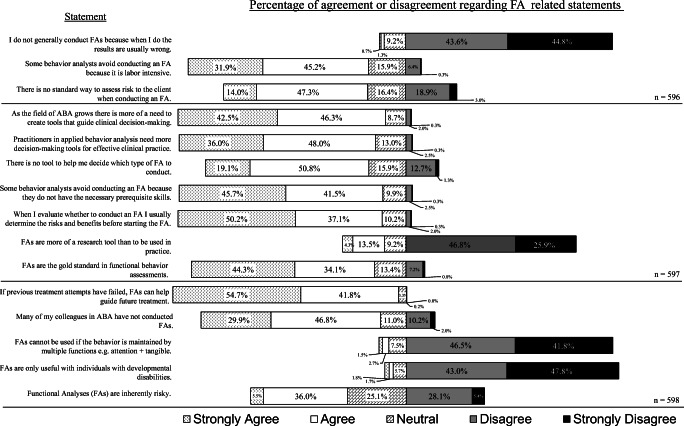

Data for Likert scale responses are presented in a diverging stacked bar chart (see Figure 1), a format well suited to quick visual analysis of Likert data. The proportion of participant agreement with any given statement shifts the position of its bar (i.e., proportionally more disagreement than agreement shifts the bar to the right, away from the statement on the left, and vice versa). In total, 596 of the original 664 participants completed the entire survey, including the Likert questions; participants could leave up to two responses unfilled and still meet inclusion criteria. Statements in Figure 1 are organized by the total number of responses to each statement, because some participants left individual Likert items unanswered.
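In a diverging stacked bar chart, each statement’s bar is positioned so that the center of its neutral segment sits on a common zero line, which is why a preponderance of disagreement shifts the bar to one side. The following sketch computes segment positions under the assumption, inferred from the description above, that categories run from strongly agree on the left to strongly disagree on the right; the function name and example percentages are hypothetical. The resulting left edges could, for example, be passed to a horizontal bar plotting routine such as matplotlib’s `barh` through its `left` argument.

```python
from typing import Dict, List, Tuple

# Left-to-right order of segments (assumed from the description of Figure 1).
ORDER = ("strongly agree", "agree", "neutral", "disagree", "strongly disagree")

def diverging_segments(percent: Dict[str, float]) -> List[Tuple[str, float, float]]:
    """Return (category, left_edge, width) for one statement's bar.

    The bar is offset so that the midpoint of the 'neutral' segment lies at
    x = 0; more mass on the disagree side therefore pushes the bar rightward.
    """
    widths = [percent.get(cat, 0.0) for cat in ORDER]
    neutral = ORDER.index("neutral")
    # Everything left of the neutral midpoint is placed at negative x.
    x = -(sum(widths[:neutral]) + widths[neutral] / 2)
    segments = []
    for cat, width in zip(ORDER, widths):
        segments.append((cat, x, width))
        x += width
    return segments

# Hypothetical statement with more disagreement than agreement.
example = {"strongly agree": 10, "agree": 20, "neutral": 20,
           "disagree": 30, "strongly disagree": 20}
for cat, left, width in diverging_segments(example):
    print(f"{cat:>17}: starts at {left:6.1f}, width {width}")
```

In this example the bar spans -40 to 60, so most of it lies to the right of the zero line, matching the rightward shift described above.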

Fig. 1.

Percentage agreement/disagreement regarding ABA-related statements

Most participants (99.2%) agreed or strongly agreed that the FA helps guide future treatment when other methods have failed. Also, a majority of the sample (78.4%) agreed or strongly agreed that FAs are the gold standard functional assessment. Interestingly, 88.4% of participants disagreed or strongly disagreed with the statement “I do not generally conduct FAs because when I do the results are usually wrong.” Most (90.8%) disagreed or strongly disagreed that an FA could only be used with individuals with developmental disabilities, and 88.3% disagreed that FAs could not be used with behaviors that were multiply controlled. Just under 73% disagreed or strongly disagreed that FAs were more of a research tool than something to be used in practice. In summary, there seemed to be strong agreement that FAs are robust and should be relied upon when needed to inform treatment.
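Percentages such as “agreed or strongly agreed” collapse the 5-point scale into broader categories. A minimal sketch of that collapsing step follows; it assumes blank responses are excluded from each statement’s denominator, which is consistent with, but not stated by, the note that statements received different numbers of responses. The function name and example responses are invented for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Optional

AGREE = ("strongly agree", "agree")
DISAGREE = ("disagree", "strongly disagree")

def collapsed_percentages(responses: Iterable[Optional[str]]) -> Dict[str, float]:
    """Collapse 5-point responses into agree / neutral / disagree percentages.

    Blank responses (None) are dropped before computing the denominator.
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("no answered responses")
    counts = Counter(answered)
    n = len(answered)
    return {
        "n": n,
        "agree": round(100 * sum(counts[c] for c in AGREE) / n, 1),
        "neutral": round(100 * counts["neutral"] / n, 1),
        "disagree": round(100 * sum(counts[c] for c in DISAGREE) / n, 1),
    }

# Invented responses to one statement, including two blanks.
responses = (["strongly agree"] * 3 + ["agree"] * 5 + ["neutral"]
             + ["disagree"] + [None] * 2)
print(collapsed_percentages(responses))
# {'n': 10, 'agree': 80.0, 'neutral': 10.0, 'disagree': 10.0}
```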

A smaller majority (61.3% of participants) either agreed or strongly agreed with the statement that there is no standard way to assess the risk to the client when conducting an FA. There was strong agreement (88% of participants agreed or strongly agreed) that there is a need for more tools that guide clinical decision making as the field grows, and 84% of participants supported the need for more decision-making tools for effective clinical practice. Although a majority of the sample (87.3%) agreed or strongly agreed that they engage in a risk-benefit analysis prior to an FA, most (approximately 70%) agreed or strongly agreed that there is no tool to help decide which type of FA should be conducted. Opinions were divided regarding FAs being inherently risky, as 41.5% of participants agreed or strongly agreed with this statement, and 33.5% strongly disagreed or disagreed. In summary, there seemed to be general agreement that a decision-making tool that helped evaluate the risk of FA procedures would be useful.

Interestingly, 76.7% of participants agreed or strongly agreed that many of their peers have not conducted FAs. Just over 77% agreed or strongly agreed that some behavior analysts avoid conducting FAs because they are labor intensive, and 87.2% of participants attributed such avoidance to a lack of the prerequisite skills needed to implement FAs. A total of 598 participants answered both yes/no questions at the conclusion of the survey, each of which also allowed participants to type additional context for their responses. In response to the first question, 94.2% of participants reported never using a formalized process to determine risk before conducting an FA. In response to the second, 93.5% of the sample believed some behavior analysts do not conduct FAs even when they may be the best option to guide treatment.

These data suggest there is a need for a structured support tool to help evaluate the risks of conducting an FA. They also support the potential utility of including in the tool recommendations for improving the validity of the FA procedure by designing FAs to meet the diverse needs of the clients for whom an FA is warranted and to suit the settings and contexts in which FAs are conducted. In order of priority based on the perceived needs of the sample, our tool should (a) provide the various safety precautions for implementing an FA found in the literature, (b) offer a way to assess the risk of implementing an FA, (c) provide options to reduce risk, and (d) offer considerations regarding how to conduct a valid FA.

Phase 2: Tool Development

In order to develop the tool, we examined a large number of seminal articles and books on the applications of, and considerations for, the FA. We relied on our original review of the literature (Wiskirchen et al., 2017) and reviewed any new articles published since then. The resources we used are listed in Table 3. We manually searched the journals and examined cross-citations within each reference. We selected references that pertained to safety recommendations for FAs, experimental procedures that could be used in place of a standard FA to minimize risk, and validity issues that can arise when implementing FAs, along with how these issues could be resolved. When a later study was a direct or systematic replication that provided no further insight into variables that could influence safety, we selected the earlier reference instead. A complete list of the 83 references we used for this project can be found in the references tab of the tool in the Supplemental Materials.

Table 3.

List of sources for the references used in the tool in alphabetical order

| Source | Number of references |
|---|---|
| Journals | |
| Advances in Learning and Behavioral Disabilities | 1 |
| Analysis and Intervention in Developmental Disabilities | 1 |
| Behavior Analysis in Practice | 4 |
| Behavior Analysis: Research and Practice | 1 |
| Behavior Modification | 3 |
| Behavioral Intervention: Principles, Models, and Practices | 1 |
| Behavioral Interventions | 3 |
| Cognitive and Behavioral Practice | 1 |
| Education and Training in Developmental Disabilities | 2 |
| Education and Treatment of Children | 4 |
| European Journal of Behavior Analysis | 1 |
| Journal of Applied Behavior Analysis | 42 |
| Journal of Autism and Developmental Disorders | 1 |
| Journal of Behavioral Education | 1 |
| Journal of Developmental and Physical Disabilities | 2 |
| Journal of Early and Intensive Behavioral Intervention | 1 |
| Journal of Positive Behavior Interventions | 1 |
| Journal of the Association for Persons With Severe Handicaps | 1 |
| Pediatric Clinics of North America | 1 |
| Research in Developmental Disabilities | 3 |
| The Behavior Analyst | 1 |
| Book/BACB | |
| BACB Professional and Ethical Compliance Code for Behavior Analysts / Fourth and Fifth Edition Task Lists (BACB, 2012, 2017) | 3 |
| Matson, J. L. (Ed.). (2012). Functional assessment for challenging behaviors. New York, NY: Springer. | 4 chapters |

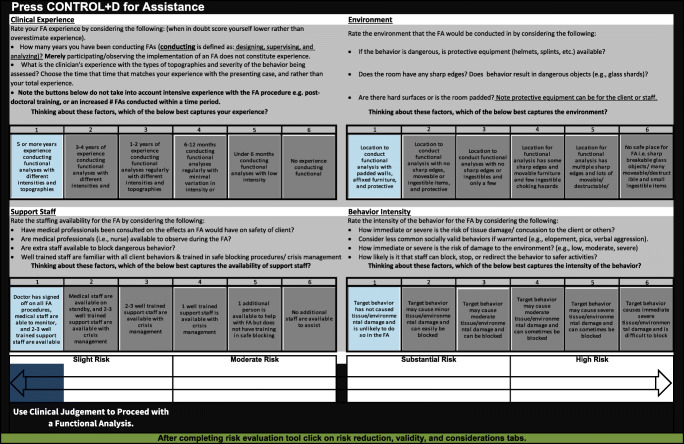

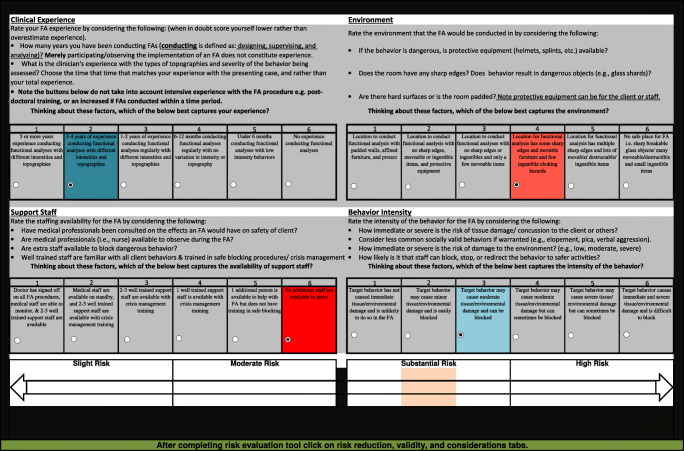

Bailey and Burch (2016) discuss components of a risk-benefit analysis and identify the following as considerations for risk as it relates to problem behavior: behavior that places the client or others at risk, appropriateness of the setting, clinician expertise, sufficiently trained personnel, buy-in from stakeholders, and other liabilities to the analyst. These suggestions correspond with Wiskirchen et al.’s (2017) four domains (clinical experience, behavioral intensity, FA environment, and support staff; see Figure 2), which served as our primary categories of risk for the tool. We attempted to quantify these four domains as objectively as possible. Within each domain, we created six levels from which the practitioner could select; we chose six levels because they allowed us to capture meaningful contextual differences that could affect risk. We used a graduated blue-to-red color scale to denote low risk (blue) to high risk (red). For the clinical experience domain, we initially envisioned using a set number of FAs completed, but behavior analysts are likely to recall more easily the number of years they have been conducting FAs. Thus, we used time-based criteria for experience, with levels ranging from 5 or more years of experience conducting FAs with different intensities and topographies to no experience conducting FAs. For FA environment, the safest environment in which to conduct an FA would likely contain padded walls, affixed furniture, and protective equipment. The least safe environment for an FA is one that has sharp, breakable glass objects; movable and destructible furniture and materials; and small, ingestible choking hazards.
For supporting personnel to assist with the FA, the ideal situation would be to have a medical doctor provide written documentation stating that momentary increases in problem behavior due to FA procedures pose little to no risk to the participant, to have medical staff available to monitor the client during the FA, and to have two to three well-trained support staff available during the FA. The least ideal situation would be to have no additional staff to assist with the FA and no medical oversight. For behavioral intensity, the target behaviors that produce the least risk of physical harm to the client or others are those that are low in intensity and rate, have not caused tissue or environmental damage in the past, and are unlikely to do so during an FA (e.g., off-task behavior, mild disruption, screaming). In each domain, there are instructions to assist the user in selecting the appropriate level of risk for his or her context. For example, the user is asked to consider how easily the target behavior can be blocked when evaluating behavioral intensity. Target behaviors with the most risk of causing physical harm to the client or others are those that are high in intensity and rate, have previously caused injury to self or others, are severe, and are therefore challenging to block, regardless of whether the topography is self-injurious behavior, aggression, pica, elopement, or inappropriate sexual behavior.
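The six-level, blue-to-red scheme described above can be illustrated with a linear color interpolation. The sketch assumes pure blue for level 1, pure red for level 6, and evenly spaced RGB steps in between; the tool’s actual colors are not specified here, so these hex values and the function name are hypothetical.

```python
# Hypothetical color scale: level 1 = pure blue, level 6 = pure red.
LEVELS = range(1, 7)  # six levels per domain, 1 = lowest risk

def level_color(level: int) -> str:
    """Return a hex RGB color linearly interpolated from blue to red."""
    if level not in LEVELS:
        raise ValueError("level must be between 1 and 6")
    t = (level - 1) / (len(LEVELS) - 1)  # 0.0 for level 1, 1.0 for level 6
    red = round(255 * t)
    blue = round(255 * (1 - t))
    return f"#{red:02X}00{blue:02X}"

print([level_color(level) for level in LEVELS])
# ['#0000FF', '#3300CC', '#660099', '#990066', '#CC0033', '#FF0000']
```

A linear RGB ramp is only one option; a perceptually uniform palette would be an equally reasonable design choice.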

Fig. 2.

Microsoft screen depicting a low-risk scenario

Next, we attempted to develop a way for these four domains to be evaluated interactively. In our view, risk could not be determined by simply considering each of the four domains in isolation. Rather, risk is heightened or lessened depending on how the four domains interact with each other. For example, the risk of conducting an FA of a very intense self-injurious behavior (e.g., severe head-banging) might be lowered by conducting the evaluation in the safest environment with highly experienced behavior analysts, medical oversight, and trained staff to assist in the evaluation. Conversely, risk increases when a BCBA with limited experience attempts to conduct an analysis of severe self-injury with poorly trained staff. We needed a mechanism to allow for these kinds of interactions, and we determined that Microsoft Excel® could provide it, because it supports Visual Basic coding and macros that can be used to create dynamic, interactive programs. We programmed the domains to interact dynamically, whereby a higher risk in the experience domain or in the behavioral intensity domain inflates the overall risk to a greater extent than a higher risk in the other domains. This effect is magnified further if both of those domains are rated as higher risk, or if three or all four domains are rated as higher risk. This was accomplished by using different formula algorithms for each risk rating. To avoid compatibility issues, we created three versions of the tool to operate on Microsoft Windows, Macintosh, and Android operating systems. Using Excel® makes the tool accessible to most people, because many behavior analysts already use Excel to create single-subject graphs (Deochand, Costello, & Fuqua, 2015). Thus, most people should not require any additional software to operate the risk analysis tool.
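The exact formulas in the tool are not reported in this article. The sketch below only illustrates the general idea of interactive weighting: experience and behavioral intensity carry more weight than environment and staff, and the score is amplified when both of those domains, or three or more domains, are rated as higher risk. All weights, multipliers, domain keys, and the 0-to-1 scale are hypothetical values chosen for illustration; treating levels 4 to 6 as “higher risk” mirrors the range the Risk Assessment tab uses for highlighting.

```python
from typing import Dict

DOMAINS = ("experience", "intensity", "environment", "staff")

# Hypothetical weights: experience and intensity count more than the others.
WEIGHTS = {"experience": 1.5, "intensity": 1.5, "environment": 1.0, "staff": 1.0}
HIGHER_RISK = 4       # levels 4-6 treated as "higher risk"
BOTH_CRITICAL = 1.25  # hypothetical boost when experience AND intensity are high
MANY_HIGH = 1.25      # hypothetical boost when three or four domains are high

def overall_risk(levels: Dict[str, int]) -> float:
    """Combine four domain levels (1-6) into a hypothetical score in [0, 1]."""
    for domain in DOMAINS:
        if not 1 <= levels[domain] <= 6:
            raise ValueError(f"{domain} level must be between 1 and 6")
    # Rescale each level to 0..1, weight it, and normalize by the total weight.
    base = sum(WEIGHTS[d] * (levels[d] - 1) / 5 for d in DOMAINS) / sum(WEIGHTS.values())
    high = {d for d in DOMAINS if levels[d] >= HIGHER_RISK}
    multiplier = 1.0
    if {"experience", "intensity"} <= high:
        multiplier *= BOTH_CRITICAL
    if len(high) >= 3:
        multiplier *= MANY_HIGH
    return min(1.0, base * multiplier)

only_environment = {"experience": 1, "intensity": 1, "environment": 5, "staff": 1}
only_experience = {"experience": 5, "intensity": 1, "environment": 1, "staff": 1}
both_critical = {"experience": 5, "intensity": 5, "environment": 1, "staff": 1}
environment_and_staff = {"experience": 1, "intensity": 1, "environment": 5, "staff": 5}
for name, case in [("only_environment", only_environment),
                   ("only_experience", only_experience),
                   ("both_critical", both_critical),
                   ("environment_and_staff", environment_and_staff)]:
    print(name, round(overall_risk(case), 2))
# only_environment 0.16
# only_experience 0.24
# both_critical 0.6
# environment_and_staff 0.32
```

The same single-domain elevation raises the score more in the experience domain than in the environment domain, and elevating experience and intensity together outweighs elevating the other two domains together.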

When the user opens the tool, he or she will see multiple tabs at the bottom of the Excel® sheet. The first tab, “About the Tool,” contains basic instructions for operating the tool, and an interactive help menu, opened via a macro shortcut, answers additional questions a user may have about the tool. The second tab, “Risk Evaluation,” contains the interactive tool, consisting of the four domains with six buttons per domain representing the levels of risk within that domain. The user clicks one button within each domain to represent the current situation with his or her client. The buttons range in color from blue (lower risk) to red (greater risk). Simultaneously, a “slider” below the domains combines the selections from all four domains to indicate the overall risk of the situation, which is classified as slight, moderate, substantial, or high (see Figure 3).
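The slider’s final step, translating a combined score into one of the four labels, amounts to a threshold lookup. In this sketch, the score is assumed to lie between 0 and 1, and the cut points separating slight, moderate, substantial, and high risk are hypothetical placeholders rather than the tool’s actual boundaries.

```python
from bisect import bisect_right

CATEGORIES = ("slight", "moderate", "substantial", "high")
CUT_POINTS = (0.25, 0.50, 0.75)  # hypothetical boundaries between categories

def risk_category(score: float) -> str:
    """Map an overall score in [0, 1] to one of the tool's four risk labels."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie between 0 and 1")
    return CATEGORIES[bisect_right(CUT_POINTS, score)]

for score in (0.10, 0.30, 0.60, 0.90):
    print(score, risk_category(score))
# 0.1 slight
# 0.3 moderate
# 0.6 substantial
# 0.9 high
```

With `bisect_right`, a score falling exactly on a cut point is assigned to the higher category.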

Fig. 3.

Macintosh screen depicting a substantial-risk scenario

The third tab, “Risk Assessment,” contains suggestions for lowering risk specific to the concerns selected by the user, as well as a published reference the user can consult for more information. If any of the user’s selections on the second tab (Risk Evaluation) indicate higher risk (i.e., within the 4–6 range for a given domain), the matching suggestions and references on the third tab turn red to highlight them for the user. The fourth and fifth tabs, “Validity” and “Considerations,” offer tips for maintaining high levels of validity in the assessment. We used “if-then” scenarios to help guide the user in selecting appropriate considerations for the client. For example, after a high-risk scenario is detected, the tool might recommend reinforcing precursor behavior in an FA (Lalli, Mace, Wohn, & Livezey, 1995); using a structural analysis (Stichter & Conroy, 2005), modified choice assessment (Berg et al., 2007), or reinforcer or punisher assessments; or complementing a descriptive assessment with a contingency space analysis (Martens, DiGennaro, Reed, Szczech, & Rosenthal, 2008). Additionally, the Validity and Considerations tabs include tables and figures from articles that offer guidance regarding contextual variables for structural analyses (Stichter & Conroy, 2005), idiosyncratic variables that affect FA outcomes (Schlichenmeyer et al., 2013), and side effects of medications (Valdovinos & Kennedy, 2004). The measurement decision tree by LeBlanc et al. (2016) is also provided in case the user is unsure how best to track the target behavior. Our objective was to include in the tool the supporting resources that survey participants had requested. It is not possible to present all of the tool’s suggestions within this article, but readers are encouraged to download the tool and interact with it as an educational resource.
It is available on the journal’s Supplemental Materials site.
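The highlighting rule described above (suggestions turn red when a domain is rated 4 to 6) can be sketched as a filter over domain ratings. The domain keys, function names, and suggestion wording below are illustrative paraphrases of strategies mentioned in this article, not text extracted from the tool.

```python
from typing import Dict, List

HIGHER_RISK_MIN = 4  # levels 4-6 trigger highlighting

# Illustrative suggestions keyed by domain (paraphrased, not the tool's text).
SUGGESTIONS: Dict[str, List[str]] = {
    "experience": ["Involve a behavior analyst experienced in conducting FAs"],
    "intensity": ["Consider reinforcing precursor behavior (Lalli et al., 1995)"],
    "environment": ["Remove breakable objects, movable furniture, and choking hazards"],
    "staff": ["Arrange medical oversight and trained support staff"],
}

def flagged_domains(levels: Dict[str, int]) -> List[str]:
    """Return the domains whose selected level is in the higher-risk range."""
    return [domain for domain, level in levels.items() if level >= HIGHER_RISK_MIN]

def highlight(levels: Dict[str, int]) -> Dict[str, str]:
    """Mark each suggestion 'red' if its domain is flagged, else 'default'."""
    flagged = set(flagged_domains(levels))
    return {text: ("red" if domain in flagged else "default")
            for domain, texts in SUGGESTIONS.items() for text in texts}

levels = {"experience": 2, "intensity": 5, "environment": 4, "staff": 1}
print(flagged_domains(levels))  # ['intensity', 'environment']
for text, color in highlight(levels).items():
    print(f"[{color}] {text}")
```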

Phase 3: Expert Review and Revision

After we created the tool, we requested feedback from experts in conducting FAs to determine whether they considered (a) the selected domains appropriate for assessing risk, (b) each domain to have produced an appropriate overall risk level, (c) the suggestions for lowering risk and literature base appropriate, and (d) the suggestions for maintaining the validity of the FA appropriate.

Expert Reviewers

Experts were selected based on their prominence in the field for publishing peer-reviewed research on FAs, operating a behavior-analytic research or training laboratory, or conducting FAs in clinical practice at state-of-the-art intensive treatment centers. We sent an initial request to review the tool to 58 experts and received 10 full responses and 2 partial responses. We collected data on each expert’s experience. Four of the experts had more than 15 years of experience conducting FAs, three had 11 to 15 years, two had 6 to 10 years, and one had 1 to 5 years. Similarly, four had more than 15 years of experience training others to conduct FAs, three had 6 to 10 years, and three had 1 to 5 years. After we received feedback from these experts and revised the tool, we sent the tool to the same 10 experts for a second round of review.

Guided Review Process and Results

We used a survey to obtain guided input from the experts because it ensured that all experts responded to the same features of the tool, which helped focus the content of their responses. The survey was created in Qualtrics. We provided the experts with a link to the Qualtrics survey and copies of the three versions of the tool in Excel. The survey first oriented reviewers to the tool to ensure they could use it effectively and locate all of its features. We then presented 12 specific combinations of risk across the four domains and asked reviewers to rate the appropriateness of the overall risk rating the tool produced for each combination. Most of these combinations represented “middle-of-the-road” risk, because we assumed agreement with the recommendations would be higher at the extreme ends of the continuum; the “grayer” areas seemed to be where feedback was most needed. We used a 3-point Likert scale with the options It’s just right, Needs to be lower risk, and Needs to be higher risk. If an expert selected anything other than It’s just right, we asked the expert to explain why and how the risk should differ. In addition, for each of the 12 combinations, we asked the experts to rate the recommendations for reducing risk on a 5-point Likert scale (not helpful, a little helpful, somewhat helpful, helpful, and very helpful). Following that question, the experts could type any additional comments about that combination.

After the initial assigned combinations, experts were asked to interact with the tool by choosing any combination of levels for two domains, clinical expertise and behavior intensity, while the support staff and environment domains were fixed at the highest risk setting. We selected these two domains because they were the most commonly reported barriers to implementing FAs in previously published survey research (Oliver et al., 2015). For these combinations, we asked the experts to rate the appropriateness of the identified level of risk and, if they would change it, to provide a rationale. Experts then selected additional combinations of their own choosing, and again we asked them only to rate the appropriateness of the risk level and, if they would change it, to provide a rationale.

Finally, we asked open-ended questions about the four domains, whether we had missed any risk factors, whether the level of detail in the Risk Reduction tab was sufficient to carry out the suggested changes, whether the level of detail in the Risk Reduction and Considerations tabs was appropriate, how accessible the tool was, whether the weighting of our risk scales was appropriate, whether the tool was useful, and for whom it would be useful.

We then analyzed the experts’ ratings and commentary to determine what changes, if any, should be made to the tool. For most scenarios, at least 8 of 10 experts rated the risk as It’s just right. If more than one expert indicated that something needed to change, the authors discussed the experts’ rationale, compared it with the feedback from the other experts, examined the literature, and decided whether and how to change the tool. The following are examples of changes we made. Originally, the tool had four overall risk ratings: “minimal,” “some,” “moderate,” and “high.” Many reviewers felt that “minimal” was too narrow a category and that an FA always carries some risk. This prompted spirited discussion among the authors, and after the second round of review we ultimately renamed the lowest category “slight” risk. Another common piece of feedback was that some combinations produced ratings that were too low and others produced ratings that were too high. In these cases, we adjusted the formulas in the tool to raise or lower the ratings as the experts’ feedback indicated. In addition, a few reviewers requested further considerations for reducing risk in the Risk Reduction tab, and we added the requested considerations. After we had discussed and resolved all issues, we sent the modified tool and a second survey to the experts who had completed the first survey, asking them to review our changes and provide further commentary.
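The screening rule in the preceding paragraph (a scenario was discussed only when more than one expert requested a change) can be expressed as a simple tally. The Python sketch below is a hypothetical illustration: the function name `summarize_scenario` and the example ratings are invented and are not study data. A flag only marks a scenario for the authors’ discussion; in the study, that discussion also weighed the other experts’ feedback and the literature before any change was made.

```python
# Hypothetical sketch: tallying one scenario's first-survey ratings and
# flagging it for discussion when two or more experts requested a change.
from collections import Counter
from typing import Dict, List

JUST_RIGHT = "It's just right"
LOWER = "Needs to be lower risk"
HIGHER = "Needs to be higher risk"
VALID = {JUST_RIGHT, LOWER, HIGHER}


def summarize_scenario(ratings: List[str]) -> Dict[str, object]:
    """Count ratings for one scenario; flag it when >= 2 experts asked for
    a different risk level (in either direction)."""
    unknown = set(ratings) - VALID
    if unknown:
        raise ValueError(f"unexpected ratings: {sorted(unknown)}")
    counts = Counter(ratings)
    change_requests = counts[LOWER] + counts[HIGHER]
    return {
        "just_right": counts[JUST_RIGHT],
        "change_requests": change_requests,
        "flag_for_discussion": change_requests >= 2,
    }


# Invented ratings from 10 experts, for illustration only.
ratings = [JUST_RIGHT] * 8 + [HIGHER] * 2
print(summarize_scenario(ratings))
# {'just_right': 8, 'change_requests': 2, 'flag_for_discussion': True}
```

Counting requests in both directions together mirrors the rule as stated, which required agreement that something needed to change rather than agreement on the direction of the change.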

The second survey focused only on the changes we had made to address the experts’ concerns. We made two substantial changes to the tool: we changed the formulas to produce higher levels of risk, and we changed the wording for the lowest level of risk on the slider representing overall risk. We asked reviewers to attend specifically to these changes and to provide feedback on them. We presented risk factor combinations that had prompted suggestions in the first round of review and asked reviewers to evaluate whether the overall level of risk was now appropriate or inappropriate, or whether they were neutral about the change. An open-ended commentary box was also provided. We also requested feedback on the updated wording of the risk ratings, “some,” “moderate,” “high,” and “very high” risk (previously “minimal,” “some,” “moderate,” and “high” risk), using the same 3-point Likert scale and commentary box. After considering all of the Likert and qualitative text data on this topic, we revised the wording again to “slight,” “moderate,” “substantial,” and “high” risk.
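Because the wording of the overall risk slider changed across rounds, it can help to think of the labels as a display layer over score bands. The Python sketch below assumes an overall score normalized to 0–1 and three evenly spaced cut points; both are hypothetical, since the tool’s actual formulas and thresholds are not reported here. Only the three label sets come from the text, and the function name `risk_label` is invented for illustration.

```python
# Hypothetical sketch: mapping an overall risk score to a display label.
# The 0-1 score scale and the cut points are assumptions, not the tool's values.
from bisect import bisect_right
from typing import Sequence

CUTOFFS = [0.25, 0.50, 0.75]  # hypothetical boundaries between four bands
ROUND_1_LABELS = ["minimal", "some", "moderate", "high"]
ROUND_2_LABELS = ["some", "moderate", "high", "very high"]
FINAL_LABELS = ["slight", "moderate", "substantial", "high"]


def risk_label(score: float, labels: Sequence[str] = FINAL_LABELS) -> str:
    """Return the label for the band containing `score` (0 <= score <= 1)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    return labels[bisect_right(CUTOFFS, score)]


# Same scores, three label sets: only the wording shown to the user changes.
for labels in (ROUND_1_LABELS, ROUND_2_LABELS, FINAL_LABELS):
    print(risk_label(0.1, labels), risk_label(0.6, labels))
# minimal moderate
# some high
# slight substantial
```

With the bands held fixed, swapping one label set for another changes only what the user reads, whereas formula changes like those made after the first round alter which band a given combination falls into.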

After the second round of expert review, we made a few additional minor changes using the same rule as before: two or more reviewers had to agree on a change before we revised the tool. The tool presented here is the culmination of our review of the literature on risk assessment in FAs and on methods of reducing risk in FAs, tool creation, two rounds of expert review, and final modifications. This tool (located in the Supplemental Materials) is provided to help practitioners assess risk, determine how risk can be reduced, and locate published research for more information.

General Discussion

There is an ethical need for tools that offer guidance and resources to support the growing number of behavior analysts in the field (Geiger et al., 2010; LeBlanc et al., 2016; Newhouse-Oisten et al., 2017). Some decisions, however, are complex and require multiple considerations, especially when one decision changes the context of all the others in an interactive decision-making process. In such cases, domains of consideration may interact with one another in unique ways. For instance, our four domains (clinical experience, behavior intensity, support staff, and environmental setting) all potentially affect risk in an FA, but they likely do so interactively: high risk in one domain might combine with low or moderate risk in another to create unique risk situations. With four domains, each with six categories along its continuum, there are 1,296 (6 × 6 × 6 × 6) possible combinations of risk factors. A decision tree would not be a feasible format for displaying this many options, nor could one easily be presented on a journal page. We therefore created an interactive tool so that all 1,296 possible combinations could be taken into account efficiently.
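The count of 1,296 follows from the multiplication rule (6 × 6 × 6 × 6). The Python sketch below enumerates the combinations explicitly, assuming each domain’s six categories are coded 1 to 6; that coding, and the reuse of the 4–6 higher-risk range from the description of the Risk Assessment tab, are assumptions for illustration. It also shows that only 81 (3 × 3 × 3 × 3) combinations keep every domain below 4, so under these assumptions 1,215 combinations would trigger at least one highlighted suggestion, which is part of why a static decision tree is impractical.

```python
# Sketch: enumerating every combination of the four domains, assuming each
# domain's six categories are coded 1-6 (the coding is an assumption).
from itertools import product

DOMAINS = ("clinical experience", "behavior intensity", "support staff", "environmental setting")
LEVELS = range(1, 7)

combinations = list(product(LEVELS, repeat=len(DOMAINS)))
print(len(combinations))  # 1296 == 6 ** 4

# Combinations in which at least one domain falls in the assumed 4-6 range.
any_high = sum(1 for combo in combinations if max(combo) >= 4)
print(any_high)  # 1215 == 6 ** 4 - 3 ** 4
```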

Code 4.05 of the BACB® Professional and Ethical Compliance Code for Behavior Analysts specifies that, “to the extent possible, a risk-benefit analysis should be conducted on the procedures to be implemented to reach the objective” (BACB, 2014). Evaluating whether assessments or treatments could adversely affect a client can be done at any point in the therapeutic relationship and should conclude with a course of action in which the benefits outweigh the risks (BACB, 2014, p. 24). Unfortunately, our literature is rather vague about how to conduct a risk-benefit analysis and what form it should take. Each assessment or treatment procedure can produce different probabilities of success, different amounts of time to take effect, different levels of restrictiveness, and different effects on quality of life, which makes selecting assessments and treatments a delicate balancing act (Axelrod, Spreat, Berry, & Moyer, 1993). Code 3.01(a) specifies that functional assessments are prerequisites to behavior change programs and that the type of assessment depends on the needs of our clients, as well as their “consent, environmental parameters, and other contextual variables” (BACB, 2014). Because the functional assessment process is one of the features that most clearly distinguishes behavior-analytic practice from other psychological professions, it is essential that the safest and most efficacious assessment be selected to meet our clients’ needs.

Thirty-five of 598 participants reported using a formal process to assess risk, but when asked to provide additional information, only 19 responded. Those who responded referenced a standard assessment used in their facility, the chapter on risk-benefit analysis in Bailey and Burch (2016), or Hanley’s (2012) article “Functional Assessment of Problem Behavior: Dispelling Myths, Overcoming Implementation Obstacles, and Developing New Lore.” It is unknown what, if anything, the remaining 579 participants use to evaluate risk when implementing an FA. The majority of our survey sample endorsed FAs as powerful assessment tools that can be used to evaluate cases in which behavior is maintained by combined functions (Hanley et al., 2014). However, two prior surveys (Oliver et al., 2015; Roscoe et al., 2015) indicate that practicing behavior analysts appear to be avoiding the use of FAs. Bailey and Burch (2016) discuss the dearth of literature and decision-making frameworks for risk-benefit analyses and note only one attempt to build a mathematical model for weighting risk in treatment selection. We speculated that the absence of a clear way to evaluate risk, and of direction on how to lower it, might contribute, at least in part, to the limited use of FAs in the field. Our systematic efforts to consolidate the research and expert recommendations are an attempt to offer some guidance on the risk analysis process prior to conducting FAs. A formalized risk assessment might help bridge the current research-to-practice gap, at least in disseminating known research recommendations. If clinicians are unwilling to use the FA in practice because they, or their administrators, are unsure whether it is safe to do so, then such a tool may aid in the continued ethical professional practice of applied behavior analysis.

Limitations

It is important to emphasize that the effectiveness of this tool in guiding clinical decisions about whether, or how, to proceed with an FA remains to be validated. Formalizing the risk assessment process is a worthwhile goal; however, we must make one very important caveat clear: No published tool is a replacement for ongoing clinical decision making. Clinicians must take into account a myriad of idiosyncratic variables that bear on decisions for specific clients at specific points in time and in specific contexts.

Furthermore, surveys provide at best descriptive snapshots of participants’ reported responses. Although a majority of participants reported that the FA is the most informative assessment for problem behavior, similar to data collected in other surveys (Oliver et al., 2015; Roscoe et al., 2015), there is no guarantee that the data collected directly reflect the actual reasons the FA may be underused in practice. For example, participants reported concerns about the validity of the FA but also endorsed the view that the FA does not usually “get it wrong.” These responses appear incongruous.

Previous surveys gathered additional data on the settings in which certified behavior analysts practiced (Oliver et al., 2015; Roscoe et al., 2015). Information about where participants deliver the majority of their services could have informed our discussion of resources and agency buy-in. There can also be cultural barriers to the effective dissemination of evidence-based practices derived from applied behavior analysis (Keenan et al., 2015). Because the survey was disseminated to BCBAs and BCBA-Ds around the world, collecting data on geographic location could have offered further insight into whether this variable influenced responding. Although we gathered data on years of experience in the field, it would have been beneficial to split that question into two: one on a participant’s years of experience since BACB® certification and another on experience working in clinical or special education settings.

There are notable considerations that should be taken into account when using the tool. One of the experts who evaluated our tool rightly noted that not all years of experience are equal. For example, even with the same 2 years of FA exposure, a postdoctoral fellow with extensive experience conducting FAs of the most severe behaviors under the mentorship of leading experts in the field has a very different skill set from a master’s-level practitioner who has worked with only a few children who display mild problem behaviors. As a result, the risk of conducting an FA may differ considerably for these two practitioners. Our tool does not directly account for this difference in training. Instead, we tried to make it apparent by instructing the user to account for it (e.g., “Note FA experience in the buttons below do not take into account intensive experience with the procedure e.g. postdoctoral training, or an increased # FAs conducted within a time period. Thinking about these factors, which of the below best captures your experience?”). This is a clear example of where clinical judgment must be used when completing and interpreting the ratings on the tool. Regardless, behavior analysts have a duty to promote an ethical culture. Offering this tool as an educational resource is our attempt to provide some guidance until further empirical support is generated for its application in behavior-analytic practice.

Future Directions

There is no guarantee that simply providing a tool to practicing behavior analysts will reduce the risks associated with conducting an FA. Therefore, our next step is to evaluate whether practitioners’ use of the tool effectively influences clinical decision making. Our expert panel anticipated that the tool, in its current form, may help safeguard the ethical practice of FAs for new practitioners and those in training. We hope that this tool will help narrow the research-to-practice gap and offer a structure for considering variables that may contribute to risk in an FA. We do not promote the use of FAs in all circumstances and contexts; in some cases, an FA may simply not be safe to conduct. We therefore attempted to offer alternative forms of analysis (e.g., concurrent-operants assessments, structural analysis) for cases in which the risk is too great for an FA to be appropriate or feasible. We hope that this automated, interactive tool will offer insights and feedback to practitioners across several domains and that it captures at least some of the complexity of the clinical decision-making process.

During the peer-review process, we received additional feedback on the tool and incorporated those suggestions into the final version. The three current versions, for phones and tablets, Macintosh systems, and Windows systems, can be downloaded from the Supplemental Materials. We hope to update the tool continuously and to convert future versions to web-based platforms to make them even more user-friendly and accessible. The phone and tablet version works on iPhones, iPads, and Android phones and tablets that have the Google Sheets application installed, whereas the other versions require Microsoft Excel™. Online formats should allow ongoing developments in research to be incorporated into updated versions. In addition, a web-based platform may allow us to gather crowdsourced recommendations from behavior analysts so that new releases better meet the ongoing needs of those in the field. Behavior analysts have an ethical responsibility to proactively build frameworks that support the professional practice of behavior analysis. We hope that sharing our preliminary work meets this objective and that the tool serves as an educational resource that supports the professional practice of applied behavior analysis.

We strongly encourage readers to download the tool from the Supplemental Materials and to contact the authors with feedback so that we can continue to refine this resource for our growing community.

Electronic supplementary material

(DOCX 22 kb)

(XLSM 411 kb)

(XLSX 219 kb)

(XLSM 258 kb)

Author Note

We would like to express our sincere gratitude for the time and feedback of the 10 expert reviewers who reviewed beta versions of our risk assessment tool.

Funding

No sources of funding were utilized to present this information.

Compliance with Ethical Standards

Conflict of Interest

The authors know of no conflicts of interest that would present any financial or nonfinancial gain as a result of the potential publication of this manuscript.

Ethical Approval

All research was conducted in compliance with ethical standards and institutional review boards.


Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

8/24/2020

This article was updated to correct the spelling of Rebecca R. Eldridge’s name in the author listing.

References

- Axelrod S, Spreat S, Berry B, Moyer L. A decision-making model for selecting the optimal treatment procedure. In: Van Houten R, Axelrod S, editors. Behavior analysis and treatment. New York, NY: Plenum Press; 1993. pp. 183–202.

- Bailey J, Burch M. Ethics for behavior analysts. New York, NY: Routledge; 2016.

- Behavior Analyst Certification Board. Task list. 4th ed. Littleton, CO: Author; 2012. Retrieved from http://www.bacb.com/index.php?page=100165

- Behavior Analyst Certification Board. Professional and ethical compliance code for behavior analysts. 2014. Retrieved July 27, 2016, from http://bacb.com/wp-content/uploads/2015/08/150824-compliance-code-english.pdf

- Behavior Analyst Certification Board. BCBA/BCaBA task list. 5th ed. Littleton, CO: Author; 2017. Retrieved from https://www.bacb.com/bcba-bcaba-task-list-5th-ed/

- Behavior Analyst Certification Board. US employment demand for behavior analysts: 2010–2017. Littleton, CO: Author; 2018.

- Behavior Analyst Certification Board. BACB certificant data. n.d. Retrieved from https://www.bacb.com/BACB-certificant-data

- Berg WK, Wacker DP, Cigrand K, Merkle S, Wade J, Henry K, Wang YC. Comparing functional analysis and paired-choice assessment results in classroom settings. Journal of Applied Behavior Analysis. 2007;40:545–552. doi: 10.1901/jaba.2007.40-545.

- Bloom SE, Iwata BA, Fritz JN, Roscoe EM, Carreau AB. Classroom application of a trial-based functional analysis. Journal of Applied Behavior Analysis. 2011;44:19–31. doi: 10.1901/jaba.2011.44-19.

- Call NA, Pabico RS, Lomas JE. Use of latency to problem behavior to evaluate demands for inclusion in functional analyses. Journal of Applied Behavior Analysis. 2009;42:723–728. doi: 10.1901/jaba.2009.42-723.

- Deochand N, Costello MS, Fuqua RW. Phase-change lines, scale breaks, and trend lines using Excel 2013. Journal of Applied Behavior Analysis. 2015;48:478–493. doi: 10.1002/jaba.198.

- Deochand N, Fuqua RW. BACB certification trends: State of the states (1999 to 2014). Behavior Analysis in Practice. 2016;9:243–252. doi: 10.1007/s40617-016-0118-z.

- Geiger KB, Carr JE, LeBlanc LA. Function-based treatments for escape-maintained problem behavior: A treatment-selection model for practicing behavior analysts. Behavior Analysis in Practice. 2010;3:22–32. doi: 10.1007/BF03391755.

- Hanley GP. Functional assessment of problem behavior: Dispelling myths, overcoming implementation obstacles, and developing new lore. Behavior Analysis in Practice. 2012;5:54–72. doi: 10.1007/BF03391818.

- Hanley GP, Jin CS, Vanselow NR, Hanratty LA. Producing meaningful improvements in problem behavior of children with autism via synthesized analyses and treatments. Journal of Applied Behavior Analysis. 2014;47:16–36. doi: 10.1002/jaba.106.

- Iwata BA, Dozier CL. Clinical application of functional analysis methodology. Behavior Analysis in Practice. 2008;1:3–9. doi: 10.1007/BF03391714.

- Keenan M, Dillenburger K, Röttgers HR, Dounavi K, Jónsdóttir SL, Moderato P, … Martin N. Autism and ABA: The gulf between North America and Europe. Review Journal of Autism and Developmental Disorders. 2015;2:167–183. doi: 10.1007/s40489-014-0045-2.

- Lalli JS, Mace FC, Wohn T, Livezey K. Identification and modification of a response-class hierarchy. Journal of Applied Behavior Analysis. 1995;28:551–559. doi: 10.1901/jaba.1995.28-551.

- Lambert JM, Bloom SE, Kunnavatana SS, Collins SD, Clay CJ. Training residential staff to conduct trial-based functional analyses. Journal of Applied Behavior Analysis. 2013;46:296–300. doi: 10.1002/jaba.17.

- LeBlanc LA, Raetz PB, Sellers TP, Carr JE. A proposed model for selecting measurement procedures for the assessment and treatment of problem behavior. Behavior Analysis in Practice. 2016;9:77–83. doi: 10.1007/s40617-015-0063-2.

- Martens BK, DiGennaro FD, Reed DD, Szczech FM, Rosenthal BD. Contingency space analysis: An alternative method for identifying contingent relations from observational data. Journal of Applied Behavior Analysis. 2008;41:69–81. doi: 10.1901/jaba.2008.41-69.

- Matson JL, editor. Functional assessment for challenging behaviors. New York, NY: Springer; 2012.

- Neef NA, Iwata BA. Current research on functional analysis methodologies: An introduction. Journal of Applied Behavior Analysis. 1994;27:211. doi: 10.1901/jaba.1994.27-211.

- Newhouse-Oisten MK, Peck KM, Conway AA, Frieder JE. Ethical considerations for interdisciplinary collaboration with prescribing professionals. Behavior Analysis in Practice. 2017;10:145–153. doi: 10.1007/s40617-017-0184-x.

- Northup J, Wacker D, Sasso G, Steege M, Cigrand K, Cook J, DeRaad A. A brief functional analysis of aggressive and alternative behavior in an outclinic setting. Journal of Applied Behavior Analysis. 1991;24:509–522. doi: 10.1901/jaba.1991.24-509.

- Oliver AC, Pratt LA, Normand MP. A survey of functional behavior assessment methods used by behavior analysts in practice. Journal of Applied Behavior Analysis. 2015;48:817–829. doi: 10.1002/jaba.256.

- Pelios L, Morren J, Tesch D, Axelrod S. The impact of functional analysis methodology on treatment choice for self-injurious and aggressive behavior. Journal of Applied Behavior Analysis. 1999;32:185–195. doi: 10.1901/jaba.1999.32-185.

- Phillips KJ, Mudford OC. Functional analysis skills training for residential caregivers. Behavioral Interventions: Theory & Practice in Residential & Community-Based Clinical Programs. 2008;23:1–12. doi: 10.1002/bin.252.

- Rispoli M, Burke MD, Hatton H, Ninci J, Zaini S, Sanchez L. Training Head Start teachers to conduct trial-based functional analysis of challenging behavior. Journal of Positive Behavior Interventions. 2015;17:235–244. doi: 10.1177/1098300715577428.

- Roscoe EM, Phillips KM, Kelly MA, Farber R, Dube WV. A statewide survey assessing practitioners’ use and perceived utility of functional assessment. Journal of Applied Behavior Analysis. 2015;48:830–844. doi: 10.1002/jaba.259.

- Schlichenmeyer KJ, Roscoe EM, Rooker GW, Wheeler EE, Dube WV. Idiosyncratic variables that affect functional analysis outcomes: A review (2001–2010). Journal of Applied Behavior Analysis. 2013;46:339–348. doi: 10.1002/jaba.12.

- Shook GL, Johnston JM, Mellichamp FH. Determining essential content for applied behavior analyst practitioners. The Behavior Analyst. 2004;27:67–94. doi: 10.1007/BF03392093.

- Stichter JP, Conroy MA. Using structural analysis in natural settings: A responsive functional assessment strategy. Journal of Behavioral Education. 2005;14:19–34. doi: 10.1007/s10864-005-0959-y.

- Thomason-Sassi JL, Iwata BA, Neidert PL, Roscoe EM. Response latency as an index of response strength during functional analyses of problem behavior. Journal of Applied Behavior Analysis. 2011;44:51–67. doi: 10.1901/jaba.2011.44-51.

- Valdovinos MG, Kennedy CH. A behavior-analytic conceptualization of the side effects of psychotropic medication. The Behavior Analyst. 2004;27:231. doi: 10.1007/BF03393182.

- Wallace MD, Doney JK, Mintz-Resudek CM, Tarbox RF. Training educators to implement functional analyses. Journal of Applied Behavior Analysis. 2004;37:89–92. doi: 10.1901/jaba.2004.37-89.

- Wallace MD, Iwata BA. Effects of session duration on functional analysis outcomes. Journal of Applied Behavior Analysis. 1999;32:175–183. doi: 10.1901/jaba.1999.32-175.

- Weeden M, Mahoney A, Poling A. Self-injurious behavior and functional analysis: Where are the descriptions of participant protections? Research in Developmental Disabilities. 2010;31:299–303. doi: 10.1016/j.ridd.2009.09.016.

- Wiskirchen RR, Deochand N, Peterson SM. Functional analysis: A need for clinical decision support tools to weigh risks and benefits. Behavior Analysis: Research and Practice. 2017;17:325. doi: 10.1037/bar0000088.
