Abstract

Objective

The purpose of this review is to explore the literature on continuous assessment in the evaluation of clinical competence, to examine the variables influencing the assessment of clinical competence, and to consider the impact of high-stakes summative assessment practices on student experiences, learning, and achievement.

Methods

A literature search of CINAHL, PubMed, ERIC (EBSCO), Education Source, and Google Scholar was conducted using key terms. Articles reviewed were limited to full-text, peer-reviewed articles published in English from 2000 to 2019. Selected articles for this review include a meta-analysis, systematic reviews, and studies using qualitative and quantitative designs.

Results

Findings reveal that current assessment practices in the evaluation of clinical competence, such as one-time high-stakes assessments, are influenced by several variables: interexaminer differences in evaluation, variability arising from the use of non-standardized clients in assessment, the failure to fail, and the impact of stress on performance outcomes. This literature review also highlights a programmatic assessment approach in which student competence is determined by a multitude of low-stakes assessments over time.

Conclusion

This review highlights that current methods of clinical assessment rely on traditional, summative forms of evaluation, and that the reliability and validity of these assessments are influenced by several variables. Emotions and student experiences related to one-time high-stakes summative assessments may negatively affect student learning and achievement outcomes. The design, implementation, and use of assessment practices within a competency-based education framework warrant further consideration so that optimal assessment for learning practices may be emphasized to enhance student learning and achievement.

Keywords: assessment, clinical competence, clinical education, competency, competency-based education, continuous assessment, education, feedback, formative assessment, high-stakes, summative assessment

Abstract

Objective

This study explores the literature on continuous assessment in the evaluation of clinical competence, examines the variables that influence the assessment of clinical competence, and considers the effect of high-stakes summative assessment practices on student experiences, learning, and achievement.

Methods

A literature search of CINAHL, PubMed, ERIC (EBSCO), Education Source, and Google Scholar was conducted using key words. The articles reviewed were limited to full-text, peer-reviewed articles published in English from 2000 to 2019. The articles selected for this study include a meta-analysis, systematic reviews, and studies using qualitative and quantitative designs.

Results

The results revealed that current assessment practices, such as one-time high-stakes assessments of clinical competence, are influenced by several variables: differences in evaluation among examiners, variability arising from the non-standardized use of clients in assessment, the failure to fail, and the effect of stress on performance outcomes. This literature review also highlights a programmatic assessment approach in which student competence is determined by a multitude of low-stakes assessments over time.

Conclusion

This article highlights current methods of clinical assessment that rely on traditional, summative forms of evaluation, in which the reliability and validity of the assessment are influenced by multiple variables. Student emotions and experiences related to one-time high-stakes summative assessments may have negative effects on students’ learning and achievement outcomes. The design, implementation, and use of assessment practices within a competency-based education framework warrant further consideration so that optimal assessment for learning practices can be emphasized to improve student learning and achievement.

PRACTICAL IMPLICATIONS OF THIS RESEARCH

Emotions and student experiences related to traditional summative assessments may negatively affect student learning and achievement outcomes.

Review of current assessment methodologies and programmatic approaches to the assessment of clinical competence in dental hygiene education is warranted.

Low-stakes formative assessment practices that facilitate student engagement and meaningful learning opportunities for students should be adopted by all dental hygiene programs.

INTRODUCTION

Competency-based education has garnered much attention over the past few decades, and both the difficulty of assessing clinical competence and the lack of consensus on how to do so have been well established in the literature.1,2 Educational programs, underpinned by various philosophies and practices, have used a variety of assessment tools to evaluate clinical competence. However, the ability to discern which students have demonstrated the complex array of skills, knowledge, and attitudes necessary for entry-to-practice competence has been challenged by issues of validity and reliability.2-10

Assessment is an important element in curricular design and requires careful consideration, as optimal assessment for learning is essential to ensuring both public safety and the proficiency of health care professionals. It is incumbent on educators and postsecondary institutions to adopt assessment methodologies that provide a reliable measurement of the entry-to-practice competencies required for licensure and professional practice.2,5-7,11,12

Equally important is the educational experience, a vital aspect in student engagement, learning, and development.13-15 Student satisfaction is positively correlated with student engagement,13,15 and the inclusion of student feedback on assessment practices is essential to informing and refining assessment methods within an educational program.13 A phenomenon known as the “backwash effect” recognizes the impact of assessment, rather than the curriculum, on student learning, engagement, and performance outcomes.16-18 Assessment influences the learning process and determines what and how students learn across various disciplines of study.4,17-24 Accordingly, consideration of the approach to assessment is essential to encouraging student engagement and cultivating positive learning experiences and outcomes.4,15,17-19

Although the literature in higher education identifies the need for more formative assessment to support student learning, there remains a proclivity for summative forms of assessment.12,14,25,26 The curricular culture of constructivism emphasizes fostering a sense of agency in learners27 and recognizing the importance of the sociocultural component of learning, with development situated on a continuum.27,28 Drawing on constructivist learning theory, evaluation should reflect not only the learner’s current level of achievement but also their potential development.27,28 The static nature of standard high-stakes assessment produces a measurement of learning, as opposed to a dynamic and responsive formative process that supports assessment for learning.5,16,23,29 One-time high-stakes assessments are context dependent, evaluate what the learner has already achieved, and do not reflect the development and progression of the complex skills, knowledge, and attributes required for professional practice. Numerous studies have documented the impact of high-stakes summative assessment on student learning, well-being, achievement, and self-efficacy.18,30-35 Because current methods of clinical assessment rely on traditional, summative forms of evaluation,12,25 there are considerable challenges to ensuring that an assessment observed at a single moment in time is an accurate indication of student competence and a predictor of future professional behaviour.2-4,6,8,9,23

Numerous studies have examined a wide range of summative and formative approaches to the assessment of clinical competence including, but not limited to, multiple-choice questions, progress testing, objective structured clinical examinations (OSCEs), triple jump exams, critically appraised topic summaries (CATs), simulations, self-assessment, portfolios, and continuous assessment. Because no single assessment method adequately measures all facets of clinical competence,2-6,8,9,23 there is a need to develop valid and authentic assessment programs to evaluate the complex skills, knowledge, and attitudes required for the professional practice of dental hygiene.

The purpose of this review is to explore the literature on continuous assessment in the evaluation of competence, to examine the variables influencing current methods of clinical competence assessment, and to consider the impact of high-stakes summative assessment practices on student experiences, learning, and achievement.

METHODS

A comprehensive literature search of CINAHL, PubMed, ERIC (EBSCO), Education Source, and Google Scholar was conducted using the following terms and combinations of terms: competency, competency-based education, clinical competence, assessment, formative assessment, summative assessment, feedback, high-stakes, education, clinical education, continuous assessment. Article reference lists were examined to identify additional relevant articles for this review. The search was limited to articles that focussed on the assessment of competence across various disciplines, while exploring the challenges of clinical competence assessment and the impact of high-stakes summative assessment on student experiences, learning, and outcomes. Articles selected for inclusion were limited to full-text, peer-reviewed articles published in English from 2000 to 2019. Literature that did not meet the inclusion criteria was also consulted for conceptual information and to capture the educational theory underlying the assessment of clinical competence. Sixty-two articles using qualitative and quantitative designs were included in this review, as well as 3 systematic reviews, 1 meta-analysis, and content from 5 books.

RESULTS AND DISCUSSION

Assessment of clinical competence

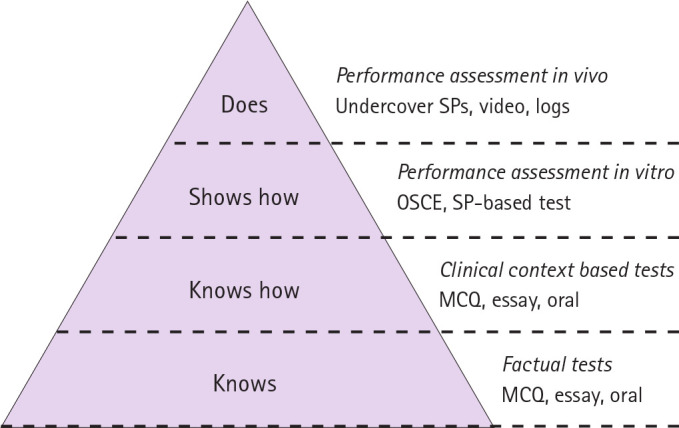

The goals of assessment are to be educational and formative in nature, to offer a reliable measurement of student capacity, and to predict future clinical performance.6 Miller’s pyramid is a conceptual framework proposed to guide the assessment of clinical competence, and it has shaped assessment practices in the education of health professionals for nearly 3 decades.3,4,6 Wass, Van der Vleuten, Shatzer, and Jones adapted Miller’s framework to illustrate the techniques used to appraise clinical competence (Figure 1).6 The pyramid illustrates the levels of clinical competence, with the base, “knows,” representing knowledge of basic facts. At the second level, “knows how,” students apply their knowledge to analyse and solve problems presented in case-based scenarios. These 2 levels are traditionally assessed using written, context-based clinical tests, such as multiple-choice questions, essays, and triple jump examinations.6,23,25 At the “shows how” level, students demonstrate the application of skills in authentic conditions that allow for supervised interactions between health care provider and client.3,25 Finally, at the summit of the pyramid, “does,” the student, with limited support from instructors, is expected to perform the core competencies and responsibilities of a health care provider under authentic conditions over several weeks or months, ultimately allowing instructors to ascertain whether the student has consistently demonstrated the foundational competencies necessary for professional practice.
Assessment methods employed at the “shows how” and “does” levels of the pyramid include OSCEs, high-fidelity simulations, direct observation, self-assessment, portfolios, longitudinal evaluations, and clinical competency examinations.4,6,23,25 Currently, there is no single assessment tool that adequately assesses the complexity of skills, knowledge, and attitudes necessary for clinical competence. Many of the assessment tools have reliability and validity issues,2-6,8-10 both of which are central to any assessment approach.2,6,16

Figure 1.

Wass et al.’s adaptation of Miller's Competence Pyramid

Reprinted from The Lancet, Vol 357, Wass V, Van der Vleuten C, Shatzer J, Jones R. Assessment of clinical competence, pp. 945–49, 2001, with permission from Elsevier.

Reliability refers to the reproducibility and consistency of an assessment result.2,3,6 Influenced by sources of error and bias, the reliability of assessment is both context and content dependent.4,36,37 Factors associated with sources of error and bias include non-standardized cases, student stress and anxiety, and faculty calibration issues.3,4,6,29,31,38-46

Inter-rater reliability and intercase reliability are important factors in the assessment of clinical competence. Achieving them challenges the traditional approach to assessment, in which a single case is used to assess a student’s competence.6,7,10,37 Consistency in rating student performance, achieved by using multiple examiners across a broad sampling of cases, is essential to the reliable assessment of competence.4-6,20,36,37,44

The validity of an assessment instrument refers to its ability to measure what it is intended to measure.6 Recent developments in assessment methodology have focused on validity with the intent of achieving an authentic measurement of clinical competence.4,6 This review of the literature has identified a need to develop valid, reliable, and authentic assessment programs that minimize the sources of error or bias while evaluating the complexity of skills, knowledge, and attitudes required for professional practice.2-6,8,10,23

Formative and summative assessment

There are numerous approaches to assessment, the predominant forms being formative and summative.17 Summative assessments are traditionally used at the end of a course or semester to measure student development and achievement numerically, while formative assessments are often used to provide students with feedback to guide the learning process.17 Formative assessments may be non-graded to help reduce student anxiety. A purely summative assessment does not guide student learning; rather, it reflects the student’s test-taking ability and study habits leading up to the assessment.47 Current literature argues that not every assessment requires a decision moment, as assessment may be continuous.5,47 For assessment to be meaningful and informative, and to influence the learning process, the functions of formative and summative assessment should be combined and integrated in a holistic programmatic approach to assessment.23,47,48 Furthermore, a reductionist approach, whereby each assessment results in a binary decision (pass/fail; to standard/not to standard) and competence is determined from the resulting series of binary results, should be avoided.4,23,47

Assessment is integral to evaluating the essential facets of clinical competence in dental hygiene education. The appropriate use of assessment practices to evaluate the cognitive, psychomotor, and affective attributes necessary for a judgement related to clinical competence is vital.2-5 Best practices in health professions education indicate that performance assessment must transcend the evaluation of knowledge, recall of specific facts, and demonstration of specific skills and allow for the assessment of a student’s ability to employ critical-thinking and problem-solving skills to synthesize and analyse contextual information for application in unique conditions.1,18,23 A report on student outcomes assessment in dental education revealed that traditional assessment methods used to evaluate student performance include multiple-choice tests, observation-type assessments, daily grades, and procedural requirements.12,25 With the intent to validly and reliably assess clinical competence, varied summative and formative approaches have been employed in dental and dental hygiene education including, but not limited to, multiple-choice questions, progress testing, OSCEs, triple jump exams, CATs, direct observation, self-assessment, and portfolios. Although the literature in health professions education supports the utility of newer assessment tools such as OSCEs, portfolios, triple jump exercises, and global evaluations, studies have demonstrated that they are underused in dental and dental hygiene education.12,25,49 However, these studies are becoming dated, and new research into current assessment practices in dental hygiene educational programs is required.

A recent study indicated that 75% of dental educators use OSCEs to assess students.50 Moreover, dental hygiene educators adopted portfolios as a means of demonstrating competence 2 decades ago.51 The validity and reliability of portfolios for the evaluation of competence in dental hygiene education have been asserted in the literature, with reliability increasing when 3 or more examiners are used.52,53 A study by Gadbury-Amyot, Bray, and Austin examining 15 years of portfolio assessment in a dental hygiene program demonstrated a positive correlation between portfolio performance and national board examination scores.51

The challenges to implementing these assessment techniques include a lack of familiarity with the assessment tools, the time required to develop the assessments and train instructors, and the dearth of literature on implementing various assessment techniques within the context of dental hygiene.12,49,54 Furthermore, there is limited evidence supporting clinical assessment methodologies in dental and dental hygiene education that determine a student’s ability to integrate and apply the identified domains of learning necessary for competent practice, continually over time, in authentic environments that allow for interaction between health care provider and client.25 Reviewing the implementation of each assessment modality is beyond the scope of this paper; however, performance-based examinations involving direct observation will be explored.

With direct observation, student performance is assessed in authentic clinical situations.55 A common assessment technique in dental education,25,55 direct observation requires multiple observations, as well as faculty training, to provide reliable data.4-6,20,36,37,44 Administering performance-based tests is difficult because it requires substantial planning, adequate physical space in which to conduct the assessment, and multiple assessors.56 Additional barriers are the lack of appropriate live clients available for assessment purposes over a prolonged period3,29,40,57 and, because live clients are used for procedural requirements, a lack of standardized test conditions.57,58 The former issue, the recruitment of appropriate live clients, has been the subject of much ethical debate.29,57,58 A study by Lantzy et al.58 revealed that 61% of dental hygiene students provided compensation to their clients. Issues with unethical student conduct and lapses in acceptable protocols and standards of dental hygiene care have also been cited.29,57,58 Specifically, common themes were performing unnecessary procedures, delaying treatment so that clients could be called back for clinical requirements or a high-stakes examination at a later date, and an unacceptable standard of care during radiograph acquisition.29,58 Further, using a single non-standardized client to draw conclusions about overall clinical competence is fraught with reliability issues and is a poor predictor of future performance.3,7,9,10,49,57

Continuous assessment

With postsecondary institutions accountable for the quality of their graduates and responsible for ensuring that students have met the competencies required in their chosen domain,59 summative and one-time high-stakes assessments have predominated in the evaluation of students in higher education.12,14,25,26 To enhance student learning, increase the validity and accuracy of assessment practices, and mitigate the challenges associated with various competence assessment tools, experts have advocated for a continuous assessment approach, in which the triangulation of data from multisource feedback, multiple methods, and a variety of assessments over time provides a multifaceted and accurate representation of student competence.4,10,23,44,60

For 4 decades, continuous assessment, a hybrid of formative and summative assessment, has replaced or supplemented summative assessment in international education programs.20 Assessment has been acknowledged as the impetus for learning, with assessment practices influencing students’ approach to learning and altering their study behaviours.19-21,23,24,57 Student effort and engagement with course content are often influenced by the assessment format; students will either approach learning at a surface level or connect with the content on a deeper level.18,19,21,22,24 Students will engage with learning tasks and course content if these contribute to their numerical grade.24,61 However, numerical grades alone are ineffective as a source of feedback.23 Traditional summative assessments have contributed to a superficial approach to learning involving rote memorization and recall, in which much of the content reviewed is forgotten shortly after the examination.18,20,22,24,29,61 Offering limited opportunity for feedback, summative assessments are considered high-stakes because students have only one opportunity to demonstrate clinical competence.18,20,62 The provision of formative feedback is the most salient mechanism for facilitating student learning.23,24,46,63 If learning is to be meaningful, it must engage the student in a deeper understanding of the content, leading to long-term retention.21,22,61,63

Many clinical assessments focus on psychomotor abilities and often neglect the multifaceted construct of competence and the affective attributes required for professional practice.23 With no single reliable assessment method encompassing Miller’s entire competency pyramid,2-6,8-10,23 a one-time high-stakes assessment evaluates only one dimension of the pyramid and does not capture a student’s developmental progress and competence over time.7,10,20,23,49 A shift away from individual assessment methods towards a continuous assessment framework encourages students to connect with the course content on a continual basis throughout the semester or year.19,21-23,64 Continuous assessment entails the provision of formative feedback and an increased frequency of, and emphasis on, low-stakes assessments over a period of time, which together contribute to an overall result.19,24,64,65 By providing an opportunity for learning through formative feedback, continuous assessment guides the learning process and increases student motivation and engagement, all of which have a positive impact on student learning.19-22,24,41,64 Because the reliability of assessment is contingent on a substantial sampling across the content of the subject matter,3,4,6,23,36,37,44 assessment tools that are subjective or lack standardization may still produce reliable results if multiple assessors, different contexts, and a large sampling across the content are used.4,23,36,37 The complexity involved in assessing competence necessitates adding qualitative information to quantitative assessments to make informed decisions about student competence.4,41,47 Taken together, a series of low-stakes continuous assessments allows a student’s progression from novice towards competence to be thoroughly documented.23,44,62,65

Although low-stakes assessments provide opportunities for learning, the assessment must be perceived as low-stakes by the learner.62 Learner agency and the ability to make choices within the assessment are vital to the perception of the individual assessment as either low-stakes or high-stakes.61,62,66 Learner agency also fosters an environment in which students are more receptive to feedback.62,66 The multiple opportunities to demonstrate competence provide the students with increased control over the assessment experience and are useful in lowering the stakes.62

An argument against combining summative and formative assessment is premised on the effect of the numerical grade on the formative purpose of the feedback provided. In other words, students may focus on the numerical grade, hindering the “feed-forward” concept in which students respond to and integrate the feedback provided.64 In contrast, other literature indicates that assessment programs in higher education should include complementary summative and formative methods of evaluation to provide a valid assessment of student competence and readiness for independent practice.4,19,41,47,67 Each assessment tool has inherent strengths and weaknesses; accordingly, an assessment program should employ a multimethod approach, in which results from various assessment tools provide meaningful feedback and conclusions related to student competence.2,4,8,10,23,47,60

Although the use of continuous low-stakes assessments may reduce the student stress and anxiety often associated with high-stakes assessment,20,48 there is a risk that students may perceive continuous assessment as constant evaluation, potentially leading to assessment overload and increased stress and anxiety.20,22,64 Consequently, a balance must be struck between the number and complexity of learning activities.20 In addition, the time and cost associated with designing and implementing a continuous assessment format,20,22 as well as the need to balance summative and formative assessment so that the formative function is not impeded,4,16,22,24,64 have been identified as challenges to incorporating continuous assessment.

Drawing from qualitative assessment methodologies, Van der Vleuten et al.4 advocate for a programmatic assessment approach in which procedural strategies are used to reduce bias while increasing trustworthiness and the ability to make robust decisions about competence. The procedural strategies include the following: trained assessors, multiple assessors, multiple sources of assessment over an extended period of time, feedback cycles, assessment tools that promote the inclusion of qualitative information, and a broad sampling of content across different contexts, clients, and assessors.4 The use of these procedural strategies will minimize the need to substantiate each individual item within the assessment program, a practice that results in a reductionist approach to assessment.4,47 Dijkstra et al.68 have published guidelines to support the design of a programmatic approach to assessment; these guidelines have been endorsed by ASPIRE, a program supported by the Association for Medical Education in Europe. Dijkstra et al. stress the need for further research, replication of their study, and application of the published guidelines in a range of contexts.68 Although Van der Vleuten et al.4 have advocated for a programmatic approach to the assessment of competence, its implementation in health professions education has been limited to date.23 Portfolios have been used to assist in the evaluation of clinical competence at the University of Missouri-Kansas City School of Dentistry, where a cohort of dental students completed 4 years of portfolio assessment as a programmatic global assessment measure. Faculty and student experiences from the implementation of this portfolio global assessment strategy are providing the first outcome measures and lessons learned.54

Factors influencing competence assessment

The assessment of clinical competence and one-time high-stakes assessments have often been influenced by several variables, including interexaminer differences in evaluation, variability in non-standardized client use in assessment, the failure to fail, and the impact of student stress associated with clinical exams.4,6,29,31,38-55

A source of inconsistency in clinical instruction and assessment is the varied educational and professional experience of examiners,38,42,43,46,69 many of whom lack sufficient clinical experience, teaching experience, and formal training in educational methodologies.38,39,42,46,55,69,70 One study noted interexaminer differences in the evaluation of competence, as evidenced by grading practices; some instructors were lenient, while others were more rigorous.40 Additional research asserts that students are able to identify instructors who are more lenient in grading clinical assessments and seek out instructors who grade favourably. Variation in assessment is often related to an instructor’s status and experience, with part-time and less experienced instructors demonstrating greater variation in their grading.38,39,46,70,71 Students also adjust their clinical performance to satisfy the expectations of individual instructors.18,29,38,61 Furthermore, a recent study by Waldron, Walker, Kanji, and von Bergmann highlights a lack of congruence between instructors’ pedagogical beliefs and their actual teaching practices as a factor influencing clinical instruction and assessment.69 Ultimately, the literature supports and emphasizes the need for increased calibration and professional development among clinical educators to minimize variation in assessment and clinical instruction and to enhance student learning.31,38-40,42,43,55,69,70 Research by Paulis identifies a need for clinical instructors to have 6 to 10 years of clinical dental hygiene experience prior to teaching, as well as knowledge of and instruction in teaching methodology, to support consistency and calibration in dental hygiene education.39

Although faculty attitudes towards calibration are positive, with educators viewing calibration as an important element in assessment and in improving students’ educational experiences,38,39 the literature demonstrates that clinical educators often do not attend calibration sessions. The primary reasons for poor attendance are part-time employees’ other employment commitments and full-time faculty members’ existing responsibilities and workload.38 Given that one-third of clinical educators are not compensated for attending calibration sessions, remuneration may improve participation.38

Assessment, which requires the evaluator to make a judgement related to a student’s competency, entails a degree of subjectivity and bias that may undermine the assessment process.4,5 The prevailing discourse in the literature indicates that the multidimensional construct of competence necessitates the use of multiple kinds of evidence, across different contexts, provided by multiple evaluators to formulate a valid judgement.2,4,6,10,16,20,23,44,47,60,67,72 Research further advocates for the assessment of students by multiple assessors to reduce the potential for observer bias,4,6,23,37,40,44,47,67,72 and it has been argued that an appropriate sampling of observations from different assessors over time will adjust for any variation between assessors23 and increase reliability of the assessment.23,37,44 Instructor subjectivity may be further minimized with the use of scoring rubrics, training, calibration, and performance standards.38,39,42,43,55,69

Inconsistency in grading among instructors is often influenced by “failure to fail,” a significant issue in clinical assessment.11,23,37,71,73-77 Recurring themes in this complex issue include a lack of confidence and uncertainty among instructors in making decisions about performance, difficulty using and understanding the assessment or grading system, inadequate training or knowledge of the standards expected of students, lack of institutional support, lack of instructor continuity and retention, and the emotional difficulty and negative consequences of failing a student.11,71,74-77 Instructors often lack the courage and confidence to fail students,11,23,37,73,74 which could have serious implications for student learning and public protection. The instructor–student relationship has also been viewed as a potential source of bias. For example, a student’s personality and behaviour may influence an instructor’s grading, or an instructor may grade favourably in order to be liked by students.2,71,74,76 Instructors have also expressed self-doubt and a sense of failure as mentors and instructors when students are unsuccessful on evaluations.74,75,77 Because of the unfavourable consequences often associated with a negative decision, instructors err on the side of leniency and avoid the lower end of the rating scale, thereby lowering performance standards.4,11,23,74,76 Additionally, grade inflation in clinical assessments resulting from “failure to fail”74,76 has been cited in the literature and may lead students to overestimate their actual clinical skill level, ultimately affecting their interest in and ability to self-reflect and learn.74

Awareness and understanding of the effect of student emotions on performance assessment are vital to ensuring an accurate assessment of student abilities.45 High-stakes testing and its associated social, emotional, and financial effects have received significant attention in recent years.30,40,45 Findings demonstrate increased levels of anxiety and stress with high-stakes examinations.30,31,35,45 The literature is inconclusive as to whether stress leads to better learning or hinders learning and results in poor performance.18,30-35 While 2 studies found either no association or a weak association between stress and performance outcomes,30,32 contrasting evidence has shown that elevated stress levels hinder engagement with course content, increase the risk of depression, and influence academic achievement, performance outcomes, and learning.18,20,33-35 Significant sources of stress include examinations, grading, finances, lack of calibration among clinical faculty, clinical “requirement chasing,” and the fear of failing to complete clinical requirements or being held back.29,33,34,43,78,79 The lack of appropriate clients and standardized conditions for assessment purposes has been cited as a barrier to the assessment of competence and as having a negative impact on student stress levels.7,29,33,40,79 Both students and educators have expressed concerns about an assessment framework based on requirements,7,29,40,57,58 noting the lack of appropriate clients and standardized conditions, costs to students, and lapses in ethical and moral decision making.7,29,57,58 A requirement-based assessment framework is thought to create an educational environment in which students no longer practise beneficence: ethical and moral decision making is compromised as students place their own educational needs above the health needs of their clients.7,29,57 Further evidence suggests that students in non-requirement-based systems perform as well as or better on indices of clinical competence, and have lower stress levels, than their counterparts in requirement-based systems.25,80 Additionally, because the use of non-standardized clients in the assessment process may introduce bias such that students of equal ability do not have the same probability of a successful outcome, assessments must be carefully designed to account for client variability.7,10,40

CONCLUSION

A shift away from high-stakes summative assessments towards low-stakes continuous assessment warrants further consideration as an assessment approach in dental hygiene competency-based education. Examining an evaluation framework in which the clinical skills, theoretical knowledge, and professional attributes necessary for professional practice are assessed with increased reliability and validity while minimizing student stress and anxiety is of significant importance.

This literature review included a considerable amount of non-dental hygiene literature from several countries and disciplines. As such, findings may not be representative of students and educators in North America and within the context of dental hygiene. Research on competency-based assessment approaches within dental hygiene education is required to help inform developments in dental hygiene assessment methodologies. This research should explore, in particular, programmatic approaches to competence assessment, and investigate the effects of high-stakes assessment on student performance, learning, and engagement. An exploration of student and faculty perceptions of current assessment tools and practices would provide valuable insight and inform future assessment approaches. Additional qualitative and quantitative research may be directed towards longitudinal studies that investigate low-stakes continuous assessment with multimethod and multisource feedback for the comprehensive assessment of entry-to-practice competence.

Findings from this literature review not only reveal that one-time high-stakes summative assessments may negatively affect student learning and achievement outcomes, but they also highlight the importance of using a purposeful continuous low-stakes assessment framework with multiple assessments and methods over time to evaluate clinical competence.7,10,16,18-20,47,49 In addition, these findings have implications for the development of assessment methodologies that reflect evidence-based pedagogical practices and the enhancement of dental hygiene curricular design.

CONFLICT OF INTEREST

The authors have declared no conflicts of interest.

Footnotes

CDHA Research Agenda category: capacity building of the profession

REFERENCES

- 1. Epstein R, Hundert E. Defining and assessing professional competence. JAMA 2002;287:226–35

- 2. Norman IJ, Watson R, Murrells T, Calman L, Redfern S. The validity and reliability of methods to assess the competence to practise of pre-registration nursing and midwifery students. Int J Nurs Stud 2002;39:133–45

- 3. Miller GE. The assessment of clinical skills/competence/performance. Acad Med 1990;65(9):S63–

- 4. Van der Vleuten CPM, Schuwirth LWT, Scheele V, Driessen EW, Hodges B, Currie E. The assessment of professional competence: Building blocks for theory development. Best Pract Res Clin Obstet Gynaecol 2010;24:703–19

- 5. Schuwirth LWT, Van der Vleuten CPM. Programmatic assessment: From assessment of learning to assessment for learning. Med Teach 2011;33:478–85

- 6. Wass V, Van der Vleuten C, Shatzer J, Jones R. Assessment of clinical competence. Lancet 2001;357:945–49

- 7. Fleckner LM, Rowe DJ. Assuring dental hygiene clinical competence for licensure: A national survey of dental hygiene program directors. J Dent Hyg 2015;89(1):26–33

- 8. Wimmers PF, Guerrero LR, Baillie S. Instruction and assessment of competencies: Two sides of the same coin. In: Wimmers PF, Mentkowski M, editors. Assessing competence in professional performance across disciplines and professions [Internet]. Switzerland: Springer International Publishing; 2016 [cited 2019 April 7]. Available from: https://link-springer-com.ezproxy.library.ubc.ca/content/pdf/10.1007%2F978-3-319-30064-1_6.pdf

- 9. Ranney RR, Wood M, Gunsolley JC. Works in progress: A comparison of dental school experiences between passing and failing NERB candidates, 2001. J Dent Educ 2003;67(3):311–316

- 10. Gadbury-Amyot CC, Bray K, Branson BS, Holt L, Keselyak N, Mitchell T, et al. Predictive validity of dental hygiene competency assessment measures on one-shot clinical licensure examinations. J Dent Educ 2005;69(3):363–370

- 11. Berendonk C, Stalmeijer R, Schuwirth L. Expertise in performance assessment: Assessors’ perspectives. Adv Health Sci Educ 2013;18(4):559–571

- 12. Navickis MA, Krust Bray K, Overman PR, Emmons M, Hessel RF, Cowman SE. Examining clinical assessment practices in US dental hygiene programs. J Dent Educ 2010;74(3):297–310

- 13. Coates H. The value of student engagement for higher education quality assurance. Qual High Educ 2005;11:25–36

- 14. Divaris K, Barlow PJ, Chendea SA, Cheong WS, Dounis A, Dragan IF, et al. The academic environment: The students’ perspective. Eur J Dent Educ 2008;12(Suppl 1):120–130

- 15. Kuh GD. What student affairs professionals need to know about student engagement. J Coll Stud Devel 2009;50(6):683–706

- 16. Cross R, O’Loughlin K. Continuous assessment frameworks within university English pathway programs: Realizing formative assessment within high-stakes contexts. Stud High Educ 2013;38(4):584–594

- 17. Biggs JB, Tang C. Teaching for quality learning at university: What the student does. Maidenhead: McGraw-Hill Education; 2011.

- 18. Tiwari A, Lam D, Yuen KH, Chan R, Fung T, Chan S. Student learning in clinical nursing education: Perceptions of the relationship between assessment and learning. Nurse Educ Today 2005;25:299–308

- 19. Holmes N. Engaging with assessment: Increasing student engagement through continuous assessment. Act Learn High Educ 2018;19(1):23–34

- 20. Bjælde O, Jørgensen T, Lindberg A. Continuous assessment in higher education in Denmark: Early experiences from two science courses. Dansk Universitetspædagogisk Tidsskrift 2017;12(23):1–19. Available from: https://tidsskrift.dk/dut/article/view/25634

- 21. Tuunila R, Pulkkinen M. Effect of continuous assessment on learning outcomes on two chemical engineering courses: Case study. Eur J Eng Educ 2015;40(6):671–682

- 22. Trotter E. Student perceptions of continuous summative assessment. Assess Eval High Educ 2006;31(5):505–521

- 23. Van der Vleuten C, Sluijsmans D, Joosten-ten Brinke D. Competence assessment as learner support. In: Mulder M, editor. Competence-based vocational and professional education: Bridging the worlds of work and education [Internet]. Switzerland: Springer International Publishing; 2017 [cited 2019 April 7]. Available from: https://link-springer-com.ezproxy.library.ubc.ca/content/pdf/bfm%3A978-3-319-41713-4%2F1.pdf

- 24. Carless D. Excellence in university assessment: Learning from award-winning teaching. London: Routledge; 2015.

- 25. Albino JEN, Young SK, Neumann LM, Kramer GA, Andrieu SC, et al. Assessing dental students’ competence: Best practice recommendations in the performance assessment literature and investigation of current practices in predoctoral dental education. J Dent Educ 2008;72(12):1405–1435

- 26. MacLellan E. Assessment for learning: The differing perceptions of tutors and students. Assess Eval High Educ 2001;26(4):307–318

- 27. Windschitl MA. Constructing understanding. In: Bolotin Joseph P, editor. Cultures of curriculum. 2nd ed. New York, NY: Routledge; 2011. pp. 81–01.

- 28. Powell KC, Kalina CJ. Cognitive and social constructivism: Developing tools for an effective classroom. Education 2009;130(2):241–250

- 29. Henzi D, Davis E, Jasinevicius R, Hendricson W. In the students’ own words: What are the strengths and weaknesses of the dental school curriculum? J Dent Educ 2007;71(5):632–645

- 30. Brand HS, Schoonheim-Klein M. Is the OSCE more stressful? Examination anxiety and its consequences in different assessment methods in dental education. Eur J Dent Educ 2009;13:147–53

- 31. Zartman RR, McWhorter AG, Seale NS, Boone WJ. Using OSCE-based evaluation: Curricular impact over time. J Dent Educ 2002;66(12):1323–1330

- 32. Reteguiz JA. Relationship between anxiety and standardized patient test performance in the medicine clerkship. J Gen Intern Med 2006;21:415–18

- 33. Alzahem AM, Van der Molen HT, Alaujan AH, Schmidt HG, Zamakhshary MH. Stress amongst dental students: A systematic review. Eur J Dent Educ 2011;15:8–18

- 34. Elani HW, Allison PJ, Kumar RA, Mancini L, Lambrou A, Bedos C. A systematic review of stress in dental students. J Dent Educ 2014;78(2):226–242

- 35. McClenny T. Student experiences of high-stakes testing for progression in one undergraduate nursing program. Int J Nurs Educ Scholarsh 2018;15(1):1–15

- 36. Schoonheim-Klein M, Muijtens A, Habets L, Manogue M, Van der Vleuten C, Hoogstraten J, Van der Velden U. On the reliability of a dental OSCE, using SEM: Effect of different days. Eur J Dent Educ 2008;12:131–37

- 37. Williams RG, Klamen DA, McGaghie WC. Cognitive, social and environmental sources of bias in clinical performance ratings. Teach Learn Med 2003;14(4):270–292

- 38. Dicke NL, Hodges KO, Rogo EJ, Hewett BJ. A survey of clinical faculty calibration in dental hygiene programs. J Dent Hyg 2015;89(4):264–273

- 39. Paulis MR. Comparison of dental hygiene clinical instructor and student opinions of professional preparation for clinical instruction. J Dent Hyg 2011;85(4):297–305

- 40. Rolland S, Hobson R, Hanwell S. Clinical competency exercises: Some student perceptions. Eur J Dent Educ 2007;11:184–91

- 41. Tennant M, Scriva J. Clinical assessment in dental education: A new method. Aust Dent J 2000;45(2):125–130

- 42. Stanley J, Hanson CL, Van Ness CJ, Holt L. Assessing evidence-based practice knowledge, attitudes, access and confidence among dental hygiene educators. J Dent Hyg 2015;89(5):321–329

- 43. Al-Omari WM. Perceived sources of stress within a dental educational environment. J Contemp Dent Pract 2005;6(4):1–12

- 44. Palermo C, Chung A, Beck EJ, Ash S, Capra S, Truby H, Jolly B. Evaluation of assessment in the context of work-based learning: Qualitative perspectives of new graduates. Nutr Diet 2015;72:143–49

- 45. von der Embse N, Jester D, Roy D, Post J. Test anxiety effects, predictors, and correlates: A 30-year meta-analytic review. J Affect Disord 2018;227:483–93

- 46. Park SE, Kristiansen J, Karimbux NY. The influence of examiner type on dental students’ OSCE scores. J Dent Educ 2015;79(1):89–94

- 47. Schuwirth L, Ash J. Assessing tomorrow’s learners: In competency-based education only a radically holistic method of assessment will work. Six things we could forget. Med Teach 2013;35:555–59

- 48. Shields S. “My work is bleeding”: Exploring students’ emotional responses to first-year assignment feedback. Teach High Educ 2015;20(6):614–624

- 49. Patrick T. Assessing dental hygiene clinical competence for initial licensure: A Delphi study of dental hygiene program directors. J Dent Hyg 2001;75(3):207–213

- 50. Patel US, Tonni I, Gadbury-Amyot C, Van der Vleuten CPM, Escudier M. Assessment in a global context: An international perspective on dental education. Eur J Dent Educ 2018;22(Suppl 1):21–27

- 51. Gadbury-Amyot CC, Bray KK, Austin KJ. Fifteen years of portfolio assessment of dental hygiene student competency: Lessons learned. J Dent Hyg 2014;88(5):267–274

- 52. Gadbury-Amyot CC, McCracken MS, Woldt JL, Brennan RL. Validity and reliability of portfolio assessment of student competence in two dental school populations: A four-year study. J Dent Educ 2014;78(5):657–667

- 53. Gadbury-Amyot CC, Kim J, Palm RL, Mills GE, Noble E, Overman PR. Validity and reliability of portfolio assessment of competency in a baccalaureate dental hygiene program. J Dent Educ 2003;67(9):991–1002

- 54. Gadbury-Amyot CC, Overman PR. Implementation of portfolios as a programmatic global assessment measure in dental education. J Dent Educ 2018;82(6):557–564

- 55. Manogue M, Brown G, Foster H. Clinical assessment of dental students: Values and practices of teachers in restorative dentistry. Med Educ 2001;35:364–70

- 56. McCann AL, Campbell PR, Schneiderman ED. A performance examination for assessing dental hygiene competencies. J Dent Hyg 2001;75(4):291–304

- 57. Formicola AJ, Shub JL, Murphy FJ. Banning live patients as test subjects on licensing examinations. J Dent Educ 2002;66(5):605–609

- 58. Lantzy MJ, Muzzin KB, DeWald JP, Solomon ES, Campbell PR, Mallonee L. The ethics of live patient use in dental hygiene clinical licensure examinations: A national survey. J Dent Educ 2012;76(6):667–681

- 59. MacLellan E. How convincing is alternative assessment for use in higher education? Assess Eval High Educ 2004;29(3):311–321

- 60. Näpänkangas R, Karaharju-Suvanto T, Pyörälä E, Harila V, Ollila P, Lähdesmäki R, Lahti S. Can the results of the OSCE predict the results of clinical assessment in dental education? Eur J Dent Educ 2016;20:3–8

- 61. Cilliers FJ, Schuwirth LWT, Adendorff HJ, Herman N, Van der Vleuten CPM. The mechanisms of impact of summative assessment on medical students’ learning. Adv Health Sci Educ 2010;15(5):695–715

- 62. Schut S, Driessen E, van Tartwijk J, Van der Vleuten C, Heeneman S. Stakes in the eye of the beholder: An international study of learners’ perceptions within programmatic assessment. Med Educ 2018;52:654–63

- 63. Riaz F, Yasmin S, Yasmin R. Introducing regular formative assessment to enhance learning among dental students at Islamic International Dental College. J Pak Med Assoc 2015;65(12):1277–1282

- 64. Hernández R. Does continuous assessment in higher education support student learning? High Educ 2012;64:489–502

- 65. Taleghani M, Solomon ES, Wathen W. Grading dental students in a “nongraded” clinical assessment program. J Dent Educ 2006;70(5):500–510

- 66. Harrison CJ, Könings KD, Dannefer EF, Schuwirth LWT, Wass V, Van der Vleuten CPM. Factors influencing students’ receptivity to formative feedback emerging from different assessment cultures. Perspect Med Educ 2016;5:276–84

- 67. Prescott LE, Norcini JJ, McKinlay P, Rennie JS. Facing the challenges of competency-based assessment of postgraduate dental training: Longitudinal evaluation of performance (LEP). Med Educ 2002;36:92–97

- 68. Dijkstra J, Galbraith R, Hodges BD, McAvoy PA, McCrorie P, Southgate LJ, et al. Expert validation of fit-for-purpose guidelines for designing programmes of assessment. BMC Med Educ 2012;12(20):1–8 [cited 2019 April 7].

- 69. Waldron SK, Walker J, Kanji Z, von Bergmann H. Dental hygiene clinical instructors’ pedagogical beliefs and described practices about student-centered education. J Dent Educ 2019;89(3):1019–1029

- 70. Deeb JG, Koertge TE, Laskin DM, Carrico CK. Are there differences in technical assessment grades between adjunct and full-time dental faculty? A pilot study. J Dent Educ 2019;83(4):451–456

- 71. Bush HM, Schreiber RS, Oliver SJ. Failing to fail: Clinicians’ experience of assessing underperforming dental students. Eur J Dent Educ 2013;17:198–207

- 72. Cormack CL, Jensen E, Durham CO, Smith G, Dumas B. The 360-degree evaluation model: A method for assessing competency in graduate nursing students. A pilot research study. Nurse Educ Today 2018;64:132–37

- 73. Dudek NL, Marks MB, Regehr G. Failure to fail: The perspectives of clinical supervisors. Acad Med 2005;80(10):S84–

- 74. Hughes LJ, Mitchell M, Johnston ANB. “Failure to fail” in nursing—A catch phrase or a real issue? A systematic integrative literature review. Nurse Educ Pract 2016;20:54–63

- 75. Cassidy S, Coffey M, Murphy F. “Seeking authorization”: A grounded theory exploration of mentors’ experiences of assessing nursing students on the borderline of achievement of competence in clinical practice. J Adv Nurs 2017;73(9):2167–2178

- 76. Docherty A, Dieckmann N. Is there evidence of failing to fail in our schools of nursing? Nurs Educ Perspect 2015;36(4):226–231

- 77. Luhanga F, Yonge OJ, Myrick F. “Failure to assign failing grades”: Issues with grading the unsafe student. Int J Nurs Educ Scholarsh 2008;5(1):1–14

- 78. Harris M, Wilson JC, Hughes S, Knevel RJM, Radford DR. Perceived stress and well-being in UK and Australian dental hygiene and dental therapy students. Eur J Dent Educ 2018;22:e602–e611

- 79. Hayes A, Hoover JN, Karunanayake CP, Uswak GS. Perceived causes of stress among a group of western Canadian dental students. BMC Res Notes 2017;10:714

- 80. Licari FW, Knight W. Developing a group practice comprehensive care education curriculum. J Dent Educ 2003;67(12):1312–1315