Abstract

Objective

The study sought to understand the potential roles of a future artificial intelligence (AI) documentation assistant in primary care consultations and to identify implications for doctors, patients, the healthcare system, and technology design from the perspective of general practitioners.

Materials and Methods

Co-design workshops with general practitioners were conducted. The workshops focused on (1) understanding the current consultation context and identifying existing problems, (2) ideating future solutions to these problems, and (3) discussing future roles for AI in primary care. The workshop activities included affinity diagramming, brainwriting, and video prototyping methods. The workshops were audio-recorded and transcribed verbatim. Inductive thematic analysis of the transcripts of conversations was performed.

Results

Two researchers facilitated 3 co-design workshops with 16 general practitioners. Three main themes emerged: professional autonomy, human-AI collaboration, and new models of care. Major implications identified within these themes included (1) concerns with medico-legal aspects arising from constant recording and accessibility of full consultation records, (2) future consultations taking place out of the exam rooms in a distributed system involving empowered patients, (3) human conversation and empathy remaining the core tasks of doctors in any future AI-enabled consultations, and (4) questioning the current focus of AI initiatives on improved efficiency as opposed to patient care.

Conclusions

AI documentation assistants will likely be integral to future primary care consultations. However, these technologies will still need to be supervised by a human until strong evidence for reliable autonomous performance is available. Therefore, different human-AI collaboration models will need to be designed and evaluated to ensure patient safety, quality of care, doctor safety, and doctor autonomy.

Keywords: primary health care, general practitioners, medical informatics, artificial intelligence, qualitative study

INTRODUCTION

There has been a rapid advancement in artificial intelligence (AI) over the last decade.1,2 AI technologies, in particular the ones employing machine learning methods, are being increasingly integrated into many healthcare domains to support consumers, their carers, and health professionals.3–7 The main motivations behind employing AI technologies include supporting better decision making, improving care quality, and moving toward precision medicine.8 Although AI technologies have delivered promising outcomes in some cases,9–11 there are also some serious concerns about the accuracy, bias, and patient safety of AI technologies and their unintended consequences such as deskilling.12–15 AI technologies replacing doctors16 is an unlikely scenario, at least in the near future.6 A more plausible alternative is the increasing advent of doctor-AI collaborations in which humans would stay in the decision-making role and some tasks would be delegated to AI.6 So far, the performance of AI varies depending on the tasks to be delegated. It can be better than human performance (eg, drug-drug interactions), equal to humans (eg, certain radiology cases), or still require clinician supervision (eg, electrocardiography reading).17

Primary care has become one key application field for AI development efforts.18–20 In addition to using AI to support decision making, predictive modelling, and business analytics,20 there is increasing interest in employing AI to assist with the documentation task in primary care.21 This is driven by the need to reduce documentation burden,22 which can cause clinician burnout, increase cognitive load, and lead to information loss.23–25 AI documentation assistants (or digital scribes) are a new class of technology with the potential to move from simple record dictation to intelligent summarisation of clinical conversations enabled by the advancements in AI, machine learning, and natural language processing.26 Primary care, with its office-based setting typically involving 2-person interactions, provides more suitable conditions for the initial development of AI documentation assistants compared with other healthcare settings, such as hospitals with their noisy environments involving many people.

Despite the increasing interest in AI documentation assistants, there remain several major obstacles to developing these solutions such as the nonlinear structure of doctor-patient conversations,27 varying audio quality, complexities in medical concept extraction, and lack of clinical data.26 In addition to these technical challenges, a major barrier is the integration of these technologies in the sociotechnical system of the consultation, and associated implications for clinical practice.26,28 Although there has been some prior research on general practitioners’ (GPs’) views on the potential role of AI in primary care,19,20,29 no work has specifically focused on documentation assistants.26 This study addresses this gap by (1) providing an in-depth qualitative understanding of GPs’ views on a future AI documentation assistant;19 (2) situating the documentation task in a larger sociotechnical context of consultations;26,28 and (3) employing a co-design approach, aligned with the calls for a bottom-up approach to designing future healthcare technologies.18,20

MATERIALS AND METHODS

Study setting and participants

Three workshops were conducted: 2 at Macquarie University (Sydney, Australia) and 1 at Cirqit Health (Melbourne, Australia). Each workshop lasted for about 2 hours. Eligibility criteria for participants required them to be a primary care doctor and use electronic health records regularly for documentation purposes. Participants were recruited through various channels including website information, social media, and primary healthcare networks’ email newsletters. A total of 16 GPs (6 women and 10 men) participated in the workshops. They were all actively practicing GPs with more than 3 years of experience. Two researchers facilitated the workshops. One is a human-computer interaction researcher with prior experience in conducting co-design workshops and the other is a health informatics researcher with a medical doctor degree and expertise in general practice.

Data collection

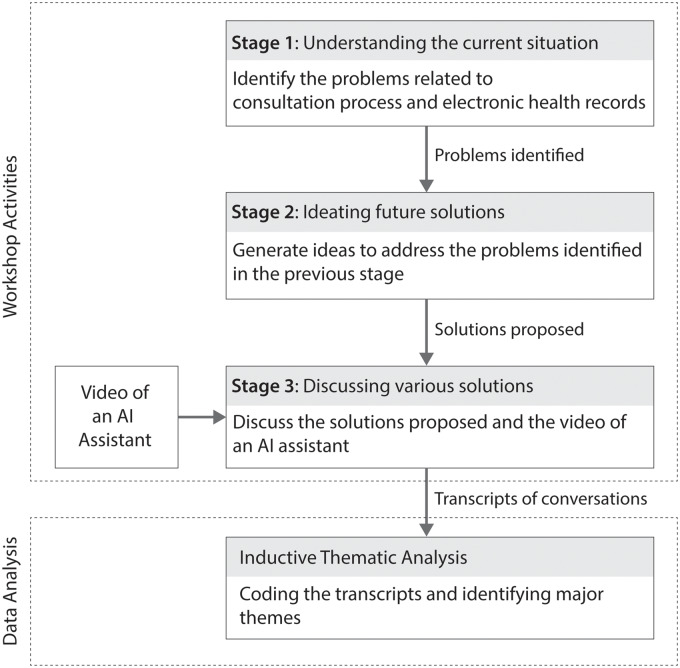

A co-design workshop method was undertaken, with activities structured according to the “future workshops” format,30 which is a commonly used workshop format to increase people’s involvement in shaping the design of future solutions and foster collective thinking.31 The main aim of the workshops was to understand the GPs’ views on future AI documentation assistants, but the workshops began by first placing AI and the documentation task within the larger consultation context. To this end, the workshops included 3 main stages focusing on (1) understanding the current consultation context and identifying existing problems, (2) ideating future solutions to these problems, and (3) discussing various solutions (Figure 1).

Figure 1.

Workshop activities and data analysis. AI: artificial intelligence.

Stage 1 (understanding the current situation) involved affinity diagramming, a generative design thinking activity,31 to gather workshop participants’ needs, problems, concerns, and frustrations in relation to the current situation. Affinity diagramming starts with participants writing down the problems and concerns on Post-it Notes, with each note containing a single problem or concern. Participants are asked to write as many notes as possible in 10 minutes. This is followed by a rapid thematic mapping activity performed by the workshop participants, to refine and categorize the items generated.

Stage 2 (ideating future solutions) involved brainwriting, an enhanced version of brainstorming that addresses common shortcomings of the latter such as some participants dominating or introverted participants being quiet.32 Each participant is given a sheet with a problem statement and asked to write down some solutions. Participants try to come up with 3 different solutions to each problem statement.

Stage 3 (discussing various solutions) involved evaluating the solutions proposed in stage 2, as well as an open discussion prompted by a video of a prototype AI assistant. A 2-minute video (https://www.microsoft.com/en-us/research/project/empowermd/) demonstrated clinical software listening to a doctor-patient conversation and automatically generating summary notes of the consultation with the aim of reducing doctors’ documentation burden.21 The video showed a user interface in which summaries were generated in real time in parallel to the doctor-patient conversation, which was heard as a voice-over. The full features of the AI assistant as depicted in the video are available in Table 1. Our aim with the prototype video was not to evaluate a particular technology, but rather to facilitate discussions about AI assistants, and to a lesser extent, the future roles of AI in primary care. After the brief presentations of the participants’ solutions to the problems identified at stage 2 (Supplementary Appendix 1), the facilitators played the prototype video and used some guiding questions (Supplementary Appendix 2) to keep the focus of the discussions on the implications of an AI assistant and the future role of AI in primary care.

Table 1.

The features of the AI assistant demonstrated in the video

| AI Assistant Features | Description |
|---|---|
| Display full transcription | The system transcribes doctor-patient conversations and displays the full transcription on screen in real time. |
| Highlight the important information | The system highlights the important information in the text by using bold or italic fonts. |
| Generate summary suggestions | The system presents summary note suggestions based on the important information detected in the transcription. The note suggestions are displayed in real time according to the standard Subjective Objective Assessment and Plan format.33 |
| Accept/reject/edit the summary suggestions | The system allows doctors to accept, reject, or edit the system’s suggestions. |
| Manual entry of summary notes | The system allows doctors to enter their own summary notes. |
| Selective presentation of transcription | The system allows doctors to select a note suggestion to view the surrounding conversations in the full transcript. |
| Personalize | The system learns from the choices made by the doctor in order to improve its suggestions. |
| Sign off | The system allows doctors to approve and sign off the final summary notes. |

AI: artificial intelligence.

Workshop activities were audio-recorded using a portable Zoom Audio Recorder and transcribed verbatim. The Macquarie University Ethics Committee approved the study (Ref. MQCRG2018008). Researchers obtained informed consent from all the participants. Participants were reimbursed at the standard hourly rate for GPs.

Data analysis

Inductive thematic analysis of the conversation transcripts was performed.34 Two researchers independently performed the thematic analysis. They coded the transcripts with an open coding approach using NVivo 12 (QSR International, Melbourne, Australia). After coding the entire transcript of the first workshop, the researchers compared and discussed their coding and aligned their understanding of the data. Then, they completed the coding of the remaining 2 workshop transcripts. Differing assessments were resolved by consensus. At the end, the themes and subthemes were determined, and the theme descriptions were written independently by the 2 researchers. After discussing and finalizing the descriptions and the representative quotes, one researcher wrote the final narrative of the results. Study results were shared with the workshop participants, and their feedback was incorporated into the analysis. In this article, we report our thematic analysis of the transcribed workshops, focusing on stage 3, in which the participants discussed some of their solutions proposed at stage 2, the video prototype of a possible AI assistant, and the future role of AI in primary care. This study adheres to the COREQ (Consolidated Criteria for Reporting Qualitative Research) checklist (Supplementary Appendix 3).35

RESULTS

Three major themes were identified in our thematic analysis: professional autonomy, human-AI collaboration, and new models of care. Table 2 presents a brief summary of the themes and subthemes, which are elaborated in the following sections. In the boxes below, participants are identified with numerical codes; for example, P1 refers to participant 1.

Table 2.

Themes and subthemes identified in the thematic analysis

| Themes | Subthemes | Description |
|---|---|---|
| Professional autonomy | Individual ways to provide care | Recognizing doctors’ ability to care for their patients in their own way with the opportunities provided by new technologies. |
| | Adaptation and personalization | Personalized systems to support doctors’ individual working style. |
| | Bottom-up technology design | Design of new technologies to involve doctors’ views and provide clear benefits to their practice. |
| | Doctor safety | Protecting doctors from the medico-legal issues caused by the retrospective assessment of full consultation records. |
| | Automation bias | Problems with overreliance of doctors on decision support systems. |
| Human-AI collaboration | Core tasks of doctors | The core tasks of doctors in this collaboration could include clinical reasoning, empathy and human communication, and supervising AI. |
| | Roles of AI | The roles of AI in this collaboration could include (1) providing decision support, (2) helping with repetitive tasks, (3) auditing, and (4) providing empathy support. |
| | Doctors’ concerns about AI | There were concerns regarding (1) bias in data collection and clinical reasoning, (2) limitations to deal with complex cases, (3) limitations to understand contextual information, (4) security of patient data, and (5) fear of being replaced by AI. |
| | Desired technological features of AI | Potential features included (1) being adaptive to doctors’ working style, (2) supporting speech-based interaction, (3) generating patient summary letters, and (4) simulating the writing experience. |
| New models of care | Preconsultation stage | The stage in which a patient’s chief complaint and history of the current illness can be captured automatically. |
| | Telehealth | Virtual and online ways to provide care. |
| | Mobile health | Mobile apps supporting consumers to monitor and manage their health, as well as collecting patient-generated data to be integrated in the electronic health record in a seamless and meaningful way. |
| | Automation vs improved care | Questioning the role of increased automation in improving patient care. |

AI: artificial intelligence.

Professional autonomy

The first major theme was professional autonomy. Within this theme, GPs discussed the need to recognize the different forms of care they provide, the importance of a bottom-up approach to technology design, personalization, and medico-legal issues.

Individual ways to provide care

First, GPs emphasized the importance of doctors’ ability to care for their patients in their own way with the opportunities provided by new technologies. While new technologies offer new possibilities to provide care, as noted by a GP, being dependent on an AI assistant might undermine the value of doctors’ recommendations, because patients might think they can get the same recommendations online elsewhere (Box 1, quotes 1 and 2).

Box 1.

Illustrative quotations regarding professional autonomy

Individual ways to provide care

Quote 1: Because increasingly the technology means you can do a lot of things without the patient actually being in front of you. That is confronting to the traditional model … The [healthcare] system doesn't allow that … you've got to be face-to-face with the patient. [P1]

Quote 2: If they [patients] think that we're just getting suggestions from a computer, then maybe they can just get suggestions from a computer. I think it becomes more difficult to convince them that our recommendations are more valuable than what they can pick up on the internet. [P5]

Adaptation and personalization

Quote 3: I think that the protectionist model doesn't survive, unfortunately in the world that we live in—a competitive world … we'll have to innovate, so yeah, the core task is basically interpreting Doctor Google. [P5]

Quote 4: We need to develop machine learning that has the capability … to accommodate professional preferences and styles … It's adaptive and it supports you based on your particular consultation and decision-making processes. [P2]

Bottom-up technology design

Quote 5: The nice thing about this [the AI assistant in the video] is as an individual practitioner, you'll be able to do it, so it will be our option … It's going to be a bottom up kind of diffusion not a top down dinosaur, so the barriers to doing it [are] going to be much easier … because we'll find the clinical utility of it and want to adopt. [P7]

Doctor safety and automation bias

Quote 6:

P2: There'd be an audit trail around the system. You remove something you didn't think was important … but it may have actually been important. This way it's your judgement and your skills as to what you put in the record.

P3: You're destroying social contract with this [AI assistant]

P2: Why not just record the whole thing and just be done with it, and never alter it.

Quote 7: You could imagine a nightmare scenario where something bad happens … they subpoena the records or something, and you've got years of consultation. These consultations are stored in your records, and they run search terms. A person maybe had a cancer or something, so they run through 20 symptoms of their cancer through the whole thing, and then identify every instance that was mentioned … and they say, “all of these were mentioned—how come you missed it?” Or something like that … I mean, in a nutshell … patient safety is good—it's prime, but the doctor's safety should be there [too]. [P11]

Adaptation and personalization

While there was a view that the “protectionist” model in medicine (ie, the notion that some clinical tasks can only be performed by doctors)36 could not survive, and that doctors are the ones who would need to innovate and adapt, some participants maintained that there was some adaptation work to be done by the future AI systems as well. Future systems could actually support their professional autonomy through personalized and adaptive features (Box 1, quotes 3 and 4).

Bottom-up technology design

GPs also noted the need for a bottom-up approach to technology development, in which their opinions are taken into consideration, with a focus on delivering clear benefits to their practice and workflow. There was a reference to a centralized electronic health record system introduced by the government, and how it was a very top-down technological solution with limited use. An AI assistant in the examination room as depicted in the prototype video can be more easily adopted with its clear benefits to GPs and their practice (Box 1, quote 5).

Doctor safety and automation bias

Medico-legal aspects involved in working with an AI assistant in the examination room were a major concern. GPs raised some questions: (1) in a doctor-AI collaboration scenario, who will be the ultimate decision maker? (2) when a GP does not agree with the recommendations of an AI assistant, can they change something? and (3) what would be the implications of changing something that was AI-generated? Some GPs expressed that fear of making a mistake may lead to automation bias37 (overreliance of humans on computer-generated data and recommendations), damaging professional autonomy (Box 1, quote 6). They feared that the continuous recording of every conversation, which an AI system would need in order to summarize conversations into a record, is prone to causing unintended consequences that threaten what the participants referred to as doctor safety. This term was introduced as something that is likely to be critical for GPs to exercise their professional autonomy in future primary care scenarios involving AI assistants (Box 1, quote 7).

Human-AI collaboration

There was consensus that consultations of the future would increasingly involve more automated and AI-supported systems. However, there were differing views on how this human-AI collaboration would work, what roles doctors and AI would take, and what tasks could be delegated to AI.

The core tasks of doctors

A human-AI collaboration might be essential to ensure trustful and safe consultations in the future. The analogy with the partnership between pilots and autopilots appeared independently in 2 workshops. On the one hand, as a participant noted, pilots act as a safety guard. On the other hand, autopilots were considered as essential assistants to help control the aircraft during the entire flight. While recognizing both pilots and autopilots as the key actors in this human-AI collaboration, the participants highlighted the actors’ limits (Box 2, quotes 1 and 2). In future doctor-AI collaborative consultations, the participants identified the core tasks of doctors as clinical reasoning, human communication, embodied experience of interacting with a doctor, and empathy (Box 2, quotes 3 and 4).

Box 2.

Illustrative quotations regarding human-AI collaboration

Core tasks of doctors

Quote 1: Because the machine's doing all the flying so the pilot's there just to make sure everything is okay. … Ultimately, the human is there to make sure that if there is a discrepancy that your experience comes in. [P1]

Quote 2:

P11: These days a lot of airplane travel is on autopilot that's done by computers, based on our inputs and everything else, but at the same time, would anyone here get into a flight that's entirely pilotless?

P12: It's probably more dangerous if the pilots are actually flying.

Quote 3: I think the point of the doctor is to give suffering meaning. It's to provide a steady hand, I think. It's to support people through issues … a computer can support you, but it doesn't have any meaning because there's no emotional risk from the computer because the computer does not know what it means to live or die. [P2]

Quote 4: The patient comes in but then you're asking how their husband is who's never been in to see you but doesn't want to, but you then try to help their health care as part of that consultation. I mean this [the AI assistant in the video] has no way of … it's that caring bit … because you see the body language, so sometimes it's not what they say, it's something you pick up … the computer won't be able to pick that up. [P7]

Roles of AI

Quote 5: It would be great if you could go, hey Siri, can you print off this radiology X-ray. [P8]

Quote 6: Wouldn't it be amazing if the AI could capture all that advanced care directives and all [the] irrelevant stuff. You want to take away mundane repetitive tasks. [P1]

Quote 7: I think eventually the doctors will be the assistant doctors … Doctors will assist artificial intelligence what to do … eventually … we'll be helping it. I think we'll be assistant … Because they'll be doing everything. It will be just saying, yes, no, yes, no. Say supervision, but we'll be assisting. [P12]

Quote 8:

P5: Some people are not great at doing mental health consults … there are some people that are terrible and for those people just the right words …

P7: Can you imagine [if] the computer gives a suggested empathetic statement and you read it out—“oh, that must be really difficult what you're going through”

P5: Yeah, that's probably better than what they're already doing.

Doctors’ concerns about AI

Quote 9: … A lot of the input data coming so far is from white males—so a lot of the algorithms and all of the learning at the moment is largely based on white male thinking … so there are inherent biases already within their programs in the way that [data is being collected]—even the idea of drawing conclusions about the diagnosis. [P12]

Quote 10: Men and women are quite different, in terms of their presentations of diseases, and if it's [the AI assistant in the video] learning on the basis of a male—a man's idea of a disease, and if I do not follow that, and something does happen, who gets sued in that intervention, if I chose to ignore that bit of information? [P12]

Quote 11: If I actually spend 10 minutes with a patient, right, in an ideal world this [the AI assistant in the video] will compute everything … We have what the patient needs, so I'm quickly looking, yeah, that's about right, yeah, save. That's it. That's an ideal outcome. In reality, what will happen is, no, no, no, delete, delete, delete. [P6]

Quote 12: I think undifferentiated illness would be tricky with this … You know if the patient that comes in—“oh, I'm just feeling a bit tired or my appetite is not great”—very undifferentiated things … I mean something that's very … protocol driven … It would be very easy to do with this system [the AI assistant in the video], but I would imagine it would be much harder with a patient that comes in with kind of vague things which often we see as GPs. [P5]

Quote 13: Sometimes … patients will say … a jumble of things. Part of our job's to pick up the significant things, but in this sort of system [the AI assistant in the video] everything is picked up, and something might seem irrelevant at the time, but then six months later it's like, oh, that was actually a huge thing. [P9]

Quote 14: It's always the problem of hacking, and somebody can know what exactly you do and can track the patient record. [P7]

Quote 15: I think eventually it [AI] will take all the jobs. We're going to be the last group anyway to use this. [P11]

Desired technological features of AI

Quote 16: The machine start[s] to learn your behaviors and is more predictive to your style … [the machine] that you're using is more nuanced to your individual style. [P6]

Quote 17: You just tell the computer what [you] want to check and then the computer will do the job and generate the report. [P14]

Quote 18: If there was a Tom's visit summary, so I could print off a document saying, Tom, you've come in with cough … Here's what I think's going on. Here's the management plan and here [are] the things to look out for and come back and see me … if one of these happen. [P7]

Quote 19: One of the advantages of when you write it is it reinforces what you thought … It's a thinking process, because you actually think about what this actually means? … How can you capture that writing experience in an electronic medium? [P4]

The roles of AI

Many GPs believed that an AI system could assist with tasks such as documentation, referrals, and other paperwork (Box 2, quotes 5 and 6). However, there was a contrasting view that GPs would actually be assistants to the AI system (Box 2, quote 7). Most of the GPs agreed that future AI systems would lack empathy, and that this was likely to stay a core task for doctors. In contrast, one GP maintained that there would be room for AI systems to actually assist when doctors are struggling to be empathetic (Box 2, quote 8).

Doctors’ concerns about AI

GPs voiced several concerns, including some potential biases in patient data and system design, the time needed to fix the errors and train the system, challenges of dealing with complex cases, and the auditing of AI. One major concern was the biases that can be introduced through the data used to train a machine learning system, and subjective views on what a diagnosis means (Box 2, quote 9). Some concerns of GPs about the biases were associated with the potential medico-legal consequences (Box 2, quote 10).

GPs were also concerned with the amount of training they personally might need to invest to ensure the proper functioning of such future systems. GPs felt that an AI system is likely to make many errors, and if GPs have to spend too much time editing and correcting the mistakes, then the benefits of having an AI assistant are diminished (Box 2, quote 11). The remaining concerns included the potential limitations of AI systems in dealing with more complex cases, the potential for AI systems to act as an auditing tool, constantly assessing the quality and performance of GPs’ work, and the security of patient data, especially with a system that records everything in doctor-patient conversations similar to the one depicted in the video (Box 2, quotes 12–14). Finally, there was a concern about a future scenario in which GPs are no longer needed and have been fully replaced by an AI system (Box 2, quote 15).

Desired technological features of AI

There were several features that GPs would like future AI systems to support, such as personalization, data security, speech-based interaction, patient summary letters, and recreating the writing experience. One frequently mentioned feature was personalized and adaptive behavior to support GPs’ working styles (Box 2, quote 16). In addition, speech-based interaction and a computer-generated consultation summary for patients were perceived as useful components of the future AI systems (Box 2, quotes 17 and 18). Although writing is mainly a documentation activity, a GP noted that it was also a tool to structure thinking. A future AI system can recreate that writing experience as a tool to guide and structure GPs’ thinking processes (Box 2, quote 19).

New models of care

A few emerging models of primary care were discussed. GPs envisioned the future consultations to be more distributed and virtual while providing support for patients to manage their own health.

Preconsultation, mobile health, and telehealth

Participants frequently mentioned preconsultation as a new model of care. In this model, a computer system and patient work together to capture information on the patient’s chief complaint and medical history in the waiting room prior to the consultation with a doctor (Box 3, quote 1). Similar to the preconsultation, 2 more models of care were also envisioned to take place out of the examination room: mobile health and telehealth. With their accessibility and convenience, they were considered to play an important role in future primary care (Box 3, quotes 2 and 3). Telehealth solutions with AI capabilities were proposed to be particularly useful for remote or low-income communities (Box 3, quote 4).

Box 3.

Illustrative quotations regarding new models of care

Preconsultation

Quote 1: Imagine if a patient's sitting in a practice … if they get given an iPad that has … a written assistant that says—“Can you tell me a little bit about why you're here?”—The patient types in their presenting complaint … any family history of conditions, any current medications … When that patient comes in to see me, that information is presented to me in a manner that I can copy and paste, and I can go through it. [P6]

Telehealth and mobile health

Quote 2: They [patients] can do it [have the consultation] at home. You feel like rubbish in the morning, do you really want to have to call the GP, get an appointment, sit in a waiting room for an hour with other coughs and colds … think of all that disease that's spread in a waiting room. [P11]

Quote 3: I quite like the idea of having an app that's safe, that patients can update their health information that can link to the medical records. [P15]

Quote 4: I suppose in some countries where you're managing maybe thousands and thousands of people and having oversight, where the individual things are being managed by these [AI] assistants … those things can really give high quality medicine to low resourced countries. Now, they'll make mistakes, but even, you can bear those mistakes, basically, because of the overall benefit of them. [P6]

Automation vs improved care

Quote 5: I think … we're not asking the right question about the future role of technology in healing. Because the future is not better documenting disease … the future is in how we might empower people towards a better or subjective experience of their life … the question is what is the role of data in healing … the problem is you guys [technology designers/researchers] are conflating efficient data management with healing. [P2]

Automation vs improved care

A GP raised an important point on the tension between patient care and automation. It was maintained that improved documentation did not mean improved care. The GP criticized the current focus of technology developments on the improvements in task efficiency and believed that this focus needed to be recalibrated to prioritize patient experience and care (Box 3, quote 5).

DISCUSSION

In this qualitative study exploring GPs’ perspectives on a future AI assistant and the role of AI in primary care, we found 3 main themes: professional autonomy, human-AI collaboration, and new models of care. These themes highlight implications for doctors, patients, the healthcare system, and technology design.

Professional autonomy should be a system design consideration

Autonomy of GPs, central to their professional identity,37,38 emerged as a major theme in the discussions about future AI-enabled primary care. This theme was closely associated with doctor-AI collaboration models in which 3 roles of AI appeared: assistant, auditor, and supervisor. While AI as an assistant was seen mostly to help with documentation tasks and to support clinical decision making, AI as a supervisor would perform many more clinical tasks and make decisions on behalf of GPs. Participants believed that although doctors would remain the ultimate decision makers in principle, that role was likely to be delegated to AI systems in practice, because doctors might be too worried about the consequences of disagreeing with a high-performing AI system and might not feel comfortable changing the information generated by the system. This concern is consistent with automation bias,39 a well-known phenomenon in which humans overtrust a system, typically after a period of successful performance by that system.40 Highly automated systems can further diminish the professional autonomy of GPs. High levels of automation and regulation may reduce doctors’ role to “pushing buttons to answer questions in an evidence based computerized diagnostic pathway.”38,41

GPs’ concerns were also connected with the AI’s third role as an auditor. GPs imagined that future systems would constantly review their records, which would contain almost every detail of their interactions with patients, and that this may radically transform the ways in which they provide care to their patients. GPs were also worried that an AI system could make mistakes because of its inability to capture some contextual information, such as patients’ nonverbal gestures. The limitations of AI with contextual information in healthcare settings have been highlighted previously.12 Therefore, it would be very important for AI systems to provide mechanisms that address this limitation and allow doctors to exercise more autonomy against AI’s recommendations. In addition to patient safety, doctor safety in AI-enabled primary care needs to be carefully considered, with special attention to its medico-legal aspects, as medico-legal concerns can have negative effects on doctors’ practice.42

Different human-AI collaboration models need to be investigated

There were contrasting views on the nature of doctor-AI collaboration. On the one hand, GPs have been increasingly relying on decision support features of their electronic health records, making such systems an indispensable part of their practice to ensure patient safety. The analogy between autopilot systems and human pilots made by the participants suggested that, similar to human pilots who are, in most cases, no longer able to fly when the autopilot is unavailable, doctors without any AI assistance may not be allowed to provide care to their patients in the future. On the other hand, fully autonomous AI systems in medicine seem a long way off and may not be viable because of the risks involved.6 It has been claimed that some tasks in medicine may never go beyond level 3 autonomy, “a conditional automation, for which humans will indeed be required for oversight of algorithmic interpretation of images and data.”6 Furthermore, accurate diagnosis is only a part of a patient’s care: human communication is needed for different patient cases and sensitive situations.43–45 Therefore, different models of doctor-AI collaboration need to be designed and investigated in a more participatory way.18 Although there seems to be a clear path to an increased level of automation in primary care,21 the ways in which doctors and AI work together in different scenarios with different levels of automation need to be further investigated.

AI can support new models of care

Distributed, mobile, and virtual models of care were considered by the workshop participants to be important parts of the future primary care landscape, in line with previous views.46 In an extreme case, future consultations were suggested to take place outside the examination room. In fact, virtual consultations have already been taking place through emerging solutions using mobile applications and video streaming tools,47 demonstrating positive outcomes including convenience and decreased cost.48 Virtual consultations can become extremely valuable in response to disaster situations such as an infectious disease outbreak.49 An increasing number of telehealth services can be expected in the coming years. However, their increased adoption requires establishing the necessary data infrastructures50 and new payment models.51

One major model of care commonly recommended by the participants involved preconsultation activities in which, in addition to supporting information gathering, an AI system could analyze the information and generate a summary of the patient’s reasons for visiting, with a preliminary assessment. It is possible to combine the preconsultation stage with a complete telehealth service52; however, its effectiveness needs to be thoroughly investigated.15 Aligned with the preconsultation activities, another model of care was associated with the idea of empowered patients53 equipped with smartphone and desktop applications to monitor and manage their health.54 These applications can be useful for early detection and prevention of various diseases as well as management of chronic conditions.55

The new models of care suggested by participants are aligned with Bodenheimer and Sinsky’s56 Quadruple Aim, which considers healthcare professionals’ well-being as a central goal in addition to the Triple Aim Model’s goals of improving patient experience, improving population health, and reducing associated costs.57 While a speech-based AI assistant in the examination room can reduce the documentation burden by automatically extracting medical information from doctor-patient conversations,21 patient-centered solutions such as mobile health apps and automated triaging tools can improve patient experience and reduce the load on the healthcare system.

Limitations

This study is subject to some limitations. The video prototype we used may have limited the participants’ capacity to think about alternative solutions. The workshops reflected the viewpoints of a limited number of GPs in Australia. Therefore, the views, perceptions, and concerns identified in our study may not be representative of the larger community of GPs around the world. In addition, other stakeholders receiving or providing primary care such as patients, carers, and other healthcare professionals should be involved in future research to obtain a more balanced and complete understanding of the roles that AI technologies can play in future primary care practice.

CONCLUSION

AI documentation assistants will likely be integral to future primary care consultations. However, these technologies will still need to be supervised by a human until strong evidence for reliable autonomous performance is available. Therefore, different human-AI collaboration models will need to be designed and evaluated to ensure patient safety, quality of care, doctor safety, and doctor autonomy.

FUNDING

This research was supported by the National Health and Medical Research Council grant APP1134919 (Centre for Research Excellence in Digital Health) led by Prof EC.

AUTHOR CONTRIBUTIONS

ABK, LL, JCQ, DR, and EC were involved in study design. ABK, LL, and HLT were involved in data collection. ABK and KI were involved in data coding and analysis. ABK wrote the first draft of the manuscript. ABK, KI, LL, JCQ, DR, HLT, SW, SB, and EC were involved in revisions and subsequent drafts of the manuscript. All authors approved the final draft.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

ACKNOWLEDGEMENTS

The Northern Sydney Primary Health Network and Cirqit Health assisted in the recruitment of study participants.

CONFLICT OF INTEREST STATEMENT

None declared.

REFERENCES

- 1. Jordan MI. Artificial intelligence—the revolution hasn’t happened yet. Harv Data Sci Rev 2019; 1 (1).

- 2. Brynjolfsson E, McAfee A. The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies. New York, NY: W.W. Norton & Company; 2014.

- 3. Hinton G. Deep learning-a technology with the potential to transform health care. JAMA 2018; 320 (11): 1101–2.

- 4. Naylor CD. On the prospects for a (deep) learning health care system. JAMA 2018; 320 (11): 1099–100.

- 5. Mandl KD, Bourgeois FT. The evolution of patient diagnosis: from art to digital data-driven science. JAMA 2017; 318 (19): 1859–60.

- 6. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med 2019; 25 (1): 44–56.

- 7. Coiera E. The fate of medicine in the time of AI. Lancet 2018; 392 (10162): 2331–2.

- 8. Topol E. Deep Medicine: How Artificial Intelligence Can Make Healthcare Human Again. New York, NY: Hachette; 2019.

- 9. Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016; 316 (22): 2402–10.

- 10. Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017; 542 (7639): 115–8.

- 11. Lakhani P, Sundaram B. Deep learning at chest radiography: automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology 2017; 284 (2): 574–82. doi: 10.1148/radiol.2017162326.

- 12. Cabitza F, Rasoini R, Gensini GF. Unintended consequences of machine learning in medicine. JAMA 2017; 318 (6): 517–8.

- 13. Caruana R, Lou Y, Gehrke J, Koch P, Sturm M, Elhadad N. Intelligible models for healthcare: predicting pneumonia risk and hospital 30-day readmission. In: Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; Sydney, NSW, Australia: Association for Computing Machinery; 2015: 1721–30.

- 14. Fraser H, Coiera E, Wong D. Safety of patient-facing digital symptom checkers. Lancet 2018; 392 (10161): 2263–4.

- 15. McCartney M. Margaret McCartney: innovation without sufficient evidence is a disservice to all. BMJ 2017; 358: j3980.

- 16. Darcy AM, Louie AK, Roberts LW. Machine learning and the profession of medicine. JAMA 2016; 315 (6): 551–2.

- 17. Yu K-H, Beam AL, Kohane IS. Artificial intelligence in healthcare. Nat Biomed Eng 2018; 2 (10): 719–31.

- 18. Mistry P. Artificial intelligence in primary care. Br J Gen Pract 2019; 69 (686): 422–3.

- 19. Blease C, Kaptchuk TJ, Bernstein MH, Mandl KD, Halamka JD, DesRoches CM. Artificial intelligence and the future of primary care: exploratory qualitative study of UK general practitioners’ views. J Med Internet Res 2019; 21 (3): e12802.

- 20. Liyanage H, Liaw ST, Jonnagaddala J, et al. Artificial intelligence in primary health care: perceptions, issues, and challenges. Yearb Med Inform 2019; 28 (1): 41–6.

- 21. Coiera E, Kocaballi AB, Halamka J, Laranjo L. The digital scribe. NPJ Digit Med 2018; 1 (1): 58. doi: 10.1038/s41746-018-0066-9.

- 22. Arndt BG, Beasley JW, Watkinson MD, et al. Tethered to the EHR: primary care physician workload assessment using EHR event log data and time-motion observations. Ann Fam Med 2017; 15 (5): 419–26.

- 23. Friedberg MW, Chen PG, Van Busum KR, et al. Factors affecting physician professional satisfaction and their implications for patient care, health systems, and health policy. Rand Health Q 2014; 3 (4): 1.

- 24. Goldsmith R. To combat physician burnout and improve care, fix the electronic health record. Harv Bus Rev 2018. https://hbr.org/2018/03/to-combat-physician-burnout-and-improve-care-fix-the-electronic-health-record Accessed July 06, 2020.

- 25. Shachak A, Hadas-Dayagi M, Ziv A, Reis S. Primary care physicians’ use of an electronic medical record system: a cognitive task analysis. J Gen Intern Med 2009; 24 (3): 341–8.

- 26. Quiroz JC, Laranjo L, Kocaballi AB, Berkovsky S, Rezazadegan D, Coiera E. Challenges of developing a digital scribe to reduce clinical documentation burden. NPJ Digit Med 2019; 2 (1): 114.

- 27. Kocaballi AB, Coiera E, Tong HL, et al. A network model of activities in primary care consultations. J Am Med Inform Assoc 2019; 26 (10): 1074–82.

- 28. Tran BD, Chen Y, Liu S, Zheng K. How does medical scribes’ work inform development of speech-based clinical documentation technologies? A systematic review. J Am Med Inform Assoc 2020; 27 (5): 808–17.

- 29. Blease C, Bernstein MH, Gaab J, et al. Computerization and the future of primary care: a survey of general practitioners in the UK. PLoS One 2018; 13 (12): e0207418.

- 30. Kensing F, Madsen KH. Generating visions: future workshops and metaphorical design. In: Greenbaum J, Kyng M, eds. Design at Work: Cooperative Design of Computer Systems. Mahwah, NJ: Erlbaum; 1992: 155–68.

- 31. Tomitsch M, Wrigley C, Borthwick M, et al. Design. Think. Make. Break. Repeat.: A Handbook of Methods. Amsterdam, the Netherlands: BIS; 2018.

- 32. Rohrbach B. Creative by rules—method 635, a new technique for solving problems. Absatzwirtschaft 1969; 12: 73–5.

- 33. Gossman W, Lew V, Ghassemzadeh S. SOAP Notes. Treasure Island, FL: StatPearls; 2020.

- 34. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006; 3 (2): 77–101.

- 35. Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care 2007; 19 (6): 349–57.

- 36. Mann C. Doctors need to give up professional protectionism. BMJ 2018; 361: k1757.

- 37. Marjoribanks T, Lewis JM. Reform and autonomy: perceptions of the Australian general practice community. Soc Sci Med 2003; 56 (10): 2229–39.

- 38. Fraser J. Professional autonomy—is it the future of general practice? Aust Fam Physician 2006; 35 (5): 353–5.

- 39. Parasuraman R, Molloy R, Singh IL. Performance consequences of automation-induced “complacency.” Int J Aviat Psychol 1993; 3 (1): 1–23.

- 40. Friedman B, Kahn PH. Human agency and responsible computing: implications for computer system design. J Syst Softw 1992; 17 (1): 7–14.

- 41. Chew M. The destiny of general practice: blind fate or 20/20 vision? Med J Aust 2003; 179 (1): 47–8.

- 42. Nash LM, Walton MM, Daly MG, et al. Perceived practice change in Australian doctors as a result of medicolegal concerns. Med J Aust 2010; 193 (10): 579–83.

- 43. Perloff RM, Bonder B, Ray GB, Ray EB, Siminoff LA. Doctor-patient communication, cultural competence, and minority health: theoretical and empirical perspectives. Am Behav Sci 2006; 49 (6): 835–52.

- 44. Ong LM, de Haes JC, Hoos AM, Lammes FB. Doctor-patient communication: a review of the literature. Soc Sci Med (1982) 1995; 40 (7): 903–18.

- 45. Rocque R, Leanza Y. A systematic review of patients’ experiences in communicating with primary care physicians: intercultural encounters and a balance between vulnerability and integrity. PLoS One 2015; 10 (10): e0139577.

- 46. Shaw RJ, Bonnet JP, Modarai F, George A, Shahsahebi M. Mobile health technology for personalized primary care medicine. Am J Med 2015; 128 (6): 555–7.

- 47. Wattanapisit A, Teo CH, Wattanapisit S, Teoh E, Woo WJ, Ng CJ. Can mobile health apps replace GPs? A scoping review of comparisons between mobile apps and GP tasks. BMC Med Inform Decis Mak 2020; 20 (1): 5.

- 48. Powell RE, Henstenburg JM, Cooper G, Hollander JE, Rising KL. Patient perceptions of telehealth primary care video visits. Ann Fam Med 2017; 15 (3): 225–9.

- 49. Smith AC, Thomas E, Snoswell CL, et al. Telehealth for global emergencies: implications for coronavirus disease 2019 (COVID-19). J Telemed Telecare 2020; 26 (5): 309–13. doi: 10.1177/1357633X20916567.

- 50. Panch T, Mattie H, Celi LA. The “inconvenient truth” about AI in healthcare. NPJ Digit Med 2019; 2 (1): 77.

- 51. Wharton GA, Sood HS, Sissons A, Mossialos E. Virtual primary care: fragmentation or integration? Lancet Digit Health 2019; 1 (7): e330–1.

- 52. Mahase E. Birmingham trust and Babylon Health discuss pre-A & E triage app. BMJ 2019; 365: l2354.

- 53. Anderson RM, Funnell MM. Patient empowerment: reflections on the challenge of fostering the adoption of a new paradigm. Pat Educ Couns 2005; 57 (2): 153–7.

- 54. Lymberis A. Smart wearable systems for personalised health management: current R&D and future challenges. In: Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology (IEEE Cat. No.03CH37439); 2003; 4: 3716–9.

- 55. Varshney U. Mobile health: four emerging themes of research. Decis Supp Syst 2014; 66: 20–35.

- 56. Bodenheimer T, Sinsky C. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med 2014; 12 (6): 573–6.

- 57. Berwick DM, Nolan TW, Whittington J. The triple aim: care, health, and cost. Health Aff (Millwood) 2008; 27 (3): 759–69.
