Abstract

In construing meaning, the brain recruits multimodal (conceptual) systems and embodied (modality-specific) mechanisms. Yet, no consensus exists on how crucial the latter are for the inception of semantic distinctions. To address this issue, we combined electroencephalographic (EEG) and intracranial EEG (iEEG) recordings to examine when nouns denoting facial body parts (FBPs) and nonfacial body parts (nFBPs) are discriminated in face-processing and multimodal networks. First, FBP words increased N170 amplitude (a hallmark of early facial processing). Second, they triggered fast (~100 ms) activity boosts within the face-processing network, alongside later (~275 ms) effects in multimodal circuits. Third, iEEG recordings from face-processing hubs allowed decoding ~80% of items before 200 ms, while classification based on multimodal-network activity only surpassed ~70% after 250 ms. Finally, EEG and iEEG connectivity between both networks proved greater in early (0–200 ms) than later (200–400 ms) windows. Collectively, our findings indicate that, at least for some lexico-semantic categories, meaning is construed through fast reenactments of modality-specific experience.

Keywords: EEG, embodied cognition, functional connectivity, intracranial recordings, semantic processing

Introduction

Evolutionarily driven to construe meaning, the human brain possesses multiple semantically selective regions consistently organized across individuals (Huth et al. 2016). As amply demonstrated, these regions comprise both embodied (modality-specific) (Ibáñez and García 2018; Pulvermüller 2018) and multimodal (modality-neutral) (Seghier 2013; Lambon Ralph et al. 2017) systems. However, an ardent dispute exists about how these systems contribute to the very inception of meaning (Pulvermüller et al. 2005; Papeo et al. 2009; Klepp et al. 2014; Papeo and Caramazza 2014; Shtyrov et al. 2014; Shtyrov and Stroganova 2015; Mollo et al. 2016, 2017; Pulvermüller 2018; García et al. 2019).

Whereas the “grounded view” posits that modality-specific mechanisms act immediately and automatically upon word perception, the “symbolic” position assumes that meaning critically depends on multimodal networks, followed by epiphenomenal recruitment of embodied systems (Bedny and Caramazza 2011; Pulvermüller 2018). Key experiments have focused on motor-network engagement by bodily action verbs, yielding inconsistent results: while several studies have reported rapid (<200 ms) modality-specific modulations (Shtyrov et al. 2014; García et al. 2019), others have observed such effects only in postconceptual (>300 ms) stages (Papeo et al. 2009; for a review, see Pulvermüller 2018). Moreover, the criticism of so-called “ultra-rapid embodied effects” (<100 ms) elicited by action verbs (Papeo and Caramazza 2014; Shtyrov and Stroganova 2015) and the explicit claim that embodied reactivations cannot precede multimodal semantic operations (Mollo et al. 2017) clash against several magnetoencephalographic (Pulvermüller et al. 2005; Klepp et al. 2014; Shtyrov et al. 2014; Mollo et al. 2016; García et al. 2019), electroencephalographic (EEG) (Hauk and Pulvermüller 2004; Shtyrov et al. 2004), and intracranial EEG (iEEG) (Ibáñez et al. 2013) studies showing an early (100–200 ms) engagement of motor networks during processing of such words.

To a large extent, and beyond particular theoretical commitments, these discrepancies likely reflect methodological shortcomings. In fact, semantically driven motor-network engagement may be overridden by response-related motor activity (Pulvermüller 2013) and confounded by articulatory reactivations triggered by sublexical processing (Wilson et al. 2004). Therefore, the grounded/symbolic debate calls for new, spatiotemporally precise approaches.

Here, through a combination of high-density EEG and direct iEEG recordings, we offer unprecedented, motor-artifact-free evidence that embodied reactivations are foundational in lexico-semantic operations. We performed an EEG experiment and two iEEG case studies targeting face-processing mechanisms during semantic decisions on nouns denoting facial body parts (FBPs; e.g., “nose”) and nonfacial body parts (nFBPs; e.g., “hand”). First, we analyzed N170 modulations, the most robust early EEG signature of facial processing (Rossion 2014). Second, we assessed iEEG-derived time-frequency patterns within the face-processing network (including the right fusiform, ventral/rostral lingual, and calcarine gyri) (Nakamura et al. 2000; Grill-Spector et al. 2004; Collins et al. 2012) and a multimodal semantic network (with hubs in the angular and supramarginal gyri) (Binder and Desai 2011; Seghier 2013), focusing on a frequency range (1–20 Hz) sensitive to facial and semantic effects (Zion-Golumbic et al. 2010; Vukovic and Shtyrov 2014). Moreover, we performed multivariate pattern decoding to estimate stimulus classification for each network based on time–frequency signals and examined their functional connectivity patterns.

Materials and Methods

Stimuli and Procedure for the Semantic Decision Task

The task comprised Spanish nouns belonging to two key categories: FBPs (n = 21), denoting parts of the human face (e.g., “nariz” [nose]), and nFBPs (n = 21), denoting other body parts (e.g., “pecho” [chest]). These were presented amid a set of filler items, including 21 verbs denoting facial actions (e.g., “besar” [to kiss]), 21 verbs denoting nonfacial actions (e.g., “saltar” [to jump]), and 21 nouns denoting objects (e.g., “torta” [cake]). Psycholinguistic data for all stimuli were extracted from B-Pal (Davis and Perea 2005). Between-category t-tests confirmed the stringent control of the stimuli, evidencing that FBP and nFBP words were similar in log frequency [t(40) = 0.34, P = 0.73, d = 0.111092], familiarity [t(40) = 0.001, P = 0.99, d = 0.0001], imageability [t(40) = 0.978, P = 0.33, d = 0.302891], concreteness [t(40) = 0.283, P = 0.78, d = 0.094415], number of letters [t(40) = 1.106, P = 0.27, d = 0.339804], number of phonemes [t(40) = 0.905, P = 0.37, d = 0.279879], syllabic length [t(40) = 0.518, P = 0.61, d = 0.168061], orthographic neighbors [t(40) = 0.455, P = 0.65, d = 0.139978], and phonological neighbors [t(40) = 0.425, P = 0.67, d = 0.131584]. Filler items were not statistically controlled in terms of psycholinguistic properties given that they were not meant for analysis. Descriptive statistics are offered in Table 1.

Table 1.

Psycholinguistic data for facial-body-part and nonfacial-body-part words

| Variable | FBP words | nFBP words |
|---|---|---|
| Log frequency | 1.32 (0.53) | 1.26 (0.55) |
| Familiarity | 5.82 (1.04) | 5.82 (0.63) |
| Imageability | 5.97 (0.68) | 5.77 (0.64) |
| Concreteness | 5.94 (0.61) | 5.88 (0.66) |
| Number of letters | 5.95 (1.56) | 5.43 (1.50) |
| Number of phonemes | 5.81 (1.47) | 5.38 (1.60) |
| Syllabic length | 2.48 (0.60) | 2.38 (0.59) |
| Orthographic neighbors | 3.52 (5.74) | 4.28 (5.10) |
| Phonological neighbors | 5.28 (8.52) | 6.33 (7.40) |

Note: Data presented as mean (SD), extracted from B-Pal (Davis and Perea 2005). FBP, facial body parts; nFBP, nonfacial body parts.

Participants in the EEG experiment were tested individually in a dimly illuminated room. Patients partaking in the iEEG case studies were tested in their hospital room. All participants performed the task on professional laptops equipped with a 15.6″ 16:9 HD (1366 × 768) LED backlight display. Instructions were first provided orally and then recapped on-screen. Participants were asked to read each word on the screen and press a “Yes” key if it denoted a part of the face or a “No” key if it did not. All responses were made with the middle and index fingers of the dominant hand, and the key assigned to each finger was counterbalanced across subjects. Stimuli were pseudorandomized so as to minimize possible phonological or semantic priming effects. Before the actual task, 12 practice trials were presented with stimuli not included in the experimental blocks.

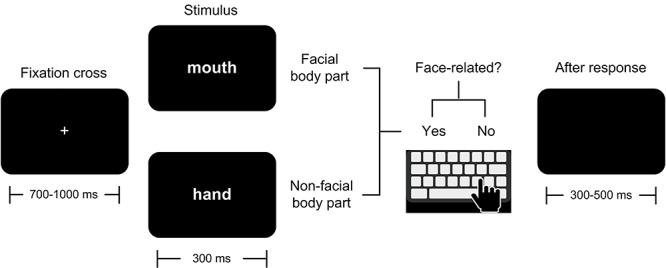

Each trial began with an ocular fixation cross at the center of the screen, with a random duration between 700 and 1000 ms. This was followed by the stimulus, which remained on the screen for 300 ms. After each response, a blank screen appeared for a period randomized between 300 and 500 ms (Fig. 1). If participants failed to respond within the first 2000 ms after stimulus onset, the following trial was automatically triggered. The fixation cross and the stimuli (font: Helvetica; color: white; size: 54; style: regular; height: 4 cm) were presented in the middle of the screen against a black background. At a viewing distance of approximately 95 cm, the stimuli had a visual angle of 2.4° horizontally by 2.6° vertically. From a psychophysical viewpoint, it is important to note that the use of white-ink stimuli against dark backgrounds is widespread in both EEG (e.g., Hald et al. 2006; Davidson and Indefrey 2007; Dalla Volta et al. 2014; Moldovan et al. 2016; Vilas et al. 2019) and iEEG (e.g., McDonald et al. 2010; Ponz et al. 2013; Khachatryan et al. 2018) studies and that, assuming average response latencies in the order of 700 ms, trials would be roughly 2000 ms apart from each other. These considerations, together with the pseudorandomized distribution of stimuli, rule out the possibility that ensuing results could be driven by afterimages.

Figure 1.

Semantic decision task. In each trial, participants had to press a “yes” key if the word denoted an FBP (e.g., mouth) or a “no” key if it denoted an entity that is not part of the face (e.g., hand).

The task was designed and run in Matlab (https://www.mathworks.com/products/matlab.html) with Psychtoolbox (http://psychtoolbox.org/). In the EEG experiment, each participant completed four runs of the task, each with a different pseudorandomization of the stimuli. This was done to increase the signal-to-noise ratio (Luck and Kappenman 2012), given that the Spanish language offers relatively few FBP nouns that prove sufficiently frequent and familiar to ensure acceptable performance rates.

In the case studies, due to time constraints of the iEEG protocol, participants completed only one run with the original pseudorandomization of stimuli. The complete session for each participant lasted roughly 40 min in the EEG experiment and approximately 15 min in the iEEG assessments.

Participants

EEG Experiment

The sample consisted of 25 healthy Spanish-speaking subjects (16 women), with a mean age of 26.16 (SD = 4.36) and 17.56 years of education (SD = 2.73). All participants possessed normal or corrected-to-normal vision, and none of them reported a history of psychiatric or neurological disease. They were all predominantly right-handed, as established through the Edinburgh Inventory (Oldfield 1971). None of the subjects was found to be an outlier in terms of accuracy or response time (at a cutoff of 2.5 SDs from the sample’s mean), so all of them were entered in the analyses. Before the study, all participants read and signed an informed consent form in accordance with the Declaration of Helsinki. The study was approved by the institutional ethics committee.

To determine the sample size required for the EEG experiment, we ran an estimation analysis in G*Power 3.1 (Faul et al. 2007). Given our statistical design, we considered three parameters. First, we set an alpha level of 0.05. Second, we considered an effect size of 0.66 (based on Cohen’s d). This medium-to-large effect size was established based on results from a previous event-related potential (ERP) study (Kiefer et al. 2008) that found significant embodied language effects (modulation of acoustically evoked potentials by sound-related words) in a window similar to our own (150–200 ms), with a sample of 20 subjects. Finally, we established a power of 0.8. This analysis showed that a sample size of 25 would yield a power of 0.89, supporting the robustness of ensuing effects.
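The power estimate above can be approximated outside G*Power. The following is only a sketch: it assumes a two-tailed paired (one-sample) t-test, which the text does not specify, and the function name `paired_t_power` is hypothetical. Under those assumptions, the value for d = 0.66 and n = 25 should come out close to the reported 0.89.

```python
import numpy as np
from scipy import stats


def paired_t_power(d, n, alpha=0.05):
    """Power of a two-tailed paired (one-sample) t-test.

    d: Cohen's d of the paired differences; n: number of subjects.
    The test family is an assumption, not taken from the paper.
    """
    df = n - 1
    ncp = d * np.sqrt(n)                      # noncentrality parameter
    t_crit = stats.t.ppf(1 - alpha / 2, df)   # two-tailed critical value
    # Probability of landing in either rejection region under the alternative
    return stats.nct.sf(t_crit, df, ncp) + stats.nct.cdf(-t_crit, df, ncp)


power_25 = paired_t_power(d=0.66, n=25)
print(f"Estimated power for n = 25: {power_25:.2f}")
```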

iEEG Case Studies

The case studies were conducted as part of an ongoing iEEG protocol (Chennu et al. 2013; Canales-Johnson et al. 2015; Hesse et al. 2016; Birba, Hesse, et al. 2017b; García-Cordero et al. 2017; Canales-Johnson et al. 2020). It comprised two patients with intractable epilepsy undergoing intracranial monitoring. Patient 1 was a 17-year-old, right-handed woman who had completed 2 years of secondary education. Patient 2 was a 32-year-old, right-handed man who had completed a bachelor’s degree. The two subjects had normal vision. In both cases, handedness was established with the Edinburgh Inventory (Oldfield 1971). In both patients, epilepsy onset occurred at 8 years of age, and their epilepsy types were focal and nonlesional. Patient 1 suffered two to three seizures per month, whereas Patient 2 experienced them on a weekly basis. After iEEG implantation, the epileptogenic focus was detected in posterior superior occipital areas in Patient 1, and in left superior parietal regions in Patient 2. Neither patient underwent a Wada test. The two subjects were carefully selected from a pool of over 15 iEEG patients (recruited at a rate of five per year, over 3 years), such that the sites from which recordings would be obtained were distal to the epileptogenic foci. Importantly, their electrodes were implanted in task-relevant regions (i.e., face-processing and multimodal networks) of the right hemisphere. This offered infrequent, unique circumstances for our study.

Indeed, intracranial recordings occur exceptionally in humans and provide a unique opportunity to analyze brain function with high temporal and spatial resolution. However, since they come from patients with pharmacologically intractable epilepsy, they may not accurately represent a healthy population (Mukamel and Fried 2012). To account for that, we followed recent guidelines (Dastjerdi et al. 2013; Parvizi et al. 2013; Foster et al. 2015) and controlled for relevant factors. First, similar recording sites typically include multiple pathological and healthy brain regions (Musch et al. 2014). We addressed this issue by: 1) excluding channels in epileptic focus regions, 2) using stringent inclusion criteria for the remaining channels (see iEEG case studies, Preprocessing section) (Manning et al. 2009), 3) carefully inspecting MRI scans to rule out structural abnormalities, and 4) including only patients with relatively normal cognitive function as measured by neuropsychological tests (Oya et al. 2002)—see below. Second, a sample of two subjects may seem small, especially in comparison with other neuroimaging techniques. However, given the high spatiotemporal resolution of iEEG recordings, conclusions can be drawn even from a few subjects, as shown in multiple previous studies (Kahana et al. 1999; Crone et al. 2001; Kawasaki et al. 2001; Brovelli et al. 2005; Naccache et al. 2005; Tanji et al. 2005; Lachaux et al. 2006; Penny et al. 2008; Pourtois et al. 2010; De Lucia et al. 2011; Parvizi et al. 2012, 2013; Hammer et al. 2013; Hesse et al. 2016; Sedley et al. 2016). Thus, although we cannot avoid the intrinsic limitations of intracranial recordings, we have controlled for the most influential and recognized confounds.

As regards neuropsychological testing, both patients were assessed with selected tasks from the Rey auditory verbal learning test (Bean 2011), parts A and B of the trail-making test (Reitan and Wolfson 1985), and the Rey-Osterrieth complex figure test (Meyers and Meyers 1995). Moreover, Patient 2 was administered the Mini-Mental State Examination (Upton 2013), a qualitative language assessment, and an adapted version of the Boston naming test (Kaplan et al. 1983). Results showed that neither patient had major cognitive deficits, despite mild difficulties in circumscribed domains of peripheral importance to the study’s task. Specifically, Patient 1 had preserved verbal attention and diminished verbal learning, normal word recognition, slight deficits in delayed recall, preserved executive functions, and mild visuo-motor coordination difficulties. Patient 2 had normal overall cognitive status, preserved verbal attention and verbal learning, normal word recognition, slight deficits in delayed recall, reduced executive functions, preserved visuo-motor coordination skills, and normal outcomes in spontaneous speech, writing, verbal comprehension, and picture naming tests (for details, see Supplementary Section 1). Importantly, both patients met the task’s processing requirements, as corroborated by their adequate performance levels (see Results section).

Evaluations for this protocol followed standard procedures (Chennu et al. 2013; Canales-Johnson et al. 2015; Hesse et al. 2016; Birba, Hesse, et al. 2017b; García-Cordero et al. 2017; Canales-Johnson et al. 2020). They were performed in 2-h sessions once or twice a day, depending on the patients’ availability and disposition. No more than two tasks were administered per session; when two tasks were administered, these were separated by intervals of roughly 10–30 min, depending on the patient’s preference for shorter or longer breaks. These precautions minimized the chance of any task being affected by spill-over, priming or fatigue effects. Importantly, although both subjects were told that they could terminate the task at any point, they completed it in full, displaying sustained attentiveness and cooperation throughout the protocol. Prior to the study, they gave written informed consent in accordance with the Declaration of Helsinki, and the study was approved by the institutional ethics committee.

EEG Experiment Methods

Preprocessing

EEG signals were recorded online with a 128-channel system at a 1024 Hz sampling rate. Analog filters were set at 0.03 and 100 Hz. A digital bandpass filter between 0.5 and 40 Hz was applied offline to remove unwanted frequency components. The reference was set to linked mastoids for recordings and rereferenced offline to the average of all electrodes. In line with reported procedures (Salamone et al. 2018; Vilas et al. 2019; Dottori et al. 2020; Fittipaldi et al. 2020), eye movements or blink artifacts were corrected with independent component analysis, and remaining artifacts were rejected offline from trials that contained voltage fluctuations exceeding ±200 μV, transients exceeding ±100 μV, or electro-oculogram activity exceeding ±70 μV. Epochs were selected from continuous data, from −200 to 800 ms locked to stimulus onset. These processing steps were implemented in Matlab with scripts based on the EEGLAB toolbox (Delorme and Makeig 2004), complemented by custom-made scripts for further processing.
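The three rejection thresholds can be illustrated with a short NumPy sketch. This is not the authors' Matlab pipeline: the function name `reject_epochs`, the array layout, and the reading of "transients" as sample-to-sample voltage jumps are all assumptions made for illustration.

```python
import numpy as np


def reject_epochs(eeg, eog, abs_limit=200.0, step_limit=100.0, eog_limit=70.0):
    """Return a boolean mask of epochs to keep.

    eeg: array (n_epochs, n_channels, n_samples), in microvolts.
    eog: array (n_epochs, n_samples), in microvolts.
    'Transients' are interpreted here as jumps between consecutive samples
    (an assumption; the paper does not define the term).
    """
    too_large = np.abs(eeg).max(axis=(1, 2)) > abs_limit
    transient = np.abs(np.diff(eeg, axis=2)).max(axis=(1, 2)) > step_limit
    eog_bad = np.abs(eog).max(axis=1) > eog_limit
    return ~(too_large | transient | eog_bad)


# Toy data: four epochs, three of which violate one criterion each
eeg = np.zeros((4, 2, 10))
eog = np.zeros((4, 10))
eeg[1, 0, 3] = 250.0    # exceeds the absolute voltage limit
eeg[2, 1, 5:] = 150.0   # 150 µV step between consecutive samples
eog[3, 2] = -80.0       # exceeds the electro-oculogram limit
keep = reject_epochs(eeg, eog)
```

Only the first toy epoch survives, since each of the others breaches exactly one threshold.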

ERP Analysis

Processing and analysis of EEG data were conducted offline on Matlab software. Artifact-free epochs were averaged to obtain the ERPs. The rejection percentage was similar between both conditions [FBP words: M = 21.47%, SD = 9.37; nFBP words: M = 21.80%, SD = 11.33; t(24) = −0.19, P = 0.84, d = 0.031742]. Waveforms were averaged separately for the FBP and nFBP conditions. ERP analysis of face-sensitive N170 modulations (Liu et al. 2002, 2009; Herrmann et al. 2005; Cauchoix et al. 2014; Kashyap et al. 2016; Colombatto and McCarthy 2017) focused on two four-electrode temporo-occipital regions of interest (ROIs) associated with face-sensitive N170 modulations, as previously reported (Ibáñez et al. 2010; Ibáñez, Hurtado, et al. 2011a; Ibáñez, Petroni, et al. 2011b; Ibáñez, Melloni, et al. 2012a; Ibáñez, Riveros, et al. 2012b; Ibáñez, Urquina, et al. 2012c; Cauchoix et al. 2014) (right electrodes: B6, B7, B8, B9; left electrodes: A9, A10, A11, A12; see Fig. 2a). Analyses were performed over the average of both ROIs and for each ROI separately. In all cases, differences among categories were assessed for significance via Monte-Carlo permutation tests (1000 permutations) combined with bootstrapping (Manly 2006). The integrated data from each condition underwent a random partition, and a t-test was calculated. This process was repeated 1000 times to construct the t-value distribution under the null hypothesis, as reported in previous works (Amoruso et al. 2014; Couto et al. 2014; Garcia-Cordero et al. 2015, 2016; Melloni et al. 2015; Hesse et al. 2016, 2019a, 2019b). Results were corrected with the false discovery rate (FDR) method. The alpha level was set at P < 0.05. In line with previous ERP studies on facial and semantic processing (Guthrie and Buchwald 1991; Dalla Volta et al. 2014; Berchio et al. 2017; Calbi et al. 2019), effects were reported only if significant differences lasted at least 20 ms. This threshold is crucial to ensure that analyses capture truly robust differences between conditions. In fact, in the absence of a temporal threshold requiring at least a minimal temporal continuity of the effect, small random fluctuations in the signal can yield spurious significant differences, compromising the interpretation of results. For visualization purposes, the signal was smoothed with a span of 10 ms.
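The logic of this statistical pipeline (random partition of the pooled data, FDR correction, and a minimum-duration criterion) can be sketched as follows. This is an illustrative Python approximation rather than the authors' Matlab code: all function names are hypothetical, the FDR step assumes the Benjamini-Hochberg procedure, and the 20-ms threshold is converted to samples assuming the 1024 Hz sampling rate.

```python
import numpy as np
from scipy import stats


def permutation_pvalues(a, b, n_perm=1000, seed=0):
    """Pointwise permutation p-values; a, b: (n_observations, n_times)."""
    rng = np.random.default_rng(seed)
    t_obs = stats.ttest_ind(a, b, axis=0).statistic
    pooled = np.concatenate([a, b], axis=0)
    n_a = a.shape[0]
    exceed = np.zeros(t_obs.shape)
    for _ in range(n_perm):
        # Randomly partition the pooled data and recompute the t-values
        shuffled = pooled[rng.permutation(pooled.shape[0])]
        t_perm = stats.ttest_ind(shuffled[:n_a], shuffled[n_a:], axis=0).statistic
        exceed += np.abs(t_perm) >= np.abs(t_obs)
    return (exceed + 1) / (n_perm + 1)


def bh_fdr(p, alpha=0.05):
    """Benjamini-Hochberg FDR; returns a boolean significance mask."""
    p = np.asarray(p, dtype=float)
    order = np.argsort(p)
    m = p.size
    below = p[order] <= alpha * np.arange(1, m + 1) / m
    sig = np.zeros(m, dtype=bool)
    if below.any():
        cutoff = np.max(np.nonzero(below)[0])
        sig[order[:cutoff + 1]] = True
    return sig


def keep_long_runs(mask, min_len):
    """Keep only runs of consecutive True values lasting >= min_len samples."""
    mask = np.asarray(mask, dtype=bool)
    out = np.zeros(mask.size, dtype=bool)
    start = None
    for i, flag in enumerate(np.append(mask, False)):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if i - start >= min_len:
                out[start:i] = True
            start = None
    return out


# 20 ms at 1024 Hz corresponds to 20.48 samples, rounded up to 21
min_samples = int(np.ceil(0.020 * 1024))
```

A typical call would chain the three steps: `keep_long_runs(bh_fdr(permutation_pvalues(fbp, nfbp)), min_samples)`, where `fbp` and `nfbp` are placeholder arrays of ROI-averaged waveforms.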

Figure 3.

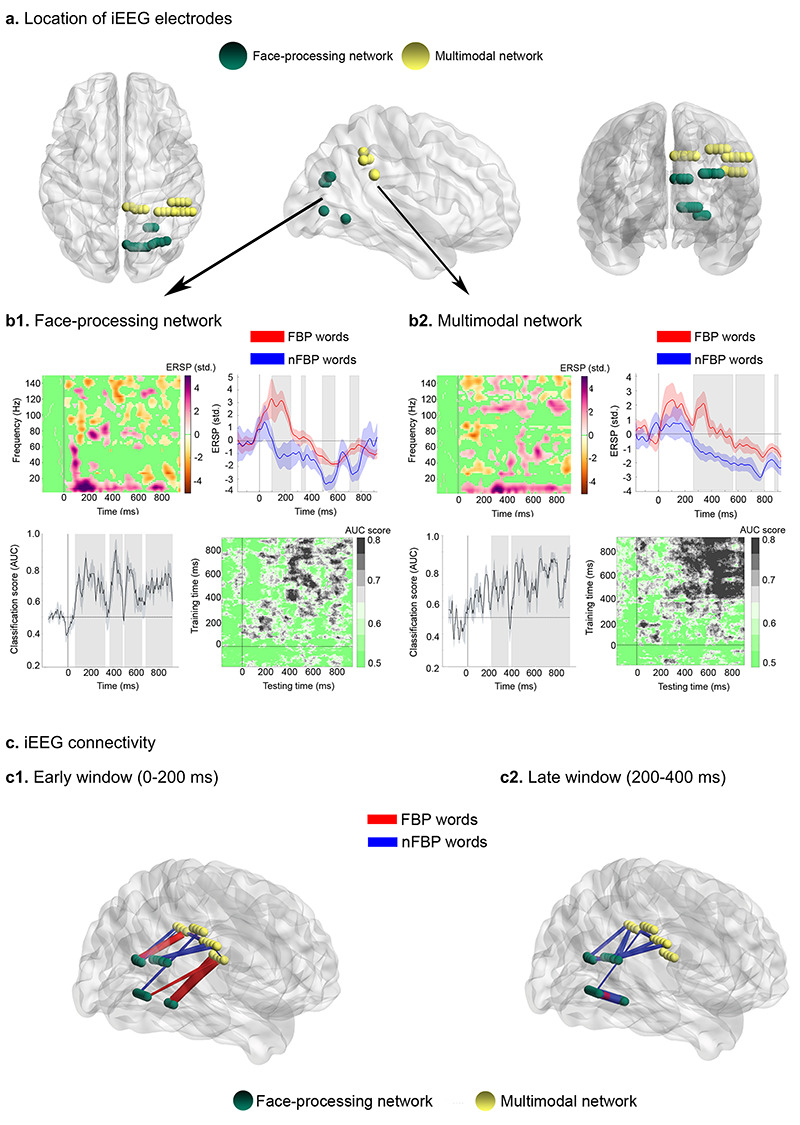

Results from the iEEG case studies. (a) iEEG electrodes in MNI coordinate space. The face-processing network comprised key fusiform-face-area hubs (right fusiform, ventral/rostral lingual, and calcarine gyri); the multimodal network included electrodes within the angular and supramarginal gyri. (b1,b2) Upper left: Subtraction between the time-frequency charts of FBP and nFBP words from the face-processing (b1) and multimodal (b2) networks. Nonsignificant points were assigned zero values (P > 0.05, bootstrapping, FDR-corrected) relative to baseline and color-coded in green. Upper right: Word-type discrimination (based on 1–20 Hz activity) started at ~100 ms in the face-processing network (b1) and at ~250 ms in the multimodal network (b2). Lower left: Word-type classification (based on 1–20 Hz activity) was high (AUC scores ~80%, P < 0.05, Mann–Whitney U-test) across the first 200 ms in the face-processing network (b1) and consistently lower in the multimodal network (AUC scores ~70%, P < 0.05), peaking after 250 ms (b2). Shaded regions identify significant (above-chance) classification scores (P < 0.05). Lower right: Generalization-across-time matrices showing reactivated patterns of decodable information, peaking earlier for the face-processing network (b1) than the multimodal network (b2). Nonsignificant points were color-coded in green (P > 0.05). (c) Connectivity patterns discriminating between FBP and nFBP words based on the wSMI index, sensitized for the 1–20 Hz range (parameters: k = 3, τ = 16 ms). iEEG results revealed enhanced connectivity (P < 0.05, bootstrap, FDR-corrected) between both networks for FBP words in the early (0–200 ms) window (c1), progressing towards the opposite pattern in the late (200–400 ms) window (c2). Nodes indicate channel locations. Links reflect significant connections (P < 0.05, bootstrap, FDR-corrected) between nodes, with their thickness corresponding to t-values from between-condition comparisons.

wSMI Connectivity Analysis

To examine when face-related and multimodal regions exhibited maximal levels of information exchange, we examined EEG connectivity in the early and late windows considering all electrodes across the scalp. As shown in previous embodied research (Birba et al. 2020), this approach offers a full coverage of all possible connections across the scalp, thus avoiding a-priori ROI selection and creating stringent conditions for hypothesis testing. The connectivity between all pairs of electrodes was quantified via the weighted symbolic mutual information (wSMI) (King et al. 2013), a method proven to be sensitive in both EEG (King et al. 2013; Sitt et al. 2014; Melloni et al. 2015; Dottori et al. 2017; Garcia-Cordero et al. 2017; Birba et al. 2020) and iEEG studies (Hesse et al. 2016, 2019a, 2019b). This metric assesses the extent to which two signals present joint nonrandom fluctuations, suggesting that they share information. In particular, wSMI represents a highly sensitive approach to assess functional connectivity because 1) it allows for robust estimation of the signals’ entropies, 2) it provides an efficient way to detect nonlinear coupling, and 3) it discards the spurious correlations arising from common sources, thus favoring nontrivial pairs of symbols.

This method calculates a nonlinear index of information sharing. In line with standard procedures (King et al. 2013), EEG signals are first transformed into a series of discrete symbols, such that segments of signals are synthetically captured by a distinguishing symbol according to their distinct morphology. The set of symbols captures all possible patterns between k (symbol size) samples, yielding k! symbols. The value of k is usually fixed to 3 in order to obtain robust estimations of symbols’ probability densities for the recorded signals given their duration (if it were set to 4, for example, the estimation of probability densities for 24 symbols would require much longer time series) (King et al. 2013). Additionally, the parameter τ defines the temporal separation between the k samples. Once the signals from each channel are converted into a particular sequence of symbols, the wSMI metric tracks joint probability patterns of coupling between the symbol sequences of each electrode pair. By setting k to 3 and τ to 16 ms, we sensitized wSMI to a frequency range of 1–20 Hz, which is apt to capture modulations related to facial and semantic processing (Zion-Golumbic et al. 2010; Vukovic and Shtyrov 2014), while encompassing the key range yielding N170 effects (Cauchoix et al. 2014; Rossion 2014) and proving equally traceable through EEG and iEEG methods. The joint probability between the signals was then calculated for each pair of channels, for each trial, and wSMI was estimated using a joint probability matrix multiplied by binary weights. These weights were set to zero for pairs of 1) identical symbols and 2) opposed symbols that could be elicited by a unique common source. Connectivity matrices for each stimulus type (FBP and nFBP words) were calculated in two time windows: an early one (0–200 ms poststimulus onset) and a later one (200–400 ms poststimulus onset). Such matrices were compared between conditions with nonparametric bootstrap tests (2000 permutations, P < 0.05), as reported in other studies (Naccache et al. 2005; Ibáñez et al. 2013; Hesse et al. 2016). Results were corrected for multiple comparisons via FDR.
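To make the symbolic transform and the weighting scheme concrete, the sketch below computes a simplified wSMI for one pair of signals. It is an illustration under stated assumptions, not the implementation used in the study: the function names are hypothetical, τ is expressed in samples (16 samples approximates 16 ms at 1024 Hz), symbols are defined as rank patterns, and the "opposed" symbol of a pattern is taken to be the pattern produced by the sign-inverted signal.

```python
import math
from itertools import permutations

import numpy as np


def _symbol_table(k):
    """Map each rank pattern of k samples to an integer symbol (k! symbols)."""
    return {p: i for i, p in enumerate(permutations(range(k)))}


def symbolize(x, k=3, tau=16):
    """Convert a 1-D signal into ordinal symbols (k samples, tau samples apart)."""
    x = np.asarray(x, dtype=float)
    n = x.size - (k - 1) * tau
    windows = np.stack([x[i * tau: i * tau + n] for i in range(k)], axis=1)
    ranks = np.argsort(np.argsort(windows, axis=1), axis=1)
    table = _symbol_table(k)
    return np.array([table[tuple(r)] for r in ranks.tolist()])


def wsmi(x, y, k=3, tau=16):
    """Simplified weighted symbolic mutual information between two signals."""
    table = _symbol_table(k)
    n_sym = len(table)
    sx, sy = symbolize(x, k, tau), symbolize(y, k, tau)

    # Joint and marginal symbol probabilities
    joint = np.zeros((n_sym, n_sym))
    np.add.at(joint, (sx, sy), 1)
    joint /= joint.sum()
    px, py = joint.sum(axis=1), joint.sum(axis=0)

    # Binary weights: zero for identical and for opposed symbol pairs
    weights = np.ones((n_sym, n_sym))
    for pattern, i in table.items():
        opposed = tuple(k - 1 - r for r in pattern)
        weights[i, i] = 0.0
        weights[i, table[opposed]] = 0.0

    nonzero = joint > 0
    terms = np.zeros_like(joint)
    terms[nonzero] = joint[nonzero] * np.log(joint[nonzero] / np.outer(px, py)[nonzero])
    return float((weights * terms).sum() / math.log(n_sym))
```

By construction, a signal paired with itself or with its sign-inverted copy yields zero, which is the property that discards trivial coupling from a common source.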

Following the exact procedure reported by Chennu et al. (2016), we represented EEG channels as nodes of a network. As seen in Figure 2b, each pair of connected nodes has an associated t-value, which is reflected by the normalized height of an arc. Therefore, the height of an arc offers a normalized representation of the weighted, undirected strength of the wSMI link between the nodes involved. Then, clearly delineated, nonoverlapping node groups (i.e., modules) within the network are identified by the Louvain algorithm, which calculates the network’s optimal community structure by maximizing the number of within-group links and minimizing the number of between-group links. For visual clarity, the strongest 10% of links are plotted. The colors plotted directly on the scalp represent the degree of the nodes involved, whereas the color of an arc identifies the group (i.e., module) to which it belongs (Chennu et al. 2016).

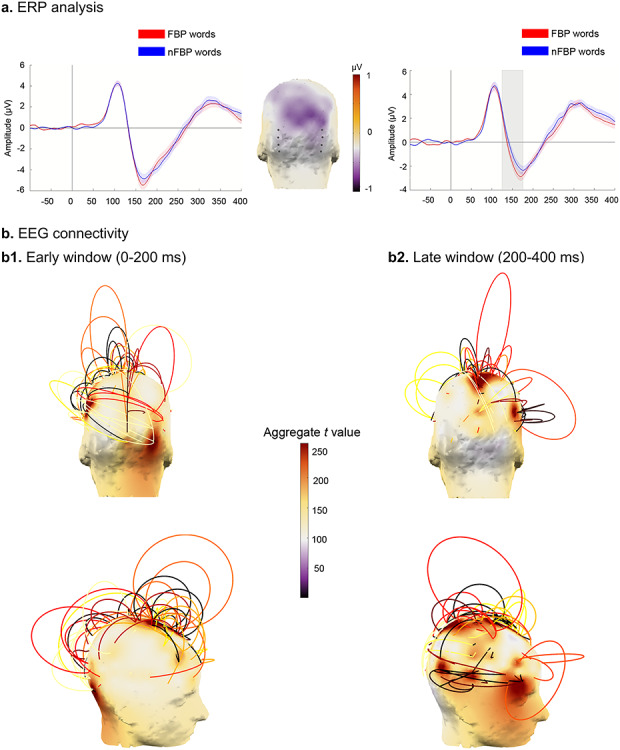

Figure 2.

Results from the EEG experiment. (a) Null ERP effects over the left ROI and enhanced N170 modulations over the right ROI for FBP over nFBP words (P < 0.05, bootstrapping, FDR-corrected). Middle inset shows the channels of each ROI. Significant time points are shaded in gray. (b) Connectivity patterns discriminating between FBP and nFBP words based on the wSMI index in the 1–20 Hz range. FBP words yielded significant EEG connectivity (P < 0.05, FDR-corrected) between right lateral occipital and left frontal/temporal electrodes in the early (b1) but not in the late (b2) window, the latter yielding more diffuse coactivation among fronto-temporo-parietal electrodes, with no involvement of canonical face-processing areas. Arcs reflect significant connections (P < 0.05, bootstrap, FDR-corrected) between nodes, with their height corresponding to t-values from between-condition comparisons and their color representing the group (i.e., module) to which they belong.

iEEG Case Study Methods

Recordings

Direct cortical recordings were obtained from semirigid, multilead electrodes implanted in each patient. The electrodes (DIXI Medical Instruments) had a diameter of 0.8 mm and consisted of 5, 10, or 15 2-mm wide contact leads placed 1.5-mm apart from each other. We used a video-SEEG monitoring system (Micromed) with depth-EEG with 67 and 118 electrodes for patients 1 and 2, respectively. The recordings were sampled at 1024 Hz. Postimplantation MRI and CT scans were obtained from each patient. Both volumetric images were affine registered and normalized using the SPM8 Matlab toolbox (Friston 2007). Montreal Neurological Institute (MNI) coordinates of each contact site and their respective Brodmann areas (BAs) were obtained from the MRIcron software (Rorden and Brett 2000). We used the positions of the electrode contact sites, normalized to MNI coordinate space, to examine the patients’ results in a common space (Foster et al. 2015).

Preprocessing

Data were filtered only to remove line artifacts following previous reports (Foster et al. 2015) and recommendations to avoid signal distortion (Widmann et al. 2015). A notch filter was applied at 50 Hz and its harmonic frequencies (100 Hz, 150 Hz) using EEGLAB’s (Delorme and Makeig 2004) default settings for band stop filters (3381 points, transition bandwidth = 0.9998 Hz, zero phase shift). Channels were discarded if 1) they exhibited pathological waveforms or noise under visual inspection; 2) their signal values exceeded five times the signal’s mean; 3) they included consecutive signal samples exceeding five standard deviations (SDs) from the gradient’s mean; 4) they were located in regions beyond task-relevant networks (i.e., face-processing and multimodal networks); 5) their contact sites were located in white matter; 6) their contact sites were located in epileptogenic zones or dysplastic regions identified by expert epilepsy neurologists (MCG, WS); and 7) they exhibited technical problems during acquisition (Ibáñez et al. 2013; Hesse et al. 2016; Parvizi and Kastner 2018). The remaining channels were referenced to the mean value (the averages of the sites in each subject were subtracted from each recording). Finally, the data were segmented into epochs from 250 ms prestimulus to 1000 ms poststimulus. All epochs were baseline corrected. Filtering and epoching scripts were adapted from an available toolbox (Delorme and Makeig 2004) and from custom-made code.

We defined two ROIs, corresponding to face-processing and multimodal networks. The face-processing network comprised key hubs in face-preferential regions (right fusiform, ventral/rostral lingual, and calcarine gyri) (Nakamura et al. 2000; Grill-Spector et al. 2004; Collins et al. 2012), whereas the multimodal network included areas implicated in multimodal lexico-semantic processing (angular and supramarginal gyri) (Binder and Desai 2011; Seghier 2013). Thus, the face-processing network comprised a total of 20 electrodes (11 and 9 electrodes from Patient 1 and Patient 2, respectively), and the multimodal network encompassed 18 electrodes (8 and 10 electrodes from Patient 1 and Patient 2, respectively). All iEEG analyses were performed including only the electrodes within these networks.

Time–Frequency Analysis

Time-frequency charts were obtained to identify differential modulations between conditions in each network for specific frequency bands. To this end, we combined both patients’ electrodes for each condition (FBP and nFBP words) and network (face-processing and multimodal) using an adapted version of the newtimef.m function (Delorme and Makeig 2004). The digitized signals were analyzed using a windowed Fourier transform (window-centered; window length: 250 ms; step 8 ms; window overlap 97%). The time-frequency charts were normalized to the baseline before stimulus onset. Normalization involved subtracting the baseline average and dividing by the baseline SD on a frequency-by-frequency basis using a window from −250 to 0 ms relative to stimulus onset. Significant power increases and decreases (P < 0.05) were analyzed across time against baseline values and between conditions with nonparametric bootstrap tests (2000 permutations), as reported in other iEEG studies (Naccache et al. 2005; Ibáñez et al. 2013). Results were corrected for multiple comparisons via FDR. Given its relevance for semantic and facial processing (Zion-Golumbic et al. 2010; Vukovic and Shtyrov 2014), the 1–20 Hz frequency band was averaged for each condition and network. For both networks, we plotted the time course of the FBP and nFBP conditions, along with the statistical differences (nonparametric bootstrap test, 2000 permutations, P < 0.05) between them.
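The baseline normalization and band averaging described here amount to a per-frequency z-score followed by a mean across 1–20 Hz. A minimal NumPy sketch follows; the function names and the array layout (frequencies by time points) are assumptions for illustration and do not reproduce the adapted newtimef.m routine.

```python
import numpy as np


def baseline_zscore(power, times, baseline=(-250.0, 0.0)):
    """Z-score each frequency row against its own prestimulus baseline.

    power: array (n_freqs, n_times); times: array (n_times,) in ms.
    """
    in_base = (times >= baseline[0]) & (times < baseline[1])
    mu = power[:, in_base].mean(axis=1, keepdims=True)
    sd = power[:, in_base].std(axis=1, keepdims=True)
    return (power - mu) / sd


def band_average(z, freqs, fmin=1.0, fmax=20.0):
    """Average the normalized chart across the band of interest."""
    in_band = (freqs >= fmin) & (freqs <= fmax)
    return z[in_band].mean(axis=0)


# Toy chart: 8-ms steps from -250 to 1000 ms, 1-40 Hz in 1-Hz bins
rng = np.random.default_rng(0)
times = np.arange(-250.0, 1000.0, 8.0)
freqs = np.arange(1.0, 41.0)
power = rng.gamma(2.0, size=(freqs.size, times.size))
z = baseline_zscore(power, times)
time_course = band_average(z, freqs)
```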

Decoding

We used multivariate pattern analysis (MVPA), referred to as “decoding,” to examine the classification efficiency of the combined signals of both patients related to each condition (FBP and nFBP words) between 1 and 20 Hz, for each network separately. These analyses, run with a Python script (Gramfort et al. 2014), consisted of a 10-fold stratified cross-validation. For each fold at each time sample, a linear support vector machine (SVM) (Chang and Lin 2007) was fitted on 9/10 of the trials (training set) with a single time sample recorded across all of the recorded sites for each subject. Each SVM aimed at finding the hyperplane that best discriminated between stimulus types, at each time sample. Classification performance was then computed with a receiver operating characteristic (ROC) curve, based on the probabilistic classification of an independent test set (1/10). With this approach, each classifier is assessed on 1) its ability to decode information at the time point at which it was trained, and 2) its ability to generalize across other time samples. Once t linear classifiers are fitted (where t is the training time), each of them is tested on its ability to discriminate the two types of trials at any time t’. This method thus leads to a temporal generalization matrix of “training time” by “testing time.” In each point of the matrix, decoding performance is summarized by the area under the curve (AUC). Classifiers trained and tested at the same time point correspond to the diagonal of this t² matrix, and are thus referred to as “diagonal” decoding. Statistical analyses were performed on each classifier’s continuous outputs via Mann–Whitney U tests. The threshold of significance was set at P < 0.05. For further details regarding the decoding pipeline implemented here, see King and Dehaene (2014). Classification scores were considered significant if they spanned at least 12 consecutive time points.
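The logic of the temporal generalization matrix can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the pipeline used in the study: to keep the example free of external libraries, a nearest-class-mean linear rule stands in for the linear SVM, AUC is computed directly from pairwise comparisons of decision values (the rank statistic underlying the Mann–Whitney U test), and all function names and the `X[trial][channel][time]` layout are hypothetical.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise comparisons (ties count 0.5)."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def fit_centroid(X, y):
    """Linear rule w.x + b from the two class means (stand-in for the SVM)."""
    n_feat = len(X[0])
    mu = []
    for c in (0, 1):
        rows = [x for x, l in zip(X, y) if l == c]
        mu.append([sum(r[j] for r in rows) / len(rows) for j in range(n_feat)])
    w = [m1 - m0 for m0, m1 in zip(mu[0], mu[1])]
    b = -sum(wj * (m0 + m1) / 2 for wj, m0, m1 in zip(w, mu[0], mu[1]))
    return w, b


def decision(model, x):
    """Signed distance-like score; larger values favor class 1."""
    w, b = model
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def stratified_folds(y, k):
    """Deal the trials of each class across k folds in turn."""
    folds = [[] for _ in range(k)]
    for c in (0, 1):
        idx = [i for i, l in enumerate(y) if l == c]
        for n, i in enumerate(idx):
            folds[n % k].append(i)
    return folds


def temporal_generalization(X, y, k=10):
    """Return gen[train_time][test_time], the cross-validated mean AUC."""
    n_times = len(X[0][0])
    gen = [[0.0] * n_times for _ in range(n_times)]
    for test_idx in stratified_folds(y, k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(y)) if i not in held_out]
        y_tr = [y[i] for i in train_idx]
        y_te = [y[i] for i in test_idx]
        for t in range(n_times):
            # Fit on all channels at a single training time sample.
            model = fit_centroid([[ch[t] for ch in X[i]] for i in train_idx], y_tr)
            for t2 in range(n_times):
                scores = [decision(model, [ch[t2] for ch in X[i]]) for i in test_idx]
                gen[t][t2] += auc(scores, y_te) / k
    return gen
```

The diagonal entries `gen[t][t]` correspond to "diagonal" decoding, whereas off-diagonal entries indicate how well a pattern learned at one latency generalizes to another. Each class needs at least `k` trials so that every test fold contains both stimulus types.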

wSMI Connectivity Analysis

To identify the period in which both networks exhibit greater functional interactions, we examined their information exchange patterns in each subject separately, considering both an early and a later time window. Connectivity coefficients were calculated using the wSMI measure (King et al. 2013) for each pair of iEEG electrodes within both networks for each patient separately (see Fig. 3a and Table 2). All parameters and statistical procedures were identical to those employed for EEG connectivity analysis. Even though results were obtained for each patient individually, these were plotted in the same figure using the BrainNet Viewer toolbox (Xia et al. 2013).
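For readers unfamiliar with wSMI, the following Python sketch conveys the general idea as we understand it from King et al. (2013): each signal is converted into a sequence of ordinal symbols (patterns of k samples spaced τ apart), and a weighted mutual information is computed over the joint symbol distribution, with identical and sign-opposed symbol pairs given zero weight. The sketch is an assumption-laden illustration rather than the code used here. In particular, `tau` is expressed in samples (its value in samples depends on the sampling rate), the helper names are hypothetical, and no filtering or trial averaging is included.

```python
from collections import Counter
from math import factorial, log


def symbolize(signal, k=3, tau=1):
    """Map each run of k samples spaced tau apart to its rank pattern."""
    span = (k - 1) * tau
    symbols = []
    for start in range(len(signal) - span):
        window = [signal[start + j * tau] for j in range(k)]
        order = sorted(range(k), key=lambda j: window[j])
        ranks = [0] * k
        for rank, j in enumerate(order):
            ranks[j] = rank
        symbols.append(tuple(ranks))
    return symbols


def opposite(symbol):
    """Rank pattern that the sign-flipped signal would produce."""
    k = len(symbol)
    return tuple(k - 1 - r for r in symbol)


def wsmi(x, y, k=3, tau=1):
    """Weighted symbolic mutual information between two equal-length signals."""
    sx, sy = symbolize(x, k, tau), symbolize(y, k, tau)
    n = len(sx)
    joint = Counter(zip(sx, sy))
    px, py = Counter(sx), Counter(sy)
    total = 0.0
    for (a, b), count in joint.items():
        if a == b or a == opposite(b):
            continue  # zero weight: identical or sign-opposed patterns
        p_ab = count / n
        total += p_ab * log(p_ab / ((px[a] / n) * (py[b] / n)))
    return total / log(factorial(k))
```

With this weighting, two copies of the same signal (or a signal and its mirror image) yield a value of zero, which is the property intended to discount trivial coupling from a shared source.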

Table 2.

Location of iEEG electrodes

| Network | Patient | Region | Label | BA | x | y | z |
|---|---|---|---|---|---|---|---|
| Face processing | 1 | Right fusiform gyrus | TBP5 | 19 | 21 | −59 | −4 |
| Face processing | 1 | Right fusiform gyrus | TBP6 | 19 | 23 | −59 | −4 |
| Face processing | 1 | Right fusiform gyrus | TBP7 | 19 | 25 | −59 | −4 |
| Face processing | 1 | Right fusiform gyrus | TBP8 | 19 | 27 | −59 | −4 |
| Face processing | 1 | Right lingual gyrus | OI1 | 18 | 9 | −75 | 2 |
| Face processing | 1 | Right lingual gyrus | OI2 | 18 | 11 | −75 | 2 |
| Face processing | 1 | Right lingual gyrus | OI3 | 18 | 13 | −75 | 2 |
| Face processing | 1 | Right lingual gyrus | OI4 | 18 | 15 | −75 | 2 |
| Face processing | 1 | Right lingual gyrus | OI5 | 18 | 17 | −75 | 2 |
| Face processing | 1 | Right lingual gyrus | OI6 | 18 | 19 | −75 | 2 |
| Face processing | 1 | Right lingual gyrus | OI7 | 19 | 21 | −75 | 2 |
| Face processing | 2 | Right supra calcarine cortex | SC11 | 31 | 25 | −73 | 27 |
| Face processing | 2 | Right supra calcarine cortex | SC12 | 31 | 27 | −72 | 27 |
| Face processing | 2 | Right supra calcarine cortex | SC14 | 31 | 33 | −71 | 27 |
| Face processing | 2 | Right supra calcarine cortex | SC15 | 19 | 37 | −71 | 27 |
| Face processing | 2 | Right cuneus | SC1 | 18 | 1 | −73 | 23 |
| Face processing | 2 | Right cuneus | SC2 | 18 | 4 | −73 | 23 |
| Face processing | 2 | Right cuneus | SC3 | 18 | 8 | −75 | 23 |
| Face processing | 2 | Right cuneus | SC4 | 18 | 11 | −75 | 23 |
| Face processing | 2 | Right cuneus | SC5 | 18 | 14 | −75 | 23 |
| Multimodal | 1 | Right angular gyrus | P7 | 40 | 32 | −46 | 45 |
| Multimodal | 1 | Right angular gyrus | P8 | 40 | 34 | −46 | 45 |
| Multimodal | 1 | Right angular gyrus | P9 | 40 | 38 | −46 | 45 |
| Multimodal | 1 | Right angular gyrus | P10 | 40 | 42 | −46 | 45 |
| Multimodal | 1 | Right supramarginal gyrus | GCP9 | 39 | 41 | −38 | 29 |
| Multimodal | 1 | Right supramarginal gyrus | GCP10 | 39 | 46 | −38 | 28 |
| Multimodal | 1 | Right supramarginal gyrus | GCP11 | 39 | 50 | −38 | 28 |
| Multimodal | 1 | Right supramarginal gyrus | GCP12 | 39 | 54 | −38 | 28 |
| Multimodal | 2 | Right posterior medial parietal lobe | PS1 | 40 | 4 | −42 | 40 |
| Multimodal | 2 | Right posterior medial parietal lobe | PS2 | 40 | 8 | −42 | 40 |
| Multimodal | 2 | Right posterior medial parietal lobe | PS3 | 40 | 11 | −44 | 40 |
| Multimodal | 2 | Right posterior medial parietal lobe | PS4 | 40 | 15 | −44 | 40 |
| Multimodal | 2 | Right posterior medial parietal lobe | PS5 | 40 | 19 | −44 | 40 |
| Multimodal | 2 | Right supramarginal gyrus | PS11 | 40 | 42 | −46 | 39 |
| Multimodal | 2 | Right supramarginal gyrus | PS12 | 40 | 46 | −46 | 39 |
| Multimodal | 2 | Right supramarginal gyrus | PS13 | 40 | 50 | −46 | 39 |
| Multimodal | 2 | Right supramarginal gyrus | PS14 | 40 | 54 | −46 | 39 |
| Multimodal | 2 | Right supramarginal gyrus | PS15 | 40 | 58 | −46 | 39 |

Note: The table lists details of the electrodes in the face-processing and multimodal networks. Information for each electrode includes (from left to right) the network to which it belonged, the patient in whom it was implanted, its anatomical region, the label used to identify the electrode, its BA, and the corresponding MNI coordinates.

Results

EEG Experiment

Behavioral Results

Subjects in the EEG experiment performed the task with high accuracy (M = 0.91, SD = 0.06) and consistent response times (M = 0.74 s, SD = 0.18 s). Neither variable yielded significant differences between FBP and nFBP words [accuracy: F(1,48) = 2.78, P = 0.102, ηp² = 0.054746; response times: F(1,48) = 0.6049, P = 0.44, ηp² = 0.012445].
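As a quick consistency check on the reported effect sizes, partial eta squared can be recovered from an F statistic and its degrees of freedom through the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short Python sketch below applies it to the accuracy contrast; the function name is hypothetical and the snippet is illustrative only.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Accuracy contrast between word types: F(1,48) = 2.78
accuracy_effect = partial_eta_squared(2.78, 1, 48)
print(round(accuracy_effect, 4))  # 0.0547
```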

ERP Results

Analysis of the average activity across both ROIs together revealed enhanced N170 modulations for FBP over nFBP words between ~150 and ~175 ms (P < 0.05, bootstrapping, FDR-corrected). When analyzed on its own, the left ROI revealed no differential modulation between FBP and nFBP words. By contrast, the right ROI showed that FBP words elicited enhanced N170 modulations, in a window of ~130–175 ms (P < 0.05, bootstrapping, FDR-corrected). For details, see Figure 2a. This lateralization pattern was corroborated over extended occipito-temporal (N170-sensitive) ROIs and absent in three central ROIs not typically associated with N170 modulations, attesting to the topographic specificity of the observed pattern (for details, see Supplementary Section 2).

The preeminence of right-sided modulations was confirmed even upon removing the 20-ms threshold set for our main analyses. Although this much less stringent approach yields some short-lived differences within the N170 window in the left ROI, modulations over the right ROI begin considerably earlier, present higher magnitudes, and prove much more stable in time (for details, see Supplementary Section 2).

Functional Connectivity Results

In the early (0–200 ms) window, relative to nFBP words, FBP words yielded significantly greater functional connectivity (P < 0.05, FDR-corrected) between right lateral occipital electrodes (coinciding with the face-sensitive ROI used for ERP analysis) and left frontal/temporal electrodes (over the 1–20 Hz range). In the same frequency range, no distinct involvement of face-preferential electrodes was observed for connectivity patterns in the late (200–400 ms) window, which instead yielded more diffuse coactivation among fronto-temporo-parietal regions (P < 0.05, FDR-corrected). For details, see Figure 2b.

Also, an exploratory complementary analysis, via a 2 × 2 ANOVA, showed that the interaction between time window and condition was not significant, suggesting that, despite their clearly distinct topographies, the significant connectivity patterns discriminating between word types in each window did not differ in their connectivity strength (for details, see Supplementary Section 3).

iEEG Case Studies

Behavioral Results

Accuracy for subjects 1 and 2 was 79% and 83%, respectively. Both participants had similar response times (subject 1: M = 1.32 s, SD = 0.42 s; subject 2: M = 1.13 s, SD = 0.27 s). In each case, performance was similar for both FBP and nFBP words, there being no significant differences in accuracy (subject 1: χ² = 0.141, P = 0.71; subject 2: χ² = 0.686, P = 0.41) or response time [subject 1: t(20) = 0.4143, P = 0.68; subject 2: t(20) = −1.6179, P = 0.12] between them.

Importantly, the performance of each patient did not differ significantly from that of sex-matched subjects in the EEG experiment, even controlling for age and education differences. This was true for both accuracy (subject 1: P = 0.32, ZCC = −1.47; subject 2: P = 0.71, ZCC = 0.847) and response time (subject 1: P = 0.10, ZCC = 2.566; subject 2: P = 0.51, ZCC = 1.529). For details, see Supplementary Section 4.

Time–Frequency Results

The baseline-normalized contrast between FBP and nFBP words revealed fast (~100 ms) activity boosts within the face-processing network, with maximal effects in a range of 1–20 Hz (P < 0.05, bootstrapping, FDR-corrected; Fig. 3b1, upper insets). This pattern was mirrored by later (~275 ms) effects in the multimodal network (P < 0.05, bootstrapping, FDR-corrected; Fig. 3b2, upper insets). For additional details, see Supplementary Section 5.
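The FDR correction applied to these contrasts can be sketched as follows. The text does not name the specific procedure, so the example assumes the widely used Benjamini–Hochberg step-up method; the function name and the example P values are hypothetical.

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Mark which tests survive Benjamini-Hochberg FDR control at level alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank whose P value falls under its step-up threshold.
    cutoff_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= alpha * rank / m:
            cutoff_rank = rank
    # Every test ranked at or below the cutoff is declared significant.
    keep = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= cutoff_rank:
            keep[i] = True
    return keep


# Hypothetical P values from four time-frequency points.
print(benjamini_hochberg([0.001, 0.04, 0.03, 0.2]))  # [True, False, False, False]
```

Because the procedure is step-up, a P value that misses its own threshold can still be retained when a larger P value further down the ranking meets its threshold.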

Decoding Results

In the face-processing network, word-type classification (based on 1–20 Hz activity) yielded AUC scores of ~80% (P < 0.05, Mann–Whitney U test) across the first 200 ms, indicating high rates of classification between FBP and nFBP words (Fig. 3b1, lower left inset). By contrast, in the multimodal network, AUC scores for word-type classification (based on 1–20 Hz activity) only surpassed ~70% after 250 ms (P < 0.05, Mann–Whitney U test; Fig. 3b2, lower left inset). Compatibly, stimulus decoding yielded an earlier generalization pattern for the face-processing than the multimodal network (Figs 3b1 and b2, lower right insets). For additional details, see Supplementary Section 6.

Functional Connectivity Results

In the 1–20 Hz range (parameters: k = 3, τ = 32 ms), wSMI connectivity between the face-processing and the multimodal networks was greater (P < 0.05, bootstrap, FDR-corrected) for FBP over nFBP words in the early (0–200 ms) window (Fig. 3c1). Conversely, FBP words yielded weaker (P < 0.05, bootstrap, FDR-corrected) connectivity than nFBP words in the late (200–400 ms) window (Fig. 3c2).

An exploratory analysis, via a 2 × 2 ANOVA, revealed a null effect of time window, a main effect of condition (with higher connectivity for FBP than nFBP words), and a significant interaction between time window and condition (although this was not driven by differences in any of the critical comparisons). The main implication of this analysis is that the differential connectivity patterns in each window did not differ in their strength and that, overall, FBP words elicited higher information sharing than nFBP words (for details, see Supplementary Section 7).

Discussion

Rooted in a unique cross-methodological design, the above results circumvent key confounds and shortcomings (Bedny and Caramazza 2011; Pulvermüller 2018) of previous works informing the grounded/symbolic debate. Crucially, by targeting the facial/nonfacial (as opposed to the action/nonaction) distinction via a highly specific early EEG marker (the N170) and iEEG recordings from within critical regions impervious to motor confounds, alongside trial-by-trial decoding and functional connectivity analyses, this study offers unparalleled evidence that, at least for some lexical categories, grounded mechanisms play an inceptive role during semantic processing.

First, FBP words significantly increased N170 amplitude when averaging both ROIs, this effect being driven by right hemisphere activity (Fig. 2a). This precise modulation is the most robust early marker of facial processing (Rossion 2014), triggered by any stimulus evoking face-specific information, including portraits, emoticons, and objects displayed in face-like arrangements (Rossion 2014), as well as relevant linguistic stimuli (Ibáñez et al. 2010; Ibáñez, Riveros, et al. 2012b; Ibáñez, Urquina, et al. 2012c; Ibáñez et al. 2014). The engagement of this fast and highly specific marker by modality-specific words speaks to their grounding in sensory experience (Pulvermüller 2018; García et al. 2019).

In fact, this conclusion finds support in previous EEG studies. For example, sound-evocative words modulate ERPs at ~150 ms over electrodes that capture auditory activity, which again indicates swift access to modality-specific information (Kiefer et al. 2008). By the same token, action verbs have been linked to modulation of early (~200–280 ms) ERPs in topographies and sources associated with sensorimotor processing (Dalla Volta et al. 2014, 2018). Also, compared with affirmative sentences, negative sentences elicit larger amplitude of the inhibition-related N1 component (Beltran et al. 2018), suggesting that suppression of linguistic information recruits early domain-general response suppression mechanisms (see also Giora et al. 2007; Maciuszek and Polczyk 2017). In line with these findings, our results indicate that fast embodied effects can be detected even for mechanisms mediating the recognition of complex visual configurations (i.e., faces).

Moreover, iEEG results show that such early grounding occurs within relevant hubs and before the recruitment of multimodal networks. Relative to nFBP words, FBP words triggered maximal power increases in the face-processing network (Nakamura et al. 2000; Grill-Spector et al. 2004; Collins et al. 2012) at ~100 ms (Fig. 3b1), the very timespan in which ventral/lateral occipito-temporal cortices increase internal activity during face detection (Allison et al. 1999; Rosburg et al. 2010). Of note, such frequency boosts preceded corresponding results in multimodal hubs (Binder and Desai 2011; Seghier 2013), which peaked only after the 250-ms mark (Fig. 3b2). This evidence further attests to the precedence of sensorimotor reactivations over coarse-grained semantic effects (García et al. 2019).

Of note, MEG and fMRI embodiment research (e.g., Raposo et al. 2009; van Dam et al. 2010, 2012; Rodriguez-Ferreiro et al. 2011; Bedny et al. 2012; Boulenger et al. 2012; Mollo et al. 2016) has been claimed to yield anatomically imprecise (Kemmerer and Gonzalez-Castillo 2010) and otherwise inconsistent (García et al. 2019) results. Conversely, iEEG studies have revealed increased modulations within the amygdala (a structure indexing fear and threat) and the primary motor cortex (a core region subserving bodily movement) during processing of threatening words (Naccache et al. 2005) and action verbs (Ibáñez et al. 2013), respectively. Along with these results, our iEEG case studies reveal primary embodied effects with spatiotemporally optimal data, a high signal-to-noise ratio, and reduced muscular or ocular artifacts (Buzsáki 2006), thus circumventing response-related (Pulvermüller 2013) and phono-articulatory (Wilson et al. 2004) confounds.

Furthermore, differential modulations in the face-processing network were maximal between 1 and 20 Hz. This range subsumes bands implicated in lexico-semantic (Hald et al. 2006; Davidson and Indefrey 2007; Kielar et al. 2014; Maguire et al. 2015; Dottori et al. 2020) and embodied (van Elk et al. 2010; Vukovic and Shtyrov 2014; Birba et al. 2020) effects, while overlapping with the band that mainly drives N170 face effects (5–15 Hz) (Rousselet et al. 2007; Rossion 2014). In addition, word-type classification in the 1–20 Hz range surpassed 80% within 100 ms in the face-processing network (Fig. 3b1), yielding a systematic generalization through time (Fig. 3b1). Such maximal classification score preceded corresponding results in multimodal hubs (Binder and Desai 2011; Seghier 2013), which yielded classification peaks only after 250 ms (Fig. 3b2). This replicates recent findings in motor embodied domains (García et al. 2019) and underscores the consistency of our results across individual items.

Considering their timing, such face-preferential signatures hardly reflect postcomprehension (e.g., imagery-related) phenomena (Pulvermüller 2013, 2018; García et al. 2019). Granted, mechanistic interpretations in embodied research prove untenable in the absence of causal evidence, partially afforded to different degrees by lesion models (Birba, Garcia-Cordero, et al. 2017a; Bocanegra et al. 2017; Gallese and Cuccio 2018; García et al. 2018) or neuromodulation studies (Liuzzi et al. 2010; Willems et al. 2011; Vicario and Rumiati 2012; Kuipers et al. 2013; Vukovic et al. 2017). Notwithstanding, the present evidence challenges the view that grounding effects play merely secondary or modulatory roles during language processing (Hickok 2009, 2014; Pulvermüller 2018). Indeed, the latency of these modulations mirrors the earliest semantic effects documented to date (Pulvermüller 2018). Moreover, the windows exhibiting these face-preferential patterns replicate those in which action-related verbs (García et al. 2019) and sound-related nouns (Kiefer et al. 2008) elicit embodied effects in motor and auditory brain areas, respectively.

Yet, modality-specific mechanisms are not solely responsible for the inception of meaning. Indeed, differential information-sharing between both networks for FBP words was greater before than after the 200-ms mark (Figs 2b and 3c). This suggests that processing of particular noun types (and potentially other word classes) implies an early dynamic interplay between modality-specific and multimodal mechanisms, arguing against radical views which reduce comprehension to embodied reactivations (Hickok 2009).

Interestingly, our task required explicit judgments of face-relatedness, potentially triggering top–down attentional effects (i.e., face-specific expectations, perhaps manifested as implicit facial imagery). Yet, this in fact supports the embodied nature of the results: if differential neural responses to FBP and nFBP words are modulated by top–down effects, then face-sensitive semantic mechanisms must necessarily exist for top–down effects to operate on. In fact, a similar scenario is systematically present in studies showing effector-specific effects of manual action verbs on hand responses (Cardona et al. 2013; García and Ibáñez 2016). Although top–down preparatory motor activity in these tasks may prime hand-specific circuits trial after trial, manual responses would not be differentially affected by processing of manual action verbs unless these were actually grounded in those circuits. Similarly, the possible role of top–down operations in this study would not refute but rather confirm the fast embodied nature of the observed effects, extending evidence from implicit semantic tasks (Kiefer et al. 2008; García et al. 2019).

Be that as it may, the detection of these fast embodied effects for FBP words does not necessarily entail that sensorimotor resonance will precede multimodal effects under all circumstances. The late engagement of canonical multimodal regions during semantic processing is fairly well established for various word types, with most evidence showing that such circuits are systematically recruited only after the 250-ms mark (Jackson et al. 2015; Shimotake et al. 2015; Chen et al. 2016; Lambon Ralph et al. 2017; Mollo et al. 2017; García et al. 2019). However, the relative timing of embodied systems seems related to both stimulus- and task-related factors. On the one hand, as previously stated, early modulation of embodied systems has been reported for sound-evocative nouns (Kiefer et al. 2008), action-related verbs (Ibáñez et al. 2013; Dalla Volta et al. 2014, 2018; García et al. 2019), and negative markers (Beltran et al. 2018). On the other hand, sensorimotor resonance effects have also been observed at later stages (>300 ms) during processing of action verbs (Papeo et al. 2009) and emotion-laden words (Naccache et al. 2005), for instance. Therefore, although multimodal systems seem to fire consistently only after 250 ms following word presentation (Lambon Ralph et al. 2017), the latency of embodied reactivations appears to be either less consistent or more extended across word types and experimental constraints. The early recruitment of face-related networks by FBP words, therefore, must not be over-generalized to any and all lexical categories or processing conditions. If anything, sweeping statements implying that embodied systems can never precede multimodal modulations (Mollo et al. 2017) should be reevaluated. More nuanced conceptions should be favored instead, acknowledging that the temporal unfolding of embodied and multimodal processes is driven by the specific semantic features of the words at hand (García et al. 2019), in combination with ongoing task demands. Considering that the use of iEEG recordings is unaffected by previous critical confounds, the present study suggests that FBPs do hinge on very fast reactivations of modality-specific systems, even when top–down mechanisms are at play during explicit semantic processing.

Finally, from a broader theoretical perspective, note that the effects we predicted and detected do not involve primary sensorimotor regions. Most embodiment research has typically targeted such areas, yielding, for example, distinct modulations of the primary motor cortex by action verbs. However, several studies have also reported embodied effects beyond primary sensorimotor regions, including significant engagement of olfactory, gustatory, and emotion-sensitive circuits, as well as regions subserving chromatic perception and shape recognition, during processing of smell-related (Gonzalez et al. 2006), taste-related (Barros-Loscertales et al. 2012), threat-related (Naccache et al. 2005), color-related (Simmons et al. 2007), and form-related (Wheatley et al. 2005) words, respectively. Our study aligns with the latter empirical corpus, showing that fast embodied effects may also be found in other unimodal association systems—specifically, the face-processing network.

Of course, the fusiform face area also responds to stimuli from other sensory modalities, such as auditory signals (Blank et al. 2015). Crucially, this does not undermine embodied interpretations of the observed effects. First, virtually all functionally specific regions and networks, including primary sensorimotor regions, are modulated by information from multiple modalities. For example, auditory stimuli can prime visual (Grahn et al. 2011), haptic (Kassuba et al. 2013), and motor (D'Ausilio et al. 2006) processes, just like tactile information can modulate visual (O’Callaghan et al. 2018), auditory (Kassuba et al. 2013), and motor (Kim et al. 2015) regions. In this sense, note that language embodiment hypotheses do not require finding grounding effects in functionally exclusive areas; rather, the field aims to establish whether linguistic units evoking specific experiences (e.g., words denoting the facial features we encounter in everyday life) differentially recruit core mechanisms implicated in those nonverbal experiences (i.e., processing of actual faces), irrespective of whether such regions belong within primary sensorimotor areas or networks subserving unimodal or multimodal association processes. The key markers targeted in the present study (N170 peaks and modulations of the right fusiform/lingual network) are objective hallmarks of face processing (Puce et al. 1995; Kanwisher et al. 1997; Kim et al. 1999; Barton et al. 2002; Rossion and Jacques 2011; Behrmann and Plaut 2014; Cauchoix et al. 2014; Rossion 2014; Gao et al. 2019). Their fast differential engagement during FBP as opposed to nFBP word processing suffices for interpreting the present effects as embodied patterns. Therefore, our work aligns with previous studies (e.g., Naccache et al. 2005; Wheatley et al. 2005; Gonzalez et al. 2006; Simmons et al. 2007; Barros-Loscertales et al. 2012) to suggest that embodied mechanisms are pervasive across human neurocognition, going beyond reactivations of primary sensorimotor areas.

Limitations and Avenues for Further Research

Our study features some limitations which pave the way for further research. First, as noted before, the task schema we used may trigger top–down operations. It would be interesting to examine whether present results are replicated in tasks that do not require explicit categorization of face-relatedness versus nonface-relatedness, as this would shed further light on the actual role of potential top–down and bottom–up mechanisms.

Another limitation is the use of a lexico-semantic category (Spanish FBP nouns) that offered only a restricted number of viable exemplars. Although the present number of items per category resembles that of previous EEG studies reporting robust embodied effects (Dalla Volta et al. 2014, 2018) while surpassing Ns in other iEEG reports (Heit et al. 1988; Hesse et al. 2016), it would be crucial for our approach to be replicated with other lexico-semantic domains affording a larger number of viable items.

In addition, our task entailed an imbalance between the summated critical and filler items requiring “Yes” responses (42 per run, including 21 FBPs and 21 facial-action fillers) and “No” responses (63 per run, including 21 nFBPs, 21 nonfacial-action fillers, and 21 object–noun fillers). Although the use of fillers is standard in neurolinguistic research (Shtyrov et al. 2010; Segaert et al. 2011; Bašnáková et al. 2013; Weber et al. 2016), this disproportion might have led some participants to favor unintended response strategies, perhaps guided by implicit intertrial predictions (Ma and Jazayeri 2014). However, even if present, these strategies would still be likely driven by semantic criteria, as required by our task. Also, such potential intertrial predictions would hardly have been successful given the pseudorandomization of stimuli across testing sessions. Moreover, accuracy and RT results show that the two critical word categories (FBPs and nFBPs) yielded similar behavioral performance, reducing the possibility of major strategy-related discrepancies between conditions. Notwithstanding, future implementations of our paradigm should even out the number of facial and nonfacial fillers to fully rule out this possibility.

Also, the implants in each of our two iEEG subjects differed in their location. As argued elsewhere (Hesse et al. 2019), this variability is inevitable in iEEG research given that implant sites are chosen following clinical criteria dictated by each patient’s specific condition. Importantly, despite such heterogeneity, the electrodes integrating the face-processing and the multimodal network in each patient objectively belonged within face-sensitive regions (Nakamura et al. 2000; Grill-Spector et al. 2004; Collins et al. 2012) and canonical conceptual integration areas (Binder and Desai 2011; Seghier 2013), respectively. Notwithstanding, it would be desirable to test the systematicity and specificity of our findings on iEEG patients with implants in more extended regions.

Conclusion

Succinctly, through a combination of EEG (ERP, functional connectivity) and iEEG (time-frequency analysis, decoding, functional connectivity) methods, we found that face-preferential mechanisms discriminated and individually classified FBP words before multimodal systems, and that both face-preferential and multimodal networks showed maximal interaction before the 200-ms mark. These multidimensional results, devoid of previous confounds, indicate that, at least during processing of particular word types, meanings spring via fast sensorimotor reenactments and rapid interplays with cross-modal conceptual systems. Such new insights may help disentangle extant controversies (Papeo et al. 2009; Bedny and Caramazza 2011; Pulvermüller 2013, 2018; Shtyrov et al. 2014) on how language is understood in the course of neural time.

Funding

Consejo Nacional de Investigaciones Científicas y Técnicas; Fondo para la Investigación Científica y Tecnológica (PICT 2017-1818 and 2017-1820); Comisión Nacional de Investigación Científica y Tecnológica (FONDECYT Regular 1170010); Fondo de Financiamiento de Centros de Investigación en Áreas Prioritarias (15150012); Programa Interdisciplinario de Investigación Experimental en Comunicación y Cognición (PIIECC), Facultad de Humanidades, USACH; the Global Brain Health Institute (GBHI ALZ UK-20-639295); and the Multi-Partner Consortium to Expand Dementia Research in Latin America (ReDLat), funded by the National Institutes of Aging of the National Institutes of Health under award number R01AG057234, an Alzheimer’s Association grant (SG-20-725707-ReDLat), the Rainwater Foundation, and the Global Brain Health Institute. The content is solely the responsibility of the authors and does not represent the official views of the National Institutes of Health, Alzheimer’s Association, Rainwater Charitable Foundation, or Global Brain Health Institute.

Competing Interests Statement

Adolfo M. García: The author declares no competing financial interests. Eugenia Hesse: The author declares no competing financial interests. Agustina Birba: The author declares no competing financial interests. Federico Adolfi: The author declares no competing financial interests. Ezequiel Mikulan: The author declares no competing financial interests. Miguel Martorell Caro: The author declares no competing financial interests. Agustín Petroni: The author declares no competing financial interests. Tristán Bekinchstein: The author declares no competing financial interests. María del Carmen García: The author declares no competing financial interests. Walter Silva: The author declares no competing financial interests. Carlos Ciraolo: The author declares no competing financial interests. Esteban Vaucheret: The author declares no competing financial interests. Lucas Sedeño: The author declares no competing financial interests. Agustín Ibáñez: The author declares no competing financial interests.

Author Contributions

Adolfo M. García: conceptualization, methodology, validation, investigation, writing—original draft preparation, visualization. Eugenia Hesse: software, formal analysis, data curation, writing—original draft preparation, visualization. Agustina Birba: software, formal analysis, data curation, writing—original draft preparation, visualization. Federico Adolfi: formal analysis, data curation. Ezequiel Mikulan: resources; data curation. Miguel Martorell Caro: resources; data curation. Agustín Petroni: formal analysis. Tristán Bekinchstein: resources. María del Carmen García: resources. Walter Silva: resources. Carlos Ciraolo: resources. Esteban Vaucheret: resources. Lucas Sedeño: investigation, writing—review & editing. Agustín Ibáñez: conceptualization, methodology, resources; writing—review & editing, visualization, supervision, project administration, funding acquisition.

Data Availability Statement

All experimental data, as well as the custom code used to run the experimental task, are fully available online via the Open Science Framework, at https://osf.io/63qes/ (García A.M., 2019. Data from “Time to face language.” doi: 10.17605/OSF.IO/63QES).

Supplementary Material

References

- Allison T, Puce A, Spencer DD, McCarthy G. 1999. Electrophysiological studies of human face perception. I: potentials generated in occipitotemporal cortex by face and non-face stimuli. Cereb Cortex. 9:415–430. [DOI] [PubMed] [Google Scholar]

- Amoruso L, Sedeno L, Huepe D, Tomio A, Kamienkowski J, Hurtado E, Cardona JF, Alvarez Gonzalez MA, Rieznik A, Sigman M et al. 2014. Time to tango: expertise and contextual anticipation during action observation. Neuroimage. 98:366–385. [DOI] [PubMed] [Google Scholar]

- Barros-Loscertales A, Gonzalez J, Pulvermüller F, Ventura-Campos N, Bustamante JC, Costumero V, Parcet MA, Avila C. 2012. Reading salt activates gustatory brain regions: fMRI evidence for semantic grounding in a novel sensory modality. Cereb Cortex. 22:2554–2563. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barton JJ, Press DZ, Keenan JP, O'Connor M. 2002. Lesions of the fusiform face area impair perception of facial configuration in prosopagnosia. Neurology. 58:71–78.

- Bašnáková J, Weber K, Petersson KM, van Berkum J, Hagoort P. 2013. Beyond the language given: the neural correlates of inferring speaker meaning. Cereb Cortex. 24:2572–2578.

- Bean J. 2011. Rey auditory verbal learning test, Rey AVLT. In: Kreutzer J, DeLuca J, Caplan B, editors. Encyclopedia of clinical neuropsychology. New York: Springer, pp. 2174–2175.

- Bedny M, Caramazza A. 2011. Perception, action, and word meanings in the human brain: the case from action verbs. Ann N Y Acad Sci. 1224:81–95.

- Bedny M, Caramazza A, Pascual-Leone A, Saxe R. 2012. Typical neural representations of action verbs develop without vision. Cereb Cortex. 22:286–293.

- Behrmann M, Plaut DC. 2014. Bilateral hemispheric processing of words and faces: evidence from word impairments in prosopagnosia and face impairments in pure alexia. Cereb Cortex. 24:1102–1118.

- Beltran D, Muneton-Ayala M, de Vega M. 2018. Sentential negation modulates inhibition in a stop-signal task. Evidence from behavioral and ERP data. Neuropsychologia. 112:10–18.

- Berchio C, Piguet C, Michel CM, Cordera P, Rihs TA, Dayer AG, Aubry J-M. 2017. Dysfunctional gaze processing in bipolar disorder. NeuroImage Clin. 16:545–556.

- Binder JR, Desai RH. 2011. The neurobiology of semantic memory. Trends Cogn Sci. 15:527–536.

- Birba A, Garcia-Cordero I, Kozono G, Legaz A, Ibáñez A, Sedeno L, Garcia AM. 2017a. Losing ground: frontostriatal atrophy disrupts language embodiment in Parkinson's and Huntington's disease. Neurosci Biobehav Rev. 80:673–687.

- Birba A, Guerrero DB, Caro MM, Trevisan P, Kogan B, Sedeño L, Ibáñez A, García AM. 2020. Motor-system dynamics during naturalistic reading of action narratives in first and second language. Neuroimage. 216:116820.

- Birba A, Hesse E, Sedeno L, Mikulan EP, Garcia MDC, Avalos J, Adolfi F, Legaz A, Bekinschtein TA, Zimerman M et al. 2017b. Enhanced working memory binding by direct electrical stimulation of the parietal cortex. Front Aging Neurosci. 9:178.

- Blank H, Kiebel SJ, von Kriegstein K. 2015. How the human brain exchanges information across sensory modalities to recognize other people. Hum Brain Mapp. 36:324–339.

- Bocanegra Y, García AM, Lopera F, Pineda D, Baena A, Ospina P, Alzate D, Buriticá O, Moreno L, Ibáñez A et al. 2017. Unspeakable motion: selective action-verb impairments in Parkinson’s disease patients without mild cognitive impairment. Brain Lang. 168:37–46.

- Boulenger V, Shtyrov Y, Pulvermüller F. 2012. When do you grasp the idea? MEG evidence for instantaneous idiom understanding. Neuroimage. 59:3502–3513.

- Brovelli A, Lachaux JP, Kahane P, Boussaoud D. 2005. High gamma frequency oscillatory activity dissociates attention from intention in the human premotor cortex. Neuroimage. 28:154–164.

- Buzsáki G. 2006. Rhythms of the brain. Oxford, New York: Oxford University Press.

- Calbi M, Siri F, Heimann K, Barratt D, Gallese V, Kolesnikov A, Umiltà MA. 2019. How context influences the interpretation of facial expressions: a source localization high-density EEG study on the “Kuleshov effect”. Sci Rep. 9:2107.

- Canales-Johnson A, Billig AJ, Olivares F, Gonzalez A, Garcia MC, Silva W, Vaucheret E, Ciraolo C, Mikulan E, Ibáñez A et al. 2020. Dissociable neural information dynamics of perceptual integration and differentiation during bistable perception. Cereb Cortex. bhaa058. doi: 10.1093/cercor/bhaa058.

- Canales-Johnson A, Silva C, Huepe D, Rivera-Rei A, Noreika V, Garcia Mdel C, Silva W, Ciraolo C, Vaucheret E, Sedeno L et al. 2015. Auditory feedback differentially modulates behavioral and neural markers of objective and subjective performance when tapping to your heartbeat. Cereb Cortex. 25:4490–4503.

- Cardona JF, Gershanik O, Gelormini-Lezama C, Houck AL, Cardona S, Kargieman L, Trujillo N, Arevalo A, Amoruso L, Manes F et al. 2013. Action-verb processing in Parkinson's disease: new pathways for motor-language coupling. Brain Struct Funct. 218:1355–1373.

- Cauchoix M, Barragan-Jason G, Serre T, Barbeau EJ. 2014. The neural dynamics of face detection in the wild revealed by MVPA. J Neurosci. 34:846–854.

- Chang C-C, Lin C-J. 2007. LIBSVM: a library for support vector machines. ACM Trans Int Sys Tech. 2(3):27.

- Chen Y, Shimotake A, Matsumoto R, Kunieda T, Kikuchi T, Miyamoto S, Fukuyama H, Takahashi R, Ikeda A, Lambon Ralph MA. 2016. The 'when' and 'where' of semantic coding in the anterior temporal lobe: temporal representational similarity analysis of electrocorticogram data. Cortex. 79:1–13.

- Chennu S, Noreika V, Gueorguiev D, Blenkmann A, Kochen S, Ibáñez A, Owen AM, Bekinschtein TA. 2013. Expectation and attention in hierarchical auditory prediction. J Neurosci. 33:11194–11205.

- Chennu S, O’Connor S, Adapa R, Menon DK, Bekinschtein TA. 2016. Brain connectivity dissociates responsiveness from drug exposure during propofol-induced transitions of consciousness. PLoS Comput Biol. 12:e1004669.

- Collins HR, Zhu X, Bhatt RS, Clark JD, Joseph JE. 2012. Process- and domain-specificity in regions engaged for face processing: an fMRI study of perceptual differentiation. J Cogn Neurosci. 24:2428–2444.

- Colombatto C, McCarthy G. 2017. The effects of face inversion and face race on the P100 ERP. J Cogn Neurosci. 29:664–676.

- Couto B, Salles A, Sedeno L, Peradejordi M, Barttfeld P, Canales-Johnson A, Dos Santos YV, Huepe D, Bekinschtein T, Sigman M et al. 2014. The man who feels two hearts: the different pathways of interoception. Soc Cogn Affect Neurosci. 9:1253–1260.

- Crone NE, Hao L, Hart J Jr, Boatman D, Lesser RP, Irizarry R, Gordon B. 2001. Electrocorticographic gamma activity during word production in spoken and sign language. Neurology. 57:2045–2053.

- D'Ausilio A, Altenmuller E, Olivetti Belardinelli M, Lotze M. 2006. Cross-modal plasticity of the motor cortex while listening to a rehearsed musical piece. Eur J Neurosci. 24:955–958.

- Dalla Volta R, Avanzini P, De Marco D, Gentilucci M, Fabbri-Destro M. 2018. From meaning to categorization: the hierarchical recruitment of brain circuits selective for action verbs. Cortex. 100:95–110.

- Dalla Volta R, Fabbri-Destro M, Gentilucci M, Avanzini P. 2014. Spatiotemporal dynamics during processing of abstract and concrete verbs: an ERP study. Neuropsychologia. 61:163–174.

- Dastjerdi M, Ozker M, Foster BL, Rangarajan V, Parvizi J. 2013. Numerical processing in the human parietal cortex during experimental and natural conditions. Nat Commun. 4:2528.

- Davidson DJ, Indefrey P. 2007. An inverse relation between event-related and time-frequency violation responses in sentence processing. Brain Res. 1158:81–92.

- Davis CJ, Perea M. 2005. BuscaPalabras: a program for deriving orthographic and phonological neighborhood statistics and other psycholinguistic indices in Spanish. Behav Res Methods. 37:665–671.

- De Lucia M, Constantinescu I, Sterpenich V, Pourtois G, Seeck M, Schwartz S. 2011. Decoding sequence learning from single-trial intracranial EEG in humans. PLoS One. 6:e28630.

- Delorme A, Makeig S. 2004. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods. 134:9–21.

- Dottori M, Hesse E, Santilli M, Vilas MG, Martorell Caro M, Fraiman D, Sedeño L, Ibáñez A, García AM. 2020. Task-specific signatures in the expert brain: differential correlates of translation and reading in professional interpreters. Neuroimage. 209:116519.

- Dottori M, Sedeno L, Martorell Caro M, Alifano F, Hesse E, Mikulan E, Garcia AM, Ruiz-Tagle A, Lillo P, Slachevsky A et al. 2017. Towards affordable biomarkers of frontotemporal dementia: a classification study via network's information sharing. Sci Rep. 7:3822.

- Faul F, Erdfelder E, Lang AG, Buchner A. 2007. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods. 39:175–191.

- Fittipaldi S, Abrevaya S, Adl F, Pascariello GO, Hesse E, Birba A, Salamone P, Hildebrandt M, Martí SA, Pautassi RM et al. 2020. A multidimensional and multi-feature framework for cardiac interoception. Neuroimage. 212:116677.

- Foster BL, Rangarajan V, Shirer WR, Parvizi J. 2015. Intrinsic and task-dependent coupling of neuronal population activity in human parietal cortex. Neuron. 86:578–590.

- Friston KJ. 2007. Statistical parametric mapping: the analysis of functional brain images. Amsterdam, Boston: Elsevier/Academic Press.

- Gallese V, Cuccio V. 2018. The neural exploitation hypothesis and its implications for an embodied approach to language and cognition: insights from the study of action verbs processing and motor disorders in Parkinson's disease. Cortex. 100:215–225.

- Gao C, Conte S, Richards JE, Xie W, Hanayik T. 2019. The neural sources of N170: understanding timing of activation in face-selective areas. Psychophysiology. 56:e13336.

- García AM, Bocanegra Y, Herrera E, Moreno L, Carmona J, Baena A, Lopera F, Pineda D, Melloni M, Legaz A et al. 2018. Parkinson's disease compromises the appraisal of action meanings evoked by naturalistic texts. Cortex. 100:111–126.

- García AM, Ibáñez A. 2016. A touch with words: dynamic synergies between manual actions and language. Neurosci Biobehav Rev. 68:59–95.

- García AM, Moguilner S, Torquati K, García-Marco E, Herrera E, Muñoz E, Castillo EM, Kleineschay T, Sedeño L, Ibáñez A. 2019. How meaning unfolds in neural time: embodied reactivations can precede multimodal semantic effects during language processing. Neuroimage. 197:439–449.

- Garcia-Cordero I, Esteves S, Mikulan EP, Hesse E, Baglivo FH, Silva W, Garcia MDC, Vaucheret E, Ciraolo C, Garcia HS et al. 2017. Attention, in and out: scalp-level and intracranial EEG correlates of interoception and exteroception. Front Neurosci. 11:411.

- Garcia-Cordero I, Sedeno L, de la Fuente L, Slachevsky A, Forno G, Klein F, Lillo P, Ferrari J, Rodriguez C, Bustin J et al. 2016. Feeling, learning from and being aware of inner states: interoceptive dimensions in neurodegeneration and stroke. Philos Trans R Soc Lond B Biol Sci. 371:20160006.

- Garcia-Cordero I, Sedeno L, Fraiman D, Craiem D, de la Fuente LA, Salamone P, Serrano C, Sposato L, Manes F, Ibáñez A. 2015. Stroke and neurodegeneration induce different connectivity aberrations in the insula. Stroke. 46:2673–2677.

- Giora R, Fein O, Aschkenazi K, Alkabets-zlozover I. 2007. Negation in context: a functional approach to suppression. Discourse Process. 43:153–172.

- Gonzalez J, Barros-Loscertales A, Pulvermüller F, Meseguer V, Sanjuan A, Belloch V, Avila C. 2006. Reading cinnamon activates olfactory brain regions. Neuroimage. 32:906–912.

- Grahn JA, Henry MJ, McAuley JD. 2011. FMRI investigation of cross-modal interactions in beat perception: audition primes vision, but not vice versa. Neuroimage. 54:1231–1243.

- Gramfort A, Luessi M, Larson E, Engemann DA, Strohmeier D, Brodbeck C, Parkkonen L, Hamalainen MS. 2014. MNE software for processing MEG and EEG data. Neuroimage. 86:446–460.