Abstract

Ensemble Kalman methods constitute an increasingly important tool in both state and parameter estimation problems. Their popularity stems from the derivative-free nature of the methodology which may be readily applied when computer code is available for the underlying state-space dynamics (for state estimation) or for the parameter-to-observable map (for parameter estimation). There are many applications in which it is desirable to enforce prior information in the form of equality or inequality constraints on the state or parameter. This paper establishes a general framework for doing so, describing a widely applicable methodology, a theory which justifies the methodology, and a set of numerical experiments exemplifying it.

Keywords: ensemble Kalman methods, equality and inequality constraints, derivative-free optimization, convex optimization

1. Introduction

1.1. Overview

Kalman filter based methods have been enormously successful in both state and parameter estimation problems. However, a major disadvantage of such methods is that they do not naturally take constraints into account. The ability to constrain a system often has a number of advantages that can play an important role in state and parameter estimation: they can be used to enforce physicality of modeled systems (non-negativity of physical quantities, for example); relatedly they can be used to ensure that computational models are employed only within state and parameter regimes where the model is well-posed; and finally the application of constraints may provide robustness to outlier data. Resulting improvements in algorithmic efficiency and performance, by means of enforcing constraints, has been demonstrated in the recent literature in a diverse set of fields, including process control [1], biomechanics [2], cell energy metabolism [3], medical imaging [4], engine health estimation [5], weather forecasting [6], chemical engineering [7], and hydrology [8]. Within the Kalman filtering literature the need to incorporate constraints is widely recognized and has been addressed in a systematic fashion by viewing Kalman filtering from the perspective of optimization. Indeed this optimization perspective leads naturally to many extensions, and to the incorporation of constraints in particular. Including constraints in Kalman filtering, via optimization, lends itself to an elegant mathematical framework, to a practical computational framework, and has potential in numerous applications. Surveys of the work may be found in the papers of Aravkin, Burke and co-workers [9, 10] and our work in this paper may be viewed as generalizing their perspective to the ensemble setting.

In the probabilistic view of filtering methods, constraints may be introduced by moving beyond the Gaussian assumptions that underpin Kalman methods and imposing constraints through the prior distributions on states and/or parameters. This, however, can create significant computational burden as the resulting distributions cannot be represented in closed form, through a finite number of parameters, in the way that Gaussian distributions can be. Here we circumvent this issue by taking the viewpoint that ensemble Kalman methods constitute a form of derivative-free optimization methodology, eschewing the probabilistic interpretation. The ensemble is used to calculate surrogates for derivatives. With this optimization perspective, constraints may be included in a natural way. Standard ensemble Kalman methods employ a quadratic optimization problem encapsulating relative strengths of belief in the predictions of the model and the data; these optimization problems have explicit analytic solutions. To impose constraints the optimization problem is solved only within the constraint set; when the constraints form a non-empty closed convex set, this constrained optimization problem has a unique solution.

In this introductory section, we give a literature review of existing work in this setting, we describe the contributions in this paper, and we outline notation used throughout.

1.2. Literature Review

Overviews of state estimation using Kalman based methods may be found in [11, 12, 13, 14]. The focus of this article is on ensemble based Kalman methods, introduced by Evensen in [15] and further developed in [16, 11]. The extension of the ensemble Kalman methodology to parameter estimation and inverse problems is overviewed in [17], especially for oil reservoir applications, and in an application-neutral formulation in [18]. Equipping Kalman-based methods with constraints can be desirable for a variety of inter-linked reasons described in the previous subsection: to enforce known physical boundaries in order to improve estimation accuracy; to operationalize filtering of a model which is ill-posed in subsets of its state or parameter space; and to provide robustness to noisy data and outlier events.

In extending the Kalman filter to non-Gaussian settings, a number of methods may be considered. Particle filters provide the natural methodology if propagation of probability distributions is required for state [19] or parameter [20] estimation. In the optimization setting, there are three primary methodologies: the extended Kalman filter, the unscented Kalman filter and the ensemble Kalman filter. The extended Kalman filter is based on linearization of the nonlinear system and therefore needs the computation of derivatives for propagation of the state covariance; this makes them unattractive in high dimensional problems. Unscented and ensemble Kalman filters, on the other hand, can be considered as particle-based methods which are derivative-free. In the unscented Kalman filter, the particles (sigma points) are chosen deterministically and are propagated through the nonlinear system to approximate the covariance, which is then corrected using the Kalman gain to compute the new sigma points. In the ensemble Kalman filter, the particles (ensemble members) are chosen randomly from the initial ensemble and are propagated through the dynamical system and corrected using the Kalman gain without needing to maintain the covariance.

In [21], and more recently in [22], overviews of different ways to impose constraints in linear and nonlinear state estimation are presented. To ensure that the estimates satisfy the constraints, moving horizon based estimators that solve a constrained optimization problem have been proposed [23, 24]. The paper [25] proposed a recursive nonlinear dynamic data reconciliation (RNDDR) approach based on extended Kalman filtering to ensure that state and parameter estimates satisfy the imposed bounds and constraints. The updated state estimates in this method are obtained by solving an optimization problem instead of using the Kalman gain. The resulting covariance calculations are, however, still similar to the Kalman filter: that is, unconstrained propagation and correction involving the Kalman gain, which can affect the accuracy of the estimates. To eliminate this deficiency, [26] proposed a Kullback-Leibler based method to update states and error covariances by solving a convex optimization problem involving conic constraints.

On the other hand, the paper [27] combined the concept of the unscented transformation [28] with the RNDDR formulation. In the prediction step, they propose step sizes to scale sigma points asymmetrically to better approximate the covariance information in the presence of lower and upper bounds. Then, for the update of each sigma point, they solve a constrained optimization problem. One disadvantage of this procedure is that the chosen step sizes for scaling the sigma points can only ensure the bound constraints. The paper [1] also tested various algorithms based on constrained optimization, projection [29] and truncation [5] to enforce bound constraints on unscented Kalman filtering. The paper [30] developed a class of estimators named constrained unscented recursive estimators to address the limitations of the unscented RNDDR method using optimization-based projection algorithms for obtaining sigma points in the presence of convex, non-convex and bound constraints.

As mentioned earlier, since the corrected covariance is used to compute the sigma points, unscented formulations always require enforcing constraints in both propagation and correction/update steps. In contrast, ensemble-based methods only require constraints to be enforced in the update step. In this context, the paper [8] tested projection and accept/reject methods to constrain ensemble members in a post-processing step, after application of the unconstrained ensemble Kalman filter. In the former, they project the updated ensemble members to the feasible space if they violate the constraints and in the latter they enforce the updated ensemble members to obey the constraints by resampling the dynamic and/or data model errors. On the other hand, [31, 32] proposed updating the state estimates in ensemble Kalman filtering by solving a constrained optimization problem while truncating the Gaussian distribution of the initial ensemble. The paper [6] demonstrated how to enforce a physics-based conservation law on an ensemble Kalman filtering based state estimation problem by formulating the filter update as a set of quadratic programming problems arising from a linear data acquisition model subject to linear constraints. Here we develop this body of work on constraining ensemble Kalman techniques, providing a unifying framework with an underpinning theoretical basis.

1.3. Our Contribution

The preceding literature review demonstrates that the imposition of constraints on state and parameter estimation procedures is highly desirable. It also indicates that ensemble Kalman methods offer the most natural context in which to attempt to do this, as extended Kalman methods do not scale well to high dimensional state or parameter space, whilst the unscented filter does not lend itself as naturally to the incorporation of constraints.

In this paper we build on the application-specific papers [8, 6] which demonstrate how to impose a number of particular constraints on ensemble based parameter and state estimation problems respectively. We formulate a very general methodology which is application-neutral and widely applicable, thereby making the ideas in [8, 6] accessible to a wide community of researchers working in inverse problems and state estimation. We also describe a straightforward mathematical analysis which demonstrates that the resulting algorithms are well-defined since they involve the solution of quadratic minimization problems subject to convex constraints at each step of the algorithm; these optimization problems have a unique solution. And finally we showcase the methodology on two applications, one from biomedicine and one from seismology. All of the algorithms discussed are clearly stated in pseudo-code.

Section 2 outlines the ensemble Kalman (EnKF) methodology for state estimation, with and without constraints. In section 3 the same program is carried out for ensemble Kalman inversion (EKI). Section 4 describes the numerical experiments which illustrate the foregoing ideas.

1.4. Notation

Throughout the paper we use to denote the positive integers {1, 2, 3,⋯} and to denote the non-negative integers . The matrix IM denotes the identity on . We use |⋅| to denote the Euclidean norm, and the corresponding inner-product is denoted ⟨⋅,⋅⟩. A symmetric, square matrix A is positive definite (resp. positive semi-definite) if the quadratic form ⟨u, Au⟩ is positive (resp. non-negative) for all u ≠ 0. By |⋅|B we denote the weighted norm defined by for any positive-definite B. The corresponding weighted Euclidean inner-product is given by ⟨⋅,⋅⟩B:= ⟨⋅, B−1⟩. We use ⊗ to denote the outer product between two vectors: (a ⊗ b) = ⟨b, c⟩a.

2. Ensemble Kalman State Estimation

2.1. Filtering Problem

Consider the discrete-time dynamical system with noisy state transitions and noisy observations in the form:

We assume that , are finite dimensional Hilbert spaces. Then ,and is the state-transition operator. The operator is the linear observation operator and . The covariance operators C0, Σ are assumed to be invertible. The objective of filtering is to estimate the state vj of the dynamical systems at time J, given the data Remark 2.1. • We may extend the methodology in this paper to the setting where , are separable infinite dimensional Hilbert spaces. The covariance operators C0, Σ are assumed trace-class on , and Γ on to ensure that the initial condition v0 and the noises ξJ and ηJ live in , and (respectively) with probability one. The update formulae we derive require operator composition and inversion, together with minimization of quadratic functionals on subject to convex constraints. Provided all of these operations can be carried out, then the methods derived here are well-defined in the general Hilbert space setting. This fact is important because it means that the methods derived have a robustness to mesh refinement and similar procedures arising when the problem of interest is specified via a partial differential equation, or other infinite dimensional problem.

We restrict attention to linear observation operators H because this leads to solvable quadratic optimization problems within the context of Kalman-based methods. In principle, a non-linear observation operator could be used, but the optimization problems defining the algorithms arising in this paper might not have a unique solution in this setting.

2.2. Ensemble Kalman Filter

The ensemble Kalman filter is a particle-based sequential optimization approach to the state estimation problem. The particles are denoted by and represent a collection of N candidate state estimates at time j. The method proceeds as follows. The state of all the particles at time j + 1 are predicted using the dynamics model to give . The resulting empirical covariance of the particles is then used to define the objective function Ifilter,,(v), which encapsulates the model-data compromise. This is minimized in order to obtain the updates To understand the origin of this optimization perspective on ensemble Kalman methods we argue as follows. In equation (4.10) of [33], it is shown that the data incorporation step of the Kalman filter may be written as a quadratic optimization problem for the state. In equation (4.15) of [33], the ensemble Kalman filter is written by using this quadratic minimization principle with an empirically (from the ensemble) computed covariance.

The prediction step is

| (1a) |

| (1b) |

| (1c) |

Here we have i.i.d.. Because the empirical covariance contains only N − 1 independent pieces of information, (1c) is sometimes scaled by N − 1 and not N; making this change would lead to no changes in the statements and proofs of all the theorems, and would only affect the definition of covariance within the algorithms.

Let denote the range of The update step is then

| (2) |

where

| (3) |

It can be useful to rewrite the objective function for the optimization problem in an equivalent and more standard form for input to software:

The are either identical to the data YJ+1, or found by perturbing it randomly.

Note that is an operator of rank at most N − 1, and thus can only be invertible when N − 1 is larger than the dimension of . For moderate- and high-dimensional systems, it is often impractical to satisfy this condition. However, the minimizing solution can be found by regularizing by addition of ∈I for ∈ > 0, deriving the update equations and then letting ∈ → 0. We give the resulting formulae, and then justify them immediately afterwards, in the following subsubsection. Alternatively it is possible to directly seek a solution in , which is a subspace of dimension N − 1; this is done in the subsequent subsubsection.

2.2.1. Formulation In The Original Variables

The well-known Kalman update formulae arising from solution of the minimization problem (2), (3) are as follows:

| (4a) |

| (4b) |

| (4c) |

| (4d) |

Here i.i.d. and the constant s takes value 0 or 1. When s = 1 the are referred to as perturbed observations. The choice s = 1 is made to ensure the correct statistics of the updates in the linear Gaussian setting when a probabilistic viewpoint is taken, and more generally to introduce diversity into the ensemble procedure when an optimization viewpoint is taken.

Derivation of the formulae may be found in [33]. In brief the formulae arise from completing the square in the objective function I filter,j,n(·) and then applying the Sherman–Morrison formula to rewrite the updates in the data space rather than state space; the latter is advantageous in many applications where has dimension much smaller than .

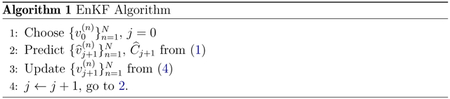

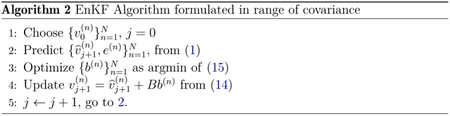

We summarize with the following pseudo-code:

An equivalent formulation of the minimization problem is now given by means of a penalized Lagrangian approach to incorporate the property that the solution of the optimization problem lies in the range of the empirical covariance. The perspective is particularly useful when further constraints are imposed on the solution of the optimization problem.

Theorem 2.2. Suppose that the dimensions of and are finite. Let j be in and 1 ≤ n ≤ N. Define Then the update formulae (4), which follow from the minimization problem (2), (3), may be given alternatively as

| (5) |

where and the argmin is projected from the pair (a,v’) onto the v′ coordinate only. Moreover with

| (6) |

and .

proof. For notational convenience denote . The objective function Ifilter,,(v) appearing in (3) is infinite if and only if is in the range of . Thus, since the range of is non-empty, we may confine minimization to the set of . Note that is in general not invertible, as it has rank N − 1,which may be less than the dimension of . The set thus comprises all v′ in the range of (a convex set) and, for each such v′ the set of a solving ; such an a is unique up to translations in the null-space of . Thus is a convex set. Notice that, for such pairs , with v′ lying in the range of the operator . Although the element a is uniquely defined only up to translations in the nullspace of , such translations do not change the value of the inner product ⟨a, v′⟩. The restriction of over the constraint set is positive definite which means that the quadratic objective function, now depending only on v′, is strongly convex. Therefore the problem has a unique solution and its Lagrangian is written as:

To express optimality conditions compute the derivatives and set them to zero:

The last two equations imply that . Thus we set and drop the second equation, replacing the first by

Solving the resulting equation for a gives

From this formula it follows that

If we define

| (8) |

then we see that

| (9) |

This is precisely the form of the ensemble Kalman update, and to complete the proof of the first part of the theorem it remains to show that this defintion of K agrees with the formulae given in (4); this amounts to verifying the identity

| (10) |

To verify this we start from the matrix identity

noting that it may be factored to write

Inverting on the right gives the desired identity (10).

We now study the alternative representation of the minimization problem (2), (3), by (6). We first note that is strictly positive definite and hence the related quadratic function is strongly convex. As a consequence we have existence and uniqueness of the solution, and the optimality condition becomes,

Then if we apply Woodbury matrix identity we obtain

Note that the matrix multiplying is

and that the matrix multiplying is

so that

Finally, as A↦A−1 is continuous over the set of invertible matrices, letting ϵ → 0 gives:

which concludes the proof.

2.2.2. Formulation In Range Of The Covariance

The minimization problem for each individual particle has a solution which, when suitably shifted, lies in the range of the empirical covariance. This allows us to seek the solution of the minimization problem as a linear combination of a given set of vectors, and to minimize over the scalars which define this linear combination. This reformulation of the optimization problem is widely employed in a variety of applications, such as weather forecasting, where the number of ensemble members N is much smaller than the dimension of the data space; this is because the inversion of S to form the Kalman gain K takes place in the data space.

In order to implement the minimization in the N dimensional subspace we note that I filter,j,n(v) is infinite unless

for some .From the structure of given in (1c) it follows that

| (11) |

Here each unknown parameter and , is the unknown vector to be determined. This form for v follows from the fact that which in turn implies that

| (12) |

which in turn implies that

| (13) |

Note that the unknown vector b depends on n as we need to solve the constrained minimization problem for each of the particles, indexed by n = 1,…, N; we have suppressed the dependence of b on n for notational simplicity.

The expression (11) for v in terms of the e(m) can be substituted into (3) to obtain a functional Jfilter,j,n(b) to be minimized over , because v is an affine function of b. Equation (11) may be written in compact form as

| (14) |

where B is the linear mapping from into defined by

We now identify Jfilter,j,n(b). We note that (13) is solved by taking

Although a is not unique, the non-uniqueness stems only from translations in the nullspace of . Translations do affect the values taken by the bm, but do not affect the vector v given by (14) because they result in changes to b which are in the null-space of B. Furthermore, for any such solution, independently of which a is chosen,

Using this and (14) in the definition of Ifilterj,n(v) we obtain

and hence, from (3),

| (15a) |

| (15b) |

Once b is determined it may be substituted back into (14) to obtain the solution to the minimization problem.

The preceding considerations also yield the following result, concerning the unconstrained Kalman minimization problem; its proof is a corollary of the more general Theorem 2.4 from the next subsection, which includes constraints in the minimization problem.

Corollary 2.3. Suppose that the dimensions of and are finite. Given the prediction (1a), the unconstrained Kalman update formulae may be found by minimizing Jfilter,j,n (b) from (15) with respect to b and substituting into (14).

We summarize the ensemble Kalman state estimation algorithm, using minimization over the vector b, in the following pseudo-code:

2.3. Constrained Ensemble Kalman Filter

In this subsection we introduce linear equality and inequality constraints on the state variable into the ensemble Kalman filter. We make prediction according to (1), and then incorporate data by solving the minimization problem (3) subject to the additional constraints

| (16a) |

| (16b) |

Here F and G are linear mappings which, respectively, take the state v into the number of equality and inequality constraints; the notation ≼ denotes inequality componentwise.

2.3.1. Formulation In The Original Variables

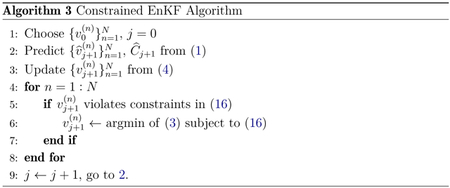

The preceding considerations lead to the following algorithm for ensemble Kalman filtering subject to constraints. The existence of a solution to the constrained minimization follows from Theorem 2.4 below.

2.3.2. Formulation In Range Of The Covariance

The linear constraints (16) can be rewritten in terms of the vector b, by means of (14), as follows:

| (17a) |

| (17b) |

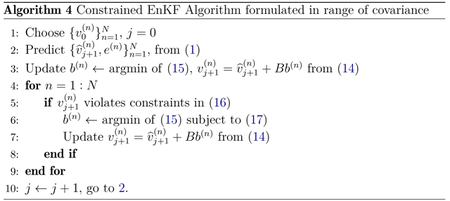

We may thus predict and then optimize the objective function Jfilter,j,n (b),given by (15), subject to the constraints (17). Implementation of this leads to following algorithm for ensemble Kalman filtering subject to constraints:

Justification for the use of this algorithm, working in the constrained space parameterized by b, is a consequence of the following:

Theorem 2.4. Suppose that the dimensions of and are finite. The problem of finding as the minimizer of Ifilterj,n(v) subject to the constraints (16) is equivalent to finding b to minimize Jfilter, J,n (b) subject to the constraints (17) and then using (14) to find from b. Furthermore, both of these constrained minimization problems have a unique solution provided that the constraint sets are non-empty.

Proof. For notational convenience set , , , and .

Denote

| (18) |

| (19) |

and

The part of the statement of Theorem 2.4 concerning existence of a minimizer is a consequence of the Lemma 2.5 stated and proved below. The second part, concerning the equivalence of minimization over b and over v (or v′) was shown in equations (11)–(15). This concludes the proof.

Notice that in the following lemma, the variables vϵ, , and v* are analogous to , , and the minimizer over v′ from (5), respectively.

Lemma 2.5. Suppose that the constraint sets of (18) and (19) are non empty, then v* exists and is unique and for all ϵ> 0,vϵ exists and is unique. Furthermore

Proof. The proof is broken into two parts. In the first we prove existence and uniqueness of a solution by using the idea that the constraint relating a and v′ renders the problem convex. In the second part of the proof we study the ∈ → 0 limit of the regularized solution, extracting convergent subsequences through compactness, and demonstrating that they converge to the desired limit. To prove existence and uniqueness of the solution of (18), notice that it can be reformulated as

and that the restriction of over its range is strictly positive definite. Hence J is a strongly convex function being minimized over a nonempty closed convex set. From standard theory v* exists and is unique. Then as is strictly positive definite, the same type of arguments provide existence and uniqueness v∈.

Now we prove the second part of the lemma. We note that matches the constraints of (19). It follows that for all ϵ > 0, . Then let us prove that . First denote by λ1 ≤⋯≤λN−1 the strictly positive eigenvalues of (recall that is symmetric positive semidefinite and that almost surely). Hence where the ak’s are the eigenvectors of (the first and second sums respectively gather the vectors of the range and of the nullspace of ). As v* lies in the range of , it holds that Now as the ak’s do not depend on ∈, by letting ∈ tending to zero, this quantity will tend to

Therefore it holds that . From this we deduce that there exists δ > 0 such that for all 0 < ∈ < δ, Then set where lies in the nullspace of and in its range (recall that for a symmetric matrix nullspace and range are orthogonal) and see that . It holds that for ∈ sufficiently small. Furthermore , and since this quantity is bounded from above we deduce that and that is bounded. Let be a sequence of positive real numbers such that , and from the preceding extract a converging subsequence (denoted for simplicity) such that converges to a limit denoted w*. As lies in , we can use the eigenvalue decomposition of to show that . This limiting identity, and the fact that has limit 0, may be used to establish the first equality within the following chain of equalities and inequalities:

Now note that w* matches all the constraints of (18). Indeed lies in the range of which is a closed space, also .It is a clear that matches the equality and inequality constraints of (18) for all m and hence passing to the limit we have that w* satisfies the equalities and inequalities.

From the uniqueness of the minimizer of (18) we have that w* is equal to v*. In particular this means that v* is the unique cluster point of the original sequence . Since the original sequence was arbitrarily chosen, we conclude that Remark 2.6. Notice that the proof remains true if we take general convex inequalities. We simply need the constrained sets to be closed and convex; however we have restricted to linear equality and inequality constraints for simplicity and because these arise most often in practice.

3. Ensemble Kalman Inversion

3.1. Inverse Problem

In this section we show how a generic inverse problem may be formulated as a partially observed dynamical system. This enables the machinery from the preceding section 2 to be used to solve inverse problems.

We are interested in the inverse problem of finding from where

Time does not appear (explicitly) in this equation (although G may involve solution of a time-dependent differential equation, for example). In order to use the ideas from the previous section, we introduce a new variable w = G(u) and rewrite the equation as

The key point about writing the equation this way is that the data y is now linearly related to the variable v = (u, w)T and now we may apply the ideas of the previous section to the model by introducing the following dynamical system, taking yj+1 = y as the given data:

If we introduce the new variables

| (20a) |

| (20b) |

and write vj = (uj, wj)T, we may write the dynamical system in the form

| (21a) |

| (21b) |

which is exactly in the same form as in the previous section. We note that

3.2. Ensemble Kalman Inversion

The prediction step and the Kalman gain are defined as in (3), and the solution of the optimization problem is given by (4). We now simplify these formulae using the specific structure on Ψ, v, H arising in the inverse problem and given in (20); this results in block form vectors and matrices. First we note that

Here

and

The covariance denotes the empirical covariance of the ensemble in data space, denotes the empirical covariance of the ensemble in space of the unknown u, and denotes the empirical cross-covariance from data space to the space of the unknown.

Noting that we obtain

| (22) |

Combining equation (22) with the update equation within (4) it follows that

and

and hence that

Thus we have derived the EKI update formula:

| (23) |

We note also that

| (24) |

However is not needed to update the state and so plays no role in this unconstrained EKI algorithm. (It may be used, however, to impose constraints on observation space, as discussed in the next subsection.)

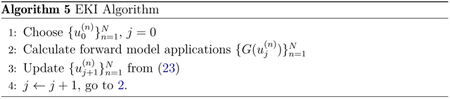

In summary we have derived the following algorithm for solution of the unconstrained inverse problem:

3.3. Ensemble Kalman Inversion With Constraints

3.3.1. Formulation In The Original Variables

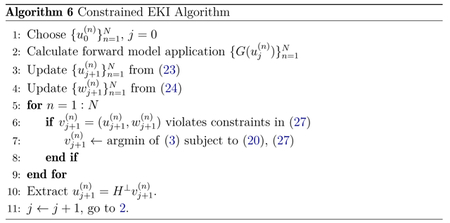

We now consider imposing constraints on the optimization step arising in ensemble Kalman inversion. As in the unconstrained case we do this by formulating the problem as a special case of the partially observed dynamical system, subject to constraints, from the previous section.

To this end we formulate the constraints in the space of the unknown and the data as follows:

| (25a) |

| (25b) |

| (25c) |

| (25d) |

The algorithm proceeds by predicting according to equation (1), and then optimizing (3), all using the specific structure (20), and with the optimization subject to the constraints (25), written in the notation of the general Kalman updating formulae in (27), detailed below; in particular the rewrite (27) of the constraints expresses everything in terms of the variable v. We may summarize the constraints as follows, to allow direct application of the ideas of the previous section. To this end define

| (26a) |

| (26b) |

| (26c) |

Then the constraints (25) may be written as

| (27a) |

| (27b) |

See Algorithm 6 for the resulting pseudo-code.

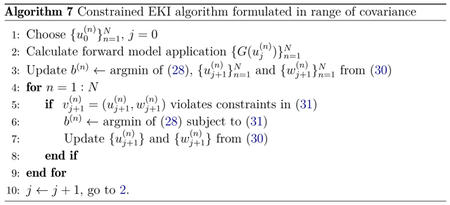

3.3.2. Formulation In Range Of The Covariance

We describe an alternative way to approach the derivation of the EKI update formulae. We apply Theorem 2.4 with the specific structure (20), (27) arising from the dynamical system used in EKI. To this end we define

| (28a) |

| (28b) |

where b is the vector of N scalar weights bm and

| (29a) |

| (29b) |

| (29c) |

Once this quadratic form has been minimized with respect to b then the update formula (11) gives

| (30a) |

| (30b) |

Note that the vector {bm} depends on the particle label n; as in the previous section, we have suppressed this dependence for notational convenience. We may now impose linear equality and inequality constraints on both u and w = G(u) (i.e. in parameter and data spaces) and minimize (28) subject to these constraints. To be more specific if we impose the constraints (27) expressed in the variable b:

| (31a) |

| (31b) |

Here F, G, f and g are given by (26), B is defined by (29) and

See Algorithm 7 for the resulting pseudo-code.

Remark 3.1. As in the previous section, the result holds true for general convex inequality constraints; the linear case is considered for simplicity of exposition, and because it is most frequently arising in practice.

Remark 3.2. The EKI algorithm, with or without constraints, has the following invariant subspace property: define , then for all J in {0, …, J} and for all n in {1,…, N}, then the defined by the three algorithms in this section all lie in . This is a direct consequence of writing the update formulae in terms of b and noting (30).

We can now state a result analogous to Theorem 2.4, and with proof that is a straightforward corollary of that result, using the specific structure (20):

Theorem 3.3. Suppose that the dimensions of and are finite. Suppose also that the specific structure (20) is applied. The problem of finding from the minimizer of Ifilter, J,n(v), defined in (3) and subject to the constraint (25), is equivalent to finding b that minimizes (28), subject to (31), and then using (30) to find from b. Furthermore, both of these constrained minimization problems have a unique solution provided that the constraint sets are non-empty.

4. Numerical Results

This section contains numerical results which demonstrate the benefits of imposing constraints on ensemble Kalman methods. Subsection 4.1 concerns an application of state estimation (using EnKF) in biomedicine, using real patient data, whilst subsection 4.2 concerns an application of inversion (using EKI) in seismology and employs simulated data. When comparing results from the two experiments, recall that iterations of EKI correspond to an algorithmic dynamics intended to converge to a single distribution (over ensemble members) on the parameters for which we invert, whereas iterations of EnKF correspond to the incorporation of new data at every physical measurement time, and thus the distribution (over ensemble members) is not necessarily expected to converge as the iteration progresses. In both applications, minimizations were performed in MATLAB using the default interior-point method (fmincon) through the general quadratic programming function, quadprog.

4.1. State Estimation

Here we present an application of the constrained EnKF to the tracking and forecasting of human blood glucose levels. We use self-monitoring data collected by an individual with Type 2 Diabetes. We use the “P1” data set described by Albers et al. in [34]; this dataset includes measurements of blood glucose and consumed nutrition, and is publicly available on physionet.org. For more information on the data, and on an unconstrained data assimilation approach using the unscented Kalman filter, see [34]. We model the glucose-insulin system with the ultradian model proposed by [35]. The primary state variables are the glucose concentration, G, the plasma insulin concentration, Ip, and the interstitial insulin concentration, Ii; these three state variables are augmented with a three stage delay (h1, h2, h3) which encodes a non-linear delayed hepatic glucose response to plasma insulin levels. The resulting ordinary differential equations have the form:

| (32a) |

| (32B) |

| (32c) |

| (32d) |

| (32e) |

| (32f) |

Here (t) represents a known rate of ingested carbohydrates, f1(G) represents the rate of glucose-dependent insulin production, f2(G) represents insulin-independent glucose utilization, f3(Ii) G represents insulin-dependent glucose utilization and f4(h3) represents delayed insulin-dependent hepatic glucose production; the functional forms of these parameterized processes can be found in the appendix, along with a description of model parameters.

In the EnKF setting, we write u = [Ip, Ii, G, h1, h2, h3], and use (32) to define F such that

Where θ contains model parameters. We then extend the state vector in order to perform joint parameter estimation: v = [u, Rg]T.

For the purposes of this paper, the function (t) may be viewed as known; it is determined from data describing meals consumed by the patient. Since insulin (Ip and Ii) and delay variables (h1, h2, and h3) are not measured, whilst glucose is measured, we define the measurement operator to be H = [0, 0, 1, 0, 0, 0, 0]. The discrete time forward model is obtained by integrating the deterministic model in (32) between consecutive measurement time-points and applying an identity map to Rg. Because these time-points may not be equally spaced, and because the time-dependent forcings (meals) will differ in different time-intervals, this leads to a map of the form

This is a slight departure from the methodology outlined in section 2, where Ψ does not depend on J (autonomous dynamics) but is a straightforward extension which the reader can easily provide.

We present EnKF results from a single patient’s data when run with and without constraints (Algorithms 1 and 3 respectively). We performed joint state-parameter estimation, augmenting the state with parameter Rg (see Appendix for details of where this parameter appears) and adding identity-map dynamics for parameter Rg. The following constraints were imposed:

| (33) |

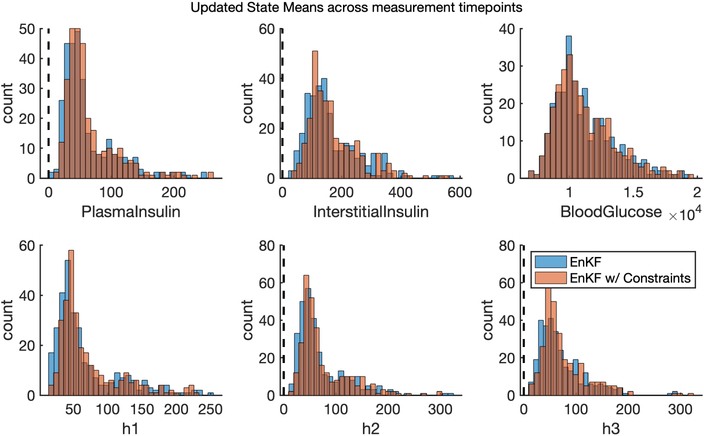

Figure 1 compares the overall distribution of updated state means over time when running EnKF with and without these state constraints. While individual particles in this experiment often violated the constraints, the overall updated means did not. Nevertheless, enforcement of lower-bound constraints shifts up the state distribution slightly. Note that upper bound constraints were never violated in this experiment.

Figure 1.

The distribution of mean state updates when running EnKF with and without inequality constraints. Black vertical lines denote lower bound state constraints.

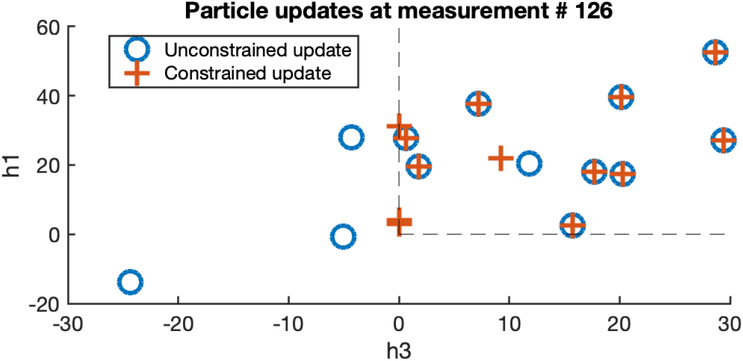

Figure 2 shows a two-dimensional state projection of updated particles at a given time step before and after applying the constrained optimization. Note that particles may additionally violate constraints in unplotted dimensions—this explains why one particle whose unconstrained update appears to live within the constraints is in fact differently updated under the constrained optimization. Time step 126 was selected for illustrative purposes, and was the measurement event in which particles most often violated the constraints.

Figure 2.

Particle updates at a given time-step (here, measurement 126) are shown using a traditional Kalman gain versus using the constrained optimization. The black lines denote lower bound constraints on the states h1 and h3.

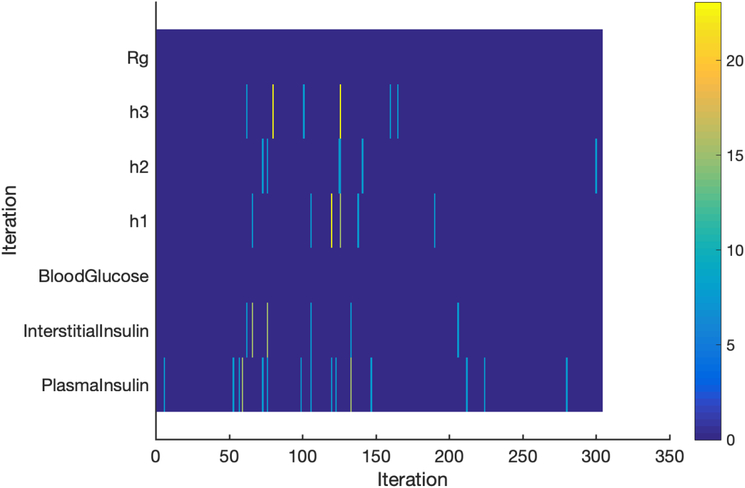

Figure 3 depicts the overall frequency of constraint violations. We observe that the the measured state (blood glucose) never violated a constraint, nor did the inferred parameter Rg. However, other model states did often violate constraints, and up to 30% (4/13) of particles simultaneously violated the constraints at a single time-step.

Figure 3.

Percentage map of the constraint violations, where each lower-bound constraint is represented by a row. At each iteration, the percentage of particles that violated a constraint is color-coded, with yellow representing the largest proportion of constraint violations.

By adding constraints, we ensure that all the simulations which constitute the ensemble method are biologically plausible.

4.2. Inverse Problem

Here we present application of the constrained EKI in seismology. We study near-surface site characterization in which we invert for the shear wave velocity profile of the geomaterials in the earth shallow crust, using downhole array data. For forward modeling, we consider a semi-discrete form of the following wave equation in a horizontally stratified heterogeneous soil layer:

Here (z, t) is the displacement field of the wave response as a function of spatial variable z ∈ (0, H) and time variable t ∈ (0, T]. The function (z) is the shear wave velocity function. We impose the following boundary and initial conditions:

Where d0(t) is the prescribed displacement at depth z = H. Generally, the shear wave velocity changes as a piecewise constant function with depth. If the layering information, i.e., the total number of layers and their thickness, is not available or is poorly characterized, it is desired to use a generic function for site characterization, such as this:

See, for example, [36]. In the constrained EKI setting, u = (cs0, k, z0, n, z1, α) and

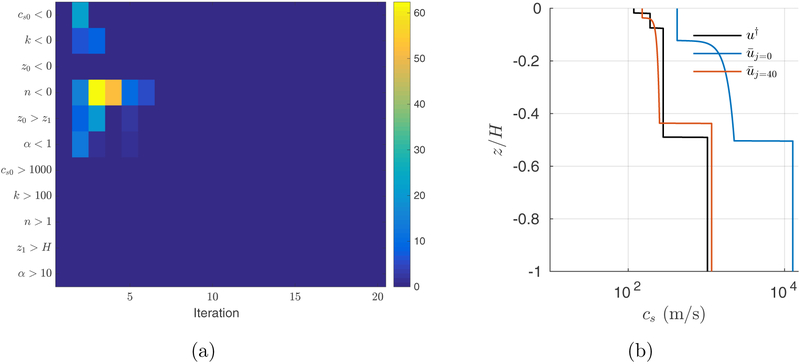

For the numerical example studied here, Gu and gu are determined by enforcing the constraints 0 ≤ cs0 1000, 0 ≤ k ≤ 100, 0 ≤ z0 ≤ z1, 0 ≤ n ≤ 1, z0 ≤ z1 ≤ H, and 1 ≤ α ≤ 10. We generate the initial ensemble by drawing samples from uniform distributions and discard members that violate the enforced constraints. In order to avoid very large velocities at z = z1, we also discard members with (z1) > 5000m/s. If we perform parameter learning using the unconstrained EKI, the experiment fails at J = 1 because of incapability of the dynamic model to propagate unphysical values of the shear wave velocity cs.

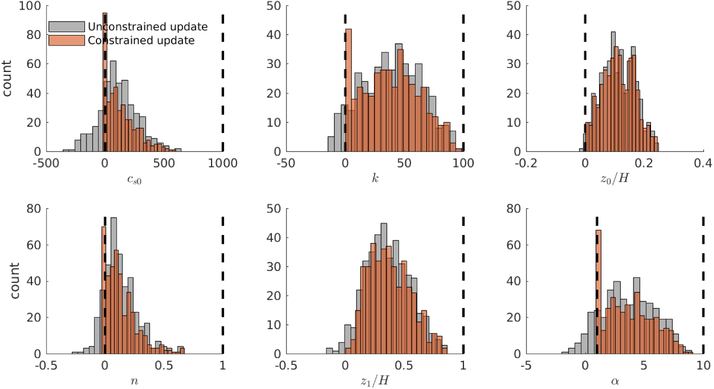

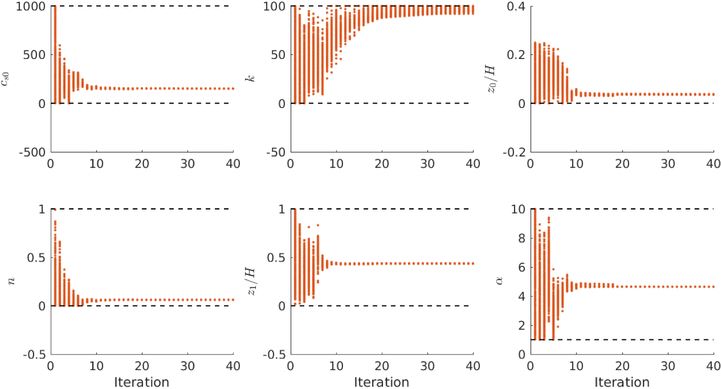

All results shown use Algorithm 7. Figure 4 shows the ensemble distribution of u at J = 2 before and after enforcing constraints whilst Figure 5 shows the evolution of the updated ensemble. Note that parameter k saturates with an ensemble close to the upper bound of 100 imposed through constraints on this parameter; however experiments in which we imposed different upper bounds on this parameter lead to different estimates for k, with little change to the estimated velocity profile and we conclude that this parameter suffers from identifiability issues. (Note that Figure 4 displays the updated ensemble distribution at a single step in the sequence of ensemble updates, comparing the effect of imposing constraints with neglecting them; in contrast Figure 1 shows the distribution over all measurement time-points of the ensemble means. The figures thus illustrate different phenomena).

Figure 4.

The distribution of parameters before and after enforcing constraints in Algorithm 7 at iteration J = 2. Black vertical lines denote the lower and upper bound constraints.

Figure 5.

Evolution of the updated ensemble with iteration. Black horizontal lines denote the lower and upper bound constraints.

Moreover, Figure 6a shows the map of violation for different constraints enforced on parameters whilst Figure 6b shows the estimated generic cs profile after 40 iterations compared to the true profile and the initial estimate. Figure 6a shows the key role employed by the enforcing of constraints. In this case the addition of constraints ensures that all the simulations which constitute the ensemble method are physically meaningful, and also that the forward model remains well-posed.

Figure 6.

(a) The percentage map of the constraint violations for the first 20 iterations; (b) the estimated velocity profile compared to the true profile (u †) and the initial estimate

5. Conclusions

Constraints arise naturally in many state and parameter estimation problems. We have shown how convex constraints may be incorporated into ensemble Kalman based state or parameter estimation algorithms with relatively few changes to existing code: the standard algorithm is applied and for any ensemble member which violates a constraint, a quadratic optimization problem subject to convex constraints is solved instead. We have written the resulting algorithms in easily digested pseudo-code, we have developed an underpinning theory and we have given illustrative numerical examples.

Two primary directions suggest themselves in this area. The first is the use of these methods in applications. As indicated in the introduction, our general formulation is inspired by the two papers [8, 6] from the geosciences and we have demonstrated applicability to problems from biomedicine and seismology; but many other potential application domains are ripe for application of ensemble Kalman methodology, because of its black-box and derivative-free formulation, and the ability to impose constraints in a straightforward fashion will help to extend this methodology. The second is the theoretical analysis of these methods: can the inclusion of constraints be used to deduce improved accuracy of state or parameter estimates; or can the inclusion of constraints be used to demonstrate improved performance as measured, for example, by proportion of model runs which are physically (or biologically etc.) plausible? Furthermore, although the imposition of constraints is reasonable, it is not clear that it may not lead to pathologies in algorithmic performance and ruling out, or understanding, the occurrence of such pathological behaviour may be important.

Acknowlegments

This work was funded by NIH-NLM grant RO1 LM012734. AMS was also funded by AFOSR Grant FA955017-10185 and by ONR grant N00014-17-1-2079.

Appendix

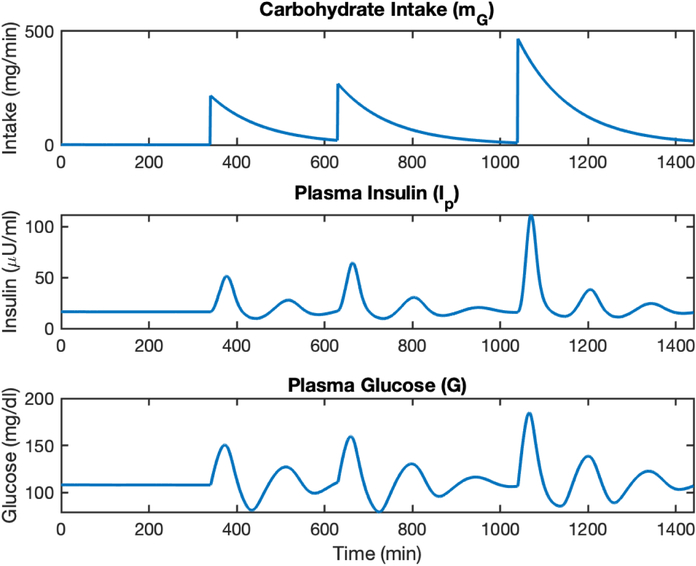

We give the details of the ultradian model of glucose-insulin dynamics used as the forward model in subsection 4.1. An example of the induced dynamics is given in Figure 7.

| (34) |

| (35) |

| (36) |

| (37) |

| (38) |

| (39) |

where, for N meals at times with carbohydrate composition

| (40) |

and

| (41) |

| (42) |

| (43) |

| (44) |

| (45) |

Figure 7.

Here we show the oscillating dynamics of the glucose-insulin response in the ultradian model, driven by an exponentially decaying nutritional driver mG

References

- [1].Teixeira BO, Tôrres LA, Aguirre LA and Bernstein DS 2010. Journal of Process Control 20 45–57 [Google Scholar]

- [2].Bonnet V, Dumas R, Cappozzo A, Joukov V, Daune G, Kulić D, Fraisse P, Andary S and Venture G 2017. Journal of biomechanics 62 140–147 [DOI] [PubMed] [Google Scholar]

- [3].Goffaux G, Perrier M and Cloutier M 2011. Cell energy metabolism: a constrained ensemble kalman filter Proceedings of the 18th IFAC world congress: Milano, Italy, International Federation of Automatic Control; pp 8391–8396 [Google Scholar]

- [4].Lei J, Liu S and Wang X 2012. IET Science, Measurement & Technology 6 63–77 [Google Scholar]

- [5].Simon D and Simon DL 2010. International Journal of Systems Science 41 159–171 [Google Scholar]

- [6].Janjić T, McLaughlin D, Cohn SE and Verlaan M 2014. Monthly Weather Review 142 755–773 [Google Scholar]

- [7].Yang X, Huang B and Prasad V 2014. Chemical Engineering Science 106 211–221 [Google Scholar]

- [8].Wang D, Chen Y and Cai X 2009. Water resources research 45 [Google Scholar]

- [9].Aravkin AY, Burke JV and Pillonetto G 2014. Optimization viewpoint on kalman smoothing with applications to robust and sparse estimation Compressed Sensing & Sparse Filtering (Springer; ) pp 237–280 [Google Scholar]

- [10].Aravkin A, Burke JV, Ljung L, Lozano A and Pillonetto G 2017. Automatica 86 63–86 [Google Scholar]

- [11].Evensen G 2009. Data assimilation: the ensemble Kalman filter (Springer Science & Business Media; ) [Google Scholar]

- [12].Reich S and Cotter C 2015. Probabilistic forecasting and Bayesian data assimilation (Cambridge University Press; ) [Google Scholar]

- [13].Law K, Stuart A and Zygalakis K 2015. Data Assimilation (Springer; ) [Google Scholar]

- [14].Carrassi A, Bocquet M, Bertino L and Evensen G 2018. Data assimilation in the geosciences: An overview of methods, issues, and perspectives vol 9 (Wiley Interdisciplinary Reviews: Climate Change, 5(2018)) [Google Scholar]

- [15].Evensen G 1994. Journal of Geophysical Research: Oceans 99 10143–10162 [Google Scholar]

- [16].Burgers G, Jan van Leeuwen P and Evensen G 1998. Monthly weather review 126 1719–1724 [Google Scholar]

- [17].Oliver DS, Reynolds AC and Liu N 2008. Inverse theory for petroleum reservoir characterization and history matching (Cambridge University Press; ) [Google Scholar]

- [18].Iglesias MA, Law KJ and Stuart AM 2013. Inverse Problems 29 045001 [Google Scholar]

- [19].Doucet A, De Freitas N and Gordon N 2001. An introduction to sequential monte carlo methods Sequential Monte Carlo methods in practice (Springer; ) pp 3–14 [Google Scholar]

- [20].Del Moral P, Doucet A and Jasra A 2006. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 68 411–436 [Google Scholar]

- [21].Simon D 2010. IET Control Theory & Applications 4 1303–1318 [Google Scholar]

- [22].Amor N, Rasool G and Bouaynaya NC 2018. Constrained state estimation — a review (Preprint arXiv:1807.03463)

- [23].Robertson DG, Lee JH and Rawlings JB 1996. AIChE Journal 42 2209–2224 [Google Scholar]

- [24].Rao CV, Rawlings JB and Mayne DQ 2003. IEEE transactions on automatic control 48 246–258 [Google Scholar]

- [25].Vachhani P, Rengaswamy R, Gangwal V and Narasimhan S 2005. AIChE Journal 51 946–959 [Google Scholar]

- [26].Li R, Jan NM, Prasad V and Huang B 2018. Constrained extended kalman filter based on kullback-leibler (kl) divergence 2018 European Control Conference (ECC) (IEEE; ) pp 831–836 [Google Scholar]

- [27].Vachhani P, Narasimhan S and Rengaswamy R 2006. Journal of process control 16 1075–1086 [Google Scholar]

- [28].Julier S, Uhlmann J and Durrant-Whyte HF 2000. IEEE Transactions on automatic control 45 477–482 [Google Scholar]

- [29].Simon D and Simon DL 2006. IEE Proceedings-Control Theory and Applications 153 371–378 [Google Scholar]

- [30].Mandela R, Kuppuraj V, Rengaswamy R and Narasimhan S 2012. Journal of Process Control 22 718–728 [Google Scholar]

- [31].Prakash J, Patwardhan SC and Shah SL 2008. 2008 American Control Conference 3542–3547 [Google Scholar]

- [32].Prakash J, Patwardhan SC and Shah SL 2010. Industrial & Engineering Chemistry Research 49 2242–2253 [Google Scholar]

- [33].Stuart A and Zygalakis K 2015. Data assimilation: A mathematical introduction Tech. rep Oak Ridge National Laboratory (ORNL), Oak Ridge, TN (United States) [Google Scholar]

- [34].Albers DJ, Levine M, Gluckman B, Ginsberg H, Hripcsak G and Mamykina L 2017. PLoS computational biology 13 e1005232. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [35].Sturis J, Polonsky KS, Mosekilde E and Van Cauter E 1991. American Journal of Physiology-Endocrinology And Metabolism 260 E801–E809 [DOI] [PubMed] [Google Scholar]

- [36].Shi J and Asimaki D 2018. Seismological Research Letters 89 1397–1409 [Google Scholar]