Abstract

Background:

Administrative health claims data have been used for research in neuro-ophthalmology, but the validity of International Classification of Diseases (ICD) codes for identifying neuro-ophthalmic conditions is unclear.

Evidence Acquisition:

We performed a systematic literature review to assess the validity of administrative claims data for identifying patients with neuro-ophthalmic disorders. Two reviewers independently reviewed all eligible full-length articles and used a standardized abstraction form to identify ICD code-based definitions for nine neuro-ophthalmic conditions and their sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV). A quality assessment of eligible studies was also performed.

Results:

Eleven articles met criteria for inclusion: 3 studies of idiopathic intracranial hypertension (PPV 54–91%, NPV 74–85%), 2 of giant cell arteritis (sensitivity 30–96%, PPV 94%), 3 of optic neuritis (sensitivity 76–99%, specificity 83–100%, PPV 25–100%, NPV 98–100%), 1 of neuromyelitis optica (sensitivity 60%, specificity 100%, PPV 43–100%, NPV 98–100%), 1 of ocular motor cranial neuropathies (PPV 98–99%), and 2 of myasthenia gravis (sensitivity 53–97%, specificity 99–100%, PPV 5–90%, NPV 100%). No studies met eligibility criteria for non-arteritic ischemic optic neuropathy, thyroid eye disease, or blepharospasm. Five studies (45.5%) provided only one measure of diagnostic accuracy. Complete information about the validation cohorts, inclusion/exclusion criteria, data collection methods, and expertise of those reviewing charts for diagnostic accuracy was missing in 90.9%, 72.7%, 81.8%, and 36.4% of studies, respectively.

Conclusions:

Few studies have reported the validity of ICD codes for neuro-ophthalmic conditions. The range of diagnostic accuracy for some disorders and study quality varied widely. This should be taken into consideration when interpreting studies of neuro-ophthalmic conditions using administrative claims data.

Introduction:

The epidemiology, healthcare utilization, and treatment outcomes of many neuro-ophthalmic disorders are incompletely understood and form the basis of ongoing active research efforts. Because these disorders are relatively rare, large administrative claims and other healthcare-related databases (“big data”) have become an increasingly popular clinical research tool. As Moss et al. discuss in a recent State-of-the-Art Review within this journal, large sample sizes (often in the tens or hundreds of millions) permit the study of rare diseases, and real-world data provide more accurate population-based estimates of disease incidence and prevalence and healthcare utilization and costs1. However, data that have been collected primarily for insurance billing rather than research purposes are prone to measurement error.

Administrative claims databases such as Medicare, commercial health insurance data (e.g. Optum Clinformatics Datamart), and the National Inpatient Sample have been used to study idiopathic intracranial hypertension (IIH)2, non-arteritic ischemic optic neuropathy (NAION)3–7, optic neuritis8, and thyroid eye disease (TED)9. In these studies, patients are identified using the International Classification of Diseases (ICD) coding system (ICD-9 or ICD-10), and accurate coding is critical for results to be externally valid. As neuro-ophthalmic disorders are especially prone to diagnostic error10,11, validation studies that confirm the accuracy of ICD-9 and ICD-10 codes are important for performing and interpreting the results of administrative claims studies in neuro-ophthalmology.

In this State-of-the-Art Review, we provide a systematic review of validation studies for ICD-9 and ICD-10 codes in neuro-ophthalmology. We believe that a better understanding of the validity of ICD codes for identifying neuro-ophthalmic disease in large datasets will help researchers and readers design and interpret administrative claims studies in neuro-ophthalmology.

Methods:

We performed a systematic review of validation studies for ICD-9 and ICD-10 codes used for neuro-ophthalmic disorders. The review protocol was developed in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement12, prospectively registered in the PROSPERO database (https://www.crd.york.ac.uk/PROSPERO/), and was exempt from institutional review board (IRB) review.

We based our search strategy on a 2012 systematic review of validation studies in neurology13, which has been applied to similar studies of diabetes14 and sepsis15. Briefly, this strategy involves performing separate searches for each of three concepts: 1) health services research, administrative claims, and ICD codes; 2) diagnostic validity including sensitivity, specificity, and predictive value; and 3) neuro-ophthalmic conditions of interest. For each concept, search terms (including both keywords and MeSH and EMTREE search terms) are separated by the delimiter ‘or’. When the results from all three concepts are combined, the delimiter ‘and’ is used such that results must appear in each of the three parent searches. We selected nine neuro-ophthalmic conditions of interest: IIH, NAION, giant cell arteritis (GCA), optic neuritis, neuromyelitis optica (NMO), ocular motor cranial neuropathies, myasthenia gravis (MG), thyroid eye disease (TED), and blepharospasm. We chose these conditions because they represent a group of clinically relevant disorders encompassing both afferent and efferent neuro-ophthalmic disease, many of which have been studied using administrative claims data. We did not include multiple sclerosis (MS) as its validation studies have previously been systematically reviewed13. A complete list of our search syntax can be found in the Supplemental Material.
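The three-concept structure described above can be sketched in a few lines of Python. This is only an illustration of the OR-within-concept, AND-across-concepts logic; the function name `build_query` and the example terms are assumptions made here, not the actual search syntax, which is given in the Supplemental Material.

```python
def build_query(concepts):
    """Join terms within each concept with OR, then join the concepts with AND."""
    blocks = ["(" + " OR ".join(terms) + ")" for terms in concepts]
    return " AND ".join(blocks)


# Illustrative terms only (hypothetical, not the published search strategy).
concepts = [
    ["administrative claims", "health services research", "ICD-9", "ICD-10"],
    ["sensitivity", "specificity", "predictive value"],
    ["optic neuritis", "giant cell arteritis", "myasthenia gravis"],
]

query = build_query(concepts)
print(query)
```

A record is retrieved only if it matches at least one term in every block, which mirrors the requirement that results appear in each of the three parent searches.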

We searched MEDLINE (Ovid platform, 1948 to August 8, 2019) and EMBASE (Elsevier platform, 1980 to August 8, 2019). Two reviewers (AGH and LBD) independently reviewed all MEDLINE abstracts, and a third reviewer (TD) reviewed all EMBASE abstracts for eligibility. Articles were eligible for review if they were original studies validating ICD-9 or ICD-10 codes against a reference standard; reported sensitivity, specificity, positive predictive value, or negative predictive value; and were published in English as full-length articles. We did not include studies with ICD-8 or earlier code definitions. Full-length articles that were identified as potentially eligible by at least one reviewer were then independently reviewed in full by two reviewers (AGH and LDB) to confirm eligibility. Disagreements were resolved by consensus. We identified additional articles for review by examining the reference lists of full-length articles that we identified and consulting the Journal of Neuro-Ophthalmology Editorial Board to ensure that no additional studies were missed.

We used a standardized form to record the specific ICD codes, case definitions, sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) from each study. We also gathered information on study location, data source, sample size, years of study, and reference standard. Study quality was evaluated using adapted reporting guidelines for validation studies of health administrative data16. Due to the limited number of studies for each condition, we summarized results in descriptive tables but did not perform a quantitative meta-analysis.

Results:

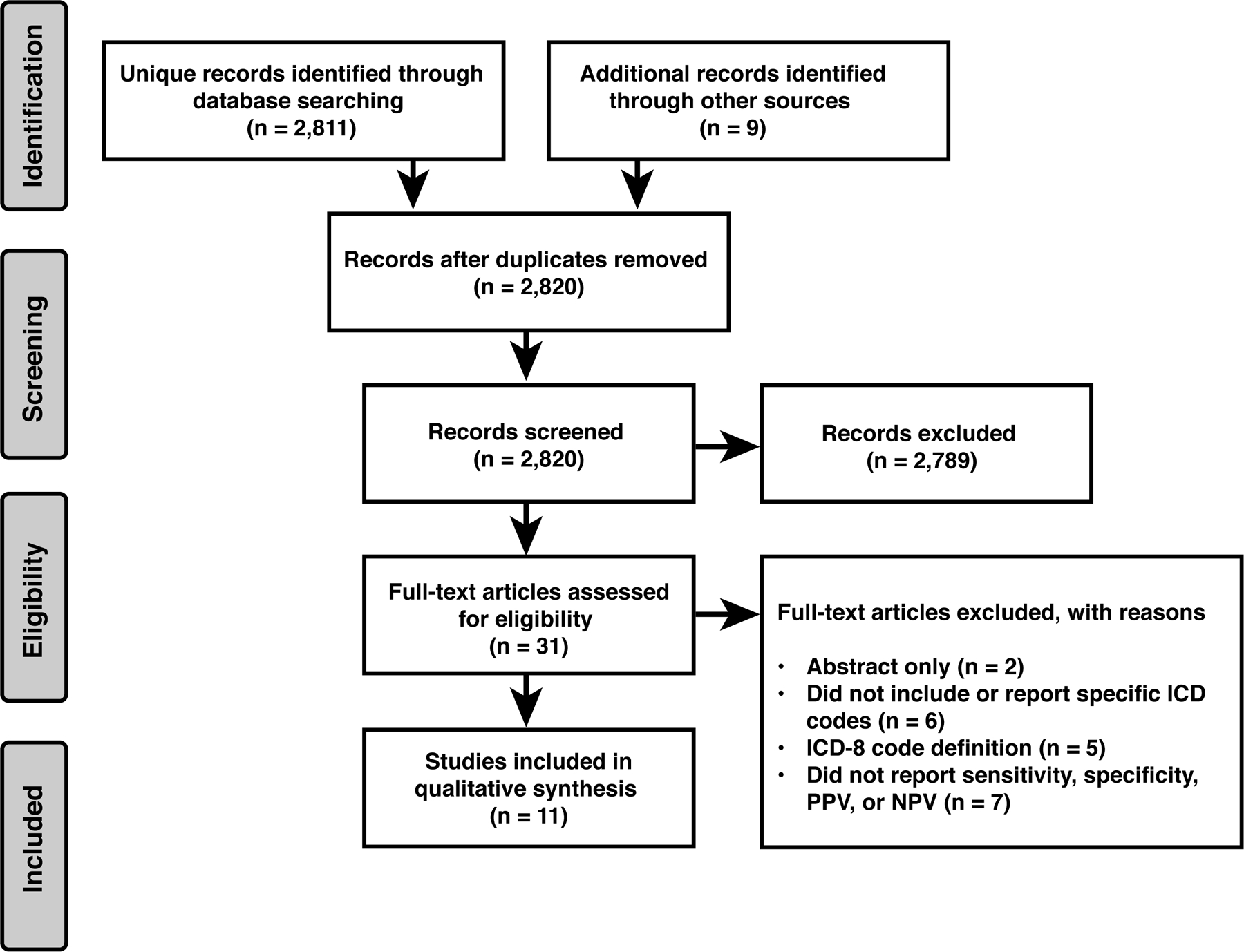

A total of 2,811 unique records were identified through database searching, and 9 additional studies were identified by reviewing reference lists and other external sources. Of these 2,820 studies, 31 abstracts met eligibility criteria for a full-length review. Twenty records were excluded during the review process (see Figure 1 for exclusion reasons), resulting in a final yield of 11 articles. The ranges of published sensitivity, specificity, PPV, and NPV for the diseases of interest included in these articles (IIH, GCA, optic neuritis, NMO, ocular motor cranial neuropathies, and MG) are summarized in Table 1. Detailed summaries of each article and case definition can be found in Table E1, and summarized quality assessments in Table E2. For NAION, TED, and blepharospasm, there were no full-length articles that met inclusion criteria.

Figure 1:

Systematic review flow diagram

Table 1:

Sensitivity, specificity, and positive and negative predictive values for ICD code-based definitions of neuro-ophthalmic conditions

| Condition | No. of studies | No. of case definitions/validations | Sensitivity (range) | Specificity (range) | PPV (range) | NPV (range) |
|---|---|---|---|---|---|---|
| IIH | 3 | 4 | | | 54–91% | 74–85% |
| GCA | 2 | 2 | 30–96% | | 94% | |
| Optic neuritis | 3 | 6 | 76–99% | 83–100% | 25–100% | 98–100% |
| NMO | 1 | 3 | 60% | 100% | 43–100% | 100% |
| Cranial nerve palsy | 1 | 3 | | | 98–99% | |
| MG | 2 | 6 | 53–97% | 99–100% | 5–90% | 100% |
| NAION | 0 | | | | | |
| TED | 0 | | | | | |
| Blepharospasm | 0 | | | | | |

Idiopathic intracranial hypertension:

Validation data for the ICD-9 and ICD-10 codes for IIH come from two sources: a single-center hospital-based inpatient and emergency department database in the United States17 and a national patient registry containing both inpatient and subspecialty outpatient data from Sweden18,19. In both cohorts, a single code for IIH in an adult had a positive predictive value of approximately 55% compared to Friedman and Jacobson or modified Dandy criteria, respectively. In the Swedish cohort, algorithm-based definitions that included two separate IIH code instances, age, and acetazolamide prescriptions increased PPV to 89–90% and provided NPV of 74–85%.
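To make the difference between a single-code definition and an algorithm-based definition concrete, the following Python sketch applies both to a patient record. It is a hypothetical illustration: the field names, the age window, and the choice to combine the criteria with a logical AND are assumptions made here, not the published Swedish algorithm.

```python
from dataclasses import dataclass


@dataclass
class PatientRecord:
    iih_code_count: int      # number of separate claims carrying an IIH code
    age_at_first_code: int   # age in years at the first IIH code
    acetazolamide_rx: bool   # any acetazolamide prescription on record


def single_code_definition(p: PatientRecord) -> bool:
    """Case definition requiring at least one IIH code."""
    return p.iih_code_count >= 1


def algorithm_definition(p: PatientRecord, min_codes: int = 2,
                         age_range: tuple = (18, 55)) -> bool:
    """Stricter definition: repeated codes, an age window, and a prescription.

    ASSUMPTION: the age window is a placeholder and the criteria are combined
    with AND; the validated studies should be consulted for the real rules.
    """
    low, high = age_range
    return (p.iih_code_count >= min_codes
            and low <= p.age_at_first_code <= high
            and p.acetazolamide_rx)


one_code = PatientRecord(iih_code_count=1, age_at_first_code=30, acetazolamide_rx=False)
repeat_codes = PatientRecord(iih_code_count=3, age_at_first_code=30, acetazolamide_rx=True)

print(single_code_definition(one_code), algorithm_definition(one_code))
print(single_code_definition(repeat_codes), algorithm_definition(repeat_codes))
```

Tightening the definition in this way excludes patients with an isolated code, which is the mechanism by which PPV can rise while some true cases are lost.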

Giant cell arteritis:

Two studies used inpatient claims data from two different sources (a national public insurance database in France20 and hospital discharge records in Olmsted County, MN21) to examine the validity of ICD-9 and ICD-10 codes for GCA. Both used American College of Rheumatology diagnostic criteria as the reference standard, though these criteria changed slightly between the publication of the two studies. Hospital discharge data usually include a primary diagnosis followed by a number of secondary diagnoses, which can vary in number between state and health system. The Olmsted County study examined ICD codes in either the primary or secondary diagnosis position and found a much lower sensitivity (30.1%) than the French study (96.4%), which limited its evaluation to primary or primary-related admission or discharge diagnoses.

Optic neuritis:

Three studies reported the validity of ICD-9 and ICD-10 codes for optic neuritis. One used pediatric inpatient data from Denmark22, whereas two used adult inpatient and outpatient data from Canada and the U.S., respectively23,24. Sensitivity and specificity were generally high, though PPV varied widely from 25.4% in Canada to 100% in the U.S. Interestingly, increasing the number of required diagnoses or specifying diagnosis position did not have as much of an impact as in IIH or GCA, respectively. Importantly, the reference standard for all three of these studies was the treating clinician’s diagnosis, with no requirement for specific exam or MRI findings. One study also required that a serum ACE level be ordered to identify patients with suspected optic neuritis, though this may have been because it was specifically examining optic neuritis in the setting of anti-TNF therapy for other rheumatologic conditions24.

Neuromyelitis optica:

One of the above studies also included pediatric NMO, comparing ICD-10 G36.0 to the Wingerchuk 2015 diagnostic criteria25. Sensitivity was 60%, and specificity was 100%. PPV improved when the diagnosis was required to be in the primary position and when two or more claims were required.

Ocular motor cranial neuropathies:

One study examined the association between migraine and ocular motor cranial nerve (CN) palsies using public health insurance data from Taiwan, and in doing so, also evaluated the validity of ICD-9 codes for third, fourth, and sixth nerve palsies26. All had very high PPV (>97%) as compared to a chart review by two neurologists. In this study, a diagnosis was confirmed “if the chart clearly described both the symptoms and the signs of these 3 CN palsies with a detailed diagnostic procedure to identify the underlying causes”. However, the signs, symptoms, and diagnostic testing for each cranial neuropathy are not specified. For example, it is unclear if the Parks-Bielschowsky three-step test was required to diagnose a fourth nerve palsy or if testing for ocular myasthenia gravis was required to exclude it as a mimicking cause of third, fourth, or sixth nerve palsy.

Myasthenia gravis:

Two studies validated ICD codes for MG in inpatient and outpatient databases, one using public health insurance data from Canada27 and the other using a single U.S. hospital’s electronic medical record28. Sensitivity ranged from 53.1% to 97.1% and was better for inpatient or outpatient codes compared to inpatient-only and for two code instances compared to just one. PPV varied even more dramatically (4.8–89.7%) depending on the number and type of claims that were required. Specificity and NPV were uniformly excellent (>99%). Of note, ICD codes do not distinguish between ocular and generalized MG, so these are unable to be differentiated in administrative claims databases.

Quality Assessment:

Assessed using adapted reporting guidelines16, study quality varied widely among the 11 studies. Most (n=9, 81.8%) articles acknowledged in the title, abstract, or introduction that validation was a goal of the study. However, complete information about study methodology was variable. Only one (9.1%) described the validation cohort to which the reference standard was applied. While inclusion criteria were described in all studies, only three (27.3%) defined exclusion criteria. The expertise of the reviewers was described in seven (63.6%) studies. In reporting measures of diagnostic accuracy, five (45.5%) studies reported only a single measure; across all studies, PPV (90.9%) and sensitivity (54.5%) were most often reported. Of the ten studies reporting PPV, four (40%) compared their results to the population prevalence to allow readers to understand how prevalent a disease is in the validation cohort as compared to the population. Split-sample revalidation using a separate cohort was rare (n=2, 18.2%). All but one study (90.9%) discussed the applicability of their findings in the discussion.

Discussion:

In this systematic review, we found few studies validating ICD codes for specific neuro-ophthalmic diagnoses and wide variability in the range of reported measures of diagnostic accuracy for IIH, GCA, optic neuritis, NMO, ocular motor cranial neuropathies, and MG. In the 11 studies we identified, there were also notable limitations in study methodology that may affect the accuracy and generalizability of the specific diagnostic algorithms investigated.

Many of the conditions that neuro-ophthalmologists encounter in clinical practice are rare, limiting both clinical experience and research recruitment efforts at any one center. Administrative claims database studies provide the advantage of being able to study a much larger population across many centers (both academic and non-academic) throughout an entire health system, which may yield new insights into disease risk factors and treatment utilization and outcomes with greater generalizability to target future clinical research efforts. However, the validity of these studies relies heavily on the accuracy with which neuro-ophthalmic conditions can be identified in these databases. Understanding the diagnostic accuracy of ICD codes in administrative claims databases is important for neuro-ophthalmologists to be able to interpret the results of previous large database studies, and efforts to improve their accuracy may also aid in future research endeavors.

We observed several gaps in the validation literature for neuro-ophthalmic ICD codes. For both NMO and ocular motor cranial neuropathies, only one study each has evaluated coding accuracy, and in the case of the latter, it is unclear how diagnoses of third, fourth, and sixth nerve palsies were confirmed. We did not find any validation studies of the ICD codes for NAION, TED, and blepharospasm. This is especially problematic for NAION, which has been the subject of a number of population-based and administrative claims studies without prior validation3–7. This is an important gap in health services research in neuro-ophthalmology, and validation studies for NAION and other disorders are needed to improve the quality and impact of future research in this area.

In the validation studies we identified, we observed variability in measures of diagnostic accuracy. The accuracy of these measures depends on a number of factors including the source of validation data (single-center clinical data vs. health insurance database), clinical setting (inpatient vs. outpatient), number of ICD codes, and other criteria. For IIH, optic neuritis, NMO, and MG, sensitivity or PPV improved when more than one claim or additional criteria (e.g. age, medication prescription) were required. In IIH, inpatient and combined inpatient-outpatient data yielded similar overall PPV, but in MG, a single inpatient code had lower sensitivity and specificity but better PPV than combined inpatient-outpatient data. In optic neuritis and MG, clinic-based data provided better sensitivity and PPV than claims databases, but results were similar for IIH, and in GCA, a public health insurance database yielded higher sensitivity than a single hospital’s discharge data. In an ideal scenario, a case definition would be validated in the specific database of interest prior to its use, but as this is not always feasible, validation within a different database may be considered as long as the underlying population and sources of data (inpatient vs. outpatient, administrative claims vs. electronic health records) are similar.

Estimates of the accuracy of ICD codes also depend on the reference standard. For optic neuritis and ocular motor cranial neuropathies, all validation studies compared ICD codes to the treating neurologist’s or ophthalmologist’s diagnosis, but data from tertiary neuro-ophthalmology clinics suggest that overdiagnosis of these and other neuro-ophthalmic conditions is high10,11,29. The extent to which this affects the results of “big data” analyses depends on the specific research question. For studies of healthcare utilization, diagnostic accuracy (that is, whether the clinical diagnosis was correct) is a separate question from healthcare access and delivery. For example, a recent study using commercial health insurance claims data found that only about 60% of patients who were diagnosed with optic neuritis received a brain MRI30. Some of those patients may not actually have had optic neuritis, but to a certain extent, this does not matter: if a clinician thinks that someone has optic neuritis, even if the diagnosis is ultimately incorrect, a brain MRI should probably be obtained to screen for demyelinating lesions, so the fact that 40% of patients do not receive one represents a relevant healthcare disparity. However, it is difficult to determine whether this discrepancy is due to coding error (assigning a diagnosis code for optic neuritis when the clinician actually suspected something else) or clinical practice. Coding accuracy has greater implications for studies of disease epidemiology, prognosis and outcomes – for example, the risk of MS after optic neuritis, which is likely to be underestimated if optic neuritis is clinically overdiagnosed and overcoded.

The validation study literature would be enhanced by standardized reporting. For several of the conditions we studied, sensitivity or PPV were the only parameters reported, and information on specificity or NPV was lacking. This reflects the manner in which validation studies are generally conducted. For clinic-based studies, a patient population is identified from a clinical registry or similar source, and ICD codes are examined to determine how many patients carry the code of interest (sensitivity). For administrative claims databases, the population is identified using ICD codes, and charts are reviewed to determine how many actually have the disease of interest (PPV). This highlights several limitations. First, specificity and NPV cannot be determined unless a comparable group of disease- or ICD code-free patients is examined. For sensitivity studies, determining specificity requires reviewing the charts of disease-free patients and determining how many do not carry the ICD code of interest, and for PPV studies, determining NPV requires a group of patients without the ICD code of interest to determine how many truly do not have the disease of interest. However, defining this population is challenging, especially in neuro-ophthalmology where disease ascertainment often requires specialized examination or testing. For example, to determine NPV in IIH, one would ideally require individuals without the ICD code for IIH to have a fundus exam to confirm lack of papilledema, but in an observational dataset, only a small subset of otherwise healthy young women will have had a recent fundus exam, and the specific reasons for undergoing a recent eye exam are certain to confound the results.
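The four measures discussed above all derive from the same two-by-two table of ICD code status against the reference standard. The Python sketch below shows the standard definitions; the function name and the counts are hypothetical and are not taken from any of the reviewed studies.

```python
def accuracy_measures(tp, fp, fn, tn):
    """Diagnostic accuracy of an ICD code against a reference standard.

    tp: code present, disease present    fp: code present, disease absent
    fn: code absent, disease present     tn: code absent, disease absent
    """
    return {
        "sensitivity": tp / (tp + fn),  # share of true cases that carry the code
        "specificity": tn / (tn + fp),  # share of non-cases without the code
        "ppv": tp / (tp + fp),          # share of coded patients who are true cases
        "npv": tn / (tn + fn),          # share of uncoded patients who are non-cases
    }


# Hypothetical counts for illustration only.
measures = accuracy_measures(tp=45, fp=5, fn=15, tn=935)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

A study that samples only coded patients observes `tp` and `fp` and can therefore report PPV alone, whereas a study that starts from a disease registry observes `tp` and `fn` and can report sensitivity alone. Specificity and NPV need the `tn` cell, which requires reviewing patients without the code or without the disease.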

This raises a second important caveat: while sensitivity and specificity are intrinsic properties of the test or code in question, PPV and NPV depend additionally on the disease prevalence within the population of interest. When interpreting the results of validation studies, the underlying prevalence of disease should therefore be considered, and the results of a validation study in a highly enriched population (e.g. demyelinating disease registry) should not be extrapolated to a population with lower disease prevalence (e.g. nationally representative sample). Furthermore, efforts to increase PPV almost always come at the expense of decreasing NPV, as the positive results that are excluded are likely to contain at least some true positives. In both IIH and optic neuritis, measures to increase PPV by increasing the number of required claims resulted in a corresponding decrease in NPV.
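The dependence of predictive values on prevalence follows from Bayes' theorem and can be sketched in Python as below. The sensitivity, specificity, and prevalence values are hypothetical, chosen only to contrast an enriched cohort with a general population.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a code with fixed sensitivity and specificity."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Hypothetical values: the same code applied to two different populations.
ppv_enriched, npv_enriched = predictive_values(0.90, 0.99, prevalence=0.20)
ppv_general, npv_general = predictive_values(0.90, 0.99, prevalence=0.001)

print(f"Enriched cohort:    PPV={ppv_enriched:.3f}, NPV={npv_enriched:.4f}")
print(f"General population: PPV={ppv_general:.3f}, NPV={npv_general:.4f}")
```

With sensitivity and specificity held constant, PPV falls sharply as prevalence drops, which is why a PPV measured in a registry-based cohort should not be carried over to a nationally representative sample.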

In summary, few studies have examined the validity of ICD codes for neuro-ophthalmic conditions. Measures of diagnostic accuracy have been reported for administrative claims studies of IIH, GCA, optic neuritis, NMO, ocular motor cranial neuropathies, and MG, but not for other conditions such as NAION, TED, or blepharospasm. ICD codes are naturally prone to misclassification error, and limited and variable diagnostic accuracy within specific diagnoses is expected. However, clinicians and researchers should take this into consideration when interpreting and conducting “big data” research studies in neuro-ophthalmology, and additional validation studies are needed to improve the quality of research in this area.

Supplementary Material

References

- 1. Moss HE, Joslin CE, Rubin DS, Roth S. Big Data Research in Neuro-Ophthalmology: Promises and Pitfalls. J Neuro-Ophthalmol Off J North Am Neuro-Ophthalmol Soc. January 2019. doi: 10.1097/WNO.0000000000000751

- 2. Sodhi M, Sheldon CA, Carleton B, Etminan M. Oral fluoroquinolones and risk of secondary pseudotumor cerebri syndrome: Nested case-control study. Neurology. 2017;89(8):792–795. doi: 10.1212/WNL.0000000000004247

- 3. Rubin DS, Matsumoto MM, Moss HE, Joslin CE, Tung A, Roth S. Ischemic Optic Neuropathy in Cardiac Surgery: Incidence and Risk Factors in the United States from the National Inpatient Sample 1998 to 2013. Anesthesiology. 2017;126(5):810–821. doi: 10.1097/ALN.0000000000001533

- 4. Rubin DS, Parakati I, Lee LA, Moss HE, Joslin CE, Roth S. Perioperative Visual Loss in Spine Fusion Surgery: Ischemic Optic Neuropathy in the United States from 1998 to 2012 in the Nationwide Inpatient Sample. Anesthesiology. 2016;125(3):457–464. doi: 10.1097/ALN.0000000000001211

- 5. Cestari DM, Gaier ED, Bouzika P, et al. Demographic, Systemic, and Ocular Factors Associated with Nonarteritic Anterior Ischemic Optic Neuropathy. Ophthalmology. 2016;123(12):2446–2455. doi: 10.1016/j.ophtha.2016.08.017

- 6. Lee MS, Grossman D, Arnold AC, Sloan FA. Incidence of nonarteritic anterior ischemic optic neuropathy: increased risk among diabetic patients. Ophthalmology. 2011;118(5):959–963. doi: 10.1016/j.ophtha.2011.01.054

- 7. Lee Y-C, Wang J-H, Huang T-L, Tsai R-K. Increased Risk of Stroke in Patients With Nonarteritic Anterior Ischemic Optic Neuropathy: A Nationwide Retrospective Cohort Study. Am J Ophthalmol. 2016;170:183–189. doi: 10.1016/j.ajo.2016.08.006

- 8. Guo D, Liu J, Gao R, Tari S, Islam S. Prevalence and Incidence of Optic Neuritis in Patients with Different Types of Uveitis. Ophthalmic Epidemiol. 2018;25(1):39–44. doi: 10.1080/09286586.2017.1339808

- 9. Stein JD, Childers D, Gupta S, et al. Risk factors for developing thyroid-associated ophthalmopathy among individuals with Graves disease. JAMA Ophthalmol. 2015;133(3):290–296. doi: 10.1001/jamaophthalmol.2014.5103

- 10. Fisayo A, Bruce BB, Newman NJ, Biousse V. Overdiagnosis of idiopathic intracranial hypertension. Neurology. 2016;86(4):341–350. doi: 10.1212/WNL.0000000000002318

- 11. Stunkel L, Kung NH, Wilson B, McClelland CM, Van Stavern GP. Incidence and Causes of Overdiagnosis of Optic Neuritis. JAMA Ophthalmol. 2018;136(1):76–81. doi: 10.1001/jamaophthalmol.2017.5470

- 12. Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–269, W64.

- 13. St Germaine-Smith C, Metcalfe A, Pringsheim T, et al. Recommendations for optimal ICD codes to study neurologic conditions: a systematic review. Neurology. 2012;79(10):1049–1055. doi: 10.1212/WNL.0b013e3182684707

- 14. Khokhar B, Jette N, Metcalfe A, et al. Systematic review of validated case definitions for diabetes in ICD-9-coded and ICD-10-coded data in adult populations. BMJ Open. 2016;6(8):e009952. doi: 10.1136/bmjopen-2015-009952

- 15. Jolley RJ, Sawka KJ, Yergens DW, Quan H, Jetté N, Doig CJ. Validity of administrative data in recording sepsis: a systematic review. Crit Care Lond Engl. 2015;19:139. doi: 10.1186/s13054-015-0847-3

- 16. Benchimol EI, Manuel DG, To T, Griffiths AM, Rabeneck L, Guttmann A. Development and use of reporting guidelines for assessing the quality of validation studies of health administrative data. J Clin Epidemiol. 2011;64(8):821–829. doi: 10.1016/j.jclinepi.2010.10.006

- 17. Koerner JC, Friedman DI. Inpatient and emergency service utilization in patients with idiopathic intracranial hypertension. J Neuroophthalmol. 2014;34(3):229–232. doi: 10.1097/WNO.0000000000000073

- 18. Sundholm A, Burkill S, Sveinsson O, Piehl F, Bahmanyar S, Nilsson Remahl AIM. Population-based incidence and clinical characteristics of idiopathic intracranial hypertension. Acta Neurol Scand. 2017;136(5):427–433. doi: 10.1111/ane.12742

- 19. Sundholm A, Burkill S, Bahmanyar S, Nilsson Remahl AIM. Improving identification of idiopathic intracranial hypertension patients in Swedish patient register. Acta Neurol Scand. 2018;137(3):341–346. doi: 10.1111/ane.12876

- 20. Caudrelier L, Moulis G, Lapeyre-Mestre M, Sailler L, Pugnet G. Validation of giant cell arteritis diagnosis code in the French hospital electronic database. Eur J Intern Med. 2019;60:e16–e17. doi: 10.1016/j.ejim.2018.10.004

- 21. Michet CJ, Crowson CS, Achenbach SJ, Matteson EL. The detection of rheumatic disease through hospital diagnoses with examples of rheumatoid arthritis and giant cell arteritis: What are we missing? J Rheumatol. 2015;42(11):2071–2074. doi: 10.3899/jrheum.150186

- 22. Boesen MS, Magyari M, Born AP, Thygesen LC. Pediatric acquired demyelinating syndromes: A nationwide validation study of the Danish National Patient Register. Clin Epidemiol. 2018;10:391–399. doi: 10.2147/CLEP.S156997

- 23. Marrie RA, Ekuma O, Wijnands JMA, et al. Identifying optic neuritis and transverse myelitis using administrative data. Mult Scler Relat Disord. 2018;25:258–264. doi: 10.1016/j.msard.2018.08.013

- 24. Winthrop KL, Chen L, Fraunfelder FW, et al. Initiation of anti-TNF therapy and the risk of optic neuritis: from the safety assessment of biologic ThERapy (SABER) Study. Am J Ophthalmol. 2013;155(1):183–189.e1. doi: 10.1016/j.ajo.2012.06.023

- 25. Wingerchuk DM, Banwell B, Bennett JL, et al. International consensus diagnostic criteria for neuromyelitis optica spectrum disorders. Neurology. 2015;85(2):177–189. doi: 10.1212/WNL.0000000000001729

- 26. Yang C-P, Chen Y-T, Fuh J-L, Wang S-J. Migraine and Risk of Ocular Motor Cranial Nerve Palsies: A Nationwide Cohort Study. Ophthalmology. 2016;123(1):191–197. doi: 10.1016/j.ophtha.2015.09.003

- 27. Breiner A, Young J, Green D, et al. Canadian administrative health data can identify patients with Myasthenia gravis. Neuroepidemiology. 2015;44(2):108–113. doi: 10.1159/000375463

- 28. Wright A, Pang J, Feblowitz JC, et al. A method and knowledge base for automated inference of patient problems from structured data in an electronic medical record. J Am Med Inform Assoc. 2011;18(6):859–867. doi: 10.1136/amiajnl-2011-000121

- 29. Schroeder R. Factors leading to the overdiagnosis of 3rd nerve palsy. In: Las Vegas, NV.

- 30. Meer E, Shindler KS, Yu Y, VanderBeek BL. Adherence to Clinical Trial Supported Evaluation of Optic Neuritis. Ophthalmic Epidemiol. May 2019:1–8. doi: 10.1080/09286586.2019.1621352
