Abstract

The coronavirus disease 2019 (COVID-19) pandemic has led to a massive rise in survey-based research. The paucity of clear guidelines for conducting surveys may pose a challenge to the conduct of ethical, valid and meticulous research. The aim of this paper is to guide authors aiming to publish in scholarly journals regarding the methods and means to carry out surveys for valid outcomes. The paper outlines the various aspects, from the planning, execution and dissemination of surveys to data analysis and the choice of target journals. While providing a comprehensive understanding of the scenarios most conducive to carrying out a survey, the role of ethical approval, survey validation and pilot testing, this brief delves deeper into survey designs, methods of dissemination, ways to secure and maintain data anonymity, the various analytical approaches, reporting techniques and the process of choosing the appropriate journal. Further, the authors analyze retracted survey-based studies and the reasons for their retraction. This review article intends to guide authors in improving the quality of survey-based research by describing the essential tools and means to do so, in the hope of improving the utility of such studies.

Keywords: Pandemics, Data Analysis, Publishing, Surveys and Questionnaires, Periodicals as Topic


INTRODUCTION

Surveys are the principal method used to address topics that require individual self-report about beliefs, knowledge, attitudes, opinions or satisfaction, which cannot be assessed using other approaches.1 This research method allows information to be collected by asking a set of questions on a specific topic to a subset of people and generalizing the results to a larger population. Assessment of opinions in a valid and reliable way requires clear, structured and precise reporting of results. This is possible with a survey built on a meticulous design, followed by validation and pilot testing.2 The aim of this opinion piece is to provide practical advice on conducting survey-based research. It details the ethical and methodological aspects to be considered while performing a survey, the online platforms available for distributing surveys, and the implications of survey-based research.

Survey-based research is a means to obtain quick data, and such studies are relatively easy to conduct and analyse, and are cost-effective (under a majority of the circumstances).3 These are also one of the most convenient methods of obtaining data about rare diseases.4 With major technological advancements and improved global interconnectivity, especially during the coronavirus disease 2019 (COVID-19) pandemic, surveys have surpassed other means of research due to their distinctive advantage of a wider reach, including respondents from various parts of the world having diverse cultures and geographically disparate locations. Moreover, survey-based research allows flexibility to the investigator and respondent alike.5 While the investigator(s) may tailor the survey dates and duration as per their availability, the respondents are allowed the convenience of responding to the survey at ease, in the comfort of their homes, and at a time when they can answer the questions with greater focus and to the best of their abilities.6 Respondent biases inherent to environmental stressors can be significantly reduced by this approach.5 It also allows responses across time-zones, which may be a major impediment to other forms of research or data-collection. This allows distant placement of the investigator from the respondents.

Various digital tools are now available for designing surveys (Table 1).7 Most of these are free, with separate paid premium options. Data analysis can be made simpler, and the cleaning process almost obsolete, by minimising open-ended answer choices.8 Close-ended answers make data collection and analysis efficient by generating an Excel spreadsheet that can be directly accessed and analysed.9 Minimizing the number of questions and making all questions mandatory can further aid this process by bringing uniformity to the responses and making analysis simpler. Surveys are arguably also the most engaging form of research, conditional on the skill of the investigator.

Table 1. Tools for survey-based studies.

| Serial No. | Survey tool | Features (free) | Features (paid) |
|---|---|---|---|
| 1 | SoGoSurvey | Pre-defined templates, multilingual surveys, skip logic, questions and answer bank, progress bar, add comments, import answers, embed multimedia, print surveys. | Advanced reporting and analysis, pre-fill known data into visible and hidden field, automatic scoring, display custom messages based on quiz scores. |
| 2 | Typeform | 3 Typeforms, 10 Q/t, 100 A/m, templates, reports and metrics, embed typeform in a webpage, download data. | 10,000 A/m, unlimited logic jumps, remove typeform branding, payment fields, scoring and pricing calculator, send follow up emails. |
| 3 | Zoho Survey | Unlimited surveys, 10 Q/s, 100 A/s, in-mail surveys, templates, embed in website, scoring, HTTPS encryption, social media promotion, password protection, 1 response collector, Survey builder in 26 languages. | Unlimited questions and respondents and response collectors, question randomization, Zoho CRM, Eventbrite, Slack, Google sheets, Shopify and Zendesk integration, Sentiment analysis, Piping logic, White label survey, Upload favicon, Tableau integration. |
| 4 | Yesinsights | NA | 25,000 A/m, NPS surveys, Website Widget, Unlimited surveys and responses. |
| 5 | Survey Planet | Unlimited surveys, questions and responses, two survey player types, share surveys on social media and emails, SSL security, no data-mining or information selling, embed data, pre-written surveys, basic themes, surveys in 20 languages, basic in-app reports. | Export results, custom themes, question branching and images with custom formatting, alternative success URL redirect, white label and kiosk surveys, e-mail survey completion notifications, four chart types for results. |
| 6 | Survey Gizmo | 3 surveys, unlimited Q/s, 100 A, raw data exports, share reports via URL, various question and answer options, progress bar and share on social media options. | Advanced reports (profile, longitudinal), logic and piping, A/B split testing, disqualifications, file uploads, API access, webpage redirects, conjoint analysis, crosstab reports, TURF reports, open-text analysis, data-cleaning tool. |
| 7 | SurveyMonkey | 10 questions, 100 respondents, 15 question types, light theme customization and templates. | Unlimited, multilingual questions and surveys, fine control systems, analyse, filter and export results, shared asset library, customised logos, colours and URLs. |
| 8 | SurveyLegend | 3 surveys, 6 pictures, unlimited responses, real time analytics, no data export, 1 conditional logic, Ads and watermarked, top notch security and encryption, collect on any device. | Unlimited surveys, responses, pictures, unlimited conditional logic, white label, share real time results, enable data export, 100K API calls and 10GB storage. |
| 9 | Google Forms | Unlimited surveys and respondents, data collection in google spreadsheets, themes, custom logo, add images or videos, skip logic and page branching, embed survey into emails or website, add collaborators. | NA |
| 10 | Client Heartbeat | NA | Unlimited Surveys, 50 + Users, 10,000 + Contacts, 10 Sub-Accounts, CRM syncing/API access, Company branding, Concierge Support. |

Q/t = questions per typeform, A/m = answers per month, Q/s = questions per survey, A/s = answers per survey, NA = not applicable, NPS = net promoter score.

Data protection laws now mandate anonymity while collecting data for most surveys, particularly when they are exempt from ethical review.10,11 Anonymization has the potential to reduce (or at times even eliminate) social desirability bias which gains particular relevance when targeting responses from socially isolated or vulnerable communities (e.g. LGBTQ and low socio-economic strata communities) or minority groups (religious, ethnic and medical) or controversial topics (drug abuse, using language editing software).

Moreover, surveys could be the primary methodology to explore a hypothesis until it evolves into a more sophisticated and partly validated idea after which it can be probed further in a systematic and structured manner using other research methods.

The aim of this paper is to reduce the incorrect reporting of surveys. The paper also intends to inform researchers of the various aspects of survey-based studies and the multiple points that need to be taken under consideration while conducting survey-based research.

SURVEYS IN THE COVID-19 PANDEMIC

The COVID-19 pandemic has led to a distinctive rise in survey-based research.12 The need to socially distance amid widespread lockdowns reduced patient visits to the hospital and brought most other forms of research to a standstill in the early pandemic period. A large number of level-3 bio-safety laboratories are being engaged for research pertaining to COVID-19, thereby limiting the options to conduct laboratory-based research.13,14 Therefore, surveys appear to be the most viable option for researchers to explore hypotheses related to the situation and its impact in such times.15

LIMITATIONS WHILE CONDUCTING SURVEY-BASED RESEARCH

Designing a fine survey is an arduous task and requires skill, even though clear guidelines are available for it. Survey design requires extensive thought on the core questions (based on the hypothesis or the primary research question), with consideration of all possible answers and the inclusion of open-ended options to allow recording of other possibilities. A survey should be robust with regard to the questions gathered and the answer choices available, and it must be validated and pilot tested.16 The survey design may be supplemented with answer choices tailored for the convenience of the responder, to reduce the effort while making it more engaging. Survey dissemination and engagement of respondents also require experience and skill.17

Furthermore, the absence of an interviewer prevents the investigator from seeking clarification on responses to open-ended questions, if any. Internet surveys are also prone to survey fraud through erroneous reporting. Hence, the anonymity of surveys is both a boon and a bane. Samples are skewed because they lack representation of populations absent from the Internet, such as the elderly or the underprivileged. The illiterate population also lacks representation in survey-based research.

The “Enhancing the QUAlity and Transparency Of health Research” network (EQUATOR) provides two separate guidelines replete with checklists to ensure valid reporting of e-survey methodology. These include “The Checklist for Reporting Results of Internet E-Surveys” (CHERRIES) statement and “The Journal of Medical Internet Research” (JMIR) checklist.

COMMON TYPES OF SURVEY-BASED RESEARCH

From a clinician's standpoint, the common survey types include those centered around problems faced by patients or physicians.18 Surveys collecting the opinions of various clinicians on a debated clinical topic, and feedback forms typically served after attending medical conferences, prescribing a new drug or trying a new method for a given procedure, also fall under this category. The formulation of clinical practice guidelines entails Delphi exercises using paper surveys, which are yet another form of survey-mediated research.

The size of a survey depends on its intent, and surveys may be large or small. Therefore, identification of the intent behind the survey is essential to allow the investigator to form a hypothesis and then explore it further. Large population-based or provider-based surveys are often conducted and generate mammoth data over the years, e.g. the National Health and Nutrition Examination Survey, the National Health Interview Survey and the National Ambulatory Medical Care Survey.

SCENARIOS FOR CONDUCTING SURVEY-BASED RESEARCH

Despite the convenience of conducting survey-based research, it is prudent to conduct a feasibility check before embarking on one. Certain scenarios may be key determinants of the fate of survey-based research (Table 2).

Table 2. Various scenarios with respect to survey-based studies.

| Category | Suitable scenarios | Unsuitable scenarios |
|---|---|---|
| Respondent related | 1. Avid Internet users are ideal target demographics. 2. Email database makes reminders convenient. 3. Enthusiastic target demographics nullify the need for incentives. 4. Supports a larger sample size. 5. Non-respondents and respondents must be matched. | 1. Populations under-represented on the Internet cannot be included. 2. Populations with privacy concerns, like transgender people, sex workers or rape survivors, need to be promised anonymity. 3. People lacking motivation and enthusiasm require coaxing and convincing by the physician, or incentives as a last resort. 4. Illiterate population unable to read and comprehend the questions asked. |
| Investigator related | 1. Adequate budget for survey dissemination. 2. Well-versed with handling all software required for the survey. 3. Able to monitor IP address and cookies to avoid multiple responses. 4. Surveys undergo pilot testing, validation testing and reliability testing. 5. Allowing data entry without data editing. | 1. The investigator is a novice at or inexperienced with web-based tools. |
| Survey related | 1. Engaging and interactive using the various tools. 2. Fast evolving content in repeated succession to keep the respondent alert, e.g. Delphi surveys. 3. Suitable to record rare, strange events that later help to develop a hypothesis. | 1. Need of accurate and precise data or observational data. 2. An existing study has already validated key observations (door-to-door study has already been conducted). 3. Qualitative data is being studied. |

ETHICS APPROVAL FOR SURVEY-BASED RESEARCH

Approval from the Institutional Review Board should be obtained as required, in accordance with the CHERRIES checklist. However, rules for approval differ between countries and therefore, local rules must be checked and followed. For instance, in India, the Indian Council of Medical Research released an article in 2017 stating that the concept of broad consent has been updated, which is defined as “consent for an unspecified range of future research subject to a few contents and/or process restrictions.” It talks about “the flexibility of Indian ethics committees to review a multicentric study proposal for research involving low or minimal risk, survey or studies using anonymized samples or data or low or minimal risk public health research.” The reporting of approvals received and applied for and the procedure of written, informed consent followed must be clear and transparent.10,19

The use of incentives in surveys is also an ethical concern.20 Incentives may be monetary or non-monetary. Monetary incentives are usually discouraged as these may attract the wrong population due to the temptation of the monetary benefit. However, monetary incentives have been seen to make surveys receive greater traction, even though this is yet to be proven. Monetary incentives are provided not only as cash or cheque but also in the form of free articles, discount coupons, phone cards, e-money or cashback value.21 These methods, though tempting, must be seldom used. If used, their use must be disclosed and justified in the report. Non-monetary incentives, like a meeting with a famous personality or access to restricted and authorized areas, can also help pique the interest of the respondents.

DESIGNING A SURVEY

As mentioned earlier, the design of a survey is reflective of the skill of the investigator curating it.22 Survey builders can be used to design an efficient survey. These offer the majority of the basic features needed to construct a survey, free of charge. Therefore, surveys can be designed from scratch, using pre-designed templates or by using previous survey designs as inspiration. Taking surveys could be made convenient by using the various aids available (Table 1). Moreover, the investigator should be mindful of the unintended response effects of the ordering and context of survey questions.23

Surveys using clear, unambiguous, simple and well-articulated language record precise answers.24 A well-designed survey accounts for the culture, language and convenience of the target demographic. The age, region, country and occupation of the target population are also considered before constructing a survey. Consistency is maintained in the terms used in the survey and abbreviations are avoided to allow the respondents to have a clear understanding of the question being answered. Universal abbreviations or previously indexed abbreviations maintain the unambiguity of the survey.

Surveys beginning with broad, easy and non-specific questions, as compared to sensitive, tedious and specific ones, receive more accurate and complete answers.25 Placing the relatively tedious and long questions, which require the respondent to do some nit-picking, at the end of the questionnaire improves the response rate of the survey. This prevents the respondent from being discouraged at the very beginning and motivates the respondent to finish the survey. All questions must provide a non-response option and all questions should be made mandatory to increase completeness of the survey. Questions can be framed in a close-ended or open-ended fashion. However, close-ended questions are easier to analyze and less tedious for the respondent to answer, and therefore must be the main component of a survey. Open-ended questions have minimal use as they are tedious, take time to answer and require fine articulation of one's thoughts. Their minimal use is also advocated because the interpretation of such answers requires dedication in terms of time and energy due to the diverse nature of the responses, which is difficult to promise owing to the large sample sizes.26 However, whenever the closed choices do not cover all possibilities, an open answer choice must be added.27,28

Screening questions can be used to ensure that respondents meet certain criteria before gaining access to the survey, in cases where inclusion criteria need to be established to maintain the authenticity of the target demographic. Similarly, a logic function can be used to apply an exclusion. This allows a clean and clear record of responses and makes the job of the investigator easier. The respondents may or may not be given the option to return to the previous page or question to alter their answer, as per the investigator's preference.
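The screening and exclusion logic described above can be illustrated with a minimal sketch. The criteria used here (adult respondents with a confirmed diagnosis, and exclusion of those who took part in the pilot survey) and all field names are hypothetical and chosen only for illustration; actual survey platforms implement this through their own skip-logic settings.

```python
from dataclasses import dataclass


@dataclass
class ScreeningAnswers:
    """Answers to hypothetical screening questions shown before the survey."""
    age: int
    has_diagnosis: bool
    took_pilot_survey: bool


def is_eligible(answers: ScreeningAnswers) -> bool:
    """Return True only if inclusion criteria are met and no exclusion applies."""
    # Inclusion criteria (assumed): adult with a confirmed diagnosis.
    meets_inclusion = answers.age >= 18 and answers.has_diagnosis
    # Exclusion criterion (assumed): respondent already took the pilot survey.
    excluded = answers.took_pilot_survey
    return meets_inclusion and not excluded
```

A respondent failing the check would be routed to a closing page instead of the questionnaire, so that only eligible responses enter the dataset.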

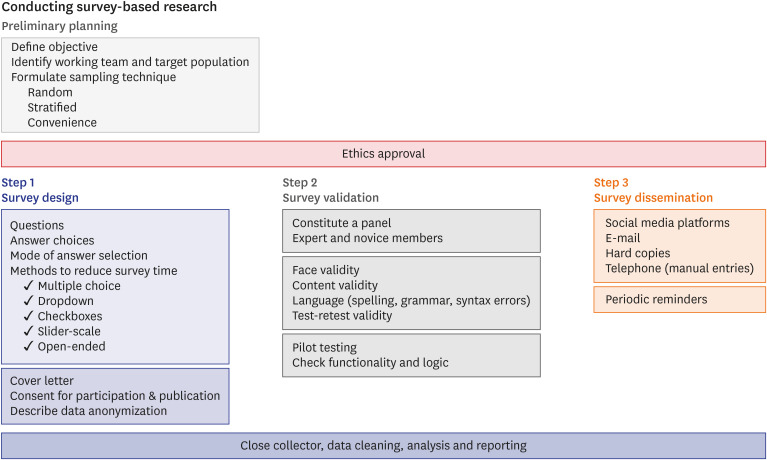

The range of responses received can be reduced in case of questions directed towards the feelings or opinions of people by using slider scales or a Likert scale.29,30 In questions having multiple answers, check boxes are efficient. When a large number of answers are possible, dropdown menus reduce the arduousness.31 Matrix scales can be used to answer questions requiring grading or having a similar range of answers for multiple conditions. Maximum respondent participation and complete survey responses can be ensured by reducing the survey time. Quiz mode or weighted modes allow the respondent to shuffle between questions, allow scoring of quizzes and can be used to complement other weighted scoring systems.32 A flowchart depicting a survey construct is presented as Fig. 1.

Fig. 1. Algorithm for a survey construct.

Survey validation

Validation testing, though tedious and meticulous, is a worthy effort as the accuracy of a survey is determined by its validity. It is indicative of the sample of the survey and the specificity of the questions, such that the data acquired is streamlined to answer the questions being posed or to determine a hypothesis.33,34 Face validation determines the manner of construction of questions such that the necessary data is collected. Content validation determines the relation of the topic being addressed and its related areas with the questions being asked. Internal validation makes sure that the questions being posed are directed towards the outcome of the survey. Finally, test-retest validation determines the stability of questions over a period of time by administering the questionnaire twice, with a time interval between the two tests. For surveys determining the knowledge of respondents pertaining to a certain subject, it is advised to have a panel of experts undertake the validation process.2,35
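As an illustration of test-retest validation, the stability of scores across two administrations can be summarised with a correlation coefficient. The sketch below uses Pearson's correlation, which is one common choice and an assumption here, since no specific statistic is prescribed above; the scores are invented for the example.

```python
from math import sqrt


def pearson_r(first: list[float], second: list[float]) -> float:
    """Pearson correlation between paired scores from two administrations."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long lists with at least two scores")
    n = len(first)
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)
    if var_a == 0 or var_b == 0:
        raise ValueError("scores must vary for the correlation to be defined")
    return cov / sqrt(var_a * var_b)


# Hypothetical total scores of the same five respondents at test and retest.
test_scores = [12, 15, 9, 20, 17]
retest_scores = [13, 14, 10, 19, 18]
r = pearson_r(test_scores, retest_scores)
```

A coefficient close to 1 suggests that respondents answered consistently across the two time points; the threshold regarded as acceptable should be decided by the investigators in advance.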

Reliability testing

If the questions in the survey are posed in a manner that elicits the same or similar response from the respondents irrespective of the language or construction of the question, the survey is said to be reliable. Reliability is thereby a marker of the consistency of the survey. This is of considerable importance in knowledge-based research, where recall ability is tested by making the survey available for answering by the same participants at regular intervals. It can also be used to maintain the authenticity of the survey, by varying the construction of the questions.

Designing a cover letter

A cover letter is the primary means of communication with the respondent, with the intent to introduce the respondent to the survey. A cover letter should include the purpose of the survey, details of those who are conducting it, including contact details in case clarifications are desired. It should also clearly depict the action required by the respondent. Data anonymization may be crucial to many respondents and is their right. This should be respected in a clear description of the data handling process while disseminating the survey. A good cover letter is the key to building trust with the respondent population and can be the forerunner to better response rates. Imparting a sense of purpose is vital to ideationally incentivize the respondent population.36,37 Adding the credentials of the team conducting the survey may further aid the process. It is seen that an advance intimation of the survey prepares the respondents while improving their compliance.

The design of a cover letter needs much attention. It should be captivating, clear and precise, and use a vocabulary and language specific to the target population for the survey. Active voice should be used to make a greater impact. Crowding of the details must be avoided. Italics, bold fonts or underlining may be used to highlight critical information. The tone ought to be polite, respectful, and grateful in advance. The use of capital letters is best avoided, as it is a surrogate for shouting in verbal speech and may leave a bad taste.

The dates of the survey may be intimated, so the respondents may prepare themselves for taking it at a time conducive to them. While emailing a closed group in a convenience sampled survey, using the name of the addressee may impart a customized experience and enhance trust building and possibly compliance. Appropriate use of salutations like Mr./Ms./Mrs. may be considered. Various portals such as SurveyMonkey allow the researchers to save an address list on the website. The addressees may then be reached using an embedded survey link from a verified email address to minimize bouncing back of emails.

The body of the cover letter must be short and crisp, and should not exceed 2–3 paragraphs under ideal circumstances. Earnest efforts to protect confidentiality may go a long way in enhancing response rates.38 While it is enticing to provide incentives to enhance response, these are best avoided.38,39 In cases when indirect incentives are offered, such as provision of results of the survey, these may be clearly stated in the cover letter. Lastly, a formal closing note with the signature of the lead investigator is welcome.38,40

Designing questions

Well-constructed questionnaires are essentially the backbone of successful survey-based studies. With this type of research, the primary concern is the adequate promotion and dissemination of the questionnaire to the target population. The sample population, therefore, needs to be selected carefully and with minimal flaws. The method of conducting the survey is an essential determinant of the response rate observed.41 Broadly, surveys are of two types: closed and open. The method of conducting the survey must be determined depending on the sample population.

Various doctors use their own patients as the target demographic, as it improves compliance. However, this is effective in surveys aimed at a geographically specific, fairly common disease, as the sample size needs to be adequate. Response bias can be identified from the data collected from respondent and non-respondent groups.42,43 Therefore, it is more efficacious to choose a target population whose baseline characteristics are already known. In cases of surveys focused on patients having a rare group of diseases, online surveys or e-surveys can be conducted. Data can also be gathered from the multiple national organizations and societies all over the world.44,45 Computer-generated random selection can be done from this data to choose participants, who can be reached using emails or social media platforms like WhatsApp and LinkedIn. In both these scenarios, closed questionnaires can be conducted. These have restricted access either through a URL link or through e-mail.

In surveys targeting an issue faced by a larger demographic (e.g. pandemics like COVID-19, flu vaccines and socio-political scenarios), open surveys seem the more viable option as they can be easily accessed by the majority of the public and ensure a large number of responses, thereby increasing the accuracy of the study. Survey length should be optimal to avoid poor response rates.25,46
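Computer-generated random selection from a registry, as mentioned above, might be sketched as follows. The registry identifiers, sample size and seed are hypothetical; a fixed seed is used only so that the selection can be reproduced and reported.

```python
import random


def select_participants(registry_ids: list[str], sample_size: int, seed: int) -> list[str]:
    """Draw a simple random sample, without replacement, from a registry."""
    if sample_size > len(registry_ids):
        raise ValueError("sample size exceeds the number of registry entries")
    rng = random.Random(seed)  # dedicated generator so the draw is reproducible
    return rng.sample(registry_ids, sample_size)


# Hypothetical registry of 200 society members identified by a code only.
registry = [f"member-{i:03d}" for i in range(1, 201)]
invited = select_participants(registry, sample_size=50, seed=2020)
```

Because every registry entry has the same chance of selection, the invited group is not influenced by the investigator's preferences, and the known registry size provides a denominator for the response rate.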

SURVEY DISSEMINATION

Uniform distribution of the survey ensures equitable opportunity for the entire target population to access the questionnaire and participate in it. While deciding the target demographic, communities should be studied, and the process of “lurking” is sometimes practiced. Multiple sampling methods are available (Fig. 1).47

Distribution of the survey to the target demographic could be done using emails. Even though e-mails reach a large proportion of the target population, an unknown sender could be blocked, making the use of a personal or previously used email address preferable for correspondence. Adding a cover letter along with the invite adds a personal touch and is hence advisable. Some platforms allow the sender to link the survey portal with the sender's email after verifying it. Notably, in the authors' experience, personal communication over the phone or instant messaging improved responses even after repeated email reminders.48,49

Distribution of the survey over other social media platforms (SMPs, namely WhatsApp, Facebook, Instagram, Twitter, LinkedIn etc.) is also practiced.50,51,52 Surveys distributed on every available platform ensure maximal outreach.53 Other smartphone apps can also be used for wider survey dissemination.50,54 It is important to be mindful of the target population while choosing the platform for dissemination of the survey, as some SMPs such as WhatsApp are more popular in India, while others like WeChat are used more widely in China, and similarly Facebook among the European population. Professional accounts or popular social accounts can be used to promote and increase the outreach of a survey.55 Incentives such as internet giveaways or meet and greets with a favorite social media influencer have been used to motivate people to participate.

However, social-media platforms do not allow calculation of the denominator of the target population, resulting in inability to gather the accurate response rate. Moreover, this method of collecting data may result in a respondent bias inherent to a community that has a greater online presence.43 The inability to gather the demographics of the non-respondents (in a bid to identify and prove that they were no different from respondents) can be another challenge in convenience sampling, unlike in cohort-based studies.

Lastly, manual filling of surveys over the telephone, by narrating the questions and answer choices to the respondents, is used as a last-ditch resort to achieve the desired response rate.56 Studies reveal that surveys released on Mondays, Fridays, and Sundays receive more traction. Also, reminders set at regular intervals help receive more responses. Data collection can be improved in collaborative research by syncing surveys to fill out electronic case record forms.57,58,59

Data anonymity refers to the protection of data received as a part of the survey. This data must be stored and handled in accordance with the patient privacy rights/privacy protection laws in reference to surveys. Ethically, the data must be received on a single source file handled by one individual. Sharing or publishing this data on any public platform is considered a breach of the patient's privacy.11 In convenience sampled surveys conducted by e-mailing a predesignated group, the emails shall remain confidential, as inadvertent sharing of these as supplementary data in the manuscript may amount to a violation of the ethical standards.60 A completely anonymized e-survey discourages collection of Internet protocol addresses in addition to other patient details such as names and emails.

Data anonymity gives the respondent the confidence to be candid and answer the survey without inhibitions. This is especially apparent in minority groups or communities facing societal bias (sex workers, transgenders, lower caste communities, women). Data anonymity aids in giving the respondents/participants respite regarding their privacy. As the respondents play a primary role in data collection, data anonymity plays a vital role in survey-based research.
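A minimal sketch of how identifying details could be stripped from a response before storage is given below. The column names (`name`, `email`, `ip_address`) are hypothetical and would need to match the export of the survey tool actually used; the random identifier is not derived from any personal detail.

```python
import secrets

# Hypothetical names of identifying columns in a survey export.
IDENTIFYING_FIELDS = {"name", "email", "ip_address"}


def anonymize(response: dict) -> dict:
    """Drop identifying fields and attach a random respondent identifier."""
    cleaned = {key: value for key, value in response.items()
               if key not in IDENTIFYING_FIELDS}
    # Random 16-character hex code, unrelated to the respondent's identity.
    cleaned["respondent_id"] = secrets.token_hex(8)
    return cleaned


raw = {
    "name": "A. Person",
    "email": "a.person@example.org",
    "ip_address": "203.0.113.7",
    "q1": "Agree",
    "q2": "No",
}
safe = anonymize(raw)
```

Only the anonymized record would then be shared with analysts, while the original export stays with the single individual handling the source file.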

DATA HANDLING OF SURVEYS

The data collected from the survey responses are compiled in a .xls, .csv or .xlsx format by the survey tool itself. The data can be viewed during the survey duration or after its completion. To ensure data anonymity, a minimal number of people should have access to these results. The data should then be sifted through to invalidate false, incorrect or incomplete data. The relevant and complete data should then be analyzed qualitatively and quantitatively, as per the aim of the study. Statistical aids like pie charts, graphs and data tables can be used to report relative data.
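Sifting a .csv export for incomplete responses could look like the sketch below. The column names and the rule that every listed question must be answered are assumptions made for illustration; the handling of incomplete responses should follow the rules pre-decided for the study.

```python
import csv
import io

# Hypothetical question columns that must all be answered.
REQUIRED_COLUMNS = ["q1", "q2", "q3"]


def split_complete(csv_text: str) -> tuple[list[dict], list[dict]]:
    """Separate complete responses from those with unanswered questions."""
    complete, incomplete = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        answered = all((row.get(col) or "").strip() for col in REQUIRED_COLUMNS)
        (complete if answered else incomplete).append(row)
    return complete, incomplete


# Small in-memory export standing in for the file produced by a survey tool.
export = (
    "respondent_id,q1,q2,q3\n"
    "r1,Yes,Agree,4\n"
    "r2,No,,5\n"
    "r3,Yes,Disagree,2\n"
)
complete, incomplete = split_complete(export)
```

Keeping the incomplete rows in a separate list, rather than deleting them, allows their number to be reported alongside the analysed responses.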

ANALYSIS OF SURVEY DATA

Analysis of the recorded responses is done after the time made available to answer the survey is complete. This ensures that statistical and hypothetical conclusions are established after careful study of the entire database. Incomplete and complete answers can be used to make analysis conditional on the study. Survey-based studies require careful consideration of various aspects of the survey, such as the time required to complete it.61 Cut-off points in the time frame allow authentic answers to be recorded and analyzed, as compared to disingenuously completed questionnaires. Methods of handling incomplete questionnaires and atypical timestamps must be pre-decided to maintain consistency. Since surveys are the only way to reach people, especially during the COVID-19 pandemic, disingenuous survey practices must not be followed, as survey results will later be used to form a preliminary hypothesis.
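Pre-decided cut-off points for completion time can be applied uniformly with a short routine such as the one sketched below. The thresholds of 2 minutes and 60 minutes are purely hypothetical and are not recommendations; they should be fixed before data collection, for example on the basis of pilot testing.

```python
# Hypothetical cut-offs, in seconds, decided before the survey is released.
MIN_SECONDS = 120
MAX_SECONDS = 3600


def flag_atypical(durations: dict[str, int]) -> dict[str, str]:
    """Label each response by whether its completion time is plausible."""
    flags = {}
    for respondent_id, seconds in durations.items():
        if seconds < MIN_SECONDS:
            flags[respondent_id] = "too_fast"
        elif seconds > MAX_SECONDS:
            flags[respondent_id] = "too_slow"
        else:
            flags[respondent_id] = "ok"
    return flags


# Hypothetical completion times taken from the survey tool's timestamps.
flags = flag_atypical({"r1": 45, "r2": 600, "r3": 5400})
```

Flagged responses can then be excluded or analysed separately, according to the rule stated in the study protocol.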

REPORTING SURVEY-BASED RESEARCH

Reporting the survey-based research is by far the most challenging part of this method. A well-reported survey-based study is a comprehensive report covering all the aspects of conducting a survey-based research.

A descriptive report of a survey-based study covers the design of the survey, including the target demographic, sample size, language, type and methodology of the survey, and the inclusion and exclusion criteria followed. Details of pilot testing, validation testing, reliability testing and user-interface testing add value to the report and support the data and analysis. Measures taken to prevent bias and ensure consistency and precision are key inclusions in a report. The report usually mentions approvals received, if any, along with the written informed consent obtained from the participants to use their data for research purposes. It also gives a detailed account of the different distribution and promotional methods followed.

A detailed account of the data input and collection methods, the tools used to maintain the anonymity of the participants and the steps taken to ensure a single response from each respondent indicates a well-structured report. Descriptive information about the website used, the visitors received and the external factors influencing the survey is included. Detailed reporting of the post-survey analysis, including the number of analysts involved, the data cleaning required, if any, the statistical analysis done and the probable hypothesis concluded, is a key feature of well-reported survey-based research. Methods of statistical correction, if used, should be included in the report. The EQUATOR network has two checklists, “The Checklist for Reporting Results of Internet E-Surveys” (CHERRIES) statement and “The Journal of Medical Internet Research” (JMIR) checklist, that can be utilized to construct a well-framed report.62,63 Importantly, self-reporting of biases and errors prevents false hypotheses from being carried forward as the basis of more advanced research. References should be cited using standard recommendations, guided by the journal specifications.64

CHOOSING A TARGET JOURNAL FOR SURVEY-BASED RESEARCH

Surveys can be published as original articles, brief reports or letters to the editor. Interestingly, most modern journals do not explicitly mention surveys in their instructions to authors. Thus, depending on the study design, the authors may choose the article category, such as cohort or case-control interview or survey-based study. It is prudent to mention the type of study in the title. Titles should be concise, not exceeding 10–12 words, and may state the type of study design after a semicolon for clarity and greater citation potential.

While the choice of journal is largely based on the study subject and left to the authors' discretion, it may be worthwhile to explore trends in a journal's archive before proceeding with submission.65 Although the article format is similar across most journals, the specific rules of the target journal should be followed when drafting the article structure before submission.

RETRACTION OF ARTICLES

Retracted articles are articles that are removed from the publication after being released. They are usually retracted when discrepancies come to light regarding the methodology followed, plagiarism, incorrect statistical analysis, inappropriate authorship, fake peer review, fake reporting and the like.66 A considerable increase in such papers has been noticed.67

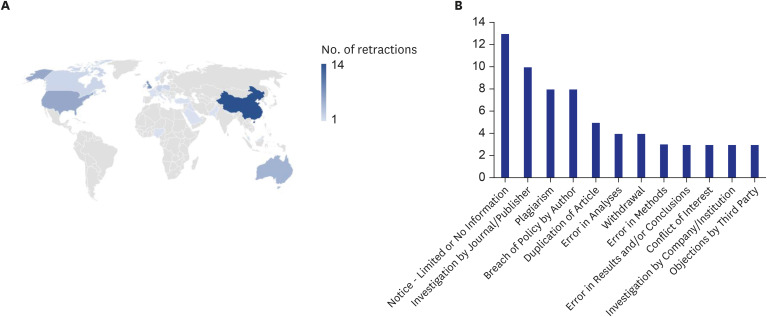

We carried out a search for “surveys” on Retraction Watch on 31st August 2020 and received 81 search results published between November 2006 and June 2020, of which 3 were repeated. Of the 78 unique results, 37 (47.4%) articles were surveys, 23 (29.5%) were of unknown type and 18 (23.1%) reported other types of research (Supplementary Table 1). Fig. 2 gives a detailed description of the causes of retraction of the surveys we found and their geographic distribution.

Fig. 2. Retraction of survey-based studies. (A) Geographic distribution, (B) Common causes.

CONCLUSION

A good survey ought to be designed with a clear objective, the design being precise and focused, with close-ended questions and all probable answer options included. Use of rating scales, multiple choice questions and checkboxes, along with a logical question sequence, engages the respondent while simplifying data entry and analysis for the investigator. Conducting pilot testing is vital to identify and rectify deficiencies in the survey design and answer choices. The target demographic should be well defined, and invitations sent accordingly, with periodic reminders as appropriate. When reporting the survey, it is important to maintain transparency in the methods employed and to clearly state the shortcomings and biases, so as to avoid advocating an invalid hypothesis.

Footnotes

Disclosure: The authors have no potential conflicts of interest to disclose.

- Conceptualization: Gaur PS, Zimba O, Agarwal V, Gupta L.

- Visualization: Gaur PS, Zimba O, Agarwal V, Gupta L.

- Writing - original draft: Gaur PS, Gupta L.

SUPPLEMENTARY MATERIAL

Reporting survey based research

References

- 1. Ball HL. Conducting online surveys. J Hum Lact. 2019;35(3):413–417. doi: 10.1177/0890334419848734.

- 2. Gupta L, Muhammed H, Naveen R, Kharbanda R, Gangadharan H, Misra DP, et al. Insights into the knowledge, attitude and practices for the treatment of idiopathic inflammatory myopathy from a cross-sectional cohort survey of physicians. Rheumatol Int. 2020;40:2047–2055. doi: 10.1007/s00296-020-04695-1.

- 3. Jones TL, Baxter MA, Khanduja V. A quick guide to survey research. Ann R Coll Surg Engl. 2013;95(1):5–7. doi: 10.1308/003588413X13511609956372.

- 4. Gupta L, Lilleker J, Agarwal V, Chinoy H, Agarwal R. COVID-19 and myositis- unique challenges for patients. Rheumatology (Oxford). doi: 10.1093/rheumatology/keaa610. Forthcoming 2020.

- 5. Davis RE, Couper MP, Janz NK, Caldwell CH, Resnicow K. Interviewer effects in public health surveys. Health Educ Res. 2010;25(1):14–26. doi: 10.1093/her/cyp046.

- 6. Schweigman KP, Fabsitz RR, Sorlie P, Welty TK. Respondent bias in the collection of alcohol and tobacco data in American Indians: the Strong Heart Study. Am Indian Alsk Native Ment Health Res. 2000;9(3):1–19. doi: 10.5820/aian.0903.2000.1.

- 7. Wantland DJ, Portillo CJ, Holzemer WL, Slaughter R, McGhee EM. The effectiveness of Web-based vs. non-Web-based interventions: a meta-analysis of behavioral change outcomes. J Med Internet Res. 2004;6(4):e40. doi: 10.2196/jmir.6.4.e40.

- 8. Mahon-Haft TA, Dillman DA. Does visual appeal matter? Effects of web survey aesthetics on survey quality. Surv Res Methods. 2010;4(1):43–59.

- 9. Connor Desai S, Reimers S. Comparing the use of open and closed questions for Web-based measures of the continued-influence effect. Behav Res Methods. 2019;51(3):1426–1440. doi: 10.3758/s13428-018-1066-z.

- 10. Indian Council of Medical Research. National ethical guidelines for biomedical and health research involving human participants. [Updated 2020]. [Accessed September 24, 2020]. https://ethics.ncdirindia.org//asset/pdf/ICMR_National_Ethical_Guidelines.pdf.

- 11. Ye H, Cheng X, Yuan M, Xu L, Gao J, Cheng C, et al. A survey of security and privacy in big data; 16th International Symposium on Communications and Information Technologies (ISCIT), Qingdao; Piscataway, NJ; Institute of Electrical and Electronics Engineers. 2016. pp. 268–272.

- 12. Rossman H, Keshet A, Shilo S, Gavrieli A, Bauman T, Cohen O, et al. A framework for identifying regional outbreak and spread of COVID-19 from one-minute population-wide surveys. Nat Med. 2020;26(5):634–638. doi: 10.1038/s41591-020-0857-9.

- 13. Gewin V. Safely conducting essential research in the face of COVID-19. Nature. 2020;580(7804):549–550. doi: 10.1038/d41586-020-01027-y.

- 14. Jeon J, Kim H, Yu KS. The impact of COVID-19 on the conduct of clinical trials for medical products in Korea. J Korean Med Sci. 2020;35(36):e329. doi: 10.3346/jkms.2020.35.e329.

- 15. Farley-Ripple EN, Oliver K, Boaz A. Mapping the community: use of research evidence in policy and practice. Humanit Soc Sci Commun. 2020;7(1):83.

- 16. van Teijlingen E, Hundley V. The importance of pilot studies. Nurs Stand. 2002;16(40):33–36. doi: 10.7748/ns2002.06.16.40.33.c3214.

- 17. Kelley K, Clark B, Brown V, Sitzia J. Good practice in the conduct and reporting of survey research. Int J Qual Health Care. 2003;15(3):261–266. doi: 10.1093/intqhc/mzg031.

- 18. Gupta L, Misra DP, Agarwal V, Balan S, Agarwal V. Management of rheumatic diseases in the time of covid-19 pandemic: perspectives of rheumatology practitioners from India. Ann Rheum Dis. doi: 10.1136/annrheumdis-2020-217509. Forthcoming 2020.

- 19. Choi IS, Choi EY, Lee IH. Challenges in informed consent decision-making in Korean clinical research: a participant perspective. PLoS One. 2019;14(5):e0216889. doi: 10.1371/journal.pone.0216889.

- 20. Teisl MF, Roe B, Vayda ME. Incentive effects on response rates, data quality, and survey administration costs. Int J Public Opin Res. 2006;18(3):364–373.

- 21. Garg PK. Financial incentives to reviewers: double-edged sword. J Korean Med Sci. 2015;30(6):832–833. doi: 10.3346/jkms.2015.30.6.832.

- 22. Schaeffer NC, Dykema J. Questions for surveys: current trends and future directions. Public Opin Q. 2011;75(5):909–961. doi: 10.1093/poq/nfr048.

- 23. Moore DW. Measuring new types of question-order effects. Public Opin Q. 2002;66(1):80–91.

- 24. Yan T, Tourangeau R. Fast times and easy questions: the effects of age, experience and question complexity on web survey response times. Appl Cogn Psychol. 2008;22(1):51–68.

- 25. Burchell B, Marsh C. The effect of questionnaire length on survey response. Qual Quant. 1992;26(3):233–244.

- 26. Krause N. A comprehensive strategy for developing closed-ended survey items for use in studies of older adults. J Gerontol B Psychol Sci Soc Sci. 2002;57(5):S263–74. doi: 10.1093/geronb/57.5.s263.

- 27. Weller SC, Vickers B, Bernard HR, Blackburn AM, Borgatti S, Gravlee CC, et al. Open-ended interview questions and saturation. PLoS One. 2018;13(6):e0198606. doi: 10.1371/journal.pone.0198606.

- 28. Tourangeau R, Sun H, Conrad FG, Couper MP. Examples in open-ended survey questions. Int J Public Opin Res. 2017;29(4):690–702.

- 29. Younas A, Porr C. A step-by-step approach to developing scales for survey research. Nurse Res. 2018;26(3):14–19. doi: 10.7748/nr.2018.e1585.

- 30. Gheita TA, Salem MN, Eesa NN, Khalil NM, Gamal NM, Noor RA, et al. Rheumatologists' practice during the Coronavirus disease 2019 (COVID-19) pandemic: a survey in Egypt. Rheumatol Int. 2020;40(10):1599–1611. doi: 10.1007/s00296-020-04655-9.

- 31. Schaeffer NC, Presser S. The science of asking questions. Annu Rev Sociol. 2003;29(1):65–88.

- 32. Dolnicar S. Asking good survey questions. J Travel Res. 2013;52(5):551–574.

- 33. Bidari A, Ghavidel-Parsa B, Amir Maafi A, Montazeri A, Ghalehbaghi B, Hassankhani A, et al. Validation of fibromyalgia survey questionnaire and polysymptomatic distress scale in a Persian population. Rheumatol Int. 2015;35(12):2013–2019. doi: 10.1007/s00296-015-3340-z.

- 34. Shah CH, Brown JD. Reliability and validity of the short-form 12 item version 2 (SF-12v2) health-related quality of life survey and disutilities associated with relevant conditions in the U.S. older adult population. J Clin Med. 2020;9(3):661. doi: 10.3390/jcm9030661.

- 35. Agarwal V, Gupta L, Davalbhakta S, Misra DP, Agarwal V, Goel A. Prevalent fears and inadequate understanding of COVID-19 among medical undergraduates in India: results of a web-based survey. J R Coll Physicians Edinb. 2020;50(3):343–350. doi: 10.4997/JRCPE.2020.331.

- 36. Gendall P. The effect of covering letter personalisation in mail surveys. Int J Mark Res. 2005;47(4):365–380.

- 37. Christensen AI, Lynn P, Tolstrup JS. Can targeted cover letters improve participation in health surveys? Results from a randomized controlled trial. BMC Med Res Methodol. 2019;19(1):151. doi: 10.1186/s12874-019-0799-4.

- 38. Edwards P, Roberts I, Clarke M, DiGuiseppi C, Pratap S, Wentz R, et al. Increasing response rates to postal questionnaires: systematic review. BMJ. 2002;324(7347):1183. doi: 10.1136/bmj.324.7347.1183.

- 39. Porter SR, Whitcomb ME. The impact of lottery incentives on student survey response rates. Res Higher Educ. 2003;44(4):389–407.

- 40. Carpenter EH. Personalizing mail surveys: a replication and reassessment. Public Opin Q. 1974;38(4):614–620.

- 41. Yu J, Cooper H. A quantitative review of research design effects on response rates to questionnaires. J Mark Res. 1983;20(1):36–44.

- 42. Cheung KL, Ten Klooster PM, Smit C, de Vries H, Pieterse ME. The impact of non-response bias due to sampling in public health studies: a comparison of voluntary versus mandatory recruitment in a Dutch national survey on adolescent health. BMC Public Health. 2017;17(1):276. doi: 10.1186/s12889-017-4189-8.

- 43. Sheikh K, Mattingly S. Investigating non-response bias in mail surveys. J Epidemiol Community Health. 1981;35(4):293–296. doi: 10.1136/jech.35.4.293.

- 44. Jooste PL, Yach D, Steenkamp HJ, Botha JL, Rossouw JE. Drop-out and newcomer bias in a community cardiovascular follow-up study. Int J Epidemiol. 1990;19(2):284–289. doi: 10.1093/ije/19.2.284.

- 45. Keusch F. Why do people participate in web surveys? Applying survey participation theory to internet survey data collection. MRQ. 2015;65(3):183–216.

- 46. Roszkowski MJ, Bean AG. Believe it or not! longer questionnaires have lower response rates. J Bus Psychol. 1990;4(4):495–509.

- 47. Mindell JS, Tipping S, Pickering K, Hope S, Roth MA, Erens B. The effect of survey method on survey participation: analysis of data from the Health Survey for England 2006 and the Boost Survey for London. BMC Med Res Methodol. 2010;10(1):83. doi: 10.1186/1471-2288-10-83.

- 48. Svensson M, Svensson T, Hansen AW, Trolle Lagerros Y. The effect of reminders in a web-based intervention study. Eur J Epidemiol. 2012;27(5):333–340. doi: 10.1007/s10654-012-9687-5.

- 49. Haldule S, Davalbhakta S, Agarwal V, Gupta L, Agarwal V. Post-publication promotion in rheumatology: a survey focusing on social media. Rheumatol Int. 2020;40(11):1865–1872. doi: 10.1007/s00296-020-04700-7.

- 50. Ahmed S, Gupta L. Perception about social media use by rheumatology journals: Survey among the attendees of IRACON 2019. Indian J Rheumatol. 2020;15(3):171–174.

- 51. Goel A, Gupta L. Social media in the times of COVID-19. J Clin Rheumatol. 2020;26(6):220–223. doi: 10.1097/RHU.0000000000001508.

- 52. Kavadichanda GC, Gupta L, Balan S. Tuberculosis is a significant problem in children on biologics for rheumatic illnesses: results from a survey conducted among practicing rheumatologists in India. Indian J Rheumatol. 2020;15(2):130–133.

- 53. Agarwal V, Sharma S, Gupta L, Misra D, Davalbhakta S, Agarwal V, et al. COVID-19 and psychological disaster preparedness - an unmet need. Disaster Med Public Health Prep. doi: 10.1017/dmp.2020.219. Forthcoming 2020.

- 54. Davalbhakta S, Advani S, Kumar S, Agarwal V, Bhoyar S, Fedirko E, et al. A systematic review of smartphone applications available for corona virus disease 2019 (COVID19) and the assessment of their quality using the mobile application rating scale (MARS). J Med Syst. 2020;44(9):164. doi: 10.1007/s10916-020-01633-3.

- 55. Gupta L, Gasparyan AY, Misra DP, Agarwal V, Zimba O, Yessirkepov M. Information and Misinformation on COVID-19: a cross-sectional survey study. J Korean Med Sci. 2020;35(27):e256. doi: 10.3346/jkms.2020.35.e256.

- 56. Koker O, Demirkan FG, Kayaalp G, Cakmak F, Tanatar A, Karadag SG, et al. Does immunosuppressive treatment entail an additional risk for children with rheumatic diseases? A survey-based study in the era of COVID-19. Rheumatol Int. 2020;40(10):1613–1623. doi: 10.1007/s00296-020-04663-9.

- 57. Mehta P, Gupta L. Combined case record forms for collaborative datasets of patients and controls of idiopathic inflammatory myopathies. Indian J Rheumatol. doi: 10.4103/injr.injr_56_20. Forthcoming 2020.

- 58. Kharbanda R, Naveen R, Misra DP, Agarwal V, Gupta L. Combined case record forms for collating obstetric outcomes in rare rheumatic diseases. Indian J Rheumatol. doi: 10.4103/injr.injr_102_20. Forthcoming 2020.

- 59. Gupta L, Appani SK, Janardana R, Muhammed H, Lawrence A, Amin S, et al. Meeting report: MyoIN – Pan-India collaborative network for myositis research. Indian J Rheumatol. 2019;14(2):136–142.

- 60. Kennickell A, Lane J. Measuring the impact of data protection techniques on data utility: evidence from the survey of consumer finances. In: Domingo-Ferrer J, Franconi L, editors. Privacy in Statistical Databases. PSD 2006. Lecture Notes in Computer Science, vol 4302. Berlin, Heidelberg: Springer; 2006. pp. 291–303.

- 61. Colbert CY, Diaz-Guzman E, Myers JD, Arroliga AC. How to interpret surveys in medical research: a practical approach. Cleve Clin J Med. 2013;80(7):423–435. doi: 10.3949/ccjm.80a.12122.

- 62. Eysenbach G. Improving the quality of web surveys: the checklist for reporting results of internet e-surveys (CHERRIES). J Med Internet Res. 2004;6(3):e34. doi: 10.2196/jmir.6.3.e34.

- 63. Eysenbach G, Wyatt J. Using the internet for surveys and health research. J Med Internet Res. 2002;4(2):E13. doi: 10.2196/jmir.4.2.e13.

- 64. Gasparyan AY, Yessirkepov M, Voronov AA, Gerasimov AN, Kostyukova EI, Kitas GD. Preserving the integrity of citations and references by all stakeholders of science communication. J Korean Med Sci. 2015;30(11):1545–1552. doi: 10.3346/jkms.2015.30.11.1545.

- 65. Gasparyan AY. Choosing the target journal: do authors need a comprehensive approach? J Korean Med Sci. 2013;28(8):1117–1119. doi: 10.3346/jkms.2013.28.8.1117.

- 66. Rivera H. Fake peer review and inappropriate authorship are real evils. J Korean Med Sci. 2018;34(2):e6. doi: 10.3346/jkms.2019.34.e6.

- 67. Gasparyan AY, Ayvazyan L, Akazhanov NA, Kitas GD. Self-correction in biomedical publications and the scientific impact. Croat Med J. 2014;55(1):61–72. doi: 10.3325/cmj.2014.55.61.
