Abstract

Purpose

At present, Argus II is the only retinal prosthesis approved by the US Food and Drug Administration that induces visual percepts in people who are blind from end-stage outer retinal degenerations such as retinitis pigmentosa. It has been shown to work well in sparse, high-contrast settings, but in daily practice visual performance with the device is likely to be hampered by the cognitive load presented by a cluttered real-world environment. In this study, we investigated the effect of a stereo-disparity–based distance-filtering system on four experienced Argus II users for a range of tasks: object localization, depth discrimination, orientation and size discrimination, and people detection and direction of motion.

Methods

Functional vision was assessed in a semicontrolled setup using unfiltered (normal camera) and distance-filtered (stereo camera) imagery. All tasks were forced choice designs and an extension of signal detection theory to latent (unobservable) variables was used to analyze the data, allowing estimation of person ability (person measures) and task difficulty (item measures) on the same axis.

Results

All subjects performed better with the distance filter compared with the unfiltered image (P < 0.001 on all tasks except localization).

Conclusions

Our results show that depth filtering using a disparity-based algorithm has significant benefits for people with Argus II implants.

Translational Relevance

The improvement in functional vision with the distance filter found in this study may have an important impact on vision rehabilitation and quality of life for people with visual prostheses and ultra low vision.

Keywords: retinal prosthesis, image processing, performance measures, multiple alternate forced choice, latent variable

Introduction

The Argus II retinal prosthesis was developed to induce visual percepts in people with end-stage outer retinal degenerations. So far, it is the only electronic retinal implant approved by the US Food and Drug Administration for this purpose. At the end of a 5-year clinical trial with 30 implanted patients, the Argus II system was evaluated and found to be acceptably safe.1 Furthermore, a recent study provided histopathologic evidence supporting the long-term safety of the Argus II retinal implant using morphometric analysis.2

The basic idea behind Argus II is to electrically stimulate the surviving ganglion cells in the retina to elicit visual percepts. However, it requires more than just perception of phosphenes to have any comprehensible visual experience. Functional visual performance with the Argus II has so far been modest, highly variable among recipients, and dependent on several factors such as retinal integrity, macular contact of the array, behavioral adaptation, and perceptual learning.3 A wide range of functional vision abilities, ranging from improved light perception to reading very large print, has been reported by recipients of Argus II implants.4–6 In almost all instances, performance was measured in the lab under idealized conditions, that is, using images with high contrast that have few or no distracting elements.

One of the fundamental limitations reported by Argus II users is the difficulty they have in processing information in complex everyday scenes. The current system does not provide sufficient resolution for common tasks such as object localization and finding people in a crowded environment, which is important in social settings. Image processing algorithms that simplify complex elements in a scene may therefore be a logical step toward improving the existing system. For example, filtering out irrelevant background information could improve functional performance.

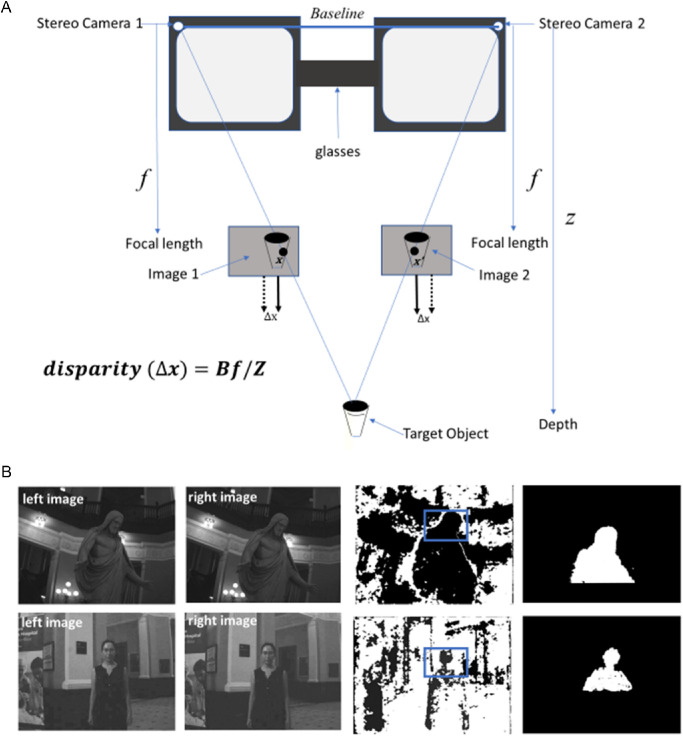

In this study, we used a disparity-based distance filtering system to remove background objects beyond a preset distance range. This range could be selected by the users depending on their specific task requirements. For this study, all the distances were set by the experimenters. The range of distances that can be set with the distance filter is 30 cm to 108 m. In this setup, depth selection was performed on the basis of object disparity in images from two cameras mounted on glasses worn by the subject (Figs. 1A, 1B, 2). The underlying principle is that, for two cameras with focal length f separated by a baseline distance B, an object at distance z from the cameras will have a disparity Δx = x − x′ = Bf/z. Disparity is 0 for objects at infinity and increases as the object moves closer to the cameras. Based on the disparities of objects across the image, a disparity map was generated using the FPGA core from Nerian Vision GmbH (Stuttgart, Germany).7,8 This disparity map was then used to filter out objects outside a specified depth (i.e., disparity) range. The baseline used in the current system was 141.26 mm, the focal length was 3.7 mm, and each pixel subtended an angle of 0.074°.
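As a rough illustration of the relation Δx = Bf/z, the following Python sketch converts between object distance and disparity using the baseline and per-pixel angle quoted above. It assumes an ideal pinhole model in which the focal length in pixel units is 1/tan(pixel angle). The function names are hypothetical and are not part of the Nerian FPGA core.

```python
import math

BASELINE_M = 0.14126       # stereo baseline B (141.26 mm, from the text)
PIXEL_ANGLE_DEG = 0.074    # angle subtended by one pixel (from the text)

# Assumption: ideal pinhole camera, so the focal length in pixel units
# follows from the angle subtended by a single pixel.
FOCAL_PX = 1.0 / math.tan(math.radians(PIXEL_ANGLE_DEG))


def disparity_from_distance(z_m: float) -> float:
    """Disparity in pixels for an object z_m metres away (B * f / z)."""
    if z_m <= 0:
        raise ValueError("distance must be positive")
    return BASELINE_M * FOCAL_PX / z_m


def distance_from_disparity(disparity_px: float) -> float:
    """Inverse relation: distance in metres for a disparity in pixels."""
    if disparity_px <= 0:
        return math.inf  # zero disparity corresponds to an object at infinity
    return BASELINE_M * FOCAL_PX / disparity_px


if __name__ == "__main__":
    for z in (1.0, 3.0, 6.0, 108.0):
        d = disparity_from_distance(z)
        print(f"z = {z:6.1f} m -> disparity = {d:7.2f} px")
```

Under these assumptions, an object at 108 m maps to roughly one pixel of disparity, and disparity grows as the object approaches the cameras.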

Figure 1.

(A) Schematic of the stereo disparity system. (B) Example of images from the stereo camera system and the corresponding disparity maps. The first two images on the left are from the left and right cameras, respectively, and the third image shows the disparity map. The blue rectangle shows the field of view of the system and the fourth image is the corresponding filtered image.

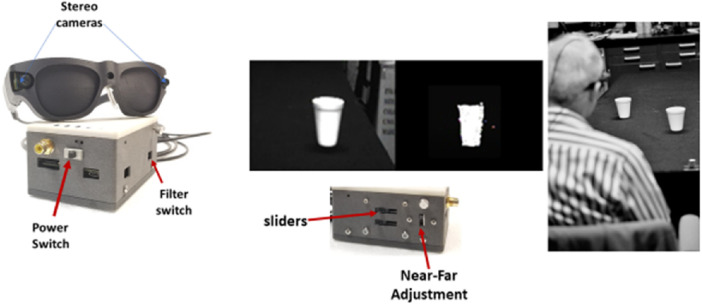

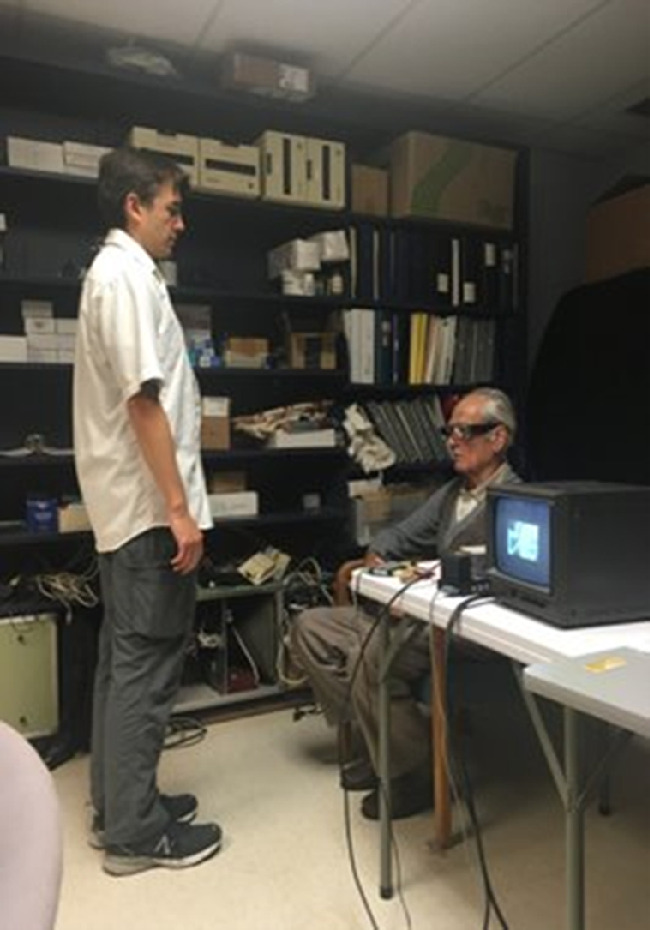

Figure 2.

Glasses with stereo cameras and processor box, example of images from normal camera and distance filter camera, and an experimental setup (right).

The aim of this study was to investigate the efficacy of a stereo-based distance-filtering system on functional vision performance by experienced Argus II users, that is, whether visual performance could be enhanced in situations representative of the real world. The functional vision tasks assessed—judging the location of a person at different distances, locating the closer of two drinking cups placed on a table top, orientation of a hanging object at eye level, and direction of movement of people boarding an escalator—are everyday scenarios. The assessment was done by comparing performance on the same task without and with the depth filter to investigate how the filter could assist in these tasks. Because we wanted to keep the tasks as close to the real world as possible, subjects were allowed to use any monocular depth cues available (e.g., size, distance, elevation, motion parallax, and brightness) while performing any of the tasks in the study.

Methods

Subjects

Four subjects implanted with an Argus II retinal prosthesis participated in the study; Table 1 provides demographic information. These subjects were experienced Argus II users and had participated in other psychophysical studies. The study protocol was approved by the Johns Hopkins Medicine Institutional Review Board, informed consent was obtained before participation in the study, and the study conformed to the tenets of the Declaration of Helsinki.

Table 1.

Subject Demographics

| Subject | Age (y) | Gender | No. of Years of Using Argus II | No. of Active Electrodes |
|---|---|---|---|---|
| 1 | 87 | Male | 12 | 55 |
| 2 | 80 | Male | 10 | 56 |
| 3 | 67 | Female | 5 | 57 |
| 4 | 59 | Male | 4 | 57 |

System Hardware and Software Specifications

Distance filtering was achieved with a custom-designed stereo camera pair based on ON Semiconductor Python480 sensors (800 × 600). Data were streamed to a custom-made processor box built around a Xilinx Zynq-7000 SoC. The disparity-based digital image processing algorithm was based on FPGA technology (Nerian Vision GmbH) that identified and removed everything outside of a user-preset disparity range. This range was adjustable and set by the experimenters before each of the tasks; the range of distances used for various tasks is given in Table 2. A switch was used to select near (low pass), far (high pass), or range (band pass), and disparity limits could be adjusted by the user through a pair of potentiometers (“sliders”) (Fig. 2). The nearest possible distance (disparity) that could be resolved by the system was 30 cm (320 pixels) and the farthest was 108 m (1 pixel). Any object or person within the selected distance range, regardless of its actual brightness, was presented as bright; the rest of the image was black, thus creating a high-contrast white-on-black image (Figs. 1B, 2). The camera image corresponding with the subject's view was monitored during all experiments.
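The three switch positions can be pictured as thresholds applied to the disparity map. The sketch below is a minimal Python illustration, not the FPGA implementation: the function name, the parameter names `d_min` and `d_max`, and the convention that non-positive disparities mark pixels without a valid stereo match are all assumptions made for the example.

```python
from typing import List


def distance_filter(disparity_map: List[List[float]], mode: str,
                    d_min: float, d_max: float) -> List[List[int]]:
    """Return a white-on-black mask (255 = kept, 0 = removed).

    Near objects have LARGE disparities, so:
      "near"  (low pass in distance)  keeps disparity >= d_min
      "far"   (high pass in distance) keeps disparity <= d_max
      "range" (band pass)             keeps d_min <= disparity <= d_max
    Assumption: disparity <= 0 means "no valid match" and is always removed.
    """
    if mode not in ("near", "far", "range"):
        raise ValueError(f"unknown mode: {mode}")

    def keep(d: float) -> bool:
        if d <= 0:
            return False
        if mode == "near":
            return d >= d_min
        if mode == "far":
            return d <= d_max
        return d_min <= d <= d_max

    # Kept pixels are rendered bright regardless of their original brightness.
    return [[255 if keep(d) else 0 for d in row] for row in disparity_map]


if __name__ == "__main__":
    row = [[300.0, 90.0, 20.0, 0.0]]  # hypothetical disparities in pixels
    for mode in ("near", "far", "range"):
        print(mode, distance_filter(row, mode, d_min=50.0, d_max=100.0))
```

Thresholding in disparity rather than in metres avoids a division per pixel, which is one plausible reason to express the limits this way.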

Table 2.

Summary of the Experimental Setup

| Experiment Name | No. of Response Choices | Range of Target Distances | Range of Distance Filter Settings | Target Description | No. of Trials |
|---|---|---|---|---|---|
| Depth discrimination | 3 | 1, 3, and 6 m | <1.5 and <4 m | Person | 60 |
| Object localization | 2 | 45–85 cm | <30 to <150 cm | Small foam cups | 40 |
| Orientation (single) | 3 | 1 m | <125 cm | 22”, 44” and 66” long and 6.5” wide foam boards | 45 |
| Orientation (double) | 6 | 1 m | <125 cm | 22”, 44” and 66” long and 6.5” wide foam boards | 45 |
| Size discrimination (double) | 3 | 1 m | <125 cm | 22”, 44” and 66” long and 6.5” wide foam boards | 45 |
| Size discrimination (triple) | 6 | 1 m | <125 cm | 22”, 44” and 66” long and 6.5” wide foam boards | 45 |
| Direction of motion (people) | 2 | 5–6 m | <536 cm | People | Range: 9–78 |
| Direction of motion (escalator) | 2 | 5–6 m | <536 cm | Escalator | 10 |

Tasks

All tasks were structured as m-alternative forced choice (m-AFC), which means that there were m ≥ 2 response choices on each trial with one response choice defined to be “correct” by the experimenter. The number of response choices varied for different tasks as we tried to induce different demands across the tasks. Whenever possible, we chose real-world stimuli (people, cups, escalator) so that subject performance would better relate to real-world functional performance. Broadly, these tasks were similar to the functional low-vision observer rated assessment, developed for and validated in Argus II users.9

As shown in Table 2, a total of eight tasks were assessed in this study: depth discrimination, object localization, orientation with one object, orientation with two objects, size discrimination with two objects, size discrimination with three objects, direction of motion of people, and direction of motion of an escalator. All tasks were performed under two settings: no filter and distance filter. In both settings, subjects were allowed to take as much time as needed to complete the task and to use any available depth cues. The settings were presented in random order, generated for each trial by a random number generator in MATLAB (MathWorks, Natick, MA), and were set by the experimenter. At the start of each task, subjects were given the opportunity to familiarize themselves with the task and the settings available for that task. At the end of the experiment, each subject was asked about their experience with each setting and whether they perceived any difference between the settings. The tasks are described in detail below.

Depth Discrimination

Subjects were seated in the lab with the room lights on and were asked to find a target person standing directly in front of them and report whether the person was standing at 1, 3, or 6 m. Thus, the depth discrimination task was 3-AFC. In the distance filter setting, subjects were instructed to use the near–far adjustment switch on the side of the box (Fig. 2) to set the cut-off distance of the filter to 1.5 or 4.0 m. They were aware that in the near mode they would see the person only at 1 m, and in the far mode the person would be visible at 1 or 3 m. When there was no image in either of the settings it meant that the person was at 6 m. Subjects were reminded to check the two modes before they gave a response. In the no filter setting, they were asked to judge based on the available depth cues (Fig. 3).

Figure 3.

Shows the experimental set up for the depth discrimination task. Shown is the person standing in front of the subject at 1 m while the subject was seated directly in front of the target. The task was to report whether the person was standing at 1 m, 3 m, or 6 m.

Object Localization

The purpose of this task was to assess the benefits of using the distance filter to locate objects within close range, such as a drinking cup on the table in front of the subject. This was a 2-AFC task in which subjects had to report which of two cups was closer: left or right. Two white foam drinking cups of equal size were set up on a table that was covered with black felt cloth. Subjects were given an opportunity to touch the cups to familiarize themselves with their texture and size. The slider could be adjusted to narrow or widen the range of the low pass filter. Thus, adjusting the slider to the far end brought both cups into view, sliding it to an intermediate point would show only the closer cup, and sliding it to the close end filtered out both cups. In this way, when they moved the slider, they were able to gauge which of the cups was closer.

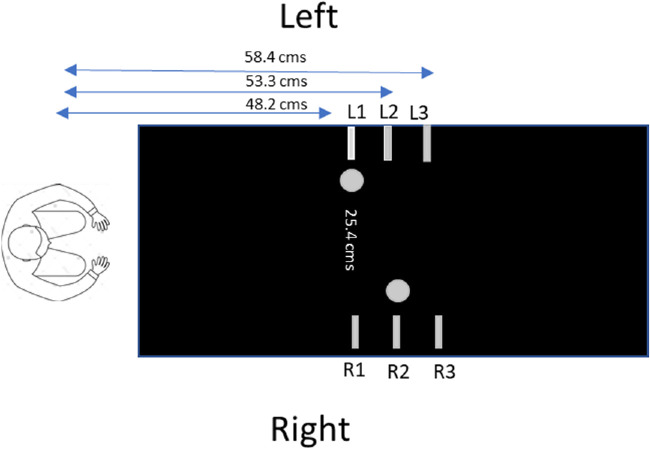

The two cups were separated laterally by 25.4 cm, with three possible distances for each cup (Fig. 4). Due to the narrow field of view of the Argus II implant, subjects could not see both cups simultaneously, so they had to scan back and forth while performing the task. Cups were randomly moved on each trial by varying their distances from the subject, but the lateral separation remained constant for all trials. They were placed at four possible locations as seen in Figure 4: L1 and R2, L2 and R3, R1 and L2, and R2 and L3. Which of the four locations was presented on each trial was determined through a random number generator in MATLAB. Subjects were asked to find each cup and report which cup was closer, in the case of distance-filtering by adjusting the slider. Forty trials each were performed in filtered and unfiltered conditions with responses (left vs. right) recorded.

Figure 4.

Schematic of the object localization task.

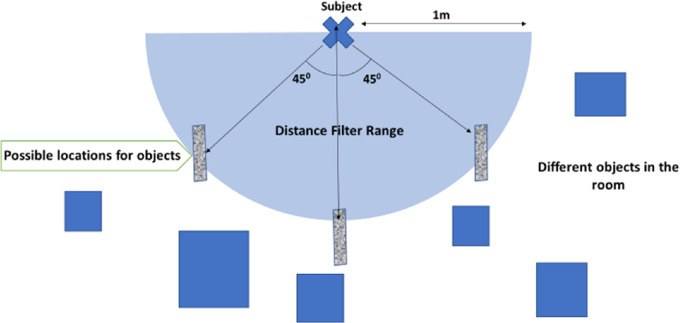

Orientation and Size Discrimination

Orientation (used here as in the term “orientation and mobility”) is the spatial relationship between the location of a person and the position of an object or target in space. For this task subjects were standing, and one to three foam boards of three different lengths (Table 2) with black and white noise patterns were suspended at 1 m distance, in three possible orientations: straight ahead, 45° to the right, and 45° to the left (Figs. 5, 6). Distractor objects were placed in various background locations throughout the room. The low pass distance filter was set to 1.25 m (Fig. 5). Objects were presented at one, two, or all three orientations in a randomized configuration. For assessing orientation, subjects were told the number of objects present and asked to report the orientation(s) and, for size discrimination, if more than one, to report the longer object. Responses were recorded for each trial (Supplementary Movies S1 and S2).

Figure 5.

Schematic of the experimental setup for orientation task. The sizes and locations for the background objects are not scaled to size. The distances of distractor objects ranged from 2 to 4 m.

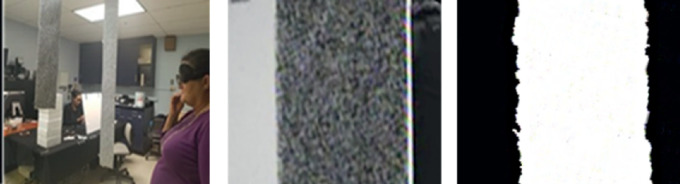

Figure 6.

Example of a subject performing the test (left), the unfiltered view of a suspended target (center), and the distance-filtered view (right). The center and right images are from the video output device monitoring the settings.

Direction of Motion

Subjects were at the bottom of a pair of escalators moving in opposite directions, seated 5 to 6 m off to the side, and observed passersby approaching or coming off the escalator (Fig. 7). The task was to report the direction of motion of the people and escalator (explained in detail in the next two sections). The low pass distance filter was set to the center of the closer escalator. Because there was (in reality) only one direction of motion for the closest escalator, the scene was left-right reversed in the image processor on half of the 10 blocks, randomly chosen. Subjects were asked to wear earplugs to suppress sound cues.
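The left-right reversal on a random half of the blocks can be sketched as follows. This is an illustrative Python example only: the study's randomization and image reversal were done in the experimental hardware and software, and the function names and the seed parameter here are hypothetical.

```python
import random
from typing import List, Optional


def choose_mirrored_blocks(n_blocks: int = 10,
                           seed: Optional[int] = None) -> List[int]:
    """Pick half of the block indices (0-based) at random for mirroring."""
    rng = random.Random(seed)  # local generator keeps the choice reproducible
    return sorted(rng.sample(range(n_blocks), n_blocks // 2))


def mirror_left_right(image: List[List[int]]) -> List[List[int]]:
    """Reverse every row so that apparent motion direction is flipped."""
    return [list(reversed(row)) for row in image]


if __name__ == "__main__":
    mirrored = choose_mirrored_blocks(10, seed=1)
    frame = [[1, 2, 3], [4, 5, 6]]  # hypothetical 2 x 3 image
    for block in range(10):
        shown = mirror_left_right(frame) if block in mirrored else frame
        print(block, "mirrored" if block in mirrored else "normal", shown[0])
```

Mirroring exactly half of the blocks keeps the two possible answers equally frequent, so a subject cannot score above chance by always reporting the escalator's true direction.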

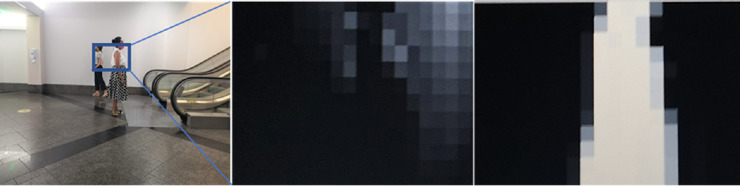

Figure 7.

View of the escalator from where the subjects were seated (left), with no filter (center), and distance filter settings (right). The center and right images are the input images for the Argus II system. The blue rectangle in the left image shows the Argus II field of view within the much wider camera field of view, while the center and right images show the magnified view of image inside the blue rectangle, which was presented to the Argus II user in the no filter condition (center) and the distance filtered condition (right). Note that under the no filter condition both persons appear dark against the brighter background, whereas in the distance filtered image only the closer person is seen as a bright silhouette after filtering out the background information (See Supplementary Movies S3 and S4).

People Direction

The task was to observe the scene and report the direction of motion (left to right or right to left) of each person they saw walking onto or off the closer escalator. Subjects were not told when an event occurred, and as a result the number of events detected varied between subjects and trials. For each subject, the session ended after 10 blocks of 10 trials each, for a total of 100 trials; there were a total of two sessions, one for the no filter condition and one for the distance filter condition.

Closer Escalator Direction

After the completion of each block (10 trials), subjects were asked to indicate the direction of motion of the closer escalator. They were asked to report the direction of escalator motion based on the direction of all people detected during that block (even if none were detected). The possible responses were “left to right” or “right to left.”

Data Analysis

In typical psychophysics experiments, measures of subject performance are expressed in units of the physical stimulus scale (e.g., luminance, frequency, size). Therefore, the threshold for a physical stimulus is a measure of both a person's “ability” and the “difficulty” of the stimulus. In our m-AFC tasks there is no known physical measure of task difficulty, and we assumed that both person ability and task difficulty were defined by a latent (or unobservable) variable. Because our tasks varied in the number m of response choices (Table 2), we analyzed our data using a method that extends signal detection theory (SDT)—the standard mathematics used in psychophysics to analyze m-AFC responses10—to latent variables, which allowed us to estimate person measures (person ability) and item measures (item difficulty) on the same latent variable axis in d′ units (the unit of measurement in SDT).11

The estimation of item and person measures was done in two steps. First, item measures were estimated using the equation:

$$p_h(C) = \int_{-\infty}^{\infty} \phi(x + \delta_h)\,\Phi(x)^{m-1}\,dx$$

where ph(C) represents probability correct for the sample of persons for item h, δh represents the item measure for item h, ϕ(x) represents the standard normal distribution with Φ(x) its cumulative distribution function, m − 1 is the number of incorrect response choices, and x is the magnitude of the latent variable in d′ units. In other words, probability correct was transformed into d′ units for each item given the set of responses from the sample of persons; 95% confidence intervals were calculated by mapping binomial confidence intervals (estimated using the Wilson method)12 from probability correct into d′ units using the same equation. After all item measures were estimated, each person measure was independently estimated using a maximum likelihood estimation, with person measure standard errors being the reciprocal of the square root of the Hessian (because a maximum likelihood estimation was used). All computations were done in R using code from the article by Bradley and Massof.11 The item and person measures estimated by this analysis are reported here.
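To make the first step concrete, the sketch below evaluates the standard SDT expression for probability correct in an m-AFC task, the integral of ϕ(x − d′)Φ(x)^(m−1) over x, and inverts it numerically. It assumes that the item measure equals −d′ for the average person (person measure 0), as described in the text. The authors' analysis used R code from Bradley and Massof; this Python version, with its function names, Simpson integration, and bisection search, is only an illustration.

```python
import math


def normal_pdf(x: float) -> float:
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def p_correct(d_prime: float, m: int, steps: int = 2000) -> float:
    """Integral of pdf(x - d') * cdf(x)**(m - 1), by Simpson's rule."""
    lo = min(0.0, d_prime) - 8.0
    hi = max(0.0, d_prime) + 8.0
    h = (hi - lo) / steps  # steps must be even for Simpson's rule
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        w = 1 if i in (0, steps) else (4 if i % 2 else 2)
        total += w * normal_pdf(x - d_prime) * normal_cdf(x) ** (m - 1)
    return total * h / 3.0


def d_prime_from_p(p: float, m: int) -> float:
    """Invert p_correct by bisection (p_correct increases with d')."""
    if p >= 1.0:
        return math.inf   # all correct: no finite d'
    if p <= 0.0:
        return -math.inf
    lo, hi = -10.0, 10.0
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if p_correct(mid, m) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def item_measure(p: float, m: int) -> float:
    """Item measure for the average person: the negative of d'."""
    return -d_prime_from_p(p, m)


def wilson_interval(k: int, n: int, z: float = 1.96):
    """Wilson score interval for k successes in n trials."""
    p = k / n
    denom = 1.0 + z * z / n
    centre = (p + z * z / (2.0 * n)) / denom
    half = z * math.sqrt(p * (1.0 - p) / n + z * z / (4.0 * n * n)) / denom
    return centre - half, centre + half


def item_measure_with_ci(k: int, n: int, m: int):
    """Estimate and interval; bounds swap because higher p means easier."""
    p_lo, p_hi = wilson_interval(k, n)
    return item_measure(k / n, m), item_measure(p_hi, m), item_measure(p_lo, m)
```

At chance performance (probability correct of 1/m) the item measure is 0, and it becomes more negative as the proportion correct rises.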

Because both item measures and person measures were estimated on the same “ability” axis, they could be directly compared with each other. More positive person measures represent greater person ability while more negative person measures represent less ability. The ability of the “average” person defined the axis origin, d′ = 0. More negative item measures represent easier items and more positive item measures represent more difficult items. In general, for any given person measure and item measure, the person measure minus the item measure represents the relative ability of the person to the item, with chance performance for that person–item combination being predicted when the two measures are equal. Thus, d′ = 0 represents chance performance for the sample of persons. Because a positive item measure would represent an item where subjects, on average, performed below chance, we should not expect any positive item measures in our analysis.
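The relation between person and item measures can be illustrated with a small maximum likelihood sketch in which d′ for each person-item pair is the person measure minus the item measure. This is a hedged Python illustration rather than the authors' R code: the data layout, the ternary search, and the finite-difference curvature used for the standard error are all assumptions chosen for brevity.

```python
import math


def _pdf(x: float) -> float:
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def _cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def p_correct(d_prime: float, m: int, steps: int = 400) -> float:
    """m-AFC probability correct for a given d' (Simpson's rule)."""
    lo = min(0.0, d_prime) - 8.0
    hi = max(0.0, d_prime) + 8.0
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        w = 1 if i in (0, steps) else (4 if i % 2 else 2)
        total += w * _pdf(x - d_prime) * _cdf(x) ** (m - 1)
    return total * h / 3.0


def log_likelihood(theta: float, items) -> float:
    """items: list of (item_measure, m, n_correct, n_trials) tuples."""
    total = 0.0
    for delta, m, k, n in items:
        p = p_correct(theta - delta, m)       # d' = person minus item
        p = min(max(p, 1e-12), 1.0 - 1e-12)   # guard against log(0)
        total += k * math.log(p) + (n - k) * math.log(1.0 - p)
    return total


def estimate_person_measure(items, lo: float = -6.0, hi: float = 6.0):
    """Ternary search for the maximum; assumes a single interior peak."""
    for _ in range(80):
        a = lo + (hi - lo) / 3.0
        b = hi - (hi - lo) / 3.0
        if log_likelihood(a, items) < log_likelihood(b, items):
            lo = a
        else:
            hi = b
    theta = 0.5 * (lo + hi)
    h = 0.01  # step for the numerical second derivative
    curvature = (log_likelihood(theta + h, items)
                 - 2.0 * log_likelihood(theta, items)
                 + log_likelihood(theta - h, items)) / (h * h)
    se = 1.0 / math.sqrt(-curvature) if curvature < 0 else math.inf
    return theta, se


if __name__ == "__main__":
    # Hypothetical data: one 2-AFC item with measure 0, 15 of 20 correct.
    print(estimate_person_measure([(0.0, 2, 15, 20)]))
```

When every response is correct, the likelihood keeps increasing with the person measure and the search simply runs to the upper bound, which reflects the fact that no finite estimate exists in that case.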

Data were combined into a single dataset that included both no filter and distance filter conditions. To compare each subject's performance in the two conditions across all tasks, we estimated two person measures per subject, one for each condition (4 subjects × 2 conditions = 8 person measures), and then used a paired t-test to determine if there were any significant differences in performance between the two conditions. To compare subject performance in the two conditions for each individual task, we estimated a single person measure for all four subjects in each condition in each task (2 person measures per task × 8 tasks = 16 total person measures) and used Welch's test (a t-test for unequal variances) to determine if there were any significant differences in subject performance per task. A paired t-test was also used to analyze differences in the number of people detected at the closer escalator under the no filter and distance filter conditions.
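For readers less familiar with the two tests, the following Python sketch computes a paired t statistic and a Welch t statistic with Welch-Satterthwaite degrees of freedom from raw samples. The numbers in the usage example are placeholders, not study data, and the sketch does not reproduce how the study derived its tests from person measures and their standard errors. P values are omitted because the standard library has no t distribution.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic for a minus b, with n - 1 degrees of freedom."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / math.sqrt(variance(diffs) / n)
    return t, n - 1


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch t statistic (unequal variances) and its approximate df."""
    va = variance(a) / len(a)   # squared standard error of mean(a)
    vb = variance(b) / len(b)   # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


if __name__ == "__main__":
    # Placeholder values for illustration only (not data from this study).
    no_filter = [1.0, 2.0, 3.0, 4.0]
    with_filter = [2.0, 4.0, 6.0, 8.0]
    print("paired:", paired_t(no_filter, with_filter))
    print("welch: ", welch_t(no_filter, with_filter))
```

With the first argument taken as the no filter condition, a negative t indicates higher values with the distance filter, which matches the sign of the paired statistics reported in the Results.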

Results

All subjects were able to perform the tasks except subject 1, who was only available to participate in the depth discrimination and object localization tasks. These were experienced Argus II users, and they used the distance filtering system without any discomfort. The distance for each experiment was set before beginning the experiments, and subjects could not manipulate the slider, except for the object localization task where the near and distant cups could be visualized only when the subject adjusted the slider in order to correctly determine which of the two cups was closer.

Item Measures

Table 3 shows all estimated item measures (in d′ units) and their 95% confidence intervals. All estimated item measures were negative, as expected, because positive item measures would represent performance below chance. Note that the item measures showed a range of d′ values indicating that the difficulty levels varied across tasks. The most negative item measure indicates the easiest task, and the least negative item measure indicates the most difficult task. As seen in Table 3, orientation with two objects was the easiest task, whereas size discrimination with three objects was the hardest task for our subjects.

Table 3.

Estimated Item Measures and 95% Confidence Intervals for All Tasks in Our Experiment

| Type of Task | Item Measures |
|---|---|
| Orientation (double objects) | –2.17 (–2.26 to –1.05) |
| Direction of motion (people) | –1.31 (–2.08 to –0.24) |
| Orientation (single object) | –1.20 (–2.19 to 0.20) |
| Direction of motion (escalator) | –0.95 (–2.38 to 0.92) |
| Depth discrimination | –0.92 (–1.06 to –0.35) |
| Object localization | –0.78 (–1.24 to 0.64) |
| Size discrimination (double objects) | –0.36 (–0.42 to –0.14) |
| Size discrimination (triple objects) | –0.08 (–1.16 to 1.45) |

As explained in the Methods, detecting persons moving toward the closer escalator was required to determine their direction of motion. We found that the detection rate varied widely between subjects and between the no filter and distance filter settings. The total number of moving persons detected across all trials was 6, 21, and 20 in the no filter condition and 73, 70, and 69 with the distance filter for subjects 2, 3, and 4, respectively, out of 100 possible. The number of detections per block ranged from 0 to 2, 1 to 3, and 0 to 6 under the no filter condition and from 4 to 10, 3 to 9, and 4 to 9 with the distance filter for subjects 2, 3, and 4, respectively, out of 10 possible. A paired t-test showed that the numbers of people detected were significantly higher under the distance filter than under the no filter condition for subject 2 (t(10) = −8.3, P < 0.001), subject 3 (t(10) = −8.8, P < 0.001), and subject 4 (t(10) = −5.9, P < 0.001).

Person Measures

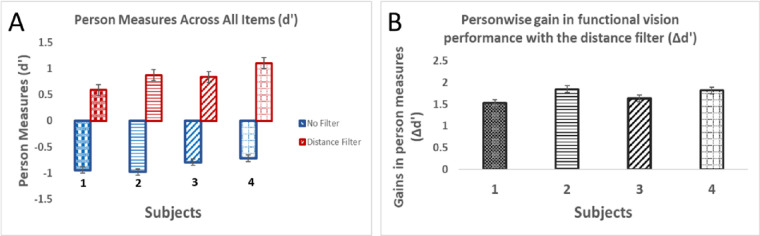

Figure 8A shows estimated person measures (in d′ units) for each person across all tasks under no filter and distance filter conditions. All subjects performed significantly better with the distance filter. The d′ values with the distance filter (mean = 0.92, SD = 0.07) were significantly higher (t(3) = −15.78, P < 0.001) than unfiltered d′ values (mean = −0.89, SD = 0.02), which means that subject ability was on average better with the distance filter than with the no filter setting for all tasks. This was true even when individual person scores for each individual task were compared between distance filter and no filter (Fig. 9A).

Figure 8.

Person measures obtained for no filter (blue) and distance filter (red) combined for all tasks (A), and person-wise gains in performance with the distance filter (B), showing that all subjects were more capable with the distance filter when doing the same tasks. Error bars represent standard errors.

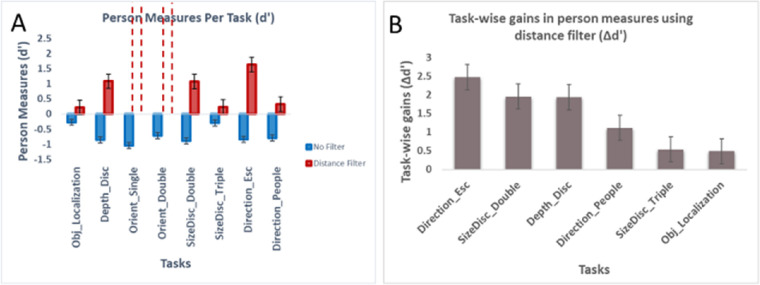

Figure 9.

Task-wise estimated person measures (A) and gains in performance using distance filter for each task (B). Error bars represent standard error of the mean. Note that the person measures for the distance filter condition could not be estimated for the orientation task for single and double objects because the subjects scored all correct in these tasks (d′ = ∞).

The person measures for each individual task were compared between no filter and distance filter using Welch's t-test for unequal variances. Except for the object localization task (t(408.4) = −134.59, P > 0.5), the differences between no filter and distance filter conditions were highly statistically significant for all applicable pairs where d′ values were calculated: depth discrimination (t(455.9) = 556.06, P < 0.001), size discrimination with two objects (t(260.9) = 298.7, P < 0.001), size discrimination with three objects (t(262.15) = 92.5, P < 0.001), direction of motion of the escalator (t(43.5) = 61.3, P < 0.001), and direction of motion of people (t(93.5) = 133.14, P < 0.001). All four subjects showed similar gains with the distance filter compared with the no filter condition (Fig. 8B).

Discussion

In this study, we assessed the efficacy of image processing using a disparity-based distance filter on functional vision performance in Argus II users. Subjects performed a wide range of tasks that were based on real-world situations, including object localization; discrimination of depth, orientation, and size; people detection; and direction of motion. Subjects could use all available visual information related to depth such as relative size, elevation, motion parallax, and brightness under the no filter condition, whereas the filtered condition only provided size and movement. Nonetheless, as shown in the Results, subjects performed significantly better with than without the distance filter. This confirms our hypothesis that the poor resolution provided by the Argus II system does not allow users to make effective use of monocular depth cues; differences in performance between the two settings can be explained by the removal of “clutter” or extraneous information using the depth filtering system, and enhancement of the remaining information.

The task that improved most with the distance filter was the direction of motion of the escalator (Fig. 9B); this result can be attributed to the fact that decisions were based on the number of event detections per block, which was much higher in the depth-filtered condition. The task that showed the least difference was object localization, most likely because the black background with high-contrast objects (white cups) provided similar views in both conditions. Size cues were available in both conditions, whereas motion parallax relative to the background and vertical disparity (unfiltered) versus object selection using the slider (filtered) constituted the only differences between the conditions. Object selection in the filtered view was still slightly more effective, but not significantly so; size alone may have provided sufficient information in both conditions. It is worth noting that the only tasks harder than object localization were those with size discrimination (Table 3), suggesting that the size of the cups played a minor role in the decision. It should also be noted that this task was the only one where subjects used the slider to manipulate the filter settings, which could have made the task more complex in the depth-filtered condition. Finally, it is interesting that this was the least real world of our tasks in terms of contrast and clutter, which is consistent with the benefit of the depth filter increasing in more realistic scenarios.

The difference in gains between tasks is reminiscent of a finding in perceptual learning. Fine and Jacobs13 compared perceptual learning across a range of tasks with varying complexities and found that the greatest improvement with perceptual learning occurred for tasks that used natural stimuli compared with those with less complex stimuli. This finding seems to be true in our case as well, because the task that improved the most was direction of motion where the subjects were given the most real-world setting in our study compared with all the other tasks. In contrast, we allowed limited time for learning—only a short practice time and a modest number of trials for each condition and task—so the parallel with perceptual learning may be coincidental.

In the case of person-wise gains with the system, it was reassuring to find that all subjects in our study received similar gains from the distance filter system irrespective of their age or experience with the Argus II system. Thus, there is no evidence that our findings would have been different with a larger sample; it is possible, though, that less experienced Argus II users would have shown even more pronounced benefits of distance filtering.

There are several real-world implications of these results. For example, we found that performance gain with the distance filter was greatest in one of the direction of motion tasks. Detecting the direction of motion is a challenging task for Argus II users. The low contrast and clutter in the real world make it difficult to detect movement with the current system, demonstrated by the significantly improved detection rate for moving persons under the distance-filtered condition, which is an important secondary finding. Therefore, filtering out background objects can make a difference to Argus II users. Using the SDT-based latent variable analysis, we were able to estimate how much easier some tasks were relative to others. This is useful information for training during rehabilitation because it is encouraging for patients to begin rehabilitation with easier tasks and progress to more difficult ones. Similarly, it is useful to know which tasks are likely to see the most improvement from image enhancements when developing a training protocol. Another useful result of the SDT-based latent variable analysis is that we were able to obtain a set of calibrated item measures for Argus II users, so that future researchers can directly estimate person measures by administering the same set of items. Although for calibration purposes it is ideal to have a large sample of subjects, this is not possible with Argus II users because the population is limited.

One may wonder if our subjects’ performance correlates with standard visual function measures, such as visual acuity. We cannot answer this question for lack of reliable visual function measures in this population. It is true that some Argus II users have quantifiable grating acuity measures better than 2.9 logMAR,14 but none of our four subjects reaches this criterion with statistical significance. Yet it is clear, both from their use of the system in daily life and from the results presented here, that they can effectively use the system for visual information gathering. This finding reemphasizes our previously published notion that ULV, including prosthetic vision, should be assessed through functional rather than clinical vision measures (Adeyemo O, et al. IOVS. 2017;58:ARVO E-Abstract 4688).

There are a few limitations to the current study. Even though we used real-world stimuli whenever possible (e.g., people, escalator, and cups), the experiments were conducted within a controlled setting rather than an outdoor or home environment. Another limitation was the absence of a convenient user interface in the current prototype system. Subjects were not given an opportunity to explore use of the filter or distance switches, except in the distance discrimination task, or of the slider, except in the object localization task. Further research with a more ergonomic system is necessary to determine the effect of experience using these features. It is likely that with extensive training and rehabilitation, further gains can be achieved in the use of this system; however, our prototype was not configured to be taken home, and we did not have enough data to assess the effects of practice on performance in our study. A final limitation is that we did not include any mobility tasks in this study. Mobility is an important aspect of daily life, and the effect of the distance filter on mobility tasks should be investigated in a future study.

It is important to note that every one of our subjects reported that they preferred the distance filter over the no filter setting. They found that the filter made tasks much easier to perform and reported that they would use the system more often, and specifically for tasks such as direction of motion and orientation. Rehabilitation of individuals with visual prostheses is challenging because there are limited tests available for assessing functional vision and there are no tools available for training. The functional vision tasks that are used in this study could be used as a guideline in designing a rehabilitation curriculum for people with a visual prosthesis.

The approach we used in this study is generalizable to developing functional vision assessments in other profoundly visually impaired individuals, be it native ultra low vision or vision partially restored through treatments such as gene therapy or stem cells. It is possible that these tools will need to be recalibrated in other patient groups; however, we have found other performance measures scale similarly in disparate groups such as BrainPort and Argus II users and individuals with native ultra low vision (Adeyemo O, et al. IOVS. 2017;58:ARVO E-Abstract 4688). Of note, the distance filter was developed as an accessory to be incorporated into the Argus II system with an upcoming update to the Video Processing Unit. However, the development was stalled due to the termination of the Argus II program by Second Sight Medical Products to focus on their Orion cortical prosthesis system. If the Orion receives approval from the US Food and Drug Administration, the distance filter system could be implemented into that system. In addition, there are more than 50 research groups working on developing visual prostheses; a distance filter option could be a viable image enhancement tool for these systems.

Supplementary Material

Acknowledgments

The authors thank the Argus II subjects for contributing their valuable time and Liancheng Yang for his technical support for this study.

Funded by the National Eye Institute (R42 EY025136) to GD/AME Corp.

Disclosure: A. Kartha, None; R. Sadeghi, None; M.P. Barry (E); C. Bradley, None; P. Gibson (E); A. Caspi (C, P); A. Roy (E); G. Dagnelie (C, P)

References

- 1. Da Cruz L, Dorn JD, Humayun MS, et al. Five-year safety and performance results from the Argus II Retinal Prosthesis System clinical trial. Ophthalmology. 2016; 123(10): 2248–2254.

- 2. Lin T-C, Wang L-C, Yue L, et al. Histopathologic assessment of optic nerves and retina from a patient with chronically implanted Argus II retinal prosthesis system. Transl Vis Sci Technol. 2019; 8(3): 31.

- 3. Ahuja A, Yeoh J, Dorn J. Factors affecting perceptual threshold in Argus II retinal prosthesis subjects. Transl Vis Sci Technol. 2013; 2(4): 1.

- 4. Stronks CH, Dagnelie G. The functional performance of the Argus II retinal prosthesis. Expert Rev Med Devices. 2014; 11(1): 23–30.

- 5. Dagnelie G, Christopher P, Arditi A, et al. Performance of real-world functional vision tasks by blind subjects improves after implantation with the Argus II retinal prosthesis system. Clin Exp Ophthalmol. 2017; 45: 152–159.

- 6. Da Cruz L, Coley BF, Dorn JD, et al. The Argus II epiretinal prosthesis system allows letter and word reading and long-term function in patients with profound vision loss. Br J Ophthalmol. 2013; 97: 632–636.

- 7. Hirschmüller H. Accurate and efficient stereo processing by semi-global matching and mutual information. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05), San Diego, CA; 2005, pp. 807–814.

- 8. Stereo matching on the FPGA. Available at: https://nerian.com/products/stereo-vision-core/. Accessed August 10, 2020.

- 9. Geruschat D, Richards T, Arditi A, et al. An analysis of observer-rated functional vision in patients implanted with the Argus II Retinal Prosthesis System at three years. Clin Exp Optom. 2016; 99: 227–232.

- 10. Green DM, Swets JA. Signal Detection Theory and Psychophysics. New York: Macmillan; 1966.

- 11. Bradley C, Massof RW. Estimating measures of latent variables from m-alternative forced choice responses. PLoS One. 2019; 14(11): e0225581.

- 12. Wilson EB. Probable inference, the law of succession and statistical inference. J Am Stat Assoc. 1927; 22(158).

- 13. Fine I, Jacobs RA. Comparing perceptual learning across tasks: a review. J Vis. 2002; 2(5): 190–203.

- 14. Humayun MS, Dorn JD, Da Cruz L, et al. Interim results from the international trial of Second Sight's visual prosthesis. Ophthalmology. 2012; 119(4): 779–788.
