Abstract

We perform an analysis of the average generalization dynamics of large neural networks trained using gradient descent. We study the practically-relevant “high-dimensional” regime where the number of free parameters in the network is on the order of or even larger than the number of examples in the dataset. Using random matrix theory and exact solutions in linear models, we derive the generalization error and training error dynamics of learning and analyze how they depend on the dimensionality of data and signal to noise ratio of the learning problem. We find that the dynamics of gradient descent learning naturally protect against overtraining and overfitting in large networks. Overtraining is worst at intermediate network sizes, when the effective number of free parameters equals the number of samples, and thus can be reduced by making a network smaller or larger. Additionally, in the high-dimensional regime, low generalization error requires starting with small initial weights. We then turn to non-linear neural networks, and show that making networks very large does not harm their generalization performance. On the contrary, it can in fact reduce overtraining, even without early stopping or regularization of any sort. We identify two novel phenomena underlying this behavior in overcomplete models: first, there is a frozen subspace of the weights in which no learning occurs under gradient descent; and second, the statistical properties of the high-dimensional regime yield better-conditioned input correlations which protect against overtraining. We demonstrate that standard application of theories such as Rademacher complexity are inaccurate in predicting the generalization performance of deep neural networks, and derive an alternative bound which incorporates the frozen subspace and conditioning effects and qualitatively matches the behavior observed in simulation.

Keywords: Neural networks, Generalization error, Random matrix theory

1. Introduction

Deep learning approaches have attained high performance in a variety of tasks (LeCun et al., 2015, Schmidhuber, 2015), yet their learning behavior remains opaque. Strikingly, even very large networks can attain good generalization performance from a relatively limited number of examples (Canziani et al., 2017, He et al., 2016, Simonyan and Zisserman, 2015). For instance, the VGG deep object recognition network contains 155 million parameters, yet generalizes well when trained with the 1.2 million examples of ImageNet (Simonyan & Zisserman, 2015). This observation leads to important questions. What theoretical principles explain this good performance in this limited-data regime? Given a fixed dataset size, how complex should a model be for optimal generalization error?

In this paper we study the average generalization error dynamics of various simple training scenarios in the “high-dimensional” regime where the number of samples and parameters are both large (), but their ratio is finite (e.g Advani, Lahiri, & Ganguli, 2013). We start with simple models, where analytical results are possible, and progressively add complexity to verify that our findings approximately hold in more realistic settings. We mainly consider a student–teacher scenario, where a “teacher” neural network generates possibly noisy samples for a “student” network to learn from Saad and Solla, 1995, Seung et al., 1992 and Watkin, Rau, and Biehl (1993). This simple setting retains many of the trade-offs seen in more complicated problems: under what conditions will the student overfit to the specific samples generated by the teacher network? How should the complexity (e.g. number of hidden units) of the student network relate to the complexity of the teacher network?

First, in Section 2, we investigate linear neural networks. For shallow networks, it is possible to write exact solutions for the dynamics of batch gradient descent learning as a function of the amount of data and signal to noise ratio by integrating random matrix theory results and the dynamics of learning. This is related to a large body of prior work on shallow networks (Bai and Silverstein, 2010, Baldi and Chauvin, 1991, Baldi and Hornik, 1995, Benaych-Georges and Rao, 2011, Benaych-Georges and Rao, 2012, Chauvin, 1990, Dodier, 1995, Dunmur and Wallace, 1999, Hoyle and Rattray, 2007, Kinouchi and Caticha, 1995, Krogh and Hertz, 1992, LeCun et al., 1991, Seung et al., 1992). Our results reveal that the combination of early stopping and initializing with small-norm weights successfully combats overtraining, and that overtraining is worst when the number of samples equals the number of model parameters.

For deep linear neural networks, in Section 3 we derive a reduction of the full coupled, nonlinear gradient descent dynamics to a much smaller set of parameters coupled only through a global scalar. Our reduction is applicable to any depth network, and yields insight into the dynamical impact of depth on training time. The derivation here differs from previous analytic works on the dynamics of deep linear networks (Saxe, McClelland, & Ganguli, 2014) in that we do not assume simultaneous diagonalizability of the input and input–output correlations. It shows that generalization error in this simple deep linear case behaves qualitatively similarly to the shallow linear case.

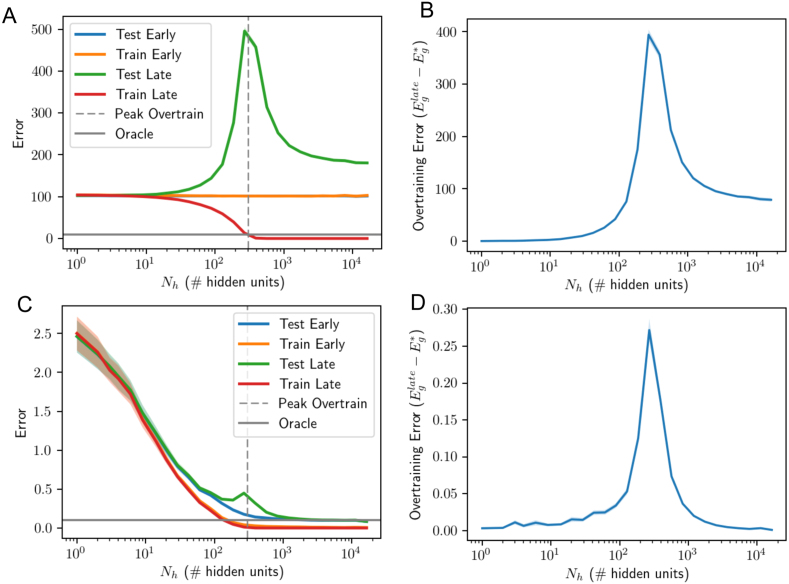

Next, in Section 4 we turn to nonlinear networks. We consider a nonlinear student network which learns from a dataset generated by a nonlinear teacher network. We show through simulation that the qualitative intuitions gained from the linear analysis transfer well to the nonlinear setting. Remarkably, we find that catastrophic overtraining is a symptom of a model whose complexity is exactly matched to the size of the training set, and can be combated either by making the model smaller or larger. Moreover, we find no evidence of overfitting: the optimal early-stopping generalization error decreases as the student grows larger, even when the student network is much larger than the teacher and contains many more hidden neurons than the number of training samples. Our findings agree both with early numerical studies of neural networks (Caruana, Lawrence, & Giles, 2001) and the good generalization performance of massive neural networks in recent years (e.g. Belkin, Hsu, Ma et al., 2019, Simonyan and Zisserman, 2015, Spigler et al., 2018). In this setting, we find that bigger networks are better, and the only cost is the computational expense of the larger model.

We also analyze a two layer network to gain theoretical intuition for these numerical results. A student network with a wide hidden layer can be highly expressive (Barron, 1993), even if we choose the first layer to be random and fixed during training. However, in this setting, when the number of hidden units is larger than the number of samples , our linear solution implies that there will be no learning in the zero-eigenvalue directions of the hidden layer covariance matrix. It follows that further increasing the network size will not actually increase the complexity of the functions the network can learn. Furthermore, there seems to be an implicit reduction in model complexity and overfitting at late stopping times when is increased above because the non-zero eigenvalues are actually pushed away from the origin, thus reducing the maximum norm learned by the student weights. Finally, through simulations with the MNIST dataset, we show that these qualitative phenomena are recapitulated on real-world datasets.

The excellent performance of complex models may seem to contradict straightforward applications of VC dimension and Rademacher bounds on generalization performance (Zhang, Bengio, Hardt, Recht, & Vinyals, 2017). We take up these issues in Sections 6, 7. Our results show that the effective complexity of the model is regularized by the combined strategy of early stopping and initialization with small norm weights. This two-part strategy limits the norm of the weights in the network, thereby limiting the Rademacher complexity. This strategy is analogous to that used in support vector machines to allow generalization from kernels with infinite VC dimension: generalization is preserved provided the training process finds a large-margin (low-norm weight vector) solution on the training data. Hence, while a large deep network can indeed fit random labels, gradient-trained DNNs initialized with small-norm weights learn simpler functions first and hence generalize well if there is a consistent rule to be learned.

Remarkably, even without early stopping, generalization can still be better in larger networks (Belkin, Hsu, Ma et al., 2019). To understand this, we derive an alternative bound on the Rademacher complexity of a two layer non-linear neural network with fixed first layer weights that incorporates the dynamics of gradient descent. We show that complexity is limited because of a frozen subspace in which no learning occurs, and overtraining is prevented by a larger gap in the eigenspectrum of the data in the hidden layer in overcomplete models. Our bound provides new intuitions for generalization behavior in large networks, and qualitatively matches the findings of our simulations and prior work: even without early stopping, overtraining is reduced by increasing the number of hidden units when .

Our results begin to shed light on the diverse learning behaviors observed in deep networks, and support an emerging view that suggests erring on the side of large models before pruning them later.

2. Generalization dynamics in shallow linear neural networks

To begin, we study the simple case of generalization dynamics in a shallow linear neural network receiving -dimensional inputs. We consider a standard student–teacher formulation, a setting that has been widely studied using statistical mechanics approaches (see Engel and Van Den Broeck, 2001, Seung et al., 1992, Watkin et al., 1993 for reviews, and Baldi and Chauvin, 1991, Baldi et al., 1990, Baldi and Hornik, 1995, Chauvin, 1990, Dunmur and Wallace, 1999, Kinouchi and Caticha, 1995, Krogh and Hertz, 1992, LeCun et al., 1991 for settings more closely related to the linear analysis adopted in this section). Here a student network with weight vector trains on examples generated by a teacher network with weights (see Fig. 1). The teacher implements a noisy linear mapping between inputs and associated scalar outputs ,

| (1) |

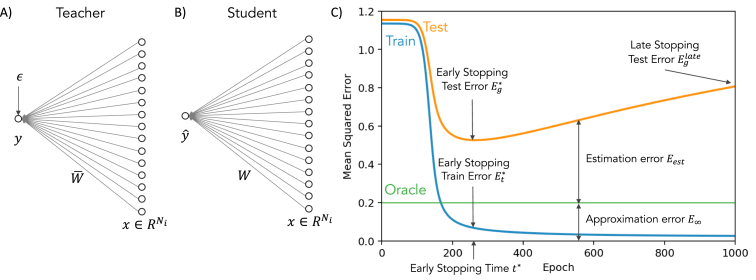

Fig. 1.

Learning from a noisy linear teacher. (A) A dataset of examples is created by providing random inputs to a teacher network with a weight vector , and corrupting the teacher outputs with noise of variance . (B) A student network is then trained on this dataset . (C) Example dynamics of the student network during full batch gradient descent training. Training error (blue) decreases monotonically. Test error, also referred to as generalization error, (yellow), here computable exactly (4), decreases to a minimum at the optimal early stopping time before increasing at longer times (), a phenomenon known as overtraining. Because of noise in the teacher’s output, the best possible student network attains finite generalization error (“oracle”, green) even with infinite training data. This error is the approximation error . The difference between test error and this best-possible error is the estimation error . (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

In the equation above, denotes noise in the teacher’s output. We will model both the noise and the teacher weights as drawn i.i.d. from a random distribution with zero mean and variance and respectively. The signal-to-noise ratio parametrizes the strength of the rule underlying the dataset relative to the noise in the teacher’s output. In our solutions, we will compute the generalization dynamics averaged over all possible realizations of to access the general features of the learning dynamics independent of the specific problems encountered. Finally, we assume that the inputs are drawn i.i.d. from a Gaussian with mean zero and variance so that each example will have an expected norm of one: .

The student network is trained using the dataset to accurately predict outputs for novel inputs . The student is a shallow linear network, such that the student’s prediction is simply . To learn its parameters, the student network will attempt to minimize the mean squared error on the training samples using gradient descent. The training error is

| (2) |

and full-batch continuous-time gradient descent on this error function (a good approximation to discrete time batch gradient descent with small step size) yields the dynamical equations:

| (3) |

where is a time constant inversely proportional to the learning rate. Our primary goal is to understand the evolving generalization performance of the network over the course of training. That is, we wish to understand the generalization error which is an average error on a new, unseen example:

| (4) |

as training proceeds. Here the average is over potential inputs and labels . The generalization error will be self-averaging meaning that the performance of all random instantiations of the data and model should be the same when the data is sufficiently high dimensional: .

2.1. Exact solutions in the high-dimensional limit

How does generalization performance evolve over the course of training? The long-term behavior is clear: if training is run for a long time and initialized with zero weights, the weight vector will converge to the subspace minimizing the training error (2), solving the least-squares regression problem,

| (5) |

where denotes the Moore–Penrose pseudoinverse. The error achieved by the pseudoinverse, along with more general dynamical results for even nonlinear students and teachers, have long been known (Krogh and Hertz, 1992, LeCun et al., 1991, Seung et al., 1992). Here we explicitly study the time course with which parameters are learned, and pursue connections to phenomena in modern deep learning.

First, we decompose the input data using the singular value decomposition (with , and ). The input correlation matrix is then

| (6) |

Next we write the input–output correlation matrix as

| (7) |

where the row vector will be called an alignment vector since it is related to the alignment between the input–output correlations and the input correlations.

Returning to the dynamics in (3), we make a change of variables to instead track the vector where . Applying (7) yields:

| (8) |

To make sense of the preceding equation we compute using the fact that

| (9) |

where we define , and under the assumption of white Gaussian noise, has i.i.d. elements drawn from a Gaussian with variance . The form of implies , so we can rewrite (8) as

| (10) |

The learning speed of each mode is independent of the others and depends only on the eigenvalue of the mode in question. As we will see, in the case of deep learning, there will be coupling between these modes. However in shallow neural networks, such coupling does not occur and we can solve directly for the dynamics of these modes. The error in each component of is simply

| (11) |

It follows from the definition of that the generalization error as a function of training time is

| (12) |

| (13) |

where the second equality follows from the assumption that the teacher weights and initial student weights are drawn i.i.d. from Gaussian distributions with standard deviation and respectively. By denoting the spectrum of data covariance eigenvalues as , the generalization error dynamics can be written as:

| (14) |

where we have normalized by to set the scale and defined the initialization noise ratio .

The generalization error expression contains two time-dependent terms. The first term exponentially decays to zero, and encodes the distance between the weight initialization and the final weights to be learned. The second term begins at zero and exponentially approaches its asymptote of . This term corresponds to overfitting the noise present in the particular batch of samples. We note two important points: first, eigenvalues which are exactly zero () correspond to directions with no learning dynamics (the right hand side of Eq. (10) is exactly zero) so that the parameters will remain at indefinitely. These directions form a frozen subspace in which no learning occurs. While in the original basis all weights typically change during learning, rotation into the principal component directions of the input data reveals these exactly frozen directions. Hence, if there are zero eigenvalues, weight initializations can have a lasting impact on generalization performance even after arbitrarily long training. Second, smaller eigenvalues lead to the most serious over-fitting due to the factor in the second term of the generalization error expression. The origin of this phenomena is that noise projected onto these smaller modes cause a large increase in the network weights (see (11)). Hence a large eigengap between zero and the smallest nonzero eigenvalue can naturally protect against overfitting. Moreover, smaller eigenvalues are also the slowest to learn, suggesting that early stopping can be an effective strategy, as we demonstrate in more detail later. Finally, the expression provides insight into the time required for convergence of the training dynamics. Non-zero but small eigenvalues of the sample covariance lead to very slow dynamics, so that it will take on the order of for gradient descent to minimize the training error.

The generalization dynamics (13) reveal the critical role played by the eigenvalue spectrum of the sample input covariance matrix. If there are many small eigenvalues generalization performance will be poor due to the growth in the norm of the student weights.

In many interesting cases, the distribution of the eigenspectrum of the sample covariance matrix is known in the high-dimensional limit where the input dimension and number of examples jointly go to infinity, while their measurement density remains finite. In particular, in the setting in which the inputs are drawn iid from a gaussian with zero mean and variance , the eigenvalue distribution of approaches the Marchenko–Pastur distribution (LeCun et al., 1991, Marchenko and Pastur, 1967) in the high dimensional limit:

| (15) |

for or , and is zero elsewhere. Here the edges of the distribution take the values and hence depend on the number of examples relative to the input dimension. Fig. 2A depicts this eigenvalue density for three different values of the measurement density . In the undersampled regime when , there are fewer examples than input dimensions and many eigenvalues are exactly zero, yielding the delta function at the origin. This corresponds to a regime where data is scarce relative to the size of the model. In the critically sampled regime , there are exactly as many parameters as examples. Here the distribution diverges near the origin, with increasing probability of extremely small eigenvalues. This situation yields catastrophic overtraining. Finally in the oversampled regime when , there are more examples than input dimensions, yielding the traditional asymptotic regime where data is abundant. The eigenvalue distribution in this case is shifted away from the origin.

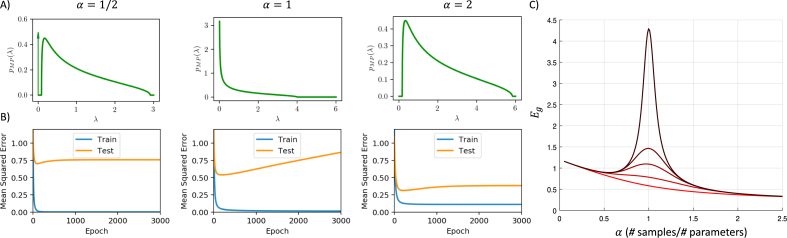

Fig. 2.

The Marchenko–Pastur distribution and high-dimensional learning dynamics. (A) Different ratios of number training samples () to network parameters () () yield different eigenvalue densities in the input correlation matrix. For large , this density is described by the MP distribution (15), which consists of a ‘bulk’ lying between , and, when , an additional delta function spike at zero. When there are fewer samples than parameters (, left column), some fraction of eigenvalues are exactly zero (delta-function arrow at origin), and the rest are appreciably greater than zero. When the number of samples is on the order of the parameters (, center column), the distribution diverges near the origin and there are many nonzero but arbitrarily small eigenvalues. When there are more samples than parameters (, right column), the smallest eigenvalues are appreciably greater than zero. (B) Dynamics of learning. From (13), the generalization error is harmed most by small eigenvalues; and these are the slowest to be learned. Hence for and , the gap in the spectrum near zero protects the dynamics from overtraining substantially (eigenvalues which are exactly zero for are never learned, and hence contribute a finite error but no overtraining). For , there are arbitrarily small eigenvalues, and overtraining is substantial. (C) Plot of generalization error versus for several training times, revealing a clear spike near . Other parameters: . As the colors vary from red to black the training time increases . (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Fig. 2B shows the generalization dynamics resulting from substituting (15) into (14) in the under-, critically- and over-sampled regimes. While all three exhibit overfitting, this is substantially worse at the intermediate point . Fig. 2C systematically traces out generalization performance as a function of at several training times, showing a clear divergence at . Hence overtraining can lead to complete failure of generalization performance when the measurement density is close to . The generalization error prediction (14) is also validated in Fig. 3 where we compare theory with simulations.

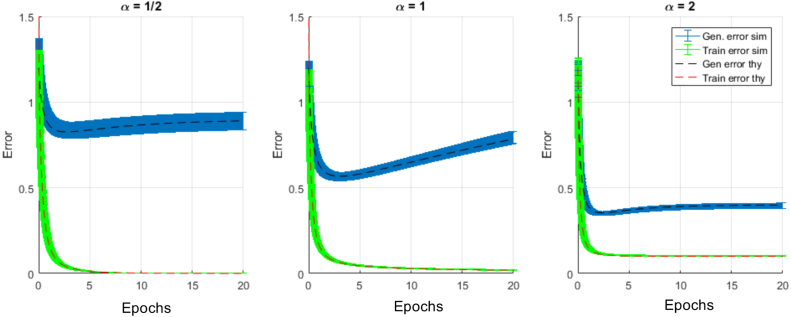

Fig. 3.

Generalization and training error dynamics compared with simulations. Here we demonstrate that our theoretical predictions for generalization and training error dynamics at different measurement densities (, and ) match with simulations. The simulations for generalization (blue) and training (green) errors have a width of standard deviations generated from 20 trials using , and Gaussian input, noise, and parameters with , and . The simulations show excellent agreement with our theoretical predictions for generalization and training error provided in (14), (26). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Remarkably, even this simple setting of a linear student and teacher yields generalization dynamics with complex overtraining phenomena that depend on the parameters of the dataset. In the following sections we explore aspects of these solutions in greater detail, and show that early stopping provides an effective remedy to combat overtraining.

2.2. Effectiveness of early stopping

The generalization curves in Fig. 2B improve for a period of time before beginning to worsen and converge to the performance of . Thus, there will be an optimal stopping time at which simply ending training early would yield improved generalization compared to training for longer times. The intuitive explanation for this is that by limiting time we are effectively regularizing the parameters being learned. This early stopping strategy is widely used in practice, and has been studied theoretically (Amari et al., 1996, Amari et al., 1995, Baldi and Hornik, 1995, Biehl et al., 2009, Chauvin, 1990, Dodier, 1995, Yao et al., 2007). To solve for the optimal stopping time numerically, we can differentiate (14) with respect to and set the result equal to zero to solve for . To gain qualitative insights into this problem, we solve for the stopping time that minimizes the error of each mode, yielding:

| (16) |

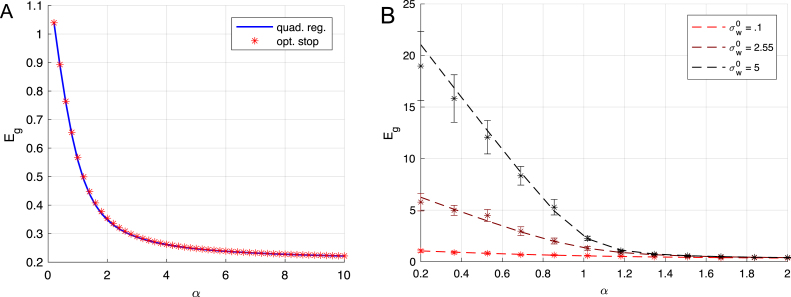

where we assume for simplicity . This qualitative scaling is verified in Fig. 4A. The intuition behind the logarithmic growth of optimal stopping time with SNR is that high quality data requires less regularization. However optimal stopping time does not achieve optimal stopping on every mode if there is a spread of eigenvalues in the input covariance, and to accurately select the optimal stopping time more generally, we must select to minimize Eq. (14), as done numerically in Fig. 4B. This reveals a dependence on measurement density, with longest training around where test error peaks.

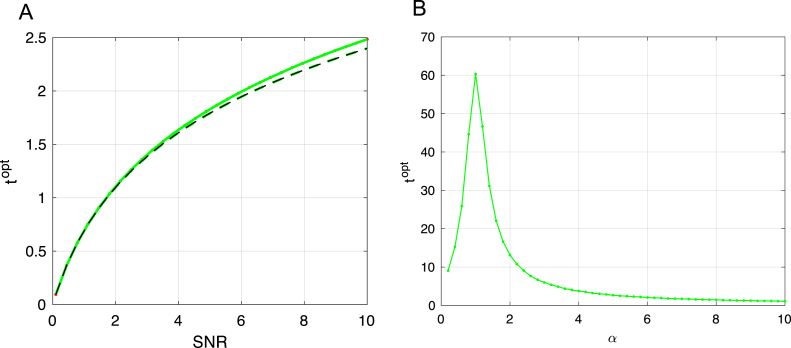

Fig. 4.

Impact of data quality and dimensionality on optimal stopping time. In (A) we plot the impact of SNR on the optimal stopping time with low measurement density , and compare this to the predictions of (16) with because the non-zero values of the MP distribution are highly peaked around this value at low measurement density. In (B) we plot the impact of measurement density on the optimal stopping time in the low noise (SNR 100) limit, where longer training is required near because the high quality of the data makes it beneficial to learn weights even in the small eigenvalue directions. In both plots green curves are optimal stopping time numerical predictions computed via gradient descent on using (14). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

2.3. L2 regularization

Another approach is the use of L2 regularization (also called weight decay), because the divergence of generalization error in the critical regime is due to divergence in the network weights. Here we compare the performance of early stopping of gradient descent to the performance of L2 regularization, which corresponds to solving the optimization problem:

| (17) |

This regularization yields a generalization error (Advani & Ganguli, 2016b) of the form:

| (18) |

Differentiating the above expression with respect to , one finds that the optimal performance of this algorithm occurs when the regularization strength is tuned to be inversely proportional to the signal-to-noise ratio: . In fact, under the assumption of noise, parameter, and input distributions drawn i.i.d. Gaussian, no algorithm can out-perform optimal L2 regularization in terms of generalization error performance, as shown in Advani and Ganguli (2016a) and Advani and Ganguli (2016c). Here we ask how close the performance of early stopping comes to this best-possible performance. In fact, if we substitute (16) for each mode into the expression for the time dependent generalization error (that is, if we could early-stop each mode individually), we obtain the same generalization performance as optimal L2 regularization, hinting at a strong relationship between the two methods. However, as mentioned above, early stopping generally performs suboptimally. We compare the two algorithms in Fig. 5A. There is a very close match between their generalization performances: here the relative error between the two is under 3 percent and is the largest around . This near-optimality of early stopping disappears when the initialization strengths of the network weights are large as shown in 5B. Hence early stopping can be a highly effective remedy against overtraining when the initial weights are small.

Fig. 5.

Optimal stopping performance. In (A) we plot the generalization error from early stopping (red stars) versus optimal quadratically regularized regression (blue line). In this example there is no more than 3 percent relative error between the two, which peaks near when the spectrum of non-zero eigenvalues is broader. In (B) we plot generalization error vs. for different initial weight norms. In the limited data regime, small initial weights are crucial for good generalization. In both plots, we have fixed SNR 5 and which implies that even at large measurement density the generalization error asymptotes to a non-zero value due to noise .

2.4. Weight norm growth with SNR

Given that longer training times provide the opportunity for more weight updates, one might also expect that the norm of the weights at the optimal early stopping time would vary systematically with SNR. Here we show that this intuition is correct. Assuming zero initial student weights, the average norm of the optimal quadratic regularized weights (derived in Appendix B) increases with SNR as:

| (19) |

The intuition behind this equation is that data with a lower requires greater regularization which leads to a lower norm in the learned weights. The norm of the learned weights interpolates between as and as . Thus the signal-to-noise ratio of the data plays a pivotal role in determining the norm of the learned weights using optimal regularization. In this setting, a well chosen algorithm leads to larger weights in the network when the data is easier to predict.3

2.5. Impact of initial weight size on generalization performance

In the preceding sections, we have shown the effectiveness of early stopping provided that the student is initialized with zero weights (). The early stopping strategy leaves weights closer to their initial values, and hence will only serve as effective regularization when the initial weights are small. To elucidate the impact of weight initialization on generalization error, we again leverage the similar performance of early stopping and L2 regularization when . If the student weights are not initially zero but instead have variance , the optimal L2 regularization strength is . This yields the generalization error,

| (20) |

In the limit of high , there are three qualitatively different behaviors for the dependence of generalization performance on the initial weight values in the under-sampled, equally sampled, and oversampled regimes. There is a linear dependence on initial weight size when , a square root dependence for , and no dependence when ,

Hence as more data becomes available, the impact of the weight initialization decreases. Our dynamical solutions reveal the source of this effect. When there are few examples relative to the input dimension, there are many directions in weight space that do not change because no examples lie in those directions, such that the component of weight initialization in these directions will remain indefinitely. It is therefore critical to initialize with small weights to maximize generalization performance in the overparametrized regime, as shown in Fig. 5B. Even when the number of examples is matched to the size of the model (), large-norm weight initializations remain detrimental for the optimal early stopping strategy because training must terminate before the influence of the initial weights has fully decayed. Hence, based on this simple linear analysis, excellent generalization performance in large models can be obtained through the two-part strategy of early stopping and initialization with small-norm weights. Remarkably, this strategy nearly matches the performance of the best possible Bayes-optimal learning strategy (optimal L2 regularization) for the setting we consider, and remains effective in the high-dimensional regime where the number of samples can be smaller than the size of the model.

2.6. Training error dynamics

Next we turn to the dynamics of the training error during learning. As we will show, these dynamics depend strongly on the small eigenvalues of the data covariance and weakly on the SNR. To derive the training error dynamics, we begin with the form:

| (21) |

Substituting the singular value decomposition of the data , and yields:

| (22) |

In the oversampled setting (), we define , and if we let so that in both cases . Rearranging the training error yields:

| (23) |

Here . From (11), we derived that the learning dynamics in linear networks follow:

| (24) |

Thus, we may write the training error as a function of time as:

| (25) |

where and the second sum in the expression above equals zero if . Note that the training error is strictly decaying with time. If , the training error will approach zero as each data point is memorized. If we average the training error dynamics over the noise, parameter, and data distributions, we find:

| (26) |

See Fig. 3 for demonstration that the theoretical prediction above matches the results of simulations.

This formula for training error dynamics may help explain recent empirical findings (e.g. Arpit et al., 2017, Zhang et al., 2017) that neural networks do not find it difficult to memorize noisy labels and require only slightly longer training times to do so. If we consider the amount of training time before reaches some pre-determined small value, this will increase slightly as noise is added to the output labels. If we consider the undersampled setting in which noise can be memorized , then at very late times () the training error will decay exponentially, so that to a good approximation:

| (27) |

It follows that the time required to reach a training error proportional to scales as:

| (28) |

Thus, increasing the variance in the output noise should lead to only a logarithmic increase in the time required to memorize a dataset.

3. Generalization dynamics in deep linear neural networks

The results in preceding sections have focused on the simplest case of a shallow neural network. We now turn to the question of whether similar qualitative properties occur in a class of simple deep networks. We consider a deep linear student network (Fig. 6A) with weights forming a -layer linear network. In response to an input , the student produces a scalar prediction following the rule . We initially make no assumptions about the size of the layers, except for the final layer which produces a single scalar output. Continuous time gradient descent on the mean squared training error yields the dynamics,

| (29) |

These equations involve coupling between weights at different layers of the network, and nonlinear interactions between the weights. While they compute a simple input–output map, deep linear networks retain several important features of nonlinear networks: most notably, the learning problem is nonconvex (Baldi and Hornik, 1989, Kawaguchi, 2016), yielding nonlinear dynamical trajectories (Arora et al., 2018, Du et al., 2018, Fukumizu, 1998, Ji and Telgarsky, 2019, Saxe et al., 2014).

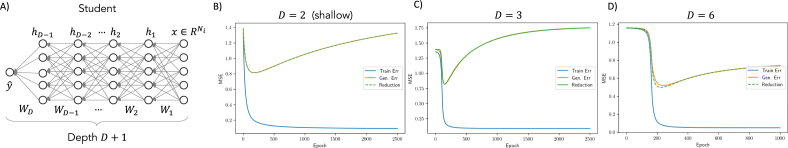

Fig. 6.

Reduction of deep linear network dynamics. (A) The student network is a deep linear network of depth . (B-D) Comparisons of simulations of the full generalization dynamics for networks initialized with small weights (gold) to simulations of the reduced dynamics (green) for different depths and parameter settings. Training error in blue. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

We wish to find a simplified expression that provides a good description of these dynamics starting from small random weights. Intuitively, the full gradient dynamics contain repulsion forces that attempt to orthogonalize the hidden units, such that no two represent the same feature of the input. When initializing with small random weights, however, hidden units will typically already be nearly orthogonal—and hence these orthogonalizing repulsive forces can be safely neglected as in Saxe et al. (2014). We begin by using an SVD-based change of variables to reduce coupling in these equations. We then will study the dynamics assuming that hidden units are initially fully decoupled—since this remains a good approximation for the full dynamics when starting from small random weights, as verified through simulation.

In particular, based on the observation that gradient descent dynamics in deep linear networks initialized with small weights extract low rank structure (Saxe et al., 2014), we make the following change of variables: , where is a row vector encoding the time-varying overlap with each principle axis in the input (recall ); and where the vectors are arbitrary unit norm vectors () specifying freedom in the internal representation of the network, is defined to be one, and is a scalar which encodes the change in representation over time. With these definitions, where we have defined the scalar . We show in Appendix A that solutions which start in this form remain in this form, and yield the following exact reduction of the dynamics:

| (30) |

| (31) |

These equations constitute a considerable simplification of the dynamics. Note that if there are hidden units in each layer, then full gradient descent in a depth network involves parameters. The above reduction requires only parameters, regardless of depth. Fig. 6 compares the predicted generalization dynamics from these reduced dynamics to simulations of full gradient descent for networks starting with small random weights and of different depths, confirming that the reduction provides an accurate picture of the full dynamics when starting from small random weights. A line of work has shown that the full gradient descent dynamics in fact converge to the low rank structure implied by these initialization conditions, in a wide range of settings beyond the student–teacher setting we consider here (Arora et al., 2018, Du et al., 2018, Ji and Telgarsky, 2019). Our result shows that, once attracted to this low rank solution, the generalization dynamics take a particularly simple form that reveals links to the shallow case.

In the reduction, all modes are coupled together through the global scalar . Comparing (31) with (8), this scalar premultiplies the shallow dynamics, yielding a characteristic slow down early in training when both and are small (see initial plateaus in Fig. 6C-D). This behavior is a hallmark of deep dynamics initialized close to the saddle point where all weights are zero, and has been found for training error dynamics as well (Goodfellow et al., 2015, Saxe et al., 2014). Remarkably, in the reduction the entire effect of depth is compressed into the scalar which sets a global speed of the dynamics shared by all modes. Otherwise, each mode’s dynamics is analogous to the shallow case and driven primarily by the size of its associated eigenvalue , with smaller eigenvalues being learned later. This suggests that optimal stopping will again be effective in deep networks, with comparable results to the shallow case. And as in the shallow case, eigenvalues that are zero make no progress, yielding a frozen subspace in which no learning occurs. Hence again, large initial weight norms will harm generalization error in the limited data regime in deep linear networks.

Thus for deep linear networks producing scalar outputs, our reduction predicts behavior qualitatively like their shallow counterparts in terms of their early stopping error and sensitivity to weight initializations. However, depth introduces a potential tension between training speed and generalization performance: small initial weights dramatically slow learning in deep networks, but large initial weights can harm generalization in the high-dimensional regime. This may underlie observations in full nonlinear networks that certain initializations can converge quickly but yield poorer generalization performance (e.g., Mishkin & Matas, 2016).

4. Generalization dynamics and optimal size in neural nets with frozen first layer weights

In practical settings, nonlinear activations are essential since they allow networks to express complex functions. In this section we explore the degree to which the qualitative intuitions obtained through studying linear networks transfer to the nonlinear setting by introducing a fixed, random first layer with non-linear activations in the hidden layer. Nonlinearity introduces the crucial question of model complexity: is there an optimal size of the student, given the complexity of the problem and the number of training samples available?

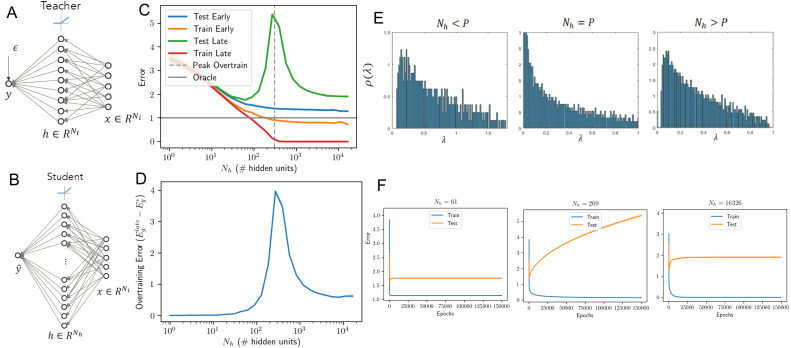

We begin by simulating the dynamics in a student–teacher scenario (Engel and Van Den Broeck, 2001, Saad and Solla, 1995, Seung et al., 1992, Watkin et al., 1993, Wei and Amari, 2008, Wei et al., 2008), as depicted in Fig. 7A-B. The teacher is now a single hidden layer neural network with rectified linear unit (ReLU) activation functions and hidden neurons. We draw the parameters of this network randomly. To account for the compression in variance of the ReLU nonlinearity, we scale the variance of the input-to-hidden weights by a factor (He et al., 2016, Saxe et al., 2014). We generate a dataset as before by drawing random Gaussian inputs, obtaining the teacher’s output , and adding noise. The student network, itself a single hidden layer ReLU network with hidden units, is then trained to minimize the MSE on this dataset. The procedure is to train only the second layer weights of the student network, leaving the first layer weights randomly drawn from the same distribution as the teacher. This setting most closely recreates the setting of the shallow linear case, as only the hidden-to-output weights of the student change during learning. Due to the nonlinearity, analytical results are challenging so we instead investigate the dynamics through simulations. For the simulations in Fig. 7, we fixed the teacher parameters to , and and the number of training samples to , and then trained student models at a set of hidden layer sizes ranging from smaller than the number of samples to larger. We average results at each size over 50 random seeds. Fig. 7C-D shows average errors as a function of the number of neurons in the student. Additionally, while we can only estimate the generalization error through cross validation in the setting without a teacher network, we find that the qualitative trends observed in the teacher–student framework hold when we instead use the MNIST dataset in Fig. 8.

Fig. 7.

Learning from a nonlinear teacher. (A) The teacher network ( ReLUs). (B) The student network ( ReLUs, but only the output weights are trained). (C) Effect of model complexity. Optimal early stopping errors as a function of number of hidden units for the case and training samples. Shaded regions show standard error of the mean over 50 random seeds. (D) Overtraining peaks at an intermediate level of complexity near the number of training samples: when the number of free parameters in the student network equals the number of samples (300). (E) The eigenspectrum of the hidden layer of a random non-linear neural network with samples and an dimensional input space. We consider three cases and find a similar eigenvalue density to a rescaled Marchenko–Pastur distribution when we concentrate only on the small eigenvalues and ignore a secondary cluster of eigenvalues farther from the origin. Left: Fewer hidden nodes than samples ( hidden units) leads to a gap near the origin and no zero eigenvalues. Center: An equal number of hidden nodes and samples () leads to no gap near the origin so that eigenvalues become more probable as we approach the origin. Right: More hidden nodes than samples () leads to a delta function spike of probability 0.5 at the origin with a gap to the next eigenvalue. (F) Average training dynamics for several models illustrating overtraining at intermediate levels of complexity. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

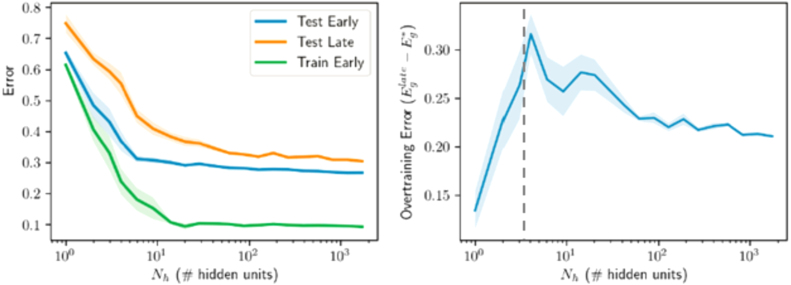

Fig. 8.

Training directly on data: two layer ReLU network trained on binary MNIST classification of 7s vs. 9s with 1024 training samples. First layer weights of student are random and fixed. The less pronounced peak is likely due to computational restrictions which limited training time and maximum model size (see text).

Our goal is to understand whether the qualitative patterns of overtraining obtained from the linear analysis hold for this nonlinear case, and we find the following empirical observations:

Overtraining occurs at intermediate model complexity. There is a peak in overtraining near where the number of samples in the dataset equals the number of hidden units/free parameters, with large models overtraining less than intermediate-sized models (Fig. 7C-D). Hence qualitatively, the network behaves akin to the linear case, with a peak in overtraining when the number of trainable parameters is matched to the number of training samples. To understand this behavior, we computed the eigenspectrum of the hidden layer activity in Fig. 7E. Despite the nonlinearity, we see qualitatively similar behavior to the linear case: the small eigenvalues approximately follow the MarchenkoPastur distribution (c.f. Fig. 2). The asymptotic distribution of the eigenvalues of this covariance matrix has been derived in Pennington and Worah (2017), and a similar observation about the small eigenvalues computed from the hidden layer was also used in a slightly different context to study training error in Pennington and Bahri (2017). We provide additional simulations in Fig. 14 showing this overtraining peak for different SNRs, which demonstrate that noisy data will amplify overtraining causing it to grow approximately proportionally to the inverse SNR as should be expected from (13).

Fig. 14.

Memorization, generalization, and . Nonlinear ReLU networks with fixed first layer (same setting as Fig. 7) are trained on target labels of varying . (A-B) Nearly random target labels (). In the high dimensional regime in which the model size outstrips the number of examples (here , indicated by vertical dotted line), all networks easily attain zero training error when trained long enough, thereby showing the ability to memorize an arbitrary dataset. (C-D) These exact same model sizes, however, generalize well when applied to data with nearly noise-free labels (). In fact in the low noise regime, even early stopping becomes unnecessary for large enough model sizes. The dynamics of gradient descent naturally protect generalization performance provided the network is initialized with small weights, because directions with no information have no dynamics. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Larger models are better when early-stopped. Strikingly, we find no evidence of overfitting: larger models are always better in terms of their early stopping test error. Here the teacher network has just 30 hidden neurons and the dataset consists of just 300 examples. Nevertheless, the best student model is the largest one tested, containing 16326 hidden units, or as many hidden neurons as the teacher and more neurons than training samples. This benefit of large models requires early stopping and initialization with small random weights, and hence reflects regularization through limiting the norm of the solution (see extended comments in Section 7). If instead training is continued to much longer times (green curve Fig. 7C), the optimal model size is smaller than the number of samples ( hidden units), consistent with standard intuitions about model complexity. We note that in this setting, although the late test error does not diverge to infinity in large models, it is nevertheless better for smaller models, which may appear to conflict with observations in other recent work (Belkin, Hsu, Ma et al., 2019). As we discuss in Section 6, whether an overparametrized model will generalize better than smaller models in our setting depends on the signal-to-noise ratio of the dataset.

Mathematical decomposition of generalization error

To gain insight into this more complex nonlinear case, we derive a decomposition of the test error into several interpretable components. In the setting of a frozen first layer and trained second layer, the generalization error can be decomposed into three parts: an approximation error, an over-fitting error, and a null-space error. This decomposition helps to explain how varying the number of parameters in a network can improve generalization performance when the number of examples is held fixed. It also provides one interpretation of how the student–teacher framework might apply to more realistic settings where the model is not in the same class as the data. In essence, the best possible neural network acts as an effective teacher, with the label noise capturing the remainder of the transformation that the neural network cannot represent. That is, we find that approximation error behaves very similarly to an external noise from the perspective of the neural network in estimating over-training error. This implies that even in settings when there is no label noise in the problem, there may be an effective noise based on the student network architecture.

We can write a two layer neural network in the form:

| (32) |

In general is the mapping from the input to a hidden representation and can be nonlinear. Here , , and . We leave the specific choice of general, but in a typical two layer network it would have the form: , where the matrix represents fixed input to hidden layer weights.

We then train the network using a set of input–output data for generated from some process which can be deterministic or stochastic. Assuming a MSE loss function the training error has the form:

| (33) |

We define the hidden layer activity matrix and the output matrix .

Assuming initial output weights of zero, the weights that will be learned at late time by the network are:

| (34) |

where we define:

| (35) |

The network weights which minimize the generalization error for the given architecture are simply:

| (36) |

where , and .

However, when training on finite data, the student network weights only grow in directions in which the hidden layer covariance is not null, so it is useful to define to be the weights which minimize generalization error subject to the constraint of being zero in the null space of the empirical hidden layer covariance. If we define the projection onto the space in which the data lies in the hidden layer as , then we can show that:

| (37) |

so that the best possible weights restricted to the subspace parallel to the data are simply the projection of onto this subspace.

We find one can decompose the generalization error into three terms to explain the behavior of late stopping generalization error as:

| (38) |

where we refer to the first term as the overfitting error , the second term as the null-space error , and the third term as the approximation error , and we define an approximation noise matrix:

| (39) |

The approximation error is defined as the minimal generalization error that can be achieved given a particular network architecture, by optimizing over the weights. If the network is too small or simple relative to the rule being learned the approximation error will be large.

The null-space error can be understood as additional approximation error due to the fact that no learning can take place in the null space of the hidden layer activity, and only occurs in this setting when the number of hidden units exceeds the number of examples.

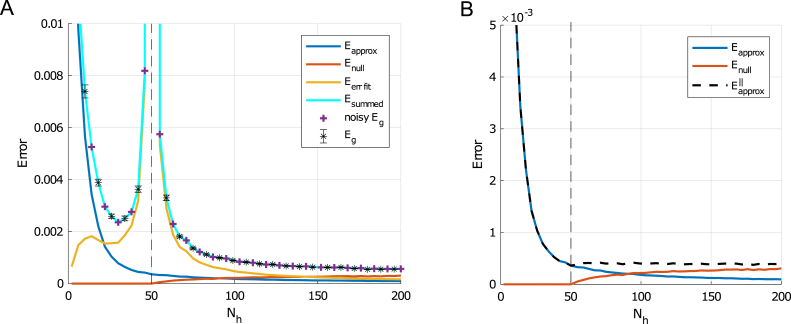

Finally, the over-fitting error is the error due to the fact the data set is finite and depends strongly on the ratio of number of parameters to examples through the eigenvalue decomposition of the empirical hidden covariance matrix. This over-fitting error is highly non-monotonic and can lead to a massive peak in generalization error at the point where the number of examples and free parameters matches. Derivation details on the decomposition calculation may be found in Appendix C. The decomposition is plotted in Fig. 9A and the sum is compared to the generalization error.

Fig. 9.

Two layer network generalization error decomposition: Here we use data generated from a two layer teacher network with ReLU activations, 20 hidden units, and no noise. The student network has fixed random first layer weights and ReLU activations. (A) Shows the approximation error in blue, the null-space error in red, the over-fitting error in gold, and the sum of all three in cyan. Generalization error computed from 5000 new examples are black error bars. Plus sign denotes generalization error with a two layer teacher with weights and Gaussian noise with a variance matched to the sum of approximation and null-space error. The number of examples used in training is (dashed vertical line) and number of trails used to compute error bars is 500. (B) Shows the approximation error (blue) and null space error (red) as well as the sum of the two (dashed black). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Interestingly, if we replace the actual data with new data generated from a model where noise is restricted to the data subspace:

| (40) |

where , we find a good agreement with the predictions of overfitting error and generalization from the linear network calculations (see Fig. 9A ‘noisy ’). Here the variance of the effective noise is due to the approximation error restricted to the data subspace:

| (41) |

This suggests that we can think of the approximation error as label noise which decays as the architecture grows until the number of parameters added exceeds the number of training examples at which point the restricted approximation error stops decreasing and begins to saturate (see Fig. 9B). Further generalization error improvement with wider networks in this context is not due to the networks reduced restricted approximation error, but to the eigenspectrum of the empirical co-variance, since the gap between small and zero eigenvalues grows with increased network width. Thus, the decomposition helps to explain how, even without regularization or early stopping, large neural networks avoid over-fitting and can achieve better generalization error when they have more parameters than examples.

With some additional modifications, it also helps one understand the impact of initialization weights and true label noise on the performance of non-linear networks of different sizes. Eq. (38) gives the generalization error at late times starting with zero initial weights. If instead initial weights are drawn iid with zero mean and variance , then for large networks we can average over this initialization to find:

| (42) |

where we can consider both the second and third terms part of the null-space error because the initialization weights are frozen during training, so that large initialization scales increase the generalization error when . In addition, i.i.d. label noise will appear in the generalization error under the transformation .

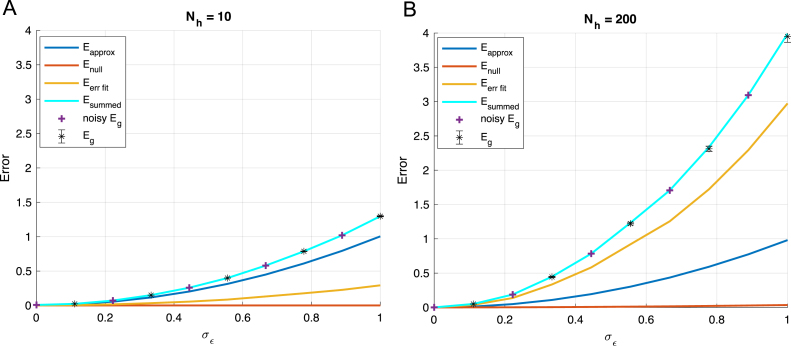

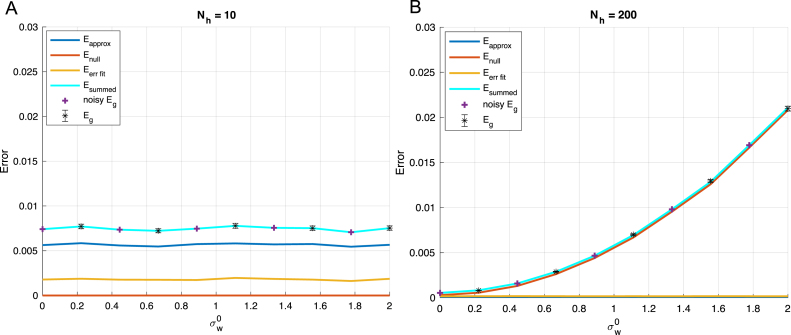

We demonstrate in Fig. 10 how label noise makes the shifts in approximation error due to the network architecture less important so that the over-fitting error and hidden layer covariance spectrum are more relevant in determining generalization error. Thus, this setting is most similar to the single layer network dynamics. We also demonstrate how initial weight scale can impact performance in large over-parameterized networks more than under-parameterized ones in Fig. 11. In this case, the null-space error grows with the variance of initialization and causes the generalization error to increase in the setting.

Fig. 10.

Impact of label noise on performance of wide networks: We plot the generalization error decomposition as in Fig. 9 for (A) an under-parameterized and (B) an over-parameterized network. Because output noise is added to both networks, approximation of the function is difficult even for the larger network because the function it is learning is not deterministic. However, the fitting error is larger in the larger network due to the eigenvalue spectrum of the hidden layer covariance. This is in contrast to our finding that larger networks perform better in the deterministic, low noise setting depicted in Fig. 9. Other parameters: , , , .

Fig. 11.

Impact of initialization on performance of wide networks. We plot the generalization error decomposition as in Fig. 9 for (A) an under-parameterized and (B) an over-parameterized network. We demonstrate that large initializations have a detrimental impact on generalization performance in wide neural networks which are over-parameterized and not on smaller networks. This effect is due to the frozen subspace observed when training with fewer examples than free parameters, and again is in contrast to our finding that larger networks perform better in the low initialization setting depicted in Fig. 9. Other parameters: , , , .

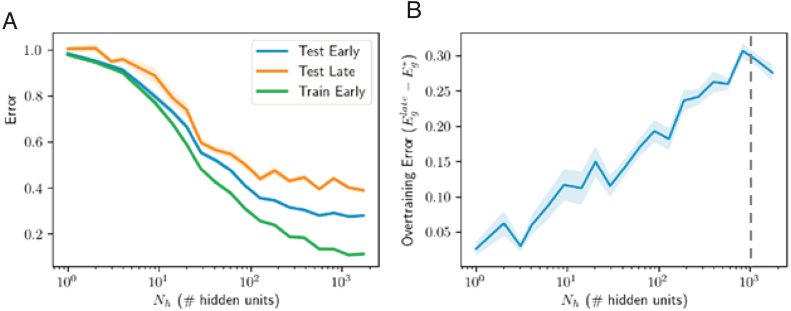

5. Fully trained two-layer network

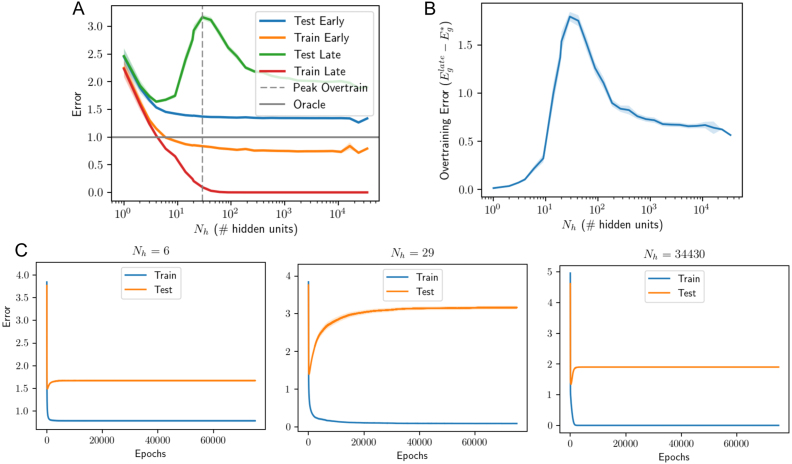

Next, we allowed both layers of the student network to train, as is typically the case in practice. Fig. 12 shows that similar dynamics are obtained in this setting. Overtraining is transient and peaked at intermediate levels of complexity, roughly where the number of free parameters () equal the number of examples . This is the point at which training error hits zero at long training times (red curve, Fig. 12A), consistent with recent results on the error landscape of ReLU networks (Soudry and Hoffer, 2017, Spigler et al., 2018). Regarding model complexity, again we find that massive models relative to the size of the teacher and the number of samples are in fact optimal, if training is terminated correctly and the initial weights are small. In particular, good performance is obtained by a student with 34,430 hidden neurons, or the number of teacher neurons and the number of examples. In contrast, without early stopping, the long-time test error has a unique minimum at a small model complexity of hidden neurons in this instance. Hence as before, early stopping enables successful generalization in very large models, and the qualitative behavior is analogous to the linear case. We caution that the linear analysis can only be expected to hold approximately. As one example of an apparent difference in behavior, we note that the test error curve when the number of parameters equals the number of samples (Fig. 12C middle) diverges to infinity in the linear and fix hidden weights cases (see Fig. 2B middle and Fig. 7F middle), while it appears to asymptote in the two-layer trained case (see Fig. 12C middle). Nevertheless, this asymptote is much larger at the predicted peak (when the number of free parameters and samples are equal) than at other network sizes.

Fig. 12.

Training both layers of nonlinear student. These simulations are of the same setting as that of Fig. 7, but with both layers of the student network trained. The number of hidden units for which the total number of free parameters is equal to the number of samples () is marked with a dashed line and aligns well with the peak in overtraining observed. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

5.1. Real-world datasets: MNIST

Finally, to investigate the degree to which our findings extend to real world datasets, we trained nonlinear networks on a binary digit recognition task using the MNIST dataset (LeCun, Cortes, & Burges, 1998). We trained networks to discriminate between images of 7s and 9s, following the setting of Louart, Liao, and Couillet (2017). Each input consists of a 28 × 28-pixel gray-scale image, flattened into a vector of length . Inputs were scaled to lie between 0 and 1 by dividing by 255 and each element was shifted to have zero mean across the training dataset. The target output was 1 depending on the class of the input, and the loss function remained the mean squared error on the training dataset. We train models using batch gradient descent with a single hidden layer for a fixed first layer and with both layers trained. Fig. 13 shows the resulting train and test errors for different model sizes and as a function of early stopping. Again, all qualitative features appear to hold: overtraining peaks when the number of effective parameters matches the number of samples, and larger models perform better overall. We note that in these experiments, due to computational restrictions, training was continued for only 5000 epochs of batch gradient descent (c.f. k epochs for the other experiments reported in this paper), yielding less pronounced overtraining peaks; and the maximum model size was 1740, making the peak in overtraining less identifiable, particularly for the fixed first layer case. There is additionally no evidence of overfitting, as the largest models outperformed smaller models. Overall, the qualitative findings accord well with those from the student–teacher paradigms, suggesting that our analysis accesses general behavior of generalization dynamics in gradient-trained neural networks.

Fig. 13.

Two layer network trained (without frozen weights) on binary MNIST classification of 7s vs. 9s with 1024 training samples. The qualitative trends identified earlier hold: over-training is a transient phenomenon at intermediate levels of complexity, and large models work well: no over-fitting is observed given optimal early stopping. Note that the over-training is less distinct than before primarily because these experiments were run for only 5000 epochs as opposed to tens of thousands of epochs as in Fig. 7, Fig. 12, due to computational constraints.

6. Double descent, interpolation, memorization, and generalization

In the interpolation regime where training error can be driven to zero, influential work by Belkin, Hsu, Ma et al. (2019) and Belkin, Hsu and Xu (2019) has shown that very large models can achieve better test error than smaller models. We find similar behavior in our Fig. 9, for a setting with little label noise. However, it is important to note that larger models do not always perform better after extensive training in our setting. Fig. 14 shows test error as a function of model size for two SNR levels. While we generically observe a double descent curve in which late test error is non-monotonic in hidden layer size (Belkin, Hsu, Ma et al., 2019), whether a smaller or larger model will achieve lower test error depends on the SNR. In the high label noise regime (Fig. 14A-B), smaller models perform better; while in the low noise regime (Fig. 14C-D), overparametrized models perform better. To understand the source of this difference, we turn to the error decomposition shown in Fig. 10: as noise increases, approximation error increases in small and large models because there is no way to exactly implement the non-deterministic teacher. However, the larger network has a larger error contribution from the error-fitting term, as shown in Fig. 10B (gold). Hence in the high noise regime, overparametrized models do not generalize as well as smaller models after extensive training.

To generalize well, a model must identify the underlying rule behind a dataset rather than simply memorizing each training example in its particulars. An empirical approach to test for memorization is to see if a deep neural network can fit a training set with randomly scrambled labels rather than the true labels (Zhang et al., 2017). If a network can fit arbitrary random labels, it would seem to be able to memorize any arbitrary training dataset, and therefore, have excessive capacity and poor generalization performance. This approach has been taken by Zhang et al. (2017), and extended by Arpit et al. (2017). Their main empirical findings conflict with this intuitive story: while large deep networks do readily achieve zero training error on a dataset that has been randomly labeled, they nevertheless generalize well when trained on the true labels, even without any regularization or early stopping. Our results provide a straightforward explanation of these phenomena in terms of the signal-to-noise ratio of the dataset and the high dimensional dynamics of gradient descent.

In our student–teacher setting, a randomly-labeled training set corresponds to a situation where there is no rule linking input to output, and is realized in the regime where . As shown in Fig. 14A (red curve), nonlinear networks with more parameters than samples can easily attain zero training error on this pure-noise dataset. However, they do not generalize well after substantial training, or even after optimal early stopping, because the model has fit pure noise. When the exact same networks are instead trained on a high dataset (Fig. 14C), they generalize extremely well, nearly saturating the oracle lower bound. Moreover, in the high- regime, the performance gain from early stopping nearly disappears for larger network sizes. To take a specific example, the largest network size considered () can easily fit noise labels in the low- regime, but attains the best generalization performance of all models in the high- regime, even without any early stopping. This behavior arises due to two important phenomena that arise in the high-dimensional setting: First, in overcomplete networks, gradient descent dynamics do not move in the subspace where no data lies. This frozen subspace implicitly regularizes the complexity of the network when networks are initialized with small norm weights. Regardless of network size, only a dimensional subspace remains active in the learning dynamics. Second, as overcompleteness increases, the eigengap (smallest nonzero eigenvalue) of the hidden layer correlation matrix also increases due to the nature of the Marchenko–Pastur distribution, which protects against overtraining even at long training times. This increasing eigengap is a fundamental property of the high dimensional setting. Thus large neural networks can generalize well even without early stopping, provided that the rule to be learned in the dataset is prominent, the network is large, and the network is initialized with small weights. We provide more details on this finding through the lens of Rademacher complexity in the following section.

7. Rademacher complexity and avoiding overfitting in non-linear neural networks

We want to understand why traditional Rademacher complexity bounds do not qualitatively match the overtraining picture observed in neural networks. In particular, they do not seem to square with the excellent generalization performance that can be achieved by large neural networks. To this end, we sketch how the dynamics of gradient descent learning may be included in the Rademacher complexity, yielding a bound which is more intuitively useful for understanding overtraining in neural networks. One particularly nice property of the bound we derive is that it shows how generalization can occur without early stopping: the Rademacher complexity can be expected to decrease with the number of hidden units for even when training is continued for long times.

As noted by Zhang et al. (2017), on the surface the excellent generalization ability of large networks seems to contradict the intuition from traditional measures such as Rademacher complexity and VC dimension. We find that a straightforward application of these measures yields not only loose bounds, but even the opposite qualitative behavior from what is observed in simulations.

A simple illustration of this can be seen in Fig. 7C where the task is fitting a random non-linear teacher. Here the size of the student network is increased well beyond the number of samples in the dataset and the number of hidden units in the teacher network, yet the generalization performance of early stopping continues to improve. The Rademacher complexity and VC dimension of the network is growing with the number of hidden units due to the fact that larger neural networks can more easily fit randomly labeled data, as discussed in Zhang et al. (2017). If we consider training a large non-linear neural network with a random first layer, the empirical Rademacher complexity of a classification problem (see e.g. Mohri, Rostamizadeh, & Talwalkar, 2012) is

| (43) |

where is the class of functions the network or classifier can have (mapping input to an output), and are randomly chosen labels. The Rademacher complexity measures the ability of a network to learn random labels, and leads to a bound on the gap between the probability of correct classification of a new example or training example, denoted as generalization error and training error respectively below. The bound states that with probability at least ,

| (44) |

As we increase the width of a large random network, we see empirically that the number of points which may be fit exactly grows with the size of the network. Thus the bound above becomes trivial when since in this limit and any random classification can be realized because solving for the weights in the final layer requires solving for unknowns with random constraint equations. This argument shows that the bound is loose, but this is to be expected from a worst-case analysis. More surprising is the difference in qualitative behavior: larger networks in fact do better than intermediate size networks at avoiding overtraining in our simulations.

However, we will see that a more careful bounding of the Rademacher complexity which uses the learning dynamics of wide networks does allow us to capture the overtraining behavior we observe in practice. For a rectified linear network with one hidden layer, a bound on the Rademacher complexity (Bartlett & Mendelson, 2002) is

| (45) |

where is the norm of the output weights, is the maximum L2 norm of the input weights to any hidden neuron, and is the maximum norm of the input data. From this it is clear that, no matter how many hidden neurons there are, the hypothesis complexity can be controlled by reducing the weight norm of the second layer.

Both early stopping and L2 regularization are capable of bounding the second layer weights and thus reducing overfitting, as we observe in simulations. In the case of early stopping, the dynamics scan through successively more expressive models with larger norms, and early stopping is used to pick an appropriate complexity.

Yet remarkably, even in the absence of early stopping we find that our analytic solutions provide a bound on the second layer weights in terms of the eigenspectrum of the hidden layer. The essential intuition is that, first, when a model is massively overcomplete, there is a large frozen subspace in which no learning occurs because no training samples lie in these directions; and second, the eigengap between the minimum eigenvalue and zero increases, protecting against overtraining and limiting the overall growth of the weights. To derive new bounds on the Rademacher complexity that account for these facts, we consider analytically how the weight norm depends on training time in our formulation. In the non-linear, fixed random first layer setting we are considering a model where

| (46) |

We will abuse notation because of the similarity with the single layer case, letting . If we then diagonalize the covariance of the hidden layer s.t. and solve for the dynamics of the weights, we find:

| (47) |

Here we define the vectors: , , and , and we denote the eigenvalues of the hidden layer covariance as . Thus, the average squared norm of the output layer weights is

| (48) |

In the case of a linear shallow network, without a hidden layer, the expression for the growth of the norm of weights is very similar, we simply substitute , and in this case the distribution of eigenvalues approach the Marchenko–Pastur distribution in the high dimensional limit.

We now make the assumption that the initial weights are zero (), since this is the setting which will minimize the error and our large scale simulations are close to this limit (note that in deep networks we cannot set the initial weights exactly to zero because it would freeze the learning dynamics). It follows that will monotonically increase as a function of time implying the Rademacher complexity bound is increasing with time.

The smallest non-zero eigenvalue constrains the maximum size that the norm of the weights can achieve, and zero eigenvalues result in no dynamics and thus do not impact the norm of the weights. Thus, even without early stopping, we can bound the norm of the weights in the hidden layer by an upper bound on the late-time behavior of (48) in the large limit:

| (49) |

The minimum over and arises because there will be no impact on learning from eigenvalues of strength zero, corresponding to the frozen subspace. The presence of the minimum eigenvalue in the denominator indicates that, as the eigengap grows, the bound will improve. Substituting this into (45) yields a bound on the Rademacher complexity in terms of the eigenspectrum of the hidden layer:

| (50) |

The above bound qualitatively matches our simulations with a non-linear network and fixed first layer weights, which show that the gap between training and generalization error at late stopping times drops as we increase the number of network parameters beyond the number of examples (see Fig. 7C-D). In the equations above, as we increase above , remains fixed at , but we do increase the minimum non-zero eigenvalue of the hidden layer covariance matrix (see e.g. Fig. 7E). This gap in the eigenspectrum can thus reduce the Rademacher complexity by bounding attainable network weights in massively overcomplete networks.

8. Discussion

Our findings show that the dynamics of gradient descent learning in the high-dimensional regime conspire to yield acceptable generalization performance in spite of large model sizes. Making networks very large, even when they have more free parameters than the number of samples in a dataset, can reduce overtraining because many of the directions of the network have zero gradient and thus are never learned. This frozen subspace protects against overtraining regardless of whether learning is stopped early. The worst setting for overtraining is when the network width matches the number of samples in shallow networks and when the number of parameters matches the number of samples in nonlinear random networks. Thus, our analysis of the learning dynamics helps to explain why overtraining is not a severe problem in very large networks.

Additionally, we have shown that making a non-linear two layer network very large can continuously improve generalization performance both when the first layer is random and even when it is trained, despite the high Rademacher complexity of a deep network (Zhang et al., 2017). We demonstrate this effect both on learning from a random teacher and on an MNIST classification task.

Our findings result from random matrix theory predictions of a greater abundance of small eigenvalues when the number of samples matches the number of parameters. In the under- or over-complete regimes, learning is well-behaved due to the gap between the origin and the smallest non-zero eigenvalue of the input correlation matrix. In our analysis, we have employed a simplified setting with Gaussian assumptions on the inputs, true parameters, and noise. However, the Gaussian assumption on parameters and noise is not essential. All that is required is that they are sampled i.i.d. with a finite mean and variance. In terms of other input distributions, we have shown empirically that our results apply for the MNIST dataset, but more broadly, the Marchenko–Pastur distribution is a universal limit for the eigenvalues of random matrices containing i.i.d. elements drawn from non-Gaussian distributions with sub-exponential tails (see e.g. Pillai, Yin, et al., 2014). Thus, the predictions are theoretically justified when the noise, parameters, and input are selected i.i.d. from non-heavy tailed distributions. We note that the exact extent of this universality is a current topic of research, and in particular, input distributions with strong correlations can deviate substantially.

Finally, our analysis points to several factors under the control of practitioners that impact generalization performance. To make recommendations for practice, it is necessary to understand the regime in which high-performing deep networks typically operate. We suggest that the relevant regime is the high-dimensional, high- setting (). In this regime, very large networks have dramatic advantages: they generalize better than their smaller counterparts, even without any regularization or early stopping (c.f. Fig. 14). Consider, for instance, our results on the MNIST dataset presented in Fig. 13. Here, while early stopping could improve performance, even at long training times the best model was the largest tested. For this setting, the practical message emerging from our theory is that larger models have no downside from a generalization perspective, provided they are initialized with small initial weights. Our results point to a strong impact of initialization on generalization error in the limited-data and large network regime: starting with small weights is essential to good generalization performance, regardless of whether a network is trained using early stopping.

Except for the deep linear network reduction, our results have focused on minimally deep networks with at most one hidden layer. It remains to be seen how these findings might generalize to deeper nonlinear networks (Kadmon & Sompolinsky, 2016), and if the requirement for good generalization (small weights) conflicts with the requirement for fast training speeds (large weights, Saxe et al., 2014) in very deep networks.

Since the original pre-print version of this paper was released, recent works have considered similar ideas (see Bahri et al., 2020 for a review). In particular, Spigler et al. (2018) trained deeper networks with a hinge loss and observed a similar empirical finding to the one observed in this paper that over-training is most problematic when the number of examples is near the number of effective parameters in a neural network. There has also been further investigation of the ‘double-descent’ phenomena (Belkin, Hsu, Ma et al., 2019, Belkin, Hsu and Xu, 2019), including in modern state of the art deep learning models (Nakkiran et al., 2020), and a growing number of papers have provided settings where double descent can be analyzed precisely (Ba et al., 2019, Bartlett et al., 2020, Belkin, Hsu and Xu, 2019, Hastie et al., 2019). For instance, Hastie et al. (2019) statistically analyze ridgeless regression (corresponding to infinitely late time in our analysis) in nonlinear settings. Going beyond learning readout weights from fixed nonlinear transforms, Ba et al. (2019) investigate asymptotic performance in a student–teacher setting where only the first layer weight matrices are trained, for different initialization regimes. Other studies have used tools from random matrix theory to investigate more complex input statistics than the simple Gaussian assumption considered here, including the impact of clustered inputs (Louart et al., 2017), and features arising from random nonlinear transformations (Pennington & Worah, 2017).