Abstract

Accurately quantifying dietary intake is essential to understanding the effect of diet on health and evaluating the efficacy of dietary interventions. Self-report methods (e.g., food records) are frequently utilized despite evident inaccuracy of these methods at assessing energy and nutrient intake. Methods that assess food intake via images of foods have overcome many of the limitations of traditional self-report. In cafeteria settings, digital photography has proven to be unobtrusive and accurate and is the method of choice for assessing food provision, plate waste, and food intake. In free-living conditions, image capture of food selection and plate waste via the user’s smartphone is promising and can produce accurate energy intake estimates, though accuracy is not guaranteed. These methods foster (near) real-time transfer of data and eliminate the need for portion size estimation by the user since the food images are analyzed by trained raters. A limitation that remains, similar to self-report methods where participants must truthfully record all consumed foods, is intentional and/or unintentional under-reporting of foods due to social desirability or forgetfulness. Methods that rely on passive image capture via wearable cameras are promising and aim to reduce user burden; however, only pilot data with limited validity are currently available and these methods remain obtrusive and cumbersome. To reduce analysis-related staff burden and to allow real-time feedback to the user, recent approaches have aimed to automate the analysis of food images. The technology to support automatic food recognition and portion size estimation is, however, still in its infancy, and fully automated food intake assessment with acceptable precision is not yet a reality.
This review further evaluates the benefits and challenges of current image-assisted methods of food intake assessment and concludes that less burdensome methods are less accurate and that no current method is adequate in all settings.

INTRODUCTION

Accurately quantifying food intake (FI) is crucial for investigating the relationship between diet and health in observational studies, understanding the effects of dietary changes on the treatment and management of obesity and obesity-related diseases, and informing public health policies based on empirical data [1]. To date, self-report methods such as food records, food recalls, and food frequency questionnaires are the mainstay of nutritional epidemiology research [2] and commonly used to assess FI in clinical and research settings [3,4]. While self-report methods have helped to identify associations between consumption of different foods or diet quality and eating behaviors and diseases [1], evidence indicates that these methods frequently inaccurately assess energy and nutrient consumption [5], and their continued use in scientific settings has consequently been questioned and criticized [5,6]. Limitations of self-report methods and sources of their error include: (a) unintentional under-reporting of foods (forgetfulness), (b) intentional under-reporting of foods with negative health images (high-fat/high-sugar foods), (c) intentional over-reporting of foods that are perceived as healthy (fruits, vegetables), and (d) portion size estimation errors [7,8]. Further, reactivity due to awareness of being measured can cause changes in eating behavior, resulting in inaccurate reporting and the failure to capture habitual FI data [9]. People also have been found to undereat and lose weight when recording their FI [10]. The last limitation, however, highlights a strength of using self-report methods, as people become more aware of their FI and eating patterns when attempting to manage their body weight, even though the FI data are not necessarily accurate. Thus, self-reported FI remains a frequently-used tool in the clinical delivery of weight management services, with problems primarily occurring when these data are used to quantify intake.

Image-assisted methods, which rely on images of foods to estimate FI, are a promising approach to quantify FI that can overcome many of the limitations of self-report. For example, these methods can reduce user burden and eliminate the need for the user to estimate portion size. Additionally, many of these methods transmit food image data to researchers or clinicians in real time or near real time, providing a platform to adapt Ecological Momentary Assessment (EMA) [11] and other methods to detect and minimize missing data [12]. Over the past two decades, several image-assisted methods have been developed that include active or passive image capture and automated or semi-automated analysis of food images. As a result, some methods are better suited for certain conditions or populations vs. other methods. This review presents the strengths and weaknesses of currently available image-assisted methodologies for FI assessment and evaluates their validity in different settings and populations.

METHODS

We conducted a literature search through the PubMed electronic database for human studies from inception to February 2020. We included articles published in English, reporting image-assisted methods for FI assessment, assessing their feasibility, and validating them against weighed intake or doubly labeled water (DLW). The following search terms were used individually and in combination: diet*/food/energy intake, digital photography, valid*, reliab*, food record, image-assisted, image-based, portion size, wearable, food recognition. The references of articles were also screened for potentially relevant studies. For this review, methods were categorized as either primarily relying on human raters to estimate FI based on food images or claiming to be automated or semi-automated. As detailed herein, however, the terms automated and semi-automated are somewhat of a misnomer, because those methods still require considerable effort from a human. Further, the reader should be cognizant that the methods used to capture food images can be distinct from the methods used to analyze the images.

RESULTS

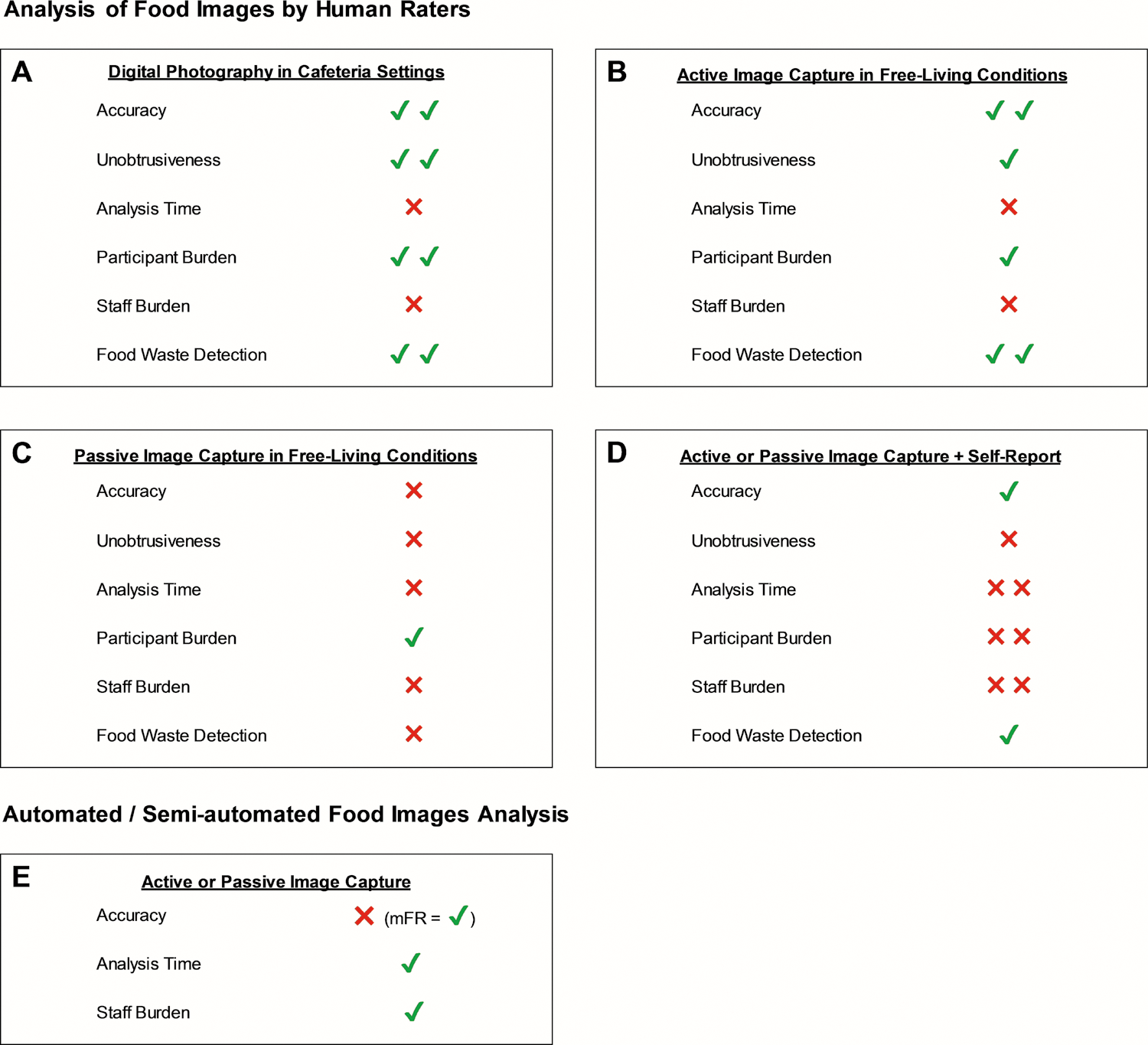

The literature search identified 278 articles. Forty-seven articles, reporting 12 distinct methods of image-assisted FI assessment, met the inclusion criteria. Table 1 provides an overview of the included methods and their validation in various settings. Figure 1 illustrates the strengths and limitations of the different methodologies regarding their accuracy, feasibility, and ability to detect food waste.

Table 1.

Overview of image-assisted methods to measure food intake and studies validating these methods.

| Method | Methodology | Review / Analysis | Study Setting | Sample Size | Outcome | Reference Method | Reliability / Validity |
|---|---|---|---|---|---|---|---|
| Digital Photography of Foods Method (DPFM) [13,16,18,23] | Images of food selection and plate waste are captured with digital (video) cameras. | Human raters compare food images to images of weighed standard portions | Laboratory [13] | 60 test meals of 10 different portion sizes | Portion size | Weighed foods | Significant correlation with weighed foods of 0.92. Mean error in portion size was +5.2 g (SE 0.95) or 4.7% relative to weighed foods. |
| | | | School cafeteria; 5 consecutive days of school lunches [16] | 43 school children | EI | Weighed foods | ICC for total EI was 0.93. Convergent validity was supported by significant correlation between food intake and adiposity (r=0.45) and discriminant validity was supported by non-significant correlation between food intake and depressed mood (r=0.1). |
| | | | One laboratory-based test meal [18] | 22 preschool children | EI | Weighed foods | Significant correlation of DPFM with weighed foods of 0.96 and mean error in total intake of −4% compared to weighed foods. |
| | | | School cafeteria; 7 days of school lunches and dinners [23] | 239 school children | EI | Weighed foods | Mean error in total intake of DPFM of 3 g (SD 20) or 1% compared to weighed foods. |
| Digital Photography + Recall (DP+R) [28] | Images of food selection and plate waste of cafeteria meals including notes to identify ambiguous foods and measuring cups/spoons to guide portion size estimation. Dietary recall to document any foods or beverages consumed outside the cafeteria. | Human raters compare food images to images of weighed standard portions and perform multi-pass dietary recall | Cafeteria and free-living conditions over 7 days | 91 adults with overweight/obesity | EI | DLW | The mean EI estimated by DP+R was not significantly different from DLW, overestimating DLW by 264 kJ (SD 3138; 63 kcal [SD 750]) or 6.8% (SD 28) per day. No proportional bias variation as a function of the level of EI (r=−0.13, p=0.21). |
| Remote Food Photography Method (RFPM) [12,25,30–33,39] | Images of food selection and plate waste (including a reference card) are captured via smartphone app and sent to laboratory for analysis. | Human raters compare food images to images of weighed standard portions | Free-living conditions (6 days) and 2 laboratory-based buffet meals [12] | 50 adults | EI | DLW (free-living) and weighed foods (laboratory) | In free-living conditions, RFPM underestimated total EI by 636 kJ (SD 2904; 152 kcal [SD 694]) or 3.7% (SD 28.7) per day (p=0.16); ICC for daily EI was 0.74. In the laboratory, underestimation for total EI was 17 kJ (SD 305; 4 kcal [SD 73]) or 1.2% (SD 62.8) and error for macronutrients was not significantly different from weighed foods. |
| | | | Pre-packed lunch (consumed in laboratory) and dinner meals (consumed in laboratory or at home) over 3 days [25] | 52 adults | EI | Weighed foods | RFPM underestimated EI by 4.7%–5.5% (laboratory) and by 6.6% in free-living conditions. ICCs for EI were significant for laboratory (r=0.62; p<0.01) and free-living conditions (r=0.68, p<0.01). |
| | | | Laboratory; 12-hour period [30] | 54 preschool children | EI | Weighed foods | RFPM significantly overestimated total EI by 314 kJ (SD 452; 75 kcal [SD 108]) or 7.5% (SD 10.0). The MPE for the macronutrient intakes ranged from 2.9% (fat) to 11.7% (protein), with high variability around the mean. |
| | | | Free-living conditions over 7 days [31] | 39 preschool children | EI | DLW | RFPM underestimated total daily EI by a mean 929 kJ (SD 1146; 222 kcal [SD 274]) or 15.6%. |
| | | | Laboratory; 2 visits 5–10 days apart [32] | 53 adults | EI | Weighed foods | RFPM underestimated EI of 2, 4, and 6 fl oz servings of infant formula by 6.7 kJ (SD 1.7; 1.6 kcal [SD 0.4]), 20.1 kJ (SD 2.5; 4.8 kcal [SD 0.6]), and 25.9 kJ (SD 4.2; 6.2 kcal [SD 1.0]), and overestimated intake by 0.4 kJ (SD 5.0; 0.1 kcal [SD 1.2]) in 8 fl oz servings, but was equivalent to weighed intake within 7.5% for all servings. |
| | | | Laboratory [33] | 7 bottles for each serving size (1, 2, 3, and 4-scoop) containing 5, 10, and 15% more and less formula than recommended | Serving size | Weighed foods | RFPM underestimated servings (1–4 scoops) of powdered instant formula by a mean 0.05 g (90% CI −0.49, 0.40) compared to directly weighed servings, with the MPE ranging from 0.32% to 1.58%. Estimates for all serving sizes were within 5% equivalence bounds. |
| | | | Free-living conditions over 6 days at 2 time points (early vs late pregnancy) [39] | 23 pregnant women with obesity | EI | DLW | RFPM captured 64.4% (early pregnancy) and 62.2% (late pregnancy) of DLW-measured total daily EI and was not equivalent to DLW within 20% equivalence bounds. The underestimation was significantly associated with low reporting of snacks (R2=0.4). |
| Food Record App (FRapp) [43] | Images of food selection and plate waste including fiducial marker, captured with smartphone app. Additional options to capture food intake are speech-to-text conversions, capturing food label/nutrition facts images, selecting from recently recorded foods. | Human raters analyze recordings (images of food, labels or text recordings) of eating events | Free-living conditions over 3 days | 18 adolescents | N/A¹ | N/A¹ | N/A¹ |
| Nutricam Dietary Assessment Method (NuDAM) [44] | Images of food selection (with fiducial marker) combined with a voice recording describing the foods, leftovers, location, and meal occasion, and a brief follow-up phone call the next day. | Trained professionals analyze food images, voice recording, and phone calls | Free-living conditions over 3 days | 10 adults, diagnosed with T2DM | EI | DLW | NuDAM underestimated total daily EI by 24% compared to DLW. |
| Multiple-pass 24-hour dietary recall + SenseCam (MP24+SC) [45] | SC (worn around the neck on a lanyard) captures images of eating events every 20 seconds, triggered to turn on by its sensors. Images of eating events are combined with MP24. | Review of food images and MP24 with participant to allow modification of self-report; estimation of EI by trained dietitian | Free-living conditions over 3 non-consecutive days | 40 adults (20 men, 20 women) | EI | DLW | MP24+SC underestimated EI by 9% in men and by 7% in women compared to DLW. The addition of SC reduced the error in EI by approximately 50% compared to MP24 alone. |
| Micro-camera [47] | Micro-camera is worn on the ear and captures audiovisual recordings during meal times. Recordings are combined with food diary entries. | Human raters analyze food images and food diaries | Free-living conditions over 2 days | 6 adults | EI | DLW | Compared to DLW, daily EI was underestimated by 3912 kJ (SE 1996; 935 kcal [SE 477]) or 34% by food diaries alone and by 3507 kJ (SE 2170; 838 kcal [SE 519]) or 30% when combined with the micro-camera. The difference between the two methods was significant (p=0.02). |
| mobile Food Record (mFR) [50–52] | Food images are captured with the mFR app and sent to a server for analysis. After review by the user, volume and nutrient content are estimated by the app. | Automatic portion size estimation based on statistical pattern recognition techniques of the image | Free-living conditions over a 24-hour period [50,51] | 15 adolescents | Portion size | Weighed foods | Mean error in automated weight estimates using mFR compared to known weights ranged from a 38% underestimation to a 26% overestimation, with 75% of all analyzed food being within 7% of the true value. |
| | | | Free-living conditions over 7.5 days [52] | 45 adults | EI | DLW | mFR EI correlated significantly (r=0.58) with DLW-measured daily EI and underestimated EI by 12% (SD 11) for men and 10% (SD 10) for women compared to DLW, with no systematic bias with increasing EI. |
| GoCARB [58] | Food images are captured with a smartphone from two different angles including a reference card. | Automatic segmentation and recognition of food items and reconstruction of their 3D shape | Cafeteria | 19 adults, 114 test meals | Carbohydrate content, food recognition | Weighed foods | The mean absolute estimation error of GoCARB compared to precisely weighed carbohydrate content was 26.9% (SD 18.9). Automatic food recognition was correct for 85.1% of all food items. |
| FoodCam [59] | The user captures a picture of the food and draws boxes around it to initiate the analysis process. The system populates possible food items and the user selects the best fit. | Automatic food recognition and portion size estimation | Laboratory | N/A² | N/A² | N/A² | N/A² |
| Snap-n-Eat [60] | The user captures a picture of the food and the system automatically estimates energy and nutrient content. | Automatic portion size estimation by image segmentation | Laboratory | 2,000 food images for 15 food categories | Food classification | N/A | 85% accuracy when classifying 2,000 images of food items of 15 different categories. |
| eButton [61] | Food images are captured automatically by a chest-worn camera every 2–4 seconds. Human rater selects 3D models from software’s library, overlaying the food, and volume of food is then estimated by the software. | Semi-automatic analysis of food images | Laboratory | 7 adults capturing 100 pictures of foods | Portion size | Seed displacement method | The mean relative error across all food samples was −2.8% (SD 20.4) and the error for 85 out of 100 foods was between −30% and 30% compared to seed displacement. |

¹ Feasibility study only; to date, no validation of the method.

² Usability study only; to date, no validation of the method.

Abbreviations: CI, confidence interval; DLW, doubly labeled water; EI, energy intake; ICC, intra-class correlation coefficient; kJ, kilojoule; MPE, mean percent error; SD, standard deviation; SE, standard error; T2DM, type 2 diabetes mellitus.

Figure 1.

Overview of different dietary assessment methods concerning accuracy, unobtrusiveness, analysis time, participant burden, staff burden, and food waste detection. Panels A-D illustrate methods that rely on human rater-based analysis where images are captured in cafeteria settings (Panel A), actively captured by users in free-living conditions (Panel B), passively captured in free-living conditions (Panel C), and passively or actively captured and combined with self-report methods (Panel D). Panel E illustrates systems that automatically or semi-automatically analyze images that are captured actively or passively. It is recognized that these methods differ widely and that many of these systems have not been validated, limiting the information available to perform the ratings displayed in the figure. It is noted, however, that the mFR is among the most studied and validated methods in this category. Each category was rated based on a 4-point scale with ✖✖= poor; ✖= fair; ✔= good; ✔✔= excellent.

Analysis of Food Images by Human Raters

The Digital Photography of Foods Method (DPFM)

The Digital Photography of Foods Method (DPFM) was developed to allow unobtrusive estimation of FI in cafeteria or similar settings [13,14], and this method or very similar methods have been developed and utilized by many groups [13–23]. These methods use digital video cameras or other devices (e.g., smartphones) to quickly capture images of participants’ food selection and plate waste and of precisely weighed standard portions of the foods served on the day of data collection. The images of the weighed standard portions serve as reference images during the analysis of participants’ food images, which can occur after data/image collection. The foods in the reference images are linked to foods in the United States Department of Agriculture’s (USDA) Food and Nutrient Database for Dietary Studies (FNDDS) [24], an alternative nutrition database, manufacturer’s information, or a custom recipe. This allows estimation of energy and nutrient intake. Trained raters analyze the images via computer software that simultaneously displays images of (a) the participant’s food selection, (b) plate waste, and (c) the weighed standard portion for each food consumed. The rater then estimates the number of portions of the standard portion of food that was selected and discarded. The software then calculates the amount of food selected, plate waste, and FI, which is the difference between food selection and plate waste.
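The intake-by-difference calculation described above can be sketched in a few lines of Python. This is a minimal illustration and not the DPFM software itself: the class and function names, the field names, and the example values (a 150 g standard portion with an energy density of 8.0 kJ/g) are hypothetical, and the rater's estimates are assumed to be expressed as fractions of the weighed standard portion.

```python
from dataclasses import dataclass


@dataclass
class FoodRating:
    """One rated food item; all field names are illustrative assumptions."""
    standard_portion_g: float  # weight of the weighed standard portion
    energy_kj_per_g: float     # energy density from the linked nutrient database
    selected_fraction: float   # rater estimate: selection relative to standard portion
    waste_fraction: float      # rater estimate: plate waste relative to standard portion


def intake_by_difference(r: FoodRating) -> dict:
    """Return grams selected, wasted, and eaten, plus energy intake in kJ."""
    selected_g = r.selected_fraction * r.standard_portion_g
    waste_g = r.waste_fraction * r.standard_portion_g
    intake_g = selected_g - waste_g  # food intake = food selection - plate waste
    return {
        "selected_g": selected_g,
        "waste_g": waste_g,
        "intake_g": intake_g,
        "intake_kj": intake_g * r.energy_kj_per_g,
    }


# Hypothetical item: one full standard portion selected, a quarter left on the plate.
example = intake_by_difference(FoodRating(150.0, 8.0, 1.0, 0.25))
```

Summing the per-item results over all foods on a tray would give the meal total; nutrients other than energy could be handled the same way with additional per-gram values from the database.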

Portion size estimates from this method have been shown to strongly correlate with weighed portion sizes (r=0.92) [13], and the mean overestimation of image-based estimates compared to weighed foods is small, i.e., 5.2 g (standard error [SE] 0.95) or 4.7% of the weighed value. The mean deviations of image-based estimates from weighed estimates were likewise small for individual food categories, namely entrées (17.5 g [SE 4.3]; 6.9%), starches (−1.2 g [SE 1.1]; −1.7%), fruits/vegetables (4.8 g [SE 1.8]; 5.9%), desserts (4.2 g [SE 2.6]; 5.4%), and beverages (7.6 g [SE 3.1]; 4.3%); however, condiment intake tended to be overestimated by 4.9 g (SE 4.6; 17%) [13]. This limitation is not unique to this method, and condiments typically do not account for a large proportion of daily FI. In school children (N=239), the mean difference between image-based and weighed estimates of total intake (g) was likewise very small, i.e., 3 g (standard deviation [SD] 20) or 1% [23], and in preschool children (N=22), digital diet estimates were 4% lower than the actual weights [18]. Importantly, agreement among raters has been shown to be high (intraclass correlation coefficients of 0.84 [14] and even 0.92 [16] and 0.93 [25], and Cohen’s κ of 0.78 [23]).

The DPFM and similar methods have proven to be adaptable and provide a comprehensive assessment of FI related behaviors, and the accurate quantification of plate waste is a unique strength of this and other image-assisted methods, particularly considering the goal of cutting food waste by 50% in the United States by 2030 [26]. Further, food selection/provision and waste data can be used to determine if efforts to improve diet quality result in higher plate waste due to people not eating the healthier foods, or if food provision and waste systematically differ such that dietary intake is more or less healthy [27]. Examples of the feasibility and utility of digital photography include its ability to estimate FI of large and diverse populations in various settings, including soldiers (N=139) during basic combat training [15], elementary school children (N=670) during school lunches over 2 years [15], and >2000 children from 38 schools over a 3-year period where intake was quantified for 3 days at 3 different time points [17]. Further, digital photography methods have been used to (a) characterize lunch meals served to preschoolers (N=796) enrolled in Head Start centers [20], (b) estimate FI at family dinners of 231 minority preschool children [19], (c) compare elementary school students’ food selection in the school cafeteria to the Institute of Medicine’s recommendations across 33 elementary schools, and (d) evaluate the effectiveness of a 28-month school-based obesity prevention intervention (LA Health) at reducing children’s selection and consumption of added sugars and sodium during school lunches [22,27]. Finally, digital photography has been used to assess changes in energy and macronutrient intake during a 16-month exercise trial (Midwest Exercise Trial-2 [21]) in 91 participants over four 7-day periods of ad libitum eating in a university cafeteria.

In summary, owing to their demonstrated validity and utility, the DPFM and similar methods have become the method of choice for quantifying food selection, waste, and intake in cafeteria settings.

Digital Photography + Recall (DP+R)

The Digital Photography + Recall (DP+R) method estimates total daily energy intake (EI) by combining digital photography (pre- and post-meal images of food) for assessing EI in a cafeteria setting with dietary recall to record foods consumed outside of the cafeteria setting [28]. The DP+R method includes placing notes on the cafeteria tray to describe any difficult-to-identify food/drink items. Additionally, typical measuring cups and spoons are included in the images to facilitate the estimation of portion size. Multiple-pass dietary recalls are performed at each cafeteria meal to document any foods or drinks consumed outside of the cafeteria setting that day [28]. The DP+R method was shown to be valid for estimating total EI (with a required minimum of two cafeteria meals per day) in 91 young adults with overweight or obesity over 7 days. The mean overestimation of EI was 264 kJ (SD 3138; 63 kcal [SD 750]) per day or 6.8% (SD 28) compared to DLW, with 28.8% of the total estimated daily EI coming from foods consumed outside of the cafeteria [28]. The implementation of smartphone-captured images of foods consumed outside of the cafeteria may further improve the accuracy of the DP+R method and at the same time reduce participant burden.

Remote Food Photography Method © (RFPM)

The Remote Food Photography Method © (RFPM) resulted from the adaptation of DPFM methods for free-living conditions [12,25,29]. When using the RFPM, participants place a reference card next to their food and capture an image of their food selection and plate waste using the SmartIntake® app on a smartphone or other camera-enabled device. For foods that cannot be identified by wrappers or containers, participants briefly annotate the images (e.g., “chicken nuggets”). The annotated images are sent wirelessly to the laboratory via the app. Image information data (date, time, geolocation) are recorded and stored for all food images. In the laboratory, the images are analyzed to estimate FI using methods similar to the DPFM [13,14]: the foods in the images are linked to a nutrient database via computer software and compared to images of foods with known portion size. The result is detailed data on food selection, plate waste, and FI by difference.

A weakness of the RFPM is that it depends on participants remembering, and not neglecting, to capture images of all consumed foods and calorie-containing beverages. To help address these concerns and ultimately improve data quality and completeness, EMA methods [11] have been incorporated. EMA methods prompt participants to capture images by sending reminders (text messages, push notifications) around participants’ typical meal times [25,29]. A web-based computer system tracks the delivery of prompts as well as participants’ responses to the prompts, allowing study personnel to more easily detect missing data in near real time. To capture FI data in the case of missing images or phone/app malfunction, participants are asked to additionally use a back-up method.
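How a prompt-tracking system of this kind might flag potentially missing data can be sketched as follows. This is a hypothetical illustration, not the actual RFPM or SmartIntake® implementation: the function name, the 60-minute response window, and all timestamps are assumptions made for the example.

```python
from datetime import datetime, timedelta


def unanswered_prompts(prompts, images, window_minutes=60):
    """Return the prompts for which no image arrived within the response window.

    prompts, images: lists of datetime objects (prompt delivery times and
    image receipt times). The 60-minute default window is an assumption.
    """
    window = timedelta(minutes=window_minutes)
    return [
        p for p in prompts
        if not any(p <= img <= p + window for img in images)
    ]


# Hypothetical day: three meal-time prompts, images received after two of them.
prompts = [
    datetime(2020, 2, 3, 8, 0),
    datetime(2020, 2, 3, 12, 0),
    datetime(2020, 2, 3, 18, 30),
]
images = [datetime(2020, 2, 3, 8, 20), datetime(2020, 2, 3, 19, 5)]
missing = unanswered_prompts(prompts, images)  # only the 12:00 prompt is flagged
```

This simplification ignores images captured shortly before a prompt; a flagged prompt would only indicate that staff should follow up with the participant, not that a meal was necessarily missed.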

The reliability and validity of the RFPM have been tested in several different settings and populations [12,25,30–35]. First, the RFPM was validated against weighed lunch and dinner meals over three days, which participants (N=52) consumed either in the laboratory or in free-living conditions [25]. The RFPM underestimated daily EI by only 151 kJ (SE 81; 36 kcal [SE 19]; 5.5%) in the laboratory and by 406 kJ (SE 159; 97 kcal [SE 38]; 6.6%) in free-living conditions [25]. Further, the mean difference in estimating EI was stable over different levels of EI and did not differ by body weight or age [25]. Second, the RFPM was validated in adults (N=50) over six days in free-living conditions against DLW [12], which is considered accurate for quantifying EI over time in free-living individuals [36]. Total daily EI estimates from the RFPM did not differ significantly from DLW with a mean daily underestimation of 636 kJ (SD 2904; 152 kcal [SD 694]) (p=0.16) or 3.7% (SD 28.7) and a consistent error over different levels of EI [12]. Further, the RFPM’s accuracy in estimating nutrient intake was confirmed in two laboratory-based test meals, in which intake of macronutrients and most micronutrients (Calcium, Sodium, Iron, Fiber, Vitamin C) was not significantly different from weighed values [12]. Assessing FI with the RFPM also was not associated with reactivity or changes in EI [12], and, similar to the DPFM, the RFPM has proven feasible and effective at quantifying the plate waste of adults in free-living conditions [37].

The RFPM and SmartIntake® app have proven accurate at measuring infant formula in baby bottles at different stages of preparation (dry powdered formula, prepared formula, liquid waste). The RFPM was equivalent to all weighed servings of formula within 7.5% equivalence bounds and it underestimated EI by ~3% compared to direct weighing [32,33]. With preschool children who eat solid foods, the RFPM’s validity is less consistent, however. Specifically, in preschool children (N=54) who lived in a research unit for one day, the RFPM overestimated total intake in grams and kJ by 2.9% (SD 6.6) and 7.5% (SD 10.0), respectively, compared to weighed intake, and bias increased with higher levels of intake [30]. In free-living conditions over seven days, however, the method underestimated total daily EI by 929 kJ (SD 1146; 222 kcal [SD 274]; 15.6%) when compared to DLW [31]. Although this level of error is in the adequate reporting range identified by Burrows et al. in their review of FI assessment methods in children [38], the results demonstrated that, when the RFPM and SmartIntake® app are used by children’s caregivers, the method and app require refinement to obtain the desired level of validity in young children. The authors noted that the biggest challenge in this target group was providing sufficient training to all caregivers (some were not disclosed by the families) and ensuring that images of all meals, snacks, and beverages were captured and sent to the laboratory [30,31]. In pregnant women with obesity, the RFPM similarly was not able to accurately estimate EI, capturing only around 64% (SE 2.3) of DLW-measured total daily EI [39], which appeared to be due, at least in part, to participants failing to capture images of snacks [39].

The lackluster validity data from the pediatric and pregnancy studies highlighted challenges with the EMA prompts that were used in the older version of SmartIntake®. Specifically, the prompts were previously sent via email, while subsequent versions of the app utilize both push notifications (pop-up messages that are received on one’s smartphone, even if the app is not currently in use) and text messages to deliver EMA reminders, improving their effectiveness. Nonetheless, the data indicate that when images are captured, an accurate estimate is typically obtained. The RFPM and SmartIntake® app also have proven feasible and have produced clinically relevant data in demanding conditions, including assessing meal timing, location, level of preparation, and quality of dinner meals among rural, low-income families (N=31) over one week (153 dinner meals) [34,35]. Finally, the RFPM was a feasible and acceptable method for parents of young children (N=9) with type 1 diabetes mellitus to assess breakfast nutrition over three days [40].

In summary, the RFPM and SmartIntake® app have many of the same benefits as the DPFM and similar methods, including adaptability to various populations and settings. Additionally, the reference card that is used with the RFPM can facilitate portion size estimates but is not entirely necessary. It does, however, provide a platform for computer imaging algorithms to (a) standardize the images for distance, angle, and color, and (b) attempt to identify and estimate the portion sizes of the foods [41,42].

Food Record App (FRapp)

The Food Record App (FRapp) uses a methodology similar to the RFPM, asking participants to capture and annotate images of all foods and beverages before and after consumption in free-living conditions and to include a fiducial marker (reference card) in each image [43]. FRapp integrates text entry, predefined prompts for eating occasions, and real-time communication between the user and clinician/researcher. The app also allows dietary intake recording via methods other than food images, including speech-to-text conversions with food item extraction, capturing food label/nutrition facts/barcode photos, and selecting from recently consumed food sets [43]. FRapp was an accepted method for dietary intake assessment in community-dwelling adolescents (N=18) in a free-living environment over three days; however, only 60% of all eating events with images included the fiducial marker and only 40% included both a pre- and post-meal image, indicating the need for further refinement of the method to improve data completeness in this population [43]. The FRapp has not yet been validated regarding its accuracy in estimating EI in either a laboratory or free-living setting. Validation of the FRapp will be important to evaluate whether the various options for dietary input, which could affect rater/analysis and user burden, yield any additional benefit to the accuracy of the method compared to methods that rely solely on food images.

The Nutricam Dietary Assessment Method (NuDAM)

The NuDAM combines a phone-captured image of food selection (with reference card) with a voice memo describing the food selection and waste as well as location and type of meal. In addition, on the following day, a brief follow-up phone call is used to probe for commonly underreported foods, and adjustments to the voice memos are made accordingly [44]. The image and accompanying voice recording are analyzed by trained professionals. In a pilot study (N=10) NuDAM was compared to DLW regarding its accuracy in assessing total daily EI over 3 days. NuDAM (8.8 MJ [SD 2.0]; 2102 kcal [SD 478]) underestimated total daily EI compared to DLW (11.8 MJ [SD 2.3]; 2819 kcal [SD 549]) by around 24%, likely due to under- or non-reporting of consumed foods or sugary beverages [44]. The accuracy of NuDAM has only been assessed in a pilot study and further studies with larger sample sizes are needed. However, it is noteworthy that the average underestimation of 24% compared to DLW is rather large compared to that of similar methods that are less burdensome and do not require a follow-up phone call (e.g., the RFPM).

24h Multiple-pass Dietary Recall + SenseCam (MP24+SC)

The MP24+SC method combines multiple-pass 24h dietary recall with SenseCam images taken throughout the day on the day before the recall [45]. SenseCam is a wearable camera with a wide-angle lens and built-in accelerometer, heat sensor, and light sensor. It is worn around the neck on a lanyard and captures images approximately every 20 seconds, as triggered by the sensors [46]. Participants wear the SenseCam continuously; however, they have the option to remove it whenever they are in a location or situation in which they deem photography inappropriate. On the following day, after completion of the dietary recall by trained dietitians, participants may review all SenseCam images in private and delete any images they prefer not to share. Following this, the researcher reviews the SenseCam images with the participant, asking the participant to confirm or modify the self-reported foods without giving any suggestions. Gemming et al. [45] assessed EI with the MP24+SC method over three non-consecutive 24h periods in free-living conditions (N=40) and found that on average, total daily EI as assessed by MP24+SC (13196 kJ [SD 2529]; 3154 kcal [SD 604]) was underestimated by 9% compared to DLW (14485 kJ [SD 2632]; 3462 kcal [SD 629]) in men (n=20) and by 7% (10091 kJ [SD 1672]; 2412 kcal [SD 400] vs 10841 kJ [SD 1639]; 2591 kcal [SD 392]) in women (n=20). Compared to MP24 alone, which underestimated average daily EI by 17% (men) and 13% (women) compared to DLW, the addition of the SenseCam reduced the error in daily EI estimation by almost 50%, as previously unreported foods (often snacks) were identified [45]. These data are impressive, though the method has considerable participant and staff burden related to the participant identifying situations/locations in which photography is inappropriate and turning off the SenseCam, the need for the participant to screen all images, and the participant reviewing the images with a staff member.
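The percentages in this comparison follow from simple arithmetic on the reported values. The sketch below is illustrative only: the function names are not from the cited study, and percentages derived from group means may differ slightly from values computed per participant.

```python
def percent_underestimation(estimate_kj: float, reference_kj: float) -> float:
    """Percent by which an EI estimate falls below the reference (e.g., DLW)."""
    return (reference_kj - estimate_kj) / reference_kj * 100.0


def relative_error_reduction(error_before: float, error_after: float) -> float:
    """Percent reduction in error when moving from one method to another."""
    return (error_before - error_after) / error_before * 100.0


# Group means reported by Gemming et al. [45], in kJ/day (MP24+SC vs. DLW).
men = percent_underestimation(13196, 14485)
women = percent_underestimation(10091, 10841)

# Reported underestimation (%) for MP24 alone vs. MP24+SC.
men_reduction = relative_error_reduction(17, 9)
women_reduction = relative_error_reduction(13, 7)

print(f"men: {men:.1f}% below DLW, error reduced by {men_reduction:.1f}%")
print(f"women: {women:.1f}% below DLW, error reduced by {women_reduction:.1f}%")
```

Run as written, this gives roughly 9% and 7% underestimation and an error reduction of about 46–47% in both sexes, in line with the "almost 50%" reported above.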

Micro-camera

This method combines a lightweight, wearable micro-camera, worn on the ear similar to a wireless earpiece for cell phones, with a food diary [47]. The micro-camera has a wide-angle lens (170°) and a microphone for audio recordings during meal times. In a pilot study (N=6), total daily EI estimates from food diary entries over 2 days were analyzed with and without the additional audio-visual micro-camera recordings and compared to EI measured via DLW [47]. The addition of the micro-camera improved the accuracy in estimating total daily EI only slightly from a 34% underestimation (−3912 kJ [SE 1996]; −935 kcal [SE 477]) to a 30% underestimation (−3507 kJ [SE 2170]; −838 kcal [SE 519]) compared to DLW. Much of the underestimation was likely due to underreported foods/snacks and the fact that participants forgot or chose not to turn on the camera during meal times. Interpretation error in estimating intake by the assessors likely further contributed to the large underestimation [47]. Substantial refinement of the method and studies with larger sample sizes are necessary to justify the additional burden of wearing the micro-camera, which, in its current state, did not lead to clinically meaningful improvements in EI estimation compared to the food diaries alone.

Automated and Semi-automated Analysis of Food Images

Mobile Food Record (mFR)

The Mobile Food Record (mFR) method has been extensively studied and consists of a smartphone app-based food record and a backend secure cloud-like image analysis system [48,49]. When using mFR, the user captures an image of the food (including a fiducial marker in the image), which is then transmitted to a server for automatic analysis. The analysis process is based on statistical pattern recognition techniques, identifying food and drink items in the image by comparing the image with those in the database. Next, the labeled image is returned to the participant for review, who then confirms or corrects the automatic labels before sending the image back to the server for final identification and automatic volume estimation via 3D reconstruction of the food items from the images [50]. Finally, identified foods are matched to the USDA FNDDS for nutrient analysis [48,49].
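The final steps of this pipeline, turning an estimated food volume into energy via a nutrient database, amount to a short calculation. The sketch below is illustrative only: the `FoodEntry` record, the density-based conversion from volume to weight, and all numeric values are assumptions made for the example, not the mFR implementation or actual FNDDS data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FoodEntry:
    """Hypothetical nutrient-database record (placeholder values, not FNDDS data)."""
    name: str
    density_g_per_ml: float
    energy_kj_per_100g: float


def estimate_energy_kj(volume_ml: float, entry: FoodEntry) -> float:
    """Convert an estimated volume to weight, then to energy."""
    if volume_ml < 0:
        raise ValueError("volume must be non-negative")
    grams = volume_ml * entry.density_g_per_ml
    return grams * entry.energy_kj_per_100g / 100.0


# Placeholder entry and volume, chosen only to make the arithmetic easy to follow.
rice = FoodEntry("cooked rice (placeholder)", density_g_per_ml=0.8,
                 energy_kj_per_100g=500.0)
energy = estimate_energy_kj(250.0, rice)  # 250 mL -> 200 g -> 1000 kJ
print(f"{rice.name}: {energy:.0f} kJ")
```

Because energy scales linearly with volume in this calculation, any error in the volume estimate carries over proportionally to the energy estimate.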

In a first validation study in adolescents (N=15), the mean error in mFR-estimated weights of individual food items compared to known weights ranged from a 38% underestimation to a 26% overestimation, with 75% of all analyzed foods being within 7% of the true value [50,51]. In 45 community-dwelling adults, mFR-reported daily EI over 7.5 days correlated significantly (r=0.58) with DLW-measured daily EI and underestimation of total EI was only 12% (SD 11) for men and 10% (SD 10) for women with no systematic bias with increasing EI [52]. Most participants rated the usability of mFR as easy and indicated willingness to use the method for an extended period [52]. Further, the general feasibility and acceptability of the mFR method have been confirmed in 62 young children (3–10 years) [53] and 41 adolescents (11–15 years) [54]; however, variations according to sex and eating occasions in adolescents (higher underreporting in boys and frequently unreported snacks) highlight the need for increased training in the target group to ensure complete data [54]. The mFR method has further been used to characterize adolescents’ (N=93) plate waste over three days [55] and to assess if 6-month tailored dietary feedback was effective in improving dietary intake of young adults (N=143) [56]. Recently, the automatic portion size estimation of the mFR method was further refined, and it can now estimate portion size and food energy without fitting geometric models onto the food, relying instead on an algorithm that uses learned energy distribution images [57]. This method’s accuracy needs improvement, however, as the mean error in estimated EI was 874 kJ (209 kcal; 38%) compared to pre-weighed foods for the 347 analyzed eating occasions. Although further refinement is needed to improve accuracy and include various eating styles and patterns, this development may broaden the applicability of the mFR method to diverse foods and populations.

GoCARB

GoCARB is a smartphone-based food recognition system designed to support patients with type 1 diabetes mellitus in carbohydrate counting [58]. When using GoCARB, the user places a reference card next to their food and uses a smartphone to capture two images of the food from two different angles. The plate is detected via a series of computer vision operations, which automatically segment and recognize the different food items and reconstruct their 3D shape. After food recognition, the carbohydrate content is calculated by linking each food item’s volume to the nutritional information provided by the USDA FNDDS [24]. In a pilot study with 19 adults with type 1 diabetes and 114 test meals (one extreme outlier was removed), the mean absolute estimation error of GoCARB compared to precisely weighed carbohydrate content was 26.9% (SD 18.9) [58]. This was a significantly smaller error (−22%; p=0.01) compared to self-report, which had a mean absolute estimation error of 34.3% (SD 24.3) relative to the precisely weighed carbohydrate content. Food recognition was correct for 85.1% of all food items, and 90% of participants found GoCARB easy to use and would like to continue to use it in their daily life. GoCARB has to date not been validated in free-living conditions.
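The accuracy metric reported here, the mean absolute estimation error relative to weighed carbohydrate content, can be sketched as follows. The meal values are hypothetical and chosen only to illustrate the calculation; the function names are likewise illustrative and not taken from the GoCARB study.

```python
from statistics import mean


def absolute_percentage_errors(estimates, references):
    """Absolute error of each estimate as a percentage of its reference value."""
    if len(estimates) != len(references):
        raise ValueError("estimates and references must have the same length")
    return [abs(e - r) / r * 100.0 for e, r in zip(estimates, references)]


def mean_absolute_percentage_error(estimates, references):
    """Mean of the per-meal absolute percentage errors."""
    return mean(absolute_percentage_errors(estimates, references))


# Hypothetical carbohydrate contents (g) for three test meals.
weighed = [60.0, 45.0, 80.0]
estimated = [48.0, 54.0, 72.0]
mape = mean_absolute_percentage_error(estimated, weighed)

# Relative difference between the two reported errors (GoCARB vs. self-report).
relative_difference = (26.9 - 34.3) / 34.3 * 100.0

print(f"mean absolute error: {mape:.1f}%")
print(f"GoCARB vs. self-report: {relative_difference:.1f}%")
```

Because absolute errors are averaged, over- and underestimates do not cancel out, so this metric says nothing about the direction of the error.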

FoodCam

FoodCam is a semi-automatic mobile food recognition system. When using FoodCam, the user points a smartphone camera at the food plate and draws bounding boxes around the plates on the smartphone screen to start the food recognition and portion size estimation process. Next, the system’s database populates a list of possible food items for the highlighted foods by comparing the captured food items with images stored in the database via a complex algorithm, and the participant selects the best fit. The system does not automatically recognize food volumes; instead, it requires the user to estimate food volumes by touching a slider on the phone screen to adjust the bounding boxes around the food. Finally, calorie and nutrition estimates of each of the recognized food items are calculated based on the image and the food selection from the database [59]. To date, the validity of the FoodCam system has not been tested in laboratory or free-living conditions.

Snap-n-Eat

Snap-n-Eat is designed to recognize foods and estimate the energy and nutrient content of foods automatically [60]. The analytical system recognizes the salient region (food item) in the food image taken by the user and uses hierarchical segmentation to segment the image into regions. Next, the system classifies these regions into different food categories using a linear support vector machine classifier. To estimate portion sizes of the foods, the system counts the number of pixels in each food segment, which then allows the estimation of the energy and nutritional values of the foods. In a feasibility study, the system achieved over 85% accuracy when classifying 2000 images of food items of 15 different categories [60]. To be a feasible tool for dietary assessment, however, the system needs to be scaled up substantially to include far more than the 15 different food categories, and validity in free-living conditions needs to be established. Additionally, it is unclear whether users can reliably identify and correct misclassified foods, which matters because incorrectly identified foods necessarily result in inaccurate FI estimates.
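The pixel-counting step described above can be sketched in a few lines. This is a minimal illustration, not the Snap-n-Eat implementation: it assumes segmentation and classification have already produced a per-pixel label map, and the `kj_per_pixel` calibration factors are hypothetical, since the source does not specify how pixel counts are mapped to energy.

```python
from collections import Counter


def pixel_counts(label_map, background="background"):
    """Count pixels per food label in a 2D label map, ignoring the background."""
    counts = Counter(label for row in label_map for label in row)
    counts.pop(background, None)
    return counts


def energy_from_pixels(counts, kj_per_pixel):
    """Scale each food's pixel count by a (hypothetical) calibration factor."""
    return {label: n * kj_per_pixel[label] for label, n in counts.items()}


# Toy 4x4 label map standing in for a segmented, classified food image.
label_map = [
    ["background", "rice", "rice", "background"],
    ["rice", "rice", "rice", "salad"],
    ["rice", "rice", "salad", "salad"],
    ["background", "salad", "salad", "background"],
]

counts = pixel_counts(label_map)
# Hypothetical calibration: energy represented by one pixel of each food.
energy = energy_from_pixels(counts, {"rice": 100.0, "salad": 20.0})
print(dict(counts), energy)
```

In this approach a misclassified segment is scaled by the wrong calibration factor, so recognition errors propagate directly into the energy estimate.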

eButton

The eButton is a small, chest-worn camera, which automatically captures images of consumed foods every 2–4 seconds. The recorded images are analyzed by computer software to estimate the food’s portion size semi-automatically. Specifically, during analysis, food items are identified by the rater and a particular 3D shape model is selected from the software’s library and adjusted in location and size to cover the food item in the image as closely as possible. The volume of the food item is then estimated by the software using the volume of the fitted model [61]. In a small pilot study (N=7), eButton was used to capture images of 100 food samples of Asian and Western foods (no liquids) and the software was then used to estimate portion size [61]. The mean relative error across all food samples was −2.8% (SD 20.4) and the error for 85 out of 100 foods was between −30% and 30% compared to the reference method of seed displacement, which is a commonly used method to objectively quantify food volume [62]. The eButton has to date not been validated in free-living conditions.
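Volume estimation from a fitted shape model, and the relative-error summary used in the pilot study, can be illustrated as follows. The shapes, dimensions, and reference volumes are hypothetical, and the two shape functions merely stand in for the software's model library, whose actual contents are not described here.

```python
import math
from statistics import mean, stdev


def cylinder_volume_ml(radius_cm: float, height_cm: float) -> float:
    """Volume of a fitted cylinder; 1 cubic centimetre equals 1 mL."""
    return math.pi * radius_cm ** 2 * height_cm


def cuboid_volume_ml(length_cm: float, width_cm: float, height_cm: float) -> float:
    """Volume of a fitted cuboid in mL."""
    return length_cm * width_cm * height_cm


def relative_error_percent(estimated: float, reference: float) -> float:
    """Signed error of the estimate as a percentage of the reference volume."""
    return (estimated - reference) / reference * 100.0


# Hypothetical fitted models and reference volumes (e.g., from seed displacement).
estimated = [
    cuboid_volume_ml(10.0, 5.0, 2.0),
    cuboid_volume_ml(4.0, 5.0, 6.0),
    cylinder_volume_ml(3.0, 5.0),
]
reference = [125.0, 100.0, 150.0]

errors = [relative_error_percent(e, r) for e, r in zip(estimated, reference)]
within_30 = sum(1 for err in errors if -30.0 <= err <= 30.0)

print(f"mean relative error: {mean(errors):.1f}% (SD {stdev(errors):.1f})")
print(f"samples within ±30%: {within_30} of {len(errors)}")
```

As the toy data show, a mean relative error near zero can coexist with sizeable errors for individual foods, which is why the spread (SD) and the share of samples within ±30% are reported alongside the mean.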

DISCUSSION

The studies included in this review present image-assisted methodologies to improve the assessment of FI in different settings and populations. Many methods can reduce under-reporting observed with traditional self-report methods, though some methods, particularly those relying on automated image analysis, inaccurately estimate FI. In cafeteria settings, the DPFM and similar methods have proven feasible, effective, and highly accurate at estimating FI in large samples of diverse participants [13–23] and can today be considered the method of choice. In free-living conditions, smartphone apps can be used to capture food images and to transfer the images and associated data to a reading center in real time. These methods can produce accurate estimates of energy and nutrient intake [12,25,30–33], though accuracy relies on sound methods, such as EMAs, to facilitate data quality and completeness.

A noted weakness of many of the reviewed methods is their limited reliability and validity. For example, many have only been tested in proof-of-concept and pilot studies and laboratory settings and are lacking validation against DLW in free-living conditions. Further, larger sample sizes are needed to make results more generalizable and identify the best method for specific settings and target groups. In general, more accurate methods tend to be less burdensome for the participant but can be more burdensome for the image-analyzing staff. This limits the deployability of these methods on a large scale.

Many of the reviewed methods, particularly those used in free-living conditions, rely on smartphone-captured images. These images are then sent to a reading center for analysis by human raters (RFPM [12,25,30–33,39], FRapp [43], NuDAM [44]) or analyzed semi-automatically via software and additional input by the user (mFR [48,49], GoCARB [58], FoodCam [59], or Snap-n-Eat [60]). Smartphones are a logical choice for image-assisted dietary assessment since ~3.2 billion people use smartphones daily [63] and most smartphone users carry their phones with them throughout the day [64]. Smartphone apps can reduce missing or incomplete data in free-living conditions by incorporating EMAs and thereby accurately estimate the EI of adults [11]. Failure to capture images of foods consumed due to forgetfulness and/or due to intentional misreporting (e.g., social desirability bias) is a limitation of image-based methods that remains a challenge. Although this limitation applies to any FI assessment method requiring participants to truthfully record all consumed foods, it is still an important limitation that should be considered when using methods with active image capture by the participant. For this reason, passive/automated image capture via wearable devices such as the eButton [61], SenseCam [45], or Micro-camera [47] offers significant advantages since missing food images should occur less frequently and additional contextual information about the eating event can be recorded and annotated at a later date. Currently, however, passive image capture also has limitations that might hinder wide dissemination of these methods in the immediate term. For example, the battery life and data storage capacity of the wearable device need to be sufficient to capture high-quality images throughout the day. Further, the large number of images passively captured throughout the day requires a time-consuming review by the participant before images are transmitted to the laboratory for analysis, as some pictures may include other people and objects in the participant’s environment that the participant does not wish to share due to privacy concerns. While this review process is inevitable and participants would likely have reasonable concerns about using systems without the option to censor images, the censorship of certain (food) images could affect the accuracy of these methods. Technological advances promise to dramatically improve these methods in the future.

Many of the more accurate methods rely on the participant or researcher to manage images, verify which images to send, identify foods or verify automatic food identification, and/or estimate or verify portion size. Thus, while some approaches to automated analysis have promise for the future, to date, completely automated food image analysis, including identification of foods, matching of foods to a nutrient database, and estimation of portion size and food waste with sufficient accuracy, is not yet a reality. Even with much more advanced recognition technology in the future, the automatic image-based identification and distinction between very similar looking foods will likely remain a significant challenge and may never be possible without at least some degree of user verification. Additionally, the technology to support automatic portion size estimation is still in its infancy, and such estimation is not yet possible with acceptable precision without at least some form of user feedback.

Because of the limitations of automated food image recognition, many systems and studies in free-living conditions (RFPM [12,25,30–33,39], SenseCam [45], NuDAM [44]) continue to rely on analysis by trained raters who estimate portion sizes and calculate energy and macro-/micronutrient content by matching the foods in the images to a nutrient database. Currently, analysis by human raters is more accurate and less variable than semi-automated image analysis. Importantly, rater-based analysis can rely on existing nutrient databases (USDA, etc.), whereas having to create comprehensive databases for automated food recognition systems can be burdensome and limits feasibility, at least without further technological advances.

Regardless of the method by which portion size is estimated, it is important to recognize the limits of the estimation. For example, portion size estimation of foods with amorphous shapes or higher energy densities tends to be challenging [65]. Further, the correct identification of certain ambiguous foods (e.g., diet soda vs. regular soda), preparation method (e.g., fried vs. baked), and the type and amount of hidden ingredients in a dish (e.g., butter in mashed potatoes) frequently requires some form of image annotation by the participant. The precise annotation of images by the participant, of course, relies on self-report with its known flaws, and participants will not always know enough about the ingredients and preparation methods of their food to precisely account for added fat, etc. This problem is not unique to image-based methods, however, and even when directly weighing FI, the recipe and precise amount of ingredients used need to be carefully quantified and recorded. Nevertheless, the errors arising from these issues are likely random [66], and image-based methods that use trained raters for image analysis are still far less problematic than methods in which food type and portion size are entirely self-reported, which show systematic bias [1,67,68].

In conclusion, image-assisted methods to assess FI will likely remain a provocative force in the literature. Despite technological advances, the more accurate methods still rely on human raters to estimate FI from food images, though significant advances in passive image capture and automated/semi-automated image analysis have opened a new frontier of development. As technology advances, the field can move forward, but only with thorough and critical evaluation of the strengths and weaknesses of the methods. It is unlikely that a single method will be a panacea and applicable to all data collection scenarios, populations, and sample sizes. While the less accurate methods are not suitable to measure FI as an outcome variable, they may still serve as important monitoring tools in behavioral interventions as they may mediate behavior change. In the future, pairing image-based methods with other sensors such as continuous glucose monitoring and using mathematical modeling to integrate the multi-sensor data may increase accuracy of the single methods and improve FI assessment.

Acknowledgments:

Funding: This work was supported by National Institutes of Health grants T32 DK064584 (NIDDK National Research Service Award), P30 DK072476 (Nutrition Obesity Research Center), and U54 GM104940 (Louisiana Clinical & Translational Science Center).

Footnotes

Competing Interests:

The intellectual property surrounding the Remote Food Photography Method© and SmartIntake® application is owned by Louisiana State University/Pennington Biomedical Research Center and CKM is an inventor. There are no other competing interests related to this study to declare.

REFERENCES

- [1] Subar AF, Freedman LS, Tooze JA, Kirkpatrick SI, Boushey C, Neuhouser ML, et al. Addressing Current Criticism Regarding the Value of Self-Report Dietary Data. J Nutr 2015;145:2639–45. doi:10.3945/jn.115.219634.

- [2] Willett W. Nutritional Epidemiology. 3rd ed. Oxford, New York: Oxford University Press; 2012.

- [3] Lytle LA, Nicastro HL, Roberts SB, Evans M, Jakicic JM, Laposky AD, et al. Accumulating Data to Optimally Predict Obesity Treatment (ADOPT) Core Measures: Behavioral Domain. Obes Silver Spring Md 2018;26 Suppl 2:S16–24. doi:10.1002/oby.22157.

- [4] Johnson RK. Dietary Intake—How Do We Measure What People Are Really Eating? Obes Res 2002;10:63S–68S. doi:10.1038/oby.2002.192.

- [5] Archer E, Pavela G, Lavie CJ. The Inadmissibility of ‘What We Eat In America’ (WWEIA) and NHANES Dietary Data in Nutrition & Obesity Research and the Scientific Formulation of National Dietary Guidelines. Mayo Clin Proc 2015;90:911–26. doi:10.1016/j.mayocp.2015.04.009.

- [6] Dhurandhar NV, Schoeller D, Brown AW, Heymsfield SB, Thomas D, Sørensen TIA, et al. Energy balance measurement: when something is not better than nothing. Int J Obes 2005 2015;39:1109–13. doi:10.1038/ijo.2014.199.

- [7] Basiotis PP, Welsh SO, Cronin FJ, Kelsay JL, Mertz W. Number of days of food intake records required to estimate individual and group nutrient intakes with defined confidence. J Nutr 1987;117:1638–41. doi:10.1093/jn/117.9.1638.

- [8] Macdiarmid J, Blundell J. Assessing dietary intake: Who, what and why of under-reporting. Nutr Res Rev 1998;11:231–53. doi:10.1079/NRR19980017.

- [9] Rebro SM, Patterson RE, Kristal AR, Cheney CL. The effect of keeping food records on eating patterns. J Am Diet Assoc 1998;98:1163–5. doi:10.1016/S0002-8223(98)00269-7.

- [10] Goris AH, Westerterp-Plantenga MS, Westerterp KR. Undereating and underrecording of habitual food intake in obese men: selective underreporting of fat intake. Am J Clin Nutr 2000;71:130–4. doi:10.1093/ajcn/71.1.130.

- [11] Stone AA, Shiffman S. Ecological Momentary Assessment (Ema) in Behavioral Medicine. Ann Behav Med 1994;16:199–202. doi:10.1093/abm/16.3.199.

- [12] Martin CK, Correa JB, Han H, Allen HR, Rood J, Champagne CM, et al. Validity of the Remote Food Photography Method (RFPM) for estimating energy and nutrient intake in near real-time. Obes Silver Spring Md 2012;20:891–9. doi:10.1038/oby.2011.344.

- [13] Williamson DA, Allen HR, Martin PD, Alfonso AJ, Gerald B, Hunt A. Comparison of digital photography to weighed and visual estimation of portion sizes. J Am Diet Assoc 2003;103:1139–45. doi:10.1016/s0002-8223(03)00974-x.

- [14] Williamson DA, Allen HR, Martin PD, Alfonso A, Gerald B, Hunt A. Digital photography: a new method for estimating food intake in cafeteria settings. Eat Weight Disord EWD 2004;9:24–8.

- [15] Williamson DA, Martin PD, Allen HR, Most MM, Alfonso AJ, Thomas V, et al. Changes in food intake and body weight associated with basic combat training. Mil Med 2002;167:248–53.

- [16] Martin CK, Newton RL, Anton SD, Allen HR, Alfonso A, Han H, et al. Measurement of children’s food intake with digital photography and the effects of second servings upon food intake. Eat Behav 2007;8:148–56. doi:10.1016/j.eatbeh.2006.03.003.

- [17] Williamson DA, Champagne CM, Harsha D, Han H, Martin CK, Newton R, et al. Louisiana (LA) Health: design and methods for a childhood obesity prevention program in rural schools. Contemp Clin Trials 2008;29:783–95. doi:10.1016/j.cct.2008.03.004.

- [18] Nicklas TA, O’Neil CE, Stuff J, Goodell LS, Liu Y, Martin CK. Validity and feasibility of a digital diet estimation method for use with preschool children: a pilot study. J Nutr Educ Behav 2012;44:618–23. doi:10.1016/j.jneb.2011.12.001.

- [19] Nicklas TA, O’Neil CE, Stuff JE, Hughes SO, Liu Y. Characterizing dinner meals served and consumed by low-income preschool children. Child Obes Print 2012;8:561–71. doi:10.1089/chi.2011.0114.

- [20] Nicklas TA, Liu Y, Stuff JE, Fisher JO, Mendoza JA, O’Neil CE. Characterizing Lunch Meals Served and Consumed by Preschool Children in Head Start. Public Health Nutr 2013;16:2169–77. doi:10.1017/S1368980013001377.

- [21] Washburn RA, Honas JJ, Ptomey LT, Mayo MS, Lee J, Sullivan DK, et al. Energy and macronutrient intake in the Midwest Exercise Trial-2 (MET-2). Med Sci Sports Exerc 2015;47:1941–9. doi:10.1249/MSS.0000000000000611.

- [22] Hawkins KR, Burton JH, Apolzan JW, Thomson JL, Williamson DA, Martin CK. Efficacy of a school-based obesity prevention intervention at reducing added sugar and sodium in children’s school lunches: the LA Health randomized controlled trial. Int J Obes 2005 2018;42:1845–52. doi:10.1038/s41366-018-0214-y.

- [23] Marcano-Olivier M, Erjavec M, Horne PJ, Viktor S, Pearson R. Measuring lunchtime consumption in school cafeterias: a validation study of the use of digital photography. Public Health Nutr 2019;22:1745–54. doi:10.1017/S136898001900048X.

- [24] U.S. Department of Agriculture, Agricultural Research Service. USDA Food and Nutrient Database for Dietary Studies 2015–2016. Beltsville, MD: U.S. Department of Agriculture, Agricultural Research Service; 2018.

- [25] Martin CK, Han H, Coulon SM, Allen HR, Champagne CM, Anton SD. A novel method to remotely measure food intake of free-living individuals in real time: the remote food photography method. Br J Nutr 2009;101:446–56. doi:10.1017/S0007114508027438.

- [26] U.S. Environmental Protection Agency, U.S. Food and Drug Administration, U.S. Department of Agriculture. Formal Agreement Between EPA, USDA, and FDA Relative to Cooperation and Coordination on Food Loss and Waste. Washington, D.C.: 2020.

- [27] Martin CK, Thomson JL, LeBlanc MM, Stewart TM, Newton RL, Han H, et al. Children in school cafeterias select foods containing more saturated fat and energy than the Institute of Medicine recommendations. J Nutr 2010;140:1653–60. doi:10.3945/jn.109.119131.

- [28] Ptomey LT, Willis EA, Honas JJ, Mayo MS, Washburn RA, Herrmann SD, et al. Validity of energy intake estimated by digital photography + recall in overweight and obese young adults. J Acad Nutr Diet 2015;115:1392–9. doi:10.1016/j.jand.2015.05.006.

- [29] Martin CK, Nicklas T, Gunturk B, Correa JB, Allen HR, Champagne C. Measuring food intake with digital photography. J Hum Nutr Diet Off J Br Diet Assoc 2014;27 Suppl 1:72–81. doi:10.1111/jhn.12014.

- [30] Nicklas T, Islam NG, Saab R, Schulin R, Liu Y, Butte NF, et al. Validity of a Digital Diet Estimation Method for Use with Preschool Children. J Acad Nutr Diet 2018;118:252–60. doi:10.1016/j.jand.2017.05.005.

- [31] Nicklas T, Saab R, Islam NG, Wong W, Butte N, Schulin R, et al. Validity of the Remote Food Photography Method Against Doubly Labeled Water Among Minority Preschoolers. Obes Silver Spring Md 2017;25:1633–8. doi:10.1002/oby.21931.

- [32] Altazan AD, Gilmore LA, Burton JH, Ragusa SA, Apolzan JW, Martin CK, et al. Development and Application of the Remote Food Photography Method to Measure Food Intake in Exclusively Milk Fed Infants: A Laboratory-Based Study. PloS One 2016;11:e0163833. doi:10.1371/journal.pone.0163833.

- [33] Duhé AF, Gilmore LA, Burton JH, Martin CK, Redman LM. The Remote Food Photography Method Accurately Estimates Dry Powdered Foods-The Source of Calories for Many Infants. J Acad Nutr Diet 2016;116:1172–7. doi:10.1016/j.jand.2016.01.011.

- [34] Bekelman TA, Bellows LL, McCloskey ML, Martin CK, Johnson SL. Assessing dinner meals offered at home among preschoolers from low-income families with the Remote Food Photography Method. Pediatr Obes 2019;14:e12558. doi:10.1111/ijpo.12558.

- [35] McCloskey ML, Johnson SL, Bekelman TA, Martin CK, Bellows LL. Beyond Nutrient Intake: Use of Digital Food Photography Methodology to Examine Family Dinnertime. J Nutr Educ Behav 2019;51:547–555.e1. doi:10.1016/j.jneb.2019.01.020.

- [36] Buchowski MS. Doubly Labeled Water Is a Validated and Verified Reference Standard in Nutrition Research. J Nutr 2014;144:573–4. doi:10.3945/jn.114.191361.

- [37] Roe BE, Apolzan JW, Qi D, Allen HR, Martin CK. Plate waste of adults in the United States measured in free-living conditions. PloS One 2018;13:e0191813. doi:10.1371/journal.pone.0191813.

- [38] Burrows TL, Martin RJ, Collins CE. A systematic review of the validity of dietary assessment methods in children when compared with the method of doubly labeled water. J Am Diet Assoc 2010;110:1501–10. doi:10.1016/j.jada.2010.07.008.

- [39] Most J, Vallo PM, Altazan AD, Gilmore LA, Sutton EF, Cain LE, et al. Food Photography Is Not an Accurate Measure of Energy Intake in Obese, Pregnant Women. J Nutr 2018;148:658–63. doi:10.1093/jn/nxy009.

- [40] Rose MH, Streisand R, Aronow L, Tully C, Martin CK, Mackey E. Preliminary Feasibility and Acceptability of the Remote Food Photography Method for Assessing Nutrition in Young Children with Type 1 Diabetes. Clin Pract Pediatr Psychol 2018;6:270–7. doi:10.1037/cpp0000240.

- [41] Martin CK, Kaya S, Gunturk BK. Quantification of food intake using food image analysis. Conf Proc Annu Int Conf IEEE Eng Med Biol Soc IEEE Eng Med Biol Soc Annu Conf 2009;2009:6869–72. doi:10.1109/IEMBS.2009.5333123.

- [42] Dibiano R, Gunturk BK, Martin CK. Food image analysis for measuring food intake in free living conditions. Med. Imaging 2013 Image Process, vol. 8669, International Society for Optics and Photonics; 2013, p. 86693N. doi:10.1117/12.2006871.

- [43] Casperson SL, Sieling J, Moon J, Johnson L, Roemmich JN, Whigham L. A mobile phone food record app to digitally capture dietary intake for adolescents in a free-living environment: usability study. JMIR MHealth UHealth 2015;3:e30. doi:10.2196/mhealth.3324.

- [44] Rollo ME, Ash S, Lyons-Wall P, Russell AW. Evaluation of a Mobile Phone Image-Based Dietary Assessment Method in Adults with Type 2 Diabetes. Nutrients 2015;7:4897–910. doi:10.3390/nu7064897.

- [45] Gemming L, Rush E, Maddison R, Doherty A, Gant N, Utter J, et al. Wearable cameras can reduce dietary under-reporting: doubly labelled water validation of a camera-assisted 24 h recall. Br J Nutr 2015;113:284–91. doi:10.1017/S0007114514003602.

- [46] Hodges S, Williams L, Berry E, Izadi S, Srinivasan J, Butler A, et al. SenseCam: A Retrospective Memory Aid. Proc. 8th Int. Conf. Ubiquitous Comput. UbiComp 2006, Springer Verlag; 2006, p. 177–93.

- [47] Pettitt C, Liu J, Kwasnicki RM, Yang G-Z, Preston T, Frost G. A pilot study to determine whether using a lightweight, wearable micro-camera improves dietary assessment accuracy and offers information on macronutrients and eating rate. Br J Nutr 2016;115:160–7. doi:10.1017/S0007114515004262.

- [48] Ahmad Z, Khanna N, Kerr DA, Boushey CJ, Delp EJ. A mobile phone user interface for image-based dietary assessment. Mob Devices Multimed Enabling Technol Algorithms Appl 2014 2014;9030:903007. doi:10.1117/12.2041334.

- [49] Ahmad Z, Kerr DA, Bosch M, Boushey CJ, Delp EJ, Khanna N, et al. A Mobile Food Record For Integrated Dietary Assessment. MADiMa16 Proc 2nd Int Workshop Multimed Assist Diet Manag Oct 16 2016 Amst Neth Int Workshop Multimed Assist Diet Manag 2nd 2016 Amst 2016;2016:53–62. doi:10.1145/2986035.2986038.

- [50] Fang S, Liu C, Zhu F, Delp EJ, Boushey CJ. Single-View Food Portion Estimation Based on Geometric Models. 2015 IEEE Int. Symp. Multimed. ISM, Miami, FL, USA: IEEE; 2015, p. 385–90. doi:10.1109/ISM.2015.67.

- [51].Lee CD, Chae J, Schap TE, Kerr DA, Delp EJ, Ebert DS, et al. Comparison of known food weights with image-based portion-size automated estimation and adolescents’ self-reported portion size. J Diabetes Sci Technol 2012;6:428–34. 10.1177/193229681200600231. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [52].Boushey CJ, Spoden M, Delp EJ, Zhu F, Bosch M, Ahmad Z, et al. Reported Energy Intake Accuracy Compared to Doubly Labeled Water and Usability of the Mobile Food Record among Community Dwelling Adults. Nutrients 2017;9:312 10.3390/nu9030312. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [53].Aflague TF, Boushey CJ, Guerrero RTL, Ahmad Z, Kerr DA, Delp EJ. Feasibility and Use of the Mobile Food Record for Capturing Eating Occasions among Children Ages 3–10 Years in Guam. Nutrients 2015;7:4403–15. 10.3390/nu7064403. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [54].Boushey CJ, Harray AJ, Kerr DA, Schap TE, Paterson S, Aflague T, et al. How willing are adolescents to record their dietary intake? The mobile food record. JMIR MHealth UHealth 2015;3:e47 10.2196/mhealth.4087. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [55].Panizza CE, Boushey CJ, Delp EJ, Kerr DA, Lim E, Gandhi K, et al. Characterizing Early Adolescent Plate Waste Using the Mobile Food Record. Nutrients 2017;9:93 10.3390/nu9020093. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [56].Shoneye CL, Dhaliwal SS, Pollard CM, Boushey CJ, Delp EJ, Harray AJ, et al. Image-Based Dietary Assessment and Tailored Feedback Using Mobile Technology: Mediating Behavior Change in Young Adults. Nutrients 2019;11:435 10.3390/nu11020435. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [57].Fang S, Shao Z, Kerr DA, Boushey CJ, Zhu F. An End-to-End Image-Based Automatic Food Energy Estimation Technique Based on Learned Energy Distribution Images: Protocol and Methodology. Nutrients 2019;11:877 10.3390/nu11040877. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [58].Rhyner D, Loher H, Dehais J, Anthimopoulos M, Shevchik S, Botwey RH, et al. Carbohydrate Estimation by a Mobile Phone-Based System Versus Self-Estimations of Individuals With Type 1 Diabetes Mellitus: A Comparative Study. J Med Internet Res 2016;18:e101 10.2196/jmir.5567. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [59].Kawano Y, Yanai K. FoodCam: A real-time food recognition system on a smartphone. Multimed Tools Appl 2015;74:5263–87. 10.1007/s11042-014-2000-8. [DOI] [Google Scholar]

- [60].Zhang W, Yu Q, Siddiquie B, Divakaran A, Sawhney H. “Snap-n-Eat.” J Diabetes Sci Technol 2015;9:525–33. 10.1177/1932296815582222. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [61].Jia W, Chen H-C, Yue Y, Li Z, Fernstrom J, Bai Y, et al. Accuracy of food portion size estimation from digital pictures acquired by a chest-worn camera. Public Health Nutr 2014;17:1671–81. 10.1017/S1368980013003236. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [62].Cauvain SP, Young LS. Technology of Breadmaking. New York, NY: Springer Science & Business Media; 2013. [Google Scholar]

- [63].Holst A Number of smartphone users worldwide 2014–2020. Statista 2019. https://www.statista.com/statistics/330695/number-of-smartphone-users-worldwide/ (accessed December 26, 2019). [Google Scholar]

- [64].International Data Corporation. Always Connected- How Smartphones And Social Keep Us Engaged. Framingham, MA: International Data Corporation; 2013. [Google Scholar]

- [65].Howes E, Boushey CJ, Kerr DA, Tomayko EJ, Cluskey M. Image-Based Dietary Assessment Ability of Dietetics Students and Interns. Nutrients 2017;9:114 10.3390/nu9020114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [66].Gibson RS, Charrondiere UR, Bell W. Measurement Errors in Dietary Assessment Using Self-Reported 24-Hour Recalls in Low-Income Countries and Strategies for Their Prevention. Adv Nutr 2017;8:980–91. 10.3945/an.117.016980. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [67].Maurer J, Taren DL, Teixeira PJ, Thomson CA, Lohman TG, Going SB, et al. The psychosocial and behavioral characteristics related to energy misreporting. Nutr Rev 2006;64:53–66. 10.1301/nr.2006.feb.53-66. [DOI] [PubMed] [Google Scholar]

- [68].Subar AF, Crafts J, Zimmerman TP, Wilson M, Mittl B, Islam NG, et al. Assessment of the accuracy of portion size reports using computer-based food photographs aids in the development of an automated self-administered 24-hour recall. J Am Diet Assoc 2010;110:55–64. 10.1016/j.jada.2009.10.007. [DOI] [PMC free article] [PubMed] [Google Scholar]