SUMMARY

To advance the measurement of distributed neuronal population representations of targeted motor actions on single trials, we developed an optical method (COSMOS) for tracking neural activity in a largely uncharacterized spatiotemporal regime. COSMOS allowed simultaneous recording of neural dynamics at ~30 Hz from over a thousand near-cellular resolution neuronal sources spread across the entire dorsal neocortex of awake, behaving mice during a three-option lick-to-target task. We identified spatially distributed neuronal population representations spanning the dorsal cortex that precisely encoded ongoing motor actions on single trials. Neuronal correlations measured at video rate using unaveraged, whole-session data had localized spatial structure, whereas trial-averaged data exhibited widespread correlations. Separable modes of neural activity encoded history-guided motor plans, with similar population dynamics in individual areas throughout cortex. These initial experiments illustrate how COSMOS enables investigation of large-scale cortical dynamics and show that information about motor actions is widely shared between areas, potentially underlying distributed computations.

In Brief

Kauvar, Machado, et al. have developed a new method, COSMOS, to simultaneously record neural dynamics at ~30 Hz from over a thousand near-cellular resolution neuronal sources spread across the entire dorsal neocortex of awake, behaving mice. With COSMOS, they observe cortex-spanning population encoding of actions during a three-option lick-to-target task.

INTRODUCTION

Cortical computations may depend on the synchronous activity of neurons distributed across many areas. Anatomical evidence includes the observation that many individual pyramidal cells send axons to functionally distinct cortical areas (Economo et al., 2018; Oh et al., 2014); for example, nearly all layer 2/3 pyramidal cells in primary visual cortex project to at least one other cortical area—often hundreds of microns away (Han et al., 2018). Physiological evidence has shown that ongoing and past sensory information relevant for decision making is widely encoded across cortex (Akrami et al., 2018; Allen et al., 2017; Gilad et al., 2018; Harvey et al., 2012; Hattori et al., 2019; Hernández et al., 2010; Makino et al., 2017; Mante et al., 2013; Mohajerani et al., 2013; Pinto et al., 2019; Vickery et al., 2011). In addition, neural activity tuned to spontaneous or undirected movements is found in many cortical areas (Musall et al., 2019; Stringer et al., 2019). In the motor system, persistent activity may be mediated by inter-hemispheric feedback in mouse motor cortex (Li et al., 2016) in addition to other long-range loops between the motor cortex and the thalamus (Guo et al., 2017; Sauerbrei et al., 2020), and the cerebellum (Chabrol et al., 2019; Gao et al., 2018). Finally, studies in primates have shown that non-motor regions of frontal cortex contain neurons that encode information related to decisions that drive specific motor actions (Campo et al., 2015; Hernández et al., 2010; Lemus et al., 2007; Ponce-Alvarez et al., 2012; Siegel et al., 2015).

Thus, while specialized computations for motor (Georgopoulos, 2015; Mountcastle, 1997) versus sensory (Hubel and Wiesel, 1968) or cognitive (Shadlen and Newsome, 1996) processes may be performed in each cortical area, the results of these computations may be propagated to dozens of other areas via direct, often monosynaptic, pathways. Prior work, often limited by technological capabilities, has primarily focused on the tuning properties of individual neurons or population encoding in individual regions, potentially missing an alternative systems-level viewpoint of how distributed populations together encode behavior (Saxena and Cunningham, 2019; Yuste, 2015). Thus, it remains unclear how widespread population activity is involved in transforming sensory stimuli and contextual information into specific actions.

A technical barrier to studying distributed encoding has been the lack of a method for simultaneously measuring fast, cortex-wide neural dynamics at or near cellular resolution. Despite recent progress in neural recording techniques, persistent limitations have underscored the need for new approaches. Large field-of-view, two-photon microscopes have enabled simultaneous recording from a few cortical areas at single-cell resolution, revealing structured large-scale correlations in neural activity but at low rates (Chen et al., 2015; Lecoq et al., 2014; Sofroniew et al., 2016; Stirman et al., 2016; Tsai et al., 2015). Widefield imaging has also revealed cortex-wide task involvement and activity patterns, albeit with low spatial resolution (Allen et al., 2017; Ferezou et al., 2007; Makino et al., 2017; Mayrhofer et al., 2019; Musall et al., 2019; Pinto et al., 2019; Wekselblatt et al., 2016). Furthermore, multi-electrode extracellular recording has revealed inter-regional correlations in spiking, information flow between a few cortical areas, and phase alignment of local field potentials across a macaque cortical hemisphere (Campo et al., 2015; Dotson et al., 2017; Feingold et al., 2012; Hernández et al., 2008; Ponce-Alvarez et al., 2012). However, despite the merits of these approaches, each is limited by one or more of several key parameters, including field of view, acquisition speed, spatial resolution, and cell-type targeting capability. We thus developed a complementary technique that leveraged multifocal widefield optics to enable high-speed, simultaneous, genetically specified recording of neural activity across the entirety of mouse dorsal cortex at near-cellular resolution. To illustrate the utility of this new methodology, we devised a task requiring mice to initiate bouts of targeted licking guided by recent trial history. Imaging fast cortex-wide neural activity during this task revealed a scale-crossing interplay between localized activity and distributed population encoding on single trials.

RESULTS

A Multifocal Macroscope for Imaging the Curved Cortical Surface with High Signal-to-Noise Ratio

We sought to record the activity of neurons dispersed across the entirety of dorsal cortex at fast sampling rates. Since many mouse behaviors, such as licking, can occur at 10 Hz or faster (Boughter et al., 2007), and widely used spike-inference algorithms can only estimate firing rate information up to the data acquisition rate (Pnevmatikakis et al., 2016; Theis et al., 2016), we decided to use one-photon widefield optics with its potential for highly parallel sampling at rates >20 Hz over a large field of view, as well as genetic specificity; this combination is difficult to achieve with other approaches such as two-photon microscopy or electrophysiology (Harris et al., 2016; Weisenburger and Vaziri, 2018). Other imaging techniques either lack the desired sampling rate (Sofroniew et al., 2016; Stirman et al., 2016), spatial resolution (Allen et al., 2017; Kim et al., 2016; Makino et al., 2017; Wekselblatt et al., 2016), or field of view (Bouchard et al., 2015; Lecoq et al., 2014; Nöbauer et al., 2017; Rumyantsev et al., 2020). The approach described here, cortical observation by synchronous multifocal optical sampling (COSMOS), records in-focus projections of 1-cm × 1-cm × 1.3-mm volumes at video rate (29.4 Hz for the presented data), with high light-collection efficiency and resolution across the entire field of view.

In conjunction with this macroscope, we advanced a surgical approach enabling long-term, high-quality optical access to a large fraction of dorsal cortex (based on Kim et al., 2016; Allen et al., 2017). We used a trapezoidal window curved along a 10-mm radius (Figures 1A and S1A), and we performed the craniotomy using a robotic stereotaxic apparatus (Pak et al., 2015; Figures 1B and S1B-S1J).

Figure 1. COSMOS Enables Recovery of High SNR Neural Sources across the Curved Surface of Dorsal Cortex.

(A) Schematic of cortical window superimposed upon the Allen Brain Atlas.

(B) Example preparation.

(C) Transgenic strategy (bottom) to drive sparse GCaMP expression (green; top) in superficial cortical layers.

(D) COSMOS macroscope (left) and lenslet array (right).

(E) Raw macroscope data contain two juxtaposed images focused at different depths (offset by 620 μm).

(F) Point spread function captured using a 10-μm fluorescent source.

(G) Light transmission versus a conventional macroscope at different aperture settings.

(H) Merged image quality versus a conventional macroscope with the same light throughput.

(I) Data processing pipeline.

(J) Procedure for brain atlas alignment using intrinsic imaging.

(K) Neural sources extracted versus a conventional macroscope (one mouse; n = 3 separate recordings per configuration; mean ± SEM; *Corrected p < 0.05, Kruskal-Wallis H test and post hoc t test).

(L) Peak-signal-to-noise ratio (PSNR) for the best 100 sources recorded using each configuration. Circles represent outliers.

(M) Example spatial footprints of extracted sources with f/2 macroscope.

(N) Example spatial footprints with COSMOS. Numbering corresponds to traces in (O).

(O) Example Z-scored traces from COSMOS.

We selectively drove sparse Ca2+ sensor expression in superficial cortico-cortical projection neurons using a Cre-dependent, tetracycline-regulated transactivator (tTA2)-amplified, GCaMP6f reporter mouse line (Ai148) crossed to a Cux2-CreER driver line (Daigle et al., 2018; Franco et al., 2012; Figure 1C). CreER allowed control over the fraction of neurons expressing GCaMP and obviated potential abnormalities from expressing GCaMP during development (Steinmetz et al., 2017). Even 1 year after window implantation, we found little evidence of filled nuclei indicative of impaired cell health (Figures 1C and S1K-S1L). By sparsely labeling only a subset of superficial cortical cells (from layers 2/3 and 4), we biased the widefield signal origin toward somatic sources from cortico-cortical neurons, instead of layer 1 neuropil (Allen et al., 2017). Post-experiment histology (Figure S1L) validated that the GCaMP6f spatial expression pattern was consistent with previous descriptions of Cux2-CreER mice (Franco et al., 2012).

The optical design for the COSMOS macroscope used a dual-focus lenslet array (Figure 1D), balancing high light throughput, long depth-of-field, ease of implementation, and resolution, with modest data processing requirements and reasonable system cost. Theoretical analysis demonstrated that, in terms of light collection, defocus, and extracted neuronal source signal-to-noise ratio (SNR) across the extent of the curved window, the COSMOS macroscope design outperformed other potential solutions (Abrahamsson et al., 2013; Brady and Marks, 2011; Cossairt et al., 2013; Hasinoff et al., 2009; Levin et al., 2009; Schechner et al., 2007; Figure S2). Empirical comparisons demonstrated that a COSMOS macroscope, with focal planes offset by ~600 μm (Figures 1E and 1F), outperformed a comparable conventional macroscope in terms of depth of field while maintaining equivalent light throughput (Figures 1G and 1H).

We captured Ca2+-dependent fluorescence videos with the COSMOS macroscope and extracted putative neuronal sources, taking advantage of an improved version of the constrained non-negative matrix factorization (CNMF) algorithm (Pnevmatikakis et al., 2016), which was designed specifically to handle high-background, one-photon data (CNMF-E; Zhou et al., 2018; Figure 1I; raw data in Videos S1 and S2; for atlas registration methods, see Figure 1J, STAR Methods, Figure S3, and Video S3). In contrast to the output of a conventional macroscope, high-quality sources detected by the COSMOS macroscope spanned the entire curved window, thus providing simultaneous coverage of visual, somatosensory, motor, and association areas. Furthermore, the COSMOS macroscope recovered significantly more sources than a conventional macroscope at any single aperture setting. Nearly twice as many neuronal sources were detected with the COSMOS macroscope, compared to a macroscope with equivalent light collection (aperture open to f/2 setting) and with comparable SNR (Figures 1K-1O).

Characterization of Extracted Neuronal Sources Using a Visual Stimulus Assay

We next assessed whether the sources extracted from COSMOS data originated from single neurons or mixtures of multiple cells. We leveraged the finding that, in rodents, neurons in visual cortex tuned to differently oriented visual stimuli are spatially intermixed in a salt-and-pepper manner (Chen et al., 2013; Niell and Stryker, 2008; Ohki et al., 2005). In our data, merging of adjacent neurons into a single extracted source would, thus, diminish orientation tuning relative to sub-cellular resolution, two-photon measurements.

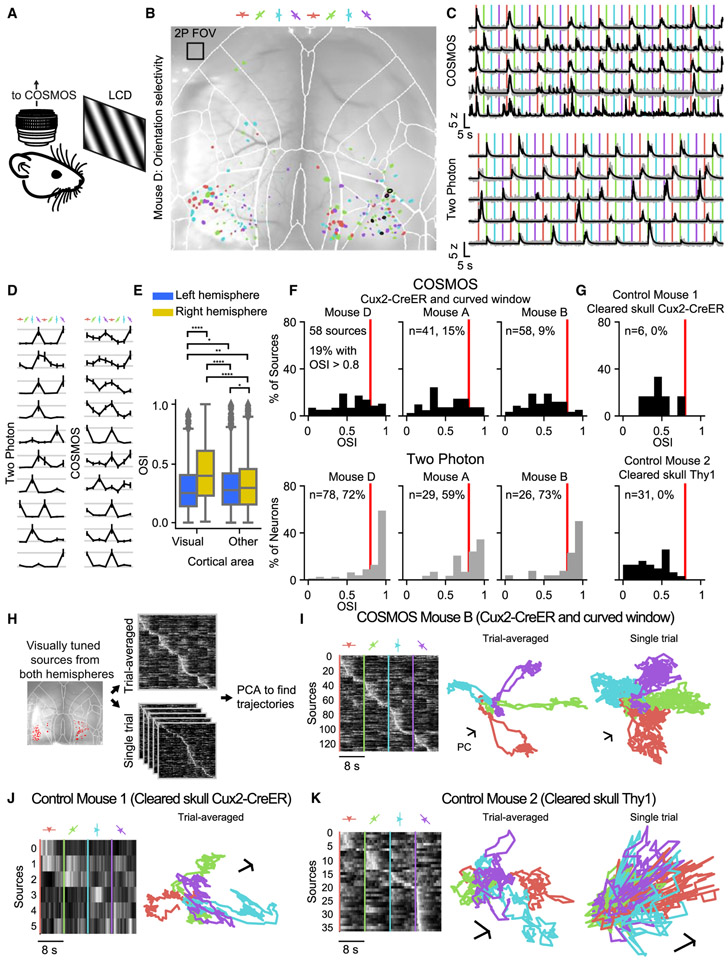

Using COSMOS, we measured orientation tuning in response to a drifting grating stimulus centered on the left eye (Figure 2A; the monitor provided weaker visual input to the right eye). Nearly all orientation-tuned sources were confined to the visual cortex (Figure 2B; visually responsive sources highlighted; one-way ANOVA, p < 0.01; on the superimposed atlas, the border around the visual cortex is indicated with thicker white lines). We then repeated this procedure with each mouse, using a two-photon microscope with a high-magnification objective (Nikon, 16×/0.8 NA) positioned over the right visual cortex (note much smaller size of two-photon imaging field indicated by box in Figure 2B). Both COSMOS and two-photon datasets contained sources exhibiting highly selective orientation tuning consistent with reported single-neuron responses measured with GCaMP6f in primary visual cortex (V1) (Chen et al., 2013) (Figures 2C and 2D). As expected, the average orientation selectivity index (OSI) of COSMOS sources in the right visual cortex was higher than in any other cortical region (Figure 2E; Mann-Whitney U test analyzing all visually responsive sources from 3 different mice; corrected p < 0.0001 for all comparisons versus right visual areas). Furthermore, across 3 mice, 14% of all visually responsive sources had OSIs >0.8 (Figure 2F, top row). In two-photon data from the same mice, 68% of visually responsive sources had OSIs >0.8 (Figure 2F, bottom row).
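As a minimal sketch of how an OSI can be computed from a tuning curve, the snippet below uses one common definition, 1 minus the circular variance in orientation space. This definition, the eight grating directions, and the function name are assumptions made for illustration; the exact formula used for the reported values is given in the STAR Methods and may differ.

```python
import numpy as np

def orientation_selectivity_index(responses, angles_deg):
    """Global OSI = |sum_k R_k * exp(2i * theta_k)| / sum_k R_k.

    Assumed definition (1 - circular variance); not necessarily the paper's.
    """
    # Negative (below-baseline) responses are clipped to zero in this sketch.
    r = np.clip(np.asarray(responses, dtype=float), 0.0, None)
    theta = np.deg2rad(np.asarray(angles_deg, dtype=float))
    total = r.sum()
    if total == 0:
        return 0.0
    # Doubling the angle maps opposite drift directions onto one orientation.
    return float(np.abs(np.sum(r * np.exp(2j * theta))) / total)

angles = np.arange(0, 360, 45)                        # hypothetical 8 drift directions
sharp = np.array([1.0, 0, 0, 0, 1.0, 0, 0, 0])        # responds to a single orientation
flat = np.ones(8)                                     # responds equally to all gratings

osi_sharp = orientation_selectivity_index(sharp, angles)
osi_flat = orientation_selectivity_index(flat, angles)
```

Under this definition, a source responding to a single orientation scores close to 1 and an untuned source scores close to 0, so a threshold such as OSI > 0.8 selects sharply tuned sources.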

Figure 2. Characterization of COSMOS Sources Using Visual Stimuli.

(A) Sinusoidal grating stimuli were presented to mice during both COSMOS and two-photon imaging, using an identical monitor.

(B) Highlighted COSMOS sources that were stimulus responsive (in a Cux2-CreER; Ai148 mouse; one-way ANOVA, p < 0.01). Box indicates 550 μm × 550 μm field-of-view size for the two-photon microscope used to collect comparative data.

(C and D) Single-trial (C) and peak-normalized trial-averaged (D) responses from selected visually responsive sources (from the mouse in B) from the right visual cortex under the COSMOS macroscope (top in C, right in D; black contours denote selected sources in B) and sources imaged under the two-photon microscope (bottom in C, left in D). In (D), vertical lines indicate grating onset times; error bars represent SEM.

(E) Orientation selectivity index (OSI) distributions for all extracted sources within visual areas compared to sources in all other areas (pooled over three mice; corrected p values from Mann-Whitney U test are indicated).

(F) OSI distributions plotted for all visually responsive sources in right visual areas, across three mice, under COSMOS (top) and two-photon microscopy (bottom). Red lines denote OSI = 0.8. Fraction of sources with OSI > 0.8 indicated as percentages.

(G) OSI distributions for two additional mice (with cleared skulls but no windows).

(H) Generation of neural trajectories using PCA.

(I) Trial-averaged, visually responsive sources pooled across both visual cortices (from a single mouse), imaged under the COSMOS microscope (left). PCA trajectories for trial-averaged (middle) and single-trial data (right). Scale bars are arbitrary units but indicate an equivalent length in each dimension.

(J and K) Trajectories for control mice 1 (J) and 2 (K) lacking cranial windows.

*Corrected p < 0.05; **corrected p < 0.01; ***corrected p < 0.001; ****corrected p < 0.0001.

To further assess the COSMOS sources, we simulated mixtures of single-neuron signals obtained with two-photon data to reproduce the COSMOS OSI distributions. Across mice, the COSMOS OSI distributions could be explained by the presence of sources representing mixtures of signals from 1–15 neurons (Figure S4A). The presence of sources with OSIs >0.8 is not trivial; if we had observed zero high OSI sources, the COSMOS OSI distributions would be, instead, more consistent with mixtures of 11–19 neurons—well outside the single-neuron regime (Figure S4B; STAR Methods; importantly, though, no particular source is required to be a single neuron, and our analyses are structured accordingly).
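The logic of the mixture analysis can be sketched with synthetic tuning curves: if a source averages the signals of k neurons with independent preferred orientations, sharply tuned sources become rare as k grows. Everything below (the von Mises-like tuning shape, the `kappa` value, the OSI definition, and the source counts) is a hypothetical stand-in; the actual analysis mixed measured two-photon signals as described in the STAR Methods.

```python
import numpy as np

rng = np.random.default_rng(0)
angles = np.deg2rad(np.arange(0, 360, 45))   # hypothetical 8 drift directions

def osi(resp):
    # Assumed OSI definition: 1 - circular variance in orientation space.
    return np.abs(resp @ np.exp(2j * angles)) / resp.sum(axis=-1)

def tuning_curves(n, kappa=8.0):
    # Synthetic single-neuron tuning with random preferred orientation.
    pref = rng.uniform(0, np.pi, size=n)
    return np.exp(kappa * (np.cos(2 * (angles[None, :] - pref[:, None])) - 1))

def fraction_high_osi(k, n_sources=2000, threshold=0.8):
    # Each simulated source is the average of k independent neurons.
    cells = tuning_curves(n_sources * k).reshape(n_sources, k, -1)
    mixed = cells.mean(axis=1)
    return float(np.mean(osi(mixed) > threshold))

fractions = {k: fraction_high_osi(k) for k in (1, 2, 4, 8, 16)}
```

In this toy model the fraction of sources with OSI > 0.8 falls steeply with k, which is why observing a nonzero fraction of high-OSI sources constrains how many neurons a typical source can contain.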

To test the importance of the overall COSMOS preparation in achieving this key result, we performed the same procedure on conventional cleared-skull widefield preparations with two different genetically specified expression profiles: Thy1-GCaMP6s and Cux2-CreER;Ai148 (Allen et al., 2017; Makino et al., 2017; Wekselblatt et al., 2016; Figures S4C-S4H). Following identical imaging and data processing as with the earlier mice, even in the best of three Thy1-GCaMP6s mice, we found zero neurons with an OSI >0.8 (Figure 2G). Additionally, with both genotypes, fewer total sources were extracted, the spatial footprint of each source was larger, and there were fewer visually responsive sources (Figures S4C-S4F).

To further explore the improved capability of COSMOS relative to existing widefield techniques, we computed a population encoding of the visual stimuli. By applying principal-component analysis (PCA) to trial-averaged traces, we computed a low-dimensional basis for representing high-dimensional trial-averaged or single-trial neural population activity (Figure 2H). Trajectories corresponding to each visual stimulus orientation were well separated with COSMOS (Figure 2I) and trial-averaged two-photon data (compare Figures S4G and S4H) but not with conventional widefield preparations (Figures 2J and 2K). Only with COSMOS could robust trajectories of neural population dynamics be measured that encompassed synchronously recorded activity from across the full extent of the dorsal cortex.
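A minimal sketch of this trajectory analysis, assuming activity is stored as a sources × time matrix: the PCA basis is fit on concatenated trial-averaged traces and then reused to project single trials. The array shapes, synthetic data, and function names are illustrative assumptions, not the authors' code.

```python
import numpy as np

def fit_pca_basis(trial_avg, n_components=3):
    """trial_avg: (n_sources, n_conditions * n_time). Returns (mean, basis)."""
    mean = trial_avg.mean(axis=1, keepdims=True)
    # Left singular vectors of the centered data are the principal axes
    # in source space.
    u, _, _ = np.linalg.svd(trial_avg - mean, full_matrices=False)
    return mean, u[:, :n_components]

def project(activity, mean, basis):
    """Project (n_sources, n_time) activity onto the low-dimensional basis."""
    return basis.T @ (activity - mean)

rng = np.random.default_rng(0)
n_sources, n_stim, n_time, n_trials = 60, 4, 30, 10
signal = rng.normal(size=(n_sources, n_stim, n_time))          # stimulus-locked part
trials = signal[:, :, None, :] + rng.normal(
    size=(n_sources, n_stim, n_trials, n_time))                # plus trial noise

# Basis comes from trial-averaged data, concatenated across stimuli.
trial_avg = trials.mean(axis=2).reshape(n_sources, n_stim * n_time)
mean, basis = fit_pca_basis(trial_avg)

avg_traj = project(trial_avg, mean, basis).reshape(3, n_stim, n_time)
single_traj = project(trials[:, 0, 0, :], mean, basis)         # one single trial
```

Fitting the basis on averages and projecting single trials into it keeps the axes comparable across the trial-averaged and single-trial panels.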

Cortex-wide Recording during a Head-Fixed Lick-to-Target Task

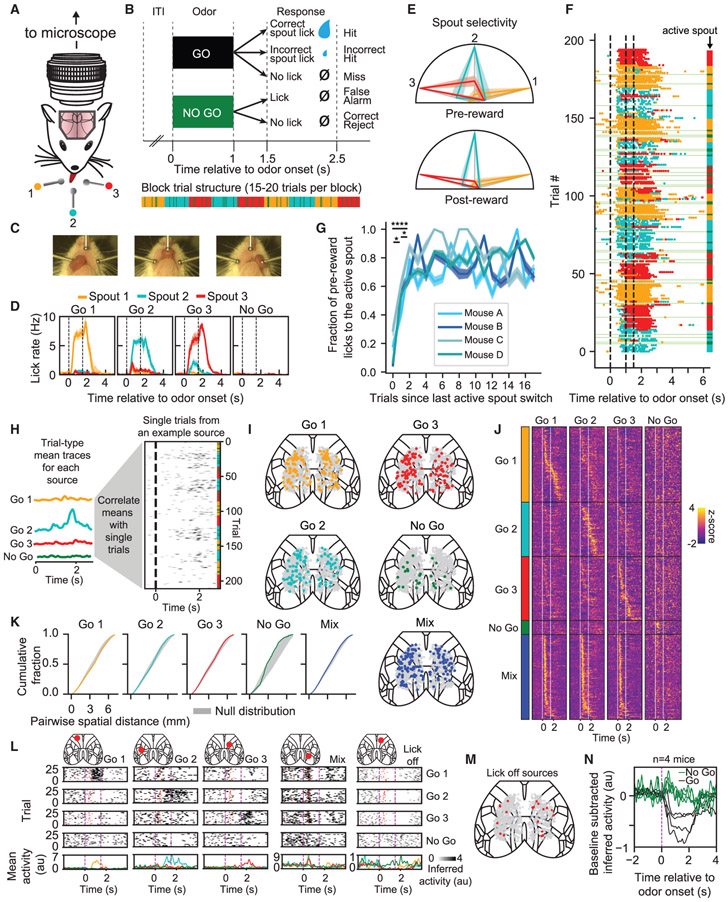

Using COSMOS, we set out to perform a proof-of-principle investigation of cortex-wide representations of targeted actions in the context of a head-fixed lick-to-target task. Mice were trained to lick one of three waterspouts in response to a single “go” odor and to take no action in response to a second “no-go” odor (Figure 3A). In this more complex variant of a previously studied task (Allen et al., 2017; Komiyama et al., 2010), sessions consisted of blocks with 15–20 trials, where a water droplet reward was available from one active spout per block (Figure 3B). The “go” odor remained constant, even as the rewarded active spout changed. Thus, no cue ever indicated which spout was active; the next reward was simply more likely to come from the spout that had delivered the previous reward 5–10 s prior to the current trial. Successful actions were, thus, history guided: they depended upon integrating experience from recent trials, as opposed to just responding to an immediate cue. Mice were rewarded if the first lick following a 0.5-s delay after odor offset was toward the active spout. Licking an inactive spout at this time yielded a penalty (a reduced-size water droplet from the active spout). Although other licks did not affect the outcome, mice tended to lick the active spout shortly after odor onset. To facilitate exploration during the first three trials of each block, a full-sized reward was dispensed from the new active spout if any spout was licked following the “go” odor.

Figure 3. Behavioral and Neural Correlates of Specific Targeted Motor Actions.

(A) Head-fixed behavioral task.

(B) Trial structure.

(C) Video frames illustrating mouse licking each spout.

(D) Lick rate during each trial type averaged across n = 4 mice. Error bars represent SEM across animals.

(E) Lick selectivity averaged across n = 4 mice. Error bars represent SEM across animals. Licks taken after odor presentation but before (left) or after (right) reward delivery. Colored lines represent normalized lick count toward each spout on trials when a given spout is active.

(F) Raster showing all licks during a single experimental session. “No-go” trials are indicated in green.

(G) Lick selectivity after active spout switch (error bars represent SEM; corrected p values from paired t test).

(H) Analysis for establishing tuning of sources to different trial types.

(I) Spatial distribution of task-related classes.

(J) Trial-averaged traces, ordered by task-related class and cross-validated peak time.

(K) Cumulative fraction of source separations at each distance. For this mouse, no task classes were significantly different than the null distribution (p > 0.05).

(L) Example single-trial traces that exhibit different responses to each trial type.

(M) All “lick off” sources from one mouse.

(N) Averaged, baseline-subtracted, “lick off” sources for each mouse.

*Corrected p < 0.05; ****corrected p < 0.0001.

Head-fixed mice reliably learned to lick each spout (Figure 3C; Video S4), with a bias to the active spout (Figures 3D and 3E). Furthermore, consistent with a strategy that integrates information across multiple previous trials (spanning tens of seconds), specificity of pre-reward anticipatory licking to the new active spout progressed over the first three trials of a block (Figures 3F and 3G; lick selectivity increased from trials 1 to 2, and from trials 2 to 3, of each block; corrected p < 0.05, paired t test, data pooled across sessions; n = 4 mice). Mice rarely licked on “no-go” trials (Figures 3D and 3F).

In four well-trained mice, we imaged the dorsal cortex during this task (all mice yielded >1,000 neuronal sources per session; mean = 1,195). After observing sources with reliable trial-type-related dynamics, we assigned sources to one of five task-related classes: responsive selectively for one trial type (go 1, go 2, go 3, or no go) or responsive to a mixture of trial types (mix) (Figures 3H-3J; consistent results were observed across these mice and also in a different genotype, Rasgrf2-dCre;Ai93D;CaMK2a-tTA, also targeting layer 2/3 neurons; Figures S5A and S5B). Sources from each class appeared randomly distributed across the dorsal cortex (Figures 3K and S5C; there was no consistently significant spatial pattern across mice for corrected p < 0.05, permutation test in STAR Methods; this analysis is sensitive to clusters >1 mm in diameter, Figure S5D; Hofer et al., 2005). For each task class, sources were present in all regions (Figure S5E). We also found sources across the cortex with clear encoding of each trial type (Figure 3L) and a subclass of sources with sustained activity during the pre-odor period followed by reduced activity at odor onset of the “go” trials (Figures 3M, 3N, and S5G; fraction of sources across n = 4 mice: 1.8% ± 0.4%, mean ± SD).

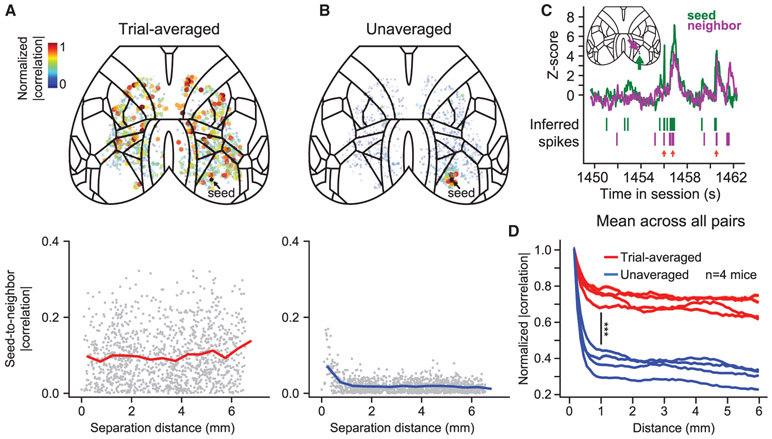

Correlations of Unaveraged Activity Exhibit Localized Spatial Structure

We next investigated the structure of correlated neural activity across cortex—taking advantage of the simultaneity of our large-scale data—via correlation maps, where we computed the correlation magnitude of the 29.4-Hz activity of a seed source with that of every other source (at zero lag with Gaussian-smoothed, SD = 50 ms, deconvolved spiking activity; STAR Methods). We computed this correlation map using either unaveraged traces from the whole session (i.e., the concatenated time series from all single trials after removing the variable-length intertrial interval) or concatenated trial-averaged traces (similar to Figure 3J). With trial-averaged data, sources with high correlation to the seed source were distributed widely, in support of our initial observations (Figure 4A). In contrast, with unaveraged data, we found many instances of localized correlation structure for seeds located throughout the cortex (Figures 4B and S6). This correlation did not result from a localized imaging artifact, as raw extracted fluorescence traces demonstrated that, although neighboring sources exhibited occasionally correlated firing, they had distinct activity patterns (Figure 4C). Additionally, there existed bilaterally symmetric correlations (Figure S6B). When summarizing the correlation versus distance for all pairs of sources, we observed a consistent pattern across mice (Figure 4D). For example, at a separation distance of 1 mm, unaveraged correlations were consistently lower than trial-averaged correlations (p = 0.0001, paired t test; n = 4 mice). Thus, although sources throughout the cortex exhibited similar activity when averaged according to trial type, correlations in unaveraged cortical activity showed increased dependence on spatial proximity.
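The seeded correlation computation, and the reason trial averaging inflates correlations between sources that share a task-locked response, can be sketched as follows. The smoothing kernel uses the SD = 50 ms and 29.4-Hz values from the text; the synthetic traces, the kernel truncation at 4 SD, and the function names are assumptions for illustration, and the toy data do not model any spatial structure.

```python
import numpy as np

def gaussian_smooth(traces, sd_s=0.05, rate_hz=29.4):
    """Smooth each row with a Gaussian kernel (SD given in seconds)."""
    sd = sd_s * rate_hz                      # SD in frames
    half = int(np.ceil(4 * sd))              # truncate kernel at 4 SD (assumption)
    t = np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (t / sd) ** 2)
    kernel /= kernel.sum()
    return np.array([np.convolve(row, kernel, mode="same") for row in traces])

def seeded_correlation(traces, seed):
    """Zero-lag Pearson correlation of every source with the seed source."""
    z = traces - traces.mean(axis=1, keepdims=True)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    return z @ z[seed]

rng = np.random.default_rng(0)
n_trials, n_time = 40, 60
task = np.exp(-0.5 * ((np.arange(n_time) - 30) / 5.0) ** 2)   # task-locked response

# Three synthetic sources: 0 and 1 share the task response, 2 is untuned.
trials = rng.normal(size=(3, n_trials, n_time))
trials[:2] += task

unaveraged = gaussian_smooth(trials.reshape(3, -1))     # concatenated single trials
trial_averaged = gaussian_smooth(trials.mean(axis=1))   # averaged across trials

r_unavg = seeded_correlation(unaveraged, seed=0)
r_avg = seeded_correlation(trial_averaged, seed=0)
```

Averaging suppresses the independent trial-to-trial fluctuations, so `r_avg[1]` exceeds `r_unavg[1]` even though both sources carry the same task signal in each case.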

Figure 4. Unaveraged Data Exhibit More Localized Correlation Structure Than Trial-Averaged Data.

(A) Seeded trial-averaged activity correlations (for a single seed): top, spatial distribution; bottom, correlation versus distance to the seed (black dot).

(B) Seeded unaveraged activity correlations (format matches that in A).

(C) Example illustrating unaveraged activity correlation (locations indicated on atlas inset). Red arrows indicate time points when the seed source and its neighbor are active simultaneously.

(D) Summary across all mice of correlation analyses shown in (A) and (B). Lines for each mouse represent the mean correlation across all pairs of sources (binned and normalized). Statistic shown at 1-mm distance (***corrected p = 0.0001, paired t test; n = 4 mice).

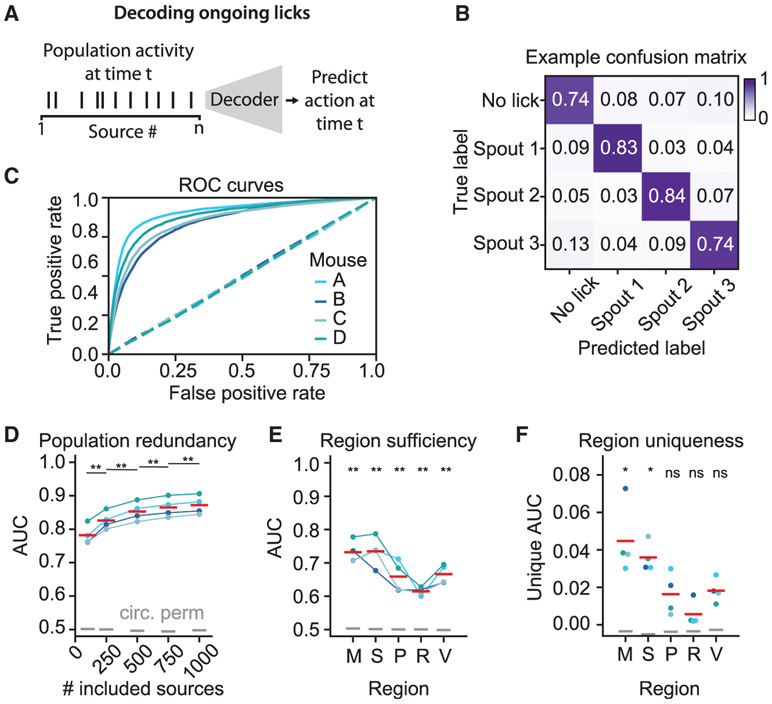

Single-Trial Representations of Distinct Motor Actions Are Distributed across Cortex

We used the synchronous-recording capability of COSMOS to assess how populations of sources jointly encoded information about ongoing behavior on single trials. We first characterized the ability of each individual source to discriminate any of four different ongoing actions: licking to spout 1, spout 2, or spout 3 or not licking at all. We found that most of the sources detected in each mouse exhibited significant discrimination capacity (78% ± 4% of all neuronal sources for n = 4 mice), where discrimination capacity was defined for each source as corrected p < 0.05 (Kruskal-Wallis H test for whether the source time series could discriminate any of the four actions; Figure S7A). These discriminating sources were distributed across all dorsal cortical regions (Figure S7B).
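A per-source screen of this kind can be sketched with `scipy.stats.kruskal`, grouping each source's samples by the ongoing action. The Bonferroni correction, the synthetic data, and the function name below are assumptions; the text states only that p values were corrected, and the correction actually used is described in the STAR Methods.

```python
import numpy as np
from scipy.stats import kruskal

def discriminating_sources(activity, labels, alpha=0.05):
    """Kruskal-Wallis H test per source across action labels.

    activity: (n_sources, n_samples); labels: (n_samples,) action per sample.
    Returns a boolean mask and Bonferroni-corrected p values (assumed correction).
    """
    groups = np.unique(labels)
    pvals = np.array([
        kruskal(*[row[labels == g] for g in groups]).pvalue
        for row in activity
    ])
    corrected = np.minimum(pvals * len(pvals), 1.0)
    return corrected < alpha, corrected

rng = np.random.default_rng(0)
labels = np.repeat(np.arange(4), 100)       # 4 actions: three spouts plus no lick
activity = rng.normal(size=(20, labels.size))
activity[:5] += labels                      # first five sources are action tuned

mask, corrected = discriminating_sources(activity, labels)
fraction_discriminating = mask.mean()
```

The fraction of `True` entries in `mask` corresponds to the kind of summary reported above as the percentage of discriminating sources.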

Next, we asked how cortical neurons jointly encoded information about ongoing actions. Across all four mice, a linear decoder could predict lick direction at the frame rate of our deconvolved Ca2+ data (29.4 Hz) with high accuracy on single-trial data (Figures 5A-5C; receiver operating characteristic [ROC] curves shown; STAR Methods). Indeed, as demonstrated in Figure S7C, we could readily decode individual lick bouts to different spouts, even when interleaved within a single trial. Thus, ongoing motor actions of the mouse are represented with high temporal fidelity by neuronal sources in the dorsal cortex.
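The decoding analysis can be illustrated with a simple stand-in: a ridge-regularized linear readout onto one-hot action labels, scored with a rank-based one-versus-rest AUC and compared against a label-shuffled control. This is not the decoder described in the STAR Methods; the model, the ridge penalty, the synthetic data, and the contiguous train/test split are all assumptions. With real frame-rate data, neighboring frames are correlated, so folds would need to be split by trial.

```python
import numpy as np

def fit_linear_decoder(X, y, n_classes, ridge=1.0):
    """Ridge regression from activity (n_frames, n_sources) to one-hot labels."""
    Xb = np.hstack([X, np.ones((len(X), 1))])          # add bias column
    Y = np.eye(n_classes)[y]
    return np.linalg.solve(Xb.T @ Xb + ridge * np.eye(Xb.shape[1]), Xb.T @ Y)

def decode_scores(X, W):
    return np.hstack([X, np.ones((len(X), 1))]) @ W

def auc(scores, positive):
    """Rank-based (Mann-Whitney) AUC; assumes continuous scores without ties."""
    order = np.argsort(scores)
    ranks = np.empty(len(scores))
    ranks[order] = np.arange(1, len(scores) + 1)
    n_pos = positive.sum()
    n_neg = len(scores) - n_pos
    return float((ranks[positive].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg))

rng = np.random.default_rng(0)
n_frames, n_sources, n_classes = 800, 30, 4
y = rng.integers(0, n_classes, size=n_frames)          # action on each frame
class_means = rng.normal(size=(n_classes, n_sources))
X = class_means[y] + rng.normal(size=(n_frames, n_sources))
train, test = slice(0, 600), slice(600, None)

W = fit_linear_decoder(X[train], y[train], n_classes)
scores = decode_scores(X[test], W)
aucs = [auc(scores[:, c], y[test] == c) for c in range(n_classes)]

# Control: train on shuffled labels, evaluate on the true test labels.
W_shuf = fit_linear_decoder(X[train], rng.permutation(y[train]), n_classes)
shuf_scores = decode_scores(X[test], W_shuf)
shuffled_aucs = [auc(shuf_scores[:, c], y[test] == c) for c in range(n_classes)]
```

The gap between `aucs` and `shuffled_aucs` plays the role of the solid versus dashed ROC curves in Figure 5C.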

Figure 5. Representations of Distinct Motor Actions Are Distributed across Dorsal Cortex.

(A) Schematic for decoding ongoing licks.

(B) Row-normalized lick confusion matrix for one mouse.

(C) Receiver operating characteristic (ROC) curve for each mouse, averaged across folds. Dashed lines indicate ROC curves for shuffled data.

(D) Improvement in the area under the ROC curve (AUC) as more neural sources are included. Red lines indicate means across mice. Gray lines indicate circularly permuted control. Corrected p values from paired t test are shown for each number of sources versus the next closest evaluated number of sources.

(E) Decoding using only sources from within single cortical regions (using the 75 sources per area with best discrimination ability; M, motor; S, somatosensory; P, parietal; R, retrosplenial; V, visual). Corrected p values for two-sided t test are shown for each region versus AUC = 0.5.

(F) Unique contribution of each region to decoding accuracy, measured as 1 − AUC(without region)/AUC(with region). Corrected p values from two-sided t test are shown for each region versus a unique contribution of 0.

ns denotes corrected p > 0.05; *corrected p < 0.05; **corrected p < 0.01.

Finally, we compared decoding performance when using different numbers of sources. To provide a fair comparison, only the most discriminative sources were used for decoding (according to the ordering in Figure S7A), and all decoding models had the same number of parameters. We found a monotonic increase in decoding performance as more sources were included (Figure 5D, corrected p < 0.01, paired t test comparison versus area under the ROC curve [AUC] with next closest number of sources; n = 4 mice). To further examine this phenomenon, we decoded lick events using only the 75 most discriminative sources from each region (merged across hemispheres). Each region could decode lick direction far above chance (Figure 5E, corrected p < 0.01 for all regions, t test versus AUC = 0.5; n = 4 mice). Finally, by comparing decoding using all but one region with decoding using all regions (again, using only the top 75 sources per region), we demonstrated that at least some cortical regions—somatosensory and motor, in this case—contained significant unique information that was not present in the top sources sampled from other regions (Figure 5F; corrected p < 0.05, t test versus unique AUC = 0; n = 4 mice).
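The leave-one-region-out comparison uses the measure given in the Figure 5F legend, 1 − AUC(without region)/AUC(with region). A minimal sketch follows; `score_fn` is a hypothetical callable that returns a decoding AUC for a given set of source indices, and the toy scoring rule and region assignments are invented purely to show the bookkeeping.

```python
def unique_contributions(region_sources, score_fn):
    """Unique contribution per region: 1 - AUC(without region) / AUC(with region).

    region_sources: dict mapping region name -> list of source indices.
    score_fn: hypothetical callable returning a decoding AUC for given indices.
    """
    all_idx = sorted(i for idx in region_sources.values() for i in idx)
    auc_with = score_fn(all_idx)
    contributions = {}
    for region in region_sources:
        rest = sorted(
            i for r, idx in region_sources.items() if r != region for i in idx
        )
        contributions[region] = 1.0 - score_fn(rest) / auc_with
    return contributions

# Toy scoring rule (illustration only): sources 0 and 1 carry all information.
informative = {0, 1}

def toy_score(indices):
    return 0.5 + 0.2 * len(informative & set(indices))

regions = {"M": [0, 1], "V": [2, 3]}        # hypothetical region assignment
contrib = unique_contributions(regions, toy_score)
```

A value of 0 means that the remaining regions decode just as well without the held-out region, whereas a positive value indicates information not available elsewhere among the sampled sources.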

History-Guided Motor Plans Are Encoded by Neuronal Populations across Cortex

In this history-guided task, the mouse must maintain information during the pre-odor intertrial interval about where it plans to lick at odor onset. To detect and localize neural representations of this information, we trained decoders using “pre-odor” denoised neural Ca2+ data taken from the final 2.2 s of the intertrial interval, which preceded any stimulus or licking. We could successfully predict the spout that was most licked between odor and reward onsets (the “preferred spout”) using a linear technique (partial least-squares regression [PLS]; Figure 6A). Trials containing pre-odor licks were not used for prediction (0.1%–10.3% of all recorded licks were during the pre-odor period).

Figure 6. The Direction of Future Licks Is Encoded by Neurons Distributed across the Dorsal Cortex.

(A) Schematic of approach.

(B) Row-normalized confusion matrix predicting preferred spout location from pre-odor neural data (chance is 0.33).

(C) Predictions for one behavioral session (training trials and trials that contain any licks during the pre-odor period are not shown).

(D) Preferred spout neural decoding performance using data from three different time epochs. Red lines denote means across mice. Black lines and gray lines denote random shuffle and circularly permuted controls, respectively.

(E) Pre-odor neural decoding performance quantified for: motor (M), somatosensory (S), parietal (P), retrosplenial (R), and visual (V) areas. Each area-specific decoder used the 75 sources with best discrimination ability. Corrected p values from paired t tests are shown versus both random controls in (D) and (E). Error bars in (D) and (E) show 99% bootstrapped confidence intervals over 20 model fits to different sets of training data.

(F) Pre-reward neural decoding of the spout most licked during the pre-reward period (purple) and fraction of pre-reward licks toward the active spout (cyan), shown as a function of location within a trial block. Note that both sets of lines use identical data taken from testing trials.

(G) Pre-odor behavioral decoding performance using data from both lower and upper cameras and a decoder trained on motion energy principal components derived from both the upper and lower videos (1,000 from each).

ns denotes corrected p > 0.05; *corrected p < 0.05; **corrected p < 0.01; ***corrected p < 0.001.

These PLS-based decoders exhibited above-chance performance, as exemplified in the predictions for a representative dataset (Figures 6B and 6C; four dimensions and up to 500 sources were used for training; see Figures S7D and S7E and STAR Methods for fitting details; sources were ordered by discrimination ability; Figure S7F). These decoders could predict the preferred spout using neural activity taken from the entire trial, the pre-reward period, or just the pre-odor period (Figure 6D). Performance was quantified by comparison with randomized controls with shuffled preferred spout labels. Shuffling was either performed randomly or, more conservatively, by circularly permuting the labels by random numbers of trials. Decoding was significant relative to either control (Figure 6D; corrected p < 0.01, paired t tests versus randomly shuffled; corrected p < 0.01 versus circularly permuted).
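The two label-shuffling controls described above can be illustrated with a minimal sketch. The function names and the example label sequence are hypothetical and are not taken from the analysis code; the sketch only shows why circular permutation is the more conservative control: it preserves the block structure of the labels, whereas a random shuffle destroys it.

```python
import random

def random_shuffle(labels, rng):
    """Return a copy of the labels in a fully random order."""
    shuffled = list(labels)
    rng.shuffle(shuffled)
    return shuffled

def circular_permute(labels, rng):
    """Rotate the labels by a random nonzero number of trials.

    Rotation keeps runs of identical labels (trial blocks) intact,
    so the control retains the temporal structure of the session.
    """
    n = len(labels)
    shift = rng.randrange(1, n)  # 1..n-1, so the shift is never zero
    return list(labels[-shift:]) + list(labels[:-shift])

rng = random.Random(0)
# Hypothetical session: blocks of trials sharing one preferred spout (1, 2, or 3).
labels = [1] * 5 + [2] * 5 + [3] * 5 + [1] * 5
shuffled = random_shuffle(labels, rng)
rotated = circular_permute(labels, rng)
```

In this sketch, a decoder would be refit against `shuffled` or `rotated` in place of the true labels to obtain each chance distribution.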

We next tested the decoding performance of different cortical regions, using only the 75 most discriminative sources from each region. We found that areas across the cortex yielded above-chance performance (Figure 6E; corrected p < 0.05, paired t tests versus random shuffle; corrected p < 0.05 for all areas but parietal versus circular permutation), including the visual cortex, even though no task elements were visible to the mouse. Decoder prediction of the true active spout was comparable to that of the preferred spout (Figure S7G; corrected p = 0.42, paired t test; n = 4 mice; only trials where the preferred and active spouts were identical were used for model training in all PLS analyses; in the test set, these labels were similar but not identical).

Additionally, we investigated how the ability to predict the preferred spout changed within each block of trials. Multiple trials were required for licking to adapt to a new active spout (Figure 6F, cyan points; comparison of trial 1 to trial 2 or 3; corrected p < 0.01, paired t test). In contrast, preferred spout decoding performance remained relatively constant over this period (Figure 6F, purple points; comparison of trial 1 to trial 2 or 3; corrected p = 0.43). However, we found a relationship between decoding and lick selectivity, with significantly greater performance on trials with >80% of pre-reward licks to a preferred spout compared with trials where <80% of licks were selective (corrected p < 0.05, paired t test; n = 4 mice; Figure S7H). Thus, while we can successfully predict future actions throughout trial blocks, performance is reduced when future licking behavior is less selective. Moreover, when decoding the true active spout (instead of the preferred spout), performance with “correct go” trials where >70% of all licks were toward the active spout was significantly higher than either with “incorrect go” trials, where <70% of licks were toward the active spout, or with the error-prone second trials of each block (Figure S7I; corrected p < 0.05, paired t test).

Finally, we explored whether this ability to use neural data to predict upcoming actions might also be manifested in the visible behavior of the animal during the pre-odor period. We attempted to decode the preferred spout using only video of behavior (200-Hz video recordings of the face and body of each animal during neural data acquisition). We predicted the preferred spout using the top 1,000 principal components from each video (and then using PLS and identical training/test trials as with the neural analyses). We found that it was, indeed, possible to decode the preferred spout based on behavior (corrected p < 0.001 versus shuffle; corrected p < 0.01 versus circularly permuted labels; Figure 6G; Figures S8A-S8C). By decoding using specific regions of interest, we determined that movements of the mouth and whiskers contain information about the preferred spout during the pre-odor period, despite exclusion of all trials with detected licking to spouts during the pre-odor period (Figure S8D). Consistent with a neural representation of the upcoming spout target, distributed bodily signals well before lick onset may represent a physical readout of this neurally maintained information.

Distinct Patterns of Population Neural Activity Encode Different Motor Plans and Actions

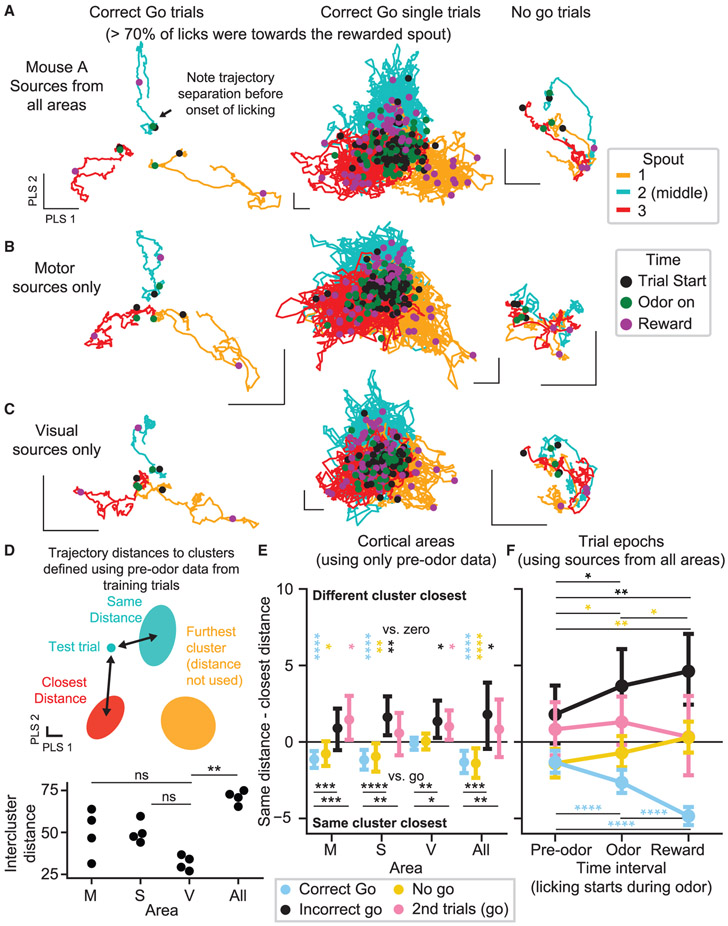

We next examined population dynamics by projecting neural activity onto the four-dimensional PLS basis that defined our decoders (which was optimized to discriminate preferred spout direction, not to explain the most variance; Figure S7J). On correct trials, trial-averaged neural trajectories were already segregated into distinct zones in state space at trial onset (black dots), before diverging further upon lick onset (using held-out “correct go” trials where >70% of all licks were toward the active spout, and, thus, the preferred and active spouts were always identical; Figure 7A, left; Video S5). This dynamical structure appeared reproducibly, albeit with greater noise, when examining held-out single-trial data (Figure 7A, middle). On “no-go” trials, which were indistinguishable from “go” trials before odor onset, we also saw clear separation of the trial types at trial start, but trajectory differences diminished as mice forewent licking. Furthermore, we observed qualitatively consistent dynamics when repeating this analysis with neuronal sources taken from only motor (Figure 7B) or only visual areas (Figure 7C).

Figure 7. Population Neural Activity Encodes Upcoming Lick Bouts toward Specific Spouts.

(A–C) Neural trajectories from mouse A (trial averaged in first and third columns, single-trial in second column). Basis vectors computed as in the previous figure using PLS regression on entire training trials and sources from all (A), only motor (B), or only visual (C) areas. Scale bars are arbitrary units but indicate an equivalent length in each dimension.

(D) Schematic of analysis scheme used in (E) and (F). Bottom panel shows summed intercluster Mahalanobis distance for clusters fit to data from each mouse. Corrected p values from a paired t test are shown versus visual data. M, motor; S, somatosensory; V, visual, All, all sources.

(E) Distributions of (same cluster Mahalanobis distances) – (next closest cluster distances). Data are pooled across four mice. Comparisons versus zero were computed using a Wilcoxon test. Comparisons versus “correct go” trials used a Mann-Whitney U test. 223 “correct go,” 110 “no go,” 29 “incorrect go,” and 37 second trials from 4 mice.

(F) Format matches that of (E), using sources from all areas and comparing pre-odor clusters to single-test-trial trajectories averaged over different time epochs: before odor, during odor, and after reward onset. Statistics were computed across time intervals using a Wilcoxon test. Error bars in (E) and (F) show 99% bootstrapped confidence intervals.

ns denotes corrected p > 0.05; *corrected p < 0.05; **corrected p < 0.01; ***corrected p < 0.001; ****corrected p < 0.0001. All statistical comparisons were FDR (false discovery rate) corrected, and comparisons that yielded corrected p > 0.05 are not shown in (E) and (F).

We next investigated the consistency across single trials of the pre-odor trajectory segregation. Pre-odor population activity occupied clusters in state space corresponding to the preferred spout on that trial (for all training trials used to define clusters, active and preferred spouts were identical). The separation distance between clusters was not the same in each area, with visual cortical clusters significantly closer than all-area clusters (Figure 7D, bottom; corrected p < 0.05, paired t test versus visual). We computed an index representing the distance from the average pre-odor position in state space of a given trial to the cluster corresponding to the preferred spout on that trial minus its distance to the next closest cluster (Figure 7E; see STAR Methods); negative values indicate that population activity is nearest the preferred spout cluster. We found that “correct go” and “no-go” trials had distributions centered below zero (except for the visual cortex, which was not significantly positive).
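The index defined above can be sketched as follows. This is an illustrative example under stated assumptions, not the analysis code: each cluster is summarized here by a mean and covariance, the state space is two-dimensional rather than the four-dimensional PLS basis, and all names and values are hypothetical (see STAR Methods for how clusters were actually fit).

```python
import numpy as np

def mahalanobis(x, mean, cov):
    """Mahalanobis distance from point x to a cluster with the given mean and covariance."""
    diff = x - mean
    return float(np.sqrt(diff @ np.linalg.solve(cov, diff)))

def cluster_index(x, clusters, preferred):
    """Distance to the preferred-spout cluster minus distance to the nearest other cluster."""
    distances = {k: mahalanobis(x, m, c) for k, (m, c) in clusters.items()}
    d_preferred = distances[preferred]
    d_next = min(v for k, v in distances.items() if k != preferred)
    return d_preferred - d_next

# Hypothetical clusters, one per spout, with identity covariance.
clusters = {
    1: (np.array([0.0, 0.0]), np.eye(2)),
    2: (np.array([4.0, 0.0]), np.eye(2)),
    3: (np.array([0.0, 4.0]), np.eye(2)),
}
# Hypothetical average pre-odor position of one trial.
trial = np.array([1.0, 0.0])
index = cluster_index(trial, clusters, preferred=1)
```

With these values the trial lies 1 unit from cluster 1 and 3 units from the next closest cluster, so the index is negative, indicating that population activity is nearest the preferred spout cluster.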

In contrast, on error trials, we would expect this trend to be weakly present—if present at all—as the trajectory could encode confusion or incorrect spout preference evident in the animal’s subsequent behavior. “Second trials” (where there was uncertainty in behavior after an active spout change; Figure 3G) highlighted data wherein mice often lick to the wrong spout—but after demonstrating awareness of the correct spout on the preceding trial (only 50% ± 38% [mean ± SD] of pre-reward licks on “second trials” were toward the active/correct spout, while 74% ± 17% of reward period licks were toward the active spout on corresponding “first trials”; 37 trials pooled over 4 mice). We found that “incorrect go” (<30% of trial licks were toward the correct spout) and “second trials” (situations with many licks to spouts besides the active/correct one) both had distributions centered above zero. The index was significantly lower for “correct go” trials than for “incorrect go” and “second trials” (corrected p < 0.05 or less, Mann-Whitney U test) across all cortical areas analyzed (consistent with Figure S7I).

We tracked this index across time by repeating the analysis using data following either odor onset or reward onset (Figure 7F; see STAR Methods). During “correct go” trials, the neural trajectories moved even further along the direction of the preferred spout cluster (corrected p < 0.001, Wilcoxon test versus pre-odor). In contrast, “incorrect go” trial trajectories moved away from the preferred spout cluster as mice licked toward incorrect spouts (corrected p < 0.05, pre-odor epoch versus odor and reward epochs). “No-go” trajectories also moved away from the preferred spout cluster as mice suppressed licking (corrected p < 0.05 for all comparisons between epochs).

Together, these findings further support the presence of a population representation of targeted action-related motor plans across the cortex. Additional analyses suggest that there is a distinction between the population representation of motor plans versus motor plan execution (Figures S8E-S8G). Finally, multi-region optogenetic inhibition revealed evidence for a potential causal role of non-motor regions in motor plan execution (Figure S9).

DISCUSSION

Here, we developed a new technique, COSMOS, for simultaneously measuring the activity of over a thousand neuronal sources spread across the entirety of the mouse dorsal cortex. We demonstrated that COSMOS is well suited for studying population dynamics across many cortical areas, with resolution enabling recovery of sources composed of ~1–15 neurons over a centimeter-scale field of view at ~30 Hz. We then used COSMOS to investigate cortical neuronal population dynamics during a three-spout lick-to-target task. We found that, although unaveraged correlations exhibit localized spatial structure, widespread populations of neurons, with no apparent mesoscale spatial structure, encode targeted motor actions and history-guided plans on single trials.

Distributed Cortical Computation

Our observations indicate that ongoing and planned motor actions are encoded in the joint firing of superficial cortico-cortical projection neurons (derived from the Cux2 lineage; Franco et al., 2012; Gil-Sanz et al., 2015) throughout the dorsal cortex. Recent work has demonstrated that many cells throughout the brain exhibit mixed-selectivity tuning, which can be driven strongly by ongoing, spontaneous movement (Allen et al., 2017; Musall et al., 2019; Stringer et al., 2019). Building upon this work, we focused on assessing the extent to which the joint activity of many neurons together could encode targeted motor behaviors, rather than seeking to explain the activity of individual neurons based on a breakdown of contributing behavioral factors. We found that, as more neuronal sources were used for training classifiers, the ability to decode ongoing lick actions improved, a hallmark of distributed codes (Rigotti et al., 2013).

We also found modes of neural activity that predicted future history-guided motor actions. Our ability to simultaneously measure the multi-unit activity of many neurons across the dorsal cortex on single trials—in addition to our specific behavioral task—may account for the fact that we found a population encoding future actions beyond the frontal cortex, unreported in previous work (Steinmetz et al., 2019). Interestingly, on trials with nonselective licking, neural decoding performance for the preferred spout (Figure S7H) and the active spout (Figure S7I) was significantly reduced. On these trials with disorganized behavior, the mouse is potentially in a distinct brain state that does not map onto the subspace defined using correct trial data.

Our results suggest that neural representations of history-guided motor plans may not be confined to cortical regions predicted to be involved in the task, at least for layer 2/3 neurons. We identified a widespread population encoding of targeted motor actions and plans, a lack of structure in the spatial distribution of trial type selective sources, and diffuse trial-averaged seeded correlations. At first glance, these results could be consistent with a non-hierarchical view of cortical computation (Hunt and Hayden, 2017)—or even with a weak version of Lashley’s “law of mass action” (Kolb and Whishaw, 1988)—but we also importantly observed localized spatial structure when analyzing cortex-wide single-trial correlations. Thus, there may be an interplay between local and global computation whereby individual neurons intermittently encode task-related information, but a reliable population code still persists (Gallego et al., 2020).

We propose two potential interpretations for our observations of widespread encoding of motor plans and actions. First, information arising across the cortex (itself predictive of future actions) may converge onto classical motor regions, as local “specialist” areas process and transmit disparate information streams that are integrated into a plan in the motor cortex. Second, an efference-copy-like plan may be generated in the motor cortex and broadcast widely, potentially as a contextual signal to aid in distributed processing or learning. In a predictive coding framework, for example, widespread motor plan encodings could contribute to a predictive signal in each region against which ongoing activity is compared (Friston, 2018; Keller and Mrsic-Flogel, 2018; Schneider et al., 2014). Distinguishing between these hypotheses will likely require the ability to simultaneously record from and inhibit large regions of cortex (Sauerbrei et al., 2020), which could be built upon COSMOS.

Our results also showed that upcoming licking can be decoded from gross body movements observed before the onset of licking. These predictive body movements could represent a consequence, rather than a cause, of our observed predictive cortical activity patterns (like a poker tell or an instance where a latent brain state manifests physically; Dolensek et al., 2020). As the mouse cannot determine which spout is active by sensing the pre-odor environment, a broadcast signal could facilitate global preparation for the upcoming targeted action. Alternatively, these subtle movements could help the mouse remember the information (like a physical mnemonic), guided by centrally derived neural activity. Distinguishing between these possibilities will require targeted manipulations, potentially by disrupting the mouse’s ability to move its body (as in Safaie et al., 2019).

Imaging Large-Scale Population Dynamics

Over the past decade, one-photon Ca2+ imaging—using widefield macroscopes or microendoscopic approaches—has seen renewed popularity due to comparative technical simplicity and compatibility with increasingly sensitive and bright genetically encoded Ca2+ sensors (Allen et al., 2017; Chen et al., 2013; Scott et al., 2018; Ziv et al., 2013). Early microendoscopic imaging in hippocampal CA1 (Ziv et al., 2013), where sparsely active neurons are stratified into a layer only 5–8 cells thick (Mizuseki et al., 2011), provided evidence that activity signals in single neurons could be resolved, but cellular resolution does not appear to hold universally across all systems. In the birdsong system, one-photon imaging data (Liberti et al., 2016) yielded results in conflict with a follow-up two-photon imaging study (Katlowitz et al., 2018) that showed significantly more stable single-neuron representations than in the earlier work.

As our results estimate that each COSMOS source is likely a mixture of 1–15 neurons (akin to multi-unit spiking activity), neuronal sources arising from COSMOS should not be treated as single units unless so validated. High-resolution two-photon or electrophysiological approaches would be better suited for questions that require true single-cell resolution, albeit over smaller fields of view. However, COSMOS data also exist in a regime complementary to previous methods, and, as demonstrated, key population analyses that work with COSMOS cannot be performed using conventional widefield imaging data.

Much work in the realm of large-scale neural population dynamics leverages dimensionality reduction techniques that estimate a neural state vector as a linear combination of the activities of individual neurons (Churchland et al., 2012). Recent work has begun to investigate the idea that major results derived from sorted individual unit recordings can be recapitulated just as well from multi-unit activity (Trautmann et al., 2019) and, likely, also COSMOS data. Indeed, as attention in systems neuroscience increasingly broadens from a focus on individual neurons to more abstract population codes (Saxena and Cunningham, 2019; Yuste, 2015), COSMOS provides a means of measuring distributed codes in genetically defined populations of neurons across cortex and for testing how cortical dynamics vary across diverse behaviors.

STAR★METHODS

RESOURCE AVAILABILITY

Lead Contact

Further information and requests for resources and reagents may be directed to and will be fulfilled by the Lead Contact, Karl Deisseroth (deissero@stanford.edu).

Materials Availability

This study did not generate new unique reagents. Information about how to build a COSMOS macroscope using publicly available parts can be found at http://clarityresourcecenter.com/.

Data and Software Availability

Pre-processed data generated during this study are available at http://clarityresourcecenter.com/. Owing to the large size of our datasets, raw data and relevant processing code will be made available upon reasonable request.

EXPERIMENTAL MODEL AND SUBJECT DETAILS

All procedures were in accordance with protocols approved by the Stanford University Institutional Animal Care and Use Committee (IACUC) and guidelines of the National Institutes of Health. The investigators were not blinded to the genotypes of the animals. Both male and female mice were used, aged 6–12 weeks at time of surgery. Mice were group housed in plastic cages with disposable bedding on a standard light cycle until surgery, when they were split into individual cages and moved to a 12 hr reversed light cycle. Following recovery after surgery, mice were water restricted to 1 mL/day. All experiments were performed during the dark period. The mouse strains used were Tg(Thy1-GCaMP6s)GP4.3Dkim (Thy1-GCaMP6s, Jax 024275), Cux2-CreERT2 (gift of S. Franco, University of Colorado), Ai148(TIT2L-GC6f-ICL-tTA2)-D (Ai148, Jax 030328) (gift of H. Zeng, Allen Institute for Brain Science), B6.Cg-Tg(Slc32a1-COP4*H134R/EYFP)8Gfng/J (VGAT-ChR2-EYFP, Jax 014548), B6;129S-Rasgrf2tm1(cre/folA)Hze/J (Rasgrf2-2A-dCre, Jax 022864), and gs7tm93.1(tetO-GCaMP6f)Hze Tg(Camk2a-tTA)1Mmay (Ai93(TITL-GCaMP6f)-D;CaMK2a-tTA, Jax 024108), all bred in a mixed C57BL/6J background. Mice homozygous for Ai148 and heterozygous for the CreER transgenes were bred to produce double transgenic mice with the genotype Cux2-CreER;Ai148.

To induce GCaMP expression in Cux2-CreER;Ai148 mice, tamoxifen (Sigma-Aldrich T5648) was administered at 0.1 mg/g. Preparation of the tamoxifen solution followed Madisen et al. (2010). Specifically, tamoxifen was dissolved in ethanol (20 mg/mL). Aliquots of this solution were stored indefinitely at −80°C. On the day of administration, an aliquot was thawed, diluted 1:1 in corn oil (Acros Organics, AC405430025) into microcentrifuge tubes, and then vacuum centrifuged (Eppendorf Vacufuge plus) for 45 minutes (V-AQ setting). After vacuuming, no ethanol should be visible, and the tamoxifen should be dissolved in the oil. Each mouse was weighed, and for every 10 g of mouse, 50 μL of solution was injected intraperitoneally.

To induce GCaMP expression in Rasgrf2-2A-dCre;Ai93D;CaMK2a-tTA mice, trimethoprim (Sigma-Aldrich T7883-25G) was dissolved in DMSO (Sigma-Aldrich 472301) at 10 mg/mL and administered at 50 μg/g. Each mouse was weighed, and for every 10 g of mouse, 50 μL of solution was injected intraperitoneally.
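As a consistency check on the dosing arithmetic for both inducers, the injection volume can be computed from body weight, target dose, and solution concentration. This sketch is illustrative only; the function name is hypothetical, and the tamoxifen concentration of 20 mg per mL of oil is an assumption inferred from the 1:1 dilution followed by removal of the ethanol.

```python
def injection_volume_ul(mouse_weight_g, dose_mg_per_g, concentration_mg_per_ml):
    """Volume in microliters that delivers the target dose for a given body weight."""
    dose_mg = mouse_weight_g * dose_mg_per_g
    return dose_mg / concentration_mg_per_ml * 1000.0  # convert mL to uL

# Tamoxifen: 0.1 mg/g, assuming 20 mg per mL of oil once the ethanol is removed.
tamoxifen_ul = injection_volume_ul(10.0, 0.1, 20.0)
# Trimethoprim: 50 ug/g = 0.05 mg/g at 10 mg/mL in DMSO.
trimethoprim_ul = injection_volume_ul(10.0, 0.05, 10.0)
```

Under these assumptions both calculations give approximately 50 μL per 10 g of body weight, matching the stated injection volumes.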

METHOD DETAILS

Optical implementation details

The COSMOS macroscope uses a 50 mm f/1.2 camera lens (Nikon) as the main objective. It is mounted on a 60 mm cage cube (Thorlabs LC6W), which was modified to hold a large dichroic (Semrock FF495-Di03, 50 mm × 75 mm). It is also possible, though not optimal, to use an unmodified cage cube with a 50 mm diameter dichroic. Illumination is provided by an ultra-high power 475 nm LED (Prizmatix UHP-LED-475), passed through a neutral density filter (Thorlabs NE05A, to ensure that the LED driver was never set to a low-power setting, which could cause flickering in the illumination), an excitation filter (Semrock FF02-472/30), and a 50 mm f/1.2 camera lens (Nikon) as the illumination objective. An off-axis beam dump is used to capture any illumination light that passes through the dichroic. The detection path consists of an emission filter (Semrock FF01-520/35-50.8-D), followed by a multi-focal dual-lenslet array which projects two juxtaposed images onto a single sCMOS camera sensor (Photometrics Prime 95B 25 mm). The approximate system cost was $40,000 USD, of which the Prime 95B camera accounted for ~$30,000. Raw images collected by the COSMOS macroscope contain sub-images from each lenslet, each focused at a different optical plane. The camera has a particularly large area sensor with a 25 mm diagonal extent. The lenslet array is fabricated by mounting two modified 25 mm diameter, 40 mm focal length aspherized achromats (Edmund Optics #49-664) in a custom mount (fabricated by Protolabs.com, CAD file provided upon request). To maximize light throughput as well as position the optical axis of the two lenslets such that the two images fit side-by-side on the sensor, 7.09 mm was milled away from the edge of each lenslet (using the university’s crystal shop). The mount was designed to offset the vertical position and hence the focal plane of each lenslet by a specified amount, in our case 600 μm. 
The mount was further designed to position the camera sensor at the midpoint between the working distance of each lenslet. A small green LED (1 mm, Green Stuff World, Spain) was placed close to the primary objective such that it did not obstruct the image but was visible to the sensor and was synchronized to flash at the beginning of each behavioral trial. We measured the point spread function of each sub-image using a 10 μm fluorescent source; the focal planes were offset by 620 μm, close to the designed 600 μm.

There were a number of factors contributing to this final system design, which we describe here.

First, based on our simulation analyses in Figure S2, we determined that a multi-focal approach would yield the highest signal-to-noise ratio (SNR) across the target field of view. In particular, a dual-focal design best leveraged all of the light passing through the main objective, achieving a balance between increasing the total transmitted signal from each neuronal source and keeping the signal from each source compact. Although one obvious approach to increasing the depth of field of an imaging system is to simply close down the aperture, this comes at the cost of reducing the light throughput, SNR, and maximum spatial resolution of the system (Brady and Marks, 2011). Such a trade-off has spurred the development of multiplexed computational imaging approaches for extending the depth of field while maintaining high SNR. Computational imaging yields performance advantages specifically when the average signal level per pixel is lower than the variance of signal-independent noise sources, such as read noise (Cossairt et al., 2013). In particular, multiplexing approaches begin to fail when the photon noise of the signal overwhelms the signal-independent noise (Schechner et al., 2007; Wetzstein et al., 2013). As shown in Figure S2, our imaging paradigm falls within the regime where computational imaging ought to be beneficial. In particular, this is due to the bright background from autofluorescence and out-of-focus fluorescence that adds significant noise to the neuronal signal.

We thus took inspiration from a number of computational imaging techniques to develop an approach suitable for the requirements of our preparation: large field of view, microscopic resolution, high light-collection, high imaging speed, and minimal computational cost. In particular, there exist a number of potentially applicable extended depth of field (EDOF) imaging techniques, including use of a high-speed tunable lens (Liu and Hua, 2011; Wang et al., 2015), multi-focal imaging (Abrahamsson et al., 2013; Levin et al., 2009), light field microscopy (Levoy et al., 2006), and wavefront coding (Dowski and Cathey, 1995). While these techniques extend the depth of field, they require deconvolution to form a final image, which is computationally expensive and, as demonstrated later in our noise analysis, also provides a lower SNR for shot noise-limited applications such as our own. Additionally, further analyses of these techniques have demonstrated that the performance of any EDOF camera is improved if multiple focal settings are used during image capture (Brady and Marks, 2011; Hasinoff et al., 2009; Levin et al., 2009). We thus decided to pursue a multi-focal imaging approach and to design our system such that post-processing did not require a spatial deconvolution step.

Second, we found that the maximum illumination power is limited, and it was therefore essential to optimize the light throughput of the detection path in order to achieve maximum SNR. We found empirically that there was a maximum allowable illumination power density: continuous one-photon illumination intensity of around 500 mW/cm² yielded adverse effects on the mouse, including an enhanced risk of blood vessel rupture. Thus, arbitrarily increasing the illumination power to increase signal is not an option, even if ultra-bright light sources exist.

Third, we require high image quality across a large, centimeter-scale field of view. When paired with the light throughput requirement, this means the optical system must have high etendue; without the use of large and extremely expensive custom optics, it is difficult to simultaneously maintain image quality and prevent light loss when passing the image through relay optics. We thus preferred designs that minimized the number of optical components in the detection path. In particular, rather than demagnifying an image onto a smaller camera sensor, we gained flexibility by using a large area sensor. Furthermore, it was also problematic to use a beamsplitter approach followed by relaying images to separate cameras, in terms of light throughput, image quality, and data acquisition complexity. Not only is a multi-camera beamsplitter approach costly and complex, but the beamsplitter approach is worse than the lenslet approach: in this setup, each image from the beamsplitter shares light that passed through the same central region of the aperture of the main objective; on the other hand, each lenslet image uses light that passed through one of two non-overlapping regions of the aperture of the main objective. Thus, for a given depth of field of each sub-image, and consequent f/# of either the lenslet or post-beamsplitter relay optics, each lenslet image will receive twice as much light as each beamsplitter image. Finally, because the lenslets themselves are physically large, we needed to be wary of aberrations (geometric and chromatic) induced by the lenslets. For microlenslets, this is less of an issue and is often ignored. The easiest, most cost-effective, and most reproducible way to fabricate high performance lenslets is to leverage the design of commercial off-the-shelf aspherized achromats. 
We found that with minor machined modifications to existing optics, it was possible to produce lenslets with the right physical dimensions while maintaining the high performance associated with aspheric optics. In the end, our image quality and light throughput of each lenslet image was on par with an image from a simple macroscope with equivalent aperture-size (as shown in Figure 1G, H); the multi-focal design is thus uniformly better than the conventional approach. Note that to generate Figure 1H, we manually merged the two focally offset sub-images (in Photoshop, Adobe). This was the only instance in which we ever needed to merge the image data; for all other processing, we processed each sub-image separately and then merged the extracted neural sources.

We characterize the resolution of our system in Figure S2N, and we find it to be sufficient for our application. The resolution of the system would likely be improved with smaller pixels; at the time of development, the only sCMOS camera available with a large enough sensor and fast enough framerate had 11 μm pixels, which with the magnification of our system yields pixels that sample from 13.75 μm in the specimen. However, the current resolution is likely acceptable for a number of reasons. First, cortical neuron somas are around 10-20 μm in diameter; with scattering, the point spread function of each neuronal source is further enlarged. Second, our current labeling strategy also labels dendrites, which serves to further increase the spatial spread of each source. Third, although an increased resolution could potentially help in distinguishing nearby sources, because of scattering it is unlikely that a slightly increased resolution would fundamentally change the data. Fourth, an increased resolution would lead to larger dataset sizes and consequent processing times without a concomitant increase in capability. Nevertheless, future improvements in the design will likely harness increased resolution. In particular, the most immediate improvements to the system could be achieved by using a custom primary objective with larger numerical aperture, or a camera with a larger or higher resolution sensor. Additionally, use of structured illumination is a viable route for potentially reducing the effect of scattering and for increasing the ability to discriminate between nearby sources.
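The specimen-plane pixel size quoted above can be reproduced with a short calculation. This is a sketch under a stated assumption: that the system magnification equals the ratio of the lenslet focal length (40 mm) to the main objective focal length (50 mm), as for an idealized pair of lenses in an infinity-conjugate arrangement. The function name is hypothetical.

```python
def specimen_pixel_um(sensor_pixel_um, f_objective_mm, f_lenslet_mm):
    """Sensor pixel size projected back into the specimen plane."""
    # Assumed magnification: ratio of lenslet to objective focal length.
    magnification = f_lenslet_mm / f_objective_mm
    return sensor_pixel_um / magnification

# 11 um sensor pixels, 50 mm main objective, 40 mm lenslets.
pixel_um = specimen_pixel_um(11.0, 50.0, 40.0)
```

Under this assumption the magnification is 0.8, so 11 μm sensor pixels correspond to approximately 13.75 μm in the specimen, in agreement with the value stated above.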

Neuronal source extraction pipeline

The first step of processing raw videos collected on the COSMOS macroscope was to load the video (i.e., image stack) into memory from a remote data server, followed by cropping and then saving out to a local workstation separate image stacks for the top-focused and bottom-focused regions of interest (ROI). We applied rigid motion correction to each lenslet sub-image independently. Each ROI was motion corrected with a translation shift that was computed using the peak in the autocorrelation of a few sub-ROIs relative to the first frame in the stack. The motion correction was tested by plotting the maximum shift associated with additional test sub-ROIs, as well as manually inspecting each video, to ensure that motion throughout the video is smaller than 1 pixel in radius and that there are no large nonrigid movements. A proper surgery and rigid head fixation were adequate to maintain image stability.
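The translation estimate at the heart of this rigid motion correction can be sketched as follows. This is a minimal illustration, not the pipeline code: it assumes integer-pixel shifts, uses a circular cross-correlation between a frame and the reference frame computed with NumPy FFTs, and operates on a synthetic image rather than on sub-ROIs of real data. All names are hypothetical.

```python
import numpy as np

def estimate_shift(reference, frame):
    """Integer (dy, dx) such that np.roll(frame, (dy, dx), axis=(0, 1)) aligns with reference."""
    # Circular cross-correlation computed in the Fourier domain.
    xcorr = np.fft.ifft2(np.fft.fft2(reference) * np.conj(np.fft.fft2(frame))).real
    peak = np.unravel_index(np.argmax(xcorr), xcorr.shape)
    # Peaks past the midpoint of an axis correspond to negative shifts.
    return tuple(int(p) if p <= s // 2 else int(p) - s for p, s in zip(peak, xcorr.shape))

rng = np.random.default_rng(0)
reference = rng.random((32, 32))                   # synthetic first frame
moved = np.roll(reference, (3, -2), axis=(0, 1))   # simulated rigid motion
shift = estimate_shift(reference, moved)
corrected = np.roll(moved, shift, axis=(0, 1))
```

Here the estimated shift undoes the simulated displacement, so `corrected` matches `reference`. A quality check like the one described above would then confirm that residual shifts on independent test sub-ROIs stay below 1 pixel.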

Motion corrected stacks were then processed using Constrained Nonnegative Matrix Factorization for microendoscopic data, implemented in MATLAB (CNMF-E; Zhou et al., 2018). This algorithm is an improved version of the original CNMF (Pnevmatikakis et al., 2016), which has been modified primarily to incorporate better background subtraction, specifically for one-photon data. This background subtraction is essential for COSMOS data, since one-photon widefield recordings can be contaminated by large-scale fluctuations in blood-flow related fluorescence modulation (Allen et al., 2017). Importantly, since we are extracting signal from sparse point sources, it is possible to separate the spatially broad background fluctuations from the more spatially compact neuronal signals; this was not the case in previous widefield preparations such as Allen et al. (2017). In particular, the CNMF-E algorithm excels at this background removal. As parameters for CNMF-E, we used a ring background model, with a 21-pixel source diameter initialization. For initializing seed pixels, we used a minimum local correlation of 0.8, and a minimum peak-to-noise ratio of 7. To analyze a 60,000-frame dataset, on a workstation with 512 GB of RAM, we can use 7 cores in parallel without running out of memory. With less available RAM, the number of parallel cores must be correspondingly scaled down. Since the algorithm is factorization based, the time for processing a dataset depends on the number of neuronal sources and the length of the video. Processing the top-focused and bottom-focused videos for a 60,000-frame dataset (roughly a 30-minute recording) requires about 36 hours in total.
There are a number of paths to making this more efficient in the future: multiple workstations could be used to separately process the top-focused and bottom-focused videos; source extraction could be run only on the in-focus regions of the top-focused and bottom-focused stacks; and the improved background removal of CNMF-E could be applied to OnACID, an online version of the original CNMF algorithm that has demonstrated real-time processing speeds (Giovannucci et al., 2017). While all processing and analysis code for this project was written in Python, we elected to use the MATLAB implementation of CNMF-E because, as of the time when we were implementing our data analysis pipeline (in early 2018), the CNMF-E implementation in Caiman (Giovannucci et al., 2019) returned inferior results because it only initialized neural components with the full CNMF-E background model and then performed iterative update steps using a simpler background model.
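To make the two seed-pixel thresholds stated above concrete (minimum local correlation of 0.8, minimum peak-to-noise ratio of 7), the following Python sketch computes simplified versions of both quantities and applies the thresholds. It is an illustration only: the MATLAB CNMF-E implementation differs in detail, and the function names, the 4-neighbor correlation, and the median-based noise estimate are assumptions.

```python
import numpy as np

MIN_LOCAL_CORR = 0.8  # threshold stated in the text
MIN_PNR = 7.0         # threshold stated in the text

def local_correlation(video):
    """Mean Pearson correlation of each pixel with its 4 nearest neighbors.

    video has shape (T, H, W).
    """
    z = video - video.mean(axis=0)
    z = z / (z.std(axis=0) + 1e-12)
    total = np.zeros(video.shape[1:])
    count = np.zeros(video.shape[1:])
    # Vertical neighbor pairs.
    c = (z[:, 1:, :] * z[:, :-1, :]).mean(axis=0)
    total[1:, :] += c
    total[:-1, :] += c
    count[1:, :] += 1
    count[:-1, :] += 1
    # Horizontal neighbor pairs.
    c = (z[:, :, 1:] * z[:, :, :-1]).mean(axis=0)
    total[:, 1:] += c
    total[:, :-1] += c
    count[:, 1:] += 1
    count[:, :-1] += 1
    return total / count

def peak_to_noise(video):
    """Peak above the median baseline divided by a robust noise estimate."""
    baseline = np.median(video, axis=0)
    noise = 1.4826 * np.median(np.abs(video - baseline), axis=0)
    return (video.max(axis=0) - baseline) / (noise + 1e-12)

def seed_mask(video):
    """Boolean (H, W) mask of pixels passing both thresholds."""
    return ((local_correlation(video) >= MIN_LOCAL_CORR)
            & (peak_to_noise(video) >= MIN_PNR))
```

Requiring both criteria favors pixels that are bright relative to noise and that co-fluctuate with their neighbors, which is the signature of a compact source rather than isolated pixel noise.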

Once CNMF-E has extracted neuronal sources (i.e., their spatial footprints and corresponding denoised time series) from the top-focused and bottom-focused videos, we merge the best in-focus sources from each focal plane. To ensure that no sources are double counted, we find a classification line that spatially segments the in-focus region of each sub-image. First, using a pair of manually selected keypoints, selected just once per dataset, we align the top-focused and bottom-focused coordinate systems. Then, in a semi-automated manner, we draw a separation curve for each cortical hemisphere, such that on one side of the separation curve we use sources extracted from the bottom-focused plane, and on the other side of the curve we use sources from the top-focused plane. This curve traces out the crossover in focus quality between the two focal settings along the curved cortical surface. Due to different positioning and tilt of the headbar, these curves are not always in the same location across mice, even if the implanted glass window has identical curvature. Here, we use the radius of the source spatial footprints as a proxy for focus quality across the field of view.
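The merging rule can be sketched in Python as follows, for a single hemisphere and assuming the two coordinate systems have already been aligned. The function name, the representation of the separation curve as a callable x = f(y), and the convention for which side keeps which focal plane are hypothetical choices for illustration.

```python
import numpy as np

def merge_focal_planes(top_xy, bottom_xy, curve_x_of_y):
    """Merge source centroids from two focal planes without double counting.

    top_xy, bottom_xy: (N, 2) arrays of (x, y) centroids in a shared,
    already aligned coordinate system.
    curve_x_of_y: callable returning the x position of the separation
    curve at each y.
    Assumed convention: top-focused sources are kept where x < curve,
    bottom-focused sources where x >= curve.
    """
    top_xy = np.asarray(top_xy, dtype=float)
    bottom_xy = np.asarray(bottom_xy, dtype=float)
    keep_top = top_xy[:, 0] < curve_x_of_y(top_xy[:, 1])
    keep_bottom = bottom_xy[:, 0] >= curve_x_of_y(bottom_xy[:, 1])
    return np.vstack([top_xy[keep_top], bottom_xy[keep_bottom]])
```

Because every location falls on exactly one side of the curve, a neuron detected in both focal planes at nearly the same position contributes only one source to the merged set.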

After merging sources from the top-focused and bottom-focused videos, we verify the quality of each source. First, we ensure that for each source the deconvolved trace returned by CNMF-E has a correlation of at least 0.75 with the corresponding non-deconvolved trace. The deconvolution algorithm assumes that the traces are generated by GCaMP, with a fast onset and a slow, exponential decay. Thus, any sources for which the deconvolved trace does not match the raw trace are likely not GCaMP signal. Second, we manually inspect all remaining traces, only keeping sources that are not located over blood vessels, that have radially symmetric spatial footprints, and that have a high signal-to-noise ratio. This process provides confidence that we have high-quality sources with minimal contamination. Finally, we manually align the atlas to each dataset based on the intrinsic imaging alignment assay, such that sources from all mice are situated in the same coordinate system.
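The first, automated quality criterion can be written as a short Python check. This is a sketch that assumes Pearson correlation is the measure intended; the function name is hypothetical, and only the 0.75 threshold comes from the text.

```python
import numpy as np

MIN_TRACE_CORR = 0.75  # threshold stated in the text

def passes_trace_check(raw_trace, deconvolved_trace, threshold=MIN_TRACE_CORR):
    """True if the deconvolved trace correlates with the raw trace
    at or above the threshold (Pearson correlation assumed)."""
    r = np.corrcoef(raw_trace, deconvolved_trace)[0, 1]
    return bool(r >= threshold)
```

A source whose raw fluorescence is dominated by noise or by slow, non-GCaMP-like fluctuations yields a low correlation with its own deconvolved trace and is rejected before the manual inspection step.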

Comparison of COSMOS with conventional macroscope

One mouse with good GCaMP expression and a clear window was used for this experiment. Over one hour, three independent videos each of 1800 frames were recorded at each macroscope setting: f/1.2, f/2, f/2.8, f/4, f/5.6, f/8, as well as with the detection lens replaced by the multifocal lenslet array. The mouse was awake but was sitting in a dark and quiet environment while not performing any behavioral task. The recordings for each macroscope setting were interleaved with one another throughout the session, such that recordings of the same setting were not captured sequentially, to mitigate the impact of any changes in the mouse’s behavioral state. The intensity of the excitation light at the sample remained constant throughout the experiment. In particular, changing the detection aperture setting did not alter the illumination, as the aperture was only changed on the detection lens as opposed to on the primary objective. The videos were processed using the same neuronal source extraction pipeline used throughout this paper, with identical parameter settings. During manual quality inspection and culling of the recovered sources, the operator was blinded to the macroscope setting of that video.

The COSMOS macroscope outperformed a comparable conventional macroscope in terms of depth of field while maintaining equivalent light throughput. We qualitatively compared the fidelity and depth-of-field of an image captured with a f/2 macroscope versus an image generated by merging the lenslet sub-images. Whereas a conventional macroscope offered nearly zero contrast at the lateral edges, the COSMOS macroscope provided good contrast laterally with only slightly reduced contrast medially. Light throughput of each lenslet was the same as that of a standard macroscope with the aperture set to f/2, and the light from defocused emitters was not diminished by vignetting.

The resolution of COSMOS was characterized using two approaches. The point spread function was acquired using a 10 μm precision pinhole (Thorlabs P10D) atop a fluorescent slide (Thorlabs FSK5, green). Additionally, an image was acquired of a USAF 1951 resolution chart (Thorlabs R3L3S1N) atop a fluorescent slide.

Intrinsic imaging for atlas alignment

Based on Garrett et al. (2014), Juavinett et al. (2017), and Nauhaus and Ringach (2007), a macroscope was constructed using two back-to-back 50 mm f/1.2 F-mount camera lenses (Nikon), mounted using SM2 adapters (Thorlabs), and an sCMOS camera (Hamamatsu Orca Flash v4.0). A 700/10 nm optical filter (Edmund Optics) was inserted between the lenses. Illumination was provided using a fiber-coupled 700 nm LED (Thorlabs M700F3) that was positioned for each mouse so as to maximize coverage of the left posterior region of cortex (contralateral to the right visual field). A small green LED (1 mm, Green Stuff World, Spain) was inserted after the optical filter and was synchronized to flash for 30 ms at the beginning of every trial. Mice were lightly sedated using chlorprothixene (Sigma-Aldrich C1671-1G, 2 mg of chlorprothixene powder in 10 mL of sterilized saline, administered 0.1 mL/20 g per mouse) and inhaled isoflurane at 0.5% concentration throughout the acquisition session. Mice were visually monitored during the session to ensure that they were awake.

The visual stimulus was generated using PsychoPy. Based on Zhuang et al. (2017), it consisted of a bar being swept across the monitor. The bar contained a flickering black-and-white checkerboard pattern, with spherical correction of the stimulus to stimulate in spherical visual coordinates using a planar monitor (Marshel et al., 2011). The pattern subtended 20 degrees in the direction of propagation and filled the monitor in the perpendicular dimension. The checkerboard square size was 20 degrees. Each square alternated between black and white at 6 Hz. The red channel of all displayed images was set to 0, to limit bleed-through onto the intrinsic imaging camera. To generate a map, the bar was swept across the screen in each of the four cardinal directions, crossing the screen in 10 s. A gap of 1 s was inserted between sweeps, resulting in a repetition period of 11 s. Owing to the large size of our stimulus monitor, we also used a spherical warping transformation (PsychoPy function psychopy.visual.windowwarp) to simulate the effect of a spherical display using our flat monitor.

Finally, we developed a protocol for aligning a standardized atlas (Lein et al., 2007), shown in Figures 2J, S3C, and S3D, to each recorded video. We take advantage of the retinotopic sign reversal that occurs on the border between visual areas V1 and PM (Garrett et al., 2014). We use optical intrinsic imaging to record low spatial resolution neural activity in response to a drifting bar visual stimulus (Garrett et al., 2014; Juavinett et al., 2017), yielding a clear border between visual regions that can be computationally processed to define a phase map indicating the V1/PM border (Video S3). This landmark, in combination with the midline blood vessel, can be used to scale and align the atlas to each mouse (Figure S3C). In Figure S3D, we provide the atlas alignment for all mice in the cohort. We used intrinsic imaging because, due to the sparsity of the cellular labeling in our Cux2-CreER mice, GCaMP imaging did not provide a spatially smooth enough signal to extract a phase map.

We performed 150 repeats of the stimulus. This number of repeats is higher than in previous reports, likely because the pixel well capacity of our camera is 10x smaller (5e4 electrons, compared with the 5e5 electron well depth of the Dalsa Pantera 1m60 used in Juavinett et al. (2017) and Nauhaus and Ringach (2007)), which increases the relative contribution of photon shot noise to each frame.
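The scaling behind this argument can be checked with a short Python calculation. It assumes a purely shot-noise-limited measurement in which each pixel is filled to its well capacity on every frame, so that the signal-to-noise ratio grows as the square root of the total number of collected electrons; read noise and other noise sources are ignored. The function names are hypothetical, and only the two well depths come from the text.

```python
import math

WELL_OURS = 5e4       # electrons, camera used here (from the text)
WELL_REFERENCE = 5e5  # electrons, Dalsa Pantera 1m60 (from the text)

def shot_noise_snr(electrons_per_frame, repeats=1):
    """Shot-noise-limited SNR after averaging `repeats` frames:
    signal N*R divided by noise sqrt(N*R)."""
    return math.sqrt(electrons_per_frame * repeats)

def repeats_for_equal_snr(reference_repeats,
                          well_reference=WELL_REFERENCE,
                          well_ours=WELL_OURS):
    """Repeats needed with the smaller well to match the reference SNR."""
    return reference_repeats * well_reference / well_ours
```

Under these assumptions, a 10x smaller well requires 10x more repeats to reach the same averaged signal-to-noise ratio.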

The computer monitor was oriented at 60 degrees lateral to the midline of the mouse, tilted down 20 degrees, and placed 10 cm away from the right eye. Tape was placed around the headbar to prevent the mouse’s whiskers and body from entering the imaging field of view. The mouse and microscope were covered with black cloth to occlude any external visual stimuli. Video was recorded at 20 Hz with 2x2 pixel binning, with an effective pixel size of 13 μm at the sample. These acquisition parameters trade off dynamic range against dataset size. Illumination was adjusted to fill the dynamic range of the camera.

To process the video, it was first scaled down by a factor of 2 in x, y, and t dimensions. Trial start frames were extracted using the flashes from the synchronization LED. Trials of the same orientation were averaged together into an average video. In this average video, one should be able to see a bar propagating in one direction across V1 and a second bar propagating in the opposite direction across PM. A phase map was computed from this video by, for each pixel, finding the frame when the signal reached its minimum (corresponding to maximum hemodynamic absorption when the visual stimulus passes within the retinotopic field of view of that pixel). A 2D top-projection atlas was generated from the annotated Allen Brain Atlas volume, version CCFv3 (Lein et al., 2007), in MATLAB (MathWorks). The atlas was aligned to the phase map based on the location of the border between V1 and PM, and the midline. By aligning the intrinsic imaging field of view to the COSMOS field of view using landmarks along the edge of the window, the atlas could then be aligned to the COSMOS recordings.
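The downsampling, trial averaging, and phase map steps can be sketched in Python as follows. The function names are hypothetical, and the use of block averaging for the factor-of-2 downsampling is an assumption, since the text does not specify the method.

```python
import numpy as np

def downsample2(video):
    """Downsample a (T, H, W) video by 2 in t, y, and x by block averaging
    (block averaging is an assumption; odd trailing samples are dropped)."""
    t, h, w = (s - s % 2 for s in video.shape)
    v = video[:t, :h, :w]
    return v.reshape(t // 2, 2, h // 2, 2, w // 2, 2).mean(axis=(1, 3, 5))

def average_trials(video, start_frames, n_frames):
    """Average equal-length trials that begin at the given frame indices."""
    return np.mean([video[s:s + n_frames] for s in start_frames], axis=0)

def phase_map(trial_average):
    """For each pixel, the frame index at which the signal is minimal."""
    return np.argmin(trial_average, axis=0)
```

In the resulting map, a bar sweeping across the visual field appears as a smooth gradient of frame indices within each visual area, and the direction of that gradient reverses at the border between areas.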

Visual orientation selectivity assay

Sinusoidal visual gratings were presented to mice under the COSMOS macroscope using a small LCD display (15.5 cm x 8.5 cm, width x height) mounted horizontally on an optical post (Thorlabs). The monitor (Raspberry Pi Touch Display) was centered 7.5 cm in front of the left eye of the mouse (at a 30° offset from perpendicular with the center of the eye). Contrast on the display was calibrated using a PR-670 SpectraScan Spectroradiometer (Photo Research). Notably, this orientation of the monitor stretched across the midline of each animal and thus delivered some visual stimulation to both eyes. To block stray stimulation light from reaching the cranial window on the mouse, we designed a light-blocking cone that attached to the headbar of each animal (Figure S2K).

Gray sinusoidal grating stimuli were generated using PsychoPy (running on a Raspberry Pi 3 Model B). Eight stimuli (separated by 45°) were successively presented to each mouse (4 s per stimulus, with a 4 s intertrial interval). Each stimulus was presented five times, each time in the same order. The spatial frequency of the grating pattern was 0.05 cycles per degree and its temporal frequency was 2 Hz.
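The presentation schedule implied by these parameters can be laid out with a few lines of Python. This sketch does not use PsychoPy and is not the stimulus code from this study. It assumes that the first grating is at 0°, that the fixed order means the eight-grating sequence is repeated five times, and that each intertrial interval follows its stimulus; the function name is hypothetical.

```python
N_STIMULI = 8     # from the text
STEP_DEG = 45     # from the text
STIM_S = 4.0      # stimulus duration, from the text
ITI_S = 4.0       # intertrial interval, from the text
N_REPEATS = 5     # from the text

def grating_schedule():
    """List of (onset_s, offset_s, angle_deg) tuples for every trial."""
    angles = [i * STEP_DEG for i in range(N_STIMULI)]  # assumed to start at 0
    schedule = []
    t = 0.0
    for _ in range(N_REPEATS):
        for angle in angles:  # same order on every repeat
            schedule.append((t, t + STIM_S, angle))
            t += STIM_S + ITI_S
    return schedule
```

Under these assumptions the assay comprises 40 trials, with the final grating ending 316 s after the first onset.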

Comparison of COSMOS with two-photon imaging

The same three mice that had their visual responses characterized using drifting gratings under the COSMOS macroscope were also imaged beneath a two-photon microscope (Neurolabware). Data were obtained at 30 Hz using an 8 kHz resonant scanner. We used a Nikon CFI LWD 16X water dipping objective (Thorlabs N16XLWD-PF) with clear ultrasound gel used as an immersion medium (Aquasonic, Parker Laboratories) between the surface of the cranial window and the objective itself. Following motion correction using moco (Dubbs et al., 2016), activity traces were extracted using the standard CNMF algorithm implemented in the February 2018 version of Caiman (Giovannucci et al., 2019).