Abstract

Link prediction in networks has applications in computer science, graph theory, biology, economics and other fields. It is a well-studied problem, and among its variants, link prediction in unipartite graphs has attracted the most attention. In this work we focus on link prediction in bipartite graphs, based on two important concepts: potential energy and mutual information. The approach has three steps. First, the bipartite graph is converted into a unipartite graph using a weighted projection. Next, the potential energy and mutual information between each node pair in the projected graph are computed. Finally, we present a Potential Energy-Mutual Information based similarity metric that is used to predict potential links. To evaluate the performance of the proposed algorithm, four evaluation metrics, namely AUC, Precision, Prediction-Power and Precision@K, were calculated and compared with those of eleven baseline algorithms. Experimental results show that the proposed method outperforms the baseline algorithms.

Subject terms: Computational science, Computer science

Introduction

Link prediction in real-world networks has been attracting the attention of researchers from various domains. Real-world networks can be represented as graphs, in which nodes represent entities and edges represent associations or interactions between them. These networks are dynamic: new nodes and edges can appear at future timestamps. The link prediction task aims to identify the links that are most likely to appear in the near future. Popular real-world applications of link prediction include predicting hidden relationships between terrorists, e-commerce recommendation, predicting drug side effects, predicting protein-protein interactions, finding missing reactions in metabolic networks and predicting co-authorship. A link prediction algorithm takes a snapshot of the network as input and computes the likelihood of a connection between two unconnected vertices1–3. Such algorithms can predict not only the addition of new edges but also the connections that may disappear from the network in the future. Most link prediction algorithms have been designed for unipartite networks. Because the structure and properties of bipartite networks differ from those of unipartite networks, algorithms that perform well on unipartite networks may not work well on bipartite ones. Link prediction on bipartite networks has applications in recommendation systems. Li et al.4 applied link prediction to build recommender systems based on graph kernels. They generated random walks starting from a focal user-item pair in the graph kernel. Because defining features in a complex graph is challenging, the authors used a kernel-based machine learning framework built on a kernel function that defines the similarity between data instances.
The authors tested their method on three real-world datasets and observed that it outperformed the baseline algorithms. Kurt et al.5 also proposed a graph-based recommender system (SIMLP) that computes the similarity between two nodes in a bipartite network. They assigned complex numbers as the weights of the edges connecting node pairs. SIMLP was tested on two datasets, and the results show that it performed better than the complex number-based baseline algorithms.

We use the terms graphs and networks interchangeably throughout the paper. The link prediction problem can be formally defined as follows.

Problem statement Given a bipartite graph G(U, V, E), where U and V are two disjoint and independent sets of vertices and E is the set of edges at timestamp $t$, a link prediction algorithm aims to predict new links among nodes that can be observed at timestamp $t'$, such that $t' > t$. These links may arise for one of two reasons: either a new connection is formed, or an existing but missing connection is revived.

In this work, the authors make the following contributions toward a new algorithm for the problem stated above:

Two parameters have been introduced with respect to link prediction: potential energy and mutual information.

A Potential Energy and Mutual Information based similarity metric (PMIS) is proposed to compute the weight of the patterns.

The proposed algorithm works on every disconnected node pair in the network instead of only on candidate node pairs.

The proposed algorithm is evaluated on ten real-world datasets, and the results demonstrate its superior performance compared to baseline link prediction techniques.

Link prediction algorithms6–10 usually follow one of four approaches: node-based, neighbor-based, path-based and social theory-based link prediction. Liben-Nowell et al.1 analysed various proximity measures from the node-neighborhood, path-based and higher-level (meta) approaches and identified those that give the best prediction results. Common neighbors, the Jaccard coefficient and the Adamic/Adar coefficient are common neighbor-based approaches. The triangle-closing model states that node pairs with a high number of common neighbors tend to close a triangle in the graph, so such node pairs have a high probability of forming connections in the future. This concept does not apply to bipartite networks because of their distinct topological structure: a bipartite graph contains no odd cycles, and hence no triangles, and a node in U never shares a common neighbor with a node in V. Thus, common neighbor-based approaches cannot be applied directly to bipartite networks. Hasan et al.11,12 further extended the work of Liben-Nowell et al.1. Link prediction for bipartite networks has been addressed by many researchers4,13–19. Cannistraci et al.20 proposed an algorithm that considers not only common neighbors and neighbors' common neighbors but also the structure of their connections; this was the first attempt at a bipartite formulation of the Common Neighbor index. Further, the authors of ref.21 used the local community paradigm (LCP theory) for link prediction, which states that the cohort of common neighbors (CNs) and their cross interactions form a local community. The cross interactions between common neighbors are called local community links. The LCP-based method presented in ref.21 improves topological prediction in bipartite complex networks. Ref.13 used projection and supervised learning for link prediction; its authors introduced three link prediction metrics for bipartite graphs and applied them to the DBLP dataset.

Baltakiene et al.22 used the concept of entropy and the Bipartite Configuration Model (BiCM)23 as a score function for predicting links. As in statistical mechanics, the probability of each graph can be derived by maximizing the Shannon entropy under the constraint of the degree sequence; by using this maximum-entropy principle, they significantly improved link prediction performance. Gao et al.24 used projection and candidate node pairs (CNPs) for link prediction in bipartite networks. The authors first converted the bipartite graph into a unipartite graph and then computed the connectivity of candidate node pairs (CCNP) using pattern weights. They evaluated the algorithm on three datasets and obtained better AUC than the baseline predictors (CN, Katz, ILP18). Shakibian et al.25 proposed another similarity measure based on mutual information and meta-paths in heterogeneous networks. They presented a framework in which link entropy is characterized as a semantic measure for link prediction. To measure the effectiveness of their algorithm, the authors compared it with different classes of link prediction algorithms, namely mainstream meta-path-based link predictors, effective path-based homogeneous link predictors and LCP-based link indicators, and analysed its performance on the DBLP network. In 2019, Serpil et al.26 used a strengthened projection technique for link prediction in evolving bipartite graphs, aiming to predict links in large-scale bipartite networks. Their method has two main steps: first, a potential link set is extracted; second, a prediction score is computed for each potential link. For this purpose, the authors proposed a time-aware proximity measure based on network evolution. They used AUC and precision for the result analysis.
In the experiments, the authors compared their method with four baseline algorithms (AA, CN, JA, PA) and found that it outperformed them. Complete information about real-world complex networks is often unavailable; examples of such networks include financial, social and biological networks. Cimini et al.27 presented an exhaustive review of statistical physics-based approaches for predicting statistically significant patterns in complex networks, and also addressed the reconstruction of network structure from incomplete information. Boguna et al.28 reviewed network-based approaches that effectively identify both the physical and the mathematical properties fundamental to networks. They discussed three approaches and proposed interesting future directions. In particular, they argued that, for heterogeneous networks, models based on hyperbolic space can be better than those based on Euclidean space, and that hyperbolic space can also be used for link prediction.

Preliminaries

Projection of bipartite graph

Projection can be used to convert a bipartite graph into a unipartite graph. For a given bipartite graph $G(U, V, E)$, its U-projected graph is the unipartite graph $G_U(U, E_U)$ in which $(A, B) \in E_U$ if A and B have at least one common neighbor in G. That is, for $A, B \in U$, $E_U$ can be described as follows:

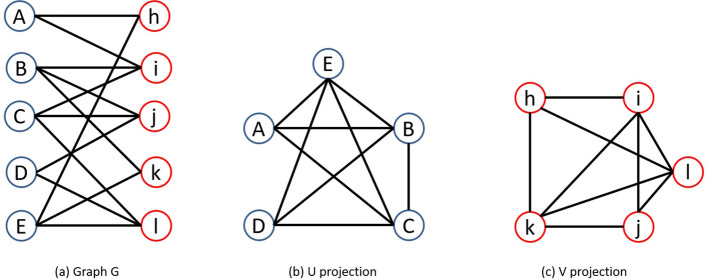

Two projections of a bipartite graph can be taken, one onto U and one onto V. The V-projection is defined in the same way as the U-projection: for a graph G, its V-projected graph is $G_V(V, E_V)$. For example, Fig. 1a shows a bipartite graph G with vertex sets U and V, Fig. 1b shows its U-projection and Fig. 1c shows its V-projection.
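The projection step can be sketched in a few lines of Python, the language used for the experiments. This is a minimal illustration, not the authors' implementation: it assumes the bipartite graph is given as a list of (u, v) edges with u in U and v in V, and the function name `u_projection` and the toy graph are hypothetical.

```python
from itertools import combinations


def u_projection(edges):
    """Edge set E_U of the U-projection of a bipartite graph.

    `edges` lists (u, v) pairs with u in U and v in V. Two U-nodes are
    linked in the projection iff they share at least one neighbour in V.
    """
    u_nodes_of = {}  # V-node -> set of its U-neighbours
    for u, v in edges:
        u_nodes_of.setdefault(v, set()).add(u)
    projected = set()
    for group in u_nodes_of.values():
        # every V-node turns its U-neighbours into a clique of E_U
        projected.update(combinations(sorted(group), 2))
    return projected


# Hypothetical bipartite graph with U = {A, B, C, D} and V = {1, 2, 3}
edges = [("A", 1), ("B", 1), ("B", 2), ("C", 2), ("D", 3)]
print(sorted(u_projection(edges)))                       # [('A', 'B'), ('B', 'C')]
print(sorted(u_projection([(v, u) for u, v in edges])))  # V-projection: [(1, 2)]
```

Grouping U-nodes by their V-neighbour avoids testing every pair of U-nodes, and swapping each edge pair yields the V-projection. For networkx users, `networkx.algorithms.bipartite.projected_graph` builds the same unweighted projection.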

Figure 1.

Projection of bipartite graph G.

Pattern and pattern covered by a node pair

Pattern Suppose A and B are two nodes in a bipartite graph G(U, V, E) with $A, B \in U$. Then (A, B) forms a pattern if A and B have at least one common neighbor in V24. In that case, the link (A, B) is present in the projected graph $G_U$. In other words, each link in the projected graph represents a pattern in the bipartite graph G.

Pattern covered by a node pair Suppose (A, i) is a node pair in the bipartite graph G, with $A \in U$ and $i \in V$, and $G_U$ is the projected graph of G. For each node $C \in \Gamma(i)$, where $\Gamma(i)$ is the set of neighbors of i, such that $(A, C) \in E_U$, we call {A, C} a pattern covered by the node pair (A, i)24. A node pair may cover one or more patterns in the projected graph. A covered pattern indicates that a similar edge, (C, i), already exists in the bipartite graph G. The more patterns a node pair covers, the more likely it is that the pair will be connected in the future. Hence, the number of patterns covered by a node pair can be used to measure the likelihood that the corresponding edge exists.
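The set of patterns covered by a node pair can be computed as in the following sketch. It rests on our reading of the definition (C is a neighbour of i in G, C differs from A, and A and C share a V-neighbour so that (A, C) lies in the U-projection); the name `covered_patterns` and the example graph are hypothetical.

```python
def covered_patterns(edges, a, i):
    """Patterns {A, C} covered by node pair (A, i) of a bipartite graph.

    `edges` lists (u, v) pairs with u in U and v in V. Assumed reading of
    the definition: C is a neighbour of i, C != A, and (A, C) is an edge of
    the U-projection (A and C share at least one neighbour in V).
    """
    nbrs_u, nbrs_v = {}, {}
    for u, v in edges:
        nbrs_u.setdefault(u, set()).add(v)
        nbrs_v.setdefault(v, set()).add(u)
    return {
        frozenset((a, c))
        for c in nbrs_v.get(i, set())
        if c != a and nbrs_u.get(a, set()) & nbrs_u[c]
    }


# Hypothetical graph: A-1, B-1, B-2, C-2, C-3; candidate pair (A, 2)
edges = [("A", 1), ("B", 1), ("B", 2), ("C", 2), ("C", 3)]
print(sorted(tuple(sorted(p)) for p in covered_patterns(edges, "A", 2)))  # [('A', 'B')]
```

Here (A, 2) covers one pattern, {A, B}, because B is already linked to 2 and shares node 1 with A; C is linked to 2 but shares no V-neighbour with A.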

Mutual information for link prediction

Our proposed algorithm uses the concepts of mutual information and potential energy for link prediction in bipartite graphs.

Self-information: “Let X be a random variable and x be an outcome of X with probability p(x). Then, the self-information of x quantifies the uncertainty of the outcome x and is defined as follows”29:

$$I(x) = -\log_2 p(x) \tag{1}$$

Mutual information: “Let X and Y be two random variables and x and y be their outcomes, respectively. The mutual information of X and Y measures the amount of reduction in uncertainty of the outcome x when the outcome y is known, or vice versa, and is defined as follows”30:

$$I(x; y) = I(x) - I(x \mid y) \tag{2}$$

Let x and y be two nodes in a graph, and let $\Gamma(x)$ and $\Gamma(y)$ denote their sets of neighbors. The set of common neighbors of x and y is denoted by $O_{xy}$, so that $O_{xy} = \Gamma(x) \cap \Gamma(y)$. For a given node pair (x, y) with common neighbors $O_{xy}$, the likelihood score of the node pair can be computed by the following equation31.

$$s_{xy} = -I(L^1_{xy} \mid O_{xy}) \tag{3}$$

Here $I(L^1_{xy} \mid O_{xy})$ is the conditional self-information of the existence of an edge between node pair (x, y), denoted by the event $L^1_{xy}$, given that their common neighbors are $O_{xy}$. The smaller $I(L^1_{xy} \mid O_{xy})$ is, the higher the probability that the edge exists. According to Eq. (2), $I(L^1_{xy} \mid O_{xy})$ can be derived as follows:

$$I(L^1_{xy} \mid O_{xy}) = I(L^1_{xy}) - I(L^1_{xy}; O_{xy}) \tag{4}$$

where $I(L^1_{xy})$ is the self-information of the event that node pair (x, y) is connected, and $I(L^1_{xy}; O_{xy})$ is the mutual information between the event that (x, y) is connected and the set of their common neighbors. Assuming that the elements of $O_{xy}$ are independent of each other, $I(L^1_{xy}; O_{xy})$ can be computed as follows:

$$I(L^1_{xy}; O_{xy}) = \sum_{z \in O_{xy}} I(L^1_{xy}; z) \tag{5}$$

$I(L^1_{xy}; z)$ can be estimated by $I(L^1; z)$, which is defined as the mean mutual information over all pairs of nodes connected to node z:

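$$I(L^1; z) = \frac{2}{|\Gamma(z)|\,(|\Gamma(z)| - 1)} \sum_{\{m, n\} \subseteq \Gamma(z)} I(L^1_{mn}; z) \tag{6}$$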

The value of $I(L^1_{mn}; z)$ can be found with the help of Eq. (2):

$$I(L^1_{mn}; z) = I(L^1_{mn}) - I(L^1_{mn} \mid z) \tag{7}$$

Here $I(L^1_{mn})$ is the self-information of the event that node pair (m, n) is connected, and $I(L^1_{mn} \mid z)$ is the conditional self-information of that event when node z is one of their common neighbors.

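$I(L^1_{mn} \mid z)$ can be computed from the clustering coefficient of node z, which serves as the probability $p(L^1_{mn} \mid z)$ that two neighbors of z are connected. The clustering coefficient of z is calculated as follows: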

$$C_z = \frac{2 T_z}{k_z (k_z - 1)} \tag{8}$$

where $C_z$ is the clustering coefficient of z, $T_z$ is the number of triangles passing through node z and $k_z$ is the degree of node z. Once $C_z$ is known, $I(L^1_{mn} \mid z) = -\log_2 C_z$ follows directly from Eq. (1).

$I(L^1_{mn})$ can be computed from $p(L^0_{mn})$, where $L^0_{mn}$ denotes the event that no edge exists between node m and node n. Assuming that there is no degree correlation, $p(L^0_{mn})$ can be calculated using path entropy:

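$$p(L^0_{mn}) = \prod_{i=1}^{k_n} \frac{M - k_m - i + 1}{M - i + 1} = \frac{\binom{M - k_m}{k_n}}{\binom{M}{k_n}} \tag{9}$$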

Here $k_m$ and $k_n$ are the degrees of nodes m and n, respectively, and M is the total number of edges in the graph. The formula is symmetric in m and n. Thus

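$$p(L^0_{mn}) = \frac{\binom{M - k_m}{k_n}}{\binom{M}{k_n}} = \frac{\binom{M - k_n}{k_m}}{\binom{M}{k_m}} \tag{10}$$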

The probabilities $p(L^1_{mn})$ and $p(L^1_{xy})$ can now be calculated as follows:

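$$p(L^1_{mn}) = 1 - \frac{\binom{M - k_m}{k_n}}{\binom{M}{k_n}}, \qquad p(L^1_{xy}) = 1 - \frac{\binom{M - k_x}{k_y}}{\binom{M}{k_y}} \tag{11}$$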
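The probabilities behind Eqs. (9) to (11) can be evaluated with the sketch below, assuming the degrees and the edge count M are already known. It uses the product form $\prod_{i=1}^{k_n} (M - k_m - i + 1)/(M - i + 1)$ of the binomial ratio to avoid very large binomial coefficients; the helper names and the numbers in the example are hypothetical.

```python
import math


def p_no_link(k_m, k_n, M):
    """p(L^0_mn) = C(M - k_m, k_n) / C(M, k_n), computed as the product
    prod_{i=1..k_n} (M - k_m - i + 1) / (M - i + 1) to avoid huge binomials."""
    p = 1.0
    for i in range(1, k_n + 1):
        p *= (M - k_m - i + 1) / (M - i + 1)
    return p


def self_info_link(k_m, k_n, M):
    """I(L^1_mn) = -log2 p(L^1_mn), where p(L^1_mn) = 1 - p(L^0_mn)."""
    p1 = 1.0 - p_no_link(k_m, k_n, M)
    return math.inf if p1 <= 0 else -math.log2(p1)


# Degrees 3 and 2 in a graph with M = 10 edges (hypothetical numbers)
print(round(p_no_link(3, 2, 10), 4), round(p_no_link(2, 3, 10), 4))  # 0.4667 0.4667
print(round(self_info_link(3, 2, 10), 4))                            # 0.9069
```

The two printed probabilities agree, illustrating the symmetry of the formula in m and n.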

With the help of Eq. (1), we obtain $I(L^1_{mn})$ and $I(L^1_{xy})$. Collecting these results gives the following:

$$I(L^1_{xy}) = -\log_2\left(1 - \frac{\binom{M - k_x}{k_y}}{\binom{M}{k_y}}\right) \tag{12}$$

$$I(L^1_{mn}) = -\log_2\left(1 - \frac{\binom{M - k_m}{k_n}}{\binom{M}{k_n}}\right) \tag{13}$$

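$$I(L^1; z) = \frac{2}{k_z (k_z - 1)} \sum_{\{m, n\} \subseteq \Gamma(z)} \left[ I(L^1_{mn}) + \log_2 C_z \right] \tag{14}$$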

Combining the previous derivations, we have

$$s_{xy} = \sum_{z \in O_{xy}} I(L^1; z) - I(L^1_{xy}) \tag{15}$$

The likelihood that an edge exists between node pair (x, y) increases with $s_{xy}$: the higher $s_{xy}$, the more likely the nodes are to be connected.
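Combining Eqs. (3) to (15), the mutual-information score can be sketched as follows. The sketch assumes an unweighted, undirected graph (for example, the projected graph with its weights ignored) stored as a dict mapping each node to its neighbour set. It uses the clustering coefficient $C_z$ as $p(L^1_{mn} \mid z)$ and, as an added assumption not stated in the text, skips any common neighbour z with $C_z = 0$, whose conditional self-information would be infinite. The name `mi_score` and the toy graph are hypothetical.

```python
import math
from itertools import combinations


def mi_score(adj, x, y):
    """Mutual-information score s_xy = sum_{z in O_xy} I(L^1; z) - I(L^1_xy).

    `adj` maps each node of an undirected, unweighted graph to its set of
    neighbours.
    """
    M = sum(len(nbrs) for nbrs in adj.values()) // 2  # total number of edges

    def info_link(a, b):
        # I(L^1_ab) = -log2(1 - p(L^0_ab)), p(L^0_ab) = C(M - k_a, k_b) / C(M, k_b)
        p0 = 1.0
        for i in range(1, len(adj[b]) + 1):
            p0 *= (M - len(adj[a]) - i + 1) / (M - i + 1)
        p1 = 1.0 - p0
        return math.inf if p1 <= 0 else -math.log2(p1)

    def mean_mi(z):
        # I(L^1; z): mean of I(L^1_mn) - I(L^1_mn | z) over all neighbour pairs of z
        pairs = list(combinations(adj[z], 2))
        c_z = sum(1 for m, n in pairs if n in adj[m]) / len(pairs)  # 2T_z / (k_z(k_z - 1))
        if c_z == 0:
            return None  # assumption: skip z, since -log2(0) is infinite
        cond_info = -math.log2(c_z)  # I(L^1_mn | z), with p(L^1_mn | z) = C_z
        return sum(info_link(m, n) - cond_info for m, n in pairs) / len(pairs)

    total = 0.0
    for z in adj[x] & adj[y]:  # common neighbours O_xy
        contribution = mean_mi(z)
        if contribution is not None:
            total += contribution
    return total - info_link(x, y)


# Hypothetical toy graph: x and y are unlinked but share neighbours z and w
adj = {
    "x": {"z", "w"}, "y": {"z", "w"},
    "z": {"x", "y", "w"}, "w": {"x", "y", "z"},
}
print(round(mi_score(adj, "x", "y"), 4))  # -1.1388
```

The negative value only reflects that $I(L^1_{xy})$ outweighs the summed mutual information in this tiny graph; AUC and precision depend only on how scores rank node pairs, not on their sign.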

Methods

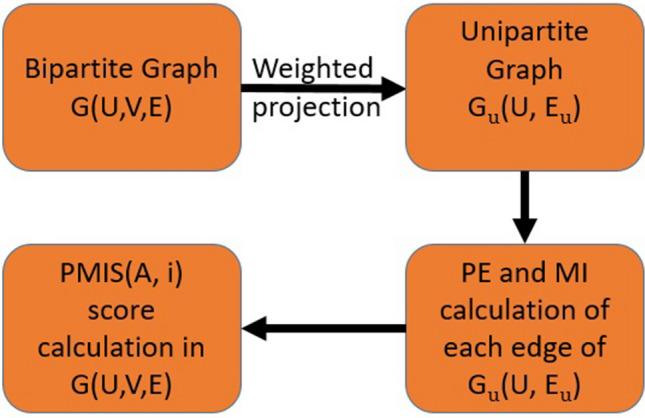

In this section, we present a novel algorithm for link prediction in bipartite networks based on potential energy and mutual information. The proposed algorithm builds on four concepts: projection, potential energy, mutual information and PMIS. Figure 2 outlines the process of calculating the PMIS score.

Figure 2.

Link prediction process.

In this work, we define potential energy in the context of graphs by analogy with gravitational potential energy, PE = mgh. We treat a pair of nodes as an object whose mass m is represented by the product of the degrees of the two nodes. The gravitational acceleration g is represented by the sum of the clustering coefficients of the common neighbors of the two nodes; this sum is fixed for a given node pair but differs across node pairs. Finally, the height h is replaced by the inverse of the shortest distance (sd) between the two nodes.

Potential energy PE(A, B) denotes the potential energy between nodes A and B. In the context of a graph, it is defined as the product of three terms: the product of the degrees of the nodes, the sum of the clustering coefficients of their common neighbors, and the inverse of the shortest distance between the nodes.

$$PE(A, B) = k_A \, k_B \cdot \left( \sum_{z \in O_{AB}} C_z \right) \cdot \frac{1}{sd(A, B)} \tag{16}$$

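where $k_A$ and $k_B$ are the degrees of nodes A and B, $O_{AB}$ is the set of common neighbors of A and B, $C_z$ is the clustering coefficient of node $z \in O_{AB}$ (Eq. 8), and sd(A, B) is the shortest distance between nodes A and B. When $O_{AB} = \emptyset$, that is, when the node pair (A, B) has no common neighbor, the value of $\sum_{z \in O_{AB}} C_z$ is set to the constant .1.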
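Eq. (16) can be sketched as follows for an unweighted, undirected graph stored as a dict of neighbour sets, with shortest distances found by breadth-first search. Returning 0 for a pair with no connecting path (the limit of 1/sd as sd grows) is our assumption, as are the function names and the toy graph.

```python
from collections import deque
from itertools import combinations


def clustering(adj, z):
    """C_z = 2 T_z / (k_z (k_z - 1)); 0 for nodes of degree < 2."""
    pairs = list(combinations(adj[z], 2))
    if not pairs:
        return 0.0
    return sum(1 for m, n in pairs if n in adj[m]) / len(pairs)


def shortest_distance(adj, a, b):
    """Hop count of a shortest a-b path (BFS), or None if b is unreachable."""
    dist = {a: 0}
    queue = deque([a])
    while queue:
        u = queue.popleft()
        if u == b:
            return dist[u]
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return None


def potential_energy(adj, a, b, no_common=0.1):
    """PE(A, B) = k_A * k_B * (sum of C_z over common neighbours) / sd(A, B).

    The sum is replaced by the constant `no_common` (.1 in the text) when A
    and B have no common neighbour.
    """
    if a == b:
        raise ValueError("PE is defined for two distinct nodes")
    common = adj[a] & adj[b]
    g = sum(clustering(adj, z) for z in common) if common else no_common
    sd = shortest_distance(adj, a, b)
    if sd is None:
        return 0.0  # assumption: 1/sd -> 0 for disconnected pairs
    return len(adj[a]) * len(adj[b]) * g / sd


# Hypothetical toy graph: triangle a-b-c plus the path a-d-e
adj = {"a": {"b", "c", "d"}, "b": {"a", "c"}, "c": {"a", "b"},
       "d": {"a", "e"}, "e": {"d"}}
print(round(potential_energy(adj, "b", "d"), 3))  # 0.667
print(round(potential_energy(adj, "b", "e"), 3))  # 0.067
```

The pair (b, e) has no common neighbour, so the constant .1 replaces the clustering sum, and its larger distance lowers PE further.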

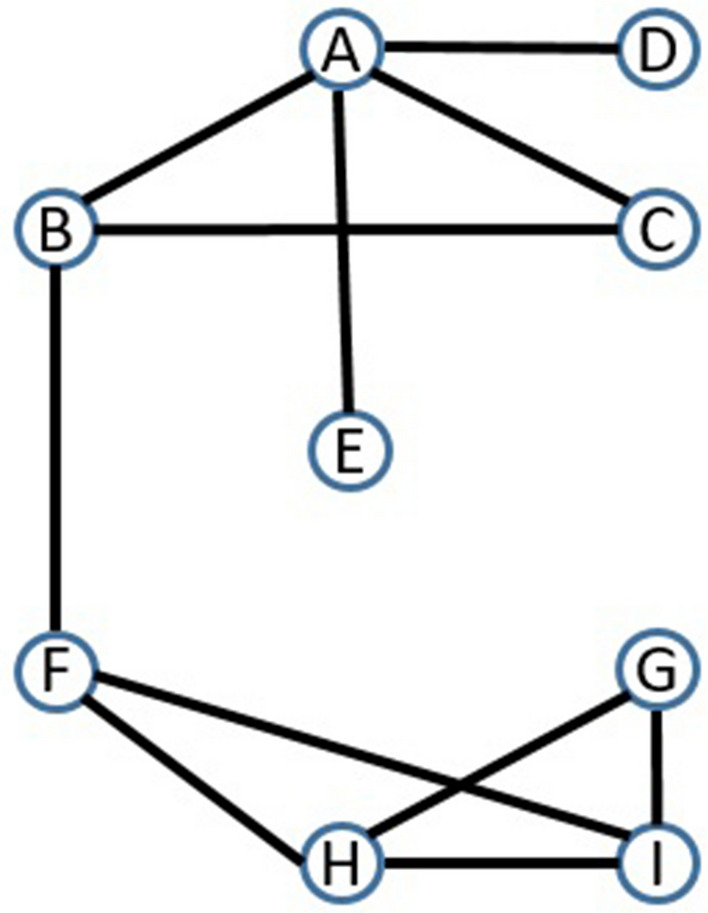

To illustrate PE, consider Graph G2 in Fig. 3. The PE values of node pairs (B, E), (C, E) and (E, G) are .25, .166 and .04, respectively, computed with Eq. (16) and with the clustering coefficients from Eq. (8). Because PE(B, E) is greater than PE(C, E), node pair (B, E) is more likely to be connected than node pair (C, E). PE can also distinguish node pairs that have no common neighbor: PE(E, F) = .1 and PE(E, G) = .04, so node pair (E, F) is more likely to be connected than node pair (E, G). Table 1 lists the product of node degrees, the sum of clustering coefficients and the inverse shortest distance used in these examples.
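The PE values in Table 1 follow directly from its three component columns. The snippet below only multiplies those published components; it does not rebuild Graph G2, whose edges appear only in Fig. 3.

```python
# Component values copied from Table 1:
# (pair, product of node degrees, sum of clustering coefficients, 1/sd)
rows = [
    ("(B, E)", 3, 0.1666, 0.50),
    ("(C, E)", 2, 0.1666, 0.50),
    ("(E, G)", 2, 0.1, 0.20),
    ("(E, F)", 3, 0.1, 0.33),
]

# Eq. (16): PE is the product of the three components
pe = {pair: deg_product * g * inv_sd for pair, deg_product, g, inv_sd in rows}
for pair, value in pe.items():
    print(pair, round(value, 3))
# (B, E) 0.25
# (C, E) 0.167
# (E, G) 0.04
# (E, F) 0.099
```

The table's .16 for (C, E) and .1 for (E, F) correspond to these products truncated or rounded to two decimals.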

Figure 3.

Graph G2.

Table 1.

Potential energy computation for some missing edges in Graph G2.

| Edge (x, y) | Product of node degrees | Sum of clustering coefficients of common neighbors | Inverse of the shortest distance | PE(x, y) |
|---|---|---|---|---|
| (B, E) | 3 | .1666 | .50 | .25 |
| (C, E) | 2 | .1666 | .50 | .16 |
| (E, G) | 2 | .1 | .20 | .04 |
| (E, F) | 3 | .1 | .33 | .1 |

Potential energy incorporates three network properties. The first is the product of the degrees of the vertices: the higher this product, the higher the PE. From a social network point of view, a vertex with a high degree is generally more likely to connect with other vertices. For example, a new Twitter user is more likely to follow a celebrity than a less popular person, because celebrities usually have higher degrees. The second part of PE is the clustering coefficient. In social networks, the clustering coefficient reflects the tendency of a person's friends to also be friends with each other. It is closely related to triadic closure, which plays a very important role in link prediction, so the clustering coefficient carries information inherently relevant to link prediction. The third part of PE is the shortest distance between vertices, another important network feature. Kleinberg found that most nodes in social networks are connected by short paths32, which is related to the small-world phenomenon. Distance between nodes has an inverse effect on link prediction: in real social networks, two people separated by a shorter distance have a higher chance of becoming friends in the future. The illustrative example and the experimental results also show the effectiveness of PE.

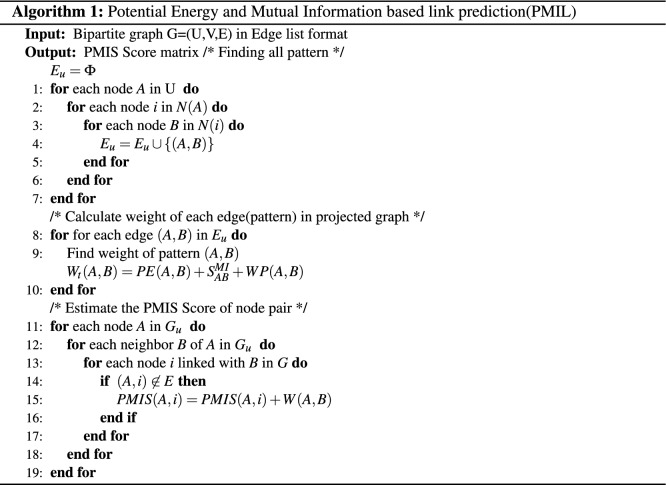

Algorithm framework

The proposed algorithm takes a bipartite graph G(U, V, E) as input and transforms it into a unipartite graph $G_U$ using a weighted projection. In $G_U$, the algorithm computes PE and MI for each node pair using Eqs. (16) and (15), respectively. It then calculates the weight of each edge (pattern) of the unipartite graph using Eq. (17). Finally, using the pattern weights, it computes the PMIS score for each node pair of the bipartite graph G(U, V, E) with Eq. (18).

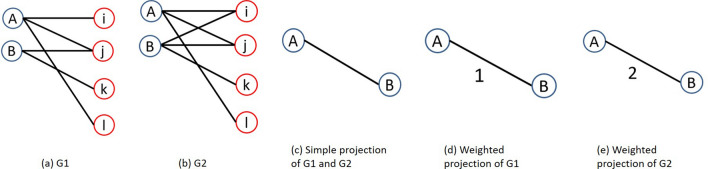

The proposed algorithm uses a weighted projection instead of a simple projection, because a simple projection loses topological information of the original bipartite graph. For example, the two bipartite graphs in Fig. 4a,b are different, but their simple projections are the same (Fig. 4c). With a weighted projection, we obtain two different projected graphs (Fig. 4d,e). In Fig. 4d the edge weight is 1 because the two nodes have only one common neighbor in the bipartite graph, whereas in Fig. 4e the edge weight is 2 because they have two common neighbors. WP(A, B) denotes the weight of edge (A, B) in the projected graph and is calculated as the number of common neighbors of A and B in the bipartite graph: $WP(A, B) = |\Gamma(A) \cap \Gamma(B)|$.
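The weighted projection can be sketched by counting shared V-neighbours. As an illustration only, it assumes the bipartite graph is a list of (u, v) edges with u in U and v in V; the function name and the two small graphs, which mimic the situation of Fig. 4 rather than reproduce its exact nodes, are hypothetical.

```python
from collections import Counter
from itertools import combinations


def weighted_u_projection(edges):
    """WP(A, B) = number of common V-neighbours of A and B.

    `edges` lists (u, v) pairs with u in U and v in V; only pairs with
    WP >= 1 (i.e. projected edges) appear in the result.
    """
    u_nodes_of = {}  # V-node -> set of its U-neighbours
    for u, v in edges:
        u_nodes_of.setdefault(v, set()).add(u)
    wp = Counter()
    for group in u_nodes_of.values():
        for pair in combinations(sorted(group), 2):
            wp[pair] += 1  # each shared V-neighbour adds 1 to WP
    return dict(wp)


# Two different bipartite graphs in the spirit of Fig. 4a,b (hypothetical)
g1 = [("A", 1), ("B", 1), ("B", 2)]
g2 = [("A", 1), ("B", 1), ("A", 2), ("B", 2)]
wp1, wp2 = weighted_u_projection(g1), weighted_u_projection(g2)
print(set(wp1) == set(wp2))  # True: same simple projection
print(wp1, wp2)              # {('A', 'B'): 1} {('A', 'B'): 2}
```

For networkx users, `networkx.algorithms.bipartite.weighted_projected_graph` with its default `ratio=False` stores the same shared-neighbour counts as edge weights.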

Since the projected graph of the original bipartite graph is available, Eq. (15) can be used to estimate the mutual information score $MI(A, B) = s_{AB}$ for each node pair of the projected graph. In other words, every pattern (A, B) has an associated value $MI(A, B)$.

Figure 4.

Projection of bipartite graph.

The weight of an edge in the projected graph plays a very important role in link prediction. This weight is the weight of the pattern defined above. Thus, each pattern has three values, PE(A, B), WP(A, B) and MI(A, B), which together express the importance of the pattern.

Definition 0.1

Total weight of a pattern, W(A, B), is the sum of all types of weight of the pattern (edge (A, B) in the projected graph) and is defined as follows:

$$W(A, B) = PE(A, B) + WP(A, B) + MI(A, B) \tag{17}$$

Definition 0.2

Potential Energy-Mutual Information based similarity metric (PMIS): PMIS is the sum of the weights of all patterns covered by a node pair.

If (A, i) is a node pair in the bipartite graph, PMIS can be defined as

$$PMIS(A, i) = \sum_{(A, B) \in \Phi(A, i)} W(A, B) \tag{18}$$

Here W(A, B) is the weight of the pattern (A, B), and $\Phi(A, i)$ is the set of patterns covered by node pair (A, i), which can be found as follows: $\Phi(A, i) = \{(A, C) : C \in \Gamma(i),\ (A, C) \in E_U\}$.

Thus, each node pair in the bipartite graph may cover some patterns, and each pattern has a weight. PMIS computes the sum of the weights of all patterns covered by a node pair, and we use this PMIS value as the final link prediction score: a higher PMIS value indicates a higher likelihood that the edge exists.
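Eqs. (17) and (18) can be combined as in this sketch. It assumes that PE, WP and MI have already been computed for every edge of the projected graph; the PE and MI numbers in the example are made up for illustration, and the function names are hypothetical.

```python
def total_weight(pe, wp, mi):
    """Eq. (17): W(A, B) = PE(A, B) + WP(A, B) + MI(A, B)."""
    return pe + wp + mi


def pmis(edges, weights, a, i):
    """Eq. (18): sum of W(A, C) over patterns {A, C} covered by (A, i).

    `edges` lists the bipartite edges as (u, v) pairs (u in U, v in V);
    `weights` maps frozenset({A, C}) -> W(A, C) for every projected edge,
    so a missing key means (A, C) is not in the projection.
    """
    neighbours_of_v = {}
    for u, v in edges:
        neighbours_of_v.setdefault(v, set()).add(u)
    return sum(weights.get(frozenset((a, c)), 0.0)
               for c in neighbours_of_v.get(i, set()) if c != a)


# Hypothetical bipartite graph: A-1, B-1, B-2, C-1, C-2; candidate pair (A, 2)
edges = [("A", 1), ("B", 1), ("B", 2), ("C", 1), ("C", 2)]
weights = {
    frozenset(("A", "B")): total_weight(0.5, 1, 0.0),    # made-up PE and MI
    frozenset(("A", "C")): total_weight(0.25, 1, -0.75),
    frozenset(("B", "C")): total_weight(1.0, 2, 0.5),
}
print(pmis(edges, weights, "A", 2))  # 2.0
```

Node pair (A, 2) covers the patterns {A, B} and {A, C}, because B and C are both linked to node 2 and share node 1 with A, so its PMIS score is W(A, B) + W(A, C) = 1.5 + 0.5.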

Complexity analysis The time complexity of the proposed algorithm for a given bipartite graph G(U, V, E) with two vertex sets U and V is , where . The algorithm has three steps. In the first step, the bipartite graph is converted into a unipartite graph with time complexity . Here, d denotes the maximum vertex degree of the given bipartite graph. Treating d as a constant, the complexity of the first step can be rewritten as O(|V|). The second step of the algorithm computes PE and MI, and its complexity is . PMIS is computed in the last step with time complexity. Therefore, the total time complexity of the algorithm is . Moreover, the complexity improves to O(|V|) when the algorithm considers only candidate node pairs instead of every node pair. This complexity is better than the time complexity of the Katz algorithm.

Results and discussion

All experiments were conducted on a Linux server with an Intel Xeon E5-2630 v3 2.40 GHz CPU and 64 GB of memory running CentOS 7.4-1708. We implemented PMIL and all other algorithms in Python 3.7.0, mainly using the networkx, pandas, sklearn, numpy and matplotlib libraries.

Evaluation metrics

Two standard metrics are generally used to quantify the accuracy of prediction algorithms: the area under the receiver operating characteristic curve (AUC)33 and Precision34. In our extensive experiments, we used the following four metrics to test the performance of the proposed algorithm.

AUC AUC can be defined as the probability that a randomly chosen missing link (i.e., a link in the probe set $E^P$) is given a higher score than a randomly chosen nonexistent link (i.e., a node pair that is linked in neither the training set nor the probe set). If, among $n$ independent comparisons, the missing link has a higher score $n'$ times and the two have the same score $n''$ times, then the AUC score is calculated by the following equation.

$$AUC = \frac{n' + 0.5\, n''}{n} \tag{19}$$

In general, a larger AUC value indicates better performance. The AUC of an ideal predictor is 1.0, while a purely random predictor scores about 0.5.
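Eq. (19) is usually estimated by sampling comparisons. A minimal sketch, assuming a dict that maps each candidate link to its score (for instance its PMIS value) plus lists of probe and nonexistent links; the function name, the sample size n and the fixed seed are illustrative choices, not values from the paper.

```python
import random


def auc(scores, probe, nonexistent, n=10_000, seed=0):
    """Eq. (19): AUC = (n' + 0.5 n'') / n over n sampled comparisons.

    `scores` maps each candidate link to its prediction score, `probe` holds
    the missing (test) links and `nonexistent` the links absent from both
    the training and probe sets.
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    probe, nonexistent = list(probe), list(nonexistent)
    higher = ties = 0  # n' and n''
    for _ in range(n):
        s_missing = scores[rng.choice(probe)]
        s_absent = scores[rng.choice(nonexistent)]
        if s_missing > s_absent:
            higher += 1
        elif s_missing == s_absent:
            ties += 1
    return (higher + 0.5 * ties) / n


scores = {("A", 2): 2.0, ("D", 1): 0.5, ("D", 2): 0.5}  # hypothetical scores
print(auc(scores, probe=[("A", 2)], nonexistent=[("D", 1), ("D", 2)]))  # 1.0
```

Ties count as half a success, so a predictor that gives every link the same score obtains an AUC of exactly 0.5.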

Precision Precision is defined as the ratio of relevant items selected to the number of items selected. After the scores are sorted, if $L_r$ of the top-L candidate links belong to the test set, Precision is given by the following equation.

$$Precision = \frac{L_r}{L} \tag{20}$$
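A sketch of Eq. (20), assuming a dict of candidate-link scores and a set of probe links; the names and scores are hypothetical. Setting L = K gives the Precision@K metric.

```python
def precision_at(scores, probe, L):
    """Eq. (20): Precision = L_r / L, where L_r is the number of probe links
    among the L highest-scoring candidate links (ties keep input order)."""
    top = sorted(scores, key=scores.get, reverse=True)[:L]
    hits = sum(1 for link in top if link in probe)
    return hits / L


scores = {"a": 0.9, "b": 0.8, "c": 0.4, "d": 0.1}  # hypothetical candidate scores
probe = {"a", "c"}
print(precision_at(scores, probe, 2), precision_at(scores, probe, 3))  # 0.5 0.6666666666666666
```

Python's `sorted` is stable, so tied scores keep their input order; a different tie-breaking rule can change precision slightly.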

Prediction-Power (PP) This metric measures the deviation from the mean performance of a random predictor20. PP is computed as follows:

$$PP = \log_{10} \frac{Precision}{Precision_{Random}} \tag{21}$$

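where $Precision_{Random}$ is the precision of a random predictor, computed as $Precision_{Random} = \frac{|E^P|}{|U|\,|V| - |E^T|}$, with $E^P$ the probe set and $E^T$ the training set.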
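Eq. (21) can be computed as below. The sketch assumes that a random predictor's precision equals the number of probe links divided by the number of unobserved node pairs; the function name and the numbers are hypothetical.

```python
import math


def prediction_power(precision, n_probe, n_unobserved):
    """Eq. (21): PP = log10(Precision / Precision_Random).

    Assumes Precision_Random = n_probe / n_unobserved, the chance that a
    uniformly chosen unobserved node pair is a probe (missing) link.
    """
    precision_random = n_probe / n_unobserved
    return math.log10(precision / precision_random)


# Hypothetical bipartite graph: |U| = 30, |V| = 40, 100 training links, 12 probe links
n_unobserved = 30 * 40 - 100
print(prediction_power(0.25, 12, n_unobserved))
```

A PP of 0 means the predictor is no better than random, and each unit of PP corresponds to a tenfold improvement in precision over random.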

Precision@K Precision@K is the fraction of correct predictions among the top K predictions34. In this paper, we computed Precision@10, Precision@20 and Precision@50; for example, Precision@10 is the precision over the top 10 positions of the ranking. For all four metrics, higher values indicate a better algorithm.

To evaluate the performance of our model, we used K-fold cross-validation (CV), in which the dataset is split into K folds. In each round, one fold is chosen as the probe set and the remaining folds are used as the training set. We set K = 10.
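The 10-fold protocol can be sketched as follows, assuming the observed edges are held in a list. Shuffling once and dealing edges round-robin into folds is one simple way to build the folds; the names, seed and toy edges are hypothetical.

```python
import random


def k_fold_edge_splits(edges, k=10, seed=0):
    """Yield (training, probe) edge lists for K-fold cross-validation.

    Edges are shuffled once and dealt round-robin into k folds, so every
    edge appears in exactly one probe set.
    """
    edges = list(edges)
    random.Random(seed).shuffle(edges)
    folds = [edges[f::k] for f in range(k)]
    for f in range(k):
        training = [e for g, fold in enumerate(folds) if g != f for e in fold]
        yield training, folds[f]


edges = [("u%d" % j, "v%d" % (j % 7)) for j in range(25)]  # hypothetical edges
sizes = [len(probe) for _, probe in k_fold_edge_splits(edges, k=10)]
print(sizes)  # [3, 3, 3, 3, 3, 2, 2, 2, 2, 2]
```

With 25 edges and K = 10, five probe folds hold three edges and five hold two, and every edge is used as a probe link exactly once.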

Datasets

We used ten real-world datasets to test the performance of the proposed algorithm: (1) MovieLens (ML)35 contains 100,000 ratings from 943 users on 1682 movies. (2) Enzyme (EN)36 is a biological network of drugs and enzyme proteins, with 445 drug nodes, 664 protein nodes and 2926 drug–target interactions. (3) The Southern Women network (SWN)37 represents 18 women who participated in 14 social events. (4) Corporate Leadership (CL)38 is a bipartite graph of person names and company names. (5) Club Membership (CM)39 contains participation data of corporate officials in social associations. (6) Ion channels (IC)36 is a biological network of drugs and ion-channel proteins. (7) Country-Organization (C2O) is a global network of countries and organizations. (8) Drug target (Drug)40 is a chemical network of drug–target interactions. (9) G-protein coupled receptors (GPC)36 is a biological network. (10) Malaria (Mal)41 is a genetic network. Table 2 shows the topological features of all the datasets.

Table 2.

Statistics of the datasets, where ML (MovieLens), EN (Enzyme), SWN (Southern Women Network), CL (Corporate Leadership), CM (Club Membership), IC (Ion channels), C2O (Country-Organizations), Mal (Malaria), GPC (G-protein coupled receptors) and Drug (Drug Target) are the names of the datasets, and Dataset types indicate the domain of each dataset.

| Name of dataset | Number of nodes | Number of edges | Average degree | Dataset types |
|---|---|---|---|---|
| ML | 2625 | 85,250 | 32.48 | Entertainment |
| EN | 1109 | 2926 | 5.2 | Biological |
| SWN | 32 | 89 | 5.5 | Social network |
| CL | 64 | 99 | 4.5 | Management |
| CM | 65 | 95 | 4.7 | Social network |
| IC | 414 | 1476 | 3.5 | Biological |
| C2O | 295 | 12,170 | 41.2 | Global network |
| Mal | 1103 | 2965 | 2.6 | Genetic network |
| GPC | 318 | 635 | 2 | Biological |
| Drug | 350 | 454 | 1.3 | Chemical network |

Results To test the strength of the PMIL algorithm, we performed extensive experiments on ten real-world datasets and compared it with eleven baseline link prediction techniques. Since the proposed algorithm is a similarity-based technique, we mainly considered similarity-based algorithms for comparison. The baseline techniques are Common Neighbors (CN), Jaccard Coefficient (JC), Preferential Attachment (PA), Cannistraci–Alanis–Ravasi (CAR), Cannistraci–Jaccard (CJC), Cannistraci–Adamic–Adar (CAA), Cannistraci Resource Allocation (CRA), Nonnegative Matrix Factorization (NMF), Cosine (CS), Potential Link Prediction (PLP) and Bipartite projection via Random-walk (BPR)10,21,42. Of these 11 baseline algorithms, three are based on the node neighbourhood mechanism, four on the LCP mechanism, three on the projection mechanism and one on the latent feature mechanism.

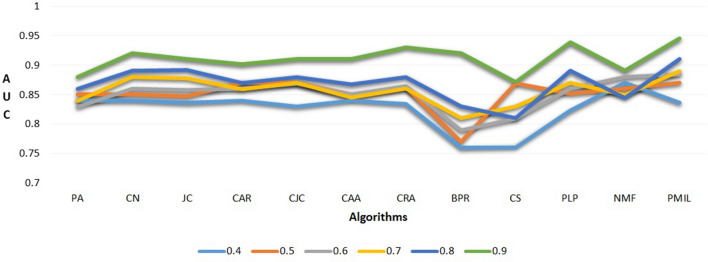

The AUC and Precision values of the proposed algorithm and the baseline algorithms are listed in Tables 3 and 4, respectively. In these tables, each row represents a method and each column represents a dataset. The largest value in each column is shown in bold. For each of the 10 datasets, the test set contains 10% of the edges and the training set contains 90%. Table 3 shows that PMIL outperforms the eleven baseline link prediction algorithms on seven datasets in terms of AUC. On the CL and IC datasets, the winners are CAA and PLP, respectively, and on C2O, CAA and CRA tie with the highest AUC (1.00). A higher AUC means that when the ROC curve is drawn by plotting true-positive rates (TPR) against false-positive rates (FPR) for varying L, where L is the number of top-ranked links taken as predicted links, the total area under the curve is larger, which indicates better prediction quality.

The results in Table 4 show that PMIL gives the best precision values on six datasets (ML, EN, SWN, CM, Mal, GPC). CAA and PLP are the winners on the CL and IC datasets, respectively, and BPR performs best on both the C2O and Drug datasets in terms of Precision.

Figure 5 shows the effect of the training set size on AUC for the Drug dataset, with the training set size varied from 40% to 90%. As Fig. 5 shows, PMIL and all baseline algorithms achieve better AUC scores as the training set grows to 90%.

Table 3.

AUC comparison results on ten datasets (ML, EN, SWN, CL, CM, IC, C2O, Mal, GPC and Drug).

| Method | ML | EN | SWN | CL | CM | IC | C2O | Mal | GPC | Drug |
|---|---|---|---|---|---|---|---|---|---|---|
| PA | .881 | .788 | .648 | .773 | .764 | .823 | .901 | .591 | .720 | .880 |
| CN | .871 | .851 | .730 | .811 | .801 | .910 | .990 | .901 | .812 | .920 |
| JC | .791 | .880 | .663 | .821 | .798 | .850 | .950 | .901 | .821 | .910 |
| CAR | .912 | .867 | .726 | .940 | .906 | .916 | .990 | .910 | .811 | .901 |
| CJC | .882 | .867 | .762 | .960 | .940 | .925 | .990 | .910 | .831 | .910 |
| CAA | .910 | .851 | .760 | **.968** | .950 | .942 | **1.00** | .920 | .831 | .910 |
| CRA | .921 | .890 | .772 | .945 | .955 | .931 | **1.00** | .910 | .821 | .930 |
| BPR | .911 | .891 | .742 | .943 | .959 | .920 | .990 | .901 | .840 | .920 |
| CS | .831 | .836 | .761 | .775 | .882 | .835 | .960 | .821 | .801 | .871 |
| PLP | .930 | .889 | .936 | .905 | .960 | **.945** | .965 | .906 | .849 | .938 |
| NMF | .891 | .761 | .692 | .854 | .846 | .850 | .990 | .861 | .702 | .890 |
| PMIL | **.945** | **.901** | **.945** | .940 | **.982** | .938 | .971 | **.921** | **.867** | **.945** |

Each dataset is divided into training set (90%) and test set (10%) and results are computed by averaging over 1000 runs.

Table 4.

Precision comparison results on ten datasets averaged over 1000 runs.

| Method | ML | EN | SWN | CL | CM | IC | C2O | Mal | GPC | Drug |
|---|---|---|---|---|---|---|---|---|---|---|
| PA | .153 | .023 | .122 | .110 | .157 | .036 | .871 | .022 | .081 | .313 |
| CN | .141 | .370 | .141 | .210 | .202 | .230 | .871 | .192 | .310 | .610 |
| JC | .001 | .031 | .021 | .042 | .036 | .021 | .601 | .250 | .012 | .383 |
| CAR | .177 | .507 | .188 | .189 | .202 | .432 | .871 | .191 | .332 | .601 |
| CJC | .184 | .496 | .188 | .217 | .231 | .494 | .871 | .232 | .361 | .191 |
| CAA | .181 | .502 | .122 | **.662** | .621 | .531 | .870 | .191 | .320 | .591 |
| CRA | .181 | .651 | .210 | .612 | .631 | .560 | .880 | .251 | .373 | .631 |
| BPR | .181 | .501 | .162 | .620 | .641 | .442 | **.931** | .253 | .271 | **.680** |
| CS | .120 | .330 | .163 | .165 | .455 | .349 | .661 | .142 | .201 | .491 |
| PLP | .191 | .491 | .410 | .210 | .620 | **.612** | .631 | .221 | .283 | .301 |
| NMF | .001 | .001 | .031 | .022 | .031 | .011 | .001 | .001 | .012 | .021 |
| PMIL | **.210** | **.661** | **.441** | .205 | **.651** | .581 | .601 | **.261** | **.401** | .310 |

Figure 5.

AUC values of the algorithms on the Drug dataset as the size of the training set varies from 0.4 to 0.9.

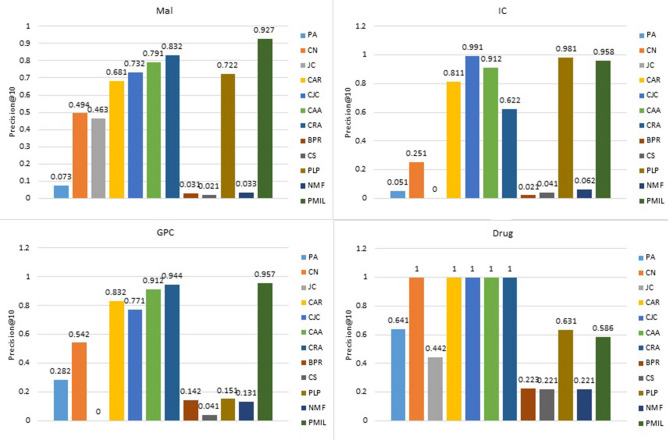

Figures 6, 7 and 8 show precision@K for different values of K. To keep the figures readable, we present the results of all twelve algorithms on four datasets. Figure 6 presents precision@10 for the Mal, IC, GPC and Drug datasets. On the IC dataset, CJC and PLP perform better than PMIL, but the proposed algorithm outperforms all eleven baseline algorithms on the Mal and GPC datasets. This improvement is useful in recommender systems, especially in e-commerce, where only the top 10, top 20 or, in general, top K results are shown to the customer.

Figure 6.

Comparison of precision@10 on the four datasets.

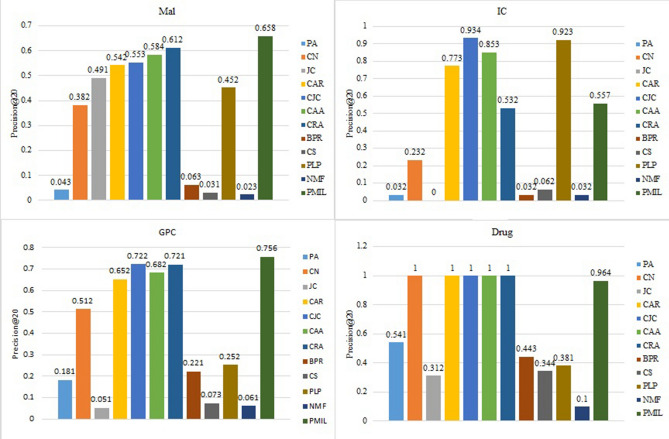

Figure 7.

Comparison of precision@20 on the four datasets.

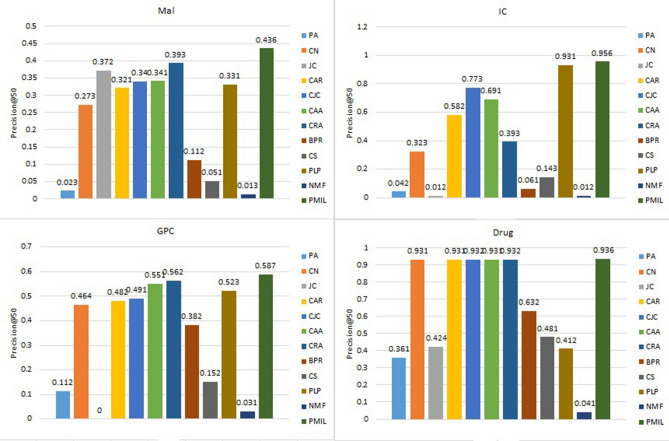

Figure 8.

Comparison of precision@50 on the four datasets.

Figures 7 and 8 show precision@20 and precision@50, respectively. The proposed algorithm achieves the highest precision@20 on the Mal and GPC datasets and the best precision@50 on the Mal, IC, GPC and Drug datasets. Interestingly, all the algorithms based on the local-community-paradigm (LCP) theory (CAR, CJC, CAA, CRA) and our proposed algorithm give nearly identical results on the Drug dataset.

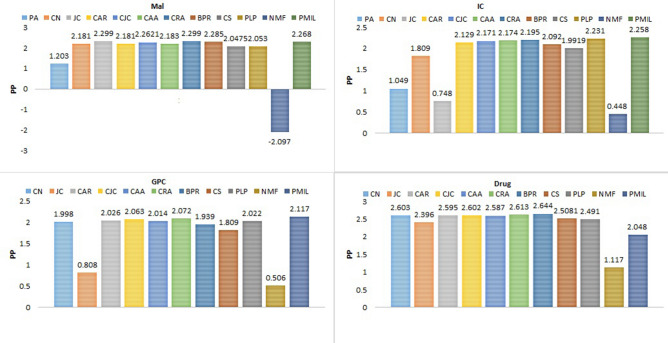

Figure 9 shows the prediction-power (PP) of all twelve algorithms on the four datasets. PMIL ranks first on the IC and GPC datasets; on the Mal dataset, JC and CRA share first place, and on the Drug dataset BPR performs best. The PP metric is useful for identifying which algorithms deviate least or most from the mean random predictor; in other words, it characterises how far an algorithm's performance departs from randomness.
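To our understanding of the LCP literature (refs. 20, 21), prediction-power is defined as PP = log10(P / P_rand), where P is the precision of a predictor and P_rand is the expected precision of a random predictor; PP = 0 means random performance and PP = 1 means ten times better than random. The sketch below assumes that the candidates are all cross-set node pairs not in the training set, so a uniformly random ranking has expected precision |E_test| / (n_top · n_bottom − |E_train|). The helper and its parameter names are hypothetical, and the paper's exact normalisation may differ.

```python
import math

def prediction_power(precision, n_top, n_bottom, n_train_edges, n_test_edges):
    """PP = log10(precision / expected precision of a random predictor).

    Assumption: candidates are all top-bottom pairs minus training links.
    """
    candidates = n_top * n_bottom - n_train_edges
    if candidates <= 0 or n_test_edges <= 0:
        raise ValueError("need at least one candidate pair and one test link")
    # Expected fraction of test links in any uniformly random selection.
    random_precision = n_test_edges / candidates
    if precision <= 0:
        # log10(0) is undefined; returning -inf is a design choice of this sketch.
        return float("-inf")
    return math.log10(precision / random_precision)
```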

Figure 9.

Comparison of prediction-power on the four datasets.

Conclusion

In this paper, we introduced a novel approach for link prediction in bipartite networks based on the concepts of potential energy and mutual information. The performance of the proposed algorithm was evaluated on ten datasets from different categories and compared with eleven baseline predictors in terms of AUC, Precision, Prediction-Power and Precision@K. In terms of AUC, PMIL performed best on seven of the ten datasets and was reasonably close to the best performer on the remaining three. Note that the AUC evaluation of PMIL assumes that all links in the network are independent of each other, whereas in real-world networks links are not necessarily independent. The structural properties of non-social network graphs may affect performance; it would therefore be interesting to study structural properties such as the community structure of the different datasets and to compare any significant differences between social networks and other datasets. In social networks a connection implies that an interaction took place in the past, but complete information about the connections may be missing. Other datasets may be noisy: in biological datasets, for example, connections are identified through experiments that are not always accurate. Extending PMIL to link prediction in weighted bipartite graphs, where the weight of an edge could represent the probability that the edge occurs, is therefore another direction for future research. Since link prediction has many applications, such as recommender systems, community detection and the discovery of hidden relationships, it would be worthwhile to pursue these directions and to design more efficient algorithms for link prediction in bipartite networks.

Acknowledgements

The authors would like to thank Prof. Karmeshu for insightful discussions and constant support.

Author contributions

D.S. conceived the initial idea; P.K. conducted the experiments; P.K. and D.S. analysed the results.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Liben-Nowell, D. & Kleinberg, J. The link-prediction problem for social networks. J. Am. Soc. Inf. Sci. Technol. 58, 1019–1031 (2007). doi: 10.1002/asi.20591

- 2. Yu, H. et al. High-quality binary protein interaction map of the yeast interactome network. Science 322, 104–110 (2008). doi: 10.1126/science.1158684

- 3. Mutinda, F. W., Nakashima, A., Takeuchi, K., Sasaki, Y. & Onizuka, M. Time series link prediction using NMF. In 2019 IEEE International Conference on Big Data and Smart Computing (BigComp) 1–8 (IEEE, 2019).

- 4. Li, X. & Chen, H. Recommendation as link prediction in bipartite graphs: a graph kernel-based machine learning approach. Decis. Supp. Syst. 54, 880–890 (2013). doi: 10.1016/j.dss.2012.09.019

- 5. Kurt, Z., Ozkan, K., Bilge, A. & Gerek, O. N. A similarity-inclusive link prediction based recommender system approach. Elektron. IR Elektrotech. 25, 62–69 (2019). doi: 10.5755/j01.eie.25.6.24828

- 6. Lü, L. & Zhou, T. Link prediction in complex networks: a survey. Physica A Stat. Mech. Appl. 390, 1150–1170 (2011). doi: 10.1016/j.physa.2010.11.027

- 7. Bilgic, M., Namata, G. M. & Getoor, L. Combining collective classification and link prediction. In Seventh IEEE International Conference on Data Mining Workshops (ICDMW 2007) 381–386 (IEEE, 2007).

- 8. Doppa, J. R., Yu, J., Tadepalli, P. & Getoor, L. Chance-constrained programs for link prediction. In NIPS Workshop on Analyzing Networks and Learning with Graphs (2009).

- 9. Wang, C., Satuluri, V. & Parthasarathy, S. Local probabilistic models for link prediction. In Seventh IEEE International Conference on Data Mining (ICDM 2007) 322–331 (IEEE, 2007).

- 10. Newman, M. E. Clustering and preferential attachment in growing networks. Phys. Rev. E 64, 025102 (2001). doi: 10.1103/PhysRevE.64.025102

- 11. Al Hasan, M., Chaoji, V., Salem, S. & Zaki, M. Link prediction using supervised learning. In SDM06: Workshop on Link Analysis, Counter-terrorism and Security (2006).

- 12. Sa, H. R. & Prudencio, R. B. Supervised learning for link prediction in weighted networks. In III International Workshop on Web and Text Intelligence (2010).

- 13. Benchettara, N., Kanawati, R. & Rouveirol, C. Supervised machine learning applied to link prediction in bipartite social networks. In 2010 International Conference on Advances in Social Networks Analysis and Mining 326–330 (IEEE, 2010).

- 14. Chang, Y.-J. & Kao, H.-Y. Link prediction in a bipartite network using Wikipedia revision information. In 2012 Conference on Technologies and Applications of Artificial Intelligence 50–55 (IEEE, 2012).

- 15. Kunegis, J., De Luca, E. W. & Albayrak, S. The link prediction problem in bipartite networks. In International Conference on Information Processing and Management of Uncertainty in Knowledge-based Systems 380–389 (Springer, 2010).

- 16. Xia, S., Dai, B., Lim, E.-P., Zhang, Y. & Xing, C. Link prediction for bipartite social networks: the role of structural holes. In Proceedings of the 2012 International Conference on Advances in Social Networks Analysis and Mining (ASONAM 2012) 153–157 (IEEE Computer Society, 2012).

- 17. Shams, B. & Haratizadeh, S. SibRank: signed bipartite network analysis for neighbor-based collaborative ranking. Physica A Stat. Mech. Appl. 458, 364–377 (2016). doi: 10.1016/j.physa.2016.04.025

- 18. Allali, O., Magnien, C. & Latapy, M. Link prediction in bipartite graphs using internal links and weighted projection. In 2011 IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS) 936–941 (IEEE, 2011).

- 19. Li, R.-H., Yu, J. X. & Liu, J. Link prediction: the power of maximal entropy random walk. In Proceedings of the 20th ACM International Conference on Information and Knowledge Management 1147–1156 (ACM, 2011).

- 20. Cannistraci, C. V., Alanis-Lobato, G. & Ravasi, T. From link-prediction in brain connectomes and protein interactomes to the local-community-paradigm in complex networks. Sci. Rep. 3, 1613 (2013). doi: 10.1038/srep01613

- 21. Daminelli, S., Thomas, J. M., Durán, C. & Cannistraci, C. V. Common neighbours and the local-community-paradigm for topological link prediction in bipartite networks. N. J. Phys. 17, 113037 (2015). doi: 10.1088/1367-2630/17/11/113037

- 22. Baltakiene, M. et al. Maximum entropy approach to link prediction in bipartite networks. arXiv preprint arXiv:1805.04307 (2018).

- 23. Saracco, F., Di Clemente, R., Gabrielli, A. & Squartini, T. Randomizing bipartite networks: the case of the World Trade Web. Sci. Rep. 5, 10595 (2015). doi: 10.1038/srep10595

- 24. Gao, M. et al. Projection-based link prediction in a bipartite network. Inf. Sci. 376, 158–171 (2017). doi: 10.1016/j.ins.2016.10.015

- 25. Shakibian, H. & Charkari, N. M. Mutual information model for link prediction in heterogeneous complex networks. Sci. Rep. 7, 44981 (2017). doi: 10.1038/srep44981

- 26. Aslan, S., Kaya, B. & Kaya, M. Predicting potential links by using strengthened projections in evolving bipartite networks. Physica A Stat. Mech. Appl. 525, 998–1011 (2019). doi: 10.1016/j.physa.2019.04.011

- 27. Cimini, G. et al. The statistical physics of real-world networks. Nat. Rev. Phys. 1, 58–71 (2019). doi: 10.1038/s42254-018-0002-6

- 28. Boguñá, M. et al. Network geometry. arXiv preprint arXiv:2001.03241 (2020).

- 29. Shannon, C. E. A mathematical theory of communication. ACM SIGMOBILE Mob. Comput. Commun. Rev. 5, 3–55 (2001). doi: 10.1145/584091.584093

- 30. Cover, T. M. & Thomas, J. A. Elements of Information Theory (Wiley, New York, 2012).

- 31. Tan, F., Xia, Y. & Zhu, B. Link prediction in complex networks: a mutual information perspective. PLoS ONE 9, e107056 (2014). doi: 10.1371/journal.pone.0107056

- 32. Kleinberg, J. M. Navigation in a small world. Nature 406, 845 (2000). doi: 10.1038/35022643

- 33. Hanley, J. A. & McNeil, B. J. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 143, 29–36 (1982). doi: 10.1148/radiology.143.1.7063747

- 34. Herlocker, J. L., Konstan, J. A., Terveen, L. G. & Riedl, J. T. Evaluating collaborative filtering recommender systems. ACM Trans. Inf. Syst. (TOIS) 22, 5–53 (2004). doi: 10.1145/963770.963772

- 35. Harper, F. M. & Konstan, J. A. The MovieLens datasets: history and context. ACM Trans. Interact. Intell. Syst. (TiiS) 5, 19 (2016).

- 36. Yamanishi, Y., Araki, M., Gutteridge, A., Honda, W. & Kanehisa, M. Prediction of drug-target interaction networks from the integration of chemical and genomic spaces. Bioinformatics 24, i232–i240 (2008). doi: 10.1093/bioinformatics/btn162

- 37. Davis, A., Gardner, B. B. & Gardner, M. R. Deep South: A Social Anthropological Study of Caste and Class (The University of Chicago Press, Chicago, 1941).

- 38. Barnes, R. & Burkett, T. Structural redundancy and multiplicity in corporate networks. Int. Netw. Soc. Netw. Anal. 30, 4–20 (2010).

- 39. Faust, K. Centrality in affiliation networks. Soc. Netw. 19, 157–191 (1997). doi: 10.1016/S0378-8733(96)00300-0

- 40. Yamanishi, Y. et al. DINIES: drug-target interaction network inference engine based on supervised analysis. Nucl. Acids Res. 42, W39–W45 (2014). doi: 10.1093/nar/gku337

- 41. Rask, T. S., Hansen, D. A., Theander, T. G., Pedersen, A. G. & Lavstsen, T. Plasmodium falciparum erythrocyte membrane protein 1 diversity in seven genomes – divide and conquer. PLoS Comput. Biol. 6, e1000933 (2010). doi: 10.1371/journal.pcbi.1000933

- 42. Lee, D. D. & Seung, H. S. Algorithms for non-negative matrix factorization. Adv. Neural Inf. Process. Syst. 13, 556–562 (2001).