Abstract

Breast CT provides image volumes with isotropic resolution and high contrast, enabling the detection of small calcifications (down to a few hundred microns in size) and subtle density differences. Since breast tissue is sensitive to x-ray radiation, dose reduction in breast CT is an important topic, and few-view scanning is a major approach for this purpose. In this article, we propose a Deep Efficient End-to-end Reconstruction (DEER) network for few-view breast CT image reconstruction. The major merits of our network include high dose efficiency, excellent image quality, and low model complexity. By design, the proposed network can learn the reconstruction process with as few as O(N) parameters, where N is the side length of an image to be reconstructed. This is an improvement of orders of magnitude over state-of-the-art deep-learning-based reconstruction methods that map raw data to tomographic images directly. Validated on a cone-beam breast CT dataset prepared by Koning Corporation on a commercial scanner, our method also achieves image quality competitive with state-of-the-art reconstruction networks. The source code of this paper is available at: https://github.com/HuidongXie/DEER.

Keywords: Breast CT, Deep learning, Few-view CT, Low-dose CT, X-ray CT

I. Introduction

According to the American Cancer Society, breast cancer remains the second leading cause of cancer death among women in the United States, and approximately 40,000 people die from breast cancer each year [1]. A woman's lifetime chance of developing this disease is 1 in 8. The wide use of x-ray mammography, which can detect breast cancer at an early stage, has helped reduce the death rate. For breast cancer patients with histologic grade 1, the five-year relative survival rates by stage at diagnosis are 97% (local stage), 89% (regional stage), and 24% (distant stage) [2]. These data indicate that early detection plays a crucial role in improving the prognosis of breast cancer patients. Therefore, the development of high-performance breast imaging techniques will directly benefit these patients.

Mammography is a 2D imaging technique in which structures overlap along the x-ray paths, severely degrading image contrast. While breast tomosynthesis is a pseudo-3D imaging technique, breast CT provides a high-quality image volume and promises superior diagnostic performance. Indeed, CT is one of the most essential imaging modalities in clinical practice [3]. Although CT brings overwhelming healthcare benefits, it may potentially increase cancer risk due to the ionizing radiation involved [4]. Since breast tissue is particularly sensitive to x-ray radiation, dose reduction in breast CT is directly relevant to healthcare. If the effective dose of routine CT examinations is reduced to 1 mSv per scan, the long-term risk of CT scans can be considered negligible. The average mean glandular dose of a typical breast CT scanner ranges between 7 and 13.9 mSv, while the standard radiation dose currently set by the Food and Drug Administration (FDA) is < 6 mSv. This gap demands major research efforts.

In past years, several deep-learning-based low-dose CT denoising methods were proposed to reduce radiation dose, with excellent results [5]-[7]. In parallel, few-view CT is also a promising approach to reduce the radiation dose, especially for breast CT [8] and C-arm CT [9], [10]. Moreover, few-view CT may be implemented in mechanically stationary scanners in the future, avoiding all problems associated with a rotating gantry. Recently, data-driven algorithms have shown great promise in solving the few-view CT problem [11]. For example, FBPConvNet [12] uses the classical U-net [13] structure with conveying paths to remove streak artifacts. The residual convolutional neural network (Residual-CNN) [14] implements residual paths [15] in a CNN to link earlier layers to later layers. Also, a shallow architecture was developed [16] that learns a weighted combination of multiple filtered back-projection (FBP) [17] reconstructions with different learned filters.

Few-view CT is a hot topic in the field of tomographic image reconstruction. Because of the requirement imposed by the Nyquist sampling theorem [18], reconstructing high-quality CT images from under-sampled data has traditionally been considered impossible. When sufficient projection data are acquired, analytical methods such as filtered back-projection (FBP) are widely used for accurate image reconstruction. However, in the few-view setting, severe streak artifacts are introduced into analytically reconstructed images due to the incompleteness of the projection data. To overcome this issue, various iterative techniques were proposed to incorporate prior knowledge into the image reconstruction process. Well-known methods include the algebraic reconstruction technique (ART) [19], the simultaneous algebraic reconstruction technique (SART) [20], and expectation maximization (EM) [21]. Nevertheless, these iterative methods are time-consuming and still fail to produce satisfactory results in many challenging cases. Recently, deep learning has become very popular owing to advances in neural networks, high-performance computing (such as graphics processing units (GPUs)), and big data science and technology. In particular, deep learning has now become a new frontier of CT reconstruction research [11], [22], [23].

In the literature, only a few deep learning methods have been proposed for learning a network-based reconstruction algorithm from raw data. Zhu et al. [24] proposed AUTOMAP, which uses fully-connected layers to learn the mapping from raw k-space data to the corresponding MRI image with O(N⁴) parameters, where N denotes the side length of a reconstructed image. A similar technique can certainly be implemented to learn the mapping from the projection domain to the image domain for CT or other tomographic imaging modalities, as explained in our perspective article [11]. However, importing the whole sinogram into the network requires a huge amount of memory and makes it computationally challenging to train the network for a full-size CT image/volume on commercial GPUs. Moreover, using fully-connected layers to learn the mapping assumes that every single point in the data domain is related to every single point in the image domain. While this assumption is generally correct, it does not utilize the intrinsic structure of the tomographic imaging process. In the case of CT scanners, x-rays generate line integrals from different angles over a field of view (FOV) for image reconstruction. Therefore, projection data within each view and at different orientations are correlated to varying degrees. Würfl et al. [25] replaced the fully-connected layer in the network with a back-projection operator to reduce the computational burden. Even though their method reduces the memory cost by not storing a large matrix in the GPU, the back-projection process is no longer learnable. Hu et al. introduced a learned experts' assessment-based reconstruction network (LEARN) [26] for few-view CT. LEARN maps few-view projection data to a reconstructed image by unrolling a classic iterative process in a data-driven manner. The main difference between our method and LEARN is that the back-projection operation in LEARN is fixed and not learnable.
In contrast, our method learns an improved network-based back-projection to address the model mismatch encountered by analytical reconstruction methods in few-view settings and to enhance reconstruction quality accordingly. Another deep-learning-based CT reconstruction method [27], known as iCT-Net, uses multiple small fully-connected layers and incorporates viewing-angle information in learning the mapping from sinograms to images. iCT-Net reduces the computational complexity from O(N⁴) for the network by Zhu et al. to O(N² × Nd), where Nd denotes the number of CT detector elements. In most CT scanners, Nd is equal to or greater than N, so this complexity is still large for CT reconstruction. He et al. proposed the iRadonMAP framework [28] to simulate the inverse Radon transform by deep learning, in which a sinusoid in the filtered sinogram contributes to a single point in the image domain.

Here we propose a Deep Efficient End-to-end Reconstruction (DEER) network for low-dose few-view breast CT image reconstruction from raw measurement data. The major merits of our network include high dose efficiency, excellent image quality, and low model complexity. Computationally, the number of parameters required by DEER is as few as O(N). In this study, the number of parameters is set to O(Nv × N²) for better performance, where Nv is the number of projections. A network that uses O(N) parameters will also be presented in Section III-D. Since Nv is much less than Nd in the few-view case, this compares favorably with the complexity of iCT-Net. In addition, our network design allows us to split the reconstruction algorithm into several branches so that DEER can be trained to reconstruct large breast CT images (1024 × 1024) directly on a regular GPU. Different from the iRadonMAP method, DEER learns the reconstruction process by letting every single point in the projection domain relate to a line in the image domain. DEER is more efficient than iRadonMAP because it requires neither interpolation in the projection domain nor a fully-connected layer for filtering. Moreover, DEER does not require pretraining on another dataset, which simplifies and speeds up the training process. Lastly, DEER can be applied to real cone-beam data obtained on a commercial scanner, while iRadonMAP is designed for parallel-beam data. In this study, real cone-beam CT data provided by Koning Corporation were used to train, validate, and test the proposed method.

The proposed DEER is inspired by the well-known filtered back-projection mechanism and is designed to learn a refined filtration and back-projection for data-driven image reconstruction. Every point in the sinogram domain relates only to the pixels/voxels on a single x-ray path through the FOV. This means that line integrals acquired by different detector elements at a particular angle are not directly related to each other. Also, after an appropriate filtering operation, a filtered projection profile must be smeared back over the FOV. These two ray-oriented processes suggest that the reconstruction process can, to a large degree, be learned in a point-wise manner, which is the main idea DEER uses to reduce the memory burden. In direct comparisons with other recently published state-of-the-art reconstruction algorithms on a breast CT dataset collected by Koning Corporation on a commercial scanner, DEER achieves image quality comparable or superior to that of existing reconstruction methods.

The rest of the paper is organized as follows. In Section II, the methodology is detailed. In Section III, the experimental setup is described, along with representative results and ablation study. In Section IV, we discuss relevant issues and clinical applications of the proposed method and conclude this paper.

II. Methodology

A. Proposed Framework

Image reconstruction for few-view CT can be expressed as follows:

$$ I_{FullV} = R^{-1}\left(S_{FewV}\right) \tag{1} $$

where I is an image of N × N pixels, S is a sinogram of Nv × Nd data with Nd denoting the number of detector elements, the subscripts FullV and FewV stand for full-view and few-view respectively, and R⁻¹ denotes an inverse transform [29], [30], such as FBP in the case of sufficient 2D projection data. Alternatively, CT image reconstruction can be formulated as solving a system of linear equations. Ideally, the FBP method produces satisfactory results when sufficient high-quality projection data are available. However, when the number of linear equations is less than the number of unknown pixels/voxels, as in the few-view CT setting, image reconstruction becomes an underdetermined problem, and even an iterative algorithm cannot reconstruct satisfactory images in difficult cases. Recently, deep learning has provided a novel way to extract features of raw data for image reconstruction. With a deep neural network, training data can serve as strong prior knowledge to establish the relationship between a sinogram and the corresponding CT image, helping to solve this underdetermined problem efficiently.
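The counting argument can be illustrated with a toy example. The sketch below is not part of DEER: it assumes a random matrix as a stand-in for a few-view system matrix (rows are line integrals, columns are pixels), with arbitrary sizes, and shows that two different images can produce exactly the same measurements, so the data alone cannot decide between them.

```python
import numpy as np

def null_space_example(n_equations: int = 30, n_unknowns: int = 64, seed: int = 0):
    """Return (A, x_true, x_alt) with A @ x_true == A @ x_alt but x_true != x_alt.

    A is a random stand-in for a few-view system matrix (hypothetical sizes):
    fewer equations (line integrals) than unknowns (pixels).
    """
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((n_equations, n_unknowns))
    x_true = rng.random(n_unknowns)
    # Rows of Vt beyond rank(A) span the null space of A.
    _, s, vt = np.linalg.svd(A)
    rank = int(np.sum(s > 1e-10))
    null_vec = vt[rank]           # a unit-norm direction the measurements cannot see
    x_alt = x_true + 5.0 * null_vec
    return A, x_true, x_alt

A, x_true, x_alt = null_space_example()
print(np.allclose(A @ x_true, A @ x_alt))   # True: identical "sinograms"
print(np.linalg.norm(x_true - x_alt))       # about 5.0: yet the "images" differ
```

Any vector in the null space of the system matrix is invisible to the scanner; a learned prior is one way to pick among the many images that agree with the data.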

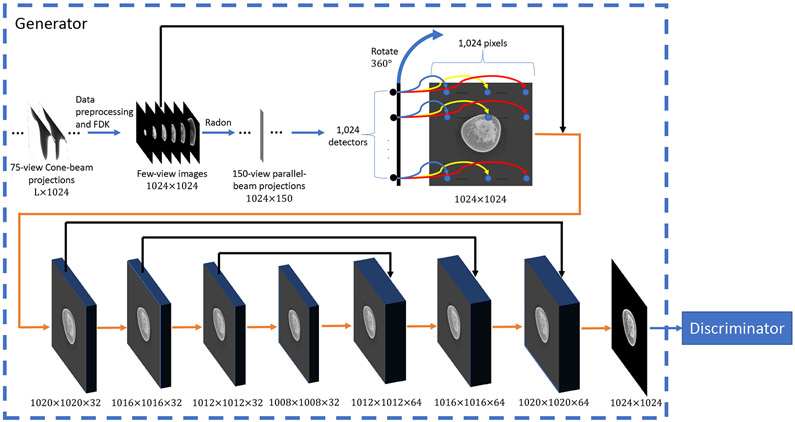

Note that in this pilot study, DEER is designed to perform 2D breast image reconstruction from 3D cone-beam data so that the memory requirement can be met by our current GPU workstation. In principle, the proposed method could perform 3D CT image reconstruction because the embedded physical properties are the same in the 2D and 3D cases. However, GPU memory is still a bottleneck for processing 3D raw data directly. The dimensionality of the raw scan provided by Koning is 1024 × 300 × L (1024 × Nv × L in few-view cases, where Nv is much less than 300; Nv = 75 in this study), where L depends on breast size. Since the entire cone-beam dataset is needed to reconstruct a 3D image volume, there is no feasible way for us to process 3D data on our current hardware. Hence, we developed a strategy to overcome this difficulty while still utilizing the raw data from Koning. Our strategy and the overall workflow of the DEER network are presented in Fig. 1. First, the Feldkamp, Davis, and Kress (FDK) algorithm [31] is used to convert Koning 75-view cone-beam projections (the original input), via intermediate FDK-type image reconstruction, into 150-view parallel-beam projections (the intermediate output) through a pre-selected transverse slice (at a pre-specified longitudinal position). By doing so, DEER circumvents the above-mentioned memory limitation and performs slice-based image reconstruction. Since the parallel-beam projections are computed from few-view images instead of the ground-truth image volume, they are of low quality. To integrate all the information, the intermediate FDK-type few-view images are also included in the overall workflow from raw few-view data to the final image reconstruction, as illustrated in Fig. 1. Our ablation studies and the corresponding results, presented in Section III-C, demonstrate that DEER is better than competing image-domain methods.
Moreover, our previous conference publication [32], which was based entirely on simulations, showed that a network-based reconstruction algorithm outperforms post-processing networks in terms of image quality.

Fig. 1:

Workflow of the proposed DEER network. The numbers below each block indicate its dimensionality. L is breast length. Orange arrows indicate 5×5 convolutional layers with 32 filters, stride 1, and no zero-padding. Black arrows indicate concatenate operations.

The DEER network is built on the Wasserstein Generative Adversarial Network (WGAN) framework [33]. Compared with the original generative adversarial network (GAN) [34], WGAN is more stable and less sensitive to the network topology. The effectiveness of the WGAN framework will also be demonstrated in Section III-C. In this study, the proposed framework consists of two components: a generator network G and a discriminator network D. G aims to reconstruct images directly from a batch of few-view sinograms. D receives images from either G or the ground-truth dataset and tries to distinguish whether the input image is real (ground truth) or fake (from G). Both networks are optimized during training. If an optimized network D can hardly distinguish fake images from real ones, we say that the generator G can fool the discriminator D, which is the goal of WGAN. By design, the network D also helps improve the texture of the final image and prevents over-smoothing.

Different from the vanilla GAN [34], WGAN replaces the cross-entropy loss function with the Wasserstein distance, improving training stability. In the original WGAN framework, the discriminator is constrained to be a 1-Lipschitz function through weight clipping. However, it was pointed out [35] that weight clipping may be problematic in WGAN and can be replaced with a gradient penalty, which is implemented in our proposed framework. Hence, the objective function of the network D is expressed as follows:

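$$ \min_{\theta_D} \; \mathbb{E}_{S_{FewV}}\left[D\left(G(S_{FewV})\right)\right] - \mathbb{E}_{I_{FullV}}\left[D(I_{FullV})\right] + \lambda\, \mathbb{E}_{\hat{I}}\left[\left(\left\lVert \nabla_{\hat{I}} D(\hat{I}) \right\rVert_2 - 1\right)^2\right] \tag{2} $$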

where SFewV and IFullV represent few-view sinograms and ground-truth images respectively, E_a[b] denotes the expectation of b as a function of a, θG and θD represent the trainable parameters of networks G and D respectively, Î = α·IFullV + (1 − α)·G(SFewV), and α is uniformly sampled from the interval [0, 1]. In other words, Î represents images lying between fake and real images. ∇_Î D(Î) denotes the gradient of D with respect to Î, and λ is a parameter used to balance the Wasserstein distance term and the gradient penalty term. As suggested in [33]-[35], the networks D and G are updated alternately.
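A minimal NumPy sketch of the critic objective in Eq. (2) may help make the three terms concrete. The critic here is a hypothetical two-layer toy, D(x) = v · tanh(Wx), chosen only because its input gradient can be written by hand; DEER's actual D is the convolutional network described in Section II-C, and a TensorFlow implementation would normally obtain the gradient through automatic differentiation (for example `tf.GradientTape`) instead. The sizes `DIM` and `HIDDEN` are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM, HIDDEN = 16, 8                        # toy "image" length and critic width (assumed)
W = rng.standard_normal((HIDDEN, DIM)) * 0.3
v = rng.standard_normal(HIDDEN) * 0.3

def critic(x):
    """Toy critic D(x) = v . tanh(W x), applied row-wise to a batch x of shape (B, DIM)."""
    return np.tanh(x @ W.T) @ v

def critic_grad(x):
    """Analytic input gradient dD/dx for each row: W^T (v * (1 - tanh^2(W x)))."""
    h = np.tanh(x @ W.T)                   # (B, HIDDEN)
    return (v * (1.0 - h ** 2)) @ W        # (B, DIM)

def critic_loss(real, fake, lam=10.0):
    """WGAN-GP objective for D with the structure of Eq. (2); returns (total, W-term, penalty)."""
    alpha = rng.uniform(0.0, 1.0, size=(real.shape[0], 1))   # one alpha per sample
    x_hat = alpha * real + (1.0 - alpha) * fake              # points between real and fake
    grad_norm = np.linalg.norm(critic_grad(x_hat), axis=1)
    wasserstein = critic(fake).mean() - critic(real).mean()
    penalty = lam * np.mean((grad_norm - 1.0) ** 2)
    return wasserstein + penalty, wasserstein, penalty

real = rng.standard_normal((4, DIM))       # stand-in for ground-truth images
fake = rng.standard_normal((4, DIM))       # stand-in for G(S_FewV)
total, w_term, gp_term = critic_loss(real, fake)
```

Minimizing this quantity pushes D to score real images above generated ones while keeping its gradient norm near 1 on the interpolated samples Î, which is the soft replacement for weight clipping.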

B. Generator Network

The input to DEER is the original 75-view cone-beam data. First, a normalized logarithm operation, p = −ln(I/I0), where I and I0 here denote the detected and unattenuated x-ray intensities, converts the raw intensity profiles into line integrals of linear attenuation coefficients, along with the other data preprocessing steps suggested by Koning. These further preprocessing steps include applying the Shepp-Logan filter and excluding abnormal detector measurements. FDK few-view reconstructions are then performed to provide a basis for estimating parallel-beam projections through a pre-specified transverse image slice. After that, 150-view parallel-beam projections are produced via ray-tracing at an adequate sampling rate and further filtered using the Shepp-Logan kernel, which is the filter recommended by Koning. We estimated only 150-view parallel-beam projections from the FDK-reconstructed volumes because 150 views is the largest number that our current workstation can handle in an acceptable training time. The conversion from divergent-beam data to parallel-beam data speeds up training within the available GPU memory (otherwise, the entire cone-beam data volume would need to be loaded into GPU memory). In DEER, the filtration is performed in the Fourier domain via multiplication. The filtered sinogram data are then passed into the generator network G, which learns a network-based back-projection and outputs a reconstructed image. This generator network can be divided into two components: back-projection and refinement.
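The two per-sinogram operations named above, the normalized logarithm and Shepp-Logan filtering by multiplication in the Fourier domain, can be sketched in NumPy as follows. This is an illustrative version under stated assumptions, not Koning's preprocessing: the flat-field array, the zero-padding length, and the frequency-sampled ramp are choices made here (production code often designs the ramp in the spatial domain to avoid a DC offset), and the function names are hypothetical.

```python
import numpy as np

def intensities_to_line_integrals(intensity, flat_field):
    """Normalized logarithm p = -ln(I / I0) (Beer-Lambert); I0 is an assumed flat field."""
    ratio = np.clip(intensity / flat_field, 1e-12, None)   # guard against log(0)
    return -np.log(ratio)

def shepp_logan_filter(sinogram):
    """Filter each row (one view) of an (n_views, n_det) sinogram in the Fourier domain."""
    n_det = sinogram.shape[1]
    # Zero-pad to a power of two >= 2 * n_det to limit circular-convolution wrap-around.
    n_pad = int(2 ** np.ceil(np.log2(2 * n_det)))
    freqs = np.fft.fftfreq(n_pad)                 # cycles/sample, in [-0.5, 0.5)
    response = np.abs(freqs) * np.sinc(freqs)     # ramp |f| times sin(pi f)/(pi f)
    spectrum = np.fft.fft(sinogram, n=n_pad, axis=1)
    filtered = np.real(np.fft.ifft(spectrum * response, axis=1))
    return filtered[:, :n_det]                    # drop the padded tail

rng = np.random.default_rng(0)
p_true = rng.random((150, 128)) * 2.0             # hypothetical parallel-beam line integrals
flat = np.full_like(p_true, 1.0e4)                # hypothetical unattenuated counts
counts = flat * np.exp(-p_true)                   # Beer-Lambert forward model
p = intensities_to_line_integrals(counts, flat)   # recovers p_true
filtered = shepp_logan_filter(p)                  # (150, 128), ready for back-projection
```

The Shepp-Logan window attenuates the ramp toward the Nyquist frequency (to 2/π of the pure ramp at f = 0.5), which trades a little resolution for lower noise amplification.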

First, the filtered sinogram data are passed into the back-projection part of the network G, which aims to reconstruct a batch of images from projection data. As illustrated in Fig. 1, the reconstruction algorithm is inspired by the following intuition: every point in the sinograms relates only to pixel values on the associated x-ray path through the underlying image, and other pixels contribute little to it. With this intuition, the reconstruction process is learned in a point-wise manner using a point-wise fully-connected layer, so DEER can learn the back-projection process with as few as O(N) parameters, thereby reducing the memory overhead. Put differently, for a parallel-beam sinogram with dimensionality of Nv × N, there is a total of Nv × N small fully-connected layers in the proposed network. The input to each of these small fully-connected layers is a single point in the sinogram domain, and the output is a line-specific vector of N × 1 elements. After this point-wise fully-connected layer, rotation and summation are applied to simulate the FBP method, putting all the learned lines back where they belong. Bilinear interpolation [36] is used to keep the rotated images on a Cartesian grid. This design allows the network to learn the reconstruction process with only N parameters if all the small fully-connected layers share the same weights. However, due to the complexity of medical images, incomplete projection data, and the bilinear interpolation involved, N parameters are not sufficient to produce high-quality images. Therefore, we increased the number of parameters of this point-wise learning network to O(Nv × N²). That is, we made the network parameters specific to both viewing angles and x-ray paths to compensate for artifacts from bilinear interpolation and other factors. There is no bias term in the back-projection process.
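To make the point-wise design concrete, here is a NumPy sketch of the forward pass only (no training). It assumes that at angle 0 the rays run along image columns, that rotation is about the image centre with the sign convention of the hypothetical helper `rotate_bilinear`, and that `pointwise_backproject` is an illustrative name, not a function from the DEER code. With one length-N weight vector shared by all sinogram points the layer has N parameters; making the weights specific to each view and ray gives Nv × N × N.

```python
import numpy as np

def rotate_bilinear(img, angle_rad):
    """Rotate a square image about its centre using bilinear interpolation.

    Pixels whose source location falls outside the image are set to zero.
    """
    n = img.shape[0]
    c = (n - 1) / 2.0
    yy, xx = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    cos_a, sin_a = np.cos(angle_rad), np.sin(angle_rad)
    # Inverse mapping: locate the source coordinate of every output pixel.
    xs = cos_a * (xx - c) + sin_a * (yy - c) + c
    ys = -sin_a * (xx - c) + cos_a * (yy - c) + c
    x0, y0 = np.floor(xs).astype(int), np.floor(ys).astype(int)
    wx, wy = xs - x0, ys - y0
    out = np.zeros((n, n))
    # Accumulate the four neighbouring input pixels with bilinear weights.
    for dy, dx, w in ((0, 0, (1 - wy) * (1 - wx)), (0, 1, (1 - wy) * wx),
                      (1, 0, wy * (1 - wx)), (1, 1, wy * wx)):
        yi, xi = y0 + dy, x0 + dx
        inside = (yi >= 0) & (yi < n) & (xi >= 0) & (xi < n)
        out[inside] += w[inside] * img[yi[inside], xi[inside]]
    return out

def pointwise_backproject(filtered_sino, weights, angles_rad):
    """Point-wise learned back-projection (forward pass only, no bias).

    filtered_sino: (n_views, n) parallel-beam sinogram.
    weights:       (n_views, n, n); weights[v, t] is the length-n vector that the
                   single value filtered_sino[v, t] is expanded into along its ray
                   (one tiny fully-connected layer per sinogram point).
    """
    n_views, n = filtered_sino.shape
    image = np.zeros((n, n))
    for v in range(n_views):
        # Expand every detector value of view v into a learned line.
        lines = filtered_sino[v][:, None] * weights[v]   # (detector t, position along ray)
        # Lay the lines out as columns (rays vertical at angle 0), rotate, and sum.
        image += rotate_bilinear(lines.T, angles_rad[v])
    return image

# Toy usage: 8 views of a 32 x 32 image.
n_views, n = 8, 32
angles = np.linspace(0.0, np.pi, n_views, endpoint=False)
sino = np.random.default_rng(0).random((n_views, n))

# Shared weights: one length-n vector reused by every point -> n parameters.
shared = np.broadcast_to(np.ones(n), (n_views, n, n))
img_shared = pointwise_backproject(sino, shared, angles)

# View- and ray-specific weights -> n_views * n * n parameters.
specific = np.random.default_rng(1).normal(1.0, 0.05, size=(n_views, n, n))
img_specific = pointwise_backproject(sino, specific, angles)

# Parameter counts at the sizes used in this study (Nv = 150, N = 1024).
Nv, N = 150, 1024
print(Nv * N * N)   # 157286400 view- and ray-specific weights
print(N ** 4)       # 1099511627776 weights for a dense N^2-to-N^2 mapping
```

With all weights equal to one, each view simply smears its values back along the rays, which is the classical back-projection step; learning the weights lets the layer correct interpolation and few-view errors ray by ray.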

Images reconstructed by the back-projection portion are concatenated with the intermediate few-view FDK images and then fed into the refinement portion of the network G. Although the filtration and back-projection parts learn a refined FBP method, streak artifacts cannot be completely eliminated. The refinement part of G, a 9-layer U-net [13], is used to remove the remaining artifacts. U-net was originally designed for image segmentation and has been utilized in various medical imaging applications. For example, [5], [37] use U-net with conveying paths for CT image denoising, [12], [38] for few-view CT, and [39] for sparse-data MRI [40]. Each layer in the proposed U-net is followed by a rectified linear unit (ReLU). Thirty-two 5 × 5 kernels are used in both the convolutional and transpose-convolutional layers of the U-net. A stride of 1 is used for all down-sampling and up-sampling layers, and zero-padding is not applied, so each convolutional layer shrinks the feature-map side length by 4 pixels and each transpose-convolutional layer enlarges it by the same amount. Conveying paths are implemented to preserve high-level information and improve reconstruction quality.
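The size bookkeeping for unpadded, stride-1 layers can be checked with a few lines of Python. The split of the 9 layers into 4 convolutional and 4 transpose-convolutional layers (plus one remaining layer not modelled here) is an assumption for illustration; the text does not specify it, and the helper names are hypothetical.

```python
def conv_out(size, kernel=5, stride=1):
    """Spatial size after an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1

def transpose_conv_out(size, kernel=5, stride=1):
    """Spatial size after an unpadded transpose convolution."""
    return (size - 1) * stride + kernel

def unet_sizes(size=1024, depth=4):
    """Feature-map side lengths along the encoder and decoder paths (assumed depth)."""
    encoder = [size]
    for _ in range(depth):
        encoder.append(conv_out(encoder[-1]))
    decoder = [encoder[-1]]
    for _ in range(depth):
        decoder.append(transpose_conv_out(decoder[-1]))
    return encoder, decoder

enc, dec = unet_sizes()
print(enc)  # [1024, 1020, 1016, 1012, 1008]
print(dec)  # [1008, 1012, 1016, 1020, 1024]
# A conveying path joins each encoder level with the decoder level of equal size.
for k in range(len(enc)):
    assert enc[k] == dec[len(dec) - 1 - k]
```

Because every unpadded 5 × 5 convolution removes exactly 4 pixels and every matching transpose convolution adds them back, the conveying paths always connect feature maps of identical size without cropping.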

C. Discriminator Network

The discriminator network D takes an image from either G or the ground-truth dataset and tries to distinguish whether the input is real or fake. The discriminator network has 6 convolutional layers with 64, 64, 128, 128, 256, and 256 filters respectively, followed by 2 fully-connected layers with 1024 and 1 neurons respectively. A leaky ReLU activation function with a slope of 0.2 in the negative part is applied after each layer. All convolutional layers use 3 × 3 windows with zero-padding. The stride is 1 for odd layers and 2 for even layers.
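Under the assumptions of a single-channel 1024 × 1024 input, TensorFlow-style "same" zero-padding, and one bias per filter (none of which is stated explicitly above), the feature-map sizes and the convolutional parameter count of D can be worked out as follows; the function name is hypothetical.

```python
import math

def discriminator_conv_shapes(size=1024, in_channels=1,
                              filters=(64, 64, 128, 128, 256, 256)):
    """Feature-map shapes and conv parameter count for the six-layer stack of D.

    Assumes 3x3 kernels, 'same' zero-padding, stride 1 on odd and 2 on even
    layers, and one bias per filter.
    """
    shapes, n_params, channels = [], 0, in_channels
    for layer, out_channels in enumerate(filters, start=1):
        stride = 1 if layer % 2 == 1 else 2
        size = math.ceil(size / stride)                              # 'same' padding
        n_params += 3 * 3 * channels * out_channels + out_channels   # weights + biases
        channels = out_channels
        shapes.append((size, size, out_channels))
    return shapes, n_params

shapes, n_params = discriminator_conv_shapes()
for s in shapes:
    print(s)
print(n_params)   # 1144256
```

The stride-2 even layers halve the resolution three times, so under these assumptions the last convolutional layer outputs 128 × 128 × 256 features before the fully-connected layers.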

D. Objective Functions for the Generator Network

The objective function used to optimize the network G involves the mean absolute error (MAE) [41] and the structural similarity index (SSIM) [42]. The popular mean squared error (MSE) [37], [43], also a mean-based measurement, squares the errors and thus over-penalizes large differences while tolerating subtle errors in the reconstructed images; MAE does neither. Therefore, MAE addresses some disadvantages of the MSE loss, such as over-smoothing. The MAE loss is expressed as follows:

$$ L_{MAE} = \frac{1}{N_b W H} \sum_{i=1}^{N_b} \left\lVert Y_i - X_i \right\rVert_1 \tag{3} $$

where Nb, W, and H denote the number of images in a batch and the width and height of the involved images, respectively, and Yi and Xi represent the ground-truth images and the images reconstructed by the network G, respectively.

To ensure that the back-projection portion of the generator network G learns an accurate reconstruction from projection data, the MAE between the images reconstructed by the back-projection part and the ground-truth images is also added to the objective function. The formula is expressed as follows:

$$ L_{MAE}^{BP} = \frac{1}{N_b W H} \sum_{i=1}^{N_b} \left\lVert Y_i - X_i^{BP} \right\rVert_1 \tag{4} $$

where Xi^BP denotes the image reconstructed by the back-projection portion.

Since MAE does not account for the inter-dependencies between pixels in medical images, SSIM is introduced into the objective function to obtain visually appealing results. SSIM measures the structural similarity between two images. The window used to compute SSIM is set to 11 × 11. The SSIM formula is expressed as follows:

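$$ \mathrm{SSIM}(Y, X) = \frac{\left(2\mu_Y \mu_X + C_1\right)\left(2\sigma_{YX} + C_2\right)}{\left(\mu_Y^2 + \mu_X^2 + C_1\right)\left(\sigma_Y^2 + \sigma_X^2 + C_2\right)} \tag{5} $$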

where C1 = (K1 · R)² and C2 = (K2 · R)² are constants that stabilize the ratio when the denominator is too small, R stands for the dynamic range of pixel values, K1 = 0.01 and K2 = 0.03 are typical choices, and μY, μX, σY², σX², and σYX are the means of Y and X, the variances of Y and X, and the covariance between Y and X, respectively. Then, the structural loss becomes the following:

$$ L_{sl} = 1 - \mathrm{SSIM}(Y, X) \tag{6} $$

The adversarial loss helps the generator network produce faithful images that are indistinguishable by the discriminator network. In reference to Eq. (2), the adversarial loss is expressed as follows:

$$ L_{al} = -\mathbb{E}_{S_{FewV}}\left[D\left(G(S_{FewV})\right)\right] \tag{7} $$

The overall objective function of G is then summarized as follows:

$$ \min_{\theta_G} L_G = L_{MAE} + L_{MAE}^{BP} + \lambda_{al} L_{al} + \lambda_{sl} L_{sl} \tag{8} $$

where λal and λsl are hyper-parameters to balance different loss components.
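A compact NumPy sketch of the generator objective follows, assuming the four terms are combined as L_MAE + L_MAE^BP + λal·L_al + λsl·L_sl with the two MAE terms unweighted (only λal and λsl are named in the text). For brevity, SSIM is evaluated with a single window covering the whole image rather than the 11 × 11 sliding window used in the paper, and the function names are hypothetical.

```python
import numpy as np

def mae(y, x):
    """Mean absolute error over batch, width, and height (Eqs. (3) and (4))."""
    return np.mean(np.abs(y - x))

def ssim_global(y, x, data_range=1.0, k1=0.01, k2=0.03):
    """Eq. (5) evaluated with one window covering the whole image (simplification)."""
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mu_y, mu_x = y.mean(), x.mean()
    var_y, var_x = y.var(), x.var()
    cov_yx = np.mean((y - mu_y) * (x - mu_x))
    return ((2 * mu_y * mu_x + c1) * (2 * cov_yx + c2)) / (
        (mu_y ** 2 + mu_x ** 2 + c1) * (var_y + var_x + c2))

def generator_loss(y, x, x_bp, d_fake, lam_al=0.0025, lam_sl=0.8):
    """Composite generator loss; d_fake holds critic scores D(G(S)) for the batch."""
    l_mae = mae(y, x)                       # final output vs ground truth
    l_mae_bp = mae(y, x_bp)                 # back-projection output vs ground truth
    l_sl = np.mean([1.0 - ssim_global(yi, xi) for yi, xi in zip(y, x)])
    l_al = -np.mean(d_fake)                 # adversarial term, Eq. (7)
    return l_mae + l_mae_bp + lam_al * l_al + lam_sl * l_sl

rng = np.random.default_rng(0)
y = rng.random((3, 64, 64))                             # ground-truth batch
x = np.clip(y + rng.normal(0, 0.05, y.shape), 0, 1)     # stand-in for G output
x_bp = np.clip(y + rng.normal(0, 0.1, y.shape), 0, 1)   # stand-in for back-projection output
d_fake = rng.normal(size=3)                             # stand-in critic scores
print(generator_loss(y, x, x_bp, d_fake))
```

The default weights match the values λal = 0.0025 and λsl = 0.8 reported in Section II-E; the small adversarial weight keeps the WGAN term from dominating the pixel-wise losses.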

E. Parameter Settings and Training Details

Hyperparameter selection is an important issue. To balance the Wasserstein distance and the gradient penalty, λ was set to 10, as suggested in the original paper [35], while λal = 0.0025 and λsl = 0.8 were determined experimentally on the validation dataset. These hyperparameters were tuned for the best SSIM, since SSIM is widely preferred over MSE and MAE as an indicator of image quality. All code was implemented on the TensorFlow platform [44] using an NVIDIA Titan RTX GPU with 24 gigabytes of graphical memory. The parameters were optimized using the Adam optimizer [45] with β1 = 0.9 and β2 = 0.999.

Network training was divided into two phases. In the first phase, the back-projection part of the network was pretrained for 10 epochs. In the second phase, the network was trained as a whole, with the learning rate for the back-projection parameters set ten times smaller than that for the rest of the network. A batch size of 5 was used in the first phase and 3 in the second phase.
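The second-phase learning-rate split can be sketched with a minimal Adam update that keeps one learning rate per parameter group. This is a NumPy illustration, not the TensorFlow code used in the paper: the class name, the group names, and `base_lr = 1e-4` are hypothetical, since the base learning rate is not stated above.

```python
import numpy as np

class GroupedAdam:
    """Minimal Adam (beta1=0.9, beta2=0.999) with one learning rate per parameter group."""

    def __init__(self, group_lrs, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lrs, self.b1, self.b2, self.eps = dict(group_lrs), beta1, beta2, eps
        self.m, self.v, self.t = {}, {}, 0

    def step(self, params, grads):
        """params/grads: dict group -> ndarray; params are updated in place."""
        self.t += 1
        for name, g in grads.items():
            m = self.m.setdefault(name, np.zeros_like(g))
            v = self.v.setdefault(name, np.zeros_like(g))
            m[:] = self.b1 * m + (1 - self.b1) * g          # first-moment estimate
            v[:] = self.b2 * v + (1 - self.b2) * g * g      # second-moment estimate
            m_hat = m / (1 - self.b1 ** self.t)             # bias corrections
            v_hat = v / (1 - self.b2 ** self.t)
            params[name] -= self.lrs[name] * m_hat / (np.sqrt(v_hat) + self.eps)

base_lr = 1e-4   # hypothetical; not stated in the text
params = {"backprojection": np.zeros(4), "refinement": np.zeros(4)}
grads = {"backprojection": np.ones(4), "refinement": np.ones(4)}

# Phase 1 would optimize only the "backprojection" group for 10 epochs.
# Phase 2: whole network, back-projection group at one tenth of the base rate.
opt = GroupedAdam({"backprojection": base_lr / 10, "refinement": base_lr})
opt.step(params, grads)
print(params["backprojection"][0], params["refinement"][0])
```

On Adam's first step the update magnitude is close to the learning rate itself, so the back-projection weights move about one tenth as far as the refinement weights, which keeps the pretrained back-projection from being disrupted when the two parts are trained jointly.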

III. Results

A. Data and Experimental Design

A clinical female breast dataset was used to train the proposed DEER method and evaluate its performance. The dataset was generated and prepared by Koning Corporation, and the breast images were acquired on a state-of-the-art breast CT scanner designed and manufactured by Koning. In total, 19,575 breast CT images were acquired from 42 patients. All the images were reconstructed from 300 cone-beam projections at a tube voltage of 42 kVp and served as the ground-truth images for training the proposed network. The distance between the x-ray source and the patient is 650 millimeters, and the distance between the patient and the detector array is 273 millimeters. All the images are 1024 × 1024 (four times as many pixels as a typical 512 × 512 clinical CT slice). In total, 32 patients (13,970 images) were randomly selected for training, 3 patients (1,197 images) for validation, and 7 patients (4,408 images) for testing. In the training, validation, and testing phases, 75-view cone-beam projections provided by Koning were used as the input to the DEER network.

For comparison, we evaluated DEER against two state-of-the-art deep learning methods, FBPConvNet [12] and Residual-CNN [14]. Both are image-domain methods, in which the FBP operation is fixed and not learnable. No loss-weight tuning was needed for these methods, since each uses only one training loss: the Euclidean loss in FBPConvNet and the structural similarity index (SSIM) in Residual-CNN. Images reconstructed by the FDK algorithm from 75-view cone-beam raw data were also included as a baseline; these 75 equally spaced views were selected from the 300-view cone-beam projections. iCT-Net and AUTOMAP were not included in this study because their memory requirements for 1024 × 1024 image reconstruction (as opposed to 512 × 512 or smaller images) made it impossible to train them on our GPU workstation.

B. Comparison With Other Deep-learning Methods

To visualize the performance of different methods, a few representative slices were selected from the testing dataset. Figs. 2 and 3 show results reconstructed using different methods from 75-view cone-beam projections. For better evaluation of the image quality, the regions of interest (ROIs) marked in the red/yellow boxes in both figures are magnified. Three metrics, including Peak Signal to Noise Ratio (PSNR) [46], SSIM [42], and MAE [41], were computed for quantitative assessment. The quantitative results are shown in Table I.
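Of the three metrics, PSNR is the only one not defined earlier in this paper; a minimal NumPy definition is sketched below. The data range R used for Table I is not stated, so `data_range=1.0` is an assumption, and PSNR values shift with that choice.

```python
import numpy as np

def psnr(reference, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(R^2 / MSE)."""
    ref = np.asarray(reference, dtype=float)
    out = np.asarray(test, dtype=float)
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")          # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)

ref = np.zeros((4, 4))
noisy = np.full((4, 4), 0.1)         # uniform error of 0.1 -> MSE = 0.01
print(psnr(ref, noisy))              # about 20 dB
```

Because PSNR is a monotone function of MSE, a network trained with an MSE-type loss (such as FBPConvNet) is directly optimizing it, which is relevant when interpreting the PSNR column of Table I.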

Fig. 2:

Representative slices reconstructed using different methods for Koning dataset. (a) Ground-truth, (b) FDK from 75-view cone-beam data, (c) Residual-CNN, (d) FBPConvNet, (e) DEER. The red boxes mark the Regions of Interest (ROIs). Red circles mark some subtle details in the ROIs. The display window is [−200, 200] HU for visualizing breast details. Note that the final reconstructions were post-processed to remove irrelevant structures outside the field of view.

Fig. 3:

Representative slices reconstructed using different methods for Koning dataset. (a) Ground-truth, (b) FDK from 75-view cone-beam data, (c) Residual-CNN, (d) FBPConvNet, (e) DEER. The red and yellow boxes mark the Regions of Interest (ROIs). Red and yellow circles mark some subtle details in the ROIs. The display window is [−200, 200] HU. Note that the final reconstructions were post-processed to remove irrelevant structures outside the field of view.

TABLE I:

Quantitative assessments on different methods (MEAN ± STD) for 75-view reconstructions. For each metric, the best result is marked in bold face. The measurements were obtained by averaging the values in the testing set.

| Metric | FDK | FBPConvNet | Residual-CNN | DEER |
|---|---|---|---|---|
| PSNR (dB) | 27.5738 ± 8.9023 | **32.9347 ± 6.8931** | 32.6871 ± 9.3807 | 31.9676 ± 6.0831 |
| SSIM | 0.8988 ± 0.0978 | 0.9088 ± 0.0679 | 0.9363 ± 0.0651 | **0.9372 ± 0.0644** |
| MAE | 0.0158 ± 0.0166 | 0.0099 ± 0.0111 | 0.0091 ± 0.0111 | **0.0087 ± 0.0108** |

Our proposed DEER network produced few-view reconstruction results comparable or superior to those of both image-domain methods. All of the deep-learning methods effectively removed the streak artifacts introduced by the few-view constraint. In particular, the DEER network produced better reconstructions in the selected ROIs. Both image-domain methods tend to smooth out subtle details embedded in the noisy background due to insufficient data. For example, the breast feature inside the yellow circle in Fig. 2 is hardly distinguishable in Fig. 2 (c) and (d). Also, in Fig. 3, the feature in the red circle of the red ROI and the features in the yellow ROI are hardly visible in the image reconstructed by FBPConvNet.

For the Residual-CNN method, the intensity of certain features is dimmer than expected in the reconstructed breast slices (the feature in the yellow circle of Fig. 2 and the features in the yellow ROI of Fig. 3). Moreover, FBP reconstructions contain artifacts that do not exist in the ground-truth images, and in some cases the image-domain methods cannot remove these artifacts through convolutional operations. For example, the artifact in the yellow circle of the red ROI in Fig. 3 is clearly visible in the image produced by Residual-CNN, but it is mostly removed by DEER through a learnable network-based reconstruction algorithm.

In the quantitative assessments, DEER achieved better SSIM and MAE values but a lower PSNR value than FBPConvNet. FBPConvNet achieved the best PSNR value owing to its Mean Squared Error (MSE)-based objective function. However, as discussed in the literature, higher PSNR values do not guarantee better denoising performance, especially in terms of textural/visual similarity to the ground-truth images [5], [47]. Also, since FBPConvNet and Residual-CNN each use a single loss function for optimization, these two methods may suffer in visual performance. Both loss functions have their own limitations, and one should not rely solely on them to estimate image quality [48], [49]. Even though DEER does not achieve significant quantitative improvements, the images it reconstructs compare favorably in visual quality. Moreover, as shown in Figs. 2 and 3, the images reconstructed by FBPConvNet appear over-smoothed with less visual texture, which is undesirable in clinical diagnosis. Lastly, the WGAN framework may negatively affect the quantitative measurements, but it provides better recovery of subtle details and structural features [5], [50]. Compared with the other deep learning methods, DEER demonstrates competitive performance in removing artifacts and preserving subtle but vital details. In terms of reconstruction time, DEER takes about 0.1422 seconds to reconstruct a single 2D slice (1024 × 1024) on an NVIDIA Titan RTX GPU.

C. Ablation Studies and Other Relevant Experiments

To demonstrate the effectiveness of the proposed method, three additional networks were trained and compared with DEER. In the first network, the few-view images reconstructed by the FDK algorithm are removed from the input, and the 150-view parallel-beam projections become the only input to the network. In the second network, the few-view FDK images are the only input to the network, and the back-projection part of the generator network G is removed. In the third network, the WGAN component is removed from DEER to demonstrate the effectiveness of the WGAN framework in this task. These three networks are denoted as DEER-Sino, DEER-FBP, and DEER-NoWGAN, respectively. Note that in DEER, the input to the refinement portion of the generator network G has dimensions Nb × 1024 × 1024 × 2. For a fair comparison, two copies of the single input image were concatenated as the input to the DEER-Sino and DEER-FBP networks (i.e., in DEER-Sino, two copies of the image reconstructed by the back-projection part are concatenated as the input to the refinement part of the network; in DEER-FBP, two copies of the image reconstructed by the FDK algorithm are concatenated as the input to the refinement part). The parameters and hyperparameters were fine-tuned for all three networks: λal = 0.002 and λsl = 0.65 in DEER-Sino; λal = 0.0025 and λsl = 0.65 in DEER-FBP; and λsl = 0.8 in DEER-NoWGAN.
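
The channel bookkeeping behind this fair-comparison setup can be sketched in NumPy, assuming channels-last tensors of shape Nb × H × W × C as in the dimensionality quoted above. The function names are hypothetical, and the channel order of the full DEER input (back-projection output first, FDK image second) is an assumption made only for illustration.

```python
import numpy as np


def duplicate_to_two_channels(images: np.ndarray) -> np.ndarray:
    """Ablation variants (DEER-Sino / DEER-FBP): turn a batch of
    single-channel images (Nb, H, W) into (Nb, H, W, 2) by stacking two
    identical copies, so the refinement network sees the same input shape."""
    return np.stack([images, images], axis=-1)


def two_input_channels(bp_images: np.ndarray, fdk_images: np.ndarray) -> np.ndarray:
    """Full-model case (assumed order): back-projection output and FDK
    image stacked as two channels, shape (Nb, H, W, 2)."""
    return np.stack([bp_images, fdk_images], axis=-1)


if __name__ == "__main__":
    # Small spatial size for the demo; the paper uses 1024 x 1024 slices.
    batch = np.random.default_rng(0).random((2, 8, 8))
    print(duplicate_to_two_channels(batch).shape)         # (2, 8, 8, 2)
    print(two_input_channels(batch, batch + 1.0).shape)   # (2, 8, 8, 2)
```

Duplicating the single input keeps the refinement network architecture, and hence its parameter count, identical across the ablation variants, so differences in output quality can be attributed to the input content rather than to network capacity.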

As presented in Fig. 4, a representative slice is selected to visualize the performance of the different methods. The image reconstructed by FBP from simulated 150-view parallel-beam projections (denoted FBP) and the output of the back-projection portion of DEER (denoted DEER-BP) are also included for comparison. The corresponding quantitative measurements are shown in Table II. Note that image quality is well indicated by the three white features in the yellow circle in the zoomed-in areas of Fig. 4. Clearly, relatively simple post-processing methods tend to smooth out or miss these subtle but crucial details in the reconstructed images, whereas a network-based reconstruction algorithm is better at recovering details buried in the few-view artifacts. Although DEER-Sino has worse quantitative measurements than DEER-FBP, it accurately recovers these subtle features, which is desirable for clinical studies. It should be noted that the input to DEER-Sino is of much lower quality than that of DEER-FBP. Lastly, while DEER-NoWGAN and DEER have similar quantitative values, certain features in images produced by DEER-NoWGAN appear dimmer than expected (e.g., the white feature at the bottom of the yellow circle in Fig. 4).

Fig. 4:

Representative slices reconstructed using different methods. (a) The ground-truth, (b) FDK reconstruction from 75-view cone-beam projections, (c) FBP from 150 simulated parallel-beam projections, (d) DEER-BP, (e) DEER-NoWGAN, (f) DEER-FBP, (g) DEER-Sino, and (h) DEER. The red boxes mark the Regions of Interest (ROIs). Yellow circles mark some subtle details in the ROIs. Note that the final reconstructions were post-processed to remove irrelevant structures outside the field of view.

TABLE II:

Quantitative assessment on different methods (MEAN ± STD) for 75-view reconstructions. For each metric, the best result is marked in bold face. The measurements were obtained by averaging the values on the testing dataset. Significant streak artifacts outside the FOV were introduced by the Radon/iRadon operations, leading to poor metric values for the FBP method.

| Metric | FDK | FBP | DEER-BP | DEER-NoWGAN | DEER-FBP | DEER-Sino | DEER |
|---|---|---|---|---|---|---|---|
| PSNR | 27.5738 ± 8.9023 | 22.8804 ± 7.5900 | 25.4696 ± 8.5661 | 31.8683 ± 5.9122 | 31.7790 ± 5.8244 | 29.0397 ± 7.2356 | **31.9676 ± 6.0831** |
| SSIM | 0.8988 ± 0.0978 | 0.3235 ± 0.1577 | 0.8042 ± 0.1000 | 0.9365 ± 0.0649 | 0.9360 ± 0.0656 | 0.9013 ± 0.1041 | **0.9372 ± 0.0644** |
| MAE | 0.0158 ± 0.0166 | 0.0464 ± 0.0282 | 0.0242 ± 0.0234 | 0.0088 ± 0.0110 | 0.0088 ± 0.0109 | 0.0132 ± 0.0161 | **0.0087 ± 0.0108** |

In few-view cases, analytical reconstruction methods such as FBP and FDK cannot produce high-quality results. The image-domain post-processing method DEER-FBP (Fig. 4(f)) cannot separate true features from artifacts. The main idea of DEER is to learn a network-based reconstruction algorithm that addresses the disadvantages of analytical reconstruction methods. As shown in the ROI of Fig. 4(d) and in Fig. 5, the proposed network-based reconstruction reduces the noise amplitude compared with the FBP result in Fig. 4(c), so that real features become more visible to the network. Note that, as shown in Fig. 4(d) and Fig. 5, DEER-BP appears less sensitive to the breast boundaries, resulting in darker boundaries than in the image reconstructed by the analytical FBP method. However, DEER-BP is an intermediate output of the proposed method, and this issue is effectively addressed by the refinement portion of the network. As presented in Fig. 4(g), DEER-Sino, whose only input is the projection data, does not produce images with dark boundaries. Lastly, unlike FBPConvNet, residual-CNN, and DEER-NoWGAN, DEER uses the WGAN framework for better recovery of subtle details and structural features, possibly at the cost of lower quantitative measurements such as SSIM and PSNR [5].

Fig. 5:

Absolute noise maps generated from images reconstructed by different methods, referenced to the ground-truth image. (a) FBP from 150-view simulated data, (b) DEER-BP.

Fig. 4 clearly shows that the back-projection portion can learn a network-based reconstruction similar to the classic FBP method. This learned FBP allows the refinement part to recover some subtle features that are lost by image-domain networks. As presented in Fig. 5, the learned back-projection produces images with lower overall noise than their FBP counterparts and removes most of the streak artifacts outside the FOV.

D. View-independent Network

As described in Section II, DEER utilized to improve the quality of reconstructed images. However, the proposed ray-tracing idea could be trained with as few as parameters. In this subsection, another DEER network (denoted as DEER-Lite) was built with parameters by sharing all the parameters in the reconstruction part. Specifically, in DEER, projections at different angles are back-projected to the FOV using different sets of trainable parameters (e.g., projection data at 0° are back-projected using the trainable parameters for 0°, and projection data at 90° are back-projected using the trainable parameters for 90°). In DEER-Lite, by contrast, projections at all angles are back-projected to the FOV using the same set of parameters. The number of trainable parameters is reduced to by sharing the parameters across view angles. To further reduce the number of trainable parameters to , parameters in DEER-Lite are also shared among all ray-tracing lines (i.e., all points in the sinogram use the same set of parameters for back-projection over the FOV). DEER is therefore classified as a view-dependent network, since different sets of parameters are used for different angles, whereas DEER-Lite is a view-independent network, since all projection angles share the same set of parameters.
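
The difference between view-dependent and view-independent back-projection can be sketched in NumPy. This is a simplified illustration under stated assumptions, not the DEER implementation: it assumes a 2D parallel-beam sinogram with N detector bins per view and an N × N image, and a hypothetical parameterization in which every sinogram sample is smeared along its ray with one learnable weight per pixel on that ray; each view's profile is then rotated into place with bilinear interpolation (sign convention arbitrary) and summed. The shape of the weight array alone selects the sharing scheme: (Nv, N, N) for view-dependent weights, (N, N) for weights shared across views, and (N, 1) for weights shared across views and rays. With all weights equal to one, the operator reduces to plain unfiltered back-projection.

```python
import numpy as np


def rotate_bilinear(img: np.ndarray, theta: float) -> np.ndarray:
    """Rotate a square image by `theta` radians about its centre
    (bilinear interpolation, zero outside the grid)."""
    n = img.shape[0]
    c = (n - 1) / 2.0
    ys, xs = np.mgrid[0:n, 0:n].astype(np.float64)
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    # Inverse mapping: source coordinates of every output pixel.
    xs_src = cos_t * (xs - c) + sin_t * (ys - c) + c
    ys_src = -sin_t * (xs - c) + cos_t * (ys - c) + c
    x0 = np.floor(xs_src).astype(int)
    y0 = np.floor(ys_src).astype(int)
    fx, fy = xs_src - x0, ys_src - y0
    out = np.zeros((n, n), dtype=np.float64)
    for dy, dx, w in ((0, 0, (1 - fy) * (1 - fx)), (0, 1, (1 - fy) * fx),
                      (1, 0, fy * (1 - fx)), (1, 1, fy * fx)):
        yy, xx = y0 + dy, x0 + dx
        ok = (yy >= 0) & (yy < n) & (xx >= 0) & (xx < n)
        out[ok] += w[ok] * img[yy[ok], xx[ok]]
    return out


def learned_backprojection(sino: np.ndarray, weights: np.ndarray,
                           thetas: np.ndarray) -> np.ndarray:
    """Back-project a parallel-beam sinogram (Nv, N) onto an N x N grid.

    weights[v, r, d] scales detector bin d of view v at the r-th pixel
    along its ray. Any shape broadcastable to (Nv, N, N) works:
      (Nv, N, N) -> view-dependent
      (N, N)     -> shared across views
      (N, 1)     -> shared across views and rays
    """
    nv, n = sino.shape
    w = np.broadcast_to(weights, (nv, n, n))
    image = np.zeros((n, n), dtype=np.float64)
    for v in range(nv):
        # In the view's own frame each ray is a column: smear bin d down
        # column d, weighting every pixel on the ray separately.
        profile = w[v] * sino[v][None, :]
        # Rotate the profile to the view angle and accumulate.
        image += rotate_bilinear(profile, thetas[v])
    return image


if __name__ == "__main__":
    nv, n = 8, 16
    thetas = np.linspace(0.0, np.pi, nv, endpoint=False)
    sino = np.random.default_rng(0).random((nv, n))
    for shape in [(nv, n, n), (n, n), (n, 1)]:
        wts = np.ones(shape)
        img = learned_backprojection(sino, wts, thetas)
        print(shape, "trainable weights:", wts.size, "image:", img.shape)
```

In this sketch, the view-dependent variant can learn a different weighting (and hence a different compensation for interpolation or artifacts) at each angle, while the shared variants apply one weighting everywhere, which is what makes them usable with other numbers of views.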

DEER-Lite was trained in the same way as described in Section II, except that DEER-Lite uses parameters, while DEER uses parameters in the back-projection part. As shown in Fig. 6, a representative slice was selected to present the difference in reconstruction quality between DEER and DEER-Lite. The corresponding quantitative assessments are shown in Table III. Both networks substantially improve on the FDK reconstruction. Although DEER shows only slight improvements over DEER-Lite in the selected quantitative measurements, it produces more stable and clinically favorable reconstructions for certain test patients. For example, in the red ROI of Fig. 6, DEER-Lite produces some spurious features that exist neither in the ground-truth image nor in the DEER result. Moreover, these spurious features are visible throughout this image volume. One possible reason is that, since the proposed network-based reconstruction algorithm learns a revised back-projection mechanism, it also learns to compensate for some noise and artifacts during reconstruction. Because the parameters of DEER-Lite are shared across all views, a compensation learned for one view is applied to every other view as well, where it may introduce artifacts. DEER does not have this problem because its reconstruction parameters are view-dependent, so a compensation at one angle does not affect back-projection at another angle. Lastly, since the back-projected profiles are rotated to the corresponding angles during reconstruction, and the interpolation involved can degrade image quality, a view-dependent network offers more degrees of freedom to address this issue by learning different ray-tracing patterns with different sets of parameters.

Fig. 6:

Representative slice from the Koning dataset reconstructed using different methods. (a) Ground-truth, (b) FDK from 75-view cone-beam data, (c) DEER-Lite, (d) DEER. The red boxes mark the Regions of Interest (ROIs). Yellow circles mark some subtle details in the ROIs. The display window is [−200, 200] HU. Note that the final reconstructions were post-processed to remove irrelevant structures outside the field of view.

TABLE III:

Quantitative assessment on DEER and DEER-Lite (MEAN ± STD) for 75-view reconstructions. For each metric, the best result is marked in bold face. The measurements were obtained by averaging the values on the testing dataset.

| Metric | FDK | DEER-Lite | DEER |
|---|---|---|---|
| PSNR | 27.5738 ± 8.9023 | 31.7925 ± 5.8961 | **31.9679 ± 6.0831** |
| SSIM | 0.8988 ± 0.0978 | 0.9367 ± 0.0695 | **0.9372 ± 0.0644** |
| MAE | 0.0158 ± 0.0166 | 0.0089 ± 0.0109 | **0.0087 ± 0.0108** |

Since the trainable parameters in DEER-Lite are view-independent, it can be applied directly to other numbers of views without retraining. To show that DEER-Lite can handle different few-view conditions, the network (trained on 75-view data) was tested on 30-view reconstructions. The testing procedure is the same as described above. First, few-view volumes were reconstructed from 30-view cone-beam projections using the FDK algorithm. Then, 200-view parallel-beam projections were estimated from the reconstructed volumes (200 views were simulated instead of 150 to show that DEER-Lite can directly handle other numbers of views). Note that because the image produced by the back-projection part of DEER (and its variants) is a summation of back-projected profiles at different view angles, its magnitude changes with the number of views. Therefore, a simple linear rescaling according to the number of views is needed to adjust the magnitude of this image. Finally, the DEER-Lite model trained for 75-view reconstruction was used directly to produce 30-view results. A representative slice is shown in Fig. 7. The reconstructed image is compared with the ground-truth image, the FDK result from 30-view cone-beam data, and the FBP result from simulated 200-view parallel-beam data. The corresponding quantitative measurements are shown in Table IV.
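
The need for rescaling follows from the summation over views: if every view contributes a profile of similar magnitude, the back-projected image grows roughly linearly with the number of views. A minimal sketch, assuming the rescaling is a single multiplicative factor equal to the ratio of the training and testing view counts (the exact factor used for DEER-Lite is not stated here) and using hypothetical function names:

```python
import numpy as np


def rescale_for_view_count(bp_image: np.ndarray, n_views_train: int,
                           n_views_test: int) -> np.ndarray:
    """Assumed linear rescaling: bring a summed back-projection to the
    magnitude the refinement network saw during training."""
    return bp_image * (n_views_train / n_views_test)


def summed_backprojection(profiles: np.ndarray) -> np.ndarray:
    """Toy back-projection: sum of per-view profiles, shape (Nv, H, W)."""
    return profiles.sum(axis=0)


if __name__ == "__main__":
    profile = np.full((4, 4), 0.5)  # same magnitude for every view
    train = summed_backprojection(np.stack([profile] * 150))  # 150 views
    test = summed_backprojection(np.stack([profile] * 200))   # 200 views
    print(train.mean(), test.mean())                       # 75.0 100.0
    print(rescale_for_view_count(test, 150, 200).mean())   # 75.0
```

A ratio of view counts is the simplest choice that undoes the linear growth; it leaves the spatial pattern of the back-projected image unchanged and only corrects its overall scale.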

Fig. 7:

Representative slice from the Koning dataset, reconstructed by applying a network trained on 75-view data directly to 30-view data. (a) Ground-truth, (b) FDK from 30-view cone-beam data, (c) FBP from 200-view simulated parallel-beam projections, (d) DEER-Lite. The display window is [−200, 200] HU. Note that the final reconstructions were post-processed to remove irrelevant structures outside the field of view.

TABLE IV:

Quantitative assessment on DEER-Lite (MEAN ± STD) when a network trained on 75-view data is tested directly on 30-view data. The measurements were obtained by averaging the values on the testing dataset.

| Metric | FDK | DEER-Lite |
|---|---|---|
| PSNR | 22.1137 ± 6.3728 | 28.0983 ± 8.4834 |
| SSIM | 0.7795 ± 0.1485 | 0.9018 ± 0.0985 |
| MAE | 0.0289 ± 0.0270 | 0.0148 ± 0.0167 |

IV. Discussions

Breast CT improves the detection and characterization of breast cancer, with the potential to become a primary breast screening tool. Our Deep Efficient End-to-end Reconstruction (DEER) network has been shown to be feasible, with great potential for further improvement in few-view CT image reconstruction. This is achieved by directly mapping sinogram data to CT images in a data-driven fashion, lowering the x-ray radiation dose well under the FDA threshold. Also, the DEER network reduces the computational complexity by orders of magnitude relative to state-of-the-art networks that map raw tomographic data to reconstructed images.

This paper has introduced a novel approach, referred to as DEER, for reconstructing CT images directly from under-sampled projection data. This approach features (1) an adjustable network-based reconstruction algorithm, (2) the Wasserstein GAN framework for optimizing parameters, (3) a convolutional encoder-decoder network, and (4) an experimentally optimized objective function. The proposed end-to-end strategy learns the mapping from the sinogram domain to the image domain with a significantly lower computational burden than prior art. Zhu et al. [24] published the first method for learning a network-based reconstruction algorithm for medical imaging. They used several fully-connected layers to learn the mapping between MRI raw data and the underlying image directly. However, their method poses a severe challenge for reconstructing normal-size images due to the extremely large matrix multiplications in the fully-connected layers. Additionally, even though this technique could be applied to CT images, using fully-connected layers to learn the mapping does not make full use of the information embedded in the sinograms, resulting in redundant network parameters. iCT-Net [27] utilizes angular information and reduces the number of parameters from to . Instead of feeding the whole sinogram directly into the network, iCT-Net uses Nd fully-connected layers, each of which takes a single projection and reconstructs a corresponding intermediate image component. DEER takes full advantage of the information embedded in the sinograms by utilizing angular information, as iCT-Net does, and by additionally assuming that each point in the sinogram contributes only to the pixels along its associated x-ray path. The proposed method is a fully learnable mapping from the sinogram to the image domain. By exploiting the geometric constraint imposed by line integrals, our network is much more efficient than other end-to-end methods.
The intuition behind DEER is to learn a better filtering and back-projection process using deep learning. With this intuition and the novel network-based end-to-end method, DEER can be trained with parameters. Moreover, with this design, the reconstruction process can be learned with as few as parameters if all angles and ray-tracing lines share the same trainable parameters during back-projection. If the network uses only parameters (i.e., the parameters are not view-dependent), the learned ray-tracing back-projection can be applied directly to other numbers of views without retraining.
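
To give a sense of scale for these parameter counts, the short calculation below compares a dense layer that connects every sinogram sample to every image pixel (in the spirit of fully-connected approaches such as [24]) with a hypothetical ray-tracing parameterization in which each sinogram sample is spread along its ray with one weight per pixel. The sizes N = 1024 (the slice width used here) and Nv = 150 views follow the experiments, and the number of detector bins is assumed equal to N; the formulas are illustrative assumptions, not the exact counts of DEER or iCT-Net.

```python
def dense_params(n_views: int, n_bins: int, n: int) -> int:
    """Dense layer from every sinogram sample to every image pixel."""
    return n_views * n_bins * n * n


def ray_params(n_views: int, n: int, share_views: bool, share_rays: bool) -> int:
    """Hypothetical ray-tracing back-projection: N weights per ray,
    N rays (detector bins) per view, optionally shared."""
    per_ray = n
    rays = 1 if share_rays else n
    views = 1 if share_views else n_views
    return views * rays * per_ray


if __name__ == "__main__":
    nv, n = 150, 1024
    print(f"dense:          {dense_params(nv, n, n):,}")               # 161,061,273,600
    print(f"view-dependent: {ray_params(nv, n, False, False):,}")      # 157,286,400
    print(f"views shared:   {ray_params(nv, n, True, False):,}")       # 1,048,576
    print(f"views + rays:   {ray_params(nv, n, True, True):,}")        # 1,024
```

Under these assumptions, restricting each sample to its own ray removes a factor of N relative to the dense mapping, and each level of sharing removes a further factor of Nv or N.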

The proposed method can be extended to the clinical cone-beam setting by feeding 3D cone-beam data to the network and learning the mapping between 3D cone-beam data and 3D image volumes directly in a similar manner, provided that the GPU memory is sufficiently large. Since few-view artifacts, calcified deposits, and subtle details are 3D structures, 3D networks can capture the full spatial context and have great potential to improve image quality [51], [52]. However, training 3D networks directly from cone-beam data is not feasible on our current hardware. With a workstation with larger GPU memory, and by addressing the divergent cone-beam geometry and the ray-specific varying weights, the DEER network can be modified and retrained to reconstruct cone-beam image volumes directly from cone-beam projection data.
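
A back-of-the-envelope estimate illustrates why direct 3D training is memory-bound. Assuming float32 storage and a cubic 1024³ volume (the in-plane size used here; the number of slices per volume is an assumption), a single volume already occupies 4 GiB, and every feature channel that a 3D convolutional layer keeps at full resolution needs the same again, before counting the activations stored for backpropagation. The 32-channel case below is a hypothetical example; for comparison, the Titan RTX used in this work has 24 GB of memory.

```python
def volume_gib(nx: int, ny: int, nz: int, channels: int = 1,
               bytes_per_voxel: int = 4) -> float:
    """Memory in GiB for a dense 3D tensor (float32 by default)."""
    return nx * ny * nz * channels * bytes_per_voxel / 2**30


if __name__ == "__main__":
    print(volume_gib(1024, 1024, 1024))               # 4.0
    print(volume_gib(1024, 1024, 1024, channels=32))  # 128.0
    print(volume_gib(1024, 1024, 1))                  # 0.00390625 (one 2D slice)
```

The roughly thousandfold gap between one slice and one volume is why the current network operates on 2D slices.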

Moreover, the proposed method can be adapted to other imaging modalities, such as single-photon emission computed tomography (SPECT) and positron emission tomography (PET), since their forward imaging models are similar to that of CT and their memory requirements are much more manageable. Translating the DEER network to SPECT and PET could be particularly impactful for organ-specific scanners with special geometries and for new scanner designs that use the same number of detector modules to cover a larger FOV.

In conclusion, we have presented a novel network-based reconstruction algorithm for few-view CT reconstruction. The proposed method outperforms existing deep-learning-based methods with significantly less memory burden and higher computational efficiency. In the future, we plan to further improve this network for direct cone-beam CT 3D reconstruction and other imaging modalities, and translate it into clinical applications.

Acknowledgments

This work was supported in part by the NIH/NCI under Award R01CA233888 and Award R01CA237267, in part by the NIH/NIBIB under Award R01EB026646, and in part by the NIH/NHLBI under Award R01HL151561.

References

- [1] Smith BD, Jiang J, McLaughlin SS, Hurria A, Smith GL, Giordano SH, and Buchholz TA, “Improvement in Breast Cancer Outcomes Over Time: Are Older Women Missing Out?,” JCO, vol. 29, pp. 4647–4653, November 2011.

- [2] Henson DE, Ries L, Freedman LS, and Carriaga M, “Relationship among outcome, stage of disease, and histologic grade for 22,616 cases of breast cancer. The basis for a prognostic index,” Cancer, vol. 68, no. 10, pp. 2142–2149, 1991.

- [3] Brenner DJ and Hall EJ, “Computed Tomography — An Increasing Source of Radiation Exposure,” N Engl J Med, vol. 357, pp. 2277–2284, November 2007.

- [4] Lin EC, “Radiation Risk From Medical Imaging,” Mayo Clin Proc, vol. 85, pp. 1142–1146, December 2010.

- [5] Shan H, Zhang Y, Yang Q, Kruger U, Kalra MK, Sun L, Cong W, and Wang G, “3-D Convolutional Encoder-Decoder Network for Low-Dose CT via Transfer Learning From a 2-D Trained Network,” IEEE Transactions on Medical Imaging, vol. 37, pp. 1522–1534, June 2018.

- [6] Chen H, Zhang Y, Zhang W, Liao P, Li K, Zhou J, and Wang G, “Low-dose CT via convolutional neural network,” Biomed Opt Express, vol. 8, pp. 679–694, January 2017.

- [7] Shan H, Padole A, Homayounieh F, Kruger U, Khera RD, Nitiwarangkul C, Kalra MK, and Wang G, “Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose CT image reconstruction,” Nature Machine Intelligence, vol. 1, pp. 269–276, June 2019.

- [8] Pacilè S, Brun F, Dullin C, Nesterets YI, Dreossi D, Mohammadi S, Tonutti M, Stacul F, Lockie D, Zanconati F, Accardo A, Tromba G, and Gureyev TE, “Clinical application of low-dose phase contrast breast CT: methods for the optimization of the reconstruction workflow,” Biomed Opt Express, vol. 6, pp. 3099–3112, July 2015.

- [9] Floridi C, Radaelli A, Abi-Jaoudeh N, Grass M, Lin MD, Chiaradia M, Geschwind J-F, Kobeiter H, Squillaci E, Maleux G, Giovagnoni A, Brunese L, Wood B, Carrafiello G, and Rotondo A, “C-arm cone-beam computed tomography in interventional oncology: technical aspects and clinical applications,” Radiol Med, vol. 119, pp. 521–532, July 2014.

- [10] Orth RC, Wallace MJ, Kuo MD, and Technology Assessment Committee of the Society of Interventional Radiology, “C-arm cone-beam CT: general principles and technical considerations for use in interventional radiology,” J Vasc Interv Radiol, vol. 20, pp. S538–544, July 2009.

- [11] Wang G, “A Perspective on Deep Imaging,” IEEE Access, vol. 4, pp. 8914–8924, 2016.

- [12] Jin KH, McCann MT, Froustey E, and Unser M, “Deep Convolutional Neural Network for Inverse Problems in Imaging,” IEEE Transactions on Image Processing, vol. 26, pp. 4509–4522, September 2017.

- [13] Ronneberger O, Fischer P, and Brox T, “U-Net: Convolutional Networks for Biomedical Image Segmentation,” in Medical Image Computing and Computer-Assisted Intervention MICCAI 2015 (Navab N, Hornegger J, Wells WM, and Frangi AF, eds.), Lecture Notes in Computer Science, pp. 234–241, Cham: Springer International Publishing, 2015.

- [14] Cong W, Shan H, Zhang X, Liu S, Ning R, and Wang G, “Deep-learning-based breast CT for radiation dose reduction,” in Developments in X-Ray Tomography XII, vol. 11113, p. 111131L, International Society for Optics and Photonics, September 2019.

- [15] He K, Zhang X, Ren S, and Sun J, “Deep Residual Learning for Image Recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778, June 2016.

- [16] Pelt DM and Batenburg KJ, “Fast Tomographic Reconstruction From Limited Data Using Artificial Neural Networks,” IEEE Transactions on Image Processing, vol. 22, pp. 5238–5251, December 2013.

- [17] Wang G, Ye Y, and Yu H, “Approximate and exact cone-beam reconstruction with standard and non-standard spiral scanning,” Phys Med Biol, vol. 52, pp. R1–13, March 2007.

- [18] Landau HJ, “Sampling, data transmission, and the Nyquist rate,” Proceedings of the IEEE, vol. 55, pp. 1701–1706, October 1967.

- [19] Gordon R, Bender R, and Herman GT, “Algebraic reconstruction techniques (ART) for three-dimensional electron microscopy and x-ray photography,” Journal of theoretical biology, vol. 29, no. 3, pp. 471–481, 1970.

- [20] Andersen A, “Simultaneous Algebraic Reconstruction Technique (SART): A superior implementation of the ART algorithm,” Ultrasonic Imaging, vol. 6, pp. 81–94, January 1984.

- [21] Dempster AP, Laird NM, and Rubin DB, “Maximum Likelihood from Incomplete Data via the EM Algorithm,” Journal of the Royal Statistical Society. Series B (Methodological), vol. 39, no. 1, pp. 1–38, 1977.

- [22] Wang G, Butler A, Yu H, and Campbell M, “Guest Editorial Special Issue on Spectral CT,” IEEE Transactions on Medical Imaging, vol. 34, pp. 693–696, March 2015.

- [23] Wang G, Ye JC, Mueller K, and Fessler JA, “Image Reconstruction is a New Frontier of Machine Learning,” IEEE Trans Med Imaging, vol. 37, no. 6, pp. 1289–1296, 2018.

- [24] Zhu B, Liu JZ, Cauley SF, Rosen BR, and Rosen MS, “Image reconstruction by domain-transform manifold learning,” Nature, vol. 555, pp. 487–492, March 2018.

- [25] Würfl T, Hoffmann M, Christlein V, Breininger K, Huang Y, Unberath M, and Maier AK, “Deep Learning Computed Tomography: Learning Projection-Domain Weights From Image Domain in Limited Angle Problems,” IEEE Transactions on Medical Imaging, vol. 37, pp. 1454–1463, June 2018.

- [26] Chen H, Zhang Y, Chen Y, Zhang J, Zhang W, Sun H, Lv Y, Liao P, Zhou J, and Wang G, “LEARN: Learned Experts’ Assessment-Based Reconstruction Network for Sparse-Data CT,” IEEE Transactions on Medical Imaging, vol. 37, pp. 1333–1347, June 2018.

- [27] Li Y, Li K, Zhang C, Montoya J, and Chen G, “Learning to Reconstruct Computed Tomography (CT) Images Directly from Sinogram Data under A Variety of Data Acquisition Conditions,” IEEE Transactions on Medical Imaging, pp. 1–1, 2019.

- [28] He J, Wang Y, and Ma J, “Radon Inversion via Deep Learning,” IEEE Transactions on Medical Imaging, vol. 39, pp. 2076–2087, June 2020.

- [29] Barrett HH, “III The Radon Transform and Its Applications,” in Progress in Optics (Wolf E, ed.), vol. 21, pp. 217–286, Elsevier, January 1984.

- [30] Barrett HH, “Fundamentals of the Radon Transform,” in Mathematics and Computer Science in Medical Imaging (Viergever MA and Todd-Pokropek A, eds.), NATO ASI Series, pp. 105–125, Springer Berlin Heidelberg, 1988.

- [31] Feldkamp LA, Davis LC, and Kress JW, “Practical cone-beam algorithm,” J. Opt. Soc. Am. A, vol. 1, pp. 612–619, June 1984.

- [32] Xie H, Shan H, Cong W, Zhang X, Liu S, Ning R, and Wang G, “Dual network architecture for few-view CT - trained on ImageNet data and transferred for medical imaging,” in Developments in X-Ray Tomography XII, vol. 11113, p. 111130V, International Society for Optics and Photonics, September 2019.

- [33] Arjovsky M, Chintala S, and Bottou L, “Wasserstein Generative Adversarial Networks,” in International Conference on Machine Learning, pp. 214–223, July 2017.

- [34] Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, and Bengio Y, “Generative Adversarial Nets,” in Advances in Neural Information Processing Systems 27 (Ghahramani Z, Welling M, Cortes C, Lawrence ND, and Weinberger KQ, eds.), pp. 2672–2680, Curran Associates, Inc., 2014.

- [35] Gulrajani I, Ahmed F, Arjovsky M, Dumoulin V, and Courville AC, “Improved training of wasserstein gans,” in Advances in neural information processing systems, pp. 5767–5777, 2017.

- [36] Gribbon KT and Bailey DG, “A novel approach to real-time bilinear interpolation,” in Proceedings. DELTA 2004. Second IEEE International Workshop on Electronic Design, Test and Applications, pp. 126–131, January 2004.

- [37] Chen H, Zhang Y, Kalra MK, Lin F, Chen Y, Liao P, Zhou J, and Wang G, “Low-Dose CT With a Residual Encoder-Decoder Convolutional Neural Network,” IEEE Transactions on Medical Imaging, vol. 36, pp. 2524–2535, December 2017.

- [38] Lee H, Lee J, Kim H, Cho B, and Cho S, “Deep-Neural-Network-Based Sinogram Synthesis for Sparse-View CT Image Reconstruction,” IEEE Transactions on Radiation and Plasma Medical Sciences, vol. 3, pp. 109–119, March 2019.

- [39] Quan TM, Nguyen-Duc T, and Jeong W-K, “Compressed Sensing MRI Reconstruction Using a Generative Adversarial Network With a Cyclic Loss,” IEEE Transactions on Medical Imaging, vol. 37, pp. 1488–1497, June 2018.

- [40] Donoho DL, “Compressed sensing,” IEEE Transactions on Information Theory, vol. 52, pp. 1289–1306, April 2006.

- [41] Willmott CJ and Matsuura K, “Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance,” Climate Research, vol. 30, pp. 79–82, December 2005.

- [42] Wang Z, Bovik AC, Sheikh HR, and Simoncelli EP, “Image quality assessment: from error visibility to structural similarity,” IEEE Transactions on Image Processing, vol. 13, pp. 600–612, April 2004.

- [43] Wolterink JM, Leiner T, Viergever MA, and Išgum I, “Generative Adversarial Networks for Noise Reduction in Low-Dose CT,” IEEE Transactions on Medical Imaging, vol. 36, pp. 2536–2545, December 2017.

- [44] Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, Corrado G, Davis A, Dean J, Devin M, Ghemawat S, Goodfellow I, Harp A, Irving G, Isard M, Jia Y, Jozefowicz R, Kaiser L, Kudlur M, Levenberg J, Mané D, Monga R, Moore S, Murray D, Olah C, Schuster M, Shlens J, Steiner B, Sutskever I, Talwar K, Tucker P, Vanhoucke V, Vasudevan V, Viégas F, Vinyals O, Warden P, Wattenberg M, Wicke M, Yu Y, and Zheng X, “Tensorflow: Large-scale machine learning on heterogeneous distributed systems,” 2015.

- [45] Kingma DP and Ba J, “Adam: A method for stochastic optimization,” in 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings (Bengio Y and LeCun Y, eds.), 2015.

- [46] Korhonen J and You J, “Peak signal-to-noise ratio revisited: Is simple beautiful?,” in 2012 Fourth International Workshop on Quality of Multimedia Experience, pp. 37–38, July 2012.

- [47] Yang Q, Yan P, Zhang Y, Yu H, Shi Y, Mou X, Kalra MK, Zhang Y, Sun L, and Wang G, “Low-Dose CT Image Denoising Using a Generative Adversarial Network With Wasserstein Distance and Perceptual Loss,” IEEE Transactions on Medical Imaging, vol. 37, pp. 1348–1357, June 2018.

- [48] Hor A and Ziou D, “Is there a relationship between peak-signal-to-noise ratio and structural similarity index measure?,” IET Image Processing, vol. 7, pp. 12–24, February 2013.

- [49] Hor A and Ziou D, “Image Quality Metrics: PSNR vs. SSIM,” in 2010 20th International Conference on Pattern Recognition, pp. 2366–2369, August 2010.

- [50] Ledig C, Theis L, Huszár F, Caballero J, Cunningham A, Acosta A, Aitken A, Tejani A, Totz J, Wang Z, and Shi W, “Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 105–114, July 2017.

- [51] Xie H, Shan H, and Wang G, “Deep Encoder-Decoder Adversarial Reconstruction (DEAR) Network for 3d CT from Few-View Data,” Bioengineering, vol. 6, p. 111, December 2019.

- [52] Xie H, Shan H, and Wang G, “3D Few-View CT Image Reconstruction with Deep Learning,” in 2020 IEEE 17th International Symposium on Biomedical Imaging Workshops (ISBI Workshops), pp. 1–4, April 2020.