Highlights

- fNIRS and looking responses to emotional faces were measured in 5- and 7-month-olds.
- Emotional faces had varying identities within happy, angry, and fearful blocks.
- Temporo-parietal and frontal activations were observed, particularly to happy faces.
- Infants looked longer to the mouth region of angry faces.
- No difference in behavior or neural activity observed between 5- and 7-month-olds.

Keywords: Infant, Face processing, Emotion, fNIRS, Eye-tracking

Abstract

The processing of facial emotion is an important social skill that develops throughout infancy and early childhood. Here we investigate the neural underpinnings of the ability to process facial emotion across changes in facial identity in cross-sectional groups of 5- and 7-month-old infants. We simultaneously measured neural metabolic, behavioral, and autonomic responses to happy, fearful, and angry faces of different female models using functional near-infrared spectroscopy (fNIRS), eye-tracking, and heart rate measures. We observed significant neural activation to these facial emotions in a distributed set of frontal and temporal brain regions, and longer looking to the mouth region of angry faces compared to happy and fearful faces. No differences in looking behavior or neural activations were observed between 5- and 7-month-olds, although several exploratory, age-independent associations between neural activations and looking behavior were noted. Overall, these findings suggest more developmental stability than previously thought in responses to emotional facial expressions of varying identities between 5 and 7 months of age.

1. Introduction

The discrimination and identification of facial expressions of emotion form an important channel for human non-verbal communication. The behavioral ability to differentiate among different facial expressions (such as between happy and angry or fearful faces) across changes in face identity is thought to emerge between 5 and 7 months in typically developing infants, a potential first step in the developmental emergence of facial expression understanding (Leppänen and Nelson, 2009). For example, 7-month-olds differentiate between some expressions (e.g. happy versus fear, happy versus angry, or fear versus angry) in behavioral paradigms, even when expressions are modelled by faces of different identities (Bayet and Nelson, 2019; Leppänen and Nelson, 2009).

Eye-tracking studies suggest that infants’ developing abilities to differentiate between facial expressions mature along with their ability to focus on internal facial features, such as the eyes and mouth (Hunnius et al., 2011; Soussignan et al., 2017), that are central to human communication (Gliga and Csibra, 2007) and convey distinguishing information about facial expressions (Smith et al., 2005). Preliminary evidence additionally suggests that individual differences in eye-looking durations (e.g. infants being eye or mouth-lookers) relate to differences in facial emotion discrimination abilities (Amso et al., 2010). Looking behavior, and in particular looking towards the eyes of emotional faces, may thus drive or reflect infants’ abilities to extract information from and discriminate between facial emotions (Bayet and Nelson, 2019).

Heart rate has been found to index attention in infancy (Courage et al., 2006; Richards and Casey, 1991), including attention to facial emotions. Transient heart rate decelerations are thought to reflect attentional orienting (Perdue et al., 2017), with larger and longer heart rate deceleration to fearful as compared to non-fearful faces by 7 months of age (Peltola et al., 2013).

Functional near-infrared spectroscopy (fNIRS) has provided some information about which cortical regions of the infant brain are specifically activated in response to facial expressions of emotion. In adults, processing facial expressions engages a distributed set of coactive brain regions (Fusar-Poli et al., 2009; Kesler-West et al., 2001) that include the fusiform face area, superior temporal gyrus (STG; Narumoto et al., 2001; Winston et al., 2004), amygdala, and frontal cortices (Nakamura et al., 1999; Vuilleumier et al., 2001). While not all brain regions implicated in emotional face processing in adults are accessible by fNIRS due to limited depth penetration, superficial temporal and frontal cortices can be accessed. Prior infant fNIRS work has shown differential brain responses to happy and angry faces in the temporal cortex from 6 to 7 months of age (Nakato et al., 2011) and differential responses to happy, angry, and/or fearful faces in the right inferior frontal cortex at 7-months of age when modelled in conjunction with certain individual epigenetic differences (Grossmann et al., 2018; Krol et al., 2019). fNIRS has also revealed that medial frontal regions are implicated in processing happy faces in infants from 9 to 13 months (Minagawa-Kawai et al., 2009) and linked individual differences in frontal responses to emotional faces with earlier epigenetic changes (Krol et al., 2019) and later behavior (Grossmann et al., 2018). However, no fNIRS studies to date have directly tested for differences in neural responses to facial expressions between 5 and 7 months, a critical time window during which differential responses to fearful faces are often first observed with ERP (Leppänen et al., 2009; Xie et al., 2018), or examined fNIRS responses to facial expressions in these age groups in relation to simultaneous looking behavior to facial features. 
In addition, while fNIRS and behavioral findings suggest that infants as young as 7 months (though not younger) can process face identity invariantly from changes of expression (Ichikawa et al., 2018; Kobayashi et al., 2014), no prior infant fNIRS study has examined responses to facial emotions when facial identity varies, a focus of classic behavioral studies in this age group (Bayet and Nelson, 2019; Leppänen and Nelson, 2009). Such a test would greatly extend past work by providing a more robust indicator of facial emotion discrimination than fNIRS neural responses to facial expressions of emotions from the same model. The current study sought to address these gaps by examining the neural, behavioral, and autonomic correlates of emotional face processing in cross-sectional groups of 5- and 7-month-old infants. These age groups were chosen based on previous findings of developmental changes in behavioral and ERP responses to emotional facial expressions in infancy (Bayet and Nelson, 2019; Leppänen and Nelson, 2009), and align with at least one previous fNIRS study that found developmental changes during this period in the ability to extract face identity across changes of expression (Kobayashi et al., 2014). In line with existing studies in this field, we focused on canonical happy, fearful and angry facial expressions. Facial identity varied within each trial to isolate responses to emotional categories independently of the identity of the model, rather than to expression changes of a single model.

2. Materials and methods

2.1. Participants

Cross-sectional groups of 48 5-month-olds (26 males, mean age 153 ± 4 days) and 52 7-month-olds (30 males, mean age 212 ± 5 days) formed the final sample. All were typically developing, with no known pre- or perinatal complications, and born after 37 weeks of gestation. Data from an additional 23 5-month-olds and 34 7-month-olds were collected but excluded due to cap refusal (n = 2 5-month-olds, n = 1 7-month-old), completing fewer than 3 trials per condition (n = 3 5-month-olds), inaccurate cap placement (deviation from ideal by more than 1.5 cm in any direction as determined by post-hoc review of placement photos; n = 5 5-month-olds, n = 10 7-month-olds), more than 25 % of channels (12 of 46) unusable (n = 11 5-month-olds, n = 19 7-month-olds), technical malfunction (n = 1 5-month-old, n = 4 7-month-olds) or experimenter error (n = 1 5-month-old). Additionally, 5 participants were excluded due to self-report of maternal opioid or antipsychotic medication use during pregnancy (n = 3 5-month-olds) or subsequent ASD diagnosis (n = 1 5-month-old, n = 2 7-month-olds). Eye-tracking data from 2 included participants were excluded due to calibration failure (n = 1 7-month-old) or technical problems with the eye-tracker (n = 1 7-month-old). The study was approved by the Institutional Review Board of Boston Children’s Hospital. Written informed consent was provided by the infant’s parent before starting the session.

2.2. Stimuli

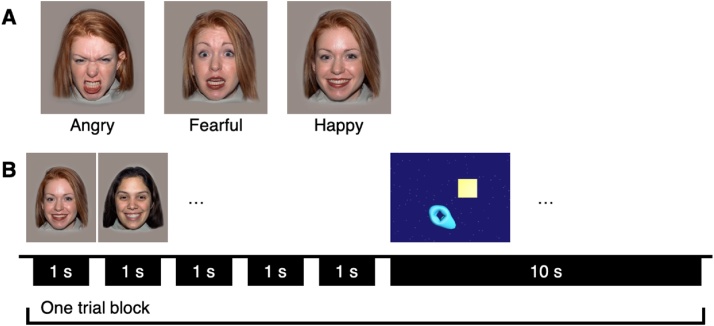

Photographs of women with happy, fearful and angry open-mouth facial expressions from the NimStim dataset (Tottenham et al., 2009) were presented in a block design. Faces were in full color with open mouths and visible teeth. In each block, 5 faces of different women with the same expression (i.e. all happy, all angry, or all fearful) were presented for 1 second each with a random inter-stimulus interval of 0.2−0.4 seconds (Fig. 1). The race of the 5 women in the presented stimuli was matched to maternal self-reported race (Asian: models 15, 16, 17, 18, and 19; Black: models 11, 12, 13, 14, with model 11 shown twice; White: counterbalanced to either models 01, 02, 03, 05, and 06, or models 06, 07, 08, 09, and 10). Stimulus blocks were followed by a 10-second full color video of abstract moving shapes drawn from 6 possible videos (Fig. 1B). Blocks were presented in a counterbalanced sequence, such that up to 10 blocks were presented for each emotional condition for a total of up to 30 blocks as tolerated.

Fig. 1.

Paradigm. A. Example stimuli. B. Example trial in the happy condition. The 5 faces shown within a happy block varied in identity, and all displayed a happy expression.

2.3. Experimental procedure

fNIRS data were collected using a Hitachi ETG-4000 continuous-wave system (wavelengths at 695 nm and 830 nm) with 46 channels distributed over the bilateral frontal and temporal regions (Fig. 2; infant probes with source-detector distances of approximately 3 cm), sampling at 10 Hz. Simultaneous binocular eye-tracking data were recorded with a Tobii T120 eye-tracker sampling at 60 Hz. Infants were held on a caregiver’s lap while they viewed a 17-inch screen approximately 60 cm away. Face stimuli were presented at a visual angle of 14.3° high by 12.2° wide. Parents wore visors to block their view of the presented stimuli and were asked to refrain from talking to the infants during the experiment. Eye-tracker calibration was performed using a 5-point calibration procedure. Once sufficient eye-tracking calibration was obtained (minimum of 3 out of 5 locations) or failed after two attempts (in which case the eye-tracking data were excluded, n = 1 7-month-old), the paradigm began. Stimulus presentation was experimenter-controlled in an adjacent room using E-Prime 2.0 (Psychological Software Products, Harrisburg, PA). Blocks were initiated when the infant was looking at the screen. Another experimenter was seated next to the infant, redirecting attention to the screen between blocks if necessary.
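For readers who want the physical stimulus size implied by the reported visual angles, the conversion can be sketched as follows. This assumes the viewing distance was exactly 60 cm (the text says "approximately") and a flat stimulus centred on the line of sight; the function name is illustrative.

```python
import math

def size_from_visual_angle(angle_deg, distance_cm):
    """On-screen extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

# Reported angles: 14.3 degrees high by 12.2 degrees wide, viewed from ~60 cm.
height_cm = size_from_visual_angle(14.3, 60.0)
width_cm = size_from_visual_angle(12.2, 60.0)
```

Under these assumptions the faces would have measured about 15.1 cm high by 12.8 cm wide on the screen.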

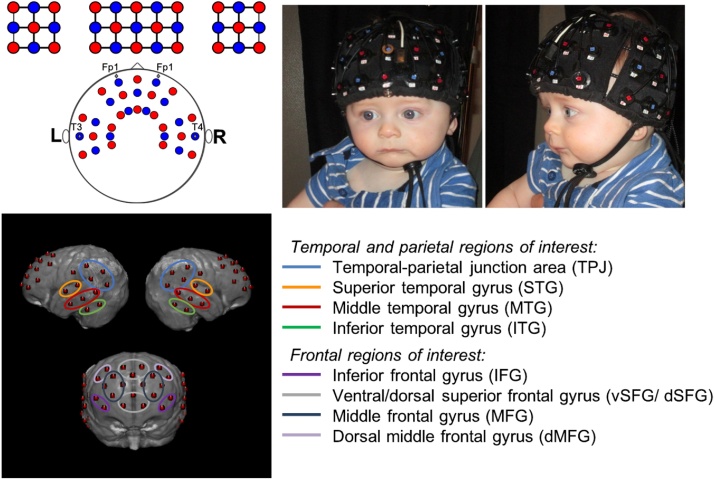

Fig. 2.

Top left, diagram of sources (red) and detectors (blue) and their placement relative to the 10-20 system. Top right, front and side photo of probe on 7-month-old infant. Bottom, modeled channel locations and ROI designations displayed on a 7.5-month-old MRI atlas (Richards et al., 2016). (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article).

2.4. Video coding

Infants were video recorded during the experiment. Videos were coded offline for percent looking to the screen by trained coders. Infants were considered to have attended to a trial block if they looked at the screen for at least 2.5 s of the presented face stimuli (5 s). Inter-coder agreement was assessed from a randomly selected 20 % of the sample. Average inter-coder agreement was 95 % for trial include/exclude decision; 80 % of trials were within 10 % agreement for percent looking between coders. Percent looking to the screen as determined by video coding was only used for fNIRS data quality control. Baseline periods were not coded for infant attention. However, blocks were only initiated when infants were looking at the screen, so that in the time period immediately preceding the stimulus the infants were looking at the crosshair on the screen.

The mean number of accepted blocks per emotional category was 7.1 at 5 months and 7.8 at 7 months. The effect of condition (within-subject factor of emotional category) on the number of accepted blocks, as a main effect or in interaction with age, was not significant (ps > .4). On average, 7-month-olds had more accepted blocks (main effect of age, F(1, 98) = 4.16, p = .044).

Average video-coded percent looking in accepted trials was 88.3 % at 5 months and 88.2 % at 7 months. There were no significant effects of condition, age, or their interaction (all ps > .2) on percent looking in accepted trials.

Despite good eye-tracking calibration before the experiment was started, some participants had large discrepancies between eye-tracker reported looking and video-coded looking time, usually due to participant position changes during the experiment leading to the calibration no longer being valid. Participants’ data were removed from eye-tracking analyses if they did not have at least 3 blocks for every condition with total looking as measured by eye-tracker in agreement (within 25 %) of video coded looking time (n = 17 5-month-olds and n = 4 7-month-olds), leaving n = 30 5-month-olds and n = 47 7-month-olds in combined eye-tracking and fNIRS analyses.

2.5. Anatomical localization

Age-appropriate MRIs were chosen from a database (Richards et al., 2016; Sanchez et al., 2012) to model the underlying anatomy of each channel. A group of 13 MRIs (resp. 15) was used for 5-month-olds (resp. 7-month-olds), with age and head circumference matched to the experimental group (Age of MRI participants: 5 mo 148 ± 13 days, 7 mo 212 ± 17 days, Head circumference of MRI participants: 5 mo 42.8 cm ± 1.6 cm, 7 mo 44.4 ± 2.1 cm). Head probe geometry and cap placement photographs were used to virtually place the optodes on each MRI using previously developed methods (Lloyd-Fox et al., 2014). Photon propagation modeling (Fang, 2010) estimated diffuse optical tomography sensitivity functions from each source-detector pair comprising a channel (Fu and Richards, 2020), confirmed with previously described geometrical methods (Lloyd-Fox et al., 2014; Okamoto and Dan, 2005). Intersecting cortical regions were labeled using the LONI atlas (Fillmore et al., 2015). Structural regions of interest (ROIs) were defined based on the averaged localization of each channel over the group of MRIs. As localization was similar for the 5- and 7-month-olds, the same ROIs were used across ages. Channel location estimation was also done on an average MRI 7.5 month-old template for visualization (Richards et al., 2016). ROIs contained 2–4 channels each, subdividing the bilateral Inferior (ITG), Middle (MTG), and Superior Temporal Gyri (STG), bilateral Temporal-Parietal Junction (TPJ), bilateral Inferior (IFG), Middle (MFG), and dorsal Middle (dMFG) Frontal Gyri, and medial ventral (vSFG) and dorsal (dSFG) Superior Frontal Gyri (Fig. 2). Participants had to have greater than 50 % of the channels valid in each ROI to be included in the group analysis for a particular region, leading to some non-uniformity in the number of participants included in each ROI.
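The ROI inclusion rule at the end of this section can be written as a small check. The sketch below is illustrative only: the channel numbers and the `channel_valid` mapping are hypothetical and are not taken from the study's probe layout.

```python
def roi_is_usable(channel_valid, roi_channels):
    """True if more than 50 % of the ROI's channels are valid for a participant.

    channel_valid : dict mapping channel number -> bool (missing = invalid)
    roi_channels  : list of channel numbers belonging to the ROI
    """
    n_valid = sum(1 for ch in roi_channels if channel_valid.get(ch, False))
    return n_valid / len(roi_channels) > 0.5

# Hypothetical participant with channels 2 and 4 unusable.
example_valid = {1: True, 2: False, 3: True, 4: False}
```

Because the threshold is strict, a two-channel ROI requires both channels and a four-channel ROI requires at least three, which is one reason the number of participants differs across ROIs.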

2.6. fNIRS data analysis

fNIRS data analysis was performed using Homer2 (Huppert et al., 2009) in MATLAB. Channels were defined as unusable, and excluded, if their values were greater than 98 % or less than 2 % of the total raw range of intensity data for more than 5 s. Raw data were converted to optical density, and wavelet motion correction with iqr = 0.5 was applied (Behrendt et al., 2018). Residual motion artifacts were identified (tMotion = 1, tMask = 1, stdevThresh = 50, ampThresh = 1); affected blocks were excluded. Data were bandpass filtered from 0.05 to 0.8 Hz to eliminate drift and cardiac artifact. The modified Beer-Lambert law (DPF = 5) was applied to convert optical density to chromophore concentration (Duncan et al., 1995). Blocks were extracted from 2 seconds before to 16 seconds after stimulus onset, using the pre-stimulus time for baseline correction. As blocks were spaced 18 seconds apart or more, they did not overlap. Concentrations were z-scored by dividing by the standard deviation over the pre-stimulus baseline period.
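As a rough illustration of the last three steps (block extraction, baseline correction, and z-scoring), the following Python sketch operates on a single channel's concentration trace. It is not the Homer2 code used in the study. The function name, the use of the sample standard deviation (`ddof=1`), and the choice to drop blocks that run past the recording or have a flat baseline are assumptions made for the example.

```python
import numpy as np

FS_HZ = 10.0               # fNIRS sampling rate reported in Section 2.3
PRE_S, POST_S = 2.0, 16.0  # epoch limits relative to stimulus onset

def epoch_and_zscore(conc, onsets_s, fs=FS_HZ, pre_s=PRE_S, post_s=POST_S):
    """Cut blocks from one channel and z-score them by the baseline SD.

    conc     : 1-D array of concentration values for one channel
    onsets_s : iterable of stimulus onset times in seconds
    Returns an array of shape (n_blocks, n_samples_per_block).
    """
    conc = np.asarray(conc, dtype=float)
    n_pre = int(round(pre_s * fs))
    n_post = int(round(post_s * fs))
    epochs = []
    for onset in onsets_s:
        i0 = int(round(onset * fs))
        if i0 - n_pre < 0 or i0 + n_post > conc.size:
            continue  # assumption: skip blocks that run off the recording
        seg = conc[i0 - n_pre:i0 + n_post]
        baseline = seg[:n_pre]
        seg = seg - baseline.mean()   # baseline correction
        sd = baseline.std(ddof=1)     # assumption: sample SD of the baseline
        if sd == 0:
            continue  # assumption: drop blocks with a flat baseline
        epochs.append(seg / sd)       # z-score by the baseline SD
    if not epochs:
        return np.empty((0, n_pre + n_post))
    return np.array(epochs)

# Synthetic 40 s trace alternating between 0 and 2; onsets at 10 s and 39 s.
demo_trace = np.tile([0.0, 2.0], 200)
demo_epochs = epoch_and_zscore(demo_trace, [10.0, 39.0])
```

In the synthetic example the second onset is discarded because its 16-second post-stimulus window extends beyond the end of the trace.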

The grand mean hemodynamic response over all participants and ROIs was used to select a time-window of interest ± 2 s around its peak. Group responses were statistically assessed by one sample t-tests vs. zero response, corrected for multiple comparisons at the False Discovery Rate (alpha = 0.05).
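The window selection can be sketched in a few lines. This is a minimal illustration, assuming the grand mean is available as a 1-D array sampled on a known time axis; the function names are hypothetical. With a peak at 8.6 s, the ± 2 s rule gives the 6.6 to 10.6 s window reported in Section 3.1.

```python
import numpy as np

def window_around_peak(grand_mean, times_s, half_width_s=2.0):
    """Return (t_start, t_end) of a window centred on the grand-mean peak."""
    grand_mean = np.asarray(grand_mean, dtype=float)
    times_s = np.asarray(times_s, dtype=float)
    t_peak = times_s[np.argmax(grand_mean)]
    return t_peak - half_width_s, t_peak + half_width_s

def mean_in_window(response, times_s, window):
    """Average one response time-course over the window (edges inclusive)."""
    response = np.asarray(response, dtype=float)
    times_s = np.asarray(times_s, dtype=float)
    mask = (times_s >= window[0]) & (times_s <= window[1])
    return response[mask].mean()

# Synthetic grand mean peaking at 8.6 s on a 10 Hz axis from -2 s to 15.9 s.
demo_times = np.arange(-20, 160) / 10.0
demo_grand_mean = np.exp(-((demo_times - 8.6) ** 2) / 8.0)
demo_window = window_around_peak(demo_grand_mean, demo_times)
```

Selecting the window from the grand mean over all conditions, participants, and ROIs keeps the choice independent of any single condition contrast.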

Block-related heart rate changes were extracted from the fNIRS data using previously described methods (Perdue et al., 2014). Changes in heart rate from the baseline period were calculated by averaging over the first 6 seconds of the trial, when the stimulus was presented. Changes in heart rate could be positive or negative; positive heart rate responses (accelerations) would indicate increases in arousal, while negative heart rate responses (decelerations) would indicate increases in attention.

2.7. Eye-tracking analysis

Rectangular “eyes” and “mouth” areas of interest (AOI) of equal sizes were defined, bounded by the sides of the face and by either the hairline on the top and the midpoint of the face as the bottom (“eye” AOI), or the midpoint of the face on the top and the chin of the face as the bottom (“mouth” AOI). Total looking time to the eye and mouth AOIs while the face stimulus was on the screen was calculated for each block, then averaged over blocks for each condition and participant. In contrast to using percent looking time to the eye and mouth as a percentage of total looking time, this approach was meant to derive an index of the amount of “eye” or “mouth” visual information processed rather than characterize the relative allocation of attention to each feature controlling for total looking time.
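The accumulated looking-time measure can be illustrated with a short sketch. It assumes gaze samples arrive at the eye-tracker's 60 Hz rate as (x, y) screen coordinates with y increasing downward, that lost samples are marked with `None`, and that the face is described by a single bounding box split at its vertical midpoint. The class and function names, and the example coordinates, are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rect:
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x, y):
        # Bottom edge is exclusive so the midline belongs to one AOI only.
        return self.left <= x <= self.right and self.top <= y < self.bottom

def split_face(face):
    """Split a face box at its vertical midpoint into (eyes, mouth) AOIs."""
    mid = (face.top + face.bottom) / 2.0
    eyes = Rect(face.left, face.top, face.right, mid)
    mouth = Rect(face.left, mid, face.right, face.bottom)
    return eyes, mouth

def looking_time_s(gaze_xy, aoi, fs_hz=60.0):
    """Total time in seconds that valid gaze samples fall inside the AOI."""
    n_inside = sum(
        1 for x, y in gaze_xy
        if x is not None and y is not None and aoi.contains(x, y)
    )
    return n_inside / fs_hz

# Hypothetical block: 2 s on the eyes, 1 s on the mouth, some lost/off-face samples.
eyes_aoi, mouth_aoi = split_face(Rect(100, 100, 300, 400))
demo_gaze = ([(150, 150)] * 120 + [(150, 300)] * 60
             + [(None, None)] * 30 + [(50, 50)] * 10)
```

Summing samples rather than normalising by total looking time mirrors the stated aim of indexing the amount of eye or mouth information processed.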

2.8. Statistical analysis

Participants were included in a particular analysis if they had complete data to fit the model being tested. Differences in oxyHb responses, looking times, mouth-looking times, and heart rate responses based on the effects of condition (happy, fear, angry; within-subject), age (5-months, 7-months; between-subject), and their interaction were tested using repeated-measures ANOVAs. Separate models were run for each ROI. When no significant interaction term was found, the interaction was removed, and the model was rerun. Separate models were run to test for condition-by-eye-looking or condition-by-mouth-looking effects on oxyHb responses in each ROI.

3. Results

3.1. fNIRS activations

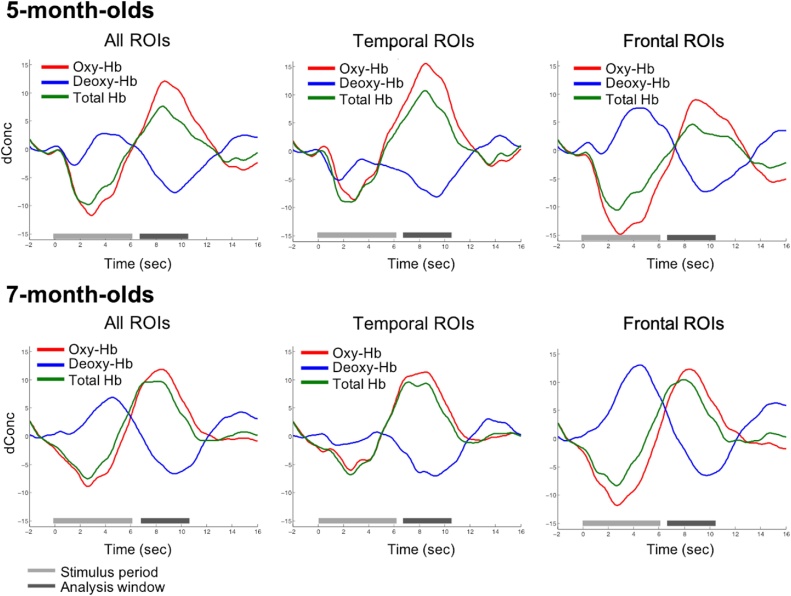

Grand-average peak oxyHb activation occurred at 8.6 s post-onset (Fig. 3; 5-month-olds: 8.7 s over all ROIs, 8.5 s over temporal ROIs only, and 9.0 s over frontal ROIs only; 7-month-olds: 8.4 s over all ROIs, temporal ROIs only, and frontal ROIs only). Therefore, a time-window of interest from 6.6 to 10.6 seconds was chosen to extract mean oxyHb activations for each participant in each condition and ROI. To align with prior work in infants, our analysis focused on oxyHb activation, but we also report deoxyHb for completeness. Grand-average peak deoxyHb activation (negative deflection) occurred at 9.5 s post-onset (9.5 s in 5-month-olds, 9.4 s in 7-month-olds).

Fig. 3.

Time-course of grand-mean hemodynamic response averaged over conditions, participants, and ROIs for each age.

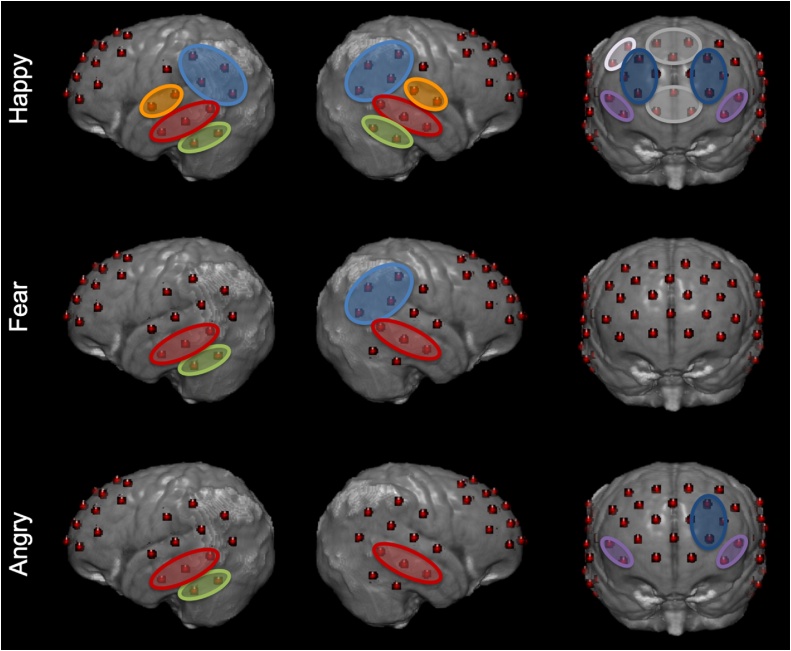

Significant activations after FDR correction are reported in Table 1 and Fig. 4. Significant oxyHb activation to happy faces was found in the vSFG, dSFG, right dMFG, and bilateral MFG, IFG, MTG, STG, ITG and TPJ. OxyHb activation to fearful faces was seen in the bilateral MTG, rTPJ, and left ITG. OxyHb activation to angry faces was seen in the left MFG and ITG, and in the bilateral IFG and MTG. Significant deoxyHb activations to happy faces were seen in the bilateral MFG, bilateral IFG, left dMFG, left STG, and right TPJ, and in the left MTG for fearful faces. No significant deoxyHb activations were seen to angry faces after FDR correction. Results in each age group are reported in Supplemental Tables 1–2 and Supplemental Figs. 1–2.

Table 1.

ROIs with significant activation in combined 5- and 7-month-old cohort.

| Region | ROI | Corrected p (Happy) | Corrected p (Fear) | Corrected p (Angry) | t (Happy) | t (Fear) | t (Angry) | df |
|---|---|---|---|---|---|---|---|---|
| oxyHb | | | | | | | | |
| Frontal | vSFG | .009 | | | 2.74 | | | 99 |
| | dSFG | .0014 | | | 3.61 | | | 87 |
| | rMFG | .049 | | | 2.02 | | | 93 |
| | lMFG | .0065 | | .015 | 2.95 | | 2.84 | 96 |
| | rIFG | .0065 | | .0068 | 2.95 | | 3.45 | 91 |
| | lIFG | <.001 | | .012 | 3.84 | | 3.13 | 96 |
| | rdMFG | .002 | | | 3.46 | | | 79 |
| Temporal | rMTG | <.001 | <.001 | .014 | 5.27 | 4.54 | 2.95 | 98 |
| | lMTG | <.001 | .011 | <.001 | 4.05 | 3.16 | 4.46 | 99 |
| | rSTG | .0074 | | | 2.89 | | | 77 |
| | lSTG | .013 | | | 2.60 | | | 68 |
| | rTPJ | <.001 | .018 | | 4.72 | 2.92 | | 95 |
| | lTPJ | .0026 | | | 3.32 | | | 94 |
| | rITG | .001 | | | 3.74 | | | 90 |
| | lITG | .009 | .0027 | .014 | 2.76 | 3.73 | 2.93 | 90 |
| deoxyHb | | | | | | | | |
| Frontal | rMFG | .046 | | | −2.83 | | | 93 |
| | lIFG | .014 | | | −3.44 | | | 96 |
| Temporal | lMTG | | .031 | | | −3.1826 | | 99 |

Fig. 4.

Significant oxyHb activations for each emotional category after correcting for multiple comparisons displayed on a 7.5-month-old MRI atlas (Richards et al., 2016).

No significant effect of age or age-by-condition interaction on oxyHb responses was found in any ROI. There was an exploratory effect of condition on oxyHb responses in the dSFG, with greater activation to happy versus fearful faces (Supplementary Results, Supplementary Fig. 3). However, this effect did not survive FDR-correction for multiple comparisons over ROIs.
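To make concrete what surviving FDR correction over ROIs means, the sketch below computes adjusted p-values with the Benjamini-Hochberg step-up procedure. The text does not name the specific FDR procedure used, so Benjamini-Hochberg is an assumption here, and the example p-values are invented for illustration rather than taken from the study.

```python
import numpy as np

def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    # Scale the k-th smallest p-value by m / k.
    scaled = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity, working down from the largest p-value.
    adjusted_sorted = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.clip(adjusted_sorted, 0.0, 1.0)
    return adjusted

# Invented uncorrected p-values for four ROIs.
demo_adjusted = bh_adjust([0.01, 0.04, 0.03, 0.005])
survives = demo_adjusted < 0.05
```

An effect "survives" when its adjusted p-value stays below the chosen alpha (0.05 in this study), so an uncorrected p-value just under .05 in a single ROI will often fail once many ROIs are tested together.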

3.2. Eye-tracking

Infants looked to the eyes for 3.5 seconds and to the mouth for 1.0 seconds on average during included blocks. Eye-looking and mouth-looking were negatively correlated (r² = .53, p < 0.001). No significant effect of age or age-by-condition interaction on eye-looking or mouth-looking was found. A marginally significant effect of condition on eye-looking was found (F(2, 224) = 2.85, p = .060), with more looking to the eyes of fear faces compared to angry faces (t(75) = 2.33, p = .023) but no significant difference in eye-looking between happy and angry (t(75) = 0.73, p = .46) or happy and fear faces (t(75) = 1.62, p = .11). A significant effect of condition on mouth-looking was found (F(2, 224) = 4.40, p = .013), with more looking to the mouth of angry faces as compared to happy (t(75) = 2.39, p = .019) and fearful faces (t(75) = 2.67, p = .009), but no difference in mouth-looking between happy and fearful faces (t(75) = 0.48, p = .64). These effects were observed despite all stimuli having open mouths with visible teeth.

3.3. Heart rate responses

No significant effects of age, condition, or their interaction on heart rate responses were found (all ps > 0.5).

3.4. Combined fNIRS and eye-tracking analyses

We next investigated whether fNIRS responses varied as a function of the interaction of accumulated looking time to the eyes or mouth and emotion condition, as emotional category affects which feature, eyes or mouth, may be most salient or diagnostic. Differences in oxyHb responses based on looking time were tested using repeated-measures condition-by-accumulated-looking-time ANOVAs in each ROI, separately for eye- and mouth-looking. While we did not find differences in responses due to age in looking behaviors or brain activation on their own, models controlled for age to eliminate the possibility that any relationships could be driven by age-related effects. Exploratory results, uncorrected for multiple comparisons over ROIs, are detailed in the Supplementary Results and Supplementary Figs. 4−5. Briefly, exploratory associations were found between greater eye looking and lower activations in the dSFG, greater mouth-looking and greater activations in the dSFG and rIFG, greater eye-looking and lower rSTG activations to angry faces, and greater mouth-looking and lTPJ activations to happy faces (Supplementary Results and Supplementary Figs. 4–5). None of these effects survived FDR-correction for multiple-comparisons, and no other significant effects were found.

4. Discussion

We observed significant frontal and temporal fNIRS activation to emotional faces at both 5 and 7 months of age in a paradigm that systematically varied facial identity within each facial expression block. Activations were observed in areas broadly consistent with previous adult fMRI studies, including temporal areas (Haxby et al., 2001), IFG (Sabatinelli et al., 2011), TPJ, and medial prefrontal SFG (Etkin et al., 2011). Specifically, we found broad bilateral temporal and frontal responses to happy faces, in line with previous infant studies in which facial identity remained unvarying within each block (Grossmann et al., 2018; Krol et al., 2019; Minagawa-Kawai et al., 2009). Exploratory analyses of the current data revealed greater frontal (dSFG) activation to happy versus fearful faces, robust to changes in facial identity; this finding did not survive correction for multiple comparisons. At least one fMRI study in adults (Zhang et al., 2016) and another fNIRS study in infants (Grossmann et al., 2018) also do not report differential activations to different types of facial emotions over temporal cortices. Interestingly, and contrary to previous reports in behavioral and ERP paradigms (Leppänen and Nelson, 2009; Xie et al., 2018), no differences between 5- and 7-month-olds were found. Because ERP and fNIRS paradigms differ in design, response temporality, and underlying neural sources, some or all of these differences may account for the differences in findings. That is, 5- and 7-month-olds may differ in their fast, neurophysiological responses to isolated facial expression stimuli, but not in their slow-building hemodynamic responses to blocks of facial expression stimuli of varying identities. Alternatively, neural sources accounting for differential neurophysiological responses depending on age or facial expression condition as captured by ERPs may simply not be accessible by fNIRS. For example, Xie et al. (2018) estimated source activity from ERP responses to facial expressions of emotion (happy, fear, anger) in 5-, 7-, and 12-month-old infants: differential responses based on emotion or age were most evident in regions of interest corresponding to the occipital face area, posterior cingulate, and fusiform face area, none of which were directly accessible by the fNIRS apparatus used in the current study. Xie et al. (2018) also reported a lack of differential responses to different types of emotional facial expressions in the superior temporal regions of interest, which is consistent with the current fNIRS findings.

Behavioral studies have demonstrated that the ability to extract the emotional expression of faces across identities emerges later in development than the ability to extract the emotional expression of a single model (Bayet and Nelson, 2019). The broad neural activations to happy expressions that are reported here, occurring as face identity varied within each block, may be interpreted as further evidence that infants at this age can extract happy expressions from faces of different identities. Variations of facial identity within each block likely explain the lack of differential responses to types of emotional faces over temporal areas, in contrast to Nakato et al. (2011), and over the right IFG, in contrast to Krol et al. (2019). Alternatively, other methodological differences, such as source-detector distances (we used 3 cm; Nakato et al. used 2 cm and Krol et al. used 2.5 cm) may explain these different findings.

Infants looked marginally longer to the eyes of fearful faces, and more to the mouth of angry faces, regardless of age. These differences may reflect special salience of fearful eyes (Adolphs et al., 2005; Dadds et al., 2008; Eisenbarth and Alpers, 2011). More looking to the mouth for angry faces has not been consistently reported in adults (Beaudry et al., 2014). Critically, all expressions presented in this study had open “toothy” mouths, suggesting that the low-level perception of “toothiness” (Caron et al., 1985) alone cannot account for this finding. We did not observe condition differences in overall looking time or heart rate responses, suggesting that emotional category did not impact arousal or attentiveness. The current block design, and variations of facial identity within each block, likely explain the differences in findings compared to earlier findings (Peltola et al., 2013, 2011). The current negative results are aligned with the notion that young infants may not necessarily extract or infer affective meaning from the emotional facial expressions that they can perceptually differentiate (Ruba and Repacholi, 2019).

Exploratory associations were found between looking behavior and brain responses in the dSFG, rIFG, rSTG, and lTPJ. As none survived correction for multiple comparisons over ROIs, these findings will be discussed only briefly. Less looking to the eyes and more looking to the mouth was related to greater dSFG activations across all emotion conditions. The eye region is particularly relevant for decoding facial expressions (Eisenbarth and Alpers, 2011), and prefrontal deactivation is associated with visual attention in children (Fekete et al., 2014), adults (Lachaux et al., 2008), and infants (Xu et al., 2017). Thus, a decrease in dSFG activations with more eye looking may reflect increased attentional engagement. An exploratory relationship between rIFG activation and greater mouth-looking was found, in line with another study reporting a positive correlation between mouth-looking and IFG activation in infants during speech perception (Altvater-Mackensen and Grossmann, 2016). Increased mouth-looking to happy faces was also associated with increased lTPJ activation, consistent with the diagnostic value of the mouth-region in decoding happy faces (Smith et al., 2005) and the sensitivity of the infant lTPJ to social stimuli (McDonald and Perdue, 2018). Greater eye-looking to angry faces was associated with decreased activation in the rSTG, perhaps reflecting the failure to adequately detect and process the angry mouth.

These findings should be interpreted in the light of the following limitations. The relatively high attrition rate, while typical of infant neuroimaging studies (Lloyd-Fox et al., 2010), may limit the generalizability of current findings. Our correlational approach cannot determine if differences in brain activations were causally driving differences in looking behavior, or vice-versa, and other factors might mediate the associations reported. The spatial resolution of the fNIRS apparatus used in the current study did not allow us to examine fine-grained, multivariate patterns of response that may distinguish between facial emotions in infants as they do in adults (Peelen et al., 2010; Skerry and Saxe, 2014). Cross-sectional designs are inherently limited in their ability to detect developmental changes. Future work including high-density fNIRS in a longitudinal prospective design may provide a more complete picture of emotional face processing in infancy. While all stimuli blocks were presented while infants were looking to the screen, there is still a possibility that signals in the baseline period may contain responses to uncontrolled stimuli in the testing environment. This is a common concern with infant studies that may be alleviated in future work by attempting to enforce infant looking during baseline periods.

In conclusion, we simultaneously recorded fNIRS neural activation, eye-tracking, and heart rate responses to happy, fearful, and angry facial expressions of faces of varying identities in 5- and 7-month-old infants. Temporo-parietal and frontal activations to happy and angry faces and temporo-parietal activations to fear faces were observed; activations were particularly evident in response to happy faces. Infants looked longer to the mouth region of angry than happy or fearful faces, and no differences in behavior or neural activations were observed between 5- and 7-month-olds.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

We thank the infants and their families for their participation. This work was supported by the National Institutes of Health [NIMH #MH078829 awarded to CAN, NICHD #HD018942 to JER] and a Fulbright grant awarded to HFB. Assistance with data collection was provided by Lindsay Bowman, Dana Bullister, Anna Fasman, Sarah McCormick, Lina Montoya, Ross Vanderwert, and Anna Zhou. Assistance with eye-tracking data processing was provided by Helga Miguel. Statistical advice was provided by Kush Kapur through the Harvard Catalyst program.

Footnotes

Supplementary material related to this article can be found, in the online version, at doi: https://doi.org/10.1016/j.dcn.2020.100882.

References

- Adolphs R., Gosselin F., Buchanan T.W., Tranel D., Schyns P., Damasio A.R. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433(7021):68–72. doi: 10.1038/nature03086.

- Altvater-Mackensen N., Grossmann T. The role of left inferior frontal cortex during audiovisual speech perception in infants. NeuroImage. 2016;133:14–20. doi: 10.1016/j.neuroimage.2016.02.061.

- Amso D., Fitzgerald M., Davidow J., Gilhooly T., Tottenham N. Visual exploration strategies and the development of infants’ facial emotion discrimination. Front. Psychol. 2010;1:180. doi: 10.3389/fpsyg.2010.00180.

- Bayet L., Nelson C.A. The perception of facial emotion in typical and atypical development. In: Lobue V., Perez-Edgar K., Buss K.A., editors. Handbook of Emotional Development. 2019.

- Beaudry O., Roy-Charland A., Perron M., Cormier I., Tapp R. Featural processing in recognition of emotional facial expressions. Cogn. Emot. 2014;28(3):416–432. doi: 10.1080/02699931.2013.833500.

- Behrendt H.F., Firk C., Nelson C.A., III, Perdue K.L. Motion correction for infant functional near-infrared spectroscopy with an application to live interaction data. Neurophotonics. 2018;5(1):015004. doi: 10.1117/1.NPh.5.1.015004.

- Caron R.F., Caron A.J., Myers R.S. Do infants see emotional expressions in static faces? Child Dev. 1985;56:1552–1560.

- Courage M.L., Reynolds G.D., Richards J.E. Infants’ attention to patterned stimuli: developmental change from 3 to 12 months of age. Child Dev. 2006;77(3):680–695. doi: 10.1111/j.1467-8624.2006.00897.x.

- Dadds M.R., El Masry Y., Wimalaweera S., Guastella A.J. Reduced eye gaze explains “fear blindness” in childhood psychopathic traits. J. Am. Acad. Child Adolesc. Psychiatry. 2008;47(4):455–463. doi: 10.1097/CHI.0b013e31816407f1.

- Duncan A., Meek J.H., Clemence M., Elwell C.E., Tyszczuk L., Cope M., Delpy D. Optical pathlength measurements on adult head, calf and forearm and the head of the newborn infant using phase resolved optical spectroscopy. Phys. Med. Biol. 1995;40:295–304. doi: 10.1088/0031-9155/40/2/007.

- Eisenbarth H., Alpers G.W. Happy mouth and sad eyes: scanning emotional facial expressions. Emotion. 2011;11:860–865. doi: 10.1037/a0022758.

- Etkin A., Egner T., Kalisch R. Emotional processing in anterior cingulate and medial prefrontal cortex. Trends Cogn. Sci. 2011;15(2):85–93. doi: 10.1016/j.tics.2010.11.004.

- Fang Q. Mesh-based Monte Carlo method using fast ray-tracing in Plücker coordinates. Biomed. Opt. Express. 2010;1(1):165–175. doi: 10.1364/BOE.1.000165.

- Fekete T., Beacher F.D.C.C., Cha J., Rubin D., Mujica-Parodi L.R. Small-world network properties in prefrontal cortex correlate with predictors of psychopathology risk in young children: a NIRS study. NeuroImage. 2014;85:345–353. doi: 10.1016/j.neuroimage.2013.07.022.

- Fillmore P.T., Richards J.E., Phillips-Meek M.C., Cryer A., Stevens M. Stereotaxic magnetic resonance imaging brain atlases for infants from 3 to 12 months. Dev. Neurosci. 2015;37(6):515–532. doi: 10.1159/000438749.

- Fu X., Richards J.E. Age-related changes in diffuse optical tomography sensitivity to the cortex in infancy. bioRxiv (Preprint). 2020. doi: 10.1101/2020.08.22.262477.

- Fusar-Poli P., Placentino A., Carletti F., Landi P., Allen P., Surguladze S. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J. Psychiatry Neurosci. 2009;34:418–432. doi: 10.1016/S1180-4882(09)50077-7.

- Gliga T., Csibra G. Seeing the face through the eyes: a developmental perspective on face expertise. Prog. Brain Res. 2007;164:323–339. doi: 10.1016/S0079-6123(07)64018-7.

- Grossmann T., Missana M., Krol K.M. The neurodevelopmental precursors of altruistic behavior in infancy. PLoS Biol. 2018;16. doi: 10.1371/journal.pbio.2005281.

- Haxby J.V., Gobbini I.M., Furey M.L., Ishai A., Schouten J.L., Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Hunnius S., de Wit T.C.J., Vrins S., von Hofsten C. Facing threat: infants’ and adults’ visual scanning of faces with neutral, happy, sad, angry, and fearful emotional expressions. Cogn. Emot. 2011;25(2):193–205. doi: 10.1080/15298861003771189.

- Huppert T.J., Diamond S.G., Franceschini M.A., Boas D.A. HomER: a review of time-series analysis methods for near-infrared spectroscopy of the brain. Appl. Opt. 2009;48(10):D280–D298. doi: 10.1364/AO.48.00D280.

- Ichikawa H., Kanazawa S., Yamaguchi M.K. Infants recognize identity in a dynamic facial animation that simultaneously changes its identity and expression. Vis. Cogn. 2018;26(3):156–165. doi: 10.1080/13506285.2017.1399949.

- Kesler-West M.L., Andersen A.H., Smith C.D., Avison M.J., Davis C.E., Kryscio R.J., Blonder L.X. Neural substrates of facial emotion processing using fMRI. Cogn. Brain Res. 2001;11:213–226. doi: 10.1016/S0926-6410(00)00073-2.

- Kobayashi M., Otsuka Y., Kanazawa S., Yamaguchi M.K., Kakigi R. The processing of faces across non-rigid facial transformation develops at 7 month of age: a fNIRS-adaptation study. BMC Neurosci. 2014;15:81. doi: 10.1186/1471-2202-15-81.

- Krol K.M., Puglia M.H., Morris J.P., Connelly J.J., Grossmann T. Epigenetic modification of the oxytocin receptor gene is associated with emotion processing in the infant brain. Dev. Cogn. Neurosci. 2019;37. doi: 10.1016/j.dcn.2019.100648.

- Lachaux J.P., Jung J., Mainy N., Dreher J.C., Bertrand O., Baciu M. Silence is golden: transient neural deactivation in the prefrontal cortex during attentive reading. Cereb. Cortex. 2008;18(2):443–450. doi: 10.1093/cercor/bhm085.

- Leppänen J.M., Nelson C.A. Tuning the developing brain to social signals of emotions. Nat. Rev. Neurosci. 2009;10:37–47. doi: 10.1038/nrn2554.

- Leppänen J.M., Richmond J., Vogel-Farley V.K., Moulson M.C., Nelson C.A. Categorical representation of facial expressions in the infant brain. Infancy. 2009;14(3):346–362. doi: 10.1080/15250000902839393.

- Lloyd-Fox S., Blasi A., Elwell C.E. Illuminating the developing brain: the past, present and future of functional near infrared spectroscopy. Neurosci. Biobehav. Rev. 2010;34:269–284. doi: 10.1016/j.neubiorev.2009.07.008.

- Lloyd-Fox S., Richards J.E., Blasi A., Murphy D.G.M., Elwell C.E., Johnson M.H. Coregistering functional near-infrared spectroscopy with underlying cortical areas in infants. Neurophotonics. 2014;1. doi: 10.1117/1.NPh.1.2.025006.

- McDonald N.M., Perdue K.L. The infant brain in the social world: moving toward interactive social neuroscience with functional near-infrared spectroscopy. Neurosci. Biobehav. Rev. 2018;87:38–49. doi: 10.1016/j.neubiorev.2018.01.007.

- Minagawa-Kawai Y., Matsuoka S., Dan I., Naoi N., Nakamura K., Kojima S. Prefrontal activation associated with social attachment: facial-emotion recognition in mothers and infants. Cereb. Cortex. 2009;19:284–292. doi: 10.1093/cercor/bhn081.

- Nakamura K., Kawashima R., Ito K., Sugiura M., Kato T., Nakamura A. Activation of the right inferior frontal cortex during assessment of facial emotion. J. Neurophysiol. 1999;82(3):1610–1614. doi: 10.1152/jn.1999.82.3.1610.

- Nakato E., Otsuka Y., Kanazawa S., Yamaguchi M.K., Kakigi R. Distinct differences in the pattern of hemodynamic response to happy and angry facial expressions in infants - A near-infrared spectroscopic study. NeuroImage. 2011;54:1600–1606. doi: 10.1016/j.neuroimage.2010.09.021.

- Narumoto J., Okada T., Sadato N., Fukui K., Yonekura Y. Attention to emotion modulates fMRI activity in human right superior temporal sulcus. Cogn. Brain Res. 2001;12(2):225–231. doi: 10.1016/s0926-6410(01)00053-2.

- Okamoto M., Dan I. Automated cortical projection of head-surface locations for transcranial functional brain mapping. NeuroImage. 2005;26(1):18–28. doi: 10.1016/j.neuroimage.2005.01.018.

- Peelen M.V., Atkinson A.P., Vuilleumier P. Supramodal representations of perceived emotions in the human brain. J. Neurosci. 2010;30:10127–10134. doi: 10.1523/jneurosci.2161-10.2010.

- Peltola M.J., Leppänen J.M., Hietanen J.K. Enhanced cardiac and attentional responding to fearful faces in 7-month-old infants. Psychophysiology. 2011;48:1291–1298. doi: 10.1111/j.1469-8986.2011.01188.x.

- Peltola M.J., Hietanen J.K., Forssman L., Leppänen J.M. The emergence and stability of the attentional bias to fearful faces in infancy. Infancy. 2013;18:905–926. doi: 10.1111/infa.12013.

- Perdue K.L., Westerlund A., McCormick S.A., Nelson C.A. Extraction of heart rate from functional near-infrared spectroscopy in infants. J. Biomed. Opt. 2014;19(6):067010. doi: 10.1117/1.JBO.19.6.067010.

- Perdue K.L., Edwards L.A., Tager-Flusberg H., Nelson C.A. Differing developmental trajectories in heart rate responses to speech stimuli in infants at high and low risk for autism spectrum disorder. J. Autism Dev. Disord. 2017. doi: 10.1007/s10803-017-3167-4.

- Richards J.E., Casey B.J. Heart rate variability during attention phases in young infants. Psychophysiology. 1991;28(1):43–53. doi: 10.1111/j.1469-8986.1991.tb03385.x.

- Richards J.E., Sanchez C., Phillips-Meek M., Xie W. A database of age-appropriate average MRI templates. NeuroImage. 2016;124:1254–1259. doi: 10.1016/j.neuroimage.2015.04.055.

- Ruba A.L., Repacholi B.M. Do preverbal infants understand discrete facial expressions of emotion? Emot. Rev. 2019:1–16. doi: 10.1177/1754073919871098.

- Sabatinelli D., Fortune E.E., Li Q., Siddiqui A., Krafft C., Oliver W.T. Emotional perception: meta-analyses of face and natural scene processing. NeuroImage. 2011;54(3):2524–2533. doi: 10.1016/j.neuroimage.2010.10.011.

- Sanchez C.E., Richards J.E., Almli C.R. Neurodevelopmental MRI brain templates for children from 2 weeks to 4 years of age. Dev. Psychobiol. 2012;54:77–91. doi: 10.1002/dev.20579.

- Skerry A.E., Saxe R. A common neural code for perceived and inferred emotion. J. Neurosci. 2014;34:15997–16008. doi: 10.1523/JNEUROSCI.1676-14.2014.

- Smith M.L., Cottrell G.W., Gosselin F., Schyns P.G. Transmitting and decoding facial expressions. Psychol. Sci. 2005;16(3):184–189. doi: 10.1111/j.0956-7976.2005.00801.x.

- Soussignan R., Dollion N., Schaal B., Durand K., Reissland N., Baudouin J.Y. Mimicking emotions: how 3–12-month-old infants use the facial expressions and eyes of a model. Cogn. Emot. 2017;32(4):827–842. doi: 10.1080/02699931.2017.1359015.

- Tottenham N., Tanaka J.W., Leon A.C., McCarry T., Nurse M., Hare T.A. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- Vuilleumier P., Armony J., Driver J., Dolan R. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Winston J.S., Henson R.N.A., Fine-Goulden M.R., Dolan R.J. fMRI-adaptation reveals dissociable neural representations of identity and expression in face perception. J. Neurophysiol. 2004;92(3):1830–1839. doi: 10.1152/jn.00155.2004.

- Xie W., McCormick S.A., Westerlund A., Bowman L.C., Nelson C.A. Neural correlates of facial emotion processing in infancy. Dev. Sci. 2018. doi: 10.1111/desc.12758.

- Xu M., Hoshino E., Yatabe K., Matsuda S., Sato H., Maki A. Prefrontal function engaging in external-focused attention in 5- to 6-month-old infants: a suggestion for default mode network. Front. Hum. Neurosci. 2017;10:676. doi: 10.3389/fnhum.2016.00676.

- Zhang H., Japee S., Nolan R., Chu C., Liu N., Ungerleider L.G. Face-selective regions differ in their ability to classify facial expressions. NeuroImage. 2016;130:77–90. doi: 10.1016/j.neuroimage.2016.01.045.
