Abstract

Background

The availability of valid and reliable instruments, based on current competency frameworks, is essential to respond to the need for accurate measurement of the competency of registered nurses in evidence‐based practice (EBP).

Aims

To develop and validate a questionnaire capable of measuring EBP competencies in registered nurses following the competency framework developed by Melnyk et al. (2014).

Methods

The study was conducted in two stages: (a) creation of the questionnaire based on an operational definition of the construct, followed by face and content validation by 10 experts and cognitive piloting; and (b) psychometric evaluation of the questionnaire in a cross‐sectional, multicenter study between February and November 2018. The questionnaire’s reliability and construct validity were analyzed (exploratory [EFA] and confirmatory [CFA] factor analyses).

Results

First phase: The initial version of EBP‐COQ Prof© contained 50 items grouped into four dimensions (attitudes, knowledge, skills, and utilization). After two expert validation rounds, a 35‐item version was obtained with a content validity index of 0.86. Second phase: The questionnaire was completed by 579 nurses; EFA with PROMAX rotation revealed that the four‐factor model had the best fit (χ2 = 311.32; p = .001, root mean square error of approximation [RMSEA] = 0.000, 90% confidence interval [CI] = 0.000 – 0.010; comparative fit index [CFI] = 1), and the CFA showed a good fit: CFI = 0.932 and RMSEA = 0.093 (90% CI = 0.097 − 0.108). Cronbach’s α for each factor ranged from 0.817 (factor III) to 0.948 (factor II).

Linking Evidence to Action

EBP‐COQ Prof© is a valid, reliable, and easily administered questionnaire that measures the self‐perceived competency of registered nurses in EBP based on an updated and specific competency framework. It permits the independent evaluation of attitudes, knowledge, and skills related to EBP and of its utilization in hospital and primary care settings, allowing the monitoring of compliance with EBP.

Keywords: evidence‐based practice, validation, competency, nurse, instrument development, questionnaire

INTRODUCTION

The World Health Organization declared the promotion of evidence‐based practice (EBP) to be a priority field of action to increase the contribution of nurses to the health of citizens (World Health Organization, 2017). Various studies have demonstrated that EBP implementation improves the health outcomes and safety of patients by increasing the quality of their care (Coster et al., 2018; Melnyk, Gallagher‐Ford, Troseth et al., 2014). However, there can often be a long delay between the generation and application of research results, and the adoption of EBP remains a challenge for the nursing profession. A systematic review of 37 studies (N = 18,355 nurses) concluded that a large proportion of nurses are not prepared for EBP, regardless of their function, clinical setting, or country (Saunders & Vehviläinen‐Julkunen, 2015). It has also been reported that nurses are generally familiar with the concept and have favorable attitudes toward EBP but around three‐quarters of them want more knowledge and training on this approach (Melnyk et al., 2012) and do not feel competent to apply EBP in their work (Melnyk et al., 2018).

BACKGROUND

Over the past few years, interest has grown in the setting of professional standards for nursing, including the development of EBP‐related competencies (American Nurses Association, 2015). In 2015, a competency framework based on European Directive 2013/55/EU included the implementation of scientific findings in EBP as a central competency (European Federation of Nurses Associations, 2015). Various authors have reviewed the concept of EBP competency in nursing (Laibhen‐Parkes, 2014) and developed different EBP competency frameworks for nurses (Leung et al., 2016; Melnyk, Gallagher‐Ford, Long et al., 2014) or healthcare professionals in general (Albarqouni et al., 2018). Laibhen‐Parkes (2014) reported that efforts had been made to describe EBP competencies but the concept of EBP competency had not been clearly defined. The author proposed the following definition: “the ability to ask clinically relevant questions for the purposes of acquiring, appraising, applying, and assessing multiple sources of knowledge within the context of caring for a particular patient, group, or community.” As pointed out by Leung et al. (2016), this definition is very general: it does not cover all aspects of EBP, and it is less descriptive and less specific than the frameworks that have been developed for EBP competencies.

Leung et al. (2016) and Albarqouni et al. (2018) organized EBP competencies (knowledge and skills) according to a five‐step model (i.e., ask, acquire, appraise, apply, and assess; Straus et al., 2019), mainly oriented toward the development of educational programs. Melnyk, Gallagher‐Ford, Troseth, et al. (2014) proposed a set of practice competencies for registered and advanced practice nurses that followed a seven‐step model, with the addition of “cultivation of a spirit of clinical inquiry” as step 0, and “dissemination of practice outcome” as step 6 (Melnyk & Fineout‐Overholt, 2015).

Further research is needed to develop valid and reliable instruments for assessing these competencies (Melnyk, Gallagher‐Ford, Long, et al., 2014; Melnyk, Gallagher‐Ford, Troseth, et al., 2014). Various instruments have been proposed to evaluate EBP‐related dimensions in nursing, including barriers, attitudes, values, beliefs, knowledge, skills, and practice, either separately or in combination (Connor et al., 2017; Leung et al., 2014; Oude Rengerink et al., 2013; Shaneyfelt et al., 2006); however, most of them have important psychometric weaknesses. A systematic review of 24 instruments (Leung et al., 2014) for measuring evidence‐based knowledge, skills, and/or attitudes in nursing practice found only one with adequate validity, the Evidence‐Based Practice Questionnaire (Upton & Upton, 2006), which measures knowledge/skills, practice, and attitudes. Three new instruments have been validated since then: the Quick‐EBP‐VIK for measuring nurses’ value, implementation, and knowledge of EBP (Connor et al., 2017; Paul et al., 2016); the Nurse Manager EBP Competency Scale, aimed specifically at nurse managers (Shuman et al., 2018); and the Evidence‐based Nursing Practices Assessment Tool (EBNPAT; Leung et al., 2018), which uses clinical scenarios to evaluate EBP competency but has only demonstrated adequate content validity to date. However, these instruments gather only partial information on EBP steps (Oude Rengerink et al., 2013) or do not use updated EBP competency frameworks, and there remains a need for valid and reliable instruments to precisely measure the EBP competencies of registered nurses.

The objective of this study was to develop and validate a questionnaire to measure EBP competencies in registered nurses, based on the competency framework developed by Melnyk et al. (Melnyk, Gallagher‐Ford, Long, et al., 2014).

METHODS

Study Design

We developed and psychometrically validated the questionnaire in two stages, following the guidelines of the American Psychological Association (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 2014). The overall validation process of the Evidence Based Practice Competency Questionnaire, Professional version (EBP‐COQ Prof©) is depicted in Figure S1.

First Phase, Questionnaire Creation

Operational definition of the construct and item creation

The design of the questionnaire was based on the definition of competency as “the capacity of nurses to integrate cognitive, affective, and psychomotor abilities in nursing care provision” (Miller et al., 1988). It encompasses a wide range of observable knowledge, skills, attitudes, and behavior patterns, which together constitute the capacity to provide a specific professional service (Neary, 2002). We therefore consider that the development of competency in EBP requires nurses to advance their abilities in all domains of EBP competence required to implement the steps of the EBP process (i.e., knowledge, skills, attitudes/beliefs) and EBP implementation/behaviors (Saunders & Vehviläinen‐Julkunen, 2018).

For the first version, items were selected from questionnaires measuring similar constructs (Connor et al., 2017; Leung et al., 2014; Oude Rengerink et al., 2013; Ruzafa‐Martinez et al., 2013; Shaneyfelt et al., 2006), with the addition of new items on EBP attitudes, knowledge, skills, and utilization. Its design also took account of the framework of EBP competencies for practicing registered nurses proposed by Melnyk et al. (Melnyk, Gallagher‐Ford, Long, et al., 2014). Accordingly, the research team used focus groups to develop a conceptual map in which each competency was associated with the most appropriate EBP step, assigning at least one questionnaire item to each competency (Table S1). Items were written in a manner that allowed responses on a 5‐point Likert scale from 1 = completely disagree to 5 = completely agree.

Face and content validation by experts

An expert group analyzed the dimensionality of the first version of the questionnaire and the adequacy of its items, using the Delphi consensus technique (Falzarano & Pinto Zipp, 2013). The group comprised ten nursing professionals with expertise in EBP, seven with >10 years of experience in research and teaching, and three with >10 years of clinical experience. Each expert received the first version of the questionnaire by e‐mail along with a description of its objectives and dimensions. The experts assessed the adequacy of items, their relevance in the assigned dimension, and their comprehensibility, responding on a 5‐point Likert scale. They were also asked to propose improvements in the wording of items or other aspects when appropriate.

We conducted as many rounds as necessary until consensus was reached on all items. Items with a content validity ratio (CVR) > 0.70 were preserved in the final version of the instrument. We also calculated the content validity index (CVI) for the instrument as a whole, considering a value >0.80 to be adequate (Almanasreh, Moles, & Chen, 2019). Finally, the INFLESZ v1 package was used to determine the Flesch‐Szigriszt Index of Readability (Barrio‐Cantalejo et al., 2008).
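The content validity indices described above can be illustrated with a minimal sketch. The study does not state which formulas were applied, so the code assumes Lawshe's content validity ratio, CVR = (n_e − N/2) / (N/2), and an item‐level CVI defined as the proportion of experts who rate an item 4 or 5 on the 5‐point scale. The function names, the cut‐off for a "relevant" rating, and the expert ratings are all hypothetical.

```python
from statistics import mean

def content_validity_ratio(ratings, relevant_from=4):
    """Lawshe's CVR for one item: (n_e - N/2) / (N/2).

    ratings: one 1-5 rating per expert (assumed input format).
    relevant_from: lowest rating counted as 'relevant' (an assumption).
    """
    n_experts = len(ratings)
    n_relevant = sum(1 for r in ratings if r >= relevant_from)
    return (n_relevant - n_experts / 2) / (n_experts / 2)

def item_cvi(ratings, relevant_from=4):
    """Item-level CVI: proportion of experts rating the item as relevant."""
    return sum(1 for r in ratings if r >= relevant_from) / len(ratings)

def scale_cvi(all_ratings, relevant_from=4):
    """Scale-level CVI, taken here as the average of the item-level CVIs."""
    return mean(item_cvi(r, relevant_from) for r in all_ratings)

# Hypothetical ratings from 10 experts for two items (not study data).
item_a = [5, 5, 4, 4, 5, 4, 5, 5, 4, 3]  # 9 of 10 experts rate it relevant
item_b = [5, 3, 4, 2, 5, 4, 3, 5, 4, 3]  # 6 of 10 experts rate it relevant

cvr_a = content_validity_ratio(item_a)
cvr_b = content_validity_ratio(item_b)

# Retention rule from the text: keep items with CVR > 0.70.
retained = [name for name, cvr in (("item_a", cvr_a), ("item_b", cvr_b)) if cvr > 0.70]
```

With these made‐up ratings, only `item_a` passes the CVR > 0.70 rule stated in the text.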

Cognitive piloting

Cognitive piloting was conducted with 18 nurses who had 5–30 years of professional experience in hospital and primary care settings of the public health service in the region of Murcia (Spain); the aim was to assess the comprehensibility, acceptability, and completion time of the questionnaire.

Second phase, psychometric evaluation of the questionnaire

An observational, cross‐sectional, and multicenter study was conducted between February and November 2018.

Participants

We selected a non‐probabilistic sample of nursing professionals in the public health services of the autonomous communities of Andalusia and Murcia (Spain). The inclusion criterion was actively working with a minimum experience of one year providing direct care to patients in hospital or primary care settings. Nurses who were managers or from central services were excluded. The recommended sample size for validation studies is between 200 and 400 participants (Conway & Huffcutt, 2003).

Variables and Measurement Instrument

A data collection booklet was used for anonymous, self‐administered completion. It included sections on (a) sociodemographic variables: age, sex; (b) work variables: professional experience, time in this work unit, care setting (hospital/primary care), unit, work shift, highest qualification, and hours of EBP training; (c) version 3 of the 35‐item Evidence‐Based Practice Evaluation Competency Questionnaire for Professionals (EBP‐COQ Prof©; Table S2); and (d) the Evidence‐Based Practice Questionnaire (EBPQ‐19; De Pedro‐Gomez et al., 2009), validated in our setting and used to analyze criterion validity, containing 19 items grouped into three dimensions: attitudes, skills/knowledge, and practice in EBP.

Procedure

Questionnaires were delivered to the nursing managers of six health areas, containing a total of six hospitals and 64 health centers, in the autonomous communities of Murcia and Andalusia for distribution to the nurses in their areas. The professionals were informed of the study objectives and invited to participate as volunteers. To determine the time stability of the questionnaire (test–retest), it was administered twice to 18 individuals with a between‐test interval of 15 days. All questionnaires were identified by codes alone to preserve the anonymity of participants.

Data Analysis

We first performed a descriptive analysis of item results, calculating means, standard deviations (SDs), asymmetry, and kurtosis. Item discrimination was evaluated by corrected item‐total correlation (Carretero‐Dios & Pérez, 2005). Exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) were then performed to analyze the degree to which scale items conformed to the established construct (Elosua‐Oliden, 2003), using a structural equation model (SEM). In brief, the sample was randomly divided into two subsamples: one was studied by EFA to identify the factorial structure underlying the items, using various models; the other was used to confirm this structure using CFA, which imposes greater restrictions (Brown, 2006).
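Two of the steps in this paragraph lend themselves to a short sketch: the corrected item‐total correlation (each item correlated with the sum of the remaining items) and the random division of the sample into two halves. The code below is only an illustration under stated assumptions; the function names, the data layout (one list of item scores per respondent), and the seed are hypothetical, and the study itself used SPSS, FACTOR, and Mplus rather than Python.

```python
import random
from math import sqrt

def pearson(x, y):
    """Pearson correlation of two equal-length sequences (non-zero variance assumed)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def corrected_item_total(responses, item):
    """Correlate one item with the total of all OTHER items.

    responses: list of respondents, each a list of item scores.
    item: zero-based index of the item being evaluated.
    """
    item_scores = [row[item] for row in responses]
    rest_scores = [sum(row) - row[item] for row in responses]
    return pearson(item_scores, rest_scores)

def split_sample(ids, seed=0):
    """Shuffle respondent ids and cut them into two halves (EFA half, CFA half)."""
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)
    half = (len(shuffled) + 1) // 2  # the first half gets the extra case if odd
    return shuffled[:half], shuffled[half:]

# Toy data in which every item rises together, so the correlation is 1.
toy_responses = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
r_item0 = corrected_item_total(toy_responses, 0)

# Splitting 579 respondent ids yields subsamples of 290 and 289.
efa_ids, cfa_ids = split_sample(range(579))
```

Removing the item from its own total avoids inflating the correlation, which is why the "corrected" version is preferred for short scales.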

For the EFA, factor extraction used the unweighted least squares (ULS) method with PROMAX rotation (oblique rotation), determining the number of factors by the optimal implementation of parallel analysis (Timmerman & Lorenzo‐Seva, 2011). This analysis was performed on a matrix of 290 participants (half of the sample) using the FACTOR package (Lorenzo‐Seva & Ferrando, 2006). For the CFA, we used the weighted least squares means and variance adjusted (WLSMV) method, indicated for categorically ordered data (Muthén & Muthén, 2010). This analysis was performed on a matrix of 289 participants (the other half of the sample) using Mplus 7 (Muthén & Muthén, 2010). The fit of data to the models was assessed using χ2/df, comparative fit index (CFI), Tucker–Lewis index (TLI), and root mean square error of approximation (RMSEA). The fit was considered adequate with χ2/df < 5, CFI > 0.90, TLI > 0.90, and RMSEA < 0.08 (Kline, 2011).
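The adequacy thresholds listed above can be written as a simple rule check. This is a minimal sketch: the function name and the input values are hypothetical and do not reproduce the study's results.

```python
def check_fit(chi2, df, cfi, tli, rmsea):
    """Compare fit indices against the cut-offs stated in the text.

    Returns a dict mapping each criterion to True (met) or False (not met).
    """
    return {
        "chi2/df < 5": chi2 / df < 5,
        "CFI > 0.90": cfi > 0.90,
        "TLI > 0.90": tli > 0.90,
        "RMSEA < 0.08": rmsea < 0.08,
    }

# Hypothetical index values for two models (illustrative only).
model_ok = check_fit(chi2=900.0, df=400, cfi=0.95, tli=0.94, rmsea=0.06)
model_mixed = check_fit(chi2=900.0, df=400, cfi=0.95, tli=0.94, rmsea=0.09)

all_met = all(model_ok.values())
unmet = [name for name, passed in model_mixed.items() if not passed]
```

Returning each criterion separately, rather than a single pass/fail flag, makes it visible when a model meets some cut‐offs but not others.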

The selection of items was based on their descriptive statistics, their factorial load in their dimension (Lloret‐Segura et al., 2014), and confirmation that the content of the dimensions was represented by the final items. Reliability was analyzed as internal consistency using Cronbach’s alpha (α) for each dimension of the scale, and the time stability of item scores was assessed with the intraclass correlation coefficient (ICC), interpreted in accordance with Landis and Koch (1977). External validity was evaluated according to (a) criterion validity, obtained from the correlations between the scores of each dimension in the EBP‐COQ Prof© and EBPQ‐19 scales; and (b) predictive validity, based on the hypothesis that professionals with more EBP training would obtain higher scale dimension scores, assessed using one‐factor ANOVA of each scale dimension against the variable “hours of training in EBP.” SPSS 22.0, Mplus 7.0 (Muthén & Muthén, 2010), and FACTOR (Lorenzo‐Seva & Ferrando, 2006) packages were used for the statistical analyses.
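For readers unfamiliar with the internal consistency statistic named above, Cronbach's α for a dimension with k items is α = k/(k − 1) × (1 − Σ item variances / variance of the summed score). The sketch below implements that standard formula; the function name, data layout, and toy responses are hypothetical, and the study computed α with statistical packages rather than this code.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for one dimension.

    responses: list of respondents, each a list with the scores of the
    k items that belong to the dimension (assumed input format).
    """
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of the summed score across respondents.
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)

# Toy data: three items that move in lockstep give the maximum value of 1.
consistent = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
alpha_consistent = cronbach_alpha(consistent)

# Toy data with some disagreement between items gives a lower value.
noisy = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 3, 5], [5, 5, 4]]
alpha_noisy = cronbach_alpha(noisy)
```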

Ethical Considerations

The study was approved by the Ethics Committee of the University of Murcia (registry no. 1404).

RESULTS

First Phase

The initial version of EBP‐COQ Prof© (version 0) contained 50 items grouped into four dimensions (attitudes, knowledge, skills, and utilization). The experts achieved consensus on all items after two validation rounds. After the first expert validation round, CVR results showed that 74% (n = 37) of items were acceptable, whereas items with CVR ≤ 0.70 (n = 13) were eliminated. After considering the suggestions of experts, minor modifications were made in 34 items, obtaining version 1. In the second expert validation round, minor modifications were made to 21 of the items and two were eliminated, resulting in a 35‐item version 2 of the questionnaire. The I‐CVI for version 2 was 0.86 (Figure S1).

After cognitive piloting, four of the items were rewritten to improve their comprehensibility. The time taken to complete the questionnaire was 8–11 min. Version 3 of the questionnaire comprised 35 items that covered all of the competencies proposed by Melnyk et al. (Melnyk, Gallagher‐Ford, Long, et al., 2014; Table S1). The Flesch‐Szigriszt Index was 55.18, indicating normal difficulty.

Second Phase

Sample description

The questionnaire was completed by 579 nurses from the autonomous communities of Andalusia (69.9%, n = 405) and Murcia (28.5%, n = 165); 76% (n = 440) were females; the mean age was 43 years (SD = 9.2) and mean professional experience was 20 years (SD = 9.7). The main nursing activity of 69.8% of the nurses (n = 404) was in a hospital setting.

Item Analysis

Medium–high scores were obtained for all items, and their distributions approximated normality (asymmetry and kurtosis values between −1.5 and 1.5). Corrected item‐total correlation was >0.30 for all items except for no. 28, although the value was very close to 0.30 (Table S3).

Internal Structure of the Scale

The Kaiser–Meyer–Olkin (KMO) test of sampling adequacy was 0.89 and Bartlett’s statistic was 6,336.0 (p < .001). The EFA obtained the best fit for the four‐factor model (Table 1). All items had factorial loads >0.30 in the rotated matrix (Table S4). Factor I was formed by items 1–8, corresponding to attitudes toward EBP; factor II by items 9–19, corresponding to EBP knowledge; factor III by items 20–23, 27, and 28, corresponding to EBP skills; and factor IV by items 24–26 and 29–35, corresponding to EBP utilization.

Table 1.

Goodness‐of‐fit Indexes for the 1‐Factor, 2‐Factor, 3‐Factor, and 4‐Factor EFA Models With PROMAX Rotation of the EBP‐COQ Prof©

| Models | χ2 | df | p | RMSEA (90% CI) | TLI | CFI |
|---|---|---|---|---|---|---|
| 1 factor | 3,012.83 | 560 | <.001 | 0.115 (0.101 – 0.123) | 0.862 | 0.871 |
| 2 factors | 1,301.65 | 526 | <.001 | 0.072 (0.500 – 0.800) | 0.946 | 0.952 |
| 3 factors | 568.49 | 493 | .001 | 0.039 (0.010 – 0.050) | 0.984 | 0.987 |
| 4 factors | 311.32 | 461 | .001 | 0.000 (0.000 – 0.010) | 1.000 | 1.000 |

χ2: chi‐square test; df: degrees of freedom; RMSEA: root mean square error of approximation; CI: confidence interval; TLI: Tucker–Lewis index; CFI: comparative fit index.

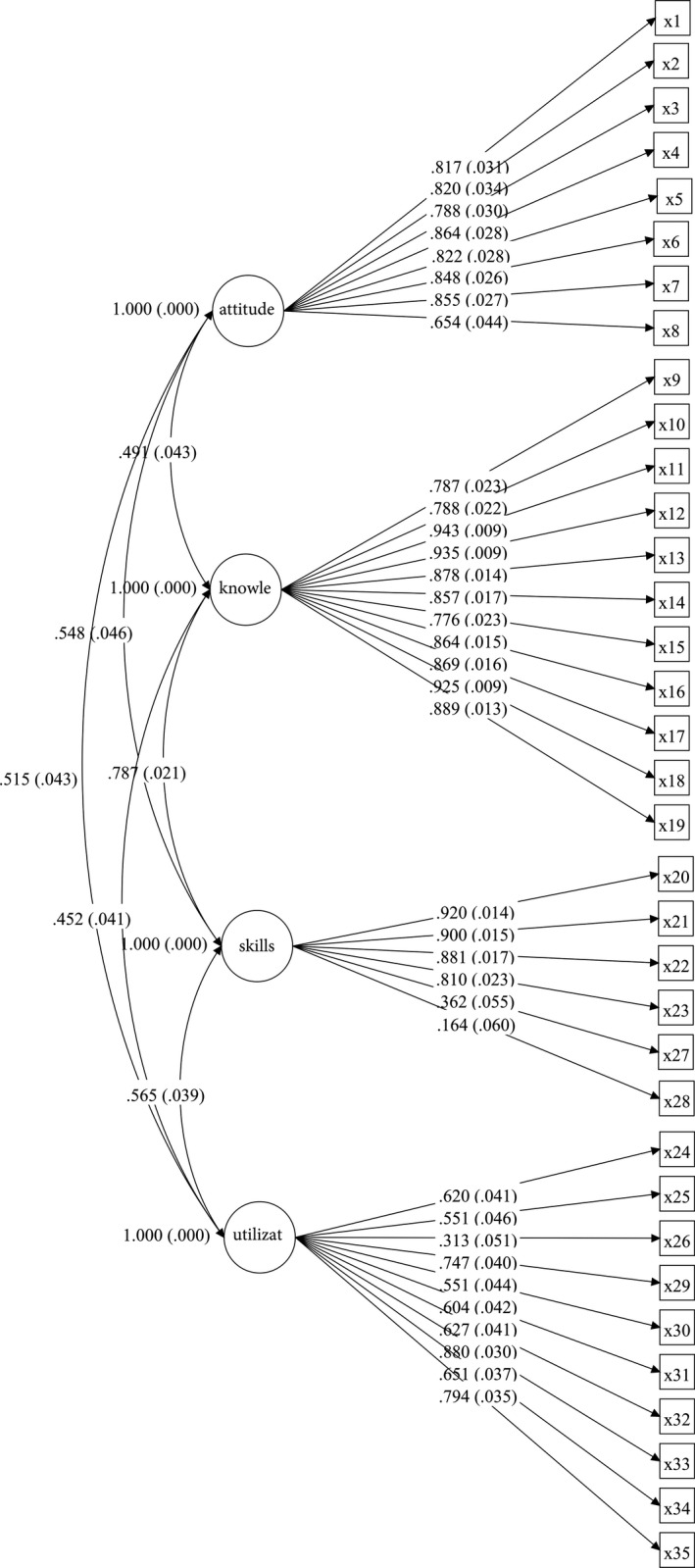

The final model of four oblique factors with 35 items was then tested, obtaining CFA fit index values of χ2 = 1,935.92 (df = 554; p < .001), χ2/df = 3.49, CFI = 0.932, TLI = 0.927, and RMSEA = 0.093 (90% CI = 0.097 − 0.108). Factorial loads ranged between 0.164 for item 28 and 0.94 for item 11, as shown in the path diagram (Figure 1). According to these results, the fit of data to the model can be considered adequate.

Figure 1.

Confirmatory factor analysis of the EBP‐COQ Prof©.

Reliability Analysis

Internal consistency (Cronbach’s α) for each scale dimension was 0.888 for factor I (attitude toward EBP), 0.948 for factor II (EBP knowledge), 0.817 for factor III (EBP skills), and 0.840 for factor IV (EBP utilization). ICCs showed high concordance between test and retest scores: factor I (attitude toward EBP) = 0.840 (p < .001) 95% CI (0.574–0.940); factor II (EBP knowledge) = 0.966 (p < .001) 95% CI (0.908–0.987); factor III (EBP skills) = 0.815 (p < .001) 95% CI (0.505–0.931); and factor IV (EBP utilization) = 0.876 (p < .001) 95% CI (0.669–0.954).

Evidence of Validity

Correlations obtained between EBP‐COQ Prof© and EBPQ‐19 dimensions were statistically significant (p < .01), with coefficients ranging from 0.295 to 0.711; the coefficients were higher between dimensions measuring the same content (Table 2). One‐factor ANOVA revealed statistically significant differences in all EBP‐COQ Prof© dimensions according to the hours of EBP training undergone by the professionals; the scores were higher in those who had undergone >40 hr of training (Table 3).

Table 2.

Bivariate Correlations Between Dimensions of EBP‐COQ Prof© and EBPQ‐19 (N = 384)

| EBP‐COQ Prof© dimension | EBPQ‐19: Attitude | EBPQ‐19: Knowledge and skills | EBPQ‐19: Practice | EBPQ‐19: Total |
|---|---|---|---|---|
| Attitude | .491** | .391** | .314** | .490** |
| Knowledge | .346** | .688** | .507** | .649** |
| Skills | .318** | .582** | .470** | .579** |
| Utilization | .295** | .411** | .476** | .505** |
| Total | .449** | .675** | .568** | .711** |

** p < .01.

Table 3.

Means and Standard Deviations of Scores for Each EBP‐COQ Prof© Dimension as a Function of Hours of EBP Training (N = 376)

| EBP‐COQ Prof© dimension | Noneᵃ (n = 85): M | SD | <40 hᵇ (n = 106): M | SD | 40–150 hᶜ (n = 99): M | SD | >150 hᵈ (n = 86): M | SD | p |
|---|---|---|---|---|---|---|---|---|---|
| Attitude | 4.11ᶜ | 0.56 | 4.29 | 0.47 | 4.38 | 0.58 | 4.33 | 0.57 | .008 |
| Knowledge | 2.53ᵇᶜᵈ | 0.79 | 2.98ᵃᶜᵈ | 0.74 | 3.41ᵃᵇ | 0.69 | 3.51ᵃᵇ | 0.78 | <.001 |
| Skills | 3.20ᶜᵈ | 0.67 | 3.40ᶜᵈ | 0.68 | 3.72ᵃᵇ | 0.61 | 3.85ᵃᵇ | 0.53 | <.001 |
| Utilization | 3.00ᶜᵈ | 0.65 | 3.19 | 0.60 | 3.29ᵃ | 0.59 | 3.34ᵃ | 0.65 | .002 |
| Total | 3.21ᵇᶜᵈ | 0.49 | 3.47ᵃᶜᵈ | 0.50 | 3.70ᵃᵇ | 0.49 | 3.76ᵃᵇ | 0.48 | <.001 |

M: mean; SD: standard deviation.

Superscript letters (ᵃ, ᵇ, ᶜ, ᵈ) indicate the “hours of training” category with which there was a statistically significant difference (p < .05 in post hoc analysis).

DISCUSSION

EBP‐COQ Prof© is an easily and rapidly administered 35‐item questionnaire validated for use in registered nurses. It evaluates the degree of self‐perceived EBP competency in relation to attitudes, knowledge, skills, and utilization. High mean scores (range 1–5) signify a high level of competency in each dimension and globally.
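The scoring described above can be sketched as follows, using the item‐to‐factor assignment reported in the Results (items 1–8, 9–19, 20–23 plus 27 and 28, and 24–26 plus 29–35). Several points are assumptions rather than documented scoring rules: that each dimension score is the plain mean of its items, that no item needs reverse‐coding, and that the global score is the mean of the four dimension means (a choice that is consistent with the group means shown in Table 3). The names `DIMENSIONS` and `score_questionnaire` are hypothetical.

```python
from statistics import mean

# Item numbers per dimension, as reported for the four-factor solution.
DIMENSIONS = {
    "attitudes": list(range(1, 9)),                      # items 1-8
    "knowledge": list(range(9, 20)),                     # items 9-19
    "skills": [20, 21, 22, 23, 27, 28],
    "utilization": [24, 25, 26] + list(range(29, 36)),   # items 24-26, 29-35
}

def score_questionnaire(answers):
    """Return the mean score (1-5) for each dimension and a global score.

    answers: dict mapping item number (1-35) to a Likert response (1-5).
    """
    if sorted(answers) != list(range(1, 36)):
        raise ValueError("answers must contain exactly items 1 to 35")
    if any(not 1 <= value <= 5 for value in answers.values()):
        raise ValueError("responses must lie between 1 and 5")
    scores = {
        name: mean(answers[i] for i in items)
        for name, items in DIMENSIONS.items()
    }
    # Assumption: global score = average of the four dimension means.
    scores["total"] = mean(scores[name] for name in DIMENSIONS)
    return scores

# Hypothetical respondent: 5 on every attitude item, 3 on everything else.
example_answers = {i: (5 if i <= 8 else 3) for i in range(1, 36)}
example_scores = score_questionnaire(example_answers)
```

Keeping the four dimension scores separate from the global score mirrors the independent evaluation of attitudes, knowledge, skills, and utilization that the questionnaire is designed to allow.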

Construction of the questionnaire was based on a complete and exhaustive definition of competency (Miller et al., 1988) and EBP steps (Melnyk & Fineout‐Overholt, 2015). Items were selected and worded to closely reflect clinical realities in hospital and primary care settings and to gather all operative manifestations in the EBP competency framework for practicing registered nurses (Melnyk, Gallagher‐Ford, Long, et al., 2014). The distribution of the four dimensions of the competency construct follows the time sequence of EBP. Thus, the attitude dimension shows a greater presence in step 0 (cultivates a spirit of inquiry), crucial to the beginning of the process. The knowledge dimension is mainly grouped into steps 1–3 (PICO question, search for evidence, and critical appraisal) as in other instruments that specifically measure this dimension (Tilson et al., 2011). Acquisition of these competencies is essential for the subsequent development of relevant skills, cross‐sectionally distributed throughout all EBP steps. Finally, the utilization dimension mainly concentrates on steps related to EBP application and evaluation.

Confirmatory and exploratory factorial analyses demonstrated the adequate fit of the four‐factor model and confirmed the internal structure of the four dimensions of the competency construct. Hence, this questionnaire combines in a single instrument the dimensions of EBP attitudes, knowledge, skills, and also utilization, which is considered especially important (Saunders & Vehviläinen‐Julkunen, 2018) but is not usually included in EBP measurement instruments (Leung et al., 2014).

The questionnaire was found to have acceptable time stability and internal consistency, with Cronbach’s α > 0.8 for all dimensions, considered adequate when the objective is diagnosis and classification (Carretero‐Dios & Pérez, 2007). In contrast, the original EBPQ (Upton et al., 2014) and its Spanish adaptation (EBPQ‐19; Sese‐Abad et al., 2014) have demonstrated poor reliability in the attitude dimension. External evidence of validity was also obtained for the questionnaire, which showed significant correlations with EBPQ‐19 dimensions and, as observed in other studies (Fernandez‐Dominguez et al., 2017; Ramos‐Morcillo et al., 2015), a positive relationship between questionnaire scores and hours of EBP training.

With respect to its dimensionality and implementation, it should be clarified that the attitude, knowledge, and skills dimensions represent potential rather than actual behaviors, given that the implementation of competencies depends on the circumstances and setting (Caprara & Cervone, 2003). However, inclusion of the utilization dimension means that EBP‐COQ Prof© is also suitable for assessing real behaviors and can be useful to evaluate the influence of factors related to individuals or the practice setting on the EBP competency of nurses.

The advantages of EBP‐COQ Prof© over existing questionnaires include the improvement of methodological weaknesses related to an inadequate description of validation processes or of the metric properties or dimensions considered (Connor et al., 2017; Leung et al., 2014, 2018; Paul et al., 2016). In addition, the development of items was based on an updated EBP competency framework for nurses (Melnyk, Gallagher‐Ford, Long, et al., 2014). The demonstrated comprehensibility, acceptability, and completion time of the questionnaire make it easy to apply for the evaluation of nurses’ EBP competency, facilitating the study of associated individual and institutional factors. It can also be useful to evaluate the effect of EBP training programs and of organizational interventions designed to improve EBP competency.

In addition, the seven‐step theoretical framework used to develop EBP‐COQ Prof© facilitates identification of the stage in which nurses perceive themselves as less competent. This allows interventions to be adapted to the competency level of specific populations and to be targeted where most needed.

Limitations

The factorial load of item 28 “In clinical decision‐making I consider my professional experience” was low in the CFA, possibly due to the insufficient development of EBP in the daily practice of nurses (Saunders et al., 2019). Nevertheless, this item was maintained so that one of the basic postulates of EBP was not missing (Sackett et al., 1996).

There is currently debate around the relationship of scores obtained using a self‐perception questionnaire such as the EBP‐COQ Prof© with the results of more objective instruments (Hagedorn Wonder et al., 2017; McCluskey & Lovarini, 2005; Snibsøer et al., 2018). Further research is warranted to compare the EBP‐COQ Prof© with objective tests, to determine its functioning in different clinical settings and with other professional profiles, and to measure its sensitivity to change after EBP interventions.

CONCLUSIONS

The EBP‐COQ Prof was constructed under robust theoretical postulates and demonstrated adequate internal consistency and good reliability. It allows evaluation of the self‐perceived competency of registered nurses in EBP and yields information related to four dimensions: attitudes, knowledge, skills, and utilization.

Linking Evidence to Action.

It is especially important to precisely determine the utilization of EBP by nurses.

EBP‐COQ Prof© is a valid and reliable questionnaire with a robust competency framework. It is useful to determine both potential and actual behaviors by assessing EBP utilization in a clinical setting as well as EBP‐related attitudes, knowledge, and skills.

EBP‐COQ Prof© can also be useful to study individual factors related to the EBP competency of nurses and the influence of the practice setting.

Acknowledgement

We thank the experts and all the participating professionals for the time they selflessly dedicated to this investigation.

Supporting information

Figure S1. Process of EBP‐COQ Prof© development

Table S1. Relationship among EBP steps, EBP competencies, and EBP‐COQ Prof items.

Table S2. Questionnaire to Evaluate the Competency in Evidence‐Based Practice of Registered Nurses (EBP‐COQ Prof)

Table S3. Descriptive statistics and corrected item‐total correlation of EBP‐COQ Prof.

Table S4. Factorial loads of the four EFA models.

This study was co‐funded by the Erasmus+Programme of the European Union under grant number 2018‐1‐ES01‐KA203‐050216.

“European Commission support for the production of this publication does not constitute an endorsement of its contents, which reflect the views only of the authors. The Commission cannot be held responsible for any use which may be made of the information contained therein.”

References

- Albarqouni, L., Hoffmann, T., Straus, S., Olsen, N. R., Young, T., Ilic, D., Shaneyfelt, T., Haynes, R. B., Guyatt, G., & Glasziou, P. (2018). Core competencies in evidence‐based practice for health professionals. JAMA Network Open, 1(2), e180281. https://doi.org/10.1001/jamanetworkopen.2018.0281

- Almanasreh, E., Moles, R., & Chen, T. F. (2019). Evaluation of methods used for estimating content validity. Research in Social and Administrative Pharmacy, 15(2), 214–221. https://doi.org/10.1016/J.SAPHARM.2018.03.066

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (2014). The standards for educational and psychological testing. American Educational Research Association.

- American Nurses Association. (2015). Nursing scope and standards of practice (3rd ed.). www.Nursingworld.org

- Barrio‐Cantalejo, I. M., Simón‐Lorda, P., Melguizo, M., Escalona, I., Marijuán, M. I., & Hernando, P. (2008). Validation of the INFLESZ scale to evaluate readability of texts aimed at the patient. Anales del Sistema Sanitario de Navarra, 31(2), 135–152. https://doi.org/10.4321/S1137-66272008000300004

- Brown, T. (2006). Confirmatory factor analysis for applied research. Guilford Press.

- Caprara, G. V., & Cervone, D. (2003). A conception of personality for a psychology of human strengths: Personality as an agentic, self‐regulating system. In L. G. Aspinwall & U. M. Staudinger (Eds.), A psychology of human strengths (pp. 61–74). American Psychological Association.

- Carretero‐Dios, H. , & Pérez, C. (2005). Standards for the development and review of instrumental studies [Normas para el desarrollo y revisión de estudios instrumentales]. International Journal of Clinical and Health Psychology, 5(3), 521–551. [Google Scholar]

- Carretero‐Dios, H. , & Pérez, C. (2007). Standards for the development and review of instrumental studies: Considerations on test selection in psychological research [Normas para el desarrollo y revisión de estudios instrumentales: consideraciones sobre la selección de tests en la investigación psicológica]. International Journal of Clinical and Health Psychology, 7(3), 863–882. [Google Scholar]

- Connor, L. , Paul, F. , McCabe, M. , & Ziniel, S. (2017). Measuring nurses’ value, implementation, and knowledge of evidence‐based practice: Further psychometric testing of the quick‐EBP‐VIK survey. Worldviews on Evidence‐Based Nursing, 14(1), 10–21. 10.1111/wvn.12190 [DOI] [PubMed] [Google Scholar]

- Conway, J. M. , & Huffcutt, A. I. (2003). A review and evaluation of exploratory factor analysis practices in organizational research. Organizational Research Methods, 6(2), 147–168. 10.1177/1094428103251541 [DOI] [Google Scholar]

- Coster, S. , Watkins, M. , & Norman, I. (2018). What is the impact of professional nursing on patients’ outcomes globally? An overview of research evidence. International Journal of Nursing Studies, 78, 76–83. 10.1016/J.IJNURSTU.2017.10.009 [DOI] [PubMed] [Google Scholar]

- De Pedro‐Gomez, J., Morales‐Asencio, J. M., Sesé Abad, A., Bennasar Veny, M., Ruiz Roman, M. J., & Muñoz Ronda, F. (2009). Validation of the Spanish version of the Evidence Based Practice Questionnaire in Nurses. Revista Española de Salud Pública, 83(4), 577–586.

- Elosua‐Oliden, P. (2003). About the validity of the tests [Sobre la validez de los test]. Psicothema, 15(2), 315–321.

- European Federation of Nurses Associations. (2015). EFN competency framework. EFN guideline to implement article 31 into national nurses’ education programmes. Brussels, Belgium. http://www.efnweb.be/?page_id=6897

- Falzarano, M., & Pinto Zipp, G. (2013). Seeking consensus through the use of the Delphi technique in health sciences research. Journal of Allied Health, 42(2), 99–105.

- Fernandez‐Dominguez, J. C., de Pedro‐Gomez, J. E., Morales‐Asencio, J. M., Bennasar‐Veny, M., Sastre‐Fullana, P., & Sese‐Abad, A. (2017). Health Sciences‐Evidence Based Practice questionnaire (HS‐EBP) for measuring transprofessional evidence‐based practice: Creation, development and psychometric validation. PLoS One, 12(5), e0177172. 10.1371/journal.pone.0177172

- Hagedorn Wonder, A., McNelis, A. M., Spurlock, D., Ironside, P. M., Lancaster, S., Davis, C. R., Gainey, M., & Verwers, N. (2017). Comparison of nurses’ self‐reported and objectively measured evidence‐based practice knowledge. The Journal of Continuing Education in Nursing, 48(2), 65–70. 10.3928/00220124-20170119-06

- Kline, R. B. (2011). Principles and practice of structural equation modeling (3rd ed.). Guilford Press.

- Laibhen‐Parkes, N. (2014). Evidence‐based practice competence: A concept analysis. International Journal of Nursing Knowledge, 25(3), 173–182. 10.1111/2047-3095.12035

- Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33(1), 159–174.

- Leung, K., Trevena, L., & Waters, D. (2014). Systematic review of instruments for measuring nurses’ knowledge, skills and attitudes for evidence‐based practice. Journal of Advanced Nursing, 70(10), 2181–2195. 10.1111/jan.12454

- Leung, K., Trevena, L., & Waters, D. (2016). Development of a competency framework for evidence‐based practice in nursing. Nurse Education Today, 39, 189–196. 10.1016/j.nedt.2016.01.026

- Leung, K., Trevena, L., & Waters, D. (2018). Content validation of the evidence‐based nursing practice assessment tool. Nurse Researcher, 26(1), 33–40. 10.7748/nr.2018.e1544

- Lloret‐Segura, S., Ferreres‐Traver, A., Hernández‐Baeza, A., & Tomás‐Marco, I. (2014). Exploratory item factor analysis: A practical guide revised and updated. Anales de Psicología, 30(3), 1151–1169. 10.6018/analesps.30.3.199361

- Lorenzo‐Seva, U., & Ferrando, P. (2006). FACTOR: A computer program to fit the exploratory factor analysis model. Behavior Research Methods, 38(1), 88–91.

- McCluskey, A., & Lovarini, M. (2005). Providing education on evidence‐based practice improved knowledge but did not change behaviour: A before and after study. BMC Medical Education, 5, 40. 10.1186/1472-6920-5-40

- Melnyk, B. M., & Fineout‐Overholt, E. (2015). Evidence‐based practice in nursing and healthcare: A guide to best practice (3rd ed.). Wolters Kluwer.

- Melnyk, B. M., Fineout‐Overholt, E., Gallagher‐Ford, L., & Kaplan, L. (2012). The state of evidence‐based practice in US nurses. JONA: The Journal of Nursing Administration, 42(9), 410–417. 10.1097/NNA.0b013e3182664e0a

- Melnyk, B. M., Gallagher‐Ford, L., Long, L. E., & Fineout‐Overholt, E. (2014). The establishment of evidence‐based practice competencies for practicing registered nurses and advanced practice nurses in real‐world clinical settings: Proficiencies to improve healthcare quality, reliability, patient outcomes, and costs. Worldviews on Evidence‐Based Nursing, 11(1), 5–15. 10.1111/wvn.12021

- Melnyk, B. M., Gallagher‐Ford, L., Troseth, M., & Wyngarden, K. (2014). A national survey & forum for nurse executives: Leveraging evidence‐based practice to enhance healthcare quality, reliability, patient outcomes and cost containment. https://www.elsevier.com/__data/assets/pdf_file/0019/184051/A‐National‐Survey‐Forum‐for‐Nurse‐Executives_Final‐APRIL2016.pdf

- Melnyk, B. M., Gallagher‐Ford, L., Zellefrow, C., Tucker, S., Bindu, T., Sinnott, L., & Tan, A. (2018). The first U.S. study on nurses’ evidence‐based practice competencies indicates major deficits that threaten healthcare quality, safety, and patient outcomes. Worldviews on Evidence‐Based Nursing, 15(1), 16–25. 10.1111/wvn.12269

- Miller, C., Hoggan, J., Pringle, S., & West, C. (1988). Credit where credit’s due. The report of the accreditation of work‐based learning project. Scotvec.

- Muthén, L., & Muthén, B. (2010). Statistical analysis with latent variables user’s guide Mplus. Muthén & Muthén.

- Neary, M. (2002). Curriculum studies in post‐compulsory and adult education: A study guide for teachers and student teachers. OUP Oxford.

- Oude Rengerink, K., Zwolsman, S. E., Ubbink, D. T., Mol, B. W. J., van Dijk, N., & Vermeulen, H. (2013). Tools to assess evidence‐based practice behaviour among healthcare professionals. Evidence Based Medicine, 18(4), 129–138. 10.1136/eb-2012-100969

- Paul, F., Connor, L., McCabe, M., & Ziniel, S. (2016). The development and content validity testing of the Quick‐EBP‐VIK: A survey instrument measuring nurses’ values, knowledge and implementation of evidence‐based practice. Journal of Nursing Education and Practice, 6(5), 118. 10.5430/jnep.v6n5p118

- Ramos‐Morcillo, A. J., Fernandez‐Salazar, S., Ruzafa‐Martinez, M., & Del‐Pino‐Casado, R. (2015). Effectiveness of a brief, basic evidence‐based practice course for clinical nurses. Worldviews on Evidence‐Based Nursing, 12(4), 199–207. 10.1111/wvn.12103

- Ruzafa‐Martinez, M., Lopez‐Iborra, L., Moreno‐Casbas, T., & Madrigal‐Torres, M. (2013). Development and validation of the competence in evidence based practice questionnaire (EBP‐COQ) among nursing students. BMC Medical Education, 13, 19. 10.1186/1472-6920-13-19

- Sackett, D. L., Rosenberg, W. M., Gray, J. A., Haynes, R. B., & Richardson, W. S. (1996). Evidence based medicine: What it is and what it isn’t. BMJ, 312(7023), 71–72. 10.1136/bmj.312.7023.71

- Saunders, H., Gallagher‐Ford, L., Kvist, T., & Vehviläinen‐Julkunen, K. (2019). Practicing healthcare professionals’ evidence‐based practice competencies: An overview of systematic reviews. Worldviews on Evidence‐Based Nursing, 16(3), 176–185. 10.1111/wvn.12363

- Saunders, H., & Vehviläinen‐Julkunen, K. (2015). The state of readiness for evidence‐based practice among nurses: An integrative review. International Journal of Nursing Studies, 56, 128–140. 10.1016/j.ijnurstu.2015.10.018

- Saunders, H., & Vehviläinen‐Julkunen, K. (2018). Key considerations for selecting instruments when evaluating healthcare professionals’ evidence‐based practice competencies: A discussion paper. Journal of Advanced Nursing, 74(10), 2301–2311. 10.1111/jan.13802

- Sese‐Abad, A., De Pedro‐Gomez, J., Bennasar‐Veny, M., Sastre, P., Fernandez‐Dominguez, J. C., & Morales‐Asencio, J. M. (2014). A multisample model validation of the Evidence‐Based Practice Questionnaire. Research in Nursing & Health, 37(5), 437–446. 10.1002/nur.21609

- Shaneyfelt, T., Baum, K. D., Bell, D., Feldstein, D., Houston, T. K., Kaatz, S., Whelan, C., & Green, M. (2006). Instruments for evaluating education in evidence‐based practice. JAMA, 296(9), 1116. 10.1001/jama.296.9.1116

- Shuman, C. J., Ploutz‐Snyder, R. J., & Titler, M. G. (2018). Development and testing of the nurse manager EBP Competency Scale. Western Journal of Nursing Research, 40(2), 175–190. 10.1177/0193945917728249

- Snibsøer, A. K., Ciliska, D., Yost, J., Graverholt, B., Nortvedt, M. W., Riise, T., & Espehaug, B. (2018). Self‐reported and objectively assessed knowledge of evidence‐based practice terminology among healthcare students: A cross‐sectional study. PLoS One, 13(7), e0200313. 10.1371/journal.pone.0200313

- Straus, S. E., Glasziou, P., Richardson, W. S., Haynes, R. B., Pattani, R., & Veroniki, A. A. (2019). How to practice and teach EBM (5th ed.). Elsevier.

- Tilson, J. K., Kaplan, S. L., Harris, J. L., Hutchinson, A., Ilic, D., Niederman, R., Potomkova, J., & Zwolsman, S. E. (2011). Sicily statement on classification and development of evidence‐based practice learning assessment tools. BMC Medical Education, 11(1), 78. 10.1186/1472-6920-11-78

- Timmerman, M. E., & Lorenzo‐Seva, U. (2011). Dimensionality assessment of ordered polytomous items with parallel analysis. Psychological Methods, 16(2), 209–220. 10.1037/a0023353

- Upton, D., & Upton, P. (2006). Development of an evidence‐based practice questionnaire for nurses. Journal of Advanced Nursing, 53(4), 454–458. 10.1111/j.1365-2648.2006.03739.x

- Upton, D., Upton, P., & Scurlock‐Evans, L. (2014). The reach, transferability, and impact of the evidence‐based practice questionnaire: A methodological and narrative literature review. Worldviews on Evidence‐Based Nursing, 11(1), 46–54. 10.1111/wvn.12019

- World Health Organization. (2017). European strategic directions for strengthening nursing and midwifery towards Health 2020 goals. http://www.euro.who.int/_data/assets/pdf_file/0004/274306/European‐strategic‐directions‐strengthening‐nursing‐midwifery‐Health2020_en‐REV1.pdf?ua=1

Supplementary Materials

Figure S1. Process of EBP‐COQ Prof© development

Table S1. Relationship among EBP steps, EBP competencies, and EBP‐COQ Prof items.

Table S2. Questionnaire to Evaluate the Competency in Evidence‐Based Practice of Registered Nurses (EBP‐COQ Prof)

Table S3. Descriptive statistics and corrected item‐total correlation of EBP‐COQ Prof.

Table S4. Factorial loads of the four EFA models.