Abstract

As policy makers debate engaging hospitals in voluntary or mandatory bundled payment mechanisms, they lack evidence about how participants in existing programs compare. We analyzed data from Medicare and the American Hospital Association Annual Survey to compare characteristics and baseline performance among hospitals in Medicare’s voluntary (Bundled Payments for Care Improvement initiative, or BPCI) and mandatory (Comprehensive Care for Joint Replacement program, or CJR) joint replacement bundled payment programs. BPCI hospitals had higher mean patient volume and were larger and more teaching intensive than were CJR hospitals, but the two groups had similar risk exposure and baseline episode quality and cost. BPCI hospitals also had higher cost attributable to institutional postacute care, largely driven by inpatient rehabilitation facility cost. These findings suggest that while both voluntary and mandatory approaches can play a role in engaging hospitals in bundled payment, mandatory programs can produce more robust, generalizable evidence. Either mandatory or additional targeted voluntary programs may be required to engage more hospitals in bundled payment programs.

Bundled payment is a cornerstone of the Centers for Medicare and Medicaid Services (CMS) strategy to increase health care value. As a key Medicare Alternative Payment Model, bundled payment holds health care organizations accountable for spending across an episode of care, providing financial incentives to maintain quality and contain spending below a predefined benchmark.

After several small demonstration projects that began in the 1990s, CMS expanded bundled payment nationwide in late 2013 via the Bundled Payments for Care Improvement (BPCI) initiative. The largest voluntary program to date, the initiative has included 1,201 hospitals. The most popular episode among participating hospitals was major joint replacement of the lower extremity (hereafter “joint replacement”) via the initiative’s model 2, in which 313 hospitals bundled hospitalization and up to ninety days of postacute care.1 Based on expanding participation in BPCI over time and reports of financial savings,2,3 CMS used BPCI’s bundled payment design as the basis for the Comprehensive Care for Joint Replacement (CJR) Model beginning in April 2016. The first mandatory Alternative Payment Model, CJR required nearly 800 hospitals in 67 urban markets (areas with a population of at least 50,000 people) to bundle joint replacement through the program’s first year.

Though CJR largely follows BPCI in terms of design, the two programs differ along several key dimensions. They represent different mechanisms of participation: Hospitals may volunteer to participate in BPCI because they expect to succeed under bundled payment, whereas Medicare required all acute care hospitals in markets selected for CJR to participate in it. These markets were selected to oversample those with above-average episode expenditures and an annual joint replacement surgery volume of more than 400 episodes.3 BPCI also includes forty-seven other conditions besides joint replacement, while CJR focuses on joint replacement alone.

BPCI’s model 2 is open to hospitals and physician practices nationwide, and as of January 1, 2018, 539 organizations (most of which are hospitals) participate. In contrast, CJR limits participation to hospitals. BPCI uses historical hospital expenditures to set spending benchmarks, but CJR shifts benchmarks over time using information about market-level averages. An early CMS evaluation of organizations bundling joint replacement in BPCI reported a 10 percent decrease in average spending per Medicare episode and stable or improved quality, while other work has demonstrated that hospitals participating in BPCI can reduce expenditures by up to 20 percent through organizational focus on supply costs, care standardization, and postacute care.4–11 Preliminary results from Medicare indicate that through the first year of CJR, nearly half of all participating hospitals achieved episode savings, with an average bonus of $1,134 per episode of care.12 However, because CJR was implemented so recently, no formal program evaluation or results are yet available.

CMS introduced CJR as a mandatory program in Medicare primarily to generate robust evidence about the impact of bundled payment on the quality and cost of care, presumably as a precursor to a broader rollout of bundled payment. Because CJR hospitals cannot opt out or shift sicker, higher-risk patients to nonparticipating hospitals, their performance provides evidence that is more rigorous than that from BPCI hospitals. The health and human services secretary could use these results to scale bundled payment nationally.

However, some stakeholders have complained about how the mandatory nature of CJR unfairly forces hospitals to assume financial risk for care beyond their four walls (for example, Medicare payments for all health care services incurred by eligible beneficiaries for ninety days after discharge). Of particular concern is that CJR could unfairly disadvantage hospitals located in underserved areas or with low procedural volume, potentially forcing them to stop performing joint replacement procedures and hindering patient access.13

The policy salience of the distinction between mandatory and voluntary programs has increased substantially in the wake of the recent CMS decision to cancel several planned mandatory cardiac bundles in Medicare, end mandated participation for approximately half of the CJR participants, and expand voluntary bundled payment through the recently announced BPCI Advanced model.2,14,15 This abrupt policy shift reflects the Trump administration’s affinity for physician-focused models, as well as a potential preference for broader experimentation even at the expense of generating the deeper evidence initially planned through CJR.16

As policy makers weigh stakeholders’ feedback and determine the direction of future bundled payment policy, they would greatly benefit from empirical evidence. It is unclear whether engagement under voluntary programs is limited to certain hospital types, geographic regions, health care markets, or hospital service areas. It is also unknown whether hospitals targeted by mandatory programs exhibit important attributes for success in bundled payment (such as institutional experience with financial risk, procedural volume, postacute care spending, and baseline quality). Because of its inclusion in both voluntary (BPCI) and mandatory (CJR) programs, joint replacement provides a unique opportunity to make such comparisons. Therefore, we analyzed data from Medicare and the American Hospital Association (AHA) Annual Survey to answer two key questions. First, how do hospitals participating in joint replacement bundles under BPCI and CJR compare? Second, does the breakdown of episode spending components vary between the two hospital groups?

Study Data And Methods

Data

We used publicly available data from CMS to identify hospitals participating in joint replacement bundles through BPCI or CJR17,18 and data on a 20 percent national sample of Medicare beneficiaries for the period 2010–16 to construct bundled payment episodes for patients admitted nationwide for joint replacement surgery under Medicare Severity–Diagnosis Related Groups (MS-DRGs) 469 and 470 (these groups are for major hip and knee joint replacement or reattachment of lower extremity with or without major complicating or comorbid condition, respectively). To construct these episodes, we used patient-level Medicare inpatient, outpatient, carrier (Part B physician fees and outpatient facility), inpatient rehabilitation facility, skilled nursing facility, home health agency, and durable medical equipment claims files to assess episode characteristics, volume, quality, and spending measures. We also used publicly available data provided by CMS via Hospital Compare to evaluate joint replacement complication rates.19

Additionally, we used data for 2013–15 from the AHA Annual Survey to describe organizational characteristics that have been associated with hospital quality and payment, including ownership, urban versus rural location, teaching status, safety-net status,20 and size. The AHA survey also includes information about hospitals’ financial performance and risk-contracting experience, which allowed us to contextualize their volume of Medicare bundled payment episodes with their overall degree of risk exposure.

Hospital Groups

We defined groups of hospitals that participated in joint replacement bundles through either BPCI’s model 2 voluntarily (“BPCI hospitals”) or CJR by mandate (“CJR hospitals”) through March 2017. We focused our BPCI analysis on hospitals in model 2 because of its shared features with and comparability to CJR.1,17

Hospitals that participated in BPCI’s model 2 at any point were counted as BPCI hospitals, including those that subsequently left the program or were located in CJR markets but elected to continue in BPCI according to program rules. Hospitals that participated in CJR at any point were categorized as CJR hospitals. Our sample consisted of 302 BPCI and 783 CJR hospitals.

Although our primary focus was to compare BPCI hospitals to those originally mandated to participate in CJR, recent rule changes have reduced the number of markets and hospitals that will be required to participate in CJR.2 Therefore, we also defined a subset of CJR hospitals in the thirty-four Metropolitan Statistical Areas that are required to remain in the program going forward (“CJR mandate hospitals”).

Episode Definition And Construction

We defined patient-level bundled payment episodes using a methodology similar to that used by CMS and researchers in prior work.4,5,9,10,21 Specifically, episodes began with an index admission for MS-DRG 469 or 470 and included all services and corresponding Medicare Parts A and B payments through ninety days after discharge from the index hospitalization. Case-mix severity was assessed using the Elixhauser Comorbidity Index.22,23

To enable valid baseline comparisons of quality, spending, and utilization across hospitals while accounting for the fact that hospitals began participating in BPCI at different times and that timing might be related to these measures, we constructed episodes for each BPCI hospital using a hospital-specific baseline consisting of data from the four quarters preceding its start in BPCI. Episodes for CJR hospitals were constructed using four quarters of data before the program start date of April 2016. People younger than age sixty-five with a diagnosis of end-stage renal disease were excluded, to increase sample homogeneity.4,5
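The episode and baseline windows described above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' SAS code: the function names are hypothetical, program start dates are assumed to fall on the first day of a quarter, and assigning an episode to the baseline period by its admission date is an assumption.

```python
from datetime import date, timedelta

# MS-DRGs that trigger a joint replacement episode (from the text).
BUNDLED_DRGS = {469, 470}
POST_DISCHARGE_DAYS = 90


def episode_window(admit: date, discharge: date) -> tuple[date, date]:
    """Episode runs from index admission through 90 days after discharge."""
    return admit, discharge + timedelta(days=POST_DISCHARGE_DAYS)


def baseline_window(program_start: date) -> tuple[date, date]:
    """Four quarters (one year) immediately preceding the program start.

    Assumes program_start is the first day of a quarter, so replacing
    the year never lands on a nonexistent date such as February 29.
    """
    start = program_start.replace(year=program_start.year - 1)
    end = program_start - timedelta(days=1)
    return start, end


def in_baseline(drg: int, admit: date, program_start: date) -> bool:
    """True if an index admission qualifies as a baseline episode.

    Assumption: baseline membership is decided by the admission date.
    """
    start, end = baseline_window(program_start)
    return drg in BUNDLED_DRGS and start <= admit <= end


# CJR hospitals share one start date; each BPCI hospital would pass its own.
cjr_start = date(2016, 4, 1)
print(baseline_window(cjr_start))
print(episode_window(date(2015, 6, 1), date(2015, 6, 4)))
```

For a BPCI hospital, the same functions would be called with that hospital's own enrollment date, which is what makes the baseline hospital-specific.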

Measures Of Baseline Quality

We calculated levels and quarterly trends for risk-standardized mortality and readmission rates, complication rates, and prolonged length-of-stay.19,24,25 Prolonged length-of-stay is a validated measure of joint replacement complications and serves as a condition-specific quality measure.

Measures Of Baseline Episode Spending

We evaluated Medicare episode spending by standardizing payments to allow for comparisons across hospitals nationwide,21,26 following prior methods (see online appendix exhibit A1 for details).27 We evaluated spending related to the following nine components of care: index hospitalization; readmissions; care at other inpatient facilities (for example, long-term acute care hospitals), skilled nursing facilities, and inpatient rehabilitation facilities; home health agency care; outpatient care; physician services; and durable medical equipment. Total ninety-day episode spending was calculated by aggregating payments across these nine components.
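As a minimal sketch of the aggregation step, the following Python shows how total ninety-day spending and component shares could be computed from standardized payments. The component keys and the dollar amounts in the example are hypothetical labels and values chosen for illustration; they are not taken from the study data.

```python
# The nine spending components named in the text (key names are illustrative).
COMPONENTS = (
    "index_hospitalization",
    "readmissions",
    "other_inpatient_facilities",
    "skilled_nursing_facility",
    "inpatient_rehabilitation_facility",
    "home_health_agency",
    "outpatient",
    "physician_services",
    "durable_medical_equipment",
)


def total_episode_spending(payments: dict[str, float]) -> float:
    """Sum standardized payments across the nine components.

    Components missing from `payments` count as zero; unknown keys are
    rejected so that a typo cannot silently drop spending.
    """
    unknown = set(payments) - set(COMPONENTS)
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    return sum(payments.get(c, 0.0) for c in COMPONENTS)


def component_shares(payments: dict[str, float]) -> dict[str, float]:
    """Fraction of total episode spending attributable to each component."""
    total = total_episode_spending(payments)
    if total == 0:
        return {c: 0.0 for c in COMPONENTS}
    return {c: payments.get(c, 0.0) / total for c in COMPONENTS}


# Hypothetical episode, for illustration only.
example = {
    "index_hospitalization": 12000.0,
    "skilled_nursing_facility": 5000.0,
    "home_health_agency": 2000.0,
    "physician_services": 1000.0,
}
print(total_episode_spending(example))
print(component_shares(example)["index_hospitalization"])
```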

Statistical Analysis

We used census regions to indicate the geographic distribution of BPCI and CJR hospitals nationwide. We also illustrated the distribution of hospitals within geographic health care markets. We compared BPCI and CJR hospitals in terms of organizational characteristics, baseline episode quality and spending, and episode volume (using a 20 percent claims sample). We performed unadjusted analyses by computing means for organizational characteristics and baseline performance and comparing them across the two hospital groups. We used chi-square tests to compare categorical variables and t-tests, Kruskal-Wallis tests, and Wilcoxon rank sum tests to compare continuous variables.
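To illustrate the kind of chi-square comparison described here, the sketch below computes a Pearson chi-square statistic for teaching status. The counts are not the study's raw data: they are reconstructed by rounding the percentages reported in exhibit 2 against the group sizes (302 and 783), so the result is only approximate. The function name is hypothetical.

```python
def pearson_chi_square(table: list[list[int]]) -> tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(col_totals) - 1)
    return statistic, dof


# Approximate counts rebuilt from exhibit 2 percentages (an assumption):
# columns are major teaching, minor teaching, nonteaching.
bpci_n, cjr_n = 302, 783
teaching_counts = [
    [round(p * bpci_n) for p in (0.139, 0.603, 0.258)],  # BPCI
    [round(p * cjr_n) for p in (0.101, 0.470, 0.429)],   # CJR
]

statistic, dof = pearson_chi_square(teaching_counts)
# The 0.001 critical value for 2 degrees of freedom is about 13.82.
print(teaching_counts, round(statistic, 2), dof)
```

A statistic above the critical value is consistent with the reported p value of less than 0.001 for teaching status, although the authors' exact figure may differ because the counts here are rounded reconstructions.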

We also compared baseline quality (prolonged lengths-of-stay, mortality, and readmissions) and standardized episode spending across hospital groups by applying hierarchical multivariable linear regression models to patient-level data from the four quarters immediately preceding bundled payment initiation for each hospital. Models were run for each measure of baseline quality and episode spending, by MS-DRGs 469 and 470 separately, and adjusted for patients’ age, sex, race/ethnicity, dual eligibility for Medicare and Medicaid, Elixhauser comorbidities,22,28,29 calendar quarter, and hospital-specific time of initiation in bundled payment, with fixed effects for hospital group (BPCI or CJR) and standard errors clustered at the hospital level. We used ordinary least squares models in adjusted analyses for all outcomes except total episode spending, for which we used generalized linear models with a log link and gamma distribution.22
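One way to write down the adjusted models is sketched below. The notation is ours rather than the authors': $i$ indexes episodes and $h$ hospitals, $\mathrm{BPCI}_h$ is the hospital-group indicator, $\mathbf{x}_{ih}$ collects the patient covariates (age, sex, race/ethnicity, dual eligibility, Elixhauser comorbidities), $\tau_{q(i)}$ is a calendar-quarter term, and $\delta_{s(h)}$ captures the hospital-specific time of initiation. The exact parameterization used in the study may differ.

```latex
% Total episode spending: generalized linear model, log link, gamma distribution
\log \mathbb{E}[Y_{ih}]
  = \beta_0 + \beta_1\,\mathrm{BPCI}_h
  + \mathbf{x}_{ih}^{\top}\boldsymbol{\gamma}
  + \tau_{q(i)} + \delta_{s(h)},
\qquad Y_{ih} \sim \mathrm{Gamma}

% All other outcomes (mortality, readmission, prolonged length-of-stay):
% ordinary least squares
\mathbb{E}[Q_{ih}]
  = \alpha_0 + \alpha_1\,\mathrm{BPCI}_h
  + \mathbf{x}_{ih}^{\top}\boldsymbol{\theta}
  + \tau_{q(i)} + \delta_{s(h)}
```

Under this sketch, $\exp(\beta_1)$ is the ratio of mean episode spending for BPCI relative to CJR hospitals with covariates held fixed, and $\alpha_1$ is the adjusted difference in the outcome rate between the two groups. Standard errors would be clustered at the hospital level, as the text describes.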

Total standardized episode spending by Medicare was compared across hospital groups both as total ninety-day episode spending and spending for each of the nine components listed above. Spending was adjusted for inflation and transformed into 2016 dollars.

All statistical tests were two-tailed and considered significant at α = 0.05.30 All standard errors were corrected for heteroscedasticity using the Huber-White correction.31 Analyses were performed using SAS, version 9.4. The University of Pennsylvania Institutional Review Board approved the study.

Limitations

Our study was subject to several limitations. First, because it was a descriptive analysis, we were unable to evaluate the relationship between bundled payment participation and changes in quality or costs over time. However, our goal was to describe the types of hospitals that participate in mandatory and voluntary programs and form a basis for future work that will compare the impact of different policies over time—information currently unavailable, given how recently the CJR program began.

Second, because we evaluated only hospital participation, our findings might not be generalizable to providers other than acute care hospitals (such as physician groups) or bundles for which the trigger is not an inpatient admission. However, because the majority of providers in BPCI are hospitals and CJR is limited to hospitals, our results are largely representative and allow direct policy comparison.

Third, while our analysis did not account for specific bundle durations for each hospital in BPCI, which are established between individual hospitals and CMS, our methodology adhered closely to methods used by CMS in CJR, for consistency and comparability.

Fourth, though our results were subject to the limitations in risk adjustment based on claims data, we adopted validated measures and methods used in prior literature.

Fifth, while this study did not include the other three models in BPCI, model 2 was the largest in terms of enrollment and represented the basis for CJR and other emerging mandatory bundles.

Finally, specific CJR market selection rules could differ from those that Medicare might use in future mandatory programs. However, given the objective of reducing Medicare spending, the general approach is likely to be consistent with oversampling markets with above-average volume and spending.

Study Results

Geographic Distribution

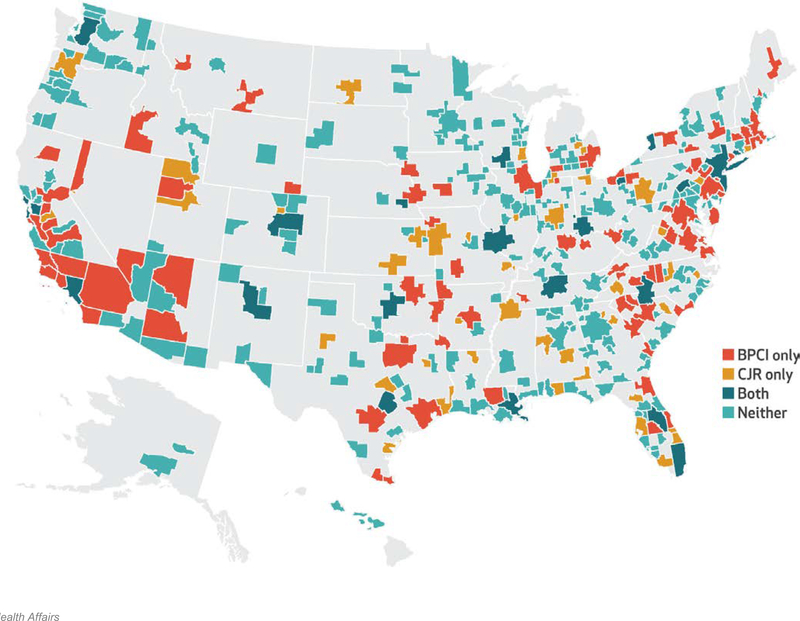

The geographic distribution of BPCI and CJR hospitals varied across urban markets as defined by Metropolitan Statistical Areas (exhibit 1). Of the 382 areas, 88 (23 percent) contained at least one BPCI hospital but no CJR hospitals; 46 (12 percent) contained at least one CJR hospital without any BPCI hospitals; and 21 (5 percent) were mandated CJR markets with at least one BPCI hospital. There were no BPCI or CJR hospitals in 227 (59 percent) of the urban markets. The geographic distribution of hospitals also varied across markets defined by hospital referral region (see appendix exhibit A2).27
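The market tallies above can be checked with a short calculation. The sketch below uses only the counts stated in the text; the category labels and function name are illustrative.

```python
# Metropolitan Statistical Area counts as reported in the text.
MSA_COUNTS = {
    "BPCI hospital(s) only": 88,
    "CJR hospital(s) only": 46,
    "CJR market with BPCI hospital(s)": 21,
    "neither program": 227,
}
TOTAL_MSAS = 382


def percent_shares(counts: dict[str, int], total: int) -> dict[str, float]:
    """Percentage of all markets in each category, after a consistency check."""
    if sum(counts.values()) != total:
        raise ValueError("category counts do not add up to the total")
    return {label: 100 * n / total for label, n in counts.items()}


shares = percent_shares(MSA_COUNTS, TOTAL_MSAS)
for label, pct in shares.items():
    print(f"{label}: {pct:.1f}%")

# Markets containing any CJR hospital, with or without BPCI hospitals.
cjr_markets = (
    MSA_COUNTS["CJR hospital(s) only"]
    + MSA_COUNTS["CJR market with BPCI hospital(s)"]
)
print(cjr_markets)
```

The four categories are mutually exclusive and sum to all 382 areas, and the two CJR categories together give the 67 markets in which CJR was originally mandated.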

Exhibit 1.

Hospital participation in Medicare’s voluntary Bundled Payments for Care Improvement (BPCI) initiative and mandatory Comprehensive Care for Joint Replacement (CJR) program in 2016, by Metropolitan Statistical Area

SOURCE Authors’ analysis of data for 2013–15 from the American Hospital Association Annual Survey and of publicly available Medicare data for 2013–16 on bundled payment program participants.

Neither BPCI nor CJR hospitals were distributed uniformly across census regions nationwide. Nearly 40 percent of the 302 BPCI hospitals and over 30 percent of the 783 CJR hospitals were located in the South (exhibit 2). Just over 25 percent of CJR hospitals were in the West, but no other region accounted for a quarter of either type of hospital.

Exhibit 2.

Characteristics of hospitals participating in BPCI and CJR

| Characteristic | Hospitals in BPCI | Hospitals in CJR | p value |
|---|---|---|---|
| Mean size (number of beds) | 325 | 264 | <0.001 |
| Size (number of beds) | | | |
| Small (fewer than 100) | 10.6% | 22.0% | <0.001 |
| Medium (100–399) | 63.9 | 58.8 | |
| Large (400 or more) | 25.5 | 19.3 | |
| Ownership status | | | |
| For profit | 20.2 | 25.2 | <0.001 |
| Not for profit | 76.2 | 60.9 | |
| Government owned | 3.64 | 13.9 | |
| Teaching status | | | |
| Major<sup>a</sup> | 13.9 | 10.1 | <0.001 |
| Minor<sup>b</sup> | 60.3 | 47.0 | |
| Nonteaching | 25.8 | 42.9 | |
| Census region | | | |
| South | 37.4 | 32.4 | 0.02 |
| Midwest | 19.5 | 20.7 | |
| West | 18.5 | 26.7 | |
| Northeast | 24.5 | 20.2 | |
| Urban or rural status | | | |
| Urban | 94.4 | 100.0 | <0.001 |
| Rural | 5.6 | 0.0 | |
| Mean annual Medicare patient volume | 7,193 | 5,264 | <0.001 |
| Mean patient mix | | | |
| Medicare utilization ratio | 52.8 | 50.2 | <0.001 |
| Medicaid utilization ratio | 19.3 | 20.7 | 0.06 |
| Medicare share of admissions | 47.5% | 46.1% | 0.02 |
| Mean Elixhauser Comorbidity Index score | | | |
| MS-DRG 469 | 17.1 | 13.5 | <0.001 |
| MS-DRG 470 | 4.3 | 3.8 | <0.001 |
| Mean hospital margin<sup>c</sup> | 6.5 | 8.0 | 0.42 |
| Joint Commission accreditation | 87.4% | 85.3% | 0.37 |
| Sole community provider | 3.0% | 0.5% | <0.001 |
| Safety-net hospital<sup>d</sup> | 25.0% | 31.0% | 0.04 |
| Net patient revenue paid on a shared risk basis<sup>e</sup> | 2.4% | 2.6% | 0.76 |

SOURCE Authors’ analysis of data for 2013–15 from the American Hospital Association (AHA) Annual Survey. NOTES Joint replacement is major joint replacement of the lower extremity. There were 302 hospitals in the Bundled Payments for Care Improvement (BPCI) initiative sample and 783 hospitals in the Comprehensive Care for Joint Replacement (CJR) program sample, except where indicated. Volumes adjusted to reflect a 100 percent Medicare beneficiary inpatient sample. A two-tailed p value of 0.05 was considered significant. MS-DRG is Medicare Severity–Diagnosis Related Group.

<sup>a</sup> Members of the Council of Teaching Hospitals (COTH).

<sup>b</sup> Non–COTH members that had a medical school affiliation reported to the American Medical Association.

<sup>c</sup> 759 CJR hospitals.

<sup>d</sup> According to AHA designation.

<sup>e</sup> 236 BPCI hospitals and 514 CJR hospitals.

Organizational Characteristics

BPCI and CJR hospitals differed with respect to a number of organizational characteristics. In particular, BPCI hospitals tended to be significantly larger than CJR hospitals (mean number of beds: 325 versus 264, respectively) and had a larger mean annual Medicare patient volume (nearly 7,200 versus about 5,300, respectively) (exhibit 2). BPCI hospitals were also more likely to be not for profit, teaching hospitals, and sole community providers but were less likely to be safety-net hospitals. Compared to CJR hospitals, BPCI hospitals had a greater share of utilization from Medicare beneficiaries, while there were no significant differences in hospital profit margins or exposure to at-risk contracts (as defined by net patient revenue paid on a shared risk basis). BPCI hospitals also had a higher case-mix severity for both MS-DRGs 469 and 470. While CJR hospitals were urban by policy design, 94 percent of BPCI hospitals were also urban. The results of comparisons between CJR mandate hospitals (the subset of CJR hospitals in the thirty-four Metropolitan Statistical Areas required to remain in the program) and BPCI hospitals followed similar patterns (appendix exhibit A3).27

Baseline Performance

BPCI hospitals had significantly higher episode volume than CJR hospitals for both MS-DRGs 469 and 470 (exhibit 3).

Exhibit 3.

Unadjusted and adjusted (risk-standardized) joint-replacement episode baseline performance measures for BPCI hospitals versus CJR hospitals

| Measure | Hospitals in BPCI (n = 302) | Hospitals in CJR (n = 783) | p value |
|---|---|---|---|
| Median episode volume per hospital<sup>a</sup> | | | |
| MS-DRG 469 | 10 | 5 | 0.01 |
| MS-DRG 470 | 130 | 65 | <0.001 |
| Mortality rate<sup>a</sup> | | | |
| MS-DRG 469 | 11.9 | 14.4 | 0.21 |
| MS-DRG 470 | 0.6 | 0.6 | 0.51 |
| Readmission rate<sup>b</sup> | | | |
| MS-DRG 469 | 21.7 | 24.5 | 0.37 |
| MS-DRG 470 | 7.8 | 7.6 | 0.63 |
| Prolonged length-of-stay, mean | | | |
| MS-DRG 469 | 42.5% | 43.4% | 0.81 |
| MS-DRG 470 | 11.9 | 10.8 | 0.01 |
| Episode spending, mean | | | |
| MS-DRG 469 | $46,723 | $41,937 | 0.62 |
| MS-DRG 470 | 22,090 | 21,716 | <0.001 |
| Joint replacement complication rate | | | |
| Adjusted results (risk-standardized) | | | |
| Mortality rate<sup>a</sup> | | | |
| MS-DRG 469 | 10.4 | 14.4 | 0.41 |
| MS-DRG 470 | 0.56 | 0.66 | 0.64 |
| Readmission rate<sup>b</sup> | | | |
| MS-DRG 469 | 29.3 | 23.0 | 0.37 |
| MS-DRG 470 | 7.53 | 7.83 | 0.71 |
| Prolonged length-of-stay, mean | | | |
| MS-DRG 469 | 51.0 | 43.4 | 0.36 |
| MS-DRG 470 | 13.7 | 12.1 | 0.38 |
| Episode spending, mean | | | |
| MS-DRG 469 | $45,137 | $41,995 | 0.32 |
| MS-DRG 470 | 21,748 | 22,058 | 0.40 |

SOURCE Authors’ analysis of Medicare claims data for 2010–16. NOTES Joint replacement is major joint replacement of the lower extremity. There were 295 BPCI hospitals and 658 CJR hospitals that had nonzero volume and contributed to outcomes in our claims-based analysis. The baseline period for BPCI hospitals is the four quarters before they enrolled in the initiative, while for CJR hospitals it is the four quarters before April 2016. Hospitals that transitioned from the BPCI initiative to the CJR program are considered CJR hospitals. Volumes have been adjusted to reflect a 100 percent Medicare beneficiary inpatient sample. 469 and 470 refer to Medicare Severity–Diagnosis Related Groups. A two-tailed p value of 0.05 was considered significant.

<sup>a</sup> Number of beneficiaries who died during an episode in the measurement period over the total number of episodes in the hospital group.

<sup>b</sup> Number of episodes with a readmission over the total number of episodes with a discharge alive in the hospital group.

Unadjusted comparisons of baseline performance measures between BPCI and CJR hospitals did not reveal significant differences with respect to baseline readmission rates and mortality rates for either MS-DRG 469 or 470 (exhibit 3). For MS-DRG 470, BPCI hospitals exhibited slightly higher baseline episode spending (by about 2 percent) and had a higher percentage of cases with prolonged length-of-stay. In adjusted multivariable analysis, the two hospital groups did not exhibit significant differences in baseline mortality rates, readmission rates, episode cost, or prolonged length-of-stay.

Hospitals continuing in CJR by mandate exhibited similar patterns of volume and spending when compared to BPCI hospitals (appendix exhibit A4).27 However, they had 7 percent higher baseline episode spending than BPCI hospitals for MS-DRG 470 in an adjusted analysis.

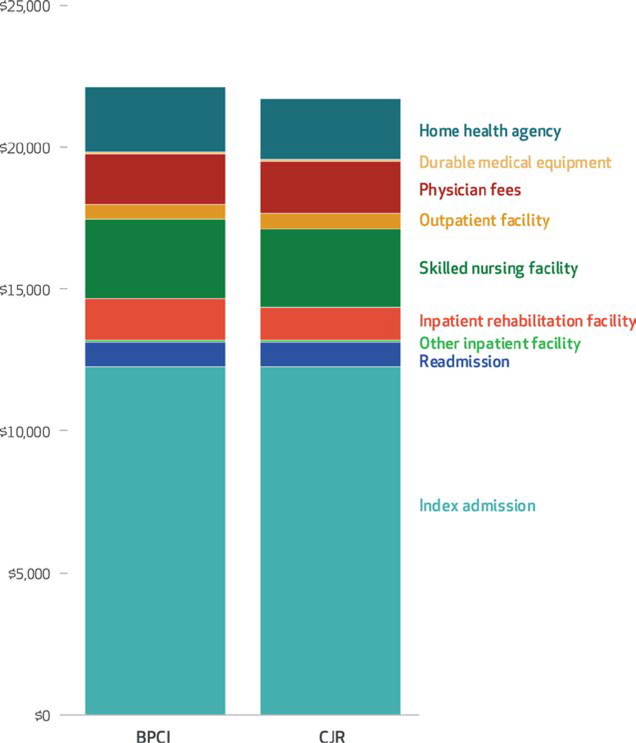

Episode Cost Components

The breakdown of baseline episode spending was generally similar between BPCI and CJR hospitals for MS-DRG 470 (exhibit 4). However, BPCI hospitals had higher spending (a difference of 3 percent or more) on postacute care provided by some types of institutions (including inpatient rehabilitation facilities and home health agencies) but not others (such as skilled nursing facilities). BPCI hospitals also had marginally lower spending attributable to outpatient facility services and durable medical equipment.

Exhibit 4.

Average episode spending breakdown by component for major hip and knee joint replacement for hospitals participating in the Bundled Payments for Care Improvement (BPCI) initiative and Comprehensive Care for Joint Replacement (CJR) program, 2016

SOURCE Authors’ analysis of Medicare claims data for 2010–16. NOTES Episode spending is for Medicare Severity–Diagnosis Related Group (MS-DRG) 470, major hip and knee joint replacement or reattachment of lower extremity without a major complicating or comorbid condition. Other inpatient facilities include long-term acute care facilities, inpatient psychiatric facilities, and critical access hospitals.

Compared to BPCI hospitals, CJR mandate hospitals exhibited significantly higher baseline spending (a difference of 7 percent or more) for inpatient rehabilitation facilities, skilled nursing facilities, home health agencies, and professional fees. Additional comparisons of BPCI and CJR mandate hospitals are available in the appendix.27

Discussion

In this first study to compare hospitals participating in Medicare’s mandatory and voluntary joint replacement bundled payment programs, we found that the hospital groups exhibited significant differences in organizational characteristics without large differences in baseline quality or spending performance. Specifically, BPCI hospitals had higher volumes and also differed from CJR hospitals with respect to key characteristics such as size, profit status, and Medicare utilization, but the two groups were similar with respect to exposure to financial risk and risk-standardized measures of baseline quality and episode spending. While BPCI hospitals had higher spending on institutional postacute care than CJR hospitals, these differences represented small proportions of total episode spending.

Taken together, these findings have three important implications for policy makers debating how to most effectively implement voluntary or mandatory bundled payment.16 First, the differences in organizational characteristics between BPCI and CJR hospitals demonstrate that Medicare’s voluntary and mandatory programs have engaged different types of hospitals to date and that results from BPCI might not be as generalizable as those from CJR. While Medicare may have intended to select a different set of hospitals in the two programs, our analysis is the first to empirically describe on which characteristics the two hospital groups differ.

On one hand, the observed differences imply that required participation can provide more generalizable evidence about the impact of bundled payment as a national value-based Alternative Payment Model. Furthermore, CJR has engaged hospitals that, on average, perform similarly to hospitals that chose to volunteer for BPCI. On the other hand, the differences highlight the possibility that extending voluntary programs such as BPCI to hospitals that would not otherwise volunteer (like most in CJR) might not achieve the same results if required participants had fewer resources, weaker leadership, or less capacity to perform in at-risk arrangements. To that point, it is notable that CJR hospitals had lower joint replacement volumes in the year preceding participation (exhibit 3).

These findings could temper policy makers’ expectations that either voluntary or mandatory programs alone can achieve the desired broad impact. Instead, our results suggest that both voluntary and mandatory approaches can play an important role in engaging hospitals across the country, and that perhaps policy makers should not restrict policy options to one approach over the other. Our study highlights the fact that whichever path or paths CMS selects, emerging bundled payment policies should engage a broad range of hospitals if they are to deliver cost savings and quality improvement to beneficiaries across the US. This would require approaches that use either mandatory programs or additional targeted voluntary programs, as exemplified by the approach that Medicare has adopted in its accountable care organization policies: While early programs tended to engage large, urban provider organizations, emerging models focus explicitly on rural and small physician-led organizations.32

Second, based on the similarities observed in baseline quality and spending performance between the hospital groups, existing mandates do not appear, on average, to have disadvantaged mandatory participants compared to voluntary participants with respect to reducing spending or improving quality. This could mitigate some concerns about fairness under mandatory bundled payment. With the exception of baseline episode spending, BPCI hospitals and those in the thirty-four markets continuing in CJR were also similar. These results underscore the fact that mandatory programs may represent one way to shift the nation toward value appropriately by engaging hospitals that otherwise would not participate in voluntary programs.

Third, our results call attention to future work needed to address key questions about voluntary and mandatory orthopedic bundled payment programs. While we identified differences in key organizational characteristics (such as profit and teaching status and bed number), it remains unclear whether or how these differences reflect hospitals’ ability to ultimately succeed in joint replacement programs. Because our analysis demonstrates that BPCI and CJR hospitals differ with respect to several organizational characteristics, such evidence would help clarify the utility of implementing mandatory versus voluntary programs.

Similarly, although we found similar baseline quality and spending, future work must fully evaluate the impact of voluntary and mandatory joint replacement programs on spending and patient outcomes. It would be particularly helpful to compare outcomes between hospitals that would participate only if mandated to do so and those that volunteer; such comparisons could provide empirical evidence about whether mandatory models provide additional benefit beyond voluntary models. Such data are particularly important given reductions in the numbers of markets and hospitals required to participate in CJR going forward (see online appendix for analyses comparing BPCI hospitals to those in the thirty-four markets continuing in CJR).27

Ultimately, outcome data from both original CJR hospitals and future CJR mandate hospitals are necessary to help policy makers determine how to apply bundled payment to joint replacement in broader geographical areas or other procedures and episodes.

Conclusion

Hospitals that bundle joint replacement in the voluntary and mandatory CMS programs vary with respect to a number of organizational characteristics but not baseline spending or quality. Hospitals in voluntary programs spend more on institutional postacute care at baseline, though the differences are small. As policy makers debate how to most effectively implement bundled payment, these findings suggest that mandatory programs may be required to generate robust evidence and that either mandatory or additional targeted voluntary programs may be required to engage more hospitals in bundled payment.

Acknowledgment

This work was funded by the Commonwealth Fund. Amol Navathe serves as adviser to Navvis and Company, Navigant Inc., and Indegene Inc.; receives an honorarium from Elsevier Press; and receives grant funding from Oscar Health Insurance and Hawaii Medical Services Association, none of which are related to this study. Josh Rolnick is a consultant to Tuple Health, Inc., which is not related to this study. Ezekiel Emanuel is a frequent paid event speaker at numerous conventions, committee meetings, and professional health care gatherings and is a venture partner with Oak HC/FT, none of which are related to this study. The authors thank participants in the Division of General Internal Medicine work-in-progress seminar at the University of Pennsylvania.

Author Bios

Bio 1: Amol S. Navathe (amol@pennmedicine.upenn.edu) is an assistant professor in the Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania, in Philadelphia.

Bio 2: Joshua M. Liao is an assistant professor in the Department of Medicine at the University of Washington School of Medicine, in Seattle.

Bio 3: Daniel Polsky is the Robert D. Eilers Professor in Health Care Management and Economics and executive director of the Leonard Davis Institute of Health Economics, both at the University of Pennsylvania.

Bio 4: Yash Shah is a research assistant in the Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania.

Bio 5: Qian Huang is a statistical analyst in the Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania.

Bio 6: Jingsan Zhu is assistant director of data analytics in the Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania.

Bio 7: Zoe M. Lyon is a senior research coordinator, Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania.

Bio 8: Robin Wang is an undergraduate student in the College of Arts and Sciences, Cornell University, in Ithaca, New York.

Bio 9: Josh Rolnick is an associate fellow at the Leonard Davis Institute of Health Economics, University of Pennsylvania.

Bio 10: Joseph R. Martinez is an MD-PhD student in the Perelman School of Medicine, University of Pennsylvania.

Bio 11: Ezekiel J. Emanuel is the Diane V. S. Levy and Robert M. Levy University Professor, chair of the Department of Medical Ethics and Health Policy, and vice provost for global initiatives, at the University of Pennsylvania.

Contributor Information

Amol S. Navathe, Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania, in Philadelphia.

Joshua M. Liao, Department of Medicine at the University of Washington School of Medicine, in Seattle.

Daniel Polsky, Robert D. Eilers Professor in Health Care Management and Economics and executive director of the Leonard Davis Institute of Health Economics, both at the University of Pennsylvania.

Yash Shah, Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania.

Qian Huang, Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania.

Jingsan Zhu, Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania.

Zoe M. Lyon, Department of Medical Ethics and Health Policy, Perelman School of Medicine, University of Pennsylvania.

Robin Wang, College of Arts and Sciences, Cornell University, in Ithaca, New York.

Josh Rolnick, Leonard Davis Institute of Health Economics, University of Pennsylvania.

Joseph R. Martinez, Perelman School of Medicine, University of Pennsylvania.

Ezekiel J. Emanuel, Diane V. S. Levy and Robert M. Levy University Professor, chair of the Department of Medical Ethics and Health Policy, and vice provost for global initiatives, all at the University of Pennsylvania.

NOTES

- 1. CMS.gov. Bundled Payments for Care Improvement (BPCI) initiative: general information [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; [last updated 2018 Feb 16; cited 2018 Mar 8]. Available from: https://innovation.cms.gov/initiatives/bundled-payments/

- 2. Centers for Medicare and Medicaid Services. Medicare Program; cancellation of Advancing Care Coordination through Episode Payment and Cardiac Rehabilitation Incentive Payment Models; changes to Comprehensive Care for Joint Replacement Payment Model (CMS-5524-P). Federal Register [serial on the Internet]. 2017 Aug 17 [cited 2018 Mar 7]. Available from: https://www.gpo.gov/fdsys/pkg/FR-2017-08-17/pdf/2017-17446.pdf

- 3. Centers for Medicare and Medicaid Services. Medicare Program; Comprehensive Care for Joint Replacement Payment Model for acute care hospitals furnishing lower extremity joint replacement services. Final rule. Fed Regist. 2015;80(226):73273–554.

- 4. Dummit LA, Kahvecioglu D, Marrufo G, Rajkumar R, Marshall J, Tan E, et al. Association between hospital participation in a Medicare bundled payment initiative and payments and quality outcomes for lower extremity joint replacement episodes. JAMA. 2016;316(12):1267–78.

- 5. Navathe AS, Troxel AB, Liao JM, Nan N, Zhu J, Zhong W, et al. Cost of joint replacement using bundled payment models. JAMA Intern Med. 2017;177(2):214–22.

- 6. Liao JM, Holdofski A, Whittington GL, Zucker M, Viroslav S, Fox DL, et al. Baptist Health System: succeeding in bundled payments through behavioral principles. Healthc (Amst). 2017;5(3):136–40.

- 7. Chen LM, Ryan AM, Shih T, Thumma JR, Dimick JB. Medicare’s Acute Care Episode Demonstration: effects of bundled payments on costs and quality of surgical care. Health Serv Res. 2017 Mar 28 [Epub ahead of print].

- 8. Ellimoottil C, Ryan AM, Hou H, Dupree J, Hallstrom B, Miller DC. Medicare’s new bundled payment for joint replacement may penalize hospitals that treat medically complex patients. Health Aff (Millwood). 2016;35(9):1651–7.

- 9. Lewin Group. CMS Bundled Payments for Care Improvement (BPCI) initiative models 2–4: year 1 evaluation and monitoring annual report [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; 2015 Feb [cited 2018 Mar 8]. Available from: https://innovation.cms.gov/Files/reports/BPCI-EvalRpt1.pdf

- 10. Lewin Group. CMS Bundled Payments for Care Improvement initiative models 2–4: year 2 evaluation and monitoring annual report [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; 2016 Aug [cited 2018 Mar 8]. Available from: https://innovation.cms.gov/Files/reports/bpci-models2-4-yr2evalrpt.pdf

- 11. Ryan AM, Krinsky S, Adler-Milstein J, Damberg CL, Maurer KA, Hollingsworth JM. Association between hospitals’ engagement in value-based reforms and readmission reduction in the Hospital Readmission Reduction Program. JAMA Intern Med. 2017;177(6):862–8.

- 12. Centers for Medicare and Medicaid Services. Preliminary performance year 1 reconciliation payments, Comprehensive Care for Joint Replacement Model 2017 [Internet]. Baltimore (MD): CMS; [cited 2018 Apr 25]. Available for download from: https://innovation.cms.gov/Files/x/cjr-py1reconpym.xlsx

- 13. Ibrahim SA, Kim H, McConnell KJ. The CMS comprehensive care model and racial disparity in joint replacement. JAMA. 2016;316(12):1258–9.

- 14. Centers for Medicare and Medicaid Services. Medicare Program; cancellation of Advancing Care Coordination through Episode Payment and Cardiac Rehabilitation Incentive Payment Models; changes to Comprehensive Care for Joint Replacement Payment Model: extreme and uncontrollable circumstances policy for the Comprehensive Care for Joint Replacement Payment Model. Final rule; interim final rule with comment period. Fed Regist. 2017;82(230):57066–104.

- 15. CMS.gov. BPCI Advanced [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; [last updated 2018 Mar 7; cited 2018 Mar 8]. Available from: https://innovation.cms.gov/initiatives/bpci-advanced

- 16. Verma S. Medicare and Medicaid need innovation. Wall Street Journal. 2017 Sep 19.

- 17. CMS.gov. Comprehensive Care for Joint Replacement model [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; [last updated 2018 Mar 8; cited 2018 Mar 8]. Available from: https://innovation.cms.gov/initiatives/cjr

- 18. CMS.gov. BPCI model 2: retrospective acute and post acute care episode [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; [last updated 2018 Feb 16; cited 2018 Mar 8]. Available from: https://innovation.cms.gov/initiatives/BPCI-Model-2/index.html

- 19. Medicare.gov. Hospital Compare: complication rate for hip/knee replacement patients [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; [cited 2018 Mar 8]. Available from: https://www.medicare.gov/hospitalcompare/Data/Surgical-Complications-Hip-Knee.html

- 20. Werner RM, Goldman LE, Dudley RA. Comparison of change in quality of care between safety-net and non-safety-net hospitals. JAMA. 2008;299(18):2180–7.

- 21. Tsai TC, Joynt KE, Wild RC, Orav EJ, Jha AK. Medicare’s bundled payment initiative: most hospitals are focused on a few high-volume conditions. Health Aff (Millwood). 2015;34(3):371–80.

- 22. Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Med Care. 1998;36(1):8–27.

- 23. Van Walraven C, Austin PC, Jennings A, Quan H, Forster AJ. A modification of the Elixhauser comorbidity measures into a point system for hospital death using administrative data. Med Care. 2009;47(6):626–33.

- 24. Silber JH, Rosenbaum PR, Koziol LF, Sutaria N, Marsh RR, Even-Shoshan O. Conditional length of stay. Health Serv Res. 1999;34(1 Pt 2):349–63.

- 25. Sedrakyan A, Kamel H, Mao J, Ting H, Paul S. Hospital readmission and length of stay over time in patients undergoing major cardiovascular and orthopedic surgery: a tale of 2 states. Med Care. 2016;54(6):592–9.

- 26. Joynt KE, Gawande AA, Orav EJ, Jha AK. Contribution of preventable acute care spending to total spending for high-cost Medicare patients. JAMA. 2013;309(24):2572–8.

- 27. To access the appendix, click on the Details tab of the article online.

- 28. Southern DA, Quan H, Ghali WA. Comparison of the Elixhauser and Charlson/Deyo methods of comorbidity measurement in administrative data. Med Care. 2004;42(4):355–60.

- 29. Buntin MB, Zaslavsky AM. Too much ado about two-part models and transformation? Comparing methods of modeling Medicare expenditures. J Health Econ. 2004;23(3):525–42.

- 30. Abdi H. Holm’s sequential Bonferroni procedure. In: Salkind NJ, editor. Encyclopedia of research design. Thousand Oaks (CA): Sage Publications; 2010. 2:573–575.

- 31. MacKinnon JG, White H. Some heteroskedasticity-consistent covariance matrix estimators with improved finite sample properties. J Econom. 1985;29(3):305–25.

- 32. CMS.gov. ACO Investment Model [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; [last updated 2017 Mar 27; cited 2018 Mar 8]. Available from: https://innovation.cms.gov/initiatives/ACO-Investment-Model/
