Abstract

Objective

To evaluate the comparative effectiveness of external facilitation (EF) vs external + internal facilitation (EF/IF) on uptake of a collaborative chronic care model (CCM) in community practices that were slower to implement under low‐level implementation support.

Study Setting

Primary data were collected from 43 community practices in Michigan and Colorado at baseline and for 12 months following randomization.

Study Design

Sites that failed to meet a pre‐established implementation benchmark after six months of low‐level implementation support were randomized to add either EF or EF/IF support for up to 12 months. Key outcomes were change in number of patients receiving the CCM and number of patients receiving a clinically significant dose of the CCM. Moderator analyses further examined whether comparative effectiveness depended on prerandomization adoption, number of providers trained, or practice size. Facilitation log data were used for exploratory follow‐up analyses.

Data Collection

Sites reported monthly on number of patients that had received the CCM. Facilitation logs were completed by study EF and site IFs and shared with the study team.

Principal Findings

N = 21 sites were randomized to EF and N = 22 to EF/IF. Overall, EF/IF practices saw more uptake than EF sites after 12 months (ΔEF/IF‐EF = 4.4 patients; 95% CI: 1.87, 6.87). Moderator analyses, however, revealed that only sites with no prerandomization uptake of the CCM (nonadopter sites) saw significantly more benefit from EF/IF (ΔEF/IF‐EF = 9.2 patients; 95% CI: 5.72, 12.63). For sites with prerandomization uptake (adopter sites), EF/IF offered no additional benefit (ΔEF/IF‐EF = −0.9 patients; 95% CI: −4.40, 2.60). Number of providers trained and practice size were not significant moderators.

Conclusions

Although stepping up to the more intensive EF/IF did outperform EF overall, its benefit was limited to sites that failed to deliver any CCM under the low‐level strategy. Once one or more providers were delivering the CCM, additional on‐site personnel did not appear to add value to the implementation effort.

Keywords: collaborative care models, depression, facilitation, health behavior change, implementation

WHAT THIS STUDY ADDS.

1. Section 1

Implementation strategies, or theory‐based tools and processes that help address barriers to evidence‐based practice adoption, can be effective but vary significantly in cost, intensity, and burden.

Facilitation is a flexible and often effective implementation strategy that can be delivered in several ways, including using an external facilitator (EF) who helps with strategic thinking about site barriers, or combining EF with support for an on‐the‐ground internal facilitator (EF/IF) who also helps align the evidence‐based practice with site values and priorities. Although support for an internal facilitator increases the cost of facilitation, few studies have compared the effects of EF vs EF/IF on evidence‐based practice uptake.

2. Section 2

On average, among sites that were slower to implement an evidence‐based collaborative chronic care model, those stepped up to EF/IF saw slightly more uptake than those stepped up to EF alone.

Moderation analyses showed that the benefit of EF/IF over EF was most substantial for sites that had no early adoption of a collaborative chronic care model; sites with any adoption fared equally well under EF or EF/IF.

1. INTRODUCTION

Implementation scientists generally acknowledge that, in many settings, passive, low‐level implementation support, such as manualization and training, will not suffice to support adoption of mental health evidence‐based practices (EBPs). 1 , 2 , 3 However, little work to date has examined the best way to step up support for sites that are slower to implement under low‐intensity, low‐cost implementation support. This knowledge gap is particularly salient for smaller, community‐based practices that are often under‐resourced and face numerous, heterogeneous barriers to adoption of new EBPs, 4 , 5 , 6 , 7 , 8 , 9 , 10 , 11 yet nonetheless see the vast majority of behavioral health care patients. 11

Collaborative chronic care models (CCMs) have faced numerous barriers to widespread community implementation in spite of strong evidence that they improve physical and mental health outcomes for persons with mental disorders. 12 CCMs, which provide proactive care in a medical home environment, including patient self‐management support, guideline support, and care management, 12 , 13 are especially warranted for mood disorders (depression, bipolar disorder) given their association with substantial morbidity, mortality, and health care costs. 14 , 15 Continued failure to adopt CCMs in community practices leads to adverse public health impacts, notably increased medical costs and patient impairment, morbidity, and mortality. 13 , 16 , 17 , 18

Implementation strategies are theory‐based methods or techniques designed to mitigate barriers to EBP adoption, implementation, and sustainability. 19 Implementation strategies can enhance adoption of EBPs like CCMs, but can also vary significantly in their cost and intensity, 20 , 21 , 22 and evidence of their comparative effectiveness on uptake is limited, especially within smaller, community‐based settings. 1 , 11 Passive strategies (eg, EBP manualization, short‐term training) are inexpensive and easily scalable, but are not likely to be effective in addressing all organizational barriers to uptake, 1 and practices may require more intensive support. More intensive strategies, however, may impose cost or other burdens on practices that could further encumber implementation efforts.

Facilitation, or interactive strategic thinking support for providers to encourage uptake of EBPs, is an implementation strategy that has been identified as central to successful implementation efforts. 23 , 24 , 25 , 26 Facilitation has also been shown to encourage EBP uptake—including for CCMs 27 , 28 , 29 —in a variety of health care settings. 25 , 26 , 27 , 28 , 29 Facilitation is a flexible strategy wherein a facilitator with expertise in the EBP and organizational change works with providers implementing the EBP to address organizational and strategic barriers to EBP adoption, including competing priorities, leadership support, and/or resource deficits. 26 , 30 , 31 , 32

Within this flexible framework, however, different models of facilitation, of varying intensities, have been operationalized. 25 , 26 , 27 , 31 , 33 , 34 , 35 , 36 External facilitation (EF) relies on a facilitator that is external to the site and provides expert support and mentoring on both the EBP and strategic thinking. EF combined with internal facilitation (EF/IF) further adds protected time for an on‐site clinical manager to help align EBP activities with site priorities. Relative to EF alone, EF/IF is thought to better embed the EBP into clinical practice by combining external strategic support with on‐the‐ground credibility and direct access to leadership. 32 Adding IF, however, also adds burden, with respect to cost and need for identifying, training, and repurposing personnel. 34 Thus, there are open questions as to whether the added effort (and expense) of EF/IF results in higher uptake.

This question is particularly salient for practices that are slower to implement, or have failed to meet implementation benchmarks under lower‐level implementation support and require more intensive implementation support to achieve EBP implementation. Relative to EF alone, EF/IF may be necessary to overcome entrenched barriers. However, sites that have had limited success in implementing the EBP may also view IF as adding further burden to an implementation effort already misaligned with practice or provider needs. 37 This may be particularly true for practices that have had trouble engaging with the implementation effort (for example, having providers attend training or achieving any EBP adoption). At these sites, identifying and garnering support for an IF may be difficult. 38 Differences in practice size may also affect the effectiveness of EF/IF over EF, as larger community‐based practices may require dedicated on‐the‐ground support to overcome bureaucratic barriers or navigate larger administrative networks.

We examined these questions using data from the Adaptive Implementation of Effective Programs Trial (ADEPT). ADEPT, full details for which are available elsewhere, 34 was a clustered nonresponder sequential multiple assignment randomized trial (SMART) 39 , 40 , 41 designed to determine the best way to step up implementation support for community‐based practices that were slower to adopt a CCM, Life Goals (LG), under low‐level implementation support (slower implementers). As a secondary outcome of ADEPT, we analyzed the comparative effectiveness of stepping slower‐implementer sites up to EF vs EF/IF on site‐level delivery of LG over 12 months. We also examined whether this comparative effectiveness was moderated by practice size or two measures of early engagement, number of providers trained in LG and any LG adoption under the low‐level strategy. After analyses were completed, we also used task tracking data from the study EF and site‐identified IFs to explore potential mechanisms.

1.1. Hypothesis 1

Prior to analysis, we hypothesized that slower‐implementer sites initially randomized to receive EF/IF would show more uptake of the Life Goals CCM after 12 months than sites randomized to EF.

1.2. Hypothesis 2

We also hypothesized that, relative to EF alone, EF/IF would work best at slower‐implementer sites that were larger, and at sites that showed more early engagement and/or early adoption under the lower‐level support.

2. METHODS

Full details of the ADEPT study design have been published previously, 34 and the full study design is available as Figure S1. ADEPT was designed to develop an adaptive sequence of implementation strategies for encouraging uptake of LG for treating patients with depression at community‐based mental health and primary care clinics in Michigan and Colorado. Sites were eligible to participate if they had at least 100 unique patients diagnosed with depression (see Table S1 for list of ICD‐10 codes included) and could designate at least one provider with a background in mental health that could deliver individual or group LG sessions.

The study's primary outcomes, patient‐level change in mental health quality of life and depression symptoms, were published previously. 33 Those analyses, however, only included ADEPT sites that had identified at least one patient (N = 27). Analyses presented here also include a further N = 16 sites that were randomized as part of ADEPT but had not identified patients, for a total of N = 43 sites. The study was approved by local institutional review boards and prospectively registered at clinicaltrials.gov.

2.1. The Life Goals Collaborative Care Model Intervention

ADEPT was designed to encourage delivery of Life Goals (LG), an evidence‐based CCM, by existing community‐based providers. LG focuses on three core components central to effective CCMs: patient self‐management, clinical information systems, and care management, 12 , 13 and has been shown effective at improving physical and mental health outcomes for patients with bipolar and other depressive disorders in several randomized trials. 18 , 42 , 43 , 44 , 45 , 46

The ADEPT LG program provided sites with a manualized program of psychosocial sessions and self‐management tools customizable to patient needs. The LG self‐management program comprised six sessions, to be delivered in groups or to individuals, lasting 50‐90 minutes each. LG patients completed an “Introduction” module first and a “Managing Your Care” session last; the four remaining sessions were chosen among a number of available mental health and wellness topics, including depression, anxiety, mania, physical activity, sleep, and substance abuse (Table S2). LG‐trained providers, who were predominantly clinical social workers, were encouraged to tailor content delivery to individual and/or group needs. ADEPT sites were also provided with manualized implementation support for patient care management and provider decision support, including a template for tracking patient progress and a guide to common medications used for mood disorders; however, ADEPT’s primary implementation target was encouraging delivery of LG sessions to patients in need.

2.2. Study design and implementation strategies

2.2.1. Run‐in phase

ADEPT was designed to compare the effectiveness of two augmentations to initial, low‐level implementation support for sites that had not met prespecified implementation benchmarks after the six‐month run‐in period. During this run‐in phase, sites received support informed by Replicating Effective Programs (REP). REP, based on the Centers for Disease Control's Research‐to‐Practice framework, 47 , 48 , 49 is derived from Social Learning Theory 50 and Rogers’ Diffusion of Innovation models. 51 For ADEPT, REP provided sites with an LG manual, secure access to a website with LG materials, a one‐day didactic training for providers and supervisors that covered LG content and delivery in both individual and group formats, and information on customizing program delivery (eg, tailoring modules to patient needs) and program advertising. 1 , 49 , 52 Technical assistance was also provided through quarterly newsletters, regular conference calls, and monthly progress reports. As part of the implementation effort, sites were asked to report two metrics of implementation progress to the technical assistant each month: (1) the number of patients that had completed any LG sessions; and (2) the number of patients that had completed a clinically significant dose of LG, defined as ≥3 sessions.

2.2.2. Identification and randomization of “slower‐implementer” sites for trial participation

After six months of support under REP, sites’ LG implementation was evaluated. Sites reporting LG delivery to ≥10 patients with ≥50% completing ≥3 sessions were considered early implementers and did not enter the trial. Sites that failed to reach at least one of these benchmarks (for example, delivering LG to <10 patients, or having <50% of patients receive a clinically significant dose) were considered slower implementers and entered the trial. This cutoff was based on prior work showing that sites that had not reached this level of implementation after six months of low‐level support were unlikely to achieve meaningful implementation with fidelity (ie, to adequate dose) without additional support. 33 , 53 , 54
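The benchmark rule can be expressed as a short check. The following is a minimal sketch, assuming each site reports two month‐six counts; the class and field names are hypothetical and are not taken from the ADEPT protocol.

```python
from dataclasses import dataclass


@dataclass
class SiteReport:
    """Month-six counts reported by one site (field names are hypothetical)."""
    patients_any_lg: int  # patients who completed any LG session
    patients_3plus: int   # patients who completed >= 3 LG sessions


def is_early_implementer(report: SiteReport,
                         min_patients: int = 10,
                         min_dose_fraction: float = 0.5) -> bool:
    """Return True only when BOTH benchmarks are met.

    A site that misses either benchmark is treated as a slower implementer.
    """
    if report.patients_any_lg < min_patients:
        return False
    dose_fraction = report.patients_3plus / report.patients_any_lg
    return dose_fraction >= min_dose_fraction


def is_slower_implementer(report: SiteReport) -> bool:
    """Slower implementers are simply the sites that are not early implementers."""
    return not is_early_implementer(report)
```

Under this reading, a site with 12 LG patients of whom 5 completed ≥3 sessions would enter the trial, because the dose benchmark is missed even though the patient‐count benchmark is met.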

At month six, slower‐implementer sites were randomized to step up their support by adding either EF only or EF/IF. Randomization was stratified by state (Colorado or Michigan), practice type (primary care or mental health), and site‐averaged patient mental health quality of life (MH‐QOL, measured by the SF‐12 55 ; three categories: low [<40]; high [≥40]; no patients listed). The study analyst used SAS to generate stratified permuted‐block random allocation lists, and the study EF communicated intervention assignment during initial outreach.
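For readers unfamiliar with stratified permuted‐block randomization, the idea can be sketched as follows. This is an illustration only: the study lists were generated in SAS, and the block size, seed, and function name here are assumptions rather than details reported for ADEPT.

```python
import random
from collections import defaultdict


def permuted_block_allocation(sites, arms=("EF", "EF/IF"), block_size=2, seed=0):
    """Assign each site to an arm using permuted blocks within each stratum.

    `sites` is a list of (site_id, stratum) pairs, where stratum is a tuple
    such as (state, practice_type, mhqol_category). Block size and seed are
    illustrative assumptions.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)

    # Group sites by stratum, preserving enrollment order within each stratum.
    by_stratum = defaultdict(list)
    for site_id, stratum in sites:
        by_stratum[stratum].append(site_id)

    allocation = {}
    for stratum in sorted(by_stratum):
        pending = []  # assignments still unused in the current block
        for site_id in by_stratum[stratum]:
            if not pending:
                # Start a new block with equal numbers of each arm, shuffled.
                pending = list(arms) * (block_size // len(arms))
                rng.shuffle(pending)
            allocation[site_id] = pending.pop()
    return allocation
```

Blocking within strata keeps the arms balanced on the stratification variables even when some strata contain only a handful of sites.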

2.2.3. Facilitation strategies: external facilitation vs external + internal facilitation

All slower‐implementer sites received support from the study EF, a licensed clinical social worker that received training through the Behavioral Health QUERI implementation facilitation program 31 , 56 and had extensive experience delivering mental health EBPs in clinical settings. The EF, who was study‐funded, mentored sites in addressing barriers to EBP adoption through a minimum of 12 semi‐structured biweekly calls with each site. Table S3 provides an overview of the general EF and EF/IF facilitation processes, as well as the different operationalized implementation strategies 20 that facilitators might use during each phase.

Sites that stepped up immediately to EF/IF supplemented EF with protected time for a clinical manager identified by the site to serve as IF, or the on‐the‐ground liaison for the EF, addressing implementation barriers or needs and helping to entrench site adoption of LG by, for example, aligning LG with provider and/or leadership priorities. 31 , 57 Sites randomized to EF/IF were offered $5500 for each six‐month period of EF/IF (up to $11 000 total) to protect time for the IF to work on LG implementation. This amount was based on previous research that estimated per‐site costs of deploying IF. 58 IF identification started at training, with providers from all sites asked to identify one or more individuals at their site that could generally “get things done.” The EF then worked with sites randomized to EF/IF to select and train the IF. IFs were expected to participate in biweekly calls with the EF for a minimum of 12 weeks in addition to performing their on‐site implementation duties.

2.2.4. Facilitation tracking data

Due to concerns about cost and burden on the smaller community practices being studied, a formal process evaluation was not conducted as part of the ADEPT study. However, as part of their study duties, facilitators (EF and site IFs) were asked to track the time and content of their facilitation work using a short tracking form that asked for the date, contact type (phone call, email, etc), time spent, other personnel involved, and primary activity type for each facilitation task (Figure S2). Activity type was coded as one of 10 standardized categories developed for tracking facilitator duties. 34 EF files also recorded whether and when sites opted to discontinue facilitation, ending all EF (and, as applicable, IF) activity.

3. ANALYSES

Our intent‐to‐treat analytical sample included all slower‐implementer sites randomized after the six‐month run‐in phase. Descriptive statistics and bivariate analyses compared characteristics for sites stepped up to EF and EF/IF. Mixed‐effects models were used to compare slower‐implementer sites randomized to the initial EF augmentation vs EF/IF on longitudinal change in the site‐level number of patients receiving any LG and the number of patients who received ≥3 LG sessions in the 12 months postrandomization. These outcomes were reported by sites monthly from the start of the run‐in period through 12 months postrandomization. When months were not reported, the prior month's numbers were carried forward. All sites provided baseline and 12‐month numbers.
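The carry‐forward rule for unreported months can be illustrated with a small helper. This is a sketch under the assumption that a missing report is represented as `None`; the function name is hypothetical.

```python
from typing import List, Optional, Sequence


def carry_forward(monthly_counts: Sequence[Optional[int]]) -> List[Optional[int]]:
    """Fill unreported months (None) with the most recent reported count.

    Months before the first report stay None, since there is nothing to carry.
    """
    filled: List[Optional[int]] = []
    last: Optional[int] = None
    for value in monthly_counts:
        if value is not None:
            last = value
        filled.append(last)
    return filled


# Example: months 1, 3, and 4 were not reported.
print(carry_forward([0, None, 2, None, None, 5]))  # [0, 0, 2, 2, 2, 5]
```

Because the outcome is a cumulative count of patients, carrying the last report forward is conservative: it assumes no new patients in an unreported month.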

3.1. Main effect analyses

The primary contrast for both outcomes was the between‐groups difference in site change over the 12‐month period. The unit of analysis was the site and all outcomes are site‐level (see Smith et al, 2019 for patient‐level results 33 ). Fixed effects were included for the intercept, time (in months), and a group‐by‐time interaction, where group was an indicator of initial randomization to EF vs EF/IF augmentation. Models also included a random effect for site and were adjusted for state, practice type, site‐aggregated MH‐QOL stratum, and site size (number of patients, logged to account for strong positive skew).
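Written out, the model described above takes roughly the following form. This is a sketch reconstructed from the verbal description: the notation is illustrative, and it assumes that time enters linearly and that the site random effect is a random intercept, neither of which is stated explicitly.

```latex
Y_{it} = \beta_0 + \beta_1 t + \beta_2 \,(G_i \times t)
       + \boldsymbol{\gamma}^{\top}\mathbf{X}_i + u_i + \varepsilon_{it},
\qquad u_i \sim N(0, \sigma_u^2), \quad \varepsilon_{it} \sim N(0, \sigma^2)
```

Here $Y_{it}$ is the number of patients reported by site $i$ at month $t$, $G_i$ equals 1 for EF/IF and 0 for EF, and $\mathbf{X}_i$ holds the adjustment covariates (state, practice type, MH‐QOL stratum, and logged site size). Under these assumptions the primary contrast, the between‐groups difference in change over 12 months, is $12\,\beta_2$.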

3.2. Moderator analyses

Moderator analyses examined whether the comparative effectiveness of initial augmentation with EF vs EF/IF varied significantly by (a) site size, as defined above; (b) number of providers that received LG training during the run‐in period; and/or (c) whether sites showed any adoption of LG during the run‐in phase (adopters vs nonadopters). Moderator models followed the same structure as above but added a three‐way interaction between the moderator of interest, group, and time, as well as any necessary lower‐order terms.
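One plausible form of the moderator model is sketched below. The notation is illustrative, and the particular set of lower‐order terms shown is an assumption, since the text does not enumerate them.

```latex
Y_{it} = \beta_0 + \beta_1 t + \beta_2 \,(G_i \times t)
       + \beta_3 M_i + \beta_4 \,(M_i \times t)
       + \beta_5 \,(M_i \times G_i \times t)
       + \boldsymbol{\gamma}^{\top}\mathbf{X}_i + u_i + \varepsilon_{it}
```

With $M_i$ denoting the moderator (for example, 1 for nonadopter sites and 0 for adopter sites) and time again assumed linear, the EF/IF vs EF difference in 12‐month change is $12\,(\beta_2 + \beta_5 M_i)$, so $\beta_5$ captures how much the comparative effect differs across levels of the moderator.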

3.3. Exploratory facilitation analyses

Key metrics from site‐level tracking files for the study EF and all site IFs were summarized and combined. Descriptive statistics examined variability in total time spent by study EF and site IFs across sites. Bivariate analyses examined how total EF and IF time and facilitation discontinuation differed by treatment arm and moderators of interest, as informed by significant model findings.

All analyses were performed in Stata version 15.1, with criteria for statistical significance set at alpha = 0.05.

4. RESULTS

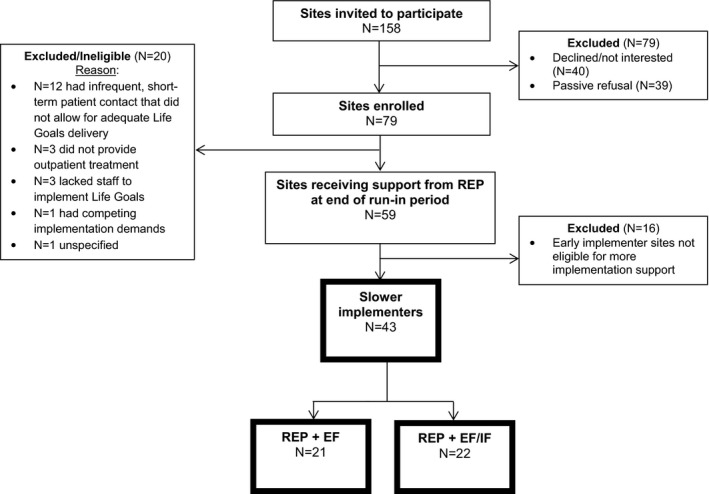

Seventy‐nine sites received support through REP for implementing LG. During the prerandomization run‐in phase, N = 20 dropped or were deemed ineligible for participation, leaving N = 59 sites. After the six‐month run‐in phase, N = 16 were designated as early implementers, leaving N = 43 slower‐implementer sites to be randomized for ADEPT. N = 21 sites were randomized to begin EF and N = 22 EF/IF (see Figure 1 for full study flow diagram). Sites were predominantly community mental health and rural, and slightly more were in Colorado than Michigan (Table 1). Median site size was 1000 patients (interquartile range (IQR): 400‐4000). Sites trained an average of 2.8 providers (range: 1‐8) and N = 22 (51%) failed to adopt any LG in the prerandomization run‐in period (ie, were nonadopters).

FIGURE 1.

Full study flow diagram. Note: EF = External Facilitation; EF/IF = External + Internal Facilitation. All analyses examined the N = 43 sites that were deemed slower implementers after the six‐month run‐in period and randomized to either EF or EF/IF, shown in boxes with dark outline.

TABLE 1.

Descriptive statistics for sites, overall and by treatment

| Characteristic | Overall (N = 43) | EF (N = 21) | EF/IF (N = 22) | P‐value |
|---|---|---|---|---|
| Demographics | | | | |
| Michigan (vs Colorado), N (%) | 17 (40%) | 8 (38%) | 9 (41%) | .850 |
| Community Mental Health (vs Primary Care), N (%) | 37 (86%) | 18 (86%) | 19 (86%) | .951 |
| Number of patients (Mean, SD) | 3891 (15 124) | 6301 (21 500) | 1589 (2418) | .313 |
| Rural (vs urban/suburban), N (%) | 24 (56%) | 10 (48%) | 14 (64%) | .290 |
| Prerandomization (baseline) implementation outcomes | | | | |
| Number of providers trained (Mean, SD) | 2.8 (1.6) | 2.38 (1.02) | 3.23 (3.54) | .087 |
| Number of patients receiving Life Goals (Mean, SD) | 3.05 (4.61) | 3.33 (5.37) | 2.77 (3.85) | .695 |
| Number of patients receiving ≥ 3 sessions of Life Goals (Mean, SD) | 1.63 (2.48) | 1.62 (2.31) | 1.64 (2.68) | .982 |
| Adopter sites (vs nonadopters), N (%) | 21 (49%) | 11 (52%) | 10 (45%) | .650 |
| End‐of‐study implementation outcomes | | | | |
| Number of patients receiving any Life Goals (Mean, SD) | 7.93 (11.40) | 6.52 (8.61) | 9.27 (13.62) | N/A |
| Number of patients receiving ≥ 3 sessions of Life Goals (Mean, SD) | 4.86 (7.46) | 3.81 (4.74) | 5.86 (9.37) | N/A |

Adopter status refers to whether site reported delivering Life Goals to ≥ 1 patient prior to randomization.

Abbreviations: EF, External Facilitation; EF/IF, External + Internal Facilitation; SD, standard deviation.

The study EF documented facilitation tasks for all 43 sites (median tasks = 23; range = 7‐50). Of the N = 22 EF/IF sites, N = 14 (64%) successfully identified an IF and had an IF record at least one task (median = 24; range = 4‐55). N = 12 sites discontinued facilitation during the 12‐month study, including N = 7 EF/IF and N = 5 EF sites; however, all sites remained enrolled in the study, reported study outcomes, and were included in analyses.

4.1. Main effects

On average, sites delivered LG to 4.9 additional patients (3.2 patients with ≥3 sessions) over the 12 months postrandomization; however, this distribution was highly skewed (median = 0; range = 0‐64). N = 14 sites (32.5%) failed to deliver LG to any patients by study end.

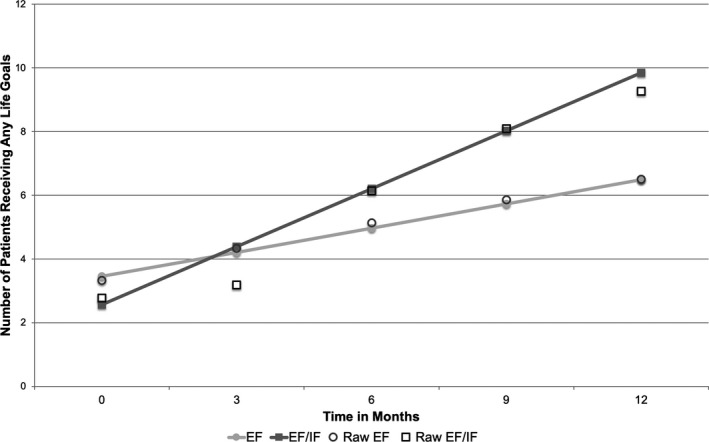

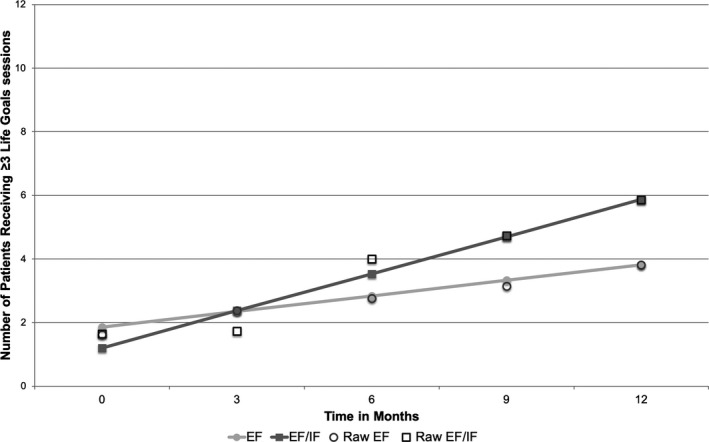

As we hypothesized, overall, sites that stepped up immediately to EF/IF saw more uptake after 12 months than sites stepped up to EF. EF sites had an average increase of 3.2 LG patients (median: 0; range: 0‐22) compared to 6.5 patients at EF/IF sites (median: 0.5; range: 0‐64). Adjusted models predicted that EF/IF resulted in an additional 4.37 patients receiving LG over 12 months relative to EF sites (95% CI = 1.87‐6.87, P = .001) (Figure 2). Similar results were found for change in LG patients receiving ≥3 sessions; adjusted models found EF/IF resulted in an average of 2.70 more patients receiving ≥3 sessions relative to EF sites (95% CI = 0.99‐4.48, P = .002) (Figure 3). Full model results are shown in Table S4.

FIGURE 2.

Longitudinal change in site reports of number of patients receiving any Life Goals, by treatment arm (Site N = 43). Note: EF = External Facilitation; EF/IF = External + Internal Facilitation. Site‐level data on patient receipt of Life Goals were reported monthly by each site; predictions and raw data are shown for months 0, 3, 6, 9, and 12 after randomization.

FIGURE 3.

Longitudinal change in site reports of number of patients receiving ≥ 3 Life Goals sessions, by treatment arm (Site N = 43). Note: EF = External Facilitation; EF/IF = External + Internal Facilitation. Site‐level data on patient receipt of Life Goals were reported monthly by each site; predictions and raw data are shown for months 0, 3, 6, 9, and 12 after randomization.

4.2. Moderator analyses

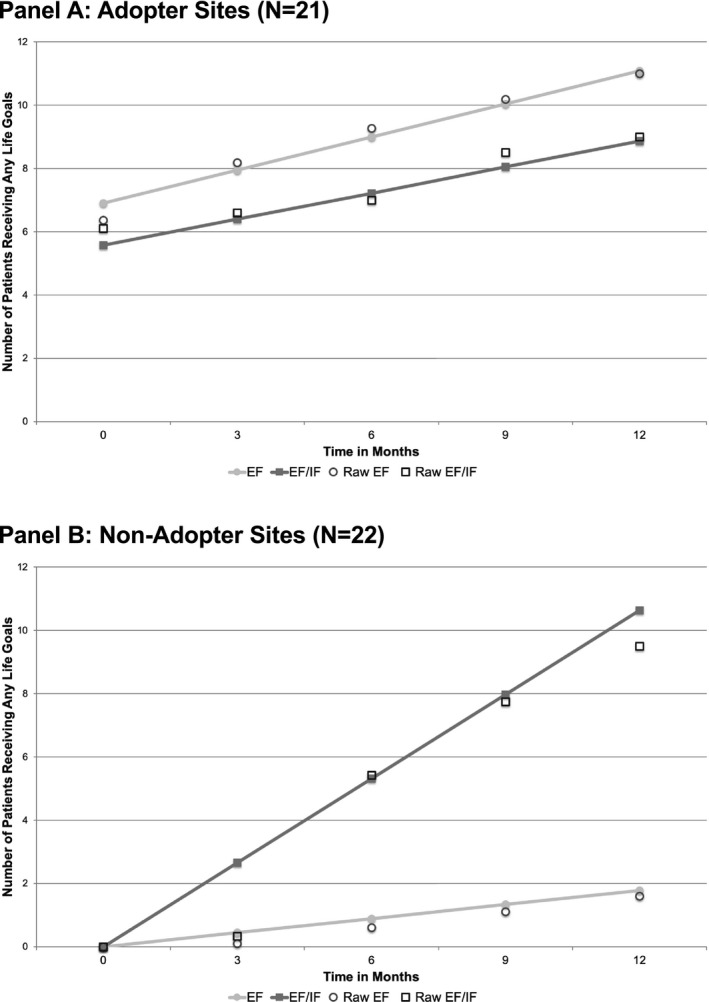

Moderator analyses confirmed our hypothesis that the comparative benefit of EF/IF over EF differed by site characteristics, but not in the expected direction. Rather, sites that had not delivered any LG under REP during the run‐in phase (nonadopter sites) saw significantly more benefit from EF/IF over EF than sites that had delivered LG (adopter sites). For nonadopter sites, EF/IF resulted in, on average, an additional 11 patients receiving LG after 12 months compared to 1.8 additional patients under EF, a difference of 9.2 patients (95% CI: 5.72, 12.63; P < .001) (Figure 4, Panel B). At adopter sites, however, EF resulted in an average increase of 4.2 LG patients, compared to 3.3 patients under EF/IF, or an advantage of 0.9 more patients for EF (95% CI: −4.40, 2.60; P = .62) (Figure 4, Panel A). Similar results were found for change in LG patients receiving ≥3 sessions. For nonadopter sites, EF/IF yielded, on average, 6.1 more patients than EF after 12 months, while for adopter sites EF yielded 0.9 more patients than EF/IF.
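As a rough consistency check on the reported intervals, a standard error and two‐sided P‐value can be back‐calculated from each estimate and its 95% CI. The sketch below assumes a normal (Wald) approximation; the fitted mixed models may have used a different reference distribution, so small discrepancies from the reported P‐values are expected. The function name is hypothetical.

```python
import math


def wald_from_ci(estimate: float, lower: float, upper: float,
                 z_crit: float = 1.959964):
    """Back-calculate SE, z, and a two-sided normal P-value from a 95% CI."""
    se = (upper - lower) / (2 * z_crit)
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail area under N(0, 1)
    return se, z, p


# Nonadopter sites: EF/IF minus EF = 9.2 (95% CI: 5.72, 12.63)
se_non, z_non, p_non = wald_from_ci(9.2, 5.72, 12.63)

# Adopter sites: EF/IF minus EF = -0.9 (95% CI: -4.40, 2.60)
se_adp, z_adp, p_adp = wald_from_ci(-0.9, -4.40, 2.60)

print(f"nonadopters: SE={se_non:.2f}, z={z_non:.2f}, p={p_non:.2g}")
print(f"adopters:    SE={se_adp:.2f}, z={z_adp:.2f}, p={p_adp:.2f}")
```

Under this approximation the nonadopter contrast sits more than five standard errors from zero, while the adopter contrast sits about half a standard error from zero, in line with the reported P < .001 and P = .62.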

FIGURE 4.

Longitudinal change in site reports of number of patients receiving any Life Goals sessions, by treatment arm and adopter status (Site N = 43). Note: EF = External Facilitation; EF/IF = External + Internal Facilitation. Site‐level data on patient receipt of Life Goals were reported monthly by each site; predictions and raw data are shown for months 0, 3, 6, 9, and 12 after randomization. Adopter status refers to whether the site reported delivering Life Goals to ≥1 patient prior to randomization.

Neither providers trained nor site size were significant moderators of either outcome. Full moderator model results are shown in Table S5A‐C.

4.3. Sensitivity analyses

One EF/IF site saw much higher uptake during the 12‐month period, offering LG to an additional 64 patients by the end of the study (vs an average of 3.5 additional patients for all other sites). As this site was also a nonadopter, we re‐ran main effect and adopter/nonadopter moderation models excluding this site to test whether it was driving all results. All models retained significant (though smaller magnitude) end‐of‐study differences in predicted uptake.

4.4. Exploratory facilitation analyses

The study EF recorded a median of 5.4 hours at EF sites and 5.1 hours at EF/IF sites (P = .88), and time spent did not differ significantly by adopter status (P = .88). Facilitation discontinuation patterns, however, did vary by adopter status. Although overall more EF/IF sites discontinued facilitation, among nonadopters discontinuation was more prevalent at EF (4/10) than EF/IF sites (3/12). Adopter sites, however, saw more facilitation discontinuation at EF/IF sites (4/10) than EF sites (1/11).

IF logs revealed that nonadopter EF/IF sites were more likely to successfully identify an IF (8/12) than adopter sites (5/10). Once identified, IFs at nonadopter sites also logged more facilitation time (8.3 hours) than at adopter sites (5.2 hours).

5. DISCUSSION

These secondary analyses of the ADEPT study sought to examine, for sites that were not able to successfully implement the Life Goals CCM under low‐level implementation support, whether augmenting this support with more intensive and expensive EF/IF resulted in more LG delivery after 12 months than augmentation with less expensive and intensive EF alone. In line with our first hypothesis, main effect analyses revealed that EF/IF did outperform EF for both outcomes of interest. However, these differences were not large, with EF/IF yielding just over four additional patients receiving any LG (three receiving a clinically significant dose) over 12 months, for an additional cost of $11 000 per site, which raises questions about taking EF/IF to full scale.
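The scale of this trade‐off can be made concrete with simple arithmetic. The sketch below is illustrative only: it assumes a site draws the full $11 000 IF payment, ignores the cost of the study‐funded EF, and uses the adjusted model estimates as the number of additional patients. It is not a formal cost‐effectiveness analysis.

```python
def cost_per_additional_patient(if_payment: float, additional_patients: float) -> float:
    """Incremental IF payment divided by incremental patients reached (EF/IF vs EF)."""
    if additional_patients <= 0:
        raise ValueError("additional_patients must be positive")
    return if_payment / additional_patients


IF_PAYMENT = 11_000.0  # maximum IF support offered per site over 12 months

# Overall adjusted difference: 4.37 additional patients receiving any LG.
overall = cost_per_additional_patient(IF_PAYMENT, 4.37)

# Nonadopter sites only: 9.2 additional patients receiving any LG.
nonadopters = cost_per_additional_patient(IF_PAYMENT, 9.2)

print(f"overall:     ${overall:,.0f} per additional patient")
print(f"nonadopters: ${nonadopters:,.0f} per additional patient")
```

On these assumptions, the incremental payment per additional patient is roughly halved when EF/IF is targeted at nonadopter sites.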

Our moderator analyses, however, helped to pinpoint which slow‐implementer sites most benefitted from stepping up to EF/IF vs EF. In particular, we found that sites that failed to deliver any LG during the prerandomization run‐in phase (nonadopter sites) improved significantly more under EF/IF than EF; at adopter sites, where one or more providers had delivered LG, however, there was no evidence of a difference in outcomes, on average, of EF/IF over EF alone.

These findings build on our prior patient‐level findings which showed that, contrary to hypotheses, patients at slow‐implementer sites randomized to augment with EF saw significantly more improvement in their mental health quality of life and depression scores than patients at sites randomized to EF/IF. Analyses presented here, however, include an additional N = 16 sites that were randomized as part of ADEPT but did not identify patients and thus could not be included in patient‐level analyses. N = 13 (81%) of these sites were also nonadopters, which likely explains the different main effect findings of these two analyses.

5.1. Early adoption as a moderator of implementation strategy effectiveness

Facilitation has been an effective and oft‐used implementation strategy, especially for CCMs. While specific mechanisms of facilitation effectiveness are still largely unknown, 26 , 59 facilitation is thought to be effective because facilitators have a diverse array of skills that allow for flexibility in accommodating site needs as they change over time and encouraging organizational learning. 30 , 59 , 60 With notable exceptions, 61 , 62 , 63 few studies have compared the effectiveness of different forms of facilitation, or examined whether this comparative effectiveness is moderated by organizational characteristics or earlier implementation progress. Our results, however, suggested that sites that were further along in the implementation process benefitted differently, and in fact less, from more intensive EF/IF than sites that were further behind. Indeed, it was only for sites that had not seen any LG adoption that we found evidence of a positive effect of EF/IF over EF alone.

Of course, the more interesting question is why these differences emerged; while our process data for this study were limited, facilitation tracking data suggested some potential mechanisms, particularly for our adopter/nonadopter moderation finding that ran contrary to our hypothesis that sites with more early engagement or adoption would benefit more from the more intensive EF/IF augmentation. Acknowledging the additional burden of identifying and supporting an IF, as well as prior evidence that IF can be difficult to scale up with fidelity, 33 , 61 we hypothesized that sites that had any LG adoption might have more program buy‐in and be more willing to trade off the additional burden for increased program adoption. Rather, our exploratory findings suggested the opposite—adopter sites were more likely to end engagement with facilitation under EF/IF and also had less success identifying an IF than nonadopter sites. This suggests the additional burden of IF may have been viewed differently by adopter vs nonadopter sites, and that perhaps adopter sites—where one or more providers were delivering the intervention—viewed the addition of new, nonprovider personnel spearheading further adoption as unnecessary. From an organizational perspective, adding an IF to liaise for an EBP that providers may have already had ownership of may have even spurred role conflict or ambiguity, 64 , 65 , 66 especially if providers delivering LG were already filling designated IF roles, such as advocating for LG with leadership. Alternatively, given that these were slower‐implementer sites, prerandomization use at these sites could also indicate that providers had tried LG and found it to be a poor fit, in which case sites may have seen little benefit in taking on the burden of identifying an IF, or even continuing the facilitation process. 
While our limited process data could not confirm these mechanisms, the higher rates of facilitation discontinuation and lower IF activity at adopter sites are consistent with them. Conversely, at nonadopter sites, where providers had not delivered LG, facilitation data suggested that adding an IF may have protected against dropout; nonadopter IFs also logged nearly 60% more IF time than adopter IFs. These findings suggest that at nonadopter sites, offering support for an IF may have resulted in newly invested personnel who could revive interest in and/or rally new support for LG, even six months following its initial introduction.

5.2. Limitations

This study had several important limitations. Most notably, LG uptake outcomes were site reported and may be subject to reporting bias; however, because outcomes were collected by the technical assistant, who was unrelated to EF or IF, any such bias is unlikely to be associated with treatment. Second, we lacked data on LG implementation fidelity beyond patient receipt of a clinically significant dose; we also did not collect outcomes related to the implementation of the clinical decision support or care management components of LG. Third, given the lack of harmonized data collection systems across our community‐based sites, as well as concerns about study burden, we collected only limited organizational and formal process evaluation data, including data on the characteristics of site IFs where applicable. As such, it is possible that the adopter/nonadopter moderation effect was a proxy for other unmeasured differences, such as leadership engagement or organizational climate, or for barriers to adoption that were better addressed by IF. Finally, the facilitation task data used in our exploratory analyses were self‐reported by study and site facilitators and may likewise be subject to reporting bias.

5.3. When was EF/IF better for slower implementers?

Given the multitude and complexity of factors influencing EBP implementation, implementation efforts have frequently employed more complex implementation strategies, or assumed that multifaceted forms of implementation support were necessary for success, in spite of their added cost and burden. 67 , 68 , 69 , 70 In line with other studies showing that more complex implementation interventions are not necessarily more effective, 61 , 67 our study found that, for sites that were slower to implement under low‐level implementation support, stepping up to the more intensive strategy (EF/IF) was not always more effective than stepping up to a lower‐intensity strategy (EF). Rather, evidence of additional effectiveness was found only for those sites that showed no adoption of the EBP during the run‐in period; at sites where providers were delivering or had already delivered LG, no evidence of a benefit was found. This suggests that, in a resource‐constrained environment that aims to step up support for sites that are slower to implement, sites with no prior EBP adoption should be prioritized to step up to EF/IF, while sites with prior adoption could receive either EF or EF/IF.

These findings also suggest that offering more intensive implementation support may only be more effective when it aligns with current implementation progress and barriers to implementation, and when the potential benefits of the additional resources proffered are neither duplicative of existing efforts (eg, providers already implementing the EBP) nor offset by the additional burden of implementing that support. Of course, our study only speaks to the comparative effectiveness of EF/IF vs EF for slower‐implementer sites; it is quite possible (and indeed, our study provides some preliminary support for the idea) that had EF/IF been introduced earlier at these sites, it might have led to a different outcome.

As the number, intensity, and cost of implementation strategies continue to grow, implementation scientists and practitioners have increasing opportunities to tailor implementation strategy provision to current site needs, including current implementation success or engagement. Understanding how dynamic implementation efforts may alter the mechanisms or effectiveness of potential implementation strategies offers a promising and exciting opportunity for furthering both implementation science and success. Further work should explore how the support offered by different implementation strategies, and particularly its perceived potential benefit and/or burden, may be viewed differently by different sites at different stages of the implementation process, and should clarify when more intensive support is also likely to be more effective support.

Supporting information

Author matrix

Appendix S1

ACKNOWLEDGMENT

Joint Acknowledgment/Disclosure Statement: This work was supported by the National Institute of Mental Health [R01MH099898]. We thank Dr Daniel Almirall (University of Michigan, Ann Arbor) for his contributions to the design of the study and interpretation of the results. Dr Bauer receives royalties for publications related to Life Goals from Springer and New Harbinger publishing companies. No other disclosures.

Smith SN, Liebrecht CM, Bauer MS, Kilbourne AM. Comparative effectiveness of external vs blended facilitation on collaborative care model implementation in slow‐implementer community practices. Health Serv Res. 2020;55:954–965. doi:10.1111/1475-6773.13583

REFERENCES

- 1. Kilbourne AM, Abraham KM, Goodrich DE, et al. Cluster randomized adaptive implementation trial comparing a standard versus enhanced implementation intervention to improve uptake of an effective re‐engagement program for patients with serious mental illness. Implement Sci. 2013;8:136. doi:10.1186/1748-5908-8-136

- 2. Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82(4):581‐629.

- 3. Fixsen DL, Naoom SF, Blase KA, et al. Implementation research: a synthesis of the literature. Published online 2005.

- 4. Roy‐Byrne PP, Sherbourne CD, Craske MG, et al. Moving treatment research from clinical trials to the real world. Psychiatr Serv. 2003;54(3):327‐332. doi:10.1176/appi.ps.54.3.327

- 5. Kilbourne AM, Schulberg HC, Post EP, Rollman BL, Belnap BH, Pincus HA. Translating evidence‐based depression management services to community‐based primary care practices. Milbank Q. 2004;82(4):631‐659. doi:10.1111/j.0887-378X.2004.00326.x

- 6. Wells K, Miranda J, Bruce ML, Alegria M, Wallerstein N. Bridging community intervention and mental health services research. Am J Psychiatry. 2004;161(6):955‐963. doi:10.1176/appi.ajp.161.6.955

- 7. Hovmand PS, Gillespie DF. Implementation of evidence‐based practice and organizational performance. J Behav Health Serv Res. 2010;37(1):79‐94. doi:10.1007/s11414-008-9154-y

- 8. Goodman RM, Steckler A, Kegler MC. Mobilizing organizations for health enhancement: theories of organizational change. Health Behav Health Educ Theory Res Pract. 1997;2:287‐312.

- 9. Schutte K, Yano EM, Kilbourne AM, Wickrama B, Kirchner JE, Humphreys K. Organizational contexts of primary care approaches for managing problem drinking. J Subst Abuse Treat. 2009;36(4):435‐445.

- 10. Insel TR. Translating scientific opportunity into public health impact: a strategic plan for research on mental illness. Arch Gen Psychiatry. 2009;66(2):128‐133.

- 11. Bauer MS, Deane Leader HU, Lai Z, Kilbourne AM. Primary care and behavioral health practice size: the challenge for healthcare reform. Med Care. 2012;50(10):843.

- 12. Woltmann E, Grogan‐Kaylor A, Perron B, Georges H, Kilbourne AM, Bauer MS. Comparative effectiveness of collaborative chronic care models for mental health conditions across primary, specialty, and behavioral health care settings: systematic review and meta‐analysis. Am J Psychiatry. 2012;169(8):790‐804.

- 13. Miller CJ, Grogan‐Kaylor A, Perron BE, Kilbourne AM, Woltmann E, Bauer MS. Collaborative chronic care models for mental health conditions: cumulative meta‐analysis and meta‐regression to guide future research and implementation. Med Care. 2013;51(10):922.

- 14. Kessler RC, Wang PS. The descriptive epidemiology of commonly occurring mental disorders in the United States. Annu Rev Public Health. 2008;29:115‐129. doi:10.1146/annurev.publhealth.29.020907.090847

- 15. Parks J, Svendsen D, Singer P, Foti ME, Mauer B. Morbidity and mortality in people with serious mental illness. Alex VA Natl Assoc State Ment Health Program Dir NASMHPD Med Dir Counc. 2006;25(4):1‐87.

- 16. Katon W. Collaborative depression care models: from development to dissemination. Am J Prev Med. 2012;42(5):550‐552. doi:10.1016/j.amepre.2012.01.017

- 17. Katon W, Unützer J. Collaborative care models for depression: time to move from evidence to practice. Arch Intern Med. 2006;166(21):2304‐2306. doi:10.1001/archinte.166.21.2304

- 18. Bauer MS, McBride L, Williford WO, et al. Collaborative care for bipolar disorder: Part II. Impact on clinical outcome, function, and costs. Psychiatr Serv. 2006;57(7):937‐945.

- 19. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8(1):139.

- 20. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):21.

- 21. Waltz TJ, Powell BJ, Matthieu MM, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci. 2015;10(1):109.

- 22. Hoomans T, Severens JL. Economic evaluation of implementation strategies in health care. BioMed Central. 2014;9:168.

- 23. Stetler CB, Damschroder LJ, Helfrich CD, Hagedorn HJ. A Guide for applying a revised version of the PARIHS framework for implementation. Implement Sci. 2011;6:99. doi:10.1186/1748-5908-6-99

- 24. Kitson AL, Rycroft‐Malone J, Harvey G, McCormack B, Seers K, Titchen A. Evaluating the successful implementation of evidence into practice using the PARiHS framework: theoretical and practical challenges. Implement Sci. 2008;3:1. doi:10.1186/1748-5908-3-1

- 25. Harvey G, Loftus‐Hills A, Rycroft‐Malone J, et al. Getting evidence into practice: the role and function of facilitation. J Adv Nurs. 2002;37(6):577‐588.

- 26. Stetler CB, Legro MW, Rycroft‐Malone J, et al. Role of “external facilitation” in implementation of research findings: a qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implement Sci. 2006;1:23. doi:10.1186/1748-5908-1-23

- 27. Kirchner J, Edlund CN, Henderson K, Daily L, Parker LE, Fortney JC. Using a multi‐level approach to implement a primary care mental health (PCMH) program. Fam Syst Health. 2010;28(2):161.

- 28. Bauer MS, Miller CJ, Kim B, et al. Effectiveness of implementing a collaborative chronic care model for clinician teams on patient outcomes and health status in mental health: a randomized clinical trial. JAMA Netw Open. 2019;2(3):e190230.

- 29. Solberg LI, Crain AL, Jaeckels N, et al. The DIAMOND initiative: implementing collaborative care for depression in 75 primary care clinics. Implement Sci. 2013;8(1):135.

- 30. Dogherty EJ, Harrison MB, Graham ID. Facilitation as a role and process in achieving evidence‐based practice in nursing: A focused review of concept and meaning. Worldviews Evidence‐Based Nurs. 2010;7(2):76‐89.

- 31. Kirchner JE, Ritchie MJ, Dollar KM, Gundlach P, Smith JL. Implementation facilitation training manual: Using external and internal facilitation to improve care in the Veterans Health Administration. Published online 2014.

- 32. Ritchie MJ, Parker LE, Edlund CN, Kirchner JE. Using implementation facilitation to foster clinical practice quality and adherence to evidence in challenged settings: a qualitative study. BMC Health Serv Res. 2017;17(1):294.

- 33. Smith SN, Almirall D, Prenovost K, et al. Change in patient outcomes after augmenting a low‐level implementation strategy in community practices that are slow to adopt a Collaborative Chronic Care Model. Med Care. 2019;57(7):503‐511.

- 34. Kilbourne AM, Almirall D, Eisenberg D, et al. Protocol: Adaptive Implementation of Effective Programs Trial (ADEPT): cluster randomized SMART trial comparing a standard versus enhanced implementation strategy to improve outcomes of a mood disorders program. Implement Sci. 2014;9:132. doi:10.1186/s13012-014-0132-x

- 35. Knox L, Brach C. The practice facilitation handbook: training modules for new facilitators and their trainers. Rockv MD Agency Healthc Res Policy. Published online 2013; 131‐144.

- 36. Liddy C, Laferriere D, Baskerville B, Dahrouge S, Knox L, Hogg W. An overview of practice facilitation programs in Canada: current perspectives and future directions. Healthc Policy. 2013;8(3):58.

- 37. Stewart RE, Beidas RS, Mandell DS. Stop calling them laggards: strategies for encouraging nonadopters to incorporate evidence‐based practices. Psychiatr Serv. 2019;70(10):958‐960.

- 38. Smith SN, Almirall D, Prenovost K, et al. Organizational culture and climate as moderators of enhanced outreach for persons with serious mental illness: results from a cluster‐randomized trial of adaptive implementation strategies. Implement Sci. 2018;13(1):93.

- 39. Almirall D, Compton SN, Gunlicks‐Stoessel M, Duan N, Murphy SA. Designing a pilot sequential multiple assignment randomized trial for developing an adaptive treatment strategy. Stat Med. 2012;31(17):1887‐1902. doi:10.1002/sim.4512

- 40. Lei H, Nahum‐Shani I, Lynch K, Oslin D, Murphy SA. A “SMART” design for building individualized treatment sequences. Annu Rev Clin Psychol. 2012;8:21‐48. doi:10.1146/annurev-clinpsy-032511-143152

- 41. NeCamp T, Kilbourne A, Almirall D. Comparing cluster‐level dynamic treatment regimens using sequential, multiple assignment, randomized trials: Regression estimation and sample size considerations. Stat Methods Med Res. 2017;26(4):1572‐1589.

- 42. Kilbourne AM, Goodrich DE, Nord KM, et al. Long‐term clinical outcomes from a randomized controlled trial of two implementation strategies to promote collaborative care attendance in community practices. Adm Policy Ment Health Ment Health Serv Res. 2015;42(5):642‐653.

- 43. Kilbourne AM, Li D, Lai Z, Waxmonsky J, Ketter T. Pilot randomized trial of a cross‐diagnosis collaborative care program for patients with mood disorders. Depress Anxiety. 2013;30(2):116‐122.

- 44. Kilbourne AM, Goodrich DE, Lai Z, Clogston J, Waxmonsky J, Bauer MS. Life Goals Collaborative Care for patients with bipolar disorder and cardiovascular disease risk. Psychiatr Serv. 2012;63(12):1234‐1238.

- 45. Kilbourne AM, Post EP, Nossek A, Drill L, Cooley S, Bauer MS. Improving medical and psychiatric outcomes among individuals with bipolar disorder: a randomized controlled trial. Psychiatr Serv. 2008;59(7):760‐768. doi:10.1176/appi.ps.59.7.760

- 46. Simon GE, Ludman EJ, Bauer MS, Unützer J, Operskalski B. Long‐term effectiveness and cost of a systematic care program for bipolar disorder. Arch Gen Psychiatry. 2006;63(5):500‐508.

- 47. Neumann MS, Sogolow ED. Replicating effective programs: HIV/AIDS prevention technology transfer. AIDS Educ Prev. 2000;12(5 Suppl):35‐48.

- 48. Kilbourne AM, Neumann MS, Waxmonsky J, et al. Public‐academic partnerships: evidence‐based implementation: the role of sustained community‐based practice and research partnerships. Psychiatr Serv. 2012;63(3):205‐207. doi:10.1176/appi.ps.201200032

- 49. Kilbourne AM, Neumann MS, Pincus HA, Bauer MS, Stall R. Implementing evidence‐based interventions in health care: application of the replicating effective programs framework. Implement Sci. 2007;2:42. doi:10.1186/1748-5908-2-42

- 50. Bandura A, Walters RH. Social Learning Theory, vol. 1. Prentice‐Hall; 1977.

- 51. Rogers EM. Diffusion of Innovations. Simon and Schuster; 2010.

- 52. Kilbourne AM, Goodrich DE, Lai Z, et al. Reengaging veterans with serious mental illness into care: preliminary results from a national randomized trial. Psychiatr Serv. 2014;66(1):90‐93.

- 53. Kilbourne AM, Prenovost KM, Liebrecht C, et al. Randomized Controlled Trial of a collaborative care intervention for mood disorders by a National Commercial Health Plan. Psychiatr Serv. 2019;70(3):219‐224.

- 54. Kilbourne AM, Bramlet M, Barbaresso MM, et al. SMI Life Goals: description of a randomized trial of a Collaborative Care Model to improve outcomes for persons with serious mental illness. Contemp Clin Trials. 2014;39(1):74‐85.

- 55. Ware J, Kosinski M, Keller SD. A 12‐Item Short‐Form Health Survey: construction of scales and preliminary tests of reliability and validity. Med Care. 1996;34(3):220‐233.

- 56. Kirchner JE, Ritchie MJ, Pitcock JA, Parker LE, Curran GM, Fortney JC. Outcomes of a partnered facilitation strategy to implement primary care–mental health. J Gen Intern Med. 2014;29(4):904‐912.

- 57. Ritchie MJ, Kirchner JE, Parker LE, et al. Evaluation of an implementation facilitation strategy for settings that experience significant implementation barriers. In: Implementation Science, vol. 10. Springer; 2015:A46.

- 58. Waxmonsky J, Kilbourne AM, Goodrich DE, et al. Enhanced fidelity to treatment for bipolar disorder: results from a randomized controlled implementation trial. Psychiatr Serv. 2014;65(1):81‐90. doi:10.1176/appi.ps.201300039

- 59. Berta W, Cranley L, Dearing JW, Dogherty EJ, Squires JE, Estabrooks CA. Why (we think) facilitation works: insights from organizational learning theory. Implement Sci. 2015;10(1):141.

- 60. Ritchie MJ, Parker LE, Kirchner JE. From novice to expert: a qualitative study of implementation facilitation skills. Implement Sci Commun. 2020;1(1):1‐12.

- 61. Harvey G, McCormack B, Kitson A, Lynch E, Titchen A. Designing and implementing two facilitation interventions within the ‘Facilitating Implementation of Research Evidence (FIRE)’ study: a qualitative analysis from an external facilitators’ perspective. Implement Sci. 2018;13(1):1‐14.

- 62. Seers K, Cox K, Crichton NJ, et al. FIRE (Facilitating Implementation of Research Evidence): a study protocol. Implement Sci. 2012;7(1):25.

- 63. Seers K, Rycroft‐Malone J, Cox K, et al. Facilitating Implementation of Research Evidence (FIRE): an international cluster randomised controlled trial to evaluate two models of facilitation informed by the Promoting Action on Research Implementation in Health Services (PARIHS) framework. Implement Sci. 2018;13(1):137.

- 64. Rizzo JR, House RJ, Lirtzman SI. Role conflict and ambiguity in complex organizations. Adm Sci Q. 1970;15(2):150‐163.

- 65. House RJ, Rizzo JR. Role conflict and ambiguity as critical variables in a model of organizational behavior. Organ Behav Hum Perform. 1972;7(3):467‐505.

- 66. Bedeian AG, Armenakis AA. A path‐analytic study of the consequences of role conflict and ambiguity. Acad Manage J. 1981;24(2):417‐424.

- 67. Squires JE, Sullivan K, Eccles MP, Worswick J, Grimshaw JM. Are multifaceted interventions more effective than single‐component interventions in changing health‐care professionals’ behaviours? An overview of systematic reviews. Implement Sci. 2014;9(1):152.

- 68. Grol R, Wensing M, Eccles M, Davis D. Improving Patient Care: The Implementation of Change in Health Care. John Wiley & Sons; 2013.

- 69. Grimshaw JM, Eccles MP, Lavis JN, Hill SJ, Squires JE. Knowledge translation of research findings. Implement Sci. 2012;7(1):50.

- 70. Harvey G, Kitson A. Translating evidence into healthcare policy and practice: single versus multi‐faceted implementation strategies–is there a simple answer to a complex question? Int J Health Policy Manag. 2015;4(3):123.
