Abstract

Powdery mildews present specific challenges to phenotyping systems that are based on imaging. Having previously developed low-throughput, quantitative microscopy approaches for phenotyping resistance to Erysiphe necator on thousands of grape leaf disk samples for genetic analysis, here we developed automated imaging and analysis methods for E. necator severity on leaf disks. By pairing a 46-megapixel CMOS sensor camera, a long-working distance lens providing 3.5× magnification, X-Y sample positioning, and Z-axis focusing movement, the system captured 78% of the area of a 1-cm diameter leaf disk in 3 to 10 focus-stacked images within 13.5 to 26 seconds. Each image pixel represented 1.44 μm² of the leaf disk. A convolutional neural network (CNN) based on GoogLeNet determined the presence or absence of E. necator hyphae in approximately 800 subimages per leaf disk as an assessment of severity, with a training validation accuracy of 94.3%. For an independent image set the CNN was in agreement with human experts for 89.3% to 91.7% of subimages. This live-imaging approach was nondestructive, and a repeated measures time course of infection showed differentiation among susceptible, moderate, and resistant samples. Processing over one thousand samples per day with good accuracy, the system can assess host resistance, chemical or biological efficacy, or other phenotypic responses of grapevine to E. necator. In addition, new CNNs could be readily developed for phenotyping within diverse pathosystems or for diverse traits amenable to leaf disk assays.

1. Introduction

Phenomics is revolutionizing plant phenotyping with high-throughput, objective disease assessment. In particular, machine vision approaches have enabled rapid progress in trait analysis under controlled conditions, including the analysis of quantitative trait loci for host resistance [1]. At its simplest, machine vision involves image capture and image analysis, both of which can be automated for higher throughput. Applied to plant disease quantification, image capture approaches have included batch imaging with a smartphone [2], flatbed scanner [3], or multispectral imager [4], among other devices. Image analysis approaches range from processes that result in pixel counting metrics, as in the above cases, to algorithms for detection of complex structures [5].

Classification algorithms are an area of machine vision that has experienced tremendous growth over the past decade with the development of convolutional neural networks (CNNs), a form of artificial intelligence that is loosely based on the neural architecture of animal visual systems [6, 7]. For a description of CNNs and recent advances in machine vision the reader is directed to review articles on this topic [8, 9]. Recent advances in deep learning CNNs have brought their performance to levels that rival human observers for correctly classifying labeled images. CNNs have been successfully applied to many biological classification problems including the classification of leaf images for species identification and the detection of different diseases and stresses [10–12].

Of particular significance to this study, Google® researchers developed a competition-winning network in 2014 called GoogLeNet [13] that successfully classifies images depicting English language nouns from the ImageNet database [14]. GoogLeNet is available as freeware for others to use and adapt to their own purposes. Through a process called transfer learning, a neural network trained to classify images according to one set of outcome categories (e.g., English language nouns) can be retrained to classify images according to a different set of outcome categories (e.g., disease symptoms). Because training a network from scratch is a computationally intensive process that requires a large set of labeled inputs, transfer learning can improve the performance of large CNNs where there are limited training data and computational resources. Such is the benefit of starting with GoogLeNet, a CNN trained using over one million images where the weights and offsets describing the filters and neural interconnects of the network start at values that extract features that work well for classifying a diverse set of different shapes, textures, colors and patterns. Retraining GoogLeNet can be relatively quick compared to training from scratch (hours instead of days or weeks), using a relatively small training set (thousands as compared to millions of images) specific to the task at hand.

Powdery mildews present specific challenges for imaging and machine vision, especially in the earliest stages of development. In live specimens viewed at relatively low magnifications (i.e., 5–30×), hyphae appear transparent and are closely appressed to a leaf surface [15] overlain by a topographically complex and highly reflective wax cuticle prone to emit glare when live specimens are illuminated for microscopy and photomicrography. With appropriate lighting or staining, nascent colonies originating from conidia or ascospores of grapevine powdery mildew (Erysiphe necator) can be resolved using 3–10× magnification within 48 hours after inoculation. The fungal hyphae are approximately 4–5 μm in diameter, hyaline, tubular and superficial on the leaf surface [15]. They produce lobate organs of attachment and penetration (appressoria) at regular intervals. Except for the absorptive haustoria within the living host epidermal cells subtending the appressoria, E. necator is wholly external to the host. Once sufficient host tissue is colonized (generally within 5 to 7 days after inoculation), the colony becomes sporulation-competent and synchronously produces upright conidiophores over much of the colony surface. These upright conidiophores and the chains of conidia that they bear lend the macroscopically powdery appearance to the colony for which the pathogen group is named.

Grapevine powdery mildew caused by E. necator presents a significant management challenge everywhere grapes are grown. For example, powdery mildew management in California accounts for 9% to 20% of cultural costs for grape production, primarily from fungicide applications [16], as nearly all cultivated Vitis vinifera grapevines are highly susceptible. As a result, a major effort is underway to genetically map host resistance loci for introgression from wild Vitis into domesticated V. vinifera [17–20]. In previous studies of host resistance to E. necator and pathogen resistance to fungicides, controlled phenotyping of E. necator on grape leaf tissue used 1-cm diameter circular leaf disks cut from living grape leaves, arrayed on agar within Petri dishes or glass trays [21–23]. For host resistance assessment at 2 to 10 days after inoculation, the disks were destructively sampled by bleaching the leaf samples and then staining with a dye to make the hyphae more visible for phenotypic analysis under brightfield microscopy at 100× to 400× [21]. Severity of infection was estimated by hyphal transects, a point-intercept method adapted from vegetation analysis, where the number of hyphal interceptions of axial transects in the field of view was recorded as the response variable. These hyphal transect interceptions have proven to be an effective means of quantifying disease severity in large experiments to detect quantitative trait loci (QTL) in segregating populations [21]. The high magnification (400×) required by human observers to accurately assess and quantify hyphal growth, together with the resultant shallow depth of focus (2 μm) and small field of view (0.045 cm), makes the foregoing a relatively slow process. For example, obtaining accurate assessments of hyphal growth in experiments involving 1600 leaf disks required approximately 20 to 60 person-days of microscopic observation.

Parallel to advances in CNNs, the pixel density available in highly sensitive, high-resolution CMOS sensors used in full-frame (24 × 36 mm) Digital Single Lens Reflex (DSLR) cameras now approaches 50 megapixels. Paired with long-working distance high-resolution optics, this advancement allows the synoptic capture of nearly the total area of a powdery mildew colony borne on a 1-cm leaf disk in a single high-resolution image. Focus-stacking algorithms can rapidly assemble a fully focused image from stacks of partially focused images representing optical sections of a specimen to accommodate the complex topography of a leaf surface. The capacity to rapidly collect high-resolution and fully focused images of a 1-cm diameter area (compared to 0.045 cm under 400× microscopy) strengthens the case for machine vision, which could then process the images far more rapidly than a human observer. The goals of our study were to develop an Automated Phenotyping System (APS) that could

image at a rate of 1600 leaf disk samples per 8-hour day to provide the throughput required for QTL analysis;

nondestructively analyze and track progression of pathogen growth over several days;

quantify severity with a level of accuracy similar to that of trained human observers, with a metric that correlates well with counts from the standard hyphal transect technique.

2. Materials and Methods

2.1. Experimental Design

Three unreplicated experiments were undertaken to evaluate the performance of the APS and demonstrate its capabilities.

Experiment 1: Expert comparisons.

Experiment 2: Time-series mapping of growth.

Experiment 3: Comparison to hyphal transect technique.

2.1.1. Plant and Pathogen Material

Isolate NY90 of E. necator was used in all experiments, except on the full-sibling progeny 452033, 452036, and 452051 described below. For these three samples, Musc4 was used in the time-series experiment (experiment 2, described below) and NY1-137 in the hyphal transect comparison experiment (experiment 3) because these isolates were being used by the VitisGen project [24] to map resistance in that family. All isolates were previously described, and their phenotypes can be summarized by their differential virulence on RUN1 vines: avirulent NY90, fully virulent Musc4, and moderately virulent NY1-137 [18, 25]. Several grape varieties were used in the experiments described here to challenge the system with different amounts of leaf hairs and levels of susceptibility to E. necator, including 10 different resistance loci (Table 1). Leaf sampling and processing for phenotyping resistance to E. necator was done as described by Cadle-Davidson et al. [21]. Briefly, leaves were sampled from the third node of a grape shoot (these leaves are typically translucent and about half the width of a fully expanded leaf), then surface sterilized, subsampled using a 1-cm cork borer, and arrayed on 1% agar media in a Petri dish or 32 × 26 × 2 cm Pyrex® tray (adaxial surface up). Inoculum consisted of E. necator conidiospores (5 × 10⁴ per mL) suspended in distilled water containing 0.001% Tween-20. The leaf disks were inoculated by spraying them with an aerosol of the above suspension until the leaf surface bore visible droplets approximately 5 to 10 μL in volume. The droplets were allowed to dry, then the trays were immediately covered to maintain high humidity and were incubated at 23°C under a 12-hour photoperiod with 45 μmol·m⁻²·s⁻¹ of photosynthetically active radiation (PAR) until and between imaging sessions. Covers used to maintain humidity were removed for imaging and replaced immediately afterward.

Table 1.

Plant Material. The resistance locus status of grapevine germplasm samples used for neural network training and the three performance experiments: (1) expert comparison, (2) time series, and (3) hyphal transect comparison.

| Expected response | Sample ID∗ | Expected resistance loci † | Task |
|---|---|---|---|
| Resistant | Ren-stack | RUN1, REN1, REN6, and REN7 | Experiments 2 and 3 |
| Resistant | DVIT2732-9 and DVIT2732-81 | REN4 | Experiment 1 |
| Moderate | DVIT2732-6 | unknown | Experiment 1 |
| Moderate | Vitis cinerea B9 | REN2 | Training |
| Moderate | 452033, 452036, and 452051 | REN3/REN9 or similar | Experiments 2 and 3 |
| Moderate | Bloodworth 81-107-11 | RUN2.1 | Training |
| Moderate | V. vinifera “Chardonnay” old | Ontogenically resistant leaves | Training |
| Susceptible | V. vinifera “Chardonnay” | SEN1 susceptibility | Training, Experiments 1, 2 and 3 |

∗ The bold terms are used in the remainder of the text, tables, and figures for simplicity.

† The resistance loci (alleles) present in each vine are listed here, based on AmpSeq analysis of previously published markers [17–20]. DVIT2732-6 lacks REN4 but has moderate resistance from an unknown pollen donor. The full-sibling progeny (452033, 452036, and 452051) of the biparental cross “Horizon” × V. rupestris B38 likely carries the REN3/REN9 locus conferring moderate resistance.

2.1.2. APS Description

(0) Overview. To progress from the aforementioned single-point microscopy and human observer-based methodology toward a high-throughput, repeated measures phenotyping system, an APS was developed, detailed in subsequent sections. The system paired a high-resolution DSLR camera and a long-working distance macrofocusing lens. Relatively low magnification (3.5×) and long-working distance (5 cm) of the optical system resulted in a depth of focus of 200 μm compared to the 2 μm depth of focus obtained at 400× in the human-based system. This allowed the system to image nearly the entire disk synoptically in 3 to 10 focus-stacked images. The stacked images were assembled into a single fully focused image through a focus-stacking algorithm [26].

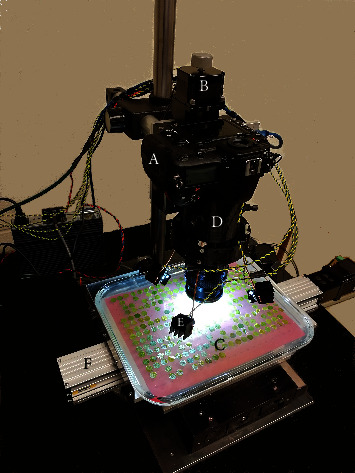

To move from one sample to the next, the APS used an X-Y motorized stage (Figure 1(F)) to move a tray (Figure 1(C)) holding up to 330 1-cm grape leaf disks beneath the camera (Figure 1(A)), and a computerized and integrated control and image analysis system to capture high-resolution images of nascent E. necator colonies at high speed. The grape powdery mildew pathosystem was used as a model to assess changes in disease severity in the context of a grape breeding project screening diverse Vitis germplasm across North America [24]. The APS enabled live imaging and processing an entire tray without operator intervention. With the tray resting on a two-axis translation stage, samples were automatically moved into position for imaging. Important characteristics of the positioning and camera mounting system include agile movement across different focusing planes for dynamic depth-of-field enhancement, stability, minimal vibrations that are quickly damped after movement and quick sample-to-sample movements to help meet our throughput goal. The images were analyzed for infection after being saved.

Figure 1.

Assembled system for image capture. A Nikon model D850 46 MP digital SLR camera and 60mm F/2.8 D Micro Autofocus lens (A) were suspended from an automated robotic Z-axis positioner (B) above a sample tray (C) carrying 1-cm leaf disk samples arrayed in a 22 by 15 matrix. The lens was stabilized by an accessory collar (D) that also bore the four white LEDs (E) that illuminated the samples. The tray was supported on an automated robotic stage (F) to provide movement in the X and Y planes.

(1) Positioning and Imaging Hardware. Three linear actuator stages were orthogonally arranged to provide the camera with three axes of positioning movement. The range of motion of the X and Y axes provided a working sample area measuring approximately 20 × 30 cm. The Z-axis had 5 cm of travel for finding focus and generating a stack of images for the enhanced depth-of-field image processing that was employed [27, 28]. All stages were controlled by a program written in MATLAB® 2017B [29]. Stepper motors were driven using trapezoidal velocity profiles, accelerations of 1250 and 10 mm·s⁻² for the X and Y axes, respectively, and maximum velocities of 50 and 8.75 mm·s⁻¹. The Z-axis had an asymmetrical acceleration/deceleration of 55 mm·s⁻² and −20 mm·s⁻² to decrease settling time when stopping. The maximum velocity of the Z-axis was 55 mm·s⁻¹.

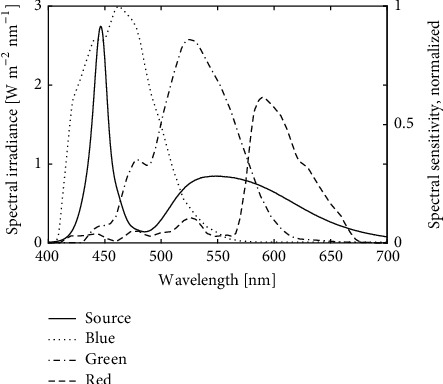

The system paired a DSLR camera with a 46 MP 24 × 36 mm CMOS sensor (Nikon D850, Figure 1(A)) and a long-working distance macrofocusing lens (Nikon Nikkor 60mm F/2.8 D Micro autofocus with four PK-12 extension tubes) with an RGB color registration filter (Figure 2, [30]). This configuration obtained 3.5× magnification and a 1.0 × 0.67 cm field of view. At this magnification each square image pixel represents 1.44 μm² ((1.0 cm per image length/8256 pixels per image length)²). A custom-designed 3-D printed support for the camera lens tube was used to stabilize the assembled lens and extension tubes (Figure 1(D)). The lens support also contained provisions for mounting the four LEDs (Figure 1(E)) which were supported on 75-mm lengths of 2-mm diameter copper wire. The illumination angle was approximately 80 degrees with respect to the sample surface normal. The light sources were phosphor-converted cool white LEDs (CREE XML2-W318) with direct emission peaking at 446 nm (Figure 2) coupled to narrow-spot collimating lenses. These LEDs provided an irradiance of 170 W·m⁻² (50,000 lux) on the leaf sample measured by a spectroradiometer (Photoresearch model PR740) viewing a white reflectance standard (Labsphere, model SRT-99-050). The shutter speed was 1/500 second with an ISO setting of 1000.

Figure 2.

Sample illumination and camera sensitivity. Measured spectral irradiance of grape leaf disks (solid black line) as samples were illuminated by four phosphor-converted Indium Gallium Nitride (InGaN) “white” light emitting diodes. The spectral matching between the illuminant and the camera is compared to the reported spectral sensitivity of the red, green, and blue channels (dashed, dot-dashed, and dotted curves, respectively) for an RGB CMOS sensor [30] similar to that of the Nikon model D850 camera used in the present study.

(2) Image Capturing. The sample tray was positioned against corner guide rails on the stage platform for accurate and repeatable placement. Even though the samples were placed on a grid, they might not be fully centered in the image, and the placement of the grid might differ from tray to tray. Therefore, we developed a procedure implemented in software to find the approximate center of a sample and move it to the center of the image. This process was repeated until the change in position became sufficiently small, or the program had iterated 10 times.
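The iterative centering procedure described above can be illustrated with a minimal Python sketch. The study's control software was written in MATLAB and its details are not given here, so everything below is an assumption made for illustration: the `SimulatedStage` class, the `estimate_gain` that makes each center estimate recover only part of the true offset, and the 0.05 mm tolerance are all hypothetical. Only the stopping rule (a sufficiently small change, or 10 iterations) comes from the text.

```python
class SimulatedStage:
    """Hypothetical stand-in for the X-Y stage plus the image-based center finder."""

    def __init__(self, offset_x, offset_y, estimate_gain=0.8):
        self.offset = [offset_x, offset_y]  # true sample offset from image center (mm)
        self.gain = estimate_gain           # fraction of the offset each estimate recovers

    def measure_offset(self):
        # An imperfect estimate of where the sample center lies relative to the image center.
        return self.gain * self.offset[0], self.gain * self.offset[1]

    def move(self, dx, dy):
        # Moving the stage by the estimated offset reduces the true offset.
        self.offset[0] -= dx
        self.offset[1] -= dy


def center_sample(measure_offset, move_stage, tolerance_mm=0.05, max_iterations=10):
    """Repeat estimate-and-move until the correction is small or the iteration cap is hit.

    Returns the number of stage moves that were made.
    """
    for moves_made in range(max_iterations):
        dx, dy = measure_offset()
        if max(abs(dx), abs(dy)) <= tolerance_mm:
            return moves_made
        move_stage(dx, dy)
    return max_iterations


stage = SimulatedStage(2.0, -1.0)
moves = center_sample(stage.measure_offset, stage.move)
print(moves, stage.offset)
```

The iteration cap guarantees that a sample whose center cannot be located reliably does not stall the processing of the rest of the tray.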

Due to the irregular surface of a leaf sample (±500 μm or more), and the magnification needed to resolve the hyphae, the limited depth of focus of the lens system (approximately ±100 μm) would not be able to bring the whole sample into focus. Instead, multiple images at varying focus heights around the center image focus were taken so that when combined using an image stacking software program, most if not all of the processed image was well focused. We devised an automated procedure to determine appropriate focus heights. Depending on the variations in sample height, three to ten images were then taken at different focus heights using the maximum camera resolution (8256 × 5504 pixels). Helicon Focus 6 [26] software was used to stack the images using the “Method C” setting which Helicon specifies as being the most useful for images with multiple crossing lines and complex shapes, but with the potential for increased glare in an image [31]. The processed images were saved for offline analysis using computer vision to detect and quantify hyphae.
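As a rough illustration of how the number of focus heights relates to sample flatness, the Python sketch below divides a measured height range into slabs no thicker than the depth of focus. The automated procedure actually used is not described in detail, so the even spacing and the function name `focus_heights` are assumptions; the 200 μm depth of focus and the limits of three to ten images are taken from the text.

```python
import math


def focus_heights(z_min_um, z_max_um, depth_of_focus_um=200.0,
                  min_images=3, max_images=10):
    """Return evenly spaced focus heights (in micrometers) covering [z_min_um, z_max_um].

    Each height sits at the middle of one slab of the height range. The number of
    slabs is the range divided by the depth of focus, rounded up, then limited to
    the three-to-ten images reported for the system.
    """
    span = z_max_um - z_min_um
    if span < 0:
        raise ValueError("z_max_um must not be smaller than z_min_um")
    n_images = math.ceil(span / depth_of_focus_um)
    n_images = max(min_images, min(max_images, n_images))
    step = span / n_images
    return [z_min_um + step * (i + 0.5) for i in range(n_images)]


# A leaf surface varying by +/-500 micrometers needs five 200-micrometer slabs.
print(focus_heights(-500, 500))
```

Under these assumptions a surface varying by ±500 μm requires five images, which falls within the typical range of three to seven images reported in Table 2.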

(3) Image Analysis. The approach taken to determine the amount of infection in a leaf sample was to divide the image into an array of smaller subimages and then classify each subimage as either containing hyphae or not. Each subimage measured 224 × 224 pixels, yielding 864 nonoverlapping, adjacent subimages per leaf disk image. The amount of hyphae present in the whole image was then estimated by the percentage of subimages containing hyphae. This formulation of the problem yielded a quantitative measure of infection from binary image classifications.
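The tiling arithmetic and the severity metric can be sketched in a few lines of Python. This is an illustration rather than the released analysis code: the function names are hypothetical, and placing the grid at the top-left corner of the image (so that leftover edge pixels are discarded on the right and bottom) is an assumption, since the text does not say how the remainder is handled.

```python
def tile_origins(width, height, tile=224):
    """Top-left pixel coordinates of nonoverlapping, adjacent tiles that fit fully in the image."""
    cols = width // tile
    rows = height // tile
    return [(col * tile, row * tile) for row in range(rows) for col in range(cols)]


def percent_infected(labels):
    """Percentage of classified subimages labeled True (hyphae present)."""
    if not labels:
        raise ValueError("no subimages were classified")
    return 100.0 * sum(labels) / len(labels)


origins = tile_origins(8256, 5504)
print(len(origins))  # 36 columns x 24 rows = 864 subimages

# Hypothetical classification result: 216 of 864 subimages flagged as infected.
labels = [True] * 216 + [False] * 648
print(percent_infected(labels))  # 25.0
```

Because the leaf disk does not fill the rectangular frame, fewer than 864 subimages contain leaf tissue, which is consistent with the approximately 800 subimages per disk reported elsewhere in the text.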

We modified GoogLeNet from the MATLAB® Deep Learning Toolbox, version 18.1.0, to be a two-output classifier (subimage infected or not infected). Each subimage was 224 × 224 pixels to match the input layer dimensions of GoogLeNet without resizing. The last three network layers of GoogLeNet were removed and replaced by three new layers: (1) a 2-neuron fully connected layer, (2) a softmax layer, and (3) a classification layer. Other than the three modified network layers the network weights and offsets were initialized to the pretrained values in the distributed ImageNet version. Initialization values for the three new layers were randomly chosen from a Gaussian distribution with zero mean and standard deviation 0.01 for the weights and zero for the offsets. We named this new network “GPMNet” for grapevine powdery mildew modification of GoogLeNet.
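The replacement classifier head can be illustrated without a deep learning framework. The pure-Python sketch below shows only the three new layers (a 2-neuron fully connected layer, a softmax, and the final class decision), initialized as described above. It is not the MATLAB implementation; the function names are hypothetical, and the input width of 1024 features is an assumption based on the standard GoogLeNet architecture.

```python
import math
import random

NUM_FEATURES = 1024  # assumed width of the pooled feature vector feeding the last layer
NUM_CLASSES = 2      # infected / not infected


def init_head(num_features=NUM_FEATURES, num_classes=NUM_CLASSES, std=0.01, seed=0):
    """Gaussian weights (zero mean, standard deviation 0.01) and zero offsets."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, std) for _ in range(num_features)]
               for _ in range(num_classes)]
    offsets = [0.0] * num_classes
    return weights, offsets


def softmax(logits):
    """Convert raw layer outputs into scores that sum to one."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def head_forward(features, weights, offsets):
    """Fully connected layer followed by softmax; returns one score per class."""
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(weights, offsets)]
    return softmax(logits)


weights, offsets = init_head()
scores = head_forward([1.0] * NUM_FEATURES, weights, offsets)
print(scores)
```

All earlier layers keep their pretrained values, so at the start of retraining only this small head is random.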

The training dataset consisted of 14,180 subimages from 19 whole leaf disk images. Only subimages that contained at least 90% leaf surface by area were used for training. The training subimages were generated from four categories of leaf disk images, each representing one of three varieties: Chardonnay (young and old leaves), Bloodworth 81-107-11 and V. cinerea B9. These samples exhibited a range of different characteristics including different amounts of leaf hairs, color differences, and texture differences (e.g., glossy/dull, smooth/rough). Two authors, AB and TL, independently labeled the training set subimages; AB provided roughly 75% of the labels. A separate independent dataset was collected for validating the CNN as described in the Performance Evaluations section. Training was done using MATLAB® Neural Network Toolbox™ [29] with GoogLeNet add-on package. The following hyperparameters were used for training:

Solver type: Stochastic Gradient Descent

Initial learning rate: 2 × 10⁻⁴

Learning Rate Schedule: piecewise (decreases by a factor of 0.63 every epoch), learning rate multiplier of 3 for the added fully connected layer

Momentum: 0.9

L2 Regularization factor (weight decay): 0.0001

Batch size: 32

70/30 split of the 14,180 subimages, randomly assigned to the training and validation datasets

Training set augmentation: 3× by including copies of the subimages that were flipped horizontally and vertically about the image centerlines
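Several of the hyperparameters above imply simple arithmetic that a short Python sketch can make explicit. The constants come from the list; the function names, the zero-based epoch index, and the representation of an image as a list of pixel rows are assumptions made for illustration.

```python
INITIAL_LR = 2e-4        # initial learning rate
DROP_FACTOR = 0.63       # multiplier applied once per epoch
HEAD_LR_MULTIPLIER = 3   # extra factor for the added fully connected layer


def learning_rate(epoch, head=False):
    """Piecewise schedule: the rate is multiplied by 0.63 after every epoch (epoch 0 first)."""
    rate = INITIAL_LR * DROP_FACTOR ** epoch
    return rate * HEAD_LR_MULTIPLIER if head else rate


def split_counts(n_labeled, train_percent=70):
    """Number of subimages in the training and validation groups."""
    n_train = n_labeled * train_percent // 100
    return n_train, n_labeled - n_train


def augment(image):
    """Return the original plus a horizontal and a vertical flip (3x augmentation)."""
    horizontal = [row[::-1] for row in image]  # mirror left-right
    vertical = image[::-1]                     # mirror top-bottom
    return [image, horizontal, vertical]


print([learning_rate(epoch) for epoch in range(4)])
print(split_counts(14180))
print(augment([[1, 2], [3, 4]]))
```

A 70% share of 14,180 subimages is 9,926, close to the 9920 training images reported in the training results.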

Training stopped when the cross entropy of the outcome and known responses of the validation set stopped decreasing by meeting the criterion of 20 direction reversals when computed once every 1600 image iterations. The image analysis software is available at https://github.com/LightingResearchCenter/GPMNet.
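One possible reading of this stopping rule is sketched below in Python. The interpretation is an assumption: a "direction reversal" is taken to mean a change in sign between successive differences of the validation cross entropy, evaluated once per 1600-image checkpoint. The function names are hypothetical and the released software may implement the criterion differently.

```python
def count_reversals(losses):
    """Count sign changes between successive differences of a loss sequence."""
    reversals = 0
    previous_sign = 0
    for earlier, later in zip(losses, losses[1:]):
        difference = later - earlier
        sign = (difference > 0) - (difference < 0)
        if sign == 0:
            continue  # a flat step neither continues nor reverses the trend
        if previous_sign != 0 and sign != previous_sign:
            reversals += 1
        previous_sign = sign
    return reversals


def should_stop(validation_losses, max_reversals=20):
    """True once the validation loss has reversed direction 20 times."""
    return count_reversals(validation_losses) >= max_reversals


# A steadily decreasing loss never triggers the rule; a loss that oscillates does.
print(should_stop([1.0, 0.8, 0.6, 0.5]))
print(should_stop([i % 2 for i in range(22)]))
```

Under this reading, a loss that keeps decreasing accumulates no reversals, whereas a loss that fluctuates around a plateau accumulates them quickly and ends training.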

2.1.3. Performance Evaluations

(1) Experiment 1: Expert Comparisons. New samples from four varieties of grape were selected based on resistance to E. necator: susceptible Chardonnay, moderately resistant DVIT2732-6, and highly resistant DVIT2732-9 and DVIT2732-81. Images taken 3 days and 9 days after inoculation (dpi) were included for the susceptible and moderately resistant varieties, while only 9 dpi images were included for the highly resistant varieties because there was no change over time in the infection state for these highly resistant varieties. The six images were distributed to members of the research team (AB, TL, and SS) experienced in identifying hyphae. A custom application was programmed in MATLAB® to display 224 × 224 pixel subimages and record the experts' responses of whether the subimages contained hyphae or not. In addition to showing a subimage and response buttons, the program displayed a second window showing the whole leaf disk with the subimage demarcated with a red outline. This second image could be panned and zoomed to allow the person classifying the subimage to see the image in the context of the whole leaf disk. The same leaf subimages, approximately 800 per leaf disk, were classified by both humans and the CNN.

Statistical Analyses: Percent agreement, defined as (true positives + true negatives)/(number of images), was calculated for all pairs of experts and the APS. The correlation (Pearson's r) was calculated among the different experts and the APS.
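Both statistics are straightforward to compute. The Python sketch below is illustrative only: the function names and the toy inputs are hypothetical and are not data from the study. For a pair of raters, the sum of true positives and true negatives is simply the number of subimages on which the two labels match.

```python
import math


def percent_agreement(labels_a, labels_b):
    """Percentage of subimages on which two raters gave the same label."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return 100.0 * matches / len(labels_a)


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance_x = sum((x - mean_x) ** 2 for x in xs)
    variance_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / math.sqrt(variance_x * variance_y)


# Toy example: two raters labeling four subimages (1 = hyphae present).
print(percent_agreement([1, 1, 0, 0], [1, 0, 0, 0]))  # 75.0
# Toy example: per-disk severity scores from two assessors.
print(pearson_r([5.0, 20.0, 50.0, 80.0], [8.0, 18.0, 55.0, 84.0]))
```

Percent agreement is computed per subimage, whereas a correlation of severity scores summarizes how consistently two assessors rank whole leaf disks, so the two measures need not order the raters identically.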

(2) Experiment 2: Time-Series Mapping of Growth. Three sets of three grape varieties were selected: highly susceptible Chardonnay with three replicate leaf disks (here named as 165-Chardonnay-t1, 165-Chardonnay-t3 and 330-Chardonnay); unreplicated moderately resistant full-sibling progenies from the biparental cross “Horizon” × V. rupestris (here named as 24-452033, 27-452036 and 38-452051); and unreplicated highly resistant Ren-stack progeny containing RUN1, REN1, REN6, and REN7 genes (here named as 157-Ren-stack and 316-Ren-stack). The leaf disks were imaged and analyzed by the automated system once per day on days 2, 4, 6, and 9 after inoculation.

Statistical Analyses: Area under the disease progress curve (AUDPC) was calculated by the simple midpoint (trapezoidal) rule [32].
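For reference, the trapezoidal AUDPC calculation sums, over each pair of consecutive assessments, the mean severity multiplied by the elapsed time. A minimal Python sketch follows; the function name and the severity values are hypothetical, while the imaging days match those used in this experiment.

```python
def audpc(days, severity):
    """Area under the disease progress curve by the trapezoidal (midpoint) rule."""
    if len(days) != len(severity) or len(days) < 2:
        raise ValueError("need at least two matching time points")
    total = 0.0
    for i in range(len(days) - 1):
        interval = days[i + 1] - days[i]
        mean_severity = (severity[i] + severity[i + 1]) / 2.0
        total += interval * mean_severity
    return total


# Hypothetical percent-infected values at 2, 4, 6, and 9 days after inoculation.
print(audpc([2, 4, 6, 9], [0.0, 50.0, 100.0, 100.0]))  # 500.0 percent-days
```

Because the intervals between imaging days are unequal, each trapezoid is weighted by its own width rather than by a fixed step.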

(3) Experiment 3: Comparison to Hyphal Transect Technique. After imaging the leaf disks of experiment 2 at 9 days after inoculation, the leaf disks were bleached, stained and the state of infection was quantified by the hyphal transect method, which quantifies the number of times an imaginary vertical and horizontal transect is crossed by hyphae [21].

Statistical Analyses: Comparisons between the hyphal transect technique and the APS results were evaluated by R² values modeling the APS percent infected subimages as a linear function of the hyphal transect count. The hyphal transect count was also compared to the AUDPC from 2 to 9 dpi (Exp. 2), and to the growth rate coefficient for a simple logistic population growth curve, originally proposed by Pierre-François Verhulst in 1838, using R² for a linear model.
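The coefficient of determination for a simple linear model can be computed as in the Python sketch below. It uses ordinary least squares, which is assumed here to be the fitting method since the text specifies only a linear model; the function name and the toy data are hypothetical.

```python
def linear_fit_r2(xs, ys):
    """Ordinary least-squares line y = intercept + slope * x and its R-squared."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_residual = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_total = sum((y - mean_y) ** 2 for y in ys)
    return slope, intercept, 1.0 - ss_residual / ss_total


# Toy data: hypothetical transect counts (x) against percent infected subimages (y).
slope, intercept, r_squared = linear_fit_r2([0, 10, 20, 30], [2.0, 35.0, 61.0, 95.0])
print(slope, intercept, r_squared)
```

The same function applies unchanged when the response variable is the AUDPC or the logistic growth rate coefficient instead of the percent of infected subimages.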

3. Results

3.1. Image Capture and Throughput

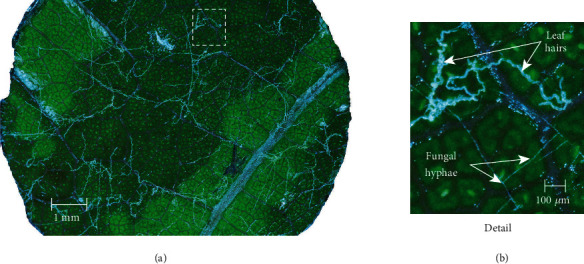

Based on informal evaluation, an illumination angle of roughly 80° measured from the surface normal was practically achievable to provide high contrast of hyphae with low background illumination of the leaf surface while minimizing shadows and not interfering with adjacent samples. The chosen magnification allowed for approximately 78% of leaf disk area to be captured in a single focus-stacked processed image while still resolving hyphae with high contrast (Figure 3). The time needed to image each leaf disk varied depending on the flatness of the leaf disk which in turn affected the focusing and number of focus-stack images needed. Times typically ranged from 13.5 to 26 seconds (Table 2). Thus, between 1100 and 2100 images could be collected in 8 hours depending on the flatness of the samples, resulting in a single focus-stack-processed image in 24-bit tiff format, 8256 × 5504 pixels, for each leaf disk.
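The 78% coverage figure is consistent with simple geometry. The Python sketch below computes the fraction of a circular disk that falls inside a rectangular field of view, assuming the disk is exactly 1 cm in diameter, perfectly centered, and at least as narrow as the field of view is wide. These are idealizations introduced for illustration, and the function name is hypothetical.

```python
import math


def captured_fraction(disk_diameter_cm=1.0, fov_width_cm=1.0, fov_height_cm=0.67):
    """Fraction of a centered circular disk lying inside a rectangular field of view.

    Assumes the field of view is at least as wide as the disk, so the captured
    region is the horizontal band of the circle within half the frame height
    of the center.
    """
    radius = disk_diameter_cm / 2.0
    if fov_width_cm < disk_diameter_cm:
        raise ValueError("this sketch assumes the frame is at least as wide as the disk")
    half_height = min(fov_height_cm / 2.0, radius)
    chord_term = half_height * math.sqrt(max(0.0, radius ** 2 - half_height ** 2))
    arc_term = radius ** 2 * math.asin(half_height / radius)
    band_area = 2.0 * (chord_term + arc_term)
    return band_area / (math.pi * radius ** 2)


print(round(100 * captured_fraction(), 1))  # percent of disk area inside the frame
```

With the 1.0 × 0.67 cm field of view, the band covers roughly 78% of the disk, in line with the reported value.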

Figure 3.

Full resolution (46 megapixel) image produced through focus-stack processing of images captured in the Z-plane. (a) Leaf disk sample imaged 3 days after inoculation with Erysiphe necator conidiospores. Illumination of the live sample at near-grazing angles revealed detail of the hyaline hyphae without excessive glare from the highly reflective leaf cuticle. (b) Detailed area of image illustrating morphology of fungal hyphae and nearby leaf trichomes (hairs).

Table 2.

Image capture throughput for obtaining a composite, Z-stacked leaf disk image (total), and a breakdown of the steps involved.

| Task | Time required per leaf disk sample |
|---|---|
| Move to center of sample | 0.5 seconds |
| Focus on the center of the image | 3 to 4 seconds |
| Determine Z-stack focusing range | 2.5 to 4 seconds |
| Move Z-axis and capture images | 2.5 seconds per image, typically 3–7 images |
| Total | 13.5 to 26 seconds |
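The totals in Table 2 and the daily throughput range follow directly from the per-step times. The Python sketch below reproduces that arithmetic; the function and variable names are hypothetical, and the calculation ignores any time for loading trays or other handling.

```python
def time_per_disk(center_s, focus_s, z_range_s, per_image_s, n_images):
    """Total seconds to image one leaf disk from the per-step times in Table 2."""
    return center_s + focus_s + z_range_s + per_image_s * n_images


fastest = time_per_disk(0.5, 3.0, 2.5, 2.5, 3)   # flat disk, 3 images
slowest = time_per_disk(0.5, 4.0, 4.0, 2.5, 7)   # uneven disk, 7 images

SECONDS_PER_DAY = 8 * 3600  # one 8-hour working day
disks_fastest = int(SECONDS_PER_DAY // fastest)
disks_slowest = int(SECONDS_PER_DAY // slowest)

print(fastest, slowest)              # 13.5 26.0
print(disks_slowest, disks_fastest)  # 1107 2133
print(SECONDS_PER_DAY / 1600)        # 18.0 seconds per disk needed for 1600 disks per day
```

Meeting the goal of 1600 disks per 8-hour day therefore requires an average of 18 seconds per disk, which lies within the measured range.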

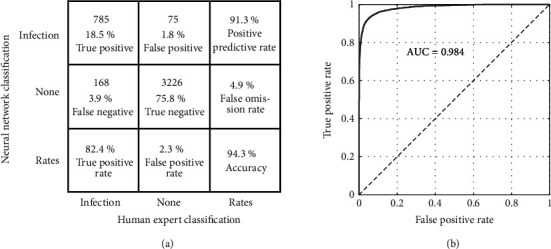

3.2. Neural Network Training Results

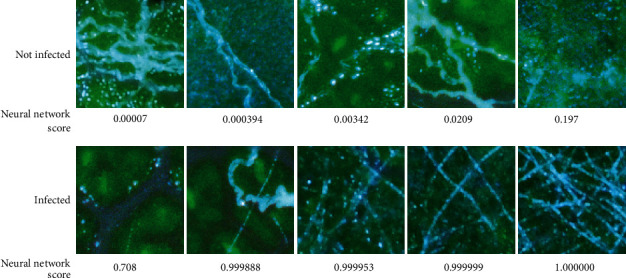

Retraining of GoogLeNet required 3.4 hours of computation time using an Intel Xeon CPU E31225 at 3.1 GHz with an Nvidia GeForce GTX 1050 Ti GPU and iterated through the set of 9920 training images (70% of labeled dataset) 32 times. The resulting CNN had a classification accuracy of 94.3% and ROC area under curve of 0.984 (Figure 4) for the validation subset of the training images for correctly classifying the subimages as infected or not (Figure 5). This accuracy is based on a criterion set at 0.5 on a scale from zero to one, but the criterion could be set to other levels depending on the desire to either reduce false-positive responses (higher criterion) or increase sensitivity by reducing false negatives (lower criterion).
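The effect of moving the decision criterion can be illustrated with a small Python sketch. The scores and labels below are hypothetical toy values, not outputs of the network, and the function name is illustrative; a subimage is counted as infected when its score exceeds the criterion.

```python
def confusion_counts(scores, truths, criterion=0.5):
    """Return (true positives, false positives, true negatives, false negatives)."""
    tp = fp = tn = fn = 0
    for score, truth in zip(scores, truths):
        predicted = score > criterion
        if predicted and truth:
            tp += 1
        elif predicted and not truth:
            fp += 1
        elif not predicted and not truth:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


# Toy scores with expert labels (True = hyphae present).
scores = [0.1, 0.4, 0.6, 0.9]
truths = [False, True, False, True]

for criterion in (0.3, 0.5, 0.7):
    print(criterion, confusion_counts(scores, truths, criterion))
```

In this toy example, raising the criterion from 0.5 to 0.7 removes the false positive, while lowering it to 0.3 removes the false negative at the cost of keeping the false positive.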

Figure 4.

Confusion Matrix and Receiver Operating Characteristic (ROC) Curve. Confusion matrix (a) and ROC curve (b) assessing the outcome of GoogLeNet retraining on the training validation data set.

Figure 5.

Leaf disk subimages classified by a human expert along with corresponding neural network scores. Subimages of leaf disks classified by a human expert as either free of visible signs of infection (Not infected) or containing hyphae of Erysiphe necator (Infected), along with corresponding neural network scores.

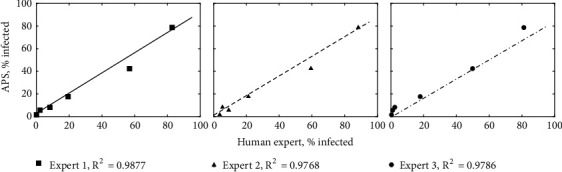

Experiment 1: Expert Comparisons. The three experts and the neural network were in agreement for 89.3% to 94.8% of subimages (Table 3). As expected, E. necator hyphae were rarely detected in resistant leaf disks at 9 dpi (no more than 3.7% of subimages), and moderately resistant DVIT2732-6 was intermediate between susceptible Chardonnay and the two resistant samples (Table 4). As operated with a false-positive rate of 2.3%, the neural network was slightly less sensitive than human observers in detecting hyphae as given by the slope of the linear trend line being 0.87 (Figure 6). The correlation between experts and the APS was 0.977 or greater for all pairings (Table 5). The highest correlations between experts and the APS were for Expert 1 (AB), followed by Expert 3 (SS), while the highest agreement with the APS was Expert 1 followed by Expert 2 (TL).

Table 3.

Agreement, calculated as (true positives + true negatives)/(number of images), between different experts and between experts and the neural network for classifying as infected or not infected for 4000 subimages from 6 different Erysiphe necator inoculated leaf disk samples (given in Table 4).

| % Agreement | Expert 1 | Expert 2 | Expert 3 | GPMNet |
|---|---|---|---|---|
| Expert 1 | 100 | 94.8 | 92.9 | 91.7 |
| Expert 2 | 94.8 | 100 | 92.3 | 91.0 |
| Expert 3 | 92.9 | 92.3 | 100 | 89.3 |
| GPMNet | 91.7 | 91.0 | 89.3 | 100 |

Table 4.

Image assessments of Erysiphe necator infection by different human experts and the APS (GPMNet) in terms of the percent of subimages containing hyphae at 3 or 9 days postinoculation (dpi).

| Grape variety | Assessor | 3 dpi | 9 dpi |
|---|---|---|---|
| DVIT2732-6 | Expert 1 | 2.4% | 49.9% |
| DVIT2732-6 | Expert 2 | 8.2% | 56.8% |
| DVIT2732-6 | Expert 3 | 5.4% | 59.4% |
| DVIT2732-6 | GPMNet | 8.2% | 55.4% |
| Chardonnay | Expert 1 | 17.9% | 81.2% |
| Chardonnay | Expert 2 | 19.3% | 82.9% |
| Chardonnay | Expert 3 | 21.2% | 88.3% |
| Chardonnay | GPMNet | 17.6% | 84.1% |
| DVIT2732-81 | Expert 1 | n/a | 0.4% |
| DVIT2732-81 | Expert 2 | n/a | 0.0% |
| DVIT2732-81 | Expert 3 | n/a | 3.7% |
| DVIT2732-81 | GPMNet | n/a | 1.5% |
| DVIT2732-9 | Expert 1 | n/a | 1.1% |
| DVIT2732-9 | Expert 2 | n/a | 2.2% |
| DVIT2732-9 | Expert 3 | n/a | 3.7% |
| DVIT2732-9 | GPMNet | n/a | 1.5% |

Figure 6.

Relationship between severity of infection as determined by the Automated Phenotyping System (APS) neural network versus three independent human experts. Human experts rated the same subimages analyzed by the neural network, and results were analyzed by linear regression. The coefficients of correlation of neural network scores and human observer scores were 0.9877, 0.9768, and 0.9786 respectively for Experts 1, 2, and 3.

Table 5.

Symmetrical correlation matrix (Pearson's r) of different assessors (human experts and the APS (GPMNet)) for determining percent infection with E. necator for 6 leaf-disk samples as described in Table 4.

| | Expert 1 | Expert 2 | Expert 3 | Human average | GPMNet |
|---|---|---|---|---|---|
| Expert 1 | 1.000 | 0.993 | 0.995 | 0.998 | 0.988 |
| Expert 2 | 0.993 | 1.000 | 0.991 | 0.997 | 0.977 |
| Expert 3 | 0.995 | 0.991 | 1.000 | 0.998 | 0.979 |
| Human average | 0.998 | 0.997 | 0.998 | 1.000 | 0.983 |
| GPMNet | 0.988 | 0.977 | 0.979 | 0.983 | 1.000 |

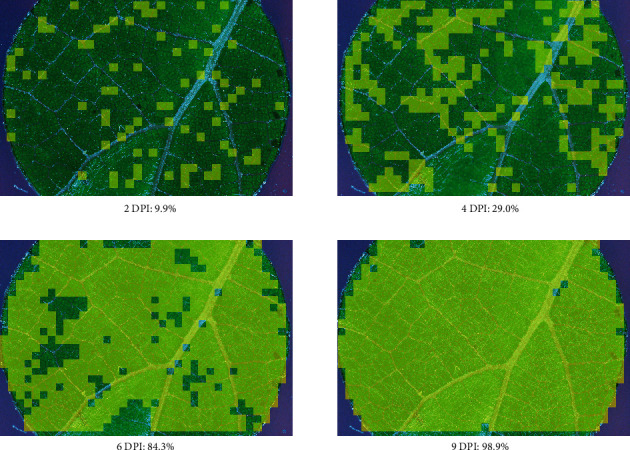

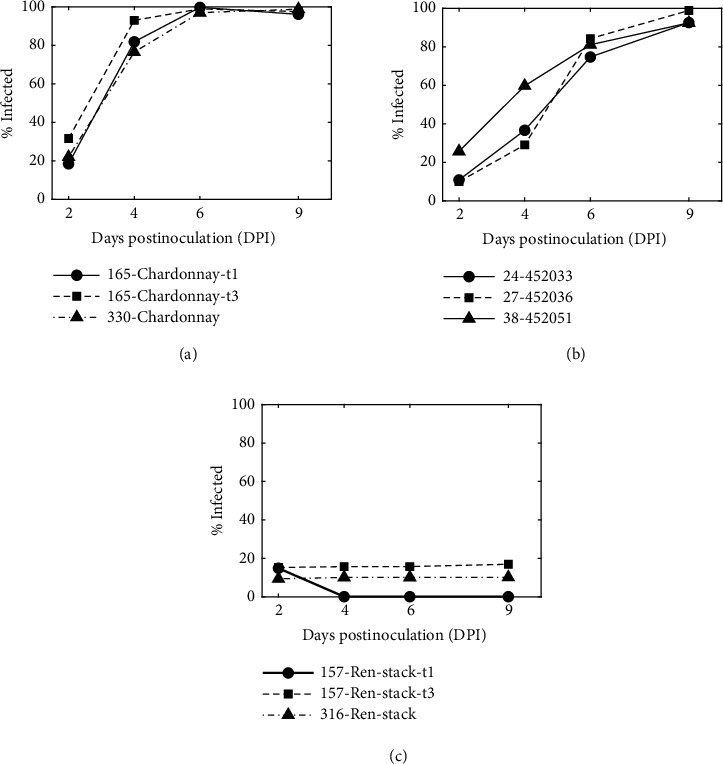

Experiment 2: Time-Series Mapping of Growth. From 2 to 9 dpi, the percentage of subimages with E. necator on susceptible or moderate samples increased along a logarithmic or sigmoidal curve, saturating near 100% at 6 or 9 dpi, respectively (e.g., Figure 7), while detection on resistant samples did not increase (Figure 8).

Figure 7.

Leaf disks imaged and scored for disease severity. A leaf disk of 452036 (“Horizon” × V. rupestris B38) inoculated with Erysiphe necator isolate Musc4 was imaged and scored for disease severity successively at 2, 4, 6, and 9 days postinoculation (dpi). The images are overlaid with a lighter shade to show subimages that were classified as infected (score > 0.50). The total percentage of infected leaf disk area, calculated as the percentage of subimages classified as infected, is given below each image.
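The severity value reported beneath each image in Figure 7 can be sketched as a simple thresholding step over per-subimage CNN scores. The snippet below is an illustration only: the function name `percent_infected` and the eight scores are hypothetical, and the only detail taken from the caption is the decision rule (score > 0.50).

```python
def percent_infected(scores, threshold=0.50):
    """Percent of subimages whose CNN score exceeds the threshold."""
    if not scores:
        raise ValueError("no subimage scores supplied")
    # A subimage counts as infected only when its score is strictly
    # greater than the threshold, matching "score > 0.50" in the caption.
    infected = sum(1 for s in scores if s > threshold)
    return 100.0 * infected / len(scores)


# Hypothetical scores for eight subimages from one leaf disk.
scores = [0.02, 0.97, 0.51, 0.50, 0.10, 0.88, 0.49, 0.75]

print(f"{percent_infected(scores):.1f}% of subimages classified as infected")
```

In practice a disk contributes approximately 800 scores rather than eight, so the metric has a resolution of roughly 0.125 percentage points per subimage.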

Figure 8.

Neural network determination of leaf disk infection as a function of time after inoculation. (a) Susceptible varieties, (b) moderate varieties, and (c) resistant varieties are shown. In the figure legend, the disk number relates each sample to the sample names shown in Table 6. Replicate disks t1 and t3 were from the same vine and were incubated and imaged on two different trays.

Experiment 3: Comparison to Hyphal Transect Technique. When time-series outcomes were modeled as a linear function of hyphal transect counts, R² values were higher for growth rate (R² = 0.933, p < 0.001) and AUDPC (R² = 0.951, p < 0.001) than for percent of infected subimages at 9 dpi (R² = 0.867, p < 0.001; Table 6).

Table 6.

Hyphal transect counts and neural network results (% infected subimages) for 9 leaf disks measured 9 days after inoculation. Growth rate coefficient and AUDPC were calculated from data shown in Figure 8.

| Sample name | Category | Transect count, H (manual) | Transect count, V (manual) | Transect count, H+V (manual) | % Infected (APS) | Growth rate coefficient | AUDPC, normalized (max = 100) |
|---|---|---|---|---|---|---|---|
| 157-Ren-stack-t1 (R) | No infection | 0 | 0 | 0 | 0.1 | 0.041 | 2.6 |
| 316-Ren-stack (R) | No infection | 0 | 0 | 0 | 10.2 | 0.30 | 11.5 |
| 157-Ren-stack-t3 (R) | No infection | 0 | 0 | 0 | 15.8 | 0.36 | 18.3 |
| 24-452033 (M) | Moderate | 83 | 197 | 280 | 92.6 | 0.97 | 67.0 |
| 27-452036 (M) | Moderate | 146 | 147 | 293 | 98.9 | 0.99 | 69.8 |
| 38-452051 (M) | Moderate | 130 | 169 | 299 | 92.4 | 1.23 | 79.6 |
| 165-Chardonnay-t1 (S) | Severe | 222 | 139 | 361 | 96.1 | 1.53 | 94.2 |
| 330-Chardonnay (S) | Severe | 237 | 246 | 483 | 98.8 | 1.48 | 92.6 |
| 165-Chardonnay-t3 (S) | Severe | 237 | 253 | 490 | 97.6 | 1.89 | 100.0 |
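The comparison in Experiment 3 can be retraced from the values in Table 6. The sketch below computes R² for a simple linear regression of each APS-derived metric on the manual transect count. It assumes that the combined H+V count is the predictor, which the text does not state explicitly, and the function name `r_squared` is illustrative rather than part of the study's software.

```python
def r_squared(x, y):
    """R² of a simple linear regression of y on x.

    For one predictor this equals the squared Pearson correlation.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length of at least 2")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy ** 2 / (sxx * syy)


# Values transcribed from Table 6, in row order.
transect_hv = [0, 0, 0, 280, 293, 299, 361, 483, 490]  # assumed predictor
pct_infected = [0.1, 10.2, 15.8, 92.6, 98.9, 92.4, 96.1, 98.8, 97.6]
growth_rate = [0.041, 0.30, 0.36, 0.97, 0.99, 1.23, 1.53, 1.48, 1.89]
audpc = [2.6, 11.5, 18.3, 67.0, 69.8, 79.6, 94.2, 92.6, 100.0]

for name, values in [
    ("% infected at 9 dpi", pct_infected),
    ("growth rate coefficient", growth_rate),
    ("AUDPC", audpc),
]:
    print(f"{name}: R2 = {r_squared(transect_hv, values):.3f}")
```

Under that assumption the three values should land close to those quoted in the text, with percent infected giving the weakest fit because it saturates near 100% for both moderate and susceptible samples.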

4. Discussion

In this study, we developed an APS capable of imaging 1100 to 2100 leaf disks in an 8-hour workday, passing those images to a CNN that accurately detects the presence or absence of E. necator hyphae in each of approximately 800 subimages per disk, and capturing time-course data. With this accuracy and throughput, the latter representing a 20- to 60-fold increase over manual assessment, our APS can now be implemented for phenotyping E. necator growth in various research applications, including host resistance, fungicide resistance, and other treatment effects. While CNNs have previously been applied to macroscopic images of plant leaves for disease assessment (e.g., [33]), and even for quantitative assessment of the severity of powdery mildew infection [34], to our knowledge our system is the first to apply CNN techniques to the microscopic detection of fungal hyphae before sporulation occurs. Microscopic detection of hyphae enables early detection of infection and estimation of growth rates, which increases capabilities in testing for treatment effects, such as host resistance or other disease management strategies.
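The daily throughput range follows from the per-disk imaging times of 13.5 to 26 seconds given in the abstract. A short worked calculation is sketched below; it assumes continuous operation over the full 8 hours with no overhead for loading trays, which is a simplification and not a claim about how the system is operated.

```python
SECONDS_PER_WORKDAY = 8 * 60 * 60  # 8-hour workday = 28,800 s


def disks_per_day(seconds_per_disk):
    """Leaf disks imaged per workday at a fixed per-disk imaging time."""
    if seconds_per_disk <= 0:
        raise ValueError("imaging time must be positive")
    return SECONDS_PER_WORKDAY / seconds_per_disk


slowest = disks_per_day(26.0)   # longest reported imaging time per disk
fastest = disks_per_day(13.5)   # shortest reported imaging time per disk

print(f"{slowest:.0f} to {fastest:.0f} disks per 8-hour day")
```

The two bounds round down to the 1100 and 2100 disks per day quoted in the text.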

The goal of the system is automated scoring that is highly correlated with the severity assessments of human observers. The correlations obtained here (r = 0.977 to 0.988; Table 5) were much higher than those previously obtained (r = 0.43 and 0.80) in a leaf disk-based computer vision system using a smartphone and pixel counting to quantify downy mildew caused by Plasmopara viticola [2], and higher than that obtained (r = 0.94) with a flatbed scanner and pixel counting used to quantify Septoria tritici blotch caused by Zymoseptoria tritici [3]. The agreement between experts and the APS reflects the number of training set classifications provided by each expert; experts supplying more training data had higher agreement with the APS. However, correlations between experts and the APS did not strictly follow this ordering. Agreement among the experts was higher than agreement between any of the experts and the APS, suggesting that any bias introduced by the choice of expert for CNN training was small. However, agreement among experts was only slightly higher than that between experts and the APS, suggesting that assessing further improvements in AI performance without observer bias would require new methodologies that do not rely on human experts.

The datasets used for evaluating GPMNet were acquired after the training dataset and included different grape germplasm, although Chardonnay was included in both. This approach ensured that the testing dataset was independent of the training dataset and perhaps provided a more rigorous test than randomly dividing a single image database into training, validation and test images as is commonly done [10–12]. Having a CNN that generalizes well to new accessions reduces the need to continually retrain the CNN, and is an important consideration when working with diverse breeding germplasm with a broad set of grape leaf characteristics.

An instance of different germplasm challenging the generality of the CNN is the higher-than-expected false-positive rate (10–16%) for the resistant samples 157-Ren-stack and 316-Ren-stack (Table 6) compared to the false-positive rate for the training data (2.3%). While this is likely a CNN generalization issue, other differences in experiment execution (such as the quantity of viable inoculum applied) or in sample images (such as the lighting or image focus) can also negatively affect the results. As a case in point, sample 157-Ren-stack-t1 exhibited a decrease in detected infection on day 4 and thereafter. Inspection of the images revealed that, starting on day 4, roughly half the image was not in sharp focus, probably because the leaf bent up off the agar along a major vein by a distance greater than the focus-stacking process could accommodate. Whatever caused the false positives in the sharply focused images was no longer present in the blurred images. A way to mitigate the training generalization problem is to make the training image set as inclusive as possible [35], which in this case means representative of the different grape samples that will later be analyzed.

Optical techniques for making hyphae more visually prominent are limited, which likely explains why previous image-based phenotyping usually required destructive sampling and staining [5]. Despite these imaging limitations, CNN-based machine vision systems can produce results similar to destructive sampling techniques without the need for staining and with much greater throughput. However, a large part of the success of the APS is in achieving high-resolution, high contrast images. Highest contrast of hyphae against the leaf background is attained by illumination at a high angle of incidence on the sample. Presumably, this is due to the 3-D structure of the hyphae intercepting the light and redirecting it to the imaging lens, while providing relatively ineffective illumination of the leaf surface itself.

Choosing an illuminating spectrum that minimizes reflection from the leaf surface also increases the contrast of the hyphae against the leaf background. Leaf reflectance is lowest for wavelengths less than 460 nm [36]. As with most biological tissues, the scattering coefficient of the hyaline hyphae can be expected to increase at shorter wavelengths [37], thereby increasing their visibility as the illuminating wavelength is decreased. Considering both effects, a light source having significant spectral output circa 450 nm can increase the brightness of the hyphae in the image while keeping the surrounding leaf surface dim. While even shorter wavelength illumination could further enhance this effect, the silicon-based image sensors in commercial cameras, which employ red, green, and blue (RGB) sensor channels, rapidly lose sensitivity at still shorter wavelengths, and image quality degrades because commercial optics are not optimized for wavelengths shorter than approximately 430 nm. Thus, with appropriate lighting, as employed by the APS, the 4–5 μm diameter fungal hyphae in nascent colonies of E. necator can be resolved on live samples within 48 hours after inoculation, using 3.5× magnification.
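Whether hyphae of this diameter can be resolved depends on how many pixels they span. A rough worked calculation is sketched below, using the pixel footprint of 1.44 μm² stated in the abstract and assuming square pixels; it considers spatial sampling only and ignores optical blur and contrast.

```python
import math

PIXEL_AREA_UM2 = 1.44  # leaf area represented by one pixel (from the abstract)

# Assumption: pixels are square, so the side length is the square root of the area.
pixel_side_um = math.sqrt(PIXEL_AREA_UM2)


def pixels_across(feature_um):
    """Number of pixels spanned by a feature of the given width in micrometers."""
    return feature_um / pixel_side_um


print(f"pixel side: {pixel_side_um:.2f} um")
for diameter_um in (4.0, 5.0):
    print(f"{diameter_um:.0f} um hypha spans {pixels_across(diameter_um):.1f} pixels")
```

A hypha 4 to 5 μm wide therefore covers roughly three to four pixels across its width, so adequate contrast from the lighting geometry and spectrum matters as much as magnification.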

The hyphal transect method represents the previous gold standard for manual quantification of grapevine powdery mildew disease severity [21]. Aside from differences in throughput and the ability to take repeated measures, it has strengths and weaknesses in data quality compared to the APS developed here. The primary weakness of hyphal transects comes from subsampling only along the vertical and horizontal transects, thus missing any fungal growth that occurs away from these lines. In the APS, once hyphae are present in nearly every subimage, the neural network metric saturates at 100%; however, hyphal transect counts can continue to increase as the density of hyphae increases. Thus, if a graded response among susceptible individuals is important, APS data need to be analyzed sooner after inoculation. The CNN could be modified from detecting the presence of hyphae in subimages to also estimating the number of hyphae in subimages. A smaller subimage size could potentially better reveal differences in hyphal density, but smaller subimages provide less information for determining an accurate classification, so this approach would have limited applicability.

Another approach to improving the correlation between APS results and the hyphal transect method is to use the time-series data to mathematically model fungal growth and predict hyphal transect counts at time points after inoculation. Susceptible samples showed rapid growth saturating near 100% infected area by day 6, while moderate samples showed delayed exponential growth saturating near day 9. These examples demonstrate the utility of the time-series data for providing growth information, even for the small sample size presented here. These or other time-series analyses, such as area under the disease progress stairs [32], may more accurately describe disease progress in other datasets.
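As an illustration of the time-series summaries mentioned here, the sketch below computes AUDPC by the trapezoidal rule and AUDPS from the same data. The AUDPS expression used, AUDPC + ((y_first + y_last)/2) × D/(n − 1) with D the time between first and last observation, is our reading of the formulation in [32] and should be checked against that paper before use. The time course is hypothetical and only mimics the 2, 4, 6, and 9 dpi imaging schedule.

```python
def audpc(times, severity):
    """Area under the disease progress curve (trapezoidal rule)."""
    if len(times) != len(severity) or len(times) < 2:
        raise ValueError("need at least two matched observations")
    return sum(
        (severity[i] + severity[i + 1]) / 2.0 * (times[i + 1] - times[i])
        for i in range(len(times) - 1)
    )


def audps(times, severity):
    """Area under the disease progress stairs.

    Assumed form: AUDPC + (y_first + y_last) / 2 * D / (n - 1),
    where D is the time between the first and last observation.
    """
    n = len(times)
    duration = times[-1] - times[0]
    correction = (severity[0] + severity[-1]) / 2.0 * duration / (n - 1)
    return audpc(times, severity) + correction


# Hypothetical time course: percent infected subimages at each imaging day.
dpi = [2, 4, 6, 9]
pct_infected = [5.0, 40.0, 90.0, 100.0]

print(f"AUDPC = {audpc(dpi, pct_infected):.1f}")
print(f"AUDPS = {audps(dpi, pct_infected):.1f}")
```

Unlike the trapezoidal AUDPC, which gives the first and last observations half the weight of interior ones, the stairs formulation weights them more fully, which may matter when only four time points are available.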

While we chose GoogLeNet for the current study, more recent image classification networks (e.g., Inception-V3 [38] or ResNet [39]) have surpassed GoogLeNet in accuracy for classifying labeled images. However, versions of these networks are often much larger than GoogLeNet and thus require more time and computer resources to train and use. Their efficiency in terms of accuracy per computational unit can also be significantly lower, to the point where computation time limits sample throughput [40]. To verify the lower efficiency of larger networks on our classification problem, we tried several training runs using an Inception-V3 network, modified in the same way as GoogLeNet to fit our needs and trained on the same dataset. Inception-V3 yielded at most a 1% increase in training set accuracy but required twice the computation time.

Acknowledgments

We thank Mary Jean Welser, Deb Johnston, Xia Xu, and Mike Colizzi for support in propagating and maintaining the germplasm described here, Bruce Reisch for providing the Ren-stack and V. rupestris × “Horizon” samples, and Bernie Prins, John Preece, and the USDA-ARS National Clonal Germplasm Repository for providing the DVIT2732 samples. The US Department of Agriculture, National Institute of Food and Agriculture, Specialty Crop Research Initiative provided funding for this project [awards 2011-51181-30635 and 2017-51181-26829].

Data Availability

All data are available upon request. Please contact the corresponding author. The imaging control software is available at https://github.com/LightingResearchCenter/Plant-Imaging-Platform. The image analysis software (i.e., the neural network) is available at https://github.com/LightingResearchCenter/GPMNet.

Disclosure

Mention of trade names or commercial products is solely for the purpose of providing specific information and does not imply recommendation or endorsement by the US Department of Agriculture. USDA is an equal opportunity provider and employer.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this article.

Authors' Contributions

All authors participated in team discussions, designing and planning the study, and reviewing the manuscript. Andrew Bierman and Tim LaPlumm developed and coded the automation algorithms, developed the CNN, collected and analyzed the data, and wrote sections of the manuscript. Lance Cadle-Davidson originated the idea for automating the process, provided expertise on phenotyping grapevine, and wrote sections of the manuscript. David Gadoury provided expertise on powdery mildew and optical imaging and contributed to sections of the manuscript. Dani Martinez provided camera interface software code and collected data. Surya Sapkota prepared samples and collected data. Mark Rea provided project oversight and guidance.

References

- 1. Mutka A. M., Bart R. S. Image-based phenotyping of plant disease symptoms. Frontiers in Plant Science. 2015;5, article no. 734. doi: 10.3389/fpls.2014.00734.

- 2. Divilov K., Wiesner-Hanks T., Barba P., Cadle-Davidson L., Reisch B. I. Computer vision for high-throughput quantitative phenotyping: a case study of grapevine downy mildew sporulation and leaf trichomes. Phytopathology. 2017;107(12):1549–1555. doi: 10.1094/PHYTO-04-17-0137-R.

- 3. Stewart E. L., Hagerty C. H., Mikaberidze A., Mundt C. C., Zhong Z., McDonald B. A. An improved method for measuring quantitative resistance to the wheat pathogen Zymoseptoria tritici using high-throughput automated image analysis. Phytopathology. 2016;106(7):782–788. doi: 10.1094/PHYTO-01-16-0018-R.

- 4. Rousseau C., Belin E., Bove E., et al. High throughput quantitative phenotyping of plant resistance using chlorophyll fluorescence image analysis. Plant Methods. 2013;9(1):p. 17. doi: 10.1186/1746-4811-9-17.

- 5. Seiffert U., Schweizer P. A pattern recognition tool for quantitative analysis of in planta hyphal growth of powdery mildew fungi. Molecular Plant-Microbe Interactions. 2005;18(9):906–912. doi: 10.1094/MPMI-18-0906.

- 6. Roska T., Hamori J., Labos E., et al. The use of CNN models in the subcortical visual pathway. IEEE Transactions on Circuits and Systems I: Fundamental Theory and Applications. 1993;40(3):182–195. doi: 10.1109/81.222799.

- 7. Horvath A., Hillmer M., Lou Q., Hu X. S., Niemier M. Cellular neural network friendly convolutional neural networks - CNNs with CNNs. Proceedings of the 20th Design, Automation and Test in Europe (DATE '17); March 2017; Lausanne, Switzerland. pp. 145–150.

- 8. Liu W., Wang Z., Liu X., Zeng N., Liu Y., Alsaadi F. E. A survey of deep neural network architectures and their applications. Neurocomputing. 2017;234:11–26. doi: 10.1016/j.neucom.2016.12.038.

- 9. Rawat W., Wang Z. Deep convolutional neural networks for image classification: a comprehensive review. Neural Computation. 2017;29(9):2352–2449. doi: 10.1162/neco_a_00990.

- 10. DeChant C., Wiesner-Hanks T., Chen S., et al. Automated identification of northern leaf blight-infected maize plants from field imagery using deep learning. Phytopathology. 2017;107(11):1426–1432. doi: 10.1094/PHYTO-11-16-0417-R.

- 11. Mohanty S. P., Hughes D. P., Salathé M. Using deep learning for image-based plant disease detection. Frontiers in Plant Science. 2016;7, article 1419. doi: 10.3389/fpls.2016.01419.

- 12. Ghosal S., Blystone D., Singh A. K., Ganapathysubramanian B., Singh A., Sarkar S. An explainable deep machine vision framework for plant stress phenotyping. Proceedings of the National Academy of Sciences of the United States of America. 2018;115(18):4613–4618. doi: 10.1073/pnas.1716999115.

- 13. Szegedy C., Liu W., Jia Y., et al. Going deeper with convolutions. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR '15); June 2015; Boston, Mass, USA. IEEE; pp. 1–9.

- 14. Image-net.org, http://image-net.org/

- 15. Gadoury D. M., Cadle-Davidson L., Wilcox W. F., Dry I. B., Seem R. C., Milgroom M. G. Grapevine powdery mildew (Erysiphe necator): a fascinating system for the study of the biology, ecology and epidemiology of an obligate biotroph. Molecular Plant Pathology. 2012;13(1):1–16. doi: 10.1111/j.1364-3703.2011.00728.x.

- 16. Fuller K. B., Alston J. M., Sambucci O. S. The value of powdery mildew resistance in grapes: evidence from California. Wine Economics and Policy. 2014;3(2):90–107. doi: 10.1016/j.wep.2014.09.001.

- 17. Barba P., Cadle-Davidson L., Harriman J., et al. Grapevine powdery mildew resistance and susceptibility loci identified on a high-resolution SNP map. Theoretical and Applied Genetics. 2014;127(1):73–84. doi: 10.1007/s00122-013-2202-x.

- 18. Feechan A., Kocsis M., Riaz S., et al. Strategies for RUN1 deployment using RUN2 and REN2 to manage grapevine powdery mildew informed by studies of race specificity. Phytopathology. 2015;105(8):1104–1113. doi: 10.1094/PHYTO-09-14-0244-R.

- 19. Fresnedo-Ramírez J., Yang S., Sun Q., et al. An integrative AmpSeq platform for highly multiplexed marker-assisted pyramiding of grapevine powdery mildew resistance loci. Molecular Breeding. 2017;37(12).

- 20. Pap D., Riaz S., Dry I. B., et al. Identification of two novel powdery mildew resistance loci, Ren6 and Ren7, from the wild Chinese grape species Vitis piasezkii. BMC Plant Biology. 2016;16(1). doi: 10.1186/s12870-016-0855-8.

- 21. Cadle-Davidson L., Gadoury D., Fresnedo-Ramírez J., et al. Lessons from a phenotyping center revealed by the genome-guided mapping of powdery mildew resistance loci. Phytopathology. 2016;106(10):1159–1169. doi: 10.1094/PHYTO-02-16-0080-FI.

- 22. Teh S. L., Fresnedo-Ramírez J., Clark M. D., et al. Genetic dissection of powdery mildew resistance in interspecific half-sib grapevine families using SNP-based maps. Molecular Breeding. 2017;37(1):p. 1. doi: 10.1007/s11032-016-0586-4.

- 23. Frenkel O., Cadle-Davidson L., Wilcox W. F., Milgroom M. G. Mechanisms of resistance to an azole fungicide in the grapevine powdery mildew fungus, Erysiphe necator. Phytopathology. 2015;105(3):370–377. doi: 10.1094/PHYTO-07-14-0202-R.

- 24. VitisGen2, https://www.vitisgen2.org/

- 25. Barba P., Cadle-Davidson L., Galarneau E., Reisch B. Vitis rupestris B38 confers isolate-specific quantitative resistance to penetration by Erysiphe necator. Phytopathology. 2015;105(8):1097–1103. doi: 10.1094/PHYTO-09-14-0260-R.

- 26. Helicon Focus 6, 2017.

- 27. Huang W., Jing Z. Evaluation of focus measures in multi-focus image fusion. Pattern Recognition Letters. 2007;28(4):493–500. doi: 10.1016/j.patrec.2006.09.005.

- 28. Hovden R., Xin H. L., Muller D. A. Extended depth of field for high-resolution scanning transmission electron microscopy. Microscopy and Microanalysis. 2011;17(1):75–80. doi: 10.1017/S1431927610094171.

- 29. MATLAB® 2017b, Mathworks, 2017.

- 30. Sigernes F., Dyrland M., Peters N., et al. The absolute sensitivity of digital colour cameras. Optics Express. 2009;17(22):p. 20211. doi: 10.1364/OE.17.020211.

- 31. Helicon Focus 6, Rendering Methods. 2017, https://www.heliconsoft.com/focus/help/english/HeliconFocus.html#HF_METHODS.

- 32. Simko I., Piepho H. The area under the disease progress stairs: calculation, advantage, and application. Phytopathology. 2012;102(4):381–389. doi: 10.1094/PHYTO-07-11-0216.

- 33. Mohanty S. P., Hughes D. P., Salathé M. Using deep learning for image-based plant disease detection. Frontiers in Plant Science. 2016;7:p. 1419. doi: 10.3389/fpls.2016.01419.

- 34. Lin K., Gong L., Huang Y., Liu C., Pan J. Deep learning-based segmentation and quantification of cucumber powdery mildew using convolutional neural network. Frontiers in Plant Science. 2019;10:p. 155. doi: 10.3389/fpls.2019.00155.

- 35. Sladojevic S., Arsenovic M., Anderla A., Culibrk D., Stefanovic D. Deep neural networks based recognition of plant diseases by leaf image classification. Computational Intelligence and Neuroscience. 2016;2016:11. doi: 10.1155/2016/3289801.

- 36. Hall A. Remote sensing applications for viticultural terroir analysis. Elements. 2018;14(3):185–190. doi: 10.2138/gselements.14.3.185.

- 37. Jacques S. L. Optical properties of biological tissues: a review. Physics in Medicine and Biology. 2013;58(11):R37–R61. doi: 10.1088/0031-9155/58/11/R37.

- 38. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. Rethinking the inception architecture for computer vision. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR '16); July 2016; IEEE; pp. 2818–2826.

- 39. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. 2015, https://arxiv.org/abs/1512.03385.

- 40. Canziani A., Paszke A., Culurciello E. An analysis of deep neural network models for practical applications. 2016, https://arxiv.org/abs/1605.07678.
