Abstract

The rate of entropy production by a stochastic process quantifies how far it is from thermodynamic equilibrium. Equivalently, entropy production captures the degree to which global detailed balance and time-reversal symmetry are broken. Despite abundant references to entropy production in the literature and its many applications in the study of non-equilibrium stochastic particle systems, a comprehensive list of typical examples illustrating the fundamentals of entropy production is lacking. Here, we present a brief, self-contained review of entropy production and calculate it from first principles in a catalogue of exactly solvable setups, encompassing both discrete- and continuous-state Markov processes, as well as single- and multiple-particle systems. The examples covered in this work provide a stepping stone for further studies on entropy production of more complex systems, such as many-particle active matter, as well as a benchmark for the development of alternative mathematical formalisms.

Keywords: entropy production, active matter, exact results, stochastic thermodynamics

1. Introduction

Stochastic thermodynamics has progressively evolved into an essential tool in the study of non-equilibrium systems as it connects the quantities of interest in traditional thermodynamics, such as work, heat and entropy, to the properties of microscopically resolved fluctuating trajectories [1,2,3]. The possibility of equipping stochastic processes with a consistent thermodynamic and information-theoretic interpretation has resulted in a number of fascinating works, with the interface between mathematical physics and the biological sciences proving to be a particularly fertile ground for new insights (e.g., [4,5,6,7,8]). The fact that most of the applications live on the small scale is not surprising, since it is precisely at the microscopic scale that fluctuations start to play a non-negligible role.

The concept of entropy and, more specifically, entropy production has attracted particular interest, as a consequence of the quantitative handle it provides on the distinction between equilibrium systems, passive systems relaxing to equilibrium and genuinely non-equilibrium, ‘active’ systems. While there exist multiple routes to the mathematical formulation of entropy production [9,10,11,12,13,14], the underlying physical picture is consistent: the entropy production associated with an ensemble of stochastic trajectories quantifies the degree of certainty with which we can assert that a particular event originates from a given stochastic process or from its suitably defined conjugate (usually, its time-reverse). When averaged over long times (or over an ensemble), a non-vanishing entropy production signals time-reversal symmetry breaking at the microscopic scale. This implies, at least for Markovian systems, the existence of steady-state probability currents in the state space, which change sign under time-reversal. When a thermodynamically consistent description is available, the average rate of entropy production can be related to the rate of energy or information exchange between the system, the heat bath(s) it is connected to, and any other thermodynamic entity involved in the dynamics, such as a measuring device [15,16,17]. Whilst the rate of energy dissipation is of immediate interest since it captures how ‘costly’ it is to sustain specific dynamics (e.g., the metabolism sustaining the development of an organism [18,19]), entropy production has also been found to relate non-trivially to the efficiency and precision of the corresponding process via uncertainty relations [3,20]. Entropy production along fluctuating trajectories also plays a fundamental role in the formulation of various fluctuation theorems [12].

Given the recent interest in stochastic thermodynamics and entropy production in particular, as well as the increasing number of mathematical techniques implemented for the quantification of the latter, it is essential to have available a few well-understood reference systems for which exact results are known. These can play the role of benchmarks for new techniques, while helping neophytes to develop intuition. This is not to say that exact results for more complicated systems are not available (see, for example, [21]); however, they are usually limited to small systems and/or require numerical evaluation. In this work, we present results exclusively in the framework proposed by Gaspard [11], specifically in the form of Equations (4), (15) and (16), which we review and contextualise by deriving them via different routes in Section 2. In Section 3, we begin the analysis with processes in discrete state space (Section 3.1, Section 3.2, Section 3.3, Section 3.4, Section 3.5, Section 3.6, Section 3.7 and Section 3.8), and subsequently extend it to the continuous case (Section 3.9, Section 3.10 and Section 3.11). Finally, in Section 3.12 and Section 3.13 we consider processes that involve both discrete and continuous degrees of freedom. Time is taken as a continuous variable throughout.

2. Brief Review of Entropy Production

Entropy production of jump processes. The concept of time-dependent informational entropy associated with a given ensemble of stochastic processes was first introduced by Shannon [22]. For an arbitrary probability mass function $P_n(t)$ of time $t$ over a discrete set of states $n\in\Omega$, the Shannon entropy is defined as

$$S(t) = -\sum_{n\in\Omega} P_n(t)\ln P_n(t) \tag{1}$$

with the convention henceforth of $0\ln 0 = 0$ for $P_n(t) = 0$. It quantifies the inherent degree of uncertainty about the state of a process. In the microcanonical ensemble, $P_n(t)$ is constant in $t$ and $n$, and upon providing an entropy scale in the form of the Boltzmann constant $k_B$, Shannon’s entropy reduces to that of traditional thermodynamics given by Boltzmann’s $S = k_B\ln|\Omega|$, where $|\Omega|$ is the cardinality of $\Omega$. In Markovian systems, the probability $P_n(t)$ depends on $n$ and evolves in time $t$ according to the master equation

$$\dot P_n(t) = \sum_{m}\left(P_m(t)\,w_{mn} - P_n(t)\,w_{nm}\right) \tag{2}$$

with non-negative transition rates $w_{mn}$ from state $m$ to state $n\neq m$. Equation (2) reduces to $\dot P_n(t) = \sum_m P_m(t)w_{mn}$ by imposing the Markov condition $w_{nn} = -\sum_{m\neq n}w_{nm}$, equivalently $\sum_m w_{nm} = 0$, which we will use in the following. For simplicity, we will restrict ourselves to time-independent rates, but as far as the following discussion is concerned, generalising to time-dependent rates is a matter of replacing $w_{mn}$ by $w_{mn}(t)$. The rate of change of entropy for a continuous-time jump process can be derived by differentiating $S(t)$ in Equation (1) with respect to time, using $\sum_n\dot P_n(t) = 0$ by normalisation, and substituting (2) into the resulting expression [11,23], thus obtaining

$$\dot S(t) = -\sum_n \dot P_n(t)\ln P_n(t) = \frac12\sum_{n,m}\left(P_m(t)w_{mn}-P_n(t)w_{nm}\right)\ln\frac{P_m(t)}{P_n(t)} = \dot S_e(t) + \dot S_i(t) \tag{3}$$

where we define

$$\dot S_e(t) = -\frac12\sum_{n,m}\left(P_m(t)w_{mn}-P_n(t)w_{nm}\right)\ln\frac{w_{mn}}{w_{nm}} \tag{4a}$$

$$\dot S_i(t) = \frac12\sum_{n,m}\left(P_m(t)w_{mn}-P_n(t)w_{nm}\right)\ln\frac{P_m(t)\,w_{mn}}{P_n(t)\,w_{nm}} \tag{4b}$$

Since only ratios of rates and of probability fluxes enter the logarithms, any arbitrary positive rate introduced to keep intermediate logarithms dimensionless cancels trivially. Here we follow the convention [1] to split the rate of entropy change into two contributions: the first, Equation (4a), commonly referred to as “external” entropy production or entropy flow, is denoted by $\dot S_e$. It contains a factor $\ln(w_{mn}/w_{nm})$ corresponding, for systems satisfying local detailed balance, to the net change in entropy of the reservoir(s) associated with the system’s transition from state $m$ to state $n$. For such thermal systems, $-\dot S_e$ can thus be identified as the rate of entropy production in the environment [9,24]. The second contribution, Equation (4b), termed “internal” entropy production and denoted by $\dot S_i$, is non-negative because $(x-y)\ln(x/y)\geq 0$ for any two real, positive $x$, $y$, using the convention $(x-y)\ln(x/y) = 0$ for $x = y = 0$. The internal entropy production vanishes when the global detailed balance condition $P_m(t)w_{mn} = P_n(t)w_{nm}$ is satisfied for all pairs of states. In this sense, a non-vanishing $\dot S_i$ is the fingerprint of non-equilibrium phenomena. Defining $P^*_n$ as the probability mass function at steady-state, the internal entropy production rate can be further decomposed into two non-negative contributions, $\dot S_i = \dot S_a + \dot S_{na}$, of the form

$$\dot S_a(t) = \frac12\sum_{n,m}\left(P_m(t)w_{mn}-P_n(t)w_{nm}\right)\ln\frac{P^*_m\,w_{mn}}{P^*_n\,w_{nm}} \tag{5a}$$

$$\dot S_{na}(t) = \frac12\sum_{n,m}\left(P_m(t)w_{mn}-P_n(t)w_{nm}\right)\ln\frac{P_m(t)\,P^*_n}{P_n(t)\,P^*_m} \tag{5b}$$

These contributions are usually referred to as adiabatic (or housekeeping) and non-adiabatic (or excess) entropy production rates, respectively [1]. The non-adiabatic contribution vanishes at steady-state, $P_n(t) = P^*_n$. While these quantities have received attention in the context of fluctuation theorems [23], they will not be discussed further here. At steady-state, namely when $\dot P_n(t) = 0$ for all $n$, $\dot S$ in Equation (3) vanishes by construction, so that the internal and external contributions to the entropy production cancel each other exactly, $\dot S_i = -\dot S_e$, while they vanish individually only for systems at equilibrium. Equation (4) will be used throughout the present work to compute the entropy productions of discrete-state processes.
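As a numerical cross-check of Equations (2) to (4), the following Python sketch evaluates $\dot S_e$ and $\dot S_i$ for a given rate matrix and probability vector and compares their sum with $\dot S = -\sum_n\dot P_n\ln P_n$. The function names and the example rates are illustrative assumptions; the sketch assumes every edge is bidirectional and all $P_n > 0$, so that every logarithm is finite.

```python
import math


def entropy_rates(w, P):
    """Entropy flow (4a) and internal entropy production (4b).

    w[m][n] is the rate from state m to state n (diagonal entries ignored),
    P[n] the probability of state n. Assumes bidirectional edges and P[n] > 0.
    """
    d = len(P)
    s_e = s_i = 0.0
    for m in range(d):
        for n in range(d):
            if m == n or (w[m][n] == 0 and w[n][m] == 0):
                continue  # no edge between m and n
            flux = P[m] * w[m][n] - P[n] * w[n][m]
            s_e -= 0.5 * flux * math.log(w[m][n] / w[n][m])
            s_i += 0.5 * flux * math.log(P[m] * w[m][n] / (P[n] * w[n][m]))
    return s_e, s_i


def entropy_change(w, P):
    """dS/dt = -sum_n Pdot_n ln P_n, with Pdot_n from the master equation (2)."""
    d = len(P)
    s_dot = 0.0
    for n in range(d):
        p_dot = sum(P[m] * w[m][n] - P[n] * w[n][m] for m in range(d) if m != n)
        s_dot -= p_dot * math.log(P[n])
    return s_dot


# Three-state cycle: rate alpha for 1->2->3->1, beta for the reverse direction
alpha, beta = 2.0, 1.0
w = [[0.0, alpha, beta],
     [beta, 0.0, alpha],
     [alpha, beta, 0.0]]
P = [0.5, 0.3, 0.2]
s_e, s_i = entropy_rates(w, P)
print(s_e + s_i, entropy_change(w, P))  # the two numbers agree, Eq. (3)
```

For the uniform distribution the same rates give $\dot S_i = -\dot S_e = \ln 2$, anticipating the three-state example of Section 3.2.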

Entropy production as a measure of time-reversal-symmetry breaking. As it turns out, a deeper connection between internal entropy production and time-reversal symmetry breaking can be established [11]. The result, which we re-derive below, identifies $\dot S_i$ as the relative dynamical entropy (i.e., the Kullback–Leibler divergence [25]) per unit time of the ensemble of forward paths and their time-reversed counterparts. To see this, we first need to define a path $\mathbf n = (n_0, n_1, \ldots, n_M)$ as the set of trajectories starting at time $t_0$ and visiting states $n_j$ at successive discrete times $t_j = t_0 + j\Delta t$ with $j = 0, 1, \ldots, M$, equally spaced by a time interval $\Delta t$. For a time-homogeneous Markovian jump process in continuous time, the joint probability of observing a particular path is

$$\mathcal P(\mathbf n) = P_{n_0}(t_0)\,T_{n_0n_1}(\Delta t)\,T_{n_1n_2}(\Delta t)\cdots T_{n_{M-1}n_M}(\Delta t) \tag{6}$$

where $P_{n_0}(t_0)$ is the probability of observing the system in state $n_0$ at time $t_0$, while $T_{n_jn_{j+1}}(\Delta t)$ is the probability that the system is in state $n_{j+1}$ a time $\Delta t$ after being in state $n_j$. This probability can be expressed in terms of the transition rate matrix $w$ with elements $w_{mn}$: it is $T_{mn}(\Delta t) = [\exp(w\Delta t)]_{mn}$, the matrix elements of the exponential of the matrix $w\Delta t$ with the Markov condition imposed. It can be expanded in small $\Delta t$ as

$$T_{mn}(\Delta t) = \delta_{mn} + w_{mn}\Delta t + \mathcal O(\Delta t^2) \tag{7}$$

where $\delta_{mn}$ is the Kronecker-δ function. We can now define a dynamical entropy per unit time [22] as

$$h = -\lim_{M\to\infty}\frac{1}{M\Delta t}\sum_{\mathbf n}\mathcal P(\mathbf n)\ln\mathcal P(\mathbf n) \tag{8}$$

where the limit $M\to\infty$ is to be considered a continuous-time limit taken at fixed $\mathcal T = M\Delta t$ [26], thus determining the sampling interval $\Delta t = \mathcal T/M$, and the sum runs over all possible paths $\mathbf n$. Other than $\Delta t$, the paths are the only quantity on the right-hand side of Equation (8) that depend on $M$. The dynamical entropy may be considered the expectation of $-\ln\mathcal P(\mathbf n)/(M\Delta t)$ across all paths. Similarly to the static Shannon entropy, the dynamical entropy quantifies the inherent degree of uncertainty about the evolution over a time $\mathcal T$ of a process starting at a given time $t_0$. To compare with the dynamics as observed under time reversal, one introduces the time-reversed path $\mathbf n^R = (n_M, n_{M-1}, \ldots, n_0)$ and thus the time-reversed dynamical entropy per unit time as

$$h^R = -\lim_{M\to\infty}\frac{1}{M\Delta t}\sum_{\mathbf n}\mathcal P(\mathbf n)\ln\mathcal P(\mathbf n^R) \tag{9}$$

While similar in spirit to $h$, the physical interpretation of $h^R$ as the expectation of $-\ln\mathcal P(\mathbf n^R)/(M\Delta t)$ under the forward probability $\mathcal P(\mathbf n)$ is more convoluted, since it involves the forward and the backward paths simultaneously, which have potentially different statistics. However, time-reversal symmetry implies precisely identical statistics of the two ensembles, whence $h^R = h$. The motivation for introducing $h^R$ is that the difference of the two dynamical entropies defined above is a non-negative Kullback–Leibler divergence given by

$$h^R - h = \lim_{M\to\infty}\frac{1}{M\Delta t}\sum_{\mathbf n}\mathcal P(\mathbf n)\ln\frac{\mathcal P(\mathbf n)}{\mathcal P(\mathbf n^R)} \geq 0 \tag{10}$$

Using Equation (6) in (10) with Equation (7) provides the expansion

$$h^R - h = \frac12\sum_{n,m}\left(P_m w_{mn} - P_n w_{nm}\right)\ln\frac{P_m w_{mn}}{P_n w_{nm}} + \mathcal O(\Delta t) \tag{11}$$

which is an instantaneous measure of the Kullback–Leibler divergence. The limit of $h^R - h$ in small $\Delta t$ is finite and identical to the internal entropy production (4b) derived above. This result establishes the profound connection between broken global detailed balance, Equation (4), and Kullback–Leibler divergence, Equation (11), both of which can thus be recognised as fingerprints of non-equilibrium systems. In light of this connection, it might not come as a surprise that the steady-state rate of entropy production is inversely proportional to the minimal time needed to decide on the direction of the arrow of time [27].
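The convergence stated after Equation (11) can be probed numerically. The sketch below is a minimal illustration under the assumption of the shortest possible paths ($M = 1$), with forward and reversed paths both weighted by the same single-time distribution $P_n$; it builds $T(\Delta t) = \exp(w\Delta t)$ by a truncated Taylor series and compares the Kullback–Leibler divergence per unit time with Equation (4b). The example generator and all helper names are hypothetical.

```python
import math


def expm(A, terms=30):
    """Matrix exponential by truncated Taylor series (adequate for small entries)."""
    d = len(A)
    result = [[float(i == j) for j in range(d)] for i in range(d)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        # term <- term @ A / k, so that term = A^k / k!
        term = [[sum(term[i][l] * A[l][j] for l in range(d)) / k for j in range(d)]
                for i in range(d)]
        result = [[result[i][j] + term[i][j] for j in range(d)] for i in range(d)]
    return result


def path_kl_rate(w, P, dt):
    """(1/dt) * KL divergence between forward and reversed two-point paths."""
    d = len(P)
    T = expm([[w[i][j] * dt for j in range(d)] for i in range(d)])
    kl = 0.0
    for m in range(d):
        for n in range(d):
            fwd, bwd = P[m] * T[m][n], P[n] * T[n][m]
            kl += fwd * math.log(fwd / bwd)  # diagonal terms give ln 1 = 0
    return kl / dt


def s_dot_i(w, P):
    """Internal entropy production, Eq. (4b)."""
    d = len(P)
    return sum(0.5 * (P[m] * w[m][n] - P[n] * w[n][m])
               * math.log(P[m] * w[m][n] / (P[n] * w[n][m]))
               for m in range(d) for n in range(d) if m != n)


# Generator with the Markov condition imposed: each row sums to zero
alpha, beta = 2.0, 1.0
w = [[-(alpha + beta), alpha, beta],
     [beta, -(alpha + beta), alpha],
     [alpha, beta, -(alpha + beta)]]
P = [0.5, 0.3, 0.2]
for dt in (1e-2, 1e-3, 1e-4):
    print(dt, path_kl_rate(w, P, dt), s_dot_i(w, P))  # difference shrinks like dt
```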

Entropy production for continuous degrees of freedom. The results above were obtained for Markov jump processes within a discrete state space. However, the decomposition of the rate of change of entropy in Equation (3) into internal and external contributions can be readily generalised to Markovian processes with continuous degrees of freedom, for example a spatial coordinate. For simplicity, we will restrict ourselves to processes in one dimension, but as far as the following discussion is concerned, generalising to higher dimensions is a matter of replacing spatial derivatives and integrals over the spatial coordinate with their higher-dimensional counterparts. The dynamics of such a process with probability density $P(x,t)$ to find it at $x$ at time $t$ are captured by a Fokker–Planck equation of the form $\partial_tP(x,t) = -\partial_xj(x,t)$, with $j$ the probability current, augmented by an initial condition $P(x,t_0)$. Starting from the Gibbs–Shannon entropy $S(t) = -\int dx\,P(x,t)\ln\left(P(x,t)/P_0\right)$ for a continuous random variable, with some arbitrary density scale $P_0$ for dimensional consistency, we differentiate with respect to time and substitute $-\partial_xj(x,t)$ for $\partial_tP(x,t)$ to obtain

$$\dot S(t) = \int dx\,\partial_xj(x,t)\ln\frac{P(x,t)}{P_0} = -\int dx\,\frac{j(x,t)\,\partial_xP(x,t)}{P(x,t)} \tag{12}$$

where the term $-\int dx\,\partial_tP(x,t)$ arising from the derivative vanishes by normalisation, and the second equality follows upon integration by parts, assuming that boundary terms vanish. For the paradigmatic case of an overdamped colloidal particle, which will be discussed in more detail below (Section 3.9, Section 3.10 and Section 3.11), the probability current is given by $j(x,t) = \mu F(x,t)P(x,t) - D\partial_xP(x,t)$ with local, time-dependent force $F(x,t)$, mobility $\mu$ and diffusion constant $D$. We can then decompose the entropy production into internal and external contributions as

$$\dot S_i(t) = \int dx\,\frac{j^2(x,t)}{D\,P(x,t)} \tag{13}$$

and

$$\dot S_e(t) = -\int dx\,\frac{\mu F(x,t)\,j(x,t)}{D} \tag{14}$$

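respectively. The Kullback–Leibler divergence between the densities of forward and time-reversed paths can be calculated as outlined above for discrete-state systems, thus producing an alternative expression for the internal entropy production in the form

$$\dot S_i = \lim_{\Delta t\to0}\frac{1}{2\Delta t}\int dx\,dy\,\left(P(y)W(x,\Delta t|y) - P(x)W(y,\Delta t|x)\right)\ln\frac{P(y)W(x,\Delta t|y)}{P(x)W(y,\Delta t|x)} \tag{15}$$

Here we have introduced the propagator $W(x,\Delta t|y)$, the probability density that a system observed in state $y$ will be found at $x$ a time $\Delta t$ later. In general, here and above, the density $P(x)$ depends on the absolute time $t$, which we have omitted for better readability. The corresponding expression for the entropy flow is obtained by substituting (15) into the balance equation $\dot S_e = \dot S - \dot S_i$, whence

$$\dot S_e = \lim_{\Delta t\to0}\frac{1}{2\Delta t}\int dx\,dy\,\left(P(y)W(x,\Delta t|y) - P(x)W(y,\Delta t|x)\right)\ln\frac{W(y,\Delta t|x)}{W(x,\Delta t|y)} \tag{16}$$

Since $\lim_{\Delta t\to0}W(x,\Delta t|y) = \delta(x-y)$ [28] and $P(y)\delta(x-y) - P(x)\delta(y-x) = 0$, the factor in front of the logarithm in (15) and (16) vanishes in the limit of small $\Delta t$. Together with the prefactor $1/\Delta t$, this necessitates the use of L’Hôpital’s rule

$$\lim_{\Delta t\to0}\frac{P(y)W(x,\Delta t|y) - P(x)W(y,\Delta t|x)}{\Delta t} = P(y)\dot W(x|y) - P(x)\dot W(y|x) \tag{17}$$

where we used the shorthand

$$\dot W(x|y) = \lim_{\Delta t\to0}\frac{\partial}{\partial\Delta t}W(x,\Delta t|y) \tag{18}$$

which is generally given by the Fokker–Planck equation of the process, so that

$$\partial_tP(x,t) = \int dy\,\dot W(x|y)\,P(y,t) \tag{19}$$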

In the continuum processes considered below, in particular Section 3.11, Section 3.12 and Section 3.13, $\dot W(x|y)$ is a kernel in the form of Dirac δ-functions and derivatives thereof, acting under the integral over $x$ as the adjoint Fokker–Planck operator on functions of $x$. With Equation (17), the internal entropy production of a continuous process (15) may conveniently be written as

| (20a) |

| (20b) |

| (20c) |

with suitable constants and . Correspondingly, the (external) entropy flow (16) is

| (21a) |

| (21b) |

| (21c) |

All of these expressions assume that the limits of the logarithms exist. Naively taking the limit $\Delta t\to0$ inside the logarithms produces a meaningless expression with a Dirac δ-function in the denominator. Equations (20) and (21) are obtained in exactly the same manner as Equation (4), with the master Equation (2) replaced by the Fokker–Planck Equation (19). All of these expressions, Equations (4), (20) and (21), may thus be seen as Gaspard’s [11] framework.
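Equations (12) to (14) can be checked by simple quadrature. The following sketch assumes a ring of length $L$ (periodic boundaries, so no boundary terms arise in the integration by parts) and uses a hypothetical, non-stationary density $P(x) = [1+\epsilon\cos(2\pi x/L)]/L$ with a constant force. Since $j^2/(DP) - \mu Fj/D = -j\,\partial_xP/P$ holds pointwise, $\dot S_i + \dot S_e$ should reproduce Equation (12) up to rounding; all names and parameter values are illustrative.

```python
import math


def continuous_entropy_rates(P, dP, F, mu, D, L, n=20000):
    """Return (S_i-dot, S_e-dot, S-dot) from Eqs. (13), (14) and (12) on a ring [0, L).

    P, dP and F are callables for the density, its derivative and the force;
    the current is j = mu F P - D dP/dx. Midpoint quadrature with n cells.
    """
    h = L / n
    s_i = s_e = s_tot = 0.0
    for k in range(n):
        x = (k + 0.5) * h
        p, dp, f = P(x), dP(x), F(x)
        j = mu * f * p - D * dp
        s_i += h * j * j / (D * p)      # Eq. (13)
        s_e -= h * mu * f * j / D       # Eq. (14)
        s_tot -= h * j * dp / p         # Eq. (12), second form
    return s_i, s_e, s_tot


L, eps, mu, D = 1.0, 0.3, 1.0, 0.5
k = 2 * math.pi / L


def P(x):
    return (1 + eps * math.cos(k * x)) / L


def dP(x):
    return -eps * k * math.sin(k * x) / L


def F(x):
    return 1.5


s_i, s_e, s_tot = continuous_entropy_rates(P, dP, F, mu, D, L)
print(s_i, s_e, s_i + s_e, s_tot)  # s_i + s_e reproduces s_tot
```

For the uniform stationary density $P = 1/L$ the same function returns $\dot S_i = -\dot S_e = (\mu F)^2/D$, which is 4.5 for these parameters.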

Langevin description and stochastic entropy. We have seen in Equations (13) and (14) how the notion of entropy production can be extended to continuous degrees of freedom by means of a Fokker–Planck description of the stochastic dynamics. The Fokker–Planck equation is a deterministic equation for the probability density and thus provides a description at the level of ensembles, rather than single fluctuating trajectories. A complementary description can be provided by means of a Langevin equation of motion, which is instead a stochastic differential equation for the continuous degree of freedom [29]. The presence of an explicit noise term, which usually represents faster degrees of freedom or fluctuations induced by the contact with a heat reservoir, allows for a clearer thermodynamic interpretation. A paradigmatic example is that of the overdamped colloidal particle mentioned above, whose dynamics is described by

$$\dot x(t) = \mu F(x(t),t) + \xi(t) \tag{22}$$

with $\mu$ a mobility, $F(x,t)$ a generic force and $\xi(t)$ a white noise term with covariance $\langle\xi(t)\xi(t')\rangle = 2D\,\delta(t-t')$. For one-dimensional motion on the real line, the force can always be written as the negative gradient of a potential $V(x,t)$, namely $F(x,t) = -\partial_xV(x,t)$, so that it is conservative. For time-independent, stable potentials, $V(x,t) = V(x)$, this leads over long timeframes to an equilibrium steady-state. This property does not hold in higher dimensions or for different boundary conditions (e.g., periodic), in which case the force need not have a corresponding potential $V$ for which $F = -\nabla V$ [30].

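The concept of entropy is traditionally introduced at the level of ensembles. However, due to its role in fluctuation theorems [1,24], a consistent definition at the level of single trajectories is required. This can be constructed along the lines of [12] by positing the trajectory-dependent entropy $s(t) = s(x(t),t)$, where $x(t)$ is a random trajectory as given by Equation (22) and

$$s(x,t) = -\ln\frac{P(x,t)}{P_0} \tag{23}$$

Here, $P(x,t)$ denotes the probability density of finding a particle at position $x$ at time $t$ as introduced above and $P_0$ is a scale as used above to maintain dimensional consistency. Given that $x(t)$ is a random variable, so is $s(t)$, which may be regarded as an instantaneous entropy. Taking the total derivative with respect to $t$ produces

$$\dot s(t) = -\frac{\partial_tP(x,t)}{P(x,t)}\bigg|_{x=x(t)} - \frac{\partial_xP(x,t)}{P(x,t)}\bigg|_{x=x(t)}\circ\dot x(t) = -\frac{\partial_tP(x,t)}{P(x,t)}\bigg|_{x=x(t)} + \frac{j(x(t),t)}{D\,P(x(t),t)}\circ\dot x(t) - \frac{\mu F(x(t),t)}{D}\circ\dot x(t) \tag{24}$$

where we have used the process’s Fokker–Planck equation $\partial_tP(x,t) = -\partial_xj(x,t)$ with $j(x,t) = \mu F(x,t)P(x,t) - D\partial_xP(x,t)$. The total time derivative has been taken as a conventional derivative, implying the Stratonovich convention indicated by ∘, which will become relevant below. The term in (24) containing $\partial_tP(x,t)$ accounts for changes in the probability density due to its temporal evolution, such as relaxation to a steady state, and any time-dependent driving protocol. The product $F\circ\dot x$ can be interpreted as a power expended by the force and, in the absence of an internal energy of the particle, dissipated in the medium. With Einstein’s relation $D = \mu k_BT$ defining the temperature $T$ of the medium, the negative of the last term may be written as

$$\dot s_m(t) = \frac{\mu F(x(t),t)\circ\dot x(t)}{D} = \frac{F(x(t),t)\circ\dot x(t)}{k_BT} \tag{25}$$

and thus interpreted as the entropy change in the medium, in units of $k_B$. Together with the entropy change $\dot s(t)$ of the particle, this gives the total entropy change of particle and medium,

$$\dot s_{tot}(t) = \dot s(t) + \dot s_m(t) = -\frac{\partial_tP(x,t)}{P(x,t)}\bigg|_{x=x(t)} + \frac{j(x(t),t)}{D\,P(x(t),t)}\circ\dot x(t) \tag{26}$$

which is a random variable, as it depends on the position $x(t)$. It also draws on $P(x,t)$ and $j(x,t)$, which are properties of the ensemble. To make the connection to the entropies constructed above, we need to take an ensemble average of the instantaneous $\dot s_{tot}(t)$. To do so, we need an interpretation of the last term of (26), where the noise $\xi(t)$ of $\dot x(t)$, Equation (22), multiplies $j(x(t),t)/(DP(x(t),t))$. Equivalently, we need the joint density $\mathcal P(x,\dot x,t)$ of position $x$ and velocity $\dot x$ at time $t$. In the spirit of Ito, this density trivially factorises into a normally distributed $\dot x$ and $P(x,t)$, as the increment on the basis of (22) depends only on the particle’s current position $x(t)$. However, this is not so in the Stratonovich interpretation of $j\circ\dot x$, as here the increment depends equally on $x(t)$ and $x(t+dt)$ [1,31,32]. Taking the ensemble average of $\dot s_{tot}(t)$ thus produces

$$\langle\dot s_{tot}(t)\rangle = \int dx\,d\dot x\,\mathcal P(x,\dot x,t)\left[-\frac{\partial_tP(x,t)}{P(x,t)} + \frac{j(x,t)\,\dot x}{D\,P(x,t)}\right] \tag{27}$$

where $x$ and $\dot x$ are now dummy variables. The first term on the right-hand side vanishes, because $P(x,t)$ is the marginal of $\mathcal P(x,\dot x,t)$ and $\int dx\,\partial_tP(x,t) = 0$ by normalisation. The integral over $\dot x$ in the second term produces the expected particle velocity conditional to its position,

$$\langle\dot x\,|\,x\rangle = \frac{1}{P(x,t)}\int d\dot x\,\dot x\,\mathcal P(x,\dot x,t) \tag{28}$$

in the Stratonovich sense, where it gives rise to the current [12], $\langle\dot x\,|\,x\rangle = j(x,t)/P(x,t)$, so that

$$\langle\dot s_{tot}(t)\rangle = \int dx\,\frac{j^2(x,t)}{D\,P(x,t)} \tag{29}$$

which vanishes only in the absence of any probability current, i.e., in thermodynamic equilibrium. In the Ito sense, the conditional expectation (28) would instead have given rise to the ensemble-independent drift, $\langle\dot x\,|\,x\rangle = \mu F(x,t)$. Comparing to Equation (13), the expectation $\langle\dot s_{tot}(t)\rangle$ turns out to be the internal entropy production $\dot S_i(t)$, so that $\dot s_{tot}(t)$ of Equation (26) may be regarded as its instantaneous counterpart.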

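Path integral methods. An interesting aspect of working with the Langevin description is the possibility of casting probability densities for paths $x(t)$ with $t\in[t_0,t_0+\mathcal T]$ into path integrals, for example in the Onsager–Machlup formalism [33,34]. For the colloidal particle introduced in (22), it gives $\mathcal P[x]\propto\exp(-\mathcal A[x])$ with the action functional

$$\mathcal A[x] = \int_{t_0}^{t_0+\mathcal T}dt\left[\frac{\left(\dot x - \mu F(x,t)\right)^{\circ 2}}{4D} + \frac{\mu}{2}\partial_xF(x,t)\right] \tag{30}$$

in the Stratonovich discretisation, which differs from the Ito form only by the second term ([34], Section 4.5), which is the Jacobian of the transform of the noise $\xi$ to $x$, Equation (22). The Stratonovich form is needed so that the action does not give preference to a particular time direction [35]. This choice plays a role in every product of white noise, as is implicit to $\dot x$, and a random variable. We therefore indicate the choice by a ∘ also in powers, reminding us that in $(\dot x - \mu F(x,t))^{\circ 2}$ the force is to be evaluated at the midpoint $\left(x(t)+x(t+\Delta t)\right)/2$ of each increment, with discretisation time step $\Delta t$. Evaluating the action for the reversed path then gives

$$\mathcal A[x^R] = \int_{t_0}^{t_0+\mathcal T}dt\left[\frac{\left(\dot x^R - \mu F(x^R,t)\right)^{\circ 2}}{4D} + \frac{\mu}{2}\partial_xF(x^R,t)\right],\qquad x^R(t) = x(2t_0+\mathcal T-t) \tag{31}$$

$$\mathcal A[x^R] = \int_{t_0}^{t_0+\mathcal T}dt\left[\frac{\left(\dot x + \mu F(x,2t_0+\mathcal T-t)\right)^{\circ 2}}{4D} + \frac{\mu}{2}\partial_xF(x,2t_0+\mathcal T-t)\right] \tag{32}$$

If the force is even under time reversal, $F(x,2t_0+\mathcal T-t) = F(x,t)$, in particular when it is independent of time, the path probability density obeys

$$\ln\frac{\mathcal P[x]}{\mathcal P[x^R]} = \mathcal A[x^R] - \mathcal A[x] = \frac{\mu}{D}\int_{t_0}^{t_0+\mathcal T}dt\,F(x(t),t)\circ\dot x(t) \tag{33}$$

with random variables multiplied with Stratonovich convention. With Equation (25), the integral in Equation (33) can be identified as the entropy change of the medium. When the driving is time-independent and the system’s probability distribution eventually becomes stationary, such that $\partial_tP(x,t) = 0$, Equation (23), the only contribution to the total entropy change is due to the change of entropy in the medium, Equation (26). Assuming that the system is ergodic, we have the equivalence $\lim_{\mathcal T\to\infty}\mathcal T^{-1}\int_{t_0}^{t_0+\mathcal T}dt\,\dot s_{tot}(t) = \langle\dot s_{tot}\rangle$, where $\langle\cdot\rangle$ denotes an ensemble average. Using Equations (13) and (29) gives $\lim_{\mathcal T\to\infty}\mathcal T^{-1}\ln\left(\mathcal P[x]/\mathcal P[x^R]\right) = \dot S_i$. Equation (33) can therefore be used directly to compute the steady-state internal entropy production rate. The equivalence between the long-time limit and the ensemble average holds only for ergodic systems, whose unique steady-state does not depend on the specific initialisation $P(x,t_0)$. This connection between stochastic thermodynamics and field theory has stimulated a number of works aimed at characterising the non-equilibrium features of continuum models of active matter [13,36]. Extensions of this formalism to systems driven by correlated noise have also been proposed [37].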
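A minimal Monte Carlo sketch of Equation (33): it integrates Equation (22) with an Euler-Maruyama step and accumulates $(\mu/D)F\circ\Delta x$ using the Stratonovich midpoint rule. The example assumes a constant force $f$ on a ring, whose stationary density is uniform, so that by Equation (13) the long-time average should approach $\dot S_i = (\mu f)^2/D$. For a constant force the Ito and Stratonovich products coincide; the midpoint evaluation only matters for position-dependent forces. Parameter values and the function name are illustrative assumptions.

```python
import math
import random


def medium_entropy_rate(F, mu, D, T, dt, x0=0.0, seed=1):
    """Time average of (mu/D) F o dx along one trajectory of Eq. (22).

    Euler-Maruyama update; the Stratonovich product F o dx is evaluated with
    the midpoint rule F((x(t) + x(t + dt)) / 2) * (x(t + dt) - x(t)).
    Positions are unwrapped, which is harmless on a ring since only increments enter.
    """
    rng = random.Random(seed)
    x, s_m = x0, 0.0
    for _ in range(round(T / dt)):
        x_new = x + mu * F(x) * dt + math.sqrt(2 * D * dt) * rng.gauss(0.0, 1.0)
        s_m += (mu / D) * F(0.5 * (x + x_new)) * (x_new - x)
        x = x_new
    return s_m / T


mu, D, f = 1.0, 0.5, 1.5
estimate = medium_entropy_rate(lambda x: f, mu, D, T=1000.0, dt=0.01)
print(estimate, (mu * f) ** 2 / D)  # estimate fluctuates around 4.5
```

The statistical error of this single-trajectory estimate decays like $\mathcal T^{-1/2}$, which is why a long run is used.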

3. Systems

In this section, we calculate the entropy production rate on the basis of Gaspard’s framework [11], Equations (4), (15) and (16), for different particle systems. We cover the systems listed in Table 1, with both discrete and continuous states and with one or multiple particles.

Table 1.

List of particle systems for which we have calculated the entropy production $\dot S_i$.

| Section | System | $\dot S_i$ (Equation) |
|---|---|---|
| Section 3.1 | Two-state Markov process | (37) |
| Section 3.2 | Three-state Markov process | (41) |
| Section 3.3 | Random walk on a complete graph | (44), (45) |
| Section 3.4 | N independent, distinguishable Markov processes | (52) |
| Section 3.5 | N independent, indistinguishable two-state Markov processes | (55b) |
| Section 3.6 | N independent, indistinguishable d-state processes | (68) |
| Section 3.7 | Random walk on a lattice | (82) |
| Section 3.8 | Random walk on a ring lattice | (87), (89) |
| Section 3.9 | Driven Brownian particle | (94) |
| Section 3.10 | Driven Brownian particle in a harmonic potential | (100) |
| Section 3.11 | Driven Brownian particle on a ring with potential | (113d) |
| Section 3.12 | Run-and-tumble motion with diffusion on a ring | (121) |
| Section 3.13 | Switching diffusion process on a ring | (128) |

3.1. Two-State Markov Process

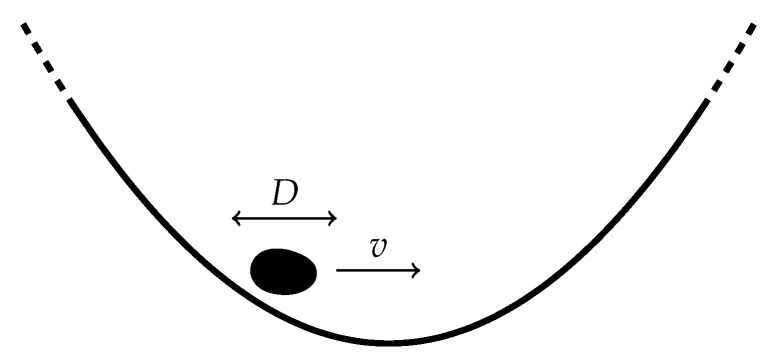

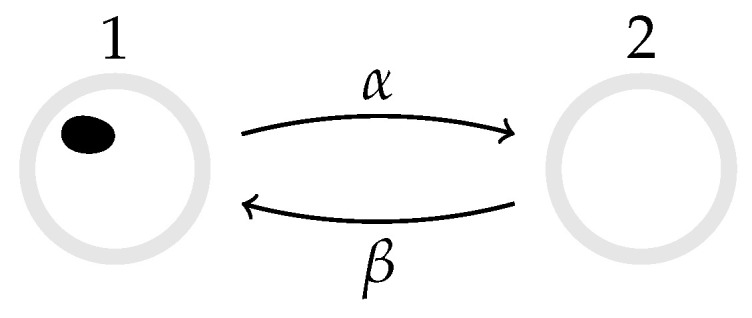

Consider a particle that hops between two states, 1 and 2, with transition rates $w_{12} = \alpha$ and $w_{21} = \beta$, see Figure 1 [23,38], using the notation of Equation (18) for discrete states. The rate matrix (see Equation (7)) is thus

$$w = \begin{pmatrix}-\alpha & \alpha\\ \beta & -\beta\end{pmatrix} \tag{34}$$

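with $P_1(t)$ and $P_2(t)$ the probability of the particle to be in state 1 or 2, respectively, as a function of time. By normalisation, $P_1(t) + P_2(t) = 1$, with probabilistic initial condition $P_1(0) = p$ and $P_2(0) = 1-p$. Solving the master equation in Equation (2) yields

$$P_1(t) = \frac{\beta}{\lambda} + \left(p - \frac{\beta}{\lambda}\right)e^{-\lambda t},\qquad P_2(t) = 1 - P_1(t),\qquad \lambda = \alpha+\beta \tag{35}$$

which relaxes at rate $\lambda = \alpha+\beta$ and corresponds to an exponentially decaying probability current

$$J(t) = \alpha P_1(t) - \beta P_2(t) = \left(\alpha p - \beta(1-p)\right)e^{-(\alpha+\beta)t} \tag{36}$$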

Figure 1.

Two-state Markov chain in continuous time. The black blob indicates the current state of the system. Independently of the choice of $\alpha$ and $\beta$, this process settles into an equilibrium steady-state over long timeframes (in the absence of an external time-dependent driving).

The internal entropy production (4b) is then

$$\dot S_i(t) = \left(\alpha p - \beta(1-p)\right)e^{-(\alpha+\beta)t}\ln\frac{\alpha P_1(t)}{\beta P_2(t)} \tag{37}$$

see Figure 2, and the entropy flow (4a),

$$\dot S_e(t) = -\left(\alpha p - \beta(1-p)\right)e^{-(\alpha+\beta)t}\ln\frac{\alpha}{\beta} \tag{38}$$

At stationarity, $\dot S_i = \dot S_e = 0$, and therefore the two-state Markov process reaches equilibrium. In this example, the topology of the transition network does not allow a sustained current between states, which inevitably leads to equilibrium in the steady state; entropy is therefore produced only during the relaxation of the system from its initial state.
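The closed forms (35) to (38) are easy to check against a finite-difference derivative of the Shannon entropy (1), since Equation (3) requires $\dot S = \dot S_i + \dot S_e$. A sketch in Python, with arbitrary example parameters and hypothetical helper names:

```python
import math


def two_state(alpha, beta, p, t):
    """Return P_1(t), J(t), S_i-dot(t) and S_e-dot(t) from Eqs. (35) to (38)."""
    lam = alpha + beta
    P1 = beta / lam + (p - beta / lam) * math.exp(-lam * t)   # Eq. (35)
    P2 = 1.0 - P1
    J = (alpha * p - beta * (1.0 - p)) * math.exp(-lam * t)   # Eq. (36)
    s_i = J * math.log(alpha * P1 / (beta * P2))              # Eq. (37)
    s_e = -J * math.log(alpha / beta)                         # Eq. (38)
    return P1, J, s_i, s_e


def shannon(P1):
    """Shannon entropy (1) of the two-state distribution (P1, 1 - P1)."""
    return -sum(q * math.log(q) for q in (P1, 1.0 - P1) if q > 0)


alpha, beta, p, t, h = 1.0, 2.0, 0.9, 0.3, 1e-6
P1, J, s_i, s_e = two_state(alpha, beta, p, t)
dS = (shannon(two_state(alpha, beta, p, t + h)[0])
      - shannon(two_state(alpha, beta, p, t - h)[0])) / (2 * h)
print(dS, s_i + s_e)  # agree up to the finite-difference error
```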

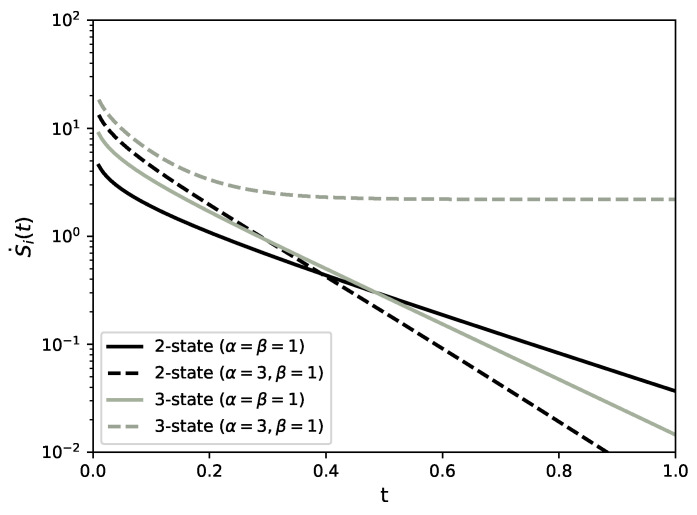

Figure 2.

Entropy production of the two- and three-state Markov processes (black and grey lines, respectively) discussed in Section 3.1 and Section 3.2 as a function of time. For the two-state system we plot Equation (37) both for the symmetric case, $\alpha = \beta$ (solid lines), and for an asymmetric case, $\alpha\neq\beta$ (dashed lines). In both, the entropy production decays exponentially over long timeframes. For the three-state system, Equation (41), the asymmetric case displays a finite entropy production rate over long timeframes, consistent with Equation (42) and the condition that $\dot S_i = -\dot S_e$ at stationarity.

3.2. Three-State Markov Process

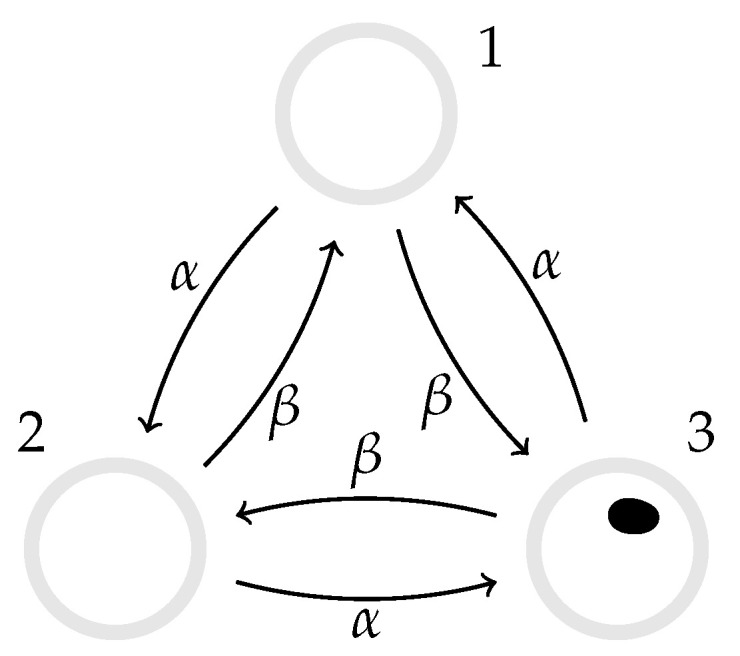

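We extend the system in Section 3.1 to three states, 1, 2 and 3, with transition rates $w_{12} = w_{23} = w_{31} = \alpha$ and $w_{21} = w_{32} = w_{13} = \beta$, see Figure 3, using the notation of Equation (18) for discrete states. The rate matrix (see Equation (7)) is then

$$w = \begin{pmatrix}-(\alpha+\beta) & \alpha & \beta\\ \beta & -(\alpha+\beta) & \alpha\\ \alpha & \beta & -(\alpha+\beta)\end{pmatrix} \tag{39}$$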

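Assuming the initial condition $P_1(0) = 1$, $P_2(0) = P_3(0) = 0$, the probabilities $P_1(t)$, $P_2(t)$ and $P_3(t)$ of states 1, 2 and 3, respectively, evolve according to Equation (2), which has solution

$$P_1(t) = \frac13 + \frac23e^{-\lambda t}\cos(\omega t) \tag{40a}$$

$$P_2(t) = \frac13 + \frac23e^{-\lambda t}\cos\left(\omega t - \frac{2\pi}{3}\right) \tag{40b}$$

$$P_3(t) = \frac13 + \frac23e^{-\lambda t}\cos\left(\omega t + \frac{2\pi}{3}\right) \tag{40c}$$

with $\lambda = \frac32(\alpha+\beta)$ and $\omega = \frac{\sqrt3}{2}(\alpha-\beta)$.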

Figure 3.

Three-state Markov chain in continuous time. The black blob indicates the current state of the system. Symmetry under cyclic permutation is introduced by imposing identical transition rates and for counter-clockwise and clockwise transition, respectively.

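The entropy production (4b) is then, using (40),

$$\dot S_i(t) = \sum_{n=1}^{3}\left(\alpha P_n(t) - \beta P_{n+1}(t)\right)\ln\frac{\alpha P_n(t)}{\beta P_{n+1}(t)},\qquad P_4(t)\equiv P_1(t) \tag{41}$$

see Figure 2, and the entropy flow (4a),

$$\dot S_e(t) = -\ln\frac{\alpha}{\beta}\sum_{n=1}^{3}\left(\alpha P_n(t) - \beta P_{n+1}(t)\right) = -(\alpha-\beta)\ln\frac{\alpha}{\beta} \tag{42}$$

which is constant throughout. At stationarity, the system is uniformly distributed, $P_n = 1/3$, and the entropy production and flow satisfy $\dot S_i = -\dot S_e = (\alpha-\beta)\ln(\alpha/\beta)$, which vanishes only if $\alpha = \beta$. If $\alpha\neq\beta$, the particle has a net drift that sustains a probability current in the system, which prevents the system from reaching equilibrium. This setup can be generalised straightforwardly to $M$-state Markov processes characterised by the same cyclic structure and rates $\alpha$ and $\beta$, to find that the steady-state entropy production $(\alpha-\beta)\ln(\alpha/\beta)$ is independent of $M$. These can be seen as simple models of protein conformational cycles driven by instantaneous energy inputs of order $k_BT\ln(\alpha/\beta)$, for example ATP hydrolysis [39].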
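The following sketch, assuming the initial condition $P(0) = (1,0,0)$ used for Equations (40), evaluates (40) to (42); the accompanying check confirms (40) against the master equation by central differences, that $\dot S_e$ is constant, and that $\dot S_i$ approaches $(\alpha-\beta)\ln(\alpha/\beta)$ at long times. Helper names and parameter values are hypothetical.

```python
import math


def three_state_P(alpha, beta, t):
    """Eqs. (40a)-(40c), assuming the initial condition P(0) = (1, 0, 0)."""
    lam = 1.5 * (alpha + beta)
    omega = 0.5 * math.sqrt(3.0) * (alpha - beta)
    return [1 / 3 + 2 / 3 * math.exp(-lam * t) * math.cos(omega * t - 2 * math.pi * k / 3)
            for k in range(3)]


def three_state_rates(alpha, beta, P):
    """Eq. (41) and the first form of Eq. (42); index k + 1 is taken modulo 3."""
    fluxes = [alpha * P[k] - beta * P[(k + 1) % 3] for k in range(3)]
    s_i = sum(J * math.log(alpha * P[k] / (beta * P[(k + 1) % 3]))
              for k, J in enumerate(fluxes))
    s_e = -sum(fluxes) * math.log(alpha / beta)
    return s_i, s_e


alpha, beta = 2.0, 0.5
for t in (0.1, 0.5, 2.0, 10.0):
    print(t, *three_state_rates(alpha, beta, three_state_P(alpha, beta, t)))
# s_e stays at -(alpha - beta) ln(alpha / beta); s_i tends to +(alpha - beta) ln(alpha / beta)
```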

3.3. Random Walk on a Complete Graph

Consider a random walker on a complete graph with $d$ nodes, where each node is connected to all other nodes, and the walker jumps from node $j$ to node $k\neq j$ with rate $w_{jk}$, see Figure 4. These are the off-diagonal elements of the corresponding Markov matrix, whose diagonal elements are $w_{jj} = -\sum_{k\neq j}w_{jk}$. The probability vector $\mathbf P(t)$ has components $P_j(t)$ that are the probability that the system is in state $j$ at time $t$. The general case of arbitrary transition rates is impossible to discuss exhaustively. In the uniform case, $w_{jk} = \gamma$ for all $j\neq k$, the Markov matrix has only two distinct eigenvalues, namely eigenvalue $-d\gamma$ with degeneracy $d-1$ and eigenvalue 0 with degeneracy 1. Assuming an arbitrary initial condition $P_j(0)$, the probability distribution at a later time $t$ is

$$P_j(t) = \frac1d + \left(P_j(0) - \frac1d\right)e^{-d\gamma t} \tag{43}$$

The steady state, which is associated with the vanishing eigenvalue, is the uniform distribution $P_j = 1/d$ for all $j$. The entropy production (4b) of the initial state relaxing to the uniform state is

$$\dot S_i(t) = \frac{\gamma}{2}e^{-d\gamma t}\sum_{j,k}\left(P_j(0) - P_k(0)\right)\ln\frac{P_j(t)}{P_k(t)} \tag{44}$$

and the entropy flow (4a) is $\dot S_e = 0$ throughout, since $w_{jk} = w_{kj}$. If the walker is initially located on node $k$, so that $P_j(0) = \delta_{jk}$, the entropy production simplifies to

$$\dot S_i(t) = \gamma(d-1)e^{-d\gamma t}\ln\frac{1 + (d-1)e^{-d\gamma t}}{1 - e^{-d\gamma t}} \tag{45}$$

We can see that the system reaches equilibrium at stationarity, since $\lim_{t\to\infty}\dot S_i(t) = 0$. Over long timeframes ($t\gg 1/(d\gamma)$), the asymptotic behaviour of $\dot S_i$ is

$$\dot S_i(t) \simeq \gamma d(d-1)e^{-2d\gamma t} \tag{46}$$

by expanding the logarithm in the small exponential.
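A short Python check that the closed form (45) agrees with the general expression (44) evaluated on the solution (43), and that (46) captures the late-time decay. The uniform rate is called `gamma` here, and the chosen $d$, $\gamma$ and times are arbitrary examples.

```python
import math


def complete_graph_P(P0, gamma, t):
    """Eq. (43): relaxation towards the uniform distribution 1/d."""
    d = len(P0)
    decay = math.exp(-d * gamma * t)
    return [1 / d + (p - 1 / d) * decay for p in P0]


def s_i_general(P, gamma):
    """Eq. (4b) with uniform rates gamma, i.e. Eq. (44) written in terms of P(t)."""
    return 0.5 * gamma * sum((pj - pk) * math.log(pj / pk)
                             for pj in P for pk in P if pj != pk)


def s_i_localised(d, gamma, t):
    """Eq. (45): walker initially on a single node."""
    e = math.exp(-d * gamma * t)
    return gamma * (d - 1) * e * math.log((1 + (d - 1) * e) / (1 - e))


d, gamma, t = 5, 0.7, 0.4
P = complete_graph_P([1.0] + [0.0] * (d - 1), gamma, t)
print(s_i_general(P, gamma), s_i_localised(d, gamma, t))  # identical
```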

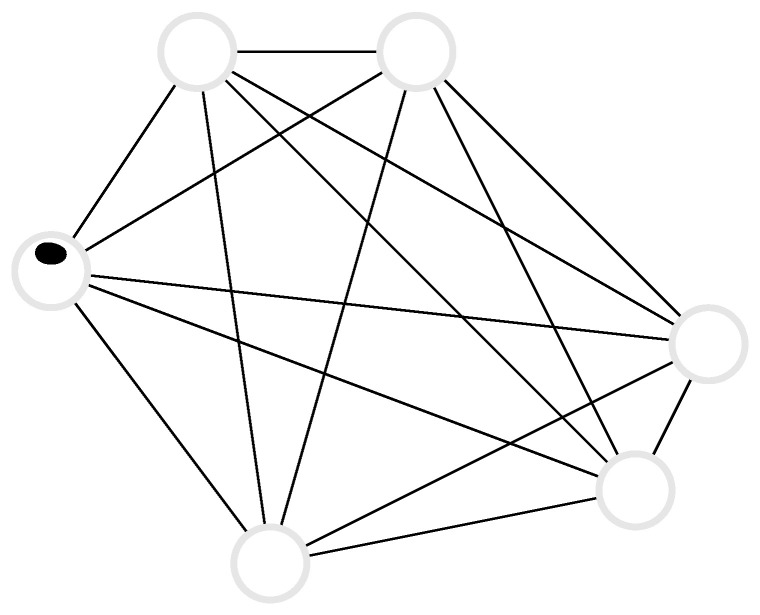

Figure 4.

Random walk on a complete graph of d nodes. The black blob indicates the current state of the system. For uniform transition rates, the symmetry under node relabelling leads to an equilibrium, homogeneous steady-state with $P_j = 1/d$ for all $j$.

3.4. N Independent, Distinguishable Markov Processes

In the following, we consider $N$ non-interacting, distinguishable particles undergoing Markovian dynamics on a discrete state space, see Figure 5. Each of the $N$ particles carries an index $\ell\in\{1,\ldots,N\}$ and is in state $n_\ell$, so that the state of the entire system is given by an $N$-particle state $\mathbf n = (n_1,\ldots,n_N)$. Particle distinguishability implies the factorisation of state and transition probabilities into their single-particle contributions, whence, provided the particles are initialised independently, the joint probability of an $N$-particle state factorises into a product of single-particle probabilities $P^{(\ell)}_{n_\ell}(t)$ of particle $\ell$ to be in state $n_\ell$,

$$P_{\mathbf n}(t) = \prod_{\ell=1}^{N}P^{(\ell)}_{n_\ell}(t) \tag{47}$$

Further, the Poissonian rate $w_{\mathbf n\mathbf m}$ from $N$-particle state $\mathbf n$ to $N$-particle state $\mathbf m\neq\mathbf n$ vanishes for all transitions that differ in more than one component $\ell$, i.e., unless there exists a single $\ell$ such that $n_k = m_k$ for all $k\neq\ell$, in which case $w_{\mathbf n\mathbf m} = w^{(\ell)}_{n_\ell m_\ell}$, the transition rate of the single-particle transition of particle $\ell$.

Figure 5.

Example of non-interacting, distinguishable processes. The black blobs indicate the current state of each sub-system.

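The entropy production of this $N$-particle system according to Equation (4b),

$$\dot S_i(t) = \frac12\sum_{\mathbf n,\mathbf m}\left(P_{\mathbf m}(t)w_{\mathbf m\mathbf n} - P_{\mathbf n}(t)w_{\mathbf n\mathbf m}\right)\ln\frac{P_{\mathbf m}(t)\,w_{\mathbf m\mathbf n}}{P_{\mathbf n}(t)\,w_{\mathbf n\mathbf m}} \tag{48}$$

simplifies considerably because $w_{\mathbf m\mathbf n}$ vanishes unless $\mathbf m$ and $\mathbf n$ differ in exactly one component, as the sum may be rewritten as

$$\sum_{\mathbf n,\mathbf m}(\cdots) = \sum_{\ell=1}^{N}\sum_{\mathbf n}\sum_{m_\ell}(\cdots)\Big|_{\mathbf m = \mathbf n^{(\ell)}(m_\ell)} \tag{49}$$

with $\mathbf n^{(\ell)}(m_\ell) = (n_1,\ldots,n_{\ell-1},m_\ell,n_{\ell+1},\ldots,n_N)$, so that $w_{\mathbf n^{(\ell)}(m_\ell)\,\mathbf n} = w^{(\ell)}_{m_\ell n_\ell}$ and

$$\dot S_i(t) = \frac12\sum_{\ell=1}^{N}\sum_{\mathbf n}\sum_{m_\ell}\left\{P_{\mathbf n^{(\ell)}(m_\ell)}w^{(\ell)}_{m_\ell n_\ell} - P_{\mathbf n}w^{(\ell)}_{n_\ell m_\ell}\right\}\ln\frac{P_{\mathbf n^{(\ell)}(m_\ell)}w^{(\ell)}_{m_\ell n_\ell}}{P_{\mathbf n}w^{(\ell)}_{n_\ell m_\ell}} \tag{50}$$

Since $\mathbf n^{(\ell)}(m_\ell)$ and $\mathbf n$ agree in every component $k\neq\ell$ for any $m_\ell$ inside the curly bracket, we may write

$$P_{\mathbf n^{(\ell)}(m_\ell)} = P^{(\ell)}_{m_\ell}\prod_{k\neq\ell}P^{(k)}_{n_k},\qquad P_{\mathbf n} = P^{(\ell)}_{n_\ell}\prod_{k\neq\ell}P^{(k)}_{n_k} \tag{51}$$

The product $\prod_{k\neq\ell}P^{(k)}_{n_k}$ can thus be taken outside the curly bracket in Equation (50) and be summed over, giving unity, as well as cancelled in the logarithm. After changing the dummy variables in the remaining summation from $n_\ell$ and $m_\ell$ to $n$ and $m$, respectively, the entropy production is

$$\dot S_i(t) = \sum_{\ell=1}^{N}\frac12\sum_{n,m}\left(P^{(\ell)}_m(t)w^{(\ell)}_{mn} - P^{(\ell)}_n(t)w^{(\ell)}_{nm}\right)\ln\frac{P^{(\ell)}_m(t)\,w^{(\ell)}_{mn}}{P^{(\ell)}_n(t)\,w^{(\ell)}_{nm}} = \sum_{\ell=1}^{N}\dot S_i^{(\ell)}(t) \tag{52}$$

which is the sum of the entropy productions $\dot S_i^{(\ell)}$ of the single particles, Equation (4b), irrespective of how each particle is initialised. The same argument applies to $\dot S_e$, the entropy flow Equation (4a). The entropy production and flow obviously simplify to an $N$-fold multiple of the single-particle expressions if the rates $w^{(\ell)}_{mn}$ do not depend on $\ell$ and all particles are initialised by the same $P^{(\ell)}_n(0)$, independent of $\ell$. This result may equally be found from the dynamical entropy per unit time, Equation (8).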
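The additivity (52) can be verified by brute force. The sketch below builds the joint rate matrix of two distinguishable particles, allowing only single-particle jumps as described after Equation (47), evaluates Equation (4b) on the product space and compares the result with the sum of single-particle values. The rates and probabilities are arbitrary illustrative numbers, all single-particle transitions are assumed bidirectional, and the helper names are hypothetical.

```python
import itertools
import math


def s_i(w, P):
    """Internal entropy production, Eq. (4b); w[m][n] is the rate m -> n.

    Assumes every transition is bidirectional (w[m][n] > 0 iff w[n][m] > 0)
    and P[n] > 0.
    """
    d = len(P)
    total = 0.0
    for m in range(d):
        for n in range(d):
            if m != n and w[m][n] > 0:
                a, b = P[m] * w[m][n], P[n] * w[n][m]
                total += 0.5 * (a - b) * math.log(a / b)
    return total


def product_system(ws, Ps):
    """Joint rates and probabilities of independent, distinguishable particles.

    Only one particle jumps at a time, and P factorises as in Eq. (47).
    """
    states = list(itertools.product(*[range(len(P)) for P in Ps]))
    index = {s: i for i, s in enumerate(states)}
    W = [[0.0] * len(states) for _ in states]
    for s in states:
        for ell, w in enumerate(ws):
            for target in range(len(w)):
                if target != s[ell]:
                    new = s[:ell] + (target,) + s[ell + 1:]
                    W[index[s]][index[new]] = w[s[ell]][target]
    P = [math.prod(Ps[ell][s[ell]] for ell in range(len(Ps))) for s in states]
    return W, P


w1 = [[0.0, 2.0], [1.0, 0.0]]                             # particle 1: two states
w2 = [[0.0, 3.0, 0.5], [1.0, 0.0, 2.0], [0.7, 1.5, 0.0]]  # particle 2: three states
P1, P2 = [0.8, 0.2], [0.1, 0.6, 0.3]
W, P = product_system([w1, w2], [P1, P2])
print(s_i(W, P), s_i(w1, P1) + s_i(w2, P2))  # equal, Eq. (52)
```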

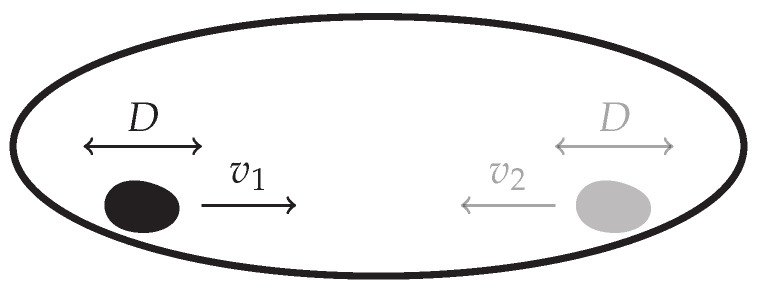

3.5. N Independent, Indistinguishable Two-State Markov Processes

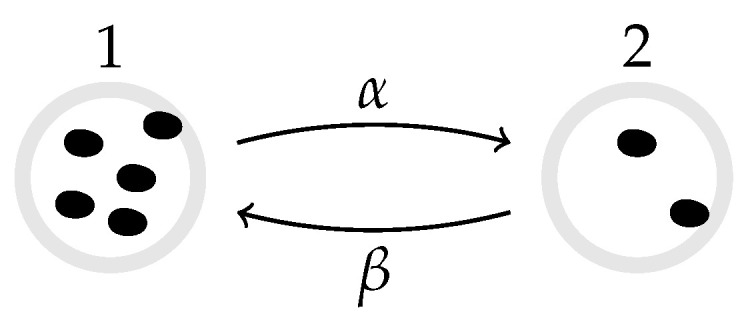

Suppose that $N$ identical, indistinguishable, non-interacting particles follow the two-state Markov process described in Section 3.1, Figure 6 [23]. There are $N+1$ distinct states, given by the occupation number $n\in\{0,1,\ldots,N\}$ of one of the two states, say state 1, as the occupation number $N-n$ of the other state follows given that the particle number $N$ is fixed under the dynamics. In the following, $P_n(t)$ denotes the probability of finding $n$ particles in state 1 at time $t$. The master equation is then

$$\dot P_n(t) = (n+1)\alpha P_{n+1}(t) + (N-n+1)\beta P_{n-1}(t) - \left(n\alpha + (N-n)\beta\right)P_n(t),\qquad P_{-1}\equiv P_{N+1}\equiv 0 \tag{53}$$

Figure 6.

N independent, indistinguishable two-state Markov processes in continuous time. The black blobs indicate the current state of the single-particle sub-system. Since processes are indistinguishable, states are fully characterised by the occupation number of either state, if the total number of particles is known.

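The state space and the evolution in it can be thought of as a hopping process on a one-dimensional chain of states with non-uniform rates. Provided $P_n(0)$ initially follows a binomial distribution, with probability $p$ for a particle to be placed in state 1 initially, the solution of Equation (53) is easily constructed from the solution in Equation (35) of Section 3.1 via

$$P_n(t) = \binom{N}{n}P_1(t)^n\left(1-P_1(t)\right)^{N-n} \tag{54}$$

with $P_1(t)$ given by Equation (35), as $P_1(0) = p$, which can be verified by substituting Equation (54) into Equation (53). Using Equations (34) and (54) in (4b), the entropy production reads

$$\dot S_i(t) = \sum_{n=0}^{N-1}\left((n+1)\alpha P_{n+1}(t) - (N-n)\beta P_n(t)\right)\ln\frac{(n+1)\alpha P_{n+1}(t)}{(N-n)\beta P_n(t)} \tag{55a}$$

$$= N\left(\alpha p - \beta(1-p)\right)e^{-(\alpha+\beta)t}\ln\frac{\alpha P_1(t)}{\beta P_2(t)} \tag{55b}$$

where we used that, for the binomial (54), $(n+1)P_{n+1}(t)/\left((N-n)P_n(t)\right) = P_1(t)/P_2(t)$ independently of $n$. This is the $N$-fold multiple of the result of the corresponding single-particle system, Equation (37). This result, Equation (55b), depends on the initialisation being consistent with Equation (54), which otherwise is recovered only asymptotically and only if the stationary distribution is unique.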
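A numerical sketch of Equations (53) to (55): it assumes binomial initialisation so that (54) holds, evaluates the occupation-number sum (55a) and compares it with $N$ times the single-particle result (37); the accompanying check also confirms (54) against the master equation (53) by central differences. Function names and parameter values are illustrative.

```python
import math


def P1_two_state(alpha, beta, p, t):
    """Single-particle occupation probability of state 1, Eq. (35)."""
    lam = alpha + beta
    return beta / lam + (p - beta / lam) * math.exp(-lam * t)


def binomial_P(N, q):
    """Occupation-number distribution, Eq. (54), with q = P_1(t)."""
    return [math.comb(N, n) * q**n * (1 - q)**(N - n) for n in range(N + 1)]


def s_i_occupation(N, alpha, beta, P):
    """Eq. (55a): sum over neighbouring occupation numbers n and n + 1."""
    total = 0.0
    for n in range(N):
        down = (n + 1) * alpha * P[n + 1]  # flux n + 1 -> n (a particle leaves state 1)
        up = (N - n) * beta * P[n]         # flux n -> n + 1 (a particle enters state 1)
        total += (down - up) * math.log(down / up)
    return total


N, alpha, beta, p, t = 6, 1.3, 0.4, 0.9, 0.5
q = P1_two_state(alpha, beta, p, t)
P = binomial_P(N, q)
single = (alpha * q - beta * (1 - q)) * math.log(alpha * q / (beta * (1 - q)))  # Eq. (37)
print(s_i_occupation(N, alpha, beta, P), N * single)  # equal, Eq. (55b)
```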

Further, the entropy production of N indistinguishable particles being the N-fold entropy production of a single particle does not extend to the external entropy flow, which lacks the simplification of the logarithm and gives

| (56) |

thus picking up a correction in the form of the additional sum in the curly bracket that vanishes only at or , but does not contribute at stationarity because of the overall prefactor that converges to 0. To make sense of this correction in relation to particle indistinguishability, with the help of Equation (54) we can rewrite the difference between the right hand side of Equation (56) and the N-fold entropy flow of a single two-state system (38) as

| (57) |

which now explicitly involves the net probability current from a neighbouring occupation number state into the state with n particles in state A, as well as the logarithm

| (58) |

Written in terms of the same combinatorial factors appearing in Equation (54), the logarithm (58) can be interpreted as a difference of microcanonical (Boltzmann) entropies. These are defined as the logarithm of the degeneracy of the occupation number state, assuming that the N particles were distinguishable. With the help of the master Equation (53), as well as Equations (54) and (58), the term in Equation (57) may be rewritten to give

| (59) |

This result is further generalised in Equation (70).
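The flow correction can be checked in the same spirit. We keep the same illustrative assumptions: hypothetical rates `alpha` and `beta`, and the standard Gaspard form of Equation (4a). By our own bookkeeping, the entropy flow of the occupation-number chain minus N times the single-particle flow reduces to a sum over n of two factors. The first is the net current from n − 1 to n particles in state 1. The second is ln C(N, n) − ln C(N, n − 1), a difference of the Boltzmann entropies discussed in connection with Equation (58). The sketch evaluates both sides and shows that the correction dies out once detailed balance is reached.

```python
from math import comb, exp, log


def p1(t, alpha, beta, p):
    """Single-particle probability of state 1 (alpha: 1 -> 2, beta: 2 -> 1), P_1(0) = p."""
    s = alpha + beta
    return beta / s + (p - beta / s) * exp(-s * t)


def flow_difference(t, alpha, beta, p, n_particles):
    """Return (direct, via_boltzmann) estimates of S_e(N) - N * S_e(1).

    direct: entropy flow of the occupation-number chain minus N times the
    single-particle flow (alpha P_1 - beta P_2) ln(alpha / beta).
    via_boltzmann: sum over n of the net current J_n from n-1 to n particles
    in state 1, times ln C(N, n) - ln C(N, n-1).
    """
    q1 = p1(t, alpha, beta, p)
    prob = [comb(n_particles, n) * q1**n * (1 - q1) ** (n_particles - n)
            for n in range(n_particles + 1)]
    flow_n, via_boltzmann = 0.0, 0.0
    for n in range(1, n_particles + 1):
        rate_down = alpha * n                       # n -> n-1
        rate_up = beta * (n_particles - n + 1)      # n-1 -> n
        current = rate_up * prob[n - 1] - rate_down * prob[n]
        flow_n += -current * log(rate_down / rate_up)
        via_boltzmann += current * (log(comb(n_particles, n))
                                    - log(comb(n_particles, n - 1)))
    flow_1 = (alpha * q1 - beta * (1 - q1)) * log(alpha / beta)
    return flow_n - n_particles * flow_1, via_boltzmann


for t in (0.3, 2.0, 20.0):
    print(t, flow_difference(t, 2.0, 1.0, 0.9, 7))
```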

3.6. N Independent, Indistinguishable d-State Processes

We now generalise the results of Sections 3.3 and 3.5 to N independent d-state Markov processes, see Figure 7. These results represent a special case of those obtained in [40] when the N processes are non-interacting. In this section, we consider non-interacting, indistinguishable particles hopping on a graph of d nodes with edge-dependent hopping rates. As in the two-state system of Section 3.5, we find that the internal (but not the external) entropy production of the N-particle system is N times the entropy production of the individual processes. This holds provided that the initial condition is probabilistically identical for all single-particle sub-systems. The entropy production and entropy flow of a single such process, according to Equation (4), read

| (60a) |

| (60b) |

where $P_j(t)$ is the time-dependent probability of a single-particle process to be in state j, see Section 3.3.

Figure 7.

N independent, indistinguishable d-state Markov processes in continuous time (here shown for particular values of d and N). Black blobs indicate the current states of the single-particle sub-systems. Due to indistinguishability, multi-particle states are fully characterised by the occupation numbers of any d − 1 of the d states if the total number of particles is known.

To calculate the entropy production of the N concurrent indistinguishable processes in the occupation number representation, we first derive the probability of an occupation number configuration $\mathbf{n} = (n_1, \ldots, n_d)$, with $\sum_{j=1}^{d} n_j = N$. Similarly to Equation (54), this probability is given by the multinomial distribution

| (61) |

for the probability of the system to be in state $\mathbf{n}$ at time t. This assumes that each particle is subject to the same single-particle distribution $P_j(t)$ for all t. In particular, all particles must be initialised identically, either by placing them all at the same site or, more generally, by placing them initially according to the same distribution $P_j(0)$. Given this initialisation, Equation (61) solves Equation (2),

| (62) |

with the transition rates discussed below.

For non-interacting processes with a unique stationary distribution, Equation (61) always holds in the long-time limit, since the single-particle distributions are identical at steady state. The entropy production of the entire system, Equation (4b), has the same form as Equation (48) of Section 3.4 (N independent, distinguishable particles). Now, however, the transition rates are those between occupation number configurations. Such a rate vanishes unless the two configurations differ in exactly two components, j and k, corresponding to a single particle hopping from j to k. In that case, it equals the occupation number of state j multiplied by the single-particle transition rate from j to k introduced above. For such a pair of configurations, the reverse rate equals the occupation number of state k after the hop multiplied by the single-particle rate from k to j, and the probability fulfils

| (63) |

which simplifies the entropy production Equation (48) to

| (64) |

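where the sum runs over all allowed configurations, namely those with non-negative occupation numbers summing to N, and the target configuration is derived from the initial one as outlined above. Strictly, the probability has to be defined to vanish for invalid states, so that the first bracket in the summand of Equation (64) vanishes. This happens in particular when the departure state j is empty, in which case the target configuration would have a negative occupation number. To proceed, we introduce the probability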

| (65) |

defined to vanish for , so that . The probability is that of finding particles at states and particles at state j. It is Equation (61) evaluated in a system with only particles and configuration a function of . Equation (64) may now be rewritten as

| (66) |

where we have used that the arguments of the logarithm are independent of and . The summation over gives

| (67) |

so that

| (68) |

which is N times the entropy production of the single-particle system, Equation (60a), or, equivalently, that of N distinguishable particles, Equation (52), Section 3.4. As in Section 3.5, this dramatic simplification does not carry over to the external entropy flow, Equation (4a),

| (69) |

where, of the last two terms, only the first is N times the entropy flow of the single-particle system, Equation (60b). The second term arises because there is no cancellation mechanism to absorb the occupation-number prefactors of the rates in the logarithm. Rewriting the second term as

| (70) |

| (71) |

using Equation (62), where we have re-expressed the logarithm as

| (72) |

shows that the correction term has the same form as the corresponding term in the two-state system, Equation (57), namely that of a difference of microcanonical (Boltzmann) entropies of the multi-particle states. It vanishes when all are either 0 or 1, as expected for and also at stationarity when . In that limit when indeed Equation (60a) gives

| (73) |

As far as the entropy production is concerned, we thus recover and generalise the result of Section 3.5 on indistinguishable particles in a two-state system, whose entropy production is N times that of a single particle. In Section 3.4, it was shown that the entropy production and flow of N distinguishable particles equal the sums of the entropy productions and flows of the individual particles. In Sections 3.5 and 3.6, it was shown that the same holds for the entropy production of indistinguishable particles, whose states need to be represented by occupation numbers. Their entropy production is N times that of the single-particle system given a suitable initialisation, and asymptotically irrespective of the initialisation provided the stationary distribution is unique. The same does not apply to the entropy flow, which generally acquires additional logarithmic terms accounting for the degeneracy of the occupation number states. These extra terms, however, are bound to vanish at stationarity.
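The cancellation leading from Equation (64) to Equation (68) can likewise be checked by brute force, enumerating all occupation-number configurations. The sketch below is illustrative. The three-state rate matrix, the initial condition and the helper names are hypothetical choices of ours. The single-particle distribution is propagated with `scipy.linalg.expm`, and Equation (4b) is taken in its standard Gaspard form, evaluated with multinomial probabilities of the type of Equation (61).

```python
import itertools
from math import factorial

import numpy as np
from scipy.linalg import expm


def single_particle_probs(rates, p0, t):
    """Propagate P_j(t); rates[j, k] is the hopping rate j -> k, zero diagonal."""
    generator = rates - np.diag(rates.sum(axis=1))
    return p0 @ expm(generator * t)


def single_production(rates, p):
    """Standard Gaspard form of Eq. (4b) for one particle."""
    total = 0.0
    for j, k in itertools.permutations(range(len(p)), 2):
        if rates[j, k] > 0:
            fwd, bwd = p[j] * rates[j, k], p[k] * rates[k, j]
            total += 0.5 * (fwd - bwd) * np.log(fwd / bwd)
    return total


def configurations(n_particles, d):
    """All occupation vectors (n_1, ..., n_d) with n_1 + ... + n_d = N."""
    for combo in itertools.combinations_with_replacement(range(d), n_particles):
        yield tuple(combo.count(j) for j in range(d))


def multinomial(config, p):
    """Multinomial probability N! / prod(n_j!) * prod(p_j ** n_j)."""
    coeff = factorial(sum(config))
    for n_j in config:
        coeff //= factorial(n_j)
    return coeff * float(np.prod([p_j**n_j for p_j, n_j in zip(p, config)]))


def occupation_production(rates, p, n_particles):
    """Eq. (4b) on occupation-number space; a hop j -> k occurs at rate n_j * rates[j, k]."""
    total = 0.0
    for config in configurations(n_particles, len(p)):
        prob = multinomial(config, p)
        for j, k in itertools.permutations(range(len(p)), 2):
            if config[j] == 0 or rates[j, k] == 0:
                continue                      # no particle to move, or no edge
            target = list(config)
            target[j] -= 1
            target[k] += 1
            fwd = prob * config[j] * rates[j, k]
            bwd = multinomial(target, p) * target[k] * rates[k, j]
            total += 0.5 * (fwd - bwd) * np.log(fwd / bwd)
    return total


rates = np.array([[0.0, 2.0, 0.5],
                  [0.5, 0.0, 2.0],
                  [2.0, 0.5, 0.0]])            # hypothetical driven three-state cycle
p = single_particle_probs(rates, np.array([1.0, 0.0, 0.0]), 0.4)
print(occupation_production(rates, p, 4), 4 * single_production(rates, p))
```

Every edge needs a non-zero reverse rate for the logarithms to be finite; the cyclic matrix above satisfies this while still breaking detailed balance.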

3.7. Random Walk on a Lattice

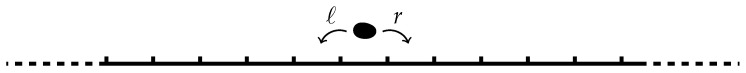

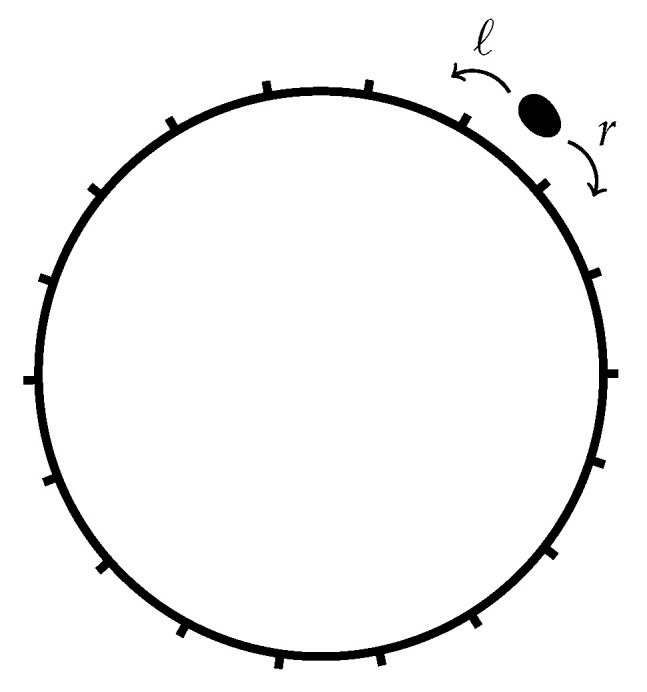

In this section, we study a particle on a one-dimensional lattice that hops to its right nearest neighbour with rate r and to its left nearest neighbour with rate ℓ, see Figure 8. The case of unequal hopping rates, $r \neq \ell$, is generally referred to as an asymmetric random walk and can be seen as a minimal model for the directed motion of a molecular motor on a cytoskeletal filament [41]. The position x of the particle at time t, after n jumps, is

$$x(t) = x_0 + \sum_{i=1}^{n} \Delta x_i \tag{74}$$

where the random hops $\Delta x_i$ are independent and identically distributed, and $x_0$ is the initial position at time $t = 0$. If a is the lattice spacing, the distance increments are $\Delta x_i = a$ with probability $r/(r+\ell)$ and $\Delta x_i = -a$ with probability $\ell/(r+\ell)$. The probability distribution of the particle position is

$$P(x,t) = \sum_{n=0}^{\infty} \Pi_n(t)\, Q_n(x \,|\, x_0) \tag{75}$$

where $\Pi_n(t)$ is the probability that, by time t, the particle has hopped n times, and $Q_n(x \,|\, x_0)$ is the probability that the particle is at position x after n hops starting from $x_0$. Since jumping is a Poisson process with rate $r + \ell$, the random variable n has a Poisson distribution,

$$\Pi_n(t) = \frac{[(r+\ell)t]^n}{n!}\, e^{-(r+\ell)t} \tag{76}$$

On the other hand, the distribution of the position x after n jumps is the binomial distribution

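$$Q_n(x \,|\, x_0) = \binom{n}{n_r} \left(\frac{r}{r+\ell}\right)^{n_r} \left(\frac{\ell}{r+\ell}\right)^{n-n_r}, \qquad n_r = \frac{1}{2}\left(n + \frac{x-x_0}{a}\right) \tag{77}$$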

where $n_r$ is the number of jumps to the right, with (77) implied to vanish if $n_r$ is not an integer. From Equation (74), the parities of n and $(x - x_0)/a$ are identical. Using (76) and (77), the probability distribution in (75) reads

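$$P(x,t) = e^{-(r+\ell)t} \left(\frac{r}{\ell}\right)^{\frac{x-x_0}{2a}} I_{\frac{x-x_0}{a}}\!\left(2t\sqrt{r\ell}\right) \tag{78}$$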

where $I_m(z)$ is the modified Bessel function of the first kind of integer order m (with $I_{-m}(z) = I_m(z)$), which is defined as [42]

$$I_m(z) = \sum_{k=0}^{\infty} \frac{1}{k!\,(k+m)!} \left(\frac{z}{2}\right)^{2k+m} \tag{79}$$

The transition probability is then

| (80) |

Figure 8.

Simple random walk on an infinite, one-dimensional lattice in continuous time. The black blob indicates the current position of the random walker. The left and right hopping rates, labelled ℓ and r respectively, are assumed to be homogeneous but not equal in general, thus leading to a net drift of the average position.
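The Bessel form (78) can be cross-checked against the Poisson–binomial sum (75)–(77) from which it was derived. The sketch below is ours and the parameter values are arbitrary. To avoid overflow, it uses SciPy's exponentially scaled Bessel function `scipy.special.ive(v, z)`, which returns $I_v(z)e^{-|z|}$, together with the symmetry $I_{-m} = I_m$ for integer m.

```python
import numpy as np
from scipy.special import ive
from scipy.stats import binom, poisson


def bessel_form(m, t, r, ell):
    """Eq. (78) for the displacement m = (x - x0)/a, via ive(v, z) = I_v(z) e^{-|z|}."""
    z = 2.0 * np.sqrt(r * ell) * t
    return float(np.exp(-(np.sqrt(r) - np.sqrt(ell)) ** 2 * t)
                 * (r / ell) ** (m / 2.0) * ive(abs(m), z))


def poisson_binomial_form(m, t, r, ell, n_max=200):
    """Eqs. (75)-(77): sum over n hops, of which n_r = (n + m)/2 go to the right."""
    total = 0.0
    for n in range(abs(m), n_max + 1):
        if (n + m) % 2:                 # parity of n and m must agree
            continue
        n_right = (n + m) // 2
        total += poisson.pmf(n, (r + ell) * t) * binom.pmf(n_right, n, r / (r + ell))
    return float(total)


for m in (-2, 0, 3):
    print(m, bessel_form(m, 3.0, 1.5, 0.5), poisson_binomial_form(m, 3.0, 1.5, 0.5))
```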

Using (78) and (80) to calculate the entropy production (4b), we need the following identity for ,

| (81) |

which follows immediately from the definition of the modified Bessel function, Equation (79). It indicates that the only transitions that contribute to the entropy production are those where the particle travels a distance equal to the lattice spacing a. Then, the entropy production reads

| (82) |

see Figure 9. The entropy flow, $(r-\ell)\ln(r/\ell)$, is independent of t, a simplicity it owes to the transition rates being independent of the particle’s position. We are not aware of a method to perform the sum in (82) in closed form. Moreover, since this expression involves terms competing at large times t, we cannot calculate the stationary entropy production. If we naively assume that the sum in Equation (82) converges such that it is suppressed by the exponential prefactor $e^{-(r+\ell)t}$, then the entropy production appears to converge to a constant smaller than the entropy flow. If that were the case, the time derivative of the entropy would converge to a negative constant. This is impossible: the entropy $S(t)$, Equation (1), vanishes at $t = 0$ given the initialisation $P(x,0) = \delta_{x,x_0}$ and is bound to be strictly positive at all finite $t > 0$. Given that $P(x,t)$ does not converge to a stationary distribution, not much else can be said analytically about the long-time behaviour of the entropy production or of the entropy. Numerically, using the GNU Scientific Library [43] implementation of Bessel functions, we find that, asymptotically for large times, Equation (82) is

| (83) |

if and if , see Figure 9.

Figure 9.

Entropy production of a random walk on a one-dimensional lattice (RW on $\mathbb{Z}$), for symmetric and asymmetric hopping rates, as a function of time, Equation (82) (solid lines). The asymptotic behaviour at large t, Equation (83) (dotted lines), decays algebraically in the symmetric case ($r = \ell$) and converges to a positive constant in the asymmetric case ($r \neq \ell$).
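Since the sum in Equation (82) has no known closed form, a direct numerical evaluation is a natural companion to Figure 9. The sketch below is not the authors' GSL code. It evaluates the nearest-neighbour form of Equation (4b) on a truncated window of the lattice, using SciPy's scaled Bessel function. The result is checked against two relations that do not rely on any closed form. First, the entropy flow equals $(r-\ell)\ln(r/\ell)$. Second, assuming Equations (4a) and (4b) take the standard Gaspard form, the time derivative of the entropy S(t), Equation (1), equals entropy production minus entropy flow. The window width is a heuristic choice of ours.

```python
import numpy as np
from scipy.special import ive


def lattice_distribution(t, r, ell):
    """P(x, t) of Eq. (78) on a window of displacements m = (x - x0)/a."""
    sigma = np.sqrt((r + ell) * t)
    half_width = int(np.ceil(abs(r - ell) * t + 20.0 * sigma + 20.0))   # heuristic
    m = np.arange(-half_width, half_width + 1)
    z = 2.0 * np.sqrt(r * ell) * t
    # e^{-(r+l)t} I_m(z) = e^{-(sqrt(r) - sqrt(l))^2 t} ive(|m|, z), using I_{-m} = I_m
    return (np.exp(-(np.sqrt(r) - np.sqrt(ell)) ** 2 * t)
            * (r / ell) ** (m / 2.0) * ive(np.abs(m), z))


def entropy_production(t, r, ell):
    """Nearest-neighbour Eq. (4b): (flux m->m+1 - flux m+1->m) times log of their ratio."""
    p = lattice_distribution(t, r, ell)
    fwd, bwd = r * p[:-1], ell * p[1:]
    ok = (fwd > 0) & (bwd > 0)          # drop underflowed far-tail terms
    return float(np.sum((fwd[ok] - bwd[ok]) * np.log(fwd[ok] / bwd[ok])))


def entropy_flow(t, r, ell):
    p = lattice_distribution(t, r, ell)
    return float(np.sum(r * p[:-1] - ell * p[1:]) * np.log(r / ell))


def gibbs_entropy(t, r, ell):
    p = lattice_distribution(t, r, ell)
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


for t in (0.5, 5.0, 50.0):
    print(t, entropy_production(t, 1.0, 1.0), entropy_production(t, 1.5, 0.5))
```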

To take the continuum limit of the probability distribution (78), we define v and D such that $v = (r-\ell)a$ and $D = (r+\ell)a^2/2$. Using the asymptotic expansion of $I_m(z)$ in m [42]

| (84) |

which is valid for , we obtain in fact the Gaussian distribution,

| (85) |

which corresponds to the distribution of a drift–diffusive particle, studied in Section 3.9. Therefore, all results derived in Section 3.9 apply to the present system in the continuum limit.

3.8. Random Walk on a Ring Lattice

In this section, we extend the system of Section 3.7 to a random walk on a ring lattice of length $L$, see Figure 10. The probability distribution of the particle on the ring follows from the distribution on the one-dimensional lattice, Equation (78). All positions on the one-dimensional lattice that differ by an integer number j of windings around the ring are mapped to the same position on the ring, since the winding number j is irrelevant to the evolution of the walker. Then, the distribution on the ring lattice reads

| (86) |

and similarly for the transition probability. To calculate the entropy production (4b), each pair of points on the lattice is mapped to a pair of points on the ring.

Figure 10.

Simple random walk on a periodic, one-dimensional ‘ring’ lattice in continuous time. This model generalises the three-state Markov chain discussed in Section 3.2 to L states. The black blob indicates the current position of the random walker. Due to the finiteness of the state space, this process has a well-defined steady state, which is an equilibrium one for symmetric rates, $r = \ell$.

For $L \geq 3$, only transitions to distinct nearest neighbours contribute, and the expression for the entropy production simplifies dramatically,

| (87) |

and similarly for the entropy flow,

| (88) |

The entropy flow on a ring is thus identical to that of a particle on a one-dimensional lattice. The entropy production on a ring, in contrast, is in principle more complicated, since the cancellations in the logarithm found in Section 3.7 no longer occur. However, because the walker on the ring reaches stationarity, the entropy production approaches the asymptote

| (89) |

This is easily derived by substituting the uniform stationary distribution, $1/L$, into the finite sum of Equation (87). It follows that the time derivative of the entropy, Equation (1), converges to 0 at large t, as expected for a convergent stationary distribution.

The case $L = 2$ and the less interesting case $L = 1$ are not covered above, because the phase space for $L = 2$ has a different topology from that for $L \geq 3$. The difference can be observed in the different structure of the transition matrices (34) and (39). The framework above is based on each site having two outgoing and two incoming transitions. For $L = 2$, however, there are only two transitions, and these cannot be separated into four to fit the framework above. Even when the rates of concurrent transitions between two given states are additive, their entropy production generally is not. The case $L = 2$ is recovered in the two-state system of Section 3.1 with both transition rates equal to $r + \ell$, which is at equilibrium in the stationary state.
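The approach to the stationary asymptote (89) can be illustrated with a small numerical sketch of our own. It builds the generator of the ring walk, restricted to $L \geq 3$ for the reason given above: for $L = 2$, the two hopping channels would join the same pair of sites. A walker started at one site is propagated with `scipy.linalg.expm`, and the nearest-neighbour form of Equation (4b) is evaluated. At long times the result should approach the stationary value $(r-\ell)\ln(r/\ell)$ obtained by substituting the uniform distribution, which coincides with the entropy flow. Rates, ring length and times are arbitrary.

```python
import numpy as np
from scipy.linalg import expm


def ring_entropy_production(t, r, ell, length):
    """Eq. (4b) for a walker on a ring of `length` sites started at site 0."""
    if length < 3:
        raise ValueError("the nearest-neighbour bookkeeping needs L >= 3")
    generator = np.zeros((length, length))
    for i in range(length):
        generator[i, (i + 1) % length] += r     # hop to the right
        generator[i, (i - 1) % length] += ell   # hop to the left
        generator[i, i] -= r + ell
    p0 = np.zeros(length)
    p0[0] = 1.0
    p = p0 @ expm(generator * t)
    fwd = r * p                      # flux i -> i+1
    bwd = ell * np.roll(p, -1)       # flux i+1 -> i
    return float(np.sum((fwd - bwd) * np.log(fwd / bwd)))


r, ell = 1.5, 0.5
for t in (0.5, 2.0, 30.0):
    print(t, ring_entropy_production(t, r, ell, 5), (r - ell) * np.log(r / ell))
```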

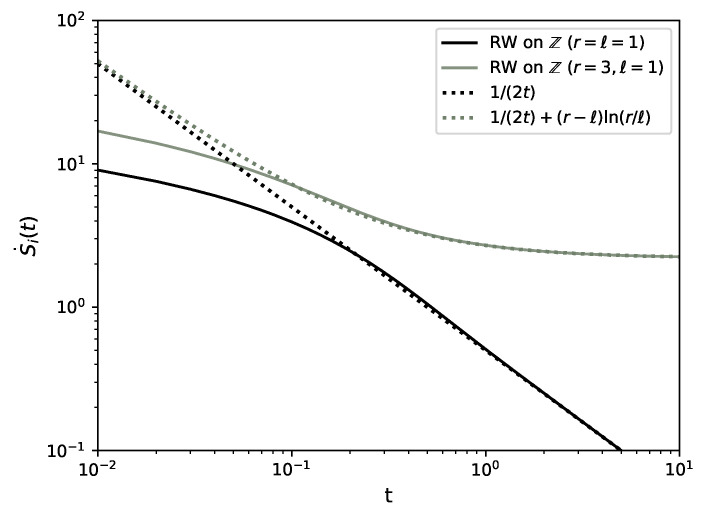

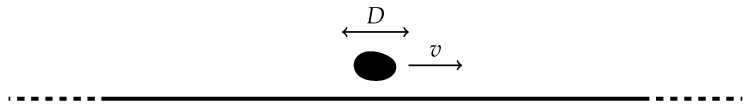

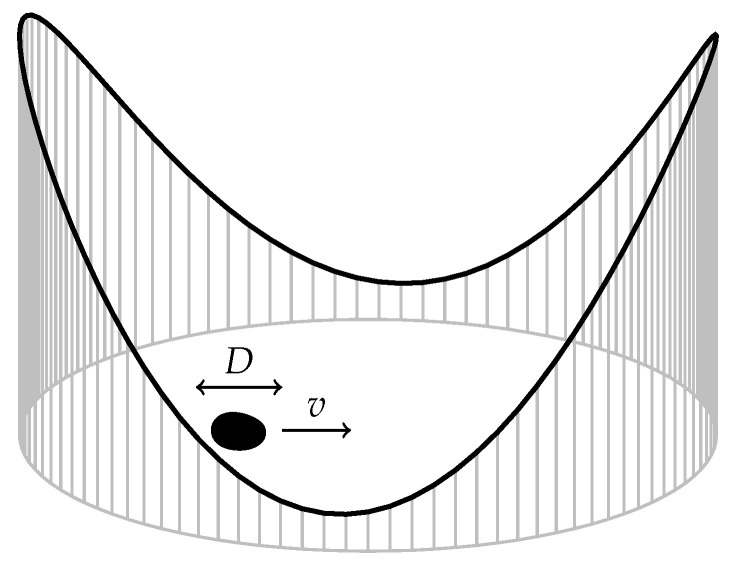

3.9. Driven Brownian Particle

In continuum space, the motion of a freely diffusive particle with diffusion constant D and drift v is governed by the Langevin equation $\dot{x}(t) = v + \xi(t)$. Here, $\xi(t)$ is a Gaussian white noise with zero mean, $\langle \xi(t) \rangle = 0$, and covariance $\langle \xi(t)\xi(t') \rangle = 2D\delta(t-t')$, see Figure 11 [44]. The corresponding Fokker–Planck equation for the probability distribution $P(x,t)$ is [45]

$$\partial_t P(x,t) = -v\,\partial_x P(x,t) + D\,\partial_x^2 P(x,t) \tag{90}$$

Assuming the initial condition $P(x,0) = \delta(x - x_0)$, the solution to the Fokker–Planck equation is the Gaussian distribution

$$P(x,t) = \frac{1}{\sqrt{4\pi D t}} \exp\left(-\frac{(x - x_0 - vt)^2}{4Dt}\right) \tag{91}$$

which is also the Green function of the Fokker–Planck Equation (90). We therefore also have the transition probability density from state x to state y over an interval $\tau$,

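$$P(y, t+\tau \,|\, x, t) = \frac{1}{\sqrt{4\pi D \tau}} \exp\left(-\frac{(y - x - v\tau)^2}{4D\tau}\right) \tag{92}$$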

Figure 11.

Driven Brownian particle on the real line. The black blob indicates the particle’s current position.

Substituting (91) and (92) into Equation (15) for the internal entropy production of a continuous system gives

| (93) |

where the Gaussian integrals can be evaluated in closed form. Taking the limit $\tau \to 0$ then gives the entropy production rate [44,46,47],

| (94) |

see Figure 12. Similarly, following (16), the entropy flow reads $v^2/D$, independent of time t. As the entropy production converges to $v^2/D$ for $t \to \infty$, we see that for finite t or $v \neq 0$ the system is out of equilibrium with a sustained probability current, so that there is in fact no steady-state distribution. We can verify Equation (94) for the time-dependent internal entropy production by computing the probability current

$$J(x,t) = v P(x,t) - D\,\partial_x P(x,t) = \frac{x - x_0 + vt}{2t}\, P(x,t) \tag{95}$$

and substituting it, together with (91), into (29). As expected, the two procedures return identical results. The independence of the transient contribution to the internal entropy production, $1/(2t)$, from the diffusion constant is remarkable. It is nevertheless necessary on dimensional grounds, since the entropy production rate has dimensions of inverse time. As the diffusion constant characterises the spatial behaviour of diffusion, this suggests that it is the temporal, rather than the spatial, features of the process that determine its initial entropy production.
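The agreement between the two procedures can also be seen numerically. The sketch below assumes that Equation (29) has the standard form $\int \mathrm{d}x\, J^2/(DP)$; this is our reading, not a quotation. It evaluates that integral on a grid for the Gaussian (91) and the current (95), and compares the result with $v^2/D + 1/(2t)$, which we take to be the content of Equation (94). Grid size and window are arbitrary choices.

```python
import numpy as np


def drift_diffusion_production(t, v, diff, x0=0.0, n_grid=20001):
    """Grid evaluation (Riemann sum) of the integral of J^2 / (D P) at time t."""
    mean, var = x0 + v * t, 2.0 * diff * t
    x = np.linspace(mean - 12.0 * np.sqrt(var), mean + 12.0 * np.sqrt(var), n_grid)
    p = np.exp(-(x - mean) ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)   # Eq. (91)
    current = p * (x - x0 + v * t) / (2.0 * t)                                 # Eq. (95)
    return float(np.sum(current**2 / (diff * p)) * (x[1] - x[0]))


for t in (0.1, 1.0, 10.0):
    print(t, drift_diffusion_production(t, v=0.7, diff=0.3), 0.7**2 / 0.3 + 1 / (2 * t))
```

Because the integrand is a Gaussian times a polynomial, a plain Riemann sum on a fine, wide grid is already accurate to near machine precision.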

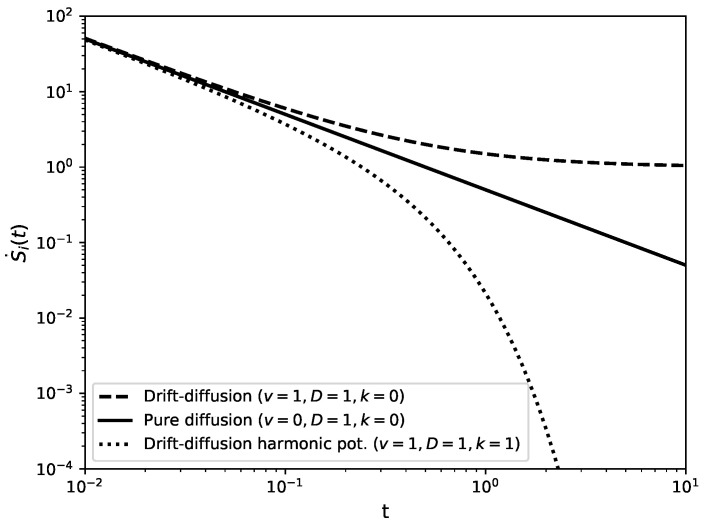

Figure 12.

Entropy production of the drift–diffusion process in an external potential, Equation (100), as a function of time for different parameter combinations. For vanishing potential stiffness, $k = 0$, we recover Equation (94) for a free drift–diffusion particle. In particular, for $v = 0$ the entropy production decays algebraically, while for $v \neq 0$ it converges to the constant value $v^2/D$. For $k > 0$, the algebraic decay is suppressed exponentially over long timeframes as the process settles into its equilibrium steady state.

3.10. Driven Brownian Particle in a Harmonic Potential

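Consider a drift–diffusive particle as in Section 3.9 that is confined in a harmonic potential $V(x) = kx^2/2$, where k is the potential stiffness, see Figure 13 [48]. The Langevin equation is $\dot{x}(t) = v - kx(t) + \xi(t)$, where $\langle \xi(t) \rangle = 0$ and $\langle \xi(t)\xi(t') \rangle = 2D\delta(t-t')$. The Fokker–Planck equation for $P(x,t)$ is [45]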

$$\partial_t P(x,t) = -\partial_x\left[(v - kx)\, P(x,t)\right] + D\,\partial_x^2 P(x,t) \tag{96}$$

Assuming the initial condition $P(x,0) = \delta(x - x_0)$, the solution to the Fokker–Planck equation is the Gaussian distribution

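$$P(x,t) = \frac{1}{\sqrt{2\pi\sigma^2(t)}} \exp\left(-\frac{[x-\mu(t)]^2}{2\sigma^2(t)}\right), \qquad \mu(t) = x_0 e^{-kt} + \frac{v}{k}\left(1 - e^{-kt}\right), \qquad \sigma^2(t) = \frac{D}{k}\left(1 - e^{-2kt}\right) \tag{97}$$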

corresponding to a probability current of the form

| (98) |

The transition probability density within a time interval $\tau$ is then also of Gaussian form, namely

| (99) |

Figure 13.

Driven Brownian particle in a harmonic potential. This process reduces to the standard Ornstein–Uhlenbeck process upon the shift $x \to x - v/k$. The black blob indicates the particle’s current position. The presence of a binding potential implies that the system relaxes to an equilibrium steady state over long timeframes.

Using (97) and (99) in (15) gives the entropy production rate

| (100) |

see Figure 12, and in (16) the external entropy flow

| (101) |

In the limit $t \to \infty$, the system reaches equilibrium, as $P(x,t)$ in Equation (97) converges to the Boltzmann distribution of the effective potential $kx^2/2 - vx$ at temperature D. This is consistent with (100) and (101), since both vanish as $t \to \infty$. As for drift–diffusion on the real line, Equation (94), there is a transient contribution to the entropy production that is independent of the diffusion constant D. This contribution now depends on the stiffness k, which has dimensions of inverse time, through the rescaled time $kt$.
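The closed form in the sketch below is our own evaluation of the Gaussian integral for the harmonic case with initial position `x0`. If our bookkeeping is right, it should coincide with Equation (100), which we do not quote. The sketch compares it with a grid evaluation of $\int \mathrm{d}x\, J^2/(DP)$, assuming as before that this is the form of Equation (29). It uses the mean and variance of Equation (97) and the current $J = (v - kx)P - D\,\partial_x P$. It also illustrates the two limits: $k \to 0$, where $v^2/D + 1/(2t)$ is recovered, and $t \to \infty$, where the production vanishes.

```python
import numpy as np


def moments(t, v, k, diff, x0):
    """Mean and variance of the Gaussian solution for P(x, 0) = delta(x - x0)."""
    decay = np.exp(-k * t)
    return x0 * decay + (v / k) * (1.0 - decay), (diff / k) * (1.0 - decay**2)


def production_grid(t, v, k, diff, x0, n_grid=20001):
    """Riemann-sum evaluation of the integral of J^2 / (D P)."""
    mean, var = moments(t, v, k, diff, x0)
    half = 12.0 * np.sqrt(var)
    x = np.linspace(mean - half, mean + half, n_grid)
    p = np.exp(-(x - mean) ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)
    current = p * ((v - k * x) + diff * (x - mean) / var)   # J = (v - kx) P - D dP/dx
    return float(np.sum(current**2 / (diff * p)) * (x[1] - x[0]))


def production_closed_form(t, v, k, diff, x0):
    """(v - k x0)^2 e^{-2kt} / D  +  k e^{-4kt} / (1 - e^{-2kt})  (our derivation)."""
    e2 = np.exp(-2.0 * k * t)
    return (v - k * x0) ** 2 * e2 / diff + k * e2**2 / (1.0 - e2)


for t in (0.2, 1.0, 5.0):
    print(t, production_grid(t, 0.7, 1.0, 0.3, 0.5), production_closed_form(t, 0.7, 1.0, 0.3, 0.5))
```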

3.11. Driven Brownian Particle on a Ring with Potential

Consider a drift–diffusive particle on a ring in a smooth potential $V(x)$, Figure 14, initialised at position $x_0$. The Langevin equation of the particle is [49,50,51] $\dot{x}(t) = v - V'(x) + \xi(t)$, where $\xi(t)$ is Gaussian white noise. The Fokker–Planck equation is then

| (102) |

with and boundary condition for for all derivatives and . At stationarity, in the limit , where , the solution to the Fokker–Planck Equation (102) is [29,51,52]

| (103) |

where is the normalisation constant. The corresponding steady-state probability current is independent of x by continuity, , and reads [45]

| (104) |

Figure 14.

Driven Brownian particle on a ring with a periodic potential. Any non-zero diffusion constant results in a stationary state over long timeframes, which is a non-equilibrium one for $v \neq 0$. The black blob indicates the particle’s current position.

In order to calculate the entropy production according to (15) and (16) using (17), we need the transition probability density for small time increments. As discussed after Equation (17), it obeys the Fokker–Planck Equation (102) in the form

| (105) |

with , so that

| (106) |

to be evaluated under an integral, where will require an integration by parts. As for the logarithmic term, we use [28,45]

| (107) |

so that

| (108) |

The entropy flow Equation (16) in the more convenient version Equation (21a) can be obtained easily using Equations (106) and (108)

| (109) |

| (110) |

| (111) |

after suitable integration by parts, whereby derivatives of the $\delta$-function are conveniently interpreted as derivatives with respect to y to avoid subsequent differentiation of . Since , the factor needs to be differentiated for a term to contribute. In the absence of a potential, at stationarity, so that Equation (111) simplifies to and , Equation (94). Using the probability current , the entropy flow simplifies further to

| (112) |

so that at stationarity, when the current is spatially uniform, as the potential is periodic, entering only via the current.

An equivalent calculation of on the basis of (20a) gives

| (113a) |

| (113b) |

| (113c) |

| (113d) |

with the last line identical to Equation (13).

By considering the functional derivative of Equation (103) with respect to the potential, one can show that the stationary current, Equation (104), is extremal for a constant potential. This indicates that the magnitude of the stationary entropy flow, Equation (113d), is maximised in a constant potential.
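The statement that a constant potential maximises the stationary current can be probed numerically. The sketch below rests on our own assumptions. It replaces the Fokker–Planck Equation (102) by a simple finite-volume Markov-chain discretisation on a ring of unit length, with hopping rates $(D/h^2)\,e^{\pm F h/(2D)}$ across each bond and $F = v - V'$ evaluated at the bond midpoint. The stationary distribution is computed as the null vector of the transposed generator with `scipy.linalg.null_space`, and the net flux across one bond is read off. The cosine potential and all parameter values are hypothetical.

```python
import numpy as np
from scipy.linalg import null_space


def stationary_current(v, diff, potential_grad, length=1.0, n_cells=400):
    """Stationary current of a Markov-chain discretisation of Eq. (102) on a ring."""
    h = length / n_cells
    x_bond = (np.arange(n_cells) + 0.5) * h            # bond between cells i and i+1
    force = v - potential_grad(x_bond)                  # F = v - V'(x)
    fwd_rate = diff / h**2 * np.exp(force * h / (2.0 * diff))    # i -> i+1
    bwd_rate = diff / h**2 * np.exp(-force * h / (2.0 * diff))   # i+1 -> i
    generator = np.zeros((n_cells, n_cells))
    for i in range(n_cells):
        j = (i + 1) % n_cells
        generator[i, j] += fwd_rate[i]
        generator[j, i] += bwd_rate[i]
    generator -= np.diag(generator.sum(axis=1))
    pi = null_space(generator.T)[:, 0]
    pi = pi / pi.sum()                                   # stationary cell probabilities
    # net flux across the bond between cells 0 and 1; equal on every bond at stationarity
    return float(fwd_rate[0] * pi[0] - bwd_rate[0] * pi[1])


free = stationary_current(1.0, 0.5, lambda x: np.zeros_like(x))
bumpy = stationary_current(1.0, 0.5, lambda x: -2.0 * np.pi * 0.3 * np.sin(2.0 * np.pi * x))
print(free, bumpy)    # V(x) = 0 versus V(x) = 0.3 cos(2 pi x)
```

Without a potential, the discrete current reproduces $v/L$ up to a correction of order $h^2$, which makes the reduction caused by the cosine potential easy to see.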

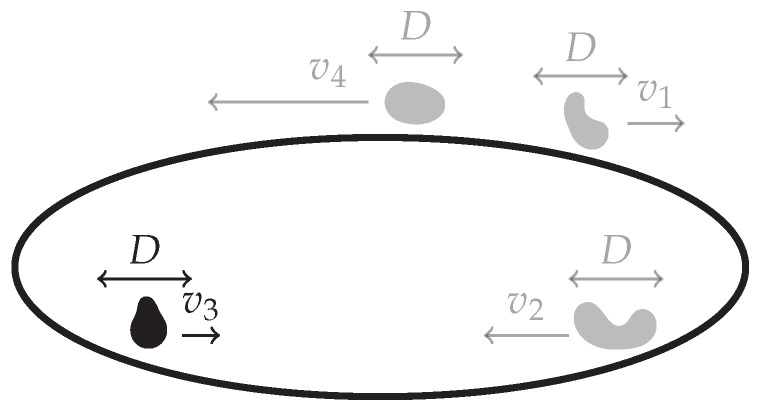

3.12. Run-and-Tumble Motion with Diffusion on a Ring

Consider the dynamics of a run-and-tumble particle on a ring with Langevin equation , where the drift is a Poisson process with rate that alternates the speed of the particle between the constants and , and is Gaussian white noise, Figure 15. Run-and-tumble particles are widely studied as a model of bacterial motion [53]. The drift being or will be referred to as the mode of the particle being 1 or 2 respectively. Defining and as the joint probabilities that the particle is at position x at time t and in mode 1 or 2 respectively, the coupled Fokker–Planck equations for and are

| (114a) |

| (114b) |

whose stationary solution is the uniform distribution , as is easily verified by direct substitution. The corresponding steady-state probability currents thus read and .

Figure 15.

Run-and-tumble motion with diffusion on a ring . A run-and-tumble particle switches stochastically, in a Poisson process with rate , between two modes 1 and 2 characterised by an identical diffusion constant D but distinct drift velocities and . The two modes are here represented in black and grey, respectively. For arbitrary positive diffusion constant D or tumbling rate with , the steady state is uniform but generally non-equilibrium.

In the following, the propagator denotes the probability density that a particle at position x in mode Q is found a time $\tau$ later at position y in mode R. For $Q = R$, this propagation is a sum over all even numbers m of Poissonian switches, each occurring with the corresponding Poisson probability. This includes the probability of not switching at all over the total time $\tau$. For $Q \neq R$, the propagation is due to an odd number of switches.

For , the contribution to is thus , with of a drift diffusion particle on a ring, Section 3.11, but without potential, approximated at short times by the process on the real line, Equation (92) with drift or according to the particle’s mode. For , the contribution is a single convolution over the time at which the particle changes mode, most easily done after Fourier transforming. Before presenting this calculation in real space, we argue that any such convolution will result in some approximate Gaussian with an amplitude proportional to multiplied by a term of order . In small , therefore only the lowest orders need to be kept, for and for .

More concretely,

| (115) |

| (116) |

which in small , when , so that , expands to

| (117) |

whereas , the propagator with an even number of mode switches, is given by Equation (92) to leading order in ,

| (118) |

Much of the calculation of the entropy production follows the procedure of Sections 3.9 and 3.11, as detailed further below. To this end, we also need

| (119) |

For processes that involve a change of particle mode, therefore, only the transition rates enter, not the diffusion or the drift. Given a uniform stationary spatial distribution of particles of any mode, switching between two modes cannot result in a sustained probability current, even when the switching rates differ,

| (120) |

at stationarity, as in the process discussed in Section 3.1. A probability current, and thus entropy production, can occur in two situations. The first is when different particle modes result in different distributions, Section 3.10. The second is when mode switching between more than two modes results in a current in its own right, Sections 3.2 and 3.13.

Since the full time-dependent density is beyond the scope of the present work, we calculate entropy flow and production at stationarity. We do so on the basis of a natural extension of Equations (4), (16) and (21a) to a mixture of discrete and continuous states

| (121) |

| (122) |

which immediately follows from Section 3.9 and Section 3.11, as the stationary density is constant, , and only contribute, with

| (123) |

If the two drifts are equal in absolute value, we recover the entropy production of a simple drift–diffusive particle with the same speed. This is because run-and-tumble can be thought of as drift–diffusion whose direction changes instantly. Since changing direction produces no entropy, the total entropy production rate should be the same as that of a drift–diffusion particle. The entropy production can alternatively be derived via (29) using the steady-state currents stated above.

3.13. Switching Diffusion Process on a Ring

The dynamics of the one-dimensional run-and-tumble particle discussed above can be readily generalised to the so-called switching diffusion process [54] by allowing for an extended set of drift modes, Figure 16. The corresponding Langevin equation for the particle position on a ring is almost identical to that of run-and-tumble, except that the drift is now governed by an M-state Markov process. In the general case, a single switching rate is thus not sufficient, and the full transition rate matrix needs to be provided. In this formulation, the run-and-tumble dynamics of Section 3.12 correspond to the choice $M = 2$ with symmetric rates. We define $P_i(x,t)$ as the joint probability that at time t the particle is at position x and in mode i, thereby moving with the drift velocity of mode i. The system (114) of Fokker–Planck equations then generalises to

| (124) |

where the transmutation rates from mode i to mode j are assumed to be independent of position. To ease notation we use the convention . For a non-vanishing diffusion constant, the stationary solution is uniform for all modes and given by , where is the ith element of the eigenvector satisfying and the eigenvalue relation , which we assume to be unique for simplicity.

Figure 16.

Switching diffusion process on a ring in continuous time. A switching diffusion process involves a stochastic switching between M modes characterised by an identical diffusion constant D but distinct drifts (). The marginal switching dynamics are characterised as an M-state Markov process with transition rates from mode i to mode j.

The calculation of the steady-state entropy production follows very closely that of run-and-tumble presented above. The conditional transition probabilities including up to one transmutation event read to leading order

| (125) |

so that

| (126) |

We could perform the calculation of the entropy production using the procedure of Section 3.9 rather than drawing on the operator for , which, however, is used in the following for convenience, see Section 3.11. Substituting (125) and (126) into (20a) and assuming steady-state densities, we arrive at

| (127) |

where we have used Equation (126) in the operators containing the -functions and Equation (125) in the logarithms. The term is obtained by the same expansion as used in Equation (117), Section 3.12. Both terms contributing to the entropy production above are familiar from previous sections: the first is a sum over the entropy production of M drift–diffusion processes with characteristic drift , Section 3.11 without potential, weighted by the steady-state marginal probability for the particle to be in state i; the second is the steady-state entropy production of an M-state Markov process with transition rate matrix , which reduces to Equation (4) after integration. Carrying out all integrals, we finally have

| (128) |

Unlike run-and-tumble, Section 3.12, the transmutation process in switching diffusion does in general contribute to the entropy production for $M > 2$, since the stationary state generally does not satisfy global detailed balance. However, the contributions to the total entropy production from the switching and from the diffusion parts of the process are effectively independent at steady state. Only the stationary marginal probabilities of the switching process feature, as weights, in the entropy production of the drift–diffusion. Otherwise, the parameters characterising the two processes stay separate in Equation (128). Further, the drift–diffusion contributions of the form are invariant under the time-rescaling . This property originates from the steady-state distributions being uniform and would generally disappear in a potential, Section 3.10.
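The structure described above lends itself to a short numerical sketch: a marginal-weighted sum of drift–diffusion terms plus the entropy production of the switching chain. The sketch is our reading of the verbal description, not a transcription of Equation (128). Each mode i contributes its stationary weight $\pi_i$ times $v_i^2/D$, the stationary entropy production of drift–diffusion on a ring without potential. The switching contributes the stationary form of Equation (4) for the M-state chain. The rate matrices, drifts and function name are hypothetical.

```python
import numpy as np
from scipy.linalg import null_space


def switching_diffusion_production(rates, drifts, diff):
    """Return (drift-diffusion part, switching part) of the stationary production.

    rates[i, j] is the switching rate from mode i to mode j; the diagonal is
    ignored. Every non-zero rate is assumed to have a non-zero reverse rate.
    """
    rates = np.array(rates, dtype=float)
    np.fill_diagonal(rates, 0.0)
    generator = rates - np.diag(rates.sum(axis=1))
    pi = null_space(generator.T)[:, 0]
    pi = pi / pi.sum()                          # stationary marginal of the modes
    drift_part = float(np.sum(pi * np.asarray(drifts, dtype=float) ** 2) / diff)
    switching_part = 0.0
    for i in range(len(pi)):
        for j in range(len(pi)):
            if i != j and rates[i, j] > 0:
                fwd, bwd = pi[i] * rates[i, j], pi[j] * rates[j, i]
                switching_part += 0.5 * (fwd - bwd) * np.log(fwd / bwd)
    return drift_part, switching_part


# run-and-tumble: M = 2, symmetric switching, drifts +w and -w
print(switching_diffusion_production([[0, 3.0], [3.0, 0]], [1.2, -1.2], 0.4))
# three modes with a driven switching cycle
print(switching_diffusion_production([[0, 2.0, 0.5], [0.5, 0, 2.0], [2.0, 0.5, 0]],
                                     [1.0, 0.0, -1.0], 1.0))
```

For two modes with symmetric switching, the switching part vanishes and the drift part reduces to $w^2/D$ when the drifts are $\pm w$, consistent with the run-and-tumble discussion in Section 3.12.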

4. Discussion and Concluding Remarks

In this work, we calculate the rate of entropy production within Gaspard’s framework [11] from first principles in a collection of paradigmatic processes, encompassing both discrete and continuous degrees of freedom. Based on the Markovian dynamics of each system, we derive, where possible, the probability distribution of the particle (or particles) as a function of time from Dirac-δ or Kronecker-δ initial conditions. The transition probability then follows straightforwardly. In some cases, we determine only the stationary density and the (short-time) propagator to leading order in the time increment. We then use Equation (4) for discrete systems, or Equations (20) and (21) for continuous systems, to calculate the time-dependent entropy production. We set out to give concrete, exact results in closed form rather than general expressions that are difficult to evaluate, even when we allowed for general potentials in Section 3.11. In summary, two ingredients are needed to calculate the entropy production in closed form in the present framework. The first, (a), is the probability (density) of finding the system in state x, ideally as a function of time t. The second, (b), is the propagator, i.e. the probability (density) that the system is found in a certain state y after some short time, given an initial state x. If the propagator is known for arbitrary times, it can be used to calculate the probability for a given initial state. However, this full time dependence is often difficult to obtain. The propagator is further needed in two forms, firstly when it is most elegantly written as an operator in continuous space, and secondly .

For completeness, where feasible, we have calculated the probability current at position x in continuous systems. The mere presence of such a flow indicates broken time-reversal symmetry and thus non-equilibrium. Our results on the discrete systems (Sections 3.1–3.8) illustrate two important aspects of entropy production. The first is the need for a probability flow between states. In the two-state system of Section 3.1, no choice of transition rates sustains a probability flow, and therefore the system inevitably relaxes to equilibrium. In the three-state system of Section 3.2, however, the transition rates can be chosen so that there is a perpetual flow between any two states. There is then entropy production not only during relaxation but also at stationarity. Owing to the non-vanishing rate of internal entropy production, we can identify these as non-equilibrium steady states in the long-time limit. Uniformly distributed steady states can thus be far from equilibrium, as a rigorous analysis of the microscopic dynamics reveals, although an effective dynamics may suggest otherwise.

Second, we see how the extensivity of entropy production arises in the N-particle systems (Sections 3.4, 3.5 and 3.6), irrespective of whether the particles are distinguishable or not. We therefore conclude that the number of particles in the system must be accounted for when calculating the entropy production; otherwise, the result will be incorrect. This is sometimes overlooked, especially when using effective theories. In the continuous systems (Sections 3.9, 3.10 and 3.11), which involve a drift v and a diffusion constant D, the contribution $v^2/D$ to the entropy production always emerges in one way or another. Moreover, in the case of drift–diffusion on the real line (Section 3.9), we find that the contribution due to the relaxation of the system is independent of any of the system parameters.

Finally, we have studied two systems (Sections 3.12 and 3.13) whose state space has a discrete and a continuous component. The discrete component corresponds to the transmutation between particle species, i.e., their mode of drifting, whereas the continuous component corresponds to the particle motion. We find that both processes, motion and transmutation, contribute to the entropy production rate essentially independently. Any term that combines both processes is of higher order in the time increment and therefore vanishes in the limit of vanishing time increment.

This work has applications in the field of active particle systems, where particles are subject to local non-thermal forces. In fact, the systems studied in Section 3.2, Section 3.8, Section 3.9, Section 3.10, Section 3.11, Section 3.12 and Section 3.13 are prominent examples of active systems. We have shown that their entropy production depends crucially on the microscopic dynamics of the system, which are captured by the Fokker–Planck equation (or the master equation for discrete systems) and its solution. In interacting many-particle systems, however, such an exact solution is generally not available. Instead, one may use the Doi–Peliti formalism [55,56,57,58,59,60,61,62,63] to describe the system, since it provides a systematic approach that is based on the microscopic dynamics and retains the particle nature of the constituents.

Acknowledgments

The authors would like to thank Letian Chen, Greg Pavliotis and Ziluo Zhang for discussions and kind advice. The authors gratefully acknowledge Kin Tat (Kenneth) Yiu’s much earlier, related work [64].

Author Contributions

Formal analysis, L.C., R.G.-M., Z.Z., B.B. and G.P.; Supervision, G.P.; Writing—original draft, L.C., R.G.-M., Z.Z. and B.B.; Writing—review & editing, G.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Seifert U. Stochastic thermodynamics, fluctuation theorems, and molecular machines. Rep. Prog. Phys. 2012;75:126001. doi: 10.1088/0034-4885/75/12/126001.

- 2. Jiang D.Q., Qian M., Qian M.P. Mathematical Theory of Nonequilibrium Steady States: On the Frontier of Probability and Dynamical Systems. Springer; Berlin/Heidelberg, Germany: 2004. Lecture Notes in Mathematics.

- 3. Seifert U. Stochastic thermodynamics: From principles to the cost of precision. Physica A. 2018;504:176–191. doi: 10.1016/j.physa.2017.10.024.

- 4. Barato A.C., Hartich D., Seifert U. Efficiency of cellular information processing. New J. Phys. 2014;16:103024. doi: 10.1088/1367-2630/16/10/103024.

- 5. Lan G., Tu Y. Information processing in bacteria: Memory, computation, and statistical physics: A key issues review. Rep. Prog. Phys. 2016;79:052601. doi: 10.1088/0034-4885/79/5/052601.

- 6. Cao Y., Wang H., Ouyang Q., Tu Y. The free-energy cost of accurate biochemical oscillations. Nat. Phys. 2015;11:772–778. doi: 10.1038/nphys3412.

- 7. Schmiedl T., Seifert U. Stochastic thermodynamics of chemical reaction networks. J. Chem. Phys. 2007;126:044101. doi: 10.1063/1.2428297.

- 8. Pietzonka P., Fodor É., Lohrmann C., Cates M.E., Seifert U. Autonomous Engines Driven by Active Matter: Energetics and Design Principles. Phys. Rev. X. 2019;9:041032. doi: 10.1103/PhysRevX.9.041032.

- 9. Schnakenberg J. Network theory of microscopic and macroscopic behavior of master equation systems. Rev. Mod. Phys. 1976;48:571–585. doi: 10.1103/RevModPhys.48.571.

- 10. Maes C. The Fluctuation Theorem as a Gibbs Property. J. Stat. Phys. 1999;95:367–392. doi: 10.1023/A:1004541830999.

- 11. Gaspard P. Time-Reversed Dynamical Entropy and Irreversibility in Markovian Random Processes. J. Stat. Phys. 2004;117:599–615. doi: 10.1007/s10955-004-3455-1.

- 12. Seifert U. Entropy production along a stochastic trajectory and an integral fluctuation theorem. Phys. Rev. Lett. 2005;95:040602. doi: 10.1103/PhysRevLett.95.040602.

- 13. Nardini C., Fodor É., Tjhung E., van Wijland F., Tailleur J., Cates M.E. Entropy Production in Field Theories without Time-Reversal Symmetry: Quantifying the Non-Equilibrium Character of Active Matter. Phys. Rev. X. 2017;7:021007. doi: 10.1103/PhysRevX.7.021007.

- 14. Landi G.T., Tomé T., de Oliveira M.J. Entropy production in linear Langevin systems. J. Phys. A Math. Theor. 2013;46:395001. doi: 10.1088/1751-8113/46/39/395001.

- 15. Munakata T., Rosinberg M.L. Entropy production and fluctuation theorems for Langevin processes under continuous non-Markovian feedback control. Phys. Rev. Lett. 2014;112:180601. doi: 10.1103/PhysRevLett.112.180601.

- 16. Loos S.A.M., Klapp S.H.L. Heat flow due to time-delayed feedback. Sci. Rep. 2019;9:2491. doi: 10.1038/s41598-019-39320-0.

- 17. Ouldridge T.E., Brittain R.A., ten Wolde P.R. The power of being explicit: Demystifying work, heat, and free energy in the physics of computation. In: Wolpert D.H., editor. The Energetics of Computing in Life and Machines. SFI Press; Santa Fe, NM, USA: 2018.

- 18. Rodenfels J., Neugebauer K.M., Howard J. Heat Oscillations Driven by the Embryonic Cell Cycle Reveal the Energetic Costs of Signaling. Dev. Cell. 2019;48:646–658. doi: 10.1016/j.devcel.2018.12.024.

- 19. Song Y., Park J.O., Tanner L., Nagano Y., Rabinowitz J.D., Shvartsman S.Y. Energy budget of Drosophila embryogenesis. Curr. Biol. 2019;29:R566–R567. doi: 10.1016/j.cub.2019.05.025.

- 20. Horowitz J.M., Gingrich T.R. Thermodynamic uncertainty relations constrain non-equilibrium fluctuations. Nat. Phys. 2020;16:15–20. doi: 10.1038/s41567-019-0702-6.

- 21. Dorosz S., Pleimling M. Entropy production in the nonequilibrium steady states of interacting many-body systems. Phys. Rev. E. 2011;83:031107. doi: 10.1103/PhysRevE.83.031107.

- 22. Shannon C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948;27:379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x.

- 23. Esposito M., Van den Broeck C. Three faces of the second law. I. Master equation formulation. Phys. Rev. E. 2010;82:011143. doi: 10.1103/PhysRevE.82.011143.

- 24. Lebowitz J.L., Spohn H. A Gallavotti–Cohen-Type Symmetry in the Large Deviation Functional for Stochastic Dynamics. J. Stat. Phys. 1999;95:333–365. doi: 10.1023/A:1004589714161.

- 25. Kullback S., Leibler R.A. On Information and Sufficiency. Ann. Math. Stat. 1951;22:79–86. doi: 10.1214/aoms/1177729694.

- 26. Diana G., Esposito M. Mutual entropy production in bipartite systems. J. Stat. Mech. Theory Exp. 2014;2014:P04010. doi: 10.1088/1742-5468/2014/04/P04010.

- 27. Roldán E., Neri I., Dörpinghaus M., Meyr H., Jülicher F. Decision Making in the Arrow of Time. Phys. Rev. Lett. 2015;115:250602. doi: 10.1103/PhysRevLett.115.250602.

- 28. Wissel C. Manifolds of equivalent path integral solutions of the Fokker-Planck equation. Z. Phys. B Condens. Matter. 1979;35:185–191. doi: 10.1007/BF01321245.

- 29. Pavliotis G.A. Stochastic Processes and Applications—Diffusion Processes, the Fokker-Planck and Langevin Equations. Springer; New York, NY, USA: 2014.

- 30. Wang J. Landscape and flux theory of non-equilibrium dynamical systems with application to biology. Adv. Phys. 2015;64:1–137.

- 31. Seifert U. Lecture Notes: Soft Matter. From Synthetic to Biological Materials. 39th IFF Spring School, Institute of Solid State Research, Jülich; 2008. Available online: https://www.itp2.uni-stuttgart.de/dokumente/b5_seifert_web.pdf (accessed on 2 November 2020).

- 32. Pietzonka P., Seifert U. Entropy production of active particles and for particles in active baths. J. Phys. A Math. Theor. 2017;51:01LT01.

- 33. Onsager L., Machlup S. Fluctuations and Irreversible Processes. Phys. Rev. 1953;91:1505–1512.

- 34. Täuber U.C. Critical Dynamics: A Field Theory Approach to Equilibrium and Non-Equilibrium Scaling Behavior. Cambridge University Press; Cambridge, UK: 2014.

- 35. Cugliandolo L.F., Lecomte V. Rules of calculus in the path integral representation of white noise Langevin equations: The Onsager–Machlup approach. J. Phys. A Math. Theor. 2017;50:345001. doi: 10.1088/1751-8121/aa7dd6.

- 36. Fodor É., Nardini C., Cates M.E., Tailleur J., Visco P., van Wijland F. How far from equilibrium is active matter? Phys. Rev. Lett. 2016;117:038103. doi: 10.1103/PhysRevLett.117.038103.

- 37. Caprini L., Marconi U.M.B., Puglisi A., Vulpiani A. The entropy production of Ornstein–Uhlenbeck active particles: A path integral method for correlations. J. Stat. Mech. Theory Exp. 2019;2019:053203. doi: 10.1088/1742-5468/ab14dd.

- 38. Lesne A. Shannon entropy: A rigorous notion at the crossroads between probability, information theory, dynamical systems and statistical physics. Math. Struct. Comput. Sci. 2014;24:e240311. doi: 10.1017/S0960129512000783.

- 39. Zhang X.J., Qian H., Qian M. Stochastic theory of nonequilibrium steady states and its applications. Part I. Phys. Rep. 2012;510:1–86. doi: 10.1016/j.physrep.2011.09.002.

- 40. Herpich T., Cossetto T., Falasco G., Esposito M. Stochastic thermodynamics of all-to-all interacting many-body systems. New J. Phys. 2020;22:063005. doi: 10.1088/1367-2630/ab882f.

- 41. Lacoste D., Lau A.W., Mallick K. Fluctuation theorem and large deviation function for a solvable model of a molecular motor. Phys. Rev. E. 2008;78:011915. doi: 10.1103/PhysRevE.78.011915.

- 42. Magnus W., Oberhettinger F., Soni R.P. Formulas and Theorems for the Special Functions of Mathematical Physics. Springer; Berlin, Germany: 1966.

- 43. Galassi M., Davies J., Theiler J., Gough B., Jungman G., Alken P., Booth M., Rossi F. GNU Scientific Library Reference Manual. 3rd ed. Network Theory Ltd.; 2009. p. 592. Available online: https://www.gnu.org/software/gsl/ (accessed on 27 October 2020).

- 44. Van den Broeck C., Esposito M. Three faces of the second law. II. Fokker-Planck formulation. Phys. Rev. E. 2010;82:011144. doi: 10.1103/PhysRevE.82.011144.

- 45. Risken H., Frank T. The Fokker-Planck Equation—Methods of Solution and Applications. Springer; Berlin/Heidelberg, Germany: 1996.

- 46. Maes C., Redig F., Moffaert A.V. On the definition of entropy production, via examples. J. Math. Phys. 2000;41:1528–1554. doi: 10.1063/1.533195.

- 47. Spinney R.E., Ford I.J. Entropy production in full phase space for continuous stochastic dynamics. Phys. Rev. E. 2012;85:051113. doi: 10.1103/PhysRevE.85.051113.

- 48. Andrieux D., Gaspard P., Ciliberto S., Garnier N., Joubaud S., Petrosyan A. Thermodynamic time asymmetry in non-equilibrium fluctuations. J. Stat. Mech. Theory Exp. 2008;2008:P01002. doi: 10.1088/1742-5468/2008/01/P01002.

- 49. Reimann P., Van den Broeck C., Linke H., Hänggi P., Rubi J.M., Pérez-Madrid A. Giant Acceleration of Free Diffusion by Use of Tilted Periodic Potentials. Phys. Rev. Lett. 2001;87:010602. doi: 10.1103/PhysRevLett.87.010602.

- 50. Pigolotti S., Neri I., Roldán É., Jülicher F. Generic properties of stochastic entropy production. Phys. Rev. Lett. 2017;119:140604. doi: 10.1103/PhysRevLett.119.140604.

- 51. Neri I., Roldán É., Pigolotti S., Jülicher F. Integral fluctuation relations for entropy production at stopping times. J. Stat. Mech. Theory Exp. 2019;2019:104006. doi: 10.1088/1742-5468/ab40a0.

- 52. Horsthemke W., Lefever R. Noise-Induced Transitions: Theory and Applications in Physics, Chemistry, and Biology. Springer; Berlin/Heidelberg, Germany: 1984.

- 53. Schnitzer M.J. Theory of continuum random walks and application to chemotaxis. Phys. Rev. E. 1993;48:2553–2568. doi: 10.1103/PhysRevE.48.2553.

- 54. Yang S.X., Ge H. Decomposition of the entropy production rate and nonequilibrium thermodynamics of switching diffusion processes. Phys. Rev. E. 2018;98:012418. doi: 10.1103/PhysRevE.98.012418.

- 55. Doi M. Second quantization representation for classical many-particle system. J. Phys. A Math. Gen. 1976;9:1465–1477. doi: 10.1088/0305-4470/9/9/008.

- 56. Peliti L. Path integral approach to birth-death processes on a lattice. J. Phys. 1985;46:1469–1483. doi: 10.1051/jphys:019850046090146900.

- 57. Täuber U.C., Howard M., Vollmayr-Lee B.P. Applications of field-theoretic renormalization group methods to reaction-diffusion problems. J. Phys. A Math. Gen. 2005;38:R79–R131. doi: 10.1088/0305-4470/38/17/R01.

- 58. Smith E., Krishnamurthy S. Path-reversal, Doi-Peliti generating functionals, and dualities between dynamics and inference for stochastic processes. arXiv. 2018. arXiv:1806.02001.

- 59. Bordeu I., Amarteifio S., Garcia-Millan R., Walter B., Wei N., Pruessner G. Volume explored by a branching random walk on general graphs. Sci. Rep. 2019;9:15590. doi: 10.1038/s41598-019-51225-6.

- 60. Lazarescu A., Cossetto T., Falasco G., Esposito M. Large deviations and dynamical phase transitions in stochastic chemical networks. J. Chem. Phys. 2019;151:064117. doi: 10.1063/1.5111110.

- 61. Pausch J., Pruessner G. Is actin filament and microtubule growth reaction- or diffusion-limited? J. Stat. Mech. Theory Exp. 2019;2019:053501. doi: 10.1088/1742-5468/ab081c.