Abstract

The ability to inhibit motor responses is crucial for daily activities. However, whether brain networks connecting spatially distinct brain regions can explain individual differences in motor inhibition is unknown. We therefore took a graph-theoretic perspective to examine the relationship between the topological organization of functional brain networks and motor inhibition. We analyzed data from 141 healthy adults aged 20 to 78 years who underwent resting-state functional magnetic resonance imaging and, outside the scanner, performed a stop-signal task and neuropsychological assessments. For each of 17 functional brain networks, we estimated graph-theoretic properties of within-network connectivity and between-network connectivity. We then used multiple linear regression to examine how these graph-theoretic properties were associated with motor inhibition. Between-network connectivity of the salient ventral attention network and the dorsal attention network explained the highest and second-highest variance in individual differences in motor inhibition. These two networks span brain regions of the frontal-cingulate-parietal network, suggesting that interactions among these networks are also important for motor inhibition.

Introduction

The ability to refrain from an action when a prepared response is no longer appropriate is a crucial survival skill. It is therefore important to evaluate an individual’s ability to withhold an action [1]. In the laboratory, a conventional paradigm for evaluating such inhibitory efficacy is the stop-signal task (SST) [2, 3]. The SST assesses motor inhibition by instructing participants to withhold a motor response, and how effectively they withhold it is quantified as the stop-signal reaction time (SSRT) [4]; a longer SSRT indicates poorer inhibition. Using this paradigm, researchers have observed reduced motor inhibition in various clinical disorders, such as attention deficit hyperactivity disorder [5] and substance use disorders [6, 7]. Motor inhibition deficits are also found in normal aging [8–13].

Neuroimaging research has identified brain regions associated with motor inhibition, such as the right inferior frontal cortex (rIFC; [14, 15]), right frontal-opercular regions (including the right inferior frontal cortex and the right anterior insula; [16]), subcortical regions (thalamus and basal ganglia; [17]), and the presupplementary motor area [18]. These studies used task-evoked functional magnetic resonance imaging (fMRI), in which individuals’ brain activations are quantified and correlated with task performance. However, some individuals, such as clinical patients or older adults, may have difficulty completing the SST, a time-consuming computer task, in a scanner. An alternative neuroimaging approach that can estimate individual performance differences without administering the SST inside an MRI scanner would therefore be useful both for clinical applications (e.g., [19, 20]) and for assessing individual differences in the normal population (e.g., [21, 22]). Resting-state fMRI measures brain activity in a task-free setting and thereby estimates an individual’s intrinsic functional organization of the brain [23]. Neuroimaging data acquired at rest may thus help capture individual differences in cognitive performance (e.g., [24]).

Resting-state fMRI (rs-fMRI) reflects the spontaneous but organized synchrony of low-frequency fluctuations in blood oxygen level-dependent (BOLD) signals across brain regions at rest [25]. Analyzing the temporal correlations between spontaneous BOLD signals in different brain regions allows functional connectivity to be quantified [26] and intrinsic connectivity networks to be investigated [27]. Important advantages of rs-fMRI are that it minimizes confounds arising from differences in task engagement, can be used to detect differences in brain function between patients and healthy populations, and allows differences in functional connectivity to be related to clinical applications.

Given these advantages, a few prior studies have used rs-fMRI to correlate intrinsic functional connectivity with motor inhibition performance measured by the SST. These studies, however, used different analysis methods. For example, Tian and Ren [28] used regional homogeneity (ReHo) to examine the relationship between motor inhibition performance and spontaneous fluctuations in brain activity. ReHo is a voxel-based measure that evaluates the similarity, or synchronization, between the time series of a given voxel and those of its nearest neighbors [29, 30]. Tian and Ren [28] found that local synchronization of spontaneous activity in three regions of the default mode network (the bilateral medial prefrontal cortex, the bilateral precuneus, and the left inferior parietal lobule) was associated with SST performance. ReHo can be understood as an index of network centrality that characterizes the voxel-wise consistency of signals within a region of the human functional connectome.
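ReHo is conventionally computed as Kendall’s coefficient of concordance (W) over the time series of a voxel and its neighbors. The Python sketch below illustrates that computation for a list of equal-length time series. It is not part of the present study’s pipeline; it assumes a simple average-rank treatment of ties without the tie-correction term, and the function names are hypothetical.

```python
from statistics import mean


def rank_with_ties(values):
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def kendalls_w(series):
    """Kendall's W for m equal-length time series (e.g., a voxel and its neighbors).

    No tie-correction term is applied (a simplifying assumption).
    """
    m, n = len(series), len(series[0])
    ranked = [rank_with_ties(ts) for ts in series]
    # R_t: sum over the m series of the rank at time point t.
    rank_sums = [sum(r[t] for r in ranked) for t in range(n)]
    r_bar = mean(rank_sums)
    s = sum((r - r_bar) ** 2 for r in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))
```

For a 3 × 3 × 3 neighborhood, `series` would hold 27 time series; W = 1 indicates identical rank orderings over time, and W near 0 indicates no concordance.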

Other analysis methods, such as the fractional amplitude of low-frequency fluctuations (fALFF) in brain activity, have also been related to SSRT. For example, Hu and Chao [31] used fALFF and found that fALFF of the pre-supplementary motor area (pre-SMA) and sensorimotor area was negatively correlated with SSRT. More recently, Lee and Hsieh [12] combined these two methods (ReHo and fALFF) and reported that the bilateral inferior frontal gyrus and parts of the default mode network were correlated with individual differences in SSRT.

Beyond the local and whole-brain spontaneous fluctuations measured by ReHo and fALFF, other methods, such as independent component analysis (ICA), which decomposes whole-brain voxel signals into spatially and temporally independent components, have also been used to relate individual brain differences to motor inhibition. For example, Tian and Kong [32] applied ICA and found that components of the motor network, motor control network, visual network, dorsal attention network, and task-activation network were associated with individual differences in SSRT. These results suggest that spontaneous fluctuations in brain activity are associated with individual differences in motor inhibition. However, neither intrinsic activity in clusters of voxels (e.g., ReHo) [28] nor whole-brain spontaneous fluctuations (e.g., fALFF and ICA) [12, 31, 32] provide information about the organization of functional networks.

Understanding the organizational principles of brain networks may be key to understanding the interplay between functional segregation and integration of large-scale networks, and ultimately the emergence of cognition and adaptive behavior. Investigations of large-scale dynamic functional networks derived from brain imaging data (e.g., see Fig 1A) have yielded valuable insights into the topological organization of the human brain in health and disease [33]. Graph-theoretic methods can identify the topological organization of brain networks and thereby characterize brain organization and functional network properties [34, 35]. Recently, Kumar and colleagues [24] used a graph-theoretic quantity (maximum flow between nodes, with capacities defined by transfer entropy) to predict attentional ability measured with the SST. However, their study did not investigate the association between functional network organization properties and motor inhibition. The goal of this study is therefore to investigate whether functional network organization properties are associated with motor inhibition performance.

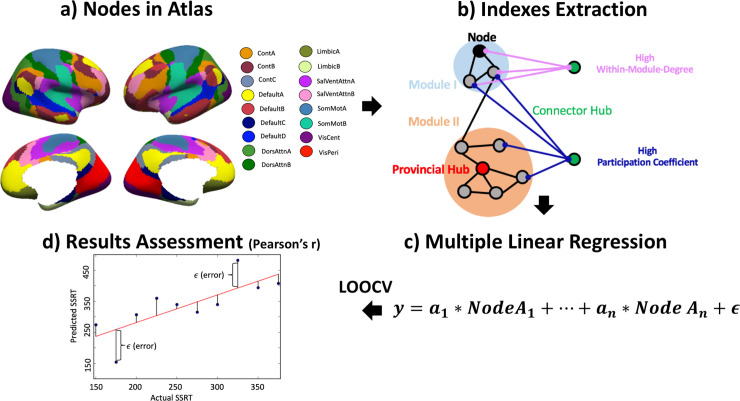

Fig 1. Experimental method.

(a) Nodes in the atlas: nodes from the Schaefer and Kong [36] atlas. (b) Index extraction: calculation of the within-module degree (WMD) and participation coefficient (PC) of each node. WMD reflects how strongly a node is connected within its own module (network), whereas PC reflects how its connections are distributed across other networks. (c) Multiple linear regression: the nodal PCs or WMDs of each network were used as a predictor pattern, and multiple linear regression was used to relate a linear combination of this network property pattern to the SSRT. (d) Result assessment: each prediction model was tested by Pearson’s correlation between the predicted and actual stop-signal reaction time (SSRT).

Graph-theoretic network analysis [37] provides a set of measures for estimating and analyzing the properties and functions of complex networks (see Fig 1B). For graph-theoretic analysis, the brain is modeled as a complex network in which brain regions are nodes and connections between regions are edges. This approach can assess the network properties and functions of different brain regions. For example, the PC of each node in a network represents the degree of its between-network connectivity. Nodes with high PC are called “connector hubs”, and networks with more and stronger connector hubs are presumed to mediate interactions between networks. In contrast, nodes with high within-network connectivity, as assessed by the WMD, are called “provincial hubs”, and networks with more provincial hubs are presumed to promote within-network interaction in support of the network’s particular function [38]. These two indexes (PC and WMD) can not only reveal the connectivity properties of networks in healthy populations (e.g., [39]) but also distinguish normal brain organization from abnormal organization, as in frontal lobe epilepsy [40]. The principal contribution of our study is to examine within- and between-module network connectivity in relation to motor inhibition. To this end, we used multiple linear regression (see Fig 1C) to identify the relationship between brain network properties and motor inhibition.

The goal of this study was to examine the relationship between the topological organization properties of functional brain networks and inter-individual differences in motor inhibition. Specifically, we first performed graph-theoretic network analysis on rs-fMRI data to measure functional network organization properties. We then used multiple linear regression with leave-one-out cross-validation to estimate the relationship between graph metrics and SSRT (see Fig 1C). Finally, we used Pearson’s correlation to test whether, across subjects, the predicted SSRT correlated with the actual SSRT (see Fig 1D). The result was further verified with a permutation test (10,000 iterations) that derived the p-value from an empirical null distribution. We aimed to test whether within-network or between-network connectivity in large-scale functional networks explains individual differences in motor inhibition.

Method and materials

This study protocol was approved by the Human Research Ethics Committee of National Cheng Kung University, Tainan, Taiwan, R.O.C. [Contract No. 104–004] to protect participants’ rights in accordance with the Declaration of Helsinki and the University’s research regulations. All participants signed an informed consent form before participating in the experiments.

Participants

One hundred eighty-three right-handed participants without a history of psychological or neurological disorders were recruited. Participants were assessed with the Montreal Cognitive Assessment (MoCA; [41]) and the Beck Depression Inventory-II (BDI-II; [42]), and those scoring below 22 on the MoCA (n = 4) or above 13 on the BDI-II (n = 7) were excluded before the final analysis. An additional 31 participants were excluded because of MRI technical problems, failure to learn the behavioral task (SST), incomplete data, or outlying behavioral performance according to the whisker method (below the 25th percentile − 1.5 × interquartile range or above the 75th percentile + 1.5 × interquartile range). A total of 141 healthy volunteers aged 20–78 years (67 females; mean age 46.34 years, SD = 16.18) were therefore included in the final analysis. Table 1 shows their demographic information. All participants completed the behavioral task and the rs-fMRI scan during the daytime and were asked to stare at a white cross during rs-fMRI acquisition.
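The whisker exclusion rule can be sketched in a few lines of Python. This is a minimal illustration, assuming quartiles computed by linear interpolation (`statistics.quantiles` with `method="inclusive"`); neither the quartile definition nor the exact behavioral measure screened in the original analysis is specified, so borderline cases may differ between software packages, and the function name is hypothetical.

```python
from statistics import quantiles


def whisker_outliers(values, k=1.5):
    """Return the values outside Tukey's whiskers [Q1 - k*IQR, Q3 + k*IQR]."""
    # Q1, median, Q3 by linear interpolation (an assumed quartile definition).
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]
```

For example, `whisker_outliers([10, 11, 12, 13, 14, 15, 16, 17, 18, 100])` returns `[100]`.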

Table 1. Demographic information of the 141 participants.

| Age group (years) | Age (mean/SD) | Sample size (male/female) | MoCA (mean/SD) | BDI-II (mean/SD) |
|---|---|---|---|---|
| 20–30 | 24.09/2.91 | 19/13 | 28.56/0.98 | 5.06/3.75 |
| 30–40 | 33.64/2.82 | 9/9 | 27.39/2.00 | 6.39/4.38 |
| 40–50 | 44.70/3.01 | 14/9 | 26.39/2.15 | 6.35/4.05 |
| 50–60 | 55.19/3.06 | 14/21 | 26.86/1.88 | 5.23/4.02 |
| 60–70 | 64.50/2.36 | 12/13 | 27.12/1.90 | 4.08/4.30 |
| 70–80 | 73.13/2.52 | 6/2 | 25.88/2.30 | 2.88/2.36 |

SD: standard deviation; MoCA: Montreal Cognitive Assessment; BDI-II: Beck Depression Inventory II

Behavioral task: Stop-signal task

Participants were instructed to respond as quickly and accurately as possible to the on-screen target stimuli “O” and “X” by pressing the “z” and “/” keys on a keyboard with their left and right index fingers, respectively. They were also asked to inhibit their response whenever they heard an auditory stop signal (a 500 Hz “beep” lasting 300 ms) presented after the target stimulus. The white target stimulus (2 cm in size, subtending a visual angle of 0.64°) appeared at the center of a black screen.

Two practice blocks preceded the formal experiment. In the first practice block, participants performed a choice reaction-time task and tried to respond to the stimulus as quickly and accurately as possible. The “beep” sounds were still presented in the background, but participants were asked to ignore them. In the second practice block, participants were instructed to stop their response immediately when they heard the “beep” following stimulus onset. Participants were told not to slow down their responses while waiting for the stop signal to occur.

After the practice, the formal experiment began, with the same settings and rules as the second practice block. The formal experiment comprised 5 blocks (40 stop trials and 100 go trials per block). The stop-signal delay (SSD) was controlled by two interleaved staircases with initial SSDs of 150 ms and 350 ms. The SSD then varied according to the participant’s response on stop trials: it increased by 50 ms after a successful stop and decreased by 50 ms after a failed stop [43]. The SSD was constrained to the range 0–800 ms. This staircase procedure causes the probability of successful stopping to converge to about 50%. The inter-stimulus interval varied from 1,300 to 4,800 ms, and the task took approximately 30 min to complete, including instructions and practice.
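The tracking procedure can be illustrated with a small Python sketch. The 50 ms step, the 0–800 ms bounds, and the two starting delays (150 and 350 ms) come from the text; the random choice between staircases and the toy race-model participant in `simulate` (normally distributed go RTs and a fixed SSRT of 220 ms) are hypothetical assumptions added only to show that the proportion of successful stops settles near 50%.

```python
import random


class SSDStaircase:
    """One tracking staircase for the stop-signal delay (SSD, in ms)."""

    def __init__(self, start_ssd, step=50, min_ssd=0, max_ssd=800):
        self.ssd = start_ssd
        self.step = step
        self.min_ssd = min_ssd
        self.max_ssd = max_ssd

    def update(self, stopped):
        """Raise SSD after a successful stop, lower it after a failed stop; clamp."""
        delta = self.step if stopped else -self.step
        self.ssd = min(self.max_ssd, max(self.min_ssd, self.ssd + delta))
        return self.ssd


def simulate(n_stop_trials=200, ssrt=220.0, seed=0):
    """Toy race-model participant (hypothetical parameters).

    Returns the proportion of successful stops across both staircases.
    """
    rng = random.Random(seed)
    staircases = [SSDStaircase(150), SSDStaircase(350)]
    successes = 0
    for _ in range(n_stop_trials):
        sc = rng.choice(staircases)          # interleave the two staircases
        go_rt = rng.gauss(600.0, 100.0)      # hypothetical go-RT distribution (ms)
        stopped = sc.ssd + ssrt < go_rt      # stop process finishes first
        successes += stopped
        sc.update(stopped)
    return successes / n_stop_trials
```

Because each success raises and each failure lowers the delay by the same step, successes and failures stay roughly balanced, which is why the procedure tracks the 50% stopping point.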

SSRT was calculated with the block-wise integration method [44]. Verbruggen and Chambers [44] suggested that computing SSRT with the integration method block by block reduces bias arising from the skewness of the go-RT distribution and from gradual response slowing over the task; this approach is considered the least biased currently available for SSRT estimation. For each block, we subtracted the mean SSD from the nth go RT, where n was the number of RTs in the go-RT distribution multiplied by p(response|stop signal). The block-wise integration SSRT was the mean of the five block values.
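A minimal Python sketch of the block-wise integration calculation described above follows. It assumes that go omissions are excluded from the go-RT distribution and that n is rounded up to the next integer rank; published implementations differ on both points (for example, some replace omissions with the maximum RT), so this is an illustration rather than the exact procedure used here. Function names are hypothetical.

```python
import math
from statistics import mean


def integration_ssrt_block(go_rts, p_respond_given_stop, ssds):
    """Integration-method SSRT for one block (ms).

    go_rts: RTs of go trials with a response (omissions excluded, an assumption).
    p_respond_given_stop: proportion of stop trials on which a response was made.
    ssds: stop-signal delays of the block's stop trials.
    """
    rts = sorted(go_rts)
    # nth go RT with n = (number of go RTs) x p(respond | stop); 1-based rank,
    # rounded up (an assumption) and kept within the valid range.
    n = max(1, math.ceil(len(rts) * p_respond_given_stop))
    return rts[n - 1] - mean(ssds)


def blockwise_ssrt(blocks):
    """Mean of per-block SSRTs; blocks is a list of (go_rts, p, ssds) tuples."""
    return mean(integration_ssrt_block(*block) for block in blocks)
```

Because averaging is linear, the group means in Table 2 obey the same arithmetic: 611.51 ms − 391.95 ms = 219.56 ms, the reported mean SSRT.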

Image parameters and data analysis

Image acquisition

MRI images were acquired on a GE MR750 3T scanner (GE Healthcare, Waukesha, WI, USA) at the Mind Research Imaging Center of National Cheng Kung University. Resting-state functional images were acquired with a gradient-echo echo-planar imaging (EPI) pulse sequence (TR = 2,000 ms, TE = 30 ms, flip angle = 77°, 64 × 64 matrix, FOV = 22 × 22 cm², slice thickness = 4 mm, no gap, voxel size = 3.4375 mm × 3.4375 mm × 4 mm, 32 axial slices covering the entire brain). A total of 245 volumes were acquired; the first five served as dummy scans and were discarded to avoid T1 equilibrium effects. Participants were instructed to remain awake with their eyes open and to fixate on a central white cross shown on the screen during the scans. High-resolution anatomical T1-weighted images were acquired with a fast spoiled gradient-recalled (fast-SPGR) sequence consisting of 166 axial slices (TR = 7.6 ms, TE = 3.3 ms, flip angle = 12°, 224 × 224 matrix, slice thickness = 1 mm), lasting 218 s.

For quality control, participants whose maximum head motion exceeded 2.5 mm or 2.5° were rescanned. No maximum number of censored volumes was set as an inclusion criterion; instead, the number of volumes scrubbed for subject motion exceeding 0.9 mm was included as a covariate to avoid confounding.
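For illustration, the Python sketch below computes a framewise displacement (FD) trace, its mean, and the number of volumes exceeding the 0.9 mm threshold mentioned above. It uses the FD definition of Power and colleagues (rotations converted to arc length on a 50 mm sphere) as a stand-in. This is an assumption: CONN’s artifact detection uses its own composite motion measure, so these values would not match CONN output exactly. Function names are hypothetical.

```python
def framewise_displacement(params, radius_mm=50.0):
    """Power-style FD (mm) per volume; the first volume is assigned FD = 0.

    params: per-volume tuples (tx, ty, tz, rx, ry, rz), translations in mm and
    rotations in radians. Rotations become arc length on a radius_mm sphere.
    """
    fd = [0.0]
    for prev, cur in zip(params, params[1:]):
        d = [abs(c - p) for c, p in zip(cur, prev)]
        fd.append(sum(d[:3]) + radius_mm * sum(d[3:]))
    return fd


def motion_summary(params, threshold_mm=0.9):
    """Return (mean FD over frame-to-frame transitions, volumes above threshold)."""
    fd = framewise_displacement(params)
    mean_fd = sum(fd[1:]) / max(1, len(fd) - 1)
    n_flagged = sum(1 for v in fd if v > threshold_mm)
    return mean_fd, n_flagged
```

The first returned value is one way to define "mean frame-to-frame motion" for the motion-controlled correlations; the second is the kind of per-subject scrubbing count that can be entered as a covariate.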

Image pre-processing

Functional images were preprocessed using the CONN toolbox 18a (www.nitrc.org/projects/conn) and SPM 12 (http://www.fil.ion.ucl.ac.uk/spm) implemented in Matlab (The MathWorks, Inc., Natick, MA, USA), following a protocol modified from Geerligs and Tsvetanov [45]. First, slice timing correction, realignment, normalization, and smoothing with an 8-mm Gaussian kernel were performed. Second, we calculated nuisance covariates (denoted R), including the movement parameters (translations along the x, y, and z axes and rotations about the three axes: roll, yaw, and pitch), the white matter (WM) signal, and the cerebrospinal fluid (CSF) signal. Third, at the subject level, we regressed out bad frames detected by head-motion censoring [46] together with the expanded nuisance set $[R, R^2, R_{t-1}, R_{t-1}^2]$, where t and t−1 refer to the current and immediately preceding time points [47]. Finally, a band-pass filter (0.008–0.1 Hz) was applied simultaneously to the nuisance covariates and fMRI data [48]. To further control for potential motion confounds, we computed partial Pearson correlations between observed and predicted scores while controlling for head motion (defined as the average of the mean frame-to-frame motion).
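The expanded nuisance set $[R, R^2, R_{t-1}, R_{t-1}^2]$ can be constructed as in the Python sketch below. It assumes that R is a list of per-volume rows and that the lagged copy is zero at the first volume (some pipelines instead repeat the first row); the function name is hypothetical. With the eight covariates listed above (six motion parameters, WM, and CSF), it yields 32 regressors. The regression and band-pass filtering steps themselves are not shown.

```python
def expand_nuisance(R):
    """Expand nuisance regressors R (T rows x p columns) to [R, R^2, R_{t-1}, R^2_{t-1}].

    The lagged copy is zero at the first time point (an assumption).
    Returns T rows x 4p columns.
    """
    expanded = []
    for t, row in enumerate(R):
        cur = list(row)
        prev = list(R[t - 1]) if t > 0 else [0.0] * len(cur)
        expanded.append(
            cur                       # R
            + [v * v for v in cur]    # R^2
            + prev                    # R_{t-1}
            + [v * v for v in prev]   # R^2_{t-1}
        )
    return expanded
```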

Identifying functional networks and their organizational properties

We used a whole-brain parcellation template [36] to define cortical regions of interest (ROIs) and calculated the functional connectivity between these ROIs. The template contains 400 nodes, which are grouped into 17 networks: four subnetworks of the default mode network, two of the somatomotor network, three of the control network, two of the visual network, two of the dorsal attention network, two of the salient ventral attention network, and two of the limbic network (see S1 Table for details). The PC and WMD of each node were calculated following Guimera and Amaral [49]. For a review of these and related graph measures, see Rubinov and Sporns [50].

Specifically, PC quantifies how a node’s connections are distributed across networks and ranges from 0 to 1. A node connected only to nodes within its own network has a PC of 0, whereas a node whose connections are evenly distributed across many networks has a PC approaching 1 [51]. Because PC is normalized by the node’s degree, it reflects the distribution rather than the total amount of a node’s connectivity. The PC of node i is defined as

$$\mathrm{PC}_i = 1 - \sum_{s=1}^{N_M} \left( \frac{K_{is}}{K_i} \right)^2,$$

where $K_i$ is the sum of the connectivity weights of node i, $K_{is}$ is the sum of the connectivity weights between node i and the nodes of cortical network s, and $N_M$ is the total number of networks [38, 39]. WMD was computed on binarized correlation matrices, in which the strongest connections up to a given density threshold were set to 1 and all others to 0. WMD values were calculated across a range of density thresholds (0.10 to 0.15) and averaged across thresholds. The WMD of node i, which belongs to network s, is

$$\mathrm{WMD}_i = \frac{K_{is} - \overline{CW}_s}{\sigma_{CW_s}},$$

where $K_{is}$ is here the number of connections between node i and the other ROIs of its own network s, $\overline{CW}_s$ is the average number of such within-network connections over all ROIs of network s, and $\sigma_{CW_s}$ is the SD of that number [38, 39]. In other words, each ROI’s WMD is its within-network degree (number of intra-network connections) z-scored against the mean and SD of the within-network degree of its own cortical functional network, which normalizes WMD relative to that network. Higher WMD values indicate that a node has more connections within its assigned network than the other nodes of that network.
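The Python sketch below implements the PC and WMD formulas for a connectivity matrix `W` (a list of lists) and a list of network labels, one per node. Several details are assumptions rather than reported choices: negative weights are set to zero before computing PC, density thresholding keeps the strongest fraction of off-diagonal edges, the threshold grid (0.10 to 0.15 in steps of 0.01) is hypothetical within the reported range, the population SD is used, and nodes of a network whose within-network degrees have zero SD receive WMD = 0. Function names are hypothetical.

```python
from statistics import mean, pstdev


def participation_coefficient(W, labels):
    """PC_i = 1 - sum_s (K_is / K_i)^2 for a weighted, symmetric matrix W."""
    n = len(W)
    nets = sorted(set(labels))
    pc = []
    for i in range(n):
        # Positive off-diagonal weights only (an assumption).
        w = [max(0.0, W[i][j]) if j != i else 0.0 for j in range(n)]
        k_i = sum(w)
        if k_i == 0:
            pc.append(0.0)
            continue
        k_is = [sum(w[j] for j in range(n) if labels[j] == s) for s in nets]
        pc.append(1.0 - sum((k / k_i) ** 2 for k in k_is))
    return pc


def binarize_by_density(W, density):
    """Set the strongest `density` fraction of off-diagonal edges to 1, others to 0."""
    n = len(W)
    edges = sorted(((W[i][j], i, j) for i in range(n) for j in range(i + 1, n)),
                   reverse=True)
    A = [[0] * n for _ in range(n)]
    for _, i, j in edges[:round(density * len(edges))]:
        A[i][j] = A[j][i] = 1
    return A


def within_module_degree(W, labels, densities=(0.10, 0.11, 0.12, 0.13, 0.14, 0.15)):
    """WMD z-score of each node, averaged across density thresholds."""
    n = len(W)
    totals = [0.0] * n
    for d in densities:
        A = binarize_by_density(W, d)
        # K_is: number of edges from node i to other nodes of its own network.
        k_own = [sum(A[i][j] for j in range(n) if labels[j] == labels[i])
                 for i in range(n)]
        for i in range(n):
            peers = [k_own[j] for j in range(n) if labels[j] == labels[i]]
            sd = pstdev(peers)
            totals[i] += (k_own[i] - mean(peers)) / sd if sd > 0 else 0.0
    return [t / len(densities) for t in totals]
```

For example, with `W = [[0, 1, 1], [1, 0, 0], [1, 0, 0]]`, all three nodes in one network, and a single density of 0.7, node 0 has within-network degree 2 and nodes 1 and 2 have degree 1, giving WMD ≈ [1.41, −0.71, −0.71].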

Multiple linear regression

To characterize the organization of the functional networks across the whole brain, the nodal participation coefficients (PCs) and within-module degrees (WMDs) of each network were extracted and entered as predictors into separate multiple linear regression models. For example, the Control C network contains 12 nodes, so its PC model had 12 predictors (the 12 nodal PCs), and its WMD model likewise had 12 predictors (Eq 1). A separate model was fitted for each of the 34 network properties (17 networks × 2 properties, PC and WMD). The model for each network is:

$$y_N = \beta_0 + \beta_1\,\mathrm{Node}_{1,N,P} + \beta_2\,\mathrm{Node}_{2,N,P} + \cdots + \beta_k\,\mathrm{Node}_{k,N,P} + \varepsilon \qquad \text{(Eq 1)}$$

where $y_N$ is the SSRT predicted from network N (one of the 17 networks), $\mathrm{Node}_{1,N,P}, \ldots, \mathrm{Node}_{k,N,P}$ are the values of property P for the k nodes of network N, and P is either PC or WMD. All predictors are numerical. $\beta_0$ is the intercept and $\varepsilon$ the residual error; the coefficients $\beta_1, \ldots, \beta_k$ measure the effect of each predictor after accounting for all other predictors in the model, that is, the marginal effects of the predictor variables.
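The Python sketch below fits Eq 1 by ordinary least squares (with an intercept) and generates leave-one-out predictions of the kind used in this study’s cross-validation. To stay dependency-free it solves the normal equations with Gaussian elimination; this is an illustrative choice, and a numerically stabler solver (for example, a QR- or SVD-based least-squares routine) is preferable when nodal predictors are highly correlated. Function names are hypothetical.

```python
def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_ols(X, y):
    """Least-squares [beta_0, beta_1, ..., beta_k] for Eq 1 via the normal equations."""
    Z = [[1.0] + list(row) for row in X]  # prepend the intercept column
    p = len(Z[0])
    ZtZ = [[sum(z[a] * z[b] for z in Z) for b in range(p)] for a in range(p)]
    Zty = [sum(z[a] * yi for z, yi in zip(Z, y)) for a in range(p)]
    return solve(ZtZ, Zty)


def predict(beta, row):
    """Apply Eq 1: beta_0 + sum_j beta_j * Node_j."""
    return beta[0] + sum(bj * xj for bj, xj in zip(beta[1:], row))


def loocv_predictions(X, y):
    """For every participant, train on the other n-1 and predict the left-out one."""
    preds = []
    for i in range(len(y)):
        beta = fit_ols(X[:i] + X[i + 1:], y[:i] + y[i + 1:])
        preds.append(predict(beta, X[i]))
    return preds
```

Here `X` holds one row per participant containing the k nodal PCs (or WMDs) of a network, and `y` holds the participants’ SSRTs.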

We conducted a post-hoc power analysis for each regression model to estimate its observed power. For this analysis, the degrees of freedom and R² were extracted from each multiple linear regression model, and α was set at 0.05. By convention, the observed power of the motion-controlled partial correlation models should be at least 0.80 [52]. Leave-one-out cross-validation was used for model training: for each participant, the model was trained on the remaining n − 1 participants and used to predict the left-out participant’s SSRT [53]. The predictions for each network were then evaluated with Pearson’s correlation (with head motion partialled out) to assess whether, across subjects, the predicted SSRT correlated with the actual SSRT (see Fig 1D). To assess the significance of this correlation, we computed a non-parametric p-value with a permutation test (10,000 iterations; α = 0.05). In each iteration, the actual SSRT values were randomly shuffled, the leave-one-out cross-validation was rerun with the shuffled values, and Pearson’s r was calculated as described above. This produced a null distribution of 10,000 r values, and the p-value was the proportion of null r values equal to or larger than the observed r [54]. Only networks with a significant permutation test and a positive correlation were retained; these were considered robust, successful predictions and are interpreted in the Discussion.
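The evaluation steps in the paragraph above can be sketched in Python as follows: a partial correlation that removes a single covariate (such as mean head motion) from both variables, and a permutation test that reruns a user-supplied statistic on shuffled outcomes. In the study, the statistic would be the whole pipeline (leave-one-out predictions followed by the motion-controlled correlation) applied to shuffled SSRTs; the simple statistic used in the test, the seed, and all names are hypothetical. The p-value is the proportion of null values at least as large as the observed one, as described above; some authors add one to the numerator and denominator to avoid p = 0.

```python
import random
from statistics import mean


def pearson_r(a, b):
    """Pearson's correlation coefficient between two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def residualize(v, covariate):
    """Residuals of v after a simple linear regression on one covariate."""
    mc, mv = mean(covariate), mean(v)
    sxx = sum((c - mc) ** 2 for c in covariate)
    slope = sum((c - mc) * (x - mv) for c, x in zip(covariate, v)) / sxx
    return [x - mv - slope * (c - mc) for x, c in zip(v, covariate)]


def partial_r(a, b, covariate):
    """Correlation of a and b with one covariate (e.g., mean head motion) partialled out."""
    return pearson_r(residualize(a, covariate), residualize(b, covariate))


def permutation_p_value(y, statistic, n_perm=10000, seed=0):
    """Observed statistic and one-sided permutation p-value.

    statistic(y) should rerun the full analysis on outcome vector y; each null
    run uses a shuffled copy of y. p = share of null values >= observed.
    """
    rng = random.Random(seed)
    observed = statistic(y)
    n_extreme = 0
    for _ in range(n_perm):
        shuffled = list(y)
        rng.shuffle(shuffled)
        if statistic(shuffled) >= observed:
            n_extreme += 1
    return observed, n_extreme / n_perm
```

Passing a closure such as `lambda yy: partial_r(loocv_predictions(X, yy), yy, motion)` (with a LOOCV routine and motion vector defined elsewhere) would mirror the study’s design of retraining the model inside every permutation.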

For the retained networks, we further computed the Bayes factor (BF10) to evaluate the strength of evidence for the presence or absence of a correlation between predicted and observed SSRT [55]. BF10 is the ratio of the likelihood of the data under the alternative hypothesis (a correlation is present) to their likelihood under the null hypothesis (no correlation). For instance, BF10 = 4 means that the data are 4 times more likely under the alternative hypothesis than under the null hypothesis. BF10 can be interpreted following Wetzels and Wagenmakers [56], who built on the classification proposed by Jeffreys [57]: 1 < BF10 < 3 constitutes only anecdotal evidence for the alternative hypothesis, whereas 3 < BF10 < 10 constitutes moderate evidence for it. For a detailed explanation of BF10, see [58].
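One standard default Bayes factor for a correlation is Jeffreys’s approximation with a uniform prior on ρ under the alternative hypothesis,

$$\mathrm{BF}_{10} \approx \frac{1}{2}\int_{-1}^{1} \frac{(1-\rho^2)^{(n-1)/2}}{(1-\rho r)^{(2n-3)/2}}\, d\rho,$$

which the Python sketch below evaluates numerically. It is shown only to make the BF10 definition concrete: the software and prior used in this study are not stated, the predicted-observed correlation here was a partial correlation, and the optional one-sided variant (ρ > 0) is an added assumption, so this sketch is not expected to reproduce the reported values exactly. The function name is hypothetical.

```python
import math


def jeffreys_bf10(r, n, one_sided=False, steps=20000):
    """Jeffreys's approximate BF10 for a sample correlation r with n observations.

    Two-sided: uniform prior on rho over (-1, 1) (density 1/2).
    one_sided=True: uniform prior on rho over (0, 1) (density 1).
    The integral is evaluated with the midpoint rule in log space so that
    large n does not overflow.
    """
    lo = 0.0 if one_sided else -1.0
    prior_density = 1.0 if one_sided else 0.5
    h = (1.0 - lo) / steps
    total = 0.0
    for k in range(steps):
        rho = lo + (k + 0.5) * h
        log_f = (0.5 * (n - 1) * math.log1p(-rho * rho)
                 - (n - 1.5) * math.log1p(-rho * r))
        total += math.exp(log_f)
    return prior_density * total * h
```

A BF10 computed this way can then be read against the thresholds above (1 to 3 anecdotal, 3 to 10 moderate).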

Results

Behavioral performance

Table 2 displays the behavioral data (mean ± SE). On go trials, mean accuracy was 90.98 ± 0.81%, and the mean nth go RT was 611.51 ± 9.21 ms. On stop trials, the inhibition (stop-success) rate was 54.57 ± 0.60%, close to the 50% targeted by the staircase procedure. Mean SSRT was 219.56 ± 7.02 ms.

Table 2. Behavioral data.

| Go trials | Accuracy (%) | RT (ms) | Choice error (%) | Omission (%) |
|---|---|---|---|---|
| | 90.98 (0.81) | 611.51 (9.21) | 1.53 (0.12) | 7.49 (0.75) |

| Stop trials | Inhibition (%) | Stop-failure RT (ms) | SSD (ms) | SSRT (ms) |
|---|---|---|---|---|
| | 54.57 (0.60) | 563.55 (8.78) | 391.95 (13.38) | 219.56 (7.02) |

Values are means with standard errors (SE) in parentheses. (1) Go trials: mean of the five blocks’ nth go reaction times (RT), and percentages of choice errors and omissions; (2) stop trials: mean percentage of successful inhibition, mean RT on stop-failure trials, mean of the five blocks’ mean stop-signal delays (SSD; ms), and stop-signal reaction time (SSRT; ms).

Multiple linear regression

Table 3 and Fig 2 show the results of the Pearson’s correlations and permutation tests. The two highest correlations between predicted and actual SSRT were obtained for the participation coefficients (PCs) of the salient ventral attention A network (r = 0.211, permutation p = 0.024) and the dorsal attention A network (r = 0.185, permutation p = 0.041), and both were statistically significant in the permutation test. These results suggest that the network properties of the salient ventral attention A network (BF10 = 4.01, moderate evidence) and the dorsal attention A network (BF10 = 1.96, anecdotal evidence) can predict motor inhibition ability.

Table 3. Summary of correlation results.

| Property | Network | r value | Permutation p-value | Observed power (%) |
|---|---|---|---|---|
| PC | ContA | -5.76 × 10⁻² | 6.01 × 10⁻¹ | 59.52 |
| | ContB | -1.51 × 10⁻¹ | 8.48 × 10⁻¹ | 48.58 |
| | ContC | 3.82 × 10⁻² | 2.72 × 10⁻¹ | 39.69 |
| | DefaultA | -2.20 × 10⁻² | 5.14 × 10⁻¹ | 86.36 |
| | DefaultB | 1.11 × 10⁻¹ | 1.31 × 10⁻¹ | 91.81 |
| | DefaultC | -2.10 × 10⁻³ | 3.94 × 10⁻¹ | 36.66 |
| | DefaultD | -2.47 × 10⁻² | 4.79 × 10⁻¹ | 43.32 |
| | DorsAttnA* | 1.85 × 10⁻¹ | 4.07 × 10⁻² | 90.36 |
| | DorsAttnB | -4.07 × 10⁻² | 5.63 × 10⁻¹ | 60.74 |
| | LimbicB | -3.31 × 10⁻¹ | 9.80 × 10⁻¹ | 10.52 |
| | LimbicA | -1.00 × 10⁻¹ | 6.86 × 10⁻¹ | 29.38 |
| | SalVentAttnA* | 2.11 × 10⁻¹ | 2.39 × 10⁻² | 94.47 |
| | SalVentAttnB | -2.91 × 10⁻¹ | 9.74 × 10⁻¹ | 20.80 |
| | SomMotA | 3.82 × 10⁻² | 3.28 × 10⁻¹ | 90.88 |
| | SomMotB | -4.47 × 10⁻³ | 4.60 × 10⁻¹ | 77.95 |
| | VisCent | -1.21 × 10⁻¹ | 7.88 × 10⁻¹ | 43.48 |
| | VisPeri | -2.14 × 10⁻¹ | 9.33 × 10⁻¹ | 32.03 |
| WMD | ContA | 7.86 × 10⁻² | 1.94 × 10⁻¹ | 80.44 |
| | ContB | -3.00 × 10⁻² | 5.27 × 10⁻¹ | 64.50 |
| | ContC | 8.65 × 10⁻² | 1.55 × 10⁻¹ | 41.37 |
| | DefaultA | -1.09 × 10⁻¹ | 7.72 × 10⁻¹ | 73.60 |
| | DefaultB | -4.67 × 10⁻² | 5.78 × 10⁻¹ | 80.26 |
| | DefaultC | -4.27 × 10⁻² | 5.20 × 10⁻¹ | 29.56 |
| | DefaultD | -1.87 × 10⁻¹ | 8.85 × 10⁻¹ | 23.55 |
| | DorsAttnA | -1.24 × 10⁻¹ | 7.94 × 10⁻¹ | 52.21 |
| | DorsAttnB | -1.75 × 10⁻¹ | 8.80 × 10⁻¹ | 41.63 |
| | LimbicB | 2.53 × 10⁻² | 3.06 × 10⁻¹ | 31.71 |
| | LimbicA | 3.42 × 10⁻² | 2.77 × 10⁻¹ | 34.17 |
| | SalVentAttnA | 7.18 × 10⁻² | 2.43 × 10⁻¹ | 89.71 |
| | SalVentAttnB | -3.84 × 10⁻¹ | 9.95 × 10⁻¹ | 15.66 |
| | SomMotA | 1.69 × 10⁻¹ | 5.60 × 10⁻² | 97.22 |
| | SomMotB | -1.51 × 10⁻¹ | 8.55 × 10⁻¹ | 63.90 |
| | VisCent | -9.28 × 10⁻² | 7.08 × 10⁻¹ | 56.96 |
| | VisPeri | -3.81 × 10⁻² | 5.45 × 10⁻¹ | 55.58 |

Note: (1) Network: Cont, control network; Default, default mode network; DorsAttn, dorsal attention network; Limbic, limbic network; SalVentAttn, salient ventral attention network; SomMot, somatomotor network; VisCent, visual central network; VisPeri, visual peripheral network. (2) Assessment: r value, Pearson’s correlation coefficient; permutation p-value, p-value estimated by a permutation test with 10,000 iterations; observed power, the power of the correlation.

* indicates significance at an uncorrected α level of 0.05.

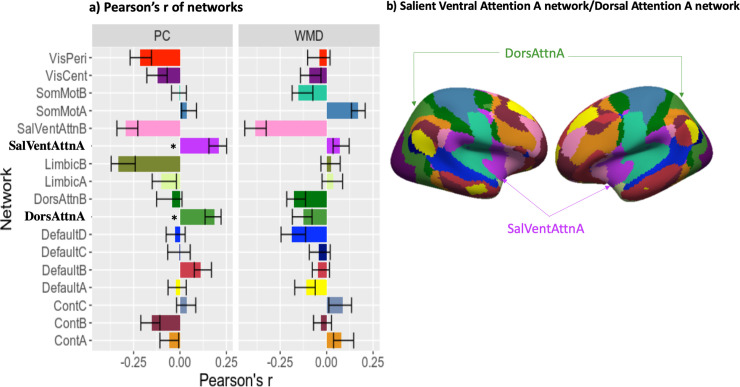

Fig 2. Result plot.

(a) Pearson’s r of networks: summary of the Pearson’s correlations between predicted and actual stop-signal reaction time (SSRT). Error bars show 95% confidence intervals from bootstrapping (10,000 iterations), and asterisks (*) mark significant permutation-test results. The PCs of the salient ventral attention A network (SalVentAttnA) and the dorsal attention A network (DorsAttnA) showed significant correlations between predicted and actual SSRT in the permutation test. (b) Brain regions of the salient ventral attention A network (SalVentAttnA) and the dorsal attention A network (DorsAttnA).
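The error bars described in panel (a) can be approximated with a percentile bootstrap, sketched below in Python: participants are resampled with replacement, r is recomputed for each resample, and the 2.5th and 97.5th percentiles form the 95% interval. Whether the original analysis used the percentile method or another bootstrap interval is not stated; that choice, the seed, the handling of degenerate resamples, and the function names are assumptions.

```python
import random
from statistics import mean


def pearson_r(a, b):
    """Pearson's r; returns None if either variable has zero variance."""
    ma, mb = mean(a), mean(b)
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return None
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / (va * vb) ** 0.5


def bootstrap_ci_r(a, b, n_boot=10000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for Pearson's r, resampling participants (pairs)."""
    rng = random.Random(seed)
    n = len(a)
    rs = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        r = pearson_r([a[i] for i in idx], [b[i] for i in idx])
        if r is not None:  # skip degenerate resamples with zero variance
            rs.append(r)
    rs.sort()
    lower = rs[int(alpha / 2 * (len(rs) - 1))]
    upper = rs[int((1 - alpha / 2) * (len(rs) - 1))]
    return lower, upper
```

Here `a` and `b` would be the predicted and actual SSRTs of one network model.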

Discussion

The current study examined whether functional network properties of the 17 whole-brain rs-fMRI networks, namely within-network connectivity (within-module degree, WMD) and between-network connectivity (participation coefficient, PC), are associated with motor inhibition performance. Between-network connectivity of the salient ventral attention A network and the dorsal attention A network explained the most individual variance in motor inhibition: nodal PCs of these two networks yielded significant correlations between predicted and actual SSRT. This suggests that the functional connectivity of the salient ventral attention A and dorsal attention A networks with other functional networks is associated with inhibitory control. Note, however, that according to the Bayes factor, the evidence for a correlation was moderate for the salient ventral attention A network but relatively weak (anecdotal) for the dorsal attention A network. In addition, the five highest positive correlations, obtained for nodal PCs of the salient ventral attention A network, nodal PCs of the dorsal attention A network, nodal WMDs of the somatomotor A network, nodal PCs of the default B network, and nodal WMDs of the control C network, involved networks that together cover most regions of the frontal-cingulate-parietal cortices, which contribute to cognitive control [16, 59].

The between-network connectivity of the salient ventral attention network and dorsal attention network is associated with motor inhibition

Frontal-cingulate-parietal network

Previous studies of the association between rs-fMRI measures and SSRT have yielded mixed results regarding which resting-state networks are most likely involved in motor inhibition. Candidate networks include the control network (frontoparietal regions), the default mode network (medial frontal and posterior cingulate gyrus), the motor control network (pre-supplementary motor area (pre-SMA) and sensorimotor regions), the visual network, and the dorsal attention network [12, 27, 31, 32]. These studies, which used independent component analysis, fALFF, and ReHo, indicate that multiple networks, rather than a single network, are associated with motor inhibition. Despite differences in methodology and results, we likewise found the highest correlations with motor inhibition for properties of several networks, namely the salient ventral attention A, dorsal attention A, somatomotor A, default B, and control C networks, although only the salient ventral attention A and dorsal attention A networks reached significance. These five networks cover most regions of the frontal-cingulate-parietal cortices, which are considered important for cognitive control [16, 59], and for three of the five (salient ventral attention A, dorsal attention A, and default B) the relevant property was between-network connectivity (PC). Because motor inhibition is a high-level cognitive control function, this pattern suggests that interactions among these networks within the frontal-cingulate-parietal system may be key to motor inhibition.

Salient ventral attention A network

The salient ventral attention A network covers brain regions of the salience network and the ventral attention network. The salience network plays an important role in tasks involving attention to, and responses to, unexpected but salient stimuli (e.g., a stop signal) [60, 61], while the ventral attention network plays an important role in bottom-up attentional processing [62]. The salient ventral attention A network may therefore be responsible for detecting unexpected but salient stimuli in the environment, such as the stop signal in the SST. Our finding that between-network connectivity (i.e., PC) in the salient ventral attention A network is associated with motor inhibition also echoes a previous study by Seeley et al. [27]. Using ICA, Seeley et al. [27] separated the intrinsic connectivity of the frontal-cingulate-parietal network into two networks, a salience network and an executive-control network, anchored in the prefrontal, insular, cingulate, and parietal cortices. Our result is consistent with theirs, suggesting that the salience network is important for motor inhibition [16, 59]. It further suggests that this importance derives from the network's connectivity with other networks.

Dorsal attention A network

The dorsal attention network is responsible for top-down attentional processing [62]. Another significant result of the present study was that between-network connectivity (i.e., PC) of the dorsal attention A network is associated with motor inhibition. This may imply that top-down attentional control interacting with other networks is important for motor inhibition. The result also echoes a previous study by Tian et al. [32], who applied ICA and found that components of the motor network, motor control network, visual network, dorsal attention network, and task-activation network were associated with individual differences in SSRT. Beyond confirming the importance of the dorsal attention A network for motor inhibition, our result highlights the contribution of its between-network connectivity with the other networks.

Limitations and contributions

This study has several limitations in its design that may affect the results. First, the indirect link between spontaneous brain activity and behavioral performance needs to be confirmed with task fMRI. Future studies could combine task fMRI with network analysis to test whether our results replicate using task-evoked functional connectivity. Second, the interpretability of the machine learning prediction model is limited by feature selection and the training dataset, so a different learning model may offer alternative insight into the prediction results; this requires further study. Third, the generalizability of the prediction model may depend on the number of datasets, the sample sizes, and the use of populations entirely independent of ours. Despite these limitations, our results offer a new perspective on the clinical application of machine learning to predict motor inhibition from task-free fMRI data. This approach may accelerate screening for neurological and psychiatric disorders related to impulsive behavior. Our findings suggest that whole-brain functional integration and between-network connectivity, rather than functional segregation and within-network connectivity, could predict motor inhibition.
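The dependence on the training dataset noted above is usually probed by scoring a model only on individuals it was not fitted to. The sketch below shows one common scheme, leave-one-out cross-validation of an ordinary least-squares multiple regression. It is not necessarily the validation procedure used in this study. The two predictors and the SSRT values are synthetic, hypothetical stand-ins (for example, mean nodal PC of two networks), generated only to make the example runnable.

```python
import numpy as np

def loocv_predictions(X, y):
    """Leave-one-out predictions from an ordinary least-squares multiple regression."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    design = np.column_stack([np.ones(n), X])   # prepend an intercept column
    preds = np.empty(n)
    for i in range(n):
        train = np.arange(n) != i               # fit on everyone except subject i
        beta, *_ = np.linalg.lstsq(design[train], y[train], rcond=None)
        preds[i] = design[i] @ beta             # predict the held-out subject
    return preds

# Synthetic, hypothetical data: two network features and an SSRT-like outcome (ms).
rng = np.random.default_rng(0)
n_subjects = 141
X = rng.normal(size=(n_subjects, 2))
y = 200 + 15 * X[:, 0] + 8 * X[:, 1] + rng.normal(scale=20, size=n_subjects)

pred = loocv_predictions(X, y)
r_pred = np.corrcoef(pred, y)[0, 1]
print(f"LOOCV r(predicted, observed) = {r_pred:.2f}")
```

Because each prediction comes from a model that never saw that subject, the correlation between predicted and observed values estimates out-of-sample performance within one sample. Generalization to independent datasets and populations, the third limitation above, still requires external validation.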

Conclusion

In conclusion, we investigated the relationship between graph-theoretic network properties and motor inhibition, applying a multiple linear regression model to predict SSRT from resting-state fMRI data. Between-network connectivity of the salient ventral attention network and the dorsal attention network explained the largest share of individual differences in motor inhibition. In addition, between-network connectivity within the frontal-cingulate-parietal network moderately explained individual differences in motor inhibition. Our study provides new insight into the predictive value of rs-fMRI and its implications for assessing motor inhibition deficits in clinical populations.

Supporting information

[(1) Network: Cont: control network; Default: default mode network; DorsAttn: dorsal attention network; Limbic: limbic network; SalVentAttn: salience ventral attention network; SomMot: somatomotor network; VisCent: visual central network; VisPeri: visual peripheral network; (2) AAL, automated anatomical labeling atlas].

(DOCX)

Acknowledgments

We thank Frini Karayanidis, Birte Forstmann, Alexander Conley, and Wouter Boekel for their great help in setting up this study and Meng-Heng Yang, Hsing-Hao Lee, Yu-Chi Lin, and Yenting Yu for their help in collecting data. We thank the Mind Research and Imaging Center (MRIC), supported by MOST, at NCKU for consultation and instrument availability.

Data Availability

We will upload a minimal dataset for Tables 1–2 and Fig 2 once the manuscript is accepted.

Funding Statement

This research was financially supported by the Ministry of Science and Technology (MOST), Taiwan [Contract Nos. 104-2410-H-006-021-MY2, 106-2410-H-006-031-MY2, 108-2321-B-006-022-MY2, 108-2410-H-006-038-MY3].

References

1. Aron AR. The neural basis of inhibition in cognitive control. Neuroscientist. 2007;13(3):214–28. doi: 10.1177/1073858407299288

2. Verbruggen F, Logan GD, Stevens MA. STOP IT: Windows executable software for the stop-signal paradigm. Behav Res Methods. 2008;40(2):479–83. doi: 10.3758/brm.40.2.479

3. Logan GD, Cowan WB. On the Ability to Inhibit Thought and Action: A Theory of an Act of Control. Psychol Rev. 1984;91(3):295–327. doi: 10.1037/0033-295x.91.3.295

4. Jaekel J, Eryigit-Madzwamuse S, Wolke D. Preterm Toddlers' Inhibitory Control Abilities Predict Attention Regulation and Academic Achievement at Age 8 Years. J Pediatr. 2016;169:87–+. doi: 10.1016/j.jpeds.2015.10.029

5. Nigg JT. Is ADHD a disinhibitory disorder? Psychol Bull. 2001;127(5):571–98. doi: 10.1037/0033-2909.127.5.571

6. Zhang YH, Zhang S, Ide JS, Hu SE, Zhornitsky S, Wang WY, et al. Dynamic network dysfunction in cocaine dependence: Graph theoretical metrics and stop signal reaction time. Neuroimage Clin. 2018;18:793–801. doi: 10.1016/j.nicl.2018.03.016

7. Wang W, Worhunsky PD, Zhang S, Le TM, Potenza MN, Li CSR. Response inhibition and fronto-striatal-thalamic circuit dysfunction in cocaine addiction. Drug Alcohol Depend. 2018;192:137–45. doi: 10.1016/j.drugalcdep.2018.07.037

8. Hu SE, Job M, Jenks SK, Chao HH, Li CSR. Imaging the effects of age on proactive control in healthy adults. Brain Imaging Behav. 2019;13(6):1526–37. doi: 10.1007/s11682-019-00103-w

9. Hsieh S, Lin YC. Strategies for stimulus selective stopping in the elderly. Acta Psychol. 2017;173:122–31. doi: 10.1016/j.actpsy.2016.12.011

10. Yang MH, Yao ZF, Hsieh S. Multimodal neuroimaging analysis reveals age-associated common and discrete cognitive control constructs. Hum Brain Mapp. 2019. doi: 10.1002/hbm.24550

11. Hsieh S, Lin YC. Stopping ability in younger and older adults: Behavioral and event-related potential. Cogn Affect Behav Neurosci. 2017;17(2):348–63. doi: 10.3758/s13415-016-0483-7

12. Lee HH, Hsieh S. Resting-State fMRI Associated with Stop-Signal Task Performance in Healthy Middle-Aged and Elderly People. Front Psychol. 2017;8:766. doi: 10.3389/fpsyg.2017.00766

13. Hu S, Chao HHA, Winkler AD, Li CSR. The effects of age on cerebral activations: internally versus externally driven processes. Front Aging Neurosci. 2012;4:4. doi: 10.3389/fnagi.2012.00004

14. Levy BJ, Wagner AD. Cognitive control and right ventrolateral prefrontal cortex: reflexive reorienting, motor inhibition, and action updating. Ann N Y Acad Sci. 2011;1224:40–62. doi: 10.1111/j.1749-6632.2011.05958.x

15. Swick D, Ashley V, Turken U. Are the neural correlates of stopping and not going identical? Quantitative meta-analysis of two response inhibition tasks. Neuroimage. 2011;56(3):1655–65. doi: 10.1016/j.neuroimage.2011.02.070

16. Cai W, Ryali S, Chen T, Li CS, Menon V. Dissociable roles of right inferior frontal cortex and anterior insula in inhibitory control: evidence from intrinsic and task-related functional parcellation, connectivity, and response profile analyses across multiple datasets. J Neurosci. 2014;34(44):14652–67. doi: 10.1523/JNEUROSCI.3048-14.2014

17. Li CSR, Yan P, Sinha R, Lee TW. Subcortical processes of motor response inhibition during a stop signal task. Neuroimage. 2008;41(4):1352–63. doi: 10.1016/j.neuroimage.2008.04.023

18. Duann JR, Ide JS, Luo X, Li CR. Functional Connectivity Delineates Distinct Roles of the Inferior Frontal Cortex and Presupplementary Motor Area in Stop Signal Inhibition. J Neurosci. 2009;29(32):10171–9. doi: 10.1523/JNEUROSCI.1300-09.2009

19. Manza P, Zhang S, Li CSR, Leung HC. Resting-State Functional Connectivity of the Striatum in Early-Stage Parkinson's Disease: Cognitive Decline and Motor Symptomatology. Hum Brain Mapp. 2016;37(2):648–62. doi: 10.1002/hbm.23056

20. Zhang S, Hu S, Fucito LM, Luo XG, Mazure CM, Zaborszky L, et al. Resting-State Functional Connectivity of the Basal Nucleus of Meynert in Cigarette Smokers: Dependence Level and Gender Differences. Nicotine Tob Res. 2017;19(4):452–9. doi: 10.1093/ntr/ntw209

21. Xiao T, Zhang S, Lee LE, Chao HH, van Dyck C, Li CSR. Exploring Age-Related Changes in Resting State Functional Connectivity of the Amygdala: From Young to Middle Adulthood. Front Aging Neurosci. 2018;10:209. doi: 10.3389/fnagi.2018.00209

22. Manza P, Zhang S, Hu S, Chao HH, Leung HC, Li CSR. The effects of age on resting state functional connectivity of the basal ganglia from young to middle adulthood. Neuroimage. 2015;107:311–22. doi: 10.1016/j.neuroimage.2014.12.016

23. Buckner RL, Krienen FM, Yeo BTT. Opportunities and limitations of intrinsic functional connectivity MRI. Nat Neurosci. 2013;16(7):832–7. doi: 10.1038/nn.3423

24. Kumar S, Yoo K, Rosenberg MD, Scheinost D, Constable RT, Zhang S, et al. An information network flow approach for measuring functional connectivity and predicting behavior. Brain Behav. 2019;9(8):e01346. doi: 10.1002/brb3.1346

25. Biswal B, Yetkin FZ, Haughton VM, Hyde JS. Functional Connectivity in the Motor Cortex of Resting Human Brain Using Echo-Planar MRI. Magn Reson Med. 1995;34(4):537–41. doi: 10.1002/mrm.1910340409

26. Biswal BB, Mennes M, Zuo XN, Gohel S, Kelly C, Smith SM, et al. Toward discovery science of human brain function. Proc Natl Acad Sci U S A. 2010;107(10):4734–9. doi: 10.1073/pnas.0911855107

27. Seeley WW, Menon V, Schatzberg AF, Keller J, Glover GH, Kenna H, et al. Dissociable intrinsic connectivity networks for salience processing and executive control. J Neurosci. 2007;27(9):2349–56. doi: 10.1523/JNEUROSCI.5587-06.2007

28. Tian LX, Ren JJ, Zang YF. Regional homogeneity of resting state fMRI signals predicts Stop signal task performance. Neuroimage. 2012;60(1):539–44. doi: 10.1016/j.neuroimage.2011.11.098

29. Jiang LL, Zuo XN. Regional Homogeneity: A Multimodal, Multiscale Neuroimaging Marker of the Human Connectome. Neuroscientist. 2016;22(5):486–505. doi: 10.1177/1073858415595004

30. Zang YF, Jiang TZ, Lu YL, He Y, Tian LX. Regional homogeneity approach to fMRI data analysis. Neuroimage. 2004;22(1):394–400. doi: 10.1016/j.neuroimage.2003.12.030

31. Hu S, Chao HHA, Zhang S, Ide JS, Li CSR. Changes in cerebral morphometry and amplitude of low-frequency fluctuations of BOLD signals during healthy aging: correlation with inhibitory control. Brain Struct Funct. 2014;219(3):983–94. doi: 10.1007/s00429-013-0548-0

32. Tian LX, Kong YZ, Ren JJ, Varoquaux G, Zang YF, Smith SM. Spatial vs. Temporal Features in ICA of Resting-State fMRI: A Quantitative and Qualitative Investigation in the Context of Response Inhibition. PLoS One. 2013;8(6):e66572. doi: 10.1371/journal.pone.0066572

33. Fornito A, Zalesky A, Breakspear M. The connectomics of brain disorders. Nat Rev Neurosci. 2015;16(3):159–72. doi: 10.1038/nrn3901

34. Wang J, Zuo X, He Y. Graph-based network analysis of resting-state functional MRI. Front Syst Neurosci. 2010;4:16. doi: 10.3389/fnsys.2010.00016

35. van den Heuvel MP, Hulshoff Pol HE. Exploring the brain network: a review on resting-state fMRI functional connectivity. Eur Neuropsychopharmacol. 2010;20(8):519–34. doi: 10.1016/j.euroneuro.2010.03.008

36. Schaefer A, Kong R, Gordon EM, Laumann TO, Zuo XN, Holmes AJ, et al. Local-Global Parcellation of the Human Cerebral Cortex from Intrinsic Functional Connectivity MRI. Cereb Cortex. 2018;28(9):3095–114. doi: 10.1093/cercor/bhx179

37. Sporns O, Betzel RF. Modular Brain Networks. Annu Rev Psychol. 2016;67:613–40. doi: 10.1146/annurev-psych-122414-033634

38. Guimera R, Nunes Amaral LA. Functional cartography of complex metabolic networks. Nature. 2005;433(7028):895–900. doi: 10.1038/nature03288

39. Hwang K, Bertolero MA, Liu WB, D'Esposito M. The Human Thalamus Is an Integrative Hub for Functional Brain Networks. J Neurosci. 2017;37(23):5594–607. doi: 10.1523/JNEUROSCI.0067-17.2017

40. Vaessen MJ, Jansen JFA, Braakman HMH, Hofman PAM, De Louw A, Aldenkamp AP, et al. Functional and Structural Network Impairment in Childhood Frontal Lobe Epilepsy. PLoS One. 2014;9(3):e90068. doi: 10.1371/journal.pone.0090068

41. Nasreddine ZS, Phillips NA, Bedirian V, Charbonneau S, Whitehead V, Collin I, et al. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53(4):695–9. doi: 10.1111/j.1532-5415.2005.53221.x

42. Beck AT, Steer RA, Brown GK. Beck depression inventory-II. San Antonio. 1996;78(2):490–8.

43. Verbruggen F, Logan GD. Models of response inhibition in the stop-signal and stop-change paradigms. Neurosci Biobehav Rev. 2009;33(5):647–61. doi: 10.1016/j.neubiorev.2008.08.014

44. Verbruggen F, Chambers CD, Logan GD. Fictitious Inhibitory Differences: How Skewness and Slowing Distort the Estimation of Stopping Latencies. Psychol Sci. 2013;24(3):352–62. doi: 10.1177/0956797612457390

45. Geerligs L, Tsvetanov KA, Henson RN, Cam-CAN. Challenges in measuring individual differences in functional connectivity using fMRI: The case of healthy aging. Hum Brain Mapp. 2017;38(8):4125–56. doi: 10.1002/hbm.23653

46. Power JD, Barnes KA, Snyder AZ, Schlaggar BL, Petersen SE. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage. 2012;59(3):2142–54. doi: 10.1016/j.neuroimage.2011.10.018

47. Friston KJ, Williams S, Howard R, Frackowiak RSJ, Turner R. Movement-related effects in fMRI time-series. Magn Reson Med. 1996;35(3):346–55. doi: 10.1002/mrm.1910350312

48. Hallquist MN, Hwang K, Luna B. The nuisance of nuisance regression: Spectral misspecification in a common approach to resting-state fMRI preprocessing reintroduces noise and obscures functional connectivity. Neuroimage. 2013;82:208–25. doi: 10.1016/j.neuroimage.2013.05.116

49. Guimera R, Amaral LAN. Cartography of complex networks: modules and universal roles. J Stat Mech. 2005:P02001. doi: 10.1088/1742-5468/2005/02/P02001

50. Rubinov M, Sporns O. Complex network measures of brain connectivity: Uses and interpretations. Neuroimage. 2010;52(3):1059–69. doi: 10.1016/j.neuroimage.2009.10.003

51. Newman MEJ. Networks: an introduction. Oxford; New York: Oxford University Press; 2010.

52. Cohen J. Statistical power analysis for the behavioral sciences. Academic Press; 2013.

53. Rosenberg MD, Finn ES, Scheinost D, Papademetris X, Shen XL, Constable RT, et al. A neuromarker of sustained attention from whole-brain functional connectivity. Nat Neurosci. 2016;19(1):165–+. doi: 10.1038/nn.4179

54. Hsu WT, Rosenberg MD, Scheinost D, Constable RT, Chun MM. Resting-state functional connectivity predicts neuroticism and extraversion in novel individuals. Soc Cogn Affect Neurosci. 2018;13(2):224–32. doi: 10.1093/scan/nsy002

55. Boekel W, Hsieh S. Cross-sectional white matter microstructure differences in age and trait mindfulness. PLoS One. 2018;13(10):e0205718. doi: 10.1371/journal.pone.0205718

56. Wetzels R, Wagenmakers E-J. A default Bayesian hypothesis test for correlations and partial correlations. Psychon Bull Rev. 2012;19(6):1057–64. doi: 10.3758/s13423-012-0295-x

57. Jeffreys H. Theory of Probability. 3rd ed. Oxford: Clarendon Press; 1961.

58. Ly A, Verhagen J, Wagenmakers E-J. An evaluation of alternative methods for testing hypotheses, from the perspective of Harold Jeffreys. J Math Psychol. 2016;72:43–55.

59. Cai W, Chen T, Ryali S, Kochalka J, Li CS, Menon V. Causal Interactions Within a Frontal-Cingulate-Parietal Network During Cognitive Control: Convergent Evidence from a Multisite-Multitask Investigation. Cereb Cortex. 2016;26(5):2140–53. doi: 10.1093/cercor/bhv046

60. Sridharan D, Levitin DJ, Menon V. A critical role for the right fronto-insular cortex in switching between central-executive and default-mode networks. Proc Natl Acad Sci U S A. 2008;105(34):12569–74. doi: 10.1073/pnas.0800005105

61. Menon V, Uddin LQ. Saliency, switching, attention and control: a network model of insula function. Brain Struct Funct. 2010;214(5–6):655–67. doi: 10.1007/s00429-010-0262-0

62. Vossel S, Geng JJ, Fink GR. Dorsal and Ventral Attention Systems: Distinct Neural Circuits but Collaborative Roles. Neuroscientist. 2014;20(2):150–9. doi: 10.1177/1073858413494269