Abstract

Order entry via mobile client was associated with decreased after-hours EHR use in a cohort of 139 academic ophthalmologists. EHR audit log data can provide insights into strategies for optimizing efficiency of EHR use.

Electronic health record (EHR) adoption by ophthalmologists has been associated with time investment and negative perceptions.1 EHR audit logs capture time using EHRs and can shed light on workflows and factors related to burnout.2 Several EHR vendors have developed “off-the-shelf” use metrics. Here, we analyzed these metrics and conducted surveys across five independent medical centers to better understand ophthalmologists’ experience using EHRs.

We analyzed EHR use metrics from a de-identified dataset of ophthalmologists across University of California (UC) medical centers (San Diego, Irvine, Los Angeles, San Francisco, and Davis) using four different instances (as San Diego and Irvine share the same tenant) of the same vendor (Epic Systems; Verona, WI). The study was exempted from review by the UC San Diego Institutional Review Board and adhered to the Declaration of Helsinki. We included all 139 UC ophthalmologists using the EHR systems from November 1, 2018 to October 31, 2019. Metrics included total time; time spent on notes, orders, clinical review, and in-basket; time outside scheduled hours (number of minutes spent in the system outside of scheduled visits, with a 30-minute buffer before the first appointment and after the last appointment); and use of efficiency tools. All metrics were based on existing vendor definitions. We used multiple analysis of variance testing to evaluate differences between institutions. To identify factors influencing time outside scheduled hours, we developed a mixed effects model with ophthalmologists nested within institutions as random effects using the lme4 and lmerTest packages in R.3,4 P-values <0.05 were considered statistically significant.
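The "time outside scheduled hours" definition above can be illustrated with a minimal sketch. This is not the vendor's implementation: the function name, the interval representation, and the handling of activity that straddles the buffer boundary are all assumptions made for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical helper; the 30-minute buffer comes from the metric definition in the text.
BUFFER = timedelta(minutes=30)

def minutes_outside_scheduled_hours(activity, appointments, buffer=BUFFER):
    """Return minutes of EHR activity outside the scheduled window for one day.

    activity, appointments: lists of (start, end) datetime tuples.
    The scheduled window runs from `buffer` before the first appointment
    to `buffer` after the last appointment.
    """
    if not appointments:
        # Days without appointments are reported as a separate metric in the text.
        raise ValueError("no appointments: treat as an unscheduled day")
    window_start = min(start for start, _ in appointments) - buffer
    window_end = max(end for _, end in appointments) + buffer
    outside = timedelta()
    for start, end in activity:
        # Portion of this activity interval that overlaps the scheduled window.
        overlap = min(end, window_end) - max(start, window_start)
        inside = max(overlap, timedelta())
        outside += (end - start) - inside
    return outside.total_seconds() / 60

day = datetime(2019, 1, 15)
appointments = [(day.replace(hour=9), day.replace(hour=12))]
activity = [
    (day.replace(hour=8), day.replace(hour=8, minute=45)),     # 30 min before the window
    (day.replace(hour=12, minute=15), day.replace(hour=13)),   # 30 min after the window
    (day.replace(hour=20), day.replace(hour=20, minute=20)),   # 20 min in the evening
]
print(minutes_outside_scheduled_hours(activity, appointments))  # 80.0
```

In this example the scheduled window is 8:30 to 12:30, so only the portions of each activity interval that fall outside it are counted.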

We also surveyed ophthalmology “super users,” chief medical information officers, and other clinical informaticists at each institution. The survey (available at www.aaojournal.org) asked about ophthalmologists’ satisfaction with the EHR, institutional factors influencing efficiency, and lessons learned from prior EHR implementations or transitions.

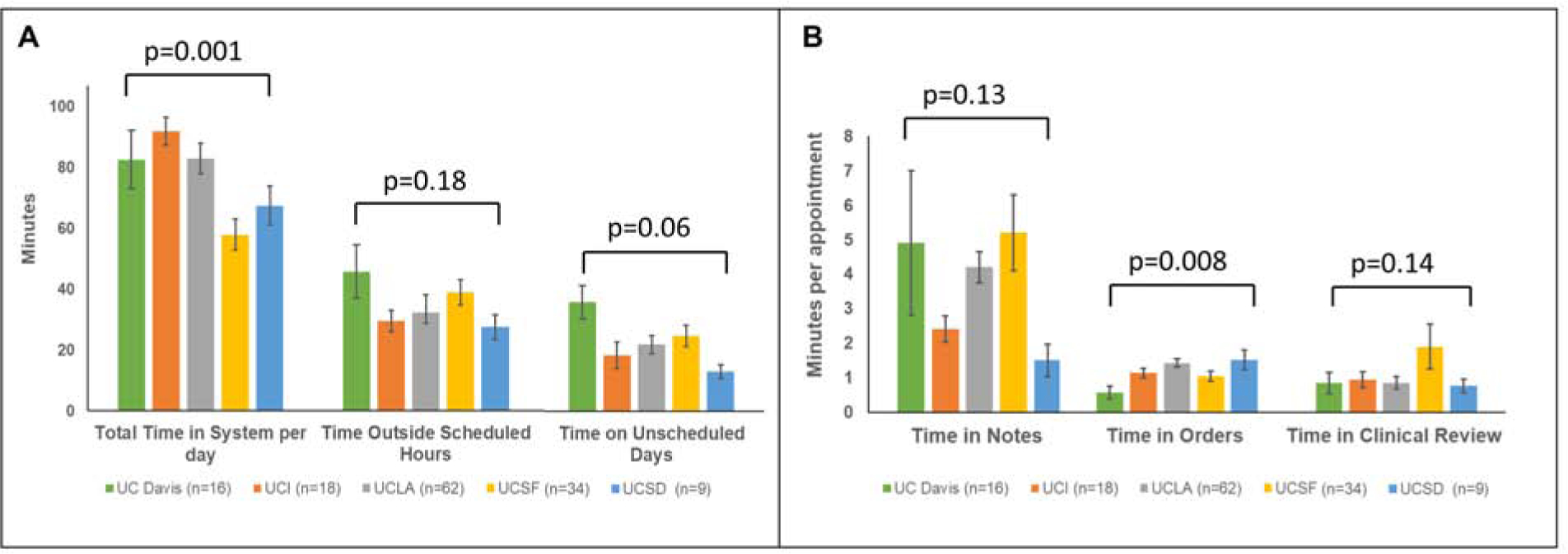

Ophthalmologists spent the most time on notes per appointment (mean [standard deviation, SD] of 3.7 [3.7] minutes), followed by orders (1.2 [0.8]), then clinical review (1.0 [1.7]). Figure 1 depicts EHR use metrics by institution. There were significant differences for time in orders (p=0.008, range 0.6–1.6 minutes), but not for time in notes (p=0.13, range 2.4–5.2 minutes) or clinical review (p=0.14, range 0.8–2.0 minutes). On average, ophthalmologists closed 76% of their charts on the day of the appointment, although this varied by institution (p=0.001, range 65%–96%). Time outside scheduled hours on days with appointments averaged 28.1 (SD 21.1) minutes. On days without appointments, ophthalmologists still spent 23.5 (SD 22.9) minutes in the EHR. A mixed effects model demonstrated that clinical volume; progress note length; and time spent on in-basket, orders, and notes were associated with greater after-hours EHR use (Table S1, available at www.aaojournal.org). Entering orders via mobile client was the only tool associated with significantly less after-hours EHR use (averaging 13.4 fewer minutes outside scheduled hours for ophthalmologists using mobile order entry compared with those who did not, holding all other factors constant; p<0.001). Mobile order entry was used by ophthalmologists at three institutions, although ophthalmologists at all five institutions used some form of mobile EHR functionality (in-basket management, order entry, or note-writing).
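One way to read the 13.4-minute estimate is through the general form of a linear mixed model with nested random intercepts. The expression below is a sketch under stated assumptions, not the authors' exact specification: the unit of observation, the full covariate set, and any random-effects structure beyond the nesting are not given in the text, and all symbols are introduced here for illustration.

$$
y_{ijk} = \beta_0 + \beta_{\text{mobile}}\, m_{ij} + \sum_{p} \beta_p\, x_{p,ijk} + u_i + v_{j(i)} + \varepsilon_{ijk},
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2),\quad
v_{j(i)} \sim \mathcal{N}(0, \sigma_v^2),\quad
\varepsilon_{ijk} \sim \mathcal{N}(0, \sigma^2)
$$

Here $y_{ijk}$ is time outside scheduled hours for observation $k$ of ophthalmologist $j$ at institution $i$; $m_{ij}$ is an indicator for mobile order entry use; $x_{p,ijk}$ are the remaining fixed-effect covariates (for example clinical volume or note length); and $u_i$ and $v_{j(i)}$ are random intercepts for institution and for ophthalmologist within institution. Under this form, the reported result corresponds to $\hat{\beta}_{\text{mobile}} \approx -13.4$ minutes, the expected difference between users and non-users when the other terms are held fixed.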

Figure 1.

Electronic health record (EHR) use metrics among ophthalmologists across the University of California (UC) for (A) total time in the EHR system per day, time outside scheduled hours, and time on unscheduled days; and (B) time in notes, time in orders, and time in clinical review per appointment, for all ophthalmologists actively using the EHR system from November 1, 2018 to October 31, 2019. Error bars represent standard error. UCI = UC Irvine, UCLA = UC Los Angeles, UCSF = UC San Francisco, and UCSD = UC San Diego.

Thirty-five of 37 invited individuals (95%) completed the survey. Regarding overall satisfaction of ophthalmologists with the EHR, responses were mixed: 1 (3%) extremely satisfied, 8 (23%) moderately satisfied, 7 (20%) slightly satisfied, 5 (14%) neutral, 3 (9%) slightly dissatisfied, 8 (23%) moderately dissatisfied, and 3 (9%) extremely dissatisfied. Satisfaction ratings also varied within each institution. Survey comments (Table S2, available at www.aaojournal.org) highlighted several issues. Time and documentation burden were noted by respondents from every institution. Several endorsed an early emphasis on workflow development. Perceptions of customization varied: some wanted more customization, while others highlighted problems with over-customization, such as difficulty standardizing training and optimization efforts. Respondents from each institution desired greater institutional investment in information technology support for ophthalmology.
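The reported response rate and percentages can be checked from the counts above. The short tally below uses only figures stated in the text; the variable names and the grouping into "satisfied" and "dissatisfied" are illustrative choices.

```python
# Survey counts as reported in the text (35 completed of 37 invited).
invited = 37
responses = {
    "extremely satisfied": 1,
    "moderately satisfied": 8,
    "slightly satisfied": 7,
    "neutral": 5,
    "slightly dissatisfied": 3,
    "moderately dissatisfied": 8,
    "extremely dissatisfied": 3,
}

completed = sum(responses.values())
response_rate = round(100 * completed / invited)
shares = {label: round(100 * n / completed) for label, n in responses.items()}

# Illustrative grouping: any level of satisfaction vs. any level of dissatisfaction.
satisfied = sum(n for label, n in responses.items() if label.endswith(" satisfied"))
dissatisfied = sum(n for label, n in responses.items() if label.endswith("dissatisfied"))

print(completed, response_rate)   # 35 95
print(shares)
print(satisfied, dissatisfied)    # 16 14
```

Grouped this way, 16 of 35 respondents reported some degree of satisfaction and 14 of 35 some degree of dissatisfaction, consistent with the "mixed" characterization.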

In this study, we found significant variations in EHR use among ophthalmologists across multiple domains, despite evaluating physicians in the same specialty, practicing in the same state within the same parent organization using the same vendor. Differences in EHR build and training could potentially explain some of this variation. Although outside this study’s scope, others have found that training quality influences perceived efficiency and EHR satisfaction.5 Due to variations in faculty schedules (e.g., half-day vs. full-day clinics, varying administrative/research effort), we focused on timing metrics per appointment. The variations in EHR use highlight the potential for quality improvement efforts and opportunities for institutions to learn from each other.

The evidence base for which “efficiency” tools are effective in real-world ophthalmic practice is lacking. Here, mobile order entry was the only tool associated with decreased after-hours EHR use. One possibility is that the mobile client’s simpler interface may have decreased time spent on orders, but a deeper understanding of how mobile EHR systems could improve efficiency requires further investigation. Some efficiency tools may shorten one task while lengthening others. For example, note templates decrease time spent writing notes but increase time on clinical review, as physicians need to read more text to find relevant information. This was illustrated in an analysis of ophthalmology progress note similarity,6 which found that EHR design features contribute to increased note length and redundancy.

Ophthalmologists’ satisfaction with EHRs varied substantially, even within the same institution. This highlights the ongoing need for improving the end-user experience, even after initial implementation. Our survey highlighted several areas for improvement. Multiple UC campuses are engaged in interventions such as optimization “sprints” and “home for dinner” programs; EHR metrics could help measure these interventions’ effects more rigorously.

EHR use metrics have limitations. They do not account for factors such as the aforementioned scheduling variations, trainee/scribe involvement, or staff support, all of which can impact productivity.7 Vendor definitions may not align with what physicians perceive, especially regarding what constitutes “outside scheduled hours.” Timing metrics also do not consider documentation quality; faster is not always better. However, these metrics still offer a starting point for understanding EHR use patterns and for informing interventions. Ongoing work to improve satisfaction and mitigate EHR-related burnout is critically important for ophthalmology, a high-volume specialty with unique information system needs.

Acknowledgments

This study was supported by the National Institutes of Health (grants T15LM011271 and UL1TR001442) and the Heed Ophthalmic Foundation.

Financial Support: This study was supported by the National Institutes of Health, Bethesda, MD (grants T15LM011271 and UL1TR001442) and the Heed Ophthalmic Foundation. The sponsor or funding organization had no role in the design or conduct of this research.

Abbreviations and Acronyms:

- ANOVA: Analysis of Variance
- EHR: Electronic Health Record
- IT: Information Technology
- UC: University of California

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Conflict of Interest: Dr. Brandt reports consulting fees from Verily Life Sciences for device development.

References

- 1. Lim MC, Boland MV, McCannel CA, et al. Adoption of Electronic Health Records and Perceptions of Financial and Clinical Outcomes Among Ophthalmologists in the United States. JAMA Ophthalmol. 2018;136(2):164–170.

- 2. Rule A, Chiang MF, Hribar MR. Using electronic health record audit logs to study clinical activity: a systematic review of aims, measures, and methods. Journal of the American Medical Informatics Association. November 2019.

- 3. Bates D, Mächler M, Bolker B, Walker S. Fitting Linear Mixed-Effects Models Using lme4. Journal of Statistical Software. 2015;67(1).

- 4. Kuznetsova A, Brockhoff PB, Christensen RHB. lmerTest Package: Tests in Linear Mixed Effects Models. Journal of Statistical Software. 2017;82(13).

- 5. Longhurst CA, Davis T, Maneker A, et al. Local Investment in Training Drives Electronic Health Record User Satisfaction. Appl Clin Inform. 2019;10(2):331–335.

- 6. Huang AE, Hribar MR, Goldstein IH, Henriksen B, Lin W-C, Chiang MF. Clinical Documentation in Electronic Health Record Systems: Analysis of Similarity in Progress Notes from Consecutive Outpatient Ophthalmology Encounters. AMIA Annu Symp Proc. 2018;2018:1310–1318.

- 7. Lam JG, Lee BS, Chen PP. The effect of electronic health records adoption on patient visit volume at an academic ophthalmology department. BMC Health Serv Res. 2016;16:7.
