Abstract

This paper explores the knowledge of linguistic structure learned by large artificial neural networks, trained via self-supervision, whereby the model simply tries to predict a masked word in a given context. Human language communication is via sequences of words, but language understanding requires constructing rich hierarchical structures that are never observed explicitly. The mechanisms for this have been a prime mystery of human language acquisition, while engineering work has mainly proceeded by supervised learning on treebanks of sentences hand labeled for this latent structure. However, we demonstrate that modern deep contextual language models learn major aspects of this structure, without any explicit supervision. We develop methods for identifying linguistic hierarchical structure emergent in artificial neural networks and demonstrate that components in these models focus on syntactic grammatical relationships and anaphoric coreference. Indeed, we show that a linear transformation of learned embeddings in these models captures parse tree distances to a surprising degree, allowing approximate reconstruction of the sentence tree structures normally assumed by linguists. These results help explain why these models have brought such large improvements across many language-understanding tasks.

Keywords: artificial neural network, self-supervision, syntax, learning

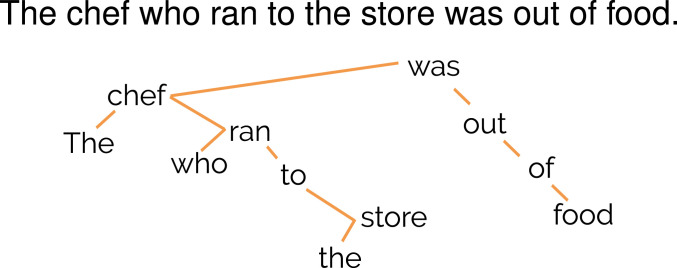

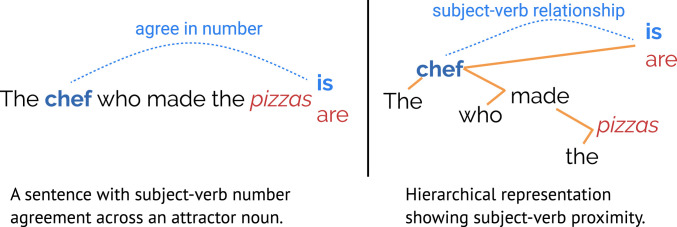

Human language communication is via sequences of words, canonically produced as a mainly continuous speech stream (1). Behind this linear organization is a rich hierarchical language structure with additional links (such as coreference between mentions) that needs to be understood by a hearer (or reader). In Fig. 1, for instance, a hearer has to understand a sentence structure roughly like the one shown to realize that the chef was out of food rather than the store.* Language understanding, like vision, can be seen as an inverse problem (3), where the hearer has to reconstruct structures and causes from the observed surface form.

Fig. 1.

A hearer must reconstruct that the store is in a relative clause modifying the chef to know that it is the chef who is out of food rather than the linearly closer store.

In computational linguistics, the long dominant way of addressing this structure induction problem has been to hand design linguistic representations, broadly following proposals from linguistics proper. Under one set of conventions, the sentence in Fig. 1 would be annotated with the structure shown. Humans then label many natural language sentences with their underlying structure. Such datasets of annotated human language structure, known as treebanks (4, 5), have fueled much of the research in the field in the last 25 y. Researchers train progressively better supervised machine-learning models on the treebank, which attempt to recover this structure for any sentence (6–8). This approach has been very effective as an engineering solution, but beyond the high practical cost of human labeling, it gives no insight into how children might approach structure induction from observed data alone.

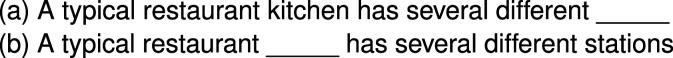

Recently, enormous progress has been made in natural language representation learning by adopting a self-supervised learning approach. In self-supervised learning, a system is given no explicit labeling of raw data, but it is able to construct its own supervised learning problems by choosing to interpret some of the data as a “label” to be predicted.† The canonical case for human language is the language-modeling task of trying to predict the next word in an utterance based on the temporally preceding words (Fig. 2). Variant tasks include the masked language-modeling task of predicting a masked word in a text [a.k.a. the cloze task (11)] and predicting the words likely to occur around a given word (12, 13). Autoencoders (14) can also be thought of as self-supervised learning systems. Since no explicit labeling of the data is required, self-supervised learning is a type of unsupervised learning, but the approach of self-generating supervised learning objectives differentiates it from other unsupervised learning techniques such as clustering.

Fig. 2.

The next word prediction (language-modeling) task (a) and the cloze task (b).
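As a rough illustration of how raw text can supply its own labels, the sketch below builds training pairs for the two tasks in Fig. 2. The function names and the `[MASK]` placeholder string are assumptions made for this example, not details given in the text.

```python
from typing import List, Tuple

MASK = "[MASK]"  # placeholder mask symbol (an assumption for this sketch)


def next_word_examples(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Language modeling: each prefix is an input; the following word is its label."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cloze_examples(tokens: List[str], positions: List[int]) -> List[Tuple[List[str], str]]:
    """Cloze task: hide one word at a time; the hidden word is the label."""
    examples = []
    for p in positions:
        masked = tokens[:p] + [MASK] + tokens[p + 1:]
        examples.append((masked, tokens[p]))
    return examples


sentence = "the chef is here".split()
lm_pairs = next_word_examples(sentence)          # 3 (prefix, next word) pairs
cloze_pairs = cloze_examples(sentence, positions=[2])
```

In both cases the "label" is simply a word taken from the text itself, which is what distinguishes self-supervision from learning on hand-annotated data.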

One might expect that a machine-learning model trained to predict the next word in a text will just be a giant associational learning machine, with lots of statistics on how often the word restaurant is followed by kitchen and perhaps some basic abstracted sequence knowledge such as knowing that adjectives are commonly followed by nouns in English. It is not at all clear that such a system can develop interesting knowledge of the linguistic structure of whatever human language the system is trained on. Indeed, this has been the dominant perspective in linguistics, where language models have long been seen as inadequate and having no scientific interest, even when their usefulness in practical engineering applications is grudgingly accepted (15, 16).

Starting in 2018, researchers in natural language processing (NLP) built a new generation of much larger artificial neural network models, which construct rich, word-token specific deep contextual representations of human language as numeric vectors (17, 18). In this paper, we examine how, at this larger scale, there is a dramatic increase in what is modeled by such networks. The simple task of word prediction is a highly effective self-supervision signal: Neural networks can and do improve on this task by inducing their own representations of sentence structure which capture many of the notions of linguistics, including word classes (parts of speech), syntactic structure (grammatical relations or dependencies), and coreference (which mentions of an entity refer to the same entity, e.g., when “she” refers back to “Rachel”). We examine learned attention structure in models and develop simple probes to show that these models know about each of these types of linguistic information. Indeed, the learned encoding of a sentence to a large extent includes the information found in the parse tree structures of sentences that have been proposed by linguists.

This is a startling and intriguing result. Traditionally much of the emphasis in NLP has been on using labels for part of speech, syntax, etc., as an aid in other downstream tasks. This result suggests that large-scale hand construction of syntactically labeled training data may no longer be necessary for many tasks. Despite its simple nature, the generality of word prediction, as a task that benefits from syntactic, semantic, and discourse information, leads to it being a very powerful multidimensional supervision signal.

While the work presented here is interesting food for thought about the starting point and process of human language acquisition, we make no attempt to model human learning. These models are operating in a quite different environment from that of children, with exposure to much more linguistic input but no real-world environment to aid learning. Nevertheless, this work bears on the logical problem of language acquisition (19). Importantly, it shows successful language structure learning from positive evidence alone.

Bidirectional Encoder Representations from Transformers: A Self-Supervised Artificial Neural Network

Current state-of-the-art NLP systems typically involve a deep artificial neural network that was trained on a large corpus of text using self-supervision. As an example, we describe Bidirectional Encoder Representations from Transformers (BERT), a recently proposed Transformer model and training procedure that has gained prominence by dominating multiple key NLP benchmarks (18, 20).

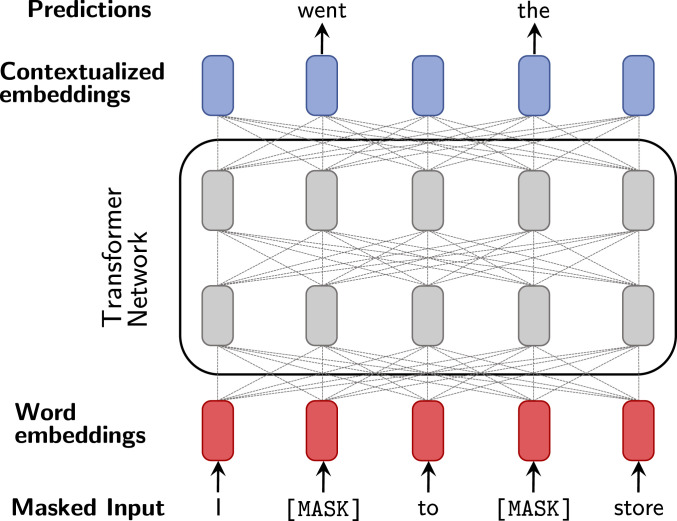

The self-supervision task used to train BERT is the masked language-modeling or cloze task, where one is given a text in which some of the original words have been replaced with a special mask symbol. The goal is to predict, for each masked position, the original word that appeared in the text (Fig. 3). To perform well on this task, the model needs to leverage the surrounding context to infer what that word could be.

Fig. 3.

A high-level illustration of BERT. Words in the input sequence are randomly masked out and then all words are embedded as vectors in $\mathbb{R}^d$. A Transformer network applies multiple layers of multiheaded attention to the representations. The final representations are used to predict the identities of the masked-out input words.

BERT is a Transformer model (21), a neural network architecture without any recurrent connections (22) that takes a sequence of words (or other symbols) as input and produces a contextualized vector representation of each word as its output (Fig. 3). It contains many millions of trainable parameters in a number of layers, typically requiring massive amounts of data and computation to train. This makes Transformers difficult to train but also highly expressive: they can outperform other contemporary neural networks when properly optimized.

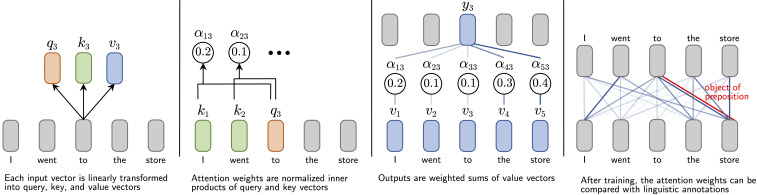

The key mechanism by which Transformers contextualize representations is multiheaded attention (see Fig. 5). Attention (23) dynamically assigns a weight to every pair of words in the sequence, indicating how much the model should “pay attention to” the first word when computing the representation of the second one. Transformers use multiple attention heads in parallel, where each head can potentially capture a completely different word–word relation. Transformers aggregate the information from each head to produce a single output vector representation for each word in the sequence. We provide more mathematical detail below.

Fig. 5.

An overview of the neural attention mechanism. NLP models often contain many attention mechanisms, each producing a different set of attention weights.

The Syntax Sensitivity of Language Models

Models trained to predict words, such as BERT, receive just a list of words as their input and do not explicitly represent syntax. Are they nevertheless able to learn the hierarchical structure of language? One approach to investigating this question is through examining the model’s predictions in syntax-dependent scenarios.

For example, performing English subject–verb agreement requires an understanding of hierarchical structure. In the sentences “The chef is here” and “The chefs are here” the form of the verb depends on whether the subject is singular or plural: “is” agrees with “chef” but “are” does not. It is quite unsurprising that neural language models can learn statistics about sequential co-occurrence, e.g., that a singular noun is often followed by a singular verb. However, subject–verb agreement is based not on the linear ordering of the words, but on the words’ syntactic relationship. For example, in “The chef who made the pizzas is here,” the intervening phrase does not affect the correct agreement of “is” despite the phrase containing an “attractor” noun “pizzas,” which has the opposite number to the verb’s subject (Fig. 4).

Fig. 4.

An example where implicitly modeling syntactic structure may assist in predicting the missing word and improve language-modeling performance.

Linzen et al. (24) first evaluated neural language models on their ability to perform such agreement tasks. Models were asked to predict the next word for inputs with zero or more intervening attractor nouns. Accuracy was measured as how often the model assigns higher probability to the correct verb form. To correctly perform this word form prediction task, the model must ignore the attractor(s) (here, pizzas) and assign the verb’s form based on its syntactic subject (chef), in accord with the sentence’s implied hierarchical structure rather than linear proximity.
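The accuracy measure described above can be sketched as follows. This is a minimal illustration, not the evaluation code of ref. 24: `agreement_accuracy` and the toy `nearest_noun_model` are hypothetical names, and the toy model deliberately agrees with the linearly nearest noun to show how an attractor causes an error.

```python
from typing import Callable, List, Tuple

# (prefix, correct verb form, incorrect verb form)
AgreementItem = Tuple[str, str, str]


def agreement_accuracy(
    items: List[AgreementItem],
    next_word_prob: Callable[[str, str], float],
) -> float:
    """Fraction of items where the correct verb form gets higher probability."""
    correct = sum(
        1 for prefix, good, bad in items
        if next_word_prob(prefix, good) > next_word_prob(prefix, bad)
    )
    return correct / len(items)


def nearest_noun_model(prefix: str, verb: str) -> float:
    """Toy stand-in for a language model: agrees with the nearest noun."""
    plural_nouns = {"chefs", "pizzas"}
    all_nouns = plural_nouns | {"chef", "pizza"}
    nouns = [w for w in prefix.split() if w in all_nouns]
    last_is_plural = nouns[-1] in plural_nouns
    verb_is_plural = verb == "are"
    return 0.9 if last_is_plural == verb_is_plural else 0.1


items = [
    ("the chef", "is", "are"),
    ("the chefs", "are", "is"),
    ("the chef who made the pizzas", "is", "are"),  # one attractor noun
]
accuracy = agreement_accuracy(items, nearest_noun_model)
```

The toy model gets the two attractor-free items right and the attractor item wrong, which is the failure pattern a purely linear-proximity learner would show.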

Subsequent work has evaluated models on more challenging sentences where potential confounds are removed (25), evaluated models on other grammatical phenomena such as reflexive anaphora (26), evaluated models that explicitly model hierarchy (27), and evaluated BERT on agreement across attractors (28). The studies have found models like BERT to perform well at these tasks; for example, ref. 28 finds that BERT makes as many mistakes as humans, or fewer, for some types of agreement.‡

Agreement relations highlight the richness of language modeling as a pretraining task, perhaps explaining some of its recent success in NLP. However, while this black box approach teaches us that the model learns some form of syntax, it does not tell us how. We therefore shift our discussion to analyze the internals of BERT and demonstrate two separate mechanisms (attention and structural probes) by which we can observe the structure the model constructs from the input.

Attention Probes

Neural attention is a neural network component prevalent in contemporary state-of-the-art NLP models such as BERT. Here, we provide a precise description of attention and explain how it can be interpreted linguistically. We then apply this interpretation to BERT’s multiple-attention heads and show that many heads are highly correlated with well-known concepts of linguistic structure.

The Neural Attention Mechanism.

Given a query $q$ and a set of $n$ items $\{x_1, \ldots, x_n\}$, the attention mechanism (23) induces a probability distribution $\alpha$ over the item set and then produces an expectation (weighted sum) of the items as the output. Intuitively, attention makes a soft selection of which item $x_i$ is the best fit for the query $q$. More formally, each item $x_i$ is represented by two vectors: key $k_i$ and value $v_i$. The key $k_i$ is used to determine the distribution $\alpha$ via an inner product with the query vector $q$, normalized by a softmax:

$$\alpha_i = \frac{\exp(q^\top k_i)}{\sum_{\ell=1}^{n} \exp(q^\top k_\ell)} \qquad \text{[1]}$$

The output $y$ is then the expectation over the value vectors $v_i$:

$$y = \sum_{i=1}^{n} \alpha_i v_i \qquad \text{[2]}$$

The Transformer network (21) uses a flavor of this mechanism called self-attention, where each input word plays a dual role as both a query and a selectable item. This is implemented by passing the vector representation of every word $x_i$ through three different linear transformations, resulting in query $q_i$, key $k_i$, and value $v_i$ vectors. Each query $q_j$ can then attend over all of the key-value pairs $(k_i, v_i)$ in the sequence, producing a different attention distribution $\alpha^j$ (i.e., $\alpha_i^j$ denotes the attention weight toward position $i$ from position $j$) and output $y_j$ for each word, as shown in Fig. 5.

Attention has been a highly successful neural network component for processing text (31), video (32), image (33), and speech (34) data. A Transformer network consists of multiple layers with each layer containing multiple attention heads. Each head computes its own independent attention weights and output vectors; the output vectors across heads are concatenated together when producing a layer’s output.
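The mechanism described above can be sketched in NumPy. This is a minimal illustration under stated assumptions: the dimensions and random projection matrices are arbitrary, and the sketch follows the plain softmax of inner products given in the text, omitting the score scaling and other details used in full Transformer implementations.

```python
import numpy as np


def softmax(scores: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def self_attention_head(x, w_q, w_k, w_v):
    """One self-attention head.

    x: (n, d) word vectors; w_q, w_k, w_v: (d, d_head) linear maps.
    Returns (weights, outputs): weights[j, i] is the attention from
    position j toward position i; outputs[j] is the weighted sum of values.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    weights = softmax(q @ k.T)   # each row is a distribution over items
    outputs = weights @ v        # expectation over the value vectors
    return weights, outputs


rng = np.random.default_rng(0)
n, d, d_head, num_heads = 5, 8, 4, 2   # illustrative sizes only
x = rng.normal(size=(n, d))
heads = [
    self_attention_head(x, *(rng.normal(size=(d, d_head)) for _ in range(3)))
    for _ in range(num_heads)
]
# Output vectors from all heads are concatenated to form the layer's output.
layer_output = np.concatenate([out for _, out in heads], axis=-1)
```

Each head has its own projections, so the rows of `weights` generally differ from head to head even though every head sees the same input.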

Method: Attention Heads as Simple Classifiers.

We quantitatively study the correspondence between attention heads and linguistic phenomena by observing the behavior of attention heads on corpora of annotated data. We focus on data where the annotations assign each pair of words $(w_i, w_j)$ a label $l(w_i, w_j)$ that is 1 if a particular linguistic relationship between words holds (e.g., $w_i$ is $w_j$’s syntactic head) and is 0 if otherwise. To interpret what an attention head in BERT is computing, we examine the most-attended-to word at each position. More formally, if $\alpha(\mathbf{w}, h)$ denotes the attention distribution of head $h$ when BERT is run over the sequence of words $\mathbf{w} = [w_1, \ldots, w_n]$, we find the most-attended-to word $w_{\arg\max_i \alpha(\mathbf{w}, h)_i^j}$ for each position $1 \le j \le n$. We then evaluate whether the attention head is expressing a particular linguistic relationship by computing how often the most-attended-to word is in that relationship with the input word (i.e., how often the head “pays attention to” linguistically relevant words). If $S_l(\mathbf{w})$ is the subset of the input expressing the annotated relationship, the precision score for the head is computed as

$$\text{precision}(h) = \frac{1}{N} \sum_{\mathbf{w} \in \text{corpus}} \; \sum_{j \in S_l(\mathbf{w})} l\left(w_{\arg\max_i \alpha(\mathbf{w}, h)_i^j},\, w_j\right) \qquad \text{[3]}$$

where $N$ is the total number of words in the corpus expressing the relationship. This score can be viewed as evaluating the attention head as a simple classifier that predicts the presence of the linguistic relationship of interest.
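A minimal sketch of this precision score is given below. The array layout (attention indexed as `[from position, toward position]`, labels indexed as `[candidate word, input word]`) and the function name `head_precision` are assumptions made for this example.

```python
import numpy as np
from typing import List, Tuple


def head_precision(corpus: List[Tuple[np.ndarray, np.ndarray]]) -> float:
    """Precision of one attention head viewed as a relation classifier.

    Each corpus entry is (attn, labels) for one sentence of n words:
      attn[j, i]   = attention weight from position j toward position i
      labels[i, j] = 1 if the relation holds between word i and word j
                     (e.g., word i is word j's syntactic head), else 0
    """
    hits, total = 0, 0
    for attn, labels in corpus:
        most_attended = attn.argmax(axis=1)            # one choice per position j
        for j in np.flatnonzero(labels.sum(axis=0)):   # words expressing the relation
            hits += int(labels[most_attended[j], j])
            total += 1
    return hits / total


# Toy sentence of three words: word 1 heads word 0, and word 2 heads word 1.
labels = np.zeros((3, 3), dtype=int)
labels[1, 0] = 1
labels[2, 1] = 1
attn = np.array([
    [0.1, 0.8, 0.1],   # word 0 attends most to word 1 (its head)
    [0.6, 0.2, 0.2],   # word 1 attends most to word 0 (not its head)
    [0.3, 0.3, 0.4],   # word 2 has no head among the words, so it is not scored
])
precision = head_precision([(attn, labels)])
```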

Experiments.

We use the original base-sized uncased BERT model from Google, which consists of 12 layers each containing 12 attention heads and 768-dimensional hidden vectors. We use “head ⟨layer⟩-⟨index⟩” to denote a particular attention head.

Our first evaluation is on syntactic dependencies, using the Wall Street Journal (WSJ) portion of the Penn Treebank (4, 35) annotated with Stanford Dependencies (SD) (36) as the corpus. In dependency syntax, typed directed edges connect words, forming a tree structure describing the sentence’s syntax. In particular, the tree structure results from each word having exactly one incoming edge, from either another word (the “syntactic head”) or a distinguished sentence root symbol, with a type indicating the grammatical relation (e.g., prepositional object). The tree can be expressed as word-pair labels $l(w_i, w_j)$, where $l(w_i, w_j)$ is 1 if $w_i$ is $w_j$’s head and 0 if otherwise. We also perform more fine-grained analysis over specific grammatical relations by (for example) restricting the label $l(w_i, w_j)$ to 1 if $w_j$ is $w_i$’s direct object and 0 if otherwise.§ Some dependency relations are simpler to predict than others; e.g., a noun’s determiner is often the immediately preceding word. Therefore, as a point of comparison, we show predictions from a simple fixed-offset baseline. A fixed offset of −2 means the word two positions to the left of the dependent is always considered to be the head; we report scores for the best-performing offset in [−5, 5].
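The fixed-offset baseline can be sketched as below. This is an illustrative reading rather than the authors' code: the head-index encoding, the choice to skip words attached to the root symbol, and the exclusion of offset 0 are assumptions of this sketch.

```python
from typing import List, Tuple


def fixed_offset_precision(sentences: List[List[int]], offset: int) -> float:
    """Precision when the head of word j is always predicted as word j + offset.

    Each sentence is a list `heads`, where heads[j] is the index of word j's
    head, or -1 if word j attaches to the root symbol (not scored here).
    """
    hits = total = 0
    for heads in sentences:
        for j, head in enumerate(heads):
            if head < 0:
                continue
            total += 1
            hits += int(j + offset == head)
    return hits / total


def best_fixed_offset(sentences: List[List[int]], max_abs: int = 5) -> Tuple[int, float]:
    """Best-performing nonzero offset in [-max_abs, max_abs] and its precision."""
    offsets = [o for o in range(-max_abs, max_abs + 1) if o != 0]
    return max(
        ((o, fixed_offset_precision(sentences, o)) for o in offsets),
        key=lambda pair: pair[1],
    )


# "the chef made the pizzas": the->chef, chef->made, made->root,
# the->pizzas, pizzas->made
heads = [1, 2, -1, 4, 2]
best = best_fixed_offset([heads])
```

On this toy sentence an offset of +1 (head immediately to the right) is best, reflecting how determiners and subjects often directly precede their heads in English.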

We also evaluate BERT attention heads at the semantic phenomenon of coreference. Coreference occurs when two or more mentions (text expressions referring to real-world entities) in a document refer to the same entity (e.g., “London,” “the city,” and “it” could be coreferent mentions). We evaluate the attention heads on coreference resolution using the Conference on Natural Language Learning shared task (CoNLL-2012) dataset (37). We report antecedent selection precision: how often the head word of a coreferent mention most attends to the head word of one of that mention’s antecedents, so $l(w_i, w_j) = 1$ when $w_i$ and $w_j$ are head words of coreferent mentions and 0 if otherwise. We compare against two baselines for selecting an antecedent: first, picking the nearest other mention as the antecedent, and second, using a rule-based coreference system that proceeds through four sieves: 1) full string match, 2) head word match, 3) number/gender/person match, and 4) all other mentions. The nearest mention satisfying the earliest sieve is returned. Although simple, these baselines can perform surprisingly well at coreference (38).
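The four-sieve baseline can be sketched roughly as follows. The `Mention` fields, the feature encoding, the case-insensitive matching, and the restriction of candidates to earlier mentions are all assumptions made for illustration; the text does not specify these details.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Mention:
    text: str        # full mention string
    head: str        # head word of the mention
    features: tuple  # (number, gender, person); hypothetical encoding


def sieve_antecedent(mention: Mention, previous: List[Mention]) -> Optional[Mention]:
    """Return the nearest earlier mention that passes the earliest sieve.

    `previous` lists candidate mentions in document order, so the nearest
    candidate is the last element.
    """
    sieves = [
        lambda c: c.text.lower() == mention.text.lower(),  # 1) full string match
        lambda c: c.head.lower() == mention.head.lower(),  # 2) head word match
        lambda c: c.features == mention.features,          # 3) number/gender/person
        lambda c: True,                                    # 4) all other mentions
    ]
    for passes in sieves:
        for candidate in reversed(previous):  # nearest candidate first
            if passes(candidate):
                return candidate
    return None


london = Mention("London", "London", ("sg", "neut", 3))
residents = Mention("the residents", "residents", ("pl", "neut", 3))
it = Mention("it", "it", ("sg", "neut", 3))
chosen = sieve_antecedent(it, [london, residents])
```

Here "it" skips the nearer mention "the residents" because "London" is the nearest candidate passing the number/gender/person sieve.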

Results.

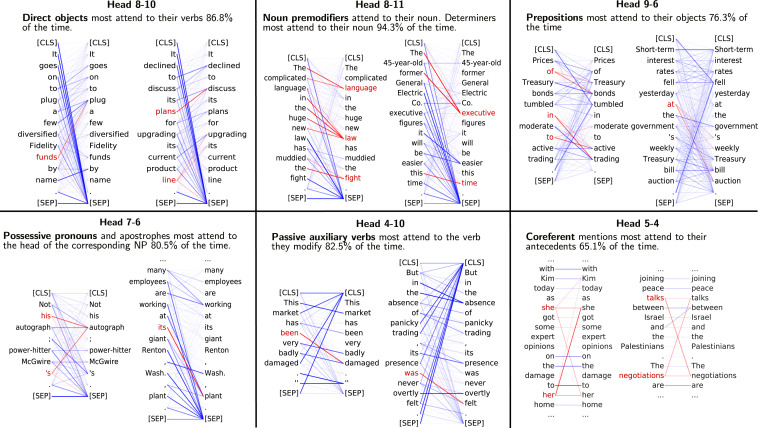

Results for dependency syntax are shown in Table 1. No single attention head corresponds well to dependency syntax overall; the best head gets 34.5% accuracy, which is not much better than the right-branching baseline (26.3% accuracy). However, we find that certain attention heads specialize to specific dependency relations, sometimes achieving high accuracy and substantially outperforming the fixed-offset baseline. Qualitative examples of these attention heads are shown in Fig. 6. Beyond looking at individual attention heads, we also report the combined score when taking the best attention head for each dependency type and find it achieves around 70% precision, substantially higher than the baseline. Explicitly incorporating syntactic information to improve attention has been an active area of NLP research (39–41). Our results suggest that self-supervised training can cause learned syntax-aware attention to arise in NLP models.

Table 1.

Well-performing BERT attention heads on WSJ SD dependency parsing by dependency type

| Relation | Attention precision (%) | Baseline precision (%) |
|---|---|---|
| Microaverage across dependency types | | |
| Best single head | 34.5 | 26.3 |
| Best head per dependency type | 69.3 | 50.8 |
| Single heads for individual dependency types | | |
| Nominal subject | 58.4 | 45.4 |
| Direct object | 86.8 | 40.0 |
| Clausal complement | 48.8 | 12.4 |
| Object of preposition | 76.3 | 34.6 |
| Predeterminer | 94.3 | 51.7 |
| Marker | 50.7 | 14.5 |
| Passive auxiliary | 82.5 | 40.5 |
| Phrasal verb particle | 99.1 | 91.4 |

Fig. 6.

Some BERT attention heads that appear sensitive to linguistic phenomena, despite not being explicitly trained on linguistic annotations. In the example attention maps, the darkness of a line indicates the size of the attention weight. All attention to/from red words is colored red; these words are chosen to highlight certain of the attention heads’ behaviors. [CLS] (classification) and [SEP] (separator) are special tokens BERT adds to the input during preprocessing. Attention heads are numbered by their layer and index in BERT. Reprinted with permission from ref. 59, which is licensed under CC BY 4.0.

We note that heads can disagree with annotation conventions while still performing syntactic behavior. For example, head 7-6 marks the ’s as the dependent (e.g., Fig. 6, Bottom Left) for the noun possessive relation, while gold-standard labels mark the complement of an ’s as the dependent. There is significant arbitrariness and variation in the annotation conventions of different treebanks and linguistic theories, and such behavior is not clearly wrong. The disagreement highlights how these syntactic behaviors in BERT emerge as a by-product of self-supervised training, not by copying a human design.

Results for coreference are shown in Table 2. One of BERT’s attention heads achieves quite strong performance, outscoring the rule-based system. It is particularly effective on nominal mentions, perhaps because neural representations are well suited to fuzzy matching between synonyms (e.g., Fig. 6, Bottom Right).

Table 2.

Precisions (%) of systems selecting a correct antecedent for a coreferent mention in the CoNLL-2012 data by mention type

| Model | All | Pronoun | Proper | Nominal |
|---|---|---|---|---|
| Nearest | 15 | 23 | 9 | 11 |
| Rule based | 66 | 72 | 73 | 48 |
| Head 5-4 | 70 | 68 | 76 | 64 |

Finding Syntax Trees in Word Representations

Since many of BERT’s attention heads encode individual syntactic relations, it is natural to wonder whether the representations, that is, the vectors that represent the words in each layer of BERT, embed syntax trees. More generally, a fundamental question when investigating linguistic structure in neural networks is whether the internal representations of networks are reconcilable with the tree structures of sentences. At face value, this seems unlikely; trees are discrete structures, not immediately compatible with the high-dimensional real-valued spaces of neural representations.

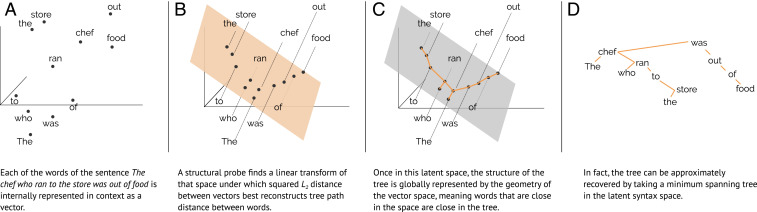

In this section, we explain the structural probe method (42) for finding trees embedded in the internal representations of a network and demonstrate the surprising extent to which syntax trees are linearly extractable from internal representations. At a high level, this method finds a single distance metric on $\mathbb{R}^d$ which, when applied to any two word representations constructed by the model for the same sentence, approximates the result of the distance metric defined by the syntax tree of that sentence (Fig. 7).

Fig. 7.

(A–D) An overview of the structural probe method.

The Structural Probe Method.

To test whether trees are embedded in an $\mathbb{R}^d$ representation, we require common ground between trees and vector spaces. We observe that the set of nodes in an undirected tree $T$ is a metric space, where the metric $d_T(i, j)$ between nodes $i$ and $j$ is defined as the number of edges in the path between $i$ and $j$. Given only a path metric, one can reconstruct the tree itself by indicating that all elements $i, j$ with $d_T(i, j)$ equal to 1 are connected by an edge. So, instead of attempting to find trees in $\mathbb{R}^d$, we can look for a distance metric, a more natural notion for a vector space. “Looking for a distance metric” means defining a distance metric with tunable parameters and using supervised data to find the parameters that best fit the data.

Intuitively, we want to construct a distance metric that focuses on certain aspects of the space; we expect only some aspects of the representation to encode syntax, since most will encode sentence meaning. We note that $L_2$ distance on $\mathbb{R}^d$ can be parameterized with a positive semidefinite¶ matrix $A \in \mathbb{R}^{d \times d}$. All such matrices can in turn be represented as $A = B^\top B$ for some matrix $B \in \mathbb{R}^{k \times d}$, leading to a distance of the following form:

$$d_A(\mathbf{h}_i, \mathbf{h}_j)^2 = (\mathbf{h}_i - \mathbf{h}_j)^\top A \, (\mathbf{h}_i - \mathbf{h}_j) = \|B(\mathbf{h}_i - \mathbf{h}_j)\|_2^2 \qquad \text{[4]}$$

The above distance focuses on the aspects of $\mathbb{R}^d$ that have high dot product with the rows of $B$.

We want to find a linear transformation $B$ such that syntax trees’ path metrics are best approximated by the squared# distance, $\|B(\mathbf{h}_i - \mathbf{h}_j)\|_2^2$, on internal representations. We approximate this by finding $B$ such that it best recreates all pairs of distances for sentences in a dataset of sentences annotated with syntax trees by humans. We consider each sentence $s^\ell$, which has tree structure $T^\ell$. This tree defines a distance $d_{T^\ell}(w_i^\ell, w_j^\ell)$ for each pair of words $(w_i^\ell, w_j^\ell)$ in the sentence. Recalling that the internal representations of $w_i^\ell$ and $w_j^\ell$ are denoted $\mathbf{h}_i^\ell$ and $\mathbf{h}_j^\ell$, we want the difference between $d_{T^\ell}(w_i^\ell, w_j^\ell)$ and $\|B(\mathbf{h}_i^\ell - \mathbf{h}_j^\ell)\|_2^2$ to be as small as possible in expectation. We implement this by averaging over all sentences $s^\ell$ in the human-parsed corpus and normalize each sentence’s influence by the number of pairs of words in the sentence, $|s^\ell|^2$. This leads to the following optimization problem for finding $B$:

$$\min_B \sum_{\ell} \frac{1}{|s^\ell|^2} \sum_{i,j} \left| d_{T^\ell}(w_i^\ell, w_j^\ell) - \|B(\mathbf{h}_i^\ell - \mathbf{h}_j^\ell)\|_2^2 \right| \qquad \text{[5]}$$

We approximate this objective through gradient descent to find $B$.
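To make the distance probe concrete, the sketch below fits a probe matrix on a single toy sentence with plain (sub)gradient descent. It is an illustration under stated assumptions, not the authors' implementation: the representations are random vectors rather than BERT outputs, the tree is a simple chain, and the dimensions, step size, and iteration count are arbitrary.

```python
import numpy as np


def probe_distances(B: np.ndarray, H: np.ndarray) -> np.ndarray:
    """Squared distances ||B(h_i - h_j)||^2 for all word pairs.

    B: (k, d) probe matrix; H: (n, d) representations for one sentence.
    """
    diffs = H[:, None, :] - H[None, :, :]        # (n, n, d)
    return ((diffs @ B.T) ** 2).sum(axis=-1)     # (n, n)


def probe_loss_and_grad(B, H, tree_dist):
    """Per-sentence loss: mean over word pairs of |tree dist - probe dist|."""
    n = H.shape[0]
    diffs = H[:, None, :] - H[None, :, :]        # (n, n, d)
    projected = diffs @ B.T                      # (n, n, k)
    residual = (projected ** 2).sum(axis=-1) - tree_dist
    loss = np.abs(residual).sum() / n**2
    # d/dB ||B x||^2 = 2 (B x) x^T; sign() gives a subgradient of |.|
    grad = 2 * np.einsum("ij,ijk,ijd->kd", np.sign(residual), projected, diffs) / n**2
    return loss, grad


rng = np.random.default_rng(0)
n, d, k = 4, 6, 3                                # toy sizes (assumptions)
H = rng.normal(size=(n, d))                      # stand-in for model representations
positions = np.arange(n)
tree_dist = np.abs(positions[:, None] - positions[None, :]).astype(float)  # chain tree

B = 0.1 * rng.normal(size=(k, d))
initial_loss, _ = probe_loss_and_grad(B, H, tree_dist)
for _ in range(2000):
    _, grad = probe_loss_and_grad(B, H, tree_dist)
    B -= 0.002 * grad
final_loss, _ = probe_loss_and_grad(B, H, tree_dist)
```

In practice the sum would run over all sentences in the training corpus, with each sentence normalized by its number of word pairs as in the objective above.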

Once we have a distance metric that approximates tree distance, we can predict trees on new sentences and compare them to human-annotated trees. Recall that given the true tree distances $d_T(w_i, w_j)$, we can reconstruct the tree by constructing an edge between each pair of words with distance 1. This is equivalent to taking the minimum spanning tree of words in the distance space. To construct trees for a new sentence, we similarly predict distances for all pairs of words and construct the minimum-spanning tree according to the predicted distances (Fig. 7C).
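The tree reconstruction step can be sketched with Prim's algorithm on a dense distance matrix. The toy tree and noise level below are assumptions chosen for illustration; the noisy matrix stands in for distances predicted by a probe.

```python
import numpy as np
from typing import Set, Tuple


def minimum_spanning_tree(dist: np.ndarray) -> Set[Tuple[int, int]]:
    """Prim's algorithm on a dense symmetric distance matrix.

    Returns undirected edges as (i, j) pairs with i < j.
    """
    n = dist.shape[0]
    in_tree = [0]
    edges = set()
    while len(in_tree) < n:
        best = None
        for i in in_tree:
            for j in range(n):
                if j not in in_tree and (best is None or dist[i, j] < dist[best]):
                    best = (i, j)
        edges.add((min(best), max(best)))
        in_tree.append(best[1])
    return edges


# Toy tree on four words: word 1 is connected to words 0, 2, and 3.
gold_edges = {(0, 1), (1, 2), (1, 3)}
gold_dist = np.array([
    [0, 1, 2, 2],
    [1, 0, 1, 1],
    [2, 1, 0, 2],
    [2, 1, 2, 0],
], dtype=float)

# With exact path distances, the distance-1 pairs are exactly the tree edges.
distance_one_pairs = {(i, j) for i in range(4) for j in range(i + 1, 4)
                      if gold_dist[i, j] == 1}

# With approximate distances, the minimum spanning tree still recovers the tree
# as long as the noise is small relative to the gap between distances.
rng = np.random.default_rng(0)
noise = rng.uniform(-0.4, 0.4, size=(4, 4))
noisy_dist = gold_dist + (noise + noise.T) / 2
predicted_edges = minimum_spanning_tree(noisy_dist)
```

Using the minimum spanning tree rather than thresholding at distance 1 guarantees that the prediction is always a connected tree, even when predicted distances are inexact.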

So far in this section, we have discussed syntax trees as being undirected, an assumption we make so that we can view them as distance metrics. However, dependency syntax trees are rooted, and all edges are directed such that every edge moves from the word closer to the root to the word farther away. The directions of these edges reflect which word is governed by the other.

Since distances are unordered functions, we cannot use them to model directed edges; to do this we use a slight variation on the distance probe. We first connect edge orderings and vector spaces through the concept of a norm, that is, a notion of length in a space. Edge orderings in a rooted tree follow the depth norm $\|w_i\|_{\text{depth}}$, equal to the number of edges between a word and the root of the tree. Given that two words have an edge between them, then the word with the greater norm has the edge pointing to it.

Viewing edge orderings as resulting from a norm again helps us connect tree structures to the vector space that internal representations reside in. Like distance metrics, norms are a natural way to look at global properties of a vector space. Our goal is now to find a norm on $\mathbb{R}^d$ such that the norm on the vector space approximates the tree depth norm. From here the method is similar to finding the distance metric. We consider an $L_2$ norm parameterized by a matrix $B$, that is, $\|B\mathbf{h}\|_2$, and attempt to find $B$ to make the (squared) norm best fit the tree norm in expectation over the corpus:

$$\min_B \sum_{\ell} \frac{1}{|s^\ell|} \sum_{i} \left| \|w_i^\ell\|_{\text{depth}} - \|B\mathbf{h}_i^\ell\|_2^2 \right| \qquad \text{[6]}$$
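Given a fitted depth probe, predicted depths can be used to orient the edges of an undirected tree and to pick a root. The sketch below assumes a squared-norm depth prediction and uses hand-picked toy depths; the function names are hypothetical.

```python
import numpy as np


def probe_depths(B: np.ndarray, H: np.ndarray) -> np.ndarray:
    """Predicted depth ||B h_i||^2 for each word (one row of H per word)."""
    return ((H @ B.T) ** 2).sum(axis=-1)


def direct_edges(edges, depths):
    """Orient each undirected edge from the shallower word to the deeper word."""
    return {(i, j) if depths[i] < depths[j] else (j, i) for i, j in edges}


def predicted_root(depths) -> int:
    """The word predicted to be least deep is taken as the root."""
    return int(np.argmin(depths))


# Toy example: undirected tree with word 1 at the center, and predicted
# depths that are noisy versions of the true depths [1, 0, 1, 1].
undirected = {(0, 1), (1, 2), (1, 3)}
toy_depths = np.array([1.1, 0.2, 0.9, 1.3])
directed = direct_edges(undirected, toy_depths)
root = predicted_root(toy_depths)
```

Each directed pair `(i, j)` here means the edge points from word `i` (closer to the root) to word `j`.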

Experiments.

We analyze the internal representations of BERT, keeping the same dataset as our analysis of attention weights. Because of the supervised nature of the approach, we train our distance metric on the training split of the dataset (sections 2 to 21) and report all tree reconstruction accuracies on the testing split (section 23) of ref. 35.

We evaluate the syntactic distances predicted by the distance structural probe in two ways. The first metric is the undirected unlabeled attachment score (UUAS), which is the fraction of edges in the true syntax tree that are also in the predicted minimum-spanning tree. The second one is the distance Spearman correlation (DSpr.), which measures how close the predicted distances are to recreating the ordering of distances between all pairs of words. We evaluate the syntax depths predicted by a depth structural probe by evaluating the root%, the percentage of tree roots predicted to be the least deep. We also evaluate the norm Spearman correlation (NSpr.), which measures how close the predicted depths are to recreating the ordering of depths defined by the tree.
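The two kinds of metrics can be sketched as follows for a single sentence. How scores are aggregated across sentences is not specified here, so this sketch leaves aggregation out; the helper names are assumptions, and Spearman correlation is computed as the Pearson correlation of average ranks.

```python
import numpy as np


def uuas(gold_edges, predicted_edges) -> float:
    """Fraction of gold undirected edges that also appear in the prediction."""
    gold = {frozenset(e) for e in gold_edges}
    pred = {frozenset(e) for e in predicted_edges}
    return len(gold & pred) / len(gold)


def rankdata(values) -> np.ndarray:
    """Ranks (1-based), with ties assigned their average rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="stable")
    ranks = np.empty(len(values))
    ranks[order] = np.arange(1, len(values) + 1)
    for v in np.unique(values):
        tied = values == v
        ranks[tied] = ranks[tied].mean()
    return ranks


def spearman(x, y) -> float:
    """Spearman correlation: Pearson correlation of the ranks."""
    return float(np.corrcoef(rankdata(x), rankdata(y))[0, 1])


gold = {(0, 1), (1, 2), (1, 3)}
predicted = {(1, 0), (1, 2), (2, 3)}          # edge direction is ignored
score = uuas(gold, predicted)                  # 2 of 3 gold edges recovered
rho = spearman([1, 2, 3, 4], [0.8, 2.5, 2.9, 4.4])
```

The same `spearman` helper applies to both DSpr. (over pairwise distances) and NSpr. (over per-word depths), since each compares a predicted ordering with the ordering defined by the tree.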

Because structural probes are supervised models, we want to ensure that the probes themselves are not simply learning to parse on top of BERT, instead of revealing properties of BERT. To do this, we define Proj0 (projection of layer 0), a neural network with random weights that takes in a sequence of noncontextual word representations and outputs a sequence of contextual word representations like the ones we evaluate in BERT. We train and evaluate structural probes on this model’s representations as a baseline against which to compare BERT.∥

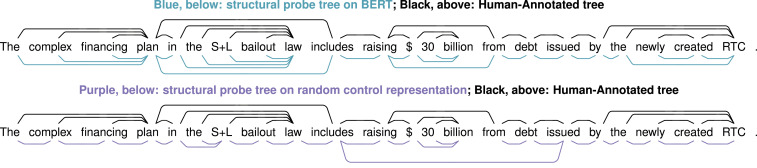

Results.

We find that dependency tree structures are embedded in BERT representations to a striking extent.**

These results are visualized in Figs. 8 and 9. In Table 3, we report our evaluation metrics for BERT, which in terms of UUAS (81.7) significantly outperforms both our random representation control (59.8) and a baseline that attaches each adjacent word from left to right (48.9).

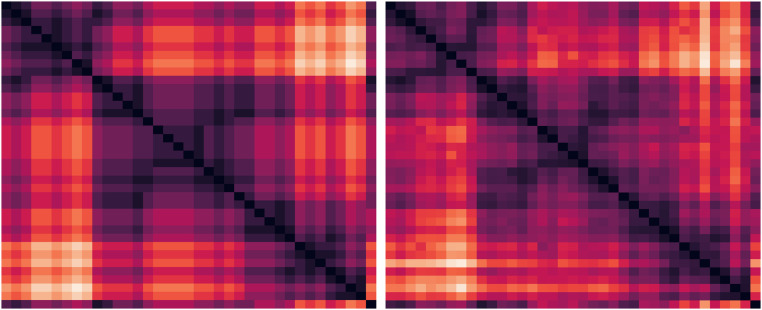

Fig. 8.

Minimum-spanning trees resultant from structural probes on BERT and a random control representation Proj0 compared to the human-annotated parse tree. In the text sentence, “S+L” refers to American savings and loans banks and “RTC” refers to the Resolution Trust Corporation. Reprinted with permission from ref. 42, which is licensed under CC BY 4.0.

Fig. 9.

(Left) Matrix showing gold tree distances between all pairs of words in a sentence, whose linear order runs top to bottom and left to right. Darker colors indicate closer words. (Right) The same distances as embedded by BERT (squared). Reprinted with permission from ref. 42, which is licensed under CC BY 4.0.

Table 3.

Results of structural probes on the WSJ SD test set (baselines in the top half, models hypothesized to encode syntax below)

| Method | Distance: UUAS (%) | Distance: DSpr. | Depth: Root% | Depth: NSpr. |
|---|---|---|---|---|
| Linear | 48.9 | 0.58 | 2.9 | 0.27 |
| Proj0 | 59.8 | 0.73 | 64.4 | 0.75 |
| BERT | 81.7 | 0.87 | 90.1 | 0.89 |

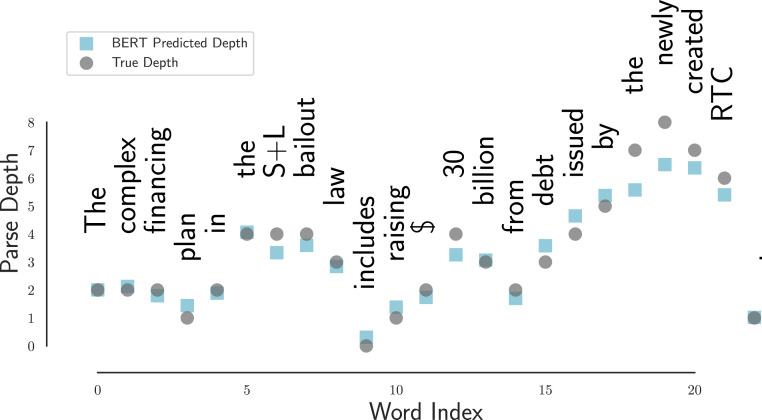

Likewise for parse depth, we find that the structural probe norm reconstructs the dependency tree edge directions to a substantial extent. Fig. 10 shows how predicted depths according to the probe recreate the depths in a true parse tree. Quantitatively (Table 3), the structural probe on BERT correctly identifies the root of the sentence 90.1% of the time, above the baseline of 64.4%.

Fig. 10.

A sentence with human-annotated parse depths (gray circles) and BERT-predicted parse depths (blue squares). Reprinted with permission from ref. 42, which is licensed under CC BY 4.0.

Related Emergent Properties

Our work here studying syntax and coreference is part of a growing body of work exploring what linguistic properties emerge in BERT and related representations. Recent work on deep contextual word representations follows an earlier literature examining distributed representations of word types, with models including latent semantic analysis (44) and neural network models such as word2vec (12) and GloVe (Global Vectors) (13). These models and investigation of them focused much more on lexical semantics. In this section, we focus on probing methods, where a simple supervised model is trained to predict a linguistic property from a fixed representation being examined. We review insights gained not only about BERT, but also about similar neural networks such as ELMo (Embeddings from Language Models) (17).

Syntax.

Predating our attention and structural probes, early work by Shi et al. (45) introduced the probing task of predicting the label of the smallest phrasal constituent above each word in the tree using its representation (similar to “Is this word in a noun phrase, a verb phrase, or other?”). This method has been extended (46, 47) to predicting the label of the dependency edge governing a word (e.g., nominal subject or direct object), the label of the edge governing the word’s parent, and so on. Predicting these labels requires information about how a word takes part in the meaning of a sentence at multiple levels of hierarchy. Separately, it has been shown that the presence of individual dependency edges can be predicted from probes on pairs of word representations (47, 48).

Parts of Speech.

Word tokens are categorized into classes like nouns, adjectives, and adverbs. The same word can have different parts of speech depending on context, as in “the guard sounds (verb) the alarm upon hearing sounds (noun).” Several studies use part-of-speech tagging as a probing task (48–51) and demonstrate that BERT and related models encode information necessary for predicting the part of speech of each word in context from its vector representation. Considerable part-of-speech information was also available from context-free, word type-level embeddings like word2vec and GloVe (12, 13), as shown by earlier probing work (52), but the more recent studies additionally demonstrate the ability of contextual word representations to encode information about the rest of the sequence useful for disambiguating the part of speech in context, as needed for the above example.

Morphology.

Morphology concerns how the form and meaning of words are derived from subword components, like how “runs” is an inflected form of run, or “silversmith” can be decomposed into silver + smith. Morphological tagging requires the prediction of properties like singular and third person for runs. Such tagging is prevalent in languages with rich morphology like Turkish, where one would predict definite, genitive, singular for garajın (of the garage). Conneau et al. (53) found that NLP systems encode information about the number (singular, plural) of the subjects and objects of a sentence even when one represents the whole sentence as a single vector (as opposed to using the vector representation of the subject and object to do so). Belinkov et al. (54) ran probing experiments on neural machine translation systems (like Google Translate), showing that they implicitly learn a considerable amount of information about the morphology of both the input language and the output language.

Semantics.

Semantics refers to how language structures encode information about events and ideas. Probing work has shown that BERT and similar systems encode in each word representation information about the semantic role of each word in the sentence, like agent (the “doer” of an action) and patient (the thing the action is done to) (47, 48). There is also evidence that finer-grained attributes, like whether a doer was aware it did the action, are encoded (48).‡‡

Named entity recognition (NER) is a word-tagging task (like part-of-speech tagging) which labels words as being part of a type of named entity or not. For example, Apple would be labeled organization, while Tim and Cook would be labeled person. Multiple studies have shown that the word representations of BERT encode information about the category of words in NER (47, 48).

Conclusion

We have demonstrated the surprising extent to which BERT, an NLP representation learner trained via self-supervision on word prediction tasks, implicitly learns to recover the rich latent structure of human language. This result has been demonstrated in attention: how BERT looks at sentential context for encoding a word. Separately, we found a similar result through structural probes on internal vector representations, showing that the hierarchical tree structures of language emerge in BERT vector space. That such rich information emerges through self-supervision is surprising and exciting, with intriguing implications for both NLP research and the logical problem of language acquisition.

Acknowledgments

We thank Tatsunori B. Hashimoto, Abigail See, Siva Reddy, Drew A. Hudson, Roma Patel, Percy Liang, and the PNAS reviewers for helpful comments. K.C. was supported by a Google Fellowship. J.H. and C.D.M. were partly funded by a gift from Tencent Corp.

Footnotes

This paper results from the Arthur M. Sackler Colloquium of the National Academy of Sciences, “The Science of Deep Learning,” held March 13–14, 2019, at the National Academy of Sciences in Washington, DC. NAS colloquia began in 1991 and have been published in PNAS since 1995. From February 2001 through May 2019 colloquia were supported by a generous gift from The Dame Jillian and Dr. Arthur M. Sackler Foundation for the Arts, Sciences, & Humanities, in memory of Dame Sackler’s husband, Arthur M. Sackler. The complete program and video recordings of most presentations are available on the NAS website at http://www.nasonline.org/science-of-deep-learning.

Competing interest statement: K.C. and U.K. have been/are employed part time at Google Inc., and K.C. has a Google PhD Fellowship. Researchers at Google Inc. developed the BERT model analyzed in this paper.

This article is a PNAS Direct Submission. M.G. is a guest editor invited by the Editorial Board.

Data deposition: Code and most of the data to reproduce the analyses in this paper are freely available at https://github.com/clarkkev/attention-analysis and https://github.com/john-hewitt/structural-probes.

*There are two main approaches to depicting a sentence’s syntactic structure: phrase structure (or constituency) and dependency structure (or grammatical relations). The former is dominant in modern linguistics, but in this paper we use the latter, which is dominant in computational linguistics. Both representations capture similar, although generally not identical, information (2).

†The approach of self-supervised learning has existed for decades, used particularly in robotics, e.g., refs. 9 and 10, but it has recently been revived as a focus of interest, used also for vision and language.

§Intuitively, the precision score in this case measures what percent of the time the most-attended-to word of a verb is that verb’s direct object.

¶Strictly, since is positive semidefinite instead of positive definite, the result is a semimetric.

#We found that squared distance performs substantially better than the true distance at approximating tree distance (42), an observation explored in more depth by ref. 43. If a valid distance metric is desired, using the square root of all predictions results in the same relative distances.

See ref. 42 for more details on these evaluation metrics and Proj0.

**We determined and report the best results, taken from the 16th layer of the original Google BERT large cased model (24 layers, 1,024-dimensional hidden layers, 16 attention heads per layer).

Data Availability.

Code and most of the data to reproduce the analyses in this paper are freely available at https://github.com/clarkkev/attention-analysis and https://github.com/john-hewitt/structural-probes (55, 56); the BERT models are freely available at https://github.com/google-research/bert (20). The syntactic evaluations use the Penn Treebank-3 (PTB), which is available under license from the Linguistic Data Consortium at https://catalog.ldc.upenn.edu/LDC99T42 (35).

References

- 1. Kuhl P. K., Early language acquisition: Cracking the speech code. Nat. Rev. Neurosci. 5, 831–843 (2004).

- 2. Rambow O., “The simple truth about dependency and phrase structure representations: An opinion piece” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Kaplan R., Burstein J., Harper M., Penn G., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2010), pp. 337–340.

- 3. Pizlo Z., Perception viewed as an inverse problem. Vis. Res. 41, 3145–3161 (2001).

- 4. Marcus M. P., Santorini B., Marcinkiewicz M. A., Building a large annotated corpus of English: The Penn treebank. Comput. Ling. 19, 313–330 (1993).

- 5. Nivre J., et al., “Universal dependencies V1: A multilingual treebank collection” in LREC International Conference on Language Resources and Evaluation, Calzolari N., et al., Eds. (European Language Resources Association, Paris, France, 2016), pp. 1659–1666.

- 6. Collins M., Head-driven statistical models for natural language parsing. Comput. Ling. 29, 589–637 (2003).

- 7. Chen D., Manning C. D., “A fast and accurate dependency parser using neural networks” in Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Moschitti A., Pang B., Daelemans W., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2014), pp. 740–750.

- 8. Dozat T., Manning C. D., “Deep biaffine attention for neural dependency parsing.” https://openreview.net/pdf?id=Hk95PK9le. Accessed 21 May 2020.

- 9. Schmidhuber J., “An on-line algorithm for dynamic reinforcement learning and planning in reactive environments” in Proceedings of the International Joint Conference on Neural Networks (IJCNN) (Institute of Electrical and Electronic Engineers, Piscataway, NJ, 1990), pp. 253–258.

- 10. Lieb D., Lookingbill A., Thrun S., “Adaptive road following using self-supervised learning and reverse optical flow” in Proceedings of Robotics: Science and Systems (RSS), Thrun S., Sukhatme G. S., Schaal S., Eds. (MIT Press, Cambridge, MA, 2005), pp. 273–280.

- 11. Taylor W. L., Cloze procedure: A new tool for measuring readability. Journal. Q. 30, 415–433 (1953).

- 12. Mikolov T., Sutskever I., Chen K., Corrado G. S., Dean J., “Distributed representations of words and phrases and their compositionality” in Advances in Neural Information Processing Systems 26, Burges C. J. C., Bottou L., Welling M., Ghahramani Z., Weinberger K. Q., Eds. (Curran Associates, Red Hook, NY, 2013), pp. 3111–3119.

- 13. Pennington J., Socher R., Manning C., “GloVe: Global vectors for word representation” in Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Moschitti A., Pang B., Daelemans W., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2014), pp. 1532–1543.

- 14. Bengio Y., Learning deep architectures for AI. Found. Trends Mach. Learn. 2, 1–127 (2009).

- 15. Berwick R. C., Pietroski P., Yankama B., Chomsky N., Poverty of the stimulus revisited. Cognit. Sci. 35, 1207–1242 (2011).

- 16. Griffiths T. L., Rethinking language: How probabilities shape the words we use. Proc. Natl. Acad. Sci. U.S.A. 108, 3825–3826 (2011).

- 17. Peters M., et al., “Deep contextualized word representations” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Walker M., Ji H., Stent A., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2018), pp. 2227–2237.

- 18. Devlin J., Chang M. W., Lee K., Toutanova K., “BERT: Pre-training of deep bidirectional transformers for language understanding” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Burstein J., Doran C., Solorio T., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2019), pp. 4171–4186.

- 19. Chomsky N., Knowledge of Language: Its Nature, Origin, and Use (Praeger, New York, NY, 1986).

- 20. Devlin J., Chang M.-W., Lee K., Toutanova K., BERT. https://github.com/google-research/bert. Accessed 14 May 2020.

- 21. Vaswani A., et al., “Attention is all you need” in Advances in Neural Information Processing Systems 30, Guyon I., et al., Eds. (Curran Associates, Red Hook, NY, 2017), pp. 5998–6008.

- 22. Chung J., Gulcehre C., Cho K., Bengio Y., Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv:1412.3555 (11 December 2014).

- 23. Bahdanau D., Cho K., Bengio Y., Neural machine translation by jointly learning to align and translate. arXiv:1409.0473 (16 January 2019).

- 24. Linzen T., Dupoux E., Goldberg Y., Assessing the ability of LSTMs to learn syntax-sensitive dependencies. Trans. Assoc. Comput. Linguist. 4, 521–535 (2016).

- 25. Gulordava K., Bojanowski P., Grave E., Linzen T., Baroni M., “Colorless green recurrent networks dream hierarchically” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Walker M., Ji H., Stent A., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2018), pp. 1195–1205.

- 26. Marvin R., Linzen T., “Targeted syntactic evaluation of language models” in Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Riloff E., Chiang D., Hockenmaier J., Tsujii J., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2018), pp. 1192–1202.

- 27. Kuncoro A., et al., “LSTMs can learn syntax-sensitive dependencies well, but modeling structure makes them better” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Gurevych I., Miyao Y., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2018), pp. 1426–1436.

- 28. Goldberg Y., Assessing BERT’s syntactic abilities. arXiv:1901.05287 (16 January 2019).

- 29. Bock K., Miller C. A., Broken agreement. Cognit. Psychol. 23, 45–93 (1991).

- 30. Phillips C., Wagers M. W., Lau E. F., “Grammatical illusions and selective fallibility in real-time language comprehension” in Experiments at the Interfaces, Syntax and Semantics, Runner J., Ed. (Emerald Group Publishing Limited, 2011), vol. 37, pp. 147–180.

- 31. Luong T., Pham H. Q., Manning C. D., “Effective approaches to attention-based neural machine translation” in Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Màrquez L., Callison-Burch C., Su J., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2015), pp. 1412–1421.

- 32. Sharma S., Kiros R., Salakhutdinov R., Action recognition using visual attention. arXiv:1511.04119 (14 February 2016).

- 33. Xu K., et al., “Show, attend and tell: Neural image caption generation with visual attention” in Proceedings of the International Conference on Machine Learning, Bach F., Blei D., Eds. (Proceedings of Machine Learning Research, Brookline, MA, 2015), pp. 2048–2057.

- 34. Chorowski J. K., Bahdanau D., Serdyuk D., Cho K., Bengio Y., “Attention-based models for speech recognition” in Advances in Neural Information Processing Systems 28, Cortes C., Lawrence N. D., Lee D. D., Sugiyama M., Garnett R., Eds. (Curran Associates, Red Hook, NY, 2015), pp. 577–585.

- 35. Marcus M. P., Santorini B., Marcinkiewicz M. A., Taylor A., Treebank-3. Linguistic Data Consortium LDC99T42. https://catalog.ldc.upenn.edu/LDC99T42. Accessed 14 May 2020.

- 36. de Marneffe M. C., MacCartney B., Manning C. D., “Generating typed dependency parses from phrase structure parses” in LREC International Conference on Language Resources and Evaluation, Calzolari N., et al., Eds. (European Language Resources Association, Paris, France, 2006), pp. 449–454.

- 37. Pradhan S., Moschitti A., Xue N., Uryupina O., Zhang Y., “CoNLL-2012 shared task: Modeling multilingual unrestricted coreference in Ontonotes” in Joint Conference on EMNLP and CoNLL – Shared Task, Pradhan S., Moschitti A., Xue N., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2012), pp. 1–40.

- 38. Lee H., et al., “Stanford’s multi-pass sieve coreference resolution system at the CoNLL-2011 shared task” in Proceedings of the Conference on Computational Natural Language Learning: Shared Task, Pradhan S., Ed. (Association for Computational Linguistics, Stroudsburg, PA, 2011), pp. 28–34.

- 39. Eriguchi A., Hashimoto K., Tsuruoka Y., “Tree-to-sequence attentional neural machine translation” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Erk K., Smith N. A., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2016), pp. 823–833.

- 40. Chen K., Wang R., Utiyama M., Sumita E., Zhao T., “Syntax-directed attention for neural machine translation” in Proceedings of the AAAI Conference on Artificial Intelligence (AAAI Press, Palo Alto, CA, 2018), pp. 4792–4799.

- 41. Strubell E., Verga P., Andor D., Weiss D. I., McCallum A., “Linguistically-informed self-attention for semantic role labeling” in Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Riloff E., Chiang D., Hockenmaier J., Tsujii J., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2018), pp. 5027–5038.

- 42. Hewitt J., Manning C. D., “A structural probe for finding syntax in word representations” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Burstein J., Doran C., Solorio T., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2019), pp. 4129–4138.

- 43. Reif E., et al., “Visualizing and measuring the geometry of BERT” in Advances in Neural Information Processing Systems 32, Wallach H., et al., Eds. (Curran Associates, Red Hook, NY, 2019), pp. 8594–8603.

- 44. Landauer T. K., Dumais S. T., A solution to Plato’s problem: The latent semantic analysis theory of acquisition, induction, and representation of knowledge. Psychol. Rev. 104, 211–240 (1997).

- 45. Shi X., Padhi I., Knight K., “Does string-based neural MT learn source syntax?” in Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Su J., Duh K., Carreras X., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2016), pp. 1526–1534.

- 46. Blevins T., Levy O., Zettlemoyer L., “Deep RNNs encode soft hierarchical syntax” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Gurevych I., Miyao Y., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2018), pp. 14–19.

- 47. Liu N. F., Gardner M., Belinkov Y., Peters M. E., Smith N. A., “Linguistic knowledge and transferability of contextual representations” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Burstein J., Doran C., Solorio T., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2019), pp. 1073–1094.

- 48. Tenney I., et al., “What do you learn from context? Probing for sentence structure in contextualized word representations.” https://openreview.net/pdf?id=SJzSgnRcKX. Accessed 21 May 2020.

- 49. Peters M., Neumann M., Zettlemoyer L., Yih W. T., “Dissecting contextual word embeddings: Architecture and representation” in Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Riloff E., Chiang D., Hockenmaier J., Tsujii J., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2018), pp. 1499–1509.

- 50. Saphra N., Lopez A., “Understanding learning dynamics of language models with SVCCA” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Burstein J., Doran C., Solorio T., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2019), pp. 3257–3267.

- 51. Zhang K. W., Bowman S. R., “Language modeling teaches you more syntax than translation does: Lessons learned through auxiliary task analysis” in Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Riloff E., Chiang D., Hockenmaier J., Tsujii J., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2018), pp. 359–361.

- 52. Köhn A., “What’s in an embedding? Analyzing word embeddings through multilingual evaluation” in Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Màrquez L., Callison-Burch C., Su J., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2015), pp. 2067–2073.

- 53. Conneau A., Kruszewski G., Lample G., Barrault L., Baroni M., “What you can cram into a single \$&!#* vector: Probing sentence embeddings for linguistic properties” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Gurevych I., Miyao Y., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2018), pp. 2126–2136.

- 54. Belinkov Y., Durrani N., Dalvi F., Sajjad H., Glass J., “What do neural machine translation models learn about morphology?” in Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Barzilay R., Kan M.-Y., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2017), pp. 861–872.

- 55. Clark K., BERT attention analysis. https://github.com/clarkkev/attention-analysis. Deposited 27 June 2019.

- 56. Hewitt J., Structural probes. https://github.com/john-hewitt/structural-probes. Deposited 27 May 2019.

- 57. Oepen S., et al., “SemEval 2014 task 8: Broad-coverage semantic dependency parsing” in Proceedings of the 8th International Workshop on Semantic Evaluation (SemEval 2014), Nakov P., Zesch T., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2014), pp. 63–72.

- 58. Reisinger D., et al., Semantic proto-roles. Trans. Assoc. Comput. Linguist. 3, 475–488 (2015).

- 59. Clark K., Khandelwal U., Levy O., Manning C. D., “What does BERT look at? An analysis of BERT’s attention” in Proceedings of the Second BlackboxNLP Workshop on Analyzing and Interpreting Neural Networks for NLP, Linzen T., Chrupała G., Belinkov Y., Hupkes D., Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2019), pp. 276–286.
